Abstract

Mind-wandering assessment relies heavily on the thought probe technique as a reliable and valid method to assess momentary task-unrelated thought (TUT), but there is little guidance available to help researchers decide how many probes to include within a task. Too few probes may lead to unreliable measurement, but too many probes might artificially disrupt normal thought flow and produce reactive effects. Is there a “Goldilocks zone” for how few thought probes can be used to reliably and validly assess individual differences in mind-wandering propensity? We address this question by reanalyzing two published datasets (Study 1, n = 541; Study 2, ns ≈ 260 per condition) in which thought probes were presented in multiple tasks. Our primary analyses randomly sampled probes in increments of two for each subject in each task. A series of confirmatory factor analyses for each probe “bin” size tested whether the latent correlations between TUT rate and theoretically relevant constructs like working memory capacity, attention-control ability, disorganized schizotypy, and retrospective self-reported mind wandering changed as more probes assessed the TUT rate. TUT rates were remarkably similar across increasing probe-bin sizes and zero-order correlations within and between tasks stabilized at 8–10 probes; moreover, TUT-rate correlations with other latent variables stabilized at about 8 thought probes. Our provisional recommendation (with caveats) is that researchers may use as few as 8 thought probes in prototypical cognitive tasks to gain reliable and valid information about individual differences in TUT rate.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

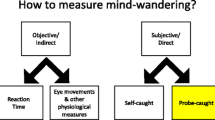

The primary way psychologists assess mind wandering as it occurs, whether in the laboratory or in daily life, is through an experience-sampling technique known as the thought-probe method (for reviews see Smallwood & Schooler, 2006, 2015). Here, subjects engaged in an ongoing activity are presented with periodic visual or auditory signals that ask them to report on their immediately preceding thoughts (for a review of thought-probe variations, see Weinstein, 2018). Researchers typically assess the frequency with which subjects report task-unrelated thoughts (TUTs) to these probes during the ongoing task or activity.

In the laboratory, the thought-probe technique has been successfully implemented in a variety of tasks, including attention-control and working memory tasks (e.g., Kane et al., 2016; McVay & Kane, 2009; Robison et al., 2020; Unsworth & Robison, 2016), passage reading (e.g., Schooler et al., 2004; Smallwood et al., 2008; Unsworth & McMillan, 2013), simulated driving (e.g., Baldwin et al., 2017; Zhang & Kumada, 2018), and video-lecture viewing (e.g., Hollis & Was, 2016; Risko et al., 2012; Szpunar et al., 2013). Probed TUT-report rates appear to be valid individual-differences measures, as they are reliable across different tasks and occasions (e.g., Kane et al., 2016; Unsworth et al., 2020) and they correlate with other measures argued to reflect mind wandering and attentional lapses, such as reaction time (RT) variability (Bastian & Sackur, 2013; McVay & Kane, 2012; Seli et al., 2013b; Unsworth et al., 2010), pupil dilation and eye movements (Reichle et al., 2010; Unsworth & Robison, 2017; Zhang et al., 2020), and retrospective self-reports of mind-wandering propensity (Carriere et al., 2013; Mrazek et al., 2013; Seli et al., 2016; Smeekens & Kane, 2016). Variation in TUT rate is also predicted by measures of theoretically relevant constructs like working memory capacity (WMC) and attention-control ability (Kane et al., 2016, 2017; McVay & Kane, 2012; Robison & Unsworth, 2018; Rummel & Boywitt, 2014), attention-deficit/hyperactivity disorder symptoms (Franklin et al., 2017; Meier, 2021; Seli et al., 2015b), and motivation for and interest in the ongoing activity (Brosowsky et al., 2020; Robison et al., 2020; Seli et al., 2015a).

Although probed TUT reports demonstrate reasonable construct validity (for a review, see Kane et al., 2021), researchers face a challenge in designing mind-wandering studies—deciding on the number and frequency of thought probes to present during a task. Infrequent probing may not provide enough reports to reliably and validly measure the TUT rate, especially in short-duration tasks; infrequent probes may also miss many instances of off-task thought that occur in the time between them. Probing too frequently, in contrast, might disrupt subjects’ natural flow of thought too severely and provide insufficient time between probes to drift off-task; frequent probes might also reactively remind subjects to stay mentally on-task (see Konishi & Smallwood, 2016).

Unfortunately, the literature provides little guidance regarding optimal (or minimal) numbers or frequencies of thought probes. Only a few studies have recently examined the impact of probe frequency on observed TUT rates, either by comparing experimental groups that receive a typical versus a more-than-typical number of probes within a task (Robison et al., 2019; Schubert et al., 2019), or by parametrically varying the frequency of probes across subjects (Seli et al., 2013a). Results have been mixed. Robison et al. (2019, Experiment 1) presented subjects with thought probes after either 7% or 13% of trials in the Sustained Attention to Response Task (SART); neither TUT rate nor task performance differed significantly between groups. In contrast, Schubert et al. (2019) found significantly higher TUT rates for subjects seeing probes after only 3% of SART trials than after 6% of trials. Similarly, in the Seli et al. (2013a) study using a continuous metronome response task (MRT), probes could occur following 0.8–4.2% of trials, and TUT rates increased with more time between probes. At the same time, neither SART performance nor MRT performance in these studies was affected by probe rate, suggesting that probe rate artifactually changed subjects’ subjective reports but not their underlying attentional states.

Although two of three relevant studies show that average TUT rates vary somewhat with probe rate (perhaps varying most across probe rates of 1–6%), only Schubert et al. (2019) also assessed individual differences. Probe rate did not interact significantly with any other variables in their study to predict the TUT rate (including SART performance, WMC, and questionnaire measures of mind wandering propensity), suggesting good news for mind wandering researchers: Probe rate was unrelated to individual differences assessment. But there is only so much we can conclude from one study’s null effects, particularly given the modest range of probe rates tested (3% vs. 6%).

The present study reports two secondary data analyses to address a pragmatic methodological question: How few thought probes are enough to reliably and validly assess individual differences in TUT rates? Given the variety of tasks and contexts in which thought probes have been used, a correspondingly wide range of probe numbers and frequencies have been employed, with some studies using tasks that include as few as four probes (e.g., Forster & Lavie, 2009; Levinson et al., 2012; Robison et al., 2020; Rummel & Boywitt, 2014) and others using tasks with as many as 45–120 probes (e.g., Kane et al., 2016; McVay & Kane, 2009, 2012). Ceteris paribus, using fewer probes has the potential advantages of less reactivity, reduced demand characteristics, more natural or representative task experience, and fewer artificial disruptions of thought flow. Therefore, researchers of mind wandering should strive to include as few probes as are needed for reliable and valid measurement of the TUT rate.

To provide provisional guidance to the field, we reanalyze data from two published studies (Kane et al., 2016; 2021) in which we probed subjects in multiple laboratory tasks and assessed a variety of cognitive and non-cognitive correlates of TUT rate. Our approach mirrors that taken by recent investigations into how few task trials of complex span tasks are needed to reliably and validly measure WMC (Foster et al., 2015; Oswald et al., 2015, Study 1); those studies presented all subjects with full-length complex span tasks but analyzed subsets of the tasks’ data to determine the fewest trials needed to roughly reproduce the full tasks’ correlations with each other and with measures of a related construct (fluid intelligence). In the present study, each analyzed task presented the same number of probes to all subjects, and we analyzed subsets of subjects’ probe responses to determine at what number of analyzed probes (i.e., at what probe bin size) do average TUT rates and, of most importance, TUT rates’ correlations with other variables, stabilize.

Specifically, regarding reliability, we will focus on: (a) the stability of M TUT rates across probe bin sizes (e.g., 2-probe TUT rate vs. 20-probe TUT rate), (b) item-total correlations, considering the correlation of TUT rate from each subset of analyzed probes with that from the largest bin size, within each task (e.g., 2-probe TUT rate × 20-probe TUT rate from the SART), and (c) factor loadings from latent variable models for each task’s TUT rate across different probe bin sizes (e.g., the 2-probe TUT rate loadings on a TUT rate factor for all the indicator tasks vs. the 20-probe TUT rate loadings on a TUT factor for all the indicator tasks). Regarding validity, we focus on the correlations of a TUT rate latent variable with other theoretically relevant constructs across different probe bin sizes (e.g., the TUT rate × WMC latent correlation with TUT rates calculated from 2-probe bins vs. 20-probe bins). In our primary analyses, we selected these analyzed probes randomly for each subject from each task; in secondary analyses that assess the robustness of our findings and conclusions (with details reported in supplemental materials), we analyzed the first n probes that appeared within the task for each subject.

Study 1

Study 1 reanalyzes data from Kane et al. (2016), which tested several hundred subjects in three 2-hour lab sessions. Thought probes were presented within five tasks—two in session 1, two in session 2, and one in session 3—and individual-differences constructs included WMC, attention-control inability (higher scores = worse performance), and several dimensions of psychometrically assessed schizotypy.

Methods

The original Kane et al. (2016) study reported how they determined sample sizes, all data exclusions, and all included measures (Simmons et al., 2012). The study received ethics approval from the Institutional Review Board (IRB) at the University of North Carolina at Greensboro (UNCG), a minority-serving comprehensive state university.

Subjects

As reported in Kane et al. (2016), 541 UNCG undergraduates completed the first session, 492 completed the second, and 472 completed the third. Here is the originally reported demographic information:

Sixty-six percent of our 541 analyzed subjects self-identified as female and 34% as male (5 missing cases), with a mean age of 19 years (sd = 2; 2 missing cases). Also by self-report, the racial composition of the sample was 49% White (European/Middle Eastern descent); 34% Black (African/Caribbean descent); 7% Multiracial; 4% Asian; <1% Native American/Alaskan Native; 0% Native Hawaiian/Pacific Islander; 4% Other (4 missing cases). Finally, self-reported ethnicity, asked separately, was 7% Latino/Hispanic (1 missing case). (Kane et al., 2016, pp. 1026–1027)

Tasks, measures, and procedures

Subjects were each seated at their own workstation and were tested in groups of 1–4. An experimenter remained present throughout the entire session to initiate each task after all subjects had completed the prior one, to read all task instructions aloud, and to monitor subjects’ behavior (and record any problems).

For detailed descriptions of all the computer-administered cognitive tasks and schizotypy questionnaires (both analyzed and unanalyzed), as well as their scoring and dependent measures, see Kane et al. (2016). Below we describe the key constructs of interest for the present study—WMC, attention-control inability, and disorganized schizotypy. Across each the three experimental sessions, subjects completed at least one measure of each construct and at least one probed task (except the schizotypy assessments, which were presented only in sessions 1 and 2).

WMC

Subjects completed six WMC tasks. Four complex span tasks (operation, reading, symmetry, and rotation span) presented sequences of to-be-remembered items (e.g., letters; spatial locations in a matrix) of varying set sizes for immediate serial recall; prior to each memory item, an unrelated processing task required a yes/no response (e.g., a mathematical equation that was correct or incorrect; an abstract pattern that was vertically symmetrical or not). Two memory-updating tasks (an updating counters task and a running span task) required subjects to maintain an evolving set of stimuli (letters or numbers) of varying set sizes and to abandon no longer relevant stimuli. Across all WMC tasks, higher scores reflected more items accurately recalled in serial order.

Attention control

Five tasks required subjects to override a prepotent response in favor of a goal-appropriate one. Subjects completed two antisaccade tasks (requiring identifying stimuli [either arrows or letters] presented to the opposite side of an attention-attracting cue, one task requiring a choice among 3 response options and the other among 4 response options; the dependent variable for each was accuracy rate), a go/no-go SART task (requiring withholding of a key-press response on a minority of semantic-classification trials [animal names appeared on 89% of trials and vegetables appeared on 11%]; dependent variables were d' and intrasubject standard deviation in RT [RTsd]), and two Stroop-like tasks, a number Stroop and a spatial Stroop task (requiring ignoring a salient stimulus dimension in favor of responding to another stimulus dimension; the dependent variable for spatial Stroop was the residual of the incongruent trial error rate regressed on the congruent trial error rate, and for number Stroop was the M RT on incongruent trials). Measures for attention control were scored such that higher scores reflected worse performance (e.g., greater error rate, poorer signal detection, longer or more variable RTs).

Disorganized schizotypy

Subjects completed a battery of valid questionnaires assessing multiple dimensions of schizotypy. In latent variable analyses, Kane et al. (2016) found equivalent TUT correlations with disorganized, positive, and paranoid dimensions of schizotypy (all .21–.22) as well as strong correlations among these schizotypy facets (≥.60). For simplicity, then, we investigated only the disorganized dimension here, analyzing data from the following scales: the Schizotypal Personality Questionnaire–Odd Behavior and Odd Speech subscales (Raine, 1991), the Cognitive Slippage Scale (Miers & Raulin, 1987), and the Dimensional Assessment of Personality Pathology-Basic Questionnaire (6 items from the Cognitive Dysregulation subscale; Livesley & Jackson, 2009). Subjects answered “yes” or “no” to each item. Higher scores reflected greater endorsement of behaviors in each dimension.

Probed thought reports

Thought probes appeared randomly, with some constraints, within five tasks. In the letter flanker task, 12 probes were presented (following 8.3% of total trials), 4 after congruent trials, 2 after neutral trials, 2 after stimulus-response (S-R) incongruent trials, 2 after stimulus-stimulus (S-S) incongruent trials, and 2 after an unanalyzed trial type. In the SART, 45 probes were presented following no-go target trials (6.6% of total trials). In the number Stroop task, 20 probes were presented in the second block of the task, always following incongruent trials (13% of block 2 trials). In the arrow flanker task, 20 probes were presented across the two blocks; 4 were presented in the first block and 16 in the second (10.4% of total trials). Finally, in the 2-back task, 15 probes were presented, following 6.3% of trials.

Each probe presented the following 8 response options and subjects were told to select the one that most closely aligned with the content of their immediately preceding thoughts by pressing the corresponding number key on the keyboard: (1) “the task” (thought related to the stimuli and goals of the task), (2) “task experience/performance” (evaluative thoughts about one’s performance on the task, (3) “everyday things” (thoughts about normal life concerns and activities), (4) “current state of being” (thoughts about one’s physical, cognitive, or emotional states), (5) “personal worries” (worried thoughts), (6) “daydreams” (fantastical, unrealistic thoughts), (7) “external environment” (thoughts about environmental stimuli), and (8) “other” (any thoughts not fitting the other categories). As in Kane et al. (2016), we defined TUTs as response options 3–8.

Probe frequency assessment

For our primary analyses, we randomly selected probes for each subject in each task. To use as much data as possible while remaining consistent across the tasks, we randomly selected probes in increments of two, up to 14 probes, for each subject in each task (except for the letter flanker task, which presented only 12 total probes; in the letter flanker task, then, we repeated the bin 12 data for bin 14). Random selection of probes was independent of the previous bin (i.e., the probes for bin size 2 could be completely different from the probes for bin size 4). For each bin of selected probes for each subject, we calculated the TUT rate.

A limitation of this approach is that all subjects responded to the full set of probes for each task, and so their responses to the randomly selected bins of analyzed probes could have been influenced by the appearance of, or their responses to, other probes. Therefore, our secondary analyses selected the first n probes that appeared to each subject in each task (in increments of two, up to 14, again except for the letter flanker task, which presented only 12 probes), before other probes could have had any influence on reporting. The secondary analyses yielded similar results to the primary analyses; we thus discuss them below but present details in supplementary materials.

Results and discussion

Data used for these reanalyses, as well as Rmarkdown files for all primary and secondary analyses, are available on the Open Science Framework (https://osf.io/f46e7/). We adopted a .05 α-level throughout. Details regarding data exclusions, task scoring, and outlier treatments can be found in Kane et al. (2016). We modeled the cognitive and schizotypy predictors exactly as in Kane et al. (2016), including any residual correlations among indicators (e.g., between the SART d' score and the SART RTsd score for the attention control construct). We first report descriptive statistics and bivariate correlations among the various probe-bin measures to address questions of reliability, and then address questions about reliability and validity using confirmatory factor analyses (CFAs) for each level of probe bin size (2–14).

Mean TUT rate and TUT-rate correlations across probe bin sizes

As seen in Table 1, mean TUT rates were remarkably consistent across probe bin sizes, including bin size 2, with slight decreases in variation around these estimates as bin size increased. All these TUT-rate estimates are also similar to those reported in Kane et al. (2016) for the full complement of thought probes in each task. Thus, it appears we can gather reliable and credible point estimates of TUT rates with as few as two thought probes embedded within a task.

Regarding the correlations presented in Table 2, we first consider the within-task correlations among probe bin sizes, akin to examining item-total correlations in questionnaire research. Within each task, the TUT-rate correlations between even the smallest bin (2 probes) and the largest bin (14 probes) were strong (rs = .56–.78); correlations with the largest bins generally increased with bin size but became stable at about eight thought probes (rs = .80–.96). These within-task correlations suggest that the TUT-rate variation measured by 14 probes was reliably captured by just 8 probes (and even reasonably captured by only 4 probes).

We next consider between-task TUT-rate correlations within each probe bin size. The cross-task correlations are weakest in bin size 2 (Mdn r = .17) and increase to bin size 4 (Mdn r = .29), and to bin size 6 and 8 (Mdn rs = .36 and .37, respectively). Correlations change little but are numerically strongest in bin sizes 10–14 (Mdn rs = .39–.40). These analyses suggest viable estimates of TUT-rate variation with as few as 6–8 probes per task. Across within- and between-task comparisons, then, measuring TUTs with 8 probes may be an optimal approach.

Confirmatory factor analyses across probe bins

Zero-order correlations among TUT-rate assessments suggest we can more reliably capture individual differences in mind wandering propensity using 8 or more probes per task. Here, we tested how TUT-rate-indicator factor loadings, and TUT-rate correlations with cognitive and schizotypy predictors, changed as we included more random thought-probe responses from each task. To do this we ran a series of CFAs in lavaan (Rosseel, 2012), where a TUT-rate factor was modeled at each level of probe bin size (2–14) from each of the 5 probed tasks, and correlated with factors for WMC, attention control, and disorganized schizotypy. As seen in Table 3, all models adequately fit the data (Schermelleh-Engel et al., 2003). Figure 1 displays the overall structural model with path estimates from each model, from probe bin sizes 2 to 14 (for clarity, the factor loadings for each model are presented in Table 4).

Standardized path estimates from confirmatory factor analyses between WMC, attention control failures (Fails), disorganized schizotypy (Disorgz), and TUT-rate bins (2–14). Estimates from each model are separated by the slash starting with the probe bin size 2 model and ending with the probe bin size 14 model. Factor loadings for each model are presented in Table 4

As in the simple bivariate correlations, loadings for each task’s TUT rate on the TUT rate factor were low but mostly acceptable for the 2–4 probe bins, and most consistent from 6–8 probe bins to 14 probe bins, and most consistent with the factor loadings (see Table 4) from the full complement of probes used by Kane et al. (2016). The path estimates between our predictor constructs and the TUT rate factor were also reasonably similar across all probe bin sizes (see Fig. 1), but they generally stabilize within a .02 window from 8 probes upward. For additional comparison, the TUT-rate correlations from the full Kane et al. (2016) model were −.17 for WMC (vs. −.17 from the 8-probe bin), .37 for attention control (vs. .38 from the 8-probe bin), and .21 for disorganized schizotypy (vs. .20 from the 8-probe bin). We provisionally conclude that researchers can reliably and validly estimate individual differences in TUT rate with as few as 8 thought probes, at least in latent-variable studies that assess TUT rates in many tasks.

Secondary analysis of first-n probes

Supplemental Tables 1 and 2 present M TUT rates and TUT-rate correlations, respectively, across probe-bin sizes, with probes drawn consecutively from the beginning of each task, rather than randomly (e.g., bin size 2 reflects data from the first two probes presented in each task). TUT rates changed more here across probe-bin sizes than they did in the randomly selected probe analyses, as expected from findings that TUT rates increase with time on task (e.g., Lindquist & McLean, 2011; McVay & Kane, 2009, 2012; Risko et al., 2012). Despite this general increase, TUT rates appeared to stabilize by bin size 6–10, depending on the task, and TUT-rate correlations across bins within tasks stabilized (with rs ≥ .90 with bin size 14 TUT rate) by bin size 6–8, as with the randomly selected probes. Between-task TUT correlations stabilized in the .30 range for bin sizes 10–14 (Mdn rs = .32–.36), also like the randomly selected probes.

CFAs on these data indicated adequate fit for all models (with TUT rate indicators based on 2–14 probe bins per task; see Supplemental Table 3). Supplemental Figure 1 presents the overall structural model with path estimates and Supplemental Table 4 presents the factor loadings. All TUT-rate factor loadings exceeded .45 for models based on bin sizes 8–14, but TUT-rate loadings were most consistent for models with 10 or more probes (vs. bin sizes 6–8 from randomly selected probes). Path estimates for the correlations between TUT rate and all the other constructs (WMC, attention control, disorganized schizotypy) appeared to stabilize with estimates within a .02 window for bin sizes 10–14 (and for bin sizes ≥ 8 for the WMC and disorganized schizotypy correlations).

Overall, then, despite TUT rates increasing over bin sizes within each task, the correlational findings here strongly replicate those from randomly selected probes. TUT rates calculated from bins of 8 thought probes efficiently demonstrate nearly as strong reliability and validity as those calculated from bins of 14 (and even TUT rates calculated from bins as small as 4 or 6 provide reasonable reliability and validity). These findings indicate that the promising results from randomly selected probes aren’t driven by the appearance of other unmeasured probe responses in the tasks.

Study 2

Study 2 reanalyzes data from Kane et al. (2021), which tested over 1000 subjects from two public universities in North Carolina in a single lab session. Thought probes were presented within two tasks, and individual-differences constructs included an attention-control ability factor and retrospective self-reports of mind wandering after each probed task. The original study manipulated the type of thought probe that appeared between subjects, creating four experimental groups; here we analyze data from two of the four groups (each with ns > 265), in which subjects responded to thought probes asking about thought-content categories, like those used in Study 1 (i.e., the two conditions not analyzed here used different kinds of probes to assess mind wandering).

Methods

This study received ethics approval by the IRBs at UNCG and Western Carolina University (WCU).

Subjects

As reported in Kane et al. (2021), 760 undergraduates from UNCG and 348 from WCU (total n = 1067 following exclusions described in Kane et al., 2021), completed a single laboratory session. For the current study, we analyzed data from two of the four experimental conditions (ns = 266 and 269 in Conditions 1 and 2, respectively).

Tasks, measures, and procedures

As in Study 1, subjects were each seated at a workstation and were tested in groups of 1–4, and an experimenter remained present for instructions, task pacing, and subject monitoring. See Kane et al. (2021) for a detailed description of all computer-administered measures in the original study. Below we describe the key constructs for the present study—attention-control ability and retrospective mind-wandering reports; the ability measures used here were nearly identical to those from Study 1.

Attention-control tasks

Subjects completed two antisaccade tasks (one with letter stimuli and one with arrow stimuli; one requiring a choice among 3 response options and the other among 4 response options) and a go/no-go SART, all identical in structure to those presented in Kane et al. (2016).Footnote 1 Subjects also completed an arrow flanker task in which they responded to a centrally presented arrow (“<” or “>”) flanked by four distractors. This task served as a secondary source of thought-probe measurement and performance data were not analyzed from this task. The attention control latent variable was modeled identically to Kane et al. (2021): accuracy on the two antisaccade tasks and performance on the SART (d' and RTsd across correct “go” trials). Subjects completed the attention-control tasks in the following order (in each condition): antisaccade-letters, SART, antisaccade-arrows, arrow flanker.

Dundee State Stress Questionnaire (DSSQ)

Immediately following completion of the two tasks with thought probes (SART and arrow flanker; see below), subjects answered a set of 12 questions about their conscious experiences in the immediately preceding task. Subjects responded by clicking on their choice along a 1–5 scale labeled, “Never,” “Once,” “A Few Times,” “Often,” and “Very Often.” The six DSSQ items about TUT experiences were analyzed here (we do not analyze the six items about “task-related interference,” i.e., thoughts about task performance). The dependent variable was the mean of the six TUT-item ratings, with higher scores reflecting more frequent TUT experiences during the preceding task.

Probed thought reports

Thought probes appeared randomly, with some constraints, within two tasks: In the SART, 45 probes were presented following rare no-go target trials (following 6.6% of total trials); in the arrow flanker task, 4 probes were presented in the first block of 92 trials and 16 probes were presented in the second block of 92 trials (10.4% of total trials). In the arrow flanker task, half the probes followed incongruent trials and half followed neutral trials. As noted above, here we analyze TUT rate data from two of the four between-subject experimental conditions, which presented similar categorical response options to Kane et al. (2016). Specifically, Condition 1 presented the identical probes to Kane et al. (2016), and Condition 2 presented these probes with one thought category removed: task experience/performance (i.e., thoughts about one’s task performance; this was response option 2 in Condition 1). TUTs were again defined as response options 3–8 in Condition 1, and as response options 2–7 in Condition 2.

Thought probe frequency assessment for the current study

As in Study 1, we again randomly sampled thought-probe data in increments of two probes from each subject's SART and arrow flanker task. Here, however, we sampled up to 20 probes in each task, as the arrow flanker task presented 20 probes. As in Study 1, we will also report secondary analyses that selected the first n probes that appeared to each subject in each task (in increments of 2, from 2–20); again, details of these analyses are available in the supplementary materials.

Results and discussion

As in Study 1, we first report descriptive statistics for TUT rates at each probe bin size, and the bivariate correlations among the bin-size TUT rates. Then we assess CFAs using each bin-size TUT rate (along with factors for attention control ability and retrospective mind wandering reports). We report all analyses separately for Condition 1 and Condition 2.

Mean TUT rate and TUT-rate correlations across probe bin sizes

Table 5 presents descriptive statistics for TUT rate from the SART and arrow flanker tasks, separately for Conditions 1 and 2. For comparison, M TUT rates (as proportions) reported in Kane et al. (2021) using all available thought probe data for Condition 1 were .52 and .45 in the SART and arrow flanker tasks, respectively; in Condition 2, M TUT rates were .58 and .47 in the SART and arrow flanker tasks, respectively. Here, again, TUT rates were remarkably similar across probe bins, whether estimated from 2 probes or 20; standard deviations around those means tended to narrow from 4 probes upward, but from there remained reasonably stable.

As seen in Table 6, within-task correlations also suggested a similar pattern of results to Study 1. Within both the SART and arrow flanker tasks (in both conditions), correlations across probe bins got stronger with more probes and this pattern appeared to level out around 8 thought probes; for example, for the SART in both conditions, TUT-rate correlations with the 20-probe bin were at .80 or higher from bin sizes ≥ 8, and for the arrow flanker task in both conditions, TUT-rate correlations with the 20-probe bin were at .90 or higher from bin size ≥ 8. We note again, however, that even the correlations between the smallest and largest probe bins were quite strong (rs ≈ .55 in both SARTs and rs ≈ .75 in both arrow flanker tasks). As in Study 1, these findings suggest that we can reliably capture individual differences in the TUT rate from as few as 8 thought probes (at least, about as reliably as we can measure them from 20 thought probes).

Examining TUT-rate correlations between the SART and flanker tasks, we again found increasing magnitudes with increasing probe bin size. In Condition 1, there was an increase from the 2-probe bin (r = .25) to the 4-probe bin (r = .44) and then a further increase at the 10-probe bin (r = .50), but additional probes beyond 10 did not increase the correlation substantially. In Condition 2, there was a jump in the correlations from the 6-probe bin (r = .36) to the 8-probe bin (r = .44) and a larger jump once 18 probes were assessed (r = .57). Here, then, 8–10 probes appeared to efficiently capture shared cross-task variance in the TUT rate.

Confirmatory factor analyses across probe bins

In parallel to Study 1, here we tested how correlations between TUT rates and attention-control ability and retrospective mind-wandering ratings (from the DSSQ) change as we include more randomly selected thought probes into our TUT-rate measurement from each task. We again ran a series of CFAs in lavaan where TUT rates were modeled at each level of probe bin (i.e., 2–20 probes) and examined the TUT rate correlations with attention-control and retrospective mind-wandering ratings. In all models, the unstandardized factor loadings for the two TUT indicators and the two DSSQ indicators were set to be equal.

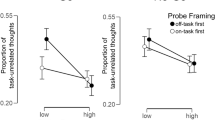

Table 7 presents the results fit statistics for each model, in each condition. Overall, model fit was generally acceptable by traditional standards (except for the RMSEA indices for some Condition 1 models). However, for Condition 1, model fit tended to decrease with increasing probe-number bins, and two models for Condition 2 (2 Probes and 4 Probes) produced CFIs = 1 and TLIs > 1, suggesting some degree of overfitting. Figure 2 presents the general structural model with path estimates for all the models derived from the Condition 1 data across probe-number bins (for clarity, standardized factor loadings are presented separately in Table 8).

Standardized path estimates from confirmatory factor analyses among attention-control ability (Attn Control), retrospective mind-wandering ratings (DSSQ), and TUT rate across different probe-number bins, from Study 2, Condition 1. Estimates from each model are separated by the slash starting with the 2 Probes model and ending with the 20 Probes model. For clarity, factor loadings are presented separately for each model in Table 8

Factor loadings for each task’s TUT rate in Condition 1 were reasonably stable when based on 4 or more probes, but they most closely matched the 20-probe loadings with 10 or more probes. Correlations between attention control and TUT rate were a bit less stable and consistent than those between DSSQ and TUT rate. Generally, however, both stabilized (using a .02 window around the 20-bin-size estimate) for bin sizes ≥ 6–8, with some occasional bins’ correlations outside that window.

In Condition 2, factor loadings for each task’s TUT rate were reasonably stable when based on 8 or more probes (see Table 8). Figure 3 presents the latent variable models for Condition 2. Here, the correlations between attention control and TUT rate factors were numerically weaker, and one model (4 Probes) yielded a nonsignificant correlation, but all correlations were within a range of .08 of the 20-bin value; the relations between retrospective mind-wandering ratings on the DSSQ and TUT rates were a bit more variable, within a range of .11 of the 20-bin value. These path estimates appeared to stabilize for models based on data from 6 or more probes (for attention control) and 12 or more probes (for DSSQ). Considering factor loadings and path estimates across models for Condition 1 and Condition 2, it is more difficult to pin down a single ideal number of probes than it was for Study 1, but analyses based on 6–10 probes per task appear to efficiently provide reasonably strong evidence for TUT rate reliability and validity.

Standardized path estimates from confirmatory factor analyses among attention-control ability (Attn Control), retrospective mind-wandering ratings (DSSQ), and TUT rate across different probe-number bins, from Study 2, Condition 2. Estimates from each model are separated by the slash starting with the 2 Probes model and ending with the 20 Probes model. For clarity, factor loadings are presented separately for each model in Table 8

Secondary analyses of first-n probes

As with Study 1, we also examined M TUT rates and TUT-rate correlations across probe-bin sizes for each task in each condition, with probes drawn consecutively from the beginning of each task (see Supplemental Tables 5 and 6, respectively). TUT rates again increased with the probe bin size in both conditions, likely reflecting a time-on-task effect, but stabilized by bin sizes 6–10, depending on the task and condition. TUT-rate correlations across bins within tasks stabilized (with rs ≥ .90 with bin size 20 TUT rate) by bin size 8–12 (for randomly selected probes, these stabilized by bin size 8), and TUT-rate correlations across tasks stabilized in the .40 range for bin sizes 12 and 8 in Conditions 1 and 2, respectively (for randomly selected probes, these were stabilized by bin sizes 8–10). Overall, then, these findings correspond well to those from the randomly selected probes.

CFAs on the data for Conditions 1 and 2 indicated adequate fit for all models, except for RMSEA fit indices for Condition 1 (see Supplemental Table 7) and again with some overfitting in some Condition 2 models. Supplemental Figures 2 and 3 present the overall structural models with path estimates for Conditions 1 and 2, respectively, and Supplemental Table 8 presents the factor loadings for both conditions. TUT-rate factor loadings for Condition 1 exceeded .50 for models based on bin sizes ≥ 6 and exceeded .60 for models based on bin sizes ≥ 12; loadings for Condition 2 exceeded .50 for all models (even for bin size 2) and exceeded .60 for models based on bin sizes ≥ 6. Path estimates for correlations between TUT rate and the other constructs (attention control, DSSQ mind-wandering ratings) appeared to stabilize with estimates within a .02 window for bin sizes ≥ 6 for attention control and ≥ 10 for DSSQ in Condition 1, and for bin sizes ≥ 8 for attention control and ≥ 6 for DSSQ in Condition 2.

As in Study 1, then, the correlational findings here generally replicate those from randomly selected probes. TUT rates calculated from bins of 6–10 thought probes efficiently demonstrate nearly as strong reliability and validity as those calculated from bins of 20 (and even TUT rates calculated from bins as small as 2 or 4 provide reasonable reliability and validity). Again, the parallels here to those from the randomly selected probes indicate that the findings from randomly selected probes are not driven by subjects’ experiences with additional, non-analyzed probes. Overall, then, a recommendation of 8 probes per task appears to fit the findings well from both Studies 1 and 2.

General discussion

Most recent mind wandering research presents thought probes within ongoing tasks and activities to measure rates of TUTs (Weinstein, 2018). Mind wandering researchers therefore face the question of how many thought probes to present. Too few probes may yield unreliable TUT-rate estimates and too many probes may provide invalid assessments if probes reactively change subjects’ ongoing conscious experiences via frequent interruption and reminders of the potential for TUTs (e.g., Konishi & Smallwood, 2016; Seli et al., 2013a). Is there a “Goldilocks zone” of probe numbers that maximizes the reliability and validity of TUT measurement while minimizing the interrupting and reactive effects of probing?

In the present exploratory reanalyses, we examined how the number of thought probes analyzed from a task might elicit differences in the reliability or validity of TUT-rate individual differences. We reanalyzed two large datasets where U.S. undergraduates completed 2–5 computerized laboratory tasks with embedded thought probes (from Kane et al., 2016, 2021), and we calculated each subject’s TUT rates based on 2–14 randomly selected probes in Study 1 and based on 2–20 randomly selected probes in Study 2 (all in “bin” increments of two); all tasks in Study 1 had originally presented 12–45 thought probes, and all tasks in Study 2 had had presented 20 or 45 probes.

Our reanalyses for both Study 1 and Study 2 examined bin-size changes in mean TUT rates, within-task correlations of TUT rates, between-task correlations of TUT rates, TUT-rate factor loadings in latent-variable models, and TUT-rate factor correlations with other constructs in latent variable models. Generally, the results indicated that TUT rates calculated from 8 randomly selected probes adequately captured similar patterns to those from the largest set of analyzed probes (and to the original correlations that used all available probe information). Note, however, that in some contexts, using 10–12 probes seemed to improve measurement beyond 8 probes, and that in others, using as few as 4–6 probes would suffice. We were surprised to find that TUT rates calculated even from only two randomly selected probes per subject provided some meaningful individual-differences information, but we would not recommend that researchers rely on such a limited TUT-rate assessment of each subject, especially combined with modest sample sizes.

Recommendations

Pending independent replication, our provisional recommendation is that eight thought probes will typically provide efficient assessment of normal variation in TUT rate, at least in laboratory task contexts like those examined here and, perhaps, especially in studies with well-powered designs that use multiple tasks to assess TUT rates. Across the computerized attention and memory tasks we examined here, using eight probes would translate to having probes follow between 0.1% of trials (i.e., in our SARTs) to 5.5% of trials (i.e., in our Study 1 letter flanker task), or one probe every 3.2 min (in our SART) to every 1.5 min (in our Study 1 letter flanker). Studies using much longer or shorter tasks than those represented here may wish to approximate these percentages or inter-probe intervals rather than focusing on the raw number of probes.

Using an economical number of probes has multiple benefits. First, researchers may shorten (reliable) tasks used in the mind wandering literature to accommodate eight thought probes while presenting them after 1–5% of task trials. In doing so, more tasks could be used within a single study, allowing for more varied contexts for estimating TUT rate and enabling the use of latent variable models. Second, as already mentioned, using as few probes as possible minimizes task interruptions, as well as possible demand effects and reactivity to frequent probing.

Limitations and caveats

As a post hoc secondary analysis, this study could not parametrically vary the experience of different probe frequencies for subjects. We could only, instead, vary the number of randomly selected thought probes that contributed to data analyses for each subject, from a task context in which these subjects had responded to many more probes than those analyzed. It is possible, then, that tasks presenting only eight probes to every subject would not yield as reliable or valid TUT rates as indicated by our post hoc analyses here. However, confidence in our conclusions should increase based on the supplemental analyses we reported for Studies 1 and 2 that selected the first n probes that subjects encountered in each task (i.e., the first 2 probes, the first 4 probes, etc.). These analyses also indicated that reliable and valid TUT-rate measurement could be gained from as few as eight probes per subject (if not fewer), despite no other probes having been yet encountered by subjects. Together, our findings encourage future studies to assess whether presenting only eight (or fewer) probes per task allows for reliable and valid measurement of individual differences in TUT rate.

With that said, it is possible that our random selection of probes for each bin allowed some probes to be selected across multiple bins within a task, for at least some tasks for some subjects (e.g., a SART probe from bin 4 could have contributed to SART bin 8). This was necessarily true of our supplemental first-n analyses, where the TUT rate from the first 2 probes also contributed to the TUT rate for the first 4 probes, and it was increasingly true for larger bins that encroached on the maximum bin sizes (e.g., bins 12 and 14 in Study 1; bins 18 and 20 in Study 2). Such dependencies may have artificially inflated within-task probe-bin correlations for both the random-probe and first-n-probe analyses (although bin size 8 yielded similar results across studies even though maximum bin size—and thereby probe-selection overlap—varied across studies). However, they should not have affected between-task correlations at each bin size (and corresponding factor loadings) that contributed to our latent variable analyses and the conclusions we drew from them, namely that construct-valid TUT assessments can be consistently derived from 6–8 thought probes.

Future research should explore whether minimum probe numbers for reliable and valid TUT measurement vary across different task or activity types. Our assessments were limited to attention and memory tasks presenting simple stimuli in a discrete-trial format, and results might differ in more continuous or engaging tasks, or tasks that better mirror typical daily-life activities. For example, during tasks or activities that have more inherent variation in attentional demand, or that evolve from being more to less interesting with time (or vice versa), more probes might be necessary to faithfully capture the dynamics of mind wandering throughout the task. The laboratory tasks we analyzed here presented relatively stable demands over time, with the same stimuli throughout, which might increase the reliability and validity of TUT reports across a small number of probes.

Moreover, we assessed TUT rates from only one kind of thought probe, which asked subjects to categorize their immediately preceding thoughts into one of several content categories (e.g., worries; fantastical daydreams). Other types of probes, which may ask about the temporal orientation of thought content (i.e., future, present, versus past orientation), or the affective valence of thought content (i.e., positive, neutral, negative), might yield different results, as might probes that take a still more different approach, such as those asking subjects to report on the intentionality of their mind wandering, or on the extent to which their thoughts were flowing freely, or on the depth of their mind wandering on a rating scale (see Kane et al., 2021).

Finally, we must emphasize that the current findings—and our recommendations for future work—may only apply to instances where researchers are interested in overall TUT rates, and not in specific forms (i.e., sub-types) of TUTs. Researchers interested in examining differences in types of TUTs (e.g., intentional vs. unintentional; past- vs. present- vs. future-oriented; or negative vs. positive vs. neutral thoughts) should aim to use more thought probes (and perhaps subjects) to ensure that there is an adequate number of responses for each thought type. We have found that subject samples often show zero-inflated distributions of specific thought-report types (e.g., externally driven distractions), which will only become more problematic as the number of probes is reduced (Welhaf et al., 2020). Thus, although our findings suggest that it may be safe to use as few as 8 probes in studies of overall TUT rates, they do not suggest similar reliability or validity for studies of TUT sub-types.

Notes

As noted in Kane et al. (2021), a programming error in one of the antisaccade tasks led the stimuli to be presented at different distances from central fixation at the different sites; performance data were thus standardized within sites for this task.

References

Baldwin, C. L., Roberts, D. M., Barragan, D., Lee, J. D., Lerner, N., & Higgins, J. S. (2017). Detecting and Quantifying Mind Wandering during Simulated Driving. Frontiers in Human Neuroscience, 11, 406. https://doi.org/10.3389/fnhum.2017.00406

Bastian, M., & Sackur, J. (2013). Mind-wandering at the tips of the fingers: Automatic parsing of subjective states based on response time variability. Frontiers in Psychology, 4, 573. https://doi.org/10.3389/fpsyg.2013.00573

Brosowsky, N. P., DeGutis, J., Esterman, M., Smilek, D., & Seli, P. (2020). Mind wandering, motivation, and task performance over time: Evidence that motivation insulates people from the negative effects of mind wandering. Psychology of Consciousness: Theory, Research, and Practice.

Carriere, J. S., Seli, P., & Smilek, D. (2013). Wandering in both mind and body: individual differences in mind wandering and inattention predict fidgeting. Canadian Journal of Experimental Psychology, 67(1), 19.

Forster, S., & Lavie, N. (2009). Harnessing the wandering mind: The role of perceptual load. Cognition, 111(3), 345-355.

Foster, J. L., Shipstead, Z., Harrison, T. L., Hicks, K. L., Redick, T. S., & Engle, R. W. (2015). Shortened complex span tasks can reliably measure working memory capacity. Memory & Cognition, 43(2), 226-236.

Franklin, M. S., Mrazek, M. D., Anderson, C. L., Johnston, C., Smallwood, J., Kingstone, A., & Schooler, J. W. (2017). Tracking distraction: The relationship between mind-wandering, meta-awareness, and ADHD symptomatology. Journal of Attention Disorders, 21(6), 475-486.

Hollis, R. B., & Was, C. A. (2016). Mind wandering, control failures, and social media distraction in online learning. Learning and Instruction, 42, 104-112.

Kane, M. J, Gross, G. M.., Chun, C. A., Smeekens, B. S., Meier, M. E., Silvia, P. J., & Kwapil, T. R. (2017). For whom the mind wanders, and when, varies across laboratory and daily-life settings. Psychological Science, 28, 1271-1289.

Kane, M. J., Meier, M. E., Smeekens, B. A., Gross, G. M., Chun, C. A., Silvia, P. J., & Kwapil, T. R. (2016). Individual differences in the executive control of attention, memory, and thought, and their associations with schizotypy. Journal of Experimental Psychology: General, 145, 1017-1048.

Kane, M. J., Smeekens, B. A., Meier, M. E., Welhaf, M. S., & Phillips, N. E. (2021). Testing the construct validity of competing measurement approaches to probed mind-wandering reports. Behavior Research Methods, 53, 2372–2411.

Konishi, M., & Smallwood, J. (2016). Shadowing the wandering mind: How understanding the mind-wandering state can inform our appreciation of conscious experience. WIREs Cognitive Science, 7, 233–246

Levinson, D. B., Smallwood, J., & Davidson, R. J. (2012). The persistence of thought: Evidence for a role of working memory in the maintenance of task-unrelated thinking. Psychological Science, 23(4), 375-380.

Lindquist, S. I., & McLean, J. P. (2011). Daydreaming and its correlates in an education environment. Learning and Individual Differences, 21, 158-167.

Livesley, W. J., & Jackson, D. N. (2009). Dimensional Assessment of Personality Pathology—Basic Questionnaire (DAPP-BQ): Technical Manual. Sigma Assessment Systems, Inc.

McVay, J. C., & Kane, M. J. (2009). Conducting the train of thought: Working memory capacity, goal neglect, and mind wandering in an executive-control task. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35(1), 196–204.

McVay J. C., & Kane, M. J. (2012). Drifting from slow to “d’oh!”: Working memory capacity and mind wandering predict extreme reaction time and executive control errors. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38, 525-549.

Meier, M. E. (2021). Testing the attention-distractibility trait. Memory & Cognition, 49(7), 1490–1504.

Miers, T. C., & Raulin, M. L. (1987). Cognitive Slippage Scale. In K. Corcoran & J. Fischer (Eds.), Measures for clinical practice: A sourcebook (pp. 125-127). Free Press.

Mrazek, M. D., Phillips, D. T., Franklin, M. S., Broadway, J. M., & Schooler, J. W. (2013). Young and restless: validation of the Mind-Wandering Questionnaire (MWQ) reveals disruptive impact of mind-wandering for youth. Frontiers in psychology, 4, 560. https://doi.org/10.3389/fpsyg.2013.00560

Oswald, F. L., McAbee, S. T., Redick, T. S., & Hambrick, D. Z. (2015). The development of a short domain-general measure of working memory capacity. Behavior Research Methods, 47(4), 1343-1355.

Raine, A., (1991). The SPQ-A scale for the assessment of schizotypal personality based on DSM-III-R criteria. Schizophrenia Bulletin, 17, 555-564.

Reichle, E. D., Reineberg, A. E., & Schooler, J. W. (2010). Eye movements during mindless reading. Psychological Science, 21(9), 1300-1310.

Risko, E. F., Anderson, N., Sawal, A., Engelhardt, M., & Kingstone, A. (2012). Everyday attention: Variation in mind wandering and memory in a lecture. Applied Cognitive Psychology, 26, 234-242.

Robison, M.K., Miller, A.L. & Unsworth, N. (2019). Examining the effects of probe frequency, response options, and framing within the thought-probe method. Behavior Research Methods, 51, 398–408.

Robison, M.K., Miller, A.L. & Unsworth, N. (2020). A multi-faceted approach to understanding individual difference in mind-wandering. Cognition, 198, 104078.

Robison, M. K., & Unsworth, N. (2018). Cognitive and contextual correlates of spontaneous and deliberate mind-wandering. Journal of Experimental Psychology: Learning, Memory, and Cognition, 44, 85 - 98.

Rosseel, Y. (2012). lavaan: An R Package for Structural Equation Modeling. Journal of Statistical Software, 48(2), 1-36.

Rummel, J., & Boywitt, C. D. (2014). Controlling the stream of thought: Working memory capacity predicts adjustment of mind-wandering to situational demands. Psychonomic Bulletin & Review, 21, 1309-1315.

Schermelleh-Engel, K., Moosbrugger, H., & Müller, H. (2003). Evaluating the fit of structural equation models: Tests of significance and descriptive goodness-of-fit measures. Methods of Psychological Research Online, 8, 23-74.

Schooler, J. W., Reichle, E. D., & Halpern, D. V. (2004). Zoning out while reading: Evidence for dissociations between experience and metaconsciousness. In D. Levin (Ed.), Thinking and seeing: Visual metacognition in adults and children (pp. 203-226). MIT Press.

Schubert, A. L., Frischkorn, G. T., & Rummel, J. (2019). The validity of the online thought-probing procedure of mind wandering is not threatened by variations of probe rate and probe framing. Psychological Research, 84, 1846-1856.

Seli, P., Carriere, J. S., Levene, M., & Smilek, D. (2013a). How few and far between? Examining the effects of probe rate on self-reported mind wandering. Frontiers in Psychology, 4, 430.

Seli, P., Cheyne, J. A., & Smilek, D. (2013b). Wandering minds and wavering rhythms: Linking mind wandering and behavioral variability. Journal of Experimental Psychology: Human Perception and Performance, 39, 1-15.

Seli, P., Cheyne, J. A., Xu, M., Purdon, C., & Smilek, D. (2015a). Motivation, intentionality, and mind wandering: Implications for assessments of task-unrelated thought. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(5), 1417.

Seli, P., Smallwood, J., Cheyne, J. A., & Smilek, D. (2015b). On the relation of mind wandering and ADHD symptomatology. Psychonomic Bulletin & Review, 22(3), 629-636.

Seli, P., Risko, E. F., & Smilek, D. (2016). Assessing the associations among trait and state levels of deliberate and spontaneous mind wandering. Consciousness and Cognition, 41, 50-56.

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2012). A 21 word solution. Dialogue: The Official Newsletter of the Society for Personality and Social Psychology, 26, 4–7.

Smallwood, J., McSpadden, M., & Schooler, J. W. (2008). When attention matters: The curious incident of the wandering mind. Memory & Cognition, 36, 1144-1150.

Smallwood, J., & Schooler, J. W. (2006). The restless mind. Psychological Bulletin, 132(6), 946-958.

Smallwood, J., & Schooler, J. W. (2015). The science of mind wandering: Empirically navigating the stream of consciousness. Annual Review of Psychology, 66, 487-518.

Smeekens, B.A., & Kane, M.J. (2016). Working memory capacity, mind wandering, and creative cognition: An individual-differences investigation into the benefits of controlled versus spontaneous thought. Psychology of Aesthetics, Creativity, and the Arts, 10, 389-415.

Szpunar, K. K., Khan, N. Y., & Schacter, D. L. (2013). Interpolated memory tests reduce mind wandering and improve learning of online lectures. Proceedings of the National Academy of Sciences, USA of the United States of America, 110, 6313–6317.

Unsworth, N., & McMillan, B. D. (2013). Mind wandering and reading comprehension: Examining the roles of working memory capacity, interest, motivation, and topic experience. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39, 832–842.

Unsworth, N., Redick, T.S., Lakey, C.E., Young, D.L. (2010). Lapses in sustained attention and their relation to executive and fluid abilities: An individual differences investigation. Intelligence, 38, 111-122.

Unsworth, N., & Robison, M. K. (2016). The influence of lapses of attention on working memory capacity. Memory & Cognition, 44(2), 188-196.

Unsworth, N., & Robison, M. K. (2017). The importance of arousal for variation in working memory capacity and attention control: A latent variable pupillometry study. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(12), 1962.

Unsworth, N., Robison, M. K., & Miller, A. L. (2021). Individual differences in lapses of attention: A latent variable analysis. Journal of Experimental Psychology: General, 150(7), 1303–1331.

Weinstein, Y. (2018). Mind-wandering, how do I measure thee with probes? Let me count the ways. Behavior Research Methods, 50, 642–661.

Welhaf, M.S., Smeekens, B.A., Gazzia, N.C., Perkins, J.B., Silvia, P.J., Meier, M.E., Kwapil, T.R., & Kane, M.J. (2020). An exploratory analysis of individual differences in mind wandering content and consistency. Psychology of Consciousness: Theory, Research, and Practice, 7, 103-125.

Zhang, Y., & Kumada, T. (2018). Automatic detection of mind wandering in a simulated driving task with behavioral measures. PLoS ONE, 13(11), Article e0207092.

Zhang, H., Miller, K. F., Sun, X., & Cortina, K. S. (2020). Wandering eyes: Eye movements during mind wandering in video lectures. Applied Cognitive Psychology, 34(2), 449-464.

Author Notes

The original data collection for Study 1 was funded by award number R15MH093771 from the National Institute of Mental Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Mental Health or the National Institutes of Health.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

ESM 1

(DOCX 192 kb)

Rights and permissions

About this article

Cite this article

Welhaf, M.S., Meier, M.E., Smeekens, B.A. et al. A “Goldilocks zone” for mind-wandering reports? A secondary data analysis of how few thought probes are enough for reliable and valid measurement. Behav Res 55, 327–347 (2023). https://doi.org/10.3758/s13428-021-01766-4

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13428-021-01766-4