Abstract

Mixed-effects models are frequently used in a variety of disciplines because they can appropriately specify multiple sources of variation. However, precisely because they distinguish between multiple sources of variation, it is difficult to specify a standardized effect size, such as η2. Several approaches to this problem have been proposed, but most do not address models with crossed random factors, and none allows for the range of data and models that researchers typically test. For example, no existing approach handles random slopes for a continuous predictor. We introduce several new, flexible approaches to estimating η2 in mixed-effect models with crossed random factors. We then conduct a simulation to assess new and old methods. We examine their respective strengths and weaknesses and offer recommendations for a simple approach based on the work of Snijders and Bosker (2011).

Similar content being viewed by others

Introduction

Mixed-effects models are valuable tools in disciplines like sociology, education, and cognitive and social psychology. Compared to traditional repeated-measures analyses, these models allow researchers to more appropriately test questions that involve multiple sources of error. For example, consider the academic performance of students at a school with several different teachers. A researcher may be interested in the effect of socioeconomic status on performance, but how should this question be tested? It is critical to note that, in such data, there may be variability due to students (Why does one student outperform another? Is Francesca smarter than Clayton?) and variability between teachers (Why is performance better under one teacher than under another? Is Ms. Jaap a better teacher than Mr. Knispel?). It is important to understand that these data may be organized in several different ways. It may be the case that each student takes all classes with the same teacher (Francesca studies exclusively with Ms. Jaap; Clayton studies exclusively with Mr. Knispel). Alternatively, each student may spend one class period with each teacher (Francesca takes one class with Ms. Jaap and another class with Mr. Knispel; so does Clayton). The question involves the organization of random factors—categorical variables (here, student identity and teacher identity) that account for variance in the data. In the first design, the levels of one factor (student) are said to be nested under levels of the other factor (teacher); in the second design, the factors are said to be crossed. There may also be messier organizations. For example, a given student may take classes with some, but not all, teachers. Whether nested or crossed or messy, failure to account for different sources of variability in data of this sort can bias statistical analyses. Accordingly, mixed-effects models are a critical and increasingly popular tool.

Mixed-effects models, like traditional ordinary least squares (OLS) models, estimate the effects of variables that a researcher wants to test. These fixed effects represent influences that can be understood, explained, formally tested, and ostensibly generalized to new samples. For example, the researcher in the example above is interested in the relationship between socioeconomic status and student performance. This is a fixed effect. If it is significant, we assume that we have learned something about why some students perform better than others, and we can make predictions about a new group of students and teachers. Traditional OLS analyses also include a single random effect: error. Error represents variation in the data that can be estimated but never really understood. For example, mean squared error describes the magnitude of unexplained variation in the data–how much observations vary from one another after accounting for all the fixed effects. Though we can estimate the magnitude of error, it does not allow us to draw inferences. The error term may indicate that Francesca performed 2.3 points better than we would have predicted based on her socioeconomic status, but it does not tell us why. Mixed-effects models allow us to estimate multiple random error terms. For example, we might allow one error term for teachers, one for students, and one residual, and those errors can differ dramatically in magnitude. Where OLS does not account for correlated errors or differentiate between sources of error variance, mixed-effects models allow for and estimate each source of error simultaneously. Again, these errors are random effects. They can be estimated, but they do not help us to understand why some observations are higher and others are lower.

Mixed-effects models provide a solution to a critical problem, helping us appropriately model complex data that involve multiple random factors. But, given the presence of multiple error terms (e.g., one for students and one for teachers), it is often unclear how to estimate an appropriate standardized effect size, like Cohen’s d or eta squared (η2). This issue is the focus of the current paper, which examines diverse strategies for estimating η2. Given calls from critics and demands from journals, researchers may want or need to provide estimates of effect sizes. Effect sizes may also help researchers conceptualize the impact of predictors in their data, and across multiple datasets (as in a meta-analysis). There have been several attempts to develop procedures for estimating effect size in mixed-effects models, many for hierarchical data (see Rights & Sterba, 2019, for a review) and only a few for crossed data. We briefly review these approaches and discuss their limitations before outlining several other approaches that provide interpretable, flexible methods for computing partial η2, which reflects the proportion of variance accounted for by a given parameter (or set of parameters) in the model relative to the variance in a simpler, nested model. We then test these approaches to assess their strengths and weaknesses.

An example

To clarify and demonstrate the ideas we will discuss, it will be helpful to have an example and a dataset to explore. Imagine that a researcher gathers a set of stimuli: four profiles of moderately qualified White male job applicants (targets), including resumes and photos. Though the targets are all nominally White, the four faces vary in Afrocentricity because each (originally White) face was morphed with a Black face to a randomly determined degree: Tom’s face was modified slightly to include only 3% of a Black face, Teddy’s face includes almost 25% of a Black face, and so on. For the sake of scaling, Afrocentricity is scored so that a one-unit increase represents an increase of 10%. The researcher then recruits a sample of six White participants (perceivers) and asks each to complete a scale that measures his or her attitude toward Black people. The perceivers are thus scored in terms of prejudice on a scale from 1 (not at all prejudiced) to 9 (highly prejudiced). Finally, each of the six perceivers is asked to evaluate each of the four targets in terms of qualification for a job by rating them on a sliding scale of perceived qualification that ranges continuously from 1 (not at all qualified) to 5 (very qualified). Note that, because each perceiver evaluates each target, these data involve a perfectly crossed organization (see Table 1). The code to simulate these data and to analyze them in R is provided in the online supplementFootnote 1. Figure 1 presents the data graphically, with separate plots for each participant (e.g., how does participant Pamela rate each of the four targets?). Figure 2 presents a complementary representation with separate plots for each target (e.g., how do the six participants each rate target Tom?).

Sets of ratings from each perceiver as a function of targets’ mean centered Afrocentricity. Each of the six panels shows ratings made by a given perceiver for each of the four targets. Points represent the individual ratings. The solid lines represent the fixed-effect predictions from Model A1. The dashed lines represent predictions from Model A1 that include both fixed and random effects

Sets of ratings of each target as a function of perceivers’ mean centered prejudice. Each of the four panels shows ratings made for a given target by each of the six perceivers. Points represent the individual ratings. The solid lines represent the fixed-effect predictions from Model A1. The dashed lines represent predictions from Model A1 that include both fixed and random effects

The researchers hypothesize that, even though the targets are all White, more prejudiced participants will denigrate applicants who have more Afrocentric features. This hypothesis would be tested by the interaction of participant-level prejudice and target-level Afrocentricity.

It is important to clarify some aspects of the analysis. In general, we consider models in which some number of participants (n) respond to some number of stimuli (m). In the current example, n = 6 and m = 4. The participants are identified with unique participant ID numbers (pnum), and the targets are identified with unique target ID numbers (tnum). Prejudice and Afrocentricity scores are measured continuously, and the variables are mean-centered, yielding variables, prejudice.c and afrocentricity.c. Scores on these variables reflect the degree to which any particular participant (or target) is more or less prejudiced (or more or less Afrocentric) than the average. Zero on the centered variables represents a participant (or target) with an average level of prejudice (or Afrocentricity).

To analyze these data, we create a pair of models. As noted above, each model includes both fixed and random effects. Further, we specify these models such that one is a constrained version of the other. Adopting the terminology of Judd, McClelland, and Ryan (2017a), the more complex or augmented model is called Model A. The simpler compact model is called Model C. Two models (A1 and C1.pxa) are specified, below, according to the lme4 package in R. We also specify a second Model C (C1.afr) because we will refer to it later.

Model A1 estimates the effects of participant prejudice and target Afrocentricity (each centered at its mean), along with their interaction, on participants’ ratings of the target. Model C1.pxa is identical to Model A1, except that it excludes the interaction term, which is the critical term for testing the researchers’ predictions. Model C1.pxa is thus a constrained version of A1 in which the slope of the interaction is forced to be zero. Model C1.pxa is actually named for the term it excludes because this is also the term that is tested, statistically, when we compare that particular Model C with Model A. Results of these two models are shown in Fig. 3. Similarly, the name of Model C1.afr indicates that it drops the Afrocentricity effect.

Each model includes a fixed portion, which specifies effects that can be understood, explained, formally tested, and generalized. Consider the fixed effect of a target’s Afrocentricity on qualification ratings. Though this slope is not significant, directionally, the results suggest that, in this sample of stimuli, targets with higher Afrocentricity tend to receive lower ratings from this sample of participants. By virtue of this fixed effect, we can understand and explain why some targets’ ratings are lower than others. Further, the test of the fixed effect allows us to ask whether this effect will generalize: if the researchers replicated their study with a new group of participants and a new group of targets, is it reasonable to expect that they will find the same relationship? In a new set of targets judged by new perceivers, will targets with greater Afrocentricity receive lower ratings? The test of a fixed effect attempts to answer that question.

Models A and C also include a random portion, which allows for variability that is estimated but not formally understood by the model. For example, if we allow intercepts to vary as a function of participant, we acknowledge that, on average across targets, some participants give higher ratings and others give lower ratings, but we do not attempt to explain what differentiates the high raters from the low raters. That is, we allow for the differences, but the causes of that variation remain a mystery. If we collected a new sample of participants from the same population, we would expect the variance of the intercepts to be similar, but we would have no way to use the random intercepts obtained in the first sample to predict which of the new participants will be high raters and which will be low raters.

Slopes can also vary randomly. The fixed portion of Model A1 includes the interaction of participant prejudice × target Afrocentricity. This fixed effect represents the researchers’ main hypothesis. In general, more Afrocentric targets receive slightly lower ratings. But as we move from low-prejudice participants to high-prejudice participants, the researchers hypothesize that the negative impact of Afrocentricity gets stronger. This interaction reflects participant-to-participant differences in the impact of Afrocentricity, and (as a fixed effect) it attempts to explain or understand those changes as a function of prejudice. These models also allow the effect of Afrocentricity to vary randomly. A random Afrocentricity slope allows for the possibility that, over and above variation in the effect of Afrocentricity that can be explained as a function of prejudice, the slope fluctuates from participant to participant in a manner that is not fully understood. In addition to an overall residual or error term, these random effects (intercepts and slopes) reflect different sources of error in mixed-effects models.

Model A1 appropriately acknowledges the dependence in these data due to the fact that each participant makes four qualification ratings (one for each target) and the qualification of each target is rated six times (once by each participant). The model allows each participant and each target to have his/her own intercept (i.e., intercepts vary randomly as a function of both participant and target). The model also allows the effect of target Afrocentricity to vary randomly from participant to participant, and the effect of participant prejudice to vary randomly from target to target (i.e., the slope of Afrocentricity varies randomly across participants, and the slope of prejudice varies randomly across targets). This Model A1 is the correct, fully specified model for this dataset. To test the effect of the interaction, we compare Model A1 to Model C1.pxa, which is identical except that it excludes the interaction term or forces the interaction effect to equal zero.

The output, like the model itself, is divided into two sections. The fixed effects section (the lower block of output) refers to variability that has been explained or understood by the model. The fixed intercept shows the overall mean of the observations since we have centered our predictors. The (nonsignificant) prejudice slope tells us that, as participant prejudice increases by one unit, ratings of an average target (more specifically, a target of average Afrocentricity) are predicted to decrease by .035 units. The (nonsignificant) Afrocentricity slope tells us that, for a participant with an average level of prejudice, as target Afrocentricity increases by 10%, ratings are predicted to drop by .533 units. Finally, the prejudice × Afrocentricity interaction tells us that the effect of Afrocentricity, which is weakly negative for the average participant (−.533) becomes more extreme as we consider participants with greater levels of prejudice. For every one-unit increase in participant prejudice, the effect of Afrocentricity becomes .129 units more negative. Because these are fixed effects, if we had a new sample of participants and targets (with measures of prejudice and Afrocentricity, respectively), we could use these values to predict how each participant would rate each target.

The random effects section (the upper block of output) involves variability that has been accounted for, but not really explained. This section estimates the degree to which, after accounting for the fixed effects, there is variance due to (a) participants rating the average target more positively or more negatively than would be expected (participant intercept variance, .134), (b) participants being more or less sensitive to increasing Afrocentricity than would be expected based on their level of prejudice (participant Afrocentricity variance, .062), (c) targets receiving more favorable or unfavorable ratings from the average participant than would be expected (target intercept variance, .119), and (d) ratings of a given target being more or less sensitive to participant prejudice than we would expect based on the target’s Afrocentricity (target prejudice variance, .002). Finally, this section includes a residual variance (.005) which is variation that cannot be explained from the fixed effects and that has not been accounted for by the random effects. Critically, all of this variance—the random effects and the residual—is idiosyncratic. If we were to obtain a new group of participants and have them rate a new group of targets, we could not use these estimates to make predictions about how a participant would rate a target.

Procedures for testing fixed effects with these models are relatively well elaborated. For each effect, we obtain estimates of t, and (via some estimation process like Satterthwaite) degrees of freedom, from which we can estimate the probability under the null hypothesis (p values). One issue that has not been comprehensively addressed, however, is how to estimate effect sizes. In general, because Model A is by definition more flexible than Model C, it yields predictions that correspond more closely to the data. That is, the error associated with Model A is typically less than the error associated with Model C (though this is not always true in the case of mixed-effects models). The effect size, η2, reflects the magnitude of this reduction in error. More specifically, η2 represents the proportion of error variation in Model C that has been explained by Model A. So, η2 = (error for Model C – error for Model A) / error for Model C. How, exactly, should we compute this reduction in error? Though there are several treatments involving hierarchical or nested designs, estimation of effect sizes for mixed-effects models involving crossed random factors is particularly opaque.

Existing approaches and their challenges

Snijders and Bosker (2011)

Snijders and Bosker’s (2011) approach involves computing η2 by combining estimates of different variances. The error associated with Model A is computed as a combination of the random variance(s) and the residual. The error associated with Model C is computed in the same fashion. Snijders and Bosker describe their approach in terms of a hierarchical organization of the data, not the crossed organization we have been discussing. To our knowledge, Snijders and Bosker never sought to generalize this approach to a crossed structure, but for the sake of argument, we will do just that. To appreciate their approach, however, we will begin by introducing a much simpler analysis, using Model A2, which estimates the fixed effects of prejudice, Afrocentricity, and their interaction, but includes a much simpler random portion. Here, the random intercept model allows each participant to have his or her own intercept (some participants view the average target as relatively qualified, others view the average target more critically) and of course allows for residual error, but includes no other random effects. Model A2 can be compared to Model C2.pxa, which excludes the interaction, or to Model C2.all, an intercept-only model which excludes all predictors (both C2.pxa and C2.all allow participant intercepts to vary).

If we are interested in the total unexplained variation in individual ratings, we can compute the variation in, say Model A2, as: model var = participant intercept variance + residual variance. The intercept variance captures between-participant variation, and the residual here captures within-participant variation. A similar estimate can be derived for Model C, and an effect size, η2, can easily be computed as the proportion of variance explained by the fixed effects, relative to that Model C.Footnote 2 In our view, there are two problems with applying this approach to models involving crossed random factors. The first is easy to address. The second is much harder to address, and it is the issue that proves problematic for all of the approaches reviewed below.

The easy problem

Snijders and Bosker (2011) frame their approach in terms of a comparison between a model of interest (e.g., Model A2) and a compact model that includes no fixed-effect predictors (Model C2.all). This comparison estimates η2 for the entire set of predictors. It asks, all told, does our model explain variation in the data? But researchers are often interested in testing a single predictor. For example, in the current example, the researchers might want to specifically test the effect of Afrocentricity (not the combined effects of prejudice, Afrocentricity, and their interaction). If a researcher wants to test the partial effect of a predictor, over and above other predictors, this estimate is not appropriate. The solution to this problem is relatively simple. Instead of the approach originally outlined, we might specify a more complex Model C3.afr that excludes only Afrocentricity (the effect we want to test), and then proceed with the strategy, estimating the correct variance by appropriately manipulating and combining the error terms that are relevant to the predictor of interest. This provides an estimate of the partial effect of the fixed effect that interests us, over and above the other fixed effects in the model.

The hard problem

The strategy translates random effect variances into the appropriate error term for a given model. This approach works well when the random portion of the model is simple. For example, with a hierarchical organization (e.g., a two-level model where observations are nested within participants), if only random intercepts are estimated, implementation of this approach requires only trivial algebra. Unfortunately, as the random effects become more complex, so does the translation. The original approach extends in a straight-forward manner to three-level random-intercept models. However, even with a two-level model, random slopes prove challenging for different random effect structures. The difficulty derives from the need to translate variation in the slope (the effect of a predictor) into the metric of the criterion or outcome variable, Y. To do this translation, the researcher must account for the variance of the predictor variable. This process is not too onerous for a categorical predictor (cf. Judd, Westfall, & Kenny, 2017b), but for a continuous predictor, like prejudice or Afrocentricity, the math gets tricky. Applying this approach to hierarchical models, Snijders and Bosker (2011) suggest simply excluding random slopes entirely, and only using models with random intercepts to compute effect size (but see Johnson, 2014; Rights & Sterba, 2019). In our simulations, this solution worked extremely well for hierarchically organized data, presumably because, in these models, variability that would have been accounted for by the random slopes is allocated to the residual error term, where its influence on the effect size is comparable.

In the simulations below, we extend this approach to models with crossed random factors. In the case of our example, this approach might lead a researcher to approximate the correct and fully specified Model A1 with something like Model A2, which simplifies the analysis by excluding random slopes and ignoring target as a random factor. A slightly more complex version involves allowing random intercepts for both factors, but no random slopes as in Models A3, C3.pxa, and C3.afr. We will refer to this approach as SBX (Snijders and Bosker, crossed, see also Luo & Kwok, 2010). We want to give full credit to Snijders and Bosker (2011) for developing this approach, and at the same time, we want to acknowledge that, as far as we know, they never suggested using the approach in this manner.

The models above are the models we fit to obtain the SBX estimates. The output needed to test the effect of Afrocentricity appears in Fig. 4. To compute error for each model, we can simply sum the variances of the random intercepts and the residual (shown in bold). So, the error variance for Model A3 = 0.120 + 0.110 + 0.059 = 0.289. The error variance for Model C3.afr = 0.120 + 0.299 + 0.059 = 0.353. The estimate of effect size can then be computed as

In the case of crossed random factors, we originally believed that mis-specifying the model and ignoring random slopes would distort the estimate of effect size (particularly for models seeking to test something like the effect of Afrocentricity, that is, to compare A3 with C3.afr). As we will see in our simulations, we were wrong: the SBX approach proves to be very effective, even in data with crossed random factors.

Selya et al. (2012)

Selya et al. (2012) offered an approach to effect sizes for nested data. Again, they did not recommend their approach for crossed random factors. The process they outlined involves estimating both Model A and Model C, and for each model, capturing the residual variance. Recall that the residual variance is variation in the data that is not captured by either the fixed or random portions of the model. By comparing the residuals, they compute the relative advantage of Model A over Model C and, so, estimate the effect sizeFootnote 3. Unlike Snijders and Bosker (2011), this approach is presented as a way to calculate the effect size for the partial effect of a particular variable (or set of variables) rather than for the omnibus test of the full set of predictors. And it easily allows for both random intercepts and random slopes. For example, one could estimate Models A1 and C1.pxa allowing the complete random effects. To estimate the error in each model, one would ignore the random intercept and random slope variances, capture the estimates of residual variance for each model, and compare them. Importantly, this approach requires that the random effects are held constant across Models A and C. This requirement reflects the risk that, because Model C has less flexibility in the fixed portion, it may compensate by adjusting the variance of random intercepts or slopes to account for some of the residual error. It would therefore be unfair to compare Model A’s residual to Model C’s residual. There are, again, two problems with this approach, one easy and one hard.

The easy problem

Like Snijders and Bosker (2011), Selya and colleagues frame their approach as a test for nested random factors, not crossed. Their approach extends to crossed factors with no trouble, however. Even with the crossed design in our example, one could estimate the two models in which we are interested (Model A1, Model C1.pxa), capture the residuals, and compare them.

The hard problem

This approach completely excludes random effects from the estimate of the error term. As discussed above, random effects reflect unexplained variation in the model. They constitute error for research that seeks to generalize to new participants and stimuli. Selya and colleagues’ approach treats the random effects as if they were fixed effects, estimating a fundamentally different quantity, similar to something called the conditional effect size. In the case of our example, the approach inherently assumes that a new study would use the same six participants and the same four targets (or at least entities for whom the mean response was already known). Though this assumption may sometimes be justified, it is presumably incorrect for many research endeavors, where the goal is to generalize. In our view, variation due to the random effects (intercepts and slopes) belongs in the error term. Ignoring that variance dramatically magnifies the estimated effect size (cf. Judd, Westfall, & Kenny, 2017b).

Any correction for the second problem requires the researcher to incorporate the random intercept and slope variances into the error term and figure out how to translate those estimates into variation in observed scores. Again, for complicated designs, this is not a trivial task (and it is identical to the hard problem we described for SBX).

Interestingly, in the case of our example, implementing Selya and colleagues’ approach does not lead to a simpler instantiation of our models: we can estimate an intercept and slope for each participant, and an intercept and slope for each target (as in Model A1). And this model can be compared to Model C1.pxa (holding the random effects constant). Unfortunately, as we will see in the simulations below, given any random variation due to participant or target, this approach is biased, yielding an effect size that is too large.

Judd, Westfall, and Kenny (2017b)

Judd, Westfall, and Kenny (2017b) offer a method that accomplishes almost everything we seek. The approach can handle complex random effects, allowing both intercepts and slopes to vary randomly. It can also accommodate crossed random factors, and it does not require complex algebra to estimate the appropriate error terms. Judd and colleagues address the fundamental problem that plagues estimation (namely, translating estimated slope variances into variation in observations) by introducing one key limitation: their approach is predicated on contrast-coded categorical predictors. Recall that the problem in applying Snijders and Bosker’s approach to crossed designs is tied to the distribution of the predictor (e.g., it is tricky to estimate the impact of a random slope for prejudice on the error term when prejudice scores vary continuously from 1 to 7). The use of a categorical predictor (e.g., prejudice is either low or high) simplifies estimation, and allows Judd and colleagues to translate random slope variance to variance in the metric of the criterion variable, Y.

Although the use of categorical predictors simplifies the algebra, it obviously precludes consideration of continuous predictors. There are many cases where adopting this strategy would either not be possible or would force us to incorrectly simplify our representation of the data. In the current example, the researchers might be tempted to perform median splits, classifying participants as either high or low in prejudice, and classifying targets as either high or low in Afrocentricity. This tactic is problematic because it ignores meaningful variability in the predictors. Given that this approach cannot handle continuous predictors, we do not include it in the simulations below.

New approaches

We now describe three distinct approaches for estimating effect sizes. All three approaches are flexible in that they accommodate more complex models and more complex data than the approaches described above. The first approach involves deriving an effect size from the t statistic and its degrees of freedom. The second approach directly estimates error for a given model by comparing the predictions of that model with the observed data. The third approach uses random effect variances to estimate error, and it requires that the random slopes are translated into variation in the metric of the criterion variable (the dependent variable). Only the “translating random slopes” approach, described below, probably counts as completely new, but we have not seen the others examined in the literature.

Converting t “back” to η2 (tback)

In ordinary least squares analysis, if we know the degrees of freedom, there is a simple translation from η2 to the t statistic (Judd, McClelland, & Ryan, 2017a):

And so, of course, we can also translate t back to η2:

In mixed-effects models, we do not know the degrees of freedom, but there are various techniques for estimating them, such as Satterthwaite’s approximation. One easy way to estimate an effect size, then, is to derive η2 from the formula above. For example, based on the Satterthwaite method, Model A1’s output shows that the test of Afrocentricity yielded, t(2.857) = −2.179 Accordingly, we can compute

We will refer to this approach as “tback.” We want to point out that this approach yields what is called an “operative” rather than a raw effect size. In this paper, we generally consider the magnitude of an effect as the proportion of variance it explains relative to the error variance in some Model C. An operative effect size is defined differently. It is assessed relative to the variability in the effect of interest (Cohen, 1988, p. 48). For example, in a repeated measures t test, say using a pre-post design, the “raw” effect size compares the difference in the means (or in the case of η2, the variance explained by that difference) to all the variability in both the pre- and post-measures. The operative effect size, by contrast, compares the mean difference to the variance of the difference score, itself. Because the t test reflects exactly that operative comparison, the effect size derived from the t is an operative effect size. This difference means that the tback approach will, in some cases, estimate a very different kind of effect size than the other approaches we consider. This estimate will typically be larger than the corresponding raw effect because it ignores some of the variance in the data.

Critically, this approach can be used for any test that can be represented as a t (or really, an F) statistic. It can easily incorporate random intercepts and random slopes, it can test hierarchical/nested and/or crossed random factors, it accommodates categorical and/or continuous predictors, it accommodates multiple, correlated predictors for a given factor, it does not require the use of centered and contrast-coded predictors, and it makes no assumptions that there are equal numbers of observations associated with each participant or each stimulus. This approach is also incredibly easy to implement, and it is one of the few realistic, feasible ways to estimate an operative effect.

Drawbacks to the tback approach involve instability and bias, as we will see in the simulations, below. First, for small samples, it can be less stable and can systematically overestimate the effect. (Note that tback estimates an operative effect size, which is larger than the raw effect. But its estimates are inflated even relative to this standard.) Second, although tback provides reasonable estimates for some kinds of effects, it seems to fail dramatically for others.

Direct Estimation of Error (DEE)

Directly estimating the error of a model is fairly simple. Note that the challenges inherent in the several strategies emerge because they attempt to use the estimated variances of the random effects and the residual to estimate the unexplained variability in the observed scores. The DEE approach obviates this process and directly estimates the error variability in the data. Assuming Models A and C have been fitted, there are five steps.

-

1)

Derive predictions for Model A, \( {\hat{Y}}_{Aij} \), for each observation based on the fixed-effects portion of the model.

-

2)

Repeat the process for Model C: find \( {\hat{Y}}_{Cij} \).

-

3)

Compute the sum of squared error in Model A by comparing each prediction to the actual value in the data, squaring the difference, and adding up the squared deviations:

$$ {SSE}_A=\sum \limits_{j=1}^n\sum \limits_{i=1}^m{\left({Y}_{ij}-{\hat{Y}}_{Aij}\right)}^2 $$ -

4)

Repeat the process for Model C:

$$ {SSE}_C=\sum \limits_{j=1}^n\sum \limits_{i=1}^m{\left({Y}_{ij}-{\hat{Y}}_{Cij}\right)}^2 $$ -

5)

Compute the effect size:

$$ {\eta}_{DEE}^2=\frac{\left({SSE}_C-{SSE}_A\right)}{SSE_C} $$

The only (minor) complication to this approach is the code for estimating the predicted values from the fixed effects portion of a given model without adjusting for the random effects, and the solution is easily realized with the “re.form” option shown in Fig. 5.

In R, one can estimate two nested models of interest as usual, say Model A1 and Model C1.afr. In this comparison, we test what many people think of as the “main effect” of Afrocentricity. Really, this is the simple effect of Afrocentricity for a participant with an average level of prejudice. Afrocentricity shows a weak trend (p = 0.12) in Model A’s tests of the individual predictors, such that, for a participant with average prejudice, more Afrocentric faces receive lower ratings. For η2, one can think of the raw effect size as an answer to the question, of all the variability in the data, what proportion is accounted for by target-level differences in Afrocentricity? Model C1.afr (again, named for the effect that it drops) forces the simple effect of Afrocentricity to be zero and eliminates associated random slopes (so differences between participants in the effect of Afrocentricity are not allowed). After estimating both models, one can request the predicted values for each and add them to the dataset using the predict function and specifying that those predictions should not include the random effects (see below). Assuming we have a data frame called d, and we have estimated Model A1 (m.a1) and Model C1.afr (m.c1.afr), steps 1 through 5 are implemented in Fig. 5. When we began this work, we were not aware of the rsquared function embedded in the piecewiseSEM R package (Lefcheck, 2016), which seems to be fundamentally congruent with this approach.

In the current example, we find

Like tback, this approach is extremely flexible. It can be used for any appropriately nested model comparison. For example, it can test an omnibus or a partial effect, incorporate random intercepts and random slopes, test hierarchical/nested and/or crossed random factors, etc. We will review strengths and weaknesses more extensively in the Conclusions. The DEE approach is essentially unconcerned with the structure of the models or the data. It simply uses two nested models (Models A and C) to derive predictions from the fixed effects and compares those predictions to the observed data to determine how much each model deviates from the actual observations.Footnote 4

There are several drawbacks to the DEE approach. First, like tback, for small samples, it can be less stable and can systematically overestimate the effect. Second, in datasets where the number of observations differ across levels of a random factor (e.g., there are 100 data points for one participant and only 10 from another), the result will be more heavily influenced by levels with more data. Third, our simulations, reported below, suggest that the estimates for crossed designs can be inflated when the number of subjects (n) and stimuli (m) differ.

Translating random slopes (TRS)

Like existing approaches, the TRS approach uses random effect variances to estimate error. Rather than simplifying the models (allowing only random intercepts, as in Snijders & Bosker, 2011) or simplifying the data (allowing only categorical predictors, as in Judd and colleagues, 2017), this approach deals directly with the tricky math, translating variance in random slopes into error variance in the metric of the criterion variable. The approach relies on the idea that a regression slope, b, provides a way to translate a change in some predictor, X, into a change in our prediction for the criterion, Ŷ. Conceptually, we can apply this basic idea to random slopes in a mixed-effect model (e.g., the effect of target Afrocentricity on Pamela’s ratings), and by aggregating across all levels of a random factor, estimate the variance in the outcome that is reflected in those random slopes. The derivation of this approach is explained in Appendix A (in the online supplement) and concludes with the idea that we can estimate the error variance associated with a random slope for a given predictor, x, based on our sample sizes for participants (n) and stimuli (m), the variance of the predictor (\( {\sigma}_x^2 \)), and the variance of the random slopes associated with that predictor (\( {\sigma}_b^2 \)). After computing error variances for all the random slopes, we can sum them to find the error for the model in a manner similar to the SBX approach. The formula to translate a slope variance into error variance appears below.

There are several drawbacks to the TRS approach. First, the math is tractable but tedious. Because the process can be automated, this issue is easy to overcome. Second, in real-world data, factors are often crossed imperfectly, such that participants do not all respond to every stimulus in the dataset or do not respond to the same number of stimuli (a messy organization or an unbalanced design). In that case, the assumption that the variance of some stimulus-level predictor is constant across participants (or that the variance of some participant-level predictor is constant across stimuli) may be violated. Third and fourth (similar to the DEE approach), TRS estimates are unstable with small samples and can be inflated when n and m differ.

Review of Approaches

We have discussed five different approaches that can, perhaps with minor adjustments, be used to estimate effect size for crossed random factors and with models that allow for both continuous and categorical predictors. In the next section, we will test these approaches in simulations for which we know the true size of the effect.

We feel it is important to highlight several critical aspects of the analyses. First, all the predictors in these models were mean-centered, which will reduce the correlation between the intercepts and slopes on average. If the predictors were not mean-centered, the information in the two random-effect variances would be partially redundant. Second, in all models, we eliminated random slopes for any fixed effects that were dropped from Model C.

We also want to acknowledge that applying these approaches to real-world data will sometimes be much more complicated than applying them to the example we have been considering (with six perceivers and four targets). We have included Appendix B (in the online supplement), which applies the SBX, DEE, and TRS approaches to a real dataset, and in so doing highlights some of the complexities. This example dataset involves three crossed factors, but the data are not perfectly crossed, and some data are missing.

Simulations

We simulated data to examine the performance of each of the methods that is amenable to a continuous predictor: Snijders and Bosker (SBX), Selya, the back translation from t (tback), DEE, and TRS. In these simulations, we estimated partial η2 at (a) the most granular level (e.g., a cross-factor interaction involving one participant-level predictor and one stimulus-level factor, which we will call Level 1) and (b) at an aggregate level (e.g., the simple effect of a participant-level predictor, which we will call Level 2). The simulations included three different magnitudes of effect size. The true proportion of variance for the effect of interest was specified as either 0.00, 0.05, or 0.10. The simulations generated variance separately at (a) the level of participant, (b) the level of stimulus, and (c) the combination of participant × stimulus. More specifically, participant-level random intercepts were generated as a function of a standardized between-participant predictor with a slope defined as the square root of the true proportion of variance. Error was then added to these intercepts both as a function of random effect variance (with a defined proportion of variance, see below) and residual error. We repeated this process at the level of the stimulus, and again at the most granular level, which represents the combination of participant and stimulus. We then added these three components, weighting each source of variance equally. In each step of the simulation, we effectively built two separate datasets: one in which trupv was used to define the effect of the between-participant predictor, and one in which trupv was used to define the effect of the interaction of the between-participant and between-stimulus predictors.

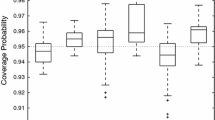

The simulations also orthogonally varied the number of participants, n, and the number of stimuli, m. We simulated datasets including levels of 10, 20, 35, 50, or 60 for each factor. Finally, we included three different magnitudes of non-focal fixed effects, reflecting the size of effects in the model other than the effect being tested, and three magnitudes of random effect variance. As with the true proportion of variance, these effects were estimated at 0.00, 0.05, or 0.10. All of these factors were crossed, yielding 2 (level of analysis) × 5 (n) × 5 (m) × 3 (true effect) × 3 (fixed effect) × 3 (random effect), or 1350 different scenarios reflecting the various combinations of parameters. For each scenario, we simulated 500 studies. In each “study,” we computed effect size estimates using all viable approaches (tback, DEE, TRS, etc.). The means and standard deviations of each approach for each scenario are plotted in Figs. 6 and 7.

Results of each approach used to estimate a “Level-2” simple effect for a participant-level predictor at different values of true η2 (.00, .05, .10), n, and m (10, 20, 35, 50, 60). In addition, we varied the magnitude of both the other fixed and random effects (.00, .05, .10). Trupv = proportion of variance accounted for by effect of interest; n = number of participants; m = number of stimuli; fixpv = proportion of variance of fixed effects; rndpv = proportion of variance of random effects. Note that tback should be compared to a standard that is 3× the value of trupv. The error bars represent ±1 standard deviation.

Results of each approach used to estimate a “Level-1” cross-factor interaction (one participant-level predictor and one stimulus-level factor) at different values of true η2 (.00, .05, .10), n, and m (10, 20, 35, 50, 60). In addition, we varied the magnitude of both the other fixed and random effects (.00, .05, .10). Trupv = proportion of variance accounted for by effect of interest; n = number of participants; m = number of stimuli; fixpv = proportion of variance of fixed effects; rndpv = proportion of variance of random effects. Note that tback should be compared to a standard that is 3× the value of trupv. The error bars represent ±1 standard deviation

There are two primary criteria for evaluating the results. One involves bias. For an unbiased estimate, the mean should be close to the true effect size, trupv (or trupv × 3 for tback, see below). The other involves reliability. For a reliable estimate, the standard deviations should be small.

Clearly, the Selya estimates are inflated and unreliable at both levels of analysis. This inflation is exactly what we would predict because this approach estimates a conditional effect size that does not treat random effect variance as error. Because it reduces the error in both models, the proportional reduction is overestimated: each nonzero effect (trupv = .05 or .10) appears to account for a larger proportion of error than it should.

With regard to tback, recall that the approach necessarily yields an operative effect size. For example, the simple effect of a predictor like prejudice on perceiver ratings, which varies from participant to participant, would be estimated relative to only the between-participant variation. Variation due to stimulus or the stimulus × participant interaction would be ignored. We therefore expect tback to yield higher estimates of effect size. However, given the structure of our simulations, which combine three separate, equally weighted sources of variance, we know that the operative effect size is always three times as large as the raw effect size. For example, when the raw effect size (trupv) shown in the figures is .05, the operative effect will be .15. We must evaluate the tback estimates relative to that altered standard. Interestingly, the tback approach seems to fare reasonably well for Level-2 estimates. It is less reliable and somewhat inflated at low sample sizes, but it tends toward the true operative effect as the sample increases. The Level-1 estimates, however, are severely biased and extremely variable. It is also interesting to note that the magnitude of the problem increases as sample size increases (the opposite of the increased accuracy and precision we typically observe as a function of larger samples). This Level-1 pattern casts some doubt on the viability of the tback approach, and we are uncertain about how it will perform in more complex models.

The pattern of DEE and TRS is similar to that of tback. These approaches perform quite well at Level 2. Their standard deviations decrease as the sample sizes increase (this is reflected in the pronounced triangular pattern, with reduced variation at the right side of the plots), but this effect is not surprising and simply suggests that the estimates are more reliable for larger samples. DEE does show some bias, tending to overestimate the Level-2 effect, but only when the samples are very small. In the estimates of the Level-1 effect, the pattern seems more worrisome. DEE and TRS both show an odd, non-monotonic pattern of bias that seems to stem from an imbalance between the number of participants and stimuli. Whenever the two sample sizes differ, the estimates of η2 are inflated. To estimate a Level-1 effect with these approaches, it may be possible to perform an adjustment. For example, the original effect size estimate might be multiplied by the square root of the ratio of the smaller sample size to the larger sample size. For example, if a study uses 63 participants, but only 25 stimuli, the DEE and TRS estimates of a Level-1 effect might be multiplied by \( \sqrt{\frac{25}{63}} \). Figure 8 shows the results before and after this adjustment is applied, collapsing across all other factors in our simulations. The adjusted estimates are much closer to the true values and show less systematic deviation from those values (the adjustment even eliminates the triangular pattern of increasing reliability at higher sample sizes). Ultimately, however, we are concerned about both approaches. In our initial simulations with three crossed random factors (not reported here), the problems seem to become more severe, and it is not clear how to adjust the estimates appropriately. It may be valuable for future work to explore whether these approaches can be amended to provide better estimates.

In stark contrast to the other approaches, SBX seems relatively unbiased and reliable across the range of parameters we tested (level of analysis, sample size, imbalance in sample size, magnitude of effect of interest, magnitude of effects of disinterest). Like most of the approaches, it shows better reliability with larger samples, but again, this is not surprising. We have also run preliminary tests of this method in data with three crossed random factors. Even in those more complex datasets, SBX seems to provide remarkably accurate estimates.

Conclusions

We reviewed several existing approaches for estimating partial η2 (and other effect sizes) in mixed-effects models. With minor adaptations, two existing approaches can easily handle complex models involving continuous or categorical predictors and crossed random factors (SBX, Selya). In addition, we outlined three new approaches (tback, DEE, and TRS). We then tested all five approaches in a large simulation. The results of that simulation suggest that most of these approaches demonstrate some degree of bias. In particular, they tend to overestimate the true effect. One approach clearly outperformed the others. The SBX approach estimates error based on models that allow only random intercepts. In these models, error variance that would (and should) be reflected in random slopes is allocated to the residual error term. By combining random intercept variance and the residual, this approach seems to accurately capture the model’s overall error even though it represents some portions of that error in the “wrong” location. The cause of the advantage to this approach is not immediately clear to us, but it may derive from its simplicity. For example, the TRS approach may encounter problems because it relies on nuanced estimates of random slope variances and variances in predictors and thus may essentially be overfit to the sample. By contrast, SBX estimates only a few, potentially more stable pools of variance reflecting major factors in the design. In any case, the SBX approach seems remarkably effective across a range of situations, and it offers an exceptionally easy strategy for estimating effect size.

It is important to highlight a critical feature of effect sizes in mixed-effects models: they are much smaller than most researchers would expect. For example, an ordinary least squares analysis an effect that yielded, t(29.81) = 2.445, would yield η2 = 0.167. In a mixed-effects model, that effect size might be only .001–.002 (see Appendix B in the online supplement for an example with real data). It is imperative to remember that these effect sizes represent the variance explained across all of the observations in the dataset. As noted above, these raw effect sizes can be contrasted with operative effect sizes, which represent the explanatory power of a predictor in relation to the test-relevant variance. For example, a variable that explains participant-level variation (does one participant’s mean differ from another participant’s mean?) might be compared not to all of the variation in the dataset, but rather only to variation in participant means. Of the strategies we explored, operative effect sizes can only be computed based on the tback and TRS approaches, but for TRS, that process is very complicated. As it stands, with raw effect sizes in mixed-effects models, researchers and reviewers and editors will need to adjust their expectations away from Cohen’s definitions of small, medium, and large (η2’s of .01, .06, .14), recognizing that those definitions simply do not apply to mixed-effects models.

In general, the SBX approach seems to offer a flexible and simple way to estimate effect sizes in complex mixed-effects models. It clearly seems like the overall winner in our simulations. There are some tradeoffs, however, between the various approaches we considered, and there may be times when a researcher might reasonably want to use tback, DEE, or TRS. Whereas SBX requires the researcher to derive an effect size estimate from a mis-specified model (one that ignores the random slopes), DEE and TRS derive their estimates from the fully specified analytic models being tested. DEE explicitly handles extremely complex models (including multiple correlated predictors, imperfectly crossed data, etc.) and so maximizes flexibility. Unfortunately, in datasets with two crossed factors, it is somewhat biased for small samples, tending to overestimate the effect. TRS is less biased with small samples and can, in principle, be used to estimate an operative effect, but it is also much less flexible. More worrisome, both DEE and TRS may be severely biased in data involving three or more crossed factors. There are other dimensions on which these strategies—and the other approaches we have discussed—can be compared. Table 2 outlines our understanding of some advantages and disadvantages.

Again, the SBX approach seems to succeed on most dimensions. The only real drawbacks we see for SBX are that (a) it involves asking researchers to intentionally mis-specify their models, such that the models used to derive the effect size will differ from the models used to correctly derive statistics like t, p, etc., and (b) it cannot be used to compute operative effect size in models with random slopes because it allocates slope variance to the residual error term. Still, in spite of its simplified random effects, the SBX approach provides almost stunningly accurate (unbiased and reliable) estimates of partial η2, and those estimates are incredibly easy to derive. It seems to provide a good general solution.

DEE direct estimation of error, TRS translation of random slopes

Though we are excited about these approaches, and though we feel they provide a way for researchers to quantify and conceptualize the magnitude of the effects they observe, we want to acknowledge that standardized effect sizes are somewhat troublesome in principle. They conflate aspects of the method and design with the magnitude of the “effect.” For example, an independent variable, x, with a large variance in one study (versus low variance in another), and a dependent variable, y, that is measured reliably in one study (versus unreliably in another) will demonstrate a larger standardized effect size, like η2, even though the slope relating x to y is identical across the two studies. Ultimately, these measures provide only an imperfect estimate of the construct of interest.

Still, journals often require effect sizes. Two of the authors recently faced a situation in which we were asked to report a non-optimal ordinary least squares analysis rather than the more appropriate mixed-effects analysis so that we could conform to the journal’s reporting requirements. Effect sizes may also help people conceptualize the impact of predictors in their own data, or across multiple datasets (as in a meta-analysis). The utility of standardized effects may be bounded, but they do offer an important lens. The SBX approach, in particular, may help researchers estimate effect sizes accurately for the data and models they need to test.

Notes

All supplemental files including both appendices; R code to generate the example dataset, simulations, and all figures; and materials for the analysis of real-world dataset can be found at https://osf.io/p5v6y/?view_only=fcdffbd2335b419487b452907ce2c3d0

Though we focus on estimates of partial η2, note that one could also obtain an estimate of the semi-partial η2 by finding the reduction in error (error for Model C2.pxa − error for Model A2) and dividing by the error for Model C2.all. A similar strategy could be implemented for many of the approaches discussed below.

Actually, the approach offered in this paper does not directly compare the two estimates of error. Rather, each estimate is compared to the estimate of error from the null model (with no predictors) to derive two separate η2’s. Those η2’s are then compared to each other. This roundabout approach adds an unnecessary step, but it does not alter the result

This approach is conceptually similar to Efron’s procedure for estimating a pseudo-R2 effect size for logistic regression. In logistic regression, the criterion variable is categorical and, in the simplest case, can be represented as either a 0 or a 1 (e.g., yes or no, sick or healthy). Efron’s approach involves finding a predicted probability for each data point and computing the difference between that probability (which ranges continuously from 0 to 1) and the observed data (which is represented as either 0 or 1, with no intermediate values). It has been observed that, because the predictions and observed data have different scaling, interpretation of Efron’s pseudo-R2 is not intuitive. In the case of a hierarchical or multilevel model with a continuous criterion variable, however, this approach is simple and interpretable in terms of squared error

References

Cohen, J. (1988) Statistical Power Analysis for the Behavioral Sciences (2nd edn), Lawrence Erlbaum Associates.

Johnson, P. C.D. (2014). Extension of Nakagawa & Schielzeth's R2GLMM to random slopes models. Methods in Ecology and Evolution, 5(9), 944-946. https://doi.org/10.1111/2041-210X.12225

Judd, C. M., McClelland, G. H., & Ryan, C. S. (2017a). Data analysis: A model comparison approach to regression, ANOVA, and beyond. Routledge.

Judd, C. M., Westfall, J., & Kenny, D. A. (2017b). Experiments with more than one random factor: Designs, analytic models, and statistical power. Annual Review of Psychology, 68, 601-625.

Lefcheck, Jonathan S. (2016) piecewiseSEM: Piecewise structural equation modeling in R for ecology, evolution, and systematics. Methods in Ecology and Evolution, 7(5), 573-579. https://doi.org/10.1111/2041-210X.12512

Luo, W., & Kwok, O-M. (2010). Proportional Reduction of Prediction Error in Cross-Classified Random Effects Models. Sociological Methods & Research, 39(2), 188-205. https://doi.org/10.1177/0049124110384062

Selya, A. S., Rose, J. S., Dierker, L. C., Hedeker, D., & Mermelstein, R. J. (2012). A practical guide to calculating Cohen’s f2, a measure of local effect size, from PROC MIXED. Frontiers in psychology, 3, 111.

Snijders, T. A., & Bosker, R. J. (2011). Multilevel analysis: An introduction to basic and advanced multilevel modeling. Sage.

Rights, J. D., & Sterba, S. K. (2019). Quantifying explained variance in multilevel models: An integrative framework for defining R-squared measures. Psychological Methods, 24(3), 309-338. https://doi.org/10.1037/met0000184

Author information

Authors and Affiliations

Corresponding author

Additional information

Open Practices Statements

R code and data to reproduce all analyses, simulations, and figures are available at the following link: https://osf.io/p5v6y/?view_only=4de55bba7fe34289a3aab3e5634a9794

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Correll, J., Mellinger, C. & Pedersen, E.J. Flexible approaches for estimating partial eta squared in mixed-effects models with crossed random factors. Behav Res 54, 1626–1642 (2022). https://doi.org/10.3758/s13428-021-01687-2

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13428-021-01687-2