Abstract

This article presents subjective rating norms for a new set of Stills And Videos of facial Expressions—the SAVE database. Twenty nonprofessional models were filmed while posing in three different facial expressions (smile, neutral, and frown). After each pose, the models completed the PANAS questionnaire, and reported more positive affect after smiling and more negative affect after frowning. From the shooting material, stills and 5 s and 10 s videos were edited (total stimulus set = 180). A different sample of 120 participants evaluated the stimuli for attractiveness, arousal, clarity, genuineness, familiarity, intensity, valence, and similarity. Overall, facial expression had a main effect in all of the evaluated dimensions, with smiling models obtaining the highest ratings. Frowning expressions were perceived as being more arousing, clearer, and more intense, but also as more negative than neutral expressions. Stimulus presentation format only influenced the ratings of attractiveness, familiarity, genuineness, and intensity. The attractiveness and familiarity ratings increased with longer exposure times, whereas genuineness decreased. The ratings in the several dimensions were correlated. The subjective norms of facial stimuli presented in this article have potential applications to the work of researchers in several research domains. From our database, researchers may choose the most adequate stimulus presentation format for a particular experiment, select and manipulate the dimensions of interest, and control for the remaining dimensions. The full stimulus set and descriptive results (means, standard deviations, and confidence intervals) for each stimulus per dimension are provided as supplementary material.

The human face plays a fundamental role in social interaction. The perception of facial attributes of our conspecifics, for instance, seems crucial for evaluating whether a person is approachable or avoidable (Oosterhof & Todorov, 2008). Indeed, many of the inferences, judgments, and decisions we make about other people are based on their physical appearance, namely their facial features (for an extensive review, see Calder, Rhodes, Johnson, & Haxby, 2011).

Faces communicate a variety of information about a person, from gender, ethnic background, and age, to affective states. For example, people form personality impressions from the facial appearance of other individuals, a process often based on rapid, intuitive, and unreflected mechanisms (Ferreira et al., 2012). Evidence for the validity of the information inferred from facial appearance is mixed, with some studies suggesting that these inferences can be fairly accurate, and others showing that facial cues are often misinterpreted (Olivola & Todorov, 2010b; Todorov, Olivola, Dotsch, & Mende-Siedlecki, 2015). Whether accurate or not, people do act upon this information with consequential effects in a variety of domains including mate choices, economic decisions, sentencing decisions, and occupational and electoral success (for reviews, see Todorov, 2012; Todorov et al., 2015).

In the specific domain of face recognition, research has been conducted in a wide range of topics, including identity perception (e.g., Grill-Spector & Kanwisher, 2005), emotion recognition (e.g., Calvo & Nummenmaa, 2016; Russell, 1994), gender discrimination, and age recognition (e.g., M. G. Rhodes, 2009; T. Watson, Otsuka, & Clifford, 2015). These topics have been investigated by means of a variety of methods, including behavioral, cognitive, computational, and neuroimaging (see Calder et al., 2011). Moreover, faces have also been used as stimulus materials in a multiplicity of research areas, including emotion (e.g., Ekman & Friesen, 1971), mimicry (e.g., Hess & Fischer, 2013), emotional contagion (e.g., Hess & Blairy, 2001), interpersonal attractiveness (e.g., Olson & Marshuetz, 2005), weight estimation (e.g., T. M. Schneider, Hecht, & Carbon, 2012), affective priming (e.g., Murphy & Zajonc, 1993), impression formation and person memory (e.g., Todorov et al., 2015), communication and intergroup relations (e.g., Van der Schalk, Fischer, et al., 2011), and eyewitness identification (e.g., Lindsay, Mansour, Bertrand, Kalmet, & Melsom, 2011), and in the study of neuro- and psychological disorders such as autism, prosopagnosia, schizophrenia, and mood disorders (e.g., Behrmann, Avidan, Thomas, & Nishimura, 2011).

Considering the importance and extensive use of faces as stimulus materials, the availability of validated sets acquires utmost importance for the scientific community. The variety of these sets is also important, not only in terms of model features (e.g., age, sex, ethnicity, facial expression) and the dimensions included in the validation procedures (e.g., valence, clarity), but also in stimulus formats (e.g., stills, videos). However, despite the fact that in our daily interactions we often perceive people in motion, most of the available databases include static facial images, which may challenge their ecological validity (e.g., Koscinski, 2013; G. Rhodes et al., 2011; Van der Schalk, Hawk, et al., 2011), and even fewer compare the same faces in different formats (i.e., static vs. dynamic).

In this article, we develop and validate a new set of Stills And Videos of facial Expressions (the SAVE database) that provides norms for the same model displaying three facial expressions (frown, neutral, and smile). The motivations to develop these norms were to validate a stimulus set in both still and video formats and across a wide range of relevant evaluative dimensions, as well as to contribute to the phenotypic diversity of the models included in these types of databases. These norms will be useful for different experimental paradigms, particularly when the manipulation (and strict control) of the stimulus characteristics and presentation format is required by the varying demands of different researchers. The review of the available databases presented in the subsequent section will further clarify the relevance of the present work.

Facial expressions databases

The available literature already offers a large number of validated databases of facial expressions (for a review, see Bänziger, Mortillaro, & Scherer, 2012; for an extensive list, see www.face-rec.org/databases/). These databases are highly diverse regarding model characteristics (e.g., human vs. computer-generated, age, ethnicity, nationality, professional actors or amateur volunteers), expressions portrayed (e.g., specific emotions or mental states), stimulus format (stills or videos), and validation procedures (e.g., coding systems, sample characteristics, and evaluative dimensions included).

Most of the reviewed databases include real human models, with a few including morphed human faces (e.g., Max Planck Institute Head Database: Troje & Bülthoff, 1996) and even avatars (e.g., Fabri, Moore, & Hobbs, 2004). We will focus on databases that include real human models. Among these, some include professional actors (NimStim Set of Facial Expressions: Tottenham et al., 2009), but most use lay volunteers that are either extensively trained (e.g., in using explicit guidelines regarding the optimal representation of the intended facial expressions; Ebner, Riediger, & Lindenberger, 2010) or coached by the experimenters (e.g., by encouraging models to imagine situations that would elicit the intended facial expressions; Warsaw Set of Emotional Facial Expression Pictures—WSEFEP: Olszanowski et al., 2015).

Also, the models portrayed in the different databases are highly diverse in terms of age. For example, some databases exclusively include stimuli portraying children, such as the NIMH Child Emotional Faces Picture Set (Egger et al., 2011), the Dartmouth Database of Children’s Faces (Dalrymple, Gomez, & Duchaine, 2013), or the Child Affective Facial Expression set (LoBue & Thrasher, 2015). Yet, most databases include young to middle-aged adults (e.g., the Karolinska Directed Emotional Faces—KDEF: Lundqvist, Flykt, & Öhman, 1998; NimStim: Tottenham et al., 2009; WSEFEP: Olszanowski et al., 2015) or older adults (e.g., FACES: Ebner et al., 2010).

Face databases also vary in the nationality and ethnicity of the models. For instance, they include Argentinian (Argentine Set of Facial Expressions of Emotion: Vaiman, Wagner, Caicedo, & Pereno, 2015), Chinese (Wang & Markham, 1999), Polish (WSEFEP: Olszanowski et al., 2015), or Swedish models (Umeå University Database of Facial Expressions: Samuelsson, Jarnvik, Henningsson, Andersson, & Carlbring, 2012). Regarding the models’ ethnicity, most databases include exclusively (e.g., Radboud Faces Database—RaFD: Langner et al., 2010; FACES: Ebner et al., 2010; KDEF: Lundqvist et al., 1998) or a majority (the McEwan Faces: McEwan et al., 2014; NimStim: Tottenham et al., 2009) of white or European descent models (for exceptions, see, e.g., the Chicago Face Database—CFD [Ma, Correll, & Wittenbrink, 2015] and the Japanese and Caucasian Facial Expression of Emotion—JACFEE [Matsumoto & Ekman, 1988]).

Regarding the facial expressions portrayed by the models, most stimulus sets include at least a subset of the following emotions: anger, disgust, fear, happiness, sadness, surprise, contempt (e.g., Pictures of Facial Affect: Ekman & Friesen, 1976; JACFEE: Matsumoto & Ekman, 1988; RaFD: Langner et al., 2010; FACES: Ebner et al., 2010), and some also include neutral facial expressions (e.g., NimStim: Tottenham et al., 2009). Others further include embarrassment, pride and shame (University of California, Davis, Set of Emotion Expressions: Tracy, Robins, & Schriber, 2009), or kindness and critical facial expressions (e.g., McEwan et al., 2014). Some databases also contain body expressions (e.g., Bochum Emotional Stimulus Set: Thoma, Soria Bauser, & Suchan, 2013; Bodily Expressive Action Stimulus Test: de Gelder & Van den Stock, 2011).

Regarding the validation procedures, a few databases resort to highly trained raters (using facial action units to evaluate the expressions; e.g., Ekman & Friesen, 1977, 1978), whereas others have used samples of untrained volunteers (e.g., CAFE: LoBue & Thrasher, 2015; NimStim: Tottenham et al., 2009).

Most validation studies have only assessed a limited set of dimensions. Indeed, validation procedures usually focus on emotion recognition, either using forced choice tasks (e.g., Vaiman et al., 2015) or rating scales (e.g., agreement with items such as “This person seems to be angry”; Samuelsson et al., 2012). To our knowledge, only a few exceptions go beyond emotion recognition. For example, the CFD (Ma et al., 2015) also includes target categorization measures (age estimation, racial/ethnic categorization, gender identification), and a set of subjective ratings (e.g., threatening, masculine, feminine, baby-faced, attractive, trustworthy, unusual) as well as objective physical facial features (e.g., nose width, lip thickness, face length, distance between pupils). Likewise, the RaFD (Langner et al., 2010) includes measures of intensity, clarity and genuineness of expression as well as overall valence and target attractiveness.

Another distinctive feature in the available face databases is stimulus format. Most databases include static stimuli (i.e., stills or photographs of facial expressions). However, a few video databases have recently been developed and validated (e.g., MAHNOB Laughter Database: Petridis, Martinez, & Pantic, 2013; Cohn–Kanade AU-Coded Facial Expression Database: Kanade, Cohn, & Tian, 2000; Geneva Multimodal Emotion Portrayals Core Set: Bänziger et al., 2012; and the Amsterdam Dynamic Facial Expression Set: Van der Schalk, Hawk, et al., 2011). For example, ADFES includes brief videos (maximum 6.5 s) of North-European (Dutch) and Mediterranean (second- or third-generation migrants of Turkish or Moroccan descent) models displaying joy, anger, sadness, fear, disgust, surprise, contempt, pride, and embarrassment. These videos were evaluated regarding emotion recognition and model ethnicity, but also in other dimensions such as overall valence, arousal as well as perceived directedness, perceived causation of the emotion, liking and approach–avoidance. Another example is the EU-Emotion Stimulus Set (O’Reilly et al., 2016), which includes videos (2–52 s long) of a broader set of 20 emotions/mental states (e.g., afraid, happy, sad, bored, jealous, and sneaky), and also body gestures and contextual social scenes. The videos were assessed regarding emotional display (forced choice task), valence and intensity of the expression, and the arousal felt by the participants upon exposure to a given video.

Static versus dynamic facial expressions

Most studies addressing the processing of facial information have used static facial stimuli. However, the extensive use of these types of stimuli has recently been questioned (e.g., Horstmann & Ansorge, 2009; Roark, Barrett, Spence, Abdi, & O’Toole, 2003). Specifically, the critiques refer to the low ecological validity of static stimuli, namely because they lack the temporal aspects of facial motion that are relevant for the recognition of facial expressions (e.g., Alves, 2013; O’Reilly et al., 2016). Presentation format might thus affect the amount of information that is retrieved from a given stimulus (e.g., Langlois et al., 2000).

Studies comparing static and dynamic facial expressions (with the latter referring to the buildup of a facial expression from a baseline expression to the full display of the emotion) are scarce. However, the few studies that have compared participants’ ability to recognize expressions evolving through time with their ability to recognize static images of full expressions (e.g., Cunningham & Wallraven, 2009; Fiorentini & Viviani, 2011) have reported interesting results. For example, perceivers performed better when pictures of emotional displays were presented in sequence than with a static presentation (Wehrle, Kaiser, Schmidt, & Scherer, 2000). Moreover, some studies have suggested that perceivers exposed to dynamic (vs. static) emotional displays were more physiologically aroused and exhibited greater facial mimicry (for a review, see Rymarczyk, Żurawski, Jankowiak-Siuda, & Szatkowska, 2016).

But these studies have yielded far from consensual results. For example, some studies reported that dynamic stimuli offer processing advantages (e.g., Ambadar, Schooler, & Cohn, 2005; Bould & Morris, 2008; Cunningham & Wallraven, 2009; Wehrle et al., 2000), namely due to the salience of emotional expressions during dynamic stimuli exposure (e.g., Horstmann & Ansorge, 2009; Rubenstein, 2005). Other studies suggested the contrary (e.g., Fiorentini & Viviani, 2011; Katsyri & Sams, 2008). Yet others indicated no difference between the effects of different stimulus presentation formats. For example, Gold and colleagues (2013) observed that the dynamic properties of facial expressions play a very small role in the perceivers’ ability to recognize facial expressions. Also, the results from Hoffmann, Traue, Limbrecht-Ecklundt, Walter, and Kessler (2013) suggest that stimulus presentation format does not influence the overall recognition of emotions, although the recognition of specific emotions (e.g., surprise and fear) seems to benefit from dynamic presentation. In the context of research on facial attractiveness, static versus dynamic stimuli comparisons did not yield differences in the evaluation of target attractiveness (e.g., Koscinski, 2013; G. Rhodes et al., 2011).

However, the disparity of results across studies may derive from confounds between the amount of information conveyed by static versus dynamic stimuli and the perceivers’ ability to use such information (Gold et al., 2013). The lack of consistency across studies may also result from the nature of the stimulus materials used. Although some studies have used stimuli based on real human models (actors or nonactors), others included avatars or computer-edited faces (e.g., Cigna et al., 2015; Gold et al., 2013; Horstmann & Ansorge, 2009). Yet some authors (e.g., Sato, Fujimura, & Suzuki, 2008) suggest that the use of “real people” is more suitable when using dynamic stimuli.

Finally, and to the best of our knowledge, none of these databases included stimuli that matched the same facial expression across formats (i.e., stills and videos), with the facial expression set “on hold” for a fixed period of time in the video format, and compared them across several subjective dimensions. This is exactly the type of stimulus set we are presenting and validating in this article.

Overview

The present article presents a set of standardized stimulus materials of real human faces that combines important features of the available databases and that can be adjusted to specific research demands. Our database includes subjective normative ratings of stills and videos (of 5 and 10 s) of the same model displaying negative (frown), neutral, and positive (smile) facial expressions, in several relevant dimensions (attractiveness, arousal, clarity, genuineness, familiarity, intensity, valence, similarity).
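The structure of the stimulus set can be made concrete with a short sketch. It crosses the 20 models with the three facial expressions and the three presentation formats described in this article, which yields the 180 stimuli of the full set. The model identifiers and the format labels are hypothetical names introduced only for illustration; they are not the file names used in the supplementary material.

```python
from itertools import product

# Hypothetical identifiers: the article reports 20 models but not these labels.
MODELS = [f"model_{i:02d}" for i in range(1, 21)]
EXPRESSIONS = ("frown", "neutral", "smile")
# Hypothetical labels for the three formats (stills, 5 s videos, 10 s videos).
FORMATS = ("still", "video_5s", "video_10s")

# Fully crossed design: every model appears in every expression and format.
stimuli = [
    {"model": model, "expression": expression, "format": fmt}
    for model, expression, fmt in product(MODELS, EXPRESSIONS, FORMATS)
]

print(len(stimuli))  # 20 models x 3 expressions x 3 formats = 180
```

Because the design is fully crossed, any comparison between formats can be made within the same model and expression.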

We had multiple motivations for developing these norms. First, most of the existing sets include emotion recognition as the main dependent variable. Instead, in the present study we were interested in having each face evaluated in several dimensions. The normative ratings in several dimensions allow the selection of subsets of stimuli to manipulate a specific dimension (e.g., valence) while controlling for others (e.g., attractiveness), particularly in studies using faces as stimuli outside the emotion recognition domain.
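As an illustration of this kind of selection, the following sketch picks positive and negative stimuli while holding attractiveness within a fixed band. All values are invented for the example and are not SAVE norms; the field names, the function `select_stimuli`, and the cutoffs are likewise assumptions that a researcher would replace with criteria suited to the study at hand.

```python
# Invented ratings on 7-point scales, for illustration only (not SAVE data).
norms = [
    {"id": "A_smile", "valence": 5.8, "attractiveness": 4.1},
    {"id": "B_smile", "valence": 5.5, "attractiveness": 2.6},
    {"id": "C_frown", "valence": 2.3, "attractiveness": 4.0},
    {"id": "D_frown", "valence": 2.1, "attractiveness": 5.4},
    {"id": "E_neutral", "valence": 3.9, "attractiveness": 3.9},
]


def select_stimuli(norms, valence_min=None, valence_max=None,
                   attractiveness_range=(3.5, 4.5)):
    """Return stimuli inside the attractiveness band that meet the valence cutoffs."""
    low, high = attractiveness_range
    selected = []
    for stimulus in norms:
        # Controlled dimension: keep attractiveness within a narrow band.
        if not (low <= stimulus["attractiveness"] <= high):
            continue
        # Manipulated dimension: apply whichever valence cutoffs were given.
        if valence_min is not None and stimulus["valence"] < valence_min:
            continue
        if valence_max is not None and stimulus["valence"] > valence_max:
            continue
        selected.append(stimulus)
    return selected


positive = select_stimuli(norms, valence_min=5.0)
negative = select_stimuli(norms, valence_max=3.0)
print([s["id"] for s in positive], [s["id"] for s in negative])
```

With these invented values, the two resulting subsets differ in valence but are comparable in attractiveness, which is the logic of manipulating one dimension while controlling another.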

Second, and as stated above, evidence comparing emotion recognition of dynamic versus static stimuli is mixed. To our knowledge, none of the existing validated sets permits the comparison of the same model displaying the exact same facial expression in different formats (stills vs. videos) across several dimensions. Our set includes these types of stimuli. Importantly, our videos do not depict the buildup of an expression, but present a facial expression set on hold. This allows a direct comparison between stimulus formats. Moreover, we investigate the impact of these stimulus formats in several subjective dimensions.

Third, our database also includes faces with neutral expression (e.g., NimStim: Tottenham et al., 2009) that can be used as a baseline against which the effects of other facial expressions are compared. For example, the evidence that a positive or negative face prime influences performance (e.g., Murphy & Zajonc, 1993) becomes more convincing when such a baseline is used.

Finally, although previous sets have included models of different nationalities, no published set includes Portuguese models. Note that most European databases were developed and tested in Northern Europe with models that often have phenotypic features (e.g., hair or eye color) that are different from Southern European ones. Moreover, even those databases that included (the so-called) Mediterranean models (Turkish or Moroccan descendants; ADFES: Van der Schalk, Hawk, et al., 2011) may not be suitable, as the facial features of people in other Mediterranean countries in Europe (e.g., Spain, Italy, France, Greece) can be phenotypically quite different from, at least, those of Moroccan models.

In the following section, we provide an overview of the dimensions that have been reported in the literature and that were used in the present study to evaluate the faces. The relevance of these dimensions for face evaluation and their associations are also discussed. These dimensions were selected from those that are commonly used to evaluate other types of visual stimuli (e.g., symbols: Prada, Rodrigues, Silva, & Garrido, 2015; pictures [IAPS]: Lang, Bradley, & Cuthbert, 2008), as well as from face databases (e.g., RaFD: Langner et al., 2010; ADFES: Van der Schalk, Hawk, et al., 2011) that go beyond the scope of emotion recognition.

Dimensions of interest

Valence

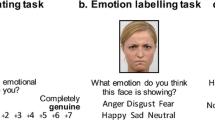

Valence is defined by the intrinsic attractiveness or aversiveness of a given stimulus (e.g., Frijda, 1986). Not only is it a basic property of emotional experience, but it is also a fundamental component of emotional responding (Barrett, 2006). Therefore, emotional valence can modulate the characteristics and intensity of emotional responses (Adolph & Alpers, 2010; Nyklicek, Thayer, & Van Doornen, 1997). This modulation is especially true for facial stimuli (Langner et al., 2010; Russell & Bullock, 1985). For example, eyebrow frowning (produced by contracting the corrugator supercilii) is associated with unpleasant experiences, and raised lip corners (produced by contracting the zygomaticus major) are associated with pleasant ones (for a review, see Colombetti, 2005). The valence of facial stimuli has been assessed in a few validation studies (e.g., Adolph & Alpers, 2010; Langner et al., 2010; McEwan et al., 2014; O’Reilly et al., 2016; Van der Schalk, Hawk, et al., 2011). In the present study, we asked participants to indicate the extent to which the expression displayed by the target was negative–positive (1 = Very negative, 7 = Very positive; e.g., Langner et al., 2010; McEwan et al., 2014; O’Reilly et al., 2016).

Arousal

Arousal emerges as a highly relevant dimension of affect, differentiating states of excitement or high activation from states of calm/relaxed or low activation (Osgood, Suci, & Tannenbaum, 1957). Arousal has been assessed in some of the available normative ratings of facial stimuli (e.g., Adolph & Alpers, 2010; Goeleven et al., 2008; McEwan et al., 2014; Van der Schalk, Hawk, et al., 2011). Some of these studies have already established that arousal interacts with other variables, as is the case with valence. Indeed, convergent empirical evidence indicates that the higher the positive or negative valence of a stimulus is, the more arousing the stimulus is perceived to be (Backs, da Silva, & Han, 2005; Barrett & Russell, 1998; Ito, Cacioppo, & Lang, 1998; Lang et al., 2008; Libkuman, Otani, Kern, Viger, & Novak, 2007). In the present study, arousal was measured for each stimulus by asking participants to indicate the extent to which the expression displayed by the target was relaxed or excited (1 = Very relaxed, 7 = Very excited; e.g., McEwan et al., 2014; Van der Schalk, Hawk, et al., 2011).

Clarity

Clarity refers to the amount and quality of the emotional information available to the perceiver (Ekman, Friesen, & Ellsworth, 1982; Fernandez-Dols, Sierra, & Ruiz-Belda, 1993). Thus, clarity is fundamental to the perception of facial expressions as well as to achieve mutual adjustment between people (e.g., Bach, Buxtorf, Grandjean, & Strik, 2009). Clarity has also been defined as referring to the reliability of the signal that permits a quick, accurate and efficient recognition of a facial expression (e.g., Tracy & Robins, 2008). Clarity is therefore often inferred from the capability for accurately identifying the emotions (Bach et al., 2009). Some studies have shown that clarity judgments depend on whether the expression of a specific emotion is genuine or simulated (Gosselin, Kirouac, & Doré, 2005). Indeed, although accuracy in judging simulated expressions is generally high (Ekman, 1982), other evidence suggests that performance in judging the clarity of genuine emotional expressions is not better than chance (Motley & Camden, 1988; Wagner, MacDonald, & Manstead, 1986). Still other studies have shown that clarity can be relatively independent of genuineness (e.g., Langner et al., 2010). Clarity is also positively related to intensity (e.g., Langner et al., 2010). In the present study, subjective clarity was measured by asking participants to judge the extent to which the facial expression displayed by the target was clear (1 = Very unclear, 7 = Very clear; e.g., Langner et al., 2010).

Intensity

The perceived intensity refers to an estimate of the magnitude of the subjective impact of an emotional event or stimulus, and is probably one of the most noticeable aspects of an emotion (Sonnemans & Frijda, 1994). Higher perceived intensity in a facial expression is likely to improve decoding accuracy, but does not necessarily lead to more intense emotional states (Adolph & Alpers, 2010). However, empirical evidence has shown that the perception of intensity in emotional expressions is not straightforward. Indeed, Hess, Blairy, and Kleck (1997) showed that high intensity was only perceived in negative facial expressions of male actors and positive facial expressions of female actors. In the present study, intensity was measured by asking participants to rate the weakness or strength of the facial expressions depicted in the stimuli presented (1 = Very weak, 7 = Very strong; e.g., Langner et al., 2010).

Attractiveness

The attractiveness of a face refers to the perceived facial appearance of a given target person (e.g., Koscinski, 2013). Some studies have already established that averageness and symmetry of a face are important characteristics for the face to be perceived as attractive (e.g., G. Rhodes, 2006). Attractive faces are also perceived as more similar (e.g., Miyake & Zuckerman, 1993), more positive (Reis et al., 1990) and more familiar (e.g., Monin, 2003). This dimension has important consequences in different interpersonal processes, such as impression formation (e.g., Eagly, Ashmore, Makhijani, & Longo, 1991), social distance (e.g., Lee, Loewenstein, Ariely, Hong, & Young, 2008), perception of mate quality (G. Rhodes, Halberstadt, & Brajkovich, 2001, 2001) and feelings of attraction (Rodrigues & Lopes, 2016). Attractiveness is one of the few dimensions in which static images and video presentations have been compared (e.g., G. Rhodes et al., 2011; Rubenstein, 2005; for a review, see Koscinski, 2013). Overall, these judgments did not differ according to presentation modality (e.g., Koscinski, 2013). Some of the existing databases include this dimension, although usually models are only evaluated when displaying a neutral expression (e.g., RaFD: Langner et al., 2010; CFD: Ma et al., 2015). In the present study we asked participants to indicate the extent to which they considered the target as attractive (1 = Very unattractive, 7 = Very attractive; Langner et al., 2010; G. Rhodes et al., 2011) across facial expressions and presentation format conditions.

Similarity

Similarity with a target refers to the perception of how similar a given target is to the individual (Byrne, 1997). Several studies have shown that similarity can refer to aspects such as attitudes, values or beliefs, personality traits or attributes such as physical appearance or physical attractiveness (Montoya & Horton, 2013). Research has shown that in the absence of additional objective information about the target, individuals tend to perceive greater similarity to themselves (Hoyle, 1993), an effect that is maintained even after an interaction with the target (Montoya, Horton, & Kirchner, 2008). Presumably, this occurs because perceived similarity helps decrease the uncertainty associated with the target (Ambady, Bernieri, & Richerson, 2000). In the present study, we asked participants to indicate the extent to which they perceived the target to be similar to themselves (1 = Not at all, 7 = Very; e.g., Norton, Frost, & Ariely, 2007).

Familiarity

The perceived familiarity with a face refers to the averageness of the physical attributes of a given target, such that the more average or prototypical a face is, the more familiar the face is perceived to be (e.g., Langlois, Roggman, & Musselman, 1994). Familiarity is highly relevant for person perception because it influences judgments in several other dimensions. For instance, more familiar targets are perceived as more similar to oneself (Moreland & Beach, 1992), elicit more positive feelings in the individual (Garcia-Marques, 1999) and greater muscular activity in accordance with these feelings (e.g., zygomaticus major; Winkielman & Cacioppo, 2001). Likewise, positive stimuli are perceived as more familiar (Garcia-Marques, Mackie, Claypool, & Garcia-Marques, 2004) and when participants contract a specific facial muscle (zygomaticus major) while looking at a stimulus, they perceive the stimulus as more familiar (Phaf & Rotteveel, 2005). In the present study we asked participants to indicate the extent to which they considered the target to be familiar (1 = Not familiar at all, 7 = Very familiar; Kennedy, Hope, & Raz, 2009).

Genuineness

The genuineness of facial expressions refers to the extent to which a given expression is considered a truthful reflection of the emotion the target is experiencing (Livingstone, Choi, & Russo, 2014). This is a highly relevant dimension for social interaction, as targets are perceived differently when portraying a genuine or a simulated emotion. For instance, targets are perceived more positively when depicting a genuine smile (e.g., Duchenne smile, possibly indicating happiness), than when depicting a forced smile (Miles & Johnston, 2007). In the present study, we asked participants to rate how faked or genuine the facial expression portrayed by the target was (1 = Faked, 7 = Genuine; Langner et al., 2010).

The brief literature review presented on the evaluative dimensions selected suggests the relevant role of each one of them (and their interactions) for a comprehensive assessment of facial expressions. In the following sections we present the development of the stimulus materials and subsequently we examine the impact of facial expression as well as the role of stimulus presentation format (i.e., stills vs. videos) on each evaluative dimension. We also present the subjective norms for each stimulus in each of these dimensions and the correlations between dimensions.

Development of the stimulus set

Method

Participants

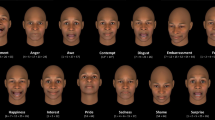

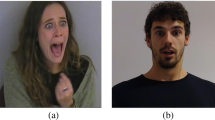

Twenty white Portuguese students (60% male; M age = 21.75 years, SD = 1.97) from different universities located in Lisbon participated in the development of the stimulus set by posing for a camera in three different facial expressions: frown, neutral, and smile. The order of the posed facial expression was counterbalanced. The procedure was conducted in agreement with the Ethics Guidelines issued by the Scientific Commission of the host institution. For their collaboration participants were compensated with a €5 voucher.

Apparatus

We used a JVC Video Camera (Model HDR-CX210E), and participants were filmed at an HD resolution of 1,080 × 1,920 pixels and a frame rate of 50p, resulting in a Mpeg file HD 422. The participants were lit from the front with a 60 cm diameter China ball with a standard 50 W light bulb, and exposure compensation in the camera was made accordingly. The China ball was placed about 60 cm above the camera at an equal distance between the camera and the participant. The lighting apparatus was used to soften the light distribution in the shooting field as well as to avoid shadows on the participants’ faces and to prevent eye frowning. There was also a foldout white reflector on a stand about 60 cm to the right of the participants providing some fill light. The shooting room had an armchair backed against a grey wall, and the camera was placed on a tripod in front of the armchair at an approximate distance of 50 cm. A small bright yellow plastic stick was glued on the top of the camera near the lens and served as participants’ eye fixation point. Participants posed for the camera for approximately 6 min, 2 min for each facial expression. Further information regarding participants’ preparation for the shooting sessions is provided below.

Procedure

The videos were recorded in late 2014 at the psychology laboratory of Instituto Universitário de Lisboa (ISCTE-IUL). Upon arrival, participants were briefed about the goals of their participation (i.e., to develop a set of visual stimuli, namely of people displaying different facial expressions) and its expected duration (i.e., 30 min). The consent form clearly stated that their collaboration was voluntary and that they could withdraw at any time. By signing the consent form participants also agreed that the resulting image and video recording databases would be made available in academic journals and could be used as stimulus materials in future studies.

All sessions were individual, and room temperature and lighting were kept constant. The experimenter asked the participants to change into a white t-shirt and to remove all accessories (e.g., jewelry, glasses) and makeup. Male participants were not instructed to remove facial hair (to have male faces that are more representative of faces people see every day; see Tottenham et al., 2009). Makeup powder foundation was applied to all participants to even out skin imperfections and control skin shine. A professional film editor with experience in directing recorded the participants for approximately 2 min per facial expression. Participants were asked to sit in an upright position in the armchair placed in front of a grey wall, facing the camera, and to focus their gaze on the fixation point set above the camera. Participants were asked to keep their mouth closed during shooting to avoid showing their teeth (e.g., Tottenham et al., 2009). During the recordings, to obtain a varied set of facial expressions (i.e., frowning, neutral, and smiling), the director referred to some scenarios, or asked participants to imagine or remember situations that would elicit the intended expression (for similar instructions, see Dalrymple et al., 2013; Olszanowski et al., 2015). For example, to obtain a smiling expression the director asked the participants to think about a funny event that they had recently experienced.

After recording each facial expression, participants responded to the Portuguese adaptation of the Positive and Negative Affect Schedule (PANAS: Galinha & Pais-Ribeiro, 2012). This measure, originally developed by D. Watson, Clark, and Tellegen (1988), assesses positive (PA) and negative (NA) affect as independent mood dimensions. Participants were presented with a list of 20 words (half PA, and the remainder NA) that described feelings and emotions and were instructed to rate to what extent they were experiencing each one (e.g., “enthusiastic,” “hostile”) at that moment using a 5-point scale (1 = Very slightly or not at all, 2 = A little, 3 = Moderately, 4 = Quite a bit, 5 = Extremely). At the end of the session participants received compensation, were thanked and debriefed.
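The PANAS scoring described here (ten PA items and ten NA items, each rated from 1 to 5, summed per scale so that each score ranges from 10 to 50) can be illustrated with a minimal sketch. The function name `score_panas` and the example responses are hypothetical and are not taken from the article's materials.

```python
def score_panas(pa_ratings, na_ratings):
    """Return (PA, NA) sum scores from two lists of ten 1-5 ratings."""
    for ratings in (pa_ratings, na_ratings):
        if len(ratings) != 10:
            raise ValueError("expected 10 items per scale")
        if any(r not in (1, 2, 3, 4, 5) for r in ratings):
            raise ValueError("ratings must be integers from 1 to 5")
    # Each scale is the plain sum of its ten items (minimum 10, maximum 50).
    return sum(pa_ratings), sum(na_ratings)


# Hypothetical responses from one model after one pose.
pa, na = score_panas(
    [4, 3, 5, 4, 3, 4, 4, 3, 5, 4],
    [1, 1, 2, 1, 1, 1, 2, 1, 1, 1],
)
print(pa, na)
```

Keeping the two scales separate, rather than computing a difference score, matches the treatment of PA and NA as independent mood dimensions.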

Results

NA and PA scores were computed for each participant according to the facial expression condition (sum of the responses, maximum 50) and analyzed in a repeated measures analysis of variance (ANOVA): 3 (Facial Expression: frown, neutral, smile) × 2 (Affect Scale: NA, PA). Both factors were manipulated within participants. The results revealed a main effect of facial expression, F(2, 38) = 13.45, MSE = 202.11, p < .001, ηp² = .414, and a main effect of the affect scale, F(1, 19) = 65.05, MSE = 3,967.50, p < .001, ηp² = .774. Importantly, the expected interaction between the two factors was significant, F(2, 38) = 13.90, MSE = 357.98, p < .001, ηp² = .422 (see Fig. 1).
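As a reading aid, partial eta squared can be recovered from an F ratio and its degrees of freedom with the standard identity F × df_effect / (F × df_effect + df_error). The sketch below applies that identity to the three effects reported above; the function name is an illustrative choice, and the article does not state how the effect sizes were computed.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    ss_ratio = f_value * df_effect  # proportional to SS_effect / MS_error
    return ss_ratio / (ss_ratio + df_error)


# Effects reported for the PANAS analysis: (F, df_effect, df_error).
reported = {
    "facial expression": (13.45, 2, 38),
    "affect scale": (65.05, 1, 19),
    "interaction": (13.90, 2, 38),
}
effect_sizes = {
    name: round(partial_eta_squared(*values), 3)
    for name, values in reported.items()
}
print(effect_sizes)
```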

Planned contrasts revealed, as expected, that frowning led to higher NA reports than smiling, t(19) = 3.38, p = .003, d = 1.55, and than posing with a neutral facial expression, t(19) = 3.20, p = .005, d = 1.49. Smiling led to higher PA reports than frowning, t(19) = 3.69, p = .002, d = 1.69, and than posing with a neutral facial expression, t(19) = 4.86, p < .001, d = 2.23. The reports of NA after posing with a neutral facial expression did not differ from those obtained in the smiling condition, t(19) = 1.09, p = .289, d = 0.50. Likewise, the reports of PA after posing with a neutral facial expression did not differ from those obtained in the frowning condition, t(19) = –1.67, p = .112, d = 0.77.
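The article does not say which formula was used for Cohen's d. One common conversion from a t statistic is d = 2t / √df, and several of the values above (for example, t = 3.38 with d = 1.55) are consistent with it. The sketch below is offered under that assumption only; the function name is hypothetical.

```python
import math


def d_from_t(t_value, df):
    """Cohen's d from a t statistic, assuming the conversion d = 2t / sqrt(df)."""
    return 2 * abs(t_value) / math.sqrt(df)


# Check the assumed conversion against three of the reported contrasts.
for t_value in (3.38, 4.86, 1.09):
    print(t_value, round(d_from_t(t_value, 19), 2))
```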

In sum, the results obtained with the PANAS indicated that posing with a given facial expression actually influenced how participants felt afterward.

Final set of stimuli

As mentioned above, each participant was filmed on average for 6 min (2 min per facial expression). Videos were then edited from their original format using Final Cut Pro for Mac (Version 7) and were converted into MOV format using Apple ProRes 422, MOS (mute of sound; pixel size 1,920 × 1,080) codec. Afterward, videos were reconverted into MOV format using H.264 codec (pixel size 1,024 × 576) in QuickTime Player for Mac (Version 10.4) to be compatible with E-Prime software.

The authors screened the 2 min of shooting of each facial expression and chose the 10 s timeframe in which the models held the intended expression, paying special attention to the final frame so as to avoid displaying eye-blinking. From the 10 s clips, 5 s clips were selected. These two standard durations were set following previous procedures (e.g., Ambady & Rosenthal, 1993; Koscinski, 2013; G. Rhodes et al., 2011) and allow for validation of facial expression recognition in short presentations (i.e., in the 5 s clips).

The stills were obtained a posteriori by freezing one frame of the 5 s clips using Final Cut Pro for Mac (Version 7), and were stored in JPG format (pixel size 1,280 × 720) with an sRGB IEC61966-2.1 color profile. Stills were then aligned and balanced for color, brightness, and contrast using Preview for Mac (Version 2.0).

The final set of stimulus materials includes 180 stimuli: 60 stills (20 frown, 20 neutral, and 20 smile), 60 5 s videos (20 frown, 20 neutral and 20 smile) and 60 10 s videos (20 frown, 20 neutral, and 20 smile).

Validation of the database

Method

Participants and design

A sample of 120 white Portuguese students (77.5 % female; M age = 20.62 years, SD = 3.39) at Instituto Universitário de Lisboa (ISCTE-IUL) volunteered to participate in a laboratory study in exchange for course credit. Participants were not acquainted with the models (as confirmed at the end of the experiment). The design included the following factors: 3 (Presentation Format: stills, 5 s videos, 10 s videos) × 3 (Facial Expression: frown, neutral, smile) × 4 (Stimulus Subsets: A, B, C, D). The last factor was manipulated between participants.

Materials

The entire stimulus set of videos and stills previously developed was used. Examples of the stills are presented in Fig. 2.

Procedure and measures

The participants were invited to collaborate in a study about person perception. The study took place at the psychology laboratory of Instituto Universitário de Lisboa (ISCTE-IUL) and was conducted using the E-Prime software. The procedure was in agreement with the Ethics Guidelines issued by the Scientific Commission of the host institution. Upon arrival, participants were informed about the goals of the study and its expected duration (approximately 20 min), that all the data collected would be treated anonymously, and that they could abandon the study at any time. After giving written consent, participants were asked to provide information regarding their age and sex.

All instructions were presented on the computer screen. Participants were asked to rate each stimulus regarding attractiveness, arousal, clarity, genuineness, familiarity, intensity, valence, and similarity (for the detailed instructions, see Table 1). All responses were given via the keyboard.

To prevent fatigue and demotivation, each participant evaluated a subset of 45 stimuli: 15 stills, 15 5 s videos, and 15 10 s videos from the total pool of 180 stimuli. Overall, each stimulus was evaluated by a sample of 30 participants. The subsets were organized such that each participant would not evaluate the same model displaying the same facial expression in a different presentation format (see Footnote 1 in the Notes).
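The counts in this design can be checked with a short sketch. It encodes the example given in the article's note for condition A (the composition of conditions B to D is not listed there, so it is not reconstructed here) and verifies that no model appears with the same expression in two formats. The variable names and format labels are illustrative.

```python
N_PARTICIPANTS, N_STIMULI, PER_PARTICIPANT = 120, 180, 45

# 120 participants x 45 ratings each, spread evenly over 180 stimuli.
ratings_per_stimulus = N_PARTICIPANTS * PER_PARTICIPANT // N_STIMULI

# Condition A as described in the note: (format, expression) -> model numbers.
condition_a = {
    ("still", "smile"): range(1, 6),
    ("still", "neutral"): range(6, 11),
    ("still", "frown"): range(11, 16),
    ("5s", "smile"): range(6, 11),
    ("5s", "neutral"): range(11, 16),
    ("5s", "frown"): range(16, 21),
    ("10s", "frown"): range(1, 6),
    ("10s", "smile"): range(11, 16),
    ("10s", "neutral"): range(16, 21),
}

stimuli = [
    (model, expression, fmt)
    for (fmt, expression), models in condition_a.items()
    for model in models
]

# The constraint: a (model, expression) pair is shown in one format only.
pairs = [(model, expression) for model, expression, _ in stimuli]
no_repeats = len(pairs) == len(set(pairs))

per_format = {
    fmt: sum(1 for s in stimuli if s[2] == fmt) for fmt in ("still", "5s", "10s")
}
print(ratings_per_stimulus, len(stimuli), per_format, no_repeats)
```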

Within each experimental condition, the presentation order of the stimuli was completely randomized for each participant. Each stimulus was presented individually in the center of the screen (black background). The exposure time to videos was determined by their own duration (i.e., 5 or 10 s) and the exposure time to photographs was 5,000 ms. Following the offset of the stimulus, the evaluative dimensions were presented in a random order (one per screen). The intertrial interval was 500 ms. After completing the task, participants were thanked and debriefed.

Results

In the following sections, we begin by presenting the preliminary data analysis regarding outliers, gender differences, and reliability. Then, we present the tests examining the extent to which different facial expressions (i.e., frown, neutral, smile) and stimulus presentation formats (stills, 5 s video, and 10 s video) influenced stimulus evaluations on each dimension. Subsequently, we present the associations between dimensions.

For each stimulus, we calculated means, standard deviations and confidence intervals obtained for each dimension. The full stimulus set (stills in .jpg and videos in both .mov and .avi formats), and the corresponding database in Excel format, organized by stimulus code, are provided as supplementary material and can also be obtained upon request to the first author.
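A minimal sketch of these per-stimulus descriptives follows. It assumes a 95 % confidence interval based on the t distribution with 30 raters per stimulus (critical value 2.045 for 29 degrees of freedom) and the sample standard deviation; neither the confidence level nor the SD estimator is stated in this section, and the example ratings are hypothetical.

```python
import math
import statistics


def describe(ratings, t_crit=2.045):
    """Mean, sample SD, and confidence interval for one stimulus on one dimension.

    t_crit defaults to the two-tailed 95 % critical value for df = 29,
    which is an assumption that fits 30 raters per stimulus.
    """
    n = len(ratings)
    mean = statistics.mean(ratings)
    sd = statistics.stdev(ratings)
    half_width = t_crit * sd / math.sqrt(n)
    return mean, sd, (mean - half_width, mean + half_width)


# Hypothetical ratings from 30 participants for a single still.
example = [4] * 15 + [6] * 15
mean, sd, (ci_low, ci_high) = describe(example)
print(mean, round(sd, 3), round(ci_low, 2), round(ci_high, 2))
```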

Preliminary analysis

All participants responded to the entire set of questions for all the stimuli presented in their respective conditions. Thus, there were no missing cases. Outliers were identified by considering the criterion of 2.5 SDs above or below the mean evaluation of each stimulus in a given dimension. The result of this analysis yielded a residual percentage (0.64 %) of outlier ratings. There was no indication of participants responding systematically in the same way—that is, always using the same value of the scale. Therefore, no responses were excluded.
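The outlier criterion can be sketched as follows: for one stimulus on one dimension, a rating is flagged when it lies more than 2.5 SDs from that stimulus's mean. The use of the sample standard deviation and the example ratings are assumptions made for illustration.

```python
import statistics


def flag_outliers(ratings, k=2.5):
    """Return the ratings lying more than k sample SDs from the mean."""
    mean = statistics.mean(ratings)
    sd = statistics.stdev(ratings)
    if sd == 0:
        return []  # identical ratings: nothing can be an outlier
    return [r for r in ratings if abs(r - mean) > k * sd]


# Hypothetical ratings from 30 participants; the single rating of 1 is flagged.
example_ratings = [5] * 20 + [4] * 9 + [1]
flagged = flag_outliers(example_ratings)
print(flagged)
```

Flagging is separate from exclusion: as reported above, the flagged ratings were retained in the analyses.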

First we tested the consistency of participants’ ratings in each dimension by comparing two subsamples of equal size (n = 60) randomly selected from the main sample. No significant differences between the subsamples emerged (all ps > .100).

Then we tested whether all the stimulus subsets yielded equivalent results, by analyzing the mean ratings in each dimension in a repeated measures mixed ANOVA: 4 (Stimulus Subsets) × 8 (Evaluative Dimension), with the latter factor manipulated within participants. Given that only a main effect of evaluative dimension emerged, F(2, 238) = 80.10, MSE = 31.39, p < .001, ηp² = .41, and that both the main effect of stimulus subset and its interaction with evaluative dimensions were nonsignificant, Fs < 1, the subsequently reported analysis will disregard the specific stimulus subsets.

To test for gender differences in the way that participants rated the stimuli, the mean evaluations on each dimension were compared between male and female participants. Overall, no gender differences were found (see Table 2).

Finally, we calculated the mean ratings in all dimensions, on the basis of model gender (see Table 3).

As is shown in Table 3, stimuli portraying female models were evaluated as being more attractive, more similar, and more genuine than those portraying male models (see Langner et al., 2010). Model gender did not influence ratings in the remaining dimensions.

Impacts of facial expression and stimulus format on evaluative dimensions

The evaluation of each target was examined by computing the mean ratings, per participant, in each dimension for the three types of facial expressions (frown, neutral, and smile) and the three presentation formats (stills, 5 s videos, and 10 s videos). These ratings were analyzed, per dimension, in a repeated measures ANOVA, with Facial Expression and Presentation Format defined as within-participants factors. All means and standard deviations, as well as the results of planned comparisons, are presented in Table 4.
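The aggregation step described here, from trial-level ratings to one mean per participant for each Facial Expression × Presentation Format cell, can be sketched as below. The data layout (tuples of expression, format, and rating) and the function name are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def cell_means(trials):
    """Average one participant's ratings on one dimension within each design cell.

    trials: iterable of (expression, fmt, rating) tuples.
    """
    cells = defaultdict(list)
    for expression, fmt, rating in trials:
        cells[(expression, fmt)].append(rating)
    return {cell: mean(values) for cell, values in cells.items()}


# Hypothetical valence ratings from one participant (only three trials shown).
example_trials = [
    ("smile", "still", 6),
    ("smile", "still", 4),
    ("frown", "10s", 2),
]
means = cell_means(example_trials)
print(means)
```

With 45 stimuli per participant spread over nine cells, each cell mean would be based on five ratings.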

Valence

We observed a main effect of facial expression on valence ratings, F(2, 238) = 609.38, MSE = 472.60, p < .001, ηp² = .837. As expected, smiling models were considered the most positive ones, followed by neutral and frowning (e.g., Colombetti, 2005). Presentation format did not affect the ratings in this dimension, F(2, 238) = 2.16, MSE = 0.57, p = .118, ηp² = .018. The interaction between facial expression and presentation format was significant, F(4, 476) = 2.39, MSE = 0.70, p = .050, ηp² = .020, indicating that the described linear trend was stronger in stills and 10 s videos.

Arousal

We found a main effect of facial expression on arousal ratings, F(2, 238) = 47.66, MSE = 47.43, p < .001, ηp² = .286, with neutral models being rated as the least arousing. Smiling models were considered more arousing than frowning models. These results are in line with previous findings indicating that the higher the (positive or negative) valence of a stimulus, the more arousing the stimulus is perceived to be (e.g., Backs et al., 2005; Barrett & Russell, 1998; Ito et al., 1998; Lang et al., 2008; Libkuman et al., 2007). Stimulus presentation format did not have a main effect on this dimension, F < 1. However, the interaction between facial expression and presentation format was significant, F(4, 476) = 2.86, MSE = 1.03, p = .023, ηp² = .023: Smiling and frowning models were perceived as being equally arousing when presented in a still format.

Clarity

Regarding clarity ratings, we observed a main effect of facial expression, F(2, 238) = 125.07, MSE = 116.47, p < .001, ηp² = .512, with the stimuli portraying a neutral expression being evaluated as the least clear. Although in the literature clarity has mostly been associated with accuracy in identifying a particular facial expression (e.g., Langner et al., 2010), it seems reasonable that neutral facial expressions would be those offering the least amount and quality of emotional information to the perceiver (Ekman et al., 1982; Fernandez-Dols et al., 1993). Smiling expressions were perceived as the clearest ones. The impact of presentation format on this dimension was not significant, F(2, 238) = 2.60, MSE = 1.32, p = .077, ηp² = .021, nor was the interaction of this factor with facial expression, F(4, 476) = 1.59, MSE = 0.87, p = .176, ηp² = .013.

Intensity

The results indicated a main effect of facial expression on intensity ratings, F(2, 238) = 113.16, MSE = 79.86, p < .001, ηp² = .487, with neutral models being considered the least intense. This finding also confirms previous research indicating the higher perceived intensity of positive and negative facial expressions (e.g., Hess et al., 1997). The smiling expression was also considered more intense than the frowning expression. A main effect of presentation format was also observed, F(2, 238) = 3.38, MSE = 1.45, p = .036, ηp² = .028, with 5 s videos being rated as less intense than either stills or 10 s videos. The interaction between facial expression and stimulus format was not significant, F(4, 476) = 2.25, MSE = 0.96, p = .063, ηp² = .019.

Attractiveness

We found a main effect of facial expression on attractiveness ratings, F(2, 238) = 70.05, MSE = 36.95, p < .001, ηp² = .371, with smiling models being considered the most attractive (e.g., Reis et al., 1990). There was also a main effect of presentation format on this dimension, F(2, 238) = 11.39, MSE = 3.01, p < .001, ηp² = .087, such that increasing attractiveness ratings were observed with longer exposures to the models. These results were unexpected, given that, in previous studies, attractiveness judgments did not differ across presentation formats (e.g., Koscinski, 2013). Facial expression and stimulus format did not interact, F < 1.

Similarity

The results indicated a main effect of facial expression on similarity ratings, F(2, 238) = 92.59, MSE = 75.69, p < .001, ηp² = .438, with smiling models being perceived as the most similar. The results further indicated that presentation format did not affect the ratings in this dimension, F(2, 238) = 1.88, MSE = 0.56, p = .155, ηp² = .016. Facial expression and presentation format did not interact, F < 1.

Familiarity

We found a main effect of facial expression on familiarity ratings, F(2, 238) = 21.31, MSE = 11.08, p < .001, ηp² = .152, with smiling models being perceived as most familiar. This result was not surprising, considering that positive stimuli are perceived as being more familiar (e.g., Garcia-Marques et al., 2004). Also, a main effect of presentation format emerged on this dimension, F(2, 238) = 5.91, MSE = 1.66, p = .003, ηp² = .047, with familiarity ratings increasing from stills to video formats. Facial expression and stimulus format did not interact on this dimension, F(4, 476) = 1.70, MSE = 0.70, p = .149, ηp² = .014.

Genuineness

We found a main effect of facial expression on genuineness ratings, F(2, 238) = 7.93, MSE = 6.04, p < .001, ηp² = .062, with smiling models being considered the most genuine. Likewise, there was a main effect of stimulus format on this dimension, F(2, 238) = 3.54, MSE = 1.48, p = .030, ηp² = .029, with stills being evaluated as the most genuine. The data also showed an interaction between facial expression and presentation format, F(4, 476) = 2.55, MSE = 1.16, p = .039, ηp² = .021. Although ratings of the genuineness of 10 s movies were unaffected by facial expression, stills and 5 s movies were evaluated as being more genuine when the models were smiling.

Overall, facial expression had a main effect in all the evaluated dimensions, with smiling models obtaining higher ratings in all of them. On four of the dimensions (attractiveness, genuineness, familiarity, and similarity), the ratings for frowning and neutral expressions were not significantly different. In the remaining four dimensions (arousal, clarity, intensity, and valence), the ratings of frowning and neutral expressions differed, with the former being perceived as more arousing, clearer, and more intense, but also as more negative than neutral expressions.

Stimulus presentation format only influenced the ratings of attractiveness, familiarity, genuineness, and intensity. Both attractiveness and familiarity ratings increased with longer exposure times. Stills were evaluated as more genuine than videos, and 5 s videos were evaluated as the least intense stimuli.

Associations between dimensions

The associations between evaluative dimensions were also explored, revealing overall positive correlations (see Table 5). Due to the high number of ratings, all the correlations were statistically significant. Therefore, only large correlations are reported. Specifically, we observed strong positive correlations between similarity and both attractiveness (r = .536) and familiarity (r = .429). The latter two dimensions were also correlated (r = .410). These correlations were expected, on the basis of findings indicating that similarity increases interpersonal attraction (e.g., Montoya et al., 2008) and that more familiar targets are perceived as being more attractive (e.g., Monin, 2003) and more similar to oneself (Moreland & Beach, 1992). Intensity was positively correlated with arousal (r = .469) and clarity (r = .574). Previous work had already suggested the positive relation between clarity and intensity (e.g., Langner et al., 2010). The association between intensity and arousal is also not surprising. Although previous work suggested that clarity can be relatively independent of genuineness (Langner et al., 2010), we found a strong correlation between these two dimensions (r = .416).
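The remark that every correlation reached significance can be illustrated with the usual t test for a Pearson correlation, t = r√(N − 2) / √(1 − r²). The sketch below assumes that each individual rating counts as one observation, giving 120 × 45 = 5,400 observations per dimension; the article does not state the unit of analysis, and the test also treats observations as independent, which ratings nested within participants are not.

```python
import math


def t_for_r(r, n):
    """t statistic for a Pearson correlation r based on n observations."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


# Assumed number of observations: 120 participants x 45 stimuli each.
n_ratings = 120 * 45

# Even a very small hypothetical correlation exceeds the two-tailed .05 cutoff.
t_small = t_for_r(0.05, n_ratings)
print(n_ratings, round(t_small, 2))
```

This is why the text reports only the larger coefficients: with this many observations, statistical significance says little about the strength of an association.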

Discussion

The human face is an important vehicle for transmitting information about an individual. Despite being a complex process, the capacity for processing face information is of utmost importance for social interaction (O’Reilly et al., 2016) and seems to be relatively universal (Cigna et al., 2015).

However, although natural expressions include action (e.g., Ekman, 1994) and the people we meet in real life are usually seen in motion, most of the available face databases contain only static facial images, which may limit their ecological validity (e.g., Van der Schalk, Hawk, et al., 2011; Koscinski, 2013; G. Rhodes et al., 2011). Moreover, among the databases that include videos of facial expressions, very few compare stills and videos, and even those are limited in the number of dimensions on which the faces are evaluated.

In the present article, we presented validated norms for stills and videos of facial expressions (frown, neutral, smile) that were rated in different dimensions. We set standard and constant video durations (5 and 10 s). This constitutes an improvement over previous work that had included videos of different lengths (e.g., EU [O’Reilly et al., 2016], with video clip durations from 2 to 52 s). By keeping the length constant (5 and 10 s), we minimize video duration as a possible source of bias in the evaluation of facial stimuli. Moreover, in our study the models were coached to imagine situations that would elicit the intended expression (e.g., de Gelder & Van den Stock, 2011), and were filmed while holding that expression. We introduced a manipulation check by assessing the models’ affective state after posing in each of the three facial expressions. Ecological validity was also enhanced by having the faces rated by untrained volunteers, who often constitute the samples that are recruited to participate in the studies using this type of stimuli (e.g., Tottenham et al., 2009).

Furthermore, we tested each stimulus in several dimensions—namely, attractiveness, arousal, clarity, genuineness, familiarity, intensity, valence, and similarity. The development of norms including all these dimensions constitutes an important addition to the mainstream face databases available.

Finally, a validated face database with Portuguese models can be useful for research conducted in Portugal as well as in other Southern European countries, since the phenotypical features of southern Europeans may differ from those of the human models used in most of the published databases. Additionally, these stimuli could serve as a comparison group in research on intergroup relations conducted in other regions (e.g., Northern Europe or countries with a majority non-Caucasian population).

Overall, the manipulation of facial expression was successful. The type of facial expression influenced, in a consistent way, the positive and negative affect reported by the models (e.g., more positive affect after smiling). Likewise, facial expression influenced the ratings of the models in all the dimensions (e.g., smiling models were rated as more attractive, familiar, and positive; neutral models were rated as the least arousing, clear, and intense). The effects of stimulus presentation format did not generalize across dimensions. Yet, we found main effects of this variable on half of the dimensions evaluated (attractiveness, familiarity, genuineness, and intensity). For example, models were considered more attractive when they were presented in videos than in stills, and the perceived genuineness of expression seems to be higher in stills. Therefore, whenever arousal, clarity, valence, and similarity are the dimensions of interest, there seems to be no advantage in using videos.

Altogether, the features of this specific set, namely the ratings in several dimensions, offer numerous possibilities in the selection of stimuli based on the required level on each dimension, as well as their combinations. For example, this set permits the manipulation of a given dimension, while strictly controlling for the others.

Yet, it should be noted that norms of this type can be culture (and even age) specific. For example, Van der Schalk, Hawk, and colleagues (2011) showed that Dutch participants generally performed better in an emotion recognition task when the emotions were displayed by Northern European models, rather than by Mediterranean models. Likewise, it has also been shown that participants are better at identifying faces of people of their own age (for a review of the “own-age bias,” see Dalrymple et al., 2013). Given that our models (and our raters) are young adults, caution is recommended when using our stimuli with samples of different age groups. Therefore, the specificity of our database must be acknowledged along with the need for cross-validation. Furthermore, although all models were recorded directly facing the camera, possible deviations from frontality were not assessed. Previous evidence has suggested that such deviations can bias other judgments about the target such as weight estimation (T. M. Schneider et al., 2012) or personality traits (Hehman, Leitner, & Gaertner, 2013). Hence, future research should seek to extend the current database by including frontality assessments.

The utility of face databases for different areas of research, as well as their potential use in more applied domains, has already been mentioned in the introduction. Indeed, in addition to the research on emotion recognition, many studies have used facial expressions to investigate a myriad of psychological processes. For example, because people frequently form personality impressions from the facial appearances of other individuals, faces are often used as stimuli to promote or reinforce impressions in paradigms like spontaneous trait inferences (e.g., Todorov & Uleman, 2002), stereotypes, or social inference (e.g., Mason, Cloutier, & Macrae, 2006).

The variations in valence found in our stimulus set can also be particularly useful for specific types of research such as affective priming, emotional Stroop, mood, and embodiment studies. In affective priming paradigms, faces (e.g., happy vs. angry) can be used as primes that influence (e.g., Murphy & Zajonc, 1993; Murphy, Monahan, & Zajonc, 1995) or interfere (Stenberg, Wiking, & Dahl, 1998) with the subsequent processing of other stimuli. In emotional Stroop tasks, participants are simultaneously exposed to an emotional facial expression (e.g., angry or happy) and an emotional word (“anger” or “happy”) and are asked to identify either the facial expression (e.g., Etkin, Egner, Peraza, Kandel, & Hirsch, 2006) or the emotional word (e.g., Haas, Omura, Constable, & Canli, 2006). Images of facial expressions (happy vs. sad) can also be used to induce congruent moods (e.g., F. Schneider, Gur, Gur, & Muenz, 1994).

Our stimuli can also be used in embodiment studies (for a review, see Semin & Garrido, 2012, 2015; Semin, Garrido, & Farias, 2014; Semin, Garrido, & Palma, 2012, 2013). This approach suggests that exposure to facial expressions triggers implicit imitation as measured by EMG of facial muscles (e.g., Dimberg & Petterson, 2000; Niedenthal, Winkielman, Mondillon, & Vermeulen, 2009). The evaluative and behavioral consequences of such embodied processes have also been demonstrated. For example, exposure to a subliminally presented happy (or angry) facial expression influences perceivers’ judgments of a novel stimulus (e.g., Foroni & Semin, 2011). Importantly, mimicry is more likely to occur when the relationship with the model is closer or when the model belongs to the ingroup (e.g., Hess & Fischer, 2013). This type of research requires validated facial stimuli of different cultural and age groups.

In addition to its potential utility for different areas of fundamental research, our database can also be used in more applied domains such as political (e.g., Antonakis & Dalgas, 2009; Ballew & Todorov, 2007; Farias, Garrido, & Semin, 2013, 2016; Lawson, Lenz, Baker, & Myers, 2010; Lenz & Lawson, 2011; Little, Burriss, Jones, & Roberts, 2007; Olivola & Todorov, 2010a; Todorov, Mandisodza, Goren, & Hall, 2005), consumer (e.g., Landwehr, McGill, & Herrmann, 2011; Miesler, Landwehr, Herrmann, & McGill, 2010), interpersonal relationships (Rodrigues & Lopes, 2016), and legal contexts (e.g., Blair, Judd, & Chapleau, 2004; Eberhardt, Davies, Purdie-Vaughns, & Johnson, 2006; Lindsay et al., 2011; Zebrowitz & McDonald, 1991), as well as in clinical research and intervention with populations with neuro- and psychological disorders (e.g., Behrmann et al., 2011).

In sum, the subjective norms of facial stimuli presented in this article have potential relevance to the work of researchers in several research domains. From our database, researchers may choose the most adequate stimulus format for a particular experiment, select and manipulate the dimensions of interest, and control for the remaining ones. Therefore, we consider that the SAVE database constitutes a valuable addition to the existing pool of pretested materials that researchers recurrently need to use in their studies.

Notes

For example, the stimuli evaluated in condition A were stills of Models 1 to 5 smiling, Models 6 to 10 displaying a neutral facial expression, and Models 11 to 15 frowning; 5 s videos of Models 6 to 10 smiling, Models 11 to 15 displaying a neutral facial expression, and Models 16 to 20 frowning; and 10 s videos of Models 1 to 5 frowning, Models 11 to 15 smiling, and Models 16 to 20 displaying a neutral facial expression.

References

Adolph, D., & Alpers, G. (2010). Valence and arousal: A comparison of two sets of emotional facial expressions. American Journal of Psychology, 123, 209–219. doi:10.5406/amerjpsyc.123.2.0209

Alves, N. (2013). Recognition of static and dynamic facial expressions: A study review. Estudos de Psicologia, 18, 125–130. doi:10.1590/S1413-294X2013000100020

Ambadar, Z., Schooler, J., & Cohn, J. F. (2005). Deciphering the enigmatic face: The importance of facial dynamics to interpreting subtle facial expressions. Psychological Science, 16, 403–410. doi:10.1111/j.0956-7976.2005.01548.x

Ambady, N., Bernieri, F., & Richeson, J. (2000). Toward a histology of social behavior: Judgmental accuracy from thin slices of the behavioral stream. In M. Zanna (Ed.), Advances in experimental social psychology (Vol. 32, pp. 201–271). San Diego, CA: Academic Press.

Ambady, N., & Rosenthal, R. (1993). Half a minute: Predicting teacher evaluations from thin slices of nonverbal behavior and physical attractiveness. Journal of Personality and Social Psychology, 64, 431–441. doi:10.1037/0022-3514.64.3.431

Antonakis, J., & Dalgas, O. (2009). Predicting elections: Child’s play! Science, 323, 1183. doi:10.1126/science.1167748

Bach, D. R., Buxtorf, K., Grandjean, D., & Strik, W. K. (2009). The influence of emotion clarity on emotional prosody identification in paranoid schizophrenia. Psychological Medicine, 39, 927–938. doi:10.1017/S0033291708004704

Backs, R. W., da Silva, S. P., & Han, K. (2005). A comparison of younger and older adults’ self-assessment manikin ratings of affective pictures. Experimental Aging Research, 31, 421–440. doi:10.1080/03610730500206808

Ballew, C. C., & Todorov, A. (2007). Predicting political elections from rapid and unreflective face judgments. Proceedings of the National Academy of Sciences, 104, 17948–17953. doi:10.1073/pnas.0705435104

Bänziger, T., Mortillaro, M., & Scherer, K. (2012). Introducing the Geneva multimodal expression corpus for experimental research on emotion perception. Emotion, 12, 1161–1179. doi:10.1037/a0025827

Barrett, L. F. (2006). Are emotions natural kinds? Perspectives on Psychological Science, 1, 28–58. doi:10.1111/j.1745-6916.2006.00003.x

Barrett, L. F., & Russell, J. A. (1998). Independence and bipolarity in the structure of current affect. Journal of Personality and Social Psychology, 74, 967–984. doi:10.1037/0022-3514.74.4.967

Behrmann, M., Avidan, G., Thomas, C., & Nishimura, M. (2011). Impairments in face perception. In A. Calder, G. Rhodes, M. Johnson, & J. Haxby (Eds.), Oxford handbook of face perception (pp. 799–820). New York, NY: Oxford University Press.

Blair, I. V., Judd, C. M., & Chapleau, K. M. (2004). The influence of Afrocentric facial features in criminal sentencing. Psychological Science, 15, 674–679. doi:10.1111/j.0956-7976.2004.00739.x

Bould, E., & Morris, N. (2008). Role of motion signals in recognizing subtle facial expressions of emotion. British Journal of Psychology, 99, 167–189. doi:10.1348/000712607X206702

Byrne, D. (1997). An overview (and underview) of research and theory within the attraction paradigm. Journal of Social and Personal Relationships, 14, 417–431. doi:10.1177/0265407597143008

Calder, A., Rhodes, G., Johnson, M., & Haxby, J. (Eds.). (2011). Oxford handbook of face perception. New York, NY: Oxford University Press. doi:10.1093/oxfordhb/9780199559053.013.0016

Calvo, M. G., & Nummenmaa, L. (2016). Perceptual and affective mechanisms in facial expression recognition: An integrative review. Cognition and Emotion, 30, 1081–1106. doi:10.1080/02699931.2015.1049124

Cigna, M.-H., Guay, J.-P., & Renaud, P. (2015). La reconnaissance émotionnelle faciale: Validation préliminaire de stimuli virtuels dynamiques et comparaison avec les Pictures of Facial Affect (POFA). Criminologie, 48, 237–263. doi:10.7202/1033845ar

Colombetti, G. (2005). Appraising valence. Journal of Consciousness Studies, 12, 103–126.

Cunningham, D. W., & Wallraven, C. (2009). Dynamic information for the recognition of conversational expressions. Journal of Vision, 9(13), 7. doi:10.1167/9.13.7

Dalrymple, K. A., Gomez, J., & Duchaine, B. (2013). The Dartmouth Database of Children’s Faces: Acquisition and validation of a new face stimulus set. PLoS ONE, 8, e79131. doi:10.1371/journal.pone.0079131

de Gelder, B., & Van den Stock, J. (2011). The Bodily Expressive Action Stimulus Test (BEAST): Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Frontiers in Psychology, 2, 181. doi:10.3389/fpsyg.2011.00181

Dimberg, U., & Petterson, M. (2000). Facial reactions to happy and angry facial expressions: Evidence for right hemisphere dominance. Psychophysiology, 37, 693–696. doi:10.1111/1469-8986.3750693

Eagly, A. H., Ashmore, R. D., Makhijani, M. G., & Longo, L. C. (1991). What is beautiful is good, but . . . : A meta-analytic review of research on the physical attractiveness stereotype. Psychological Bulletin, 110, 109–128. doi:10.1037/0033-2909.110.1.109

Eberhardt, J. L., Davies, P. G., Purdie-Vaughns, V. J., & Johnson, S. L. (2006). Looking deathworthy: Perceived stereotypicality of Black defendants predicts capital-sentencing outcomes. Psychological Science, 17, 383–386. doi:10.1111/j.1467-9280.2006.01716.x

Ebner, N., Riediger, M., & Lindenberger, U. (2010). FACES—A database of facial expressions in young, middle-aged, and older women and men: Development and validation. Behavior Research Methods, 42, 351–362. doi:10.3758/BRM.42.1.351

Egger, H. L., Pine, D. S., Nelson, E., Leibenluft, E., Ernst, M., Towbin, K. E., & Angold, A. (2011). The NIMH Child Emotional Faces Picture Set (NIMH-ChEFS): A new set of children’s facial emotion stimuli. International Journal of Methods in Psychiatric Research, 20, 145–156. doi:10.1002/mpr.343

Ekman, P. (1982). Emotion in the human face: Guidelines for research and an integration of findings (2nd ed.). New York, NY: Cambridge University Press.

Ekman, P. (1994). All emotions are basic. In P. Ekman & R. Davidson (Eds.), The nature of emotion: Fundamental questions (pp. 15–19). New York, NY: Oxford University Press.

Ekman, P., & Friesen, W. V. (1971). Constants across cultures in the face and emotion. Journal of Personality and Social Psychology, 17, 124–129. doi:10.1037/h0030377

Ekman, P., & Friesen, W. V. (1976). Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press.

Ekman, P., & Friesen, W. V. (1977). Nonverbal behavior. In P. F. Ostwald (Ed.), Communication and social interaction (pp. 37–46). New York, NY: Grune & Stratton.

Ekman, P., & Friesen, W. V. (1978). Facial action coding system. Palo Alto, CA: Consulting Psychologists Press.

Ekman, P., Friesen, W. V., & Ellsworth, P. (1982). Research foundations. In P. Ekman (Ed.), Emotion in the human face (pp. 1–143). New York, NY: Cambridge University Press.

Etkin, A., Egner, T., Peraza, D. M., Kandel, E. R., & Hirsch, J. (2006). Resolving emotional conflict: A role for the rostral anterior cingulate cortex in modulating activity in the amygdala. Neuron, 51, 871–882. doi:10.1016/j.neuron.2006.07.029

Fabri, M., Moore, D., & Hobbs, D. (2004). Mediating the expression of emotion in educational collaborative virtual environments: An experimental study. Virtual Reality, 7, 66–81. doi:10.1007/s10055-003-0116-7

Farias, A. R., Garrido, M. V., & Semin, G. R. (2013). Converging modalities ground abstract categories: The case of politics. PLoS ONE, 8, e60971. doi:10.1371/journal.pone.0060971

Farias, A. R., Garrido, M. V., & Semin, G. R. (2016). Embodiment of abstract categories in space… grounding or mere compatibility effects? The case of politics. Acta Psychologica, 166, 49–53. doi:10.1016/j.actpsy.2016.03.002

Fernandez‐Dols, J. M., Sierra, B., & Ruiz‐Belda, M. (1993). On the clarity of expressive and contextual information in the recognition of emotions: A methodological critique. European Journal of Social Psychology, 23, 195–202. doi:10.1002/ejsp.2420230207

Ferreira, M., Garcia-Marques, L., Ramos, T., Hamilton, D. L., Uleman, J. S., & Jerónimo, R. (2012). On the relation between spontaneous trait inferences and intentional inferences: An inference monitoring hypothesis. Journal of Experimental Social Psychology, 48, 1–12. doi:10.1016/j.jesp.2011.06.013

Fiorentini, C., & Viviani, P. (2011). Is there a dynamic advantage for facial expressions? Journal of Vision, 11(3), 17. doi:10.1167/11.3.17

Foroni, F., & Semin, G. R. (2011). When does mimicry affect evaluative judgment? Emotion, 11, 687–690. doi:10.1037/a0023163

Frijda, N. H. (1986). The emotions. Cambridge, UK: Cambridge University Press.

Galinha, I. C., & Pais-Ribeiro, J. L. (2012). Contribuição para o estudo da versão portuguesa da Positive and Negative Affect Schedule (PANAS): II—Estudo Psicométrico. Análise Psicológica, 23, 219–227. doi:10.14417/ap.84

Garcia-Marques, T. (1999). The mind needs the heart: The mood-as-regulation-mechanism hypothesis (Unpublished doctoral dissertation). Universidade de Lisboa, Lisboa, PT.

Garcia-Marques, T., Mackie, D. M., Claypool, H. M., & Garcia-Marques, L. (2004). Positivity can cue familiarity. Personality and Social Psychology Bulletin, 30, 585–593. doi:10.1177/0146167203262856

Goeleven, E., De Raedt, R., Leyman, L., & Verschuere, B. (2008). The Karolinska Directed Emotional Faces: A validation study. Cognition and Emotion, 22, 1094–1118. doi:10.1080/02699930701626582

Gold, J. M., Barker, J. D., Barr, S., Bittner, J. L., Bromfield, W. D., Chu, N., … Srinath, A. (2013). The efficiency of dynamic and static facial expression recognition. Journal of Vision, 13(5), 23. doi:10.1167/13.5.23

Gosselin, P., Kirouac, G., & Doré, F. (2005). Components and recognition of facial expression in the communication of emotion by actors. In P. Ekman & E. L. Rosenberg (Eds.), What the face reveals (pp. 243–270). New York, NY: Oxford University Press.

Grill-Spector, K., & Kanwisher, N. (2005). Visual recognition: As soon as you know it is there, you know what it is. Psychological Science, 16, 152–160. doi:10.1111/j.0956-7976.2005.00796.x

Haas, B. W., Omura, K., Constable, R. T., & Canli, T. (2006). Interference produced by emotional conflict associated with anterior cingulate activation. Cognitive, Affective, & Behavioral Neuroscience, 6, 152–156. doi:10.3758/CABN.6.2.152

Hehman, E., Leitner, J. B., & Gaertner, S. L. (2013). Enhancing static facial features increases intimidation. Journal of Experimental Social Psychology, 49, 747–754. doi:10.1016/j.jesp.2013.02.015

Hess, U., & Blairy, S. (2001). Facial mimicry and emotional contagion to dynamic emotional facial expressions and their influence on decoding accuracy. International Journal of Psychophysiology, 40, 129–141. doi:10.1016/s0167-8760(00)00161-6

Hess, U., Blairy, S., & Kleck, R. E. (1997). The intensity of emotional facial expressions and decoding accuracy. Journal of Nonverbal Behavior, 21, 241–257. doi:10.1023/A:1024952730333

Hess, U., & Fischer, A. (2013). Emotional mimicry as social regulation. Personality and Social Psychology Review, 17, 142–157. doi:10.1177/1088868312472607

Hoffmann, H., Traue, H. C., Limbrecht-Ecklundt, K., Walter, S., & Kessler, H. (2013). Static and dynamic presentation of emotions in different facial areas: Fear and surprise show influences of temporal and spatial properties. Psychology, 4, 663–668. doi:10.4236/psych.2013.48094

Horstmann, G., & Ansorge, U. (2009). Visual search for facial expressions of emotions: A comparison of dynamic and static faces. Emotion, 9, 29–38. doi:10.1037/a0014147

Hoyle, R. H. (1993). Interpersonal attraction in the absence of explicit attitudinal information. Social Cognition, 11, 309–320. doi:10.1521/soco.1993.11.3.309

Ito, T. A., Cacioppo, J. T., & Lang, P. J. (1998). Eliciting affect using the International Affective Picture System: Trajectories through evaluative space. Personality and Social Psychology Bulletin, 24, 855–879. doi:10.1177/0146167298248006

Kanade, T., Cohn, J. F., & Tian, Y. (2000, March). Comprehensive database for facial expression analysis. Paper presented at the Fourth IEEE International Conference on Automatic Face and Gesture Recognition (FG 2000). Grenoble, France.

Katsyri, J., & Sams, M. (2008). The effect of dynamics on identifying basic emotions from synthetic and natural faces. International Journal of Human-Computer Studies, 66, 233–242. doi:10.1016/j.ijhcs.2007.10.001

Kennedy, K. M., Hope, K., & Raz, N. (2009). Life span adult faces: Norms for age, familiarity, memorability, mood, and picture quality. Experimental Aging Research, 35, 268–275. doi:10.1080/03610730902720638

Koscinski, K. (2013). Perception of facial attractiveness from static and dynamic stimuli. Perception, 42, 163–175.

Landwehr, J. R., McGill, A. L., & Herrmann, A. (2011). It’s got the look: The effect of friendly and aggressive “facial” expressions on product liking and sales. Journal of Marketing, 75, 132–146. doi:10.2307/41228601

Lang, P. J., Bradley, M. M., & Cuthbert, B. N. (2008). International Affective Picture System (IAPS): Affective ratings of pictures and instruction manual (Technical Report No. A-6). Gainesville, FL: University of Florida, Center for Research in Psychophysiology.

Langlois, J. H., Kalakanis, L., Rubenstein, A. J., Larson, A., Hallam, M., & Smoot, M. (2000). Maxims or myths of beauty? A meta-analytic and theoretical review. Psychological Bulletin, 126, 390–423. doi:10.1037/0033-2909.126.3.390

Langlois, J. H., Roggman, L. A., & Musselman, L. (1994). What is average and what is not average about attractive faces? Psychological Science, 5, 214–220. doi:10.1111/j.1467-9280.1994.tb00503.x

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. J., Hawk, S. T., & van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cognition and Emotion, 24, 1377–1388. doi:10.1080/02699930903485076

Lawson, C., Lenz, G. S., Baker, A., & Myers, M. (2010). Looking like a winner: Candidate appearance and electoral success in new democracies. World Politics, 62, 561–593. doi:10.1017/s0043887110000195

Lee, L., Loewenstein, G., Ariely, D., Hong, J., & Young, J. (2008). If I’m not hot, are you hot or not? Physical-attractiveness evaluations and dating preferences as a function of one’s own attractiveness. Psychological Science, 19, 669–677. doi:10.1111/j.1467-9280.2008.02141.x

Lenz, G. S., & Lawson, C. (2011). Looking the part: Television leads less informed citizens to vote based on candidates’ appearance. American Journal of Political Science, 55, 574–589. doi:10.1111/j.1540-5907.2011.00511.x

Libkuman, T. M., Otani, H., Kern, R., Viger, S. G., & Novak, N. (2007). Multidimensional normative ratings for the International Affective Picture System. Behavior Research Methods, 39, 326–334. doi:10.3758/BF03193164

Lindsay, R. C. L., Mansour, J. K., Bertrand, M. I., Kalmet, N., & Melsom, E. I. (2011). Face recognition in eyewitness memory. In A. J. Calder, G. Rhodes, M. H. Johnson, & J. V. Haxby (Eds.), Oxford handbook of face perception (pp. 307–328). New York, NY: Oxford University Press.

Little, A. C., Burriss, R. P., Jones, B. C., & Roberts, S. C. (2007). Facial appearance affects voting decisions. Evolution and Human Behavior, 28, 18–27. doi:10.1016/j.evolhumbehav.2006.09.002

Livingstone, S. R., Choi, D. H., & Russo, F. A. (2014). The influence of vocal training and acting experience on measures of voice quality and emotional genuineness. Frontiers in Psychology, 5, 156. doi:10.3389/fpsyg.2014.00156

LoBue, V., & Thrasher, C. (2015). The Child Affective Facial Expression (CAFE) set: Validity and reliability from untrained adults. Frontiers in Psychology, 5, 1532. doi:10.3389/fpsyg.2014.01532

Lundqvist, D., Flykt, A., & Öhman, A. (1998). Karolinska Directed Emotional Faces—KDEF (CD ROM). Stockholm, Sweden: Karolinska Institutet, Department of Clinical Neuroscience, Psychology section.

Ma, D. S., Correll, J., & Wittenbrink, B. (2015). The Chicago Face Database: A free stimulus set of faces and norming data. Behavior Research Methods, 47, 1122–1135. doi:10.3758/s13428-014-0532-5

Mason, M. F., Cloutier, J., & Macrae, C. N. (2006). On construing others: Category and stereotype activation from facial cues. Social Cognition, 24, 540–562. doi:10.1521/soco.2006.24.5.540

Matsumoto, D., & Ekman, P. (1988). Japanese and Caucasian Facial Expressions of Emotion (JACFEE) [Slides]. San Francisco, CA: San Francisco State University, Intercultural and Emotion Research Laboratory, Department of Psychology.

McEwan, K., Gilbert, P., Dandeneau, S., Lipka, S., Maratos, F., Paterson, K. B., & Baldwin, M. (2014). Facial expressions depicting compassionate and critical emotions: The development and validation of a new emotional face stimulus set. PLoS ONE, 9, e88783. doi:10.1371/journal.pone.0088783

Miesler, L., Landwehr, J. R., Herrmann, A., & McGill, A. L. (2010). Consumer and product face-to-face: Antecedents and consequences of spontaneous face-schema activation. Advances in Consumer Research, 37, 536–537.

Miles, L., & Johnston, L. (2007). Detecting happiness: Perceiver sensitivity to enjoyment and non-enjoyment smiles. Journal of Nonverbal Behavior, 31, 259–275. doi:10.1007/s10919-007-0036-4

Miyake, K., & Zuckerman, M. (1993). Beyond personality impressions: Effects of physical and vocal attractiveness on false consensus, social comparison, affiliation, and assumed and perceived similarity. Journal of Personality, 61, 411–437. doi:10.1111/j.1467-6494.1993.tb00287.x

Monin, B. (2003). The warm glow heuristic: When liking leads to familiarity. Journal of Personality and Social Psychology, 85, 1035–1048. doi:10.1037/0022-3514.85.6.1035