Abstract

We collected imageability and body–object interaction (BOI) ratings for 599 multisyllabic nouns. We then examined the effects of these variables on a subset of these items in picture-naming, word-naming, lexical decision, and semantic categorization. Picture-naming latencies were taken from the International Picture-Naming Project database (Szekely, Jacobsen, D’Amico, Devescovi, Andonova, Herron, et al. Journal of Memory and Language, 51, 247–250, 2004), word-naming and lexical decision latencies were taken from the English Lexicon Project database (Balota, Yap, Cortese, Hutchison, Kessler, Loftis, et al. Behavior Research Methods, 39, 445–459, 2007), and we collected semantic categorization latencies. Results from hierarchical multiple regression analyses showed that imageability and BOI separately accounted for unique latency variability in each task, even with several other predictor variables (e.g., print frequency, number of syllables and morphemes, age of acquisition) entered first in the analyses. These ratings should be useful to researchers interested in manipulating or controlling for the effects of imageability and BOI for multisyllabic stimuli in lexical and semantic tasks.


Embodied cognition is the theoretical perspective that much of human cognition is based on knowledge gained through sensorimotor (i.e., bodily) experience with the environment (Barsalou, 1999; Clark, 1997; Lakoff & Johnson, 1999; M. Wilson, 2002). An expanding body of research demonstrates that sensorimotor knowledge influences linguistic conceptual processing (e.g., Glenberg & Kaschak, 2002; Siakaluk, Pexman, Dalrymple, Stearns, & Owen, in press; Wellsby, Siakaluk, Pexman, & Owen, 2010; N. L. Wilson & Gibbs, 2007; Yaxley & Zwaan, 2007). Of central importance to the present study is that sensorimotor knowledge has been reported to influence conceptual processing at the lexical (i.e., single-word) level. One example is the imageability variable, which assesses the ease with which words evoke mental images of the objects to which they refer. Many word recognition studies have reported a facilitatory imageability effect such that responses to words rated high in imageability (e.g., peach) are faster and more accurate than responses to words rated low in imageability (e.g., fraud) (Cortese & Fugett, 2004; Cortese, Simpson, & Woolsey, 1997; de Groot, 1989; James, 1975; Kroll & Mervis, 1986; Strain, Patterson, & Seidenberg, 1995). A second example is the body–object interaction (BOI) variable, which assesses the ease with which a human body can physically interact with a word’s referent. A facilitatory BOI effect has been reported such that responses to words rated high in BOI (e.g., purse) are faster and more accurate than responses to words rated low in BOI (e.g., cliff) (Siakaluk, Pexman, Aguilera, Owen, & Sears, 2008; Siakaluk, Pexman, Sears, Wilson, Locheed, & Owen, 2008; Tillotson, Siakaluk, & Pexman, 2008; Wellsby, Siakaluk, Owen, & Pexman, 2011). Perceptual symbol systems theory (Barsalou, 1999, 2003) provides an account for how sensorimotor knowledge may influence lexical conceptual processing.

According to perceptual symbol systems theory, knowledge is inherently multimodal, such that information acquired via sensory systems and motor systems is integrated with information acquired via emotional systems and introspective systems (Barsalou, 1999, 2003). Furthermore, conceptual processing occurs through simulation (i.e., neural reenactment) of those neural states implicated during bodily interaction with the environment. For example, when one thinks about the concept of a football, simulations of previous sensory experience (e.g., what a football looks like when thrown), motor experience (e.g., catching a football), emotional experience (e.g., the excitement that accompanies catching a football), and introspective experience (e.g., knowing the importance of catching a football during a football game) underlie the understanding of this particular concept. Perceptual symbol systems theory can thus be extended to account for the facilitatory imageability and BOI effects in lexical processing in the following manner. First, words that refer to things that afford much sensory experience (e.g., peaches) will develop richer sensory representations than will words that refer to things that do not afford much sensory experience (e.g., fraud). Second, words that refer to things that a human body can easily interact with (e.g., purses) will develop richer motor representations than will words that refer to things that a human body cannot easily interact with (e.g., cliffs). According to perceptual symbol systems theory, richer representations, whether sensory or motor, will elicit richer simulations.

Additionally, we have used a semantic feedback framework to account for the facilitatory effects of richer semantic representations in visual word recognition tasks (e.g., Siakaluk, Pexman, Aguilera, et al., 2008; Siakaluk, Pexman, Sears, et al., 2008; Wellsby et al., 2011). The semantic feedback framework has several important assumptions. First, different word characteristics are processed in different, dedicated sets of units, such that semantic information is processed within semantic units, phonological information is processed within phonological units, and orthographic information is processed within orthographic units. Second, these different sets of units are interconnected so that there is bidirectional information flow between sets of units, and thus processing in one set of units (e.g., semantic processing) may influence processing in another set of units (e.g., phonological processing). Third, the impact of information flow from one set of units to another depends on the nature of the connections between the two sets of units. Within this framework, words rated high in imageability and words rated high in BOI evoke richer semantic activation among the semantic units than do words rated low in imageability or low in BOI. Two outcomes follow. First, for tasks in which responses are based on either orthographic processing (e.g., lexical decision) or phonological processing (e.g., phonological lexical decision), the richer semantic activation for words rated high in either imageability or BOI produces greater feedback from semantics to orthography and to phonology. This feedback leads to faster settling among the orthographic units (resulting in faster lexical decision latencies) and among the phonological units (resulting in faster phonological lexical decision latencies). Second, for tasks in which responses are based on semantic processing (e.g., semantic categorization), the richer semantic activation for words rated high in either imageability or BOI leads to faster settling among the semantic units themselves (resulting in faster semantic categorization latencies).

The studies cited above, reporting facilitatory imageability and BOI effects, have all used monosyllabic words as stimuli. As was noted by Yap and Balota (2009), the use of monosyllabic words in visual word recognition studies is typical, for at least two reasons. First, monosyllabic words are simpler stimuli, in the sense that they do not possess certain structural qualities, such as syllabic segmentation, and thus experimental and statistical control of extraneous variables is easier to accomplish for these stimuli. Second, many of the variables that visual word recognition researchers have examined have been normed extensively for monosyllabic words but not for multisyllabic words. Despite these realities, examining the influence of linguistic variables in visual word recognition primarily with monosyllabic words is a limited strategy, since the majority of English words are multisyllabic. Yap and Balota further noted that “although the past emphasis on monosyllabic words is understandable, it is possible that the results from monosyllabic studies may not necessarily generalize to more complex multisyllabic words” (p. 503). This issue (namely, whether the effects of linguistic variables observed with monosyllabic words generalize to multisyllabic words) is important, yet it has received little attention. To our knowledge, only one previously published article has explicitly compared the influence of semantics in visual word recognition between monosyllabic and multisyllabic words: the Yap and Balota article cited above. There are orthographic and phonological differences between monosyllabic and multisyllabic words, and it seems reasonable to assume that not all variables will exert the same effects with multisyllabic stimuli. Thus, there is a need to determine whether effects of sensorimotor experience, in particular, extend to recognition of multisyllabic words, since models of embodied cognition predict that they should.

The present study

The primary purposes of the present study were as follows. First, we wanted to collect imageability ratings and BOI ratings for a large set of multisyllabic words. Second, we wanted to examine whether independent facilitatory effects of imageability and BOI can be observed with multisyllabic words in picture-naming and word-naming (to date, there are no published results regarding whether imageability and BOI exert separate, independent effects in these two tasks). Third, we wanted to examine whether independent facilitatory effects of imageability and BOI extend to multisyllabic words in lexical decision and semantic categorization. To allow for between-task comparisons of the effects of imageability and BOI, the same subset of multisyllabic words was used in each analysis.

We first chose 278 items that had multisyllabic labels from the International Picture-Naming Project (IPNP) database (Szekely et al., 2004). We did so for two reasons. First, the IPNP database provides picture-naming latencies for its stimuli, which afforded the opportunity to extend research on imageability and BOI effects to picture-naming. Second, it allowed for a comparison between picture-naming for these items and word-naming for their corresponding words. Generally, in studies using monosyllabic words, semantic effects such as the imageability effect are either relatively modest (Balota, Cortese, Sergent-Marshall, Spieler, & Yap, 2004) or not significant (Cortese & Khanna, 2007) in word-naming, since word-naming is a fast task (and thus not particularly sensitive to semantic feedback, which may take time to accrue) that does not require participants to identify the meanings of words. Importantly, with the present extension to multisyllabic words, word-naming response latencies were expected to be longer and, therefore, potentially more sensitive to semantic influences such as imageability and BOI. Thus, we predicted facilitatory effects of imageability and BOI in word-naming using multisyllabic words. In addition to the items collected from the IPNP database that were used in our picture and word recognition analyses, we also collected imageability and BOI ratings for an additional 321 multisyllabic items selected from the N-Watch database (Davis, 2005).

Imageability ratings and BOI ratings

Method

Participants

Two groups of University of Calgary undergraduate students (one comprising 57 individuals, and the other comprising 59 individuals) provided imageability ratings, and 27 University of Northern British Columbia undergraduate students provided BOI ratings for the 278 items selected from the IPNP database. Two additional groups of University of Calgary undergraduate students (one comprising 41 individuals, and the other comprising 43 individuals) provided imageability ratings, and 41 University of Calgary undergraduate students provided BOI ratings for the 321 items selected from the N-Watch database. All participants were native English speakers and received bonus course credit for their participation.

Stimuli

First, we selected from the IPNP database 278 stimuli that we determined had multisyllabic labels. Second, we selected a further 321 multisyllabic stimuli from the N-Watch database. In total, we collected imageability ratings and BOI ratings for 599 multisyllabic items, all of which are included in the archived materials.

As has been noted, two of the primary purposes of the present study were to examine whether imageability and BOI effects are observed in picture-naming and word-naming and to make comparisons of the effects of these two variables between these two tasks. During the process of preparing the ratings materials, we discovered that 30 of the items selected from the IPNP database either had multiword names (e.g., can opener) or were not found in the English Lexicon Project (ELP) database (e.g., unicycle) (Balota et al., 2007). In addition, in order to tightly control for effects of initial phoneme in the hierarchical multiple regression analyses for picture-naming and word-naming, we removed the 25 items that started with a vowel (e.g., arrow). Thus, there were a total of 223 multisyllabic items in the hierarchical multiple regression analyses reported below.
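The two exclusion steps above (278 → 248 → 223 items) amount to a simple filter. The sketch below is illustrative only: the `Item` record and its `in_elp` flag are hypothetical, and it approximates "starts with a vowel" by the first letter of the label, whereas the criterion that matters for initial-phoneme control is phonological (e.g., *hour* begins with a vowel sound but not a vowel letter).

```python
from dataclasses import dataclass

VOWEL_LETTERS = set("aeiou")

@dataclass
class Item:
    label: str    # picture label, e.g. "basket" or "can opener"
    in_elp: bool  # hypothetical flag: label has an entry in the ELP database

def select_items(items):
    """Return (after_step1, after_step2) following the two exclusion steps in the text."""
    # Step 1: drop multiword labels and labels missing from the ELP.
    step1 = [it for it in items if " " not in it.label.strip() and it.in_elp]
    # Step 2: drop labels whose first letter is a vowel (a spelling-based proxy for a
    # vowel-initial pronunciation; a phonological transcription would be more accurate).
    step2 = [it for it in step1 if it.label[0].lower() not in VOWEL_LETTERS]
    return step1, step2

items = [Item("can opener", True), Item("unicycle", False),
         Item("arrow", True), Item("basket", True)]
step1, step2 = select_items(items)
print([it.label for it in step1])  # ['arrow', 'basket']
print([it.label for it in step2])  # ['basket']
```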

Apparatus and procedure

Imageability ratings were collected in the following manner. For the items selected from the IPNP database, two versions of an online questionnaire were prepared, with 139 items randomly listed on each. For the items selected from the N-Watch database, two further versions were prepared, with items randomly listed on each: one version had 161 items, and the other had 160 items. On all four imageability questionnaires, 35 abstract items were also presented in order to encourage participants to use the entire rating scale (the data from the abstract items were not included in the results reported in this article). As such, each of the two IPNP versions had 174 items, randomly listed on seven pages (about 25 items per page), and the two N-Watch versions had 196 and 195 items, respectively, randomly listed on eight pages (again, about 25 items per page). The page order of each of the four questionnaires was randomized separately for each participant.

BOI ratings were collected in the following manner. For the items selected from the IPNP database, a questionnaire was prepared such that the 278 items were randomly listed on 31 pages (30 pages had 9 items and 1 page had 8 items). For the items selected from the N-Watch database, a questionnaire was prepared such that the 321 items were randomly listed on 13 pages (with about 25 items per page). The pages of both questionnaires were randomized separately for each participant.
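The questionnaire layouts described above (fillers mixed in, pages of roughly equal size, page order randomized per participant) can be sketched as follows. This is a minimal illustration under our own assumptions: items are shuffled once per questionnaire version, spread as evenly as possible across pages, and only the page order varies across participants. The function names are hypothetical.

```python
import random

def build_questionnaire(critical, fillers, n_pages, seed=None):
    """Shuffle critical items together with fillers and split them into n_pages
    pages whose sizes differ by at most one item."""
    rng = random.Random(seed)
    items = list(critical) + list(fillers)
    rng.shuffle(items)
    base, extra = divmod(len(items), n_pages)
    pages, start = [], 0
    for p in range(n_pages):
        size = base + (1 if p < extra else 0)  # the first `extra` pages get one more item
        pages.append(items[start:start + size])
        start += size
    return pages

def pages_for_participant(pages, participant_seed):
    """Return the same pages in a participant-specific random order."""
    order = list(range(len(pages)))
    random.Random(participant_seed).shuffle(order)
    return [pages[i] for i in order]

# Imageability, IPNP version: 139 critical items + 35 abstract fillers on 7 pages.
img = build_questionnaire([f"item{i}" for i in range(139)],
                          [f"abstract{i}" for i in range(35)], n_pages=7, seed=1)
print([len(p) for p in img])  # [25, 25, 25, 25, 25, 25, 24]

# BOI, IPNP version: 278 items, no fillers, on 31 pages.
boi = build_questionnaire([f"item{i}" for i in range(278)], [], n_pages=31, seed=2)
print(sorted({len(p) for p in boi}), sum(len(p) == 9 for p in boi))  # [8, 9] 30
```

With 278 BOI items on 31 pages, the even split gives 30 pages of 9 items and 1 page of 8, matching the layout reported above; the 174-item imageability versions come out as six pages of 25 and one of 24.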

For both the imageability and BOI questionnaires, a scale from 1 to 7 was placed to the right of each item, with 1 indicating either low imageability or low BOI and 7 indicating either high imageability or high BOI. Participants were encouraged to use the entire scale in making their ratings. The instructions for the imageability ratings and the BOI ratings are presented in Appendices 1 and 2, respectively.

Results and discussion

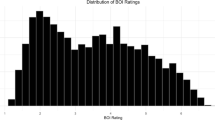

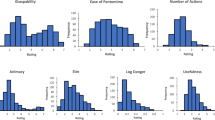

Analyses of the ratings data for the entire set of 599 items showed that the mean imageability rating was 5.58 (SD = 0.81) and the mean BOI rating was 4.13 (SD = 1.12). The correlation between these two variables was small but significant, r(599) = .12, p < .01. The imageability ratings and the BOI ratings for each item are presented in the archived materials. Analyses were also conducted on the items from the two databases separately. For the 278 items selected from the IPNP database, the mean imageability rating was 6.08 (SD = 0.37), and the mean BOI rating was 4.06 (SD = 1.40). The correlation between these two variables was not significant, r(278) = −.08, p = .19. For the 321 items selected from the N-Watch database, the mean imageability rating was 5.14 (SD = 0.83), and the mean BOI rating was 4.19 (SD = 0.81). The correlation between these two variables was significant, r(321) = .43, p < .001. The nonsignificant correlation for the items selected from the IPNP database is likely due to the fact that all the IPNP items were, of necessity, highly imageable (so that pictures could easily be made for them). The correlation was much stronger for the items selected from the N-Watch database, likely because these items had a mean imageability rating that was almost a full point lower than that for the IPNP items (5.14 vs. 6.08, respectively) and the standard deviation was more than double that of the IPNP items (0.83 vs. 0.37, respectively).

Picture-naming and word-naming

Method

Stimuli

The picture-naming and word-naming analyses presented below were based on the analysis of the 223 items that had single-word, multisyllabic labels that began with a consonant. For each of these 223 stimuli, values for HAL log-frequency, number of morphemes, Levenshtein orthographic distance, and Levenshtein phonological distance (see Yarkoni, Balota, & Yap, 2008) were obtained from the ELP database; values for number of syllables were obtained from the N-Watch database; and values for age of acquisition and objective visual complexity of the pictures were obtained from the IPNP database. Lastly, to control for the effects of initial phoneme, dichotomous variables were used to code the following 13 features (1 = presence of the feature; 0 = absence of the feature): affricative, alveolar, bilabial, dental, fricative, glottal, labiodental, liquid, nasal, palatal, stop, velar, and voiced (Spieler & Balota, 1997; Yap & Balota, 2009).
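The initial-phoneme control turns each item's first phoneme into 13 binary predictors. The mapping below is a partial, illustrative sketch using ARPAbet-style symbols; the feature assignments are our own approximations (e.g., /ʃ/ and /tʃ/ coded as palatal, /r/ as an alveolar liquid) and are not guaranteed to match the coding scheme of Spieler and Balota (1997) or Yap and Balota (2009).

```python
FEATURES = ["affricative", "alveolar", "bilabial", "dental", "fricative", "glottal",
            "labiodental", "liquid", "nasal", "palatal", "stop", "velar", "voiced"]

# Partial, approximate feature sets for consonant onsets (ARPAbet-style symbols).
PHONEME_FEATURES = {
    "P": {"bilabial", "stop"},
    "B": {"bilabial", "stop", "voiced"},
    "M": {"bilabial", "nasal", "voiced"},
    "T": {"alveolar", "stop"},
    "D": {"alveolar", "stop", "voiced"},
    "N": {"alveolar", "nasal", "voiced"},
    "K": {"velar", "stop"},
    "G": {"velar", "stop", "voiced"},
    "F": {"labiodental", "fricative"},
    "V": {"labiodental", "fricative", "voiced"},
    "TH": {"dental", "fricative"},
    "S": {"alveolar", "fricative"},
    "Z": {"alveolar", "fricative", "voiced"},
    "SH": {"palatal", "fricative"},
    "CH": {"palatal", "affricative"},
    "JH": {"palatal", "affricative", "voiced"},
    "HH": {"glottal", "fricative"},
    "L": {"alveolar", "liquid", "voiced"},
    "R": {"alveolar", "liquid", "voiced"},
}

def code_initial_phoneme(phoneme):
    """Return 13 dummy codes in FEATURES order (1 = feature present, 0 = absent)."""
    try:
        present = PHONEME_FEATURES[phoneme]
    except KeyError:
        raise ValueError(f"no feature coding for onset {phoneme!r}") from None
    return [1 if f in present else 0 for f in FEATURES]

print(code_initial_phoneme("B"))  # [0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1]
print(code_initial_phoneme("S"))  # [0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0]
```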

Data analysis

The picture-naming and word-naming data were analyzed using hierarchical multiple regression (see Note 1). As has been noted, the picture-naming latencies were obtained from the IPNP database, and the word-naming latencies were obtained from the ELP database. For the picture-naming latencies, several items had more than one mean latency in the IPNP database; for each of these items, we simply used the first mean latency listed. In the first step of each analysis, we entered the 13 dichotomous variables to control for the effects of initial phoneme. In the second step, the following control variables were entered: HAL log-frequency, number of syllables, number of morphemes, Levenshtein phonological distance (see Note 2), and age of acquisition. For the picture-naming analysis, objective visual complexity was also entered in step 2. In the third step of each analysis, imageability and BOI were entered. We used hierarchical multiple regression because it provides two important pieces of information: the change in R² when imageability and BOI are added to the analysis together, and whether each of these two variables accounts for a significant amount of unique latency variability.
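The three-step procedure can be sketched with ordinary least squares in NumPy. This is an illustrative sketch, not the software the analyses were run in (which the text does not specify): predictors are entered block by block, R² is recomputed after each block, ΔR² is the increase contributed by a block, and the semipartial correlation of a single predictor is the square root of the R² lost when that predictor alone is dropped from the full model, signed by its regression coefficient. The F-change statistic is the standard test of ΔR²; its p value would come from an F distribution (e.g., `scipy.stats.f.sf`), omitted here to keep the block dependency-light. The data are synthetic.

```python
import numpy as np

def _ols(X, y):
    """OLS coefficients (intercept first) and R^2 for y regressed on the columns of X."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return beta, r2

def hierarchical_r2(blocks, y):
    """Enter blocks (each an n x k array) cumulatively; return R^2 and Delta R^2 per step."""
    r2s = [_ols(np.hstack(blocks[: i + 1]), y)[1] for i in range(len(blocks))]
    deltas = [r2s[0]] + [b - a for a, b in zip(r2s, r2s[1:])]
    return r2s, deltas

def f_change(r2_reduced, r2_full, n, k_full, k_added):
    """F test of Delta R^2 with (k_added, n - k_full - 1) degrees of freedom."""
    return ((r2_full - r2_reduced) / k_added) / ((1.0 - r2_full) / (n - k_full - 1))

def semipartial(X, y, j):
    """Signed semipartial correlation of column j of X in the full model."""
    beta, r2_full = _ols(X, y)
    _, r2_without = _ols(np.delete(X, j, axis=1), y)
    return np.sign(beta[j + 1]) * np.sqrt(max(r2_full - r2_without, 0.0))

# Synthetic illustration: one "control" block, then an "imageability + BOI" block.
rng = np.random.default_rng(0)
n = 223
controls = rng.normal(size=(n, 3))
semantic = rng.normal(size=(n, 2))
y = 650 + controls @ [10.0, -5.0, 3.0] + semantic @ [-15.0, -10.0] + rng.normal(0, 30, n)
r2s, deltas = hierarchical_r2([controls, semantic], y)
X = np.hstack([controls, semantic])
print("Delta R^2 for step 2:", round(deltas[1], 3))
print("F change:", round(f_change(r2s[0], r2s[1], n, X.shape[1], 2), 2))
print("sr (imageability, BOI):", [round(semipartial(X, y, j), 3) for j in (3, 4)])
```

Because the blocks are nested, the ΔR² values sum to the final R², and a negative semipartial correlation corresponds to the facilitatory pattern described in the text (higher ratings, shorter latencies).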

Results and discussion

The mean response latencies for picture-naming and word-naming were 1,062 ms (SD = 230.9) and 644 ms (SD = 60.0), respectively. Summary statistics for each of the predictor variables used in the present study are shown in Table 1. Zero-order correlations between the predictor variables and the measures of performance are shown in Table 2. The multiple regression results are shown in Table 3.

Several findings are of particular importance for the present study. First, for both picture-naming and word-naming, there was a significant change in R² when imageability and BOI were added to the analysis. Interestingly, imageability and BOI together accounted for considerably more additional response latency variability in picture-naming (ΔR² = .133) than in word-naming (ΔR² = .077), about 1.7 times as much. Second, imageability accounted for a significant amount of unique response latency variability in both tasks, as did BOI in picture-naming; in word-naming, the BOI effect approached significance (p = .057). Third, all the semipartial correlations for imageability and BOI were negative, indicating that higher imageability ratings and higher BOI ratings were associated with shorter picture-naming and word-naming latencies.

The picture-naming and word-naming results of the present study can be readily accounted for by perceptual symbol systems theory and the semantic feedback account of lexical processing described above, in the following manner. For picture-naming, a pictured object first activates structural knowledge of what the picture represents, which then activates semantic information associated with that structural knowledge (Alario et al., 2004). For word-naming, a presented word activates its associated semantic information. Pictures and words that depict or refer to concepts rated high in imageability and high in BOI elicit richer semantic representations; that is, they elicit richer sensorimotor simulations among the semantic units. The increased semantic activation leads to greater semantic feedback from the semantic units to the phonological units, resulting in faster settling among the phonological units and facilitation of picture-naming and word-naming performance (see Siakaluk, Pexman, Aguilera, et al., 2008, for a similar account applied to performance in phonological lexical decision). In the present study, picture-naming latencies were considerably longer than word-naming latencies (1,062 vs. 644 ms), likely because picture-naming requires the additional process of mapping the structural knowledge activated by a pictured object onto semantic knowledge. This additional process may have allowed more time for semantic feedback to accrue, so that the combined effects of imageability and BOI were greater in picture-naming than in word-naming. Importantly, the present study extends previous research by showing that independent facilitatory imageability and BOI effects are observed in picture-naming and word-naming for multisyllabic stimuli.

Lexical decision and semantic categorization

We thought it important to also examine imageability and BOI effects for our multisyllabic words in tasks incorporating a decisional component: lexical decision and semantic categorization. These are the tasks that have been used most frequently in the past to study semantic effects in visual word recognition for monosyllabic words but have not previously been extended to multisyllabic words. We chose to do this by using lexical decision latency data from the ELP database and by collecting semantic categorization latency data. The lexical decision analysis allowed us to further examine semantic feedback effects for multisyllabic words—but, in this case, from semantics to orthography. The semantic categorization analysis allowed us to examine whether richer semantic (i.e., sensorimotor) activation leads to faster settling among the semantic units for multisyllabic words.

Method

Participants

Twenty-nine undergraduate students from the University of Calgary participated in the semantic categorization experiment. All the participants were native English speakers, and all reported normal or corrected-to-normal vision.

Stimuli

The critical stimuli were the 248 items selected from the IPNP database that had single-word, multisyllabic labels (including the 25 that began with a vowel). An additional 248 abstract words were included as fillers, for a total of 496 words. However, to allow for cross-task comparisons with the picture-naming and word-naming analyses, only the data from the 223 items beginning with a consonant were used in the hierarchical multiple regression analyses for semantic categorization and lexical decision.

Apparatus and procedure

For the semantic categorization task, participants were instructed to decide whether each word referred to a concrete thing or an abstract thing and to make their decisions as quickly and as accurately as possible. Participants made a yes or no response for each word by pressing the appropriate button on a response box. The stimuli were presented in the center of a 20-in. monitor controlled by a computer using E-Prime software (Schneider, Eschmann, & Zuccolotto, 2002). A trial was initiated by a fixation marker that appeared at the center of the computer display. The fixation marker was presented for 1,000 ms and was then replaced by a stimulus item. Response latencies were measured to the nearest millisecond. Stimulus order was randomized separately for each participant. The intertrial interval was 2,000 ms. Before beginning the experiment, participants were given practice trials consisting of five concrete words and five abstract words.

As has been noted, the response latencies for the lexical decision analysis were taken from the ELP database.

Data analysis

The lexical decision and semantic categorization data were analyzed using hierarchical multiple regression. In the first step of each analysis, the following control variables were entered: HAL log-frequency, number of syllables, number of morphemes, Levenshtein orthographic distance, and age of acquisition. In the second step of each analysis, imageability and BOI were entered.
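Taken together with the picture-naming and word-naming analyses and Note 2, the four regression models differ only in their control blocks. The sketch below records that design as data; the variable names are hypothetical labels, not column names from the IPNP, ELP, or N-Watch databases.

```python
INITIAL_PHONEME = ["affricative", "alveolar", "bilabial", "dental", "fricative", "glottal",
                   "labiodental", "liquid", "nasal", "palatal", "stop", "velar", "voiced"]
VERBAL_TASKS = {"picture_naming", "word_naming"}
BUTTON_TASKS = {"lexical_decision", "semantic_categorization"}

def model_steps(task):
    """Ordered predictor blocks for one task's hierarchical regression."""
    if task not in VERBAL_TASKS | BUTTON_TASKS:
        raise ValueError(f"unknown task: {task}")
    verbal = task in VERBAL_TASKS
    # Note 2: phonological distance for spoken responses, orthographic for button presses.
    distance = "levenshtein_phon_dist" if verbal else "levenshtein_orth_dist"
    controls = ["hal_log_freq", "n_syllables", "n_morphemes", distance, "age_of_acquisition"]
    if task == "picture_naming":
        controls.append("visual_complexity")
    steps = [controls, ["imageability", "boi"]]
    # Only the naming tasks enter the 13 initial-phoneme codes as a first step.
    return [INITIAL_PHONEME] + steps if verbal else steps

for task in ["picture_naming", "lexical_decision"]:
    print(task, [len(block) for block in model_steps(task)])
# picture_naming [13, 6, 2]
# lexical_decision [5, 2]
```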

Results and discussion

There were seven items in the semantic categorization data that had error rates greater than 30%. These items were removed only from the semantic categorization analysis. Thus, the lexical decision analysis was based on the full set of 223 words, whereas the semantic categorization analysis was based on 216 words. The mean response latencies for lexical decision and semantic categorization were 677 ms (SD = 80.5) and 840 ms (SD = 109.2), respectively. Zero-order correlations between the predictor variables and the measures of performance are shown in Table 2. The multiple regression results are shown in Table 4.
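The item-level exclusion and averaging can be sketched as follows. This is an assumption-laden illustration: the trial format is hypothetical, and it averages latencies over correct trials only, a common convention that the text does not state explicitly. Items with error rates above 30% are dropped, as described above.

```python
from collections import defaultdict
from statistics import mean

def item_summary(trials, max_error=0.30):
    """trials: iterable of (item, rt_ms, correct). Returns {item: mean correct RT}
    for items whose error rate does not exceed max_error."""
    rts, n, errors = defaultdict(list), defaultdict(int), defaultdict(int)
    for item, rt, correct in trials:
        n[item] += 1
        if correct:
            rts[item].append(rt)
        else:
            errors[item] += 1
    return {item: mean(rts[item]) for item in n
            if errors[item] / n[item] <= max_error and rts[item]}

trials = [("drum", 800, True), ("drum", 900, True), ("drum", 700, False),
          ("kite", 820, True), ("kite", 880, True), ("kite", 860, True), ("kite", 840, True)]
print(item_summary(trials))  # {'kite': 850}  ("drum" has a 33% error rate)
```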

As was the case with the picture-naming and word-naming results, there was a significant change in R² when imageability and BOI were added to the lexical decision and semantic categorization analyses (ΔR² = .073 and .226, respectively). In addition, imageability and BOI again each accounted for a significant amount of unique response latency variability in both analyses, and all the semipartial correlations for imageability and BOI were negative, indicating that higher imageability ratings and higher BOI ratings were associated with shorter lexical decision and semantic categorization latencies. Finally, the semantic variables (imageability and BOI) accounted for a particularly large amount of variance in the semantic categorization task. This is likely because semantic categorization depends on semantic activation per se, and not just on indirect semantic feedback.

The lexical decision and semantic categorization results of the present study can also be accounted for by perceptual symbol systems theory and the semantic feedback account of lexical processing. As has been noted, words rated high in imageability and words rated high in BOI (as compared with words rated low on these dimensions) elicit richer semantic representations (or sensorimotor simulations) among the semantic units. For lexical decision, the increased semantic activation leads to greater semantic feedback from the semantic units to the orthographic units, resulting in faster settling among the orthographic units and facilitation of lexical decision performance for words rated high in either imageability or BOI (Siakaluk, Pexman, Aguilera, et al., 2008). For semantic categorization, the increased activation among the semantic units associated with words rated high in imageability and BOI leads to faster settling among the semantic units and facilitation of semantic categorization performance for these types of words (Siakaluk, Pexman, Sears, et al., 2008; Wellsby et al., 2011). Importantly, the present study extends our previous research by showing that facilitatory imageability and BOI effects are observed in lexical decision and semantic categorization for multisyllabic stimuli.

One final set of findings deserves further comment: the effects of age of acquisition and imageability in the present study. Previous research using monosyllabic words has reported mixed effects of imageability. Balota et al. (2004) reported significant effects of imageability in both word-naming and lexical decision tasks, with larger effects in lexical decision. However, they did not include age of acquisition as a predictor variable in their study, because norms for this variable were not available at the time. More recently, Cortese and Khanna (2007) examined the unique contributions of imageability and age of acquisition in word-naming and lexical decision. They reported that with the inclusion of age of acquisition, imageability exerted a significant effect only in lexical decision, whereas age of acquisition exerted a significant effect in both tasks, although the effect was larger in lexical decision. Cortese and Khanna concluded that although “imageability remains a significant predictor of lexical decision performance . . . its role on naming performance is tenuous” (p. 1079).

The pattern of results observed in the present study (which used multisyllabic words) for imageability and age of acquisition is very different from that observed in the Cortese and Khanna (2007) study (which used monosyllabic words). We observed an imageability effect in the analyses of word-naming and lexical decision data, but no age-of-acquisition effect in either task. There are several possible explanations for these different results. One possibility is that the different age-of-acquisition measures used in the two studies affected the results. The present study used age-of-acquisition norms from the IPNP database, which are based on the MacArthur Communicative Development Inventories (Fenson et al., 1994). The MacArthur inventory, which is completed by parents, uses a 3-point scale: 1 = words acquired between 8 and 16 months, 2 = words acquired between 17 and 30 months, and 3 = words not acquired during infancy (i.e., words acquired after 30 months). Cortese and Khanna assessed age of acquisition with a 7-point scale completed by undergraduate students: 1 = 0–2 years of age, 2 = 2–4 years, 3 = 4–6 years, 4 = 6–8 years, 5 = 8–10 years, 6 = 10–12 years, and 7 = 13+ years. The differences between these scales may have contributed to the different pattern of effects for age of acquisition between our study and that of Cortese and Khanna, and they point to the need for more age-of-acquisition norms for multisyllabic words.

Another possibility is that age-of-acquisition effects occur earlier in processing, whereas imageability effects occur later in processing. As would be expected, word-naming response latencies were much longer in the present study (644 ms) than in the Cortese and Khanna (2007) study (469 ms), as were, to a smaller degree, lexical decision response latencies (677 vs. 617 ms, respectively). It may be that for monosyllabic words, which are responded to relatively quickly, age of acquisition exerts a significant effect but imageability does not, whereas for multisyllabic words, which are responded to relatively slowly, imageability exerts a significant effect but age of acquisition does not.

A third possibility is that the range of imageability values affected the influence of this variable on word-naming and lexical decision performance. The range of imageability values in Cortese and Khanna (2007) may have been much greater than in the present study, in which the stimuli used in the regression analyses all had relatively high imageability ratings. It is therefore possible that imageability exerts larger effects (as in the present study) when the stimuli consist mainly of words rated relatively high in imageability. Further experimentation is necessary to determine which, if any, of these possibilities is responsible for the different pattern of imageability and age-of-acquisition effects with multisyllabic words, as in the present study, versus monosyllabic words, as in Cortese and Khanna.

Conclusion

In the present study, we collected imageability ratings and BOI ratings for 599 multisyllabic nouns. The results of the hierarchical multiple regression analyses, which were conducted on a subset of 223 items, are important for several reasons. First, this is the first report of facilitatory BOI effects in picture-naming. Second, this is the first report of facilitatory BOI effects in word-naming, lexical decision, and semantic categorization using multisyllabic words. Third, the present results show that imageability and BOI make independent contributions to performance in picture-naming and in several visual word recognition tasks that use multisyllabic stimuli. Fourth, the present results demonstrate that knowledge gained through sensorimotor experience is an important form of knowledge that is accessed in lexical conceptual processing across different stimulus formats (i.e., pictures, words) and different tasks, each with both shared and unique processing demands. Theories and computational models of lexical conceptual processing therefore need to accommodate the embodied effects reported in the present study. These ratings should prove useful to researchers who wish to manipulate or control for variables that gauge knowledge gained through sensorimotor experience in lexical and semantic tasks.
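The claim that imageability and BOI make independent contributions rests on the change in explained variance (ΔR²) when each variable is entered after the control variables. The sketch below shows that computation with ordinary least squares in NumPy on simulated item means. The data, effect sizes, and function names are hypothetical, and the sketch omits the F test on the R² change that a full hierarchical analysis would report.

```python
import numpy as np


def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """R^2 of an OLS fit of y on the columns of X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return 1.0 - ss_res / ss_tot


def delta_r_squared(controls: np.ndarray, added: np.ndarray, y: np.ndarray) -> float:
    """Variance explained by `added` over and above the `controls` entered first."""
    full = np.column_stack([controls, added])
    return r_squared(full, y) - r_squared(controls, y)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_items = 223  # size of the regression subset; all values below are simulated
    controls = rng.normal(size=(n_items, 3))  # hypothetical stand-ins, e.g., frequency, syllables, AoA
    imageability = rng.normal(size=n_items)   # hypothetical z-scored ratings
    latency = (700 - 25 * controls[:, 0] + 10 * controls[:, 1]
               - 15 * imageability + rng.normal(scale=40, size=n_items))
    print(round(delta_r_squared(controls, imageability, latency), 3))
```

Entering the controls first means that any variance imageability shares with them is credited to the controls, so ΔR² is a conservative estimate of its unique contribution. A predictor that is an exact linear combination of the controls yields a ΔR² of essentially zero.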

Notes

Because we did not have access to the original data from the IPNP database, we used unstandardized mean item response latencies for all of the hierarchical multiple regression analyses reported in the present study.

As is shown in Table 2, Levenshtein orthographic distance and Levenshtein phonological distance were correlated at .90 for the stimulus set used in the hierarchical multiple regression analyses. To avoid multicollinearity (Tabachnick & Fidell, 2007), we used the Levenshtein phonological distance variable as a control variable in the two verbal response tasks (i.e., picture-naming and word-naming) and the Levenshtein orthographic distance variable as a control variable in the two buttonpress tasks (i.e., lexical decision and semantic categorization).
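One way to see why a correlation of .90 is a concern: for a pair of predictors considered in isolation, the variance inflation factor (VIF) of each is 1/(1 − r²), so r = .90 inflates the sampling variance of each coefficient by a factor of about 5.3. The sketch below computes that value and records the task-by-task choice of control variable described above. The dictionary and function names are illustrative only, and with other predictors in the model the VIF would depend on the multiple correlation with all of them, not just this pair.

```python
def pairwise_vif(r: float) -> float:
    """VIF for either of two predictors whose only collinearity is their correlation r."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return 1.0 / (1.0 - r ** 2)


# Which Levenshtein distance served as the control variable in each task.
LEVENSHTEIN_CONTROL = {
    "picture naming": "phonological",           # verbal response
    "word naming": "phonological",              # verbal response
    "lexical decision": "orthographic",         # buttonpress response
    "semantic categorization": "orthographic",  # buttonpress response
}

if __name__ == "__main__":
    print(round(pairwise_vif(0.90), 2))  # 5.26
```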

References

Alario, F.-X., Ferrand, L., Laganaro, M., New, B., Frauenfelder, U. H., & Segui, J. (2004). Predictors of picture naming speed. Behavior Research Methods, Instruments, & Computers, 36, 140–155.

Balota, D. A., Cortese, M. J., Sergent-Marshall, S., Spieler, D. H., & Yap, M. J. (2004). Visual word recognition of single-syllable words. Journal of Experimental Psychology: General, 133, 283–316.

Balota, D. A., Yap, M. J., Cortese, M. J., Hutchison, K. A., Kessler, B., Loftis, B., et al. (2007). The English Lexicon Project. Behavior Research Methods, 39, 445–459.

Barsalou, L. W. (1999). Perceptual symbol systems. The Behavioral and Brain Sciences, 22, 577–660.

Barsalou, L. W. (2003). Abstraction in perceptual symbol systems. Philosophical Transactions of the Royal Society of London B, 358, 1177–1187.

Clark, A. (1997). Being there: Putting brain, body, and world together again. Cambridge, MA: MIT Press.

Cortese, M. J., & Fugett, A. (2004). Imageability ratings for 3,000 monosyllabic words. Behavior Research Methods, Instruments, & Computers, 36, 384–387.

Cortese, M. J., & Khanna, M. M. (2007). Age of acquisition predicts naming and lexical-decision performance above and beyond 22 other predictor variables: An analysis of 2,342 words. Quarterly Journal of Experimental Psychology, 60, 1072–1082.

Cortese, M. J., Simpson, G. B., & Woolsey, S. (1997). Effects of association and imageability on phonological mapping. Psychonomic Bulletin & Review, 4, 226–231.

Davis, C. J. (2005). N-Watch: A program for deriving neighborhood size and other psycholinguistic statistics. Behavior Research Methods, 37, 65–70.

de Groot, A. M. B. (1989). Representational aspects of word imageability and word frequency as assessed through word association. Journal of Experimental Psychology: Learning, Memory, and Cognition, 15, 824–845.

Fenson, L., Dale, P. S., Reznick, J. S., Bates, E., Thal, D. J., & Pethick, S. J. (1994). Variability in early communicative development. Monographs of the Society for Research in Child Development, 59(5, Serial No. 242).

Glenberg, A. M., & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin & Review, 9, 558–565.

James, C. T. (1975). The role of semantic information in lexical decisions. Journal of Experimental Psychology: Human Perception and Performance, 1, 130–136.

Kroll, J. F., & Mervis, J. S. (1986). Lexical access for concrete and abstract words. Journal of Experimental Psychology: Learning, Memory, and Cognition, 12, 92–107.

Lakoff, G., & Johnson, M. (1999). Philosophy in the flesh: The embodied mind and its challenge to Western thought. New York: Basic Books.

Schneider, W., Eschmann, A., & Zuccolotto, A. (2002). E-Prime user’s guide. Pittsburgh, PA: Psychology Software Tools, Inc.

Siakaluk, P. D., Pexman, P. M., Aguilera, L., Owen, W. J., & Sears, C. R. (2008a). Evidence for the activation of sensorimotor information during visual word recognition: The body–object interaction effect. Cognition, 106, 433–443.

Siakaluk, P. D., Pexman, P. M., Sears, C. R., Wilson, K., Locheed, K., & Owen, W. J. (2008b). The benefits of sensorimotor knowledge: Body–object interaction facilitates semantic processing. Cognitive Science, 32, 591–605.

Siakaluk, P. D., Pexman, P. M., Dalrymple, H.-A. R., Stearns, J., & Owen, W. J. (in press). Some insults are more difficult to ignore: The embodied insult Stroop effect. Language and Cognitive Processes. doi:10.1080/01690965.2010.521021

Spieler, D. H., & Balota, D. A. (1997). Bringing computational models of word naming down to the item level. Psychological Science, 8, 411–416.

Strain, E., Patterson, K., & Seidenberg, M. S. (1995). Semantic effects in single-word naming. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21, 1140–1154.

Szekely, A., Jacobsen, T., D’Amico, S., Devescovi, A., Andonova, E., Herron, D., et al. (2004). A new on-line resource for psycholinguistic studies. Journal of Memory and Language, 51, 247–250.

Tabachnick, B. G., & Fidell, L. S. (2007). Using multivariate statistics (5th ed.). Boston: Allyn & Bacon.

Tillotson, S. M., Siakaluk, P. D., & Pexman, P. M. (2008). Body–object interaction ratings for 1,618 monosyllabic nouns. Behavior Research Methods, 40, 1075–1078.

Wellsby, M., Siakaluk, P. D., Owen, W. J., & Pexman, P. M. (2011). Embodied semantic processing: The body–object interaction effect in a non-manual task. Language and Cognition, 3, 1–14.

Wellsby, M., Siakaluk, P. D., Pexman, P. M., & Owen, W. J. (2010). Some insults are easier to detect: The embodied insult detection effect. Frontiers in Psychology, 1, 198. doi:10.3389/fpsyg.2010.00198

Wilson, M. (2002). Six views of embodied cognition. Psychonomic Bulletin & Review, 9, 625–636.

Wilson, N. L., & Gibbs, R. W. (2007). Real and imagined body movement primes metaphor comprehension. Cognitive Science, 31, 721–731.

Yap, M. J., & Balota, D. A. (2009). Visual word recognition of multisyllabic words. Journal of Memory and Language, 60, 502–529.

Yarkoni, T., Balota, D. A., & Yap, M. J. (2008). Moving beyond Coltheart’s N: A new measure of orthographic similarity. Psychonomic Bulletin & Review, 15, 971–979.

Yaxley, R. H., & Zwaan, R. A. (2007). Simulating visibility during language comprehension. Cognition, 105, 229–236.

Acknowledgments

This research was supported by Natural Sciences and Engineering Research Council of Canada (NSERC) Discovery Grants to P.D.S. and P.M.P. and by an NSERC Undergraduate Student Research Award to S.D.R.B. We thank Michael Cortese and two anonymous reviewers for their very helpful comments.

Additional information

An erratum to this article can be found online at http://dx.doi.org/10.3758/s13428-012-0288-8.

Appendices

Appendix 1 Written instructions used for the imageability rating task

Words differ in their capacity to arouse mental images of things or events. Some words arouse a sensory experience, such as a mental picture or sound, very quickly and easily, whereas other words may do so only with difficulty (i.e., after a long delay) or not at all.

The purpose of this experiment is to rate a list of words as to the ease or difficulty with which they arouse mental images. Any word that, in your estimation, arouses a mental image (i.e., a mental picture or sound, or other sensory experience) very quickly and easily should be given a high imagery rating (at the upper end of the numerical scale). Any word that arouses a mental image with difficulty or not at all should be given a low imagery rating (at the lower end of the numerical scale). For example, think of the word “eagle.” “Eagle” would probably arouse an image relatively easily and would be rated as high. “Relevant” would probably do so with difficulty and be rated as low imagery. Because words tend to make you think of other words as associates, it is important that your ratings not be based on this, and that you judge only the ease with which you get a mental image of an object or event in response to each word.

Your imagery ratings will be made on a 1 to 7 scale. A value of 1 will indicate a low imagery rating, and a value of 7 will indicate a high imagery rating. Values of 2 to 6 will indicate intermediate ratings. Please feel free to use the whole range of values to make your judgments. When making your ratings, try to be as accurate as possible, but do not spend too much time on any one word.

| 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Low | | | Medium | | | High |

Appendix 2 Written instructions used for the body–object interaction rating task

Words differ in the extent to which they refer to objects or things that a human body can physically interact with. Some words refer to objects or things that a human body can easily physically interact with, whereas other words refer to objects or things that a human body cannot easily physically interact with. The purpose of this experiment is to rate words as to the ease with which a human body can physically interact with what they represent. For example, the word “chair” refers to an object or thing that a human body can easily physically interact with (e.g., a human body can sit on a chair, or stand on a chair, or move a chair from one part of a room to another), whereas the word “ceiling” refers to an object or thing that a human body cannot easily physically interact with (e.g., a human body could jump up and touch a ceiling). Any word (e.g., “chair”) that in your estimation refers to an object or thing that a human body can easily physically interact with should be given a high body-object interaction rating (at the upper end of the numerical scale). Any word (e.g., “ceiling”) that in your estimation refers to an object or thing that a human body cannot easily physically interact with should be given a low body-object interaction rating (at the lower end of the scale). It is important that you base these ratings on how easily a human body can physically interact with what a word represents, and not on how easily it can be experienced by human senses (e.g., vision, taste, etc.). Also, because words tend to make you think of other words as associates, it is important that your ratings not be based on this and that you judge only the ease with which a human body can physically interact with what a word represents. Remember, all the words are nouns (i.e., objects or things) and you should base your ratings on this fact.

Your body-object interaction ratings will be made on a 1 to 7 scale. A value of 1 will indicate a low body-object interaction rating, and a value of 7 will indicate a high body-object interaction rating. Values of 2 to 6 will indicate intermediate ratings. Please feel free to use the whole range of values provided when making your ratings. Circle the rating that is most appropriate for each word. When making your ratings, try to be as accurate as possible, but do not spend too much time on any one word.

| 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Low | | | Medium | | | High |
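Both rating tasks yield, for each word, a set of 1 to 7 judgments from different participants that are summarized as item norms. The sketch below shows one minimal way to do that with Python's standard library, computing each word's mean, standard deviation, and number of raters. It assumes ratings arrive as (participant, word, rating) records, uses hypothetical data, and applies no participant-exclusion or outlier criteria, so it should not be read as the procedure used to produce the published norms.

```python
from collections import defaultdict
from statistics import mean, stdev


def item_norms(records):
    """Summarize (participant, word, rating) records into {word: (mean, sd, n)}."""
    by_word = defaultdict(list)
    for participant, word, rating in records:
        if rating not in range(1, 8):  # the scale runs from 1 (low) to 7 (high)
            raise ValueError(f"{participant} gave out-of-range rating {rating!r} for {word!r}")
        by_word[word].append(rating)
    return {
        word: (mean(r), stdev(r) if len(r) > 1 else 0.0, len(r))
        for word, r in by_word.items()
    }


if __name__ == "__main__":
    # Hypothetical BOI ratings for the two example words in the instructions.
    data = [
        ("p1", "chair", 7), ("p2", "chair", 6), ("p3", "chair", 7),
        ("p1", "ceiling", 2), ("p2", "ceiling", 1), ("p3", "ceiling", 3),
    ]
    for word, (m, sd, n) in item_norms(data).items():
        print(f"{word}: M = {m:.2f}, SD = {sd:.2f}, n = {n}")
```

Rejecting values outside 1 to 7 catches data-entry errors (for example, a circled rating transcribed as 8) before they distort an item's mean.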

Cite this article

Bennett, S.D.R., Burnett, A.N., Siakaluk, P.D. et al. Imageability and body–object interaction ratings for 599 multisyllabic nouns. Behav Res 43, 1100–1109 (2011). https://doi.org/10.3758/s13428-011-0117-5

DOI: https://doi.org/10.3758/s13428-011-0117-5