Abstract

Although virtual reality (VR) is a promising tool for the investigation of episodic memory phenomena, to date there has been relatively little examination of how learning mechanisms operate in VR and how these processes might compare (or contrast) with learning that occurs in real life. Moreover, the existing literature on this topic is spread across several disciplines and uses various distinct apparatuses, thus obscuring whether the differences that exist between studies might be due to genuine theoretical discrepancies or may be more simply explained by accounting for methodological variations. The current review is designed to address and elucidate several issues relevant to psychological researchers interested in understanding and/or using this technological approach to study episodic memory phenomena. The principal objectives of the review are as follows: (a) defining and discussing the various VR systems currently used for research purposes, (b) compiling research on episodic memory effects in VR as they have been studied across several disciplines, and (c) surveying major topics in this body of literature (e.g., how virtual immersion has an impact on memory; transfer effects from VR to the real world). The content of this review is designed to serve as a resource for psychologists interested in learning more about the current state of research in this field and is intended to highlight the capabilities (and constraints) associated with using this technological approach in episodic memory research.

Introduction

In experimental psychology, researchers often find themselves facing the problem of creating a study that is sufficient in terms of both its ecological validity and its degree of experimental control (Kvavilashvili & Ellis, 2004). Although control is of course necessary for the careful and systematic manipulation of variables under investigation, tasks that lack an adequate degree of ecological validity may be somewhat misrepresentative of the phenomenon of interest, thus threatening the generalizability of results outside of the laboratory. However, the emergence of virtual reality (VR) technology presents an exciting opportunity for psychologists to increase the ecological validity of a task in a setting that simultaneously maintains the experimental control necessary to reliably evaluate a given psychological construct. More precisely, incorporating VR into an experiment has the potential to enhance a study in terms of both its verisimilitude (i.e., the extent to which an experimental task realistically simulates the real-life situation of interest, thus imposing similar cognitive demands on the subject) as well as its veridicality (i.e., the extent to which experimental results accurately reflect and/or predict the psychological phenomenon of interest; for discussion, see Parsons, 2011; see also Chaytor & Schmitter-Edgecombe, 2003). Virtual environments can be created to reflect a theoretically infinite number of situations in a manner that is in many cases drastically more cost efficient than the creation of its real-world equivalent. Indeed, this technology provides researchers with the means to incorporate tasks that would be impossible to replicate in the controlled context of a laboratory (regardless of cost), such as wide-scale navigational tasks like traveling through a city. 
Moreover, neuroimaging techniques can be fruitfully employed in conjunction with these tasks, thus allowing for an otherwise impossible glimpse into the neurological underpinnings of these sorts of naturalistic activities (e.g., Spiers & Maguire, 2007).

Although quite promising, much is still unknown about the psychological properties of VR and whether this mode of interaction is similar enough to real life to be an effective proxy for the experimental assessment of different phenomena. For example, how effective is learning information in a virtual environment, and how might this level of efficacy compare with learning as it occurs in real environments? Answering such questions requires an assessment of long-term memory effects within virtual environments, and in particular calls for an evaluation of how studying information in VR relates to episodic memory (defined classically as “information about temporally dated episodes or events, and temporal–spatial relations among these events”; Tulving, 1972, p. 385). An understanding of how episodic memory operates in VR is not only of interest to researchers of cognitive psychology but also has implications for the applied utility of using virtual environments as a platform for learning in both educational and industrial settings.

Although applications of training with VR technology have spanned from fighter jets (Lele, 2013) to fast food (Vanian, 2017), a firm foundation of basic research on episodic memory in VR is essential to understanding the overall utility of learning in VR and how it might compare (or contrast) with real-life training. The extant research on this topic spans a number of fields, including psychology (cognitive and clinical), human factors, and basic perceptual research. Each of these fields assesses the construct of memory in slightly different ways, and, in many instances, there seems to be minimal cross-talk between disciplines concerning experimental results. Furthermore, there is a wide variety of VR apparatuses in use for this line of research, spanning from simple desktop computer interfaces to expansive multimillion-dollar chambers dedicated specifically to the creation of highly immersive VR environments. The technological characteristics of different VR systems may likewise result in different levels of encoding efficiency, thus potentially leading to the appearance of theoretically discrepant results across studies that might be better explained through an examination of the specific methodologies employed. These factors have made it somewhat difficult to appraise the body of research on episodic memory in VR from the perspective of cognitive psychology.

The current review has several objectives. First, this review defines and discusses several key distinctions between VR apparatuses and introduces terminology to more efficiently and effectively convey the basic properties of a given VR system in a manner that quickly distinguishes one setup from another. Additionally, this review contains a selected compilation of representative research that exists across several disciplines related to episodic memory effects within VR. These articles are framed in the context of general research on episodic memory phenomena as they are discussed in the realm of cognitive psychology. They are then synthesized in such a way as to make them familiar to memory researchers while maintaining a degree of technical accuracy specified in research from other disciplines. Furthermore, this review will provide a survey of several major topics of interest to memory researchers considering the use of VR, such as the impact of virtual immersion on memory, possible mnemonic benefits of actively (vs. passively) engaging with the virtual environment, and the existence and extent of transfer effects from VR to real-life assessments of episodic memory. Although reviews focusing on the use of VR exist in other domains of psychology (e.g., Diemer, Alpers, Peperkorn, Shiban, & Mühlberger, 2015; Parsons, Gaggioli, & Riva, 2017; Parsons & Phillips, 2016), to my knowledge, no such review exists for episodic memory research at the time of this writing. As such, the ultimate objective of this review is to provide a resource for cognitive psychologists interested in using VR as a methodological tool for studying episodic memory phenomena.

What counts as “virtual reality”?

Before delving into the extant body of research on episodic memory using VR, it is important to first clearly establish what sorts of methodologies fall under the umbrella of “virtual reality”—a term that is frequently used to refer to various experimental apparatuses interchangeably (Wilson & Soranzo, 2015). According to Merriam-Webster, VR can be generally described as follows:

An artificial environment which is experienced through sensory stimuli (such as sights and sounds) provided by a computer and in which one’s actions partially determine what happens in the environment. (Virtual Reality, n.d.).

Although this description is perhaps intuitive, it is also quite broad. A more technically explicit definition of what constitutes VR can be found in the field of human–computer interaction:

Virtual Reality is a scientific and technical domain that uses computer science and behavioral interfaces to simulate in a virtual world the behavior of 3D entities, which interact in real time with each other and with one or more users in pseudo-natural immersion via sensorimotor channels. (Fuchs, Moreau, & Guitton, 2011, p. 8).

Certain key terms within this definition merit explanation in their own right. For instance, the concept of real-time interaction refers to the requirement of a VR apparatus to allow a user to directly interface with the system (e.g., user-controlled navigation) in such a way that there is minimal delay between a user’s interaction and the associated response elicited from the environment. As such, a computer-generated environment that is observed without interaction would not be classified as VR. Elaboration on the concept of immersion is also critical to understanding this technical definition. In the context of VR research, immersion is classified as the degree to which a VR system produces a naturalistic portrayal of the sensory and interactive elements of a given virtual environment. Immersion thus serves to isolate the user from the perceptual and interactive elements of the real world by virtue of how faithfully the VR system replicates the sensorimotoric richness of a virtual environment’s analogous real-life equivalent.

Although this definition of VR provides a great deal of specificity, it does not explicitly enumerate which sorts of apparatuses might satisfy these conditions. Indeed, many systems exist that—while varying greatly in terms of their technical features and complexity—might all be broadly categorized as “VR.” However, it is important to recognize that the nature of an experimental task might fundamentally change depending on the apparatus being used. For instance, the act of walking in a virtual environment might be as sophisticated as actually walking on a treadmill that adjusts its speed to match your pace as you navigate in a scene, or as simple as pressing a button or tilting a joystick to indicate the direction of an avatar’s movement. Consequently, the lack of terminological specificity regarding the classification of VR systems could invite inappropriate comparisons of results across experiments, potentially leading to the appearance of theoretically discrepant outcomes when the true cause of discrepancy might actually be the nature of the apparatuses being used. Therefore, it is important to account for the specific properties of different VR systems when seeking to compare results across studies.

To help reduce the ambiguity of what type of equipment is being used in a particular study, it will be helpful to establish terminology that allows for more precision and efficiency in identifying the general properties of a given VR system. Oftentimes, a reader will not know the general nature of the apparatus being employed in an experiment until reaching the Methods section. However, differences in results have been shown to occur depending on what type of VR system is being implemented (e.g., Ruddle, Payne, & Jones, 1999), so an up-front understanding of the basic properties of the apparatus would be helpful in orienting the reader. Although there is a great deal of technological heterogeneity in the field of VR research, the majority of VR systems tend to fall into the three subtypes introduced below: (1) Desktop-VR, (2) Headset-VR, and (3) Simulator-VR.

Desktop-VR

Desktop-VR refers to any virtual environment that uses a standard computer monitor as its visual display (Furht, 2008, p. 963). Additionally, interacting in Desktop-VR makes use of the standard computer mouse and keyboard as input devices. As such, this form of VR is quite cost-effective because of the wide availability of the hardware necessary to run it and software packages available for programming these virtual environments. Furthermore, and unlike other forms of VR, the ubiquity of the standard input devices for Desktop-VR makes them much more likely to be familiar to subjects prior to their arrival for an experiment, which could make the training phase of a study quicker and more straightforward. Desktop-VR has been used in psychological research for decades, although this specific term (or variations including the word desktop) is not consistently applied across studies.

Despite the aforementioned benefits of Desktop-VR, there are some drawbacks to consider. First, although the graphical environments of Desktop-VR often exist in 3-D, they are presented on a 2-D display, and therefore only monocular depth cues are available to indicate the distance of objects in the environment (i.e., no stereoscopy). Also, the way subjects interface with Desktop-VR is often not motorically analogous to the action being simulated. For instance, to look “up” in this type of virtual environment might require the subject to physically move the mouse forward, or “picking up” a virtual object may be done by pressing down a button on the keyboard. This mismatch may limit the utility of Desktop-VR in the exploration of memory phenomena that have a relevant motoric component. Finally, these drawbacks typically result in reduced levels of immersion in Desktop-VR relative to other forms of VR.

Headset-VR

Unlike Desktop-VR, Headset-VR is characterized by its use of specialized viewing equipment. Specifically, head-mounted displays (or HMDs) are placed on the head and display computer-generated images directly in front of the eyes. Simultaneously, the HMD detects the head motion of the subject in order to update the visual information being presented in a manner that is consistent with the angle and velocity at which the head is turning—in short, you are able to “look around” the virtual environment in a manner that is natural and not confined to the borders of a conventional computer screen (Furht, 2008). Moreover, in most Headset-VR programs, each eye is presented with images from slightly shifted perspectives such that the virtual environment is viewed stereoscopically, thus providing binocular depth cues for a more comprehensive sense of object distance. In addition to this specialized viewing equipment, many contemporary HMD systems also include hand-held controllers as input devices that allow the user to interact with the environment. The spatial location of these controllers is mapped in the virtual environment, allowing the user to visually observe where the controllers—and, by extension, the user’s hands—are located with respect to the 3-D virtual space.
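The stereoscopic presentation described above can be illustrated with a short sketch. It is not drawn from any particular HMD software; the function names, the top-down coordinate convention (yaw of 0 degrees faces the +z axis, positive yaw turns toward +x), and the default interpupillary distance of 0.063 m are assumptions made purely for illustration. The sketch places one virtual camera per eye, offset from the tracked head position, and computes the vergence angle subtended by an object at a given distance.

```python
import math


def eye_positions(head_x, head_z, yaw_deg, ipd_m=0.063):
    """Return ((left_x, left_z), (right_x, right_z)) for a top-down view.

    ipd_m is an assumed interpupillary distance in metres (hypothetical default).
    """
    yaw = math.radians(yaw_deg)
    # Unit vector pointing to the viewer's right for the assumed convention.
    right_x, right_z = math.cos(yaw), -math.sin(yaw)
    half = ipd_m / 2.0
    left_eye = (head_x - half * right_x, head_z - half * right_z)
    right_eye = (head_x + half * right_x, head_z + half * right_z)
    return left_eye, right_eye


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight to a point straight ahead."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


if __name__ == "__main__":
    print(eye_positions(0.0, 0.0, 0.0))
    print(vergence_angle_deg(0.5), vergence_angle_deg(5.0))
```

Under these assumptions the vergence angle shrinks as distance grows, which is one way to see why binocular depth cues are most informative for nearby objects.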

Movement throughout the Headset-VR environment can take many forms. Users might be in a stationary position (either sitting or standing) as they interact with the environment, with movement limited to the normal range of motion for the head and hands from a fixed location. To simulate a wider range of exploration of the virtual environment, users might also navigate with the help of hand-held controllers, either gradually “walking” through the virtual space or “teleporting” to a designated location while physically remaining stationary. Methods also exist that allow a user to physically walk around while the virtual environment updates itself based upon the user’s position. For instance, sensors (e.g., the HTC Vive Base Stations—aka “Lighthouses”) can be used to designate an empty physical space for the user to walk around a small region of the virtual environment, with a visible barrier projected into the HMD to indicate when a user is reaching the border of this space (to help them avoid collision with unseen physical obstacles). Alternatively, the use of a treadmill synchronized with the computer-generated environment allows the user to walk endlessly through the virtual space, either on one axis (as with a conventional linear treadmill) or in any direction (as with an omnidirectional treadmill). Notably, the proficiency of movement in Headset-VR in terms of speed (i.e., time necessary to navigate a virtual environment), accuracy (i.e., how few collisions occurred in said environment), and length of training necessary for subjects to become familiar with the apparatus varies as a function of which form of virtual locomotion interface is being used, with a pattern generally favoring physical walking, which is translated into the virtual space (see Ruddle, Volkova, & Bülthoff, 2013, for a comparison of several forms of VR locomotion; see also Feasel, Whitton, & Wendt, 2008).

Recently, HMDs have become more accessible than ever before with the advent of several commercially available headsets (e.g., the HTC Vive, the Oculus Rift, PlayStation VR). Software for developing custom VR environments is also readily available and, in many cases, free of cost for noncommercial users (e.g., game engines like Unity3D and the Unreal Engine). This software can be used to incorporate and display a wide range of preexisting virtual 3-D objects and textures, whether they have been obtained from various online sources (e.g., the Unity Asset Store, Google Poly) or from a set of virtual stimuli that have been specifically standardized for use in psychological research (e.g., Peeters, 2018). Additionally, hardware costs have declined substantially, with the headsets listed above currently retailing for approximately $400–$600 (USD) depending on the system. A current limitation of Headset-VR is that a physical cable connecting the HMD to the computer is required to produce the highest fidelity visual images, which reduces a user’s overall mobility and may even present a tripping hazard if not properly accounted for. However, this issue is likely a temporary technological limitation. Efforts are already underway to produce wireless HMDs capable of high rates of data transmission (e.g., Abari, Bharadia, Duffield, & Katabi, 2016), and hardware for wireless HMDs has recently started to become commercially available (e.g., the HTC Vive Wireless Adapter). All things considered, Headset-VR provides perhaps the greatest potential for increasingly widespread use of VR as a methodological tool for the controlled study of memory phenomena.

A comparatively new subclassification of Headset-VR has emerged in recent years that is also worth examining. Specifically, the visual displays and processing capabilities of many contemporary smartphones can be combined with wearable and relatively inexpensive optical hardware (e.g., Google Daydream View, Samsung Gear VR) to create a functional self-contained Headset-VR interface. This alternative HMD setup is known as a Mobile-VR apparatus. Mobile-VR enjoys certain advantages when compared with the conventional HMDs discussed earlier. For instance, these systems are portable and completely wireless, thus eliminating any need to tether the headset to external hardware for graphical processing or motion sensing (allowing the user to engage with VR without being physically restricted to a predesignated location). Additionally, Mobile-VR makes use of the already increasing ubiquity of smartphone devices by sparing the user the additional expense of a dedicated HMD and the hardware necessary for it to function, making such headsets much more cost-effective.

However, there are also some noteworthy drawbacks to Mobile-VR (at least with regard to its current iteration at the time of this writing). Unsurprisingly, the graphical processing capability of VR-capable computers surpasses that of smartphones. Consequently, the resolution of virtual environments rendered in Mobile-VR is comparatively constrained in order to maintain a sufficiently high frame rate, thus reducing sensory immersion (see Carruth, 2017). Furthermore, although Mobile-VR is capable of tracking all three forms of rotational head movement (i.e., roll, pitch, and yaw) from a stationary location, it is generally incapable of registering translational movement as a user moves around in virtual space (i.e., forward-and-backward, up-and-down, and side-to-side movements; see Pal, Khan, & McMahan, 2016, for further discussion of rotational and translational motion tracking in VR systems). As such, current Mobile-VR systems are typically classified as three-degrees-of-freedom (or 3-DOF) devices, whereas many conventional HMDs are capable of tracking six degrees of freedom (6-DOF). However, as the processing capability of smartphones continues to advance, future Mobile-VR devices will be able to display increasingly higher resolution VR environments. Likewise, future devices should be capable of 6-DOF motion tracking as well—perhaps by using the phone’s onboard camera in conjunction with its internal inertial sensors (e.g., accelerometer and gyroscope) to update the user’s location in real time (for discussion, see Fang, Zheng, Deng, & Zhang, 2017). For the time being, researchers should consider how the benefits (and limitations) of Mobile-VR compare with more conventional Headset-VR systems with respect to the specific objectives and experimental design of a given study.
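The distinction between 3-DOF and 6-DOF tracking can be made concrete with a minimal sketch. The `HeadPose` class and `tracked_pose` function below are hypothetical names introduced only for illustration, and the units (degrees and metres) are assumptions. A 6-DOF tracker is modeled as reporting the full pose, whereas a 3-DOF tracker reports the three rotations but leaves the three translations fixed at the origin.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class HeadPose:
    # Rotational components, in degrees.
    roll: float = 0.0
    pitch: float = 0.0
    yaw: float = 0.0
    # Translational components, in metres (side-to-side, up-and-down,
    # forward-and-backward).
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


def tracked_pose(actual: HeadPose, dof: int) -> HeadPose:
    """Return the pose that a tracker with the given degrees of freedom reports."""
    if dof == 6:
        return actual
    if dof == 3:
        # Rotation is preserved; translation is not registered.
        return replace(actual, x=0.0, y=0.0, z=0.0)
    raise ValueError("dof must be 3 or 6 in this sketch")


if __name__ == "__main__":
    # The user turns the head 30 degrees and steps half a metre to the side.
    actual = HeadPose(yaw=30.0, x=0.5)
    print(tracked_pose(actual, dof=3))
    print(tracked_pose(actual, dof=6))
```

In this example, the sideways step changes the reported viewpoint only under 6-DOF tracking; under 3-DOF tracking the virtual viewpoint rotates but does not move.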

Simulator-VR

Although all forms of VR consist of some form of simulation in the general sense of the word, Simulator-VR (hereafter “Sim-VR”) is distinguished from the previous VR systems primarily by its use of external visual displays (unlike Headset-VR) and specialized input devices (unlike Desktop-VR). Considering the wide variety of systems that fall into this category, Sim-VR setups can vary widely in terms of their immersiveness depending on how the user both observes and interfaces with the environment. Ideally, a more immersive Sim-VR apparatus will feature multiple projector screens or display panels that are configured such that a user is surrounded (either partially or entirely) by the visual imagery of the virtual environment, thus dominating the subject’s field of view. Arguably the most sophisticated example of the visual component of highly immersive Sim-VR is represented by systems known as Cave Automatic Virtual Environments (or CAVEs; see Furht, 2008). CAVEs are entire rooms that are dedicated to displaying a virtual environment and often offer features like head tracking, special glasses that allow the user to view the environment stereoscopically, and ceiling-to-floor graphical displays that completely envelop the user in the computer-generated world (see Slater & Sanchez-Vives, 2016); however, constructing a system with all of these components often comes with a price tag in the millions (Lewis, 2014).

In terms of input devices, some professional simulation systems are designed to allow the user to interface with a particular environment (e.g., the cockpit of an airplane) in a highly naturalistic manner. For instance, driving simulators have been created where the subject enters a full-sized vehicle surrounded by screens on all sides and containing input devices (e.g., steering wheel, brake pedal) that are configured such that the user is able to navigate the virtual environment in a way that is comparable with real life (e.g., Unni, Ihme, Jipp, & Rieger, 2017). Additionally, many VR input devices also feature haptic feedback, thus further enhancing the sensorimotoric correspondence between the virtual training environment and the real-life task. Indeed, input devices with more sophisticated forms of haptic feedback have been found to enhance user performance (e.g., Weller & Zachmann, 2012), which can be useful during training for highly complex procedural tasks such as surgery (see Kim, Rattner, & Srinivasan, 2004; Panait et al., 2009; see also Pan et al., 2015). In short, the ideal Sim-VR system is designed to allow for a faithful reproduction of both the sensory and motoric processes a subject would experience in a given real-life situation.

Although the more immersive systems described above can be prohibitively expensive, Sim-VR can fortunately also be created with comparatively affordable experimental setups (albeit with relative reductions in immersiveness as well). For example, such setups might take the form of a set of screens partially encircling the subject in a U-shaped configuration (e.g., Maillot, Dommes, Dang, & Vienne, 2017). Indeed, even the use of a single screen could cause an apparatus to fall into the category of Sim-VR (assuming the use of a specialized input device), although in most cases such an apparatus would preferably use a fairly large screen to maximize the portion of the subject’s field of view occupied by the virtual environment. Studies using this variant of Sim-VR might also place subjects in darkened rooms (so that nonvirtual elements of the environment are comparatively obscured from vision), and incorporate task-specific hardware for subjects to interact with, for instance, a treadmill for virtual walking tasks (e.g., Larrue et al., 2014) or a steering wheel, brakes, and accelerator pedal for driving tasks (e.g., Plancher, Gyselinck, Nicolas, & Piolino, 2010). Given the variability of setups employed with Sim-VR, special care should be taken by researchers to clearly define all aspects of the apparatus for the reader, particularly considering that the degree of immersion is likely to vary more within this category than in the previously defined VR classifications.
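The three categories defined above can be summarized as a rough decision rule based on display type and input device. The following sketch is only one possible reading of the definitions; the enum members, the `Apparatus` class, and the `classify` function are hypothetical names, and combinations that the text does not address (e.g., surrounding screens operated with a mouse and keyboard) are deliberately left unclassified.

```python
from dataclasses import dataclass
from enum import Enum


class Display(Enum):
    MONITOR = "standard computer monitor"
    HMD = "dedicated head-mounted display"
    SMARTPHONE_HMD = "smartphone in wearable optical hardware"
    EXTERNAL_SCREENS = "projector screens or display panels"


class InputDevice(Enum):
    STANDARD = "mouse and keyboard"
    SPECIALIZED = "joystick, treadmill, steering wheel, etc."


@dataclass(frozen=True)
class Apparatus:
    display: Display
    input_device: InputDevice


def classify(apparatus: Apparatus) -> str:
    """Map an apparatus onto the labels used in this review (illustrative only)."""
    if apparatus.display is Display.SMARTPHONE_HMD:
        return "Headset-VR (Mobile-VR)"
    if apparatus.display is Display.HMD:
        return "Headset-VR"
    # External display: the input device decides the category.
    if apparatus.input_device is InputDevice.SPECIALIZED:
        return "Sim-VR"
    if apparatus.display is Display.MONITOR:
        return "Desktop-VR"
    return "unclassified"


if __name__ == "__main__":
    # A single projector screen with a joystick falls under Sim-VR.
    print(classify(Apparatus(Display.EXTERNAL_SCREENS, InputDevice.SPECIALIZED)))
    print(classify(Apparatus(Display.MONITOR, InputDevice.STANDARD)))
```

A rule of this kind captures only the category label; it says nothing about the degree of immersion, which still has to be described for each individual apparatus.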

Differences between VR systems

Although it is possible that the terms introduced above may not address every specific variation of VR system in existence, this taxonomy accounts for the vast majority of VR apparatuses in use for psychological research. It is important to note that this taxonomy is intended to establish a qualitative description of common properties that featurally distinguish different VR systems and should not be thought of as inherently hierarchical in nature (say, with regard to immersion). Indeed, the vast degree of heterogeneity even within each of these classifications would preclude a meaningful hierarchy that could be consistently applied across the literature. Therefore, adherence to this taxonomy should not be seen as a substitute for careful consideration of the specific individual factors within these categories that might influence behavioral performance. Rather, the primary utility of creating these classifications is to facilitate a quick at-a-glance understanding of the basic properties of a VR system in a given study. This can be useful when more broadly considering the influence that a given type of apparatus can have on the results of a study—not only when comparing performance between different VR systems but also when comparing VR performance to a real-life version of the simulated task.

As discussed later in this review, there are occasionally discrepant results in the VR episodic memory literature. To this end, these classifications should aid in the contextualization of seemingly contradictory results to better understand whether they reflect legitimate differences in memory performance or might be explained more parsimoniously by an examination of the technological features of the apparatus in use. As such, for the remainder of this paper these terms will be used to indicate the basic nature of a given VR system and will be followed by a more detailed description of the specific characteristics of the apparatus when appropriate.

Properties of virtual reality immersion and their impact on episodic memory

Perhaps unsurprisingly, many studies reveal a general pattern in which more immersive VR systems promote better episodic memory performance (e.g., Dehn et al., 2018; Harman, Brown, & Johnson, 2017; Ruddle, Volkova, & Bülthoff, 2011; Schöne, Wessels, & Gruber, 2017; Wallet et al., 2011; cf. Gamberini, 2000; LaFortune & Macuga, 2018). Given the potential mnemonic benefits afforded by immersive environments, a consideration of the factors that contribute to immersion is worthwhile. Some researchers classify VR systems in absolute terms of whether they are “immersive” or “nonimmersive” (e.g., Brooks, Attree, Rose, Clifford, & Leadbetter, 1999); however, immersion is not an all-or-nothing variable, but rather exists on a continuum (Bowman & McMahan, 2007). Therefore, when one is considering the impact of immersion on episodic memory, it is important to consider how varying degrees of immersion affect memory performance. This review will not seek to establish a means of operationalizing degrees of immersion in a quantifiable sense, but will instead survey various characteristics that all contribute to the immersiveness of a virtual environment. Furthermore, evidence regarding the impact of each of these factors on episodic memory will be considered below.

Visual fidelity

The property of visual fidelity is defined as how faithfully a VR system reproduces the visible qualities and detail of analogous visual information found in the real world. Nearly all VR systems have, at minimum, some sort of visual display component (cf. Connors et al., 2014), so a consideration of qualities contributing to enhanced visual fidelity is a central element of the immersiveness of an apparatus. However, just because visual fidelity has an impact on immersiveness does not necessarily mean that this specific property of immersion has an impact on memory. Therefore, one should consider what evidence exists from studies that have manipulated visual fidelity as an independent variable and compared memory performance between conditions.

One quality that contributes to the overall visual fidelity of an environment is its level of detail, including features such as color, texture, lighting effects (e.g., shadows), and other visual properties of objects in the virtual environment. To test the impact of visual detail on memory, Wallet et al. (2011) constructed two versions of a virtual environment that reproduced the spatial layout of an area in an actual city: one without color or texture (resulting in a monochromatic environment composed of more simplistic geometric shapes), and the other with the inclusion of color and textures that more clearly defined the nature of the geometric shapes (e.g., buildings, a road). Subjects interacted with this environment in a more basic Sim-VR setup, including a single (large) projector screen and a joystick input. After navigating through the city, subjects completed three assessments to determine how well the navigated route was remembered: (1) a wayfinding task, where subjects reproduced the virtual route in the real world; (2) a sketch-mapping task, which required subjects to draw the visualized route; and (3) a scene-sorting task, in which subjects arranged a set of images taken along the route in chronological order. Subjects who learned the route in the detailed virtual environment performed significantly better on all three assessments when compared with subjects in the undetailed environment.

This general pattern is consistent with neuroimaging research exploring how increased visual detail influences memory performance. Rauchs et al. (2008) instructed subjects to use a four-direction keypad to navigate a virtual town in Desktop-VR, with explicit instructions to learn the layout of the streets and the placement of various target locations within the environment. During retrieval, subjects were placed in an fMRI scanner and instructed to identify and follow the route between two locations in the previously learned environment as quickly and efficiently as possible. On some trials, subjects completed this task in a virtual environment identical to the previous study phase. On other trials, subjects completed the same task in a perceptually impoverished version of the environment, where visual details like colors and textures were removed, but the spatial structure of the environment remained intact. Behavioral results indicated that reduced visual fidelity during retrieval decreased both the efficiency and accuracy of spatial navigation. This decreased performance was consistent with variation in neural activity—relative to the original virtual environment, navigation in the impoverished condition yielded reduced activity in certain brain regions (i.e., the cuneus, left fusiform gyrus, and right superior temporal gyrus; see also Maguire, Frith, Burgess, Donnett, & O’Keefe, 1998). Thus, whereas Wallet et al. (2011) found that encoding with reduced visual detail affects spatial memory performance, this study provides complementary evidence that reduced visual detail at retrieval similarly influences navigational performance (both behaviorally and neurologically).

However, not all experiments reveal a clear benefit of visual detail on memory. For instance, Mania, Robinson, and Brandt (2005) created three versions of a virtual office that varied in terms of the sophistication of environmental lighting effects. Subjects used a Headset-VR system to visually explore the office from a stationary location in the center of the room and were later given a recognition memory test to assess memory for the objects in the room. Although subjects in the mid-quality condition outperformed those in the low-quality condition, recognition in the high-quality group was surprisingly no different from either the low-quality or mid-quality groups (see also Mania, Badariah, Coxon, & Watten, 2010). Such findings reveal that the association between memory and visual detail is unclear and may depend upon which properties of visual detail are being manipulated.

Other research suggests that the effect of visual detail on episodic memory might be subtle and could assist memory for certain items more than others depending on environmental context. To assess this, Mourkoussis et al. (2010) created two versions of a virtual environment (an academic office) with extreme variation in their level of visual detail. The low-detail environment was a basic wireframe model that represented the borders and contours of the objects in the scene. Unlike the high-detail condition, these objects were not filled in with their respective shading or texture—in essence, this amounted to a set of items that were simply outlined in their respective colors. The items in this environment fell into one of two categories: They were either consistent with the context of an academic office (e.g., a bookcase) or inconsistent (e.g., a cash register). Subjects viewed the environment from a stationary position in the center of the virtual office using Headset-VR and were then given an old/new item-recognition test. Although there was no main effect of visual detail on recognition memory, there was an interaction in which memory for inconsistent items was improved in the highly detailed condition, whereas memory for consistent items was not affected by the level of visual detail. This outcome may have particular relevance for researchers hoping to study distinctiveness effects in memory, as it suggests that lower levels of visual detail may dampen effects that generally result in improved memory for items that are incongruent with their environment (for a review of distinctiveness effects, see Schmidt, 1991).

Visual detail is only one factor that contributes to the overall visual fidelity of a virtual environment. The presence or absence of a stereoscopic display also contributes to visual fidelity by allowing the observer to take advantage of binocular depth cues. Bennett, Coxon, and Mania (2010) explored whether this variable affected memory performance by manipulating the presence of these cues in a Headset-VR system. Specifically, half of the subjects viewed the virtual environment stereoscopically, whereas the other half viewed the scene in a “mono” condition that eliminated stereopsis. At retrieval, memory for the spatial configuration of objects was not found to significantly vary as a function of whether stereoscopic cues were available, although responses on remember/know judgments did vary somewhat (specifically, there were more remember judgments in the stereo condition for objects that were thematically consistent with the environment).

Another component of visual fidelity is the amount of visual information available to the user at any given time. Ragan, Sowndararajan, Kopper, and Bowman (2010) studied the impact of field of view (i.e., the portion of the visual world the observer can see at any point in time; FoV) and field of regard (i.e., the area surrounding the observer that contains visual information; FoR) on memory performance in a virtual environment. The researchers used a Sim-VR CAVE setup wherein subjects were seated in the center of a cubic room and rotated the environment using a joystick. To manipulate field of view, subjects were given goggles that were either completely transparent (High-FoV) or contained blinders that limited peripheral vision (Low-FoV). Field of regard was manipulated by altering the number of screens in use: the High-FoR condition used screens to the right, left, and in front of the subject, whereas the Low-FoR group only had the screen directly in front of them. During the task, subjects observed a sequence of events with objects moving on a grid (e.g., a yellow sphere moving to a particular location, followed by placing a red block on top of another object), and then later had to recall the entire sequence in order. Higher fidelity for both field of view and field of regard contributed to increased memory performance (i.e., fewer errors), and High-FoR even reduced the amount of time subjects needed for the memory assessment. Additionally, the condition with both Low-FoV and Low-FoR was significantly slower and more error-prone than any of the other three combinations of these variables.

Multimodal sensory information

Although visual fidelity has a large impact on the immersiveness of a virtual environment, nonvisual sensory stimuli also contribute to immersion and are capable of supplementing an otherwise unimodal VR system. Does the increased immersiveness of a multimodal environment translate to increased performance on memory tasks? If so, do all sensory modalities contribute to this benefit, or only certain ones?

Perhaps the most natural secondary sensory modality to consider would be audition. To investigate the impact of including sound in a virtual environment, Davis, Scott, Pair, Hodges, and Oliverio (1999) created a Headset-VR study with three conditions: high-fidelity sound (typical CD quality sampling rate), low-fidelity sound (comparable to AM radio quality), and no sound. During the study phase, subjects navigated within four virtual rooms using a joystick and observed a variety of objects within each of these rooms. Each room had walls with a distinctive color (red, yellow, green, or gray) as well as distinctive ambient sounds (city, ocean, forest, and storm sounds). Subjects were later given a free recall test in which the high-fidelity audio condition was numerically, but not significantly, better than the no-audio condition (p = .10). However, a subsequent forced-choice recognition assessment was given to evaluate source memory; subjects had to match an image of each object with the room in which it had been observed. On this task, there was a significant benefit of audio fidelity, with performance in the high-fidelity condition surpassing the no-audio condition. In contrast, the difference between the low-fidelity and no-audio conditions was nonsignificant. These results indicate that although the mere existence of distinctive audio in a virtual environment may not improve source memory performance, the inclusion of high-quality audio may enhance a subject’s ability to effectively encode the context in which an object is observed.

Neuroimaging evidence also supports the notion that including audio may enhance memory encoding in a virtual environment. Andreano et al. (2009) used fMRI to observe brain activity when subjects passively viewed virtual environments with or without the inclusion of auditory cues. Specifically, the visual clips included a prerecorded navigation through different environments, with an auditory cue presented upon the location of an object in the environment (e.g., locating a seashell while walking on the beach). Although no behavioral assessment of memory performance took place in this study, results indicated increased hippocampal activity when subjects experienced the bimodal environments relative to their visual-only counterparts, thus providing neurological evidence concerning the impact that increasing immersion through the inclusion of sound might have on memory encoding. In fact, although it may seem unorthodox, a VR system technically does not require any visual information in order for a subject to learn the spatial layout of a virtual environment. Such nonvisual apparatuses are commonly used in research seeking to improve the spatial navigation abilities of subjects who are blind (for a brief survey of nonvisual VR systems used in blindness research, see Lahav, 2014).

Can other sensory modalities also contribute to memory performance in VR? A Headset-VR study by Dinh, Walker, Hodges, Song, and Kobayashi (1999) incorporated several variations of a virtual environment based on the level of visual detail and the presence or absence of auditory, tactile, and olfactory stimulation. Tactile cues included a fan that turned on in real life when subjects approached the virtual fan, and a heat lamp intended to mimic the impression of standing in the sunshine when they walked to the virtual balcony. The olfactory cue was the scent of coffee presented via an oxygen mask when the subject was in the vicinity of a virtual coffee pot. Although there were no differences between groups in terms of their memory for the overall layout of the environment itself, both olfactory and tactile cues improved recall performance for the location of objects within the environment.

Although all of the previous studies on multimodal sensory information have provided nonvisual cues during the study phase of the experiment, one might reasonably wonder whether an effect might be observed if such cues were provided in the retrieval phase. Could the presence of an olfactory cue used during study reinstate the context of the encoding episode if presented again during retrieval? Moreover, might an olfactory cue presented only during retrieval affect memory in some way if the scent is contextually appropriate for the setting of the virtual environment? Tortell et al. (2007) sought to answer these questions by constructing a unique Sim-VR apparatus designed to produce olfactory cues at varying times during the experiment. The researchers employed a 2 × 2 design to manipulate the presence and timing of olfactory cues, with scent during encoding and scent during retrieval as the two factors. The scent was a custom-designed compound intended to mimic a smell appropriate for the virtual environment, which in this study was “a swampy culvert.” Subjects in all conditions studied the virtual environment and were then given a recognition memory test where they had to indicate which items had not been viewed during encoding. Results indicated a main effect in which the presence of an olfactory cue during the study phase produced a significant improvement in recognition memory. However, no additional benefit was conferred by having the scent presented during both encoding and retrieval. Furthermore, subjects who experienced the scent only during retrieval had worse memory performance than any of the other three conditions.

Active versus passive interaction with a virtual environment

The previous considerations regarding the types of sensory information provided by various virtual environments are integral to understanding the relationship between VR immersion and episodic memory performance. However, a full appreciation of a virtual environment’s immersiveness also requires an inspection of a subject’s interactions within the environment. Interaction is, after all, a critical component of what distinguishes VR from simply watching a video from a first-person perspective. The nature and extent of virtual interaction can take several forms, from having full control of navigation and manipulation of objects to simply having the ability to “turn your head” (literally or figuratively) to observe different regions of the virtual environment (e.g., with a joystick or with head movements tracked by an HMD).

Is it possible that active engagement within the virtual environment might confer benefits to memory performance when compared with subjects with lower levels of virtual engagement? On the surface, this intuition seems reasonable. Indeed, the well-documented enactment effect has demonstrated that participants who perform a certain action (e.g., “move the cup”) are more likely to recall the event relative to subjects who merely listened to the action phrase being uttered. Moreover, the enactment effect occurs even in the absence of the physical object being referenced, meaning that subjects enjoy enhanced memory even when merely pretending to interact with the object being referenced. Finally, subjects tend to remember actions better when they personally carry out the task than when they passively observe the experimenter doing the same task—in short, an advantage of self-performed tasks over experimenter-performed tasks (for a brief overview of the previously mentioned characteristics of the enactment effect, see Engelkamp & Zimmer, 1989). Considering these findings, it is possible that similar effects favoring subject interactivity may occur in VR settings, particularly when subjects are allowed increased control over how they interact with the virtual environment.

Benefits of interaction in VR

To assess the potential impact of object manipulation on memory for stimuli within virtual environments, James et al. (2002) created a set of objects that subjects viewed in a Sim-VR environment (a CAVE producing stereoscopic images). During study, subjects viewed half of the objects with active control over the rotation of the image and the other half with the image rotating on its own. During the subsequent old/new recognition test, stationary images of each object were presented in the CAVE from four different viewpoints, and subjects were instructed to indicate—as quickly as possible—whether each object was previously studied. Results indicated that subjects recognized old items significantly faster when they were studied actively. However, although the speed of recognition was improved in the active condition, the accuracy of recognition was comparable regardless of how an object was studied.

The previous outcome suggests no additional benefit for item-recognition accuracy when the viewpoint of an object is directly manipulated by the subject. However, real-life objects are seldom observed in total isolation, but rather in the context of an environment that likely has various stimuli visible at any given point as one walks from place to place. As such, might active navigation through a more naturalistic virtual environment enhance memory relative to a passive observation of scenery as one moves through a preprogrammed route? Hahm et al. (2007) created a virtual environment consisting of four rooms that were each filled with 15 unique objects. Using a Headset-VR system, subjects either actively navigated around the rooms (using a keyboard) or passively watched as they were moved around the rooms automatically. Accuracy on the old/new recognition task for studied objects was significantly better for subjects who actively explored the rooms, revealing a mnemonic benefit of increased VR interactivity. This basic outcome was later replicated in a similar study by Sauzéon et al. (2012) using Desktop-VR, yet again revealing increased recognition accuracy for objects in the active navigation condition.

Benefits of active interaction in a virtual environment have also been found in applied research. Jang, Vitale, Jyung, and Black (2017) studied the impact of interactive virtual training among medical students studying the anatomy of the inner ear. The specialized Sim-VR apparatus was equipped with a stereoscopic visual display and a free-moving joystick (i.e., one not mounted to a stationary surface) that allowed the user to rotate and zoom in on the virtual model of the inner ear and observe the anatomical substructures contained within. Subjects were instructed to study the physical and spatial configuration of the virtual model through either active manipulation or a passive observation in which the 3-D model was moved “on its own.” Unbeknownst to subjects was the fact that the videos viewed in the passive group were generated by subjects in the active group. This feature allowed for a matched-pairs design to ensure that subjects between conditions were observing the same visual information. At test, subjects were provided with several 2-D images of the inner ear from various perspectives with critical substructures missing in each image (e.g., the semicircular canals). Subjects were then tasked with drawing each substructure in its correct location and shape from a given perspective. An analysis of the drawings revealed that subjects in the active condition were more accurate in the angle, size, and placement of the substructures, revealing a benefit to spatial memory performance. The results from Jang et al. (2017) suggest that the benefit of actively interfacing with a virtual environment is not unique to object recognition, but may extend to spatial properties of memory as well.

Limitations on the benefits of interactivity in VR

Despite several studies that indicate a general benefit of active VR interaction on memory performance, this finding is not universal. Sandamas and Foreman (2003) created a Desktop-VR task in which subjects actively or passively navigated throughout a room containing several objects. Later, memory for the location of each item was tested by having subjects mark the spot of each object on a blueprint of the previously explored room. The location of one of the objects was marked before assessment in order to give subjects a point of reference. Subjects who actively navigated the environment had no better memory for the spatial arrangement of objects than those who passively observed movement through the room. Other null effects of VR interactivity on memory have been observed as well. Gaunet, Vidal, Kemeny, and Berthoz (2001) created a low-immersion Sim-VR apparatus in which subjects actively or passively traveled through a city with the experimenter instructing the subject when and where to turn. At the end of the route, spatial memory was assessed by asking subjects to use the joystick to “point” in the direction in which the route began. Spatial memory was also assessed via a route-drawing task where subjects used a pen to indicate the path they traveled on a map. In both cases, no difference in spatial performance existed between groups. Moreover, a scene-recognition task assessed each subject’s memory for whether a given image (e.g., a view of a particular intersection) was observed on the route. This assessment also failed to reveal an effect of interaction; therefore, the mnemonic benefits of active navigation were absent for both the spatial and featural characteristics of the route traveled in VR.

Some researchers have proposed that the benefits of active interaction in a VR setting might be more likely when recalling the overall spatial layout of the navigated environment as opposed to the recognition and/or localization of specific items within said environment. Indeed, when Brooks et al. (1999) tested subjects after navigating through a series of interconnected rooms in Desktop-VR, memory for the objects and their location was equivalent for the passive and active conditions. However, prior to these assessments, subjects were first instructed to draw the layout of the rooms (including doorways and passages) shortly after navigation took place. Accuracy of these drawings was determined by a previously established scoring system and rated by judges who were blind to each subject’s condition. Results revealed a significant benefit in spatial recall performance for subjects in the active navigation condition. This outcome is consistent with a Desktop-VR study by Attree et al. (1996), who also found a significant benefit of active navigation on memory for the spatial layout of a set of rooms, but not for the recall or localization of objects contained within the rooms. More recently, Wallet et al. (2011) observed a benefit of active navigation on a subject’s ability to draw a recreation of the route that was previously navigated in a simple Sim-VR setup (i.e., a large projector screen and joystick), a finding that directly contrasts with Gaunet et al. (2001). Interestingly, when subjects were later instructed to chronologically sort a series of images taken along the route, a negative effect of navigation was observed such that passive subjects performed better than active subjects.

Possible interactions between sensory and interactive immersion

Clearly, there are a wide variety of outcomes from studies assessing the impact of active navigation on episodic memory performance in VR. What might explain these discrepant results? One possible explanation lies in the relative levels of sensory immersion provided by the apparatuses in these studies. Most of the experiments described above that found no memory benefits in active navigation employed comparatively low-immersion VR systems, with the exception of the CAVE study by James et al. (2002), whose experiment still produced an improvement in recognition speed resulting from interaction with the virtual environment. The study by Wallet et al. (2011) provides a more direct glimpse into the possible interaction of sensory immersion (high vs. low visual detail) and interactivity (active vs. passive) on memory performance in a large-screen Sim-VR system. Interestingly, the researchers found an interaction on the spatial route-mapping task, with subjects in the active condition doing significantly better in an environment with high visual detail. Moreover, environments with low visual detail resulted in a negative effect of active navigation on the ability to chronologically sort images of scenes from the route.

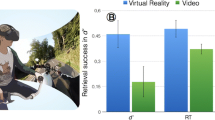

In short, this outcome from Wallet et al. (2011) suggests that enhanced sensory immersion (in this case via visual fidelity) may increase the likelihood of an active navigation benefit for spatial memory and potentially stabilize any reduction in item memory performance relative to passive observers. Although this manipulation of immersion is not perfectly analogous to the variation in immersiveness across different VR apparatuses, the result raises questions about how different properties of sensory immersion might interact with a user’s level of interactive immersion within a virtual environment. Indeed, research comparing recognition memory for objects encountered on a virtually traveled route between Headset-VR and a group that passively watched a video of the same route (on a large 2-D screen) has shown a clear advantage of scene recognition accuracy for the Headset-VR group (Schöne et al., 2017). However, in this study it is not possible to disentangle whether the boost in recognition memory was due to the increased interactivity allowed by Headset-VR (i.e., head-tracking that allowed the user to control where they looked in the environment) or instead was caused by the increased sensory immersion allowed by the HMD apparatus.

When Ruddle et al. (2011) compared the effects of interactive fidelity within the same apparatus, subjects used Headset-VR to navigate an environment either by using a joystick while remaining physically stationary (low-interaction fidelity) or by physically walking around a large room with the movements tracked and translated into the virtual environment (high-interaction fidelity). Considering the demonstrated impact of basic postural configuration (e.g., sitting vs. standing) on cognitive processes even in more conventional non-VR experimental tasks (such as the Stroop effect; see Rosenbaum, Mama, & Algom, 2017), the importance of accounting for how the physical components of VR interactivity might affect memory performance is readily apparent. In this study, higher interaction fidelity resulted in improved performance on both an item-recognition task and a chronological picture-sorting task, but not on a route-sketch-mapping task. However, there was no passive condition for comparison, which, if included, may have equaled or surpassed the item memory performance of active conditions according to other experiments (e.g., Attree et al., 1996) and would have also allowed for a comparison of spatial memory performance between active and passive groups. Mania, Troscianko, Hawkes, and Chalmers (2003) similarly sought to compare memory performance between systems with differing levels of interactive (and sensory) fidelity, including Desktop-VR, variations of Headset-VR with and without head-tracking, and even a group that studied an analogous environment created in real life. In this study, rather than navigating throughout the virtual environment, subjects instead looked around a single object-filled room from a stationary location. As with Ruddle et al. (2011), variations in interactive fidelity had no bearing on spatial memory performance (operationalized in this study as memory for the locations of previously studied objects). However, again, no passive condition was included for comparison, nor was an assessment of item memory included. Clearly, a more comprehensive and systematic assessment of the possible interaction between sensory and interactive immersion on memory performance will require the inclusion of a passive (noninteractive) condition, thereby also allowing for more direct comparisons with the extant literature on active and passive VR experiences.

The volitional and motoric components of VR interactivity

How else might one explain some of the variable episodic memory performance from experiments testing active and passive interaction within a virtual environment? Perhaps some of this discrepancy is due to a lack of clarity regarding which aspect of interactivity subjects are given control of in VR. Although seldom teased apart, there are actually two distinct components that contribute to a subject’s interactions within a virtual environment: the volitional component, which allows a subject to choose how to interact with the environment, and the motoric component, in which subjects physically carry out that interaction via the VR system’s input device(s). Is it possible that these two components contribute differently to memory outcomes associated with active conditions?

To directly test the separable influences of motoric and volitional control during navigation of a virtual environment, Plancher, Barra, Orriols, and Piolino (2013) created three conditions for how subjects navigated a series of roads. Subjects with volitional control instructed the experimenter on which way to turn the car at intersections; subjects with motoric control drove the car themselves, based on the experimenter’s navigational directions; and passive subjects were simply passengers in the car, observing the environment as it passed by. The Sim-VR apparatus was characterized by a large screen that included a view of the interior of the car from a first-person perspective. Additionally, the input devices included a physical steering wheel, accelerator, and brake pedal, resulting in a higher degree of interactive fidelity than driving simulations using more generalized devices such as joysticks or game controllers. To ensure that all subjects viewed the same scenes along the route, the virtual world was constructed to be completely symmetrical, such that the critical objects and their spatial layouts in the scene were identical regardless of which way subjects turned at intersections. During retrieval, subjects were required to complete several tasks assessing memory for the objects they saw and their relative locations in the scenes along the route. Item recognition for the previously observed objects was better among subjects with volitional control than for subjects with motoric control—in fact, even the passive condition outperformed the motoric control condition on this task. In contrast, spatial memory for the location of objects was equivalent between subjects with motoric and volitional control, with both versions of the active condition outperforming the passive group on visuospatial memory tasks.

A later study by Jebara, Orriols, Zaoui, Berthoz, and Piolino (2014) used a nearly identical VR apparatus and virtual environment, but instead created two separate conditions with motoric control. These conditions varied in how much physical interaction was necessary, with one group choosing only the speed at which the route was observed by using the accelerator and brakes (but not turning the steering wheel), and the other group using both the steering wheel and pedals as in Plancher et al. (2013). These conditions were included to determine whether increasing or decreasing motoric control might produce different memory outcomes. Briefly, high-motoric control led to worse item memory than both low-motoric control and volitional control did, and visuospatial memory was equivalent for volitional and low-motor control (which were both superior to the high-motor control and passive conditions). In short, increased motor control resulted in worse episodic memory, which the authors suggest may be due to the increased burden on cognitive resources resulting from increased motoric engagement.

In general, the results of Plancher et al. (2013) and Jebara et al. (2014) indicate that the influence of VR interaction on memory may not be simply due to the occurrence of interaction but may vary depending on the component (i.e., volitional or motoric) and degree (e.g., high-motor or low-motor control) of the interaction being considered. However, it should be noted that neither of these studies included a condition in which subjects were given both motoric and volitional control over their interaction with the virtual environment. Without such a condition, it is difficult to predict how the simultaneous inclusion of both properties of interaction directly compares with each property individually. For instance, if both volitional and motoric control individually benefit episodic memory, what might happen if subjects have control over both of these interactive components? Including both components of control also helps to situate this research in the general context of interactivity effects in VR, as most experiments do not make this subtle distinction (typically allowing subjects in the active condition both motoric and volitional control over their navigation).

To explore this question, Chrastil and Warren (2015) created a virtual maze that subjects navigated with a Headset-VR apparatus. Subjects were divided into four conditions: (1) a passive condition (where subjects simply viewed a video of maze navigation from a stationary position); (2) a motoric control condition (where subjects physically walked around to navigate the maze, but were guided by an experimenter); (3) a volitional control condition (where subjects were allowed to choose how to navigate the maze by using arrows on a keyboard, but not by actually walking); and (4) a combined control condition (where subjects exercised both motoric and volitional control to freely walk around the environment). Briefly, the results of several spatial memory tasks indicated that volitional and motoric control might differentially contribute to distinct properties of spatial learning. Specifically, the volitional component was found to be more critical for the acquisition of graph knowledge (e.g., learning the spatial relationships connecting different locations in the maze such as landmarks and junctions), whereas motoric engagement contributed to survey knowledge (e.g., a more holistic “map-like” knowledge of the maze’s layout and components). Additionally, subjects with both motoric and volitional control often performed better than when only one component of interaction was present, suggesting the possibility of an additive benefit for spatial memory.

Evidence from neuroimaging also seems to corroborate the notion that different components of navigation might uniquely contribute to performance depending on the type of spatial memory task being used. Hartley, Maguire, Spiers, and Burgess (2003) used a Desktop-VR navigational task during fMRI scanning to observe neural activity when subjects engaged in two distinct navigational tasks. Before scanning, subjects navigated virtual towns under two conditions: free exploration in Town 1 (where subjects were given both volitional and motoric control over their navigation), or guided exploration in Town 2 (where subjects controlled their virtual movement but were explicitly instructed on how to navigate in order to learn specific preestablished routes). During test, subjects completed wayfinding (Town 1) and route-following (Town 2) tasks while in the scanner. Successful navigation resulted in differential levels of activation for distinct neural substrates depending on the conditions of the spatial memory task. In particular, successful wayfinding was associated with increased hippocampal and parahippocampal activity, whereas the route-following task more strongly engaged regions such as the right caudate nucleus, motor and premotor cortices, and the supplementary motor area. Thus, the authors concluded that, compared with wayfinding, route knowledge seemed to rely predominantly upon an action-based representation of route navigation, with a relative reduction in demand for cognitive resources associated with more general characteristics of spatial processing.

Other considerations of VR interactivity

There are certainly many situations in which increasing interactive immersion in VR can strengthen aspects of memory performance, but this enhancement is clearly not universal. As detailed above, mnemonic benefits associated with interactivity may be constrained by various factors. Such factors include the degree of interactive fidelity (i.e., how closely the interaction with the VR system matches the real-world action being simulated), whether the subject is exercising volitional or motoric control (or both), and which aspect of memory is being evaluated by the behavioral assessment (e.g., item memory vs. spatial memory). However, while a consideration of these features can help to contextualize the results of a given study (e.g., by acknowledging the difference in cognitive demands associated with the volitional and motoric components of interactivity), a clear pattern of results across the literature is still quite difficult to conclusively discern. As such, an account of other factors that might influence the results or interpretation of experiments on VR interactivity is worthwhile and should serve to better equip researchers seeking to further investigate this topic.

One possible contributor to the ambiguity in this area of research is terminological in origin. Specifically, when interactive fidelity is experimentally manipulated, it may be tempting for researchers to classify their conditions as high fidelity and low fidelity for the sake of direct comparison. Although this is a fair characterization in a relative sense (after all, in any particular experiment one condition can be more interactively immersive than the other), one must be cautious to avoid overgeneralizing results attached to these labels as being representative of the full spectrum of interactive fidelity. For instance, consider that an input device that is considered “low fidelity” in one study might be classified as “high fidelity” in another study simply by virtue of how its features compare with some other interactive condition included in the experiment. To address this issue, the Framework for Interaction Fidelity Analysis (or FIFA) was designed as a method to evaluate interactive fidelity more objectively (see McMahan, Lai, & Pal, 2016). Although not developed for memory research, FIFA has provided a framework to more reliably evaluate trends across studies of interactive immersion. Indeed, an interesting pattern seems to be emerging whereby certain aspects of user performance (e.g., navigational efficiency and accuracy) in medium-fidelity conditions are actually worse than in both high-fidelity and low-fidelity conditions, resulting in a U-shaped relationship between interactive fidelity and user performance (e.g., Nabiyouni, Saktheeswaran, Bowman, & Karanth, 2015; for review, see McMahan et al., 2016). It is possible that this unintuitive trend may have ramifications for memory research as well; for instance, increased navigational difficulty with medium-fidelity devices may potentially be impeding effective encoding by increasing the cognitive load of subjects in this condition.
If one were to consider such a device as the “high-fidelity” condition in a given experiment, one might conclude that increased interactive fidelity does not enhance memory when, in actuality, the broader relationship between these concepts may simply be nonlinear. This observation illustrates the potential value of appraising the interactive fidelity of conditions from a single experiment within the wider spectrum of interactive immersion. Consequently, this suggests that future memory research in this area may benefit from attempts to develop and incorporate a system designed to evaluate and label the construct of interactive fidelity in a more experimentally independent manner.
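The labeling problem described above can be made concrete with a small toy sketch. The quadratic curve, the 0-to-1 fidelity scale, and the function names below are purely hypothetical assumptions chosen for illustration; they are not fitted to, or taken from, any of the studies cited here. The sketch only shows how two experiments that each compare a relatively "low" with a relatively "high" fidelity condition could reach opposite conclusions if the underlying relationship were U-shaped.

```python
# Toy illustration only: the quadratic below is a hypothetical stand-in for a
# U-shaped fidelity-performance relationship, not a model fitted to any data.

def toy_performance(fidelity: float) -> float:
    """Hypothetical performance score for a fidelity level on an assumed 0-1 scale."""
    return 0.6 + 1.2 * (fidelity - 0.5) ** 2


def relative_comparison(lower: float, higher: float) -> str:
    """Describe the outcome of a two-condition study contrasting two fidelity levels."""
    diff = toy_performance(higher) - toy_performance(lower)
    if diff > 0:
        return "higher-fidelity condition performs better"
    if diff < 0:
        return "higher-fidelity condition performs worse"
    return "no difference"


# Hypothetical Study A: low-fidelity device (0.0) vs. medium-fidelity device (0.5),
# where the medium-fidelity device is labeled "high fidelity" within that study.
study_a = relative_comparison(0.0, 0.5)

# Hypothetical Study B: the same medium-fidelity device (0.5), now labeled
# "low fidelity", vs. a high-fidelity device (1.0).
study_b = relative_comparison(0.5, 1.0)

print("Study A:", study_a)
print("Study B:", study_b)
```

Under these assumed values, the two hypothetical studies attach the labels "high fidelity" and "low fidelity" to the same medium-fidelity device and arrive at opposite conclusions, which is the kind of apparent discrepancy that an experimentally independent fidelity scale would help to resolve.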

Research on the topic of VR interactivity may also be influenced by properties of experimental design. Experiments commonly feature manipulations of VR interaction between subjects, but within-subjects designs are less frequently used and comparatively understudied (e.g., James et al., 2002). This point merits consideration because design effects are known to influence various memory phenomena. With respect to action memory, the enactment effect tends to be more robust in the context of within-subjects designs than in between-subjects designs (Engelkamp & Dehn, 2000; Steffens, Buchner, & Wender, 2003; see also Peterson & Mulligan, 2010). Given the conceptual similarity between studies of enactment and investigations of interactivity in virtual environments, it is possible that the frequent usage of between-subjects designs in this domain could be dampening the effects of VR interaction on memory. Consequently, features of experimental design might be contributing to the observed discrepancies in results across studies on this topic and should thus be considered in future research.

Other noteworthy variations exist between studies on the subject of VR interactivity as well. For instance, some studies involve interaction from a stationary position, whereas others require virtual locomotion throughout the environment. Likewise, experiments differ in terms of whether interaction is characterized by a subject’s movement (of the head or body) in virtual space, or whether it involves the direct manipulation of individual objects in this space (e.g., picking up and/or rotating an object to inspect it). Most experiments also generally assess performance from intentional encoding tasks, with incidental encoding being comparatively underresearched with respect to this topic. Based on the various outcomes from current research, considerable work is clearly still needed to determine what sorts of benefits may be provided by interaction with a virtual environment and what specific properties of memory (e.g., item memory or spatial memory) might enjoy this enhancement.

The “reality” of virtual reality: Transfer effects and comparing VR with real-life training

One of the great potential benefits of VR is the ability to create a theoretically infinite number of ecologically valid scenarios in a controlled setting. As such, its potential for use in training applications has been of great interest, particularly in fields where comparable real-life environments would be too expensive or difficult to create. Such programs also offer the prospect of learning tasks in a less risky environment, such as surgeons learning to operate in VR before an operation on a human. Despite these potential benefits, the utility of VR training in such settings depends entirely upon the effective transfer of learning from the VR setting to the real-world environment being simulated. Without the transfer of learning, training in VR would only be useful if retrieval also takes place in VR. If that is the case, VR may prove to be an inefficient use of time relative to live training in the real world. To that end, it is important to study transfer effects in the context of VR training. Moreover, even assuming that this transfer occurs, one should still compare levels of performance between subjects learning in VR and those learning the same task in real life. After all, even if learning transfers from VR, performance will not necessarily be comparable with real-life training. As such, basic research geared toward identifying and reducing gaps in performance between these two modes of learning (and understanding why such gaps exist in the first place) is critical to maximizing the potential utility of VR as an instructional tool.

Transfer from VR to the real world

Many studies that have inspected the general transfer of learning from VR to real life have successfully demonstrated that information observed initially in a virtual environment can be reliably assessed in an analogous real-life environment. Recall the Sim-VR (CAVE) study by Ragan et al. (2010), in which subjects learned a procedural task with objects moving around the environment in an ordered sequence. After the study phase, subjects were assessed on their memory for the sequence both in the CAVE setup and in a room adjacent to the CAVE that served as its real-life counterpart. The testing environment had no effect on either the speed or the accuracy of recalling the steps of the learned procedures. This result demonstrates that information encoded in VR does not exclusively enhance memory performance for tasks that are later retrieved in VR, but instead extends to real environments as well.

Transfer of learning can even take place with virtual environments that do not train a subject on the specific task that they will later have to replicate in the real world. In a study by Connors et al. (2014), researchers created an audio-only Desktop-VR environment to test whether transfer of spatial information could occur for subjects who are blind. As most studies of spatial navigation in VR contain visual information as the primary modality for conveying the layout of a virtual environment, this study is a noteworthy methodological departure that allowed the authors to assess whether spatial learning in VR is a vision-dependent phenomenon. Moreover, the researchers had subjects learn the layout of the virtual environment in one of two ways: either through training on preselected routes or through a ludic (i.e., game-based) approach in which the subjects freely navigated the environment with the goal of collecting jewels and avoiding monsters who threatened to steal them. After the study phase, subjects were tested on their ability to navigate a series of routes not explicitly taught during training. These navigation tasks were completed first in the virtual environment and then inside the physical building after which the virtual environment was modeled. Subjects who trained in the virtual environment were able to successfully navigate these routes with comparable accuracy both in VR and in the actual building, thus demonstrating a transfer of spatial learning from VR to real life. Furthermore, results indicated that the spatial accuracy and navigation speed of subjects who trained with the ludic version of the virtual environment were generally comparable with (and occasionally superior to) the performance of subjects who were taught specific routes during the study phase.
These results not only demonstrate a transfer effect in a nonvisual modality but also highlight the potential utility of using an undirected ludic-based strategy in VR learning tasks (as such an approach might be more interesting to learners and potentially just as useful for the transfer of knowledge to the real world).

Real-life versus VR training

Although studies such as those described above lend support to the notion that VR training can transfer to tasks completed in real life, they do not indicate how training in VR compares with real-life training. Understanding this comparison is key to determining the comparative utility of virtual and real-world training. Performance comparisons between VR and real-life learning tasks are variable, but in a number of cases real-life training outperforms learning that occurs in a virtual environment. For instance, Flannery and Walles (2003) placed subjects in either a virtual (see Footnote 7) or real-life office and later assessed their item recognition for objects that were seen in the environment (subjects were not explicitly instructed to study the environment). Subsequent item-recognition scores were significantly higher for subjects who observed the real-life office, indicating a relative disadvantage for virtual learning. Similarly, Hoffman, Garcia-Palacios, Thomas, and Schmidt (2001) had subjects touch a series of objects both in real life and in Headset-VR. In the Headset-VR condition, the location of the hands relative to the virtual object was tracked, but there was no tactile feedback. Again, item-recognition performance was superior for subjects who interacted with the objects in real life.