Abstract

One of the most influential ideas in the cognitive psychology literature of recent decades is conflict monitoring theory. According to this account, each time we experience a conflict (e.g., between a colour word and print colour in the Stroop task), attentional control is upregulated to minimize distraction on subsequent trials. Though influential, the evidence purported to support this theoretical model (primarily, proportion congruent and congruency sequence effects) has been frequently criticized. Furious debate has centered on whether conflict monitoring occurs and, if so, under which conditions. The present article presents an updated review of this debate. In particular, the article considers new research that either (a) seems particularly damaging for the conflict monitoring view or (b) seems to provide support for the theory. The author argues that new findings of the latter sort are still not compelling: several have already-demonstrated confounds, and others are plausibly confounded. On balance, further progress has provided even stronger support for the position that conflict monitoring is merely an illusion. Instead, the net results can be more coherently understood in terms of (relatively) simpler learning/memory biases unrelated to conflict or attention that confound the key paradigms.


Whether it is the screaming kids in the backseat stealing our attention from the road or a cacophony of chattering voices in the café interfering with our ability to listen to a conversation partner, distraction is everywhere. To optimally interact with our world, we often need to divert attention away from these sources of distraction and focus on a single target. One laboratory-based example of this comes from the colour-word Stroop paradigm (Stroop, 1935), in which participants are presented with coloured colour words (e.g., the word “blue” printed in red) and are required to suppress the dominant tendency to read the word, and instead identify the print colour of the word (i.e., red). The relative success of selective attention is reflected by high accuracy in colour identification (i.e., we can avoid reading the word . . . most of the time). However, performance is slower and less accurate when the word and colour are incongruent (e.g., “blue” in red) compared with when they are congruent (e.g., “blue” in blue). This congruency effect provides clear evidence that participants are not completely successful at filtering out distracting information, despite the intention to do so. Related effects are observed in the flanker (Eriksen & Eriksen, 1974), Simon (Simon & Rudell, 1967), and various other tasks.

Conflict monitoring account

It is obvious that we are able (however imperfectly) to focus our attention on specific stimuli or stimulus dimensions in order to achieve a task goal. For instance, if we are at a loud cocktail party, we are able to focus our attention on our conversation partner and tune out the background noise (Cherry, 1953), or at least mostly do so (Moray, 1959). A more specific view about one of the ways in which attention might be controlled, however, comes from the conflict monitoring (or conflict adaptation) account. The conflict monitoring account proposes that participants dynamically reduce the amount of attention allocated to distracting information (e.g., the word in a Stroop task) and/or increase the amount of attention allocated to target information (e.g., the colour of a Stroop stimulus) in response to experienced conflict (e.g., Botvinick, Braver, Barch, Carter, & Cohen, 2001). That is, each time conflict (e.g., between two potential response options) is experienced, control is upregulated, and each time conflict is absent (or minimal), control is downregulated. This is illustrated in Fig. 1. Conflict monitoring (along with closely related accounts) is extremely popular. For instance, according to Google Scholar, eight papers (Botvinick et al., 2001; Botvinick, Cohen, & Carter, 2004; Botvinick, Nystrom, Fissell, Carter, & Cohen, 1999; Carter et al., 1998; Kerns et al., 2004; MacDonald, Cohen, Stenger, & Carter, 2000; E. K. Miller & Cohen, 2001; Ridderinkhof, Ullsperger, Crone, & Nieuwenhuis, 2004) that are 20 years old or less have well over 30 thousand citations between them as of the time of writing this paper. Most of the proposed evidence for conflict monitoring comes from proportion congruent and congruency sequence effects.

Fig. 1 (a) Simplified visual representation of conflict monitoring. Each time a high-conflict event is experienced, control increases. Without conflict, control decreases. The grey line indicates the current amount of control. The depicted microadjustments are fundamental to the conflict monitoring account. (b) Model via which attention is controlled. Conflict between responses is measured and summed by a conflict-monitoring device, which then increases or decreases the attention focused on the colour and word.
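To make the microadjustment idea concrete, here is a minimal Python sketch of a conflict-driven control update. It is a toy under stated assumptions, not the Botvinick et al. (2001) model: conflict is coded as 1 on incongruent trials and 0 on congruent trials, and the linear update rule and its hypothetical `gain` and `decay` values are arbitrary choices made only for illustration.

```python
from statistics import mean


def update_control(control, conflict, gain=0.3, decay=0.1):
    """Return the control level for the next trial.

    Hypothetical linear rule: control rises in proportion to the conflict
    just experienced and decays by a fixed fraction on every trial,
    clipped to the range [0, 1].
    """
    new = control + gain * conflict - decay * control
    return min(1.0, max(0.0, new))


def simulate_control(trials, start=0.5, gain=0.3, decay=0.1):
    """Return the control level in force on each trial of `trials`.

    `trials` is a string of 'C' (congruent) and 'I' (incongruent) codes.
    Conflict is simplified to 1 for incongruent and 0 for congruent trials.
    """
    levels = []
    control = start
    for trial in trials:
        levels.append(control)  # control applied to the current trial
        conflict = 1.0 if trial == "I" else 0.0
        control = update_control(control, conflict, gain, decay)
    return levels


# Summing many small adjustments gives a mostly incongruent list more
# control on average than a mostly congruent one (the PC logic), and
# control right after an incongruent trial exceeds control right after
# a congruent trial (the CSE logic).
mostly_congruent = "CCCI" * 25
mostly_incongruent = "IIIC" * 25
print(round(mean(simulate_control(mostly_congruent)), 3))
print(round(mean(simulate_control(mostly_incongruent)), 3))
```

Because one update rule drives both patterns, this sketch also shows why the same microadjustments are taken to explain both the PC effect and the CSE.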

The proportion congruent (PC) effect is the observation that the magnitude of the congruency effect is modulated by the proportion of congruent trials in the task. In particular, the congruency effect is smaller in a task with mostly incongruent (e.g., 75% incongruent, 25% congruent) trials, relative to a task with mostly congruent (e.g., 75% congruent, 25% incongruent) trials (Logan & Zbrodoff, 1979; Logan, Zbrodoff, & Williamson, 1984). Although initially described in a very different way, the PC effect is typically taken as evidence for conflict-driven attentional control (Botvinick et al., 2001; Cheesman & Merikle, 1986; Lindsay & Jacoby, 1994; Lowe & Mitterer, 1982). According to the conflict monitoring view, the congruency effect is reduced in the mostly incongruent condition because participants experience frequent conflict, and they deal with this conflict by adapting attention more strongly away from the distracter and/or more strongly toward the target. Thus, the word has less influence on colour identification, thereby reducing the congruency effect. In the mostly congruent condition, however, conflict is (relatively) less frequent and attentional control is lax.
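Because the PC effect is an interaction, it can be written as a difference between two congruency effects. The Python sketch below computes it from (congruency, RT) pairs; the RT values are made-up numbers chosen only to illustrate the arithmetic, not results from any study.

```python
from statistics import mean


def congruency_effect(trials):
    """Mean incongruent RT minus mean congruent RT.

    `trials` is an iterable of (congruency, rt) pairs, congruency 'C' or 'I'.
    """
    congruent = [rt for cong, rt in trials if cong == "C"]
    incongruent = [rt for cong, rt in trials if cong == "I"]
    return mean(incongruent) - mean(congruent)


def pc_effect(mostly_congruent, mostly_incongruent):
    """Positive when the congruency effect shrinks in the mostly incongruent list."""
    return congruency_effect(mostly_congruent) - congruency_effect(mostly_incongruent)


# Illustrative (invented) RTs in ms.
mc_block = [("C", 600), ("C", 610), ("C", 590), ("I", 720)]
mi_block = [("I", 700), ("I", 690), ("I", 710), ("C", 640)]
print(congruency_effect(mc_block))    # 720 - 600 = 120
print(congruency_effect(mi_block))    # 700 - 640 = 60
print(pc_effect(mc_block, mi_block))  # 120 - 60 = 60
```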

The congruency sequence effect (CSE), also sometimes referred to as the Gratton effect or (conflating mechanism with observation) the conflict adaptation effect, is the observation that congruency effects are smaller following an incongruent trial, relative to a congruent trial (Gratton, Coles, & Donchin, 1992). According to the conflict monitoring view, this results from a decrease in attention to the distracter and/or increase in attention to the target following a conflicting incongruent trial (Botvinick et al., 1999). That is, after experiencing conflict on one trial, control is increased. Thus, the word has less influence on colour identification on the following trial, reducing the congruency effect. In contrast, after a congruent trial, attention is more lax. It is worth noting that the same “microadjustments” illustrated in Fig. 1 can in principle explain both the CSE (i.e., effect of immediately preceding adjustment) and PC effect (i.e., summed effect of many small adjustments).
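The CSE is likewise a difference of differences, but here the grouping variable is the congruency of the preceding trial. Below is a minimal Python sketch, assuming a single uninterrupted run of correct trials (real analyses usually also drop error trials, post-error trials, and the first trial of each block). The RTs are invented for illustration.

```python
from statistics import mean


def congruency_sequence_effect(sequence):
    """Return (CE after congruent, CE after incongruent, CSE).

    `sequence` is a list of (congruency, rt) pairs in trial order.
    Each trial from the second onward is classified by the previous
    trial's congruency and its own congruency.
    """
    cells = {("C", "C"): [], ("C", "I"): [], ("I", "C"): [], ("I", "I"): []}
    for (prev_cong, _), (cur_cong, rt) in zip(sequence, sequence[1:]):
        cells[(prev_cong, cur_cong)].append(rt)
    ce_after_congruent = mean(cells[("C", "I")]) - mean(cells[("C", "C")])
    ce_after_incongruent = mean(cells[("I", "I")]) - mean(cells[("I", "C")])
    return ce_after_congruent, ce_after_incongruent, ce_after_congruent - ce_after_incongruent


# Invented RTs (ms) that contain all four previous/current combinations.
trials = [("C", 600), ("C", 580), ("I", 700), ("I", 650), ("C", 620), ("I", 720)]
print(congruency_sequence_effect(trials))  # (130, 30, 100)
```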

Goals of the current review

The notion that humans are able to control their attention to achieve a task goal is, of course, both intuitive and uncontroversial. Conflict monitoring, on the other hand, is not. That is, attention may be controllable, but not necessarily in the way that the conflict monitoring account suggests (i.e., conflict-driven microadjustments of attention). As such, the strong position that conflict monitoring may not be real should not be overgeneralized as a suggestion that cognitive and attentional control, more broadly, are unimportant for our interactions with the world (Footnote 1). In a previous review (Schmidt, 2013b), the author presented the case that many key findings in the conflict adaptation domain could be coherently understood in terms of much simpler learning/memory biases. That is, confounds present in typical PC and CSE procedures may be what actually produces the key effects, and not conflict monitoring per se.

Since this initial review, much new research has been conducted on this issue, including a special issue devoted entirely to this debate (Schmidt, Notebaert, & Van den Bussche, 2015). The present article is not intended as a general review of the attentional control literature, of which there are many (e.g., E. Abrahamse, Braem, Notebaert, & Verguts, 2016; Bugg & Crump, 2012; Egner, 2008, 2014). Instead, this article provides an updated position piece to illustrate that there remain reasons for skepticism toward conflict monitoring theory. As the current paper aims to be a continuation of the 2013 review, redundancy with the prior review will be avoided to the extent possible. Of course, several key findings inevitably need to be revisited to maintain the coherence of the current review, but the present paper focuses more heavily on research that has been conducted since 2013. In other cases, the current article revisits speculative ideas that were presented in the previous review and have since been tested (and, for the most part, confirmed). This review does not claim that the question of whether conflict adaptation exists can be resolved immediately, but it will attempt to show that the evidence is stronger than ever for the notion that (nonconflict) learning/memory biases might be a sufficient account of findings from the attentional control domain.

Learning/memory view and the PEP model

Before proceeding to a discussion of particular key points of debate in the attentional control literature, it is useful to first indicate what the author refers to when talking about learning (or memory) confounds in attentional control procedures. It is, of course, the case that conflict monitoring involves learning/memory processes (E. Abrahamse et al., 2016), like any other account (i.e., conflict monitoring involves learning about conflict). However, when referring to “learning confounds” the author specifically refers to learning unrelated to conflict, and learning processes that are (unless otherwise specified) unrelated to attention. Thus, the key issue is decidedly not whether learning processes are involved in PC effects and CSEs (a trivial question with an obvious affirmative answer), but rather whether the learning driving these effects is related to the monitoring of conflict and conflict-triggered adjustment of attention.

As one example, Schmidt and Besner (2008; see also, Mordkoff, 1996) argue that the PC effect is confounded by contingencies, such that the distracting word is predictive of what response to make for some trials. Specifically, congruent trials benefit in the mostly congruent condition (e.g., “blue” is presented most often in blue, so “blue” is predictive of a blue response), thus increasing the congruency effect. Depending on the task contingencies, incongruent trials benefit in the mostly incongruent condition (e.g., “purple” is presented most often in green, so “purple” is predictive of a green response), thus decreasing the congruency effect. As such, simple learning about what colour response is likely given the presented word produces an interaction of exactly the same form as that predicted by the conflict monitoring account. Thus, it could be that the PC effect has nothing to do with conflict adaptation at all. Instead, it may be the incidental result of participants learning the regularities between stimuli and responses.
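The contingency confound can be made explicit by computing, for each word, the probability of each colour given that word. The Python sketch below uses hypothetical frequency counts (not from any published design) for one mostly congruent word and one mostly incongruent word of the kind described above; the modal colour is the response that a learner tracking only word–response regularities would come to expect.

```python
def colour_given_word(counts):
    """Convert {word: {colour: frequency}} into {word: {colour: P(colour | word)}}."""
    probs = {}
    for word, colours in counts.items():
        total = sum(colours.values())
        probs[word] = {colour: n / total for colour, n in colours.items()}
    return probs


def expected_response(counts, word):
    """The colour most often paired with `word`, i.e. the response it predicts."""
    colours = counts[word]
    return max(colours, key=colours.get)


# Hypothetical counts: "blue" is mostly congruent (9 of 12 in blue),
# "purple" is mostly incongruent (9 of 12 in green).
counts = {
    "blue": {"blue": 9, "green": 3},
    "purple": {"purple": 3, "green": 9},
}
probs = colour_given_word(counts)
print(probs["blue"]["blue"], expected_response(counts, "blue"))       # 0.75 blue
print(probs["purple"]["green"], expected_response(counts, "purple"))  # 0.75 green
```

In the mostly congruent case the word predicts the congruent response (helping congruent trials); in the mostly incongruent case it predicts one incongruent response (helping those incongruent trials). Both shifts shrink or grow the congruency effect in the same direction as conflict monitoring predicts.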

Similarly, Mayr, Awh, and Laurey (2003; see also, Hommel, Proctor, & Vu, 2004; Mordkoff, 2012; Schmidt & De Houwer, 2011) argued that the CSE is biased by feature repetitions. For instance, both the colour and word repeat on a complete repetition trial (e.g., “red” in blue followed by “red” in blue), and these trials are substantially faster than trials in which features do not repeat (e.g., “red” in blue followed by “green” in purple). Following a congruent trial, a complete repetition is only possible if the next trial is also congruent, thus increasing the difference between congruent and incongruent trials. Following an incongruent trial, a complete repetition is only possible if the next trial is also incongruent, thus decreasing the difference between congruent and incongruent trials. These feature repetition biases work to produce an interaction of the same form as that predicted by the conflict adaptation account. Thus, it may be that the CSE, too, is explainable solely in terms of basic learning and memory biases, and not conflict adaptation per se.
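The asymmetry described above can be checked by brute force. The Python sketch below assumes a hypothetical four-colour Stroop set in which every word–colour combination is possible. It enumerates every ordered pair of successive stimuli and tallies complete repetitions (same word and same colour) by previous/current congruency.

```python
from itertools import product

COLOURS = ["red", "blue", "green", "purple"]
# Every (word, ink colour) combination: 4 congruent and 12 incongruent stimuli.
STIMULI = list(product(COLOURS, COLOURS))


def congruency(stimulus):
    word, ink = stimulus
    return "C" if word == ink else "I"


def complete_repetitions_by_sequence(stimuli=STIMULI):
    """Count (previous, current) stimulus pairs that are complete repetitions,
    keyed by previous and current congruency, e.g. 'CC' or 'CI'."""
    counts = {"CC": 0, "CI": 0, "IC": 0, "II": 0}
    for previous, current in product(stimuli, stimuli):
        if previous == current:  # both word and colour repeat
            counts[congruency(previous) + congruency(current)] += 1
    return counts


print(complete_repetitions_by_sequence())
# {'CC': 4, 'CI': 0, 'IC': 0, 'II': 12}
```

Because a complete repetition can never change congruency, the fast complete-repetition trials fall only in the congruent-after-congruent and incongruent-after-incongruent cells. That is exactly the pattern that mimics a CSE.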

In both of the examples above, the proposed learning/memory mechanism is unrelated to conflict or attention. That is, participants are simply biased to repeat the response that they have (frequently or recently) made to a stimulus before. Many instances of this sort of learning/memory bias will be discussed throughout this review, including contingency learning, feature integration, temporal learning, and practice effects. All of these “different” biases, however, can be coherently conceptualized as different consequences of one memory storage and retrieval process. This conceptual point has been clearly illustrated with the parallel episodic processing (PEP) model, a neural network model that stores memories of trial events and retrieves these memories on the basis of similarity to anticipate responses (Schmidt, 2013a, 2013c, 2016a, 2016b, 2018; Schmidt, De Houwer, & Rothermund, 2016; Schmidt, Liefooghe, & De Houwer, 2017; Schmidt & Weissman, 2016; for related models, see Hintzman, 1984, 1986, 1988; Logan, 1988; Medin & Schaffer, 1978; Nosofsky, 1988a, 1988b). Feature integration, contingency learning, and practice effects are all a direct consequence of one retrieval mechanism, and temporal learning is an extension of the same idea to the temporal (time) dimension. The PEP model has been used to simulate results from a broad range of domains, including skill acquisition, contingency learning, binding, task switching, instruction and goal implementation, response timing, and, related to the present review, “attentional control.” Though a full discussion of this particular model is beyond the scope of the present article, the PEP model will be referenced throughout as a benchmark for the learning account, as it has been used to simulate a range of findings from the “attentional control” domain without monitoring conflict or adapting attention.
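The idea that one storage-and-retrieval process can yield several “different” biases can be illustrated with a deliberately tiny instance-based memory in Python. This is not the PEP model, which is a neural network with considerably more machinery. It is a hypothetical sketch in the spirit of instance theories: every stored episode votes for its response with a weight equal to the number of features it shares with the current stimulus. A recency weighting, which would strengthen the influence of the immediately preceding trial, is omitted for brevity.

```python
from collections import Counter


class EpisodicMemory:
    """Toy instance memory: stores trial episodes, retrieves by feature overlap.

    Hypothetical illustration only; not the PEP model's actual architecture.
    """

    def __init__(self):
        self.episodes = []  # list of (features, response) tuples

    def store(self, features, response):
        self.episodes.append((dict(features), response))

    def retrieve(self, probe):
        """Return a Counter of response evidence for the probe stimulus.

        Each stored episode adds its similarity (the number of matching
        feature values) to the response it was paired with.
        """
        evidence = Counter()
        for features, response in self.episodes:
            similarity = sum(1 for key, value in probe.items() if features.get(key) == value)
            if similarity:
                evidence[response] += similarity
        return evidence


memory = EpisodicMemory()
for _ in range(3):
    memory.store({"word": "blue", "ink": "blue"}, "blue")
memory.store({"word": "blue", "ink": "red"}, "red")

# A new stimulus with the word "blue" retrieves mostly "blue" episodes,
# so the frequent word-response pairing biases the anticipated response.
print(memory.retrieve({"word": "blue", "ink": "green"}))
# Counter({'blue': 3, 'red': 1})
```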

Item-specific proportion congruent (ISPC) effects

Although the traditional variant of the PC procedure involves presenting participants with either mostly congruent stimuli or mostly incongruent stimuli (or both, but in different blocks), in the item-specific proportion congruency (ISPC) procedure, participants are presented with some stimuli (e.g., blue and green) that are mostly congruent and other stimuli (e.g., red and yellow) that are mostly incongruent, all intermixed into one procedure (Jacoby, Lindsay, & Hessels, 2003). The congruency effect is still smaller for mostly incongruent stimuli relative to mostly congruent stimuli, termed an ISPC effect. As discussed in the prior review (Schmidt, 2013b), this produces a logical predicament for the conflict monitoring view, because any sort of conflict-driven attentional control to ignore a distracting word would have to be triggered by the conflict associated with individual distracter words, which requires knowledge of the distracting word, which can only be known after attending to the distracting word. That is, you cannot decide whether or not to attend to a distracting stimulus until after you already have. Of course, this notion is not completely unworkable, as it can be assumed that attention weights are gradually adjusted over the course of a trial, with attention slowly drifting away from the word as evidence accrues that the word is typically incongruent and/or towards the word as evidence accrues that the word is typically congruent (i.e., the identity of a word determines the attentional weight given to it, but in a more recurrent fashion).
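The ISPC manipulation is a property of individual words rather than of the list as a whole. The Python sketch below builds a hypothetical intermixed list and confirms the item-level proportions; the word sets, the 12 presentations per word, and the 9/3 split are illustrative assumptions. Note that in this typical arrangement, each mostly incongruent word is also paired frequently with one particular incongruent colour.

```python
import random
from collections import defaultdict


def build_ispc_list(mc_words, mi_pairs, n_per_word=12, n_major=9, seed=1):
    """Build a shuffled list of (word, ink) trials.

    mc_words: words shown in their own colour on n_major of n_per_word trials
              (at least two words, so incongruent colours can be drawn).
    mi_pairs: {word: colour}; each word is shown in that (incongruent) colour
              on n_major trials and in its own colour on the rest.
    """
    trials = []
    for word in mc_words:
        others = [c for c in mc_words if c != word]
        trials += [(word, word)] * n_major
        trials += [(word, others[i % len(others)]) for i in range(n_per_word - n_major)]
    for word, colour in mi_pairs.items():
        trials += [(word, colour)] * n_major
        trials += [(word, word)] * (n_per_word - n_major)
    random.Random(seed).shuffle(trials)  # intermix all items in one list
    return trials


def proportion_congruent_by_word(trials):
    totals, congruent = defaultdict(int), defaultdict(int)
    for word, ink in trials:
        totals[word] += 1
        congruent[word] += word == ink
    return {word: congruent[word] / totals[word] for word in totals}


trials = build_ispc_list(["blue", "green"], {"red": "yellow", "yellow": "red"})
print(proportion_congruent_by_word(trials))
```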

Though some solutions to this logical predicament have been proposed (e.g., Blais, Robidoux, Risko, & Besner, 2007; Verguts & Notebaert, 2008), the alternative view is that the ISPC effect is exclusively due to contingency biases in the task, as discussed earlier. In the prior review (Schmidt, 2013b), it was discussed how Schmidt (2013a) was able to compare (a) high and low contingency items of equal PC, revealing a robust contingency bias, and (b) mostly congruent and mostly incongruent items of equal contingency, revealing no remaining PC effect. These results strongly suggested that the ISPC effect is exclusively due to contingency biases. A subsequent report by Hazeltine and Mordkoff (2014) reached a similar conclusion with a different dissociation procedure. In particular, the frequencies of individual congruent, incongruent, and neutral word–colour pairs were manipulated (high, medium, and low) partially independently from PC (high, medium, and low). While there were robust effects of contingencies of comparable magnitude for congruent, incongruent, and neutral items, there were no remaining differences between the different PC levels. Together, these two reports provide the most straightforward dissociations between contingency and conflict monitoring biases to date, and both lend very strong support to a contingency-only interpretation of the ISPC effect. Similar dissociation logic was also applied in fMRI research (Grandjean et al., 2013), where the correlation between the ISPC effect and anterior cingulate cortex (ACC) BOLD signals (previously observed by Blais & Bunge, 2010) could be traced back to the contingency bias (and not conflict monitoring) when dissociating the two.

In stark contrast to the above-discussed results, other findings have emerged that seem to be inconsistent with the contingency-only account of the ISPC effect. Of particular interest are a series of experiments by Bugg and Hutchison (2013; see also, Bugg, 2015; Bugg, Jacoby, & Chanani, 2011). According to these authors, conflict monitoring does exist but only shows itself when not overwhelmed by more informative stimulus–response regularities (i.e., contingencies). In the more typical designs, in which both mostly congruent and mostly incongruent stimuli were predictive of a response, evidence again strongly supported the contingency-only view. However, the authors argued that attentional control was observed when words were made less predictive of the target colour by presenting mostly incongruent words in many different incongruent colours (i.e., rather than frequently in one). Some of the evidence argued as support for the attentional control view relied heavily on additive factors logic (Sternberg, 1969), which is often problematic (Ridderinkhof, Vandermolen, & Bashore, 1995; Smid, Lamain, Hogeboom, Mulder, & Mulder, 1991). In particular, Schmidt and Besner (2008) suggested that congruency and contingency effects should produce roughly additive effects, as they are due to different processes, and an overadditive interaction between PC and congruency might therefore be interpreted as evidence of conflict monitoring. This reasoning, however, is demonstrably flawed (Footnote 2) in any sort of cascading system (Schmidt, 2013a). Most of the evidence for attentional control from Bugg and colleagues relies on interpreting interactions between contingency and congruency factors. Some, however, does not, and these data will be considered in further depth below.

First, large differences in performance were observed between incongruent items with words that were mostly incongruent versus mostly congruent, with responses slower for the latter. Neither incongruent item type involved (especially) high contingency pairings. That is, the logic of the studies was that because none of the incongruent words were strongly predictive (or even above-chance predictive) of one of the incongruent colours, no contingency bias existed. This logic is flawed, however. In particular, the two incongruent item types did vary notably in contingencies: incongruent items with mostly congruent words were extremely infrequent pairings (e.g., four of 32 or 64 presentations), whereas incongruent items with mostly incongruent words were relatively more frequent pairings (e.g., 12 of 32 or 64 presentations). Recent work with nonconflict paradigms has indicated that contingency effects are not merely observed as a difference between greater-than-chance and less-than-chance pairings, but vary along a continuum (Forrin & MacLeod, 2018; Schmidt & De Houwer, 2016a; see also, J. Miller, 1987). That is, participants respond much more slowly to very infrequent stimulus pairings relative to moderately frequent stimulus pairings. Thus, the fact that very-low-frequency pairings with mostly congruent words were responded to more slowly than around-chance-frequency pairings with mostly incongruent words is completely consistent with the contingency learning view (perhaps even a necessary a priori prediction).

Second, Bugg and Hutchison (2013) observed that responses to (high contingency) congruent stimuli in the mostly congruent condition were not appreciably faster than responses to (low contingency) congruent stimuli in the mostly incongruent condition. This difference consistently trended in the predicted direction across studies, but was not as large as the effect for incongruent items. This finding can also be considered consistent with a simple learning view, for a reason related to the previous discussion about the interaction between congruency and contingency being not perfectly additive. In particular, contingency effects (along with most other types of effects) tend to scale down with faster responses. In fact, simulations with the PEP model also produce a smaller contingency effect for congruent items (Schmidt, 2013a). The exact magnitude of the asymmetry in contingency effects for congruent versus incongruent items that each account (contingency learning or conflict monitoring) should expect is, unfortunately, ambiguous. Thus, these results do not provide clear answers to the debate. Dissociation procedures that allow more direct contrasts between (a) high and low contingency items of equal PC and (b) mostly congruent and mostly incongruent items of equal contingencies are more desirable in this respect (e.g., procedures akin to Hazeltine & Mordkoff, 2014; Schmidt, 2013a, as discussed earlier).

Third, and most critically, Bugg and Hutchison (2013) found that the ISPC effect transferred to novel items. In particular, when new colour words were presented in the (previously) mostly congruent and mostly incongruent colours, responding was slower to the incongruent items in the mostly congruent colour relative to the same items in the mostly incongruent colour. This effect was, however, only marginally significant (Footnote 3) in one small sample. This finding, if replicable, would be problematic for a pure contingency learning view (i.e., without supplemental assumptions), as there is no contingency for the new transfer words. Another study also observed that mostly congruent words presented in novel incongruent colours were responded to more slowly than mostly incongruent words presented in novel colours. This only occurred in a four-choice task where mostly congruent words were presented frequently in the congruent colour and mostly incongruent words were presented at (roughly) chance contingencies in all colours. Thus, this transfer effect can equally well be explained as a low contingency cost for the mostly congruent words (e.g., the word “blue” leads to an expectation of a blue stimulus, which is not appropriate).

Another potential problem with the line of studies by Bugg and Hutchison (2013) and others is that there may be a contingent attentional capture confound, as outlined in Schmidt (2014a). In particular, evidence for conflict adaptation is generally only observed in the restricted case where the mostly congruent condition involves (almost by necessity) highly predictive distracters (i.e., an item cannot be “mostly congruent” unless it is presented highly frequently in its congruent colour) and, critically, the mostly incongruent condition involves nonpredictive (or weakly predictive) stimuli (e.g., “yellow” is presented in many incongruent colours, but in no one incongruent colour with especially high frequency). This is unlike most typical ISPC preparations, where words are equally predictive of a single response in both conditions (e.g., “blue” most often in blue and “yellow” most often in red). It is well known that attention is attracted to informative stimuli (Cosman & Vecera, 2014; Jiang & Chun, 2001; see also, Badre, Kayser, & D’Esposito, 2010, for the relationship to policy abstraction). That is, cues in the environment that our cognitive system has learned are predictive of an outcome are attended (i.e., because they, probabilistically or deterministically, indicate the action that is most appropriate). Indeed, similar notions have been around at least since early learning research on cue competition effects (e.g., Mackintosh, 1975; Pearce & Hall, 1980; Sutherland & Mackintosh, 1971). Thus, it may indeed be the case that attention to distracting words is higher in the mostly congruent conditions of Bugg and Hutchison’s four-choice tasks than in the mostly incongruent conditions, but this might be due to a difference in stimulus informativeness, and not to conflict detection and conflict-driven attention adjustment.

As the above discussion aims to demonstrate, no findings have appeared that are clear enough to discard a learning-only view of ISPC effects. This is not to say that conflict monitoring has been conclusively falsified, either. Alternative interpretations have been provided for some of the key findings that have been used to argue against the simple learning view, but more testing is needed to clearly distinguish between conflicting views. At minimum, it seems clear that if conflict adaptation biases do exist in the ISPC effect, they only occur under a restricted set of scenarios (this “last resort” view will be discussed in the Making Sense of Inconsistencies section).

List-level proportion congruent (LLPC) effects

As the standard PC effect is inherently confounded by item-specific biases (i.e., each word is mostly congruent in the mostly congruent condition and each word is mostly incongruent in the mostly incongruent condition), the question naturally arises whether there is anything more to the PC effect than item-specific biases. Thus, the list-level proportion congruent (LLPC) effect is the observation of a PC effect that is due to the overall proportion of congruent trials in the task, independent of any individual item biases (i.e., whether due to item-specific contingency or to control biases). As discussed in the previous review (Schmidt, 2013b), some early studies using straightforward dissociation procedures, in which LLPC was manipulated with contingency-biased inducer items and assessed with intermixed contingency-unbiased diagnostic items, produced no evidence for an LLPC effect (e.g., Blais & Bunge, 2010). However, the prior review also discussed how subsequent results suggested that an effect independent of item-specific biases might be observable (Bugg & Chanani, 2011; Bugg, McDaniel, Scullin, & Braver, 2011; Hutchison, 2011). Some subsequent reports revealed similar results (e.g., Wühr, Duthoo, & Notebaert, 2015).
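The inducer/diagnostic logic can be sketched in a few lines of Python. Under the illustrative assumptions below (hypothetical word sets and trial counts), inducer words carry the list-wide bias. Diagnostic words are congruent on half of their trials and are paired equally often with each colour from their own set, so any PC difference on diagnostic items cannot come from item-specific biases.

```python
def item_trials(word, colour_set, n, n_congruent):
    """n trials for `word`: n_congruent in its own colour, the rest cycling
    through the other colours of `colour_set`."""
    others = [c for c in colour_set if c != word]
    incongruent = [(word, others[i % len(others)]) for i in range(n - n_congruent)]
    return [(word, word)] * n_congruent + incongruent


def build_llpc_block(inducers, diagnostics, inducer_congruent, n_inducer=16, n_diag=8):
    """One block: biased inducer items plus 50%-congruent diagnostic items."""
    trials = []
    for word in inducers:
        trials += item_trials(word, inducers, n_inducer, inducer_congruent)
    for word in diagnostics:
        trials += item_trials(word, diagnostics, n_diag, n_diag // 2)
    return trials


def proportion_congruent(trials, words=None):
    """Proportion of congruent trials, optionally restricted to `words`."""
    selected = [(w, c) for w, c in trials if words is None or w in words]
    return sum(w == c for w, c in selected) / len(selected)


inducers, diagnostics = ["blue", "yellow"], ["red", "green"]
mostly_congruent = build_llpc_block(inducers, diagnostics, inducer_congruent=12)
mostly_incongruent = build_llpc_block(inducers, diagnostics, inducer_congruent=4)
for block in (mostly_congruent, mostly_incongruent):
    # Overall PC differs between blocks; diagnostic PC stays at 0.5.
    print(round(proportion_congruent(block), 3), proportion_congruent(block, diagnostics))
```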

Also as discussed in the prior review (Schmidt, 2013b), however, temporal learning may be a confound in these studies. Learning about timing information can occur just as readily as learning about correlated responses (Matzel, Held, & Miller, 1988), and it has long been known that task pace can profoundly influence performance (Grice, 1968; Grice & Hunter, 1964; Kohfeld, 1968; Los, 1996; Ollman & Billington, 1972; Strayer & Kramer, 1994a, 1994b; Van Duren & Sanders, 1988). Whether participants learn to optimally time responses while avoiding inflated errors (Kinoshita, Mozer, & Forster, 2011) or simply learn to anticipate responding at a similar speed as on previous trials, either strategically or simply out of boredom (Schmidt, 2013c), this has implications for the LLPC effect (along with other findings, like repetition priming; Kinoshita, Forster, & Mozer, 2008; Mozer, Colagrosso, & Huber, 2002; Mozer, Kinoshita, & Davis, 2004). The faster pace engendered by the mostly congruent condition allows participants to be especially well prepared to respond quickly to (frequent) congruent trials, whereas the slower pace in the mostly incongruent condition does not. An inverse but smaller effect can also occur for incongruent trials. In this way, the simple pace of the task can produce an interaction of the same form as the LLPC effect.

Early evidence for a temporal learning interpretation of the LLPC effect was multifaceted (see the previous review; Schmidt, 2013b). In addition to statistical modelling of the influence of previous trial response times on the LLPC and neural network modelling results with the PEP model, it was shown that an LLPC-like interaction is produced by manipulating the task pace with a nonconflict manipulation (e.g., stimulus contrast), termed a proportion easy effect (Schmidt, 2013c). Subsequent research has further ruled out a potential item-specific bias in these proportion easy experiments (Schmidt, 2014b) by employing the same (but nonconflict) inducer/diagnostic design as that used to study LLPC effects proper. One limitation of some of this past work was that demonstrating an LLPC-like effect in a nonconflict task does not necessarily imply that the same timing bias exists in a conflict task (Footnote 4) or that such a bias explains the entirety of the LLPC effect.

Yet further evidence in favour of a temporal learning interpretation of the LLPC effect comes from Schmidt (2017). Unlike prior evidence in support of the temporal learning view, this study adopted a dissociation approach that allowed a direct contrast of the learning and control views. As in other reports, LLPC was manipulated with contingency-biased (inducer) items but assessed with contingency-unbiased (diagnostic) items. Feature integration biases were also eliminated by alternating between two subsets of stimuli (as in Schmidt & Weissman, 2014, discussed later). Critically, however, some of the contingency-biased items were presented along with a wait cue (square). On wait cue trials, participants needed to withhold their response until the wait cue disappeared. Using this manipulation, it was possible to completely equate the mostly incongruent and mostly congruent blocks for task pace, even though conflict proportions were still manipulated. This completely eliminated the LLPC effect (which was otherwise still present and robust when wait cues were only presented briefly). These results prove problematic for the conflict monitoring view, while lending further credence to the temporal learning account. As with any study, of course, these results might be (re)interpreted differently. For instance, it might be proposed that the wait manipulation somehow impaired conflict monitoring: wait cues might trigger a “task switch” that reduces conflict-driven attentional control (Footnote 5). Consistent with this, the CSE has been observed to be smaller on a task switch (Kiesel, Kunde, & Hoffmann, 2006), though these results should be interpreted with caution given the substantial feature integration biases that confound the switch cost in the same way that they confound the CSE (Schmidt & Liefooghe, 2016). In any case, alternative interpretations of the wait cue data might be possible that “save” the conflict monitoring account, though this would require supplementary assumptions to explain why control is not adjusted in response to conflict in such a situation.

Whether all past reports of a (contingency-unbiased) LLPC effect can be accounted for by temporal learning or other factors is not clear. Most past reports on the LLPC effect have not taken this potential confound into consideration. The few studies that have done so (mostly by the present author, plus some as-yet-unpublished replications by others) have observed consistent evidence in favour of the simple learning view, but more work will be needed to determine whether a confound-free LLPC effect is observable in at least some variant of a conflict task. Relatedly, potential biases other than conflict monitoring should be considered. Following the discussion in the Item-Specific Proportion Congruent Effect section, one example is contingent attentional capture, which might play a role in some LLPC designs. For instance, some LLPC experiments use (almost by necessity) strongly contingent distracters in the mostly congruent condition, but (not by necessity) nonpredictive or weakly predictive distracters in the mostly incongruent condition (e.g., Bugg & Chanani, 2011; Bugg, McDaniel, et al., 2011; and one of the two mostly incongruent filler conditions of Hutchison, 2011).

As a further consideration, the LLPC effect might also be explained by a form of attentional adaptation unlike that predicted by the conflict monitoring (or attention capture) account. In particular, the microadjustment control mechanism in the conflict monitoring model is fundamental for explaining most of the effects discussed in other sections of this paper (e.g., a CSE can only occur if attentional control shifts in a meaningfully different way following congruent vs. incongruent items). In contrast, the LLPC effect can be explained by the same microadjustments (as most adjustments will be toward more control in the mostly incongruent condition and toward less control in the mostly congruent condition), but it can also be explained by a single large shift (or relatively few or gradual shifts) in attention after detecting the conflict frequency (e.g., as modelled earlier by Cohen & Huston, 1994). That is, participants could be adjusting their attention based on the global difficulty of the task. Whether this would still count as “conflict monitoring” (as traditionally understood) is unclear, especially when such an adjustment could be due to something other than conflict (directly). For instance, participants might notice error frequency (e.g., “I am making too many errors, so I need to focus better on the colour”) or even task pace (e.g., “I am responding too slowly . . .”). This “light switch” model of attentional control is considered further in the Other Findings section.
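The difference between trial-by-trial microadjustments and a single “light switch” shift can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not an implementation of any published conflict monitoring model: the control variable, its update rule, and the parameter names (`BASE_EFFECT`, `ALPHA`) are all hypothetical. Control scales down the distracter's cost on incongruent trials; the `"micro"` policy nudges control after every trial, whereas the `"light_switch"` policy sets it once to match the block's conflict frequency.

```python
import random
from statistics import mean

BASE_RT = 500.0      # hypothetical congruent RT (ms)
BASE_EFFECT = 100.0  # hypothetical incongruent cost (ms) when control = 0
ALPHA = 0.3          # hypothetical trial-by-trial adjustment rate


def simulate(p_incongruent, policy, n_trials=4000, seed=1):
    """Simulate one block; return (prev_congruent, congruent, rt) tuples."""
    rng = random.Random(seed)
    # "light_switch": one shift to a control level matching the block's
    # conflict frequency. "micro": start neutral, adjust after every trial.
    control = p_incongruent if policy == "light_switch" else 0.5
    trials = []
    prev_congruent = None
    for _ in range(n_trials):
        congruent = rng.random() >= p_incongruent
        # Control scales down the distracter's influence on incongruent trials.
        rt = BASE_RT if congruent else BASE_RT + BASE_EFFECT * (1.0 - control)
        if prev_congruent is not None:
            trials.append((prev_congruent, congruent, rt))
        if policy == "micro":
            # Move control toward 1 after conflict and toward 0 after none.
            conflict = 0.0 if congruent else 1.0
            control += ALPHA * (conflict - control)
        prev_congruent = congruent
    return trials


def congruency_effect(trials):
    incongruent_rts = [rt for _, c, rt in trials if not c]
    congruent_rts = [rt for _, c, rt in trials if c]
    return mean(incongruent_rts) - mean(congruent_rts)


def cse(trials):
    after_congruent = [t for t in trials if t[0]]
    after_incongruent = [t for t in trials if not t[0]]
    return congruency_effect(after_congruent) - congruency_effect(after_incongruent)


for policy in ("micro", "light_switch"):
    mc = simulate(0.25, policy)  # mostly congruent block
    mi = simulate(0.75, policy)  # mostly incongruent block
    llpc = congruency_effect(mc) - congruency_effect(mi)
    print(f"{policy}: LLPC = {llpc:.1f} ms, CSE = {cse(mc):.1f} ms")
```

Both policies produce an LLPC effect (a smaller congruency effect in the mostly incongruent block), but only the microadjustment policy produces a CSE (in expectation about `ALPHA * BASE_EFFECT`, i.e., 30 ms with these made-up values); under the light switch policy the CSE is exactly zero. In this toy, the LLPC effect alone therefore cannot discriminate between the two kinds of adjustment.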

Context-specific proportion congruent (CSPC) effects

Further research has been conducted on context-specific proportion congruent (CSPC) effects. In a CSPC experiment, stimuli are mostly congruent when presented in one context (e.g., location on a screen), but mostly incongruent when presented in another context (e.g., another location on the screen). Various contextual stimuli have been used, including stimulus locations (Corballis & Gratton, 2003; Crump, Gong, & Milliken, 2006; Crump, Vaquero, & Milliken, 2008), fonts (Bugg, Jacoby, & Toth, 2008), colours (Heinemann, Kunde, & Kiesel, 2009; Lehle & Hubner, 2008), and even temporal presentation windows (Wendt & Kiesel, 2011). These experiments also involved different tasks, such as Stroop, prime-probe, and flanker tasks.

As mentioned in the previous review (Schmidt, 2013b), however, there are some concerns with CSPC effects. One of these concerns is context-specific contingency learning. That is, while a simple word-response contingency cannot explain why congruency effects are smaller in the mostly incongruent relative to the mostly congruent condition, the word and location together are strongly predictive of the correct response. For instance, if “blue” is presented mostly in blue (mostly congruent) in the top location, but mostly in red (mostly incongruent) in the bottom location, then a “blue” response can be anticipated when seeing the word “blue” and a target in the top location, whereas a “red” response can be anticipated when seeing the word “blue” and a target in the bottom location. Although it is well known that compound learning like this does occur (e.g., in occasion setting research; Holland, 1992; see also, Mordkoff & Halterman, 2008), and it can be demonstrated that a simple contingency learning mechanism natively produces “context-specific” contingency learning effects (e.g., see Schmidt, 2016a, for PEP model simulations), the possibility that context-specific contingency learning biases might explain all or part of the CSPC effect was not clearly investigated until recently.
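The claim that the word alone is uninformative while the word and location together are predictive can be checked by simple counting over a trial list. The two-colour design and its frequencies below are hypothetical, chosen only to mirror the “blue” example in the text; real CSPC designs typically use more colours, and `p_colour` is an illustrative helper name.

```python
# Hypothetical two-colour CSPC list: (word, location, colour) -> frequency.
# Top location is mostly congruent (75%); bottom is mostly incongruent (75%).
DESIGN = {
    ("blue", "top", "blue"): 3, ("blue", "top", "red"): 1,
    ("red", "top", "red"): 3, ("red", "top", "blue"): 1,
    ("blue", "bottom", "red"): 3, ("blue", "bottom", "blue"): 1,
    ("red", "bottom", "blue"): 3, ("red", "bottom", "red"): 1,
}


def p_colour(colour, word, location=None):
    """P(colour | word), or P(colour | word, location) if a location is given."""
    hits = total = 0
    for (w, loc, c), n in DESIGN.items():
        if w == word and (location is None or loc == location):
            total += n
            if c == colour:
                hits += n
    return hits / total


print(p_colour("blue", "blue"))            # word alone: 0.5 (uninformative)
print(p_colour("blue", "blue", "top"))     # word + top location: 0.75
print(p_colour("red", "blue", "bottom"))   # word + bottom location: 0.75
```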

A recent series of studies by Schmidt and Lemercier (2018) has demonstrated quite clearly that context-specific contingency learning biases are, indeed, present and quite robust in CSPC procedures. In particular, the authors used a Stroop task with stimulus font as the contextual stimulus. Contingency biases could be quantified in a dissociation procedure (modelled on Schmidt, 2013a) that allows a comparison of high and low contingency incongruent trials of equal congruency proportions (mostly incongruent). For instance, if “green” is presented most often in red in Font X, then “green” in red in Font X is high contingency, whereas “green” in blue in Font X is low contingency. The word “green” is (equally) mostly incongruent in both cases. This contrast revealed a robust contingency effect in response times and errors. Furthermore, after controlling for these contingency biases, no remaining CSPC effect was observed. In particular, mostly congruent and mostly incongruent items of equal contingency (low contingency) can be compared in the same dissociation procedure. This comparison revealed no evidence for context-specific attentional control, with some results even suggesting an effect in the reversed direction. Perhaps even more problematically, the results suggested that this contingency learning is not particularly “context specific” (i.e., general to each context) but rather heavily item specific (i.e., specific to individual font-word-colour pairings). In particular, in one experiment some items were mostly congruent in one (font) context and mostly incongruent in the other (font) context, whereas other items had the reversed context-to-PC mapping.
With this manipulation, a (not exactly) “context-specific” PC effect was still observed: high-frequency word-font-colour combinations were responded to faster than low-frequency word-font-colour combinations, but the font context did not (overall) predict PC (i.e., in each font, some words were mostly congruent and some were mostly incongruent). With a more traditional CSPC design (i.e., all words mostly congruent in one context and mostly incongruent in the other), the CSPC effect was no larger.
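The two dissociation contrasts described above can be made concrete by labelling each trial type by its congruency, its context PC, and its contingency. The frequencies below are hypothetical (they do not reproduce the design of Schmidt & Lemercier, 2018), and the `classify` helper is an illustrative name.

```python
# Hypothetical colour frequencies for the word "green" in two font contexts.
DESIGN = {
    ("green", "X"): {"red": 4, "green": 1, "blue": 1},  # mostly incongruent
    ("green", "Y"): {"green": 4, "red": 1, "blue": 1},  # mostly congruent
}


def classify(word, font, colour):
    """Label a (word, font, colour) trial type for the dissociation contrasts."""
    counts = DESIGN[(word, font)]
    proportion_congruent = counts.get(word, 0) / sum(counts.values())
    modal_colour = max(counts, key=counts.get)
    return (
        "congruent" if colour == word else "incongruent",
        "MC" if proportion_congruent > 0.5 else "MI",
        "high" if colour == modal_colour else "low",
    )


# Contingency effect: same word, same (MI) context, both incongruent;
# only the contingency differs.
print(classify("green", "X", "blue"), classify("green", "X", "red"))
# Context (PC) effect with contingency held constant: both incongruent, both low.
print(classify("green", "Y", "blue"), classify("green", "X", "blue"))
```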

Another line of evidence for context-specific attention control independent of contingency biases comes from Crump and Milliken (2009; see also, Heinemann et al., 2009). This report used Stroop-like stimuli (distracting colour words and target colours), with location serving as the context cue. CSPC was manipulated with contingency-biased inducer items (e.g., “red” and “green”), but tested with contingency-unbiased critical items (e.g., “blue” and “yellow”). A robust CSPC effect was observed even for the contingency-unbiased critical items. This finding, of course, cannot be explained by (context-specific) contingency learning and is therefore seemingly inconsistent with the results of Schmidt and Lemercier (2018). A subsequent series of experiments did, however, fail to replicate this key transfer effect (Hutcheon & Spieler, 2017), though the original authors were able to replicate the effect again (Crump, Brosowsky, & Milliken, 2017), albeit with much smaller effect sizes. Although further replications from independent labs would be desirable, these results do suggest that some small CSPC effect might still remain after controlling for (“context-specific”) contingency biases.

Related experiments have been conducted that point in the same direction. For instance, Reuss, Desender, Kiesel, and Kunde (2014) observed CSPC transfer from one set of numbers (e.g., 1-4-6-9) to another set (e.g., 2-3-7-8) in a magnitude judgement task (viz., <5 or >5). Similarly, Cañadas, Rodríguez-Bailón, Milliken, and Lupiáñez (2013) observed CSPC transfer from inducing male and female faces to diagnostic male and female faces. These manipulations, however, are less clean than the Crump and Milliken (2009) manipulation, as there is categorical overlap between diagnostic and inducer items in these later studies. For instance, both diagnostic and inducer female faces belonged to the same “female” category (and, invariably, also shared physical feature similarities). Similarly, both diagnostic and inducer numbers belonged to the same number magnitude category (and were also, invariably, numerically closer to the manipulated items; e.g., 2 is closer to 1 and 4 than to 6 and 9). Thus, categorical-level contingency learning could produce CSPC “transfer” (e.g., female predicts a left response task-wide). Categorical contingency learning does occur in the absence of conflict (see, especially, Schmidt, Augustinova, & De Houwer, 2018). The same caveat does not apply to the Crump and Milliken manipulation, as each colour is uniquely associated with its own response.

Yet other results have demonstrated that (location-based) CSPC effects “transfer” to new locations (Weidler & Bugg, 2016; Weidler, Dey, & Bugg, 2018). For instance, if the far bottom-left location is mostly congruent and the far top-right location is mostly incongruent, then there is also a larger congruency effect in the intermediate bottom-left than in the intermediate top-right location, even though PC is not manipulated for these intermediate locations. Contrary to what the authors concluded, however, this result does not argue against a compound-cue contingency learning account. Rather, it only shows that whatever mechanism produces a (location-based) CSPC effect is not tied to exact x,y coordinates on the screen, but to conceptual spaces. Indeed, it is not clear why the conflict monitoring account should make this prediction or why the contingency learning account should not.

That discussion aside, even if transfer does occur from manipulated to nonmanipulated items, it is still uncertain whether this remaining CSPC effect is due to conflict monitoring. In the prior review (Schmidt, 2013b), it was pointed out that context-specific temporal learning biases might contribute to such an effect. Subsequent research seems to confirm this notion. Not only was the PEP model able to simulate such context-specific temporal learning biases (Schmidt, 2016a), but experimental research with a context-specific proportion easy manipulation (Schmidt, Lemercier, & De Houwer, 2014) showed that a CSPC-like interaction is produced in the absence of conflict or even distracting stimuli. In particular, one context (location) was mostly easy (i.e., associated with more fast responses) and another location was mostly hard, but the “easy” and “hard” stimuli were not congruent and incongruent stimuli. Instead, participants simply identified target letters that were printed in a (relatively) higher contrast (easy to see) versus a lower contrast (slightly harder to see). It is unclear how a (context-specific) conflict monitoring account can accommodate such findings, but these results are entirely consistent with context-specific temporal learning. Further reinforcing this notion, diffusion modelling by King, Donkin, Korb, and Egner (2012) suggests that CSPC effects result from changes in criteria (response thresholds), consistent with a temporal learning (or any other criterion-based) account, and not from changes in the drift rate (evidence accumulation), as the conflict monitoring account must predict (i.e., because changes in attentional control should alter the speed of evidence accumulation).
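Mean RTs alone cannot distinguish a threshold change from a drift-rate change, because both can modulate the congruency effect; this is why diffusion modelling is informative here. The sketch below uses the standard closed-form mean decision time of a Wiener diffusion process with absorbing boundaries at 0 and a, unbiased starting point a/2, drift v, and noise s, namely (a / 2v) tanh(av / 2s²). The drift and threshold values are arbitrary and in arbitrary time units; this is not the model fitted by King et al. (2012).

```python
import math


def mean_decision_time(a, v, s=1.0):
    """Mean first-passage time of a Wiener process with drift v > 0, noise s,
    absorbing boundaries at 0 and a, and unbiased start at a / 2."""
    return (a / (2.0 * v)) * math.tanh(a * v / (2.0 * s ** 2))


def congruency_effect(a, v_congruent, v_incongruent):
    return mean_decision_time(a, v_incongruent) - mean_decision_time(a, v_congruent)


V_CON, V_INC = 2.0, 1.0  # hypothetical drift rates; incongruent is slower

# Criterion account: same drifts, different threshold in each context.
high_threshold = congruency_effect(2.0, V_CON, V_INC)
low_threshold = congruency_effect(1.0, V_CON, V_INC)

# Attention account: same threshold, incongruent drift moved toward congruent.
more_control = congruency_effect(2.0, V_CON, 1.5)

print(high_threshold, low_threshold, more_control)
```

With identical drift rates, the higher-threshold context shows the larger congruency effect, so in this toy a criterion difference between contexts is sufficient for a CSPC-like interaction without any change in evidence accumulation.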

The current results do not allow for a definitive conclusion about whether context-specific attentional control plays some role in one or more variants of the CSPC procedure. However, several results pose strong challenges to this notion. At minimum, recent results suggest that, even if context-specific attentional control does occur, clear learning biases (i.e., context-specific contingency and temporal learning biases) are present in the typical preparations. Thus, even if clearer evidence for context-specific attentional control emerges in future research, all future research with the CSPC procedure should aim to reduce or eliminate such biases (i.e., if the goal is to study attentional control), especially given that the vast majority of the effect seems to be due to contingency biases.

Congruency sequence effects (CSEs)

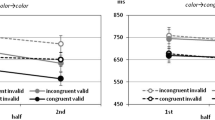

In the prior review (Schmidt, 2013b), it was discussed how the CSE has been shown to be heavily biased by feature integration, or binding, biases (Hommel et al., 2004; Mayr et al., 2003). Concerns with contingency biases due to more-frequent-than-chance presentations of individual congruent and incongruent stimuli were also discussed (Mordkoff, 2012; Schmidt & De Houwer, 2011). Since the original review, new approaches have emerged that attempt to eliminate these feature integration and contingency biases by design (Duthoo, Abrahamse, Braem, Boehler, & Notebaert, 2014; Kim & Cho, 2014; Schmidt & Weissman, 2014, 2015, 2016; Weissman, Egner, Hawks, & Link, 2015; Weissman, Jiang, & Egner, 2014). Perhaps the cleanest of such manipulations comes from Schmidt and Weissman (2014; see also, Mayr et al., 2003, for a similar, but unsuccessful manipulation). In this procedure, feature integration biases are eliminated by alternating on odd and even trials between two subsets of stimuli (e.g., left and right arrows on odd trials, and up and down arrows on even trials). In this way, it is impossible for stimuli or responses to repeat from the previous trial. No contingency bias is present, either, given a 50:50 congruent:incongruent ratio (with two stimuli per subset, as in the example, each distracter is then paired equally often with each target). In contrast to earlier contingency-unbiased studies that eliminated feature integration effects after the fact (i.e., by deleting all trials except complete alternations), this procedure revealed very robust CSEs. Interestingly, however, the CSE seems to be robust with this preparation only for certain tasks (Weissman, Egner, et al., 2015; Weissman et al., 2014). Stroop and flanker tasks (the two most commonly used procedures) revealed no evidence of CSEs, though other reports have revealed small but significant CSEs in these tasks (Duthoo et al., 2014; Jiménez & Méndez, 2014; Kim & Cho, 2014; Weissman, Colter, Drake, & Morgan, 2015).
However, strong CSEs are observed with prime-probe arrow and direction word tasks, in which distracters and targets are arrows (or direction words) and the distracter is presented in advance of the target. Similarly, a strong CSE was observed for the temporal flanker task, in which flanking letters are presented in advance of a central target letter. These results might suggest that preexposure of the distracter increases the magnitude of CSEs. A CSE was also observed for the Simon task with simultaneous stimulus presentation, however (but see Mordkoff, 2012).
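The alternating-subset logic of Schmidt and Weissman (2014) can be sketched as a trial-list generator. The stimulus labels, the balanced shuffling of congruency within each subset, and the function name `make_sequence` are assumptions for illustration, not the original materials or code.

```python
import random

# Two disjoint stimulus subsets, used on odd and even trials respectively.
SUBSETS = (("left", "right"), ("up", "down"))


def make_sequence(n_trials, seed=0):
    """Return (distracter, target) pairs that alternate between subsets.

    Consecutive trials draw from disjoint subsets, so no distracter, target,
    or response can repeat from trial n - 1 to trial n. Congruency is
    balanced 50:50 within each subset (to within one trial for odd counts).
    """
    rng = random.Random(seed)
    per_subset = [n_trials // 2 + n_trials % 2, n_trials // 2]
    congruency = []
    for n in per_subset:
        flags = [True] * (n // 2) + [False] * (n - n // 2)
        rng.shuffle(flags)
        congruency.append(flags)
    trials = []
    for i in range(n_trials):
        subset = SUBSETS[i % 2]
        congruent = congruency[i % 2][i // 2]
        target = rng.choice(subset)
        other = subset[1] if target == subset[0] else subset[0]
        distracter = target if congruent else other
        trials.append((distracter, target))
    return trials


print(make_sequence(6))
```

Because each subset holds only two stimuli, a 50:50 congruency ratio also pairs each distracter equally often (in expectation) with each target, so the sketch avoids contingency biases as well as stimulus and response repetitions.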

Although these CSEs might seem encouraging for the attentional control view, a subsequent series of experiments by Weissman and colleagues raises concerns. Several findings with the novel procedure seem particularly inconsistent with the conflict monitoring view. For instance, a CSE is still observed with long prime-probe intervals, even though the main effect of congruency is no longer significant (Weissman, Egner, et al., 2015). That is, there is a significantly positive congruency effect following a congruent trial, and a significantly negative congruency effect following an incongruent trial. As the amount of conflict experienced has been argued to be roughly correlated with response time (Yeung, Cohen, & Botvinick, 2011), a null congruency effect suggests no difference in conflict between congruent and incongruent trials. Thus, a CSE interaction should not be produced according to the conflict monitoring view. More problematically, attentional control should never be capable of reversing the congruency effect. The conflict monitoring account only allows for a null congruency effect in the case of perfect attentional control (i.e., no influence of the distracting stimulus). Relatedly, the CSE does not seem to correlate with the magnitude of the congruency effect (Weissman et al., 2014).
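The CSE is an interaction, and it can be computed from the four previous-by-current congruency cell means. The RTs below are hypothetical values chosen only to mimic the qualitative pattern just described (a positive effect after congruent trials, a reversed effect after incongruent trials, and a small overall effect); they are not data from Weissman, Egner, et al. (2015).

```python
# Hypothetical mean RTs (ms) keyed by (previous trial, current trial);
# "C" = congruent, "I" = incongruent. Illustrative values only.
cells = {
    ("C", "C"): 480.0, ("C", "I"): 500.0,
    ("I", "C"): 495.0, ("I", "I"): 485.0,
}


def congruency_effect(prev):
    """Incongruent minus congruent RT, conditional on previous-trial congruency."""
    return cells[(prev, "I")] - cells[(prev, "C")]


effect_after_c = congruency_effect("C")  # 20.0 ms
effect_after_i = congruency_effect("I")  # -10.0 ms (reversed)
cse = effect_after_c - effect_after_i    # 30.0 ms interaction
# Overall congruency effect, assuming equal trial counts per cell.
main_effect = (effect_after_c + effect_after_i) / 2  # 5.0 ms

# An account in which control can at most cancel the distracter's influence
# requires both conditional effects to be >= 0; the second one violates this.
print(effect_after_c >= 0, effect_after_i >= 0)  # True False
```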

A CSE in the absence of feature integration and contingency biases might thus be due to processes other than conflict monitoring. There are several potential alternative interpretations, all of which (to one degree or another) seem to provide a better fit to the extant data than the conflict monitoring account. In the original review (Schmidt, 2013b), it was pointed out that temporal learning biases might explain the CSE. That is, after a fast (e.g., congruent) response, the congruency effect will be larger, because congruent trials benefit from early preparation for a similarly fast response. After a slow (e.g., incongruent) response, the congruency effect will be smaller, because participants are less prepared to respond quickly to a subsequent congruent trial. Subsequent work has lent some credence to this notion. In particular, linear mixed-effect analyses of the relationship between previous trial response times and current trial response times produced a pattern of interactions between congruency, previous trial congruency, and previous trial response time that was completely in accord with the temporal learning account and with simulated data from the PEP model (Schmidt & Weissman, 2016). Results were not consistent with the conflict monitoring account. Although further research is certainly needed, these results are encouraging for a temporal learning view. However, the reversed congruency effects observed in some conditions are as problematic for the temporal learning view as for the conflict monitoring view (i.e., temporal learning should also not predict reversed congruency effects).

Another alternative view is that participants (perhaps especially with prepresented distracters) develop expectancies about the likely match between the distracter and the upcoming target (Weissman, Egner, et al., 2015). This is related to the original account of the CSE (Gratton et al., 1992), which proposed that participants expect an incongruent trial following an incongruent trial (and therefore control attention away from distracters), and expect a congruent trial following a congruent trial (and are therefore lax in attentional control). As with any attention-based account, reversed congruency effects should never be predicted. However, expectancies could also be nonattentional in nature. In particular, following a congruent trial, where the distracter matched the target, participants might see the distracter and expect that the (upcoming) target will be the same. For instance, the participant sees a leftward pointing arrow distracter and anticipates a left key response. Following an incongruent trial, participants might expect to need the response opposite to the one indicated by the distracter (see Footnote 6; e.g., see a leftward pointing arrow and anticipate a right response). Notably, this (nonattentional) response expectancy account does allow for reversed congruency effects following an incongruent trial. For instance, if a participant sees a left-pointing arrow distracter and anticipates that a right response will be needed, then a right response (incongruent trial) can be made faster than a left response (congruent trial), as long as the response expectancy bias exceeds the main effect of congruency.
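The nonattentional response expectancy account can be expressed as a toy additive model. The parameter names and values (`BASE`, `COST`, `BENEFIT`) are hypothetical; the point is only that a reversed effect after incongruent trials follows whenever the expectancy benefit exceeds the congruency cost, which an account based purely on attenuating the distracter cannot produce.

```python
BASE = 450.0     # hypothetical congruent RT without any expectancy (ms)
COST = 30.0      # hypothetical main effect of congruency (ms)
BENEFIT = 40.0   # hypothetical speed-up when the expected response is required (ms)


def predicted_rt(prev_congruent, congruent):
    """RT under a simple additive response expectancy model."""
    # After a congruent trial, expect the target to match the distracter;
    # after an incongruent trial, expect the opposite response.
    expect_match = prev_congruent
    rt = BASE + (0.0 if congruent else COST)
    if expect_match == congruent:
        rt -= BENEFIT
    return rt


def effect(prev_congruent):
    """Congruency effect (incongruent minus congruent) after a given trial type."""
    return predicted_rt(prev_congruent, False) - predicted_rt(prev_congruent, True)


print(effect(True), effect(False))  # 70.0 -10.0
```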

Still other alternative interpretations are possible. For instance, Hubbard, Kuhns, Schafer, and Mayr (2017) propose that attentional settings might simply carry over passively from the immediately preceding trial (which would presumably not require an active conflict monitor). Relatedly, participants might recruit the same “task set” (including attentional settings) as on the immediately preceding trial (Hazeltine, Lightman, Schwarb, & Schumacher, 2011). Similar to the expectancy-based attention or conflict monitoring views, however, these alternative accounts should also never predict a reversed congruency effect (i.e., decreasing attention to the distracter can, at most, reduce a congruency effect to zero). Additionally, while these accounts can presumably explain CSE (and LLPC) effects, they would presumably struggle with ISPC and CSPC effects, which are stimulus triggered.

As another side note, the paradigms that produce the largest and most robust CSEs seem to be those in which there is high perceptual overlap between distracting and target stimuli (e.g., distracting and target arrows, distracting and target direction words, distracting and target flanking letters/colours). Schmidt and Weissman (2015) further showed that the magnitude of the CSE is sizeably reduced without this perceptual overlap (e.g., distracting arrows to target direction words). At first glance, this might seem to suggest that perceptual match/mismatch plays an important role in the CSE (e.g., match/mismatch repetitions being faster than alternations; see Footnote 7), but Schmidt and Weissman further showed that it only matters that the distracter is a potential target. In particular, it did not matter whether arrows or direction words were distracters to arrows or direction words, as long as both arrows and direction words could be targets on some trials. These results were therefore interpreted as showing that attentional capture of distracters that look like a potential target increases the CSE.

It is also noteworthy that another approach for studying CSEs in the absence of confounds exists. Though generally directed toward the study of generalisation of control, many studies have combined two or more types of conflict tasks (e.g., Stroop and Simon) to see whether attentional control transfers across tasks. For instance, does Stroop conflict on Trial n − 1 decrease Simon compatibility effects on Trial n? In some (but not all) of these designs the stimuli and responses do not necessarily overlap across tasks, so a CSE that spans from one task to the next would be evidence against a simple feature binding account. This literature has produced rather mixed results, with cross-task CSEs sometimes observed (Freitas, Bahar, Yang, & Banai, 2007; Freitas & Clark, 2015; Kan et al., 2013; Kleiman, Hassin, & Trope, 2014; Kunde & Wühr, 2006) and sometimes not (Akcay & Hazeltine, 2011; Funes, Lupiáñez, & Humphreys, 2010; Wendt, Kluwe, & Peters, 2006). Where generalisation across tasks does occur, this might depend on overlap in the source of conflict (Freitas & Clark, 2015; see Egner, 2008, for a review). To the extent that cross-task CSEs can be observed for two paradigms, they might be used to assess competing accounts of the CSE. For instance, if the pace of the two tasks is drastically different, then the temporal learning account might not predict a CSE.

In sum, evidence for conflict monitoring with the CSE is still ambiguous. On the one hand, new paradigms have emerged that have produced more robust evidence for CSEs in the absence of feature integration and contingency learning biases. On the other hand, many or most of these new results seem problematic for the conflict monitoring view. Various other alternative accounts of the results (some attentional, and some not) exist, and future research will be needed to distinguish between these alternatives.

Other findings

Some other results that have emerged in recent years do not fit as cleanly into the previous sections of this review. Two particularly interesting new directions are discussed here. Results that might seem to provide some of the most compelling evidence for conflict adaptation come from a paper by E. L. Abrahamse, Duthoo, Notebaert, and Risko (2013). These authors presented evidence that the PC (and ISPC) effect is modulated differently by a shift from mostly congruent to mostly incongruent lists than by a shift in the reverse direction. Specifically, if a mostly congruent block comes first, then the congruency effect starts very large and diminishes drastically when switching to a mostly incongruent block. In contrast, if a mostly incongruent block comes first, then the congruency effect starts small and only moderately increases when switching to a mostly congruent block. The idea the authors put forward is that item- and/or list-specific control processes carry over from one block to the next. That is, after a mostly congruent block, attention to the distracter is high and it is therefore rapidly determined that conflict has increased, resulting in an upregulation of control. However, after a mostly incongruent block where attention is tightly controlled away from the distracter, the reduction in conflict is not noticed and attentional control is consequently not relaxed.

This asymmetrical list shifting effect does not make much sense from the perspective of the simplest version of the contingency learning account, as one might posit that participants should simply be responsive to the contingencies of the current block. That is, if the congruency effect in a mostly congruent block is x, and the congruency effect in a mostly incongruent block is y (where x > y due to contingency biases), then the decrease in the congruency effect from a mostly congruent block to a mostly incongruent block (x − y) should be equal in size to the increase from a mostly incongruent block to a mostly congruent block (also x − y).

This asymmetric effect can, however, be accommodated within a simple learning perspective by also taking into consideration practice effects (i.e., skill acquisition). The longer one performs a novel speeded task, the faster one can respond (Newell & Rosenbloom, 1981). This produces a highly regular practice curve, with performance improving rapidly early on and continuing to improve at ever-diminishing rates as performance approaches an asymptotic ideal. For individual participants, trial-to-trial improvements follow an exponential decay function (Heathcote, Brown, & Mewhort, 2000; Myung, Kim, & Pitt, 2000), which looks more like a power function when averaged across participants in blocks (see Logan, 1988; Newell & Rosenbloom, 1981). Schmidt and colleagues (Schmidt & De Houwer, 2016b; Schmidt et al., 2016) further demonstrated that a logical consequence of such practice curves is that any effect (congruency or otherwise) should decrease with practice. This is because initially slow trial types (e.g., incongruent trials) have more room for practice-based improvements than do initially fast trial types (e.g., congruent trials). Indeed, congruency effects do shrink with practice (Dulaney & Rogers, 1994; Ellis & Dulaney, 1991; MacLeod, 1998; Simon, Craft, & Webster, 1973; Stroop, 1935). Thus, congruency effects decrease substantially when switching from a mostly congruent to a mostly incongruent block because (a) the new contingencies result in a smaller congruency effect, and (b) the added practice also reduces the congruency effect. In contrast, congruency effects increase only a small amount when switching from a mostly incongruent block to a mostly congruent block because (a) the new contingencies result in a larger congruency effect, but (b) the added practice partially offsets this increase by reducing the congruency effect.
It was demonstrated that reanalysis of the asymmetric list shifting data with a control for practice-based reductions in the congruency effect eliminated the asymmetry (Schmidt, 2016b). In the same paper, it was further demonstrated that the PEP model successfully reproduces the asymmetric list shifting effect, because both practice curves and contingency effects are a result of a single, similarity-based episodic retrieval mechanism.
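The practice argument can be checked with a toy calculation in which the congruency effect has three hypothetical parts: an asymptotic effect, a contingency component (+ in mostly congruent blocks, − in mostly incongruent blocks), and a practice component that decays exponentially over trials. This is a sketch under assumed parameter values, not the reanalysis of Schmidt (2016b) or the PEP model.

```python
import math

ASYMPTOTE = 40.0     # hypothetical congruency effect after extensive practice (ms)
PRACTICE_AMP = 60.0  # hypothetical extra effect at the start of practice (ms)
DECAY = 0.01         # hypothetical exponential practice rate (per trial)
CONTINGENCY = 15.0   # hypothetical contingency contribution (+ MC, - MI; ms)
BLOCK = 200          # hypothetical trials per block


def effect_at(trial, mostly_congruent, include_practice=True):
    """Congruency effect (ms) on a given trial of the experiment."""
    effect = ASYMPTOTE + (CONTINGENCY if mostly_congruent else -CONTINGENCY)
    if include_practice:
        # Initially slow (incongruent) trials have more room to speed up,
        # so this component shrinks exponentially with practice.
        effect += PRACTICE_AMP * math.exp(-DECAY * trial)
    return effect


def list_shift(first_mostly_congruent, include_practice=True):
    """Change in mean congruency effect from block 1 to block 2."""
    block1 = [effect_at(t, first_mostly_congruent, include_practice)
              for t in range(BLOCK)]
    block2 = [effect_at(t, not first_mostly_congruent, include_practice)
              for t in range(BLOCK, 2 * BLOCK)]
    return sum(block2) / BLOCK - sum(block1) / BLOCK


print(list_shift(True), list_shift(False))               # asymmetric
print(list_shift(True, False), list_shift(False, False))  # -30.0 30.0
```

With the practice term removed, the two list shifts are equal and opposite, as the simple contingency account predicts; with it included, the shift from mostly congruent to mostly incongruent is a large decrease, whereas the reverse shift is only a small increase.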

Indeed, it would be surprising if the asymmetric list shifting effect were, in fact, measuring conflict monitoring. Past results with both the ISPC and LLPC procedures strongly suggest that if conflict monitoring occurs at all, then it should decidedly not occur when strong contingencies are present in the design (Bugg, 2014; Bugg & Chanani, 2011; Bugg & Hutchison, 2013; Bugg, Jacoby, et al., 2011). Thus, the asymmetric list shifting effect serves as another example of a finding that seemed to fit very well with a conflict monitoring perspective while (apparently) ruling out simple learning confounds. However, on closer inspection, assessment of learning biases in the task not only provides strong evidence for a source of confounding but also seemingly explains away the whole effect.

Another highly related conclusion regarding practice confounds was reached in another recent report. In some initial work, Sheth et al. (2012) observed that CSEs were eliminated in patients after ablation of the anterior cingulate cortex (ACC; cingulotomy). This was claimed to support the notion that the ACC is responsible for conflict monitoring. A recent study (van Steenbergen, Haasnoot, Bocanegra, Berretty, & Hommel, 2015) revealed, however, that the same CSE with the same procedure is eliminated simply through practice (i.e., present during pretest, but not during posttest), without cingulotomy. In other words, practice alone was sufficient to eliminate the CSE.

Another interesting finding comes from Whitehead, Brewer, Patwary, and Blais (2016; see also, Levin & Tzelgov, 2016). In this paper, the authors made use of the colour-word contingency learning paradigm (Schmidt, Crump, Cheesman, & Besner, 2007) in two variants. In the typical colour-word contingency learning paradigm, participants respond to the print colour of colour-unrelated neutral words. Each word is presented most often in one colour (e.g., “move” most often in blue, “sent” most often in green), and performance is found to be robustly faster and more accurate to high contingency trials (e.g., “move” in blue), where the word is presented in the frequently paired colour, relative to low contingency trials (e.g., “move” in green), where the word is presented in an infrequently paired colour. Thus, this task provides a straightforward measure of contingency learning. Whitehead and colleagues also included a between-group condition in which the neutral words were replaced with incongruent colour words (see also, Musen & Squire, 1993) and contingencies were manipulated in the same way (e.g., “red” most often in blue). A robust contingency effect was observed for both the neutral word contingency manipulation and for the incongruent word contingency manipulation. Critically, however, the contingency effect was robustly smaller for incongruent stimuli. The authors argued that this finding is problematic for the simple contingency learning view of (item-specific) PC effects, because it is not clear why the effect should be reduced for incongruent stimuli. Indeed, we might even expect that the effect should be increased due to scaling with longer incongruent response times (e.g., see Schmidt, 2016b; Schmidt & De Houwer, 2016b; Schmidt et al., 2016).
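The structure of the colour-word contingency learning paradigm can be sketched as a list generator plus the contingency-effect contrast. The words “move” and “sent” follow the examples in the text; “make”, the 8:1 frequency ratio, and the helper names are hypothetical. The incongruent-word variant would follow the same logic with colour words as the distracters.

```python
import random

# Hypothetical word -> high-contingency colour mapping ("make" is an
# illustrative third item, not taken from the original study).
HIGH = {"move": "blue", "sent": "green", "make": "red"}
COLOURS = ("blue", "green", "red")


def make_trials(reps_high=8, reps_low=1, seed=0):
    """Each word appears reps_high times in its paired colour and reps_low
    times in each other colour; returns shuffled (word, colour, is_high)."""
    rng = random.Random(seed)
    trials = []
    for word, high in HIGH.items():
        trials += [(word, high, True)] * reps_high
        for colour in COLOURS:
            if colour != high:
                trials += [(word, colour, False)] * reps_low
    rng.shuffle(trials)
    return trials


def contingency_effect(trials, rts):
    """Mean RT on low-contingency trials minus mean RT on high-contingency trials."""
    high = [rt for (_, _, is_high), rt in zip(trials, rts) if is_high]
    low = [rt for (_, _, is_high), rt in zip(trials, rts) if not is_high]
    return sum(low) / len(low) - sum(high) / len(high)


trials = make_trials()
print(len(trials), sum(is_high for _, _, is_high in trials))  # 30 24
```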

Whitehead et al. (2016) interpreted their results as evidence for conflict-mediated learning (see Verguts & Notebaert, 2008, 2009), a variant of the conflict monitoring account. In particular, for participants experiencing incongruent-trial conflict, learning of the (target) colour-to-response links is boosted, thus decreasing the influence of the contingency via the distracting word. The authors additionally point out (rightly) that the PEP model, while able to simulate simple ISPC effects (Schmidt, 2013a), has no provisions that allow it to simulate decreased contingency effects for incongruent stimuli. There is, however, at least one alternative interpretation of this asymmetry in contingency learning effects, which also suggests that the comparison of neutral and incongruent contingency learning is entirely unrelated to ISPC effects.

In particular, it is noteworthy that in all variants of the PC procedure the task includes, on at least some meaningful proportion of the trials, conflicting stimuli. That is, the distracting words (e.g., “blue”) correspond to potential (colour) responses, which are sometimes incompatible with the response that actually needs to be made (e.g., the red response). In the neutral-word contingency learning procedure, the situation is entirely different. While neutral words might engender some task conflict (Goldfarb & Henik, 2007; Levin & Tzelgov, 2014, 2016; MacLeod & MacDonald, 2000), they should not engender response conflict. That is, there is no need to filter (Dishon-Berkovits & Algom, 2000; Garner, 1974; Melara & Algom, 2003) the distracting word at all. It may be supposed that attention to the word is, in fact, strategically minimized in a task containing incongruent stimuli. This is, of course, similar in at least one respect to the conflict monitoring notion, in that attention is proposed to be influenced by the presence of conflict. However, critical to the conflict monitoring account of PC effects (and CSEs) is the notion that microadjustments are made with each experience of conflict (or lack thereof). That is, to effectively account for PC effects and CSEs, it must be assumed that participants are making many small shifts in attentional control, and not just one large strategic shift (Footnote 8) when noticing “this task is hard” in a Stroop task after experiencing (or being instructed about) one or two incongruent stimuli.

Note that if this latter “light switch” model (Footnote 9) of attentional control (i.e., “on” in a conflicting filtering task, but relaxed or “off” when no filtering is needed) is correct, then the data of Whitehead et al. (2016) are not necessarily informative about whether attentional control is differentially recruited for mostly congruent versus mostly incongruent stimuli in an ISPC procedure (or in a PC procedure more generally). Both mostly congruent and mostly incongruent tasks require attentional filtering. It could, of course, be argued that attentional filtering is recruited more strongly when the proportion of conflicting trials is high (i.e., the basic question of the present review), but this was not demonstrated in the target study. The suggestion here is that there is no difference in the amount of attentional control between mostly incongruent conditions with very frequent conflict and mostly congruent conditions with (relatively speaking) still frequent conflict (e.g., 1 in 4 trials with 25% incongruent).

While further empirical tests could certainly be directed at testing this notion directly, two lines of research back up this chain of reasoning. First, when contingencies for neutral and incongruent (and congruent) stimuli are manipulated within participants in one large block, the contingency effects for neutral and incongruent (and congruent) stimuli are all of roughly identical magnitude (Hazeltine & Mordkoff, 2014). This fits perfectly with the “light switch” model of attentional control, as both neutral and incongruent contingency effects are tested within the same (conflict-present) filtering task. Second, the notion that the Whitehead et al. (2016) data provide evidence for conflict monitoring contributions to the ISPC effect is inconsistent with all past work that has aimed to dissociate contingency and attentional control contributions to the ISPC effect. The contingency bias has consistently either explained the entire ISPC effect (Hazeltine & Mordkoff, 2014; Schmidt, 2013a) or, at minimum, explained the entire ISPC effect when a strong contingency bias was present in the task (Bugg, 2014; Bugg & Hutchison, 2013; Bugg, Jacoby et al., 2011). Though alternative interpretations are possible (see the ISPC section above), the net evidence clearly suggests that if conflict monitoring occurs at all, then it occurs only when there is no strong contingency bias. Just such a contingency bias was certainly present in the Whitehead and colleagues manipulation: every word (colour or neutral) was 75% predictive of a single high contingency response in a five-choice task (or 50% in the control condition), well above the 20% chance level.
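The arithmetic behind this comparison can be made explicit. The sketch below assumes, for illustration only, that the trials on which a word does not appear in its high contingency colour are spread evenly over the remaining four colours; the text specifies only the high contingency percentages. Exact fractions are used to avoid floating-point rounding, and the function name is hypothetical.

```python
from fractions import Fraction


def contingency_profile(p_high, n_responses):
    """Summarize how predictive a word is of its high contingency response.

    Assumes (hypothetically) that low contingency trials are divided
    evenly among the n_responses - 1 other responses.
    """
    chance = Fraction(1, n_responses)
    p_low_each = (1 - p_high) / (n_responses - 1)
    return {
        "chance": chance,
        "p_high": p_high,
        "p_low_each": p_low_each,
        # How many times more likely the high contingency response is
        # than a response chosen at chance.
        "ratio_to_chance": p_high / chance,
    }


for label, p in [("experimental", Fraction(3, 4)), ("control", Fraction(1, 2))]:
    profile = contingency_profile(p, n_responses=5)
    print(label, {k: str(v) for k, v in profile.items()})
```

Under these assumptions, the high contingency response is 15/4 (3.75) times more likely than chance in the 75% condition and 5/2 (2.5) times more likely in the 50% condition, while each individual low contingency colour falls below chance (1/16 and 1/8, respectively).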

Making sense of inconsistencies

Some of the cleanest and most straightforward dissociations between simple learning effects and conflict monitoring have produced very robust evidence of learning confounds and no hint at all of residual conflict adaptation effects (even with reasonably powered sample sizes, and often with strong Bayesian evidence for the null). It is therefore puzzling that many other reports produce very robust PC effects or CSEs that are (at first blush) similarly free of confounds. Though one might be tempted to assume either (a) drastically inflated Type II error rates in the former studies or (b) drastically inflated Type I error rates in the latter studies, this does not seem the most likely explanation of the inconsistency. Indeed, there are two other highly plausible interpretations. First, conflict monitoring may exist but show itself only under certain circumstances. Second, all evidence for conflict monitoring may really be confounds in disguise, with the confounds in a given series of experiments simply being less obvious at first glance. The following discussion considers each of these alternatives in turn.

According to one view, which can be termed the last resort view (Bugg, 2014), conflict-driven attentional control does exist, but it is engaged only when other predictive strategies fail. For instance, if there is a strong contingency bias in the task, then participants will attend to the words and use them to anticipate responses, rather than adapting attention away from the words when experiencing conflict. Only if there are no contingencies in the task, or if the contingencies are too weak, do participants switch to an attentional control strategy. The broader notion is that attentional strategies are costly/effortful to implement, so the system resorts to attentional control processes only when simpler predictive strategies fail. This account would therefore explain why very straightforward dissociation procedures (e.g., Hazeltine & Mordkoff, 2014; Schmidt, 2013a) find very robust evidence for contingency learning biases but no additional evidence for conflict adaptation: the contingency bias has prevented conflict adaptation.

The idea that conflict adaptation is observable only in the restricted case in which predictive information, such as a contingency bias, is eliminated has some plausibility. When predictive information is present, the contingency is the most useful cue in the task for aiding performance. If “distracting” stimuli effectively cue the probable responses, then the optimal strategy is to attend to these predictive cues (even if the stimuli are mostly incongruent). Adjusting attention away from this predictive information when experiencing conflict might therefore be suboptimal. When predictive information is absent, participants might seize on the only remaining task information: more abstract congruency information. This does, however, add a homunculus (i.e., prioritization of two different attentional strategies based on contingency perception) on top of a homunculus (i.e., conflict monitoring), unless a single process can be proposed that achieves both functions.

If the last resort account is correct, then a bold and shocking conclusion follows logically from it. Namely, if conflict monitoring is only the last resort, then the vast majority of prior reports (including the original reports of PC effects and CSEs) on which the conflict adaptation account was based did not produce any genuine conflict adaptation. That is, the vast majority of past reports included exactly the sort of learning biases that, by last resort logic, should have made conflict monitoring impossible. In other words, the last resort argument might seem to “save” conflict monitoring from the chopping block, but it implies that most of the literature offered as evidence for conflict monitoring did not actually observe it (i.e., the account was right, but conflict adaptation has been observed only very recently, in carefully controlled and decidedly more complex reports). This is, of course, a surprising and provocative suggestion, but it is the only one that can follow from last resort logic.

Not only is this account surprising, but it also seems to undermine much of what has been claimed about conflict adaptation. For instance, studies of the brain correlates of conflict adaptation must necessarily have been measuring something else (i.e., learning biases). If the ISPC effect was found to correlate with ACC activation (e.g., Blais & Bunge, 2010), but the task included a strong contingency bias, then this ACC activation actually had nothing to do with conflict adaptation. Instead, the ACC BOLD signal may be indexing contingency learning (for supportive evidence, see Grandjean et al., 2013). Alternatively, of course, something completely unrelated might be responsible for the correlation (e.g., Grinband et al., 2011a, 2011b; cf. Yeung et al., 2011), as discussed in the previous review (Schmidt, 2013b). Indeed, if the last resort account is correct, then there is no good evidence at all that ACC, DLPFC, or other brain regions are related to conflict adaptation (Footnote 10). In fact, this is a more general problem for the purported brain–behaviour links in the attentional control domain, as confounds have been consistently present in neuroscience papers on the topic (and, more generally, we have no idea what areas like the ACC actually do, as they apparently do everything; Gage, Parikh, & Marzullo, 2008). However, the last resort account adds a further complication: confounds cannot even be controlled after the fact (e.g., by deleting stimulus repetitions from the analysis); rather, they must be absent from the design in the first place. Similarly, almost all the behavioural evidence about how and when conflict monitoring occurs, and what factors modulate it, must also be wrong. Instead, such studies tell us only about how and when the learning/memory biases in the tasks occur and what factors modulate them.

Consider another example. The CSE has recently been found to be observable in certain tasks (especially robustly in the prime-probe task) independent of feature repetition and contingency biases (Schmidt & Weissman, 2014). Perhaps these CSEs are driven by conflict adaptation (but see the Congruency Sequence Effect section above). If so, it is nevertheless the case that almost every experiment conducted on the CSE did not measure conflict adaptation at all, including the original report by Gratton and colleagues (Gratton et al., 1992; Footnote 11). This is because almost every earlier experiment was heavily affected by contingency and/or binding biases, which, according to the last resort view, should have prevented conflict monitoring. Our knowledge about CSEs would therefore be far less rich than we think, limited to a very small number of recently conducted experiments. If so, this would be a surprising realization. There are also data inconsistent with this notion. For instance, Weissman, Hawks, and Egner (2016) report data suggesting that, if anything, control processes might contribute more to the CSE in a task where feature binding biases are present. In particular, the CSE was larger in the presence of binding biases, even after controlling for binding biases. As these authors point out, this is the exact opposite of what the last resort account should predict.
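For readers less familiar with how the CSE is quantified, one common definition is the congruency effect following congruent trials minus the congruency effect following incongruent trials, so that a positive value is the pattern usually attributed to conflict adaptation. The Python sketch below computes it from a trial sequence and, optionally, applies one simple control for feature-repetition (binding) biases by dropping any trial whose word or colour label matches the word or colour of the preceding trial. The function name and the tuple format are hypothetical, published studies use other controls as well (e.g., designs in which such repetitions cannot occur), and this is not the analysis code of any study cited here.

```python
from statistics import mean


def congruency_sequence_effect(trials, exclude_repetitions=True):
    """Return (effect after congruent, effect after incongruent, CSE).

    trials: (word, colour, rt) tuples in presentation order, where a trial
    is congruent when the word names its own print colour.
    """
    # Cells keyed by (previous congruency, current congruency).
    cells = {(p, c): [] for p in ("C", "I") for c in ("C", "I")}
    for (prev_word, prev_colour, _), (word, colour, rt) in zip(trials, trials[1:]):
        # Simple binding control: skip trials whose word or colour label
        # matches either the word or the colour of the preceding trial.
        if exclude_repetitions and {word, colour} & {prev_word, prev_colour}:
            continue
        prev_cong = "C" if prev_word == prev_colour else "I"
        curr_cong = "C" if word == colour else "I"
        cells[(prev_cong, curr_cong)].append(rt)

    for cell, rts in cells.items():
        if not rts:
            raise ValueError(f"no usable trials in cell {cell}")
    m = {cell: mean(rts) for cell, rts in cells.items()}

    effect_after_c = m[("C", "I")] - m[("C", "C")]
    effect_after_i = m[("I", "I")] - m[("I", "C")]
    # Positive CSE: smaller congruency effect after incongruent trials.
    return effect_after_c, effect_after_i, effect_after_c - effect_after_i
```

Under this definition, a negative congruency effect following incongruent trials (as in Weissman, Egner, et al., 2015) would appear as a negative second value in the returned tuple.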

An alternative to the last resort account, of course, is simply that conflict monitoring does not exist at all. Instead, the data that seem to support the conflict monitoring account might be the result of learning biases such as those described in the present review (or of other processes not yet imagined). The alternative “learning view” of PC effects and CSEs remains viable for two key reasons. The first is parsimony. The learning view appeals exclusively to learning/memory principles that must be true regardless of whether conflict monitoring is also real. The idea that we are influenced by our learning history, responding faster and more accurately to stimulus compounds that frequently co-occurred in the past (e.g., word-colour or even word-location-colour compounds), is certainly not controversial. Nor is the notion that recent co-occurrences of stimuli bias us to repeat the responses we made to the same stimuli (i.e., binding or feature integration effects), which even follows logically from the same principles used to explain contingency learning effects (see Schmidt et al., 2016). Although mechanistic accounts vary in their exact assumptions, timing biases (i.e., temporal learning) in highly repetitive tasks are also well known (e.g., see Grosjean, Rosenbaum, & Elsinger, 2001, for a review). Given that so much of what is observed in the attentional control literature follows directly from these principles alone, conflict monitoring theory bears the burden of proof for demonstrating extra explanatory power.
Second, while much of the existing data could be interpreted in multiple ways (i.e., due to the presence of uncontrolled confounds), the cleanest dissociation procedures have predominantly pointed toward either true null effects (Hazeltine & Mordkoff, 2014; Schmidt, 2013a, 2016b, 2017; Schmidt & De Houwer, 2011; Schmidt & Lemercier, 2018) or, at least, findings that directly contradict attentional control logic (e.g., the negative congruency effects following incongruent trials in Weissman, Egner, et al., 2015).