Abstract

The last decade has seen a dramatic rise in the number of studies that utilize the probe-caught method of collecting mind-wandering reports. This method involves stopping participants during a task, presenting them with a thought probe, and asking them to choose the appropriate report option to describe their thought-state. In this experiment we manipulated the framing of this probe, and demonstrated a substantial difference in mind-wandering reports as a function of whether the probe was presented in a mind-wandering frame compared with an on-task frame. This framing effect has implications both for interpretations of existing data and for methodological choices made by researchers who use the probe-caught mind-wandering paradigm.

Similar content being viewed by others

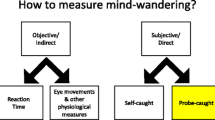

Mind-wandering is said to occur when instead of paying attention to the task at hand, one engages in task-unrelated thoughts (Smallwood & Schooler, 2006). Research on mind-wandering has exploded in the past decade: comparing 1996–2005 with 2006–2015, according to Google Scholar there were four and 377 articles, respectively, with the term “mind-wandering” in the title in each 10-year period (see also Callard, Smallwood, Golchert, & Margulies, 2013). Along with this exponential growth in output, a methodological fragmentation has occurred: there is no consensus as to how mind-wandering is measured. The present experiment, which focuses on the probe-caught method of measuring mind-wandering, demonstrates that this inconsistency could be problematic to the field.

The probe-caught method of collecting mind-wandering data involves asking participants to report their thought-state at various points throughout a task. Any study that uses this methodology can be identified by the use of “probes,” i.e., questions about the participants’ thought-state, which interrupt a task and provide the participants with a number of options to describe what they are currently attending to. A recent review by the first author (Weinstein, in press) revealed that amongst 145 studies that used the probe-caught method in the last decade, there were at least 69 different probe- and response-option variants. In that review, the variants were classified into four main categories: dichotomous yes/no, where one thought-state (e.g., “mind-wandering”) was presented and participants indicated whether it applied to them in the current moment by answering yes or no (e.g., Risko, Anderson, Sarwal, Engelhardt, & Kingstone, 2012); dichotomous (two thought-state), where participants chose between two contrasting thought-states (usually on-task vs. mind-wandering, e.g., Forster & Lavie, 2009); categorical, where multiple thought-states were presented (e.g., “The lecture,” “The time/The computer,” or “Something else”; Risko, Buchanan, Medimorec, & Kingstone, 2013); and scale, where participants indicated their thought-state along a continuum (e.g., a 6-point Likert scale from on-task to off-task; Morrison, Goolsarran, Rogers, & Jha, 2013). Of most interest to the current experiment, a contrasting pattern emerged between the dichotomous yes/no studies and the dichotomous (two thought-state) studies: in the first case, almost all (29/31) studies asked participants to indicate whether they were mind-wandering (as opposed to whether they were on-task); whereas in the second case, all (25/25) studies asked participants whether they were on-task or mind-wandering, in that specific order (that is, on-task always came first).

In the current experiment, we ask: does it matter how the mind-wandering probe is framed? According to the research by Tversky and Kahneman (1981) on framing effects, it could. That is, we might expect participants to report more mind-wandering when they are asked whether they are mind-wandering than when they are asked whether they are on-task. There is already some indication that demand characteristics could be at play in mind-wandering paradigms: when Vinski and Watter (2012) exposed a group of participants to an honesty prime, these participants reported mind-wandering less frequently than control participants. This finding led the authors to suggest that perhaps baseline rates of mind-wandering reflect artificial inflation due to demand characteristics. Another source of inflation could be present in the studies that ask participants whether they are mind-wandering, as compared with those that ask participants to choose between on-task and mind-wandering thought-states. In the current experiment, we asked participants whether they were mind-wandering or, conversely, whether they were on-task ten times during the course of a 20-min reading task. To preface the findings, we found a striking difference between conditions in terms of the rates of reported mind-wandering.

Method

Participants

We recruited participants from the undergraduate participant pool at the University of Massachusetts Lowell. Participants were 110 undergraduates, and included 24 females (22%); average age was 19.4 years (SD = 2.0). We recruited students from a variety of schools across campus, including 32 participants (29%) from the Business school; 29 participants (26%) from the College of Fine Arts, Humanities, and Social Sciences; 17 participants (16%) from the Sciences; 15 participants (14%) from the Engineering School; and five participants (5%) from Health Sciences. Eighteen participants (16% of the sample) were not native English speakers, and had spoken English for an average of 9.7 years (SD = 5.7 years).

Participants received credit towards their General Psychology course for participation. The procedure lasted 1 h. Participants were tested in groups of 11–18 students in a classroom, with four sessions in the mind-wandering condition and four sessions in the on-task condition, so the version of the experiment participants received depended on which session they signed up for. Participants were not aware of the two different conditions when they signed up for the experiment. Due to random fluctuations in participant sign-ups and attendance, we ended up recruiting 60 participants in the mind-wandering condition and 50 participants in the on-task condition.

Design

We used a between-subjects design with one independent variable: probe framing (mind-wandering/on-task). The procedure was identical for the two conditions, with the exception of the probe- and response-option text.

Materials

For the reading task, we adapted a transcript of a TED talk on ecology given by theoretical physicist Geoffrey West, found at www.ted.com. We edited the transcript to be more readable by including screenshots of the figures the text referred to, which we took from the video of the talk, at www.youtube.com.

We constructed a test that consisted of 20 questions, which could each be answered with a word or short phrase (see Appendix A). Test questions were in the cued recall format (no answer options were given). Based on pilot data, we expected a mean accuracy of 50% on the test.

Participants used Turning Technology clickers to report mind-wandering during the reading task. We adapted the Java-based API to present a PowerPoint slide at a variable interval and accept buttons 1 or 2 on the clicker as responses. The Java program allowed the experimenter to set the following parameters: task length, number of probes, minimum interval between probes, and maximum interval between probes (see Procedure section for the settings we used). The program recorded each button press with a timestamp and the clicker number. Probe responses were later matched to test data and demographics by the clicker number and session date and time.

For the probes, we created two PowerPoint slides that differed only in terms of wording (see Fig. 1a and b).

Procedure

The experiment consisted of a 1-min practice phase, a 20-min reading phase, a 5-min retention interval, and a 10-min test. When participants arrived at the classroom, they were asked to take a seat at a desk with a packet and a clicker. When either all participants had arrived or it was 5 min past the session start time, the experimenter locked the classroom door and no further participants were admitted. Participants were asked to silence their phones and put them away. The experimenter then gave the following instructions:

“In this experiment, you will be reading a transcript of a lecture on ecology, and then you will be quizzed on it. It is 12 pages long. You will have 20 minutes to read it at your own pace. If you finish reading and the 20 minutes is not over, please start again at the beginning.”

Participants were asked not to write on the transcript. The experimenter then gave a description of mind-wandering, without mentioning the term itself (the same exact description was given in the two framing conditions):

“At any one point during reading, your attention may be focused on the text, or on something else. For example, you might be thinking about shopping, about interacting with your loved ones, or even about how bored you are.”

Participants were then told that they each had a clicker (which was on the desk in front of them), and that while they were reading, they would hear a ding, at which point a question would pop up on the screen. Participants were shown an example of the probe on the screen at the front of the class. The probe was identical to those that participants would receive throughout the procedure, and differed by framing condition (mind-wandering/on-task; see Fig. 1a and b). The experimenter read out the text of the probe. Participants were asked to use only buttons 1 and 2 corresponding to the two possible response options to respond, and were given a practice phase to familiarize themselves with the clickers. For the practice phase, participants were told that they would hear a ding, at which point a probe would appear on the screen that they should answer using button 1 or 2. The practice phase lasted 60 s and included three probes at 20-s intervals. Participants were asked to practice responding to the probes; there was no additional task for participants to engage in during the practice.

After the practice phase, participants were asked to turn to the packet and start reading. The experimenter deployed the program with the following parameters: 20-min task, ten probes, minimum probe interval 75 s, and maximum probe interval 135 s. The program then randomized the exact timings of each probe based on these parameters, with a different set of randomized probe times for each session. All groups received a probe approximately every 2 min during the reading task, for a total of ten probes across 20 min.
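The text does not describe how the program randomized probe times, so the following is only a minimal sketch of one way to do it under the stated parameters. It assumes each gap between probes is drawn uniformly between the minimum and maximum interval, and that a schedule is redrawn if the last probe would fall after the end of the task. The function name `sample_probe_times` and its arguments are hypothetical.

```python
import random


def sample_probe_times(task_s=1200, n_probes=10, min_gap_s=75, max_gap_s=135, rng=None):
    """Return probe onset times (seconds from task start) for one session.

    Assumption: gaps are uniform on [min_gap_s, max_gap_s]; schedules that
    run past the end of the task are rejected and redrawn.
    """
    if n_probes * min_gap_s > task_s:
        raise ValueError("probes cannot fit in the task even at the minimum interval")
    rng = rng or random.Random()
    while True:
        times, t = [], 0.0
        for _ in range(n_probes):
            t += rng.uniform(min_gap_s, max_gap_s)
            times.append(t)
        if times[-1] <= task_s:
            return times


if __name__ == "__main__":
    # A different seed per session gives a different set of probe times.
    print([round(t) for t in sample_probe_times(rng=random.Random(1))])
```

With these settings almost every draw is accepted, so the rejection step rarely repeats.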

After the 20-min reading time had elapsed, the experimenter announced that the reading phase was over, and collected the transcripts and clickers. At this point, participants were asked to turn to the next section of their packet and complete a crossword puzzle (5-min distractor) before answering the test questions. Participants had 10 min to complete the 20-question test. After the test, participants completed a demographic questionnaire that also included questions about how interesting they found the lecture transcript (5-point Likert scale from “not at all interesting” to “very interesting”) and how difficult they found reading the lecture transcript (5-point Likert scale from “not at all difficult” to “very difficult”).

Results

Data scoring and cleaning

Participants’ mind-wandering probe response data were matched to their test and demographic data by an Excel macro via clicker number and session date and time. Tests were hand-scored using a rubric created by the authors, with each question scored out of 3 points. The rubric allowed for 0, 1, 2, or 3 points to be assigned to each answer, and is presented in Appendix B. Thus, total scores on the test could range from 0 to 60. Scores were converted to percentages for analyses. Tests were scored either by the second author or a research assistant. Forty-three of the tests were scored by both to determine inter-rater reliability, which was computed on overall test scores and found to be very high (r = .97, p < .001).

For the probe responses, in cases where more than one button press was recorded in response to a probe, only the first response was counted in the analyses; we removed 70 duplicate button presses out of 939 data points (7.5%). Eighteen participants (six in the on-task condition and 12 in the mind-wandering condition; 16% of the sample) responded to fewer than half of the probes, and they were eliminated from the analyses. After removing these participants, we ended up with 44 participants in the on-task framing condition and 48 participants in the mind-wandering framing condition. As a sensitivity test we also performed the reported analyses without excluding any participants’ data; this did not meaningfully change any of the results reported below.
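The two cleaning rules above can be sketched as follows. This is an illustration only: it assumes each recorded press has already been assigned to a probe, and the record layout `(clicker_id, probe_idx, timestamp, button)` and both function names are hypothetical.

```python
def first_presses(presses):
    """Keep only the earliest button press per (clicker, probe).

    presses: iterable of (clicker_id, probe_idx, timestamp, button).
    Returns a dict mapping (clicker_id, probe_idx) -> button.
    """
    first = {}
    for clicker, probe, _ts, button in sorted(presses, key=lambda p: p[2]):
        # setdefault ignores later (duplicate) presses for the same probe
        first.setdefault((clicker, probe), button)
    return first


def retained_participants(first, n_probes=10):
    """Return clickers that answered at least half of the probes."""
    counts = {}
    for clicker, _probe in first:
        counts[clicker] = counts.get(clicker, 0) + 1
    return {c for c, n in counts.items() if n >= n_probes / 2}


if __name__ == "__main__":
    demo = [("A", i, 100 * i, 1) for i in range(5)] + [("A", 0, 5, 2)]
    cleaned = first_presses(demo)
    print(len(cleaned), retained_participants(cleaned))
```

Participants with no recorded presses never appear in the dictionary, so they are excluded by construction.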

Probe responses

Button clicks were coded as mind-wandering or on-task with mappings that differed by condition: in the mind-wandering condition, button 1 represented mind-wandering and button 2 represented on-task, whereas in the on-task condition, the reverse was true. Figure 2 presents the split of responses amongst three possibilities in the two conditions: mind-wandering, on-task, or skipped probe. For the analyses, we compared the response rate (all responses regardless of whether they were on-task or mind-wandering, which can be seen in Fig. 2 by summing the grey and white portions of each bar); and the mind-wandering rate (grey portions of Fig. 2).
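As an illustration of this coding step, the sketch below maps button presses to thought-states by condition and computes the three per-participant quantities used in the analyses. The names `MAPPING` and `summarize` are hypothetical, and the input is assumed to be the first button press for each answered probe, with skipped probes simply absent.

```python
# Button-to-state mapping, reversed between the two framing conditions.
MAPPING = {
    "mind-wandering": {1: "mind-wandering", 2: "on-task"},
    "on-task": {1: "on-task", 2: "mind-wandering"},
}


def summarize(condition, buttons, n_probes=10):
    """Summarize one participant's probe responses.

    buttons: first button press (1 or 2) for each answered probe.
    """
    coded = [MAPPING[condition][b] for b in buttons]
    mw = coded.count("mind-wandering")
    return {
        "response_rate": len(coded) / n_probes,          # answered / all probes
        "mw_per_probe": mw / n_probes,                   # mind-wandering / all probes
        "mw_per_answered": mw / len(coded) if coded else None,
    }


if __name__ == "__main__":
    print(summarize("on-task", [1, 1, 2, 1, 2, 1, 1, 1]))
```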

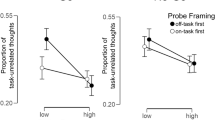

Table 1 presents means and standard deviations in each framing condition for the test scores, and self-reported mind-wandering, interest, and difficulty. Mind-wandering reports differed significantly between conditions: Participants reported mind-wandering on 3.40 of the ten probes in the mind-wandering condition, but on only 2.30 of the ten probes in the on-task condition; t(90) = 2.51, p = .01, d = 0.52 [0.11, 0.94]. As an additional check, the same analysis was also performed on the proportion of answered probes, rather than proportion of all probes; participants reported significantly more mind-wandering as a proportion of answered probes in the mind-wandering condition (37% vs. 26% in the on-task condition); t(90) = 2.18, p = .03, d = 0.45 [0.04, 0.87]. Other than the self-reported rates of mind-wandering, there were no differences between the framing conditions on any of the other variables: test scores, self-reported interest, or self-reported difficulty. In addition, the response rate did not differ significantly between conditions, with an average response rate of 90% (after removal of the 18 participants who responded to fewer than half of the probes); t(90) = 1.07, p = .29, d = 0.22 [−0.19, 0.63].
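The reported effect sizes can be cross-checked from the t statistics and the post-exclusion group sizes (48 and 44). The sketch below uses the standard conversion d = t·sqrt(1/n1 + 1/n2) for independent groups and a large-sample normal approximation for the 95% interval. The interval method is an assumption here, since the text does not state how the intervals were computed, and the function names are hypothetical.

```python
import math


def d_from_t(t, n1, n2):
    """Cohen's d for two independent groups, recovered from the t statistic."""
    return t * math.sqrt(1 / n1 + 1 / n2)


def d_confidence_interval(d, n1, n2, z_crit=1.96):
    """Approximate 95% CI for d using a large-sample standard error."""
    se = math.sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))
    return d - z_crit * se, d + z_crit * se


if __name__ == "__main__":
    for t in (2.51, 2.18, 1.07):
        d = d_from_t(t, 48, 44)
        lo, hi = d_confidence_interval(d, 48, 44)
        print(f"t = {t:.2f}: d = {d:.2f} [{lo:.2f}, {hi:.2f}]")
```

Under these assumptions the recomputed values agree with the effect sizes and intervals reported above to two decimal places.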

Correlates of mind-wandering

Table 2 presents correlations between self-reported mind-wandering and other variables of interest by framing condition. Note that when a correlation is significant in one group but not another, this does not mean that the two correlations differ significantly from each other. We tested whether the correlation coefficients differed between conditions with the Fisher r-to-z transformation, which showed that none of the differences were significant. Other than the relationship between age and self-reported interest (which was not a significant correlation in either framing condition), all correlations were in the same direction in both framing conditions.
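For readers unfamiliar with the Fisher r-to-z comparison, a minimal sketch for two independent correlations follows. The correlation values in the usage example are hypothetical placeholders, not values from Table 2; only the group sizes (48 and 44) come from the text, and the function name is illustrative.

```python
import math


def compare_correlations(r1, n1, r2, n2):
    """Two-tailed test of whether two independent correlations differ.

    Each r is transformed with Fisher's z = atanh(r); the difference is
    divided by its standard error sqrt(1/(n1-3) + 1/(n2-3)).
    """
    z = (math.atanh(r1) - math.atanh(r2)) / math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed p from the standard normal
    return z, p


if __name__ == "__main__":
    # Hypothetical correlations, for illustration only.
    z, p = compare_correlations(0.35, 48, 0.10, 44)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

With groups of this size the test has limited power, which is consistent with the caution that a correlation reaching significance in one condition but not the other does not by itself show that the two correlations differ.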

Discussion

The methods used to collect probe-caught mind-wandering data over the past decade have been extremely heterogeneous, and these differences could be an unexamined source of the variability in levels of reported mind-wandering between studies. One source of variation in methodology that is evident when comparing these studies is the framing of the probe and response options. In the current experiment, we manipulated probe framing by asking participants whether they were mind-wandering or whether they were on task while engaged in a reading task. Asking participants whether they were on task substantially reduced mind-wandering reports compared to the mind-wandering framing, which has been used in 29 of the 31 published studies that asked participants to answer dichotomous yes/no questions about their thought state (Weinstein, in press). Our results suggest that rates of self-reported mind-wandering are prone to fluctuation due to subtle methodological factors. In a previous study that led to a similar conclusion, increasing the rate at which probes were presented during a task decreased mind-wandering reports without affecting an objective measure of mind-wandering (Seli, Carriere, Levene, & Smilek, 2013). Zedelius, Broadway, and Schooler (2015) also showed that participants were able to improve the accuracy of their thought-state reports when incentivized to do so.

Why did this difference in framing lead to different self-reported mind-wandering rates in the current experiment? There are two broad possibilities: one is that the framing affected true rates of mind-wandering, and the other is that the framing artificially affected participants’ answers through a response bias. We first explore the possibility that framing had an impact on actual mind-wandering, which we find to be the less compelling explanation. This explanation assumes that the wording of the probe frame shifts participants’ internal experience of the task. How might this occur? One possibility is that the probe framing sets expectations for how participants ought to experience the task (see Note 1). That is, perhaps being repeatedly probed about whether they were paying attention to the task (in the on-task condition) encouraged participants to actually do so. Meanwhile, in the mind-wandering framing condition, participants may have been reassured that experiencing bouts of inattention from the task was normal, and thus they may have made less of an effort to control their thoughts and return them to the reading.

What evidence do we have that actual rates of mind-wandering differed between the two framing conditions? We would argue that this evidence is lacking. Given the relationship between mind-wandering and test performance, a real increase in mind-wandering should have been accompanied by a decrease in test scores in the mind-wandering framing group, and we found no such evidence. In addition, the lack of difference in number of missed probes between probe-framing conditions, which could be seen as an index of deep mind-wandering, suggests that true mind-wandering rates were similar in the two conditions, and only the self-reports were affected by the framing manipulation. As such, there appears to be no evidence in favor of the hypothesis that the actual rates of mind-wandering differed. Future research could use a vigilance task that includes objective on-line task performance data to help confirm or disprove this hypothesis.

The second, and in our opinion more likely, explanation of the current results is that probe framing affected participants’ responses without affecting true rates of mind-wandering. Satisficing – the tendency to expend minimal cognitive effort on a survey question and settle for a good-enough answer rather than the optimal one (Krosnick, 1991) – provides a simple explanatory mechanism for this effect. Here, satisficing could be occurring either because participants judged the first response option to be a good enough description of their thought state, or because they were agreeing with the statement as it was presented (i.e., acquiescing). Since the first option in both cases also indicated a “yes” response, it is not possible to distinguish between the two forms of satisficing in the current design. In either case, the difference in framing of the probe would be expected to push guesses or low-confidence responses (see Seli, Jonker, Cheyne, Cortes, & Smilek, 2015) into the response option favored by the framing of each condition, thereby increasing mind-wandering responses in the mind-wandering condition. This explanation predicts no difference in test performance between probe-framing conditions, which is what we found in the current study.

Which framing better reflects theoretically expected or desirable qualities? In the mind-wandering condition there was a significant correlation between difficulty and mind-wandering rates, while this correlation was much smaller and not significant in the on-task condition. The correlation is consistent with studies showing an increase in mind-wandering during reading of more difficult texts (Feng, D’Mello, & Graesser, 2013). The fact that we found the correlation in the mind-wandering condition but not in the on-task condition suggests that the former may more accurately represent mind-wandering rates (see Note 2), or at least has theoretically more desirable properties. However, since the difference between these two correlations was not statistically significant, strong conclusions cannot be drawn on this basis. It is also possible that other correlates of mind-wandering, including situational factors and individual differences, may not necessarily correlate with on-task thought – and vice-versa.

Which probe framing should researchers use? The simplest, most parsimonious solution is that the framing of the probe should match the construct of interest. That is, if you are interested in measuring self-reported mind-wandering, then ask participants whether they are mind-wandering; and if you are interested in self-reported task attentiveness, then ask participants whether they are on task. An important reason to do this is that it avoids making the assumption that mind-wandering and on-task states lie on opposite ends of a continuum – an assumption often made (but rarely defended) in the mind-wandering literature. To address this assumption, we recently began an investigation to determine whether a “flow” state might better characterize the opposite of mind-wandering than does an “on-task” state (Weinstein & Wilford, 2016). The results we present in the current experiment suggest that the relationship between mind-wandering and on-task states is not a zero-sum game, and it is not necessarily possible to easily infer one from the other. Further research should address the factors that might play into the choice of primary construct (i.e., mind-wandering or on-task thought), or whether there is a benefit to using mixed designs in which both constructs are measured.

Our results have important implications both for conclusions drawn from existing research and for future research. Researchers and science journalists alike need to be careful when reporting mind-wandering rates from individual papers (e.g., “people reported mind wandering about half the time” [Bloom, 2016] in an article for The Atlantic describing the experience sampling study by Killingsworth & Gilbert, 2010). Even when tasks are similar, seemingly subtle differences in the probe and response option wordings could be affecting self-reported mind-wandering rates. An important point to note is that while we only included two response options at each probe, many past studies have used much longer lists of options (e.g., Frank, Nara, Zavagnin, Touron, & Kane (2015) included eight response options and Ottaviani et al. (2015) included nine response options at each probe). The potential for response bias may increase as the number of response options increases, due to the increasing difficulty of making such judgments (Krosnick, 1991). Researchers planning experiments that utilize probe-caught mind-wandering methods should carefully consider the framing of the probe they choose to use, referring to Weinstein (in press) for an exhaustive list of mind-wandering probe variants currently in the literature.

Notes

1. We thank an anonymous reviewer for this suggestion.

2. We thank reviewer James Farley for this suggestion.

References

Bloom, P. (2016). The reason our minds wander. The Atlantic. Retrieved from http://www.theatlantic.com/science/archive/2016/03/imagination-as-proxy/474918/

Callard, F., Smallwood, J., Golchert, J., & Margulies, D. S. (2013). The era of the wandering mind? Twenty-first century research on self-generated mental activity. Frontiers in Psychology, 4.

Feng, S., D’Mello, S., & Graesser, A. C. (2013). Mind wandering while reading easy and difficult texts. Psychonomic Bulletin & Review, 20, 586–592.

Forster, S., & Lavie, N. (2009). Harnessing the wandering mind: The role of perceptual load. Cognition, 111, 345–355.

Frank, D. J., Nara, B., Zavagnin, M., Touron, D. R., & Kane, M. J. (2015). Validating older adults’ reports of less mind-wandering: An examination of eye movements and dispositional influences. Psychology and Aging, 30, 266–278.

Killingsworth, M. A., & Gilbert, D. T. (2010). A wandering mind is an unhappy mind. Science, 330, 932.

Krosnick, J. A. (1991). Response strategies for coping with the cognitive demands of attitude measures in surveys. Applied Cognitive Psychology, 5, 213–236.

Morrison, A. B., Goolsarran, M., Rogers, S. L., & Jha, A. P. (2013). Taming a wandering attention: Short-form mindfulness training in student cohorts. Frontiers in Human Neuroscience, 7.

Ottaviani, C., Shahabi, L., Tarvainen, M., Cook, I., Abrams, M., & Shapiro, D. (2015). Cognitive, behavioral, and autonomic correlates of mind wandering and perseverative cognition in major depression. Frontiers in Neuroscience, 8.

Risko, E. F., Anderson, N., Sarwal, A., Engelhardt, M., & Kingstone, A. (2012). Everyday attention: Variation in mind wandering and memory in a lecture. Applied Cognitive Psychology, 26, 234–242.

Risko, E. F., Buchanan, D., Medimorec, S., & Kingstone, A. (2013). Everyday attention: Mind wandering and computer use during lectures. Computers & Education, 68, 275–283.

Seli, P., Carriere, J. S., Levene, M., & Smilek, D. (2013). How few and far between? Examining the effects of probe rate on self-reported mind wandering. Frontiers in Psychology, 4.

Seli, P., Jonker, T. R., Cheyne, J. A., Cortes, K., & Smilek, D. (2015). Can research participants comment authoritatively on the validity of their self-reports of mind wandering and task engagement? Journal of Experimental Psychology: Human Perception and Performance, 41, 703–709.

Smallwood, J., & Schooler, J. W. (2006). The restless mind. Psychological Bulletin, 132, 946–958.

Tversky, A., & Kahneman, D. (1981). The framing of decisions and the psychology of choice. Science, 211, 453–458.

Vinski, M. T., & Watter, S. (2012). Priming honesty reduces subjective bias in self-report measures of mind wandering. Consciousness and Cognition, 21, 451–455.

Weinstein, Y. (in press). Mind-wandering, how do I measure thee? Let me count the ways. Behavior Research Methods.

Weinstein, Y., & Wilford, M. M. (2016, November). Mind-Wandering and Flow: Are They Two Sides of the Same Coin? Talk presented at the 57th annual meeting of the Psychonomic Society, Boston, MA.

Zedelius, C. M., Broadway, J. M., & Schooler, J. W. (2015). Motivating meta-awareness of mind wandering: A way to catch the mind in flight? Consciousness and Cognition, 36, 44–53.

Acknowledgements

Fabian Weinstein-Jones created the Excel macro to match mind-wandering data to test scores and demographics. Sowmya Guthikonda adapted the Turning Technology Java-based API to collect mind-wandering responses. This project was funded by a University of Massachusetts Lowell internal Seed Grant. H.J. De L. was funded by the University of Massachusetts Lowell Fine Arts, Humanities, and Social Sciences Emerging Scholars program, and T. v.d. Z. was funded by the Leids Universiteits Fonds. We thank James Farley for extensive and helpful reviewer comments.


Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(PDF 100 kb)


Cite this article

Weinstein, Y., De Lima, H.J. & van der Zee, T. Are you mind-wandering, or is your mind on task? The effect of probe framing on mind-wandering reports. Psychon Bull Rev 25, 754–760 (2018). https://doi.org/10.3758/s13423-017-1322-8


DOI: https://doi.org/10.3758/s13423-017-1322-8