Abstract

Visual perception is altered near the hands, and several mechanisms have been proposed to account for this, including differences in attention and a bias toward magnocellular-preferential processing. Here we directly pitted these theories against one another in a visual search task consisting of either magnocellular- or parvocellular-preferred stimuli. Surprisingly, we found that when a large number of items are in the display, there is a parvocellular processing bias in near-hand space. Considered in the context of existing results, this indicates that hand proximity does not entail an inflexible bias toward magnocellular processing, but instead that the attentional demands of the task can dynamically alter the balance between magnocellular and parvocellular processing that accompanies hand proximity.


Visual attention and perception are core brain processes that allow us to represent and interact with the world around us. It is striking, therefore, that such processes are affected by the proximity of an observer’s hands to visual stimuli. That is, the same object at a fixed distance from the observer will be processed differently depending on whether or not the observer’s hands are adjacent to the object (for reviews, see Brockmole, Davoli, Abrams, & Witt, 2013; Goodhew, Edwards, Ferber, & Pratt, 2015). An outstanding theoretical question, however, concerns the mechanism that underlies this difference in visual processing between the space near the hands (“near-hand space”) and other locations.

Early theoretical accounts of altered visual processing near the hands focused on differences in visual attention as the defining variable of near-hand-space visual processing. Specifically, Abrams, Davoli, Du, Knapp, and Paull (2008) suggested that in near-hand space there is particularly thorough and prolonged processing of, and delayed disengagement from, objects; this is termed the “detailed evaluation” account. Abrams et al. (2008) reported three key pieces of evidence for detailed evaluation. For visual stimuli in near-hand space, they found increased visual search times to identify a target among distractors; reduced inhibition of return (IOR), the period of inhibition applied to a location after attention has been disengaged from it (Klein, 2000); and an exacerbated attentional blink (AB), the deficit in identifying the second of two targets in a rapid stream of stimuli, which persists for several hundred milliseconds after the first target and is intensified by overinvestment of attentional resources in the first target (Arend, Johnston, & Shapiro, 2006; Olivers & Nieuwenhuis, 2005). The slower search times, reduced IOR, and exacerbated AB are all consistent with a tendency to process thoroughly, and a disinclination to disengage attention from, stimuli in near-hand space (Abrams et al., 2008). Critically, this account predicts generic effects on visual attention that vary as a function of task (i.e., whether attention is required) but not as a function of stimulus properties. In other words, all stimuli and objects, irrespective of their properties, should receive detailed evaluation.

More recently, a new theoretical account of altered visual perception in near-hand space was proposed that draws on the physiological properties of the two major classes of visual cells: the magnocellular (M) and parvocellular (P) cells. M and P cells essentially represent a trade-off between temporal and spatial precision in processing visual input. That is, relative to P cells, M cells have faster conduction speeds and larger receptive fields, are more sensitive to high temporal frequencies (changes in luminance across time), and are more sensitive to low spatial frequencies (LSFs; spatial frequency refers to changes in luminance across space). LSFs convey the global shape or “gist” of an object or scene, whereas finer details are carried by high spatial frequencies, which P cells subserve. M cells also possess greater contrast sensitivity, whereas P cells process color (Derrington & Lennie, 1984; Livingstone & Hubel, 1988). In real-world vision, M and P cells collaborate to create and update our dynamic conscious perception of objects and scenes. However, depending on the stimuli or task requirements, the relative contributions of M versus P cells may change.

It has been proposed that near-hand space enjoys enhanced M-cell input, at the expense of P-cell input (Gozli, West, & Pratt, 2012). This account predicts that the perception of objects, and the performance of visual tasks, whose properties match those preferred by M cells should be improved by hand proximity, whereas the performance of tasks whose properties do not match should be impaired. Consistent with this, temporal resolution is enhanced but spatial resolution is impaired in near-hand space, as measured by temporal and spatial gap detection tasks (Gozli et al., 2012). Furthermore, LSFs are preferentially processed in near-hand space, at the expense of high spatial frequencies (HSFs), as measured by orientation identification of centrally presented Gabors (Abrams & Weidler, 2013). Moreover, this spatial-frequency preference is eliminated by diffuse red light (Abrams & Weidler, 2013), which is known to selectively suppress M cells (Breitmeyer & Williams, 1990) because a subset of on-center, off-surround M cells are inhibited by red light falling in their receptive-field surround (Dreher, Fukada, & Rodieck, 1976; Wiesel & Hubel, 1966).

Similarly, object-substitution masking, in which the perception of a briefly presented target is impaired by a temporally trailing but spatially non-overlapping mask (Di Lollo, Enns, & Rensink, 2000), is reduced in near-hand space (Goodhew, Gozli, Ferber, & Pratt, 2013). Object-substitution masking reflects overzealous temporal fusion of the target and mask, which prevents conscious perception of the target (for a review, see Goodhew, Pratt, Dux, & Ferber, 2013), and M cells directly contribute to target perception by facilitating temporal object segmentation (Goodhew, Boal, & Edwards, 2014); this result therefore supports the M-cell account of altered processing near the hands. Finally, color processing is impoverished in near-hand space (Goodhew, Fogel, & Pratt, 2014; Kelly & Brockmole, 2014). Altogether, then, a constellation of evidence implicates enhanced M-cell input, at the expense of P-cell input, to visual processing near the hands (for a comprehensive review, see Goodhew et al., 2015). Note that the predictions of this theory depend critically on the properties of the stimulus (specifically, whether they are M- or P-cell preferred) but are invariant to attentional or task requirements.

Given the accumulating evidence in favor of the M-cell account, how can it be reconciled with Abrams et al.’s (2008) evidence for detailed evaluation in near-hand space? Gozli et al. (2012) suggested that at least the findings of slowed visual search and exacerbated AB could be explained within the M-cell framework, because these tasks used alphanumeric characters. Gozli et al. argued that processing such characters requires encoding fine details, which are carried by HSFs and are therefore P-cell preferred. Given the increased M-cell input in near-hand space, they reasoned that performance on these tasks would suffer with hand proximity, because the stimuli were not M-cell preferred. However, a closer examination of the evidence undermines the assumption that alphanumeric characters are necessarily HSF. Specifically, Abrams et al.’s stimuli appear to have been LSF, and therefore should have been M-cell preferred. The letters measured 3° × 1.5° of visual angle, and stimuli of a similar size (e.g., 1.93° × 2.34°) have been used as the “global” (vs. local) letter in Navon figures (Hubner, 1997), where global letters are thought to enjoy a processing advantage due to their LSF content (Navon, 1977; Shulman, Sullivan, Gish, & Sakoda, 1986).

If Abrams et al.’s (2008) visual search stimuli were truly LSF in nature, rather than HSF as Gozli et al. (2012) assumed, then there are two major interpretations of the slowed search, each with crucial implications for theoretical development in this area. First, the slowed visual search could reflect that detailed evaluation occurs whenever an attentionally demanding task is performed in near-hand space, irrespective of spatial-frequency content. Consistent with this idea, the evidence for the M-cell account to date has largely been limited to tasks that do not tax spatial attention or require multiple shifts of it (e.g., gap detection for centrally presented stimuli, orientation discrimination of centrally presented Gabors), whereas visual search for a feature-conjunction target does (Treisman & Gelade, 1980). Alternatively, an interaction between hand proximity and task properties could affect the balance of M versus P processing, such that when a task is not demanding of spatial attention there is an M-cell bias in near-hand space, whereas when the task is attention demanding (e.g., visual search), this pattern qualitatively shifts to a P-cell bias. This is consistent with evidence that shifting attention to a location results in a P-cell bias of processing at that location (Yeshurun & Levy, 2003; Yeshurun & Sabo, 2012). This would imply that the relative balance of M- and P-cell processing in near-hand space can be dynamically shifted, depending on the nature of the processing required to complete the task at hand. The purpose of the present experiments was to disentangle these possibilities.

Experiment 1

Here we used a task that is demanding of spatial attention (visual search) and varied the spatial frequency of the search arrays to be either M-cell (LSF) or P-cell (HSF) preferential. In this case, the detailed-evaluation account predicts that visual search performance should vary as a function of hand proximity, irrespective of spatial frequency (given the previous literature, this would suggest that detailed evaluation is limited to search-type tasks and does not extend to centrally presented gap detection or orientation identification). The M-cell account, in contrast, predicts that visual search performance in near-hand space should be facilitated for the LSF arrays and impaired for the HSF arrays. Alternatively, if the balance of M versus P processing in near-hand space can be dynamically altered by the processing demands of the task, then visual search should induce a P-cell bias, evidenced by a near-hand-space advantage for HSF arrays relative to LSF arrays.

Method

Participants

The participants were 35 volunteers (24 female, 11 male), recruited from among undergraduate psychology students at the Australian National University and from the Canberra community via a participation website. Participants’ mean age was 22.3 years (SD = 3.8); they provided written informed consent and were compensated for their time.

Stimuli and apparatus

The search arrays consisted of either four (set size 4, SS4) or eight (set size 8, SS8) Gabors arranged in a notional annulus around fixation (7° radius), presented on a gray background. All Gabors within an array had the same spatial frequency: either 1 cycle per degree (cpd; LSF) or 10 cpd (HSF). The LSF Gabors were presented at 5% contrast (exploiting the superior contrast sensitivity of M cells), whereas the HSF Gabors were presented at 100% contrast (see Fig. 1). Participants responded with two computer mice, which were attached with Velcro either to the left and right sides of the computer screen (placing the visual stimuli on the screen in “near-hand space”) or to the left and right sides of a board placed on the participants’ lap, under the table on which the computer sat (“far-hand space”; see Fig. 2).
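The two stimulus classes differ only in carrier spatial frequency and contrast. As a minimal sketch, assuming a standard cosine carrier under a circular Gaussian envelope, the luminance of one Gabor can be written as below. The envelope width (`sigma_deg`), the phase, and the orientation sign convention are illustrative assumptions, since the Method does not report them, and this is not the authors' Psychophysics Toolbox code.

```python
import math


def gabor_luminance(x_deg, y_deg, sf_cpd, contrast, sigma_deg,
                    orientation_deg=0.0, phase=0.0, mean_lum=0.5):
    """Luminance (0..1) of a Gabor patch at (x_deg, y_deg), in degrees of
    visual angle from the patch centre.

    sf_cpd          carrier spatial frequency in cycles per degree
    contrast        Michelson contrast of the carrier (0.05 or 1.0 here)
    sigma_deg       SD of the Gaussian envelope (assumed; not reported)
    orientation_deg 0 = vertical stripes, 90 = horizontal stripes; the sign
                    of a +/-15 deg tilt depends on the y-axis convention (assumed)
    mean_lum        gray background level
    """
    theta = math.radians(orientation_deg)
    # Position along the axis perpendicular to the stripes.
    x_rot = x_deg * math.cos(theta) + y_deg * math.sin(theta)
    carrier = math.cos(2 * math.pi * sf_cpd * x_rot + phase)
    envelope = math.exp(-(x_deg ** 2 + y_deg ** 2) / (2 * sigma_deg ** 2))
    # Modulate around the background so the patch fades into the gray screen.
    return mean_lum * (1 + contrast * carrier * envelope)


def cycles_across_patch(sf_cpd, size_deg):
    """Number of carrier cycles spanning a patch of the given size."""
    return sf_cpd * size_deg


# The two stimulus classes described in the Method.
LSF = {"sf_cpd": 1.0, "contrast": 0.05}   # M-cell preferred
HSF = {"sf_cpd": 10.0, "contrast": 1.0}   # P-cell preferred
```

Sampling the centre at phase 0 and phase π gives the peak and trough, whose Michelson ratio recovers the nominal contrast (0.05 for LSF, 1.0 for HSF). A 2.1° patch contains about 2 carrier cycles at 1 cpd but 21 at 10 cpd, which is what makes the HSF items carry fine detail.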

Fig. 1. Illustration of a low-spatial-frequency, set size 8 search display in Experiment 1. The Gabors within the array could be small (2.1°) or large (4.3°) and could be oriented 15° off vertical (to the left or right) or horizontal. In this example, the target Gabor is the second from the top on the left, as it is the largest, closest-to-vertically oriented Gabor. Here the correct response would be “right,” since this Gabor is tilted to the right of vertical.

Fig. 2. Illustration of the setup in the near-hand space and far-hand space conditions. In the near-hand space condition, the response mice (and therefore the participants’ hands) were positioned approximately 20 cm from the center of the screen (and therefore the visual stimuli); in the far-hand space condition, the mice were at approximately 50 cm horizontal and 55 cm vertical separation from the center of the screen.

Viewing distance was fixed with a chinrest at 44 cm. The stimuli were created using the Psychophysics Toolbox extension in MATLAB and were presented on a gamma-corrected cathode-ray tube (CRT) monitor running at a refresh rate of 75 Hz. A similar setup had been used successfully in previous work (Goodhew, Fogel, & Pratt, 2014).
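The sizes in the Method are given in degrees of visual angle and the durations in milliseconds. Using the reported 44-cm viewing distance and 75-Hz refresh rate, the hypothetical helpers below convert these to on-screen centimeters and video frames. They assume a flat screen viewed head-on; pixel values are not computed because the monitor's resolution is not reported.

```python
import math

VIEWING_DISTANCE_CM = 44.0  # chinrest distance reported in the Method
REFRESH_HZ = 75.0           # CRT refresh rate reported in the Method


def deg_to_cm(size_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical extent of an object centred on the line of sight that
    subtends size_deg of visual angle."""
    return 2 * distance_cm * math.tan(math.radians(size_deg) / 2)


def eccentricity_to_cm(ecc_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen distance from fixation of a point at ecc_deg eccentricity."""
    return distance_cm * math.tan(math.radians(ecc_deg))


def ms_to_frames(duration_ms, refresh_hz=REFRESH_HZ):
    """Convert a duration to a whole number of video frames, refusing
    durations that this refresh rate cannot display exactly."""
    frames = duration_ms * refresh_hz / 1000.0
    n = round(frames)
    if abs(frames - n) > 1e-9:
        raise ValueError(f"{duration_ms} ms is not a whole number of frames at {refresh_hz} Hz")
    return n


for label, ms in (("fixation", 1000), ("search array", 160), ("ITI", 1600)):
    print(f"{label}: {ms} ms = {ms_to_frames(ms)} frames")
for label, deg in (("small Gabor", 2.1), ("large Gabor", 4.3)):
    print(f"{label}: {deg} deg = {deg_to_cm(deg):.2f} cm")
print(f"annulus radius: 7 deg = {eccentricity_to_cm(7):.2f} cm")
```

At 75 Hz, each of the reported durations is an exact number of frames (12 for the 160-ms search array, 75 for fixation, 120 for the intertrial interval). At 44 cm, a 4.3° Gabor spans roughly 3.3 cm, and the 7° annulus places items about 5.4 cm from fixation.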

Procedure

Before the experiment, participants completed a 12-trial practice block with feedback on response accuracy to familiarize them with the task; some initial practice trials were presented at a slowed-down speed. Participants were required to score 75% correct or better on this block (which was repeated if necessary) in order to progress to the experiment. The experiment consisted of 512 trials (256 per hand position), with a rest break scheduled halfway through each hand-position block.

Each trial began with a fixation-only screen for 1,000 ms, followed by the search array for 160 ms (too brief to execute a saccadic eye movement), after which the screen remained blank until response. Participants’ task was to identify the orientation (left vs. right) of the target Gabor as quickly and accurately as possible (by clicking the corresponding left or right mouse) while maintaining central fixation. The target was defined as the largest, closest-to-vertical Gabor. We defined the target in terms of both size and orientation in order to create a conjunction visual search task (Treisman & Gelade, 1980), thereby ensuring that participants engaged in a serial, attentive search rather than a parallel, preattentive process. The distractor Gabors could be small (2.1°) and horizontal, small and 15° off vertical, or large (4.3°) and horizontal. On each trial, the distractors were randomly sampled from among these three types. The intertrial interval was 1,600 ms; hand proximity was blocked (order counterbalanced), whereas spatial frequency and set size were randomly intermixed.
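The design described above (hand proximity blocked, spatial frequency and set size intermixed, a large tilted target among distractors drawn from three types) can be sketched as a trial-list generator. All names are hypothetical, and several details are assumptions, since the Method states only the totals and the randomization scheme: 64 trials per spatial-frequency × set-size cell, equal angular spacing of items, block order alternating across participants, and a random tilt direction for tilted distractors.

```python
import random
from collections import Counter

SIZES_DEG = {"small": 2.1, "large": 4.3}
# Distractor types from the Procedure: (size, orientation).
DISTRACTOR_TYPES = [("small", "horizontal"), ("small", "tilted"), ("large", "horizontal")]
SPATIAL_FREQS_CPD = (1, 10)   # LSF, HSF
SET_SIZES = (4, 8)


def make_search_array(set_size, sf_cpd, rng):
    """One array: a large target tilted 15 deg left or right of vertical, plus
    distractors sampled with replacement from the three distractor types."""
    target_pos = rng.randrange(set_size)
    items = []
    for pos in range(set_size):
        polar_deg = 360.0 * pos / set_size  # equal spacing on the annulus (assumed)
        if pos == target_pos:
            size, orient = "large", rng.choice(("left", "right"))
        else:
            size, orient = rng.choice(DISTRACTOR_TYPES)
            if orient == "tilted":
                orient = rng.choice(("left", "right"))  # tilt direction assumed random
        items.append({"polar_deg": polar_deg, "size_deg": SIZES_DEG[size],
                      "orientation": orient, "sf_cpd": sf_cpd,
                      "is_target": pos == target_pos})
    return items


def correct_response(items):
    """The correct mouse is the target's tilt direction ('left' or 'right')."""
    return next(it["orientation"] for it in items if it["is_target"])


def make_block(hand, rng, reps_per_cell=64):
    """256 shuffled trials for one hand position: 2 SFs x 2 set sizes x 64."""
    trials = [{"hand": hand, "sf_cpd": sf, "set_size": ss}
              for sf in SPATIAL_FREQS_CPD for ss in SET_SIZES
              for _ in range(reps_per_cell)]
    rng.shuffle(trials)
    for t in trials:
        t["array"] = make_search_array(t["set_size"], t["sf_cpd"], rng)
    return trials


def make_session(participant_index, seed=None):
    """Hand proximity is blocked; the block order alternates across participants."""
    rng = random.Random(seed)
    order = ("near", "far") if participant_index % 2 == 0 else ("far", "near")
    return [trial for hand in order for trial in make_block(hand, rng)]


session = make_session(0, seed=1)
print(len(session), Counter((t["hand"], t["sf_cpd"], t["set_size"]) for t in session))
```

Because every large distractor is horizontal, the only large tilted item is the target, so the conjunction of size and orientation uniquely defines it.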

Results and discussion

Participants were excluded from the analysis if their accuracy in any condition fell at or below 50% (six exclusions). Trials were excluded if responses were made in less than 100 ms or were more than 2.5 SDs above the participant’s mean response time (2.5% of trials for hands far, 2.9% for hands near). The remaining data were submitted to a repeated measures analysis of variance (ANOVA) on accuracy (percentage correct). This revealed a main effect of spatial frequency, F(1, 28) = 290.74, p < .001, ηp² = .912, such that accuracy was higher for LSFs (94%) than for HSFs (73%) (consistent with Kveraga, Boshyan, & Bar, 2007). We also found a main effect of set size, F(1, 28) = 56.72, p < .001, ηp² = .670, such that accuracy was higher for SS4 (86%) than for SS8 (82%), as would be expected with a conjunction visual search (Treisman & Gelade, 1980). No main effect of hand proximity was apparent (F < 1), and neither the hand-proximity-by-set-size nor the spatial-frequency-by-set-size interaction was significant (Fs < 1). However, an interaction between hand proximity and spatial frequency, F(1, 28) = 6.72, p = .015, ηp² = .193, was qualified by a three-way interaction among hand proximity, spatial frequency, and set size, F(1, 28) = 7.00, p = .013, ηp² = .200. This reflects the fact that the two-way interaction was evident at SS8 but not at SS4 (see Fig. 3). At SS8, accuracy for HSF arrays was significantly higher with hands near than with hands far, t(28) = 2.33, p = .027, whereas LSF arrays showed the opposite trend, toward higher accuracy with hands far than with hands near, t(28) = 1.98, p = .058.
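The trial-trimming rules and the accuracy-based exclusion criteria can be expressed compactly. In this sketch (hypothetical function names), the mean and SD for the upper cutoff are computed over all of a participant's trials using the sample SD; the text does not say whether anticipations were removed first or whether trimming was done per condition, so both choices are assumptions. The Experiment 2 exclusion criterion, which differs from that of Experiment 1, is included for comparison.

```python
from statistics import mean, stdev


def trim_rts(rts_ms, lower_ms=100.0, sd_cutoff=2.5):
    """Drop anticipations (< lower_ms) and slow outliers (> mean + sd_cutoff * SD).
    Mean and sample SD are taken over all of the participant's RTs (assumed)."""
    upper_ms = mean(rts_ms) + sd_cutoff * stdev(rts_ms)
    return [rt for rt in rts_ms if lower_ms <= rt <= upper_ms]


def exclude_exp1(cond_accuracy):
    """Experiment 1: exclude if accuracy is at or below 50% in any condition."""
    return any(acc <= 0.50 for acc in cond_accuracy.values())


def exclude_exp2(cond_accuracy):
    """Experiment 2: exclude if accuracy is below 50% in two or more conditions."""
    return sum(acc < 0.50 for acc in cond_accuracy.values()) >= 2


rts = [50, 400, 410, 420, 430, 440, 450, 460, 470, 480, 490, 3000]
print(trim_rts(rts))  # the 50-ms anticipation and the 3,000-ms outlier are dropped
```

Note that with a single pass over all trials, one extreme outlier inflates the SD it is judged against; that is a property of the stated rule, not something this sketch corrects.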

These results indicate a target-identification advantage for the large-set-size HSF visual search arrays in near-hand space relative to far-hand space. One possible interpretation of this result is that it reflects a general P-cell bias for the visual processing of multiple items near the hands, which would make it easier both to process the HSF distractors and to identify the HSF target, and more difficult both to process the LSF distractors and to identify the LSF target. An alternative interpretation, however, is that this pattern of results stems exclusively from differences in distractor-rejection efficiency. That is, the advantage for the HSF arrays may reflect ease of disengaging attention from the HSF distractors to continue the search for the target, and the disadvantage for the LSF arrays may reflect difficulty in disengaging attention from the LSF distractors. This explanation would essentially represent a hybrid between the M-cell enhancement and detailed-evaluation accounts, whereby attentional disengagement is delayed for M-cell-preferred stimuli in near-hand space.¹ It would also account for why the advantage was specific to the larger set size, because there were more distractors to reject. To test this possibility, in Experiment 2 we modified the set size 8 arrays to consist of equal mixes of HSF and LSF items, while varying the spatial frequency of the target. This would nullify any systematic spatial-frequency differences in distractor-rejection efficiency, so any remaining differences would necessarily be driven by the efficiency of target processing, thereby indicating a processing advantage for a particular spatial frequency.
Here, if an advantage for the HSF arrays were still observed in near-hand space, it would undermine the explanation that this advantage resulted from greater efficiency in HSF-distractor rejection, and would instead support a parvocellular processing bias in near-hand space.

Experiment 2

Method

Participants

The participants were 33 volunteers (16 female, 17 male) whose mean age was 23.0 years (SD = 3.2).

Stimuli, apparatus, and procedure

These were identical to the respective aspects of Experiment 1, except that the set size 8 arrays consisted of four LSF and four HSF items. The spatial-frequency condition of each set size 8 array was therefore defined by the spatial frequency of its target.
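In Experiment 2 the set size 8 arrays always held four LSF and four HSF items, so only the target's spatial frequency distinguished the two conditions. A minimal sketch of that constraint follows; the function is hypothetical, and the random placement of the target and the shuffling of distractor frequencies are assumptions.

```python
import random


def mixed_sf_array(target_sf, rng, lsf_cpd=1, hsf_cpd=10, set_size=8):
    """Spatial-frequency layout of one mixed array: half LSF and half HSF items,
    with the target counted in its own half."""
    if target_sf not in (lsf_cpd, hsf_cpd):
        raise ValueError("target_sf must be the LSF or HSF value")
    half = set_size // 2
    other_sf = hsf_cpd if target_sf == lsf_cpd else lsf_cpd
    # half - 1 distractors share the target's frequency; half have the other one.
    distractor_sfs = [target_sf] * (half - 1) + [other_sf] * half
    rng.shuffle(distractor_sfs)
    target_pos = rng.randrange(set_size)
    remaining = iter(distractor_sfs)
    return [{"pos": pos,
             "sf_cpd": target_sf if pos == target_pos else next(remaining),
             "is_target": pos == target_pos}
            for pos in range(set_size)]


print(mixed_sf_array(10, random.Random(0)))
```

Because each distractor frequency appears equally often regardless of condition (apart from the target's own slot), any near-hand benefit for HSF targets cannot be attributed to easier rejection of HSF distractors.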

Results and discussion

Participants were excluded from the analysis if their accuracy fell below 50% in two or more conditions (eight exclusions). Trials were excluded if responses were made in less than 100 ms or were more than 2.5 SDs above the participant’s mean response time (3.1% of trials for hands far, 2.6% for hands near). The remaining data were submitted to a repeated measures ANOVA on accuracy (percentage correct). This revealed no main effect of hand proximity (F < 1) and a main effect of spatial frequency, F(1, 24) = 735.35, p < .001, ηp² = .968, such that accuracy was higher for LSFs (93%) than for HSFs (56%). We also found a main effect of set size, F(1, 24) = 24.73, p < .001, ηp² = .507, whereby accuracy was higher for SS4 (77%) than for SS8 (72%). We observed no interaction between hand proximity and spatial frequency (F < 1), but there was an interaction between hand proximity and set size, F(1, 24) = 7.69, p = .011, ηp² = .243, driven by a smaller set-size effect (i.e., the difference in accuracy between SS4 and SS8) in near-hand space (3%) than in far-hand space (7%). There was also an interaction between spatial frequency and set size, F(1, 24) = 4.51, p = .044, ηp² = .158, such that the set-size effect was greater for HSF-target arrays (7%) than for LSF-target arrays (3%). Finally, these interactions were qualified by a three-way interaction among hand proximity, spatial frequency, and set size, F(1, 24) = 4.58, p = .043, ηp² = .160. This occurred because accuracy for HSF-target SS8 arrays was enhanced in near-hand space relative to far-hand space, t(24) = 2.30, p = .031 (see Fig. 4). That is, even though the SS8 arrays now contained a mixture of spatial frequencies, hand proximity still significantly improved the identification of HSF targets.
This bolsters the conclusion from Experiment 1 that the near-hand HSF advantage reflects a parvocellular bias in near-hand space at the larger set size, rather than differences in the efficiency of distractor rejection. This interpretation implies that the relative bias between M and P cells in near-hand space qualitatively shifts between low and high set sizes. Consistent with this, we observed a trend toward a magnocellular bias in near-hand space at SS4, such that the identification of LSF targets was improved in near-hand space, t(24) = 1.91, p = .069.
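For a 1-df effect, the reported effect sizes can be recovered from the F ratios. Since F = (SS_effect/df1)/(SS_error/df2), partial eta squared, SS_effect/(SS_effect + SS_error), equals F·df1/(F·df1 + df2). In a fully within-subjects design, the error df of a 1-df effect is the number of analyzed participants minus one (35 − 6 − 1 = 28 in Exp. 1; 33 − 8 − 1 = 24 in Exp. 2). The sketch below (hypothetical function names) recomputes the reported values as a consistency check.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio:
    SS_effect / (SS_effect + SS_error) = F*df1 / (F*df1 + df2)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def error_df_within(n_tested, n_excluded):
    """Error df of a 1-df effect in a fully within-subjects design:
    number of participants analysed minus one."""
    return (n_tested - n_excluded) - 1


# (effect, F, df_effect, df_error, reported partial eta squared)
REPORTED = [
    ("Exp 1 spatial frequency", 290.74, 1, 28, 0.912),
    ("Exp 1 set size", 56.72, 1, 28, 0.670),
    ("Exp 1 hand x SF", 6.72, 1, 28, 0.193),
    ("Exp 1 hand x SF x set size", 7.00, 1, 28, 0.200),
    ("Exp 2 spatial frequency", 735.35, 1, 24, 0.968),
    ("Exp 2 set size", 24.73, 1, 24, 0.507),
    ("Exp 2 hand x set size", 7.69, 1, 24, 0.243),
    ("Exp 2 SF x set size", 4.51, 1, 24, 0.158),
    ("Exp 2 hand x SF x set size", 4.58, 1, 24, 0.160),
]

print("error df:", error_df_within(35, 6), error_df_within(33, 8))
for effect, f, df1, df2, reported in REPORTED:
    recomputed = partial_eta_squared(f, df1, df2)
    print(f"{effect}: reported {reported:.3f}, recomputed {recomputed:.4f}")
```

All recomputed values agree with the reported ones to within 0.001. Discrepancies in the third decimal (e.g., .669 vs. .670) are expected because the F values are themselves rounded to two decimals.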

General discussion

Here we found a near-hand-space advantage for identifying HSF targets at the larger set size in a visual search task. This advantage was present both when the entire array was HSF (Exp. 1) and when the array contained a mixture of spatial frequencies but the to-be-identified target was HSF (Exp. 2). This implies a P-cell bias for visual processing near the hands, relative to far from the hands, a result that is consistent with neither the detailed-evaluation nor the M-cell enhancement account. In light of the existing evidence, we propose a novel theoretical account according to which, when the demands on spatial attention are low (few items in the display), there is an M-cell bias in near-hand space, whereas when the demands on attention are high (a larger number of items in the display, with the potential for crowding), there is a P-cell bias in near-hand space (see Fig. 5 for an illustration of why this would be adaptive). This framework can explain a wide range of previous results, including enhanced single-stimulus temporal gap detection and single-Gabor LSF identification in near-hand space (Abrams & Weidler, 2013; Gozli et al., 2012), as well as our present finding of enhanced accuracy for HSF items in near-hand space and Abrams et al.’s (2008) slowed visual search with their LSF arrays.

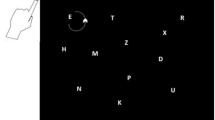

Why would the additional items in the larger set size elicit a P-cell bias? This most likely reflects a mechanism that selectively up-regulates the contribution of P cells in order to constrict the attentional spotlight and thereby minimize perceptual interference from spatially proximal items. Consistent with this notion, a small, focused spotlight of attention, whether sustained at a static location or produced by a shift of attention to a location, induces a P-cell bias at that location, thereby enhancing spatial acuity (Goodhew, Shen, & Edwards, 2015; Yeshurun & Carrasco, 1998; Yeshurun & Sabo, 2012). Without such constriction, both the target and distractors could fall within the single, large receptive field of a magnocellular neuron, which would not allow the identity of the target to be resolved in isolation. In Fig. 5, this can be seen by comparing the diagram on the left (a large receptive field, allowing potential confusion from multiple orientations) with the diagram on the right (a smaller receptive field, able to resolve the orientation of a single line without interference). It is therefore adaptive for the system to switch to preferential uptake of parvocellular input in near-hand space, making use of these cells’ smaller receptive fields for greater spatial acuity.
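The receptive-field argument depends on how densely the items are packed. Assuming the items were equally spaced on the 7° annulus and that the 4.3° Gabor size is a diameter (neither is stated explicitly), adjacent centers lie 2·7·sin(180°/n) degrees apart for set size n. The `pool_radius_deg` parameter below is a hypothetical stand-in for a large receptive field, not a physiological estimate, and all function names are illustrative.

```python
import math

ANNULUS_RADIUS_DEG = 7.0   # eccentricity of the search items (from the Method)
LARGE_GABOR_DEG = 4.3      # assumed to be the patch diameter


def item_positions(set_size, radius=ANNULUS_RADIUS_DEG):
    """(x, y) of items equally spaced on the annulus (equal spacing assumed)."""
    return [(radius * math.cos(2 * math.pi * k / set_size),
             radius * math.sin(2 * math.pi * k / set_size))
            for k in range(set_size)]


def neighbour_spacing(set_size, radius=ANNULUS_RADIUS_DEG):
    """Centre-to-centre distance between adjacent items (chord length)."""
    return 2 * radius * math.sin(math.pi / set_size)


def items_within(pool_radius_deg, set_size, target_index=0):
    """Count non-target items whose centres fall inside a hypothetical circular
    pooling region of radius pool_radius_deg centred on the target."""
    pts = item_positions(set_size)
    tx, ty = pts[target_index]
    return sum(1 for i, (x, y) in enumerate(pts)
               if i != target_index and math.hypot(x - tx, y - ty) <= pool_radius_deg)


for ss in (4, 8):
    spacing = neighbour_spacing(ss)
    print(f"SS{ss}: spacing {spacing:.2f} deg, gap between large Gabors "
          f"{spacing - LARGE_GABOR_DEG:.2f} deg, neighbours within 6 deg: {items_within(6.0, ss)}")
```

Under these assumptions, adjacent centers are about 9.9° apart at SS4 (a 5.6° gap between large Gabors) but only about 5.4° apart at SS8 (a gap of about 1.1°). With a hypothetical 6° pooling radius, two neighbors fall inside the pool at SS8 and none at SS4.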

Of course, it is highly likely that the “balance point” between M-cell and P-cell processing is not dictated by a particular number of items in the display, but instead depends on an interaction among the stimuli, their density, and the demands of the task. For example, the finding of reduced object-substitution masking in near-hand space (Goodhew, Gozli, et al., 2013) is consistent with this framework: although that study used large-set-size displays, the target was always signaled by the unique presence of the four-dot mask, which strongly reduced the attentional demands of the task. Indeed, emerging evidence indicates that object-substitution masking is not modulated by attention (Argyropoulos, Gellatly, Pilling, & Carter, 2013; Filmer, Mattingley, & Dux, 2014). Similarly, Kelly and Brockmole (2014) found impaired visual memory for color in near-hand space when six items had to be encoded into memory. This finding is consistent with the M-cell account, since M cells do not process color. Set size 6 is intermediate between the two set sizes used here, so this result does not conflict with the present findings. Moreover, Kelly and Brockmole’s stimuli were sparser, and their task required encoding all of the items into memory (potentially creating more “diffuse” attentional demands than speeded search for a particular target), so the shift from M to P processing likely occurs at a different point for their stimuli and task than for ours. Finally, recent evidence has even indicated that placing a single hand, rather than both hands, near the visual stimuli can induce a shift to a P-cell bias (Bush & Vecera, 2014). Future research should focus on the factors that compel the shift from M to P processing.

In conclusion, understanding the properties of M and P cells is crucial to understanding altered visual perception near the hands. However, the relative contributions of these pathways are not fixed, as previously thought, but instead vary as a function of attentional demands.

Notes

1. The authors thank Davood Gozli for suggesting this possibility.

References

Abrams, R. A., Davoli, C. C., Du, F., Knapp, W. H., III, & Paull, D. (2008). Altered vision near the hands. Cognition, 107, 1035–1047. doi:10.1016/j.cognition.2007.09.006

Abrams, R. A., & Weidler, B. J. (2013). Trade-offs in visual processing for stimuli near the hands. Attention, Perception, & Psychophysics, 76, 1242–1252. doi:10.3758/s13414-013-0583-1

Arend, I., Johnston, S., & Shapiro, K. (2006). Task-irrelevant visual motion and flicker attenuate the attentional blink. Psychonomic Bulletin & Review, 13, 600–607. doi:10.3758/BF03193969

Argyropoulos, I., Gellatly, A., Pilling, M., & Carter, W. (2013). Set size and mask duration do not interact in object-substitution masking. Journal of Experimental Psychology: Human Perception and Performance, 39, 646–661. doi:10.1037/a0030240

Breitmeyer, B. G., & Williams, M. C. (1990). Effects of isoluminant-background color on metacontrast and stroboscopic motion: Interactions between sustained (P) and transient (M) channels. Vision Research, 30, 1069–1075. doi:10.1016/0042-6989(90)90115-2

Brockmole, J. R., Davoli, C., Abrams, R. A., & Witt, J. K. (2013). The world within reach: Effects of hand posture and tool use on visual cognition. Current Directions in Psychological Science, 22, 38–44. doi:10.1177/0963721412465065

Bush, W. S., & Vecera, S. P. (2014). Differential effect of one versus two hands on visual processing. Cognition, 133, 232–237. doi:10.1016/j.cognition.2014.06.014

Cousineau, D. (2005). Confidence intervals in within-subject designs: A simpler solution to Loftus and Masson’s method. Tutorials in Quantitative Methods for Psychology, 1, 42–45.

Derrington, A. M., & Lennie, P. (1984). Spatial and temporal contrast sensitivities of neurones in the lateral geniculate nucleus of the macaque. Journal of Physiology, 357, 219–240.

Di Lollo, V., Enns, J. T., & Rensink, R. A. (2000). Competition for consciousness among visual events: The psychophysics of reentrant visual processes. Journal of Experimental Psychology: General, 129, 481–507. doi:10.1037/0096-3445.129.4.481

Dreher, B., Fukada, Y., & Rodieck, R. W. (1976). Identification, classification and anatomical segregation of cells with X-like and Y-like properties in the lateral geniculate nucleus of old-world primates. Journal of Physiology, 258, 433–452.

Filmer, H. L., Mattingley, J. B., & Dux, P. E. (2014). Size (mostly) doesn’t matter: The role of set size in object substitution masking. Attention, Perception, & Psychophysics, 76, 1620–1629. doi:10.3758/s13414-014-0692-5

Goodhew, S. C., Boal, H. L., & Edwards, M. (2014). A magnocellular contribution to conscious perception via temporal object segmentation. Journal of Experimental Psychology: Human Perception and Performance, 40, 948–959. doi:10.1037/a0035769

Goodhew, S. C., Edwards, M., Ferber, S., & Pratt, J. (2015). Altered visual perception near the hands: A critical review of attentional and neurophysiological models. Neuroscience & Biobehavioral Reviews, 55, 223–233. doi:10.1016/j.neubiorev.2015.05.006

Goodhew, S. C., Fogel, N., & Pratt, J. (2014). The nature of altered vision near the hands: Evidence for the magnocellular enhancement account from object correspondence through occlusion. Psychonomic Bulletin & Review, 21, 1452–1458. doi:10.3758/s13423-014-0622-5

Goodhew, S. C., Gozli, D. G., Ferber, S., & Pratt, J. (2013). Reduced temporal fusion in near-hand space. Psychological Science, 24, 891–900. doi:10.1177/0956797612463402

Goodhew, S. C., Pratt, J., Dux, P. E., & Ferber, S. (2013). Substituting objects from consciousness: A review of object substitution masking. Psychonomic Bulletin & Review, 20, 859–877. doi:10.3758/s13423-013-0400-9

Goodhew, S. C., Shen, E., & Edwards, M. (2015). Selective spatial enhancement: Attentional spotlight size impacts spatial but not temporal perception. Psychonomic Bulletin & Review. doi:10.3758/s13423-015-0904-6

Gozli, D. G., West, G. L., & Pratt, J. (2012). Hand position alters vision by biasing processing through different visual pathways. Cognition, 124, 244–250. doi:10.1016/j.cognition.2012.04.008

Hubner, R. (1997). The effect of spatial frequency on global precedence and hemispheric differences. Perception & Psychophysics, 59, 187–201. doi:10.3758/BF03211888

Kelly, S. P., & Brockmole, J. R. (2014). Hand proximity differentially affects visual working memory for color and orientation in a binding task. Frontiers in Psychology, 5, 318. doi:10.3389/fpsyg.2014.00318

Klein, R. M. (2000). Inhibition of return. Trends in Cognitive Sciences, 4, 138–147. doi:10.1016/S1364-6613(00)01452-2

Kveraga, K., Boshyan, J., & Bar, M. (2007). Magnocellular projections as the trigger of top-down facilitation in recognition. Journal of Neuroscience, 27, 13232–13240. doi:10.1523/jneurosci.3481-07.2007

Livingstone, M., & Hubel, D. (1988). Segregation of form, color, movement, and depth: Anatomy, physiology, and perception. Science, 240, 740–749. doi:10.1126/science.3283936

Navon, D. (1977). Forest before trees: The precedence of global features in visual perception. Cognitive Psychology, 9, 353–383. doi:10.1016/0010-0285(77)90012-3

Olivers, C. N. L., & Nieuwenhuis, S. (2005). The beneficial effect of concurrent task-irrelevant mental activity on temporal attention. Psychological Science, 16, 265–269. doi:10.1111/j.0956-7976.2005.01526.x

Shulman, G. L., Sullivan, M. A., Gish, K., & Sakoda, W. J. (1986). The role of spatial-frequency channels in the perception of local and global structure. Perception, 15, 259–273. doi:10.1068/p150259

Treisman, A. M., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology, 12, 97–136. doi:10.1016/0010-0285(80)90005-5

Wiesel, T. N., & Hubel, D. H. (1966). Spatial and chromatic interactions in lateral geniculate body of rhesus monkey. Journal of Neurophysiology, 29, 1115–1156.

Yeshurun, Y., & Carrasco, M. (1998). Attention improves or impairs visual performance by enhancing spatial resolution. Nature, 396, 72–75. doi:10.1038/23936

Yeshurun, Y., & Levy, L. (2003). Transient spatial attention degrades temporal resolution. Psychological Science, 14, 225–231. doi:10.1111/1467-9280.02436

Yeshurun, Y., & Sabo, G. (2012). Differential effects of transient attention on inferred parvocellular and magnocellular processing. Vision Research, 74, 21–29. doi:10.1016/j.visres.2012.06.006

Author note

This research was supported by an Australian Research Council Discovery Early Career Research Award (No. DE140101734) awarded to S.C.G. The authors thank Mark Edwards for assistance in developing the Gabor stimuli, and Reuben Rideaux for assistance with the data collection.

Cite this article

Goodhew, S.C., Clarke, R. Contributions of parvocellular and magnocellular pathways to visual perception near the hands are not fixed, but can be dynamically altered. Psychon Bull Rev 23, 156–162 (2016). https://doi.org/10.3758/s13423-015-0844-1

DOI: https://doi.org/10.3758/s13423-015-0844-1