Abstract

When we plan sequences of actions, we must hold some elements of the sequence in working memory (WM) while we execute others. Research shows that execution of an action can be delayed if it partly overlaps (vs. does not overlap) with another action plan maintained in WM (partial repetition cost). However, it is not known whether all features of the action maintained in WM interfere equally with current actions. Most serial models of memory and action assume that interference will be equal, because all action features in the sequence should be activated to an equal degree in parallel; others assume that action features earlier in the sequence will interfere more than those later in the sequence, because earlier features will be more active. Using a partial repetition paradigm, this study examined whether the serial position of action features in action sequences maintained in WM has an influence on current actions. Two stimulus events occurred in a sequence, and participants planned and maintained an action sequence to the first event (action A) in WM while executing a speeded response to the second event (action B). Results showed delayed execution of action B when it matched the first feature in the action A sequence (partial repetition cost), but not when it matched the last feature. These findings suggest that serial order is represented in the action plan prior to response execution, consistent with models that assume that serial order is represented by a primacy gradient of parallel feature activation prior to action execution.


Everyday actions like driving home from work and cooking dinner require action planning. We must decide what to do and when to do it (Keele, 1968; Lashley, 1951; Miller, Galanter, & Pribram, 1960). In some cases, we plan just a single action, like reaching for a coffee cup (Hommel, 2004). In other cases, we plan sequences of actions: Choosing a route home involves choosing a series of turns; making chicken wings involves frying the wings, preparing the sauce, and pouring the sauce on the wings (Houghton & Hartley, 1995; Jeannerod, 1997; Rhodes, Bullock, Verwey, Averbeck, & Page, 2004). When we plan sequences of actions, we must hold some elements of the sequence in memory while we execute others (Logan, 2004; Schneider & Logan, 2006). This article is concerned with interactions between the action plans we hold in memory and the actions we carry out (Logan, 2007), asking whether all parts of a sequential plan held in memory interfere equally with an ongoing action. Some theories of serial memory predict that all components of the action plan will interfere, because they are all active at the same time (Anderson & Matessa, 1997; Crump & Logan, 2010; Hartley & Houghton, 1996; Houghton & Hartley, 1995; Lashley, 1951; Rosenbaum, Inhoff, & Gordon, 1984). Other theories predict that earlier components of the action plan will interfere more than later components, because earlier components are more active than later ones (Averbeck, Chafee, Crowe, & Georgopoulos, 2002; Page & Norris, 1998; Rhodes et al., 2004). We tested these predictions in an experiment that examined serial order effects in the interaction between ongoing actions and action plans held in working memory (WM).

Research shows that executing an action plan can be delayed if it partly overlaps with an action plan maintained in WM. For example, executing a left-hand action is delayed if it shares a feature code (“left”) with an action plan maintained in WM (“left hand move up”), as compared with when it does not (“right hand move up”; Stoet & Hommel, 1999; Wiediger & Fournier, 2008). This delay is referred to as a partial repetition cost. These costs appear restricted to events in which action features maintained in WM are integrated into a single action plan (Fournier & Gallimore, 2013; Mattson, Fournier, & Behmer, 2012) and the current action imposes a demand on WM (Fournier et al., 2010; Wiediger & Fournier, 2008). Partial repetition costs are assumed to occur when a feature code from the current action plan reactivates (primes) the action plan maintained in WM. The action features are integrated in the action plan, so reactivating (priming) one feature should activate other features with which it is integrated. This leads to temporary confusion as to which action plan is relevant for the current task: the current plan or the plan maintained in WM (Hommel, 2004; Mattson & Fournier, 2008). The irrelevant feature code or action plan must be inhibited, and the time required to inhibit it delays selection of the action plan for the current task (see also Sevald & Dell, 1994). It is not known, however, whether costs are contingent on the serial position of the overlapping feature code maintained in WM. Past research has manipulated feature overlap only for action feature codes represented at the beginning of the action sequences. The present research examines serial position effects in partial repetition costs.

The interaction between partial repetition costs and serial position provides insight into the representation of action plans in WM. The action elements may be represented equally, and serial order may be imposed later, during response selection and execution (Hartley & Houghton, 1996; Houghton, 1990), or the action elements may be represented by a gradient of activation in WM that preserves serial order (Rhodes et al., 2004). There is evidence for both possibilities. Highly skilled, hierarchically controlled tasks like speaking and typing appear to activate all lower-level elements in WM in parallel (e.g., the phonemes in spoken words or the letters in typed words; Crump & Logan, 2010; Logan, Miller, & Strayer, 2011). Serial order is determined during action execution (Burgess & Hitch, 1999; Estes, 1972; Hartley & Houghton, 1996; Rumelhart & Norman, 1982). Less practiced tasks appear to activate the elements of action plans differentially in WM, creating a primacy gradient of activation in which each successive element is activated less than the preceding one (Page & Norris, 1998; Rhodes et al., 2004). Thus, serial order is determined in WM and in action execution.

The present study used a partial repetition paradigm (Stoet & Hommel, 1999) to examine serial position effects in the interaction between current actions and action sequences maintained in WM. Two different visual events (A and B) were presented sequentially. When event A was presented, participants planned and maintained a sequence of joystick movements (e.g., “move left then up”) based on its identity. While they maintained the action plan in WM, event B appeared, calling for an immediate “left” or “right” joystick movement based on its identity. After participants executed a speeded response to event B (e.g., “left” movement), they executed the planned action for event A (e.g., “move left then up”). The main manipulation was whether the action to event B overlapped with the first or second feature of the action plan for event A. If the features of an action plan are maintained in WM by a gradient of activation, partial repetition costs should be greater when the current action matches the first feature of the action plan maintained in WM than when it matches the last feature. The first feature in the sequence should be more easily reactivated (primed) by the current action, and this reactivated feature should spread activation to the other feature it is bound to within the action plan. If the features of an action plan are maintained in WM in parallel, partial repetition costs should be the same whether the current action matches the first or the last feature of the action plan maintained in WM. Each feature in the action plan should have an equal chance of being reactivated and of spreading activation to the other feature to which it is bound.
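The two predictions can be contrasted in a toy sketch. This is not a model from the literature cited here: the linear drop in activation, the `scale` parameter, and the function names are all assumptions made for illustration, and the sketch only shows how a gradient account and a flat (parallel) account diverge when action B matches the first versus the second feature of the plan.

```python
def activations(n_features, gradient):
    """Hypothetical activation level for each serial position in a plan.

    gradient=True  -> primacy gradient: each feature is less active than the
                      one before it (a linear drop is assumed for illustration)
    gradient=False -> flat profile: all features equally active in parallel
    """
    if gradient:
        step = 1.0 / n_features
        return [1.0 - i * step for i in range(n_features)]
    return [1.0] * n_features


def predicted_cost(action_b, plan, gradient, scale=1.0):
    """Toy interference: scale times the activation of the matching feature."""
    levels = activations(len(plan), gradient)
    for feature, level in zip(plan, levels):
        if feature == action_b:
            return scale * level
    return 0.0  # no shared feature, so no partial repetition cost is predicted


# Gradient account: larger cost when action B matches the first feature.
first_grad = predicted_cost("left", ["left", "up"], gradient=True)
second_grad = predicted_cost("left", ["up", "left"], gradient=True)

# Flat account: the same cost at either serial position.
first_flat = predicted_cost("left", ["left", "up"], gradient=False)
second_flat = predicted_cost("left", ["up", "left"], gradient=False)
```

Under these assumptions the gradient account yields a larger value at the first position than at the second, whereas the flat account yields equal values. The absolute numbers carry no meaning; only the ordering does.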

Method

Participants

Sixty-five undergraduates from Washington State University participated for optional extra credit in psychology classes. This study was approved by the Washington State University Institutional Review Board, and informed consent was obtained. Participants had at least 20/40 visual acuity and could accurately identify red/green bars on a Snellen chart. Five participants were excluded for not following instructions. Data were analyzed for 60 participants.

Apparatus

Stimuli appeared on a computer screen ~61 cm from the participant. E-Prime software (1.2) presented stimuli and collected data. A custom-made apparatus (Fig. 1) with a round, plastic (ultra-high molecular weight polyethylene) handle (joystick: 5.1 × 2.9 cm) mounted on a plastic (polyvinyl chloride) square box (30.5 cm²) recorded responses. The apparatus was recessed into a table, centered along the participant’s body midline. The joystick could move along a 1.1-cm-wide track 11.35 cm to the left, right, up, or down from the center and 22.7 cm (per side) around the perimeter of the box. A magnet at the bottom of the joystick triggered response sensors below the track; sensors were located 1.5 and 7.5 cm in left, right, up, and down directions from the apparatus center and 3.9 cm on each side of the outer corners of the track. Four spring-loaded bearings at the center of the apparatus provided tactile feedback when participants centered the joystick. Participants knew that a movement had been completed from the tactile feedback received as the joystick hit the right or left and up or down edges of the track. All joystick responses were made with the dominant hand. A hand-button held in the nondominant hand initiated each trial.

Fig. 1 Two different views of the joystick apparatus used to respond to event B and event A. The black circles at the bottom of the track represent the response sensors. The joystick (white circle) is intact in the apparatus on the left. All responses (action A and action B) began from the center location of the track. The apparatus on the right shows the joystick removed from the track. This view shows the additional four sensors (black circles) at the center of the track and the four spring-loaded bearings (gray circles) that provided tactile feedback that the joystick was centered. The joystick alone is shown below the apparatus on the right, with the grip handle on top and the magnet (black) on the bottom. The joystick was constructed to slide smoothly across the track.

Stimuli and responses

Event A

Event A (1.6° visual angle) was a white arrowhead (0.85° visual angle) pointing to the left (<) or right (>), with an asterisk (0.35° visual angle) located above or below the arrowhead. The arrowhead was centered 1.6° of visual angle above a white central fixation cross (0.7° visual angle). Event A (action A) required two joystick movements. One movement (left or right) was indicated by the arrowhead direction; a left-pointing arrowhead signaled a left movement, and a right-pointing arrowhead signaled a right movement. Another movement was indicated by the asterisk location; an asterisk above the arrowhead signaled an up movement (away from the body), and an asterisk below the arrowhead signaled a down movement (toward the body).

Event B

Event B was a red or green number symbol (#, 1.0° visual angle) centered (0.30° visual angle) below the central fixation cross. Event B (action B) required a speeded left or right joystick movement dependent on color. Half of the participants responded to the red number symbol with a left movement and to the green number symbol with a right movement; the other half had the opposite stimulus–response assignment.

Procedure

Figure 2 shows the trial events. Stimuli appeared on a black background. A white fixation cross occurred in the middle of the screen throughout each trial. Each trial was initiated by pressing the hand button. Afterward, the fixation cross (cross) appeared alone for 1,000 ms. Next, event A appeared above the cross for 2,000 ms, followed by the cross alone for 1,250 ms. During this time, participants planned a response to event A (action A). Then event B appeared below the cross for 100 ms, followed by a blank screen for 4,750 ms or until a response was detected for event B (action B). Participants were instructed to execute action B quickly and accurately. After executing action B, a blank screen appeared until participants centered the joystick. Once centered, a gray screen signaled the execution of action A. Participants had 5,000 ms to execute an accurate, nonspeeded action A response. After executing action A, participants centered the joystick. Once centered, a black screen appeared (1,250 ms), followed by response reaction time (RT) and accuracy feedback for action B (600 ms) and accuracy feedback for action A (350 ms). Then the initiation screen for the next trial appeared. Participants initiated the next trial when ready.
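The fixed-duration part of the trial can be laid out as a simple timeline. The sketch below only restates the durations given above; the phase labels and the helper `onset_times` are illustrative names, not part of the original E-Prime script.

```python
# Fixed-duration phases up to event B offset, in ms (taken from the Procedure).
PHASES = [
    ("fixation cross alone", 1000),
    ("event A above cross", 2000),
    ("cross alone", 1250),
    ("event B below cross", 100),
]
ACTION_B_WINDOW_MS = 4750  # blank screen after event B, or until a response
ACTION_A_WINDOW_MS = 5000  # time allowed for the nonspeeded action A response


def onset_times(phases):
    """Return {phase label: onset in ms from the start of the trial}."""
    onsets, t = {}, 0
    for label, duration in phases:
        onsets[label] = t
        t += duration
    return onsets


onsets = onset_times(PHASES)
event_b_onset = onsets["event B below cross"]
# Interval from event A onset to event B onset, during which action A
# could be planned and then had to be maintained.
a_to_b_interval = event_b_onset - onsets["event A above cross"]
```

With these values, event B appears 4,250 ms after the trial starts, and 3,250 ms separate the onset of event A from the onset of event B.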

All responses were initiated from the center of the joystick apparatus. Action B RT was measured from event B onset until a response triggered the left or right sensor, 7.5 cm from apparatus center. Action A was collected via three response sensors: the first located 1.5 cm left, right, up, or down from apparatus center; the second located 7.5 cm left, right, up, or down from apparatus center; and the third located 3.9 cm from an outer corner. Participants were instructed not to execute any part of action A until after executing action B. Also, they were not to move fingers or use external cues to help them remember action A; they were told to maintain action A in memory.

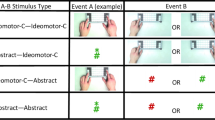

Two factors were manipulated. First, action feature overlap between action B and action A was manipulated within participants. The left or right action B response either overlapped with the left or right action A response or did not. Second, the order of action feature execution (left or right first vs. up or down first) for action A was manipulated between participants (see Table 1). Half of the participants first moved the joystick in the left or right direction indicated by the arrowhead and then moved the joystick in the up or down direction indicated by the asterisk. The other half of the participants first moved the joystick in the up or down direction indicated by the asterisk and then moved the joystick in the left or right direction indicated by the arrowhead. Thus, the location of the feature in the action A sequence (first or second) that overlapped with action B was manipulated between participants.
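The stimulus-response mappings and the two manipulated factors can be summarized in a short sketch. The function and variable names are hypothetical; the mappings themselves follow the descriptions of event A, event B, and the between-participants order manipulation given above.

```python
ARROW_TO_MOVE = {"<": "left", ">": "right"}
ASTERISK_TO_MOVE = {"above": "up", "below": "down"}


def plan_action_a(arrow, asterisk, lr_first):
    """Return the two-movement plan for event A.

    lr_first=True  -> left/right movement first, then up/down
    lr_first=False -> up/down movement first, then left/right
    """
    horizontal = ARROW_TO_MOVE[arrow]
    vertical = ASTERISK_TO_MOVE[asterisk]
    return (horizontal, vertical) if lr_first else (vertical, horizontal)


def action_b(color, red_is_left):
    """Return the speeded movement for event B under a given color mapping."""
    if color not in ("red", "green"):
        raise ValueError(f"unexpected color: {color}")
    return "left" if (color == "red") == red_is_left else "right"


def overlap_position(plan, b_move):
    """1-based position of the plan feature that matches action B, or None."""
    return plan.index(b_move) + 1 if b_move in plan else None


# A participant in the up/down-first group sees "<" with the asterisk above,
# then a red "#" under the red-left mapping.
plan = plan_action_a("<", "above", lr_first=False)   # ("up", "left")
b_move = action_b("red", red_is_left=True)           # "left"
position = overlap_position(plan, b_move)            # 2
```

Because action B is always a left or right movement, the overlapping feature is always the horizontal one. Its serial position therefore depends only on the order group, which is why overlap location is a between-participants factor.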

Action A and B stimuli were equally paired together, and action overlap (overlap or no overlap) occurred with equal probability in a random order in each block of trials. Also, for half of the participants, action B was the same as or different from the first feature in the action A sequence; for the other half, action B was the same as or different from the second feature in the action A sequence. Participants completed one 90-min session consisting of 56 practice trials and seven blocks of 32 experimental trials (with a short break after the third block). Afterward, participants answered questions about the strategies used. Participants who used or reported using external cues (e.g., moving fingers, vocalizing the response out loud) when planning action A in the experimental trials were excluded (n = 5), since they violated explicit task instructions.
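One way to build a block that satisfies these constraints is sketched below. It assumes that a 32-trial block consists of four repetitions of the eight possible pairings (four event A stimuli by two action B directions); the text states only that pairings and overlap conditions were equally probable and randomly ordered, so the repetition count and the function name `make_block` are assumptions.

```python
import itertools
import random


def make_block(rng, reps=4):
    """Return one shuffled block with every A-B pairing repeated `reps` times."""
    trials = []
    for _ in range(reps):
        for arrow, asterisk, b_move in itertools.product(
            ("<", ">"), ("above", "below"), ("left", "right")
        ):
            horizontal = "left" if arrow == "<" else "right"
            trials.append(
                {
                    "arrow": arrow,
                    "asterisk": asterisk,
                    "action_b": b_move,
                    # Overlap means action B repeats the left/right feature of action A.
                    "overlap": b_move == horizontal,
                }
            )
    rng.shuffle(trials)
    return trials


rng = random.Random(0)  # seeded only so the sketch is reproducible
blocks = [make_block(rng) for _ in range(7)]  # seven experimental blocks
n_trials = sum(len(block) for block in blocks)
n_overlap = sum(trial["overlap"] for block in blocks for trial in block)
```

Under this assumption, each block contains 16 overlap and 16 no-overlap trials, for 224 experimental trials per participant.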

Results

A mixed design analysis of variance with the within-subjects factor of action overlap (overlap vs. no overlap) and the between-subjects factor of overlap location (first vs. second feature) was conducted separately on action A error rate, action B correct RT, and action B error rate. The correct RT and error analyses for action B were restricted to trials where action A was accurate. Approximately 0.06% of trials from each participant were lost due to action A recording errors. Figure 3 shows the action B correct RTs and error rates for the action feature overlap conditions when overlap occurred at the first- versus second-feature location in the action A sequence.

Action A

The average error rate was 4.76%, and no significant effects were found, F < 1. Thus, action overlap did not influence action A recall accuracy.

Action B

Only a significant interaction between action overlap and overlap location was found for mean correct RT, F(1, 58) = 7.79, p < .01, ηₚ² = .12. Pairwise comparisons showed that RT was significantly longer for the action overlap (M = 570 ms) than for the no-overlap (M = 559 ms) condition when overlap occurred at the first-feature location in the action A sequence, F(1, 58) = 5.41, p < .03, ηₚ² = .09. Also, RT was shorter (although not significantly so) for the action overlap (M = 547 ms) than for the no-overlap (M = 554 ms) condition when overlap occurred at the second-feature location in the action A sequence, F(1, 58) = 2.63, p > .12, ηₚ² = .04. For error rate, there were no significant effects, Fs < 1. Thus, RT interpretations were not due to a speed–accuracy trade-off.
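The reported effect sizes can be checked against the F ratios with the standard identity relating partial eta squared to F and its degrees of freedom, ηₚ² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the three tests above and computes the RT differences from the reported means; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values reported above, each with df = (1, 58).
eta_interaction = round(partial_eta_squared(7.79, 1, 58), 2)
eta_first = round(partial_eta_squared(5.41, 1, 58), 2)
eta_second = round(partial_eta_squared(2.63, 1, 58), 2)

# Overlap minus no-overlap mean RT (ms); positive values indicate a cost.
cost_first = 570 - 559
cost_second = 547 - 554
```

The recomputed values (.12, .09, and .04) match those reported. The mean difference is an 11-ms cost at the first-feature location and a nonsignificant 7-ms advantage at the second-feature location.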

Discussion

This study showed serial position effects in the interaction between current actions and action sequences maintained in WM. A partial repetition cost occurred only when the current action overlapped with the first feature in the action sequence maintained in WM. There was no effect when the current action overlapped with the second feature in the action sequence. These findings are consistent with models of serial memory that assume that elements of an action plan are represented by a gradient of activation in WM prior to the selection of action elements for output (Page & Norris, 1998; Rhodes et al., 2004). The findings do not support models of serial memory or action control that assume that all elements of an action plan are activated to the same extent in WM and serial order is determined later, during the selection of action elements for output (Anderson & Matessa, 1997; Crump & Logan, 2010; Hartley & Houghton, 1996; Houghton & Hartley, 1995; Lashley, 1951; Logan et al., 2011; Rosenbaum et al., 1984). Because previous studies investigating partial repetition costs always involved the first feature in the action sequence (hand followed by a movement; Miller, 1982), it was unclear whether costs in executing current actions were influenced by differences in feature activation between serial positions in the representation of the action plan maintained in WM. The present study showed that interference is greater when the current action overlaps with the first versus last feature within an action plan maintained in WM—indicating that interference is influenced by the serial order of action features maintained in memory.

Our results and interpretations are consistent with those of Sevald and Dell (1994), who showed that repeating two words as rapidly as possible was slower when the words shared an initial phoneme (cat, cab) and faster when they shared an ending phoneme (cat, bat) than when they did not share any phonemes. They suggested that shared initial segments reactivated the discrepant segments, leading to competition and inhibition of the discrepant word or its components. No such reactivation occurred when final segments were shared (see Mattson et al., 2012). O’Seaghdha and Marin (2000) found partial repetition costs when final phonemes were shared, although these costs were much smaller. They suggested that phonemes are first accessed in parallel and are then assigned sequentially to a word frame for output. This idea parallels the idea that action features are initially activated independently, in parallel, and then are bound into an action plan (Hommel, 2004), with the added assumption that feature binding is ordered (Mattson et al., 2012). Thus, there is evidence that components of words and manual actions are ordered prior to selection and retrieval, and a primacy gradient of component activation is one way this could be accomplished.

In addition, our results corroborate physiological evidence for a gradient of activation of features within an action plan prior to action execution. Averbeck et al. (2002) trained monkeys to draw geometric shapes (e.g., triangle, square) with a specific order to the individual segments. They recorded activity from single neurons in the prefrontal cortex of monkeys while they drew the shapes and found different patterns of neural activity corresponding to individual segments of the shapes as the monkeys drew each segment. Then monkeys drew the shapes after a delay between a cue to draw a specific shape and a cue to begin drawing. Averbeck et al. found parallel activation of the patterns corresponding to the segments of the cued shape during the delay period. There was greater activation for the first segment than for the second and greater activation for the second than for the third, suggesting a gradient of activation in WM that represents the serial order of the sequence.

It is possible that differences in activation between serial positions are due to a combination of lateral- and self-inhibition. In our study and those described above, an action plan had to be temporarily inhibited. Activation of self-inhibition decays over time, so later features in the sequence may be suppressed more than earlier features (Li, Lindenberger, Rünger, & Frensch, 2000). However, the trend toward facilitation in the present study and the facilitation observed by Sevald and Dell (1994) when the final components in the sequences overlapped argue against this explanation.

Importantly, the goal in the present study was to make a specific sequence of motor responses based on discrimination of a perceptual stimulus. If executing a particular action sequence is the primary goal, the action sequence appears to be represented prior to readying the system to execute the action (see also Averbeck et al., 2002). This finding is relevant to newly learned tasks, where executing actions in a particular sequence is imperative (e.g., “move the stick-shift right then up” when driving) and is an integral part of the primary goal (e.g., put the vehicle in first gear). However, if the end state of the action (e.g., typing a word) is the primary goal, the action feature sequence may not be represented prior to action selection (Cattaneo, Caruana, Jezzini, & Rizzolatti, 2009; Crump & Logan, 2010; Stürmer, Aschersleben, & Prinz, 2000). In this case, the goal may be represented separately from the action sequence required to meet that goal (as in hierarchical control models)—particularly if the sequence can be executed automatically. Here, the goal may serve as a cue to activate the required action sequence in parallel with action order determined during response selection. Another possibility is that novel sequences are preplanned in a nonmotoric, short-term motor buffer that is part of WM, while highly practiced movement sequences are represented by a motor chunk (a particular motor code) that hardly loads on nonmotoric WM (Abrahamse, Ruitenberg, De Kleine, & Verwey, 2013; Verwey, 1999, 2001; Yamaguchi & Logan, 2013). Because the motor chunk associated with the primary goal does not impose much of a load on nonmotoric WM, goal-based chunk selection may not show the interference found in the present study. Here, serial order is maintained in the motor chunk (code) that unfolds when the chunk is selected.

In summary, we examined interactions between the action plans we hold in memory and the actions we carry out and showed that the first feature of the action plan maintained in memory interfered more with current actions than did the last feature. Our results complement other research that has shown a bias in activating (priming) the first feature in the action sequence, as compared with other features in the sequence (Crump & Logan, 2010; Dell, Burger, & Svec, 1997; Inhoff, Rosenbaum, Gordon, & Campbell, 1984) and physiological evidence showing order-specific cellular activity (Averbeck et al., 2002; Tanji & Shima, 1994). Importantly, we showed that features of an action plan are not equally activated in parallel when maintained in WM prior to executing a response. The first feature in the sequence appears to be more active than the last. This finding contrasts with the assumptions made by most serial models of memory and action control. While our findings are consistent with a primacy gradient of action feature activation maintained in WM prior to response execution (Rhodes et al., 2004), it is possible that activation of the first feature in the action sequence is different from all others. We are currently investigating this issue. Importantly, the present study provides insight into the processes involved in action planning, as well as the cognitive structure of action plans, in cases where we may need to temporarily maintain action plans in memory while carrying out a current action, at least for newly learned tasks.

References

Abrahamse, E., Ruitenberg, M., De Kleine, E., & Verwey, W. B. (2013). Control of automated behaviour: Insights from the Discrete Sequence Production task. Frontiers in Human Neuroscience, 7, 82.

Anderson, J. R., & Matessa, M. P. (1997). A production system theory of serial memory. Psychological Review, 104, 728–748.

Averbeck, B. B., Chafee, M. V., Crowe, D. A., & Georgopoulos, A. P. (2002). Parallel processing of serial movements in prefrontal cortex. Proceedings of the National Academy of Sciences, 99(20), 13172–13177.

Burgess, N., & Hitch, G. J. (1999). Memory for serial order: A network model of the phonological loop and its timing. Psychological Review, 106, 551–581.

Cattaneo, L., Caruana, F., Jezzini, A., & Rizzolatti, G. (2009). Representation of goal and movements without overt motor behavior in the human motor cortex: A transcranial magnetic stimulation study. The Journal of Neuroscience, 29(36), 11134–11138.

Crump, M. J. C., & Logan, G. D. (2010). Hierarchical control and skilled typing: Evidence for word level control over the execution of individual keystrokes. Journal of Experimental Psychology: Learning, Memory and Cognition, 36, 1369–1380.

Dell, G. S., Burger, L. K., & Svec, W. R. (1997). Language production and serial order: A functional analysis and a model. Psychological Review, 104, 123–147.

Estes, W. K. (1972). An associative basis for coding and organization in memory. Coding Processes in Human Memory, 161–190.

Fournier, L. R., & Gallimore, J. M. (2013). What makes an event: Temporal integration of stimuli or actions? Attention, Perception & Psychophysics. doi:10.3758/s13414-013-0461-x

Fournier, L. R., Wiediger, M. D., McMeans, R., Mattson, P. S., Kirkwood, J., & Herzog, T. (2010). Holding a manual response sequence in memory can disrupt vocal responses that share semantic features with the manual response. Psychological Research, 74, 359–369.

Hartley, T., & Houghton, G. (1996). A linguistically constrained model of short-term memory for nonwords. Journal of Memory and Language, 35, 1–31.

Hommel, B. (2004). Event files: Feature binding in and across perception and action. Trends in Cognitive Sciences, 8, 494–500.

Houghton, G. (1990). The problem of serial order: A neural network model of sequence learning and recall. In Current research in natural language generation (pp. 287–319). Academic Press Professional, Inc.

Houghton, G., & Hartley, T. (1995). Parallel models of serial behavior: Lashley revisited. Psyche, 2, 1–25.

Inhoff, A. W., Rosenbaum, D. A., Gordon, A. M., & Campbell, J. A. (1984). Stimulus-response compatibility and motor programming of manual response sequences. Journal of Experimental Psychology: Human Perception and Performance, 10(5), 724–733.

Jeannerod, M. (1997). The cognitive neuroscience of action. Cambridge, MA: Blackwell.

Keele, S. W. (1968). Motor control in skilled motor performance. Psychological Bulletin, 70, 387–403.

Lashley, K. S. (1951). The problem of serial order in behavior. In L. A. Jeffress (Ed.), Cerebral mechanisms in behavior. New York: Wiley.

Li, K. Z., Lindenberger, U., Rünger, D., & Frensch, P. A. (2000). The role of inhibition in the regulation of sequential action. Psychological Science, 11(4), 343–347.

Logan, G. D. (2004). Working memory, task switching, and executive control in the task span procedure. Journal of Experimental Psychology: General, 133, 218–236.

Logan, G. D. (2007). What it costs to implement a plan: Plan-level and task-level contributions to switch costs. Memory and Cognition, 35(4), 591–602.

Logan, G. D., Miller, A. E., & Strayer, D. L. (2011). Electrophysiological evidence for parallel response selection in skilled typists. Psychological Science, 22, 54–56.

Mattson, P. S., Fournier, L. R., & Behmer, L. P., Jr. (2012). Frequency of the first feature in action sequences influences feature binding. Attention, Perception, & Psychophysics, 74(7), 1446–1460.

Mattson, P. S., & Fournier, L. R. (2008). An action sequence held in memory can interfere with response selection of a target stimulus, but does not interfere with response activation of noise stimuli. Memory and Cognition, 36, 1236–1247.

Miller, J. (1982). Discrete versus continuous stage models of human information processing: In search of partial output. Journal of Experimental Psychology: Human Perception and Performance, 8(2), 273–296.

Miller, G. A., Galanter, E., & Pribram, K. H. (1960). Plans and the structure of behavior. New York: Holt, Rinehart & Winston.

O'Seaghdha, P. G., & Marin, J. W. (2000). Phonological competition and cooperation in form-related priming: Sequential and nonsequential processes in word production. Journal of Experimental Psychology: Human Perception and Performance, 26(1), 57.

Page, M. P. A., & Norris, D. (1998). The primacy model: A new model of immediate serial recall. Psychological Review, 105(4), 761–781. doi:10.1037/0033-295X.105.4.761-781

Rhodes, B. J., Bullock, D., Verwey, W. B., Averbeck, B. B., & Page, M. P. A. (2004). Learning and production of movement sequences: Behavioral, neurophysiological, and modeling perspectives. Human Movement Science, 23, 699–746.

Rosenbaum, D. A., Inhoff, A. W., & Gordon, A. M. (1984). Choosing between movement sequences: A hierarchical editor model. Journal of Experimental Psychology: General, 113(3), 372–393.

Rumelhart, D. E., & Norman, D. A. (1982). Simulating a skilled typist: A study of skilled cognitive-motor performance. Cognitive Science, 6(1), 1–36.

Schneider, D. W., & Logan, G. D. (2006). Hierarchical control of cognitive processes: Switching tasks in sequences. Journal of Experimental Psychology: General, 135, 623–640.

Sevald, C. A., & Dell, G. S. (1994). The sequential cuing effect in speech production. Cognition, 53(2), 91–127.

Stoet, G., & Hommel, B. (1999). Action planning and the temporal binding of response codes. Journal of Experimental Psychology: Human Perception and Performance, 25, 1625–1640.

Stürmer, B., Aschersleben, G., & Prinz, W. (2000). Correspondence effects with manual gestures and postures: A study on imitation. Journal of Experimental Psychology: Human Perception and Performance, 26, 1746–1759.

Tanji, J., & Shima, K. (1994). Role for supplementary motor area cells in planning several movements ahead. Nature, 371, 413–416.

Verwey, W. B. (1999). Evidence for a multistage model of practice in a sequential movement task. Journal of Experimental Psychology: Human Perception and Performance, 25(6), 1693–1708.

Verwey, W. B. (2001). Concatenating familiar movement sequences: The versatile cognitive processor. Acta Psychologica, 106(1), 69–95.

Wiediger, M., & Fournier, L. R. (2008). An action sequence withheld in memory can delay execution of visually guided actions: The generalization of response compatibility interference. Journal of Experimental Psychology: Human Perception and Performance, 34(5), 1136–1149.

Yamaguchi, M., & Logan, G. D. (2013). Pushing typists back on the learning curve: Revealing chunking in skilled typewriting. Journal of Experimental Psychology: Human Perception and Performance, in press.

Cite this article

Fournier, L.R., Gallimore, J.M., Feiszli, K.R. et al. On the importance of being first: Serial order effects in the interaction between action plans and ongoing actions. Psychon Bull Rev 21, 163–169 (2014). https://doi.org/10.3758/s13423-013-0486-0

DOI: https://doi.org/10.3758/s13423-013-0486-0