Abstract

Implicit learning in the serial reaction time (SRT) task is sometimes disrupted by the presence of a secondary distractor task (e.g., Schumacher & Schwarb, 2009) and at other times is not (e.g., Cohen, Ivry, & Keele, 1990). In the present study, we used an instructional manipulation to investigate how participants’ conceptualizations of the task affect sequence learning under dual-task conditions. Two experimental groups differed only in terms of the instructions and presequence training. One group was instructed that they were completing two separate tasks, whereas the other group was instructed that they were performing a single, integrated task. The separate group showed sequence learning, while the integrated group did not. These findings suggest that the conceptualization of task boundaries affects the availability of the sequential information necessary for implicit learning.

Humans have a remarkable ability to perform new multistep tasks. Learning such tasks can be challenging not only because of their complexity, but also because they are acquired in settings where there may be distraction and interruption. For a system attempting to encode relationships among the steps of a task, irrelevant events may make it difficult to abstract the task-related information.

For example, when baking a cake, we must learn to follow certain steps in the same order each time (e.g., softening the butter, cracking the egg, and blending). Not only is it important that performance be stable in the face of interruption, but learning should be robust; that is, it should not be more difficult to bake the cake the next time in a different environment with different intervening events. Thus, discriminating between events that relate to a task and those that are irrelevant would facilitate learning and its transfer to novel task settings.

A widely used task for studying sequence learning is the serial reaction-time (SRT) task (Nissen & Bullemer, 1987), which requires participants to make a series of speeded choice-RT responses. During training, the stimuli are presented in a repeating sequence, but participants are not informed of the sequence. Nonetheless, the sequence is learned, as measured by increased RTs when the stimuli appear in a random order (Nissen & Bullemer, 1987).

How do secondary tasks affect sequence learning?

Secondary tasks are often used in SRT experiments to prevent awareness of the sequence. In many SRT experiments, robust sequence learning is observed in the presence of a secondary task (e.g., Cohen, Ivry, & Keele, 1990; Jiménez & Vázquez, 2005; Schvaneveldt & Gomez, 1998), but in other experiments, the secondary task prevents or greatly reduces sequence learning (e.g., Heuer & Schmidtke, 1996; Nissen & Bullemer, 1987; but see Frensch, Wenke, & Rünger, 1999).

Schmidtke and Heuer (1997) proposed an integration account of learning in dual-task scenarios: Sequence learning can be disrupted because the two tasks become functionally integrated. That is, the relationship between successive trials in the sequence task can become obscured if the random secondary task has been integrated with the sequence task to form a single, partly random task. Schmidtke and Heuer tested this hypothesis by using a sequential distractor task and manipulating the length of the distractor sequence so that it was either the same as (six elements) or different from (five elements) the length of the primary sequence. They found more learning in the primary task when the sequences were the same length than when they were different, and proposed that learning was reduced in the different-length condition because the resulting integrated visual–auditory sequence was dramatically longer than when the sequences were the same length. Additional experiments showed that other factors, such as the processing requirements associated with the secondary task, can affect task integration. For example, the researchers showed that when the secondary task did not require an overt response, no evidence of task integration was apparent.

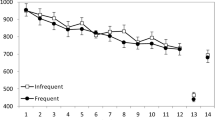

Schumacher and Schwarb (2009) proposed an alternative account of the disruption of learning under dual-task situations. They pointed out that adding a secondary task not only prevents explicit learning, but also increases the overall RT. In a meta-analysis, they found more dual-task interference when no sequence learning was reported; in studies in which sequence learning remained robust in the presence of a secondary task, RTs for the sequence task were similar under the single- and dual-task conditions. The authors concluded that when the two tasks occupied the response selection stage concurrently, sequence learning was diminished.

To test this account, Schumacher and Schwarb (2009) compared sequence learning under dual-task conditions that differed in terms of the stimulus onset asynchrony (SOA) between the stimuli for the sequence and secondary tasks. As predicted, sequence learning was eliminated only when the SOA was short. The authors concluded that central-processing resources involved in response selection are required for sequence learning, and when the secondary task requires these resources at the same time as the primary task, learning is disrupted.

Schumacher and Schwarb (2009) also examined whether participants’ strategies affected sequence learning. In a second experiment, they showed that instructing participants to prioritize the sequence task resulted in substantial sequence learning in the short-SOA condition; prioritizing the primary task encouraged participants to respond to the tasks serially. Consequently, the response selection stages assumed to be critical for sequence learning did not overlap between the two tasks.

The Schmidtke and Heuer (1997) and Schumacher and Schwarb (2009) accounts are not mutually exclusive. In Schmidtke and Heuer’s Experiment 1, the SOA between the presentations of the stimuli for the two tasks was only 50 ms. This short interval made it possible that participants were still processing the secondary task when the stimulus for the sequence task appeared (Schumacher & Schwarb, 2009). Moreover, Schmidtke and Heuer allowed for the secondary task’s effect to be moderated by task demands and participants’ strategies; they claimed that random secondary tasks only inhibit learning when the stimuli are task relevant. Thus, both accounts acknowledge that the effects of a secondary task on sequence learning may be influenced by top-down control.

Evidence from other domains has supported the claim that top-down control may determine what sequential information is learned by specifying whether certain features of the stimuli are part of the task set. Dreisbach and Haider (2009) showed that instructions can influence participants’ representations of the task such that their responses are less susceptible to interference from distractor information. This idea has been extended to sequence learning: Gaschler, Frensch, Cohen, and Wenke (2012) showed that manipulating the instructions affected which task features were included in the learned sequence representation. The authors concluded that the way that the task is described to participants directly influences the content of the sequence knowledge.

Present study

In the present study, we build on these findings to test whether participants’ conceptualization of task boundaries affects sequence learning. Whereas Gaschler et al. (2012) used instructions to manipulate the information bound into stimulus–response (S–R) pairs, we used the instructions to manipulate whether the S–R pairs belonged to one or to two tasks. Instructions that encouraged participants to conceptualize the streams of pairs as integrated should diminish sequence learning when one of the tasks is random, whereas instructions encouraging participants to conceptualize the tasks as separate should protect sequence learning from the random task. The manipulation was implemented across two groups that performed with the same S–R associations: Visual stimuli required right-hand responses, and auditory stimuli required left-hand responses. All of the S–R associations were given equal weights in both groups.

The only differences between the groups were the instructions that they received and the initial practice. The separate group was told to complete two distinct tasks, and in the practice blocks, the two S–R sets were given separately. The integrated group was told that they were going to be doing one, multipart task: playing the part of a hotel concierge. In the practice blocks, the two S–R sets appeared together, but they were presented at half of the experimental rate.

We hypothesized that the random task should produce greater disruptions in sequence learning for the integrated group, because the random auditory stimuli should be integrated with the sequence of visual stimuli, reducing the salience of the sequence. In contrast, the separate group should conceptualize the S–R sets as distinct, reducing interference from the tones and leading to greater sequence learning.

Method

Participants

A group of 72 undergraduates from the University of Iowa participated in partial fulfillment of a course requirement and reported normal or corrected-to-normal vision and hearing. The participants were randomly assigned to one of the two experimental groups. Eight participants were excluded from the analysis because their accuracy fell below 80 % correct across both tasks, leaving 32 participants in each group.

Stimuli and apparatus

The experiment was run with E-Prime 1.0 software (Psychology Software Tools Inc., Sharpsburg, PA) on a PC with a 17-in. monitor approximately 60 cm from the participant. The visual stimuli were colored squares, 3.1 deg wide, presented for 200 ms on a black background, 3 deg to the right of a central fixation cross, which measured 1 deg × 1 deg. Red, blue, green, and yellow squares required pushing the “h,” “j,” “k,” and “l” keys, respectively, with the fingers of a participant’s right hand. The auditory tones lasted 150 ms. The low tone (220 Hz) required pressing the “a” key with the left middle finger, and the high tone (3250 Hz) required pressing the “s” key with the left index finger.

Procedure

Instructions were presented verbally and in written form on the computer screen. The separate group was told to perform two distinct tasks, in which four different colored squares and two tones were each mapped to a unique response. The integrated group was told that the auditory and visual stimuli related to a common task, a simplified version of relaying messages at a hotel: The visual stimulus indicated the room that required service, and the auditory stimulus indicated the type of service. Although they used the same mappings as the separate group, they were told to imagine that their responses relayed this information to the hotel staff. Thus, each pair of responses could be conceptualized as conveying a single request (e.g., housekeeping for room #2). The instructions are included in the supplemental materials.

Critically, the events of each trial were identical for the two groups in the dual-task blocks (see Note 1). The fixation cross remained throughout the block of trials. Each trial lasted for 3 s total and began with the presentation of the auditory stimulus, which lasted for 150 ms. The visual stimulus appeared 600 ms after the auditory stimulus.

Participants were instructed to make their responses as quickly and accurately as possible and were not informed of the sequence of the visual stimuli. If the response was incorrect or not produced within 1,500 ms, an error screen appeared for 1,000 ms. For the separate group, the error screen said “Incorrect Visual and Auditory Response” if both responses were wrong, “Incorrect Visual Response” if the error was on the visual task, and “Incorrect Auditory Response” if the error was on the auditory task. For the integrated group, the error screen said “Incorrect Service and Room” if they made mistakes on both the visual and auditory tasks, “Wrong Room” for an error on the visual task, and “Wrong Service” for an error on the auditory task.

We presented 12 blocks, each with 61 trials. For the separate group, the first block was the visual task only, and the second block was the auditory task. For the integrated group, the first two blocks included both tasks, but the stimuli were presented at half the rate as in the remaining blocks. In this way, the two groups received the same amount of practice on both tasks. Beginning with the third block, the events were identical for both groups. The sequence in the visual task was introduced on the fourth block and continued through the ninth. The sequence was a six-item hybrid sequence (e.g., red, blue, red, green, blue, and yellow) in which two items were only ever followed by one color (unique) and two items were followed by two colors (ambiguous; see Cohen et al., 1990). In the 10th and 11th blocks, the visual stimuli were random (all colors were equally likely), and in the 12th block, the sequence was reintroduced.
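The block structure and the unique/ambiguous distinction described above can be checked with a minimal sketch. It assumes the example sequence quoted in the text repeats cyclically; the function names and the `dual_no_sequence` label for Block 3 are hypothetical, since the text states only that the sequence was introduced on Block 4.

```python
from collections import defaultdict

# Example six-item hybrid sequence quoted in the text (assumed to repeat cyclically).
SEQUENCE = ["red", "blue", "red", "green", "blue", "yellow"]


def successors(sequence):
    """Map each color to the set of colors that follow it in the repeating cycle."""
    following = defaultdict(set)
    for i, color in enumerate(sequence):
        following[color].add(sequence[(i + 1) % len(sequence)])
    return dict(following)


def classify(sequence):
    """Label each color 'unique' (one successor) or 'ambiguous' (two or more)."""
    return {
        color: "unique" if len(nxt) == 1 else "ambiguous"
        for color, nxt in successors(sequence).items()
    }


def block_schedule():
    """Visual-task condition for Blocks 1-12, following the Procedure."""
    schedule = {}
    for block in range(1, 13):
        if block <= 2:
            schedule[block] = "practice"
        elif block == 3:
            # Assumption: the text only says the sequence started on Block 4.
            schedule[block] = "dual_no_sequence"
        elif block <= 9 or block == 12:
            schedule[block] = "sequence"
        else:
            schedule[block] = "random"  # Blocks 10 and 11
    return schedule


if __name__ == "__main__":
    print(classify(SEQUENCE))
    print(block_schedule())
```

For the example sequence, red and blue each have two possible successors (ambiguous), whereas green and yellow each have one (unique), matching the description of a hybrid sequence.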

At the end of the session, a questionnaire was given in order to assess sequence knowledge. First, participants were asked whether they had used any special strategies. Second, they were asked whether the experimental blocks differed in any ways, and third, whether some of the stimuli were more frequent than others. The fourth and fifth questions directly addressed whether the stimuli sometimes appeared in a sequence; participants indicated whether or not they had noticed a pattern in either the color task or the tone task, and whether they thought that the color stimuli sometimes appeared in a sequence. Then they guessed the sequence for the color task, regardless of their previous answers. Finally, the participants rated on a scale of 1 to 7 how strongly they felt that they had been completing one versus two tasks.

Results

Questionnaire

Responses to the first four questions were assigned a single number to capture explicit knowledge of the sequence. Four points were given if the first question’s answer mentioned a sequence, three if the second question’s answer indicated that some blocks contained a sequence, two if the third question’s answer was “yes” or a more detailed answer, one if participants believed that they were in the sequence group, and zero points if participants made no mention of a sequence or a strategy involving sequences and believed that they were not in the sequence group. The mean ratings were 0.92 for the separate and 0.80 for the integrated group (see Note 2), t < 1, indicating that explicit knowledge of the sequence was low and not affected by the instructional manipulation (see Note 3).

With regard to the ratings indicating whether they were performing one or two tasks, the average ratings were 3.8 for the integrated group and 5.2 for the separate group, t(54) = 3.18, p < .01, indicating that the instructional manipulation affected participants’ conceptualizations of the tasks.

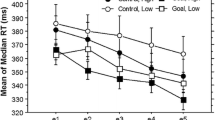

Visual task

Trials were excluded from the RT analyses if the response was incorrect, if the trial was the first in its block, or if the RT was less than 200 ms (7 %). RTs on Block 3 were slower for the separate (773 ms) than for the integrated (632 ms) group, t(31) = 3.80, p < .001, likely reflecting that this was the first time that the separate group had performed the two tasks together. However, this difference was short-lived; by Block 4, we found no difference between RTs for the separate group (654 ms) and the integrated group (663 ms), t(31) < 1 (see Fig. 1).
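The three exclusion criteria can be expressed as a single filter. This is a minimal sketch; the `Trial` record and its field names are hypothetical and do not reflect the authors' actual data format.

```python
from dataclasses import dataclass

MIN_RT_MS = 200  # RTs of less than 200 ms were excluded, per the text


@dataclass
class Trial:
    index_in_block: int  # 0 marks the first trial of a block (hypothetical field)
    correct: bool
    rt_ms: float


def keep_for_rt_analysis(trial: Trial) -> bool:
    """Return True only if the trial survives all three exclusion criteria."""
    return trial.correct and trial.index_in_block > 0 and trial.rt_ms >= MIN_RT_MS


def filter_trials(trials):
    """Keep the trials that enter the RT analysis."""
    return [t for t in trials if keep_for_rt_analysis(t)]


if __name__ == "__main__":
    demo = [
        Trial(0, True, 650.0),   # dropped: first trial of the block
        Trial(1, False, 700.0),  # dropped: incorrect response
        Trial(2, True, 150.0),   # dropped: RT below 200 ms
        Trial(3, True, 640.0),   # kept
    ]
    print(len(filter_trials(demo)))
```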

To obtain a measure of sequence learning, sequence Blocks 9 and 12 were compared with random Blocks 10 and 11 (see Cohen et al., 1990). An analysis of variance (ANOVA) with Block Type (random or sequence) as a within-subjects factor and Group (separate or integrated) as a between-subjects factor was conducted on the RTs. The main effect of block type was marginally significant, F(1, 62) = 3.59, MSE = 4,765.32, p = .06, and the main effect of group was not significant, F < 1. Critically, the Group × Block Type interaction was significant, F(1, 62) = 9.68, MSE = 1,328.52, p < .01. For the separate group, RTs were 32 ms faster for the sequence blocks (636 ms) than for the random blocks (668 ms), t(31) = 2.89, p < .01 (Fig. 1), indicating that this group learned the sequence embedded in the visual task. No learning was observed for the integrated group; they were 8 ms slower in the sequence blocks (640 ms) than in the random blocks (632 ms), t(31) = 1.22, p = .23. In sum, sequence learning was observed for participants who were instructed that the two tasks were separate but not for participants who were instructed that the stimuli belonged to a single task.
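The learning measure is the mean RT in the random blocks (10 and 11) minus the mean RT in the sequence blocks (9 and 12). The sketch below recomputes the reported differences from the cell means given in the text; the function and variable names are hypothetical, and no inferential statistics are reproduced here.

```python
def learning_score(random_rt_ms, sequence_rt_ms):
    """Positive values mean faster responding in sequence blocks than in random blocks."""
    return random_rt_ms - sequence_rt_ms


# Cell means (ms) as reported in the text.
REPORTED_MEANS = {
    "separate": {"random": 668, "sequence": 636},
    "integrated": {"random": 632, "sequence": 640},
}

scores = {
    group: learning_score(cells["random"], cells["sequence"])
    for group, cells in REPORTED_MEANS.items()
}

# Difference between the two groups' learning scores, which the
# Group x Block Type interaction evaluates.
interaction_contrast = scores["separate"] - scores["integrated"]

if __name__ == "__main__":
    print(scores)
    print(interaction_contrast)
```

With the reported means, the score is 32 ms for the separate group and -8 ms for the integrated group, a 40-ms difference between groups.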

Accuracy

Percentages correct were submitted to an ANOVA identical to that for the RTs, and no main effects or interactions were apparent (all Fs < 1). The mean percentage correct for the visual task was 92 % for both groups in both the random blocks (10 and 11) and the sequence blocks (9 and 12).

Auditory task

Incorrect responses (5 %), the first trial of the block, and trials with RTs less than 200 ms (4 %) were all removed. The RTs from Blocks 3–12 were compared from both groups, and no difference emerged between the separate (746 ms) and integrated (696 ms) groups, t(31) = 1.09, p = .29. An ANOVA identical to that for the visual task was conducted on the RTs for Blocks 9–12. We found no main effects—group, F < 1; block type, F(1, 62) = 1.04, MSE = 2,728.76, p = .31—and no interaction, F < 1.

Accuracy

No significant main effects were apparent: group, F(1, 62) = 1.23, MSE = .003, p = .27; block type, F < 1. The interaction was also not significant, F(1, 62) = 1.88, MSE = .001, p = .18. The percentage correct for the auditory task was 96 % for both groups in both the random blocks (10 and 11) and the sequence blocks (9 and 12).

Interresponse intervals

To assess whether the two groups scheduled cognitive operations differently, we compared the interresponse intervals (IRIs) across Blocks 3–12. No significant difference was apparent between the mean IRIs for the separate group (688 ms) and the integrated group (658 ms), t(31) < 1. To obtain a measure of response grouping, the standard deviation of the IRIs was calculated for each participant, but we found no significant difference between the standard deviations for the separate group (295) and the integrated group (268), t(31) = 1.54, p = .13.
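The two IRI measures can be sketched as follows. The sketch assumes that an IRI is the time between the two responses within a trial and that the sample standard deviation was used; neither detail is stated in the text, and the function names are hypothetical.

```python
from statistics import mean, stdev


def interresponse_intervals(response_times_ms):
    """Compute one IRI per trial from (first_response, second_response) timestamps.

    Assumption: an IRI is the absolute time between the two responses in a trial.
    """
    return [abs(second - first) for first, second in response_times_ms]


def iri_summary(iris_ms):
    """Return (mean IRI, SD of IRIs) for one participant.

    Assumption: sample SD (n - 1 denominator); the text does not specify.
    """
    return mean(iris_ms), stdev(iris_ms)


if __name__ == "__main__":
    # Made-up timestamps (ms from trial onset) for illustration only.
    trials = [(700, 1300), (650, 1350), (720, 1520)]
    print(iri_summary(interresponse_intervals(trials)))
```

A participant who groups the two responses tightly would show a smaller SD than one whose spacing varies from trial to trial, which is why the SD serves as an index of response grouping.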

Discussion

We asked whether conceptualizing two streams of stimuli and responses as belonging to the same or to different tasks would affect whether the sequence information in one of the streams would be learned. The results clearly indicated that the conceptual organization of the task affects learning: Sequence learning was observed only when the streams were conceptualized as belonging to separate tasks. Note that both groups made the same responses to the same stimuli, but only the separate group showed sequence learning. Thus, the data indicate that temporal factors are not solely responsible for task integration.

We propose that the way that participants view the relationship between two sets of stimuli and responses plays a key role in learning: When the two streams of stimuli are conceptualized as belonging to the same task, they are integrated. This is detrimental to sequence-learning processes when one of the streams is random. However, when the tasks are conceptualized as separate, the random auditory stream is segregated from the visual stream, and the regularities among the visual stimuli can be learned. It is unclear whether the instructional manipulation primarily affects encoding of the sequence or performance (see Frensch et al., 1999), as it is possible that the integrated task instructions could cause cross-talk that would interfere with the expression of sequential learning.

Abrahamse, Jiménez, Verwey, and Clegg (2010) suggested that sequence knowledge can include successive features of the stimuli, the responses, or the S–R pairs, depending on which aspects of the task are most relevant. The authors pointed out that seemingly trivial inconsistencies across studies, such as which fingers are used, can change the goal of the task, and consequently, the content of the learned sequence representation. Our findings are consistent with this account, but we have also shown that in addition to specifying goal-related information, task representations can contain boundaries that affect the readiness with which particular associations can be made.

This framework is similar to the theory of event coding (Hommel, Müsseler, Aschersleben, & Prinz, 2001), in which action drives the formation of event files that bind together distinct features of the stimulus and response that are associated with a given trial. Note that the retrieval of event files is obligatory and affects performance on trials that share features with previous trials, presumably in a fashion analogous to the way that sequence representations benefit the performance of complex actions. If the event file proposal were to be extended to include multiple events (i.e., a “task file”), the sequence of actions might be more easily encoded if random events were bound in a representation that was separate from the sequential events.

As they perform a novel task, individuals build a representation that specifies the range of S–R pairs that are part of the same task. Relative timing, for example, may serve as a cue for whether S–R pairs belong to the same task: Events that are closer together in time are more likely to be considered as belonging to the same task. This interpretation is not mutually exclusive with the claim of Schumacher and Schwarb (2009) that the timing of events is critical for learning under dual-task conditions. However, we propose that timing may not affect learning by inducing overlap in central operations; rather, timing may alter the task representation, which in turn determines the extent to which different streams of stimuli are integrated.

Given that many tasks involve multiple modalities and overlapping operations, behavior should not be constrained by physical properties of the stimuli or their relative timings. We often integrate multiple modalities into a single task (e.g., when we cook), but at other times it is important to keep auditory and visual information separate (e.g., when we write an email with the radio on). This flexibility suggests that task structure should not rely on the physical properties of the stimuli and responses. A learning mechanism that bootstraps on multimodal task representations would serve behavior better in complex, real-world environments. This proposal is consistent with findings from an executive control study that showed that sequential modulations of congruency effects are not dependent on stimulus or response modalities, but rather depend on participants’ representations of the task boundaries (Hazeltine, Lightman, Schwarb, & Schumacher, 2011). Such findings suggest that task boundaries are ultimately determined by participants’ subjective representations of the task. This is particularly striking, given that both sequential modulations and implicit sequence learning typically occur without participants’ awareness.

Our findings indicate that task representations provide a framework to facilitate associations between functionally related events. Task representations may be influenced by the modalities or timing of events but are ultimately determined by the individual’s conceptualization of his or her actions. Such flexibility is crucial for the complex behaviors that dominate our lives.

Notes

1. When the secondary task is tone counting and does not require a response, the next stimulus in the sequence is often presented 200 ms after the previous response, and the secondary task is presented in between the two. However, as was shown by the short interresponse interval conditions in Schmidtke and Heuer (1997) and Schumacher and Schwarb (2009), using a secondary task that requires a response can lead to dual-task interference if sufficient time is not given between stimulus presentations. As in several other SRT experiments (e.g., Heuer & Schmidtke, 1996), we used a long response–stimulus interval and a long intertrial interval in order to allow participants enough time to process and respond to the stimuli for both tasks before presenting the next stimulus (see Schumacher & Schwarb, 2009, for a discussion).

2. Thirty of the participants completed questionnaires in the integrated group, and 26 completed questionnaires in the separate group.

3. To ensure that a few participants with explicit awareness of the sequence were not driving the overall pattern of results, the RT data from the sequence task were reanalyzed without the data from participants who correctly reported four or more items (five participants from the separate group and four from the integrated group). The results were similar; a main effect of block type emerged, F(1, 52) = 4.12, MSE = 3,225.96, p < .05, but no main effect of group, F < 1, and a marginal interaction, F(1, 52) = 3.33, MSE = 2,605.96, p = .074. Follow-up t tests revealed the same difference between the random (674 ms) and sequence (654 ms) blocks for the separate group, t(29) = 2.78, p < .01, and no evidence of different RTs between the random (651 ms) and sequence (650 ms) blocks for the integrated group, t < 1.

References

Abrahamse, E. L., Jiménez, L., Verwey, W. B., & Clegg, B. A. (2010). Representing serial action and perception. Psychonomic Bulletin & Review, 17, 603–623. doi:10.3758/PBR.17.5.603

Cohen, A., Ivry, R. I., & Keele, S. W. (1990). Attention and structure in sequence learning. Journal of Experimental Psychology: Learning, Memory, and Cognition, 16, 17–30. doi:10.1037/0278-7393.16.1.17

Dreisbach, G., & Haider, H. (2009). How task representations guide attention: Further evidence for the shielding function of task sets. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35, 477–486.

Frensch, P. A., Wenke, D., & Rünger, D. (1999). A secondary tone-counting task suppresses expression of knowledge in the serial reaction task. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25, 260–274.

Gaschler, R., Frensch, P. A., Cohen, A., & Wenke, D. (2012). Implicit sequence learning based on instructed task set. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38, 1389–1407.

Hazeltine, E., Lightman, E., Schwarb, H., & Schumacher, E. H. (2011). The boundaries of sequential modulations: Evidence for set-level control. Journal of Experimental Psychology: Human Perception and Performance, 37, 1898–1914.

Heuer, H., & Schmidtke, V. (1996). Secondary-task effects on sequence learning. Psychological Research, 59, 119–133.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The Theory of Event Coding (TEC): A framework for perception and action planning. The Behavioral and Brain Sciences, 24, 849–878. doi:10.1017/S0140525X01000103

Jiménez, L., & Vázquez, G. A. (2005). Sequence learning under dual-task conditions: Alternatives to a resource-based account. Psychological Research, 69, 352–368.

Nissen, M. J., & Bullemer, P. (1987). Attentional requirements of learning: Evidence from performance measures. Cognitive Psychology, 19, 1–32. doi:10.1016/0010-0285(87)90002-8

Schmidtke, V., & Heuer, H. (1997). Task integration as a factor in secondary-task effects on sequence learning. Psychological Research, 60, 53–71.

Schumacher, E. H., & Schwarb, H. (2009). Parallel response selection disrupts sequence learning under dual-task conditions. Journal of Experimental Psychology: General, 138, 270–290.

Schvaneveldt, R. R., & Gomez, R. L. (1998). Attention and probabilistic sequence learning. Psychological Research, 61, 175–190.

Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(PDF 99.0 MB)

Halvorson, K.M., Wagschal, T.T. & Hazeltine, E. Conceptualization of task boundaries preserves implicit sequence learning under dual-task conditions. Psychon Bull Rev 20, 1005–1010 (2013). https://doi.org/10.3758/s13423-013-0409-0
