Abstract

Conflict adaptation is one of the most popular ideas in cognitive psychology. It purports to explain a wide range of data, including both brain and behavioral data from the proportion congruent and Gratton paradigms. However, in recent years, many concerns about the viability of this account have been raised. It has been argued that contingency learning, not conflict adaptation, produces the proportion congruent effect. Similarly, the Gratton paradigm has been shown to contain several confounds, most notably feature repetition biases. Newer work on temporal learning further calls into question the interpretability of the behavioral results of conflict adaptation studies. Brain data linking supposed conflict adaptation to the anterior cingulate cortex have also come into question, since this area seems to be responsive solely to time-on-task, rather than conflict. This review points to the possibility that conflict adaptation may simply be an illusion. However, the extant data remain ambiguous, and many open questions remain to be addressed in future research.

The role of cognitive control in basic mental functions is one of the primary questions of interest for cognitive psychologists. One of the most popular ideas in the literature is conflict adaptation, the idea that we deal with conflict between stimuli in our environment by shifting attention away from the source of conflict and toward the stimulus we wish to process. The Stroop paradigm (Stroop, 1935) offers the most common way of studying conflict adaptation. In this task, participants identify the print color of a color word. Response times and error rates are increased to incongruent stimuli (e.g., the word BLUE printed in red; BLUEred), relative to congruent stimuli (e.g., BLUEblue). Other commonly used paradigms include the Eriksen flanker task (Eriksen & Eriksen, 1974), in which congruent or incongruent distracting letters (or words) are presented on either side of a centrally located target letter (or word), and the Simon task (Simon & Rudell, 1967), in which a distracting stimulus location is either congruent or incongruent with the response that needs to be made to the target (e.g., a left keypress for a stimulus on the right side of the screen). In paradigms such as these, evidence for conflict adaptation comes from the observation that the size of the congruency effect can be altered in response to changes in conflict. In particular, this article discusses the proportion congruent (PC) and Gratton paradigms.

Conflict adaptation theory has a lot of explanatory power. However, the goal of this article is to explore whether or not conflict adaptation must be assumed in order to explain such phenomena as the PC and Gratton effects. Some of the mounting evidence against the highly popular conflict adaptation account will be discussed, and it will be argued that simpler, nonconflict learning and memory processes can just as easily explain the extant results. The article will begin by discussing PC effects. This section will discuss a contingency learning account of item-specific learning effects. This will be followed by a discussion of the role of temporal learning biases in list-level learning effects. Finally, a compound-cue contingency learning and temporal learning account of context-level learning will be presented. The following section will turn to the Gratton paradigm, where the role of stimulus binding and contingency learning biases will be highlighted. The next section discusses the brain data that are often used to argue for conflict adaptation, and the problems in interpreting such data will be highlighted. Overall, this article will argue that, although highly intuitive and seemingly able to explain a wide range of data, conflict adaptation may not actually exist. Learning and memory biases might, instead, provide a sufficient account. However, there are a lot of open questions that still need answers before it can be conclusively determined whether or not conflict adaptation does exist. In this vein, the final section of the article will discuss possible future directions.

Proportion congruent

In the context of a Stroop or similar paradigm, the PC effect is the observation that congruency effects are larger with a higher proportion of congruent to incongruent trials (Lowe & Mitterer, 1982). For instance, if 75 % of the stimuli are congruent and only 25 % are incongruent, the congruency effect (i.e., incongruent – congruent) will be quite large. In contrast, if only 25 % of the stimuli are congruent and 75 % are incongruent, the congruency effect will be quite small. The PC effect is normally interpreted as evidence for conflict adaptation (e.g., Botvinick, Braver, Barch, Carter, & Cohen, 2001; Cheesman & Merikle, 1986; Lindsay & Jacoby, 1994). Specifically, the claim is that when the proportion of congruent trials is low, participants detect that the word generally interferes with processing of the color, so they decrease attention to the word. Because they attend less to the word, it has less impact on performance. Thus, a smaller difference between incongruent and congruent trials is observed. In contrast, when most of the trials are congruent, participants detect that conflict is infrequent and, thus, allow attention to the word. Thus, the conflict effect is larger. In short, the conflict adaptation account assumes that participants are able to learn about the proportion of conflict trials and are then able to shift their attentional strategy in response to this.
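The arithmetic behind the PC effect can be made concrete with a minimal sketch. The response times below are hypothetical values chosen for illustration only (they are not data from any study), and the function names are likewise assumptions introduced here.

```python
from statistics import mean

# Hypothetical response times in ms; illustrative values only, not real data.
rts = {
    ("high_pc", "congruent"): [600, 610, 590],
    ("high_pc", "incongruent"): [720, 730, 710],
    ("low_pc", "congruent"): [630, 640, 620],
    ("low_pc", "incongruent"): [690, 700, 680],
}


def congruency_effect(rts, condition):
    """Mean incongruent RT minus mean congruent RT within one PC condition."""
    return mean(rts[(condition, "incongruent")]) - mean(rts[(condition, "congruent")])


def pc_effect(rts):
    """Congruency effect in the high-PC condition minus that in the low-PC condition."""
    return congruency_effect(rts, "high_pc") - congruency_effect(rts, "low_pc")


print(congruency_effect(rts, "high_pc"))  # 120
print(congruency_effect(rts, "low_pc"))   # 60
print(pc_effect(rts))                     # 60
```

A positive value of `pc_effect` corresponds to the pattern described above: a larger congruency effect when most trials are congruent. The number by itself says nothing about which mechanism produced it.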

In the computational model of Botvinick and colleagues (2001), this learning is achieved by a conflict-monitoring mechanism. This mechanism measures the level of conflict that occurs on each trial, and then the cognitive system uses this conflict information to adjust attention (i.e., conflict adaptation). Over the course of an experiment, information about conflict can accumulate. Thus, in a low-PC task, a very large amount of conflict has been encountered on previous trials. Conflict adaptation is therefore increased to reduce the impact of the distracting word on color identification. In contrast, in a high-PC task, conflict occurs much less frequently, and thus conflict adaptation is decreased. Via these mechanisms, the conflict-monitoring model was able to simulate the PC effect. This model is also able to account for other results (e.g., the Gratton effect), as will be discussed in later sections of this review.

Item-specific proportion congruent

The conflict adaptation account of PC effects has subjective appeal, because it seems straightforward and plausible. However, some recent work has provided several difficulties for the conflict adaptation account. One particularly problematic finding is the observation that PC effects are strongly determined by item-specific pairings. For instance, Jacoby, Lindsay, and Hessels (2003) manipulated proportion congruency at the item level. Specifically, some words were presented most often in their congruent color (high-PC items; e.g., BLUEblue), and other words were presented most often in an incongruent color (low-PC items; e.g., REDorange). High- and low-PC items were intermixed, thus making it impossible for participants to know whether a given trial would be a high- or low-PC item in advance. In other words, learning that the word tends to conflict or tends not to conflict in the task as a whole (i.e., list-level conflict adaptation, which I will return to later) was impossible. Despite this fact, participants produced an item-specific PC (ISPC) effect: Congruency effects were larger for high-PC items than for low-PC items.

The more traditional idea that conflict adaptation occurs as a reaction to the general frequency of conflict in the task as a whole obviously cannot explain item-specific differences in conflict effects. This includes the Botvinick and colleagues (2001) conflict-monitoring model, since this model also relies on conflict information recorded across the task as a whole. Some have proposed, however, that conflict monitoring and adaptation might occur in a more flexible and item-specific manner. For instance, Blais, Robidoux, Risko, and Besner (2007) have presented a variant of the conflict-monitoring computational model in which attention is flexibly modulated for each word. In other words, the model keeps track of the level of conflict associated with each distracting word, rather than the level of conflict associated with the task as a whole. As a result, presentation of a high-PC word (e.g., BLUE) will lead to weaker conflict adaptation than will presentation of a low-PC word (e.g., RED). Such a model was therefore able to simulate ISPC effects.

There is a logical problem with this approach, however, because it is not clear how the cognitive system can know whether a given word is high or low PC until it has already been identified. In other words, the system cannot know whether it should (high PC) or should not (low PC) attend to the word until the word has already been identified. The computational model knows this in advance, but it is impossible for a real cognitive system to know this. Given this problem, another approach was subsequently presented by Verguts and Notebaert (2008) in which conflict modulates learning. In this model, conflict is a signal to increase Hebbian learning. For low-PC items, frequent conflict leads to a stronger connection between the color node and the color task demand unit, which then increases in top-down influence to reduce conflict. In a sense, this model, too, uses information about which stimulus is presented in order to determine whether or not to attend to it (i.e., the degree to which the task demand unit favors identification of the target depends on the identity of the target). How plausible this is remains unclear, but such models are capable of producing ISPC effects by assuming that conflict adaptation is highly flexible and rapid.

Contingency learning

While flexible conflict adaptation models can provide an explanation for ISPC effects, such effects are easily interpretable in terms of a very different process than conflict adaptation: contingency learning. Schmidt and colleagues (Schmidt, 2013; Schmidt & Besner, 2008; Schmidt, Crump, Cheesman, & Besner, 2007; see also Mordkoff, 1996) have argued that the reason for larger Stroop effects for high- relative to low-PC items has to do with the predictability of the response based on the identity of the word. For high-PC items, the word is predictive of the congruent response. For instance, if BLUE is presented most often in blue, then BLUE is predictive of a blue response. This will lead participants to respond faster than normal to congruent trials, thus increasing the difference between incongruent and congruent trials. In sharp contrast, for low PC items, the word is predictive of an incongruent response (e.g., RED is predictive of an orange response). This will lead participants to respond faster than normal to incongruent trials, thus decreasing the difference between incongruent and congruent trials. These contingency biases therefore confound PC experiments and are capable of producing a PC effect on their own. Conflict adaptation does not have to be assumed.
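The contingency argument can be illustrated with a minimal sketch that computes how well each word predicts a response. The trial counts are hypothetical (a 75/25 split chosen for illustration), and the function names are assumptions introduced here, not part of any published model.

```python
from collections import Counter

# Hypothetical item-specific design: counts of (word, print color) pairings.
# The print color is the correct response. Proportions are illustrative only.
trial_counts = Counter({
    ("BLUE", "blue"): 75,    # high-PC word: mostly congruent
    ("BLUE", "orange"): 25,
    ("RED", "orange"): 75,   # low-PC word: mostly one incongruent color
    ("RED", "red"): 25,
})


def response_contingency(counts, word):
    """Return P(color response | word) for every color paired with the word."""
    total = sum(n for (w, _), n in counts.items() if w == word)
    return {color: n / total for (w, color), n in counts.items() if w == word}


def predicted_response(counts, word):
    """Return the response that the word predicts best (highest contingency)."""
    probabilities = response_contingency(counts, word)
    return max(probabilities, key=probabilities.get)


print(predicted_response(trial_counts, "BLUE"))  # blue (the congruent response)
print(predicted_response(trial_counts, "RED"))   # orange (an incongruent response)
```

In this sketch the high-PC word predicts the congruent response and the low-PC word predicts an incongruent response, which is the confound described above: proportion congruency and word-response contingency vary together.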

Schmidt and Besner (2008) further decomposed the ISPC effect and showed that, indeed, the effect is specifically driven by a speeding of those trials on which the word accurately predicts the correct response. They further argued that the basic congruency effect and the contingency learning biases are the result of two entirely different sets of processes. Because of this independence, they suggested that congruency and contingency effects would not interact with each other. Support for this notion came with the observation that contingency learning and congruency effects are additive. While this additive pattern has been observed elsewhere (e.g., Atalay & Misirlisoy, 2012), deviations from additivity are also sometimes observed (e.g., Blais & Bunge, 2010; Bugg, Jacoby, & Chanani, 2011). For instance, in a word–picture Stroop task, Bugg and colleagues observed that incongruent trials were more influenced by item PC (and thus contingency) than were congruent trials. In other words, the difference between high-PC and low-PC incongruent trials was larger than the difference between high-PC and low-PC congruent trials.

Although such results were taken as evidence against the contingency account of PC effects, the additivity assumptions of Schmidt and Besner (2008) can be regarded as “just so” properties of the early version of the contingency account. In other words, Schmidt and Besner argued that contingency and congruency should be additive, but this is not a necessary conclusion from the idea that PC effects are driven by contingency learning. Even if congruency and contingency effects are driven by independent processes, an interaction can still result from the cascading of one process into another. Indeed, subsequent modeling work by Schmidt (2013) has shown that pure additivity is highly unlikely. The conceptual reason for this is that there is an overall difference in reaction time between incongruent and congruent trials. As a result, contingency biases have more time to affect the results on incongruent trials than on congruent trials. Simulations with the contingency learning model of Schmidt produced an interaction between contingency and congruency of exactly this form (viz., a greater effect of PC on incongruent than on congruent trials). Although additive patterns are still extremely difficult to interpret in terms of conflict adaptation, deviations from additivity do not necessarily support conflict adaptation. In other words, an overadditive interaction (e.g., the one reported by Bugg et al., 2011a) does not logically falsify the contingency learning account.

Because nonadditive relationships between contingency and congruency are sometimes observed, Schmidt (2013) developed a new way of distinguishing between conflict adaptation and contingency learning. Specifically, the contingencies in the task were manipulated such that conflict adaptation and contingency learning could be dissociated. This was accomplished by generating three types of incongruent trials: (1) low PC and high contingency (e.g., GREENred, where GREEN is presented mostly in red), (2) low PC and low contingency (e.g., YELLOWred, where YELLOW is presented mostly in green), and (3) high PC and low contingency (e.g., BLUEred, where BLUE is presented mostly in blue). The first two types of incongruent trials did not vary in proportion congruency (both low PC) but did vary in contingency (high vs. low). Comparison of the two revealed significant contingency learning effects. In contrast, the last two types of incongruent trials did not vary in contingency (both low contingency) but did vary in item PC (high vs. low). Comparison of the two revealed no evidence of conflict adaptation. Furthermore, comparisons between items that did not vary in contingency or the item PC associated with the distracting word but did vary in the item PC associated with the target color (high vs. low) also failed to produce evidence for conflict adaptation. As a whole, such results demonstrated sizable effects of contingency learning, with no hint of a contribution of conflict adaptation effects. Although a failure to observe conflict adaptation does not logically entail that (item-specific) conflict adaptation never occurs, it does raise that suspicion.

In contrast to Schmidt (2013), Bugg and colleagues (2011) claimed to show an effect of proportion congruency where contingency learning is impossible. In a picture–word Stroop task, they manipulated their task to maximize attention to the target picture rather than the distracting word, with the rationale that this would minimize the effectiveness of contingency information that normally dominates ISPC effects, thus allowing conflict adaptation to have a stronger effect. In their Experiment 2, high-PC incongruent items were compared with low-PC incongruent items. High-PC incongruent items were responded to more slowly than low-PC incongruent items, consistent with conflict adaptation predictions. Because the experiment put a processing bias on the target picture rather than the distracting word, the authors argued that high- and low-PC incongruent items vary in PC associated with the target but do not vary in contingency associated with the target (i.e., targets are 100 % predictive of the response, irrespective of condition). However, this reasoning is flawed. The contingency account argues that participants use distracting information to anticipate the response to the target. In these experiments, the distractors in the low-PC incongruent condition had a higher contingency (18.75 % or 37.5 %, depending on the word) than in the high-PC incongruent condition (6.25 % or 12.5 %). Thus, the contingency account should expect faster responses to the low-PC relative to high-PC incongruent items, as observed. There are further difficulties in interpreting any of the results of this experiment, because the frequency of the different distractors was not equated: High-PC words were presented overall twice as frequently as low-PC words. It is not entirely clear how this atypical stimulus frequency bias may have affected any of the key observations in the experiment (e.g., an overall item- or category-level expectancy or, conversely, item- or category-level habituation).
It is ambiguous whether the patterns of results observed in this experiment and the subsequent one (where bias was shifted to the distracting word) were due to the changes in the contingency and stimulus frequency matrix or to conflict adaptation. Overall, the combined evidence from various reports is mixed, and further research is needed.

List-level proportion congruent

So far, this review has focused primarily on item-specific PC effects. An independent question is whether learning about the proportion congruency of the experiment as a whole is possible. This is termed list-level conflict adaptation. The published work so far clearly indicates that the bulk of the PC effect is explainable by item-specific learning. In most cases, evidence for list-level PC effects independent of ISPC effects was not found (e.g., Blais & Bunge, 2010). There are a few findings, however, that suggest that a very small contribution of list-level PC might exist (Bugg & Chanani, 2011; Bugg, McDaniel, Scullin, & Braver, 2011; Hutchison, 2011). For instance, Hutchison compared items of equal item PC that were mixed together with other context items that were either mostly congruent or mostly incongruent. In other words, PC was manipulated at the level of the list, but not at the level of the items that were being analyzed. This procedure produced a list-level PC effect. That is, the Stroop effect was larger in the high-PC context relative to the low-PC context. A similar result was found by Bugg and Chanani, using a picture–word Stroop task (i.e., a picture target and a distracting word).

Given such results, one might argue that list-level conflict adaptation is real. Indeed, adaptation to conflict across the entire task seems more plausible than adaptation to individual items. Furthermore, such a list-level PC effect is impossible to explain in the context of the contingency account of PC effects. The critical items being analyzed do not vary in contingency between the high- and low-PC contexts. The remaining effect thus must be explainable by a process other than contingency learning. This other process could be conflict adaptation, although there are still other possibilities.

Temporal learning

Confounds other than contingency learning biases might be present in list-level PC experiments, such as those of Hutchison (2011) and Bugg and Chanani (2011). Work on temporal learning can fill this void. The role of time in learning has a long history in both philosophy (e.g., Hume, 1739/1969) and experimental psychology (e.g., Michotte, 1946/1963) and is arguably just as important as contingencies in learning the relation between events. According to the temporal coding hypothesis (Matzel, Held, & Miller, 1988), episodic memories of trials include more than just information about the presented stimuli and the response that was made; they also contain information about time. As a result of this, participants not only learn about what to respond, but also learn when to respond. A classic example of temporal learning comes from the literature on mixing costs (for a review, see Los, 1996). For instance, Grice and Hunter (1964) presented an experiment in which participants pressed a key when they detected a tone that was either high or low intensity. Some participants were presented only with one tone intensity (i.e., high or low). In such pure lists, responses were predictably slower to low- relative to high-intensity tones. Other participants received a mix of high- and low-intensity tones. A mixing cost was observed for these mixed lists, in that both high- and low-intensity tones were responded to more slowly than in pure lists. Importantly, this mixing cost was larger for low-intensity tones. Grice (1968) argued that participants accept less evidence (i.e., lower threshold) if presented only with low-intensity tones and will require a bit more (i.e., higher threshold) if presented only with high-intensity tones. When the two types are mixed, there is more uncertainty, and the (experiment-wide) threshold for responding is set much higher. This produces a small cost for high-intensity tones and an especially large cost for low-intensity tones. 
Although there are other mechanistic accounts of mixing costs (e.g., Kohfeld, 1968; Ollman & Billington, 1972; Strayer & Kramer, 1994a, 1994b; Van Duren & Sanders, 1988), the general idea in the literature is that participants are able to alter when (e.g., after how much evidence) they anticipate being able to respond.
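The threshold logic attributed to Grice (1968) can be sketched with a deliberately simple toy model. Everything below is an assumption made for illustration: evidence is treated as accumulating linearly and without noise, and the rates and thresholds are arbitrary numbers, not estimates from any experiment.

```python
def response_time(threshold, rate):
    """Time for evidence growing linearly at `rate` to reach `threshold`."""
    return threshold / rate


# Hypothetical parameters in arbitrary units (assumptions, not fitted values).
RATE = {"high": 2.0, "low": 1.0}                # high-intensity tones yield evidence faster
PURE_THRESHOLD = {"high": 120.0, "low": 100.0}  # lower threshold in the pure low-intensity list
MIXED_THRESHOLD = 140.0                         # single, higher threshold for the mixed list


def mixing_cost(intensity):
    """Mixed-list RT minus pure-list RT for one tone intensity."""
    pure = response_time(PURE_THRESHOLD[intensity], RATE[intensity])
    mixed = response_time(MIXED_THRESHOLD, RATE[intensity])
    return mixed - pure


print(mixing_cost("high"))  # 10.0
print(mixing_cost("low"))   # 40.0
```

Under these assumptions, both intensities show a mixing cost, and the cost is larger for low-intensity tones, because a raised threshold takes longer to reach when evidence accumulates slowly.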

Like contingency learning, temporal learning has an adaptive value. For instance, the adaptation to the statistics of the environment (ASE) model (Mozer, Kinoshita, & Davis, 2004; see also Kinoshita, Forster, & Mozer, 2008; Kinoshita, Mozer, & Forster, 2011) explains temporal learning in terms of the need to balance speed and accuracy (see also the decision model of Mozer, Colagrosso, & Huber, 2002). The threshold will therefore be lower when most of the trials can be accurately identified quickly (e.g., high PC), thus allowing for faster responses without sacrificing accuracy. However, the threshold will be high when correct identification tends to take longer (e.g., low PC), thus coming at a cost to speed in order not to inflate errors. Schmidt (2012b) proposed a similar account based on episodic learning, where it is assumed that information about response time is encoded in trial episodes. Upon retrieval, this temporal information serves to assist in anticipating when a response can be made. By correctly anticipating when to respond, participants will be especially fast at responding at the expected time.

Temporal learning processes such as these can explain list-level PC effects. In the high-PC context, most of the trials are congruent. Congruent trials are responded to quickly, so the high frequency of quick responses will lead participants into a rapid pace of responding to congruent trials (e.g., because of an earlier temporal expectancy or a lower threshold), with a penalty to the infrequent incongruent trials. Thus, even for contingency-unbiased items, the Stroop effect will be large. In contrast, in the low-PC context, most of the items are incongruent and, thus, participants are slow to respond. Their pace of responding is therefore a bit lax, and there is less of a benefit for congruent trials. The result, even for contingency-unbiased items, is a reduced Stroop effect.

Indeed, work by Kinoshita and colleagues (2011; see also Kinoshita et al., 2008) demonstrated that the PC effect in masked priming is strongly determined by previous response times. The argument, based on the ASE account, was that previous response times will have been much shorter, on average, in the high-PC condition, relative to the low-PC condition. When previous response times are shorter, temporal expectancy will also be faster, and the threshold for responding will be lower. Previous trial response times can therefore serve as a proxy for temporal expectancy. The prediction, then, is that as previous response times speed up, the congruency effect will get larger. This is because faster trials are affected more by a threshold shift than are slower trials (e.g., Kinoshita & Lupker, 2003), meaning that the benefit for congruent trials, relative to incongruent trials, will be larger the faster the temporal expectancy. Indeed, previous response time and congruency were found to interact in just this way, independently of the PC factor. This shows that the different temporal expectancies in the high- and low-PC conditions are a source of bias in estimating conflict adaptation. Although the PC effect was not eliminated entirely by controlling for previous response time, previous response time can be regarded only as a weak proxy of temporal expectancy (e.g., participants inevitably account for more trials than just the most recent one). As a result, deconfounding temporal expectancy and conflict adaptation is a tricky enterprise. Conflict adaptation may still play a role, but this is difficult to determine with current methods, thus leaving room for future research.
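The logic of using previous response time as a proxy for temporal expectancy can be sketched as follows. This is a simplified illustration that uses a median split; it is an assumption made here for brevity and may differ from the statistical approach used in the cited work. The trial sequence is hypothetical.

```python
from statistics import mean, median


def congruency_effect_by_previous_rt(trials):
    """Split trials by previous-trial RT (median split) and return the
    congruency effect (incongruent minus congruent) after fast and after
    slow previous trials.

    `trials` is a list of (is_congruent, rt_ms) tuples in presentation order.
    """
    # Pair each trial (from the second onward) with the RT of the trial before it.
    pairs = [(trials[i - 1][1], trials[i][0], trials[i][1])
             for i in range(1, len(trials))]
    cutoff = median(prev_rt for prev_rt, _, _ in pairs)

    def effect(subset):
        congruent = [rt for _, is_con, rt in subset if is_con]
        incongruent = [rt for _, is_con, rt in subset if not is_con]
        return mean(incongruent) - mean(congruent)

    after_fast = [p for p in pairs if p[0] <= cutoff]
    after_slow = [p for p in pairs if p[0] > cutoff]
    return effect(after_fast), effect(after_slow)


# Hypothetical trial sequence; values are illustrative only.
trials = [
    (True, 500), (True, 520), (False, 700), (False, 720), (True, 600),
    (False, 690), (True, 600), (False, 710), (True, 600),
]
print(congruency_effect_by_previous_rt(trials))  # (180, 120)
```

In this made-up sequence the congruency effect is larger after fast previous trials than after slow ones, which is the direction of the interaction described above. With real data, each half of the split would need enough congruent and incongruent trials for the means to be stable.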

Although the work of Kinoshita and colleagues (2011) was focused on the PC effect as a whole (rather than the list-level PC effect in particular), it lends further credence to the suggestion that temporal learning plays a sizable role in PC paradigms. In other words, temporal learning represents a powerful confound that will contaminate list-level PC experiments and produce a list-level effect even for contingency-unbiased items. Indeed, forthcoming work by Schmidt (2012b) demonstrates the same effect of previous response times on the list-level PC effect in Hutchison’s (2011) data. Thus, the list-level PC effect, like the ISPC effect, is explainable in terms of simple learning biases. However, it still remains unclear whether the entire list-level PC effect is due to temporal learning or whether part of the effect is explainable by conflict adaptation.

Note that the temporal learning account is not circular and the relation between congruency and response speed is only indirect. Congruent trials are responded to faster than incongruent trials because of a difference in conflict, but it is the response speed, and not congruency per se, that determines the temporal expectancy. The conflict adaptation account assumes that participants adapt to conflict, whereas the temporal learning account assumes that participants adapt to time-on-task. In the context of a conflict paradigm, time-on-task is only incidentally related to conflict. This is a subtle distinction but an important one. One key difference is that the temporal learning account suggests that participants should be capable of learning time-on-task information even when something other than conflict (e.g., contrast or word frequency) determines time-on-task. It is not clear why a conflict adaptation account should make the same prediction, yet such findings are observed frequently in the temporal learning literature. For instance, Kinoshita and Lupker (2003) found that the word frequency effect (i.e., faster responses to high- relative to low-frequency words) was larger when preceded by fast (quickly identifiable) nonword trials, relative to slow nonword or (slow) exception word trials. Similarly, Schmidt (2012b) was able to mimic list-level PC effects with nonconflict stimuli, using a contrast manipulation. When most of the targets were easy (high contrast), the contrast effect (low – high) was larger than when most of the targets were hard (low contrast). There were no distractors or conflicts to adapt to. Results such as these demonstrate that variation in average response time is all that is needed to produce apparent list-level PC effects. Of course, it could still be the case that both temporal learning and conflict adaptation contribute to the list-level PC effect. The currently published results are ambiguous in this respect.

Context-level proportion congruent

Yet another variant of the PC procedure uses differing contexts to alter proportion congruency. For instance, Crump, Gong, and Milliken (2006) presented color word distractors that were followed by color block targets that appeared at one of two locations (above or below fixation). Color blocks were high PC with the word when presented at one location (e.g., above) and low PC when presented at the other location (e.g., below). Words were thus not contingent on the response in the task as a whole, but PC differed at the two context locations. The congruency effect was larger at the high-PC location, relative to the low-PC location. A similar experiment was presented by Bugg, Jacoby, and Toth (2008), in which display font was used as the context cue for color–word Stroop stimuli. Similar to the results in Crump et al., a larger congruency effect was found for the high-PC font than for the low-PC font. In both types of experiment, the word was not directly predictive of the response, which might suggest that a role for conflict adaptation does exist after all.

Contingency learning

It could be argued that context-level PC experiments do not really rule out contingency learning. While a single distractor (e.g., word) might not be predictive of the response on its own, the two distracting dimensions together are. For instance, BLUE and the above location predict a blue response, whereas BLUE and the below location predict a red response. Thus, compound-cue contingency learning is a definite possibility. Indeed, work on occasion setting indicates that this sort of compound-cue learning does, in fact, occur in many learning environments (for a review, see Holland, 1992). In the context of Crump and colleagues (2006), for instance, the location serves as an occasion setter determining what the word predicts. However, this multiple-cue account has yet to be tested in a nonconflict variant of these PC paradigms.
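The compound-cue idea can be made concrete with a minimal sketch. The counts below are hypothetical and chosen so that the word alone is uninformative while the word and location together are predictive; the function name is an assumption introduced here.

```python
# Hypothetical counts of (word, location, color response); illustrative only.
counts = {
    ("BLUE", "above", "blue"): 3,
    ("BLUE", "above", "red"): 1,
    ("BLUE", "below", "blue"): 1,
    ("BLUE", "below", "red"): 3,
}


def conditional_probability(counts, response, word, location=None):
    """P(response | word), or P(response | word, location) if location is given."""
    matching = {
        key: n for key, n in counts.items()
        if key[0] == word and (location is None or key[1] == location)
    }
    total = sum(matching.values())
    hits = sum(n for key, n in matching.items() if key[2] == response)
    return hits / total


print(conditional_probability(counts, "blue", "BLUE"))           # 0.5
print(conditional_probability(counts, "blue", "BLUE", "above"))  # 0.75
print(conditional_probability(counts, "red", "BLUE", "below"))   # 0.75
```

Here the word by itself predicts neither response, but the word combined with its location does, so a learner sensitive to the compound cue could still exploit a contingency.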

Another limitation on the occasion-setting or multiple-cue account of context-level PC effects is that it cannot explain recent findings by Crump and Milliken (2009). In these experiments, the location-specific PC of some context items was manipulated, whereas the location-specific PC of other, transfer items was not. The context items made it such that one location (e.g., above) was high PC, whereas the other location (below) was low PC. The transfer items were contingency unbiased, however. Despite this fact, transfer items produced a PC effect (viz., a larger congruency effect at the high- than at the low-PC location). The contingency account is unable to explain such a finding. The results therefore seem to support the notion that context-level conflict adaptation can occur under such a scenario.

Temporal learning

However, there are several things to note about this very particular version of the Stroop task. In a sense, list-level proportion congruency is being manipulated at the context (location) level, rather than at the block or subject level. In other words, one location is a high list-level PC task, and the other is a low list-level PC task. Thus, if (nonconflict) learning processes are sensitive to context, all of the caveats of the list-level PC task equally apply to the context-specific version. For instance, the temporal learning bias for the above location (high PC) will be for fast responses (thus producing a large congruency effect), whereas the temporal learning bias for the below location (low PC) will be for slow responses (thus producing a relatively smaller congruency effect). Although this explanation is post hoc, the only assumption that a nonconflict learning account needs to make to explain context-level PC effects is that learning biases are sensitive to context (something we already know to be true from other work; e.g., Holland, 1992). All the rest of the findings with context-specific paradigms directly follow from the learning factors (i.e., contingency and temporal learning) already discussed in this review. This includes the transfer effects of Crump and Milliken (2009), because there is a context-specific temporal learning bias. Thus, there are nonconflict interpretations of PC effects at the item, list, and context levels.

A caveat to this context-specific temporal learning idea, however, is that it assumes that temporal expectancy is not task-wide but can, instead, vary from trial to trial depending on context. This would mean that participants can jump back and forth between a fast rhythm at the high-PC location and a slow rhythm at the low-PC location. This is certainly plausible, but there is currently no evidence that this can, in fact, occur. If temporal learning can occur only at the overall task level, a temporal learning account is unable to explain the transfer effects observed by Crump and Milliken (2009). If there are no other learning confounds, conflict adaptation may be the only viable account that remains. Further research on this topic will therefore prove highly diagnostic of the explanatory power of the learning account.

Summary

The literature on PC effects is clearly mixed. The analysis presented here suggests that an account based exclusively on learning and memory processes is at least a viable competitor to the more popular conflict adaptation theory. However, the collective results are ambiguous. Some results suggest that ISPC effects might be explainable exclusively by contingency learning confounds, but this has not gone unchallenged. Tentative work on temporal learning suggests that list- and context-level effects may also be explainable by nonconflict processes. Especially with regard to context-level effects, however, this view is still highly speculative.

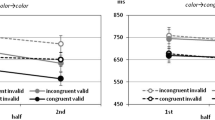

Gratton

A second method used to study possible conflict adaptation effects is the Gratton effect. The Gratton (or sequential congruency) effect is the observation that congruency effects are larger following a congruent, relative to an incongruent, trial (Gratton, Coles, & Donchin, 1992). The conflict adaptation account of the Gratton effect is similar to the conflict adaptation account of PC effects. Specifically, it is argued that attention to the word is decreased following a conflicting incongruent trial. Thus, congruency effects are reduced following a conflict trial. Following a congruent trial, however, attention to the word is relatively higher, thus leading to a greater impact of the word on performance.
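As a concrete illustration of how the Gratton effect is typically quantified, the following minimal sketch computes the congruency effect separately after congruent and after incongruent trials and then takes the difference. The function name, the tuple layout, and the response times are hypothetical and chosen only for illustration; they are not taken from any of the studies cited here.

```python
from statistics import mean


def gratton_effect(trials):
    """Return (effect after congruent, effect after incongruent, difference).

    `trials` is an iterable of (prev_congruent, cur_congruent, rt) tuples,
    where the first two entries are booleans and rt is in milliseconds.
    """
    cells = {}
    for prev_c, cur_c, rt in trials:
        cells.setdefault((prev_c, cur_c), []).append(rt)
    m = {cell: mean(rts) for cell, rts in cells.items()}
    # Congruency effect = incongruent RT minus congruent RT, per previous-trial type.
    after_congruent = m[(True, False)] - m[(True, True)]
    after_incongruent = m[(False, False)] - m[(False, True)]
    return after_congruent, after_incongruent, after_congruent - after_incongruent


# Made-up response times (ms), for illustration only.
example = [
    (True, True, 500), (True, True, 520),
    (True, False, 620), (True, False, 640),
    (False, True, 540), (False, True, 560),
    (False, False, 600), (False, False, 620),
]
after_c, after_i, gratton = gratton_effect(example)
```

A positive difference indicates a larger congruency effect following congruent trials, which is the pattern described above. Note that the computation itself is silent about why the interaction arises.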

There are several versions of the conflict adaptation account as it relates to the Gratton effect. Gratton and colleagues (1992) initially proposed an expectancy account, whereby an expectation that the congruency of the previous trial will be the same as the congruency of the current trial leads to an increase in conflict adaptation following an incongruent trial and a decrease following a congruent trial.

The conflict-monitoring model provides a slightly different interpretation. In addition to PC effects, the conflict-monitoring model of Botvinick and colleagues (2001; see also Verguts & Notebaert, 2008) has successfully simulated the Gratton effect. The model accomplishes this because the level of conflict experienced on the most recently encountered trial has the largest effect on behavior on the following trial. Thus, if an incongruent trial was just experienced, the conflict monitor will have processed this high level of conflict, and the conflict adaptation signal will thus be increased during the following trial. The word will resultantly have less of an impact on color identification, and the congruency effect will be reduced. In contrast, if the previous trial was congruent, the conflict adaptation signal will be weaker. Thus, the word will have a larger effect on the following trial, and the congruency effect will be increased.

A slight variant of the conflict-monitoring model is the adaptation-by-binding account of Verguts and Notebaert (2009; see also Verguts & Notebaert, 2008). According to this account, experiencing conflict leads to a strengthening of learning processes. Among other things, connections between target stimuli and task demand units are strengthened when conflict is experienced. Thus, on the following trial, the task demand unit will have a stronger effect on the input units, thus reducing interference from the distracting word. The expectancy, conflict-monitoring, and adaptation-by-binding accounts propose slightly different mechanisms for instantiating conflict adaptation but have in common the claim that attention to the distractor is weakened, relative to the target, following a conflicting incongruent trial.

Binding

Similar to list-, item-, and context-level PC effects, alternative interpretations have also been forwarded for the Gratton effect. Most notably, Mayr, Awh, and Laurey (2003; see also Hommel, Proctor, & Vu, 2004) pointed to the fact that different types of feature repetitions are present in each of the four cells of the Gratton design. For instance, a complete repetition occurs when both the word and the color of the previous trial are repeated on the current trial. Such trials are responded to extremely rapidly. Critically, complete repetitions are possible only for congruent trials followed by a congruent trial (congruent–congruent; e.g., BLUEblue followed by BLUEblue) and incongruent–incongruent trials (e.g., BLUEred followed by BLUEred). Such complete repetitions are not possible for congruent–incongruent or incongruent–congruent trials. When Mayr and colleagues analyzed only complete alternation trials, where both the word and the color changed (e.g., BLUEred followed by GREENyellow), the Gratton effect was eliminated. Subsequent work has confirmed that removing these stimulus-binding biases substantially reduces the Gratton effect, but a significant remaining effect is often observed (e.g., Akçay & Hazeltine, 2007, 2011; Clayson & Larson, 2011; Funes, Lupiáñez, & Humphreys, 2010; Van Gaal, Lamme, & Ridderinkhof, 2010; Verbruggen, Notebaert, Liefooghe, & Vandierendonck, 2006). Thus, binding plays a sizable role but is clearly not the whole story (see Egner, 2007, for a review). A role for conflict adaptation could, therefore, still exist.
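The trimming logic described above can be sketched as follows. This is only an illustrative sketch, not the analysis code of Mayr and colleagues: the function names are hypothetical, and the criterion for a complete alternation (no feature of the current trial matches any feature of the previous trial, even across the word and color dimensions) is a strict assumption made here.

```python
def transition_type(prev, cur):
    """Classify a trial transition by feature overlap.

    `prev` and `cur` are (word, color) pairs, e.g. ("BLUE", "red").
    """
    prev_word, prev_color = (f.lower() for f in prev)
    cur_word, cur_color = (f.lower() for f in cur)
    if (cur_word, cur_color) == (prev_word, prev_color):
        return "complete repetition"
    # Strict criterion (an assumption of this sketch): no current feature
    # may match any previous feature, even across dimensions.
    if {cur_word, cur_color}.isdisjoint({prev_word, prev_color}):
        return "complete alternation"
    return "partial repetition"


def keep_complete_alternations(sequence):
    """Return the trials that follow their predecessor with a complete alternation."""
    return [cur for prev, cur in zip(sequence, sequence[1:])
            if transition_type(prev, cur) == "complete alternation"]
```

For example, `transition_type(("BLUE", "red"), ("GREEN", "yellow"))` is classified as a complete alternation, whereas any transition that shares a word or a color with the previous trial is not retained.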

An alternative way of controlling for feature repetitions is to use multiple stimuli from a category. For instance, Egner, Ely, and Grinband (2010; see also, Egner, 2011; Egner, Etkin, Gale, & Hirsch, 2008; Egner & Hirsch, 2005; Etkin, Egner, Peraza, Kandel, & Hirsch, 2006) gave participants a two-choice gender task in which they had to decide whether a facial picture was male or female. The distracting word “male” or “female” was presented over the top of the picture, thus creating congruent trials (i.e., when the picture and word were the same gender) and incongruent trials (i.e., when the picture and word were different genders). Different faces were always presented from one trial to the next, and the case (i.e., upper- or lowercase) of the word always varied, thus meaning that the exact stimulus never repeated from one trial to the next. This was argued to get around the problem of feature repetitions.

Arguably, however, this approach is even more problematic than the standard approach. Each response is linked with a category of stimuli. Within each category, female faces match each other and male faces match each other. That is, there are specific facial features that define a female versus male face. Thus, even at the basic visual feature level, there are shared features within gender categories. These features repeat when congruency repeats and alternate when congruency changes. The same can be said for upper- versus lowercase words of the same identity (i.e., upper- and lowercase versions of the same words share many specific visual features in common). As a result of this, 50 % of congruent–congruent and incongruent–incongruent trials are complete repetitions. The remaining 50 % of congruent–congruent trials are complete alternations. Complete alternations are not possible for any of the three other conditions. In short, this two-choice procedure makes feature repetition biases substantially worse. Egner and Hirsch (2005) do, however, provide one contrast comparing incongruent–incongruent trials that are either a complete repetition or a complete “switch” (i.e., where the word and picture match the picture and word, respectively, of the previous trial), and no advantage was found for complete repetitions. This does provide some evidence against the notion that category-level learning might have occurred. However, this did not represent a complete test across all trial types, and category-level learning such as this has been observed in various learning preparations (e.g., Goschke & Bolte, 2007; Schmidt & De Houwer, 2012b). Thus, although results with this version of the Gratton paradigm do provide some support for the notion that conflict adaptation occurs, these results should probably be interpreted with caution. As will be discussed in the following section, these results are also inconsistent with work that more convincingly controls for feature repetitions, lending credence to the suggestion that this procedure may not be bias-free as intended.
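The category-level argument can be checked by enumerating every possible transition in a two-choice design. The sketch below rests on two assumptions made here for illustration: gender category is treated as the feature that repeats (as argued above), and all 16 transitions between the four face–word combinations are taken to be equally likely. The names are hypothetical.

```python
from collections import Counter
from itertools import product

GENDERS = ("male", "female")
STIMULI = list(product(GENDERS, GENDERS))  # (face category, word) pairs


def congruency(stimulus):
    face, word = stimulus
    return "congruent" if face == word else "incongruent"


def cell(prev, cur):
    """Name of the Gratton-design cell, e.g. 'congruent-incongruent'."""
    return f"{congruency(prev)}-{congruency(cur)}"


def transition_type(prev, cur):
    if cur == prev:
        return "complete repetition"
    if set(cur).isdisjoint(prev):
        return "complete alternation"  # no category shared with the previous trial
    if cur == (prev[1], prev[0]):
        return "switch"  # face and word categories trade places
    return "partial repetition"


counts = Counter(
    (cell(prev, cur), transition_type(prev, cur))
    for prev, cur in product(STIMULI, STIMULI)
)
```

Under these assumptions, half of the congruent–congruent and half of the incongruent–incongruent transitions come out as complete repetitions, complete alternations appear only in the congruent–congruent cell, and every transition in which congruency changes is a partial repetition.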

Another approach to assessing stimulus repetition confounds was presented by Notebaert and Verguts (2007). Instead of deleting all trials except complete alternations, they coded for each repetition type (e.g., word repetition, color repetition, etc.) and calculated a regression. Using this approach, a Gratton effect was found to still be present independently of the stimulus repetition regressors. The regression approach of Notebaert and Verguts is questionable, however, since it implicitly assumes that the various types of feature repetitions (word–word, color–color, etc.) are additively related to each other and to congruency. This is probably not the case and is impossible to adequately control for, since most of the potential interrelationships are inherently confounded with one another. Indeed, Notebaert and Verguts point to this problem themselves in the concluding section of their article and state that the significant Gratton effect they found “should probably not be interpreted as the ultimate proof for conflict adaptation” (p. 1259). Research currently ongoing in our lab confirms the magnitude of these problems, showing that this regression approach misses systematic variance due to feature repetitions (Schmidt & De Schryver, 2012). As a result, the measure of conflict adaptation in the Notebaert and Verguts regression is still confounded with feature repetition biases.

Arguably, deleting all data other than alternation trials (Mayr et al., 2003) is still the superior method. One might argue that this results in conflict adaptation being assessed with only one type of transition (viz., complete alternations), but this is irrelevant: If conflict adaptation is a real process, it should happen on these complete alternations just like any other trial. Still, stimulus repetitions do not explain the entire Gratton effect, suggesting a possible role for conflict adaptation.

Contingency learning

Although stimulus repetitions cannot explain the entire Gratton effect, recent work by Schmidt and De Houwer (2011) pointed to further confounds in the Gratton paradigm. The most important of these confounds are contingency biases. In past experiments that attempted to control for stimulus-binding biases, distractors were generally presented more often than would be expected by chance in their congruent color. The most typical procedure, for instance, is to have an equal number of congruent and incongruent trials. Because the task must be at least four-choice in order to be able to delete all types of stimulus repetitions, 50 % congruent is well above chance. Said differently, words end up being predictive of their congruent response (e.g., BLUE is predictive of a blue response because it is presented in blue most often). Even in nonconflict tasks, any such predictive word–response relationships will be rapidly learned by participants (e.g., Schmidt et al., 2007; Schmidt & De Houwer, 2012a, 2012b, 2012c; Schmidt, De Houwer, & Besner, 2010). With such contingency biases in the task, congruent trials are high contingency (predictive of the correct response), and incongruent trials are low contingency (not predictive).
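The arithmetic behind this contingency bias can be made explicit with a small sketch. It assumes, for illustration only, that incongruent trials are spread evenly over the remaining colors; the function name is hypothetical.

```python
def word_color_probabilities(n_choices, proportion_congruent):
    """Return (P(congruent color | word), P(each incongruent color | word), chance).

    Assumes incongruent trials are distributed evenly over the other colors.
    """
    congruent = proportion_congruent
    each_incongruent = (1 - proportion_congruent) / (n_choices - 1)
    chance = 1 / n_choices
    return congruent, each_incongruent, chance


# Four-choice task with 50 % congruent trials.
biased = word_color_probabilities(4, 0.5)
# Four-choice task with 25 % congruent trials: every word-color pairing is equally likely.
unbiased = word_color_probabilities(4, 0.25)
```

In the four-choice, 50 % congruent case, a word appears in its congruent color on half of the trials but in each incongruent color on only about one sixth of them, against a chance level of one quarter. The word is therefore predictive of the congruent response unless the proportion of congruent trials is lowered to chance.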

It is known that the contingency effect (low – high contingency trials) is larger following a high-contingency (in this case, also congruent) trial than following a low-contingency (in this case, also incongruent) trial (Schmidt et al., 2007). There are several possible reasons for why this might occur. Perhaps the most likely explanation is in terms of temporal learning. Following a fast response (e.g., on a high-contingency trial), participants are prepared for another quick response. This provides a benefit if the following trial is also fast (high contingency) and/or a cost if the following trial is slow (low contingency). Thus, the contingency effect is increased following a high-contingency trial. The exact opposite is true following a slow (e.g., low-contingency) trial. Another slow response is expected, thus conferring a benefit to a slow (low-contingency) trial and/or a cost to a fast (high-contingency) trial. Thus, the contingency effect is decreased following a low-contingency trial. Other explanations are also possible (see Schmidt & De Houwer, 2011, for more on this issue), but the key point is that an interaction between contingency on the current and previous trials does occur and contingency biases can, therefore, produce a Gratton effect on their own. Conflict adaptation does not, therefore, have to be assumed. Indeed, by presenting distractors equally often with all targets and also controlling for feature repetition confounds, Schmidt and De Houwer (2011) eliminated the Gratton effect in both Stroop and Eriksen flanker tasks.

Subsequent work by Mordkoff (2012) lends further credence to the suggestion of Schmidt and De Houwer (2011) that contingency plays a key role in Gratton effects. Mordkoff directly demonstrated that the Gratton effect in a Simon task was present after stimulus repetition trims in a contingency-biased task but was not present in a contingency-unbiased task. This demonstrates quite clearly that contingency biases, like stimulus repetition biases, must be controlled for in Gratton experiments if one means to study conflict adaptation. All distractors must be presented equally often with all targets, or the experiment is inherently confounded.

Unfortunately, in almost all instances in which feature repetitions were controlled for, contingencies were not (e.g., Akçay & Hazeltine, 2011). This is because the most effective way of trimming out feature repetitions is to use a task with four or more choices, and most experimenters have favored a 50:50 congruent:incongruent ratio. While this ratio maximizes the even spread of observations over cells in the Gratton design, a contingency bias is introduced (i.e., 50 % congruent trials is well above the 25 % expected by chance in a four-choice task). Two-choice tasks are generally contingency unbiased, but there are some concerns about the methods used to account for feature repetition biases, as was discussed above (e.g., it is impossible to have a complete alternation for each of the four cells of a Gratton design with only two alternatives).

Expectancy

Expectancy may also play a role in the Gratton effect. As was already mentioned, the account initially proposed for the Gratton effect by Gratton and colleagues (1992) was that participants have an expectancy that congruency will repeat from one trial to the next. Specifically, following a congruent trial, participants expect another congruent trial. This leads participants to allow attention to the word, resulting in increased interference. Following an incongruent trial, participants will expect another incongruent trial. This leads participants to focus attention on the target (i.e., conflict adaptation), resulting in reduced interference. Note that this is still a conflict adaptation account, only one that relies on expectation rather than conflict monitoring or Hebbian learning. Thus, these sorts of expectancies would be consistent with the conflict adaptation account.

That said, expectancies might take a different form, and conflict adaptation might not be necessary. A related account was presented by Schmidt and De Houwer (2011), in which they argued that the memory encoding processes required during a congruent versus incongruent trial are slightly different. For instance, there are two potential responses to encode for an incongruent trial, but only one for a congruent trial. When information is available for encoding also changes. Thus, the cognitive system has to reconfigure slightly when congruency changes in order to encode information that does not match the encoding template of the previous trial. This leads to a reconfiguration or congruency switch cost, similar to a task switch cost. When congruency repeats, there is an encoding benefit for encoding the same sort of information. These benefits and costs will produce a Gratton effect. Following a congruent trial, congruent trials will have a benefit and incongruent trials will have a cost, thus increasing the congruency effect. Following an incongruent trial, the reverse is true. A similar account could be forwarded on the basis of temporal learning alone. When congruency repeats, the temporal expectancy based on the previous trial matches the current trial, thus providing a benefit. When congruency switches, the temporal expectancy is violated, thus incurring a cost.

Note that this encoding account is similar to the expectancy account of Gratton and colleagues, only that congruency is incidental: Congruency determines expectancies, but participants do not adapt attention in response to conflict (i.e., conflict adaptation). Schmidt and De Houwer (2011) found some evidence for this sort of expectancy-based effect in the Gratton paradigm. They separated the type of errors to be expected by the conflict adaptation account (word reading errors) from those expected by general encoding costs (random keypress errors). There was evidence for an increase in random keypress errors following a change in congruency (consistent with the encoding account) but no evidence for decreases in word-reading errors following a conflicting incongruent trial (inconsistent with the conflict adaptation account). Not only did these results fail to show any evidence for conflict adaptation, but also they pointed to yet another task confound. As a caveat, this was observed only in the errors of one experiment. Controlling for contingencies and feature repetitions was sufficient to eliminate the effect in the response times of that experiment and in both the response times and errors of another experiment. Thus, this sort of expectancy account can be regarded only as tentatively supported. Unfortunately, controlling for expectancy-based effects such as these can prove even more challenging than controlling for other confounds. For instance, the method of Schmidt and De Houwer works only for errors. Future research is therefore needed in order to better partial out such confounds when attempting to measure conflict adaptation.

Higher-order sequence learning

Also interesting is work on higher-order sequence learning. For instance, Durston et al. (2003) observed an increase in incongruent reaction times the greater the number of recently preceding congruent trials. Relatedly, Clayson and Larson (2011) observed a decrease in response times for congruent trials the longer the sequence of congruent trials. A similar trend was also observed for incongruent trials, with a decrease in reaction times the longer the run of incongruent trials. Such findings can be interpreted in terms of conflict adaptation. The more congruent trials there are, the more participants rely on the distracting word, thus speeding congruent trials but making for a larger cost for a sudden incongruent trial. With a string of incongruent trials, attention is gradually focused more on the target color, thus reducing the interfering effects of the incongruent distractors.

Such results are not, however, inconsistent with the learning view of Gratton effects. As participants’ temporal expectancy speeds up, they will be better at congruent trials and worse at incongruent trials. As participants’ expectancy slows down, they will be better at incongruent trials and worse at congruent trials. Like many other results, the learning view here replicates the predictions of conflict adaptation theory because of the similarities between the two accounts. In both cases, the argument is that participants alter their behavior to adapt to what they previously experienced. The only difference is that conflict adaptation theory proposes that participants adapt to experienced conflict, whereas the learning view says that participants adapt to time-on-task. This is exactly what makes disentangling conflict adaptation from learning and memory biases so challenging.

Summary

The preceding analysis suggests that Gratton effects, like PC effects, may be driven exclusively by learning and memory processes. If true, then conflict adaptation is not what drives the Gratton effect. It is additionally worth highlighting that stimulus binding and contingency learning effects could be regarded as two by-products of the same memory mechanisms. The difference is merely that stimulus-binding effects represent transitory connections between stimuli and responses in memory, whereas contingency learning effects represent memory biases accumulated across several bound episodes. A learning and memory account may well prove to be a potent alternative account for Gratton effects. However, it should be stressed that, like the work on PC effects, evidence against conflict adaptation theory is still sparse and, in many cases, speculative.

Neuroscience

Related to conflict adaptation is the notion of conflict monitoring. Botvinick and colleagues (2001) proposed that in order to adapt to conflict, the cognitive system has a conflict-monitoring device to measure how much conflict is being experienced. This monitoring device can then signal attentional adaptation. Neuroimaging research has purported to link conflict monitoring to the anterior cingulate cortex (ACC) and dorsolateral prefrontal cortex (DLPFC). For instance, Kerns et al. (2004) found that the Gratton effect correlates with ACC activation. Similarly, Blais and Bunge (2010) found that ACC activation was correlated with the ISPC effect. A particularly impressive article was presented by Sheth et al. (2012), in which individual neurons in the ACC were found to correlate with the level of conflict of items on the current trial and items on the previous trial. Following specific lesions to this area, the Gratton effect was eliminated. This was interpreted as evidence that these ACC neurons are responsible for recording and keeping track of conflict.

In neuroimaging work with the PC paradigm, Wilk, Ezekiel, and Morton (2012) further showed that areas such as the ACC and DLPFC (in addition to the anterior insula and inferior parietal cortex) are sensitive to moment-to-moment changes in conflict. That is, rather than being sensitive to the PC of the task as a whole (e.g., due to a stable task set), these areas were sensitive to the amount of recently experienced conflict. This work used a size congruity paradigm, in which participants identified the numerically larger of two digits while ignoring the physical size of the digits (incongruent trials being when the numerically larger stimulus is the physically smaller stimulus). This design also allows for contingency learning, however, since physical size is predictive of the correct response. For instance, in the high-PC condition the response corresponding to the physically larger stimulus is likely correct (i.e., because the physically larger stimulus is likely also numerically larger). This will benefit congruent trials and impair incongruent trials. In the low-PC condition, the reverse is true: The physically smaller stimulus is likely to be the numerically larger one. Resultantly, moment-to-moment changes in conflict also incidentally correspond to moment-to-moment changes in contingency strength.

Although the linking of brain areas to conflict monitoring and adaptation can be regarded as speculative, such work purports to add extra credence to the conflict-monitoring and adaptation account by suggesting a physiological basis for the account. However, if these brain–behavior correlations are meaningful (a point which I will return to shortly), such correlations could be measuring the very memory biases that nonconflict accounts propose drive the PC and Gratton paradigms that are used for this brain research. Indeed, such areas have already been linked to learning and memory processes (e.g., see a review by Cabeza & Nyberg, 1997). For instance, the Kerns et al. (2004) experiment controlled for binding biases but was confounded with contingencies. Thus, the correlation between the Gratton effect and ACC activation can just as easily be interpreted as evidence that contingency learning occurs in the ACC, rather than conflict adaptation. Similarly, the individual neuron recording and lesion studies of Sheth and colleagues (2012) could be interpreted as evidence that these nodes encode for temporal information, something that is directly confounded with conflict (i.e., congruent trials are responded to quickly, whereas incongruent trials are responded to slowly).

The larger problem, however, is that there is reason to doubt that correlations between these paradigms and activation in the ACC and DLPFC are meaningful. Grinband et al. (2011) observed that activation in the ACC (which is highly correlated with the DLPFC) seems to be wholly related to time-on-task, not conflict adaptation. In other words, activation in the ACC steadily increases from the moment of stimulus onset until a response is made. Independent of response times, it is not sensitive to congruency. This dependence on time-on-task was observed even in a task where no conflict was present, no distractors were present, and there was not even a target to identify. Participants simply pressed a single key when a stimulus disappeared off the screen. The length of the stimulus presentation was correlated with ACC activation. Although this is only preliminary work, these results strongly suggest that activation in the ACC is little more than an alternative (and very expensive) measure of reaction times. Thus, any effect observed in behavior (e.g., contingency learning, Gratton, PC) will correlate with ACC activation. If true, then such brain data add little to the debate that we did not already know from simpler behavioral measures. Moreover, the fact that the ACC correlates with time-on-task is consistent with a temporal learning account. In other words, given that the ACC seems to be responsive to temporal information, it may play a key role in the development of temporal expectancies. This temporal learning account of the ACC is even consistent with the time-on-task correlation that Grinband et al. found in their task with no distractors, conflict, or stimulus identification.

Other research with EEG has also been forwarded as neural evidence for conflict adaptation. For instance, West and Alain (2000; see also West & Alain, 1999) found that N450 negativity over the fronto-central region (argued to be caused by the ACC; e.g., MacDonald, Cohen, Stenger, & Carter, 2000) was reduced in a low-PC task, relative to a high-PC task. They suggested that this indicates increased conceptual-level suppression when conflict is expected in the low-PC task. The authors also found that a temporo-parietal slow potential (SP) was reduced in the low-PC task, which they argued indicates that perceptual-level color processing requires stronger activation in order to surpass the inhibition in the conceptual system. If West and Alain are correct in their interpretation of these EEG patterns, this would provide clear support for conflict adaptation theory. Of course, the usual caveats with neuroimaging work still apply. Correlating a behavioral effect with brain activations may hint at a mechanistic interpretation of said brain regions, but alternative interpretations are always possible. As was already mentioned in the discussion of the fMRI work, it could instead be the case, for instance, that frontal activations such as the N450 index temporal expectancy, with a weaker activation in the low-PC task indicating a slower expectancy.

Larson, Kaufman, and Perlstein (2009b) found that the N450 was more negative for incongruent trials, relative to congruent trials, but failed to find a sequential effect. That is, there was no decrease in the N450 following an incongruent, relative to a congruent, trial. However, the conflict SP was modified by previous congruency. There is some research to indicate, however, that the SP may actually be due to time-on-task rather than conflict (West, Jakubek, Wymbs, Perry, & Moore, 2005). This experiment also had a strong contingency bias (70 % congruent in a three-choice task) and controlled for only two types of feature repetitions. As was already discussed, such confounds make it much harder to know whether learning or conflict factors are responsible for any brain activations observed. Furthermore, Larson, Kaufman, and Perlstein (2009a; see also, Larson, Farrer, & Clayson, 2011) found that the SP was reduced in participants with traumatic brain injuries, relative to control participants, despite showing no signs of behavioral changes in the size of the Gratton effect. The SP thus seems to be dissociable from the behavioral Gratton effect. Interestingly, the authors interpreted the impaired SP as evidence for a reduction in conflict adaptation, which seems odd given the lack of an effect in behavior.

Summary

There is a rich literature of EEG, fMRI, and lesion studies linking various brain regions, such as the ACC and DLPFC, to conflict monitoring and adaptation processes. As highlighted in this section, however, there are many interpretational problems with such data. First, neuroimaging work with healthy participants is inherently correlational. It is therefore difficult to know whether the areas that are most active during certain tasks are really performing the hypothesized processes or whether such activation differences are more incidental (e.g., a response to time-on-task). Even with lesion studies, a further issue is that we currently do not know whether impaired behavioral effects are due to impairment of conflict adaptation or to impairment of the learning and memory confounds present in the paradigms used. Brain research in support of conflict adaptation is nevertheless compelling, and more work is therefore required to see whether the learning and memory account provides a better explanation. It is important, however, to highlight the fact that neuroimaging work can only be regarded as speculative. Behavioral data should still probably be relied on most strongly when selecting between competing accounts.

Future directions

Conflict adaptation theory is highly popular in cognitive psychology, and this is not without reason. It has high explanatory power for a broad range of behavioral and brain data. Of course, it is alternatively possible that the findings conflict adaptation theory purports to explain are driven by other factors, such as learning and memory biases. This review presented some results that tentatively suggest that the alternative view may be correct, in addition to some newer ideas that can be regarded as plausible, although highly speculative. In this sense, it is not entirely clear whether conflict adaptation is an idea that we can abandon or one that still retains explanatory power for part of the effects under study.

Even if conflict adaptation is real (whether list-, item-, or context-wide), there is still a benefit to the literature to exploring the hypothesis that it is not. De Houwer (2011; see also De Houwer, 2007) discussed the functional–cognitive view for psychological research. He argued that a strictly cognitive approach (i.e., treating behavioral results as proxies for mental processes) can impair theorizing by restricting the possible interpretations of results. As De Houwer (2011) put it, “Merely entertaining the idea that a mental construct is a necessary cause of a behavioral effect could encourage researchers to ignore evidence that questions this idea” (p. 203). In the functional approach, behavioral results are defined simply in terms of changes in the environment. For instance, the PC effect is defined not as a change in the congruency effect due to conflict adaptation but as a change in response time differences due to manipulations of the proportion of congruent trials. The functional–cognitive approach combines the two, determining the environmental conditions in which an effect is observed (functional) and building mental interpretations to fit with this knowledge (cognitive).

As applied to the current discussion, by considering the results of a paradigm (e.g., Gratton) as a behavioral effect and not assuming that conflict adaptation is the correct mental mechanism responsible for it, discovery of alternative influences on the data becomes possible. For instance, if one does not assume that the ISPC effect is driven by item-specific conflict adaptation, one can hypothesize about other task influences (e.g., contingencies) that could explain the critical ISPC interaction between congruency and item-specific proportion congruency. Even if a conflict adaptation effect remains on top of that alternative (contingency) effect, we have still learned something new about what and how participants learn. For this reason alone, assessing data without treating conflict adaptation as the default mental explanation can be a powerful approach to strengthening the literature.

That being said, in the current state of the literature, it is certainly incorrect to say that there is sufficient evidence to abandon conflict adaptation theory as a potential explanation. Future research is still needed to determine how much explanatory power a nonconflict account really has. This is particularly true for temporal learning research. While there is some evidence that temporal learning might play a role in producing the list-level PC effect, it still remains to be determined how much of this effect can be explained by temporal learning. Future work might aim to find a way to directly dissociate temporal learning biases from list-level PC. If this could be achieved, it would allow us to assess whether there is a portion of the list-level PC effect that cannot be explained by temporal learning. If so, then this would lend credence to the conflict adaptation account for list-level effects (although, of course, further biases are still possible).

Of even more interest, context-level PC effects call for more scrutiny. This article has presented the idea that temporal learning might be context dependent. In other words, contextual cues (e.g., location) may serve as occasion setters to differing temporal expectancies. This would be an interesting finding if it could be demonstrated, but currently this idea is entirely speculative. It might, for instance, alternatively be the case that temporal learning biases can occur only at a task-wide level and not shift rapidly in response to contextual cues. Further research on these possibilities is therefore welcome. In particular, future research might aim to introduce a contextual manipulation to the (nonconflict) contrast paradigm of Schmidt (2012b) or the word frequency paradigm of Kinoshita and Lupker (2003). For instance, if one location is associated with mostly easy (high-contrast) items and the other location is associated with mostly hard (low-contrast) items, will the contrast effect be larger at the former location, relative to the latter?
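The proposed contextual manipulation can be sketched as a trial list. The Python example below is a minimal sketch under stated assumptions: the location labels, the number of trials, and the 75/25 split are all hypothetical choices made for illustration and are not taken from Schmidt (2012b) or any other study.

```python
import random

def build_trials(n_per_location=80, p_majority=0.75, seed=0):
    """Build a shuffled trial list for a hypothetical two-location design.

    The 'upper' location shows mostly easy (high-contrast) items and the
    'lower' location shows mostly hard (low-contrast) items. Location
    names, trial counts, and the 75/25 split are illustrative assumptions.
    """
    n_major = round(n_per_location * p_majority)
    n_minor = n_per_location - n_major
    trials = (
        [("upper", "high")] * n_major + [("upper", "low")] * n_minor
        + [("lower", "low")] * n_major + [("lower", "high")] * n_minor
    )
    rng = random.Random(seed)  # fixed seed so the list is reproducible
    rng.shuffle(trials)
    return trials

trials = build_trials()
```

In this sketch, high- and low-contrast items are equally frequent across the task as a whole, so a difference in the contrast effect between locations could not be attributed to a task-wide bias in item difficulty.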

Similarly, future research on transfer effects could prove useful. There is some evidence that temporal learning might contribute to list-level PC effects, but it still remains to be demonstrated that these temporal expectancies do, in fact, carry over from frequency-biased context items to frequency-unbiased transfer items. If such a finding cannot be observed, this would put a large hole in the claim that context-level PC effects are solely due to temporal learning.

The role of contingencies in ISPC effects also needs more attention in future research. So far, the evidence has been mixed. The new dissociation procedure of Schmidt (2013) provides tentative support for the notion that contingencies are the only active variable that we need to consider, but this support comes from only one experiment. Future work might therefore aim to apply variants of this dissociation procedure to a wider range of experimental preparations to determine how much explanatory power the contingency account has. Evidence for conflict adaptation may still remain in all or some of the many experimental preparations in one or more of the various conflict paradigms.

Concluding remarks

The overarching goal of this review was to highlight the difficulties faced by conflict adaptation accounts. As can be seen, evidence for or against conflict adaptation effects in conflict paradigms such as the Stroop is ambiguous. Several results are particularly damaging for the conflict adaptation account (e.g., Grinband et al., 2011; Schmidt, 2013; Schmidt & De Houwer, 2011), but other results provide difficulties for the null hypothesis (e.g., Crump & Milliken, 2009). Implicit learning processes exert a powerful impact on performance (Schmidt, 2012a). Thus, by manipulating proportions and sequential dependencies in PC and Gratton paradigms, the potential for learning is introduced. We know that participants learn something, of course, but whether they can learn anything about conflict is an open question. The most rigorous attempts to control for confounds often eliminate the original effect. Thus, if conflict adaptation is real, it might be quite subtle and context dependent. Whether or not conflict adaptation is observable and at what levels it can occur (e.g., list, item, context, sequential) remain very important questions for the literature that lack definitive answers. In some versions of a given paradigm, evidence for conflict adaptation seems strong, whereas in others, this is less the case. There are caveats with every approach, so the summed results from the literature do not tell a clear story. At minimum, it is hoped that this review demonstrates that an account that does not appeal to the notion of conflict adaptation is still very viable.

The alternative account for PC and Gratton effects is multifaceted but revolves around one central idea. Encoding of previously encountered trials (e.g., into episodic memory) will lead to incidental response biases during subsequent retrieval. The most recently encoded trials will have a powerful effect in producing sequential modulations, such as feature-binding effects (Hommel, 1998), negative priming (Rothermund, Wentura, & De Houwer, 2005), and the Gratton effect. Averaging over several trials produces contingency learning biases and temporal learning biases, which help to explain basic contingency learning effects, mixing costs, a portion of the Gratton effect, and PC effects at the item, list, and context levels. In other words, all the evidence for conflict adaptation can quite conceivably be explained away by simple memory encoding and retrieval processes. The appearance of conflict adaptation could therefore be an illusion. Some of the evidence for this null (i.e., non-conflict adaptation) account is based on strong experimental support and some on novel post hoc explanations provided in this review. Clearly, the evidence does not unequivocally support either view, and further research is definitely called for. However, the hope is that this review has demonstrated that an account based purely on learning and memory biases is a viable alternative to an account that additionally assumes the presence of conflict-monitoring and adaptation processes.

One might conceivably object that conflict adaptation is not that much different from the learning account presented here, because conflict adaptation involves learning as well. However, it is important to note some differences. The nonconflict account assumes only that participants learn about when and what to respond. The conflict adaptation account additionally assumes that participants are able to learn about the amount of conflict they are experiencing (conflict monitoring) and are able to flexibly adjust attention in response to the amount of conflict experienced (conflict adaptation). These two processes are not assumed by the nonconflict account and are the processes that I wish to question in this review. A more parsimonious account that excludes conflict-monitoring and adaptation mechanisms may be sufficient. Of course, a simpler account is not necessarily the correct account.

If conflict adaptation is assumed to be real, however, confounds such as those discussed in the present review cannot be ignored. It is never sufficient to simply reference work suggesting that conflict adaptation exists after full controls. If the goal is to assess conflict adaptation independently of known task confounds, it is always necessary to control for every identified confound in every experiment. This should seem obvious, but it is not common practice. For instance, although it has been shown that controlling for feature repetitions sizably reduces the Gratton effect (e.g., Mayr et al., 2003), these biases are not always controlled for. Independently of one’s theoretical bent regarding the larger question of whether or not conflict adaptation is observable, such confounds must always be attended to.

References

Akçay, Ç., & Hazeltine, E. (2007). Conflict monitoring and feature overlap: Two sources of sequential modulations. Psychonomic Bulletin & Review, 14, 742–748.

Akçay, Ç., & Hazeltine, E. (2011). Domain-specific conflict adaptation without feature repetitions. Psychonomic Bulletin & Review, 18, 505–511.

Atalay, N. B., & Misirlisoy, M. (2012). Can contingency learning alone account for item-specific control? Evidence from within- and between-language ISPC effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38, 1578–1590.

Blais, C., & Bunge, S. (2010). Behavioral and neural evidence for item-specific performance monitoring. Journal of Cognitive Neuroscience, 22, 2758–2767.

Blais, C., Robidoux, S., Risko, E. F., & Besner, D. (2007). Item-specific adaptation and the conflict monitoring hypothesis: A computational model. Psychological Review, 114, 1076–1086.

Botvinick, M. M., Braver, T. S., Barch, D. M., Carter, C. S., & Cohen, J. D. (2001). Conflict monitoring and cognitive control. Psychological Review, 108, 624–652.

Bugg, J. M., & Chanani, S. (2011). List-wide control is not entirely elusive: Evidence from picture-word Stroop. Psychonomic Bulletin & Review, 18, 930–936.

Bugg, J. M., Jacoby, L. L., & Chanani, S. (2011a). Why it is too early to lose control in accounts of item-specific proportion congruency effects. Journal of Experimental Psychology: Human Perception and Performance, 37, 844–859.

Bugg, J. M., Jacoby, L. L., & Toth, J. P. (2008). Multiple levels of control in the Stroop task. Memory & Cognition, 36, 1484–1494.

Bugg, J. M., McDaniel, M. A., Scullin, M. K., & Braver, T. S. (2011b). Revealing list-level control in the Stroop task by uncovering its benefits and a cost. Journal of Experimental Psychology: Human Perception and Performance, 37, 1595–1606.

Cabeza, R., & Nyberg, L. (1997). Imaging cognition: An empirical review of PET studies with normal subjects. Journal of Cognitive Neuroscience, 9, 1–26.

Cheesman, J., & Merikle, P. M. (1986). Distinguishing conscious from unconscious perceptual processes. Canadian Journal of Psychology, 40, 343–367.

Clayson, P. E., & Larson, M. J. (2011). Conflict adaptation and sequential trial effects: Support for the conflict monitoring theory. Neuropsychologia, 49, 1953–1961.

Crump, M. J. C., Gong, Z., & Milliken, B. (2006). The context-specific proportion congruent Stroop effect: Location as a contextual cue. Psychonomic Bulletin & Review, 13, 316–321.

Crump, M. J. C., & Milliken, B. (2009). The flexibility of context-specific control: Evidence for context-driven generalization of item-specific control settings. Quarterly Journal of Experimental Psychology, 62, 1523–1532.

De Houwer, J. (2007). A conceptual and theoretical analysis of evaluative conditioning. The Spanish Journal of Psychology, 10, 230–241.

De Houwer, J. (2011). Why the cognitive approach in psychology would profit from a functional approach and vice versa. Perspectives on Psychological Science, 6, 202–209.

Durston, S., Davidson, M. C., Thomas, K. M., Worden, M. S., Tottenham, N., Martinez, A., Watts, R., Ulug, A. M., & Casey, B. J. (2003). Parametric manipulation of conflict and response competition using rapid mixed-trial event-related fMRI. NeuroImage, 20, 2135–2141.

Egner, T. (2007). Congruency sequence effects and cognitive control. Cognitive, Affective, & Behavioral Neuroscience, 7, 380–390.

Egner, T. (2011). Right ventrolateral prefrontal cortex mediates individual differences in conflict-driven cognitive control. Journal of Cognitive Neuroscience, 23, 3903–3913.

Egner, T., Ely, S., & Grinband, J. (2010). Going, going, gone: Characterizing the time-course of congruency sequence effects. Frontiers in Psychology, 1, 154.

Egner, T., Etkin, A., Gale, S., & Hirsch, J. (2008). Dissociable neural systems resolve conflict from emotional versus nonemotional distracters. Cerebral Cortex, 18, 1475–1484.

Egner, T., & Hirsch, J. (2005). Cognitive control mechanisms resolve conflict through cortical amplification of task-relevant information. Nature Neuroscience, 8, 1784–1790.

Eriksen, B. A., & Eriksen, C. W. (1974). Effects of noise letters upon the identification of a target letter in a nonsearch task. Perception & Psychophysics, 16, 143–149.

Etkin, A., Egner, T., Peraza, D. M., Kandel, E. R., & Hirsch, J. (2006). Resolving emotional conflict: A role for the rostral anterior cingulate cortex in modulating activity in the amygdala. Neuron, 51, 871–882.