Abstract

This article introduces a new computational model for the complex-span task, the most popular task for studying working memory. SOB-CS is a two-layer neural network that associates distributed item representations with distributed, overlapping position markers. Memory capacity limits are explained by interference from a superposition of associations. Concurrent processing interferes with memory through involuntary encoding of distractors. Free time in-between distractors is used to remove irrelevant representations, thereby reducing interference. The model accounts for benchmark findings in four areas: (1) effects of processing pace, processing difficulty, and number of processing steps; (2) effects of serial position and error patterns; (3) effects of different kinds of item–distractor similarity; and (4) correlations between span tasks. The model makes several new predictions in these areas, which were confirmed experimentally.


Working memory can be characterized as a system for holding a limited amount of information available for processing. Its limited capacity has been shown to have considerable generality across various contents and methods of measurement (Kane et al., 2004; Oberauer, Süß, Schulze, Wilhelm, & Wittmann, 2000). Variations in working memory between groups and between individuals have been shown to correlate with performance in a broad range of complex cognitive activities (for a review, see Conway, Jarrold, Kane, Miyake, & Towse, 2007).

The most commonly used paradigm for measuring working memory capacity is the complex-span paradigm. There are several variants of complex span, the earliest being the reading span (Daneman & Carpenter, 1980) and counting span (Case, Kurland, & Goldberg, 1982) tasks, later followed by operation span (Turner & Engle, 1989) and spatial variants of the paradigm (Shah & Miyake, 1996). The general schema of all complex-span tasks is that encoding of a list of memoranda (e.g., words, letters) for serial recall is interleaved with a distracting processing task (e.g., reading a sentence or verifying an equation). The term complex span has been coined in contrast to simple span, which refers to immediate serial recall without a parallel distractor task.

Multiple variants of complex span have been validated as measures of working memory capacity by the findings that they correlate well with each other and with other indicators of working memory capacity (Oberauer et al., 2000; Schmiedek, Hildebrandt, Lövdén, Wilhelm, & Lindenberger, 2009) and that they are good predictors of a range of performance indicators in tasks that are theoretically assumed to require working memory, such as tests of reasoning and fluid intelligence (Conway, Kane, & Engle, 2003), text comprehension (Daneman & Merikle, 1996), and explicit learning of a rule (Unsworth & Engle, 2005), as well as a number of experimental tasks requiring cognitive control, such as the Stroop task (Kane & Engle, 2003) and the antisaccade task (Unsworth, Schrock, & Engle, 2004). Therefore, understanding the cognitive processes in the complex-span paradigm would be a fundamental step toward understanding the capacity limits of cognition. The success of complex span as a measure of working memory capacity has inspired much experimental work and various theoretical efforts directed at analyzing the underlying processes (e.g., Barrouillet, Bernardin, & Camos, 2004; Bayliss, Jarrold, Gunn, & Baddeley, 2003; Engle, Cantor, & Carullo, 1992; Oberauer & Lewandowsky, 2011; Towse, Hitch, & Hutton, 2000; Unsworth & Engle, 2007).

With few exceptions, theories of the processes involved in complex span, like theories of working memory in general, have so far remained verbal descriptions of mechanisms. This is problematic because it is generally acknowledged that working memory is a complex system, and comprehensive theories of working memory typically assume numerous mechanisms and processes that operate together (Baddeley, 1986; Cowan, 1995). With theories of such complexity, unambiguously determining predictions for a specific set of circumstances easily surpasses our human reasoning abilities (Farrell & Lewandowsky, 2010). The problem is often compounded by the vagueness of verbal theories, which leave many critical details unspecified (for an example, see Lewandowsky & Farrell, 2011). These problems can be addressed using computational modeling. Writing a theory as a computer program forces the theorist to specify the model in sufficient detail for the program to run. Moreover, running the program provides a means to derive precise and unambiguous predictions from the model. Every single decision on the way from the general principles of a theory to its detailed implementation, and every step on the way to its predictions for a specific experiment, is fully transparent in the programming code.

Computational modeling has been applied fruitfully to one experimental paradigm of working memory research, the serial-recall task (Burgess & Hitch, 1999; Farrell & Lewandowsky, 2002; Henson, 1998b; Page & Norris, 1998). The goal of the present work is to apply what we have learned from modeling of serial recall to developing a computational model of behavior in the complex-span paradigm. This is no trivial step, because complex span appears to rely on core cognitive abilities to a far greater extent than does simple span. For example, even though the surface similarity between different complex-span tasks (e.g., operation span vs. sentence span) is far less than the surface similarity between simple-span tasks from different domains, performance correlates more highly across domains for the complex-span than for the simple-span task (Kane et al., 2004). Moreover, because the complex-span task shares many features with other paradigms of working memory research—short-term retention of information, a requirement to retain serial order, distraction by a concurrent task, and coordination of multiple competing processes—a computational model of complex span will serve as a springboard for more precise theorizing in the field as a whole.

One central theoretical question about working memory is why it has limited capacity. Many theories explain the capacity limit by assuming that representations in working memory quickly decay over time unless they are actively maintained by rehearsal or refreshing (Baddeley, 1986; Barrouillet et al., 2004). This assumption has been incorporated into the only two computational models of complex span proposed so far (Daily, Lovett, & Reder, 2001; Oberauer & Lewandowsky, 2011). The assumption of rapid time-based decay, however, has been repeatedly questioned by empirical observations (for a review, see Lewandowsky, Oberauer, & Brown, 2009). One common alternative to decay is that working memory capacity is limited by interference between representations (Jonides et al., 2008; Nairne, 2002; Saito & Miyake, 2004). To date, however, the concept of interference has remained underspecified, thus limiting its theoretical utility (Jonides et al., 2008). We overcome this limitation here by instantiating the interference notion in a detailed computational model of complex span.

Our model attributes the capacity limit of working memory entirely to interference. The model accounts for all of the findings that provided the initial empirical support for a decay-based theory of complex span, the time-based resource-sharing (TBRS) theory of Barrouillet, Camos, and colleagues (Barrouillet et al., 2004; Barrouillet, Bernardin, Portrat, Vergauwe, & Camos, 2007). The TBRS theory has, arguably, been the strongest contender for explaining complex-span performance to date, and therefore we will compare our new model to a computational implementation of the TBRS theory (Oberauer & Lewandowsky, 2011).

This article proceeds as follows: We start by presenting our model—first informally as a set of theoretical assumptions, and then formally as a computational instantiation. We then apply the model to four sets of empirical findings. These represent benchmark findings from the complex-span paradigm that should serve as priority targets for modeling. The first is a set of findings concerning the relation between short-term retention and the temporal parameters of concurrent processing. These findings provided the empirical basis for the TBRS theory (Barrouillet et al., 2007; Barrouillet, Portrat, & Camos, 2011). The second set of findings represents a detailed analysis of recall errors in complex span, which has proved highly informative for models of simple span. The third set of findings concerns the effects of different kinds of similarity between memory items and distractors. The fourth set pertains to the pattern of correlations between span tasks across different domains that arises from the study of individual differences. The model is shown to handle all four sets of findings.

A distributed neural-network model for complex span

Our model is an extension of the SOB (“serial-order-in-a-box”) model, a distributed neural-network model of serial recall (Farrell & Lewandowsky, 2002). The initial SOB was an auto-associator in the tradition of the brain-state-in-a-box (BSB) architecture (Anderson, Silverstein, Ritz, & Jones, 1977), from which the model derived its name. The second version, called C-SOB (Farrell, 2006; Lewandowsky & Farrell, 2008b), has a two-layer structure, with one layer representing serial positions and the other representing items (the prefix “C” stands for “context,” because the position representations are a form of context). Both item and position representations are distributed—that is, they consist of patterns of activation across a large number of processing units in the network. Different items are represented by different patterns across the same set of units. Thus, item representations have well-defined similarity relations to each other, reflected in the similarity of the patterns representing them; the same holds for positions. Items are encoded in C-SOB through Hebbian associations between item and position representations: The first list item is associated with the first position representation (a.k.a. a position marker), the second item is associated with the second position marker, and so on. Memory for order is maintained by the patterns of association in the weight matrix that connects position markers to item representations. The use of context markers to represent order is a standard tool among memory theorists and has gained substantial empirical support (Lewandowsky & Farrell, 2008b).

Memory performance is limited because all item-to-position associations are superimposed in the same weight matrix, so that at the point of recall the matrix represents each individual association only in a distorted fashion. One feature of both SOB and C-SOB, which is at the heart of much of the models’ predictive power, is that encoding strength is determined by an item’s novelty. Novelty is assessed by computing an expectation for each incoming item, on the basis of already-encoded memories, and determining the similarity between this expectation and the actual item. The more novel the incoming item is, the more strongly it is encoded. This process of assessing novelty to determine an item’s encoding strength is termed “novelty-gated encoding.” The assumption of novelty-gated encoding has been part of SOB since its inception, and has received independent empirical support (Farrell & Lewandowsky, 2003).

Our new model, SOB-CS (CS for “complex span”), builds directly on C-SOB (Farrell, 2006; Lewandowsky & Farrell, 2008b), maintaining its original theoretical principles but slightly updating its mathematical formalization (see Electronic Supplementary Material for an explanation of these technical details). In addition, SOB-CS incorporates two further theoretical assumptions whose introduction was necessitated by the presence of distractors in the complex-span task.

First, we assume that processing a distractor, such as reading a word or carrying out an arithmetic operation, inevitably results in the encoding of a representation of the distractor into working memory in the same way as the memoranda (Oberauer & Lewandowsky, 2008). There is considerable precedent in the literature for this assumption (e.g., Logan, 1988). Therefore, distractors create interference with encoded item representations. Novelty-gated encoding applies to distractors in the same way that it applies to items, so that repeatedly processing the same distractor incurs less interference than does processing different distractors.

Second, like most models of working memory, ours assumes that the system engages in active restoration of an unimpaired memory state when time allows. This assumption is motivated by the finding that memory performance in complex span is better when distractor operations are demanded at a slower pace, leaving more free time between each distractor and the next stimulus (Barrouillet et al., 2004; Barrouillet et al., 2007). Whereas in decay-based models, active restoration typically refers to boosting decayed traces up to their original strength (by some sort of rehearsal or refreshing mechanism), active restoration must be conceptualized differently in interference-based models. When the main limiting factor for performance is interference, active restoration must reduce the impact of interference. This can be accomplished in several ways. For reasons of parsimony, we have so far implemented only one of them in SOB-CS, by applying a mechanism that is already embodied in all SOB models to date—namely, the removal of interfering material from memory, which by implication restores the quality of earlier memories. Because the removal notion is central to SOB-CS, it deserves to be placed into a broader theoretical context.

One general theoretical insight that emerged from our modeling work is that a successful model must have a mechanism for clearing working memory of no-longer-relevant contents. Without this, the system would soon be overloaded with outdated material. For example, when mentally solving an expression such as “24 × 3,” it would be inopportune to retain “3” in working memory during the final step of adding “12” to “60”—even though the concept “3” necessarily had to be brought to mind a brief moment before in order to compute “12.” In general, rapid updating of working memory would be impossible without a clearing or removal mechanism, because the system would soon be choked by proactive interference, and demonstrably, this does not happen (Kessler & Meiran, 2008; Oberauer & Vockenberg, 2009).

In decay-based models, removal of old contents from memory occurs by default (viz., they simply fade away), and active maintenance must be engaged to retain contents that are still relevant. Interference-based models operate by the reverse logic: All contents are maintained by default, and active removal is necessary to remove those that are no longer relevant. Thus, whereas decay-based models must be equipped with a mechanism for active maintenance, interference-based models must include a mechanism for active removal.

This necessary link between interference and removal has been largely ignored in the literature (for an exception, see Hasher, Zacks, & May, 1999). SOB-CS provides a precise mechanism explaining how removal is accomplished whenever there is free time in-between distractor operations—for example, during a pause between solving a distracting equation and presentation of the subsequent memorandum. During those pauses, the immediately preceding distractor representation is gradually removed from memory using Hebbian antilearning. This operation gradually undoes the association between the distractor and the position marker (see Kessler & Meiran, 2008, for the related idea of “dismantling” outdated bindings in an updating task).

The assumption of distractor removal (or “unbinding”) is a generalization of an assumption that is common in models of serial recall: Once a list item is recalled, it is removed from memory to avoid perseveration. There is strong evidence to support such a mechanism for response suppression (Farrell & Lewandowsky, 2004; Henson, 1998a), and it has been implemented in many models of serial recall (G. D. A. Brown, Preece, & Hulme, 2000; Burgess & Hitch, 1999; Page & Norris, 1998). In all versions of SOB, response suppression has been modeled using the mechanism of Hebbian antilearning. Accordingly, response suppression is an instance of removing no-longer-relevant information from memory. Here, we simply generalize this notion to distractors.
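The removal idea can be illustrated with a toy weight matrix. The plain-Python sketch below does three things. First, it encodes one item and one distractor at overlapping position markers. Second, it applies Hebbian antilearning, which is the same outer-product update with a negative sign. Third, it cues the item's position before and after removal.

Several details are illustrative assumptions: the helper names (`outer_update`, `cue`), the four-unit vectors, and the removal strength of 1.0. In SOB-CS, removal is gradual and depends on the free time available, so removing the distractor completely in one step is an idealization.

```python
import math

def outer_update(W, v, p, eta):
    """In place: W += eta * v p^T (Hebbian learning if eta > 0, antilearning if eta < 0)."""
    for r, v_r in enumerate(v):
        for c, p_c in enumerate(p):
            W[r][c] += eta * v_r * p_c
    return W

def cue(W, p):
    """Forward a position cue through W, giving the item-layer vector W p."""
    return [sum(w * x for w, x in zip(row, p)) for row in W]

# Toy sizes (hypothetical): 4 item units, 2 position units.
item = [1.0, -1.0, 1.0, 1.0]
distractor = [1.0, 1.0, -1.0, 1.0]
p1 = [1.0, 0.0]
p2 = [0.5, math.sqrt(0.75)]  # unit vector with cos(p1, p2) = .5 (overlapping markers)

W = [[0.0, 0.0] for _ in item]
outer_update(W, item, p1, 1.0)         # encode the memory item
outer_update(W, distractor, p2, 1.0)   # involuntary encoding of the distractor
before = cue(W, p1)                    # item blended with 0.5 * distractor
outer_update(W, distractor, p2, -1.0)  # complete antilearning (hypothetical strength)
after = cue(W, p1)                     # the item pattern is recovered
```

Before removal, cueing position 1 returns the item plus half of the distractor, because the two markers overlap with a cosine of .5. After antilearning, the item pattern is recovered. This is the sense in which removing a distractor restores the quality of earlier memories.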

By specifying how information is removed from working memory, we flesh out one basic operation for controlling the contents of memory. Control over which information is held in working memory is being recognized as an important source of individual differences in working memory capacity (Hasher et al., 1999; Jost, Bryck, Vogel, & Mayr, 2010; Vogel, McCollough, & Machizawa, 2005). Explicitly modeling the control processes operating on the contents of working memory is a prerequisite for understanding why working memory capacity also correlates with various control processes in tasks with little involvement of memory (Kane, Conway, Hambrick, & Engle, 2007).

To summarize, strong conceptual considerations mandate the presence of some control process that can clear working memory of unwanted contents. The removal notion is supported by data and theoretical precedent, and in SOB-CS we instantiate the removal process using a mechanism of proven theoretical utility.

In SOB-CS, removal of distractors plays a role similar to that of rehearsal or refreshing of memory items in other theories. Our model does not presently include a maintenance process for the strengthening of items (e.g., rehearsal or refreshing), for three reasons. First, rehearsal and refreshing are necessary maintenance mechanisms when memory traces are assumed to decay over time; however, in a model that attributes forgetting to interference, the threat to remembering comes from the presence of interfering material, not from the decay of memoranda, and therefore, the most effective way of protecting memory is to remove the sources of interference. Second, the existing evidence does not yield strong support for a causal role of rehearsal in complex-span tasks. While there is no doubt about the existence of articulatory rehearsal, the evidence for it being causally responsible for superior memory performance is less than compelling. For example, people who report using articulatory rehearsal in a complex-span task do not perform much better than those who report just reading the memory items as their strategy, and more effective strategies, such as elaboration, are reported by only a minority of participants (Dunlosky & Kane, 2007; Kaakinen & Hyönä, 2007). Third, as we show below, we have successfully modeled benchmark findings cited in support of refreshing without actually requiring the refreshing of memory items, and we therefore have omitted that mechanism for reasons of parsimony.

We remain open to the possibility that a rehearsal or refreshing mechanism might become necessary in a future extension of the model, if new results become available that mandate its inclusion. To summarize this crucial point: We do not claim that people do not rehearse during complex-span tasks. The existence of rehearsal is beyond dispute. We also do not rule out the possibility that rehearsal benefits memory; however, the evidence to date has turned out to be inconclusive upon closer inspection. What we demonstrate in the remainder of this article is that rehearsal is not needed to account for benchmark data in complex span.

In addition to the two new assumptions just discussed, we make explicit two hitherto tacit assumptions in SOB. First, previous versions of SOB have, for simplicity, modeled basic processes as time invariant, on the grounds that in all paradigms to which the theory had been applied, sufficient time was available for processes to run to completion. Under those circumstances, the theory could leave the temporal aspects of those processes unspecified for parsimony’s sake. By contrast, in SOB-CS we must model the time dependence of encoding and retrieval processes explicitly because, in complex span, performance is strongly influenced by temporal parameters (Barrouillet et al., 2004). In particular, we make the uncontroversial assumption that the degree of encoding and the extent of removal of stimuli increase, up to a point, as more time is available for those processes. There is considerable evidence that encoding into short-term memory takes time to be accomplished (Jolicœur & Dell’Acqua, 1998). Likewise, there is evidence that removal of information from working memory takes time (Oberauer, 2001).

Second, we make explicit the notion of a focus of attention in SOB-CS. The last stimulus encoded into working memory, or the last item manipulated, typically enjoys a privileged status of heightened availability (Garavan, 1998; McElree, 2006; Oberauer, 2003a), supporting the notion that, by default, the last representation operated upon remains in the system’s focus of attention. In SOB-CS, as in other distributed neural-network models, there is at any point in time a pattern of activation in each layer of units that (more-or-less accurately) represents an event (i.e., an item or a distractor in a specific position). We regard this currently active representation as the content of the focus of attention. The active representations in the focus of attention are those that are available for processes such as encoding (through Hebbian learning) and removal (through Hebbian antilearning, as explained below). By default, the last-presented stimulus (item or distractor), together with its serial position, is in the focus of attention, and thereby the association between that stimulus and its position can be encoded or removed.

The following sections present SOB-CS formally. To facilitate exposition, the variables used in the equations and their roles in the model are summarized in Table 1. The MATLAB model code is available as Supplementary Materials for this article.[Footnote 1]

Architecture and representations

SOB-CS consists of two layers of units that are fully interconnected by a weight matrix W. The item layer, with 150 units, represents items; the position layer, comprising 16 units, represents the serial positions in the current list. Items are represented by vectors of +1 and –1, constructed at random in accordance with constraints on their similarity structure. Position markers are constant vectors of values between –1 and +1, constructed such that their similarity reflects their ordinal relation. The similarity between any two position markers (expressed as their cosine) decreases exponentially with their absolute ordinal distance:

$$\cos(\mathbf{p}_i, \mathbf{p}_j) = s_p^{\,|i-j|} \tag{1}$$

where i and j are the positions of the ith and jth items, p_i and p_j are vectors representing positional markers at those positions, and s_p is a fixed parameter determining the degree of overlap of successive positional markers (s_p = .5 throughout, as in Farrell, 2006, and Oberauer & Lewandowsky, 2008).[Footnote 2]
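One way to obtain position markers with exactly this exponential similarity gradient (cosine = s_p^|i−j|) is a first-order autoregressive construction over orthonormal basis vectors. This construction is an assumption made for illustration and is not necessarily how the SOB-CS code builds its markers. It does yield 16 unit-length vectors whose entries lie between 0 and 1, which is within the range stated above. The function names are hypothetical.

```python
import math

def position_markers(n_positions, s_p):
    """Unit-length position vectors with cos(p_i, p_j) = s_p ** |i - j|.

    Assumed construction: p_1 = e_1 and p_k = s_p * p_(k-1) + sqrt(1 - s_p**2) * e_k,
    where e_k is the k-th standard basis vector (one unit per position).
    """
    markers = []
    for k in range(n_positions):
        e_k = [1.0 if m == k else 0.0 for m in range(n_positions)]
        if not markers:
            markers.append(e_k)
        else:
            prev = markers[-1]
            markers.append([s_p * a + math.sqrt(1 - s_p ** 2) * b for a, b in zip(prev, e_k)])
    return markers

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))

P = position_markers(16, 0.5)  # 16 position units, s_p = .5 as in the text
print(round(cosine(P[0], P[1]), 3), round(cosine(P[0], P[2]), 3))  # 0.5 0.25
```

The construction works for the following reason. Each new marker keeps a fraction s_p of its predecessor and adds a fresh orthogonal component. Similarity therefore shrinks by a factor of s_p with each step of ordinal distance.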

Whereas position markers are shared by all tasks requiring memory for serial order, the representations of stimuli depend on the category of items and the distractors involved in a task. We constructed representations for four categories of stimuli used in the experiments that we simulated: letters, digits, words, and generic visuospatial stimuli, each of which can serve as memory items or as distractors. For letters, we used the representations of 16 consonants (six similar and ten dissimilar) constructed by Farrell (2006) and Lewandowsky and Farrell (2008a) for use with C-SOB. The similarity structure between these 16 vectors reflects a three-dimensional multidimensional scaling solution for an empirical confusion matrix between these letters (Hull, 1973). The average similarity between consonant representations, computed as their cosine, was .65 for the similar and .50 for the dissimilar subsets.

Nine vectors were constructed to represent digits. These were created from a common prototype such that their average similarity was .50; this value reflects the fact that digits are, on average, less confusable than letters (Jacobs, 1887). Finally, we created nine sets of nine words each. The words within each set were similar to each other (cosine = .65), and words from different sets were dissimilar (cosine = .50). For simulations of experiments not manipulating similarity, we used a random mixture of similar and dissimilar letters or words for all memory lists and distractor sets. Visuospatial stimuli (used in Simulations 5 and 6) were generated in the same way as the words, except that they were represented in a separate section of the item layer.

Distractor representations were created in the same way as the item representations, that is, by sampling individual distractors from a distractor prototype. For simulations in which items and distractors came from the same broad category (i.e., digits, letters, or words), we used the same prototype for generating both items and distractors, so that the average similarity between an item and a distractor equaled that between two items and between two distractors. For simulations in which the items and distractors came from different categories, we derived the prototype of the distractors from the prototype of the items, so that they had a similarity governed by the item–distractor similarity parameter s_c, which was set to .35, the same value as in previous simulations (Oberauer & Lewandowsky, 2008).[Footnote 3]
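The prototype-based construction of items and distractors can be sketched with +1/−1 vectors. For illustration, assume that an exemplar is derived from its source vector by flipping the sign of a fixed number k of randomly chosen units. Flipping k of n units gives a cosine of 1 − 2k/n with the source. Two exemplars that each sit at cosine c from a shared prototype then have an expected pairwise cosine of about c².

The flipping scheme, the helper names, and the random seed are assumptions, not the authors' sampling procedure. The sketch reproduces two cases from the text: a digit set with a mean pairwise cosine near .50, and a distractor prototype at cosine s_c = .35 from the item prototype.

```python
import math
import random

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))

def derive(source, target_cos, rng):
    """Copy a +1/-1 vector, flipping round(n * (1 - target_cos) / 2) randomly chosen
    units, so the copy has cosine 1 - 2k/n (close to target_cos) with the source."""
    n = len(source)
    flipped = set(rng.sample(range(n), round(n * (1 - target_cos) / 2)))
    return [-x if k in flipped else x for k, x in enumerate(source)]

def mean_pairwise_cosine(vectors):
    """Average cosine over all distinct pairs of vectors."""
    pairs = [(i, j) for i in range(len(vectors)) for j in range(i + 1, len(vectors))]
    return sum(cosine(vectors[i], vectors[j]) for i, j in pairs) / len(pairs)

rng = random.Random(2012)
N_UNITS = 150  # size of the item layer in SOB-CS

# Same category: digits sampled from one prototype. Exemplars at cosine sqrt(.5)
# from the prototype have an expected pairwise cosine of about .5.
item_proto = [rng.choice((-1, 1)) for _ in range(N_UNITS)]
digits = [derive(item_proto, math.sqrt(0.5), rng) for _ in range(9)]

# Different category: the distractor prototype is derived from the item prototype
# at cosine s_c = .35, and distractors are sampled from it in the same way.
S_C = 0.35
distractor_proto = derive(item_proto, S_C, rng)
distractors = [derive(distractor_proto, math.sqrt(0.5), rng) for _ in range(9)]

print(round(cosine(item_proto, distractor_proto), 3))  # 0.347
print(round(mean_pairwise_cosine(digits), 2))
```

With n = 150, the number of flipped units must be a whole number. The derived distractor prototype therefore lands at 1 − 2·49/150 ≈ .347 rather than exactly .35.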

Encoding and recall

Figure 1 provides a schematic illustration of the encoding process in SOB-CS. Each presented memory item activates its representation in the item layer, and at the same time, the representation of the next available list position is activated in the position layer. The representations active in the item layer and the position layer jointly constitute the current focus of attention. Encoding into working memory occurs by associating the item representation with the position representation in the focus. Once associative encoding is completed, that item–position pair is replaced in the focus by the next item–position pair. Encoding uses standard Hebbian learning (see, e.g., Anderson, 1995):

Figure 1. Schematic illustration of encoding in SOB-CS. Top left panel: State of the weight matrix after encoding stimulus i – 1, W_{i–1}. The new position vector p_i is activated in the position layer, and its activation is forwarded through W_{i–1} to generate an expectation (= W_{i–1} p_i) for the incoming stimulus in the item layer. Activation values of the units in the position and item layers are coded by shade (white = –1, gray = 0, black = 1). A new stimulus, represented by vector v_i, is initially matched against the expected vector. The degree of match is measured as energy E_i. Top right panel: Energy E_i is translated into the asymptote of encoding strength, A, by the logistic function, governed by free parameters e and g. Parameter e is the point on the E_i scale at which A = 0.5 (illustrated by the dotted line), and parameter g determines the steepness of the function around that point. The dashed line shows the resulting A for a stimulus with E_i = –300. Bottom left panel: Encoding strength η_e for encoding stimulus i is computed as a negatively accelerated exponential function of the time t spent on encoding. The dotted line shows the resulting encoding strength for an item encoded for 1.5 s; the dashed line shows encoding strength for a distractor encoded for 0.3 s. Bottom right panel: Updating of the weight matrix according to the outer product of the item representation v_i and the transposed position representation p_i^T, multiplied by the encoding strength η_e. Bold lines are the connection weights increased in this learning event because they connect units with same-signed activation. Thin lines are connection weights that are decreased because they connect units with opposite-signed activation.

$$\Delta\mathbf{W} = \eta_e(i)\,\mathbf{v}_i\,\mathbf{p}_i^{\mathsf{T}} \tag{2}$$

where W is the weight matrix connecting the position layer to the item layer, v_i is the vector representing the ith presented item, and p_i is the positional marker for the ith serial position, which is transposed for computing the outer product of the two vectors.
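Below is a minimal plain-Python sketch of the Hebbian outer-product update. W is stored as a list of rows, with one row per item unit and one column per position unit. The sketch encodes three toy items at three overlapping position markers and then cues position 1. The markers have cosines of .5 between neighbors and .25 between positions 1 and 3, following the exponential gradient with s_p = .5. The vectors, sizes, unit encoding strength, and helper names are illustrative assumptions.

```python
import math

def hebbian_update(W, v, p, eta):
    """Return W + eta * v p^T, associating item vector v with position marker p."""
    return [[w + eta * v_r * p_c for w, p_c in zip(row, p)] for row, v_r in zip(W, v)]

def cue(W, p):
    """Forward position cue p through W to the item layer (the product W p)."""
    return [sum(w * x for w, x in zip(row, p)) for row in W]

# Overlapping unit-length markers: cos(p1, p2) = cos(p2, p3) = .5, cos(p1, p3) = .25.
r = math.sqrt(0.75)
markers = [[1.0, 0.0, 0.0], [0.5, r, 0.0], [0.25, 0.5 * r, r]]

# Three toy items on four item units (hypothetical values).
items = [[1, 1, -1, -1], [1, -1, 1, -1], [-1, 1, 1, -1]]

W = [[0.0] * 3 for _ in range(4)]
for v, p in zip(items, markers):
    W = hebbian_update(W, v, p, eta=1.0)  # all associations superimposed in one W

retrieved = cue(W, markers[0])
expected = [a + 0.5 * b + 0.25 * c for a, b, c in zip(*items)]
```

All associations share one matrix. Cueing position 1 therefore returns v_1 + .5·v_2 + .25·v_3 rather than v_1 alone. This blending is the distortion that limits memory in SOB-CS.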

We model encoding as a time-dependent process: The encoding strength η_e for the ith item, η_e(i), is calculated as a function of the time spent encoding the item:

$$\eta_e(i) = A(i)\left[1 - \exp(-R\,t_e)\right] \tag{3}$$

where A(i) is the asymptote of the encoding strength of item i, R is the rate of encoding, and t_e is the time spent on encoding. Thus, the encoding strength η_e that determines the strength of Hebbian association is assumed to grow toward asymptote at an encoding rate of R. The asymptote itself is a function of the novelty of the incoming item, which is defined as the energy between the to-be-learned association and the information captured by W up to that point. Specifically, the asymptote A(i) for the encoding strength of item i is a logistic function of that item’s energy, E_i:

$$A(i) = \frac{1}{1 + \exp\left[-g\,(E_i - e)\right]} \tag{4}$$

where e and g are the threshold and gain parameters, respectively, of the logistic function. For all simulations, e was set to –1,000 and g to 0.0033. Simulations of simple span have shown that these values generate the highest level of memory accuracy without producing empirically unrealistic serial-position curves. The use of a logistic function smoothly restricts A(i) to fall between zero and unity, thus preventing the occurrence of implausible (e.g., <0) encoding weights. Constraining encoding strength in this way represents an improvement over previous instantiations of the model, in which the encoding strength was not bounded, without altering the basic principle of energy-gated encoding.
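The logistic mapping from energy to encoding asymptote can be sketched directly. The function assumes the standard logistic form implied by the description: a threshold e at which A = 0.5 and a gain g that sets the slope. It uses the parameter values given in the text (e = −1,000, g = 0.0033). The function name is hypothetical. Splitting the computation by the sign of the exponent is an implementation choice that avoids floating-point overflow for extreme energies.

```python
import math

def asymptote(energy, e=-1000.0, g=0.0033):
    """Encoding asymptote A(i) as a logistic function of energy E_i.

    e is the energy at which A = 0.5; g is the gain (steepness).
    More novel stimuli have higher (less negative) energy and a higher asymptote.
    """
    z = g * (energy - e)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0, so this cannot overflow
    return ez / (1.0 + ez)

print(asymptote(-1000.0))           # 0.5 at the threshold
print(round(asymptote(-300.0), 3))  # 0.91, the E_i = -300 case marked in Figure 1
```

The output always stays between 0 and 1, however extreme the energy. This is the bounding property that the logistic function adds over earlier, unbounded versions of the model.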

The energy of the ith association is given by

$$E_i = -\mathbf{v}_i^{\mathsf{T}}\,(\mathbf{W}\mathbf{p}_i) \tag{5}$$

(see Lewandowsky & Farrell, 2008b). The computation of energy can be interpreted as the generation of an expectation for the item in position i, given the current state of memory as reflected in the state of the weight matrix before encoding of item i. The expectation is computed by cueing the weight matrix W with the new position p_i. Energy is the negative dot product between the expectation (computed as Wp_i) and the actual item v_i, and it reflects the degree of mismatch between the expectation and the actual item, that is, the item’s novelty. Equation 4 implies that more novel items, which have less negative (or even positive) energy values, are encoded more strongly. The use of energy to compute the weighting of incoming information is a core principle of SOB that turns out to be critical for the model’s predictions for complex span. Recent research on the processing of novelty in the hippocampus lends support to a mechanism very similar to the one assumed for SOB-CS (Kumaran & Maguire, 2007).
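Novelty gating can be made concrete by computing a stimulus's energy before it is encoded. The sketch assumes plain Python lists, a single unit-length position marker, and 150-unit +1/−1 vectors. The two patterned distractor vectors and the helper names are hypothetical. The absolute energy values depend on vector scaling, which this toy example does not calibrate against the model's parameters.

The sketch shows the qualitative point made earlier for distractors. After a distractor has been encoded, presenting the same distractor again yields a more negative energy, and therefore a lower encoding asymptote, than presenting a different one.

```python
import math

def energy(W, v, p):
    """E = -v . (W p): the negated match between the expectation W p and the stimulus v."""
    expectation = [sum(w * x for w, x in zip(row, p)) for row in W]
    return -sum(a * b for a, b in zip(v, expectation))

def asymptote(E, e=-1000.0, g=0.0033):
    """Logistic encoding asymptote with threshold e and gain g, as in the text."""
    return 1.0 / (1.0 + math.exp(-g * (E - e)))

n_items, n_pos = 150, 16
p = [1.0] + [0.0] * (n_pos - 1)                             # toy unit-length position marker
d_old = [1 if k % 2 == 0 else -1 for k in range(n_items)]  # distractor already processed
d_new = [1 if k % 3 == 0 else -1 for k in range(n_items)]  # a different distractor

# Encode d_old into an empty memory (energy 0) with time-unlimited encoding,
# so the encoding strength equals the asymptote.
W = [[0.0] * n_pos for _ in range(n_items)]
eta = asymptote(energy(W, d_old, p))
W = [[w + eta * v_r * p_c for w, p_c in zip(row, p)] for row, v_r in zip(W, d_old)]

E_repeat = energy(W, d_old, p)  # the same distractor again: strongly expected
E_novel = energy(W, d_new, p)   # a different distractor: weakly expected
print(round(asymptote(E_repeat), 3), round(asymptote(E_novel), 3))
```

Because the repeated distractor matches the expectation, it is encoded less strongly. This is why processing the same distractor repeatedly incurs less interference than processing different distractors.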

Our computation of encoding strength in SOB-CS differs from previous instantiations of SOB in that it makes encoding strength time-dependent. The duration of encoding an item into working memory can be estimated from dual-task studies (Jolicœur & Dell’Acqua, 1998) and from studies using masked presentation of visual stimuli (Vogel, Woodman, & Luck, 2006). These studies converge on an estimate of 150–300 ms as the average time for encoding an item into working memory. Jolicœur and Dell’Acqua applied a formal model to three of their experiments, from which we estimated the encoding rate for individual letters to be about six items per second.[Footnote 4] We therefore set the encoding rate to R = 6 in Eq. 3. This value implies that η_e(i) reaches 95% of its asymptote after 500 ms. Thus, with encoding times of 500 ms or more, encoding in SOB-CS is virtually indistinguishable from encoding in previous versions of C-SOB, and Eq. 3 functionally reduces to the simple equality η_e(i) = A(i) that, apart from the logistic squashing function, is familiar from earlier applications of C-SOB.
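The time course of encoding strength can be checked numerically. The sketch assumes the negatively accelerated exponential form described above, η_e(i) = A(i)(1 − exp(−R·t_e)), with R = 6 per second and t_e in seconds. The function name is hypothetical. The sketch reproduces the 95% figure for 500 ms and compares the 1.5-s item and 0.3-s distractor cases shown in Figure 1.

```python
import math

def encoding_strength(A, t_e, R=6.0):
    """eta_e = A * (1 - exp(-R * t_e)): grows toward the asymptote A at rate R (per second)."""
    return A * (1.0 - math.exp(-R * t_e))

print(round(encoding_strength(1.0, 0.5), 3))  # 0.95  -> 95% of asymptote after 500 ms
print(round(encoding_strength(1.0, 1.5), 3))  # 1.0   -> an item encoded for 1.5 s
print(round(encoding_strength(1.0, 0.3), 3))  # 0.835 -> a distractor encoded for 0.3 s
```

Since 1 − exp(−3) ≈ .95, any encoding time of 500 ms or more leaves η_e(i) within about 5% of A(i).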

Retrieval proceeds by reinstating the position markers in their original order, one by one, as cues for the items with which they were associated. For position $i$, retrieval is cued by presenting the activation pattern for positional marker $p_i$ in the position layer and updating the activation in the item layer by forwarding activation through the weight matrix:

$$v_i' = W p_i \tag{6}$$

where the resultant vector in the item layer, $v_i'$, is a distorted version of the original item vector $v_i$. It is a blend of $v_i$ and the other item representations in the trial, all of which were associated with partially overlapping positional markers. To determine which item to recall, the retrieved vector $v_i'$ is matched against the vectors $v_j$ of all known retrieval candidates in long-term memory. The similarity of $v_i'$ to each retrieval candidate is computed as

In this equation, the Euclidean distance measure D is weighted by the free parameter c, which determines the discriminability between retrieval candidates. With larger values of c, similarity falls off more steeply with distance, so that the most similar retrieval candidate is more clearly discriminated from the less similar ones. For computational reasons, D is normalized by subtracting the minimum distance across all of the n retrieval candidates from the distance for each candidate.

The probability of recalling an item j is computed from these similarities by the Luce choice rule:

$$P(j) = \frac{s(v_i', v_j)}{\sum_{k=1}^{n} s(v_i', v_k)} \tag{8}$$

where $n$ is the number of retrieval candidates. A candidate is then selected for recall by random sampling from the candidate set, with each candidate's sampling probability given by Eq. 8. The intact representation of the candidate selected for output, $v_{o,i}$, replaces the originally retrieved vector $v_i'$. This places a clearly identified retrieval candidate into the focus of attention. Figure 2 illustrates retrieval in SOB-CS.
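The retrieval steps can be strung together in a toy sketch. The steps are cueing $W$ with $p_i$, measuring the distance to each candidate, applying the Luce choice rule, and sampling. The exact form of the similarity function (Eq. 7) is not reproduced in this excerpt, so the sketch assumes $s = e^{-c(D - D_{\min})}$. Under that assumed form, subtracting $D_{\min}$ multiplies every similarity by the same constant, which cancels in Eq. 8. All vectors, unit encoding strengths, and function names are hypothetical.

```python
import math
import random

def outer(v, p):
    """Outer product v p^T (Hebbian association of item v with marker p)."""
    return [[vi * pj for pj in p] for vi in v]

def mat_add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def cue(W, p):
    """Retrieved vector v' = W p."""
    return [sum(w * pj for w, pj in zip(row, p)) for row in W]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def recall_probabilities(retrieved, candidates, c=1.3):
    """Luce choice over similarities exp(-c * (D - D_min)) (assumed form)."""
    distances = [euclidean(retrieved, v) for v in candidates]
    d_min = min(distances)  # min-distance normalization described in the text
    sims = [math.exp(-c * (d - d_min)) for d in distances]
    total = sum(sims)
    return [s / total for s in sims]

def recall(W, p, candidates, c=1.3, rng=random):
    """Cue with p, sample one candidate, and return its index and intact vector."""
    probs = recall_probabilities(cue(W, p), candidates, c)
    j = rng.choices(range(len(candidates)), weights=probs, k=1)[0]
    return j, candidates[j]

# Two list items on overlapping markers, plus one extralist candidate.
p1, p2 = [1, 1, 1, 1], [1, 1, 1, -1]          # p1 . p2 = 2
v1, v2, v3 = [1, 1, 1, 1], [1, -1, 1, -1], [1, 1, -1, -1]
W = mat_add(outer(v1, p1), outer(v2, p2))     # unit strengths (simplification)
candidates = [v1, v2, v3]

print(cue(W, p1))  # [6, 2, 6, 2], i.e. 4 * v1 + 2 * v2: a blend
print(recall_probabilities(cue(W, p1), candidates, c=1.3))
print(recall(W, p1, candidates, rng=random.Random(0)))
```

Raising `c` sharpens the choice probabilities toward the closest candidate, which is the discriminability role described for $c$.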

Figure 2. Schematic illustration of retrieval in SOB-CS. Left panel: The positional cue $p_i$ is activated in the position layer and forwards its activation through the weight matrix $W$ to the item layer, creating an approximation of the item vector, $v_i'$. This vector is compared with the vectors of all retrieval candidates, and the distance $D(v_i', v_j)$ is computed for each candidate. Right panel: Distance is converted into similarity by a Gaussian gradient. Three gradients are shown, one for each of the three values of $c$ used in different simulations in this article.

The set of recall candidates includes not only the list items but also other items in the experimental vocabulary. This enables the model to generate extralist intrusion errors. When the distractors come from the same stimulus category as the items (e.g., both are words), we assume that the distractors are also included in the candidate set, so that intrusions of distractors in recall can be modeled. (When distractors are categorically different from the memoranda, they are excluded from the set of candidates because people can prevent intrusions on the basis of categorical information.)

Overt recall itself impairs memory for the remaining items; this effect is known as output interference (Cowan, Saults, Elliott, & Moreno, 2002; Fitzgerald & Broadbent, 1985; Oberauer, 2003b). In SOB-CS, as in C-SOB, we implemented output interference by adding Gaussian noise with standard deviation $N_o$ to each weight in $W$ after recall of each item.
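Output interference is straightforward to express in code. The sketch below assumes the weight matrix is a list of rows and uses Python's `random.gauss`. The function name is hypothetical, and the default `sd=1.5` is the value of $N_o$ given for the simulations.

```python
import random

def add_output_noise(W, sd=1.5, rng=random):
    """Return a new weight matrix with independent Gaussian noise
    (mean 0, standard deviation sd = N_o) added to every weight."""
    return [[w + rng.gauss(0.0, sd) for w in row] for row in W]

W = [[1.0, -1.0], [0.5, 0.0]]
W_after = add_output_noise(W, sd=1.5, rng=random.Random(42))
print(W_after)
```

In a recall loop, the function would be called once after each output, so interference accumulates across output positions.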

A common assumption in models of serial recall is that recalled items are suppressed to avoid perseveration. Response suppression has been modeled in SOB by Hebbian antilearning (Anderson, 1991), which operates in the same way as Hebbian learning except that the encoding strength is negative. The negative sign implies that the association between the recalled item, $v_{o,j}$, and its position is removed from the weight matrix:

$$W \leftarrow W - \eta_s(j)\, v_{o,j}\, p_j^{\top} \tag{9}$$

where $j$ is the output position and $\eta_s(j)$ is the strength of suppression at output position $j$.
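Here is a minimal sketch of response suppression by Hebbian antilearning. It assumes outer-product associations and a suppression strength of 1 that matches the unit strength used at encoding. The orthogonal position markers are a simplification chosen so that removal is exact. With overlapping markers, or with $\eta_s(j) < 1$ as produced by time-limited suppression, removal would only be partial. Vectors and helper names are hypothetical.

```python
def outer(v, p):
    """Outer product v p^T."""
    return [[vi * pj for pj in p] for vi in v]

def add_scaled(W, M, eta):
    """Return W + eta * M; a negative eta implements antilearning."""
    return [[w + eta * m for w, m in zip(w_row, m_row)]
            for w_row, m_row in zip(W, M)]

def cue(W, p):
    """Retrieved vector W p."""
    return [sum(w * pj for w, pj in zip(row, p)) for row in W]

p1, p2 = [1, 1, -1, -1], [1, -1, 1, -1]  # orthogonal markers (toy simplification)
v1, v2 = [1, 1, 1, 1], [1, -1, -1, 1]

W = [[0.0] * 4 for _ in range(4)]
W = add_scaled(W, outer(v1, p1), 1.0)    # encode item 1 at position 1
W = add_scaled(W, outer(v2, p2), 1.0)    # encode item 2 at position 2

# Item 1 has just been recalled: suppress it with eta_s = 1.
W = add_scaled(W, outer(v1, p1), -1.0)

print(cue(W, p1))  # association at position 1 removed: all zeros
print(cue(W, p2))  # item 2 still retrievable: 4 * v2
```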

Like Hebbian learning during encoding, Hebbian antilearning during response suppression takes time, and SOB-CS represents this explicitly: The antilearning strength of suppression, $\eta_s(j)$, is a function of time:

$$\eta_s(j) = \Omega(j)\left(1 - e^{-r t_s}\right) \tag{10}$$

where $t_s$ is the time devoted to response suppression of item $j$ (set to 1 s in all simulations, the approximate duration of serial recall of letters; Farrell & Lewandowsky, 2004, Exp. 2), and $r$ is the rate at which representations are removed from working memory. $\Omega(j)$ is the asymptotic antilearning strength, computed by a logistic function of the item's energy:

where $E_j$ is the energy of the just-recalled item and $E_1$ is the energy of the item recalled at the first output position. Dividing by $E_1$ corrects for the overall energy of the list. When more items are stored, or positional markers overlap more, the energy computed during recall will on average be larger in magnitude, and dividing by $E_1$ uses the energy of the first recalled item to correct for this variability. For the first output position, a default value of $\Omega(1) = 1$ is used. The energy of the recalled item $j$ is computed with the same formula as Eq. 5, but with the presented item replaced by the recalled item:

$$E_j = -\,v_{o,j}^{\top}\, W\, p_j \tag{12}$$

Distractor encoding and removal

Following our previous work (Oberauer & Lewandowsky, 2008), we assume that all distractors are associated with the position of the immediately preceding item. That item's position is still held in the focus of attention because position representations are updated only when a new memory item is presented. Distractors are represented in the same way as items: as random vectors of –1 and +1 generated according to the stimulus category (digits, consonants, or words), as described earlier. Processing a distractor means activating it in the focus of attention, whereupon Hebbian learning automatically associates it with the currently active position marker, using the same mechanism as for learning list items (Eq. 2):

$$W \leftarrow W + \eta_e(j,k)\, d_{j,k}\, p_j^{\top} \tag{13}$$

where $W$ is the weight matrix, $d_{j,k}$ is the vector representing distractor $k$ following item $j$, $p_j$ is the position marker of position $j$, and $\eta_e(j,k)$ is the encoding strength of the distractor. The asymptotic encoding strength of the $k$th distractor following item $j$ is computed, like that of an item, as a logistic function of its energy:

The energy $E_{j,k}$ of each distractor $k$ following item $j$ is computed as before, according to Eq. 5, using the position marker of the preceding item, $p_j$. Distractors are associated with positions already occupied by an item, and all representations within a content domain are at least moderately positively correlated. Distractors are therefore typically less novel than items (i.e., their energy is lower), so energy-gated encoding stores them with less strength. Like the encoding of items, the encoding of distractors takes time, such that the encoding strength increases with the duration of distractor encoding, $t_d$:

$$\eta_e(j,k) = A(j,k)\left(1 - e^{-R t_d}\right) \tag{15}$$

The distractor is encoded while attention is devoted to it (Phaf & Wolters, 1993). Like items, distractors reach near-asymptotic encoding strength after about 500 ms.

As noted earlier, any free time during complex-span processing is used to remove the immediately preceding distractor, using the same mechanism of Hebbian antilearning introduced above for response suppression:

$$W \leftarrow W - \eta_r(j,k)\, d_{j,k}\, p_j^{\top} \tag{16}$$

where $\eta_r(j,k)$ is the antilearning strength for distractor $d_{j,k}$, the $k$th distractor following the item in position $j$. Removal is a gradual process that proceeds relatively slowly compared with encoding. Therefore, the strength of antilearning is computed as a function of the available free time, $t_f$, and the asymptotic removal strength of the distractor, $\Omega(j,k)$:

$$\eta_r(j,k) = \Omega(j,k)\left(1 - e^{-r t_f}\right) \tag{17}$$

where $r$ is the rate of removal, which also governs the rate of response suppression (Eq. 10). The asymptotic removal strength is computed in the same way as the asymptotic strength of response suppression (Eq. 11):

where $E_{1,1}$ is the energy of the first distractor in the first processing episode. For the first distractor in a trial, $\Omega(1,1) = 1$.

Experiments in which participants were instructed to remove part of the contents of working memory, temporarily or permanently, have shown that removal takes between 1 and 2 s (Oberauer, 2001, 2002). We therefore set $r$ to 1.5, which implies that the antilearning strength for removal reaches 95 % of its asymptote $\Omega(j,k)$ after 2 s.
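Both temporal consequences of $r = 1.5$ can be checked quickly. The first is removal after 2 s of free time. The second is response suppression with $t_s = 1$ s. The sketch assumes that the antilearning strengths have the exponential form $\eta = \Omega\,(1 - e^{-rt})$, which is our reading of Eqs. 10 and 17 given the stated 95 % figure. The helper name is hypothetical.

```python
import math

r = 1.5  # removal rate (per second), also used for response suppression

def antilearning_fraction(t, rate=r):
    """Share of the asymptote Omega reached after t seconds,
    assuming eta = Omega * (1 - exp(-rate * t))."""
    return 1.0 - math.exp(-rate * t)

print(round(antilearning_fraction(2.0), 3))  # 0.95: removal after 2 s of free time
print(round(antilearning_fraction(1.0), 3))  # 0.777: suppression with t_s = 1 s
```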

To summarize, SOB-CS has four fixed parameters and six free parameters (see Table 1). We regard as fixed those parameters that were treated as fixed in previous versions of C-SOB and whose values we did not change; they all pertain to the similarity between representations. We regard as free those parameters that we adjusted manually, either on the basis of independent evidence (as for the rate parameters for encoding and removal) or to generate good model fits to the benchmark data. Except in a few explicitly noted cases, the free parameters had the same values in all simulations reported in this article. These values are: the threshold and gain parameters of the logistic function that translates energy into encoding strength, $e = -1{,}000$ and $g = 0.0033$; the encoding rate, $R = 6$; the removal rate, $r = 1.5$; the confusability parameter for items during retrieval, $c = 1.3$; and the output interference parameter, $N_o = 1.5$.
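For reference, the default free parameters can be collected in one place. The class below is a hypothetical Python container, not part of any published implementation. `dataclasses.replace` shows how a simulation that departs from the defaults, such as one that raises $c$ to 2.0, could override a single value while leaving the rest untouched.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class SOBCSParams:
    """Hypothetical container for the default free parameters of SOB-CS."""
    e: float = -1000.0   # threshold of the energy-to-strength logistic
    g: float = 0.0033    # gain of that logistic
    R: float = 6.0       # encoding rate (per second)
    r: float = 1.5       # removal / response-suppression rate (per second)
    c: float = 1.3       # discriminability of retrieval candidates
    N_o: float = 1.5     # SD of output-interference noise

DEFAULTS = SOBCSParams()
sim2 = replace(DEFAULTS, c=2.0)  # a run that raises c to 2.0
print(sim2)
```

Making the container frozen means that no simulation can change the shared defaults by accident.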

Before applying SOB-CS to data from the complex-span paradigm, we briefly summarize an alternative account of complex-span performance, TBRS* (Oberauer & Lewandowsky, 2011), because that model serves as an important baseline for assessing SOB-CS’s account of several key phenomena.

An alternative theory: The time-based resource-sharing (TBRS) model

A new model must at the very least explain the data that constitute the main empirical support for extant theories and models. One contender for explaining performance in complex span is the time-based resource-sharing (TBRS) theory (Barrouillet et al., 2004), which has recently been instantiated in a computational model, TBRS* (Oberauer & Lewandowsky, 2011). TBRS* is the most sophisticated implementation yet of two popular assumptions about working memory: that memory traces decay rapidly over time, and that decay can be prevented by some form of active maintenance (i.e., rehearsal or refreshing). It is clear from the foregoing discussion that those assumptions stand in diametric opposition to the architecture of SOB-CS. Our first goal in this article is therefore to demonstrate that SOB-CS can explain the key phenomena cited in support of TBRS and TBRS* without committing to the core assumptions of the TBRS theory. Here, we summarize the TBRS theory and the key findings in its support.

The TBRS theory rests on two basic assumptions. First, forgetting is driven by time-based decay, which must be offset by reactivating or refreshing items to prevent loss of information. Second, working memory has at its disposal a general attentional mechanism that can be devoted to only one task at a time. In the complex-span paradigm, this mechanism must deal with the distracting processing demands in between the encoding of items. However, attention can rapidly switch between processing operations (e.g., carrying out an addition in an operation span task) and refreshing memory items, so even small temporal gaps between individual operations can be used for refreshing. As a consequence, the theory predicts that memory performance will decline with increasing time during which attention is occupied by distractor processing, and that it will improve with increasing free time that can be devoted to refreshing. These predictions can be summarized by the concept of cognitive load, defined as the proportion of the available processing time between two memory items during which attention is captured by the distractor task:

$$CL = \frac{aN}{T}$$

with N representing the number of operations in a processing episode, a the time demand of each individual operation, and T the total time available for that processing episode. The TBRS theory predicts that memory is a monotonically declining function of increasing cognitive load, as less time is proportionally available for refreshing items. Additionally, when cognitive load is held constant, Barrouillet et al. (2004) predicted that the number of distractor operations would have no effect on memory.
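The cognitive-load equation is simple, but a small sketch makes the two manipulations explicit. The operation duration of 0.4 s is a hypothetical value, and the 4, 6, or 8 judgments within 6.4 s mirror the pace manipulation of Barrouillet et al. (2007). The function name is ours.

```python
import math

def cognitive_load(a, N, T):
    """Cognitive load CL = aN / T (Barrouillet et al., 2004)."""
    if T <= 0:
        raise ValueError("T must be positive")
    return a * N / T

a = 0.4  # hypothetical operation duration in seconds

# Faster pace: more operations in the same 6.4-s episode raise the load
# (0.25, 0.375, 0.5 after rounding).
for N in (4, 6, 8):
    print(N, round(cognitive_load(a, N, 6.4), 3))

# More operations at a constant pace: N and T grow together, so load is unchanged.
print(math.isclose(cognitive_load(a, 4, 6.4), cognitive_load(a, 8, 12.8)))  # True
```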

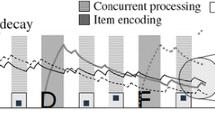

To test these predictions, Barrouillet, Camos, and their colleagues developed a version of the complex-span paradigm that increases experimental control over participants’ scheduling of individual processing steps in the task (Barrouillet et al., 2004; Barrouillet et al., 2007). Following presentation of each memory item, participants work through a computer-paced sequence of processing steps (e.g., reading aloud a digit or making a speeded choice judgment). Figure 3 shows the schematic flow of events in this version of complex span. Presentation of each item is followed by a processing episode of fixed duration T, which is broken down into N processing steps. In each processing step, a relatively elementary cognitive operation is carried out on a distractor stimulus (e.g., reading a word, carrying out an arithmetic operation, or classifying a stimulus by a keypress). This operation is assumed to capture central attention for duration a. The remainder of the available time during each processing step is free time that, according to the TBRS theory, is used for refreshing memory traces.

Figure 3. Schematic flow of events in the complex-span paradigm of Barrouillet et al. (2004). Presentation of each memory item is followed by a processing episode that consists of a computer-paced series of distractor stimuli, each demanding a response and followed by a free-time interval until the onset of the next distractor. Operation duration refers to the time for which generating a response to a distractor captures the central attentional mechanism; it is not directly observable but can be approximately inferred from response latencies. Cognitive load is defined as aN/T, where N is the number of distractors in a processing episode, a is the operation duration, and T is the total time of the processing episode.

Experiments within this paradigm have revealed three consistent regularities that lend support to the cognitive-load equation: First, memory performance decreases as the pace at which processing steps are required increases (Barrouillet et al., 2004; Barrouillet et al., 2007). This effect has been found across a large variety of memory materials and distractor tasks (Hudjetz & Oberauer, 2007; Vergauwe, Barrouillet, & Camos, 2010), and with children as well as adults (Barrouillet, Gavens, Vergauwe, Gaillard, & Camos, 2009; Portrat, Camos, & Barrouillet, 2009). In terms of the cognitive-load equation, increasing the pace means increasing the ratio of aN to T by increasing N, reducing T, or both.

Second, when pace is held constant and the time demand of individual operations is increased by making the operations more difficult, memory suffers (Barrouillet et al., 2007). The theory predicts this because increasing the time demand a while holding the ratio of N to T constant increases cognitive load. Figure 4 illustrates the first two effects: Memory span declined as the pace of the processing task was increased, and span was lower for the processing task with longer response times overall (i.e., the parity judgment task).

Figure 4. Effect of cognitive load on memory span. Participants remembered consonants and carried out one of two distractor tasks (parity judgments or location judgments) that differed in their mean response times. Pace was manipulated by requiring 4, 6, or 8 judgments within a constant total time of 6.4 s. From “Time and Cognitive Load in Working Memory,” by P. Barrouillet, S. Bernardin, S. Portrat, E. Vergauwe, and V. Camos, 2007, Journal of Experimental Psychology: Learning, Memory, and Cognition, 33, pp. 570–585 (figure on p. 577). Copyright 2007 by the American Psychological Association. Reproduced with permission.

Third, when the pace and the time demands of individual operations are held constant, increasing the number of operations following each memory item—and, hence, the total duration for each processing episode—has often been found to leave memory performance unaffected (Barrouillet et al., 2004; Oberauer & Lewandowsky, 2008). This is predicted by the cognitive-load equation: Increasing the number of operations at a constant pace means increasing both N and T by the same proportion, so cognitive load is unchanged. This third finding, however, needs to be qualified: In a series of experiments using word reading as the distractor task, we varied the number of distractor words to be read aloud after each memory item. If the same word was repeated four times, memory was as good as when reading a single word, but when three different distractor words followed each item, additional forgetting was observed (Lewandowsky, Geiger, Morrell, & Oberauer, 2010).

In sum, the TBRS theory and its computational implementation, TBRS*, currently offer the strongest alternative account to SOB-CS for explaining key experimental results from the complex-span paradigm. We therefore regard the findings that provided initial crucial support for TBRS as the first set of benchmark results that our new model needs to explain.

Complex span: Benchmark findings and new predictions

There are no established criteria for what constitutes a benchmark finding in a field. This is an unfortunate situation, because it enables theorists to focus on those results that their preferred model handles best. To rein in this opportunistic selection of phenomena, we here state our selection criteria explicitly. We argue that benchmark findings should meet two criteria: (1) They should be theoretically informative; that is, they should count as support for, or a challenge to, the most successful theories in the field. (2) They should be robust; that is, they should be replicable across variations of theoretically unimportant features of method and materials. The findings that we selected as benchmarks for a model of complex span, summarized in Table 2, meet both of these criteria.

The benchmarks and the accompanying new predictions can be grouped into four sets. The first set consists of effects related to the interplay between short-term retention and processing, which is a longstanding topic of research and theorizing on working memory (Bayliss et al., 2003; Case et al., 1982; Towse et al., 2000). These are the effects that constitute the empirical support for the TBRS, described above. The second set consists of serial-position curves, error patterns, and transposition gradients. These are findings that, although given relatively little attention so far in the working memory literature, have been important in constraining models of serial recall. The third set pertains to the effects of similarity between memory items and distractors. These similarity effects are diagnostic for the mechanisms of interference in working memory. We test a new prediction arising from the assumptions in SOB-CS about the interference between items and distractors, and address the longstanding question of whether the disruption of immediate memory (“storage”) by distractor processing is domain-general or domain-specific. The fourth set concerns individual differences, which have been a major topic of research with the complex-span task. One of the reasons that so much interest has focused on complex span (and other working memory tasks) is that 50 % of the variance in working memory capacity across individuals is shared with measures of fluid intelligence (Conway et al., 2003). In addition, there has been considerable interest in the patterns of correlations between simple-span and complex-span tasks; we focus on those correlations because they fall within the scope of SOB-CS. In the remainder of this article, we will report the simulations through which we applied SOB-CS to these four groups of benchmark findings and present new model predictions, together with data testing them.

Cognitive load and the number of operations

The cognitive-load effect (Barrouillet et al., 2004) is an important regularity concerning the interplay between memory and processing in working memory. It implies that whereas the processing of distractors impairs memory, more generous intervals of free time between individual processing steps can be used to restore memory. The TBRS theory (Barrouillet et al., 2004) identifies decay as the cause of forgetting during processing operations, and refreshing as the beneficial force in the free intervals between operations. In contrast, SOB-CS assumes that distractor processing damages memory via the interference introduced from distractor representations entering working memory (Eqs. 13–15), and that the beneficial effect of free time arises from the gradual removal of distractor representations in-between processing steps (Eqs. 16–18).

In Simulation 1, we investigated the behavior of SOB-CS by simulating a hypothetical experiment that combined the three independent variables that have been manipulated in separate behavioral experiments to establish the first set of benchmark findings. The simulated experiment was the same as the one we used to demonstrate the feasibility of TBRS* (Oberauer & Lewandowsky, 2011, Simulation 1). The independent variables were (1) the number of distractor operations per episode (zero, one, four, or eight), where zero operations defines the simple-span baseline; (2) operation duration, that is, the duration of attentional capture for each operation (0.3, 0.5, or 0.7 s); and (3) the free time following each operation (0, 0.1, 0.6, 1.2, or 2 s). Crossing the latter two variables generated 15 cognitive-load conditions. Load was computed as operation duration divided by the sum of operation duration and free time, a/(a + f). This equals aN/T because each of the N operations is followed by the same free time f, so that T = N(a + f). The cognitive-load values are summarized in Table 3. The simulated experiment used letters as memory items and digits as distractors, as did most of the experiments of Barrouillet et al. (2004). It involved 500 virtual participants, each of whom completed three trials for each list length (one to nine) in each condition.
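The 15 load conditions of Simulation 1 can be enumerated directly from the two crossed variables, using load $= a/(a + f)$ for operation duration $a$ and free time $f$. The plain-Python sketch below uses names of our own. It also shows that several combinations share a load value: every zero-free-time condition has load 1, and two other conditions coincide at 0.2.

```python
from itertools import product

operation_durations = (0.3, 0.5, 0.7)   # a, in seconds
free_times = (0.0, 0.1, 0.6, 1.2, 2.0)  # f, in seconds

def load(a, f):
    """Cognitive load a / (a + f), equal to aN/T when T = N(a + f)."""
    return a / (a + f)

grid = {(a, f): round(load(a, f), 3)
        for a, f in product(operation_durations, free_times)}

print(len(grid))                       # 15 conditions
print(sorted(set(grid.values())))      # distinct load values
print(list(grid.values()).count(1.0))  # 3: load = 1 whenever f = 0
```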

Cognitive load

Figure 5 shows memory performance as a function of cognitive load for the four levels of number of operations. The simple-span baseline was replicated 15 times, generating one data point plotted at each level of load; the variability between these 15 identical replications provides an estimate of random noise in the simulated data. The top panel presents performance expressed as span, computed as in Barrouillet et al. (2004), and the bottom panel presents the proportions of items recalled in correct order for seven-item lists. Both panels show that memory accuracy declined in an approximately linear fashion with increasing cognitive load, in accordance with the data.

Figure 5. Results of Simulation 1. Top: Memory span as a function of cognitive load and number of distractor operations. Bottom: Proportions of correct-in-position recall as a function of cognitive load and number of operations. Dashed lines are best-fitting linear regression slopes. The 15 data points for “0 operations” are exact replications because cognitive load was not defined for that condition; their variability reflects the amount of random noise in the simulated data. The highest level of cognitive load is represented by three data points for each level of number of operations, because for all three operation durations, load = 1 when free time = 0.

The span-over-load function produced by SOB-CS is not entirely linear; it contains small but systematic nonmonotonicities. These deviations from linearity can be explained by looking at the effects of the two variables that constitute cognitive load: the duration of each operation and the free time following it. Figure 6 plots span as a function of operation duration and free time (averaging conditions with one, four, and eight operations). Operation duration has only a very small effect, and hardly any effect beyond 0.5 s, because after 0.5 s the strength of distractor encoding has nearly reached asymptote. Free time has a much larger effect, albeit with diminishing returns. The comparatively small effect of operation duration explains the nonmonotonicity in the span-over-load function. Because span depends mainly on free time and only weakly on operation duration, conditions with similar cognitive load but different free times can yield different spans, which appear as small departures from a monotonic function of load (Footnote 5).

The results of Simulation 1 clarify how SOB-CS accounts for the first two of the three benchmark results cited in support of the TBRS theory. First, increasing the pace of processing for a fixed total processing duration decreases the free time after each distractor operation, thereby leaving less time to remove the preceding distractor. Second, increasing the duration of individual operations while holding their pace constant has two effects in SOB-CS. One is that longer attention to each distractor leads to stronger encoding, thereby creating more interference. This effect is small and levels off after 500 ms. The other, more pronounced effect is that as more of the fixed time between two operations is spent on processing the distractor, less time is left for removing it.
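The two mechanisms in this paragraph can be illustrated with the time-course functions alone. The sketch assumes the exponential forms $1 - e^{-Rt}$ for encoding and $1 - e^{-rt}$ for removal, with $R = 6$ and $r = 1.5$. The 6.4-s episode follows Barrouillet et al. (2007), and the operation durations are hypothetical. This is not a simulation of SOB-CS. It only shows how much encoding and removal each timing permits.

```python
import math

R, r = 6.0, 1.5   # encoding and removal rates from the text (per second)
T = 6.4           # episode duration in seconds, as in Barrouillet et al. (2007)
a = 0.5           # hypothetical operation duration in seconds

def fraction(rate, t):
    """Share of the asymptote reached after t seconds (assumed 1 - exp(-rate * t))."""
    return 1.0 - math.exp(-rate * t)

def free_time_per_step(T, N, a):
    """Free time after each of N equally paced operations of duration a."""
    return T / N - a

# Faster pace (more steps in the same T) leaves less free time for removal.
removal = {}
for N in (4, 6, 8):
    f = free_time_per_step(T, N, a)
    removal[N] = fraction(r, f)
    print(f"N={N}: free time {f:.2f} s, removal {removal[N]:.2f} of asymptote")

# Constant pace (N = 6): longer operations strengthen encoding only up to about
# 0.5 s, but they also shrink the free time available for removal.
for d in (0.3, 0.5, 0.7):
    f = free_time_per_step(T, 6, d)
    print(f"operation {d} s: encoding {fraction(R, d):.2f}, "
          f"free time {f:.2f} s, removal {fraction(r, f):.2f}")
```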

Unpacking cognitive load: Operation duration and free time

Cognitive load is determined by two temporal variables, the duration of distractor operations and the free time in between them. These components play different roles in SOB-CS and TBRS*. In SOB-CS, increasing the operation duration has only a limited effect through increasing the strength of encoding of interfering distractors (up to about 500 ms), whereas increasing the free time has a more pronounced beneficial effect because removal of the preceding distractor is a relatively slow process. In TBRS*, extending the operation duration leads to more decay, which continues as long as attention is captured by the operation, and extending the free time enables more refreshing of memory items. The two models differ in that SOB-CS predicts only a very small effect of operation duration if free time is held constant, whereas TBRS* predicts a much larger effect of operation duration (Oberauer & Lewandowsky, 2011, Fig. 6).

To empirically dissociate operation time and free time, we carried out three new experiments, using a method introduced by Portrat, Barrouillet, and Camos (2008). Participants remembered letters or words, and in between each pair of memoranda they carried out a size judgment task on words presented as distractors, deciding for each distractor whether the object it represented was larger or smaller than a soccer ball. Operation duration was varied between trials by selecting objects close or distant in size to a soccer ball. After each keypress indicating a decision, a predetermined free-time interval was added before display of the next distractor (i.e., the next word to be judged). The amount of free time was varied orthogonally to operation duration. These experiments are described in detail in the Electronic Supplementary Material. The top panel of Fig. 7 shows correct-in-position recall accuracy. Whereas free time had a consistent and sizeable effect across all three experiments, the manipulation of operation duration had only negligible (Exps. 1 and 2) or small (Exp. 3) effects.

Figure 7. Top panel: Mean proportions of correct recall from three experiments varying operation duration (via difficulty: distant vs. close words) and free time (short vs. long) independently. Error bars are 95 % confidence intervals for within-subjects comparisons. Middle panel: Predictions of SOB-CS (Simulation 2). Bottom panel: Predictions of TBRS* (parameters: decay rate = 0.4, processing rate = 6, rate SD = 1.0, refreshing operation = 80 ms, retrieval threshold = 0.05, noise = 0.02).

In Simulation 2, we modeled these experiments with SOB-CS and, for comparison, with TBRS*. We used the response latencies for size judgments to estimate the operation duration. Separate estimates were taken for the first operation after each memory item and for subsequent operations, because their latencies differed substantially (see the Electronic Supplementary Material). Overt response latencies do not directly reflect the time for which a cognitive operation captures central attention (Barrouillet et al., 2007; Pashler, 1994), because sensory and motor processing components can be carried out independently of central attention. Estimates of the duration of those noncentral components consistently fall between 350 and 550 ms (S. D. Brown & Heathcote, 2008; Ratcliff, Thapar, & McKoon, 2004, 2010). We therefore subtracted a noncentral component of 500 ms from the measured latencies to estimate the central processing duration of each size judgment. We added those 500 ms to the nominal free time, because this time could be used to remove distractors (SOB-CS) or to refresh memory items (TBRS*). In effect, part of each nominal distractor-processing period was reassigned to free time to reflect the likelihood that it did not involve the attentional bottleneck. Without this adjustment, both models would predict greatly exaggerated effects of free time, and TBRS* would underpredict memory performance.
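The latency adjustment amounts to shifting a fixed 500 ms from each operation into the following free time. Here is a minimal sketch with a hypothetical helper name and hypothetical latency values. The `noncentral` argument corresponds to the estimate whose range (0.1 to 0.8 s) was explored in the sensitivity analysis.

```python
NONCENTRAL = 0.5  # seconds of sensory/motor time assumed in the text

def split_latency(rt, nominal_free, noncentral=NONCENTRAL):
    """Split an overt latency into central processing time and effective
    free time; the total time is unchanged."""
    if rt < noncentral:
        raise ValueError("latency shorter than the assumed noncentral component")
    central = rt - noncentral                # time attention is captured
    effective_free = nominal_free + noncentral
    return central, effective_free

central, free = split_latency(rt=1.2, nominal_free=0.5)  # hypothetical values
print(round(central, 3), round(free, 3))  # 0.7 1.0
```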

Figure 7 also shows the predictions of SOB-CS (middle panel) and those of TBRS* (bottom panel). For SOB-CS, we used the default parameter values, except for the discrimination parameter c, which we raised to 2.0 to bring the predictions into the overall accuracy range of the data. For TBRS*, we also used the default parameter values (Oberauer & Lewandowsky, 2011), except for the decay rate, which we reduced from 0.5 to 0.4 to raise performance to the empirical accuracy level. The simulation results of SOB-CS confirm what we saw in Simulation 1: Free time had a relatively large effect, whereas the effect of operation duration was tiny. The simulation with TBRS* shows much larger effects of operation duration than does SOB-CS. This is because in TBRS*, longer operations lead to more decay, which results in substantial forgetting.

For a quantitative comparison of the data with the predictions of the two models, we focused on the critical effect of operation duration. Across the three experiments, the mean effect of the manipulation of operation duration (i.e., size judgment difficulty) on memory was a 2.3-percentage-point loss of performance, with a 95 % confidence interval of [0.8, 3.8]. The predicted effect from SOB-CS was 1.0 percentage points, falling inside the confidence interval of the data. The predicted effect of TBRS* was 8.6 percentage points, clearly outside the confidence interval. Therefore, the data support the unique prediction of SOB-CS that the effect of cognitive load primarily reflects a beneficial effect of free time following a distractor, whereas the duration required to process the distractor plays only a minor role.

One potential objection to our model comparison in this section is that our simulations were contingent on our estimate of the time for sensory and motor processes (500 ms), during which central attention was not occupied. With different estimates for the duration of noncentral processes, TBRS* might give a better account of the data, and SOB-CS might look worse. To investigate this issue, we ran the simulations with different values for the assumed duration of noncentral processes, ranging from an implausibly short 0.1 s to an implausibly long 0.8 s. The results of these simulations are presented in the Electronic Supplementary Material. They show that, irrespective of the particular estimate of the noncentral component in the size-judgment latencies, SOB-CS gives a better account of the data than does TBRS*.

The effect of the number of operations

The third benchmark finding cited in support of the TBRS is that the number of operations in between memoranda has no effect on memory. Barrouillet et al. (2004) predicted from their model that as long as cognitive load was held constant, the number of successive distractor operations in a complex-span task should not affect memory performance. This prediction plays an important role in the TBRS theory, because it protects the theory against a challenge that other decay-based theories face. Much evidence against decay has come from studies showing that extending a distractor-filled retention interval has no effect on memory (for a review, see Lewandowsky, Oberauer, & Brown, 2009). The TBRS theory apparently escapes this challenge by predicting that the retention interval will have no effect as long as cognitive load is held constant. Therefore, it is important to examine this prediction carefully.

Simulations with TBRS* have revealed a deviation from the predictions derived by Barrouillet et al. (2004), for reasons that become obvious on closer inspection. TBRS* predicts that memory will decline with an increasing number of operations when cognitive load is at least moderately high (Oberauer & Lewandowsky, 2011). Brief reflection reveals this prediction to be inevitable within the TBRS theory: The only circumstance under which performance can be independent of the number of operations is when the time for refreshing exactly balances the decay experienced during processing. Whenever the effect of decay is stronger than that of refreshing during an individual processing operation (and the free time following it), the TBRS theory must predict that increasing the number of operations will lead to worse memory. We confirmed this prediction by simulation with TBRS* (Oberauer & Lewandowsky, 2011). We next consider the empirical pattern involving the effects of the number of operations, before we turn to simulations of SOB-CS to investigate whether the model can reproduce that empirical pattern.
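To make the balance argument concrete, here is a deliberately simplified linear decay-and-refresh sketch in Python. It is not TBRS*: the function trace_strength and its rates and durations are hypothetical choices, picked only to show that trace strength is independent of the number of operations when the loss during each operation exactly equals the gain from the free time that follows, and that it falls with every added operation when decay dominates.

```python
def trace_strength(n_ops, op_time, free_time, decay_rate=0.2, refresh_rate=0.2, start=1.0):
    """Toy linear model (hypothetical, not TBRS*): each operation removes
    decay_rate * op_time units of strength, and the free time after it
    restores refresh_rate * free_time units."""
    s = start
    for _ in range(n_ops):
        s -= decay_rate * op_time      # decay while attention is captured by processing
        s += refresh_rate * free_time  # refreshing during the free time that follows
    return s

ops = (1, 2, 4)
# Balanced case: the loss per operation exactly offsets the refreshing gain.
balanced = [round(trace_strength(n, op_time=0.5, free_time=0.5), 6) for n in ops]
# Decay-dominated case (high cognitive load): each added operation costs strength.
high_load = [round(trace_strength(n, op_time=0.8, free_time=0.2), 6) for n in ops]
print("balanced:", balanced)
print("high load:", high_load)
```

Any departure from exact balance makes strength depend on the number of operations, which is why the TBRS theory can predict no effect of that number only as a special case.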

Empirically, the effect of increasing the number of operations is quite nuanced and is determined by the relationship between the successive distractors in a processing episode (Lewandowsky et al., 2010; Lewandowsky, Geiger, & Oberauer, 2008). When the distractors are all identical (e.g., “April, April, April”), saying them three or four times does not lead to more forgetting than does saying them once. In contrast, when three different distractors (e.g., “April, May, June”) follow each memory item at encoding, recall is substantially impaired relative to a single distractor. Thus, any forgetting that could be attributed to decay is turned on or off depending on properties of the stimuli that are not considered relevant by the TBRS theory.

By contrast, these effects are predicted by a key principle of the SOB model series—namely, novelty-gated encoding: After processing and encoding the first distractor, each further identical distractor has negligible novelty, and hence is encoded with negligible strength. In contrast, when successive distractors differ from one another, each of them is to some degree novel, and therefore is encoded with substantial strength, thus adding to interference. When a series of different distractors follows each memory item, SOB predicts that memory will suffer when more of them are added.
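Novelty-gated encoding can be sketched with a Hebbian weight matrix that binds item vectors to a context (position) marker. The Python sketch below assumes that novelty is approximated as 1 minus the cosine between a new distractor and what the matrix currently retrieves for that context; the novelty measure used in SOB-CS itself may differ, and the dimensionality, random vectors, and helper names (encode_with_novelty, unit, cosine) are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 200  # vector dimensionality (arbitrary choice for illustration)

def unit(v):
    return v / np.linalg.norm(v)

def cosine(a, b):
    na, nb = np.linalg.norm(a), np.linalg.norm(b)
    return 0.0 if na == 0 or nb == 0 else float(a @ b / (na * nb))

def encode_with_novelty(W, context, item):
    """Hebbian outer-product encoding gated by novelty (assumed here to be
    1 - cos(item, what W currently retrieves for this context))."""
    retrieved = W @ context
    novelty = 1.0 - max(cosine(item, retrieved), 0.0)
    W = W + novelty * np.outer(item, context)  # encoding strength = novelty
    return W, novelty

context = unit(rng.standard_normal(N))  # hypothetical context marker
april, may, june = (unit(rng.standard_normal(N)) for _ in range(3))

# Four identical distractors ("April, April, April, April").
W = np.zeros((N, N))
identical = []
for d in [april] * 4:
    W, eta = encode_with_novelty(W, context, d)
    identical.append(eta)

# Three different distractors ("April, May, June").
W = np.zeros((N, N))
different = []
for d in [april, may, june]:
    W, eta = encode_with_novelty(W, context, d)
    different.append(eta)

print("identical:", np.round(identical, 2))
print("different:", np.round(different, 2))
```

Under this rule the first "April" is encoded at full strength and its repetitions at essentially zero strength, whereas "April", "May", and "June" each receive close to full strength, so only the different distractors add interference.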

To illustrate this pattern, the top panel of Fig. 8 shows representative data from an experiment with four conditions (Lewandowsky et al., 2010, Exp. 3). The experiment involved a simple-span condition (no distractors), a condition with a single word to be read aloud after each memory item, a condition with four identical words to be read after each item, and a condition with three different words to be read after each item (four identical and three different words took an approximately equal amount of time to articulate). Participants were asked to read the distractors as quickly as possible, and the experimenter continued the sequence of events as soon as participants had finished speaking. The data in Fig. 8 show that performance dropped substantially from simple span to the condition with a single distractor, an effect that was largely additive with serial position. Reading the same word four times produced little additional forgetting; the small additional loss of memory was confined to the primacy part of the list. Reading three different words, in contrast, incurred a substantial further loss of memory. This effect was again largely additive with serial position.

Fig. 8. Top panel: Serial-position curves for simple span (“No Distractors”) and complex span with three conditions of word reading: a single word (“1 Distractor”), the same word four times (“4 Identical”), and three different words (“3 Different”). The data are from Experiment 3 of “Turning Simple Span Into Complex Span: Time for Decay or Interference From Distractors?” by S. Lewandowsky, S. M. Geiger, D. B. Morrell, and K. Oberauer, 2010, Journal of Experimental Psychology: Learning, Memory, and Cognition, 36, pp. 958–978. Copyright 2010 by the American Psychological Association. Adapted with permission. Error bars are 95 % confidence intervals for within-subjects comparisons. Middle panel: Predictions of SOB-CS (Simulation 3). Bottom panel: Predictions of TBRS*. From “Modeling Working Memory: A Computational Implementation of the Time-Based Resource-Sharing Theory,” by K. Oberauer and S. Lewandowsky, 2011, Psychonomic Bulletin & Review, 18, pp. 10–45. Copyright 2011 by the Psychonomic Society. Reproduced with permission (p. 38).

The middle panel of Fig. 8 shows the results of Simulation 3, in which we applied SOB-CS to the same experimental conditions. The simulation used letters as memoranda and words as distractors to match the materials in the experiment. Representations were generated such that different words had an average similarity (i.e., vector cosine) of .5 with each other, and words overall had an average similarity of approximately .1 with the letters. Operation duration was set to 0.5 s, the value that results in near-asymptotic encoding of each distractor, in accordance with the measured word-reading latencies (>2 s for three words). Free time was set to 0.1 s to reflect the fact that there was hardly any temporal gap between the reading of successive words, as enforced by the experimenter, who urged participants to speak continuously without pauses and advanced the display sequence as soon as they had finished speaking.
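One way to obtain vectors with prescribed average similarities, such as the .5 among words and roughly .1 between words and letters described above, is to mix a component shared by all items, a component shared within a category, and item-unique noise. This Python sketch shows a generic construction, not necessarily the procedure used for SOB-CS; the dimensionality and number of items are hypothetical, and because the text does not report letter–letter similarity, the letter category weight here is arbitrary.

```python
import numpy as np

def random_unit(rng, n):
    v = rng.standard_normal(n)
    return v / np.linalg.norm(v)

def make_category(rng, n_items, dim, shared, global_weight, category_weight):
    """Item = sqrt(gw)*shared + sqrt(cw)*category prototype + sqrt(1-gw-cw)*unique noise.
    Random unit vectors in high dimension are nearly orthogonal, so the expected
    cosine is about gw + cw within a category and about gw across categories."""
    proto = random_unit(rng, dim)
    unique_weight = 1.0 - global_weight - category_weight
    return np.array([
        np.sqrt(global_weight) * shared
        + np.sqrt(category_weight) * proto
        + np.sqrt(unique_weight) * random_unit(rng, dim)
        for _ in range(n_items)
    ])

def mean_cosine(A, B, same=False):
    A = A / np.linalg.norm(A, axis=1, keepdims=True)
    B = B / np.linalg.norm(B, axis=1, keepdims=True)
    C = A @ B.T
    if same:
        C = C[~np.eye(len(A), dtype=bool)]  # drop self-similarities on the diagonal
    return float(C.mean())

rng = np.random.default_rng(1)
dim = 2000                      # hypothetical dimensionality
shared = random_unit(rng, dim)  # component common to all items
words = make_category(rng, 20, dim, shared, global_weight=0.1, category_weight=0.4)
letters = make_category(rng, 20, dim, shared, global_weight=0.1, category_weight=0.2)  # arbitrary

word_word = mean_cosine(words, words, same=True)
word_letter = mean_cosine(words, letters)
print(f"word-word: {word_word:.2f}, word-letter: {word_letter:.2f}")
```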

With the exception of the recency effect for the single-distractor condition (which was predicted but absent in the data), the simulation closely matched the empirical data. In particular, SOB-CS accurately reproduced the interaction between number of distractor operations and distractor similarity: Increasing the number of distractors had an adverse effect on memory if and only if the distractors differed. This interaction presents a challenge for TBRS, which has no mechanisms sensitive to the similarity between distractors. This raises the question: How well could TBRS* account for the data of Experiment 3 in Lewandowsky et al. (2010) if additional assumptions were made that were particularly favorable to the model?

The bottom panel of Fig. 8 reproduces a simulation with TBRS* for that experiment (Oberauer & Lewandowsky, 2011). For this simulation, we assumed that whereas reading a new word occupies the attentional bottleneck for 0.3 s, repeating the same word does not require any further attention after the first word. Thus, cognitive load is assumed to be substantially lower in the condition with four identical than with three different distractors. It is important to realize that these assumptions are maximally favorable to TBRS*: In reality, repeatedly reading the same word aloud from the screen is unlikely to be completely attention-free. Only if we make this favorable assumption can TBRS* account for the relative accuracies of the four experimental conditions, averaged across serial positions. However, even under these favorable circumstances, TBRS* erroneously predicts that the effects of distractors will be entirely absent at the first list position and will increase strongly over serial positions, particularly at the last position. We will explore the reason for this erroneous prediction in the next section, when we discuss serial-position effects.

To summarize, SOB-CS correctly predicts that the effect of the number of distractor operations is modulated by the similarity of successive distractors. TBRS* can provide a post-hoc explanation for this modulation, but it still accounts for the detailed pattern of data less well than does SOB-CS.

Discussion: Cognitive load and number of operations

The strong and approximately linear relationship between memory performance and cognitive load (Barrouillet et al., 2004; Barrouillet et al., 2011) has been one of the important discoveries of the last decade in the field of working memory. There is little doubt that this function results from the interplay of two opposing processes: one that is detrimental to memory and occurs during distractor processing, and one that is beneficial to memory and occurs during brief pauses in between processing of the memoranda and distractors.

To date, the only available explanation for the cognitive-load effect has identified time-based decay and refreshing of memory traces, respectively, as those two opposing processes. This explanation lies at the core of the TBRS model. Independent evidence, however, strongly speaks against a major role of time-based decay in short-term or working memory (Lewandowsky, Oberauer, & Brown, 2009). This raises the question of whether the effect of cognitive load on immediate memory can be explained without assuming decay. Our simulations have established that SOB-CS reproduces the benchmark cognitive-load findings from complex-span tasks without invoking decay, and without invoking rehearsal or refreshing. These simulation results show that the crucial finding upon which the TBRS was built—the cognitive-load function—does not constitute unique evidence for that theory. On the contrary, when cognitive load is broken down into its two temporal components—operation duration and free time—SOB-CS arguably provides a better quantitative account of their individual effects than does TBRS*.

SOB-CS also accounts for the detailed pattern of results concerning the third benchmark, the effect of the number of operations. A previous version of C-SOB correctly predicted that under high cognitive loads, the number of operations would matter if and only if the distractors differed from each other; SOB-CS reproduced that pattern here. TBRS* can explain this finding only with the addition of favorable assumptions about variations in operation duration, and even then it mispredicts the interaction of the distractor effect with serial position.

The success of SOB-CS in modeling the first three benchmark findings lends support to the assumptions responsible for this success: Forgetting in working memory is primarily due to interference; concurrent processing adds to interference because distractor information is encoded into working memory; the strength of distractor encoding is modulated by the distractor’s novelty; and free time following distractor operations can be used to reduce interference by gradually unbinding the preceding distractor from its context marker.
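The last of these assumptions, gradual unbinding during free time, can be sketched in Python as Hebbian anti-learning of the distractor–context association, with a removal strength that grows with free time. The saturating function 1 - exp(-rate * t), the rate value, the vector dimensionality, and the helper retrieval_cosine are assumptions made for illustration, not parameters taken from SOB-CS.

```python
import numpy as np

rng = np.random.default_rng(2)
dim = 300  # hypothetical vector dimensionality

def unit(v):
    return v / np.linalg.norm(v)

context = unit(rng.standard_normal(dim))     # context (position) marker of the current item
item = unit(rng.standard_normal(dim))        # memorandum bound to that marker
distractor = unit(rng.standard_normal(dim))  # distractor encoded to the same marker

def retrieval_cosine(free_time, removal_rate=1.5):
    """Cosine between the item and what the context marker retrieves after a
    fraction 1 - exp(-removal_rate * free_time) of the distractor's binding
    has been unlearned (removal_rate is a hypothetical value)."""
    W = np.outer(item, context) + np.outer(distractor, context)  # item and distractor bindings
    removal_strength = 1.0 - np.exp(-removal_rate * free_time)
    W -= removal_strength * np.outer(distractor, context)        # Hebbian anti-learning
    out = W @ context
    return float(out @ item / (np.linalg.norm(out) * np.linalg.norm(item)))

free_times = [0.0, 0.5, 1.0, 2.0]
scores = [retrieval_cosine(t) for t in free_times]
print([round(s, 3) for s in scores])
```

With no free time the cue retrieves a blend of item and distractor (a cosine near .71 for nearly orthogonal vectors); as free time increases, the distractor's contribution shrinks and the retrieved vector converges on the item.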

Inside complex span: Serial-position curves and error patterns

Our second set of empirical benchmarks involves the serial-position curve for the conventional method of scoring (i.e., recall of the correct item in the correct position), as well as for item errors and order errors within the complex-span task. SOB-CS makes two novel predictions for these benchmarks, both of which pertain to a comparison of simple to complex span. Our search of the literature revealed that the experiments of Lewandowsky et al. (2010) are the only ones that afford a controlled comparison of the serial-position curves for simple and complex span. Simulation 3 above demonstrated that SOB-CS accurately reproduces these serial-position curves. For the present discussion, we will focus on the condition without distractors (simple span) and on the condition with three different distractors following each letter, because that condition is most representative of complex-span tasks.

Serial-position curves

One prediction from SOB-CS is that the serial-position curves of simple span and complex span are largely parallel, as is shown in the middle panel of Fig. 8. This prediction is important because it distinguishes SOB-CS from models assuming decay together with rehearsal or refreshing to counteract it, such as the TBRS* model. As noted above, TBRS* predicts a strong interaction of the contrast between simple and complex span with serial position, with hardly any effect of distractor processing on recall of the first list item, and increasingly adverse effects for later list items. The reason for this prediction is that TBRS* must assume cumulative refreshing; that is, in each free-time interval, refreshing starts with the first list item. Decay models of complex span must assume cumulative rehearsal or refreshing, because decay alone imposes a strong recency gradient on memory strength (i.e., early list items decay more than later items). Cumulative refreshing, which prioritizes earlier list items, is needed to overcome this recency gradient and instead to produce a primacy effect on recall (Oberauer & Lewandowsky, 2011; see Footnote 6).

Cumulative refreshing largely protects the first item from decay in complex span. As cognitive load in complex span increases, refreshing progresses less far into the list (because less free time is available), but as long as cognitive load is not extremely high, the first item is always refreshed. In consequence, TBRS* must predict that the first list item is largely immune to manipulations of interference or cognitive load, but that those effects will increase across serial positions. As we noted in the introduction, any theory assuming decay needs a mechanism of rehearsal or refreshing, and therefore faces this problem.
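The protective effect of cumulative refreshing on the first item can be illustrated with a toy model in Python. This is not TBRS*: the decay rate, refresh duration, and the rule that each free interval runs a single refreshing pass starting at item 1 are hypothetical simplifications, and decay during recall is omitted. The sketch therefore shows only that item 1 is unaffected by a load manipulation while later items are not.

```python
import math

def final_strengths(list_length, op_time, free_time, decay_rate=0.5, refresh_time=0.25):
    """Toy cumulative-refreshing model (hypothetical parameters, not TBRS*).
    After each item, every stored trace decays during the distractor operation;
    the free time is then spent refreshing traces in list order, always starting
    with item 1, each refresh taking refresh_time and restoring a trace to 1.0."""
    strengths = []
    for _ in range(list_length):
        strengths.append(1.0)  # encode the new item at full strength
        factor = math.exp(-decay_rate * op_time)
        strengths = [s * factor for s in strengths]  # decay during processing
        n_refresh = min(len(strengths), int(free_time // refresh_time))
        for i in range(n_refresh):  # cumulative: each pass restarts at item 1
            strengths[i] = 1.0
    return strengths

low_load = final_strengths(list_length=5, op_time=0.5, free_time=1.0)
high_load = final_strengths(list_length=5, op_time=1.0, free_time=0.25)
print("low load: ", [round(s, 2) for s in low_load])
print("high load:", [round(s, 2) for s in high_load])
```

Raising the load shortens how far refreshing reaches into the list, so the load effect appears at positions 2 to 5 but never at position 1.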

SOB-CS does not predict this strong interaction, because it does not require cumulative refreshing to maintain a list in memory. Instead, distractor interference and distractor removal apply in the same way to each list position. As is shown in the top panel of Fig. 8, the prediction of largely parallel serial-position curves for simple and complex span was borne out by the data. This confirms the first new prediction of SOB-CS.

Item and order errors