Abstract

Sequential modulation is the finding that the sizes of several selective-attention phenomena—namely, the Simon, flanker, and Stroop effects—are larger following congruent trials than following incongruent trials. In order to rule out relatively uninteresting explanations of sequential modulation that are based on a variety of stimulus- and response-repetition confounds, a four-alternative forced choice task must be used, such that all trials with any kind of repetition can be omitted from the analysis. When a four-alternative task is used, the question arises as to whether to have the proportions of congruent and incongruent trials be set by chance (and, therefore, be 25% congruent and 75% incongruent) or to raise the proportion of congruent trials to 50%, so that it matches the proportion of incongruent trials. In this observation, it is argued that raising the proportion of congruent trials to 50% should not be done. For theoretical, practical, and empirical reasons, having half of the trials be congruent in a four-alternative task aimed at providing unambiguous evidence of sequential modulation should be avoided.

One of the more intriguing discoveries in the study of selective attention is the finding that the magnitudes of the three best-known phenomena in this area (namely, the Simon, flanker, and Stroop effects, defined as the differences in performance between congruent and incongruent trials) are all larger when the preceding trial was congruent rather than incongruent (see, e.g., Egner, 2007, for a recent review). This pattern of results has been given several names, including “suppression of the direct route” (Frith & Done, 1986), “the Gratton effect” (after Gratton, Coles, & Donchin, 1992), “reactive gating” (Mordkoff, 1998), and “conflict adaptation” (e.g., Botvinick, Braver, Barch, Carter, & Cohen, 2001); it will here be referred to as “sequential modulation” (Hazeltine, Akçay, & Mordkoff, 2011), mostly to avoid using a label that is tied to a particular explanation (which could later turn out to be wrong).

The reason that researchers have been so intrigued by sequential modulation is that it seems to provide a new and useful window through which to view both within-trial attentional selection and between-trial changes in attentional control. As an example of the former type of reasoning, if attentional selection operates in a nonspecific manner and acts to prevent the processing or transmission of irrelevant information in general (e.g., Broadbent, 1958; Eriksen & Eriksen, 1974), congruency effects may be reduced following incongruent trials, but they should never reverse to become a benefit for incongruency. In contrast, if attentional selection operates by suppressing the particular value of the irrelevant information (e.g., Neill, 1977; Tipper & Cranston, 1985) or otherwise operates in an item-specific manner (e.g., Blais, Robidoux, Risko, & Besner, 2007), congruency effects following incongruent trials may actually become negative. With regard to between-trial changes in attentional control, if this is achieved using a general-purpose (higher-order) mechanism, changes in selectivity with regard to one type of irrelevant information should also have effects on other types of irrelevant information (e.g., Freitas, Bahar, Yang, & Banai, 2007; Kunde & Wühr, 2006). Conversely, if the control of selective attention is domain-, modality-, or dimension-specific, then changes in one sort of selectivity could well be independent of changes in other sorts of selectivity (e.g., Akçay & Hazeltine, 2008; Funes, Lupiáñez, & Humphreys, 2010; Wendt, Kluwe, & Peters, 2006).

Turning to the methods employed in experiments concerning sequential modulation, it has recently become increasingly popular to use four-alternative forced choice (4-AFC) tasks, as opposed to simpler 2-AFC tasks. In fact, it is now almost a requirement that any study aimed at exploring sequential modulation employ a task with at least four different stimuli and responses (see Schmidt & De Houwer, 2011, for a recent and detailed discussion). There is good reason for this: only when (at least) four different stimuli and responses are employed can all of the stimulus- and response-repetition confounds that make interpretation difficult be controlled (see, e.g., Chen & Melara, 2009; Mayr, Awh, & Laurey, 2003; Nieuwenhuis et al., 2006; Notebaert & Verguts, 2007; Schmidt & De Houwer, 2011; but see Hazeltine et al., 2011, for a way to avoid confounds and still employ a 2-AFC task). A total of five such confounds have been described: (1) whether the current trial is an exact repetition of the previous one; (2) whether the relevant attribute of the stimulus (and, therefore, the correct response) is repeated across adjacent trials, but the irrelevant attribute changes; (3) whether the irrelevant attribute repeats, but the relevant changes; (4) whether the previous relevant attribute is associated with the current irrelevant attribute; and (5) whether the previous irrelevant attribute is associated with the current relevant attribute. These are confounds because they are not equally likely across the four subconditions that produce the data used to demonstrate sequential modulation (see, e.g., Mayr & Awh, 2009). For example, when a congruent trial follows an incongruent trial, it is twice as likely that at least one attribute is being repeated as when a congruent trial follows another congruent trial. 
Moreover, when two congruent trials occur in succession, all trials with repetitions of some sort will be exact repetitions, which are known to enjoy a substantial response-time (RT) advantage, while congruent trials that follow incongruent trials can never be exact repetitions.

To obtain a set of data on which a “clean” analysis can be conducted, a 4-AFC task is required. This holds because the only way to control for all of the confounds simultaneously is to omit from analysis all of the trials that include a repetition of any sort. In order to have trials that have no repetitions of any sort in all of the needed conditions—including the condition in which an incongruent trial (which by definition has two different attribute values) follows a completely different incongruent trial (which, again, has two different attribute values)—a minimum of four stimuli and responses are needed. If sequential modulation is observed on these “no-repetition” trials, then one has unambiguous evidence of an intertrial effect that cannot be explained in terms of mere repetitions. Given that unambiguous evidence of such an effect is what most theorists consider to be the most important (see, especially, Schmidt & De Houwer, 2011), the use of 4-AFC tasks is becoming the standard for all research on sequential modulation.

However, a second recent trend in the methods used to study sequential modulation is much harder to defend. Several studies have employed designs under which congruent trials occur more often than they would by chance (that is, more often than they would if the relevant and irrelevant attributes of the stimulus were selected independently). In particular, in several recent studies the researchers have used 4-AFC tasks with designs under which congruent trials occur 50% of the time, instead of the 25% that would be expected by chance (e.g., Akçay & Hazeltine, 2007, 2011; Wendt & Kiesel, 2011); other experiments have used rates of congruence that are even farther from what would be expected by chance, such as 70% congruent trials in a 3-AFC task (e.g., Kerns et al., 2004; Larson, Kaufman, & Perlstein, 2009; Mayr & Awh, 2009). The goal of this observation is to convince the reader that this should not be done; 50% congruent should, instead, be avoided when the task is 4-AFC. For simplicity, the three arguments against 50% congruence in 4-AFC tasks will be presented in the context of the Simon task, but parallel arguments can be made for flanker and Stroop tasks, as well. Thus, this observation could rightfully be seen as an extension and elaboration of several ideas that were recently put forward by James R. Schmidt and Jan De Houwer (2011).

Theoretical reason: 50% congruence forces the “task-irrelevant” attribute to become informative

The first argument against 50% congruence in 4-AFC tasks can be approached in several ways. One approach is rooted in information theory and starts by noting that a balanced 4-AFC task places the participants under 2.00 bits of response uncertainty. If the irrelevant attribute of the stimulus, such as location in the case of the Simon task, is truly and technically irrelevant, the participants would continue to suffer from 2.00 bits of response uncertainty even if they were to identify the location of the stimulus. This is true for designs in which the relevant and irrelevant attributes are selected at random and independently, since there is no correlation between the location of the stimulus and the location of the correct response when congruent trials only happen by chance. But this is not true under 50%-congruent designs; now, instead of continuing to suffer from 2.00 bits of response uncertainty, after identifying the location of the stimulus, the value drops to 1.79 bits (i.e., 1 × .5 × 1.00 bit + 3 × .167 × 2.585 bits). In short, the so-called “irrelevant attribute” of the stimulus, under a 50%-congruent 4-AFC design, is actually informative, providing the subject with 0.21 bits of information (for the same idea in slightly different contexts, see Mordkoff, 1996; Schmidt & Besner, 2008).
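The arithmetic behind the 1.79-bit figure can be checked with a few lines of code. The following is only a minimal sketch, assuming that the three incongruent responses are equally likely given the stimulus location; the function name `response_uncertainty` is an illustrative choice and does not come from the original study.

```python
from math import log2

def response_uncertainty(p_congruent, n_alternatives=4):
    """Conditional entropy (in bits) of the correct response, given stimulus location.

    Assumes the remaining probability is split evenly across the
    incongruent responses (an assumption made for this sketch).
    """
    p_other = (1.0 - p_congruent) / (n_alternatives - 1)
    probs = [p_congruent] + [p_other] * (n_alternatives - 1)
    return -sum(p * log2(p) for p in probs if p > 0)

baseline = log2(4)  # 2.00 bits for a balanced 4-AFC task
for p in (0.25, 0.50):
    remaining = response_uncertainty(p)
    print(f"{p:.0%} congruent: {remaining:.2f} bits remain, "
          f"{baseline - remaining:.2f} bits conveyed by location")
```

Under these assumptions, the chance design leaves the full 2.00 bits in place, whereas the 50%-congruent design leaves about 1.79 bits, so that stimulus location conveys roughly 0.21 bits.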

The second way to think about the theoretical problem associated with having congruent trials occur more often than they would by chance is to consider the analogous situation for exogenous spatial cuing (e.g., Posner & Cohen, 1984). By definition, in order for the effect of a spatial precue to be labeled “exogenous” (or due to bottom-up attentional capture), the target must not occur in the cued location more often than chance (see, e.g., Luck & Thomas, 1999; Tassinari, Aglioti, Pallini, Berlucchi, & Rossi, 1994). Therefore, when the number of possible target locations is increased from the typical two to the less-typical four (e.g., Mordkoff, Halterman, & Chen, 2008), the proportion of valid-cue trials must be decreased from 50% to 25%, such that the cue continues to provide no useful information. To do otherwise would change the task into the hybrid situation that involves voluntary attentional deployment as well as exogenous capture.

The third way to approach this issue is to ask what instructions ought to be given to participants under each of the two types of design. In the case of the chance-congruence design, there would seem to be nothing inappropriate with the typical instructions, which, in the case of a Simon task, usually say something like “Respond only to the [relevant attribute, e.g., color] of the stimulus and try to ignore its location; the location of the stimulus is completely irrelevant.” In the case of the 50%-congruent design, however, the same instructions could well be argued to be misleading, at best. If the location of the stimulus in a 4-AFC Simon task is actually informative, telling the participants to ignore the location is the same as asking them to avoid optimizing their performance (for evidence that subjects will, indeed, use subtle correlational information to optimize their performance in selective-attention tasks, see, e.g., Miller, 1987; Mordkoff, 1996; Mordkoff & Halterman, 2008; Mordkoff & Yantis, 1991). If you ask participants to avoid using information that could actually help them, you might end up studying obedience to authority, instead of selective attention.

None of this is intended to argue that it isn’t worth studying the effects of stimulus location in a task in which the primary relationship is between stimulus value and response location, but other relationships do also exist; nor should it suggest that instructions couldn’t be devised that would clearly compel participants to try to ignore the stimulus’s location, even when it could be helpful. Rather, the claim is only that, if the goal of the research is to unambiguously study the ability of people to process incoming information selectively and then to move onward to study how this ability is modulated by recent events—such as the congruence of the previous trial—the use of a task that provides participants with no incentive to deviate from the desired form of processing should be preferred.

Practical reason: 50% congruence creates a larger imbalance in the number of trials per condition

It is well-known that a between-subjects design with a fixed total N has the most power when the participants are evenly distributed across the conditions (i.e., balanced designs have more power than unbalanced designs). A typical reexpression of this principle (which is slightly hyperbolic) goes like this: “The power of a between-subjects analysis depends primarily on the size of the smallest group.” A corollary to this idea applies to the number of individual trials in each condition under a within-subjects design (i.e., the amount of data per condition per participant that are averaged prior to the main statistical analysis). In this case, the rule of thumb is “The amount of noise that each participant will contribute to the ANOVA is inversely proportional to the smallest number of trials per condition (across all conditions).” More accurately, for a given total number of trials per participant, the values that the participants will contribute to the analysis will have the lowest mean (error) variance if the trials are evenly distributed across the various conditions.

In light of the above ideas, one argument in favor of using a 50%-congruent design in an experiment concerning sequential modulation is that this would appear to equalize the numbers of trials in each of the four conditions that define the phenomenon. To be clear, recall that the four key conditions for a sequential analysis of a selective-attention effect are congruent trials that follow congruent trials (hereafter, C–C), congruent trials that follow incongruent trials (I–C), incongruent trials that follow congruent trials (C–I), and two incongruent trials in a row (I–I). Without considering any other issue (yet), it would seem clear that one would have equal numbers of trials in each of the four key conditions if the probability of a given trial being congruent were 50%; in this way, a quarter of the trials would be in each of the four trial-pair conditions. If, instead, you have only 25% congruent trials (i.e., you use a chance design and a 4-AFC task), only one-sixteenth of the data will be in the C–C condition, while nine-sixteenths will be in the I–I condition.

However, when one omits all trials with any kind of stimulus or response repetition—as has become standard, to control for the five different potential confounds (see above)—the expected numbers of trials that will be retained in each condition become much harder to estimate. To make this more clear, Fig. 1 shows which trials would be retained for a “clean” test for sequential modulation (i.e., a test with all forms of repetition controlled). For this illustration, the four possible responses and stimulus locations are both arranged in a square. In the margins of the table-like figure, the direction of the arrow within a box indicates the value of the relevant attribute of the stimulus (and, therefore, the location of the correct response), while the location of the arrow within the box indicates the value of the irrelevant attribute. Thus, for example, an up-left-pointing arrow in the upper left corner of the box indicates one of the four possible congruent displays, while the same up-left-pointing arrow in the bottom-right corner indicates one of the 12 possible incongruent displays.

Fig. 1. Retained and omitted trials from a four-alternative forced choice task when all forms of repetition are being controlled. Each arrow direction indicates the relevant value of the stimulus on a trial and, therefore, the location of the correct response; arrow location within the box indicates the location of the stimulus within the display. The color of the left half of a cell indicates the congruence of the previous trial, while the color of the right half indicates the congruence of the current trial; in both cases, green = congruent and red = incongruent. If a cell is an exact repetition (ExR; black cells), nothing else is listed. If a cell is a response repetition (RespR; dark gray) or a stimulus-location repetition (LocR; also dark gray), nothing further is listed either. The two forms of negative priming occur when the previous stimulus location matches the current response (L2R; light gray) and when the previous response matches the current stimulus location (R2L; also light gray); either or both may be true.

The interior cells within Fig. 1 that have green on both the left and right are the retained trials for Condition C–C. The cells with red on the left and green on the right are those retained for Condition I–C. Green on the left with red on the right indicates trials retained for Condition C–I. And red on both sides denotes Condition I–I. The remaining cells in the figure—that is, those in some value of gray—are the trial pairs that would be omitted for including at least one kind of repetition. As can be seen, the proportions of specific combinations that are retained, as opposed to omitted, are not the same for the four conditions. Of the 16 specifics that would be classified as being in Condition C–C, a dozen would be retained, while the other four would all be omitted for being exact repetitions. Of the 48 specifics in Condition I–C, 24 would be retained, while the other 24 would be omitted for being either a response repetition or a stimulus-location repetition. The same is true for Condition C–I. Finally, and most dramatically, of the 144 specific combinations in Condition I–I, only 24 would be retained, with the others being omitted due to being an exact repetition, a response or stimulus-location repetition, or one of the two types of negative priming. What matters, however, is the condition with the smallest number of retained trials per participant. This would be Condition C–C, with only 12 “keepers” out of the 256 possible trial pairs.
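The counts just given can be reproduced by brute-force enumeration of all 256 trial pairs. The sketch below is an illustration rather than the analysis code used for the experiment; it assumes that a display can be coded as a (response, location) pair over four values and that a pair of trials is "clean" exactly when the previous and current displays share no value at all, which covers exact, response, and location repetitions as well as both forms of negative priming. Condition labels use a plain hyphen in the code.

```python
from collections import Counter
from itertools import product

VALUES = range(4)  # four responses, and four matching stimulus locations
# A display is coded as (correct response, stimulus location).
DISPLAYS = list(product(VALUES, VALUES))

def label(display):
    """'C' for a congruent display, 'I' for an incongruent one."""
    response, location = display
    return "C" if response == location else "I"

def is_clean(prev, curr):
    """True when the two displays share no response or location value."""
    return set(prev).isdisjoint(curr)

total = Counter()
retained = Counter()
for prev, curr in product(DISPLAYS, DISPLAYS):
    condition = f"{label(prev)}-{label(curr)}"  # previous trial first
    total[condition] += 1
    if is_clean(prev, curr):
        retained[condition] += 1

for condition in ("C-C", "I-C", "C-I", "I-I"):
    print(condition, retained[condition], "of", total[condition])
```

With these definitions, the enumeration yields 12 of 16 for C-C, 24 of 48 for I-C and for C-I, and 24 of 144 for I-I.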

So far, the idea of increasing the proportion of congruent trials makes very good sense, given that this would provide the greatest benefit to Condition C–C, which is the smallest condition under the chance-congruence design. However, raising the proportion of congruent trials to 50% is going much too far. If one reweights the number of trials in the C–C condition by a factor of 9 (since, to move from 25% to 50% congruent, you must use each congruent display three times as often), reweights the I–C and C–I conditions by a factor of 3 (for the same reason), and leaves the I–I condition as it is, one finds that the smallest condition is now Condition I–I, with 24 trials (as before) out of a new total number of 576 possible trial pairs. (The number of trials out of 576 that would be retained for C–C is now 108, and the numbers retained in I–C and C–I are both now 72; this is why the new smallest is Condition I–I, with only 24.) Thus, while the smallest condition under the 25%-congruent (chance) design was 12 out of 256 trials, or 4.69% of the data, the smallest condition under the 50%-congruent design is 24 out of 576 trials, or only 4.17% of the data. In other words, switching to a 50%-congruent design will actually hurt the statistics (albeit only slightly), instead of helping (see Note 1).
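The reweighting argument can be verified with exact fractions. This is a sketch under the same simplifying assumption of sampling with replacement; the dictionaries restate the counts from the text, and the helper name `smallest_condition` is hypothetical.

```python
from fractions import Fraction

# Retained and total trial-pair counts per condition under the chance design.
RETAINED = {"C-C": 12, "I-C": 24, "C-I": 24, "I-I": 24}
TOTAL = {"C-C": 16, "I-C": 48, "C-I": 48, "I-I": 144}

def smallest_condition(congruent_weight):
    """Return the condition with the smallest share of retained data.

    Each trial pair is weighted by congruent_weight once for every
    congruent display it contains (weight 3 gives 50% congruent).
    """
    weights = {c: congruent_weight ** c.count("C") for c in RETAINED}
    grand_total = sum(TOTAL[c] * weights[c] for c in TOTAL)
    shares = {c: Fraction(RETAINED[c] * weights[c], grand_total) for c in RETAINED}
    worst = min(shares, key=shares.get)
    return worst, shares[worst]

for weight, design in ((1, "25% congruent"), (3, "50% congruent")):
    condition, share = smallest_condition(weight)
    print(f"{design}: smallest is {condition} at {float(share):.2%}")
```

A weight of 1 corresponds to the chance design (smallest condition C-C, 12/256), and a weight of 3 to the 50%-congruent design (smallest condition I-I, 24/576).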

If the reader is wondering what the optimal proportion of congruent trials would be (at least from the standpoint of maximizing the number of trials in the smallest condition, and assuming that all trials with any sort of repetition will be omitted from the analysis), the answer is 32%. This can be rounded to one-third without being very wrong or very difficult to program. The mention of this value should not be read as an endorsement of such a design, given the other two arguments against nonchance designs; it would, however, be better than 50% congruent, if the reader isn’t convinced.
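The 32% figure can be recovered by expressing the retained share of each condition as a function of the proportion of congruent trials, p, and maximizing the smallest share. The sketch below assumes sampling with replacement and equal use of the 4 congruent and the 12 incongruent displays; the closed-form expression is derived here for illustration (by equating the C-C and I-I shares) and is not taken from the original text.

```python
from math import sqrt

def retained_shares(p):
    """Expected share of all trial pairs retained in each condition."""
    c = p / 4          # probability of each of the 4 congruent displays
    i = (1 - p) / 12   # probability of each of the 12 incongruent displays
    return {
        "C-C": 12 * c * c,
        "I-C": 24 * i * c,
        "C-I": 24 * c * i,
        "I-I": 24 * i * i,
    }

def smallest_share(p):
    """Share of the data falling in the smallest retained condition."""
    return min(retained_shares(p).values())

# Grid search over p in steps of 0.001.
best_p = max((k / 1000 for k in range(1, 1000)), key=smallest_share)

# Setting the C-C share (0.75 p^2) equal to the I-I share ((1 - p)^2 / 6)
# gives p = 1 / (1 + sqrt(4.5)).
closed_form = 1 / (1 + sqrt(4.5))

print(f"grid optimum: {best_p:.3f}, closed form: {closed_form:.4f}")
for p in (0.25, 1 / 3, 0.50):
    print(f"p = {p:.3f}: smallest condition holds {smallest_share(p):.2%}")
```

Both approaches place the optimum near 0.32, and the comparison loop shows that one-third congruent leaves more data in the smallest condition than either 25% or 50% congruent.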

Empirical reason: 50% congruence can produce questionable evidence of sequential modulation

Finally, there is an empirical reason to avoid having 50% of the trials be congruent in a 4-AFC task examining sequential modulation. Ever since the issue of the five confounds became widely known, the most important question has become whether any evidence of sequential modulation is found when all of these are controlled by omitting all trials with any sort of repetition. To provide a small contribution to this literature, an experiment was conducted using a 4-AFC Simon task with one group of 24 participating under a 25%-congruent (chance) design and another group of 24 participating under a 50%-congruent design. The task used a color-to-button mapping with both the stimulus and response locations arranged in a square (see on-line Supplemental Materials for details).

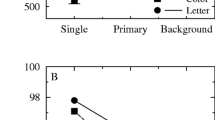

The mean RTs from the four key conditions (after the removal of all trials with any repetitions) are displayed in Fig. 2, along with their associated error rates. As can be seen, and as was confirmed by ANOVA, the 25%-congruent condition produced no reliable evidence of sequential modulation in mean RTs [i.e., no interaction between the current and previous congruency: F(1, 23) = 0.09, p = .765]. The Simon effects (with standard errors) following congruent and incongruent trials were 99.28 ± 13.83 and 92.71 ± 14.19 ms, respectively (both ps < .001). In contrast, the 50%-congruent condition did produce the interaction that constitutes sequential modulation [F(1, 23) = 5.64, p = .026, partial η² = .197]; a Simon effect of 102.17 ± 16.24 ms emerged following congruent trials, but one of only 83.01 ± 16.34 ms following incongruent trials (both ps < .001). In summary, sequential modulation in mean RTs depended on the proportion of congruent trials and was only observed under the 50%-congruent design. When congruence was at chance, no evidence of sequential modulation was found, even though this condition had slightly more statistical power (see Note 2).

With regard to the errors, the 25%-congruent condition again produced no evidence of sequential modulation [F(1, 23) = 0.31, p = .584]; the Simon effects were 13.8 ± 2.6% following congruent trials and 12.1 ± 3.0% following incongruent trials (both ps < .001). In contrast, the interaction between current and previous congruence was significant for the 50%-congruent design [F(1, 23) = 4.53, p = .044, partial η² = .165], but in the opposite direction from that found for mean RTs, with a 15.3 ± 2.7% Simon effect following congruent trials, and a 24.2 ± 4.7% Simon effect following incongruent trials (both ps < .001). In summary, as was true for mean RTs, sequential modulation in accuracy depended on the design and was only observed when congruent trials occurred 50% of the time.

When the RT and accuracy data are considered simultaneously, it appears that the 50%-congruent design can induce a rather complicated form of speed–accuracy trade-off, with the “standard” form of sequential modulation being found in the mean RTs (i.e., a larger Simon effect following congruent trials) but “reversed” sequential modulation in the proportions of errors (i.e., a larger Simon effect following incongruent trials). One possible explanation for this is that participants in the 50%-congruent condition came to expect that a congruent trial would follow an incongruent trial (maybe on the basis of a false belief in the so-called “law of averages”). Thus, when two incongruent trials occurred in succession, accuracy on the second trial of the pair was particularly low. In any event, if a particular design has been shown to produce opposite patterns in mean RTs and accuracy, it should be avoided.

Conclusions

Sequential modulation is a fascinating and useful phenomenon, but most early demonstrations were plagued by various confounds. In order to control for all five of the repetition-based confounds that might cloud interpretation, a four-alternative task must be used (at a minimum). Under 4-AFC, however, if the congruence of the trials is left at chance, then only 25% of the trials will be congruent. In order to equalize the amounts of data that will be collected in each of the four key conditions, one might be tempted to raise the proportion of congruent trials to 50%. But this should not be done for three different reasons.

First, by introducing a correlation between the supposedly irrelevant attribute of the stimulus and the correct response, the task has ceased to purely concern the target issue, which is the ability of people to process information selectively when they have no good reason to go against these instructions. In blunt terms, an attribute that is technically informative is not really irrelevant, regardless of what the instructions might say. Second, rather than equalizing the amounts of data across the four conditions, raising the proportion of congruent trials to 50% actually makes the smallest condition even smaller than if congruence were left at chance. It just happens that a different condition is now the smallest. Third, raising the proportion of congruent trials to 50% can have important and highly unwanted effects on the data. For at least one set of conditions—namely, a color-to-button Simon task, which is one of the most popular for the study of sequential modulation—the 50%-congruent design can produce opposite patterns for mean RTs and proportions of errors. For these three reasons, it is argued here that congruence be left at chance in all future studies of sequential modulation.

With regard to the evidentiary status of sequential modulation, it should first be noted that the majority of the experiments concerning this question have either used a two-alternative task or have failed to remove all trials with some kind of stimulus or response repetition. Therefore, these data do not provide unambiguous evidence, even if they have provided prima facie evidence. Of the experiments that remain, the present results join those of Schmidt and De Houwer (2011), in particular, in finding no evidence of sequential modulation in the standard versions of Simon, flanker, and Stroop tasks when chance levels of congruence are used and repetitions are controlled (see also Puccioni & Vallesi, in press). Thus, one might be tempted to conclude, at this point, that all existing evidence of sequential modulation is tainted in some way. This conclusion, however, would be too strong, as there are a few demonstrations of reliable sequential modulation in cases in which all of the repetition confounds have been controlled and congruence was at a chance level, albeit in tasks that were much more complicated than the original forms of the Simon, flanker, and Stroop tasks. In one case, reliable amounts of sequential modulation were observed when trials alternated between two different tasks (Freitas et al., 2007); in another case, reliable sequential modulation was observed in a novel task that combined elements of the spatial version of the Stroop task and temporal flankers (Kunde & Wühr, 2006). Demonstrations of significant sequential modulation have also occurred when congruence was at chance and most—but not all—of the repetition confounds were controlled (e.g., Notebaert et al., 2006). Clearly, more research is necessary. It is suggested, however, that this work be done using 4-AFC tasks with chance levels of congruence.

Notes

1. These expected trial frequencies are all based on the assumption that trials are being selected with replacement (as this simplifies the mathematics considerably). If, instead, trial selection is done without replacement, as is more typical, the reported values are slightly inaccurate. However, as can be shown (albeit not in a footnote of reasonable length), sampling without replacement will still not make the 50%-congruent design as good as the 25%-congruent design in terms of the size of the smallest condition. Furthermore, given that errors are most frequent when the current trial is incongruent, having the smallest cell be one that involves these trials should, again, be avoided.

2. The claim of greater power for the 25%-congruent design is based on the slightly smaller error terms for this condition, as compared to the 50%-congruent condition. This was expected, given that the 25%-congruent design has a more even distribution of retained trials across the four key conditions (see the previous section).

References

Akçay, Ç., & Hazeltine, E. (2007). Feature-overlap and conflict monitoring: Two sources of sequential modulations. Psychonomic Bulletin & Review, 14, 742–748.

Akçay, Ç., & Hazeltine, E. (2008). Conflict adaptation depends on task structure. Journal of Experimental Psychology: Human Perception and Performance, 34, 958–973. doi:10.1037/0096-1523.34.4.958

Akçay, Ç., & Hazeltine, E. (2011). Domain-specific conflict adaptation without feature repetitions. Psychonomic Bulletin & Review, 18, 505–511. doi:10.3758/s13423-011-0084-y

Blais, C., Robidoux, S., Risko, E. F., & Besner, D. (2007). Item-specific adaptation and the conflict-monitoring hypothesis: A computational model. Psychological Review, 114, 1076–1086. doi:10.1037/0033-295X.114.4.1076

Botvinick, M. M., Braver, T. S., Barch, D. M., Carter, C. S., & Cohen, J. D. (2001). Conflict monitoring and cognitive control. Psychological Review, 108, 624–652. doi:10.1037/0033-295X.108.3.624

Broadbent, D. E. (1958). Perception and communication. New York: Pergamon Press.

Chen, S., & Melara, R. D. (2009). Sequential effects in the Simon task: Conflict adaptation or feature integration? Brain Research, 1297, 89–100. doi:10.1016/j.brainres.2009.08.003

Egner, T. (2007). Congruency sequence effects and cognitive control. Cognitive, Affective, & Behavioral Neuroscience, 7, 380–390.

Eriksen, B. A., & Eriksen, C. W. (1974). Effects of noise letters upon the identification of a target letter in a nonsearch task. Perception & Psychophysics, 16, 143–149. doi:10.3758/BF03203267

Freitas, A. L., Bahar, M., Yang, S., & Banai, R. (2007). Contextual adjustments in cognitive control across tasks. Psychological Science, 18, 1040–1043. doi:10.1111/j.1467-9280.2007.02022.x

Frith, C. D., & Done, D. J. (1986). Routes to action in reaction time tasks. Psychological Research, 48, 169–177.

Funes, M. J., Lupiáñez, J., & Humphreys, G. (2010). Analyzing the generality of conflict adaptation effects. Journal of Experimental Psychology: Human Perception and Performance, 36, 147–161. doi:10.1037/a0017598

Gratton, G., Coles, M. G. H., & Donchin, E. (1992). Optimizing the use of information: Strategic control of activation of responses. Journal of Experimental Psychology: General, 121, 480–506. doi:10.1037/0096-3445.121.4.480

Hazeltine, E., Akçay, Ç., & Mordkoff, J. T. (2011). Keeping Simon simple: Examining the relationship between sequential modulations and feature repetitions with two stimuli, two locations and two responses. Acta Psychologica, 136, 245–252.

Kerns, J. G., Cohen, J. D., MacDonald, A. W., III, Cho, R. Y., Stenger, V. A., & Carter, C. S. (2004). Anterior cingulate conflict monitoring and adjustments in control. Science, 303, 1023–1026. doi:10.1126/science.1089910

Kunde, W., & Wühr, P. (2006). Sequential modulations of correspondence effects across spatial dimensions and tasks. Memory & Cognition, 34, 356–367.

Larson, M. J., Kaufman, D. A. S., & Perlstein, W. W. (2009). Neural time course of conflict adaptation effects on the Stroop task. Neuropsychologia, 47, 663–670.

Luck, S. J., & Thomas, S. J. (1999). What variety of attention is automatically captured by peripheral cues? Perception & Psychophysics, 61, 1424–1435. doi:10.3758/BF03206191

Mayr, U., & Awh, E. (2009). The elusive link between conflict and conflict adaptation. Psychological Research, 73, 794–802.

Mayr, U., Awh, E., & Laurey, P. (2003). Conflict adaptation effects in the absence of executive control. Nature Neuroscience, 6, 450–452.

Miller, J. (1987). Priming is not necessary for selective-attention failures: Semantic effects of unattended, unprimed letters. Perception & Psychophysics, 41, 419–434. doi:10.3758/BF03203035

Mordkoff, J. T. (1996). Selective attention and internal constraints: There is more to the flanker effect than biased contingencies. In A. Kramer, M. G. H. Coles, & G. Logan (Eds.), Converging operations in the study of visual selective attention (pp. 483–502). Washington, DC: APA.

Mordkoff, J. T. (1998, November). Gating of irrelevant information in selective attention. Paper presented at the 39th Annual Meeting of the Psychonomic Society, Dallas, TX.

Mordkoff, J. T., & Halterman, R. (2008). Feature integration without visual attention: Evidence from the correlated flankers task. Psychonomic Bulletin & Review, 15, 385–389.

Mordkoff, J. T., Halterman, R., & Chen, P. (2008). Why does the effect of short-SOA exogenous cuing on simple RT depend on the number of display locations? Psychonomic Bulletin & Review, 15, 819–824.

Mordkoff, J. T., & Yantis, S. (1991). An interactive race model of divided attention. Journal of Experimental Psychology: Human Perception and Performance, 17, 520–538. doi:10.1037/0096-1523.17.2.520

Neill, W. T. (1977). Inhibitory and facilitatory processes in selective attention. Journal of Experimental Psychology: Human Perception and Performance, 3, 444–450.

Nieuwenhuis, S., Stins, J. F., Posthuma, D., Polderman, T. J. C., Boomsma, D. I., & De Geus, E. J. (2006). Accounting for sequential trial effects in the flanker task: Conflict adaptation or associative priming? Memory & Cognition, 34, 1260–1272.

Notebaert, W., Gevers, W., Verbruggen, F., & Liefooghe, B. (2006). Top-down and bottom-up sequential modulations of congruency effects. Psychonomic Bulletin & Review, 13, 112–117.

Notebaert, W., & Verguts, T. (2007). Dissociating conflict adaptation from feature integration: A multiple regression approach. Journal of Experimental Psychology: Human Perception and Performance, 33, 1256–1260.

Posner, M. I., & Cohen, Y. A. (1984). Components of visual orienting. In H. Bouma & D. G. Bouwhuis (Eds.), Attention and performance X: Control of language processes (pp. 531–556). Hillsdale: Erlbaum.

Puccioni, O., & Vallesi, A. (in press). Sequential congruency effects: Disentangling priming and conflict adaptation. Psychological Research. doi:10.1007/s00426-011-0360-5

Schmidt, J. R., & Besner, D. (2008). The Stroop effect: Why proportion congruent has nothing to do with congruency and everything to do with contingency. Journal of Experimental Psychology: Learning, Memory, and Cognition, 34, 514–523.

Schmidt, J. R., & De Houwer, J. (2011). Now you see it, now you don’t: Controlling for contingencies and stimulus repetitions eliminates the Gratton effect. Acta Psychologica, 138, 176–186.

Tassinari, G., Aglioti, S., Pallini, R., Berlucchi, G., & Rossi, G. F. (1994). Interhemispheric integration of simple visuomotor responses in patients with partial callosal defects. Behavioural Brain Research, 64, 141–149.

Tipper, S. P., & Cranston, M. (1985). Selective attention and priming: Inhibitory and facilitatory effects of ignored primes. Quarterly Journal of Experimental Psychology, 37A, 591–611.

Wendt, M., & Kiesel, A. (2011). Conflict adaptation in time: Foreperiods as contextual cues for attentional adjustment. Psychonomic Bulletin & Review, 18, 910–916.

Wendt, M., Kluwe, R. H., & Peters, A. (2006). Sequential modulations of interference evoked by processing task-irrelevant stimulus features. Journal of Experimental Psychology: Human Perception and Performance, 32, 644–667. doi:10.1037/0096-1523.32.3.644

Author Note

The author thanks Eliot Hazeltine, Jaeyong Lee, Cathleen Moore, and James Schmidt for many useful discussions of these issues, as well as comments on the manuscript. The data were collected by the 2011 Amps Lab team: Matt Georges, Andrea Gerke, Ashley Johnson, Taharat Khan, Laura Mikolajczyk, and Mike Papso. This work was supported by the University of Iowa.

Cite this article

Mordkoff, J.T. Observation: Three reasons to avoid having half of the trials be congruent in a four-alternative forced-choice experiment on sequential modulation. Psychon Bull Rev 19, 750–757 (2012). https://doi.org/10.3758/s13423-012-0257-3
