Abstract

According to the ideomotor principle, action preparation involves the activation of associations between actions and their effects. However, there is only sparse research on the role of action effects in saccade control. Here, participants responded to lateralized auditory stimuli with spatially compatible saccades toward peripheral targets (e.g., a rhombus in the left hemifield and a square in the right hemifield). Prior to the imperative auditory stimulus (e.g., a left tone), an irrelevant central visual stimulus was presented that was congruent (e.g., a rhombus), incongruent (e.g., a square), or unrelated (e.g., a circle) to the peripheral saccade target (i.e., the visual effect of the saccade). Saccade targets were present throughout a trial (Experiment 1) or appeared after saccade initiation (Experiment 2). Results showed shorter response times and fewer errors in congruent (vs. incongruent) conditions, suggesting that associations between oculomotor actions and their visual effects play an important role in saccade control.

Similar content being viewed by others

For a long time, the predominant view in cognitive psychology held that action is stimulus driven—that is, that actions are determined mainly by the properties of preceding stimuli (see Donders, 1868, who mainly studied actions as responses to stimuli). However, a contrasting view maintained that anticipated action effects determine the action (ideomotor view; Greenwald, 1970; James, 1890; see Stock & Stock, 2004). For example, one might turn up the heater because it is cold (stimulus-driven view) or because it should be warm (ideomotor view).

In a typical experiment in favor of the ideomotor view, participants execute actions followed by specific effects. Crucially, prior to the action, a stimulus appears that either shares features with the action effect (congruent stimulus) or is incongruent/unrelated. Whenever performance is enhanced for congruent (vs. incongruent/unrelated) stimuli, this is interpreted as evidence for the assumption that action effects are represented prior to (and used for) action preparation. For example, in a study by Elsner and Hommel (2001), participants performed a free choice task, in which keypresses were systematically followed by (task-irrelevant) high- versus low-pitched tones (learning phase). Later, these tones were utilized as stimuli, and whenever the required response matched the previous key–tone mapping, performance was facilitated. Similar effects were also reported for forced choice tasks without a dedicated learning phase. Here, keypresses were triggered by stimuli that were accompanied by additional task-irrelevant stimuli, the latter being congruent versus incongruent with the action effects (e.g., Hommel, 1996). Other studies generalized these findings by showing similar results for visual action effects (e.g., Kunde, 2001). Note that in these studies, the action effects were usually completely arbitrary.

A theoretical framework that incorporates the ideomotor view of action is the theory of event coding (TEC; Hommel, Müsseler, Aschersleben, & Prinz, 2001). One of its main assumptions is that actions are coded in terms of their perceptual consequences. Therefore, there is no fundamental difference between the domains of perception and action. The underlying representations of such perception–action compounds are called event files, in which feature codes that overlap in time are integrated, such as features of stimuli, associated actions, and their perceptual consequences. According to TEC, perception is the consequence of action as well as its source, and both domains are mutually coordinated (Hommel, 2009).

Although most studies on action effects were conducted in the manual domain (keypresses, grasping), the general claims of the ideomotor view and TEC should also extend to other action modalities. For example, Haazebroeck and Hommel (2009) explicitly stated that “we actively move our eyes, head, and body to make sure that our retina is hit by the light that is reflecting the most interesting and informative event” (p. 32), suggesting that saccades are also an action domain driven by goal-directed cognition (Milner & Goodale, 2008). Accordingly, recent studies no longer view our visuomotor system solely as a perceptual device, but also as an action modality (e.g., Huestegge & Koch, 2009, 2010a) aiming at the perception of new relevant information in the context of a task. For each saccade, its effect lies in the perception of the postsaccadic object. Interestingly, and in contrast to previous studies utilizing arbitrary action effects, the perceptual effects of eye movements are never arbitrary but are an integral part of the action. As a consequence, the visual system can be regarded as a natural integrator of the domains of perception and action (Huestegge & Koch, 2010b), and the anticipation of actions effects might thus be of great importance. Alternatively, it may be that eye movements are not controlled by an internal representation of an action effect, since peripheral vision might be used as a reliable external representation of potential action effects. Thus, a closer study of the role of action effects in saccade control appears vitally important. Interestingly, previous models of eye movement control consist mainly of a “when” and a “where” processing stream (e.g., Findlay & Walker, 1999), whereas any effects of target identity (in the absence of alternative targets) on processing efficiency would apparently be a novel and fundamental addition.

Interestingly, around the time of the present study, another (independent) research group found first evidence for action–effect associations in saccade control when the action effects consisted of changes in facial expression (from neutral to happy vs. angry faces; Herwig & Horstmann, 2011). However, the critical action effect always appeared 100 ms after saccade execution, so that the action effects (e.g., a happy face) were not identical with the saccade targets (always a neutral face). Thus, these results cannot easily be generalized to basic saccade control. Furthermore, it remains an open question whether their effects are limited to emotional face processing and how the temporal dynamics of action–effect associations evolve.

The aim of the present study was to test whether learned associations between spatial codes (e.g., based on saccades toward the left hemifield) and corresponding (task-irrelevant) information about target object identity (e.g., based on foveation of a square resulting from saccades toward the left hemifield) generally affect saccade control. Participants responded to lateralized imperative auditory stimuli with spatially corresponding saccades to peripheral targets. The saccade target (i.e., the action effect) was a square in one visual hemifield and a rhombus in the other hemifield. Crucially, prior to the presentation of the imperative auditory stimulus, a central, task-irrelevant visual stimulus appeared that was congruent, incongruent, or unrelated to the subsequent peripheral saccade target (see Hommel, 1996, for a similar design). We reasoned that if saccade preparation toward the peripheral target object (indicated by the auditory stimulus) involves the visual representation of the target’s identity, the additionally processed central visual stimulus should affect saccade performance, depending upon its congruency with the target. More specifically, a congruent visual stimulus might prime (i.e., speed up) saccadic response time (RT), whereas an incongruent stimulus should (according to TEC) activate a conflicting event file—that is, a representation that includes the representation of an eye movement to the wrong side. This should interfere with the required response and slow down saccadic RT and/or enhance error rates. To gather information regarding the underlying processing timeline, we additionally varied the interval between the visual and auditory stimuli (see Ziessler & Nattkemper, 2011, for a similar manipulation in the manual domain).

In Experiment 1, the saccade target (i.e., the action effect) was already present prior to saccade initiation (i.e., the action) to resemble a natural situation for saccade programming (i.e., without any display changes with respect to the saccade target). To rule out potential perceptual grouping effects, we utilized a saccade-contingent display change procedure in Experiment 2, where saccade targets were visible only after saccade initiation.

Method

Participants

Eighteen participants (university students) with normal or corrected-to-normal vision participated in Experiment 1 (mean age = 24 years), and 18 new participants took part in Experiment 2 (mean age = 22 years).

Apparatus

Participants sat in front of a 21-in. cathode ray monitor (temporal resolution, 100 Hz; spatial resolution, 1,240 × 1,068 pixels) at a viewing distance of 55 cm. Eye movements of the right eye were measured using an Eyelink II system (SR Research, Canada) with a temporal resolution of 500 Hz. The experiment was programmed using Experiment Builder (SR Research, Canada).

Stimuli and procedure

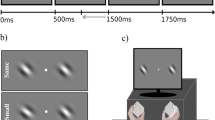

Figure 1 depicts a schematic trial sequence in Experiment 1. First, a green central fixation cross and two green saccade targets (square and rhombus) 10° to the left and right in the periphery were presented on a black background. The size of the fixation cross and the two saccade targets amounted to 1.4°. The relative position of the two targets (e.g., square left/rhombus right) was counterbalanced across subjects. Then, the central fixation cross was replaced by a task-irrelevant visual stimulus (400-ms duration). The visual stimulus was a square, a rhombus, or a circle (equally distributed across trials). Note that the square and the rhombus were either congruent or incongruent with the saccade target, whereas the circle was unrelated (baseline condition). After the central visual stimulus, the lateralized imperative auditory stimulus was presented (50-ms beep of 600 Hz to the left or right ear via headphones). The time interval between trial onset and the onset of the auditory stimulus was randomized (500/1,000/1,500 ms). The interval between the visual and the auditory stimuli (stimulus onset asynchrony, SOA) amounted to 200 or 400 ms.

Schematic representation of a trial sequence in Experiment 1. In Experiment 2, the square/rhombus in the periphery appeared only after the initiation of a saccade toward the peripheral target positions. Whenever participants fixated the screen center in Experiment 2, crosses (similar to the central fixation cross) served as placeholders at the peripheral target positions

After the onset of the imperative auditory stimulus, the fixation screen was still present for 1,500 ms. Within this time interval, participants were instructed to shift their gaze as quickly as possible to the peripheral target that spatially corresponded to the auditory stimulus. While in Experiment 1 the saccade targets were present throughout the trial, saccade target onset in Experiment 2 occurred during saccade execution (using peripheral plus signs as placeholders before saccade execution and after the return saccade to the central fixation cross). The experiments (45 min) consisted of four blocks with 81 randomized trials each. Prior to each block, participants underwent calibration.

Design

The variables congruency (congruent, incongruent, unrelated) and SOA (400 ms, 200 ms) were manipulated intraindividually. We measured the latency and direction of saccade responses. Saccades that covered at least half of the distance between fixation cross and saccade target were considered as responses. Mean RTs were calculated on the basis of trials with correct responses. Missing responses or direction errors were considered as errors.

Results

Only 0.6 % of all trials were excluded from the analysis due to blinks or anticipatory saccades (latencies < 60 ms). RTs of correct responses were submitted to a three-way ANOVA with congruency and SOA as within-subjects factors and experiment (1 vs. 2) as a group factor. Figure 2 depicts mean saccade RTs. There was a significant main effect of congruency, F(2, 68) = 25.79, p < .001, η p² = .43. RTs were shortest in congruent conditions (252 ms, SE = 6.14), longest in incongruent conditions (266 ms, SE = 6.22), and at an intermediate level for trials with unrelated stimuli (260 ms, SE = 6.70). The effect of SOA was significant, F(1, 34) = 46.63, p < .001, η p² = .58, indicating longer RTs for the SOA = 400 ms condition (275 ms, SE = 7.65), as compared with the SOA = 200 ms condition (244 ms, SE = 5.46). The main effect of experiment was significant, too, F(1, 34) = 14.59, p < .001, η p² = .30, indicating greater RTs in Experiment 2 (involving display changes) than in Experiment 1 (283 vs. 235 ms). However, the experiment variable did not significantly interact with congruency or SOA, and there was no significant three-way interaction, all ps > .10. There was a significant interaction of SOA and congruency, F(2, 68) = 9.94, p < .001, η p² = .23. To further qualify this interaction, we conducted separate one-way ANOVAs for each SOA condition, averaged across experiments. In both the 200-and 400-ms SOA conditions, there was a significant effect of congruency, F(2, 70) = 14.82 and 16.54, respectively, ps < .001. In the SOA = 200 ms condition, congruent trials differed significantly from both incongruent and baseline trials (both ps < .001), whereas there was no significant difference between incongruent and baseline trials (p > .10). In the SOA = 400 ms condition, incongruent trials differed significantly from both congruent and baseline trials (both ps < .001), but there was no significant difference between congruent and baseline trials (p > .10). Taken together, these results point to a beneficial effect of congruency with a short SOA and to an adverse effect of incongruency with a long SOA.

To explore potential learning effects, we compared RTs (only for congruent vs. incongruent conditions) across the four blocks, averaged across experiments. Due to sphericity violations, we applied Greenhouse–Geisser corrections. There was a significant effect of congruency, F(1, 35) = 49.49, p < .001, η p ² = .59, and a significant effect of block, F(3, 105) = 9.12, p < .001, indicating gradually decreasing RTs. Importantly, there was no significant interaction, F(3, 105) = 2.56, p > .05. In fact, the congruency effect nominally tended to decrease across blocks, indicating that the buildup of saccade target representations already occurred within the first few trials.

Overall, error rates were quite low (below 3%). Nevertheless, statistical analyses resulted in a significant effect of congruency, F(2, 68) = 7.50, p = .001, η p ² = .18. Mean error rates amounted to 1.9% (SE = 0.4) for congruent conditions, 3.2% (SE = 0.5) for incongruent conditions, and 2.8% (SE = 0.6) for trials with unrelated visual stimuli. There were slightly more errors in Experiment 1 (3.7%) than in Experiment 2 (1.6%), F(1, 34) = 4.92, p = .033, η p ² = .13. The significant interaction between experiment and congruency, F(2, 68) = 4.01, p = .023, η p ² = .11, indicated a slightly greater congruency effect in Experiment 1 (2%) than in Experiment 2 (0.6%). There was no significant effect of SOA, F < 1, and no significant interaction of congruency and SOA, F(2, 68) = 1.15, p > .10. However, there was a significant three-way interaction, F(2, 68) = 7.41, p = .001, η p ² = .18, indicating that the congruency effect was greater in Experiment 1 (vs. 2), especially in the SOA = 400 ms condition.

Discussion

The congruency effect in both experiments indicates that participants processed and learned the specific (task-irrelevant) identity of the objects in the left and right hemifields in the course of the visual display exploration induced by our saccade task. Moreover, the learned associations of the identity of a saccade target (e.g., a square) and its spatial code (e.g., left) affected saccade control. This can be inferred from the fact that the additional task-irrelevant presentation of the central visual stimulus influenced saccade performance, depending on the congruency of the visual stimulus with the saccade target within each trial: Saccade performance (RTs/errors) was worse in incongruent versus congruent conditions. This is in line with the assumption that learned associations between oculomotor actions (e.g., leftward saccades) and their effects (e.g., foveation of a square) affect saccade control (ideomotor view of action; Greenwald, 1970; James, 1890). Therefore, previous findings in the context of manual keypresses (e.g., Elsner & Hommel, 2001; Hommel, 1996; Kunde, 2001) or grasping movements (e.g., Craighero, Fadiga, Rizzolatti, & Umiltà, 1999) generalize to the visual system, which is inherently characterized by a strong integration of the domains of perception and action (e.g., Huestegge & Koch, 2010b).

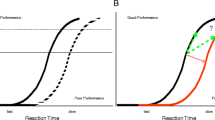

The inclusion of the baseline condition (unrelated stimuli) provided further information about underlying processes. As was expected, mean baseline RTs fell in between the RT levels of congruent and incongruent conditions. However, a closer look at the individual SOA levels revealed a beneficial effect of congruent stimuli in the SOA = 200 ms condition, whereas incongruent stimuli produced similar RTs as the baseline condition. Most likely, congruent visual stimuli served as primes that preactivated the corresponding response, eventually facilitating saccade execution. This priming process seems to build up comparatively quickly, since its effects were already present in the 200-ms SOA condition.

However, the SOA = 400 ms condition revealed an adverse effect of incongruent visual stimuli, whereas congruent stimuli produced similar RTs as the baseline condition. This probably reflects a distinct, slower process during which incongruent visual stimuli preactivate incorrect responses, eventually causing interference. Maybe the incorrect response has to be suppressed and (in a time-consuming process) replaced with the correct response, and the increased error rates for incongruent stimuli partially reflect the failure to inhibit the incorrect response on some trials. Taken together, this result pattern might indicate that two separable processes underlie the overall congruency effect: (1) a beneficial and fast priming effect produced by congruent visual stimuli and (2) a slower interference effect produced by incongruent visual stimuli.

Note that this processing timeline is slightly at variance with results from a recent study on action effects in the manual domain by Ziessler and Nattkemper (2011). They manipulated SOA within a flanker paradigm and reported data suggesting that effect-related information is involved only in later stages of response processing (i.e., when the effect-related stimulus appears with or after the imperative stimulus). Also, they did not find clear evidence for costs of incongruent (relative to baseline) conditions. These differences are probably due to modality-specific processing dynamics, since they utilized visual (instead of auditory) imperative stimuli and manual (instead of oculomotor) actions.

By and large, the present results are in line with central assumptions of TEC (Hommel, 2009; Hommel et al., 2001). Congruent stimuli may cause a preactivation of the corresponding event file, which contains the representation of the correct response, eventually resulting in faster response execution. In contrast, incongruent stimuli may preactivate an event file that contains the representation of the incorrect response, and the activation of the correct response is associated with performance costs in terms of prolonged RTs and inflated errors. Since unrelated stimuli are not associated with any of the two specific responses, they produce neither specific performance benefits nor costs.

The present results also appear to be relevant for models of saccade control, which typically consist of “when” and “where” processing streams (e.g., Findlay & Walker, 1999). Our results suggest that target identity information (even in the absence of alternative targets) directly influences processing efficiency in the “when” stream. Thus, the addition of a “what” processing stream that feeds down to the stream of “when” computations may result in a more complete picture of cognitive processes during oculomotor control.

One possible explanation of the congruency effect might be that the visual stimulus triggers a representation of its associated spatial code (e.g., a square triggers the concept of “left,” because squares were always presented at the left location), which then primes (or interferes with) the subsequent response. According to this explanation, the visual stimulus would be equivalent to an (albeit uninformative) symbolic cue, and the congruency effect would rather occur at the input (stimulus-processing) stages of task processing instead of around the time of response selection. This explanation would establish a weaker account of the role of action effects in saccade control. On the one hand, this mechanism would still require that action effects are processed, associated with the target locations, and retrieved during a trial, since (at least in Experiment 2) the action effect occurred only as a result of the action. On the other hand, however, this explanation would not necessitate a strong ideomotor account that assumes that a representation of the anticipated action effect is a necessary part of response planning on each trial.

However, the weaker (stimulus-processing-related) account appears to be inconsistent with the finding that long SOAs produced longer RTs. If the visual stimulus was (despite its uninformativeness) used to activate a spatial code for subsequent response preparation, more preparation time should lead to better performance in congruent conditions. Instead, the main effect of SOA suggests that the processing of the visual stimulus is rather effortful (instead of facilitating), because an increase of processing time for the visual stimulus was generally associated with an increase of its disruptive effect on saccade RTs. Therefore, it may seem more plausible to assume that the onset of the imperative auditory stimulus indeed evoked an (effortful) visual representation of the anticipated action effect on the majority of trials, which then was primed by (or subject to interference from) the additionally processed central visual stimulus. However, more research may be needed to fully qualify the locus of the present congruency effect in the timeline of task processing.

Since the present experimental design did not involve a separate acquisition phase for learning the action effects without the presence of the imperative auditory stimuli, it is in principle possible that the central presentation of the saccade effect preactivated the saccade either directly (via saccade–effect associations), or indirectly (via tone–effect associations, which then preactivate the corresponding saccade). However, previous studies already presented evidence for the existence of direct action–effect associations for manual actions with auditory effects (Elsner & Hommel, 2001), but also for oculomotor actions with visual effects (Herwig & Horstmann, 2011; see below), strongly suggesting that our present results were also based on direct action–effect associations.

Another alternative explanation of the congruency effect in Experiment 1 could be that due to the continuous presentation of the saccade targets, a congruent central visual stimulus yields perceptual grouping with the same object in the periphery (due to Gestalt factors), subsequently triggering an attention shift toward the respective hemifield. However, the experimental procedure in Experiment 2 effectively ruled out this explanation, and despite an overall RT increase (probably due to disruptive effects of the display change), the overall result pattern was identical. Thus, while Experiment 1 showed that (anticipated) action effects affect saccade control under natural conditions (e.g., without changes of the saccade target), Experiment 2 ruled out a perceptual grouping explanation.

As was mentioned above, around the time of the present study, another research group reported evidence for action–effect associations in saccade control when the action effects consisted of changes in facial expression (Herwig & Horstmann, 2011), instead of abstract geometrical stimuli. Interestingly, some aspects of their data even suggested that action effects were activated endogenously—that is, without the need for external imperative stimulation. On the one hand, this study further underlines the credibility of the present effects. On the other hand, our present findings extend these data by indicating that saccade control is generally affected by action effect representations, irrespective of the specific stimulus type. Additionally, Herwig and Horstmann used a design in which the effects appeared 100 ms after saccade execution, so that the action effects were not identical with the targets of each saccade. In contrast, our present findings appear to have strong implications for saccade control in general, since we found evidence that internal representations of action effects play a quite fundamental role in guiding each saccade to its target.

References

Craighero, L., Fadiga, L., Rizzolatti, G., & Umiltà, C. (1999). Action for perception: A motor-visual attentional effect. Journal of Experimental Psychology: Human Perception and Performance, 25, 1673–1692.

Donders, F. C. (1868). Die Schnelligkeit psychischer Prozesse. Archiv für Anatomie, Physiologie und wissenschaftliche Medicin, 657–681.

Elsner, B., & Hommel, B. (2001). Effect anticipation and action control. Journal of Experimental Psychology: Human Perception and Performance, 27, 229–240.

Findlay, J. M., & Walker, R. (1999). A model of saccade generation based on parallel processing and competitive inhibition. The Behavioral and Brain Sciences, 22, 661–674.

Greenwald, A. G. (1970). Sensory feedback mechanisms in performance control: With special reference to the ideomotor mechanism. Psychological Review, 77, 73–99.

Haazebroeck, P., & Hommel, B. (2009). Anticipative control of voluntary action: Towards a computational model. In G. Pezzulo, M. V. Butz, O. Sigaud, & G. Baldassarre (Eds.), Anticipatory behavior in adaptive learning systems— from psychological theories to artificial cognitive systems (pp. 31–47). Berlin: Springer.

Herwig, A., & Horstmann, G. (2011). Action–effect associations revealed by eye movements. Psychonomic Bulletin & Review, 18, 531–537.

Hommel, B. (1996). The cognitive representation of action: Automatic integration of perceived action effects. Psychological Research, 59, 176–186.

Hommel, B. (2009). Action control according to TEC (theory of event coding). Psychological Research, 73, 512–526.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The theory of event coding (TEC): A framework for perception and action planning. The Behavioral and Brain Sciences, 24, 849–878.

Huestegge, L., & Koch, I. (2009). Dual-task crosstalk between saccades and manual responses. Journal of Experimental Psychology: Human Perception and Performance, 35, 352–362.

Huestegge, L., & Koch, I. (2010a). Crossmodal action selection: Evidence from dual-task compatibility. Memory & Cognition, 38, 493–501.

Huestegge, L., & Koch, I. (2010b). Fixation disengagement enhances peripheral perceptual processing: Evidence for a perceptual gap effect. Experimental Brain Research, 201, 631–640.

James, W. (1890). The principles of psychology (2 vols.). New York: Henry Holt. Reprinted Bristol: Thoemmes Press, 1999.

Kunde, W. (2001). Response–effect compatibility in manual choice reaction tasks. Journal of Experimental Psychology: Human Perception and Performance, 27, 387–394.

Milner, A. D., & Goodale, M. A. (2008). Two visual systems re-viewed. Neuropsychologia, 46, 774–785.

Stock, A., & Stock, C. (2004). A short history of ideo-motor action. Psychological Research, 68, 176–188.

Ziessler, M., & Nattkemper, D. (2011). The temporal dynamics of effect anticipation in action planning. Quarterly Journal of Experimental Psychology, 64, 1305–1326.

Authors’ Note

Lynn Huestegge and Magali Kreutzfeldt, Institute of Psychology, RWTH Aachen University, Aachen, Germany. We thank Bernhard Hommel, Jochen Müsseler, Arvid Herwig, Geoff Cole, Robert Proctor, and Steve Tipper for comments on previous drafts of the manuscript and those who kindly volunteered to participate in the study.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Huestegge, L., Kreutzfeldt, M. Action effects in saccade control. Psychon Bull Rev 19, 198–203 (2012). https://doi.org/10.3758/s13423-011-0215-5

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13423-011-0215-5