Abstract

An important question for the study of social interactions is how the motor actions of others are represented. Research has demonstrated that simply watching someone perform an action activates a similar motor representation in oneself. Key issues include (1) the automaticity of such processes, and (2) the role object affordances play in establishing motor representations of others’ actions. Participants were asked to move a lever to the left or right to respond to the grip width of a hand moving across a workspace. Stimulus-response compatibility effects were modulated by two task-irrelevant aspects of the visual stimulus: the observed reach direction and the match between hand-grasp and the affordance evoked by an incidentally presented visual object. These findings demonstrate that the observation of another person’s actions automatically evokes sophisticated motor representations that reflect the relationship between actions and objects even when an action is not directed towards an object.

Recent research indicates that the human motor system is not only involved in the production of purposeful behavior but also plays a central role when observing the actions of others (Wilson & Knoblich, 2005). For example, mirror neurons have been discovered in the premotor and parietal cortices of the macaque monkey (e.g., di Pellegrino, Fadiga, Fogassi, Gallese, & Rizzolatti, 1992) that fire not only when the monkey performs an action but also when it passively observes this action, suggesting that action observation involves a mapping of others’ actions onto the observer’s own motor system. In humans, studies using functional magnetic resonance imaging have provided evidence that action observation and execution rely on closely overlapping neuronal systems (e.g., Chong, Cunnington, Williams, Kanwisher, & Mattingley, 2008; Chong, Williams, Cunnington, & Mattingley, 2008; Kilner, Neal, Weiskopf, Friston, & Frith, 2009; Oosterhoff, Wiggett, Diedrichsen, Tipper, & Downing, 2010).

Action mirroring has been demonstrated behaviorally with stimulus-response compatibility paradigms. These studies have shown that others’ actions are automatically mapped on the observer’s own motor system and facilitate similar responses (e.g., Brass, Bekkering, Wohlschläger, & Prinz, 2000; Stürmer, Aschersleben, & Prinz, 2000). Such effects have been demonstrated for various visual components of observed actions, such as the body parts used (Bach, Peatfield, & Tipper, 2007; Bach & Tipper, 2007; Gillmeister, Catmur, Liepelt, Brass, & Heyes, 2008), hand posture (Stürmer et al., 2000; Brass et al., 2000), kinematics (Edwards, Humphreys, & Castiello, 2003; Griffiths & Tipper, 2009) and timing information (e.g., Flach, Knoblich, & Prinz, 2004). Note, however, that all these properties exclusively reflect the behavior of the body part. What has not been fully explored so far is how object properties affect action mirroring. This is an important issue since human action typically occurs in the context of objects and research on action execution has demonstrated direct links between object perception and motor performance. For example, during grasping, the hand’s finger configuration, orientation and aperture are dynamically adjusted to the visual features of the goal object (e.g., Jeannerod, Arbib, Rizzolatti, & Sakata, 1995; Smeets & Brenner, 1999). Even passive viewing of an object has been shown to activate the appropriate grasp for the object (i.e., the object’s ‘affordances’, Craighero, Fadiga, Rizzolatti, & Umiltà, 1998; Gibson, 1979; Tucker & Ellis, 2001).

The notion that the goal object is important in both executed and observed actions is central to the present study. The evidence reviewed above suggests that when we see an object, our motor system prepares for the actions this object affords. It has been suggested by many authors that similar predictions—driven by so-called ‘forward models’—are made when observing others’ actions (e.g., Csibra, 2007; Flanagan & Johansson, 2003; Kilner, Friston, & Frith, 2007; Miall, 2003; Wolpert, Doya, & Kawato, 2003). However, what happens to the action perception system when a prediction is falsified by another source of information? Take, for example, a mismatch between the grip size of the observed hand (aperture between the forefinger and the thumb) and the size of the predicted target object. If an observed person makes a narrow grip, it is unlikely that the target of their action is a large object. Is the predicted model of the observed behavior disrupted in this case? If it is, then this should also disrupt observed-executed action compatibility effects.

This study tests whether the automatic extraction of object affordances also affects the processing of actions that are merely observed. To test for interactions of action observation and affordance systems, we manipulated two components of observed grasps: the direction of the reach and the appropriateness of the grasp to an object’s affordances (e.g., Jeannerod, 1995; Smeets & Brenner, 1999). Participants were shown videos of a hand reaching to the left, right or directly across a workspace and moved a joystick to the left or right to report whether the grasp aperture was small or large. A simple to-be-ignored object was also presented at the top of the screen on each trial (see Fig. 1, for examples). In order to establish a context of goal-directed actions, which has been shown to be important for mirroring processes to be engaged (e.g., Liepelt, von Cramon, & Brass, 2008; Longo & Bertenthal, 2009), the hand usually moved straight ahead towards the irrelevant object. The crucial trials, however, were those in which the reach was directed away from the object, to the left or right, creating a situation in which observed reach direction was either compatible or incompatible with the participants’ left/right responses. On the basis of prior research on action observation and stimulus-response compatibility (e.g., Bosbach, Prinz, & Kerzel, 2005; Brass et al., 2000; Simon, 1969; Stürmer et al., 2000), we expected the observed reach direction to facilitate compatible responses, rendering the execution of joystick movements in the same direction more efficient.

The important question was whether inappropriate object affordances would modulate these compatibility effects. We manipulated the match between observed grasp and the affordance of the irrelevant object. On ‘appropriate’ trials, the grasp would be narrow and the irrelevant object small, or the grasp would be wide and the object large. On ‘inappropriate’ trials, a narrow grasp would be paired with a large object, and a wide grasp with a small object. If the representation of the observed action is disrupted by a grasp-object mismatch, then any reach-response-direction compatibility effects should be reduced when observing reaches with inappropriately sized grips. Such effects would demonstrate that motor effects during action observation do not only reflect simple visuomotor matching processes, but also the automatic detection of the potential for successful action, based on an integration of object and action properties, even when not required for the task and when object and action are spatially separated.

Method

Participants

Twenty-two right-handed volunteers (14 female), ranging from 18 to 25 years, with a mean age of 19.9 years, participated in return for course credits. They gave informed consent and were unaware of the aims of the study.

Apparatus and stimuli

Stimulus presentation was controlled by Eprime 2.0, on a 17-inch monitor placed 60 cm away from the participant. Participants responded to the stimuli with the movement of a custom bi-directional joystick (15 cm in height) to the left or right. Reaction time was recorded by microswitches when the handle had moved through 20 degrees in either direction.

Forty videos were recorded with a Sony DCR-TRV900E camcorder fixed to a tripod. The camcorder was positioned over the right shoulder of the right-handed female actor and angled downwards such that only her right hand and the black workspace (40 x 40 cm) were in shot. At the start of each video, the actor’s hand rested at the center of the lower section of the workspace, rotated such that the back of the hand faced the camera with thumb and forefinger resting on the table, with a 6-cm aperture (exact positions marked by black tabs invisible on the final stimuli). One of two simple three-dimensional objects was placed in the center at the top of the workspace (i.e., directly in front of the hand position). The small object measured 2 × 2 × 2 cm, and the large object was wider (10 cm).

The actor was instructed to make fluent but precise reaching actions to one of three locations: Straight ahead, 30 degrees to the left, or 30 degrees to the right. She was asked to complete the reach by placing her forefinger and thumb on one set of markers placed 10 cm apart at each location, or on another pair of markers 2 cm apart. In the case of the ‘straight-ahead’ reaches, since either the small or large object was placed at this location, she was asked to grasp the object if the grip she was performing fitted the object, and to mime the grasp if it did not. Hence, there were a total of 3 (directions: Left, Right, Straight) x 2 (grip: Wide, Narrow) x 2 (object: Large, Small) = 12 reach types.
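The factorial structure of the recorded reaches can be enumerated in a few lines. This is only an illustrative sketch: the labels and the `ending` field (whether the actor completed a real grasp, mimed it, or landed on the table markers) are names introduced here, not part of the original materials.

```python
from itertools import product

DIRECTIONS = ("left", "right", "straight")
GRIPS = ("wide", "narrow")
OBJECTS = ("large", "small")

def grip_fits(grip, obj):
    """A wide grip fits the large object, a narrow grip fits the small one."""
    return (grip, obj) in {("wide", "large"), ("narrow", "small")}

def reach_types():
    """List every direction x grip x object combination with how the reach ends."""
    rows = []
    for direction, grip, obj in product(DIRECTIONS, GRIPS, OBJECTS):
        if direction == "straight":
            # Straight reaches end at the object: grasped if the grip fits, mimed otherwise.
            ending = "grasp" if grip_fits(grip, obj) else "mime"
        else:
            # Lateral reaches end on the table markers, away from the object.
            ending = "markers"
        rows.append((direction, grip, obj, ending))
    return rows

for row in reach_types():
    print(row)
```

The enumeration yields the 12 reach types described above, of which only two straight-ahead combinations end in an actual grasp.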

Between 6 and 20 examples of each reach were recorded, and independent observers selected among them on the basis of subjective visual quality, fluency of movement and consistency, such that 40 videos were selected as stimuli for the experiment. Eight videos were of reaches to the left, with two examples of each combination of wide/narrow grasps in the presence of a centrally placed large or small object. Similarly, eight videos showed rightward reaches. These videos were clipped such that the second frame was movement onset, and the final frame was the penultimate frame prior to contact with the object/table markers. The final videos therefore showed the hand in motion throughout, never touching the object or the table at the end of the video (see Fig. 1 for examples). Mean video durations were 881 ms (SD = 84 ms) in the appropriate condition and 896 ms (SD = 87 ms) in the inappropriate condition (durations were not significantly different, t(38) = .35, p = 0.73).

The remaining 60% of videos (n = 24) were straight reaches towards the object, equal numbers of which were wide and narrow grips towards large and small objects. The trials in which straight reaches were shown were not analyzed, as they merely served as filler items to establish a context of goal directed action, and were not balanced for perceptual parameters (mean duration = 792 ms, SD = 84).

Procedure

Participants were seated in front of the computer, and asked to view the videos. They were required to decide whether the aperture between the thumb and index finger was narrow or wide. They were asked to ignore the object at the top of the screen and the direction in which the hand moved, as these were irrelevant to their task, and to respond only with respect to the grip aperture. Participants were instructed to firmly grip the joystick and, for instance, to move it to the left if the observed finger aperture was narrow and to the right if it was wide. The polarity of these responses was reversed for alternate participants. Participants completed between 16 and 32 practice trials until they were comfortable with the task. Two hundred trials followed, with each of the 40 videos presented in a random order once in each of five blocks. Hence, there were 80 trials showing leftward or rightward reaches (16 videos in each of five blocks) and therefore 20 trials per condition in the 2 x 2 within-subjects design. A trial commenced with a fixation cross in the center of the screen for 1 s, followed by a blank screen for 1 s. The video was then played until its conclusion or a response, whichever occurred first. A blank screen was then presented for 1 s before the start of the next trial. Incorrect responses received immediate feedback in the form of a red cross in the center of the screen (for 500 ms), which was also presented if no response was recorded within 3 s of the offset of the video.
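The trial counts in the procedure can be checked with a short sketch. It assumes six filler videos per grip-object combination (the text only states that the 24 straight reaches were split equally), uses one of the two response mappings, and all function names are hypothetical; the experiment itself was run in E-Prime, not Python.

```python
import random
from collections import Counter
from itertools import product

def build_videos():
    """40 videos as (reach, grip, object): 16 lateral reaches plus 24 straight fillers."""
    videos = []
    for reach, grip, obj in product(("left", "right"), ("narrow", "wide"), ("small", "large")):
        videos += [(reach, grip, obj)] * 2  # two examples of each lateral combination
    for grip, obj in product(("narrow", "wide"), ("small", "large")):
        videos += [("straight", grip, obj)] * 6  # assumption: 6 fillers per combination
    return videos

def build_schedule(videos, n_blocks=5, seed=0):
    """Present every video once per block, in a fresh random order each block."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_blocks):
        block = list(videos)
        rng.shuffle(block)
        schedule.extend(block)
    return schedule

def design_cell(trial, response_map):
    """Return (appropriateness, compatibility), or None for unanalyzed straight reaches."""
    reach, grip, obj = trial
    if reach == "straight":
        return None
    appropriate = (grip, obj) in {("narrow", "small"), ("wide", "large")}
    compatible = response_map[grip] == reach  # required response matches reach direction
    return ("appropriate" if appropriate else "inappropriate",
            "compatible" if compatible else "incompatible")

videos = build_videos()
schedule = build_schedule(videos)
response_map = {"narrow": "left", "wide": "right"}  # reversed for alternate participants
counts = Counter(design_cell(trial, response_map) for trial in schedule)
print(counts)
```

Under either response mapping, each of the four analyzed cells receives 20 trials and the remaining 120 trials are straight-ahead fillers.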

Results

Errors

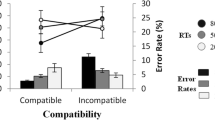

The percentages of errors made by each participant in each condition were submitted to a 2 x 2 repeated-measures ANOVA, with the factors ‘Appropriateness’ (aperture between observed finger and thumb was appropriate or inappropriate for grasping the object) and ‘Compatibility’ (observed reach direction and required joystick movement were compatible or incompatible). There was no main effect of ‘Appropriateness’, F(1, 21) = 1.61, MSe = 25.4, p = .22, ηp² = .07, but a significant main effect of ‘Compatibility’, F(1, 21) = 6.97, MSe = 102, p = .015, ηp² = .25, with fewer errors on ‘Compatible’ trials (2.3%) than on ‘Incompatible’ trials (8.0%). Importantly, the predicted interaction between these two factors was significant, F(1, 21) = 7.66, MSe = 14.8, p = .012, ηp² = .27. T-tests showed that this interaction was due to a compatibility effect that was significant when the finger aperture was appropriate for the size of the object, t(21) = 3.08, p = 0.006 (1.8 vs. 9.8% errors), but not when the size of the grip did not match the size of the object, t(21) = 1.72, p = 0.10 (2.7 vs. 6.1%) (see Fig. 2).
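For readers who want to check the reported effect sizes, the sketch below recomputes partial eta squared from each F value and its degrees of freedom. It also shows how, in a fully within-subjects 2 x 2 design, the interaction F equals the squared one-sample t on each participant's difference of compatibility effects. The function names and dictionary keys are hypothetical, and the raw data are not available here, so the interaction function is demonstrated only through its definition.

```python
import math
from statistics import mean, stdev

def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

def interaction_f(cells):
    """Interaction F for a 2 x 2 within-subjects design.

    `cells` holds one dict per participant with the four cell means.
    The interaction contrast is the compatibility effect for appropriate
    grasps minus the compatibility effect for inappropriate grasps.
    Returns (F, error degrees of freedom).
    """
    contrasts = [
        (c["app_incompat"] - c["app_compat"]) - (c["inapp_incompat"] - c["inapp_compat"])
        for c in cells
    ]
    n = len(contrasts)
    t = mean(contrasts) / (stdev(contrasts) / math.sqrt(n))
    return t ** 2, n - 1

# F values and error df as reported in the text (error rates unless noted).
reported = {
    "Appropriateness": (1.61, 21),
    "Compatibility": (6.97, 21),
    "Interaction": (7.66, 21),
    "Compatibility (RT)": (25.0, 21),
    "Interaction (replication)": (4.71, 20),
}
for label, (f_value, df_error) in reported.items():
    print(label, round(partial_eta_squared(f_value, 1, df_error), 2))
```

The recomputed values agree with the effect sizes given in the text to two decimal places.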

Fig. 2 Mean error rates (left panel) and reaction times (right panel) for each condition in each experiment. Error bars show the within-subjects standard error (from the interaction term, cf. Loftus & Masson, 1994).
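A within-subjects standard error of this kind is commonly computed as the square root of the interaction mean square error divided by the number of participants. The sketch below applies that formula to the error-rate interaction term reported in the text (MSe = 14.8, n = 22). Whether the plotted error bars were derived in exactly this way is an assumption, and the function name is hypothetical.

```python
import math

def within_subject_se(ms_error, n_participants):
    """Standard error based on the subject-by-condition error term."""
    return math.sqrt(ms_error / n_participants)

# Error-rate interaction term from the first experiment.
se_errors = within_subject_se(14.8, 22)
print(round(se_errors, 2))
```

With these inputs the standard error comes to roughly 0.82 percentage points.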

Reaction time

After removal of each participant’s erroneous trials (5.1% of trials), timeouts and trials with RTs greater than three standard deviations above their mean reaction time (< 1% of trials), mean reaction times for each condition were submitted to the same analysis as above (see Fig. 2). There was no main effect of ‘Appropriateness’, but a main effect of ‘Compatibility’, F(1, 21) = 25.0, MSe = 2162, p < .001, ηp² = .54, revealing faster responses when response and observed reach were compatible. However, in contrast to the error rates, the interaction between ‘Appropriateness’ and ‘Compatibility’ was non-significant, F(1, 21) < 1, though the numerical pattern was the same as in the error rates. Indeed, planned t-tests showed that, as in the error rates, the compatibility effect was significant for appropriate grasps (64 ms), t(21) = 3.95, p = 0.001, but not for inappropriate grasps (35 ms), t(21) = 1.70, p = 0.11.
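The exclusion steps can be sketched as follows. The sketch assumes that the mean and standard deviation are computed per participant over all correct, non-timeout trials pooled across conditions; the text does not state whether the cutoff was applied per condition. The trial format and function name are hypothetical.

```python
from statistics import mean, stdev

def trim_rts(trials, sd_cutoff=3.0):
    """Keep one participant's usable trials for the RT analysis.

    `trials` is a list of dicts with keys 'rt' (ms, or None for a timeout)
    and 'correct' (bool). Errors and timeouts are dropped first, then
    trials slower than mean + sd_cutoff * SD of the remaining RTs.
    """
    valid = [t for t in trials if t["correct"] and t["rt"] is not None]
    if len(valid) < 2:
        return valid  # SD is undefined for fewer than two trials
    rts = [t["rt"] for t in valid]
    upper = mean(rts) + sd_cutoff * stdev(rts)
    return [t for t in valid if t["rt"] <= upper]

# Made-up example data: 20 ordinary trials, one very slow trial,
# one error and one timeout.
example = [{"rt": 500, "correct": True} for _ in range(20)]
example.append({"rt": 5000, "correct": True})
example.append({"rt": 450, "correct": False})
example.append({"rt": None, "correct": False})
kept = trim_rts(example)
print(len(kept))
```

Note that only slow outliers are removed, matching the one-sided criterion described above.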

Replication

As the effects were observed only in the error rates and not in the RTs, it was important to establish whether they could be replicated in another experiment. Twenty-one participants saw the same stimuli and now explicitly judged the relationship between hand and goal object (e.g., move the joystick left for appropriate grasps). The pattern of data was the same, with a significant Compatibility x Appropriateness interaction in errors, F(1, 20) = 4.71, MSe = 36.4, p = 0.042, ηp² = .19, and a non-significant interaction in RTs, F(1, 20) < 1.

General discussion

The present study shows for the first time that object affordances do not only affect the production of one’s own actions, but also how the actions of others are perceived. Participants made left or right joystick movements to judge the aperture of left or right reaches that were either appropriate or inappropriate to an incidentally presented object. We found that joystick movements in the same direction as the observed reaches were executed more efficiently than movements in the opposite direction, consistent with previous research on action mirroring (Brass et al., 2000) and spatial stimulus response compatibility (e.g., Bosbach et al., 2005; Simon, 1969). Crucially, however, these reach direction compatibility effects were only observed when the grasp was appropriate to the affordances of the goal object. When the grasp was inappropriate, being either too large or too small, the compatibility effects were greatly reduced.

These data reveal that action observation not only involves a direct matching of visual to motor features, but also a sophisticated integration of the behavior of the body part with the affordances of the goal object. Affordances that did not match the observed grip weakened or disrupted the mapping of observed reach direction onto the participants’ motor system. This modulation occurred even though the object was irrelevant throughout the experiment and the reaches were directed away from it. Moreover, even though the reduction primarily affected compatibility effects in error rates rather than response times (for similar findings in SRC paradigms, see Bach & Tipper, 2007; Heyes & Ray, 2004, Exp 2), it was observed in two experiments, irrespective of whether the hand-object match was an incidentally varying aspect of the observed actions, or whether it had to be explicitly judged (see Replication).

These data challenge the view that motor activation during action observation reflects a simple mechanism that links an action’s apparent properties to action features the observer can produce, either on the motor level (Brass et al., 2000; di Pellegrino et al., 1992; Stürmer et al., 2000) or on the spatial/perceptual level (Bertenthal, Longo, & Kosobud, 2006; Catmur, Walsh, & Heyes, 2007; Prinz, 2002). Rather, they suggest that motor activation emerges from higher cognitive representations of the stimulus as a goal-directed action, which are only established when hand and goal object match. Motor knowledge is therefore used more extensively during action understanding than previously thought: it not only links action perception to motor output, but also provides information about how other people’s actions have to conform to objects in order to be successful.

A well-known finding from monkey single cell studies is that mirror neurons do not fire for mimed grasps in the absence of a goal object (Rizzolatti & Craighero, 2004; see Morin & Grèzes, 2008, for a meta-analysis of neuroimaging studies showing similar effects in humans, and Wohlschläger & Bekkering, 2002, for behavioral evidence). Our data open up the possibility that the reason for these responses is that action mirroring involves not only a simple visual-to-motor mapping process but also the matching of observed actions to the affordances of a potential goal object. Although computational accounts of the mirror system have posited such processes (e.g., Oztop & Arbib, 2002), this is the first experimental demonstration that such object-action matching may occur automatically during human action observation and determine the extent to which another’s action is mapped onto the observer’s motor system. Previous studies have established that perceived goals or intentions are an important source of information during action observation that can, depending on the task, either disrupt or enhance action mirroring (cf. Liepelt et al., 2009; Longo & Bertenthal, 2009; van Elk, van Schie, & Bekkering, 2008; Wild, Poliakoff, Jerrison, & Gowen, 2010). We propose that the extraction of object affordances plays a key role in these processes, being able to bias action observation towards those actions for which a goal in the environment can be identified. The underlying computations appear to be made relatively automatically, at least when a context of purposeful action has been established by the social or experimental situation.

According to predictive and forward models of the mirror system, the motor system ‘simulates’ or ‘emulates’ both own and others’ actions to predict their outcome (e.g., Csibra, 2007; Kilner et al., 2007; Miall, 2003; Wolpert et al., 2003). Our data indicate that object affordances play a central role in these processes. When an observed grasp is inappropriate for an object, the mismatch evokes an error signal of action failure, suppressing simulation processes that cannot be linked to a goal. The three most striking aspects of our findings are as follows. First, such action-object affordance effects take place even when the hand is not directed towards the object, reflecting the potential for successful action outcomes rather than current states. Second, these effects are automatic in that reach direction and visible object are irrelevant to the participant’s task, at least in situations in which participants expect goal-directed action. Finally, there is crosstalk between the reach and grasp aspects of prehension, such that the mapping of one aspect onto the motor system is affected by a mismatch on the other.

References

Bach, P., Peatfield, N. A., & Tipper, S. P. (2007). Focusing on body sites: The role of spatial attention in action perception. Experimental Brain Research, 178(4), 509–517.

Bach, P., & Tipper, S. P. (2007). Implicit action encoding influences personal-trait judgments. Cognition, 102(2), 151–178.

Bertenthal, B. I., Longo, M. R., & Kosobud, A. (2006). Imitative response tendencies following observation of intransitive actions. Journal of Experimental Psychology: Human Perception and Performance, 32, 210–225.

Bosbach, S., Prinz, W., & Kerzel, D. (2005). Movement-based compatibility in simple response tasks. European Journal of Cognitive Psychology, 17(5), 695–707.

Brass, M., Bekkering, H., Wohlschläger, A., & Prinz, W. (2000). Compatibility between observed and executed finger movements: Comparing symbolic, spatial, and imitative cues. Brain and Cognition, 44, 124–143.

Catmur, C., Walsh, V., & Heyes, C. (2007). Sensorimotor learning configures the human mirror system. Current Biology, 17, 1527–1531.

Chong, T. T., Cunnington, R., Williams, M. A., Kanwisher, N., & Mattingley, J. B. (2008). fMRI adaptation reveals mirror neurons in human inferior parietal cortex. Current Biology, 18(20), 1576–1580.

Chong, T. T., Williams, M. A., Cunnington, R., & Mattingley, J. B. (2008). Selective attention modulates inferior frontal gyrus activity during action observation. Neuroimage, 40(1), 298–307.

Craighero, L., Fadiga, L., Rizzolatti, G., & Umiltà, C. A. (1998). Visuomotor priming. Visual Cognition, 5, 109–125.

Csibra, G. (2007). Action mirroring and action understanding: An alternative account. In P. Haggard, Y. Rosetti, & M. Kawato (Eds.), Sensorimotor foundations of higher cognition. Attention and performance XXII (pp. 435–459). Oxford: Oxford University Press.

di Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V., & Rizzolatti, G. (1992). Understanding motor events: A neurophysiological study. Experimental Brain Research, 91, 176–180.

Edwards, M. G., Humphreys, G. W., & Castiello, U. (2003). Motor facilitation following action observation: A behavioural study in prehensile action. Brain and Cognition, 53(3), 495–502.

Flach, R., Knoblich, G., & Prinz, W. (2004). Recognizing one's own clapping: The role of temporal cues. Psychological Research, 69(1-2), 147–156.

Flanagan, J. R., & Johansson, R. S. (2003). Action plans used in action observation. Nature, 424(6950), 769–771.

Gibson, J. J. (1979). The ecological approach to visual perception. Boston: Houghton Mifflin.

Gillmeister, H., Catmur, C., Liepelt, R., Brass, M., & Heyes, C. (2008). Experience-based priming of body parts: A study of action imitation. Brain Research, 1217, 157–170.

Griffiths, D., & Tipper, S. P. (2009). Priming of reach trajectory when observing actions: Hand-centred effects. The Quarterly Journal of Experimental Psychology, 62, 2450–2470.

Heyes, C. M., & Ray, E. D. (2004). Spatial S-R compatibility effects in an intentional imitation task. Psychonomic Bulletin & Review, 11, 703–705.

Jeannerod, M., Arbib, M. A., Rizzolatti, G., & Sakata, H. (1995). Grasping objects: The cortical mechanisms of visuomotor transformation. Trends in Neuroscience, 18, 314–320.

Kilner, J. M., Friston, K. J., & Frith, C. D. (2007). Predictive coding: An account of the mirror neuron system. Cognitive Processing, 8(3), 159–166.

Kilner, J., Neal, A., Weiskopf, N., Friston, K., & Frith, C. (2009). Evidence of mirror neurons in human inferior frontal gyrus. The Journal of Neuroscience, 29(32), 10153–10159.

Liepelt, R., Ullsperger, M., Obst, K., Spengler, S., Von Cramon, D. Y., & Brass, M. (2009). Contextual movement constraints of others modulate motor preparation in the observer. Neuropsychologia, 47(1), 268–275.

Liepelt, R., von Cramon, D. Y., & Brass, M. (2008). What is matched in direct matching? Intention attribution modulates motor priming. Journal of Experimental Psychology: Human Perception and Performance, 34(3), 578–591.

Loftus, G. R., & Masson, M. E. J. (1994). Using confidence intervals in within-subject designs. Psychonomic Bulletin & Review, 1(4), 476–490.

Longo, M. R., & Bertenthal, B. I. (2009). Attention modulates the specificity of automatic imitation to human actors. Experimental Brain Research, 192, 739–744.

Miall, R. C. (2003). Connecting mirror neurons and forward models. NeuroReport, 14, 2135–2137.

Morin, O., & Grèzes, J. (2008). What is “mirror” in the premotor cortex? A review. Clinical Neurophysiology, 3, 189–195.

Oosterhoff, N. N., Wiggett, A. J., Diedrichsen, J., Tipper, S. P. & Downing, P. E. (2010). Surface-based information mapping reveals crossmodal vision-action representations in human parietal and occipitotemporal cortex. Journal of Neurophysiology. (in press).

Oztop, E., & Arbib, M. A. (2002). Schema design and implementation of the grasp-related mirror neuron system. Biological Cybernetics, 87(2), 116–140.

Prinz, W. (2002). Experimental approaches to imitation. In A. N. Meltzoff & W. Prinz (Eds.), The imitative mind (pp. 143–162). Cambridge: Cambridge University Press.

Rizzolatti, G., & Craighero, L. (2004). The mirror neuron system. Annual Reviews of Neuroscience, 27, 169–192.

Simon, J. R. (1969). Reactions towards the source of stimulation. Journal of Experimental Psychology, 81(1), 174–176.

Smeets, J., & Brenner, E. (1999). A new view on grasping. Motor Control, 3, 237–271.

Stürmer, B., Aschersleben, G., & Prinz, W. (2000). Correspondence effects with manual gestures and postures: A study of imitation. Journal of Experimental Psychology: Human Perception and Performance, 26(6), 1746–1759.

Tucker, M., & Ellis, R. (2001). The potentiation of grasp types during visual object recognition. Visual Cognition, 8, 769–800.

van Elk, M., van Schie, H. T., & Bekkering, H. (2008). Conceptual knowledge for understanding other's actions is organized primarily around action goals. Experimental Brain Research, 189(1), 99–107.

Wild, K. S., Poliakoff, E., Jerrison, A., & Gowen, E. (2010). The influence of goals on movement kinematics during imitation. Experimental Brain Research, 204(3), 353–360.

Wilson, M., & Knoblich, G. (2005). The case for motor involvement in perceiving conspecifics. Psychological Bulletin, 131, 460–473.

Wohlschläger, A., & Bekkering, H. (2002). Is human imitation based on a mirror-neuron system? Some behavioural evidence. Experimental Brain Research, 143(3), 335–341.

Wolpert, D. M., Doya, K., & Kawato, M. (2003). A unifying computational framework for motor control and social interaction. Philosophical Transactions of The Royal Society: Biological Sciences, 358, 593–602.

Acknowledgements

This work was supported by a Wellcome Programme grant to Steven P. Tipper and by a Leverhulme Early Career Fellowship awarded to Andrew P. Bayliss. The authors thank Fraser Bailey and Kati Williams for assistance with data collection, Wendy Adams for assistance with stimulus production, and David McKiernan for constructing the joystick.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Bach, P., Bayliss, A.P. & Tipper, S.P. The predictive mirror: interactions of mirror and affordance processes during action observation. Psychon Bull Rev 18, 171–176 (2011). https://doi.org/10.3758/s13423-010-0029-x

DOI: https://doi.org/10.3758/s13423-010-0029-x