Abstract

The aim of this work was to test the hypothesis that motor fluency facilitates the integration of the components of a memory trace and, therefore, its re-construction. In the encoding phase of each of our three experiments, a word to be remembered appeared in blue or purple. Participants had to read the word aloud while executing a gesture in their ipsilateral space (fluent gesture) or contralateral space (non-fluent gesture), depending on the color of the word. The aim of the first experiment was to show that words associated with a fluent gesture during encoding were more easily recognized than those associated with a non-fluent gesture. The results supported this hypothesis. In the second experiment, our objective was to show that the fluency of a gesture performed during encoding to associate a word with a color can facilitate the integration of the word with its color. Here again, the results supported the hypothesis. Whereas Experiment 2 tested the effect of motor fluency during encoding on word-color integration, Experiment 3 aimed to show that motor fluency was itself integrated into the word-color trace and contributed to its re-construction. The results again supported the hypothesis. Taken together, these findings suggest that traces are not only traces of the processes that gave rise to them, but also traces of the way in which these processes took place.


Introduction

For several decades, memory was considered a set of contents stored in specific registers, and therefore addressable and retrievable in their original form. This conception has since been challenged by approaches that attribute a dynamic character to memory. Thus, according to the first model, our memories are copies of past experiences, whereas according to the second model, they are reconstructions of past experiences. Our work falls within the second model.

But if memory is a re-construction, by what mechanism does a specific episode (episodic memory) emerge in the here and now, and what factors facilitate this re-construction? The purpose of this work is to contribute to our understanding of this issue by showing that re-construction is more efficient when fluency during encoding facilitates the integration of the components involved in the experience, and when this fluency is also present during retrieval. In particular, we try to show that motor fluency plays an essential role in this integration and re-construction. In other words, we test the hypothesis that traces are not only traces of the processes that gave rise to them but also traces of the way in which these processes took place (i.e., their fluency).

An alternative to structural models of memory (e.g., the SPI model; Tulving, 1995) emerged in the 1980s in the form of multiple-trace models, in which a single memory system stores traces of all the sensorimotor components of our experiences of interacting with the environment (i.e., episodic traces; see, e.g., Rey, Riou, Cherdieu, & Versace, 2014b; Rey, Riou, Vallet, & Versace, 2017; Rey, Riou, & Versace, 2014a; Rey, Riou, Muller, Dabic, & Versace, 2015a; Rey, Vallet, Riou, Lesourd, & Versace, 2015b; Riou, Rey, Vallet, Cuny, & Versace, 2015). Memory traces are assumed to be stored as multisensory components distributed over the entire brain, and knowledge emerges from the association between the retrieval situation and the set of re-activated episodic traces (e.g., MINERVA – Hintzman, 1984, 1986, 1988; VISA – Whittlesea, 1989; Act-In – Versace, Vallet, Riou, Lesourd, Labeye, & Brunel, 2014; ATHENA – Briglia, Servajean, Michalland, Brunel, & Brouillet, 2018). Indeed, in multiple-trace memory models, what a trace "contains" refers more to the experience that gave rise to it than to any content actually stored in it. In other words, a trace should be regarded less as concrete content that is stored, addressable, and recoverable as it is than as something likely to be reconstructed, or to emerge, when the situation is conducive to it (De Brigard, 2014; Versace, Brouillet, & Vallet, 2018; Versace et al., 2014).
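As an illustration of what "re-activation" means in a multiple-trace model, the Python sketch below implements the retrieval rule of MINERVA 2 (Hintzman, 1984, 1986, 1988), one of the models cited above; it is not the Act-In model on which the present work relies. Following MINERVA 2, each trace is a vector of features coded -1, 0 or +1, a probe activates every trace in proportion to the cube of its similarity to the probe, the summed activation (echo intensity) acts as a familiarity signal, and the activation-weighted sum of traces (echo content) is the reconstructed pattern. The feature vectors are hypothetical: the first four features stand for a word and the last two for its color.

```python
from typing import List, Sequence

Vector = Sequence[int]  # each feature is -1, 0 (absent/unknown) or +1


def similarity(probe: Vector, trace: Vector) -> float:
    """MINERVA 2 similarity: dot product divided by the number of features
    that are nonzero in the probe or in the trace."""
    relevant = sum(1 for p, t in zip(probe, trace) if p != 0 or t != 0)
    if relevant == 0:
        return 0.0
    return sum(p * t for p, t in zip(probe, trace)) / relevant


def activation(probe: Vector, trace: Vector) -> float:
    """Cubing keeps the sign and lets close matches dominate the echo."""
    return similarity(probe, trace) ** 3


def echo_intensity(probe: Vector, memory: List[Vector]) -> float:
    """Summed activation of all traces: a familiarity signal."""
    return sum(activation(probe, trace) for trace in memory)


def echo_content(probe: Vector, memory: List[Vector]) -> List[float]:
    """Activation-weighted sum of all traces: the re-constructed pattern."""
    content = [0.0] * len(probe)
    for trace in memory:
        a = activation(probe, trace)
        for j, feature in enumerate(trace):
            content[j] += a * feature
    return content


if __name__ == "__main__":
    # Hypothetical traces: features 0-3 code a word, features 4-5 code its color.
    memory = [
        [1, -1, 1, 1, 1, -1],
        [-1, 1, 1, -1, -1, 1],
    ]
    old_probe = [1, -1, 1, 1, 0, 0]  # a studied word, color left unspecified
    new_probe = [1, 1, -1, -1, 0, 0]  # an unstudied word
    print(echo_intensity(old_probe, memory), echo_intensity(new_probe, memory))
    print(echo_content(old_probe, memory))  # color features of trace 0 re-emerge
```

With these vectors, the studied word yields a higher echo intensity than the unstudied one, and the echo content restores the color features of the matching trace even though the probe left them unspecified: the trace is re-constructed rather than read out.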

The likelihood that a trace can be specifically re-constructed depends on multiple factors involved in what is commonly called encoding. Since the 1970s, several factors that increase the efficiency of encoding have been identified: the encoding specificity principle (Tulving & Thompson, 1972), depth of processing (Craik & Lockhart, 1972), elaborative encoding (Craik & Tulving, 1975), dual coding (Paivio, 1991), and the enactment effect (for a review, see Zimmer, Cohen, Guynn, Engelkamp, Kormi-Nouri, & Foley, 2001). However, memory efficiency also depends on another factor that seems to play a key role, namely the level of integration of the components of the trace, i.e., the strength of the links between them (for a discussion, see, e.g., Versace, Labeye, Badard, & Rose, 2009; Versace et al., 2014). The more integrated the features are, the more easily the trace as a whole can be reconstructed and distinguished from other traces, allowing the emergence of a multimodal, unitary experience that is highly distinct from other experiences (Purkart, Vallet, & Versace, 2019). Moreover, classic neuroimaging and event-related potential (ERP) studies have shown that when different pieces of information are unitized at encoding (i.e., represented as a single event rather than as separate events), familiarity is enhanced and, consequently, so is memory (Bader, Opitz, Reith, & Mecklinger, 2014; Diana, Yonelinas, & Ranganath, 2008; Haskins, Yonelinas, Quamme, & Ranganath, 2008; Rhodes & Donaldson, 2007).
In multiple-trace memory models, and especially in the Act-In model, all the factors mentioned above are assumed to facilitate the re-construction of the trace by increasing the number and quality of its components and by facilitating their integration (see, e.g., Macri, Claus, Pavard, & Versace, 2020; Macri, Pavard, & Versace, 2018; Mather, 2007; Versace & Rose, 2007). From an embodied and situated perspective on cognition, what we call “re-construction” is related to the concept of “simulation” (Barsalou, 1999; see Footnote 1). In other words, our memories emerge from sensorimotor simulations of past experiences in the present situation (Versace, Brouillet, & Vallet, 2018).

Although the importance of performing an action for remembering actions is well documented (for a review, see Engelkamp, 1998; Zimmer et al., 2001), the role of action in memory is still debated, for both working memory and long-term memory. Some authors have failed to find evidence that motor programs contribute to memory (Canits et al., 2018; Pecher, 2013; Pecher et al., 2013; Quak et al., 2014; Zeelenberg & Pecher, 2016). Others, on the contrary, have highlighted the importance of action in the construction of memory traces (Brouillet, Michalland, Guerineau, Thébault, & Moruth, 2018; Camus, Hommel, Brunel, & Brouillet, 2018; Dutriaux, Dahiez, & Gyselinck, 2019; Gimenez & Brouillet, 2020; Kormi-Nouri, 1995; Kormi-Nouri & Nilsson, 1998, 2001; Labeye, Oker, Badard, & Versace, 2008; Mecklinger, Gruenewald, Weiskopf, & Doeller, 2004; Shebani & Pulvermüller, 2013; van Dam, Rueschemeyer, Bekkering, & Lindemann, 2013). Finally, it seems that although motor simulation plays a role in memory, it is neither necessary nor automatic, and whether it contributes may depend on the task (Montero-Melis, Van Paridon, Ostarek, & Bylund, 2022).

In this paper we focus on a process that seems to play a core role at the encoding stage, called “encoding fluency” (e.g., Koriat & Ma’ayan, 2005): “Encoding fluency refers to the ease with which to-be-remembered items are mastered during study” (Koriat & Ma’ayan, 2005, p. 479). Several studies have shown that the faster an item is encoded, the more likely it is to be retrieved later (Hertzog, Dunlosky, Robinson, & Kidder, 2003; Matvey, Dunlosky, & Guttentag, 2001). Moreover, a substantial body of work now attests, at the neural level, to the importance of fluency in memorization (Li, Taylor, Wang, Gao, & Guo, 2017; Nessler, Mecklinger, & Penney, 2005; Rosburg, Mecklinger, & Frings, 2011; Wang, Li, Hou, & Rugg, 2020). More precisely, the interaction between the perirhinal cortex (PrC) and the lateral prefrontal cortex (PFC) seems to play an important role when fluency induces a feeling of familiarity (Mecklinger & Bader, 2020). Together, these data lead us to hypothesize that a fluent gesture at encoding should facilitate the subsequent reconstruction of the episode, all the more so when this reconstruction involves the integration of the components of the trace, as predicted by multiple-trace memory models.

To investigate this, we used a well-documented paradigm known as “laterality and hand dominance”: an individual’s most fluent actions are those executed with the dominant hand on the dominant side. For example, for a right-hander, a movement of the right hand on the right side (i.e., an ipsilateral movement) is not only faster than a movement of the right hand on the left side (i.e., a contralateral movement; Fisk & Goodale, 1985) but also enhances memory (Brouillet, Milhau, Brouillet, & Servajean, 2017; Brouillet, Michalland, Martin, & Brouillet, 2021; Chen & Li, 2021; Susser, 2014; Susser & Mulligan, 2015; Susser, Panitz, Buchin, & Mulligan, 2017; Yang, Gallo, & Beilock, 2009). However, Hayes et al. (2008) showed that the fluency effect associated with ipsilateral gestures disappeared when an obstacle was interposed between the starting point of the action and the target to be reached.

The aim of the first experiment was to show that a word associated with a fluent gesture during an encoding phase is more easily recognized than a word associated with a non-fluent gesture. During the encoding phase, participants were presented with 32 words colored in blue or purple. They had to read each word aloud, take a token from a centrally located transparent plastic box with their right hand, and put it in one of two colored boxes (one blue, one purple) according to the color of the word. The colored boxes were placed to the right and to the left of the transparent box, so participants executed either an ipsilateral or a contralateral gesture; depending on the group, this gesture was or was not impeded by an obstacle (a bottle). During the test phase, participants saw 64 words (32 old and 32 new) printed in a dark color on a sheet of paper and, for each one, were asked whether it had been presented during the learning phase. Motor fluency during encoding was therefore manipulated by means of two factors: the gesture (ipsilateral or contralateral) and the presence or absence of an obstacle in the path of the gesture. As the two factors should affect the fluency of the gesture independently of each other, we predicted that they would have additive effects on recognition.
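The additivity prediction can be made concrete. In a 2 × 2 design crossing gesture (ipsilateral vs. contralateral) with obstacle (absent vs. present), additive effects mean that the obstacle lowers recognition by the same amount whatever the gesture, so the interaction contrast, a difference of differences, is zero; in an ANOVA this corresponds to a null gesture × obstacle interaction. The Python sketch below computes that contrast from cell means. The cell means and function names are invented for illustration and are not data from Experiment 1.

```python
from typing import Dict, Tuple

Cell = Tuple[str, str]  # (gesture, obstacle condition)


def obstacle_cost(means: Dict[Cell, float], gesture: str) -> float:
    """Drop in recognition caused by the obstacle for one type of gesture."""
    return means[(gesture, "no_obstacle")] - means[(gesture, "obstacle")]


def interaction_contrast(means: Dict[Cell, float]) -> float:
    """Difference of differences in a 2 x 2 design.

    0 means the obstacle costs the same for ipsilateral and contralateral
    gestures, i.e., the two factors combine additively.
    """
    return obstacle_cost(means, "ipsi") - obstacle_cost(means, "contra")


# Hypothetical cell means (OLD words recognized out of 16), built to be additive:
# ipsilateral gestures add 2 words and the obstacle removes 1.5 words.
additive = {
    ("ipsi", "no_obstacle"): 12.0,
    ("ipsi", "obstacle"): 10.5,
    ("contra", "no_obstacle"): 10.0,
    ("contra", "obstacle"): 8.5,
}
print(interaction_contrast(additive))  # 0.0
```

Any non-zero contrast would indicate that one factor modulates the other, which is what an interaction term in the ANOVA tests.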

In the second and third experiments, the encoding phase was the same as in Experiment 1, except that only the ipsilateral or contralateral nature of the gesture was manipulated (there was no obstacle). In Experiment 2, our objective was to show that the fluency of the gesture performed during encoding to associate a word with a color improves later recognition by facilitating word-color integration. To this end, during the test phase half of the words (old and new) were colored in blue and the other half in purple, so that half of the old words were presented in their encoding color and the other half in the other color. Finally, the objective of Experiment 3 was to show that motor fluency was part of the word-color trace. In the recognition phase, participants responded yes (old word) or no (new word) by pressing one of two colored keys; depending on its position, each key required an ipsilateral or a contralateral gesture, and its color corresponded either to the encoding color of the word or to the other color used in the encoding phase.

Experiment 1

The aim of the first experiment was to show that a word associated with a fluent gesture during an encoding phase is more easily recognized than a word associated with a non-fluent gesture.

Method

Participants

We performed a power analysis with G*Power software (Faul, Erdfelder, Lang, & Buchner, 2007) to determine the total sample size (two groups and four measures). For an effect size of 0.25 (see Footnote 2), an alpha level of 0.05, and a power of 0.95, G*Power indicated 36 participants. Thus, 36 participants took part in this experiment and were randomly assigned to one of two groups (with or without an obstacle; see Procedure). They were not informed of the purpose of the experiment. Participants’ ages ranged from 18 to 30 years (obstacle group: M = 24.5 years, SD = 1.58; no-obstacle group: M = 25.3 years, SD = 2.02). The numbers of women and men were similar in the two groups (obstacle group: 14 women and four men; no-obstacle group: 12 women and six men). All participants were right-handed native French speakers with normal or corrected-to-normal vision. They gave their informed consent to take part in this experiment and signed the Laboratory’s Charter of Ethics.
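The text does not state which G*Power procedure was used. The description "two groups and four measures" (and "four groups and two measures" in Experiment 3) is consistent with the "ANOVA: repeated measures, within-between interaction" F test, which the Python sketch below assumes, together with G*Power's default correlation of 0.5 among repeated measures and no nonsphericity correction (ε = 1). Under our reading of that procedure, the noncentrality parameter is λ = f² · N · m / (1 − ρ), with df1 = (k − 1)(m − 1) and df2 = (N − k)(m − 1), where k is the number of groups and m the number of measures; power is the probability that a noncentral F variable exceeds the central-F critical value, and the sketch searches for the smallest total N reaching the target power. To stay dependency-free it evaluates F probabilities through a regularized incomplete beta function (Numerical Recipes-style continued fraction). It approximates G*Power's computation rather than reproducing it, so its output may differ slightly from the sample sizes reported in the text.

```python
import math


def _betacf(a: float, b: float, x: float) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny, eps = 1e-300, 3e-14
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, 500):
        m2 = 2 * m
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:  # last step changed h by a factor ~1
            break
    return h


def reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def ncf_cdf(x: float, df1: float, df2: float, nc: float) -> float:
    """CDF of the (noncentral) F distribution as a Poisson mixture of betas."""
    if x <= 0.0:
        return 0.0
    y = df1 * x / (df1 * x + df2)
    if nc == 0.0:
        return reg_inc_beta(df1 / 2.0, df2 / 2.0, y)
    half = nc / 2.0
    total = 0.0
    for j in range(10_000):
        weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        total += weight * reg_inc_beta(df1 / 2.0 + j, df2 / 2.0, y)
        if j > half and weight < 1e-15:
            break
    return total


def f_critical(alpha: float, df1: float, df2: float) -> float:
    """Central F critical value, found by bisection on the CDF."""
    lo, hi = 0.0, 1000.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if ncf_cdf(mid, df1, df2, 0.0) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def power_within_between(n: int, f: float, k: int, m: int,
                         rho: float = 0.5, alpha: float = 0.05) -> float:
    """Power of the within-between interaction test (assumed parameterization)."""
    nc = f ** 2 * n * m / (1.0 - rho)
    df1 = (k - 1) * (m - 1)
    df2 = (n - k) * (m - 1)
    return 1.0 - ncf_cdf(f_critical(alpha, df1, df2), df1, df2, nc)


def min_total_n(f: float, k: int, m: int, target: float = 0.95,
                rho: float = 0.5, alpha: float = 0.05) -> int:
    """Smallest total N whose power reaches the target."""
    n = k + 1
    while power_within_between(n, f, k, m, rho, alpha) < target:
        n += 1
    return n


if __name__ == "__main__":
    print(min_total_n(0.25, k=2, m=4))  # Experiment 1: two groups, four measures
    print(min_total_n(0.25, k=4, m=2))  # Experiment 3: four groups, two measures
```

The printed values can be compared with the 36 and 76 participants reported in the text; a difference of a participant or two would point to different G*Power settings rather than to an error in either computation.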

Material

The material consisted of: (a) a computer screen (22-in., 75 Hz, 44.7 × 33.5 cm); (b) 75 white tokens, 2 cm in diameter; (c) three plastic boxes (20.5 × 9.7 × 3.6 cm): one transparent, one blue, and one purple (see Footnote 3); (d) 64 words of neutral valence taken from Bonin’s (2003) norms (mean concreteness: 4.554; mean imageability: 4.097; mean subjective frequency: 3.106; mean emotional valence: 3.135).

The 64 words were divided into two sets of 32 words according to their nature in the recognition phase: NEW versus OLD (see Procedure). The two sets were matched on concreteness, imageability, subjective frequency, and emotional valence. The order of presentation during the learning phase was counterbalanced across participants.

Procedure

Participants were received individually in a quiet room and seated in front of a table on which the following were placed: (a) a computer screen (22-in., 44.7 × 33.5 cm) facing the participant, 60 cm from their eyes; (b) the three boxes, laid lengthwise. The transparent box containing the white tokens was placed 10 cm from the edge of the table, with its midpoint aligned with the midpoint of the computer screen. The blue box was located to the right of the transparent box and the purple box to its left, the midpoint of each colored box being 40 cm from the midpoint of the transparent box (the positions of the two colored boxes were counterbalanced across participants).

Once the participants were settled and comfortable, the experimenter told them to use only their right hand and asked them to keep their left hand on their left thigh. He then started the experiment, and the participants read the instructions on the screen. They were told that the experiment would consist of two phases: a learning phase and a recognition phase. In the learning phase, a fixation cross (displayed for 250 ms) appeared, followed by a word (font: Calibri, 72 pt) colored in blue (16 words) or purple (16 words). The words colored in blue for half of the participants were colored in purple for the other half. Participants had to read each word aloud and simultaneously take a token from the transparent plastic box with their right hand and put it in the box of the same color as the word. They thus executed 16 gestures in their ipsilateral space and 16 gestures in their contralateral space. They had only 3 s to put the token in the box, after which the word disappeared and a new fixation cross appeared, followed by a new word. For half of the participants (obstacle group), bottles were placed between the transparent box and each colored box, midway between the two (20 cm from each), to create an obstacle; these participants therefore had to reach around the obstacle to put the token in the box. Three minutes after the last word had been presented, a message appeared on the screen indicating that the learning phase was over. The experimenter removed the boxes and the bottles from the table and gave the participants a sheet of paper on which 64 words were printed in a dark color (Times New Roman, 12 pt): the 32 words of the learning phase (OLD) and 32 other words (NEW). The order of the words was counterbalanced across four lists generated using the Excel RAND function, meaning that only four participants saw the same list in the same order during the recognition phase.

Participants were asked to circle "Yes" if they thought the word had been presented in the learning phase and "No" if they thought the word had not been presented in the learning phase. No time limit was imposed on the participants’ responses.

Experimental design

Two within-subject factors were manipulated: (a) the nature of the words in the recognition phase (OLD vs. NEW), and (b) the gestural space in the learning phase (Ipsilateral vs. Contralateral) and, consequently, the color of the words to be learned. For half of the participants, 16 of the 32 words to be learned were colored blue (i.e., followed by an ipsilateral gesture) and 16 were colored purple (i.e., followed by a contralateral gesture); these colors were reversed for the other half. Note that this factor does not apply to the NEW words. One between-subject factor was manipulated: the presence of the obstacle during the learning phase (with vs. without). Participants had to judge 64 words printed in a dark color, of which 32 were OLD and 32 were NEW.

Results

Statistical analyses were carried out using JASP software (Wagenmakers et al., 2018a, 2018b). We performed an ANOVA followed by a Bayesian ANOVA. The Bayes factor (BF10) is the ratio of p(D∣H1), the probability of observing the data under the alternative hypothesis, to p(D∣H0), the probability of observing the data under the null hypothesis. The Bayes factor therefore indicates how much more likely the data are under the alternative hypothesis than under the null hypothesis (Rouder, Speckman, Sun, Morey, & Iverson, 2009). Following the recommendations of Schönbrodt and Wagenmakers (2018), a BF10 ≥ 10 is interpreted as strong evidence for the alternative hypothesis, 3 ≤ BF10 < 10 as moderate evidence for the alternative hypothesis, and 1 < BF10 < 3 as anecdotal evidence for the alternative hypothesis, with a BF10 close to 1 considered as no evidence; values below 1 favor the null hypothesis.
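The interpretation scheme above can be written as a small lookup function, which may help when reading the Bayes factors reported in the Results. In this Python sketch the function name is hypothetical; the cut-offs for BF10 ≥ 1 are those given in the text, while the treatment of BF10 < 1, applying the same cut-offs to BF01 = 1/BF10 as evidence for the null hypothesis, is our assumption.

```python
def interpret_bf10(bf10: float) -> str:
    """Verbal label for BF10 = p(D | H1) / p(D | H0).

    For BF10 >= 1 the cut-offs are those stated in the text: >= 10 strong,
    3-10 moderate, 1-3 anecdotal (values close to 1 amount to no evidence).
    For BF10 < 1 we ASSUME the same cut-offs applied to BF01 = 1 / BF10,
    read as evidence for the null hypothesis.
    """
    if bf10 <= 0:
        raise ValueError("a Bayes factor must be positive")
    favored, bf = ("H1", bf10) if bf10 >= 1 else ("H0", 1.0 / bf10)
    if bf >= 10:
        strength = "strong"
    elif bf >= 3:
        strength = "moderate"
    else:
        strength = "anecdotal"
    return f"{strength} evidence for {favored}"


# Bayes factors reported for Experiment 1 (OLD words).
for label, bf in [("gestural space", 733.70), ("obstacle", 14.07), ("interaction", 0.12)]:
    print(label, bf, interpret_bf10(bf))
```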

Since the obstacle and gestural space variables were not meaningful for the NEW words, we only present the results for the OLD words (Table 1). We nevertheless checked that OLD words were, on average, recognized better than NEW words, mean (SD): 10.90 (2.06) versus 0.84 (0.83), t(35) = 26.20, p < 0.001, η²p = 0.95, BF10 = 2.24 × 10²¹, and that the two groups (with or without obstacle) did not differ on average regarding the NEW words: 0.88 (0.90) versus 0.77 (0.80), t(34) = 0.39, p = 0.70, η²p = 0.004, BF10 = 0.34.

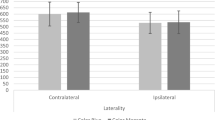

The analysis revealed a significant main effect of gestural space, F(1,34) = 21.60, p < 0.001, η²p = 0.38, BF10 = 733.70. OLD words that had been associated with an ipsilateral gesture during the learning phase were recognized better than OLD words associated with a contralateral gesture during the learning phase. A main effect of obstacle was also observed, F(1,34) = 12.09, p = 0.001, η²p = 0.26, BF10 = 14.07. The group with obstacle recognized fewer OLD words than the group without obstacle. The interaction between gestural space and obstacle was not significant, F(1,34) = 0.004, p = 0.95, η²p = 0.0001, BF10 = 0.12.
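Because every effect reported for Experiment 1 has one degree of freedom in the numerator, partial eta squared can be recovered directly from the test statistic, which offers a quick consistency check. The Python sketch below uses the standard identity η²p = F · df_effect / (F · df_effect + df_error), with F = t² for a t test; the function names are ours. JASP computes η²p from sums of squares before rounding, so recomputing it from the rounded F or t values can differ in the second decimal.

```python
def partial_eta_squared_from_f(f_value: float, df_effect: int, df_error: int) -> float:
    """eta_p^2 = F * df_effect / (F * df_effect + df_error)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def partial_eta_squared_from_t(t_value: float, df: int) -> float:
    """A t test with df degrees of freedom corresponds to F(1, df) with F = t**2."""
    return partial_eta_squared_from_f(t_value ** 2, 1, df)


# Values reported for Experiment 1.
print(round(partial_eta_squared_from_f(12.09, 1, 34), 2))  # obstacle main effect: 0.26
print(round(partial_eta_squared_from_t(26.20, 35), 2))     # OLD vs. NEW words: 0.95
```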

Experiment 1: Discussion

As expected, the results show, on the one hand, that the words associated with an ipsilateral gesture during the encoding phase were recognized more easily than those associated with a contralateral gesture; on the other hand, word recognition was lower when the gesture was hindered by the obstacle. Experiment 1 therefore confirms that the motor fluency of the encoding process facilitates the subsequent reconstruction of the episode.

But does motor fluency during encoding make subsequent recognition easier by facilitating the integration of the word with its color? Experiment 2 addressed this question. The encoding phase was the same as in Experiment 1, except that only the ipsilateral or contralateral nature of the gesture was manipulated; in the test phase, half of the old words were presented in their encoding color and the other half in the other color. Our predictions were as follows. Old words presented in the same color as in the learning phase should be recognized better if the color and the word were strongly integrated, that is, if the gesture associating the word with its color during encoding was fluent (ipsilateral). By contrast, old words presented in a color different from their learning color should be less well recognized if this new color was strongly integrated with other words during encoding, that is, when the word’s own encoding color had been associated with a non-fluent (contralateral) gesture, so that the new color was the one associated with the fluent (ipsilateral) gesture. For new words, there should be more false recognitions for words presented in the color that was strongly integrated with words during encoding, that is, the color associated with the ipsilateral gesture.

Experiment 2

The aim of this experiment was to show that the fluency of the gesture that associates a word with a color during encoding improves later recognition by facilitating the integration of the word with its color.

Method

Participants

We conducted a power analysis with G*Power software (Faul, Erdfelder, Lang, & Buchner, 2007) to determine the total sample size (three crossed factors). For an effect size of 0.25, an alpha level of 0.05, and a power of 0.95, G*Power indicated 28 participants. Thirty-four participants took part in this experiment. They were not informed about the purpose of the experiment. Participants’ ages ranged from 18 to 28 years (M = 22.1 years, SD = 3.67; 24 women and 10 men). All participants were right-handed native French speakers with normal or corrected-to-normal vision. They gave their informed consent to take part in this experiment and signed the Laboratory’s Charter of Ethics.

Material

For the learning phase, the material was the same as that used in Experiment 1 (i.e., 16 words colored in blue and 16 words colored in purple). However, we did not use the two bottles from Experiment 1. For the recognition phase, the material was the same as that used in Experiment 1, except that the words were not presented in a dark color but instead in a color used in the learning phase (blue vs. purple). Consequently, 16 NEW words and 16 OLD words were printed in blue and the other 16 NEW and OLD words were printed in purple. The color of the NEW/OLD words was counterbalanced between two lists of words. Finally, the order of presentation of the words in each list was counterbalanced across four lists, which were generated using the Excel RAND function.

Procedure

For the learning phase, the procedure was the same as that used in Experiment 1, except that in this new experiment participants did not have to reach around an obstacle to put the tokens into the appropriate boxes. They only had to put tokens from the transparent box in the blue or purple box depending on the color of the word presented on the computer screen.

For the recognition phase, half of the 16 OLD words that had been presented in blue during the learning phase were presented in blue (eight words) and the other half in purple (eight words). Similarly, half of the 16 OLD words that had been presented in purple during learning were presented in purple (eight words) and the other half in blue (eight words). Thus, 16 OLD words were in the same color as in the learning phase (matching) and 16 OLD words were in a different color (mismatching). Of the 32 NEW words, half were presented in blue (16 words) and the other half in purple (16 words). The words colored in blue for half of the participants were colored in purple for the other half.
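The assignment of colors at test described above can be sketched as a short procedure. The Python code below is a hypothetical illustration, not the authors’ materials: the function and variable names, the placeholder words, and the use of Python’s `random` module with a fixed seed (in place of the Excel RAND function used for the lists) are our assumptions, and the reversal of colors across participants is not implemented.

```python
import random
from typing import Dict, List, Tuple

# (word, status, study color, test color); NEW words have no study color.
Item = Tuple[str, str, str, str]
OTHER = {"blue": "purple", "purple": "blue"}


def build_recognition_list(studied: Dict[str, List[str]], new_words: List[str],
                           seed: int = 0) -> List[Item]:
    """Hypothetical sketch of the Experiment 2 recognition list.

    `studied` maps each study color ("blue", "purple") to its OLD words.
    Half of each set keeps its study color (matching) and half takes the
    other color (mismatching); NEW words are split evenly between colors.
    """
    rng = random.Random(seed)  # fixed seed stands in for the Excel RAND lists
    items: List[Item] = []
    for color, words in studied.items():
        order = rng.sample(words, len(words))
        half = len(order) // 2
        items += [(w, "OLD", color, color) for w in order[:half]]
        items += [(w, "OLD", color, OTHER[color]) for w in order[half:]]
    order = rng.sample(new_words, len(new_words))
    half = len(order) // 2
    items += [(w, "NEW", "none", "blue") for w in order[:half]]
    items += [(w, "NEW", "none", "purple") for w in order[half:]]
    rng.shuffle(items)  # presentation order on the sheet
    return items


if __name__ == "__main__":
    studied = {"blue": [f"blue_word_{i}" for i in range(16)],
               "purple": [f"purple_word_{i}" for i in range(16)]}
    new_words = [f"new_word_{i}" for i in range(32)]
    for item in build_recognition_list(studied, new_words, seed=1)[:5]:
        print(item)
```

With 16 OLD words per study color and 32 NEW words, the resulting list has 32 items printed in each color, as in the design.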

Experimental design

The within-subject factors were: (a) the gestural space during the learning phase (Ipsilateral vs. Contralateral) and, consequently, the color of the words to be learned (the color associated with an ipsilateral gesture vs. the color associated with a contralateral gesture); (b) the nature of the words during the recognition phase (OLD vs. NEW); and (c) the color of the words during the recognition phase (blue or purple). As the color of the words to be recognized did not have the same status for OLD and NEW words, two separate analyses were performed, one for the OLD words and one for the NEW words. Indeed, only with OLD words can we test the effect of color matching/mismatching and, consequently, the integration of the color with the word (Table 2). The analysis of the NEW words should allow us to confirm the effect of the motor fluency associated with the color.

Results

As in Experiment 1, statistical analyses were carried out using JASP software (Wagenmakers et al., 2018a, 2018b). Although our hypotheses differed for OLD and NEW words, we checked that OLD words were recognized better than NEW words, which was indeed the case: F(1,31) = 482.88, p < 0.001, η²p = 0.94, BF10 = 1.62 × 10³⁰.

Results for the OLD words

The analysis revealed a main effect of color matching, F(1,33) = 80.17, p < 0.001, η²p = 0.70, BF10 = 3.51 × 10¹⁴: words were recognized better when their colors in the learning and recognition phases matched than when they mismatched. No main effect of the color-associated gesture was observed, F(1,33) = 1.37, p = 0.25, η²p = 0.04, BF10 = 0.26. The interaction between color matching and color-associated gesture was significant, F(1,33) = 26.84, p < 0.001, η²p = 0.44, BF10 = 5.84 × 10¹⁷. Post hoc comparisons showed that when the color was associated with an ipsilateral gesture, words were recognized better when the colors in the learning and recognition phases matched than when they mismatched, t(33) = 10.24, p < 0.001, η²p = 0.76, BF10 = 8.17 × 10⁸. The same was true when the color was associated with a contralateral gesture: matches yielded better recognition than mismatches, t(33) = 3.84, p < 0.001, η²p = 0.30, BF10 = 61.78. Moreover, when the colors in the two phases matched, words were recognized better when the color was associated with an ipsilateral rather than a contralateral gesture, t(33) = 3.07, p < 0.005, η²p = 0.22, BF10 = 9.11. However, when the colors in the two phases mismatched, words were recognized better when the color was associated with a contralateral rather than an ipsilateral gesture, t(33) = 3.69, p < 0.001, η²p = 0.29, BF10 = 39.26.

Results for the NEW words

Statistical analysis confirmed that NEW words presented in a color associated with an ipsilateral gesture led participants to produce more false recognitions than NEW words presented in a color associated with a contralateral gesture: 5.50 (2.36) versus 1.31 (1.40), t(31) = 9.74, p < 0.001, η²p = 0.75, BF10 = 1.68 × 10⁸.

Experiment 2: Discussion

The results fully confirm our predictions. When OLD words appeared in the recognition phase in the same color as in the learning phase, they were recognized better when the gesture associating the word with its color during encoding was fluent (ipsilateral) than when it was not (contralateral). Motor fluency during encoding thus facilitated the re-integration (re-association) of the word with its color during recognition. By contrast, OLD words presented in a color different from that of the learning phase were recognized better when the new color had been weakly integrated with other words during encoding (i.e., when the gesture associated with this color was contralateral). As for NEW words, we observed more false recognitions when they were presented in a color that had been strongly integrated with words in the encoding phase (i.e., the color associated with the fluent, ipsilateral gesture).

All these results support our prediction that motor fluency during encoding strengthens word-color integration. However, the question of whether motor fluency is itself part of the trace remains open. Experiment 3 addressed this issue. The encoding phase was the same as in Experiment 2. In the recognition phase, however, participants had to associate a color with the response to be given (e.g., blue for “Yes, the word was presented in the encoding phase” and purple for “No, the word was not presented in the encoding phase”). Each colored key was on the left or right of the keyboard, so that responding required either an ipsilateral or a contralateral gesture. Thus, for old words, the color of the key requiring an ipsilateral (fluent) gesture was either their encoding color (when the gesture executed to put the token in the box had been fluent) or the color of other words in the encoding phase (when the gesture executed to put the token in the box had not been fluent, i.e., contralateral). Our predictions for old words were as follows: when the color of the response key required the same gesture as the one strongly integrated with the color and the word in the encoding phase (e.g., an ipsilateral gesture), words should be better recognized than when the color of the response key required a gesture strongly integrated with the color of other words in the encoding phase (a contralateral gesture). Furthermore, we expected words to be recognized better when the response key required an ipsilateral gesture than when it required a contralateral gesture. For new words, we predicted more false recognitions when the color of the response key required an ipsilateral rather than a contralateral gesture.

Experiment 3

The aim of this experiment was to show that motor fluency is part of the word-color trace.

Method

Participants

We performed a power analysis with G*Power software (Faul, Erdfelder, Lang, & Buchner, 2007) to determine the total sample size (four groups and two measures). For an effect size of 0.25, an alpha level of 0.05, and a power of 0.95, G*Power indicated 76 participants. Thus, 76 participants took part in this experiment and were randomly assigned to four groups (19 participants per group). They were not informed about the purpose of the experiment. Participants’ ages ranged from 19 to 25 years (group 1: M = 22.1 years, SD = 1.78, 13 women and five men; group 2: M = 23.4 years, SD = 2.12, 12 women and six men; group 3: M = 22.6 years, SD = 1.97, 14 women and four men; group 4: M = 23.3 years, SD = 2.08, 13 women and five men). All participants were right-handed native French speakers with normal or corrected-to-normal vision. They gave their informed consent to take part in this experiment and signed the Laboratory’s Charter of Ethics.

Material

For the learning phase, the material was the same as in Experiments 1 and 2. For the recognition phase, the material was the same as in Experiment 1, and the words were presented in a dark color (Times New Roman, 18 pt). However, unlike in Experiments 1 and 2, participants responded on an AZERTY computer keyboard without a numeric keypad (28.5 × 12 × 2 cm). The two response keys were located on the right (M, colored blue) and on the left (Q, colored purple) of the keyboard.

Procedure

For the learning phase, the procedure was the same as that used in Experiment 2. During the recognition phase, a fixation cross was first displayed (250 ms), followed by a word (32 OLD and 32 NEW words presented in random order), which remained on the screen until the participant responded. The participants had to press the space bar with the index finger of their right hand to make the fixation cross appear, which made it possible to control the starting point of the gesture. Once the word was displayed, they had to indicate whether or not it had been present in the learning list by pressing the blue key for “yes” and the purple key for “no” with the index finger of their right hand. The blue key (M) required an ipsilateral gesture and the purple key (Q) a contralateral gesture. Note that the colors of the response keys were those used in the learning phase: blue versus purple.

Thus, the combination of the color of the words in the learning phase and the color of the response keys enabled us to form four experimental conditions:

Condition 1 (group 1): learning phase, Blue-Ipsilateral gesture versus Purple-Contralateral gesture; recognition phase, Blue (Yes)-Ipsilateral gesture versus Purple (No)-Contralateral gesture.

Condition 2 (group 2): learning phase, Blue-Ipsilateral gesture versus Purple-Contralateral gesture; recognition phase, Blue (Yes)-Contralateral gesture versus Purple (No)-Ipsilateral gesture.

Condition 3 (group 3): learning phase, Blue-Contralateral gesture versus Purple-Ipsilateral gesture; recognition phase, Blue (Yes)-Contralateral gesture versus Purple (No)-Ipsilateral gesture.

Condition 4 (group 4): learning phase, Blue-Contralateral gesture versus Purple-Ipsilateral gesture; recognition phase, Blue (Yes)-Ipsilateral gesture versus Purple (No)-Contralateral gesture.
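One way to see how the four groups yield a 2 × 2 between-participants design is to encode them as data. In this sketch, which is one reading of the design rather than the authors' analysis code, "matching" means that the gesture space associated with blue (the "yes" color) at learning is the same as the space of the blue "yes" key at recognition, and the gestural-space factor is taken to be the space of the "yes" response gesture. The names `CONDITIONS`, `YES_COLOR`, and `classify` are hypothetical.

```python
IPSI, CONTRA = "ipsilateral", "contralateral"

# group -> (learning phase: color -> gesture space,
#           recognition phase: response-key color -> gesture space)
CONDITIONS = {
    1: ({"blue": IPSI, "purple": CONTRA}, {"blue": IPSI, "purple": CONTRA}),
    2: ({"blue": IPSI, "purple": CONTRA}, {"blue": CONTRA, "purple": IPSI}),
    3: ({"blue": CONTRA, "purple": IPSI}, {"blue": CONTRA, "purple": IPSI}),
    4: ({"blue": CONTRA, "purple": IPSI}, {"blue": IPSI, "purple": CONTRA}),
}
YES_COLOR = "blue"  # blue = "yes" key and purple = "no" key in every group


def classify(group: int) -> tuple:
    """Return (matching, space of the 'yes' gesture) for one group."""
    learning, recognition = CONDITIONS[group]
    yes_space = recognition[YES_COLOR]
    matching = learning[YES_COLOR] == yes_space
    return matching, yes_space


if __name__ == "__main__":
    for group in CONDITIONS:
        matching, space = classify(group)
        print(f"group {group}: {'match' if matching else 'mismatch'}, yes-response {space}")
```

Each group falls into a different cell, so matching (match vs. mismatch) and gestural space (ipsilateral vs. contralateral) are fully crossed between participants, which is consistent with the F(1,72) tests reported below.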

Experimental design

The nature of the words to be recognized (OLD vs. NEW) was manipulated within participants. The matching/mismatching of the color-associated gestural spaces between the learning and recognition phases was manipulated between participants. As in Experiment 2, two separate analyses were performed, one for the OLD words and one for the NEW words. Indeed, only the OLD words allow us to test the effect of motor matching/mismatching and, consequently, the integration of motor fluency in the word trace (Table 3). The NEW words enabled us to test the effect of color-associated motor fluency on recognition (Table 4).

Results

As in Experiments 1 and 2, statistical analyses were carried out using JASP software (Wagenmakers et al., 2018a, 2018b). Although our hypotheses differed for OLD and NEW words, we first checked that OLD words were recognized better than NEW words, and this was indeed the case: t(75) = 20.41, p < 0.001, ηp² = 0.84, BF10 = 1.19e+29. The result was the same whether the answer was given in the contralateral or the ipsilateral space: t(37) = 14.33, p < 0.001, ηp² = 0.84, BF10 = 5.19e+13, and t(37) = 14.37, p < 0.001, ηp² = 0.84, BF10 = 5.66e+13, respectively.

Results for the OLD words

The analysis revealed a significant main effect of matching (matching vs. mismatching), F(1,72) = 179.35, p < 0.001, ηp² = 0.71, BF10 = 1.56e+16: OLD words were recognized better when the gestural spaces in the learning phase (contralateral vs. ipsilateral) and in the recognition phase (contralateral vs. ipsilateral) matched than when they did not. There was no main effect of gestural space, F(1,72) = 0.28, p = 0.60, ηp² = 0.004, BF10 = 0.24. The interaction was significant, F(1,72) = 16.57, p < 0.001, ηp² = 0.18, BF10 = 1.56e+17. Post hoc comparisons showed that OLD words were recognized better when the match involved an ipsilateral rather than a contralateral gesture, t(72) = 2.50, p = 0.01, ηp² = 0.07, BF10 = 2.88, and that they were recognized less well when the mismatch involved an ipsilateral rather than a contralateral gesture, t(72) = 3.25, p = 0.02, ηp² = 0.12, BF10 = 6.50. However, the Bayes factors for these two comparisons provide only weak to moderate evidence, so these post hoc results should be interpreted with caution.
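The caution above can be made concrete with the conventional verbal labels derived from Jeffreys' classification of Bayes factors: a BF10 between 1 and 3 is usually read as anecdotal evidence for H1, 3 to 10 as moderate, 10 to 30 as strong, 30 to 100 as very strong, and above 100 as extreme, with reciprocal values (BF01 = 1 / BF10) read the same way as evidence for H0. The function name `describe_bf10` is hypothetical, and the cut-offs are conventions rather than sharp boundaries.

```python
def describe_bf10(bf10: float) -> str:
    """Verbal label for a Bayes factor BF10, using Jeffreys-style thresholds."""
    if bf10 <= 0:
        raise ValueError("A Bayes factor must be positive")
    if bf10 == 1:
        return "no evidence either way"
    favoured = "H1" if bf10 > 1 else "H0"
    strength = bf10 if bf10 > 1 else 1.0 / bf10  # BF01 = 1 / BF10 when H0 is favoured
    if strength < 3:
        label = "anecdotal"
    elif strength < 10:
        label = "moderate"
    elif strength < 30:
        label = "strong"
    elif strength < 100:
        label = "very strong"
    else:
        label = "extreme"
    return f"{label} evidence for {favoured}"


if __name__ == "__main__":
    # Bayes factors reported above for the OLD words.
    for name, bf in [("matching main effect", 1.56e16),
                     ("gestural space main effect", 0.24),
                     ("match: ipsilateral vs. contralateral", 2.88),
                     ("mismatch: ipsilateral vs. contralateral", 6.50)]:
        print(f"{name}: BF10 = {bf:g} -> {describe_bf10(bf)}")
```

Read this way, the two post hoc comparisons give anecdotal (2.88) and moderate (6.50) evidence, while BF10 = 0.24 for the main effect of gestural space amounts to moderate evidence for its absence.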

Results for the NEW words

The analysis revealed a significant main effect of matching, F(1,72) = 13.66, p < 0.001, ηp² = 0.16, BF10 = 16.57: there were more false recognitions of NEW words in the matching condition (i.e., when the response space corresponded to the space associated with the color) than in the mismatching condition (i.e., when it did not). There was no main effect of response space, F(1,72) = 0.006, p = 0.94, ηp² = 0.00008, BF10 = 0.09: there were as many false recognitions when the response was given in the ipsilateral space as in the contralateral space. The interaction was significant, F(1,72) = 27.10, p < 0.001, ηp² = 0.27, BF10 = 2.9e+4. Post hoc comparisons showed that, in the matching condition, there were more false recognitions of NEW words when the match involved the ipsilateral rather than the contralateral space, t(72) = 3.73, p < 0.001, ηp² = 0.16, BF10 = 7.66. In the mismatching condition, NEW words were falsely recognized more often when the response gesture was made in the contralateral rather than the ipsilateral space, t(72) = 3.62, p < 0.001, ηp² = 0.15, BF10 = 1.1e+4.
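The ηp² values reported for Experiment 3 can be recovered from the test statistics alone: for an effect with df_effect numerator degrees of freedom, ηp² = (F × df_effect) / (F × df_effect + df_error), and for a t test, where t² = F(1, df), ηp² = t² / (t² + df). A minimal sketch, with hypothetical function names, that can serve as a consistency check on the values above:

```python
def partial_eta_squared_from_f(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def partial_eta_squared_from_t(t_value: float, df: float) -> float:
    """Partial eta squared from a t statistic, using t ** 2 = F(1, df)."""
    return partial_eta_squared_from_f(t_value ** 2, 1, df)


if __name__ == "__main__":
    # Test statistics reported for Experiment 3.
    checks = [
        ("OLD, matching: F(1,72) = 179.35", partial_eta_squared_from_f(179.35, 1, 72)),
        ("OLD, interaction: F(1,72) = 16.57", partial_eta_squared_from_f(16.57, 1, 72)),
        ("NEW, interaction: F(1,72) = 27.10", partial_eta_squared_from_f(27.10, 1, 72)),
        ("NEW, ipsilateral match: t(72) = 3.73", partial_eta_squared_from_t(3.73, 72)),
    ]
    for label, value in checks:
        print(f"{label} -> partial eta squared = {value:.3f}")
```

For example, F(1,72) = 179.35 gives ηp² ≈ 0.71 and t(72) = 3.73 gives ηp² ≈ 0.16, as reported.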

Conclusion

The results are in line with our predictions. Matching between the gestural space during learning (i.e., the color-associated space) and the space in which the response gesture had to be performed enhanced the recognition of OLD words compared with mismatching, and this was true for both the ipsilateral and the contralateral spaces. This means that the motor action is part of the trace associated with the OLD words. However, matching in the ipsilateral space (fluency) increased the propensity to recognize OLD words more than matching in the contralateral space (lack of fluency), and mismatching in the ipsilateral space was more detrimental than mismatching in the contralateral space. Together, these two results show that motor fluency is well integrated in the memory traces of words. Finally, the results obtained for the NEW words suggest that motor fluency (i.e., the gesture associated with the ipsilateral space) is well integrated with color: participants produced more false recognitions when the space associated with the color of the words matched the response space than when the two spaces mismatched. Taken together, these results suggest, on the one hand, that motor fluency is integrated in the memory traces of words together with their color, and, on the other, that motor fluency participates in the re-construction of memories.

General discussion

This work is based on multiple-trace memory models. According to these models, the trace reflects a sensorimotor experience that is likely to be reconstructed rather than retrieved as content that has been preserved intact and is therefore addressable and recoverable as it is. The likelihood that a trace can be specifically re-constructed depends on multiple factors involved in what is commonly called encoding, notably its richness (the number of its components) and the quality of those components, but also, and perhaps most importantly, the level of integration of the components of the trace. Thus, the more integrated the features are, the more easily the trace as a whole will be reconstructed and the more likely it is to be dissociated from other traces.

While multiple factors (encoding specificity principle, depth of processing, elaborative encoding, dual coding, enactment effect) are assumed to facilitate the re-construction of traces by promoting the integration of their components (see, e.g., Macri, Claus, Pavard, & Versace, 2020; Macri, Pavard, & Versace, 2018), Camus, Brouillet, and Brunel (2016) recently stressed that action plays a functional role in the integration of sensorimotor components.

The aim of this work was to identify the role that motor fluency plays in the integration mechanism. It is well known that “encoding fluency” (e.g., Koriat & Ma’ayan, 2005) enhances memory (Hertzog, Dunlosky, Robinson, & Kidder, 2003; Matvey, Dunlosky, & Guttentag, 2001). To investigate this, we used the “laterality and hand dominance” paradigm (i.e., the most fluent actions are those executed with the dominant hand on the dominant side), which has already proved effective in the memory field (Brouillet, Milhau, Brouillet, & Servajean, 2017; Brouillet, Michalland, Martin, & Brouillet, 2021; Susser, 2014; Susser & Mulligan, 2015; Susser, Panitz, Buchin, & Mulligan, 2017; Yang, Gallo, & Beilock, 2009). In the encoding phase of the three experiments reported here, words appeared colored in blue or purple, and the participants had to read them aloud and remember them. Motor fluency was manipulated by means of the gesture to be performed in response to the color of the word: in the ipsilateral space (fluent) versus the contralateral space (non-fluent). In Experiment 1, fluency was also manipulated during the encoding phase by means of another factor: the presence or absence of an obstacle in the path of the gesture. This manipulation was not used in Experiments 2 and 3. The encoding phase was followed by a recognition phase. In Experiment 1, the words to be recognized were presented in a dark font on a sheet of paper. In Experiment 2, the words to be recognized (Old and New), also presented on a sheet of paper, were colored in one of the two colors used in the encoding phase, with half of the Old words in the same color as in the encoding phase and the other half in the other color. In Experiment 3, participants had to press a colored key (in the same colors as in the encoding phase) to indicate whether or not the word had been presented in the learning phase.
The colored key was on the left or on the right of the keyboard, thus requiring the participant to produce an ipsilateral or contralateral gesture to respond. For the OLD words, the color associated with the "yes" response was either their encoding color (matching) or the other color used in the encoding phase (mismatching).

As expected, the results of Experiment 1 show that the Old words associated with an ipsilateral gesture during the encoding phase (i.e., motor fluency) were recognized more easily than words associated with a contralateral gesture (i.e., no motor fluency). Moreover, they were recognized less well when the gesture had been hindered by the obstacle (i.e., an interruption of motor fluency). The results of Experiment 2 clearly show that the color associated with an ipsilateral gesture during the encoding phase both enhanced the recognition of the Old words and increased the level of false recognition of the New words. Moreover, when the OLD words appeared in the same color as in the learning phase, this match enhanced memory; conversely, when they appeared in a different color, this discrepancy impaired their recognition. Finally, while the results of Experiment 3 do not show an effect of the space in which the response gesture is produced during recognition (i.e., ipsilateral/fluent vs. contralateral/non-fluent), they nevertheless indicate that the gesture executed to give the response must also be considered. Indeed, the recognition score for OLD words was higher when the gestural space associated with their color during encoding was the same as the space of the response gesture than when the two differed, and this score was even higher when the shared space was ipsilateral rather than contralateral (i.e., when participants performed a fluent gesture). Moreover, participants produced more false recognitions (i.e., of NEW words) when the space associated with the color of the words matched the response space than when the two mismatched.

This work provides three important results. Firstly, the results of the first experiment support those previously obtained by Koriat and Ma’ayan (2005) regarding “encoding fluency” and confirmed by several other studies (Hertzog et al., 2003; Matvey et al., 2001): fluency at encoding enhances memory retrieval. However, and this is the original feature of our studies, unlike in these earlier works, what was manipulated was not study time at encoding but a subjective feeling associated with the gesture performed as a function of the space: a gesture performed in the participant's ipsilateral space (i.e., a fluent gesture) favors recognition responses, as opposed to a gesture performed in the participant's contralateral space (i.e., a non-fluent gesture). While our work supports the “encoding fluency” principle, it also supports the idea that our body influences cognitive processes through the actions we can carry out, as has been shown in the fields of comprehension (e.g., Zwaan, 2016), emotions (e.g., Casasanto, 2009), perception (e.g., O’Regan, 2011), and memory (e.g., Brouillet, 2020). Secondly, and for the first time to our knowledge, the results of Experiment 2 highlight the role of motor fluency in the integration process. In a situation in which the color of the words was unchanged during recognition, there were more correct recognitions when the color had been associated with an ipsilateral rather than a contralateral gesture during encoding, that is, with a fluent gesture and therefore better word-color integration. This is confirmed by the false recognitions (i.e., NEW words): the score for words whose color was associated with an ipsilateral gesture was higher than for words whose color was associated with a contralateral gesture. Therefore, the results of Experiment 2 clearly show that motor fluency facilitates integration during encoding.
Finally, the results observed in Experiment 3 show that motor fluency at retrieval enhances the re-construction of memories. When the color of the response key involved an ipsilateral gesture and the word's color had been associated with an ipsilateral gesture at encoding, participants were inclined to consider that the word had been presented in the learning phase. The results observed for the NEW words are particularly interesting here: matching colors (words and "yes" key) induced a high number of false recognitions (25%). However, interesting as they are, these results would need to be confirmed with left-handers, because left-handers have been shown to be less lateralized than right-handers for both left and right hemispheric functions (Johnstone, Karlsson, & Carey, 2021).

Most importantly, however, the results of these three experiments taken together support the idea that memory is a memory of processes (Craik & Lockhart, 1972; Franks et al., 2000; Roediger, Gallo, & Geraci, 2002). This idea underlies multiple-trace models, in particular Act-In (Versace et al., 2014) and its mathematical formalization Athena (Briglia et al., 2018). Because the ability of a trace to be re-constructed depends largely on the level of integration of its components, these models explain why traces cannot be dissociated from the processes that gave rise to them, whether in the case of a specific trace or of all traces in general. Our results lead us to believe that traces are not only traces of the processes that gave rise to them but also traces of the way in which those processes took place (i.e., fluency). Moreover, the way in which the processes took place appears to contribute to the re-construction of the traces. We can therefore consider that our results support the concept of “transfer appropriate fluency” (Lanska & Westerman, 2018): in addition to the transfer of processes, there is also a transfer of the manner in which the processes were carried out. From the 1970s onward, authors such as Kolers (1975, 1976), Kolers and Roediger (1984), and Morris, Bransford, and Franks (1977) proposed the idea of Transfer Appropriate Processing (TAP), according to which memory performance depends on the overlap between the processing carried out at encoding and at retrieval. More precisely, if the processes engaged during the retrieval phase are the same as those engaged during the encoding phase, memory performance will be optimal (neurophysiological findings confirm that retrieval is mediated by the reinstatement of the brain activity that was present during processing of the original event; Bramão & Johansson, 2018; Schendan & Kutas, 2007).

To conclude, “‘pastness’ cannot be found in memory trace, rather, reflects an attribution of transfer in performance” (Jacoby, Kelley, & Dywan, 1989, p. 400).

Notes

Regarding embodied approaches to language, a growing number of studies have shown that when we read a word, the brain simulates activity in the same sensorimotor systems that are involved when we interact with the real objects represented by the word. Thus, a large overlap between the brain areas involved in language and action is regularly observed (see Harpaintner, Sim, Trumpp, Ulrich, & Kiefer, 2020; Henningsen-Schomers & Pulvermüller, 2021; Pulvermüller, 2005, 2010, 2013).

We used an effect size of 0.25, as in Lanska and Westerman (2018) and Brouillet et al. (2021). However, to overcome the possible inadequacy of a binary rejection or acceptance of H0 (i.e., p > .05 vs. p < .05), we also used Bayesian inference. In the Bayesian framework, the credibility of hypotheses is updated according to the success of their predictions: hypotheses that predict the observed data relatively well become more credible than those that predict the data relatively poorly (Wagenmakers, Morey, & Lee, 2016). Moreover, the Bayesian framework is now established as a relevant complement to the frequentist p-value (Dienes & McLatchie, 2018).

References

Bader, R., Opitz, B., Reith, W., & Mecklinger, A. (2014). A novel conceptual unit is more than the sum of its parts: fMRI evidence from an associative recognition memory study. Neuropsychologia, 61, 123–134.

Barsalou, L. W. (1999). Perceptual symbol systems. The Behavioral and Brain Sciences, 22(4), 577–609.

Bramão, I., & Johansson, M. (2018). Neural pattern classification tracks transfer-appropriate processing in episodic memory. eNeuro, 5(4).

Briglia, J., Servajean, P., Michalland, A. H., Brunel, L., & Brouillet, D. (2018). Modeling an enactivist multiple-trace memory. ATHENA: A fractal model of human memory. Journal of Mathematical Psychology, 82, 97–110.

Brouillet, D. (2020). Enactive memory. Frontiers in Psychology, 11, 114.

Brouillet, D., Milhau, A., Brouillet, T., & Servajean, P. (2017). Effect of an unrelated fluent action on word recognition: A case of motor discrepancy. Psychonomic Bulletin & Review, 24(3), 894–900.

Brouillet, D., Vagnot, C., Milhau, A., Brunel, L., Briglia, J., Versace, R., & Rousset, S. (2015). Sensory–motor properties of past actions bias memory in a recognition task. Psychological Research, 79(4), 678–686.

Brouillet, D., Michalland, A. H., Guerineau, R., Draushika, M., & Thebault, G. (2019). How does simulation of an observed external body state influence categorisation of an easily graspable object? Quarterly Journal of Experimental Psychology, 72(6), 1466–1477.

Brouillet, T., Michalland, A. H., Martin, S., & Brouillet, D. (2021). When the Action to Be Performed at the Stage of Retrieval Enacts Memory of Action Verbs. Experimental Psychology, 68(1), 18–31.

Camus, T., Brouillet, D., & Brunel, L. (2016). Assessing the functional role of motor response during the integration process. Journal of Experimental Psychology: Human Perception and Performance, 42(11), 1693.

Camus, T., Hommel, B., Brunel, L., & Brouillet, T. (2018). From anticipation to integration: The role of integrated action-effects in building sensorimotor contingencies. Psychonomic Bulletin & Review, 25(3), 1059–1065.

Canits, I., Pecher, D., & Zeelenberg, R. (2018). Effects of grasp compatibility on long-term memory for objects. Acta Psychologica, 182, 65–74.

Casasanto, D. (2009). Embodiment of abstract concepts: Good and bad in right-and left-handers. Journal of Experimental Psychology: General, 138(3), 351.

Chen, M., & Lin, C. H. (2021). What is in your hand influences your purchase intention: Effect of motor fluency on motor simulation. Current Psychology, 40, 3226–3234.

Craik, F. I., & Lockhart, R. S. (1972). Levels of processing: A framework for memory research. Journal of Verbal Learning and Verbal Behavior, 11(6), 671–684.

Craik, F. I., & Tulving, E. (1975). Depth of processing and the retention of words in episodic memory. Journal of Experimental Psychology: General, 104(3), 268.

De Brigard, F. (2014). The nature of memory traces. Philosophy Compass, 9(6), 402–414.

Diana, R. A., Yonelinas, A. P., & Ranganath, C. (2008). The effects of unitization on familiarity-based source memory: Testing a behavioral prediction derived from neuroimaging data. Journal of Experimental Psychology: Learning, Memory, and Cognition, 34, 730–740.

Dienes, Z., & McLatchie, N. (2018). Four reasons to prefer Bayesian analyses over significance testing. Psychonomic Bulletin & Review, 25(1), 207–218.

Dutriaux, L., Dahiez, X., & Gyselinck, V. (2019). How to change your memory of an object with a posture and a verb. Quarterly Journal of Experimental Psychology, 72(5), 1112–1118.

Engelkamp, J. (1998). Memory for actions. Psychology Press.

Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191.

Fisk, J. D., & Goodale, M. A. (1985). The organization of eye and limb movements during unrestricted reaching to targets in contralateral and ipsilateral visual space. Experimental Brain Research, 60(1), 159–178.

Franks, J. J., Bilbrey, C. W., Lien, K. G., & McNamara, T. P. (2000). Transfer-appropriate processing (TAP). Memory & Cognition, 28(7), 1140–1151.

Gimenez, C., & Brouillet, D. (2020). The role of the motor system in visual working memory. Cognition, Brain and Behavior, 24(2), 153–162.

Harpaintner, M., Sim, E. J., Trumpp, N. M., Ulrich, M., & Kiefer, M. (2020). The grounding of abstract concepts in the motor and visual system: An fMRI study. Cortex, 124, 1–22.

Haskins, A. L., Yonelinas, A. P., Quamme, J. R., & Ranganath, C. (2008). Perirhinal cortex supports encoding and familiarity-based recognition of novel associations. Neuron, 59, 554–560.

Hayes, A. E., Paul, M. A., Beuger, B., & Tipper, S. P. (2008). Self produced and observed actions influence emotion: The roles of action fluency and eye gaze. Psychological Research, 72(4), 461–472.

Hertzog, C., Dunlosky, J., Robinson, A. E., & Kidder, D. P. (2003). Encoding fluency is a cue used for judgments about learning. Journal of Experimental Psychology: Learning, Memory, and Cognition, 29(1), 22.

Hintzman, D. L. (1984). MINERVA 2: A simulation model of human memory. Behavior Research Methods, Instruments, & Computers, 16(2), 96–101.

Hintzman, D. L. (1986). “Schema abstraction” in a multiple-trace memory model. Psychological Review, 93(4), 411.

Hintzman, D. L. (1988). Judgments of frequency and recognition memory in a multiple-trace memory model. Psychological Review, 95(4), 528.

Henningsen-Schomers, M. R., & Pulvermüller, F. (2021). Modelling concrete and abstract concepts using brain-constrained deep neural networks. Psychological Research, 1–27.

Johnstone, L. T., Karlsson, E. M., & Carey, D. P. (2021). Left-handers are less lateralized than right-handers for both left and right hemispheric functions. Cerebral Cortex, 31(8), 3780–3787.

Li, B., Taylor, J. R., Wang, W., Gao, C., & Guo, C. (2017). Electrophysiological signals associated with fluency of different levels of processing reveal multiple contributions to recognition memory. Consciousness and Cognition, 53, 1–13.

Jacoby, L. L., Kelley, C. M., & Dywan, J. (1989). Memory attributions. In H. L. Roediger & F. I. M. Craik (Eds.), Varieties of memory and consciousness: Essays in honour of Endel Tulving (pp. 391–422). Lawrence Erlbaum Associates Inc.

Kolers, P. A. (1975). Specificity of operations in sentence recognition. Cognitive Psychology, 7(3), 289–306.

Kolers, P. A. (1976). Reading a year later. Journal of Experimental Psychology: Human Learning and Memory, 2, 554–565.

Kolers, P. A., & Roediger, H. L., III (1984). Procedures of mind. Journal of Verbal Learning and Verbal Behavior, 23(4), 425–449.

Koriat, A., & Ma’ayan, H. (2005). The effects of encoding fluency and retrieval fluency on judgments of learning. Journal of Memory and Language, 52(4), 478–492.

Kormi-Nouri, R. (1995). The nature of memory for action events: An episodic integration view. European Journal of Cognitive Psychology, 7(4), 337–363.

Kormi-Nouri, R., & Nilsson, L. G. (1998). The role of integration in recognition failure and action memory. Memory & Cognition, 26(4), 681–691.

Kormi-Nouri, R., & Nilsson, L.-G. (2001). The motor component is not crucial! In H. D. Zimmer, R. Cohen, M. J. Guynn, J. Engelkamp, R. Kormi-Nouri, & M. A. Foley (Eds.), Memory for action: A distinct form of episodic memory? (pp. 97–135). New York: Oxford University Press.

Labeye, E., Oker, A., Badard, G., & Versace, R. (2008). Activation and integration of motor components in a short-term priming paradigm. Acta Psychologica, 129(1), 108–111.

Lanska, M., & Westerman, D. (2018). Transfer appropriate fluency: Encoding and retrieval interactions in fluency-based memory illusions. Journal of Experimental Psychology: Learning, Memory, and Cognition, 44(7), 1001.

Macri, A., Pavard, A., & Versace, R. (2018). The beneficial effect of contextual emotion on memory: The role of integration. Cognition and Emotion, 32(6), 1355–1361.

Macri, A., Claus, C., Pavard, A., & Versace, R. (2020). Distinctive effects of within-item emotion versus contextual emotion on memory integration. Advances in Cognitive Psychology, 16(1), 67.

Mather, M. (2007). Emotional arousal and memory binding: An object-based framework. Perspectives on Psychological Science, 2(1), 33–52.

Matvey, G., Dunlosky, J., & Guttentag, R. (2001). Fluency of retrieval at study affects judgments of learning (JOLs): An analytic or nonanalytic basis for JOLs? Memory & Cognition, 29(2), 222–233.

Mecklinger, A., & Bader, R. (2020). From fluency to recognition decisions: A broader view of familiarity-based remembering. Neuropsychologia, 146, 107527.

Michalland, A. H., Thébault, G., Briglia, J., Fraisse, P., & Brouillet, D. (2019). Grasping a chestnut burr: Manual laterality in action’s coding strategies. Experimental Psychology, 66(4), 310.

Montero-Melis, G., Van Paridon, J., Ostarek, M., & Bylund, E. (2022). No evidence for embodiment: The motor system is not needed to keep action verbs in working memory. Cortex, 150, 108–125.

Morris, C. D., Bransford, J. D., & Franks, J. J. (1977). Levels of processing versus transfer appropriate processing. Journal of Verbal Learning and Verbal Behavior, 16(5), 519–533.

Nessler, D., Mecklinger, A., & Penney, T. B. (2005). Perceptual fluency, semantic familiarity and recognition-related familiarity: An electrophysiological exploration. Cognitive Brain Research, 22(2), 265–288.

O'Regan, J. K. (2011). Why red doesn't sound like a bell: Understanding the feel of consciousness. Oxford University Press.

Paivio, A. (1991). Dual coding theory: Retrospect and current status. Canadian Journal of Psychology/Revue canadienne de psychologie, 45(3), 255.

Pecher, D. (2013). No role for motor affordances in visual working memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39(1), 2–13.

Pecher, D., de Klerk, R. M., Klever, L., Post, S., van Reenen, J. G., & Vonk, M. (2013). The role of affordances for working memory for objects. Journal of Cognitive Psychology, 25(1), 107–118.

Pulvermüller, F. (2005). Brain mechanisms linking language and action. Nature Reviews Neuroscience, 6(7), 576–582.

Pulvermüller, F. (2010). Brain embodiment of syntax and grammar: Discrete combinatorial mechanisms spelt out in neuronal circuits. Brain and Language, 112(3), 167–179.

Pulvermüller, F. (2013). Semantic embodiment, disembodiment or misembodiment? In search of meaning in modules and neuron circuits. Brain and Language, 127(1), 86–103.

Purkart, R., Versace, R., & Vallet, G. T. (2019). “Does it improve the mind’s eye?”: Sensorimotor simulation in episodic event construction. Frontiers in Psychology, 10, 1403.

Quak, M., Pecher, D., & Zeelenberg, R. (2014). Effects of motor congruence on visual working memory. Attention, Perception, & Psychophysics, 76(7), 2063–2070.

Rey, A. E., Riou, B., & Versace, R. (2014a). Demonstration of an Ebbinghaus illusion at a memory level. Experimental Psychology, 61, 378–384.

Rey, A. E., Riou, B., Cherdieu, M., & Versace, R. (2014b). When memory components act as perceptual components: Facilitatory and interference effects in a visual categorisation task. Journal of Cognitive Psychology, 26(2), 221–231.

Rey, A. E., Riou, B., Muller, D., Dabic, S., & Versace, R. (2015a). “The mask who wasn’t there”: Visual masking effect with the perceptual absence of the mask. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(2), 567.

Rey, A. E., Riou, B., Vallet, G. T., & Versace, R. (2017). The automatic visual simulation of words: A memory reactivated mask slows down conceptual access. Canadian Journal of Experimental Psychology/Revue canadienne de psychologie expérimentale, 71(1), 14.

Rey, A. E., Vallet, G. T., Riou, B., Lesourd, M., & Versace, R. (2015b). Memory plays tricks on me: Perceptual bias induced by memory reactivated size in Ebbinghaus illusion. Acta Psychologica, 161, 104–109.

Riou, B., Rey, A. E., Vallet, G. T., Cuny, C., & Versace, R. (2015). Perceptual processing affects the reactivation of a sensory dimension during a categorization task. Quarterly Journal of Experimental Psychology, 68(6), 1223–1230.

Rhodes, S. M., & Donaldson, D. I. (2007). Electrophysiological evidence for the influence of unitization on the processes engaged during episodic retrieval: Enhancing familiarity-based remembering. Neuropsychologia, 45, 412–424.

Roediger, H. L., III, Gallo, D. A., & Geraci, L. (2002). Processing approaches to cognition: The impetus from the levels-of-processing framework. Memory, 10(5–6), 319–332.

Rosburg, T., Mecklinger, A., & Frings, C. (2011). When the brain decides: A familiarity-based approach to the recognition heuristic as evidenced by event-related brain potentials. Psychological Science, 22(12), 1527–1534.

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16(2), 225–237.

Schendan, H., & Kutas, M. (2007). Neurophysiological evidence for the time course of activation of global shape, part, and local contour representations during visual object categorization and memory. Journal of Cognitive Neuroscience, 19, 734–749.

Shebani, Z., & Pulvermüller, F. (2013). Moving the hands and feet specifically impairs working memory for arm- and leg-related action words. Cortex, 49(1), 222–231.

Schönbrodt, F. D., & Wagenmakers, E. J. (2018). Bayes factor design analysis: Planning for compelling evidence. Psychonomic Bulletin & Review, 25(1), 128–142.

Susser, J. A. (2014). Motoric fluency in actions: Effects on metamemory and memory. Doctoral dissertation, The University of North Carolina at Chapel Hill.

Susser, J. A., & Mulligan, N. W. (2015). The effect of motoric fluency on metamemory. Psychonomic Bulletin & Review, 22(4), 1014–1019.

Susser, J. A., Panitz, J., Buchin, Z., & Mulligan, N. W. (2017). The motoric fluency effect on metamemory. Journal of Memory and Language, 95, 116–123.

Tulving, E. (1995). Organization of memory: Quo vadis? In M. S. Gazzaniga (Ed.), The cognitive neurosciences (pp. 839–853). The MIT Press.

van Dam, W. O., Rueschemeyer, S. A., Bekkering, H., & Lindemann, O. (2013). Embodied grounding of memory: Toward the effects of motor execution on memory consolidation. The Quarterly Journal of Experimental Psychology, 66(12), 2310–2328.

Versace, R., & Rose, M. (2007). The role of emotion in multimodal integration. Current Psychology Letters: Behaviour, Brain & Cognition, 21(1).

Versace, R., Brouillet, D., & Vallet, G. (2018). Cognition incarnée: Une cognition située et projetée. Mardaga.

Versace, R., Labeye, E., Badard, G., & Rose, M. (2009). The contents of long-term memory and the emergence of knowledge. European Journal of Cognitive Psychology, 21(4), 522–560.

Versace, R., Vallet, G. T., Riou, B., Lesourd, M., Labeye, E., & Brunel, L. (2014). Act-In: An integrated view of memory mechanisms. Journal of Cognitive Psychology, 26(3), 280–306.

Wagenmakers, E. J., Morey, R. D., & Lee, M. D. (2016). Bayesian benefits for the pragmatic researcher. Current Directions in Psychological Science, 25(3), 169–176.

Wagenmakers, E. J., Marsman, M., Jamil, T., Ly, A., Verhagen, J., Love, J., ... & Morey, R. D. (2018a). Bayesian inference for psychology. Part I: Theoretical advantages and practical ramifications. Psychonomic Bulletin & Review, 25(1), 35–57.

Wagenmakers, E. J., Love, J., Marsman, M., Jamil, T., Ly, A., Verhagen, J., ... & Morey, R. D. (2018b). Bayesian inference for psychology. Part II: Example applications with JASP. Psychonomic Bulletin & Review, 25(1), 58–76.

Wang, W., Li, B., Hou, M., & Rugg, M. D. (2020). Electrophysiological correlates of the perceptual fluency effect on recognition memory in different fluency contexts. Neuropsychologia, 148, 107639.

Whittlesea, B. W. A. (1989). Selective attention, variable processing, and distributed representation: Preserving particular experiences of general structures. In R. G. M. Morris (Ed.), Parallel distributed processing: Implications for psychology and neurobiology (pp. 76–101). Oxford University Press.

Yang, S. J., Gallo, D. A., & Beilock, S. L. (2009). Embodied memory judgments: A case of motor fluency. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35(5), 1359.

Zeelenberg, R., & Pecher, D. (2016). The role of motor action in memory for objects and words. In B. H. Ross (Ed.), Psychology of learning and motivation (Vol. 64, pp. 161–193). Academic Press.

Zimmer, H. D., Cohen, R. L., Guynn, M. J., Engelkamp, J., Foley, M. A., & Kormi-Nouri, R. (2001). Memory for action: A distinct form of episodic memory? Oxford University Press.

Zwaan, R. A. (2016). Situation models, mental simulations, and abstract concepts in discourse comprehension. Psychonomic Bulletin & Review, 23(4), 1028–1034.

Acknowledgements

We thank Rémi Gigan for his help in collecting part of the data.

We thank Tim Pownall for proofreading the article.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflicts of interest.

Ethics approval

All procedures performed in these studies were in accordance with the ethical standards of the university research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Open Practices Statement (TOP)

Data and materials are available at https://doi.org/10.7910/DVN/TKEDWH

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Brouillet, D., Brouillet, T. & Versace, R. Motor fluency makes it possible to integrate the components of the trace in memory and facilitates its re-construction. Mem Cogn 51, 336–348 (2023). https://doi.org/10.3758/s13421-022-01350-x

DOI: https://doi.org/10.3758/s13421-022-01350-x