Abstract

The Atkinson-Shiffrin theory describes and explains some of the processes involved in storing and retrieving information in human memory. Here we examine predictions of related models for search and decision processes in recognizing information in long-term memory. In some models, recognition is presumably based on a test item’s familiarity judgment, and subsequent decisions follow from the sensitivity and decision parameters of signal detection theory. Other models dispense with the continuous notion of familiarity and base recognition on discrete internal states such as relative certainty that an item has or has not been previously studied, with an intermediate state of uncertainty that produces guesses. Still others are hybrid models with two criteria located along a familiarity continuum defining areas for rapid decisions based on high or low familiarities. For intermediate familiarity values, the decision can be delayed pending the results of search for, and occasional recollection of, relevant episodic information. Here we present the results from a study of human recognition memory for lists of words using both response time and error data to construct receiver-operating characteristic (ROC) curves derived from three standard methods based on the same data set. Models are evaluated against, and parameters estimated from, group as well as individual subjects’ behavior. We report substantially different ROC curves when they are based on variations in target-word frequency, confidence judgments, and response latencies. The results indicate that individual versus group data must be used with caution in determining the appropriate theoretical interpretation of recognition memory performance.

Similar content being viewed by others

Introduction

In the late 1960s the Atkinson-Shiffrin (A-S) theory was developed to summarize and explain a large body of data that had been collected since the time of Ebbinghaus (1885/1964) from experiments in which people studied, learned, and eventually tried to remember lists of verbal materials (Atkinson & Shiffrin, 1968, 1971). Verbal learning was important enough to inspire its own journals, conferences, and the beginnings of cognitive science as we know it today. The A-S theory has become known as the “modal model” in cognitive psychology, as most practicing theorists and experimentalists acknowledge it as part of our collective unconscious, driving our theories, leading to interpretations of our data, and giving us the jargon with which we communicate with our peers.

The theory was more general than the experiments on which it was based, of course, as it had implications for the roles of more primitive processes, such as sensation, perception, and the fleeting storage of iconic and echoic traces of their respective visual and auditory stimuli. It also anticipated some modern ideas of working memory, in that short-term memory was posited to have storage capacities as well as being subject to a host of control processes that determine its contents and functions. In addition, the effectively infinite and permanent long-term store is continuously consulted, reorganized, and updated as a result of control processes such as storage and retrieval. The distinction between memory structures (sensory, short-term, and long-term stores) and control processes (attention, rehearsal, storage, retrieval, etc.) was crucial in giving the theory the flexibility and adaptability that has determined its continuing usefulness. In sum, the model was specific enough to account for the classic results of study time, list length, retention interval, serial position effects, and interference effects that had dominated 100 years of memory research. Yet it was general enough to form the basis of contemporary theories of attention, consciousness, language use, and artificial intelligence. Here we wish to trace its influence in one specific area of psychological research, namely recognition memory for verbal materials.

In the early 1970s, we developed an extension of the A-S theory that was specific to search for and retrieval of information in long-term memory (Atkinson & Juola, 1973, 1974; Juola, Fischler, Wood & Atkinson, 1971). The Atkinson-Juola (A-J) model applied some of the analytic methods of signal detection theory (SDT) to the results of recognition memory experiments, as earlier work had indicated the power and flexibility of these methods (e.g., Banks, 1970; Egan, 1958; Kintsch, 1967; Parks, 1966). In these applications of SDT, memory strength is represented along a continuum in which Gaussian distributions associated with old (previously studied information) and new (not previously studied items) are separated but overlapping to some extent (see Fig. 1). When confronted with a word in a memory test, the participant presumably compares the test item’s familiarity, or subjective memory strength, to a criterion along this continuum to separate “old” (or “yes”) and “new” (or “no”) responses. The theory was generally quite successful in separating indices of memory strength by the sensitivity parameter, d’, and response bias by the location of the decision criterion, c. Further, d’ has been shown to be influenced by variables, such as retention interval and study time, that should theoretically affect memory strength, and c is affected by variables, such as relative target frequencies in test blocks as well as payoffs for correct responses and costs for errors, that should reasonably affect response biases (e.g., Swets, Dawes, & Monahan, 2002; Wickens, 2002).

Signal detection theory (SDT). When applied to recognition memory, the abscissa relates to a hypothetical dimension of memory strength, or familiarity, on which non-studied items (distractors or new items) are represented to the left with a Gaussian distribution having a mean of μ1, and studied items (targets or old items) to the right have a mean of μ2; μ1 is arbitrarily set to 0.0., and the standard deviation, σ1 is set to 1.0. Then d’, the difference between the means of the two distributions = μ2. In the equal variance model, σ1= σ2, but other models typically allow the target distribution to have a larger standard deviation. Along the familiarity dimension, the subjects are free to place some criterion, c, that separates “Old” (to the right of the criterion) from “New” judgments (to the left), and its location determines the proportions of hits [P(“Old ”|targets)] and false alarms [P(“Old ”|distractors)]. These proportions are used to plot the receiver-operating characteristic function (ROC curve) showing the relationship between the proportions of hits and false alarms as the criterion moves up or down

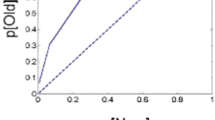

Recently, there have been several alternative accounts of recognition memory that have challenged the basic assumptions of the A-J model and the application of SDT in general. For example, Bröder and Schütz (2009) have claimed that the signal-detection basis of the theory is inappropriate, and that recognition memory results could be fit more adequately by a threshold theory, modified from original proposals (e.g., Egan, 1958; Fechner, 1860/1966; Snodgrass & Corwin, 1988). In their model, an underlying continuum of memory strength, if it exists, is mapped onto a set of discrete states, such as one below a threshold that is achieved only by new items, another above a second threshold that is achieved only by old items, and an intermediate state of uncertainty in which participants are forced to guess (see Fig. 2, describing the two high-threshold model, 2HTM).

A two-high threshold model (2HTM) for recognition memory. Old items are detected as being targets with probability Do, and new items are detected as being distractors with probability Dn. If detection fails, subjects are left in an uncertain, non-detect state between the two thresholds in which they guess “old” with probability g and “new” with probability (1 – g). Detect states automatically lead to the corresponding response

The result has been a classic, and ongoing debate in the literature about whether recognition performance should best be described as based on continuous memory strength or on discrete memory states (e.g., Dube, Starns, Rotello, & Ratcliff, 2012; Malejka & Bröder, 2019). It might well be the case that both classes of models fit the aggregate data for groups of subjects about equally well, whereas individual subjects might be better characterized as using different strategies or control processes depending on their abilities, strategies, or task demands (Malmberg, 2008; McAdoo, Key, & Gronlund, 2019). This debate has often been couched in terms of differential predictions for the shape of the receiver-operating characteristic (ROC) function that describes the relation between the proportions of hits (responding “yes” to a list item) and false alarms (responding “yes” to a non-list distractor) across several conditions in an experiment. Threshold theories generally predict that the ROC should be linear, rather than the smooth curve predicted by SDT, at least when response bias is manipulated experimentally. Bröder and Schütz (2009) reported the results from several experiments while claiming that the linear ROC functions predicted by a threshold theory give a better fit to the data in many cases than the curved ROC functions predicted by SDT. Such claims have not gone unchallenged in the literature (e.g., Dube & Rotello, 2012), and we conducted our own analysis of the data from Experiment 3 of Bröder and Schütz, in which the relevant manipulation of relative proportions of targets and distractors was made within subjects. In their model comparisons, they used a maximum-likelihood method to test the relative goodness-of-fit and found that 23 of 40 subjects’ data were fit better by the 2HTM predictions than by SDT. We examined their individual subjects’ data in plots of the proportions of hits against false alarms, and again when the data had been transformed to z-scores. We then found the best-fitting functions by linear regression and compared whether such linear fits were better for the raw proportions (as predicted by threshold theory) or for the z-transformed data (as predicted by SDT). In earlier comparisons of maximum-likelihood versus regression methods, Gronlund and Elam (1994) and Van Zandt (2000) reported very similar results in estimating parameters for recognition memory ROC curves when using both procedures. However, in our case, and in slight contrast to the modeling efforts of Bröder and Schütz, we found that when using linear regression, 24 out of 40 subjects’ data were better fit by SDT, so we view the results as being inconclusive. We will include the results of both linear regression and maximum likelihood procedures in the modeling efforts reported later in the current paper.

In the A-J model developed from the A-S theory, we supplemented the initial familiarity judgment with a secondary memory search process that could be used to determine the best response, especially in those cases in which familiarity was ambiguous in the region of substantial overlap in the underlying target and distractor strength distributions. That is, in a way similar to the 2HTM, we replaced the single criterion c in the original SDT model with two criteria, c0 and c1, to define a central region of relative uncertainty. In order to test some models of memory search processes, we insured that our subjects could rely on their memories for previously studied information by having them memorize lists of target words and demonstrate perfect serial recall before testing began. When test words were then presented, the individual responses were highly accurate, and response times (RTs) were the main data used for subsequent theoretical analysis (Atkinson & Juola, 1973, 1974; Juola et al., 1971).

The results indicated that, as in the Sternberg task (Sternberg, 1966, 2016), RTs increased linearly with the number of items in the memorized list, but the slopes of the best-fitting linear functions were much smaller than those reported by Sternberg. We argued that recognition of words in a well-learned list would seldom require some kind of memory-scanning process, but more often fast decisions could be made based on the familiarity, or memory strength, of the individual words alone. That is, an initial familiarity judgment could be a reliable indicator of the word’s likely list membership, and words with high or low familiarities were very likely to be list members or distractors, respectively. However, familiarity judgments proved to be fallible, and we assumed that the small list-length effect that we observed resulted from a back-up search process that examined the memorized list for the presence or absence of the test word when the familiarity was of an intermediate value.

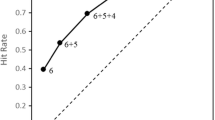

To quantify the model, we estimated the relative locations of two decision criteria along the hypothetical familiarity continuum from the false-alarm rates for distractors and the miss rates for targets. These values were quite low and independent of list length, so the estimated values did not differ across list-length conditions (see also Annis, Lenes, Westfall, Criss, & Malmberg, 2015, for a review and discussion of list-length effect in recognition memory). RTs, however, were definitely not independent of list length, and the RTs were fit by a model that summed encoding and RTs plus fast positive and negative RTs for decisions based on familiarity, and additional search times that depended on list length for decisions based on intermediate familiarity values (see Fig. 3).

Theoretical familiarity distributions for distractor words (left) and target words (right) in a recognition memory experiment. The criteria (C0 and C1) are determined by the observed miss (false negatives) and false alarm (false positives) rates, respectively, and they also determine the respective proportions of fast, familiarity-based negative and positive responses. The areas under the familiarity density functions between the criteria are used to estimate the proportions of slower responses that depend on list length as determined by recollective search processes through representations of the studied words in episodic memory (after Atkinson & Juola, 1973, 1974)

The model fit the error data perfectly, within the tolerance levels of experimental noise, and also provided a good fit to the RT data, including predictions of increased memory search times and larger list-length effects for distractors when their familiarities were increased by repeating distractor words during the test phase. Similarly, repetitions of target words during testing reduced both the overall RTs and the effects of list length, as predicted by the theory (Atkinson & Juola, 1973, 1974).

At the time that the A-J model was proposed, we anticipated some of the theoretical developments in memory research arguing for different types of systems on which recognition memory could be based. For example, the distinction between semantic and episodic memories proposed by Tulving (1972) dovetailed with our ideas that the familiarity of a test word is based on whatever information is initially retrieved when a word is encoded, and subsequent decisions are based on retrieval of episodic information stored, in our case, as a specific list. Further developments in theories of recognition memory have emphasized that similar distinctions can be made between familiarity or “feeling of knowing” judgments and those based on recollection of specific episodic details that can sometimes confirm or deny the presence of previous experiences with the test item (e.g., Parks & Yonelinas, 2007; Wixted, 2007; Yonelinas, 1994).

Such hybrid models of recognition performance have been analyzed in terms of the shape of the ROC function relating the observed proportions of hits and false alarms across different conditions and subjects. In the classic SDT model in which signal and noise distributions (corresponding to the memory strength distributions of target and distractor items, respectively), are equivariant, the ROC function describing choice behavior forms a smooth, symmetric curve lying above the diagonal from the point (0,0) to (1,1). The distance of the curve from the diagonal is determined by d’, the difference between the means of the strength distributions for studied (target) and non-studied (distractor) items, as scores move toward the (1,0) corner when discrimination is easier. Observed data should correspond to points along the curve that are determined by the choice of c, the bias parameter, or the relative willingness to say “yes” or “old” to a test item. In contrast, without additional assumptions, the 2-criterion high-threshold model (2HTM) predicts that the ROC curve should be linear, with data points extending from (Do,0) to (1,Dn-1) depending on the value of g.

Other models are hybrids of SDT and threshold theories, such as the A-J model. Although the A-J model was not used at the time to generate ROC curves, others like it have assumed that there is a familiarity component and a recollection component, with the familiarity part following continuous, Gaussian distributions, and the recollection part being a more discrete, all-or-none process. Thus, the hybrid model reduces to the SDT model when the two criteria converge, and it superficially resembles the 2HTM when the two criteria are separated. The hybrid model differs from the 2HTM in two important ways, however: (1) the model states are not discrete, but rather the underlying familiarity dimension is continuous, allowing for gradations in response speed and confidence, and (2) the state between the two criteria, while resulting in greater levels of uncertainty, produces not only biased guessing strategies, but decisions based on a search of episodic memory that can sometimes produce highly accurate and confident positive responses. If recollection fails, and by definition it must fail for distractor items, a biased decision process determines the final response.

Yonelinas and Parks (2007) have argued that such hybrid models generally produce curved ROC functions, but successful recollection increases the relative proportions of high-confidence hits. This result should make the ROC curve asymmetrical, but since the ROC curve is asymmetrical under SDT assumptions when the signal and noise distributions have unequal variances, the distinction might not be critical when considered alone in choosing between the theories.

In summary, enough uncertainty remains about search and decision processes in recognition memory that we decided to make a strong test of the relevant theories by collecting large numbers of observations from a relatively large sample of participants and then employing several different methods to generate ROC curves from the same set of data. In keeping with recently advocated theoretical arguments for analyzing group data as well as observations from individual participants (e.g., Botella, Privado, Suero, Colom, & Juola, 2019; Cohen, Sanborn, & Shiffrin, 2008; Estes & Maddox, 2005; Jang, Wixted, & Huber, 2011; Malejka & Bröder, 2019), we fit models to the data for each subject in order to determine whether some of the differences reported in the literature could be due to heterogeneous abilities or strategic variations in applying control processes across participants. It is also possible that group data could mask underlying behavioral patterns, such as in extreme cases in which individual subjects might generate linear ROC functions in different parts of the probability space, but their averages produce a curved ROC resultant.

For the present study, we used three methods to generate ROC curves: (1) separate blocks of test trials in which target (old item) proportions were varied from low to high versus complementary proportions of distractors (new items), (2) the use of confidence judgments to generate a series of decision criteria, and (3) the analysis of RTs into bins analogous to confidence ratings in which fast responses are assumed to represent confident judgments and slow responses are assumed to correspond more often to guesses. If all three methods converge on a common way of measuring underlying memory search and decision processes, then the recovered ROC curves should be consistent across them. They could then be used as evidence in favor of one or another of the various models proposed for search and decision processes in human recognition memory. As it turns out these predictions were overly optimistic.

Method

Participants

The participants were 58 students and other members of the academic community at the Universidad Autónoma de Madrid. They varied in age from 18 to 28 years (\( \overline{\mathrm{X}} \) = 19.2; S.D. = 2.1), and 50 of them were females. The data from 11 subjects were excluded from the final analysis due to failure to meet an arbitrary criterion of 66% correct or more in the recognition memory test.

Materials

The words used for study and test lists included 500 common, concrete Spanish nouns selected from word lists provided by Alonso, Fernández, Díez, and Beato (2004), Callejas, Correa, Lupiáñez, and Tudela (2003), and Pérez, Acosta, Megías, and Lupiáñez (2010). The words selected for study were presented on a computer monitor in black on a white background in lowercase 18-point Consolas font. From the participants´ viewing distance of approximately 60 cm, a four-letter word subtended about one degree of horizontal visual angle. The experiment was run using E-Prime® 3 (Psychology Software Tools).

Procedure

The subjects were run individually or in small groups in a computer laboratory equipped with several small carrels. After receiving instructions and signing consent forms, they viewed a study list of 250 words presented one at a time. The study lists consisted of two different random samples taken from the original 500-word set assigned to each subject in a counterbalanced order. Each word was presented in the center of the screen for 2.5 s followed by a .5-s blank interval. The participants were asked to pronounce each word aloud as it was presented to be sure that each was attended. The words were presented in sets of 83 or 84 with short rest periods between sets.

After the last study set was presented, the subjects were given an intervention task consisting of making judgments about whether a rotated letter was in its original form or was a mirror image. The intervention task lasted for about 5 min.

The test phase consisted of 500 trials, divided into five blocks of 100 trials each and separated by short rest periods. The tests included one presentation of each of the 250 target words from the study list and one presentation of each of the 250 remaining non-studied, distractor words. The subjects were divided into two groups to receive the test blocks in different orders. Half of the subjects began with a trial block that included 15 targets and 85 distractors, and subsequent blocks contained 35 targets and 65 distractors, 50 of each, 65 targets and 35 distractors, and finally 85 targets and 15 distractors. The order of these blocks was reversed for the other group of subjects. All subjects were informed of the proportions of targets and distractors at the beginning of each trial block.

On each trial, a test word was presented in the same font as in the study phase. A trial began with the presentation of a large, black outline rectangle near the center of the screen for 500 ms. When it disappeared, the test word was shown in the center of where the rectangle had been until the subject responded. Each participant was instructed to make a positive or “yes” recognition response with one finger of the left hand on the “A” key on the computer keyboard. A negative response was to be made with a different finger of the left hand on the “D” key. Subjects were instructed to respond as rapidly as possible while being careful to be correct. When the response was made, instructions appeared on the screen to press a key with a finger of the right hand on the number pad of the keyboard. The subjects were instructed to type “1” if they were certain that their response was correct, “2” if they were relatively sure that they were correct, and “3” if they thought that they were guessing. They were encouraged to use all three numerical responses on some trials throughout the experiment. If the initial response was incorrect, the screen turned red for about 50 ms after the confidence rating was made, and it remained blank for this period following correct responses. Then the rectangle appeared to signal the presentation of the next target word.

Following the final test word, the subjects were debriefed and thanked for their participation. The entire session lasted less than one hour.

Results

The results are presented in several parts, beginning with three sections reporting individual and group analyses of ROC curves based on the proportions of hits and false alarms observed in (1) blocks of trials with different proportions of targets and distractors, (2) categories determined by confidence ratings, and (3) categories determined by RTs.

Effects of target proportions

The manipulation of the proportions of targets and distractors in separate trial blocks resulted in the expected changes in response rates. Table 1 shows the average rates of hits and false alarms across subjects for the five trial blocks in which the proportions of targets were varied from .85 to .15. As a result, the number of “yes” responses across blocks (averaging about 75, 61, 47, 35, and 21 across subjects) spanned almost the same range as the actual number of targets shown in each block (85, 65, 50, 35, and 15, respectively). Both hit and false-alarm rates increased significantly with the proportion of targets in each block [F(4,184) = 40.35, p < .001, and F(4,184) = 41.21, p < .001, respectively].

We then found d’ and c for each subject in each trial block, and subjected these values, also shown in Table 1, to ANOVAs. The average sensitivity values measured by d’ did not vary significantly across trial blocks [F(4,184) < 1.0]. However, the systematic change in the criterion, c, across conditions was significant [F(4,184) = 52.1, p < .001]. The ROC plots shown in Fig. 4 correspond to the data in the first two rows of Table 1, including the raw, binary proportions in the left panel and their conversions to z-scores in the right panel of the figure. The average d’ and c estimates across subjects from Table 1 are shown Fig. 5.

Plots of the average proportions of hits against false alarms for the data from the five trial blocks that varied in their relative proportions of targets and distractors reported in Table 1. The left panel shows the ROC function for the raw response proportion data, and the right panel shows the data for their z-score transforms. The data points in the graphs, from left to right, represent conditions with increasing proportions of targets. The fitted line and curve represent predictions of the symmetric two-high threshold model (2HTM)

Plots of d’ (left panel) and c (right panel) averaged across participants for the five trial blocks as shown in Table 1. Hash marks indicate the 95% confidence intervals. The bars in the graphs, from left to right, represent conditions with decreasing proportions of targets

Model fitting to the aggregate data for target proportion blocks

We began by first testing the aggregate data across subjects against the predictions from the standard SDT (see Fig. 1) and from the 2HTM (see Fig. 2) using a maximum-likelihood method. In all models it was assumed that the experimental manipulation (target frequency) affected only the response criteria, not the sensitivity. Two 2HTMs were fitted, under the assumption of symmetric states (Do = Dn), and again without that restriction. Two SDT models were also fitted, under the homoscedastic assumption (σ1 = σ2), and again allowing for heteroscedasticity. The fits were achieved by using the R package MPTinR (Singmann & Kellen, 2013). Since there are five experimental conditions in which the target probabilities were varied, it is assumed that: (1) in the 2HTM the parameters Do and Dn are constant across those conditions, while the parameter g can vary depending on the experimental condition; and (2) in SDT, the parameters d’ and σ are constant across the conditions, while the criteria c can vary depending on the experimental condition. Consequently, the number of parameters in the symmetrical 2HTM is equal to 6 (because Do= Dn), whereas for the asymmetrical model there are 7. Likewise, for the homoscedastic version of SDT there are 6 parameters (because σ = 1 for both target and distractor distributions), whereas for the heteroscedastic version there are 7.

The model evaluations were carried out in two steps: (1) their overall fits were quantified by the G2statistic (only models with p > 0.05 were retained), and (2) the selected model, among the four candidates, was determined using standard statistical criteria. The results are summarized in Table 2. The parameters from g1 to g5 (in the 2HTM), and c1 to c5 (in SDT), refer to experimental conditions A to E (85% targets to 15% targets), respectively. The Do and Dn values for the 2HTM-Asymmetric model refer to trials on which old or new items were detected as such with respective average probabilities of 0.373 and 0.377. In the 2HTM-Symmetric model both probabilities equal 0.375. The decreasing value of the g parameter reflects the change in probability of saying “yes” in the uncertain state across the five experimental conditions. As the p-values were higher than .05, the conclusion is that both models provide acceptable fits to the average values found here. The results for the two SDT models do not change very much, as the only difference is that the parameter σ for the target distribution was set to 1.0 in the homoscedastic model whereas it was free to vary in the heteroscedastic model. However, the estimated value (1.05) changed little in that case. As expected, the criterion values (c1 to c5) shifted from left to the right as the target frequencies decreased. The criterion values in the SDT heteroscedastic model were obtained assuming σ = 1 for the noise distribution and were calculated in terms of the noise distribution (see Macmillan & Creelman, 2005; Eq. 3.2, p. 59). As the p-values for both models were also higher than .05, they are retained; so all four models are plausible when they are fitted to the average values. However, taking as a basis both the AIC and BIC indices, the best-fitting model overall is the symmetric 2HTM. The fits to the data shown in Fig. 4 correspond to predictions of the 2HTM.

Model fitting to individual data for trial blocks with varying target proportions

As the results of model fitting for the average data in recognition memory experiments sometimes hide important individual differences (e.g., Malmberg & Xu, 2006), we also analyzed the data at the individual level. The simplest way to compare models based on SDT and thresholds of the type proposed by Bröder and Schütz (2009), is to compare the best-fitting linear models to the data of each individual. SDT predicts that the linear fits to the P(H) X P(FA) functions should be better for the z-transformed proportions, and threshold theories (such as the 2HTM) predict that the linear fits should be better for the raw hit and false alarm proportions. Therefore, each subject’s ROC data were fit by linear models for both raw proportions and their z-transforms.

In the data for trial blocks in which target proportions were varied, the data were somewhat unstable, especially in the extreme conditions in which target (or distractor) frequencies were 15 in a trial block. Similar problems have been noted by others who have relied on individual-subject data for model fitting (e.g., Bröder & Schütz, 2009; Malejka & Bröder, 2019; Yonelinas & Parks, 2007).

In the present data collected across monotonic changes in target proportions, six of the 47 subjects actually showed negative slopes in their best-fitting linear functions across the target proportion blocks, although in no cases did the proportions of false alarms exceed the proportions of hits. Also, none of the negative slopes differed significantly from zero, whereas 17 of the 41 positive slopes were significant for the raw scores and 18 were significant for the z-scores. As Bröder and Schütz (2009) and Malejka and Bröder (2019) have reported, these data are unhelpful for discriminating between pure SDT models and two-threshold models with discrete states, as the proportions of variance accounted for by linear functions were inconclusive for distinguishing between the models. In our case, 28 of 47 subjects’ data showed better linear fits over their raw proportions of hits and false alarms, and for the other 19, their z-transforms were better fit by the linear functions. These differences give a slight but non-significant advantage for the 2HTM over SDT (p = .12, by a binomial test).

The model-fitting procedure using maximum-likelihoods for individual data was the same as that used for the subject averages, and Table 3 shows the values for the 47 participants. When comparing the individual AIC values, the best-fitting model is the 2HTM-symmetric for 24 individuals (51.1%), SDT-homoscedastic for 13 individuals (27.7%), SDT-heteroscedastic for seven (14.9%), and 2HTM-asymmetric for three (6.4%). When comparing the individual BIC values, the selected model is 2HTM-symmetric for 26 individuals (55.3%), SDT-homoscedastic for 19 individuals (40.4%), and SDT-heteroscedastic for two individuals (4.3%). Although the model selection results are about the same as with the average data for both indices, there is a non-negligible number of subjects for which the selected model is not the 2HTM-symmetric, but the SDT-homoscedastic model. For most individuals the model selected with both indices is the same (38/47; 80.9%). There is insufficient evidence in these data to support further theoretical explorations for the heteroscedastic SDT or for hybrid models, such as the A-J theory, that predict asymmetric ROC curves. Although asymmetric ROC curves consistent with unequal-variance models are clearly the norm in SDT analyses of recognition memory performance (e.g., Parks & Yonelinas, 2007), there are reports supporting the symmetric view, based on z-ROC plots of slopes near 1.0 (e.g., Gronlund & Elam, 1994; Experiment 1), such as the slope of .93 found in the present study.

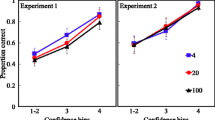

Effects of grouping responses into confidence rating bins

The confidence rating data were obtained by first combining the data from all trial blocks in which target proportions were varied. Table 4, part A shows the mean proportions of responses across all subjects and trial blocks for the six confidence categories for target and distractor trials separately. Despite instructions to use all categories about equally often in their responses, it is clear that the most responses were highly confident (59.5%) and guesses were very infrequent (6.6%). However, the instructions to match confidence to their subjective impression of accuracy was fairly successful, as “certain” responses were 81% correct overall, followed by “relatively sure” (64% correct), and “guess” was indeed an accurate description, as the accuracy level for the third response category was barely above chance (56%).

These data were then aggregated into bins beginning with the bin for the highest confidence rating for “yes” responses, then adding the data for the middle and highest confidence rating categories into a second bin, and so on until the 5th bin included all responses that were categorized as “certain yes,” “relatively sure yes,” “guess yes,” “guess no,” and “relatively sure no,” as is typically done when constructing ROC curves from confidence intervals (e.g., Van Zandt, 2000). The mean raw proportions of hits and false alarms across subjects for the confidence rating data are shown in Table 4, part B and also in Fig. 6 along with their conversions to z-scores. The lowest two rows of Table 4 show the average d’ and c values that were calculated from the individual subject parameter estimates.

Plots of the average proportions of hits against false alarms for the data from the cumulative confidence category bins reported in Table 4. The left panel shows the ROC function for the raw response proportion data, and the right panel shows the data for their z-score transforms. The data points, from left to right, represent bins of increasing size cumulated across confidence bins. The fitted curve and line represent predictions from the heteroscedastic version of signal detection theory (SDT)

The mean d’ for the data averaged across subjects was observed to be slightly higher for the confidence ratings (d’ = 1.29) than for the same data based on binary, yes-no decisions across the trial blocks with different target proportions (d’ = 1.12). Unlike the data compared across trial blocks, the values for d’ decreased consistently as more bins were added to the cumulative hit and false-alarm rates. That is, d’ was highest for the condition in which only the most-confident “yes” responses were included. Van Zandt (2000) has claimed that “…confidence is not scaled directly from perceived familiarity…” (p. 582), and Bröder and Schütz (2009) have also argued that d’ estimates in the literature can be different for binary and rating response measures. There were substantial changes in the c parameter consistent with the use of monotonically-changing decision criteria across a stable set of target and distractor memory strength distributions. Further, the data shown in Table 4 differ from those shown in Table 1 for the separate target proportion blocks. In Table 1 c can be observed to change markedly with changes in the relative prevalence of targets in the test blocks, but d’ remains relatively constant. However, across cumulative confidence bins, both c and d’ change monotonically and are clearly not independent. Grider and Malmberg (2008) have presented a detailed discussion of this issue and show that non-independence is a consequence of unequal variances in the target (signal) and distractor (noise) distributions in classical signal detection theory (Green & Swets, 1966; Macmillan & Creelman, 2005). The univariance assumption can be checked against the zROC data in that the zROC slope is equal to the ratio of the variances of the distractor and target distributions in SDT.

The ROC plots shown in Fig. 6 correspond to the data in Table 4, as do the d’ and c estimates in Fig. 7. In comparing Figs. 4 and 6 we can observe that when the theoretical familiarity distributions for targets and distractors have close to the same variance (zROC slope = .93 for the target proportion data), d’ and c are relatively independent, but the asymmetrical ROC curve in Fig. 6 (zROC slope = .79) indicates that the independence assumption is likely to be violated. Thus, we expect the positive correlation between c and d’ shown in Table 4B.

Plots of d’ (left panel) and c (right panel) averaged across participants for the confidence rating data shown in Table 4. Hash marks indicate the 95% confidence intervals. The bars in the graphs, from left to right, represent conditions with decreasing numbers of cumulated bins

Model fitting to the aggregate data across confidence levels

We again tested the aggregate data across subjects for each version of the 2HTM and the standard SDT using a maximum-likelihood method, following the same procedures as above. The results are summarized in Table 5.

On the basis of the p-values alone, the only retained model, and the one that provides the best fit to the category-rating data (as shown in Fig. 6), is the heteroscedastic version of SDT.

Model fitting to the individual data for confidence ratings

In fitting the data for the confidence rating bins, we began as before by finding the best-fitting linear functions relating the proportions of hits to proportions of false alarms for both the raw data and their z-transformations. In both cases, the linear fits were much better across individual subjects’ data for confidence ratings than they were for the target proportion conditions, as the cumulative summing of raw proportions guarantees that both false alarms and hits will increase monotonically across the confidence categories. Nevertheless, 38 of the 47 subjects showed a higher proportion of variance accounted for by linear models in the z-transforms than in the raw data, more consistent with SDT theory than threshold theory.

The ROC plots are clearly non-linear for the raw data for the confidence ratings as shown in Fig. 6. Visual inspection suggests that the confidence rating categories produced asymmetrical curves consistent with the view that the data are best fit by either a heteroscedastic SDT model (corresponding to the fits shown in Fig. 6), with the target or old item distribution having a greater variance, or by a hybrid model, in which responses not based on familiarity alone are followed by a search and possible recollection process which likely to yield a greater proportion of high-confidence “yes” responses to target words.

The maximum-likelihood procedure used was the same as that for the subject averages in the target proportion conditions, and Table 6 shows the values for the 47 participants. When comparing the individual AIC values, the selected model is SDT-heteroscedastic for 23 individuals (48.9%), SDT-homoscedastic for 15 individuals (31.9%), 2HTM-asymmetric for five (10.6%), and 2HTM-symmetric for four (8.5%). When comparing the individual BIC values, the selected model is SDT-homoscedastic for 29 individuals (61.7%), SDT-heteroscedastic for ten individuals (21.3%), 2HTM-symmetric for five (10.6%), and 2HTM-asymmetric for three (6.4%). In this case, the heteroscedastic SDT yields the best fit for the AIC measure, and the homoscedastic SDT is best using the BIC measure. Both measures indicate that SDT outperforms the 2HTM for the category rating data. For most participants the model selected with both indices was the same (32/47; 68.1%).

Despite the generally better fit obtained from SDT than from threshold models, there are reasons to be concerned about the assumption that familiarity directly determines confidence. If this were true, then the confidence rating data should be constant across conditions designed to affect response bias. Van Zandt (2000) demonstrated that the ROC curves obtained in different bias conditions are not identical. Specifically, she found that the slopes of the linear zROC functions across confidence categories were steeper for conditions in which subjects were biased to make relatively more “old” decisions. In the present study we made a similar analysis of the zROC plots across confidence ratings and replicated her finding. The zROC slopes were .832 for the combined 85% and 65% target proportion conditions, .760 for the 50% condition, and .725 for the combined 35% and 15% target proportion conditions. As Van Zandt pointed out, such results are inconsistent with a common familiarity-based continuous recognition model, and she suggested that changes in response bias associated with varying target proportions in a test list should be due to changes in the shapes of the underlying memory strength distributions in continuous recognition memory models, and not due to changes in the criterion, c alone.

Since the data for the confidence ratings were most often consistent with the heteroscedastic SDT, at least for the aggregate data, the results tend to support both unequal-variance SDT models as well as hybrid models. Following the suggestion of Yonelidas (1994; see also Malmberg, 2008) who argued that dual-process, hybrid models can provide a better fit to confidence rating ROCs than single-process models, we attempted to fit the data for confidence bins in each of the target proportion conditions using the A-J model (see Fig. 3). To do this, we first fit the model to the target proportion data for each trial block while temporarily ignoring the confidence rating data. The model has eight parameters; the mean of the target distribution (d’ = 1.0), the five guess parameters (g1 through g5) for items falling in the intermediate familiarity range when recollection fails, and r = recollection probability for target items falling in the intermediate range. The details of predictions and goodness-of-fit statistics are presented in Table 7. Notice that the model does not fit the data as well as any of the other models described in Table 2, although it provides an acceptable fit to the data overall (p = .05) as well as to the data of 36 of the 47 participants by the same criterion.

To determine how the hybrid model handles the data for confidence ratings across the target proportion blocks, we assumed that high-confidence hits could result from two sources, the proportion of the target distribution above the upper criterion, plus a proportion r of the trials in the intermediate, search region that result in successful recollections. Medium-confidence hits presumably result from unsuccessful recollection searches followed by positive responses with probability g. Similarly, high-confidence correct rejections result from the portion of the distractor distribution below the lower criterion, and medium-confidence correct rejections result from unsuccessful recollection searches followed by negative responses with probability (1 – g). Medium-confidence false alarms result from positive responses from the search area, and medium-confidence misses result from unsuccessful searches (1 – r) followed by negative responses (1 – g). Finally, the very small proportions of low-confidence responses (6.6% overall) were assumed to result from items with familiarities near the middle of the search area in which target and distractor familiarities were about the same, producing about 3.3% of guess responses each for hits, correct rejections, misses, and false alarms. The details of the model along with the estimated parameters, data, and model predictions for the confidence rating data are shown in Table 8. In the table we consider the data from the 15% and 85% target proportion blocks only, since these data sets should show the largest zROC slope discrepancies.

Since the locations of the criteria and the values of g change with each change in the target proportions across trial blocks, the number of parameters to be estimated is very large, even with the value of r fixed as well as the proportion (L) of low-confidence guesses. Nevertheless, our initial aim was to determine whether or not this version of the A-J model could anticipate Van Zandt’s (2000) findings. Therefore, for the purposes of fitting the model, we decided to put all of the effects of changes in target proportions across blocks into changes in the locations of the criteria, C0 and C1, and we chose a fixed value for the positive “guess” parameter g (= .25). When we determined the predicted hits and false alarms across confidence ratings for different target proportion conditions (as shown in Table 8), we found that the slopes of the zROC curves were .899 for the 15% target block, and .962 for the combined 85% target conditions. Note that under the general assumptions of SDT with equal standard deviations for the target and distractor distributions (1.0), the expected overall slope of the zROC linear fit should be 1.0. Thus, there is some evidence that the A-J hybrid model can predict slope differences across response-bias conditions without requiring changes in the shapes of the underlying theoretical familiarity distributions, as argued by Van Zandt. In addition, the A-J model predicted the change in d’ across cumulative responses in confidence bins as shown in Table 8, generating an expected value of d’ of 1.12 for the Y1 target bin, and .98 for the Y1 though N2 combined bins, compared with the respective observed d’ values of 1.12 and .77 for the aggregate data (note the d’ for the aggregate data, 1.29, is greater than the average d’ for each subject’s data, .99, across the confidence bins).

Effects of grouping responses into response time (RT) bins

The RT analysis began by first combining the data from all trial blocks in which target proportions were varied. Then these data were aggregated into bins by first separating all “yes” responses into one bin, and all “no” responses into another. Following this, these two response bins were further divided into thirds to identify the fastest, middle, and slowest responses. Finally, these were organized into six bins ordered along the continuum beginning with the fastest “yes” responses, the medium “yes” responses, the slowest “yes” responses, the slowest “no” responses, the medium “no” responses, and finally the fastest “no” responses. Dividing the RT continuum into bins provides an index of relative certainty analogous to the confidence ratings, under the assumption that fast responses are generally more confident than slow responses. The mean raw proportions of hits and false alarms across subjects for the RT data are shown in Table 9, and also in Fig. 8 along with their conversions to z-scores.

Plots of the average proportions of hits against false alarms for the data from the cumulative response time bins reported in Table 9. The left panel shows the ROC function for the raw response proportion data, and the right panel shows the data for their z-score transforms. The data points, from left to right, represent bins of increasing size cumulated across response time (RT) bins. The fitted curve and line represent predictions from the homoscedastic version of signal detection theory (SDT)

The mean d’ for the data averaged across subjects was observed to be somewhat less for the RT bins (d’ = 1.09) than for the confidence rating bins (d’ = 1.29), and it was about the same as that for the data separated into blocks based on target proportions (d’ = 1.12). However, unlike the data for blocks in which the target proportions were varied, as well as for the data sorted into confidence bins, the values for d’ were highest for the bins that included all of the positive responses and decreased in either direction from that point. There were very substantial changes in the c parameter consistent with the use of monotonically-changing decision criteria across a stable set of target and distractor memory strength distributions. The ROC plots shown in Fig. 8 correspond to the data in Table 9, as do the d’ and c estimates shown in Fig. 9.

Plots of d’ (left panel) and c (right panel) averaged across participants for the response time (RT) data shown in Table 9. Hash marks indicate the 95% confidence intervals. The bars in the graphs, from left to right, represent conditions with decreasing numbers of cumulated RT bins

Model fitting for the aggregate data for RT bins

We again tested the aggregate data across subjects for the 2HTM and the standard SDT using a maximum-likelihood method, following the same procedures as above. The results are summarized in Table 10. In this case, none of the four models is retained as they all show significant departures from acceptable fits to the data. In addition, we did not attempt to fit any hybrid models, such as the A-J theory, as the fits would be unlikely to be better than the simpler models with fewer parameters, and the RT bins would include complex, perhaps intractable, mixtures of responses from different familiarity, recollection, and guess contributors.

Model fitting to the individual data for RT bins

The procedure used was the same as that for the subject averages in the previous conditions. In fitting the data for the RT-binning procedures, we began as before by finding the best-fitting linear regressions of proportions of hits against proportions of false alarms for both the raw data and their z-transformations. In both cases, the linear fits were much better for individual subjects’ data across the RT bins than for the target proportion conditions, as again, the cumulative summing of raw proportions guarantees both false alarms and hits will increase monotonically across the RT bins. For the RT data, all 47 subjects showed better linear fits for their z-scores than for the raw scores. These results led us to reject the unmodified 2HTM model for the RT data. The ROC plots are clearly non-linear for the raw data RT bins, as shown in Fig. 8. Visual inspection suggests that the ROC plot for the raw data based on binning by RT produced a fairly symmetrical ROC curve at least superficially consistent with the homoscedastic SDT model. In support of this conclusion, the zROC slope was .91, in close agreement with the value of .93 found for the data from the separate target proportion blocks. Therefore, we chose to illustrate the fit to the ROC curves in Fig. 8 with the homoscedastic version of the SDT, as it also gave the best fit overall to the data for individual subjects by the BIC measure, as indicated in Table 12.

The model-fitting procedure using the maximum-likelihood procedure for individual data was the same as that used for the subject averages, and Table 11 shows the values for the 47 participants. When comparing the individual AIC values, the selected model is SDT-heteroscedastic for 29 individuals (61.7%) and SDT-homoscedastic for 18 individuals (31.3%). When comparing the individual BIC values, the selected model is SDT-homoscedastic for 31 individuals (66%) and SDT-heteroscedastic for 16 individuals (34%). In this case, the heteroscedastic SDT yields the best fit for the AIC measure, and the homoscedastic SDT is best using the BIC measure. Both measures indicate that SDT outperforms the 2HTM for fitting the RT data for all subjects. For most participants the model selected with both indices was the same (32/47; 68.1%).

Summary statistics for the data sorted into target proportion trial blocks, confidence rating bins, and RT bins

A summary of the model-fitting statistics for all three models is presented in Table 12. Also included are the best-fitting models for the aggregate data (indicated by *).

Discussion

The use of ROC curves to analyze and interpret the data from recognition memory studies has had a long and varied history (e.g., Kellen & Klauer, 2015; Kellen, Klauer, & Bröder, 2013; Malejka & Bröder, 2019; Wixted, 2007; Yonelinas & Parks, 2007). If recognition memory were a unitary process, and several different methods employed to measure it yielded the same general pattern of results, then the choice among models for search and decision processes in recognition memory would converge on a single best interpretation. As it stands, we are left with ambiguous evidence for several alternatives. Recognition could be based upon a single strength continuum with a single response criterion and yield smooth, symmetrical ROC curves above the main diagonal with endpoints at (0.0) and (1.1) (e.g., Dube, Starns, Rotello, & Ratcliff, 2012). Alternatively, recognition could be based on one or two (or more) thresholds delineating discrete states of knowledge about the likelihood that a test word is a target (e.g., Province & Rouder, 2012). Responses generated from such states, without additional assumptions (such as defining response-mapping processes from discrete states that would add additional parameters; e.g., Bröder, Kellen, Schütz, & Rohmeier, 2013; Chen, Starns, & Rotello, 2015), should yield linear ROC functions or functions with separate linear components. Finally, hybrid models based on the presumption that decisions can be based either on the test word’s familiarity or on a search of episodic memory for recollectible information predict that the ROC function should be curved, but perhaps highly asymmetric. It is also possible that ROC curves have lost their utility as a diagnostic tool for theory testing (as suggested by Dube, et al., 2012; Malejka & Bröder, 2019; McAdoo, et al., 2019; Malmberg, 2008; and others).

The present study used a single data set, collected from 47 subjects over five 100-trial blocks, in which subjects’ memories were tested for 250 previously-studied words among 250 distractors. The relevant variables were the relative proportions of targets in each trial block (varied from 15% to 85%), subjects’ RTs when asked to respond as quickly as possible while avoiding errors, and their subsequent confidence judgments about the correctness of their speeded responses. All three methods of measuring recognition memory produced unique aspects in the ROC functions.

The manipulation of relative target frequency resulted in predictable results, as the response criterion changed monotonically with the proportion of targets in each block. The subjects used a relatively liberal criterion when targets were frequent and a stricter criterion when targets were rare, closely correlating their overall proportion of “old” responses to the actual target proportions. The resulting ROC curve for the overall data (Fig. 4) was clearly better fit by a linear function than by a curved line, and this conclusion was supported by most but not all of the subjects in different tests applied to individual data. Similar results have been reported by Bröder and Schütz (2009) and others, although curvilinear ROC curves have also been found by researchers using similar methods (e.g., Dube & Rotello, 2012; Dube, et al., 2012; but see the rejoinder by Bröder & Malejka, 2017). The present results showed changes in the response criterion, c, with changes in target probability in the test lists, but the sensitivity parameter d’ was constant across the target frequency manipulation. The inconsistencies observed among individual subjects in our own data, and present also in other studies, render the distinction between discrete-state, threshold theories and continuous signal-detection theories inconclusive.

An examination of the data across confidence judgments yields a different picture. The overall data clearly are better fit by a curved ROC function (Fig. 6), a conclusion supported by the analysis of individual ROC functions. In these data, 38 out of 47 subjects produced ROC functions better fit by SDT than by threshold theory. However, it should be noted that threshold models can predict curved ROC functions with additional assumptions about how the response stage is mapped onto the internal states (e.g., Bröder & Schütz; 2009; Chen, et al., 2015; Kellen, Singmann, Vogt, & Klauer, 2015; Malmberg, 2002). Finally, the analysis across bins determined by RTs yielded data that more closely replicate the traditional SDT findings: smooth, almost symmetrical ROC curves (Fig. 8) that were more consistent than the linear predictions of threshold theory for all 47 subjects. However, these conclusions must be tempered by the relatively poorer fit for all considered models for the RT data, perhaps due to the increased variability of the d’ estimates across cumulative RT bins. In this case, d’ was neither independent of the decision criterion, c, nor was it monotonically correlated with c, as it was for the data portioned into confidence bins. Rather, d’ was greatest at the midpoint of the ROC curve, consistent with the “elbow” observed in other derivations of ROC curves from RT data (see Luce, 1986, for a review of such results).

So what can be made of these inconsistencies? A casual inspection of the data indicates that we might be dealing with an artifact. The larger the range of P(H) and P(FA) values, the more curved the data appear. Is it possible that the target proportion manipulation was too weak to induce subjects to change their response criteria sufficiently to demonstrate the extreme values of the ROC function (a point also raised by Dube & Rotello, 2012, and Malmberg & Xu, 2006)? Over any restricted range an arc can be approximated by a straight line. When one looks at the confidence rating data, it can be seen that the ROC curve begins to show its curvilinear tendencies only when the data venture into the upper-right corner of high hit and false-alarm rates. Similarly, the most clearly curved function is that determined by binning the data into RT categories which samples nearly the entire range of hit and false-alarm rates.

This tempting analysis is unfortunately too simple, as there are other differences among the results of the three response measures. The most obvious is that d’ is generally higher when measured by confidence ratings than across target proportion manipulations and RT measures (see also Gardner, Macfee & Krinsky, 1975), and it is clearly correlated with c in this case. Further, d’ assumed its maximum value for the most certain “yes” category, relative to those that include “probables” and guesses. In contrast, d’ is not related in a regular way with c in the RT binning procedure. Rather, d’ seems to be at a maximum at the point that divides “yes” and “no” responses, and trails off in either direction as positive or negative responses are made more rapidly. A simple explanation for these results could be that RTs are heavily influenced by familiarity alone, as high or low familiarities lead to fast responses, whereas attempts at recollection for intermediate values produce slower responses (Malmberg, 2008). Still, successful recollection can also lead to highly confident, if slower, responses producing discrepancies between the ROC curves determined from confidence ratings and RTs. The confidence rating data support models that predict asymmetric ROC curves, like the heteroscedastic version of SDT or hybrid SDT and recollection models, like the A-J theory. A similar conclusion has been advocated by Van Zandt (2000) who argued that “…equating confidence with perceived familiarity or likelihood oversimplifies how people estimate confidence” (p. 595). Although some have argued that RTs and confidence ratings measure approximately the same thing, others have shown both theoretically (e.g., Thomas & Myers, 1972) and empirically (e.g., Weidemann & Kahana, 2016) that the overall similarities cannot hide the fact that d’ is usually higher after partitioning data into confidence categories than into RT bins. Further, there are idiosyncrasies associated with ROC curves derived from RT binning procedures that can also affect model-fitting (see, e.g., Luce, 1986, pp. 227-8).

Despite some similarities in the ROC functions determined by confidence rating and RT binning procedures, the differences in the d’ and c parameters across the two measurement methods indicate that there is not a direct relationship between confidence and RT. In fact, when correlations were calculated between confidence ratings and RTs for the data from individual subjects in the present study, the average correlation was .281 (exceeding the value of .074 which would be significant at the .05 level for 500 pairs of observations), and the r value was significant for 41 of the 47 participants. Still these correlations are low enough that it is obvious that not all rapid responses are confident, and some confident responses result from slow processes. The prediction from hybrid theories that allow for slower, recollection processes to produce highly-confident responses, especially for old items, could result in the “elbow” in the ROC function for the RT binning procedure representing relatively higher d’ values for slower responses than for faster ones.

It should be pointed out that in the context of the A-J model, we presume that familiarity can lead to faster responses, on average, than recollection-based responses (Malmberg, 2008; see especially his application of Shiffrin & Steyvers’ (1997) REM model to RT data). This interpretation is also consistent with the “butcher on the bus” anecdote provided by Mandler (1980), in which, when confronted by someone we have seen before, but in a new context (e.g., on a bus) we might first experience a feeling of familiarity, subsequently followed by retrieval of contextual information (a supermarket), and finally recollection of a specific memory (he’s the butcher at the supermarket). However, there are other models that presume that the two processes occur in parallel, and that in at least in some cases, recollections might operate before familiarity drives a response (Brainerd, Nakamura, & Lee, 2019; Kellen, Klauer, & Bröder, 2013; Yonelinas, 1997).

In the present case, we believe that high and low familiarities can lead to rapid responses that are usually correct, and slower responses for intermediate familiarities result from attempts at test item recollections that are successful only on a minority of (target) trials. Still, when recollection fails, and unlike the discrete-state threshold models, the A-J model allows for familiarity to guide decisions even in the uncertain region between the two criteria of the model. Only for a very small proportion of trials (about 6.6% in the present case) do subjects seem to guess in a truly random way when both familiarity and recollection fail to discriminate targets from distractors.

We are therefore left with the conclusion that different methods of measuring recognition memory can produce different results, but these differences are not beyond reasonable theoretical interpretation. Fast responses seem to represent a continuous underlying representation of memory strength, and more reasoned responses, such as later indications of confidence, can access supporting evidence from recollections of episodic information stored during the study period. Methods that encourage subjects to produce responses across the range of possible outputs seem to capture a better picture of recognition memory than those based on more constricted domains. Further, analyses of individual participants’ data indicate that different strategies or abilities can be obscured by model fitting that examines only aggregate data. It is also likely that participants choose to engage different control processes in order to deal efficiently with variations in task demands and testing conditions (Malmberg, 2008; Malmberg, Raaijmakers, & Shiffrin, 2019). In this regard, it could be argued that: (1) the target proportion manipulation across trials blocks produces a global strategy affecting mainly response bias, (2) the instructions to respond rapidly emphasizes the role of familiarity in decision-making, and (3) the instructions to add a confidence rating after the speeded response leads to a relatively larger role for recollection in the confidence rating data. If these task variables indeed induce the application of control processes in different stages of the search and decision processes in recognition memory, then it is not surprising that the data tell different stories about behavioral components reflected in the ROC curves generated by the different testing manipulations.

In conclusion, the A-S theory of memory and its extension by the A-J model and other hybrid models involving combinations of SDT and recollective search processes seem flexible enough to remain viable explanations of search and decision processes in human memory (Klauer & Kellen, 2015). The present demonstration that such models can predict the effects of varying target proportions in a test list on the slopes of the zROC curves determined from confidence ratings, and the way in which d’ changes across confidence levels, are cases in point. That is not to say that alternative models based on random walks, evidence accumulators, diffusion processes, or multinomial processing trees (e.g., Dube, et al., 2012; Province & Rouder, 2012; Van Zandt, 2000) might ultimately be more successful in accounting for some specific details of recognition memory data, at least at the level of measurement or pragmatic models (Bröder, et al., 2013; Malmberg, 2008). This level of successful data-fitting can exist even if most measurement models lack the elegance and generality of theories of memory storage, retrieval, and decision processes such as the epistemic process models central to the work of Atkinson, Shiffrin, and their colleagues (Atkinson & Shiffrin, 1968; 1971; Atkinson & Juola, 1973, 1974; Shiffrin & Steyvers, 1997; Cox & Shiffrin, 2017).

Author Note

JFJ acknowledges support from a research fellowship (2016-T3/SOC-1544) provided by the Community of Madrid, Spain, while the present research was carried out. We are grateful for the useful comments provided by Arndt Bröder, Daniel Heck, Kenneth Malmberg, John Wixted, Andrew Yonelinas, and an anonymous reviewer on an earlier version of this paper. A complete data file for the present research and its description are available on the following link: https://osf.io/y78mk/

References

Alonso, M. A., Fernández, A., Díez, E., & Beato, M. S. (2004). Índices de producción de falso recuerdo y falso reconocimiento para 55 listas de palabras en castellano. Psicothema, 16, 357-362.

Annis, J., Lenes, J. G., Westfall, H. A., Criss, A. H., & Malmberg, K. J. (2015). The list-length effect does not discriminate between models of recognition memory. Journal of Memory and Language, 85, 27-41.

Atkinson, R. C., & Juola, J. F. (1973). Factors influencing speed and accuracy of word recognition. In S. Kornblum (Ed.), Attention and Performance IV. New York: Academic Press.

Atkinson, R. C., & Juola, J. F. (1974). Search and decision processes in recognition memory. In D. Krantz, R. Atkinson, R. Luce, and P. Suppes (Eds.). Contemporary Developments in Mathematical Psychology, Vol. 1, San Francisco: W.H. Freeman.

Atkinson, R. C., & Shiffrin, R. M. (1968). Human memory: A proposed system and its control processes. In K. W. Spence & J. T. Spence (Eds.), The Psychology of Learning and Motivation: Advances in Research and Theory (Vol. 2). New York: Academic Press.

Atkinson, R. C., & Shiffrin, R. M. (1971). The control of short-term memory. Scientific American, 225, 82-90. doi:https://doi.org/10.1038/scientificamerican0871-82 .

Banks, W. P. (1970). Signal detection theory and human memory. Psychological Bulletin, 14, 81-99.

Botella, J., Privado, J., Suero, M., Colom, R., & Juola, J. F. (2019). Group analyses can hide heterogeneity effects when searching for a general model: Evidence based on a conflict monitoring task. Acta Psychologica, 193, 171-179.

Brainerd, C. J., Nakamura, K., & Lee, W.-F., (2019). Recollection is fast and slow. Journal of Experimental Psychology: Learning Memory and Cognition.

Bröder, A., Kellen, D., Schütz, J., & Rohmeier, C. (2013). Validating a two-high-threshold measurement model for confidence rating data in recognition. Memory, 21, 916-944.

Bröder, A. & Malejka, S. (2017). On a problematic procedure to manipulate response biases in recognition experiments: The case of “implied” base rates. Memory, 25, 736-743.

Bröder, A. & Schütz, J. (2009). Recognition ROCs are curvilinear – or are they? On premature arguments against the two-high-threshold model of recognition. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35, 587-606.

Callejas, A., Correa, A., Lupiáñez, J., & Tudela, P. (2003). Normas asociativas Intracategoriales para 612 Palabras de Seis Categorías Semánticas en Español. Psicológica, 24, 185-214.

Chen, T., Starns, J. J., & Rotello, C. M. (2015). A Violation of the conditional independence assumption in the two-high-threshold model of recognition memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41, 1205-1225.

Cohen, A. L., Sanborn, A. N., & Shiffrin, R. M. (2008). Model evaluation using grouped or individual data. Psychonomic Bulletin & Review, 15(4), 692-712.

Cox, G. E., & Shiffrin, R. M. (2017). A dynamic approach to recognition memory. Psychological Review, 124, 795-860.

Dube, C. & Rotello, C. M. (2012). Binary ROCs in perception and recognition memory are curved. Journal of Experimental Psychology: Learning, Memory, and Cognition, 18, 130-151.

Dube, C., Starns, J. J., Rotello, C. M., & Ratcliff, R. (2012). Beyond ROC curvature: Strength and response time data support continuous-evidence models of recognition memory. Journal of Memory and Language, 67, 389-406.

Ebbinghaus, H. (1885). Über das Gedächtnis: Intersuchngen zur Experimentellen Psychologie. Liepzig: Dunker and Humboldt. (Translated by H. A. Ruger & C. E. Bussenius, 1913, and reissued by Dover Publications, 1964.)

Egan, J. P. (1958). Recognition memory and the operating characteristic (Tech. Note AFCAC-TN-58-51). Bloomington: Indiana University, Hearing and Communication Laboratory.

Estes, W. K., & Maddox, W. T. (2005). Risks of drawing inferences about cognitive processes from model fits to individual versus average performance. Psychonomic Bulletin & Review, 12(3), 403-408.

Fechner, G. T. (1966). Elements of Psychophysics (Vol. 1.). Translated by H. E., Adler, D. H. Howes & E. G. Boring. New York: Holt, Rinehart, & Winston. (Original work published in 1860.)

Gardner, R. M., Macfee, M., & Krinsky, R. (1975). A comparison of binary and rating techniques in the signal detection analysis of recognition memory. Acta Psychologica, 39, 13-19.

Green, D. M., & Swets, J. A. (1966). Signal Detection Theory and Psychophysics. New York: Wiley.

Grider, R. C., & Malmberg, K. J., (2008). Discriminating between changes in bias and changes in accuracy for recognition memory of emotional stimuli. Memory & Cognition, 36, 933-946.

Gronlund, S. D., & Elam, L. E. (1994). List-length effect: Recognition accuracy and variance of underlying distributions. Journal of Experimental Psychology: Learning, Memory, and Cognition. 20, 1355-1369.

Jang, Y., Wixted, J. T., & Huber, D. E. (2011). The diagnosticity of individual data for model selection: Comparing signal-detection models of recognition memory. Psychonomic Bulletin & Review, 18, 751-757.

Juola, J. F., Fischler, I., Wood, C. T., & Atkinson, R. C. (1971). Recognition time for information stored in long-term memory. Perception & Psychophysics, 10, 8-14.

Kellen, D. & Klauer, K. C. (2015). Signal detection and threshold modeling of confidence-rating ROCs: A critical test with minimal assumptions. Psychological Review, 122, 542-557. doi:https://doi.org/10.1037/a0039251.

Kellen, D., Klauer, K. C., & Bröder, A. (2013). Recognition memory models and binary-response ROCs: A comparison by minimum description length. Psychonomic Bulletin and Review, 20, 693-719.

Kellen, D., Singmann, H., Vogt, J., & Klauer, K. C. (2015). Further evidence for discrete-state mediation in recognition memory. Experimental Psychology, 62, 40-53.

Kintsch, W. (1967). Memory and decision aspects of recognition learning. Psychological Review, 74, 496-504.

Klauer, K. C., & Kellen, D. (2015). The flexibility of models of recognition memory: The case of confidence ratings. Journal of Mathematical Psychology, 67, 8-25.

Luce, R. D. (1986). Response Times. New York: Oxford University Press.

Macmillan, N. A., & Creelman, C. D. (2005). Detection Theory: A User’s Guide (2nd). New York: Psychological Press.

Malejka, S. & Bröder, A. (2019). Exploring the shape of signal-detection distributions in individual recognition ROC data. Journal of Memory and Language, 104, 83-107.

Malmberg, K. J. (2002). On the form of ROCs constructed from confidence ratings. Journal of Experimental Psychology: Learning, Memory, and Cognition, 28, 380-387.

Malmberg, K. J. (2008). Recognition memory: A review of the critical findings and an integrated theory for relating them. Cognitive Psychology, 57, 335-384.

Malmberg, K. J., Raaijmakers, J. G. W., & Shiffrin, R. M. (2019). 50 years of research sparked by Aykinson and Shiffrin (1968). Memory & Cognition.

Malmberg, K. J. & Xu, J. (2006). The influence of averaging and noisy decision strategies on the recognition memory ROC. Psychonomic Bulletin & Review, 13, 99-105.

Mandler, G. (1980). Recognizing: The judgment of previous occurrence. Psychological Review, 87, 252-271.

McAdoo, R. M., Key, K. N., & Gronlund, S. D. (2019). Task effects determine whether recognition memory is mediated discretely or continuously. Memory & Cognition.

Parks, C. M. & Yonelinas, A. P. (2007). Moving beyond pure signal-detection models: Comment on Wixted (2007). Psychological Review, 114, 188-202.

Parks, T.E. (1966). Signal-detectability theory of recognition-memory performance. Psychological Review, 73, 44-58.

Pérez Dueñas, C., Acosta, A., Megías, J. L., & Lupiáñez, J. (2010). Evaluación de las dimensiones de valencia: Activación, frecuencia subjetiva de uso y relevancia para la ansiedad. La depresión y la ira de 238 sustantivos en una muestra universitaria. Psicológica, 31, 241-273.

Province, J. M., & Rouder, J. N. (2012). Proceedings of the National Academy of Sciences of the United States of America, 109, 14357-14362.

Shiffrin, R. M., & Steyvers, M. (1997). A model for recognition memory: REM – retrieving effectively from memory. Psychological Bulletin & Review, 4, 145-166.

Singmann, H., & Kellen, D. (2013). MPTinR: Analysis of Multinomial Processing Tree models with R. Behavior Research Methods, 45, 560–575.

Snodgrass, J. G., & Corwin, J. (1988). Pragmatics of measuring recognition memory: Applications to dementia and amnesia. Journal of Experimental Psychology: General, 117, 34-50.

Sternberg, S. (1966). High-speed scanning in human memory. Science, 153, 652-654.

Sternberg, S. (2016). In defence of high-speed memory scanning. Quarterly Journal of Experimental Psychology, 69, 10, 2020-2075. https://doi.org/10.1080/17470218.2016.1198820.

Swets, J.A., Dawes, R.M., & Monahan, J.M. (2002). Psychological science can improve diagnostic decisions. Psychological Science in the Public Interest, 1, 1-24.

Thomas, E. A. C. & Myers, J. L. (1972). Implications of listing data for threshold and nonthreshold models of signal detection. Journal of Mathematical Psychology, 9, 253-285.

Tulving, E. (1972). Episodic and semantic memory. In E. Tulving & W. Donaldson (Eds.), Organization of Memory. New York: Academic Press.

Van Zandt, T. (2000). ROC curves and confidence judgments in recognition memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 26(3), 582-600.

Weidemann, C. T. & Kahana, M. J. (2016). Assessing recognition memory using confidence ratings and response times. Royal Society Open Science. 3: 150670. https://doi.org/10.1098/rsos.150670.

Wickens, T. D. (2002). Elementary Signal Detection Theory. New York: Oxford University Press.

Wixted, J. T. (2007). Dual-process theory and signal detection theory of recognition memory. Psychological Review, 114, 152-176.

Yonelinas, A. P. (1994). Receiver-operating characteristics in recognition memory: Evidence for a dual-process model. Journal of Experimental Psychology: Learning. Memory. and Cognition, 20, 1341-1354.

Yonelinas, A. P. (1997). Recognition memory ROCs for item and associative information: The contribution of recollection and familiarity. Memory & Cognition, 25, 747-763.