Abstract

When retrieving verbal information from memory, people look at spatial locations that were associated with visual stimuli during encoding, even when the visual stimuli are no longer present. It has been shown that such “eye movements to nothing” can influence retrieval performance for verbal information, but the mechanism underlying this functional relationship is unclear. In particular, covert rather than overt shifts of attention could be sufficient to elicit the observed differences in retrieval performance. To test whether covert shifts of attention explain the functional role of the looking-at-nothing phenomenon, we asked participants to remember verbal information that had been associated with a spatial location during an encoding phase. Additionally, during the retrieval phase, all participants solved an unrelated visual tracking task that appeared in either an associated (congruent) or an incongruent spatial location. Half the participants were instructed to look at the tracking task, half to shift their attention covertly (while keeping the eyes fixed). In two experiments, we found that memory retrieval depended on the location to which participants shifted their attention covertly. Thus, covert shifts of attention seem to be sufficient to cause differences in retrieval performance. The results extend the literature on the relationship between visuospatial attention, eye movements, and verbal memory retrieval and provide new insights into the nature of the looking-at-nothing phenomenon.


People look at empty spatial locations where information was presented during encoding when retrieving information that is associated with this location. For instance, Richardson and colleagues (Hoover & Richardson, 2008; Richardson & Kirkham, 2004; Richardson & Spivey, 2000) showed that when participants were questioned about a verbal statement, they gazed back at a spatial location on a computer screen that was associated with the verbal information during encoding, even when the screen was blank. This so-called looking-at-nothing phenomenon has also been shown to occur in language processing (Altmann, 2004), visual mental imagery (Brandt & Stark, 1997; Johansson, Holsanova, & Holmqvist, 2006; Martarelli & Mast, 2011; Spivey & Geng, 2001), categorization (Martarelli, Chiquet, Laeng, & Mast, 2017; Scholz, von Helversen, & Rieskamp, 2015), reasoning (Jahn & Braatz, 2014; Scholz, Krems, & Jahn, 2017), and decision-making (Platzer, Bröder, & Heck, 2014; Renkewitz & Jahn, 2012).

Recent research has investigated if and under what circumstances eye movements have a functional role in memory retrieval (Bochynska & Laeng, 2015; Johansson, Holsanova, Dewhurst, & Holmqvist, 2012; Johansson & Johansson, 2014; Laeng, Bloem, D’Ascenzo, & Tommasi, 2014; Laeng & Teodorescu, 2002; Martarelli & Mast, 2013; Scholz, Mehlhorn, & Krems, 2016; Staudte & Altmann, 2016; Wantz, Martarelli, Cazzoli, et al., 2016; Wantz, Martarelli & Mast, 2016). Specifically, does looking at a spatial location that is (almost) blank aid the retrieval of information associated with this location? To test the functional role of eye movements during memory retrieval, Scholz et al. (2016) aurally presented participants with sentences describing four fictional cities. While they listened to each sentence, a symbol appeared in one of four spatial areas on a computer screen. Thus, each sentence was associated with one of four spatial locations. During the subsequent retrieval phase, participants were presented with a statement about one of the previously heard sentences and had to make a true/false judgment (similar to Richardson & Spivey, 2000). In some trials, additionally, a spatial cue appeared either in the spatial area associated with the to-be-retrieved information (congruent location) or in one of the other locations (incongruent locations). In trials in which no spatial cue appeared, participants looked at the empty spatial locations associated with the retrieved information, replicating previous results on the looking-at-nothing behavior. This behavior was more pronounced for correct than for wrong responses, an early indication of a functional link between eye movements and memory retrieval (see also Martarelli et al., 2017; Martarelli & Mast, 2011). More importantly, the gaze manipulation through the spatial cue revealed higher retrieval accuracy in congruent compared to incongruent trials in which gaze was guided away from associated spatial locations. 
The higher accuracy can be interpreted as resulting from an overlap between processes engaged in encoding and retrieving information stored in an episodic memory trace. Reenacting processes present during encoding facilitates memory by eliciting the execution of eye movements, which then increases memory activation for the desired information. Also in line with this explanation, disrupting the reenactment process can impair memory retrieval (Scholz et al., 2016).

Whereas Johansson et al. (2012) and Laeng et al. (2014; see also Bochynska & Laeng, 2015; Johansson & Johansson, 2014; Laeng & Teodorescu, 2002; Wantz, Martarelli, Cazzoli, et al., 2016) came up with similar results, others did not find such strong effects, thereby calling into question the functional relationship between eye movements and memory retrieval (Martarelli & Mast, 2013; Staudte & Altmann, 2016; Wantz, Martarelli, & Mast, 2016). For instance, in a study by Staudte and Altmann (2016), participants learned sequences of letters, each presented sequentially at a distinct location in a grid. The authors tested the recognition of either the sequence of locations at which the letters occurred or the sequence of letters. In some blocks of trials participants were allowed to gaze freely (free viewing condition), and in other blocks they had to fixate the center of the screen (fixed viewing condition). Fixed viewing impaired only location and not letter recall, and only when participants had to detect an error in the location sequence. In a study by Wantz, Martarelli, and Mast (2016), participants had to encode 24 visual objects in a mental-imagery task and were asked about these objects in subsequent retrieval trials. In some of the retrieval trials participants had to keep their eyes on a congruent spatial location (indicated by a red frame surrounding one quarter of a computer screen) and in other trials on an incongruent spatial location. The authors found no effect of this manipulation on retrieval accuracy.

An alternative explanation that may account for these differing results is that it is not eye movements per se that cause differences in retrieval performance. Instead, covert shifts of attention could explain the functional relationship between eye movements and memory retrieval in the looking-at-nothing paradigm. Why would this be? For one, in previous studies that did not find a functional effect of eye movements on retrieval accuracy, participants may have adhered closely to the eye-movement instruction but were still able to shift their attention covertly to the associated spatial location, thereby facilitating memory retrieval of the desired information. For instance, Wantz, Martarelli, and Mast (2016) instructed participants to gaze freely but within one whole quadrant surrounded by a red frame. Participants may have covertly shifted attention to a neighboring spatial location in this study. In contrast, Laeng and Teodorescu (2002) instructed participants to fixate the center of the screen during retrieval, which may have prevented facilitation by covert shifts of attention toward the empty spatial locations during retrieval.

Previous research has already discussed that shifts of attention might play a central role in explaining the looking-at-nothing phenomenon (Brandt & Stark, 1997; Ferreira, Apel, & Henderson, 2008; Johansson et al., 2012; Laeng et al., 2014; Laeng & Teodorescu, 2002; Richardson, Altmann, Spivey, & Hoover, 2009; Scholz et al., 2016; Wantz, Martarelli, Cazzoli, et al., 2016). Empirical results emphasizing the role of shifts of attention in executing eye movements to nothing during memory retrieval have been found by Richardson and Spivey (2000) and Johansson et al. (2012). For instance, Richardson and Spivey showed that looking at nothing occurred even when participants had to fixate the center of the screen during encoding. That is, they were not allowed to perform an eye movement during encoding. Still, during the retrieval phase, participants looked back to spatial locations that were associated with the retrieved information during encoding. These results might be explained by covert shifts of attention taking place during encoding, when participants associate spatial information with memory content. During retrieval, eye movements to nothing reflect the internal shifts of attention between the stored memory representations.

Additionally, a large body of literature shows a tight link between eye movements, attention, and working memory (Abrahamse, Majerus, Fias, & van Dijck, 2015; Belopolsky & Theeuwes, 2009; Huettig, Olivers, & Hartsuiker, 2011; Theeuwes, Belopolsky, & Olivers, 2009). Covert shifts of attention usually precede eye movements (e.g., Deubel & Schneider, 1996; Hoffman & Subramaniam, 1995; Kowler, Anderson, Dosher, & Blaser, 1995; Rizzolatti, Riggio, & Sheliga, 1987). Shifts of attention can occur within mental representations held in working memory (Griffin & Nobre, 2003; Lepsien, Griffin, Devlin, & Nobre, 2005; Olivers, Meijer, & Theeuwes, 2006; Theeuwes, Kramer, & Irwin, 2011), and they can function as a rehearsal mechanism that allows information to be maintained in working memory (Awh, Jonides, & Reuter-Lorenz, 1998; Godijn & Theeuwes, 2012; Postle, Idzikowski, Sala, Logie, & Baddeley, 2006; Smyth, 1996; Smyth & Scholey, 1994). Taking these results together, we assume that covert shifts of attention can be the mechanism that not only leads to the looking-at-nothing phenomenon but additionally underlies the functional role of eye movements to nothing.

In all previous studies testing the functional role of the looking-at-nothing phenomenon, covert shifts of attention were not controlled for independently of overt shifts of attention, that is, attention shifts that include an eye movement. However, manipulating whether covert or overt shifts of attention are performed during retrieval is needed to separate the contributions of overt and covert shifts of attention to the functionality of the looking-at-nothing phenomenon. A successful manipulation to study covert shifts of attention independent of overt eye movements was applied in a study testing insight problem-solving by Thomas and Lleras (2009). The authors presented participants with a tracking task in which they had to react to digits presented at different screen locations. They tested participants under attention-shift as well as eye-movement instructions. Assessing digit identification accuracy (DIA) and digit response times of the tracking task allowed them to determine whether participants adhered to the eye-movement and attention-shift instructions. In their study, participants performed equally in the two instruction conditions. They concluded that attention shifts appeared to be sufficient to guide insight problem-solving. In a similar vein, research on so-called retro-cues has shown that covert shifts of attention between items held in memory can be induced by presenting a spatial cue even after the encoding phase (for an overview, see Souza & Oberauer, 2016).

To test if covert shifts of attention can account for the functional relationship between eye movements and memory retrieval, we conducted two eye-tracking experiments. Similar to Scholz et al.’s (2016) study, participants listened to four sentences in each trial. Each sentence was associated with one of four spatial locations of a gray 2 × 2 matrix on a computer screen. In a retrieval phase, participants judged a statement about one of the previously heard sentences to be true or false. Simultaneously, participants were asked to solve a tracking task in which they were instructed either to look at (in the eye-movement group) or to shift their attention covertly to (in the attention-shift group) a spatial location associated with retrieval-relevant information (congruent condition) or away from it (incongruent conditions), which allowed us to assess whether the gaze instruction was successful.

Given previous findings on the congruent/incongruent manipulation of eye movements during memory retrieval (e.g., Johansson & Johansson, 2014; Scholz et al., 2016), we assumed that response accuracy would be higher when participants were guided toward the location where information was presented during encoding (congruent condition). When participants were guided away from the location associated with the retrieved information, we expected to find lower response accuracy (incongruent conditions).

Furthermore, we assumed that overt eye movements would not have an advantage over covert attention shifts even for retrieving information from memory that was associated with a visual location during a preceding encoding phase. Thus, we expected that response accuracy would not differ between the attention-shift and eye-movement groups.

Experiment 1

Method

Participants

Data collection took place in two waves. In the first wave, 32 students (26 female, 6 male, M age = 24.7 years, range: 20–32 years) and in the second wave, 39 students (27 female, 12 male, M age = 21.7 years, range: 18–35 years) at the Chemnitz University of Technology participated in the experiment for course credit. All participants were native German speakers and had normal or corrected-to-normal vision.

Apparatus

Participants were seated 600 mm in front of a 22-inch computer screen (1,680 × 1,050 pixels). Stimuli were presented with E-Prime 2.0. Auditory materials were presented via headphones. An SMI iView RED eye tracker sampled data from the right eye at 120 Hz. Eye movements were recorded with iView X 2.5 following a 5-point calibration. Data were analyzed with BeGaze 2.3. Fixation detection had a dispersion threshold of 100 pixels (2.8° visual angle) and a duration threshold of 80 ms. To improve tracking quality, participants placed their heads in a chin rest.
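The pixel-to-degree conversion behind the dispersion threshold can be sketched as follows. This is a minimal illustration, not the procedure used by the eye-tracking software: it assumes square pixels, a stimulus centered on the line of sight, and that the 22-inch figure is the full diagonal of the 1,680 × 1,050 display. The function name `pixels_to_degrees` is hypothetical.

```python
import math

def pixels_to_degrees(n_pixels, screen_diag_inch, res_x, res_y, distance_mm):
    """Visual angle (degrees) subtended by n_pixels centered on the line of sight.

    Assumes square pixels and that screen_diag_inch is the full display diagonal.
    """
    diag_px = math.hypot(res_x, res_y)               # diagonal length in pixels
    mm_per_px = screen_diag_inch * 25.4 / diag_px    # physical size of one pixel
    size_mm = n_pixels * mm_per_px
    return math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))

# Values taken from the Apparatus description.
angle = pixels_to_degrees(100, 22, 1680, 1050, 600)
print(f"{angle:.2f} degrees")
```

Under these assumptions the result is roughly 2.7°, close to the reported 2.8°. The small gap may reflect rounding or details of the viewing geometry that the text does not specify.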

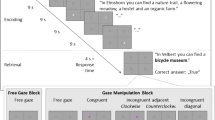

Materials

Visual stimuli consisted of a grid dividing the screen into equal quadrants. Each quadrant had a size of 14.3° of visual angle vertically and 17.1° of visual angle horizontally. To associate spatial locations with the auditory stimuli, a symbol appeared in a circle in the center of the respective spatial location (see Fig. 1). During the retrieval phase, participants saw digits from 0 to 9. The digits appeared in the same locations as the symbols that were presented during encoding or in a circle in the center of the screen. Circles were of equal size with a visual angle of 2.4°. Digits had a size of approximately 1.2° of visual angle. The spatial distance between diagonal circles was 21.7° of visual angle, and the distance between the center of the screen and the center of each of the circles in the quadrants was 11.4° of visual angle.

Fig. 1. Example trial with to-be-encoded sentences and a true statement about the sentence in the retrieval phase. The relevant quadrant is the top left location, as this is the location associated with the sentence. The bottom of the figure shows details of the digit locations of the tracking task. Participants in the eye-movement group tracked these digits via overt eye movements, while participants in the attention-shift group maintained central fixation and tracked the digits via covert movements of attention only (see main text for detailed information). Note that the size of the digits is increased in the figure to enhance readability.

Auditory stimuli were taken from Scholz et al. (2016). Twelve additional sentences and six additional test statements were constructed. Overall, 40 sentences and 10 statements about 10 of the sentences were used in this experiment. The sentences described artificial locations. Each location consisted of a city name and was described by four attributes (sights, sports activities, institutions, and buildings). For instance, “In Zehdenick you can find a historical bookshop, a dark swamp, a Beethoven statue and a vineyard.” For half of the statements the correct answer was “true,” and for the other half the correct answer was “false” (e.g., True: “In Zehdenick you can find a Beethoven statue.” False: “In Zehdenick you can find a Mozart statue.”).

Procedure

The experiment started with two practice trials, followed by eight experimental trials. Each trial followed the same procedure and was divided into an encoding phase and a retrieval phase with tracking task (see Fig. 1). During the encoding phase, participants heard four sentences, with each sentence being associated with one quadrant on the screen. Participants were instructed to listen carefully and to memorize the sentences to the best of their ability, as they did not know which of the sentences would be tested during retrieval.

After the presentation of a fixation cross, the retrieval phase with tracking task was initiated. In each of the retrieval trials, participants had to do two things simultaneously: First, they had to listen to a test statement regarding one of the four sentences presented during the encoding phase and press either a blue or a red key on the keyboard, as quickly and accurately as possible, depending on whether they thought the statement was true or false. Second, while they were judging the truth of the statement, they saw a digit appear in one of the fields of the matrix or the middle of the screen alternating with a matrix consisting of empty circles at a frequency of 1 Hz (see Thomas & Lleras, 2009). Each digit from 0 to 9 was shown once, thus restricting the maximum trial duration to 20 s.

Participants were instructed to press the space bar on the keyboard whenever a digit appeared on the screen. Throughout a trial, digits always appeared in the same location of the matrix: either in the relevant spatial location (congruent condition, two trials), in one of the adjacent locations (incongruent adjacent clockwise, incongruent adjacent counterclockwise condition, two trials), in the diagonal location (incongruent diagonal condition, two trials), or in the center of the screen (center condition, two trials). As soon as the participant made the true/false judgment by hitting one of the response buttons, the retrieval trial ended and a new encoding phase followed.

In the eye-movement group, the tracking task was designed to guide participants’ eyes either to the congruent spatial location or away from it. Participants in this group were instructed to gaze freely but to keep their eyes on the screen. In the attention-shift group, participants were asked to fixate the center of the screen and to react to the digits by covertly shifting their attention to them.

Sentences, statements, sentence locations, and the conditions of the tracking task were counterbalanced in four lists and randomly assigned to participants. Encoding and retrieval phases lasted approximately 7 min.

Results

Manipulation check

Before analyzing response accuracy for the different instruction groups and conditions of the tracking task, we tested if the instructions and tracking task manipulations were successful. We considered the manipulations successful if participants followed the instructions to fixate the symbols during encoding, if participants in the eye-movement group fixated the digits of the tracking task, and if participants in the attention-shift group fixated the center of the screen during retrieval. Furthermore, we expected participants in both groups to perform equally well in the tracking task.

Gaze behavior during encoding and retrieval

To analyze gaze patterns, five areas of interest (AOIs) were drawn: one around each of the four circles in the quadrants and one around the circle in the center of the screen. AOIs exceeded the borders of each circle by 4.4° of visual angle. The first fixation was excluded from the analyses as right before each retrieval phase a fixation cross appeared in the center of the screen. Proportions were based on the fixation times in each of the five AOIs divided by the sum of the fixation times to all AOIs. Proportions of fixations on digits and on the center AOI sum up to 1.0 on the trial level. For the reported comparisons, we computed mean fixation proportions per participant. Concerning gaze behavior during the encoding phase, we expected both groups (eye-movement and attention-shift groups) to gaze toward each AOI containing a symbol during encoding for longer than 25% of the time, as this would suggest chance level (given four loudspeaker locations). We found that five participants in the eye-movement group and eight participants in the attention-shift group did not gaze toward the AOI containing the symbol in three or four of four sentence presentations during each encoding phase. As building up an association between the spatial locations containing the symbol and the auditorily presented sentences is crucial for studying the effect of this association on memory retrieval, these participants were excluded from further analyses. The remaining participants (30 participants in the eye-movement group, 28 participants in the attention-shift group) gazed proportionally longer than 25% of the fixation time toward the AOI with the loudspeaker than toward the other AOIs. This was confirmed by testing mean fixation proportions aggregated over symbols, trials, and participants against a value of .25, eye-movement group: t(29) = 13.6, p < .001, d = 2.5, BF10 > 1,000 (see Footnote 1); attention-shift group: t(27) = 11.8, p < .001, d = 2.2, BF10 > 1,000.
Additionally, participants in the attention-shift group did not differ from participants in the eye-movement group concerning the proportion of time spent looking at the loudspeakers during the encoding phase, t(56) = −0.4, p = .73, d = −0.1, BF01 = 3.57. The results on fixation proportions during the encoding phase are presented in Table 1. Concerning proportions of fixations during the retrieval phase, we expected participants in the eye-movement group to fixate the digits during the retrieval phase for more than 20% of the fixation time, as this would suggest chance level given the five digit locations. In comparison, we expected participants in the attention-shift group to fixate mainly on the center of the screen, again for more than 20% of the time. As expected, participants in the eye-movement group looked at the digits of the tracking task, t(29) = 7.5, p < .001, d = 1.4, BF10 > 1,000. Participants in the attention-shift group looked at the center of the screen, t(27) = 16.4, p < .001, d = 3.1, BF10 > 1,000 (see Table 1). Additionally, between-subjects t tests confirmed that participants in the attention-shift group looked proportionally longer at the center of the screen than participants in the eye-movement group, t(56) = 3.2, p = .002, d = 0.8, BF10 = 15.11. They also spent much less time looking at the digits of the tracking task than the eye-movement participants did, Welch’s t(43.1) = −4.6, p < .001, d = −1.1, BF10 = 479.82.
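The fixation-proportion measure described above can be sketched in a few lines. This is an illustrative reconstruction, not the BeGaze export pipeline: the AOI labels, the `(label, duration)` input format, and the example fixation values are assumptions made for the sketch.

```python
from collections import defaultdict

# Hypothetical AOI labels: four quadrant circles plus the center circle.
AOIS = ("top_left", "top_right", "bottom_left", "bottom_right", "center")

def fixation_proportions(fixations, aois=AOIS, skip_first=True):
    """Share of fixation time per AOI for one trial.

    fixations: list of (aoi_label, duration_ms) in temporal order;
    a label of None marks a fixation outside every AOI.
    """
    if skip_first:
        # Drop the first fixation, which still rests on the fixation cross.
        fixations = fixations[1:]
    totals = defaultdict(float)
    for label, duration_ms in fixations:
        if label in aois:
            totals[label] += duration_ms
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {aoi: 0.0 for aoi in aois}
    # Divide by the summed fixation time across all AOIs, as in the text.
    return {aoi: totals[aoi] / grand_total for aoi in aois}

# Invented example trial, for illustration only.
trial = [("center", 200), ("top_left", 600), ("center", 150),
         ("top_left", 250), (None, 90)]
props = fixation_proportions(trial)
print(props["top_left"], props["center"])
```

Because fixations outside the AOIs are ignored in both numerator and denominator, the proportions sum to 1.0 within a trial, which matches the trial-level property stated above.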

Tracking task performance

To analyze the performance in the tracking task, two measures were compared (see Thomas & Lleras, 2009). The first measure was the DIA, defined as the number of digits a participant reacted to relative to the number of digits the participant observed before pressing the response button. DIA = 1 means that a participant reacted to every digit seen. The DIA was aggregated across all trials for each participant. No differences were expected between the two gaze instruction groups. Participants in the two groups responded comparably to the digits of the tracking task, Welch’s t(45.5) = 1.86, p = .07, d = 0.48, BF01 = 0.95 (see Table 1).

The second measure was the average reaction time between the onset of a digit on the screen and the participant’s press of the space bar. It was calculated per trial and aggregated over all trials per participant. Reaction times were expected to be similar for the two gaze instruction groups because tasks were the same for both groups other than the gaze instruction. As expected, participants responded equally fast to the digits of the tracking task, t(56) = −1.95, p = .06, d = −0.51, BF01 = 0.78 (see Table 1).
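The two tracking-task measures can be sketched as follows. The text does not state how the DIA was aggregated across trials, so pooling the counts over trials is an assumption of this sketch, as are the function names and the invented example values.

```python
from statistics import mean

def digit_identification_accuracy(trials):
    """DIA for one participant, pooled over trials (pooling is an assumption).

    trials: list of (n_reacted, n_observed) pairs, one pair per trial.
    """
    reacted = sum(r for r, _ in trials)
    observed = sum(o for _, o in trials)
    return reacted / observed

def trial_reaction_time(onsets_ms, presses_ms):
    """Mean time from digit onset to space-bar press within one trial."""
    return mean(press - onset for onset, press in zip(onsets_ms, presses_ms))

def participant_reaction_time(trial_rts):
    """Aggregate the per-trial means over all trials of one participant."""
    return mean(trial_rts)

# Invented example values, for illustration only.
dia = digit_identification_accuracy([(4, 4), (5, 6)])
rt_trial = trial_reaction_time([0, 2000, 4000], [450, 2500, 4550])
rt_participant = participant_reaction_time([rt_trial, 540])
print(dia, rt_trial, rt_participant)
```

A DIA of 1 corresponds to a participant who reacted to every digit shown before the true/false response ended the trial.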

Retrieval task performance

Last, we tested whether participants differed in their overall performance in the retrieval task. We did not expect differences in response accuracy or response times between the two instruction groups. As expected, participants in the two instruction groups performed comparably well, t(56) = 1.0, p = .32, d = 0.26, BF01 = 2.47, and responded equally fast to the retrieval task, t(56) = −0.8, p = .43, d = −0.21, BF01 = 2.88 (see Table 1).

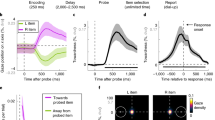

Response accuracy

The dependent measure was the retrieval performance, which was assessed as the mean percentage of correct responses for each tracking task condition, that is, congruent, incongruent adjacent, incongruent diagonal, and center. Note that, for the analyses, we combined the incongruent adjacent clockwise and counterclockwise conditions into one incongruent adjacent condition as we expected them to affect retrieval performance similarly. Trials were aggregated for each condition and per participant. Because no sentence was associated with the center, but participants still responded to the tracking task appearing in the center, retrieval performance in the center condition functioned as a baseline condition. We expected participants to perform better in the congruent condition than in the incongruent conditions (see Scholz et al., 2016). Additionally, we expected that if covert attention shifts were sufficient to cause the observed memory effect, retrieval performance would not differ between the two gaze instruction groups. To test this assumption, we subtracted retrieval performance in the center condition from performance in the congruent and incongruent conditions and performed a repeated-measures analysis of variance (ANOVA) for the within-subjects factor tracking task (congruent, incongruent adjacent, incongruent diagonal) and the between-subjects factor gaze instruction (eye movement, attention shift; see Footnote 2). As expected, response accuracy varied as a function of tracking task as confirmed by a main effect of tracking task, F(2, 112) = 9.93, p < .001, ηp² = .15, BF10 = 200.15. Participants performed best in the congruent condition, followed by the incongruent adjacent condition. They performed worst in the incongruent diagonal condition, linear contrast tracking task: t(57) = −4.21, p < .001.
Furthermore, there was no difference in retrieval performance between the two gaze instruction groups as shown by the absence of a significant main effect for the factor gaze instruction, F(1, 56) = 0.08, p = .77, ηp² = .0, BF01 = 3.03, and no significant interaction between the factors gaze instruction and tracking task, F(2, 112) = 0.35, p = .70, ηp² = .0, BF01 = 0.01 (see Fig. 2).
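The baseline correction that feeds the ANOVA can be sketched as below. This is a minimal illustration under stated assumptions: the condition keys, the function name, and the example outcomes (1 = correct, 0 = incorrect) are invented, and merging the two adjacent conditions by pooling their trials is one plausible reading of the text.

```python
from statistics import mean

def baseline_corrected_accuracy(trial_outcomes, baseline="center"):
    """Difference scores relative to the center condition for one participant.

    trial_outcomes: dict mapping condition name to a list of 0/1 outcomes.
    """
    merged = {
        "congruent": trial_outcomes["congruent"],
        # Clockwise and counterclockwise trials are pooled (an assumption).
        "incongruent_adjacent": (trial_outcomes["adjacent_cw"]
                                 + trial_outcomes["adjacent_ccw"]),
        "incongruent_diagonal": trial_outcomes["diagonal"],
    }
    base = mean(trial_outcomes[baseline])
    return {cond: mean(outcomes) - base for cond, outcomes in merged.items()}

# Invented outcomes for one participant, matching the Experiment 1 trial counts.
example = {
    "congruent": [1, 1],
    "adjacent_cw": [1],
    "adjacent_ccw": [0],
    "diagonal": [0, 1],
    "center": [1, 0],
}
scores = baseline_corrected_accuracy(example)
print(scores)
```

Positive scores indicate better retrieval than in the center baseline, negative scores worse retrieval; these three difference scores per participant are what the within-subjects factor tracking task compares.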

Fig. 2. Mean differences in proportion of correct responses between each condition of the tracking task [congruent, incongruent (incongr.) adjacent, and incongruent diagonal] and the center condition for the two instruction groups (eye movement, attention shift) in Experiment 1. Error bars show within-subjects 95% confidence intervals (Morey, 2008).

Analyzing each condition separately showed that the tracking task led to only marginal differences in retrieval performance for the participants of the eye-movement group, F(2, 58) = 3.15, p = .050, ηp² = .10, BF10 = 1.19. Participants of the eye-movement group performed best in the congruent condition, followed by the incongruent adjacent condition. They performed worst in the incongruent diagonal condition, linear contrast tracking task: t(29) = 2.43, p = .02, and with the largest differences in retrieval performance between the congruent and incongruent diagonal conditions, t(29) = 2.48, p = .02, d = 0.45, BF10 = 2.92.

The tracking task led to significant differences for the attention-shift group, F(2, 54) = 7.59, p < .001, ηp² = .22, BF10 = 25.63. We observed the same linear trend for the attention-shift group, linear contrast tracking task: t(27) = 3.60, p < .001, and also with the largest differences in retrieval performance between the congruent and incongruent diagonal conditions, t(27) = 3.55, p < .001, d = 0.67, BF10 = 22.19.
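As a consistency check on the reported effect sizes, partial eta squared can be recovered from an F value and its degrees of freedom through the standard identity ηp² = (F · df1) / (F · df1 + df2). The sketch below applies that identity to the three tracking-task effects reported above; the function name is hypothetical, and the check says nothing about how the original analysis software computed the values.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F values and degrees of freedom as reported in the text.
reported = {
    "tracking task, both groups": (9.93, 2, 112),
    "tracking task, eye-movement group": (3.15, 2, 58),
    "tracking task, attention-shift group": (7.59, 2, 54),
}
effect_sizes = {name: partial_eta_squared(*stats) for name, stats in reported.items()}
for name, value in effect_sizes.items():
    print(f"{name}: {value:.2f}")
```

Rounded to two decimals, the recomputed values agree with the reported ηp² of .15, .10, and .22.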

Discussion

The aim of Experiment 1 was to test whether overt shifts of attention including an eye movement and covert shifts of attention lead to differences in performance when retrieving verbal information that was associated with a spatial location during encoding. To this end, we manipulated whether participants looked at or shifted their attention covertly to the spatial location where the retrieved information was presented during encoding. To test differences in memory retrieval, a tracking task was carried out in which a digit appeared either in the spatial location associated with the verbal information or alternatively in the center location of a screen or in one of the incongruent adjacent or diagonal locations.

In line with previous research (Johansson & Johansson, 2014; Scholz et al., 2016), we found higher retrieval accuracy in the congruent condition compared to the incongruent conditions. The most important finding is that retrieval performance was comparably good in the two instruction groups (eye movement and attention shift). That is, covert shifts of attention were sufficient to elicit the observed results on retrieval performance.

Two points regarding the results of Experiment 1 have to be noted: First, although participants in the eye-movement group looked significantly longer at the spatial areas associated with a verbal statement than participants in the attention-shift group, they also looked for a rather long time at the center of the screen. In Experiment 1, we did not explicitly instruct participants to look at the digits of the tracking task. Because an eye movement to a location usually incorporates an attention shift (Deubel & Schneider, 1996; Hoffman & Subramaniam, 1995), even participants in the eye-movement group might have solved the tracking task by shifting attention covertly while keeping the eyes fixed at the center of the screen.

Second, the tracking task was not equally distributed across the congruent and all other incongruent locations. The tracking task appeared in the congruent condition in 25% of the trials, which is above chance level of 20% (given the five possible locations of the tracking task). Consequently, the memory effect we found might have been due to the digits taking the role of a spatial cue (Yantis & Jonides, 1981; for an overview, see Mulckhuyse & Theeuwes, 2010). Although this cue was informative in only 25% of the trials (and invalid in 75% of the trials), participants could have tried to remember the information associated with the location where the digit appeared during retrieval. Thus, differences in retrieval accuracy might have occurred due to relying on the spatial cue rather than covert shifts of attention elicited by cuing participants to information stored in memory. Although the rather low number of trials might have prevented participants from adopting such a strategy, a second experiment was necessary to rule out this alternative explanation.

Experiment 2

The purpose of Experiment 2 was to test whether covertly shifting attention toward or away from associated spatial locations also facilitates memory retrieval, when all spatial locations are cued equally often. If the functional effect of shifting attention toward associated locations is due only to the digits of the tracking task functioning as retrieval cues, then the effect should diminish when all spatial locations are represented equally often. If, however, the functional effect is indeed driven by covert shifts of attention, then retrieval accuracy should vary with the conditions of the digits in the tracking task. To test this, in Experiment 2, digits were presented equally often in each of the four spatial locations and the center of the screen.

Because participants in the eye-movement group in Experiment 1 also looked frequently at the center of the screen, in Experiment 2, we explicitly instructed them to gaze at the digits of the tracking task during retrieval.

Method

Participants

Seventy-five students from the Chemnitz University of Technology (57 female, 18 male, M age = 21.4 years, range: 18–35 years) volunteered for the experiment in exchange for course credit. All had normal or corrected-to-normal vision and were native German speakers.

Apparatus

The same setup as in Experiment 1 was used.

Materials

The same visual materials were used as in Experiment 1. In Experiment 2, we increased the number of trials. Therefore, six additional location sentences and 66 test statements were constructed, resulting in a total item pool of 16 sentences and 128 statements (a true and a false version for each of the four attributes of a location). Additional materials were constructed in exactly the same way as for the materials in Experiment 1. For half the statements, the correct answer was “true” and for the other half the correct answer was “false.”

Procedure

The overall procedure was similar to that of Experiment 1 (see Fig. 1), but here sentences and statements were presented in four blocks. Each block began with encoding of four of the 16 sentences. In each encoding phase, each sentence was presented twice to improve learning of the associations between spatial location and heard sentences. During the retrieval trials of one block, 15 statements were presented one after the other and together with the tracking task. Digits of the tracking task always appeared in the same spatial location, which could be either congruent (three trials) or incongruent (adjacent clockwise, adjacent counterclockwise, diagonal, center; each three trials) with the location of the original sentence, represented by a symbol. Each retrieval trial was separated by a fixation cross presented in the center of the screen. After the 15 retrieval trials of one block, participants were told about the beginning of a new encoding phase.

Sentences during the encoding phase and statements during the retrieval phase were randomized for each participant and within each block. Overall, each participant was presented with all 16 sentences and a subset of 60 statements (15 statements × 4 blocks). All participants were told to look directly at the symbols during each sentence presentation during the encoding phase. In contrast to Experiment 1, participants in the eye-movement group were explicitly instructed to look at the digits of the tracking task during retrieval. The instructions for the attention-shift group did not change. They were instructed to look at the center of the screen but to shift their attention covertly toward the digits of the tracking task. As in Experiment 1, participants were asked to respond as quickly and as accurately as possible. Working through all four blocks took approximately 16 min.
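The block structure described above can be sketched in a few lines of Python. This is only an illustration of the design arithmetic (five tracking-task conditions, three trials each, four blocks), not the software used in the experiment. The condition labels, the function names, and the choice to shuffle condition order within a block are assumptions made for the example.

```python
import random

# Tracking-task conditions named in the Procedure (labels are hypothetical).
CONDITIONS = [
    "congruent",
    "incongruent_adjacent_clockwise",
    "incongruent_adjacent_counterclockwise",
    "incongruent_diagonal",
    "center",
]
TRIALS_PER_CONDITION = 3  # three retrieval trials per condition in each block
N_BLOCKS = 4


def build_block(rng):
    """Return the tracking-task condition of each retrieval trial in one block."""
    trials = [c for c in CONDITIONS for _ in range(TRIALS_PER_CONDITION)]
    # Assumption: condition order is shuffled within a block.
    rng.shuffle(trials)
    return trials


def build_session(seed=0):
    """Return one list of trial conditions per block."""
    rng = random.Random(seed)
    return [build_block(rng) for _ in range(N_BLOCKS)]


session = build_session()
total_trials = sum(len(block) for block in session)
congruent_share = sum(block.count("congruent") for block in session) / total_trials
print(total_trials, congruent_share)
```

With these counts, every location is cued in one fifth of the trials, which is the equal cue validity that Experiment 2 was designed to achieve.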

Results

Manipulation check

As in Experiment 1, we first tested whether participants followed the instructions to fixate the symbols during the encoding phase. During the retrieval phase, participants in the eye-movement group were expected to fixate the digits of the tracking task and participants of the attention-shift group to fixate the center of the screen. We expected tracking task performance to be comparable between the instruction groups.

Gaze behavior during encoding and retrieval

The same AOIs as in Experiment 1 were used. Again, the first fixation was excluded from the analyses. Mean fixation proportions were analyzed. Concerning gaze behavior during the encoding phase, four participants did not gaze toward the AOI containing the symbol in more than half of the four presentations during each encoding phase and were excluded from further analyses. The remaining participants (35 participants in the eye-movement group, 36 in the attention-shift group) fixated the AOI with the symbol for more than 25% of the fixation time and thus proportionally longer than the other AOIs, eye-movement group: t(34) = 28.35, p < .001, d = 4.8, BF10 > 1,000, attention-shift group: t(35) = 26.5, p < .001, d = 4.4, BF10 > 1,000. Additionally, participants in the attention-shift group did not differ from participants in the eye-movement group, t(69) = 0.21, p = .83, d = 0.05, BF01 = 4.01 (see Table 1).

Concerning fixation proportions during the retrieval phase, we found that participants in the eye-movement group looked at the digits of the tracking task longer than 20% of the time (chance level), t(34) = 28.1, p < .001, d = 4.8, BF10 > 1,000. Participants in the attention-shift group looked at the center of the screen, t(35) = 58.9, p < .001, d = 9.8, BF10 > 1,000 (see Table 1). Additionally, we compared mean proportions of fixation on the center AOI between the eye-movement and the attention-shift group. Participants in the attention-shift group looked longer at the center AOI than participants in the eye-movement group, Welch’s t(56.9) = 22.78, p < .001, d = 5.4, BF10 > 1,000.
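As a rough plausibility check on the one-sample tests reported for the gaze data, the effect sizes can be recomputed from the t values and group sizes. The sketch below assumes that d was obtained as t divided by the square root of n, a common convention for one-sample tests; the text does not state which formula was used, so this is an assumption. The labels and the helper name are hypothetical.

```python
import math


def cohens_d_one_sample(t, n):
    """Cohen's d for a one-sample t test, assuming d = t / sqrt(n)."""
    return t / math.sqrt(n)


# (label, reported t, group size n, reported d), taken from the text above.
reported = [
    ("encoding, eye-movement group", 28.35, 35, 4.8),
    ("encoding, attention-shift group", 26.5, 36, 4.4),
    ("retrieval, eye-movement group", 28.1, 35, 4.8),
    ("retrieval, attention-shift group", 58.9, 36, 9.8),
]

recomputed = {label: cohens_d_one_sample(t, n) for label, t, n, _ in reported}
for label, t, n, d_reported in reported:
    print(f"{label}: recomputed d = {recomputed[label]:.2f}, reported d = {d_reported}")
```

Under this assumption the recomputed values agree with the reported ones to within rounding of the published t statistics.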

Tracking task performance

Tracking task performance was again analyzed in terms of the DIA criterion and reaction times when digits appeared on the screen. Six participants did not react to the digits on the screen in 23 or more trials (more than one-third of all trials). As this manipulation was crucial to test whether participants indeed shifted their attention toward the digit locations, we had to exclude these participants from further analyses. Table 1 shows descriptive statistics on tracking task performance. As in Experiment 1, no differences were expected between the two gaze instruction groups. Participants in the two gaze instruction groups (33 participants in the eye-movement group, 32 in the attention-shift group) responded comparably to the digits of the tracking task, t(63) = −0.66, p = .51, d = −0.16, BF01 = 3.27. They also responded equally fast to the digits of the tracking task, t(63) = 0.98, p = .33, d = 0.24, BF01 = 2.63 (Table 1; see Footnote 3). We excluded 170 trials in which participants did not react at all to the digits on the screen (4.4% of all trials).

Retrieval task performance

Last, we tested if participants differed in their overall performance in the retrieval task. We did not expect differences in response accuracy or response times between the two instruction groups. As expected, participants in the two instruction groups performed comparably well, t(63) = 0.6, p = .52, d = 0.16, BF01 = 3.31, and responded equally fast to the retrieval task, t(63) = 0.5, p = .62, d = 0.13, BF01 = 3.54 (see Table 1).

Response accuracy

As in Experiment 1, we analyzed mean differences in proportion correct between each of the conditions in which the tracking task appeared in one of the quadrants and the center condition. In Experiment 2, we distinguished congruent, incongruent adjacent clockwise, incongruent adjacent counterclockwise, incongruent diagonal, and center conditions. Trials were aggregated for each condition per participant. If the tracking task facilitates memory retrieval, then we would expect participants to perform best in the congruent condition. If the tracking task decreases performance, then they should perform worse in the incongruent conditions. Following the results of Experiment 1, retrieval performance in the incongruent diagonal condition might be lower than in the incongruent adjacent conditions.
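The difference scores described above can be illustrated with a short sketch: accuracy is aggregated per condition for one participant, and the center condition is subtracted as that participant's baseline. The data layout, the function names, and the example responses are made up for illustration and are not taken from the study.

```python
from statistics import mean


def difference_scores(trials):
    """Return proportion correct per condition minus the center baseline.

    `trials` is a list of (condition, correct) pairs for one participant.
    """
    by_condition = {}
    for condition, correct in trials:
        by_condition.setdefault(condition, []).append(1.0 if correct else 0.0)
    accuracy = {c: mean(values) for c, values in by_condition.items()}
    baseline = accuracy["center"]  # condition with no associated sentence
    return {c: acc - baseline for c, acc in accuracy.items() if c != "center"}


def make_trials(condition, n_correct, n_total=3):
    """Build made-up responses: the first n_correct trials are correct."""
    return [(condition, i < n_correct) for i in range(n_total)]


# Hypothetical participant, invented for this example only.
example_trials = (
    make_trials("congruent", 3)
    + make_trials("incongruent_adjacent_clockwise", 2)
    + make_trials("incongruent_adjacent_counterclockwise", 2)
    + make_trials("incongruent_diagonal", 1)
    + make_trials("center", 2)
)
scores = difference_scores(example_trials)
print(scores)
```

A positive score means better retrieval than in the center condition, a negative score means worse retrieval. Because every score is taken relative to the same participant's center trials, the load of the tracking task itself is held constant across the comparison.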

Over the two instruction groups, there was no effect of tracking task on response accuracy, F(3, 189) = 1.87, p = .14, ηp² = .03, BF01 = 5.91. There was a difference in retrieval performance between the two gaze instruction groups, as shown by a significant main effect for the factor gaze instruction, F(1, 63) = 4.99, p = .03, ηp² = .07, BF10 = 2.14, and no significant interaction between the factors gaze instruction and tracking task, F(3, 189) = 2.24, p = .09, ηp² = .03, BF01 = 2.9 (see Fig. 3). Analyzing each instruction group separately revealed that response accuracy varied as a function of tracking task, but only for participants in the attention-shift group, F(3, 93) = 4.0, p = .01, ηp² = .11, BF10 = 3.58, and not for the eye-movement group, F(3, 93) = 0.04, p = .99, ηp² = .00, BF01 = 23.8. Participants in the attention-shift group performed best in the congruent condition and worst in the incongruent diagonal condition, linear contrast tracking task: t(31) = 3.03, p = .003, whereas this was not the case for participants in the eye-movement group, t(32) = 0, p = 1.0. Additionally, the largest decrease in retrieval performance of the attention-shift participants occurred between the congruent and incongruent diagonal conditions, t(31) = 3.15, p = .004, d = 0.56, BF10 = 10.65.

Fig. 3. Mean differences in proportion of correct responses between each condition of the tracking task [congruent, incongruent (incongr.) adjacent clockwise (clockw.), incongruent adjacent counterclockwise (counterclockw.), incongruent diagonal] and the center condition for the two instruction groups (eye movement, attention shift) in Experiment 2. Error bars show within-subjects 95% confidence intervals.

Discussion

Experiment 2 showed that shifting attention covertly toward associated spatial locations increased retrieval performance in comparison to shifting attention away from associated spatial locations during verbal memory retrieval. Therefore, the results of Experiment 2 replicate the results of Experiment 1 when all locations are cued equally often. That is, different cue validities (25% in Experiment 1, 20% in Experiment 2) did not affect retrieval performance differently.

Explicit instructions to gaze at the digits of the tracking task for participants in the eye-movement group led them to fixate the digits of the tracking task and reduced fixations on the center of the screen. However, these instructions also impaired retrieval performance in all congruent and incongruent conditions of the tracking task in comparison to the center condition. We discuss this point in the General Discussion.

In Experiment 1, each trial started with a new set of sentences, one of which was tested. That is, the other sentences could be dropped from memory. In Experiment 2, at the beginning of each block, participants were presented with a set of four sentences that were then tested 15 times. Thus, the sentences all had to be kept in memory. This difference in the encoding procedure did not affect the results. This is in line with previous studies that also in some cases tested retrieval immediately after encoding (e.g., Johansson et al., 2012; Scholz et al., 2016) or block-wise (e.g., Johansson & Johansson, 2014; Wantz, Martarelli, & Mast, 2016).

As in Experiment 1, retrieval performance in the incongruent conditions of Experiment 2 differed, with the lowest response accuracy scores in the diagonal condition. One explanation for this finding may be that the spatial distance between the congruent and the incongruent diagonal locations was larger than between the congruent and the incongruent adjacent locations. For instance, Guérard, Tremblay, and Saint-Aubin (2009) found that larger spatial distances interfered with rehearsal more than shorter spatial distances. Moreover, the size of the spatial area from which information can be processed (the so-called useful field of view) largely depends on the complexity of the visual information (Hulleman & Olivers, 2017; Irwin, 2004). Given the rather low visual complexity of the visual materials, it could be that the useful field of view was rather large, leading to smaller detrimental effects on retrieval performance in the incongruent adjacent conditions compared to the diagonal condition. However, Scholz et al. (2016) did not report differences between the incongruent adjacent and diagonal conditions, although they also did not analyze difference scores as we did in this study. No other study analyzed the data separately for the adjacent and diagonal conditions, and often other studies reported results only for the largest spatial distances (e.g., Johansson & Johansson, 2014). Therefore, future research is needed to explore the effects of spatial distance on retrieval accuracy in the looking-at-nothing paradigm.

General discussion

The aim of this study was to test whether covert shifts of attention in comparison to overt eye movements lead to differences in retrieval performance in a paradigm where people “look at nothing” (e.g., Richardson & Spivey, 2000). By manipulating eye movements and covert shifts of attention independently, in two experiments we found that covert shifts of attention indeed led to better memory retrieval in congruent compared to incongruent trials. These results provide preliminary empirical evidence on the role of covert shifts of attention in explaining why people look at empty spatial locations that have been associated with verbal information during a preceding encoding phase. Furthermore, they show that covertly shifting attention between memory representations can explain the functionality of “eye movements to nothing.” During encoding, verbal information was associated with spatial locations. The tracking task that took place during retrieval then cued participants’ attention either toward or away from the information held in memory (e.g., Griffin & Nobre, 2003). The spatial location acted as a retrieval cue to enhance retrieval of associated memory representations in congruent trials (see Souza & Oberauer, 2016). In incongruent trials, the wrong retrieval cues were activated, which led to interference and impaired retrieval performance.

Such an explanation is in line with an attention-based account of mental imagery (e.g., Kosslyn, 1994), in which attention is sufficient for reconstructing pieces of information in a memory episode (see Laeng et al., 2014). It is also in line with theories assuming the process of seeking information in the brain during retrieval is very similar to the process of perceiving information (Thomas, 1999). That is, during encoding, continually updated and refined sets of procedures are stored that specify how to direct a person’s attention during retrieval. In a similar vein, a grounded perspective would assume that, during retrieval, the same neural activity is simulated that was present during encoding (Barsalou, 2008). However, how exactly attentional processes interact with the encoding–retrieval overlap is still underexplored and should be an issue for future research (see Kent & Lamberts, 2008). More importantly, all outlined explanations lay out the possibility that an eye movement is executed at the end of the chain of events, but this is not necessarily the case, which is in line with our findings.

When participants were allowed to gaze freely, as in the eye-movement group of Experiment 1, they showed enhanced retrieval performance when they were guided toward a spatial location congruent with the location that was associated with the retrieved information during encoding. Their retrieval performance was impaired when they looked at an incongruent location. Unexpectedly, this behavioral pattern disappeared completely when they were explicitly instructed to look at a congruent or incongruent spatial location. In the eye-movement group of Experiment 2, retrieval performance was impaired in all conditions of the tracking task.

Concerning the results of Experiment 1, there was no significant difference in memory performance between the eye-movement and the attention-shift groups. However, analyzing the groups separately revealed only anecdotal evidence for the eye-movement group and a smaller effect than we found in the attention-shift group. Additionally, it was more difficult for participants in the eye-movement group to adhere to the strategy instruction, as revealed by lower performance in the tracking task (fewer identified digits, longer response times) and a rather long fixation on the center of the screen. The observed difference in tracking task performance did not reach significance, but, also, the evidence in support of the null hypothesis was inconclusive. In conclusion, eye movements to associated spatial locations did not help memory retrieval more than shifting attention covertly. If at all, it was more difficult for the eye-movement participants to solve the task in Experiment 1.

In Experiment 2, we instructed participants in the eye-movement group to look at the digits of the tracking task, guiding their eyes either toward or away from associated spatial locations. Although this instruction led to high strategy adherence, it impaired retrieval performance in all conditions of the tracking task. Thus, eye movements to associated spatial locations in Experiment 2 were not functional at all.

Research has demonstrated that eye movements can have detrimental effects on memory (Lawrence, Myerson, & Abrams, 2004; Pearson & Sahraie, 2003; Postle et al., 2006; Tas, Luck, & Hollingworth, 2016). Postle et al. (2006) reported impaired retrieval performance in an imagery task, in which participants performed voluntary eye movements in comparison to freely moving the eyes. They interpreted their results as indicating that it was not the eye movements per se but eye movement control that disrupted performance. Postle et al. (2006) concluded that voluntary eye movements disrupt the maintenance of information in working memory because they tap into the same processing resources. This explanation is in line with our results. The instruction to gaze freely in Experiment 1 led to the observed differences in memory retrieval. The instruction to fixate the digits of the tracking task in Experiment 2 impaired retrieval in all conditions of the tracking task. Tas et al. (2016) studied eye movements and attention shifts in a visual working memory task. When participants had to look at a distracting secondary object, performance declined in comparison to shifting attention to that object. They concluded that looking at an object leads to automatic encoding of that object, but shifting attention covertly does not. In this study, the tracking task consisted of a detection task involving the appearance of a digit in one of four spatial areas associated with verbal information during encoding or in the center of the screen. Encoding of the digits themselves was irrelevant for successful task completion. Following Tas et al. (2016), this would mean that shifting attention (and just noticing the change) was an adaptive strategy to solve the task, whereas performing an eye movement and encoding the digits was not. The additionally encoded information may have interfered with the retrieval task. Importantly, Tas et al. found impaired memory retrieval only for spatial tasks tapping into visual working memory resources. Their findings seem well suited to explain the results we found on verbal memory retrieval in this study, perhaps because we strongly associated verbal information with spatial locations during encoding and provided spatial frames of reference during retrieval together with a spatial tracking task. However, future research is necessary to disentangle these different explanations (eye-movement control, automatic encoding) for the lack of a functional relationship within the eye-movement group of Experiment 2 and provide a better understanding of the interactions between eye-movement programming, visual attention, and working memory retrieval (see also Theeuwes et al., 2009).

In this study, we manipulated eye movements by guiding participants’ attention to a congruent or incongruent spatial location (see also Johansson & Johansson, 2014; Martarelli & Mast, 2013; Scholz et al., 2016; Wantz, Martarelli, & Mast, 2016). However, others instructed participants to fixate the center (Bochynska & Laeng, 2015; Johansson et al., 2012; Laeng et al., 2014; Laeng & Teodorescu, 2002; Staudte & Altmann, 2016). To us, the center manipulation has three major drawbacks that might account for the varying effects found with this method. First, in the studies applying a central fixation condition to investigate the functional role of looking at nothing, no information was associated with the center. Thus, by comparing retrieval performance under free-gaze instructions with retrieval performance under central-fixation instructions, one compares a condition in which participants look at locations that have been associated with retrieval-relevant information with a condition in which participants look at a location that has not been so associated. Thus, the conditions differ in the amount of information associated with the spatial location. Additionally, an instruction to look at the center of the screen might add an additional memory load that leads to detrimental effects on retrieval performance in comparison to an instruction to gaze freely (see also Martarelli & Mast, 2013; but see Bochynska & Laeng, 2015, and Johansson et al., 2012). Last, a central fixation condition may lead participants to covertly orient attention toward the associated spatial location. The congruent/incongruent manipulation, in our opinion, overcomes these drawbacks: First, the eyes are guided to a location matching the retrieved information versus a location associated with mismatched, irrelevant information. Second, both the congruent and the incongruent manipulation afford an instruction to look at the locations, keeping the load comparable. 
Third, having to gaze at one corner of the screen and then shift attention to a different corner may be more difficult in terms of covertly shifting attention than looking at the center of the screen and shifting attention to one corner, which might be possible by widening the field of view (Hulleman & Olivers, 2017). Thus, the congruent condition/incongruent conditions make it harder for participants to use such a strategy. To arrive at an even more comparable estimate of participants’ retrieval performance, in this study we introduced the center of the screen as a location in the tracking task as well. Subtracting each individual’s performance in the center condition, where no sentence was associated, from her or his performance in conditions taking place in the four spatial areas that were associated with the sentences allowed us to derive an individual estimate of the increase and decrease in performance in the congruent condition/incongruent conditions by simultaneously controlling for the load induced by the tracking task.

In two experiments, we found no functional effect of eye movements (overt shifts of attention) on memory retrieval beyond that of covert shifts of attention. However, there may be situations in which eye movements can indeed aid memory. Whereas in the looking-at-nothing paradigm the screen is almost devoid of any useful information, when the environment is rich in visual information and it is important for successful task completion to encode the visual information, eye movements could be used to link memory representations to objects in the world, reducing working memory demands (Ballard, Hayhoe, Pook, & Rao, 1997; Hayhoe & Ballard, 2005; Spivey & Geng, 2001) and possibly enhancing retrieval performance. The extent to which eye movements may be functional for memory retrieval may also depend on the task difficulty. If the task is relatively easy, for instance, because only a rather small number of visual objects have to be memorized or because objects are located around the center of the screen and might therefore be traceable by a covert shift of attention, an eye movement might not additionally aid retrieval performance. In the same vein, if knowledge becomes strongly represented in memory—for instance, through repetitive testing of the same pieces of information—then the looking-at-nothing behavior can even diminish (Scholz, Mehlhorn, Bocklisch, & Krems, 2011; Wantz, Martarelli, & Mast, 2016). Yet in more complex tasks, for instance, when a complex visual scene has to be retrieved, several statements are auditorily presented only once, or the information has to be reused to form a decision or make an inference (Jahn & Braatz, 2014; Scholz et al., 2017; Scholz et al., 2015), looking at nothing has been found to be stable over more than 60 trials, and eye movements might have added to successful task completion. 
However, future research is needed to systematically investigate how task difficulty interacts with covert and overt shifts of attention when looking at nothing.

In this study, we manipulated the amount of overt and covert shifts of attention by instructing participants either to gaze freely (Experiment 1), to look at the tracking task (Experiment 2), or to covertly shift their attention toward the tracking task (Experiments 1 and 2). In both experiments and experimental conditions, we observed that participants sometimes failed to follow these instructions and only showed “more or less” overt than covert shifts of attention (e.g., eye-movement group of Experiment 1). This leaves open the question of how much of these shifts of attention are needed to achieve the benefits and costs that we observed. Additionally, future research should investigate whether the effect accumulates over time or is binary in nature.

When accessing parts of their mental representations, people direct their attention covertly to associated spatial locations, which activates the programming of corresponding eye movements. This is the case even for verbal information retrieval. We conclude that the process leading people to look at nothing is a shift of attention between information stored in an internal memory representation.

Notes

We report Bayes factors (see Kass & Raftery, 1995; Rouder, Speckman, Sun, Morey, & Iverson, 2009; Wagenmakers, 2007) to provide evidence for or against the null hypothesis. BF01 indicates the odds of the null over the alternative hypothesis and BF10 the odds of the alternative over the null hypothesis. A value greater than 3 can be interpreted as substantial evidence. All Bayesian analyses were performed with JASP Version 0.7.5.
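Because BF01 and BF10 are odds in opposite directions, each is the reciprocal of the other. The following sketch converts between the two and applies the cutoff of 3 mentioned in this note. The function names and the three-way labeling are illustrative assumptions and do not reproduce any JASP output.

```python
def invert_bayes_factor(bf):
    """Convert BF01 to BF10 or vice versa (they are reciprocals)."""
    return 1.0 / bf


def favored_hypothesis(bf01, threshold=3.0):
    """Label which hypothesis a BF01 value favors, given a cutoff."""
    if bf01 > threshold:
        return "null"
    if invert_bayes_factor(bf01) > threshold:
        return "alternative"
    return "inconclusive"


# Values reported in the Results, expressed as BF01.
print(favored_hypothesis(4.01))                          # reported BF01 = 4.01
print(favored_hypothesis(invert_bayes_factor(10.65)))    # reported BF10 = 10.65
print(favored_hypothesis(invert_bayes_factor(2.14)))     # reported BF10 = 2.14
```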

Including sample period as an additional factor did not change the results. For instance, there was no significant main effect for the factor sample period in a repeated-measures ANOVA testing difference in proportion correct by tracking task and condition. Therefore, all analyses were collapsed over the two waves.

Across the two instruction groups, digit identification accuracy did not differ with regard to whether the correct response in the retrieval task was true or false. Digit response times for the first digit were longer than for the remaining digits (M First = 612 ms, SD First = 178 ms, M Remaining = 461 ms, SD Remaining = 136 ms). However, there was no difference between the instruction groups.

References

Abrahamse, E., Majerus, S., Fias, W., & van Dijck, J.-P. (2015). Editorial: Turning the mind’s eye inward: The interplay between selective attention and working memory. Frontiers in Human Neuroscience, 9, 1–3. doi:https://doi.org/10.3389/fnhum.2015.00616

Altmann, G. T. M. (2004). Language-mediated eye movements in the absence of a visual world: The “blank screen paradigm.” Cognition, 93, 79–87. doi:https://doi.org/10.1016/j.cognition.2004.02.005

Awh, E., Jonides, J., & Reuter-Lorenz, P. A. (1998). Rehearsal in spatial working memory. Journal of Experimental Psychology: Human Perception and Performance, 24, 780–790. doi:https://doi.org/10.1037/0096-1523.24.3.780

Ballard, D. H., Hayhoe, M. M., Pook, P. K., & Rao, R. P. (1997). Deictic codes for the embodiment of cognition. Behavioral and Brain Sciences, 20, 723–742.

Barsalou, L. W. (2008). Grounded cognition. Annual Review of Psychology, 59, 617–645. doi:https://doi.org/10.1146/annurev.psych.59.103006.093639

Belopolsky, A. V., & Theeuwes, J. (2009). When are attention and saccade preparation dissociated? Psychological Science, 20, 1340–1347. doi:https://doi.org/10.1111/j.1467-9280.2009.02445.x

Bochynska, A., & Laeng, B. (2015). Tracking down the path of memory: Eye scanpaths facilitate retrieval of visuospatial information. Cognitive Processing, 16, 159–163. doi:https://doi.org/10.1007/s10339-015-0690-0

Brandt, S. A., & Stark, L. W. (1997). Spontaneous eye movements during visual imagery reflect the content of the visual scene. Journal of Cognitive Neuroscience, 9, 27–38. doi:https://doi.org/10.1162/jocn.1997.9.1.27

Deubel, H., & Schneider, W. (1996). Saccade target selection and object recognition: Evidence for a common attentional mechanism. Vision Research, 36, 1827–1837.

Ferreira, F., Apel, J., & Henderson, J. M. (2008). Taking a new look at looking at nothing. Trends in Cognitive Sciences, 12, 405–410. doi:https://doi.org/10.1016/j.tics.2008.07.007

Godijn, R., & Theeuwes, J. (2012). Overt is no better than covert when rehearsing visuo-spatial information in working memory. Memory & Cognition, 40, 52–61. doi:https://doi.org/10.3758/s13421-011-0132-x

Griffin, I. C., & Nobre, A. C. (2003). Orienting attention to locations in internal representations. Journal of Cognitive Neuroscience, 15, 1176–1194. doi:https://doi.org/10.1162/089892903322598139

Guérard, K., Tremblay, S., & Saint-Aubin, J. (2009). The processing of spatial information in short-term memory: Insights from eye tracking the path length effect. Acta Psychologica, 132, 136–144. doi:https://doi.org/10.1016/j.actpsy.2009.01.003

Hayhoe, M., & Ballard, D. (2005). Eye movements in natural behavior. Trends in Cognitive Sciences, 9, 188–194. doi:https://doi.org/10.1016/j.tics.2005.02.009

Hoffman, J. E., & Subramaniam, B. (1995). The role of visual attention in saccadic eye movements. Perception & Psychophysics, 57, 787–795.

Hoover, M. A., & Richardson, D. C. (2008). When facts go down the rabbit hole: Contrasting features and objecthood as indexes to memory. Cognition, 108, 533–542. doi:https://doi.org/10.1016/j.cognition.2008.02.011

Huettig, F., Olivers, C. N. L., & Hartsuiker, R. J. (2011). Looking, language, and memory: Bridging research from the visual world and visual search paradigms. Acta Psychologica, 137, 138–150. doi:https://doi.org/10.1016/j.actpsy.2010.07.013

Hulleman, J., & Olivers, C. N. L. (2017). The impending demise of the item in visual search. Behavioral and Brain Sciences, 40. doi:https://doi.org/10.1017/S0140525X15002794

Irwin, D. E. (2004). Fixation location and fixation duration as indices of cognitive processing. In J. M. Henderson & F. Ferreira (Eds.), The interface of language, vision, and action: Eye movements and the visual world (pp. 105–133). New York, NY: Psychology Press.

Jahn, G., & Braatz, J. (2014). Memory indexing of sequential symptom processing in diagnostic reasoning. Cognitive Psychology, 68, 59–97. doi:https://doi.org/10.1016/j.cogpsych.2013.11.002

Johansson, R., Holsanova, J., Dewhurst, R., & Holmqvist, K. (2012). Eye movements during scene recollection have a functional role, but they are not reinstatements of those produced during encoding. Journal of Experimental Psychology: Human Perception and Performance, 38, 1289–1314. doi:https://doi.org/10.1037/a0026585

Johansson, R., Holsanova, J., & Holmqvist, K. (2006). Pictures and spoken descriptions elicit similar eye movements during mental imagery, both in light and in complete darkness. Cognitive Science, 30, 1053–1079. doi:https://doi.org/10.1207/s15516709cog0000

Johansson, R., & Johansson, M. (2014). Look here, eye movements play a functional role in memory retrieval. Psychological Science, 25, 236–242. doi:https://doi.org/10.1177/0956797613498260

Kass, R. E., & Raftery, A. E. (1995). Bayes factors. Journal of the American Statistical Association, 90, 773–795. doi:https://doi.org/10.1080/01621459.1995.10476572

Kent, C., & Lamberts, K. (2008). The encoding-retrieval relationship: Retrieval as mental simulation. Trends in Cognitive Sciences, 12, 92–98. doi:https://doi.org/10.1016/j.tics.2007.12.004

Kosslyn, S. M. (1994). Image and brain. Cambridge, MA: MIT Press.

Kowler, E., Anderson, E., Dosher, B., & Blaser, E. (1995). The role of attention in the programming of saccades. Vision Research, 35, 1897–1916.

Laeng, B., Bloem, I. M., D’Ascenzo, S., & Tommasi, L. (2014). Scrutinizing visual images: The role of gaze in mental imagery and memory. Cognition, 131, 263–283. doi:https://doi.org/10.1016/j.cognition.2014.01.003

Laeng, B., & Teodorescu, D. (2002). Eye scanpaths during visual imagery reenact those of perception of the same visual scene. Cognitive Science, 26, 207–231. doi:https://doi.org/10.1207/s15516709cog2602

Lawrence, B. M., Myerson, J., & Abrams, R. A. (2004). Interference with spatial working memory: An eye movement is more than a shift of attention. Psychonomic Bulletin & Review, 11, 488–494. doi:https://doi.org/10.3758/BF03196600

Lepsien, J., Griffin, I. C., Devlin, J. T., & Nobre, A. C. (2005). Directing spatial attention in mental representations: Interactions between attentional orienting and working-memory load. NeuroImage, 26, 733–743. doi:https://doi.org/10.1016/j.neuroimage.2005.02.026

Martarelli, C. S., Chiquet, S., Laeng, B., & Mast, F. W. (2017). Using space to represent categories: Insight from gaze position. Psychological Research, 81, 721–729. doi:https://doi.org/10.1007/s00426-016-0781-2

Martarelli, C. S., & Mast, F. W. (2011). Preschool children’s eye-movements during pictorial recall. British Journal of Developmental Psychology, 29, 425–436. doi:https://doi.org/10.1348/026151010X495844

Martarelli, C. S., & Mast, F. W. (2013). Eye movements during long-term pictorial recall. Psychological Research, 77, 303–309. doi:https://doi.org/10.1007/s00426-012-0439-7

Morey, R. D. (2008). Confidence intervals from normalized data: A correction to Cousineau (2005). Tutorials in Quantitative Methods for Psychology, 4, 61–64.

Mulckhuyse, M., & Theeuwes, J. (2010). Unconscious attentional orienting to exogenous cues: A review of the literature. Acta Psychologica, 134, 299–309. doi:https://doi.org/10.1016/j.actpsy.2010.03.002

Olivers, C. N. L., Meijer, F., & Theeuwes, J. (2006). Feature-based memory-driven attentional capture: Visual working memory content affects visual attention. Journal of Experimental Psychology: Human Perception and Performance, 32, 1243–1265. doi:https://doi.org/10.1037/0096-1523.32.5.1243

Pearson, D., & Sahraie, A. (2003). Oculomotor control and the maintenance of spatially and temporally distributed events in visuo-spatial working memory. Quarterly Journal of Experimental Psychology, 56A, 1089–1111. doi:https://doi.org/10.1080/02724980343000044

Platzer, C., Bröder, A., & Heck, D. (2014). Deciding with the eye: How the visually manipulated accessibility of information in memory influences decision behavior. Memory & Cognition, 42, 595–608. doi:https://doi.org/10.3758/s13421-013-0380-z

Postle, B. R., Idzikowski, C., Sala, S. D., Logie, R. H., & Baddeley, A. D. (2006). The selective disruption of spatial working memory by eye movements. Quarterly Journal of Experimental Psychology, 59, 100–120. doi:https://doi.org/10.1080/17470210500151410

Renkewitz, F., & Jahn, G. (2012). Memory indexing: A novel method for tracing memory processes in complex cognitive tasks. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38, 1622–1639. doi:https://doi.org/10.1037/a0028073

Richardson, D. C., Altmann, G. T. M., Spivey, M. J., & Hoover, M. A. (2009). Much ado about eye movements to nothing. Trends in Cognitive Sciences, 13, 235–236. doi:https://doi.org/10.1016/j.tics.2009.02.006

Richardson, D. C., & Kirkham, N. Z. (2004). Multimodal events and moving locations: Eye movements of adults and 6-month-olds reveal dynamic spatial indexing. Journal of Experimental Psychology: General, 133, 46–62. doi:https://doi.org/10.1037/0096-3445.133.1.46

Richardson, D. C., & Spivey, M. J. (2000). Representation, space and Hollywood Squares: Looking at things that aren’t there anymore. Cognition, 76, 269–295. doi:https://doi.org/10.1016/S0010-0277(00)00084-6

Rizzolatti, G., Riggio, L., & Sheliga, B. M. (1987). Space and selective attention. In C. Umiltà & M. Moscovitch (Eds.), Attention and performance XV: Conscious and nonconscious information processing (pp. 231–265). Cambridge, MA: MIT Press/Bradford Books.

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16, 225–237. doi:https://doi.org/10.3758/PBR.16.2.225

Scholz, A., Krems, J. F., & Jahn, G. (2017). Watching diagnoses develop: Eye movements reveal symptom processing during diagnostic reasoning. Psychonomic Bulletin & Review. doi:https://doi.org/10.3758/s13423-017-1294-8

Scholz, A., Mehlhorn, K., Bocklisch, F., & Krems, J. F. (2011). Looking at nothing diminishes with practice. In L. Carlson, C. Hoelscher, & T. F. Shipley (Eds.), Proceedings of the 33rd annual conference of the Cognitive Science Society (pp. 1070–1075). Austin, TX: Cognitive Science Society.

Scholz, A., Mehlhorn, K., & Krems, J. F. (2016). Listen up, eye movements play a role in verbal memory retrieval. Psychological Research, 80, 149–158. doi:https://doi.org/10.1007/s00426-014-0639-4

Scholz, A., von Helversen, B., & Rieskamp, J. (2015). Eye movements reveal memory processes during similarity- and rule-based decision making. Cognition, 136, 228–246. doi:https://doi.org/10.1016/j.cognition.2014.11.019

Smyth, M. M. (1996). Interference with rehearsal in spatial working memory in the absence of eye movements. Quarterly Journal of Experimental Psychology, 49A, 940–949. doi:https://doi.org/10.1080/713755669

Smyth, M. M., & Scholey, K. A. (1994). Interference in immediate spatial memory. Memory & Cognition, 22, 1–13.

Souza, A. S., & Oberauer, K. (2016). In search of the focus of attention in working memory: 13 years of the retro-cue effect. Attention, Perception, & Psychophysics, 78, 1839–1860. doi:https://doi.org/10.3758/s13414-016-1108-5

Spivey, M. J., & Geng, J. J. (2001). Oculomotor mechanisms activated by imagery and memory: Eye movements to absent objects. Psychological Research, 65, 235–241. doi:https://doi.org/10.1007/s004260100059

Staudte, M., & Altmann, G. T. M. (2016). Recalling what was where when seeing nothing there. Psychonomic Bulletin & Review, 24, 400–407. doi:https://doi.org/10.3758/s13423-016-1104-8

Tas, A. C., Luck, S. J., & Hollingworth, A. (2016). The relationship between visual attention and visual working memory encoding: A dissociation between covert and overt orienting. Journal of Experimental Psychology: Human Perception and Performance, 42, 1121–1138. doi:https://doi.org/10.1037/xhp0000212

Theeuwes, J., Belopolsky, A. V., & Olivers, C. N. L. (2009). Interactions between working memory, attention and eye movements. Acta Psychologica, 132, 106–114. doi:https://doi.org/10.1016/j.actpsy.2009.01.005

Theeuwes, J., Kramer, A. F., & Irwin, D. E. (2011). Attention on our mind: The role of spatial attention in visual working memory. Acta Psychologica, 137, 248–251. doi:https://doi.org/10.1016/j.actpsy.2010.06.011

Thomas, L. E., & Lleras, A. (2009). Covert shifts of attention function as an implicit aid to insight. Cognition, 111, 168–174. doi:https://doi.org/10.1016/j.cognition.2009.01.005

Thomas, N. J. T. (1999). Are theories of imagery theories of imagination? An active perception approach to conscious mental content. Cognitive Science, 23, 207–245.

Wagenmakers, E.-J. (2007). A practical solution to the pervasive problems of p values. Psychonomic Bulletin & Review, 14, 779–804. doi:https://doi.org/10.3758/BF03194105

Wantz, A. L., Martarelli, C. S., Cazzoli, D., Kalla, R., Müri, R., & Mast, F. W. (2016). Disrupting frontal eye-field activity impairs memory recall. NeuroReport, 27, 374–378. doi:https://doi.org/10.1097/WNR.0000000000000544

Wantz, A. L., Martarelli, C. S., & Mast, F. W. (2016). When looking back to nothing goes back to nothing. Cognitive Processing, 17, 105–114. doi:https://doi.org/10.1007/s10339-015-0741-6

Yantis, S., & Jonides, J. (1981). Abrupt visual onsets and selective attention: Evidence from visual search. Journal of Experimental Psychology: Human Perception and Performance, 7, 937–947.

Acknowledgements

Parts of the data of Experiment 1 were presented at the 37th annual meeting of the Cognitive Science Society (July 2015, Pasadena, CA). Agnes Scholz gratefully acknowledges the support of the Swiss National Science Foundation (grant PP00P1_157432). Furthermore, the authors thank Helene Kreysa, Elke Lange, Corinna Martarelli, and Alessandra Souza for helpful comments on a previous version of the manuscript; Anita Todd for editing the manuscript; and Daniela Eileen Lippoldt and Marisa Müller for their help in collecting the data.

Author note

Agnes Scholz, Department of Psychology, University of Zurich, Switzerland; Anja Klichowicz and Josef F. Krems, Department of Psychology, Chemnitz University of Technology, Germany.

Cite this article

Scholz, A., Klichowicz, A. & Krems, J.F. Covert shifts of attention can account for the functional role of “eye movements to nothing”. Mem Cogn 46, 230–243 (2018). https://doi.org/10.3758/s13421-017-0760-x

DOI: https://doi.org/10.3758/s13421-017-0760-x