Abstract

The purpose of this study was to assess whether the differential effects of working memory (WM) components (the central executive, phonological loop, and visual–spatial sketchpad) on math word problem-solving accuracy in children (N = 413, ages 6–10) are completely mediated by reading, calculation, and fluid intelligence. The results indicated that all three WM components predicted word problem solving in the nonmediated model, but only the storage component of WM yielded a significant direct path to word problem-solving accuracy in the fully mediated model. Fluid intelligence was found to moderate the relationship between WM and word problem solving, whereas reading, calculation, and related skills (naming speed, domain-specific knowledge) completely mediated the influence of the executive system on problem-solving accuracy. Our results are consistent with findings suggesting that storage eliminates the predictive contribution of executive WM to various measures (Colom, Rebollo, Abad, & Shih, Memory & Cognition, 34, 158–171, 2006). The findings suggest that the storage component of WM, rather than the executive component, has a direct path to higher-order processing in children.


Although basic calculation is an important part of math competence, another aspect of math that may be equally important, and that warrants further investigation, is word problem solving. Word problems are math exercises in which background information is presented as text rather than as mathematical notation and equations, and solving them is one of the most important ways through which students learn to select and apply the appropriate strategies for solving real-world problems.

Several lines of inquiry have indicated that one domain-general process, working memory (WM), may play an important role in problem solving in children (e.g., Lee, Ng, Ng, & Lim, 2004; Passolunghi & Mammarella, 2010; Swanson & Beebe-Frankenberger, 2004; Zheng, Swanson, & Marcoulides, 2011). This connection becomes apparent when the steps involved in math problem solving are taken into consideration. For example, solving a word problem such as “13 pencils are for sale, and 6 pencils have erasers on top. The pencils are large. How many pencils do not have erasers?” involves a variety of mental activities. Children must access prestored information (e.g., 13 pencils), access the appropriate algorithm (e.g., 13 minus 6), and apply problem-solving processes to control its execution (e.g., ignoring the irrelevant information). Given the multistep nature of word problems, WM is likely to play a major role in solution accuracy.
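As a toy illustration of these steps (not a cognitive model of the child), the pencil problem can be sketched in Python. The `Proposition` class, its `relevant` flag, and `solve_pencil_problem` are hypothetical; in particular, the relevance judgment that children must actually make is hard-coded here rather than computed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Proposition:
    text: str
    quantity: Optional[int]  # number stated in the proposition, if any
    relevant: bool           # False marks extraneous information


def solve_pencil_problem(props: List[Proposition]) -> int:
    # Hold only the relevant quantities in mind; extraneous propositions are ignored.
    total, with_erasers = [p.quantity for p in props if p.relevant and p.quantity is not None]
    # Apply the retrieved algorithm: total pencils minus the subset with erasers.
    return total - with_erasers


problem = [
    Proposition("13 pencils are for sale", 13, True),
    Proposition("6 pencils have erasers on top", 6, True),
    Proposition("The pencils are large", None, False),  # extraneous
]
print(solve_pencil_problem(problem))  # 7
```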

By far the most utilized framework for understanding the role of WM in problem solving is Baddeley’s multiple-component model (Baddeley & Logie, 1999). In this model, WM consists of three components: the visual–spatial sketchpad, the phonological loop, and the central executive. The visual–spatial sketchpad is responsible for the temporary storage of visual and spatial information, and it is important for the manipulation of mental images, such as mathematical symbols and shapes. The phonological loop is responsible for the temporary storage of verbal information, and it is important for the storage of text and verbal information, such as the story in a word problem. It is also used for encoding and maintaining calculation operands (Furst & Hitch, 2000; Noël, Désert, Aubrun, & Seron, 2001). The central executive coordinates activities between the two subsystems (i.e., the visual–spatial sketchpad and phonological loop) and increases the amount of information that can be stored in them. This model has been revised to include an episodic buffer (Baddeley, 2000, 2012), but support for the tripartite model has been found across various age groups of children (e.g., Gathercole, Pickering, Ambridge, & Wearing, 2004). For example, Gray et al. (2017) added measures of the episodic buffer (tasks that bind verbal and visual information) to a battery of WM measures given to 7- to 9-year-olds, and they found weak support for the four-factor model in comparison with Baddeley’s earlier three-factor model (e.g., Baddeley & Logie, 1999). In addition, because the fourth component was a later addition to what was originally a three-component model, it was not analyzed here. Thus, this study focuses on the three-factor structure consistent with Baddeley’s earlier model.

Although several studies have suggested that the capacity and efficiency of WM are associated with word problem-solving performance (e.g., Passolunghi & Mammarella, 2010; Zheng et al., 2011), not all studies have shown a significant relation between WM and problem solving (Fuchs et al., 2006; Lee et al., 2004). For example, Lee et al. (2004) assessed the performance of 10-year-olds on measures of word problem solving, WM, intelligence, and reading ability. They found that children with greater WM capacity were better able to solve mathematical problems. At the same time, children with higher IQs, better reading skills, and larger vocabularies performed better on mathematical word problems. Furthermore, WM did not contribute important variance to word problem solving after measures of reading had been entered into the regression analysis. In contrast, some studies have shown that reading or reading-related processes do not directly mediate the influence of WM on problem solving (e.g., Passolunghi, Cornoldi, & De Liberto, 1999; Swanson & Sachse-Lee, 2001). For example, Swanson and Sachse-Lee found among children with math disabilities that phonological processing, WM (executive component), and visual WM each contributed unique variance to word problem solving. Thus, they did not find support for the idea that reading ability or literacy processes mediated the role of WM in word problem solving.

Overall, the cognitive processes that underlie or mediate the relationship between WM and problem solving remain unclear from the existing literature. Likewise, among studies that have found a direct relationship between WM and word problem solving, it has been unclear which components of WM predict word problem solving. Although some authors (Conway, Cowan, Bunting, Therriault, & Minkoff, 2002; Engle, Tuholski, Laughlin, & Conway, 1999; Kane et al., 2004) have found that the executive component of WM, and not storage, predicted higher-order processes (in this case, intelligence), others have found that a common storage system (e.g., the phonological loop) was a better predictor of higher-order skills than was the executive component of WM (e.g., Colom, Abad, Rebollo, & Shih, 2005; Colom, Flores-Mendoza, Quiroga, & Privado, 2005).

In summary, although some studies have suggested a significant relationship between different components of WM and word problem solving, this relationship may be completely mediated by individual differences in children’s skills in reading, math, and intelligence. Thus, further research is necessary to determine whether the components of WM have a direct relationship to word problem solving when the aforementioned mediator variables are taken into consideration. We considered three models to account for the mediation effects between WM and word problem solving: One focuses on fluid intelligence, a second focuses on a child’s knowledge base, and a third relates to reading processes (these models are further discussed in Swanson & Beebe-Frankenberger, 2004).

The first model suggests that processes related to an executive system, as captured by measures of fluid intelligence, mediate the relationship between WM and problem solving. Fluid intelligence, or higher-order thinking skills, is highly dependent on the cognitive functions of WM. Several studies have suggested that WM and fluid intelligence are driven by the same executive processes (e.g., Conway et al., 2002), whereas other studies have suggested that WM and fluid intelligence operate as distinct constructs in predictions of academic performance (e.g., Ackerman, Beier, & Boyle, 2005). However, findings concerning the relationship between fluid intelligence and WM have been somewhat mixed. Thus, in the subsequent modeling we hoped to determine whether fluid intelligence is best modeled as a unique independent variable or as a mediating variable that underlies the contributions of WM to word problem solving.

A second model suggests that a child’s knowledge base plays a major role in mediating the influence of WM on problem solving. Several capacity models suggest that WM is the activated portion of declarative long-term memory (LTM; Anderson, Reder, & Lebiere, 1996; Cantor & Engle, 1993); that is, WM capacity influences the amount of resources available to activate knowledge (see Conway & Engle, 1994, for a review of this model). More specifically, a word problem introduces information into WM. The contents of WM are then compared with possible action sequences (e.g., associative links) in LTM (Ericsson & Kintsch, 1995). When a match is found (recognized), the contents of WM are updated and used to generate a solution. In the present study, we assessed whether the retrievability of contents in LTM mediates the relationship between WM and problem solving. The specific contents of interest were related to calculation skills (correct answers to calculation problems) as well as to accessing numerical, relational, question, extraneous information, and the appropriate operations and algorithms for problem solution (Mayer & Hegarty, 1996; Swanson, Cooney, & Brock, 1993).

A final model holds that the influence of WM on children’s word problem solving is primarily mediated by reading processes. Because mathematical word problems are a form of text, and because the decoding and comprehension of text draw on the phonological system (see Baddeley, Gathercole, & Papagno, 1998, for a review), reading may mediate the relationship between WM and problem-solving tasks. We would expect reading to play a key role in mediating the effects of the phonological loop on word problem solving, because the phonological loop (temporary storage of verbal information) shares a substrate with reading processes. A related phonological process that may mediate the influence of the phonological loop on word problem solving is naming speed. Rapid naming is assumed to enhance the effectiveness of subvocal rehearsal processes and hence to reduce the decay of memory items in the phonological store prior to output (e.g., Henry & Millar, 1993). Naming speed has been interpreted as a measure of how quickly items can be encoded and rehearsed within the phonological loop (e.g., McDougall, Hulme, Ellis, & Monk, 1994).

The present study addressed two questions:

1. Is there a direct relationship between the components of WM and word problem solving when the mediating roles of reading, calculation, and fluid intelligence are included in the analysis?

Although several studies have shown that reading, calculation, and fluid intelligence are associated with word problem solving (e.g., Kyttälä & Björn, 2014; Swanson, Jerman, & Zheng, 2008; Vilenius-Tuohimaa, Aunola, & Nurmi, 2008; Zheng et al., 2011), this study was designed to determine whether a direct relationship would emerge between WM and word problem solving when the mediating effects of reading, math, and fluid intelligence were taken into consideration. On the basis of studies that had shown differential contributions of the WM components (i.e., central executive, phonological loop, and visual–spatial sketchpad) to word problem solving (e.g., Meyer, Salimpoor, Wu, Geary, & Menon, 2010; Swanson & Sachse-Lee, 2001; Zheng et al., 2011), we hypothesized that the phonological loop (the storage component) would have the strongest direct link to word problem solving.

2. Do other processes, besides those related to reading and calculation skills, uniquely mediate the relationship between the components of WM and word problem solving?

Previous studies have attributed proficiency in word problem solving to naming speed (e.g., Cirino, 2011; Geary, 2011; Swanson & Kim, 2007) and problem representation (e.g., Swanson, 2004; Zheng et al., 2011), to name a few of the processes. Therefore, in the present study we aimed to determine whether WM contributes a direct path to word problem solving when these processes are included as mediators. For example, several studies have revealed links between naming speed and academic skills such as reading (e.g., Landerl & Wimmer, 2008; Lepola, Poskiparta, Laakkonen, & Niemi, 2005; Schatschneider, Fletcher, Francis, Carlson, & Foorman, 2004; Wolf & Bowers, 1999), and it can therefore be hypothesized that naming speed would mediate the relationship between WM and word problem solving.

Method

Participants

The data were gathered as part of a larger research project conducted from 2008 to 2014. The sample from the larger study included children in Grades 2 through 5. Data from a subset of third graders in Years 1 and 2 are reported in Swanson and Fung (2016). The present sample was drawn from the Year 3 data and included only second, third, and fourth graders, because this yielded the larger sample. Children were selected from two charter schools and two public schools in the American Southwest. After parent permission had been obtained, the sample consisted of 413 children (213 females and 200 males), ages 6–10 (M = 8.38, SD = 0.51), from 35 classrooms. The majority of the sample (75%) was drawn from Grade 3 (8-year-olds), 10% from Grade 2, and 15% from Grade 4. The age ranges are reported in Table 1. The sample consisted of 207 Caucasians, 128 Hispanics, 23 African Americans, 22 Asians, and 33 who identified as Other (e.g., Native American, Vietnamese, or Pacific Islander). The sample was primarily of low to middle socioeconomic status (SES), based on free lunch participation, parent education, or parent occupation. Thirty-five percent of the sample received federal assistance from the free lunch program. All children were tested in two sessions in January or February.

Descriptions of the criterion and predictor variables, and of the procedures for each measure, follow.

Criterion measures

Word problem solving

Three measures were used to assess word problem solving: the story problem subtest of the Test of Mathematical Abilities, second edition (TOMA-2; Brown, Cronin, & McEntire, 1994), the Story Problem Solving subtest of the Comprehensive Mathematical Abilities Test (CMAT; Hresko, Schlieve, Herron, Swain, & Sherbenou, 2003), and the KeyMath Revised Diagnostic Assessment (KeyMath; Connolly, 1998). In the TOMA, children were asked to silently read a short story problem that ended with a computational question about the story (e.g., reading about Jack and his dogs, ending with “How many pets does Jack have?”) and then to work out the answer on their own in the space provided. The reported reliability coefficient for the subtest is above .80.

The CMAT included word problems that increased in difficulty. The tester read each of the problems to the children, asking them to read along on their own paper. They were then asked to solve the word problem by writing out the answer. Two forms of the measure were created that varied only in names and numbers. The two forms were counterbalanced across presentation order. The manual for the CMAT subtest reported adequate reliabilities (>.86) and moderate correlations (>.50) when compared with other math standardized tests (e.g., the Stanford Diagnostic Math Test).

The KeyMath word problem-solving subtest involved the tester reading a series of word problems to the children while showing a picture illustrating each problem and then asking them to verbalize the answer. Both equivalent forms of the KeyMath were used (Forms A and B), and the two forms were counterbalanced across presentation order. The KeyMath manual reported reliability at .90, with split-half reliability in the high .90s. Cross-validation with the Iowa Test of Basic Skills yielded an overall correlation of .76.

Predictor measures

Three measures captured executive processing, two captured performance related to the visual–spatial sketchpad, and three captured performance related to the phonological loop. The tasks used in this study were taken from Swanson and colleagues (Swanson & Beebe-Frankenberger, 2004; Swanson et al., 2008), and the reader is referred to these sources for further descriptions of the psychometric characteristics of the measures. The executive WM tasks administered in this study follow the same format as Daneman and Carpenter’s (1980) Listening Span measure. The listening span format, rather than the reading span format, was selected because reading skills varied in the sample (i.e., young children were tested). Consistent with Daneman and Carpenter’s seminal WM measure, processing was assessed by asking participants simple questions about the to-be-remembered material, whereas storage was assessed by the accuracy of item retrieval. The question required a simple recognition of new and old information and was analogous to the yes/no response feature of Daneman and Carpenter’s task. It is important to note, however, that in these tasks the difficulty of the processing question remained constant within task conditions, whereas the number of items to be recalled within the list increased incrementally. Furthermore, the questions focused on the discrimination of items (old and new information) rather than on deeper levels of processing such as mathematical computations (e.g., Towse, Hitch, & Hutton, 1998). All WM tasks were administered starting at the smallest list length (two items) and proceeded incrementally in list length until an error occurred (e.g., an item omitted or recalled out of order). The Cronbach alpha for each task used in this study, with age partialed out, was > .80.
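The administration rule described above (start at two items and lengthen the list until the first omission or out-of-order recall) can be sketched as follows. This is a hypothetical illustration: the `recall` callback stands in for the child’s response, the processing question is omitted, and scoring the span as the longest list recalled without error is an assumption, since the text does not state the exact scoring rule.

```python
from typing import Callable, List, Sequence


def administer_span(recall: Callable[[List[str]], List[str]],
                    items: Sequence[str], start: int = 2, max_len: int = 8) -> int:
    """Return the longest list length recalled correctly before the first error
    (hypothetical scoring rule)."""
    span = 0
    for length in range(start, max_len + 1):
        presented = list(items[:length])
        # An omission, an intrusion, or an out-of-order recall all make the
        # response differ from the presented list, which ends the task.
        if recall(presented) != presented:
            break
        span = length
    return span


def limited_recall(presented: List[str]) -> List[str]:
    # Simulated child who can hold four items and drops anything beyond that.
    return presented[:4]


words = ["cat", "pen", "sun", "cup", "hat", "box", "map", "jar"]
print(administer_span(limited_recall, words))  # 4
```

Because the task stops at the first error, the list length at which recall first fails bounds the score; a child who reverses order fails at the first (two-item) list and scores 0 under this rule.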

Central executive

This component of WM was measured using three tasks. The Listening Sentence Span task assessed children’s ability to remember information embedded in a short sentence (Daneman & Carpenter, 1980; Swanson, 1992). Testers read a series of sentences to each child, asked a question about the content of one of the sentences, and then asked the child to recall the last word of each sentence in order. For example, a two-sentence set was: (Listen) “Many animals live on the farm. People have used masks since early times.” (Question) “What have been used since early times?”

The Conceptual Span task assessed children’s ability to organize sequences of words into abstract categories (Swanson, 1992). Children were presented with a set of words (e.g., “shirt, saw, pants, hammer, shoes, nails”) and asked which of the words “go together.”

The Auditory Digit Sequence task assessed children’s ability to remember numerical information embedded in a short sentence (Swanson, 1992). Children were presented with numbers in a sentence context (e.g., “Now suppose somebody wanted to have you take them to the supermarket at 8 6 5 1 Elm Street?”) and asked to recall the numbers in the sentence.

Visual–spatial sketchpad

This component of WM was measured using two tasks. The Mapping and Direction Span task assessed whether the children could recall a visual–spatial sequence of directions on a map with no labels (Swanson, 1992). Children were presented with a map of a “city” for 10 s that contained lines connected to dots and squares (buildings were squares, dots were stoplights, and lines and arrows were directions to travel). After the removal of the map, children were asked to draw the lines and dots on a blank map. The difficulty ranged from a map with two arrows and two stoplights to a map with two arrows and twelve stoplights. The dependent measure was based on the number of correctly answered processing questions, recalled dots, recalled lines between the dots, and correct arrows (to receive credit, an arrow had to be both in the correct spot and pointing in the correct direction); the number of insertions (extra dots, lines, and arrows; i.e., errors) was also noted.
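A sketch of how the Mapping and Direction Span counts might be computed, assuming a hypothetical encoding of a map as sets of dot positions, undirected lines between dots, and (position, direction) arrows. The encoding, the `score_map` name, and the treatment of a misdirected arrow as an inserted arrow are assumptions for illustration; the processing-question score is not modeled.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

Dot = Tuple[int, int]        # grid position of a stoplight (hypothetical encoding)
Line = FrozenSet[Dot]        # undirected connection between two dots
Arrow = Tuple[Dot, str]      # (position, direction such as "N" or "E")


@dataclass(frozen=True)
class MapDrawing:
    dots: FrozenSet[Dot]
    lines: FrozenSet[Line]
    arrows: FrozenSet[Arrow]


def score_map(target: MapDrawing, drawn: MapDrawing) -> dict:
    return {
        "dots": len(target.dots & drawn.dots),
        "lines": len(target.lines & drawn.lines),
        # An arrow earns credit only when position AND direction both match.
        "arrows": len(target.arrows & drawn.arrows),
        # Anything drawn that is not in the target counts as an insertion;
        # a misdirected arrow is therefore treated as an inserted arrow (assumption).
        "insertions": len(drawn.dots - target.dots)
        + len(drawn.lines - target.lines)
        + len(drawn.arrows - target.arrows),
    }


target = MapDrawing(
    dots=frozenset({(0, 0), (1, 1)}),
    lines=frozenset({frozenset({(0, 0), (1, 1)})}),
    arrows=frozenset({((0, 0), "N"), ((1, 1), "E")}),
)
drawn = MapDrawing(
    dots=frozenset({(0, 0), (1, 1), (2, 2)}),         # one extra dot
    lines=frozenset({frozenset({(0, 0), (1, 1)})}),
    arrows=frozenset({((0, 0), "N"), ((1, 1), "W")}),  # second arrow misdirected
)
print(score_map(target, drawn))  # {'dots': 2, 'lines': 1, 'arrows': 1, 'insertions': 2}
```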

The Visual Matrix task assessed children’s ability to remember visual sequences within a matrix (Swanson, 1992). Children were presented a series of dots in a matrix and were allowed 5 s to study the pattern. After removal of the matrix, children were asked to draw the dots they remembered seeing in the corresponding boxes of a blank matrix. The difficulty ranged from a matrix of four squares with two dots to a matrix of 45 squares with 12 dots.

Phonological loop

This component of WM was measured using three tasks. The Forward Digit Span subtest of the Wechsler Intelligence Scale for Children, third edition (WISC-III; Wechsler, 1991) was used to assess short-term memory, on the assumption that forward digit span involves a subsidiary memory system (the phonological loop). The task involves a series of orally presented numbers that children repeat back verbatim. There are eight number sets with two trials per set, and the number of digits increases from two to nine. The WISC-III manual reported a test–retest reliability of .91 and a Cronbach’s alpha of .84.

The Word Span task was previously used by Swanson (2004) and assessed children’s ability to recall increasingly large word lists. Testers read to children lists of common but unrelated nouns, and children were asked to recall the words. Word lists gradually increased in set size from a minimum of two words to a maximum of eight. Cronbach’s alpha was previously reported as .62 (Swanson & Beebe-Frankenberger, 2004).

The Phonetic Memory Span task assessed children’s ability to recall increasingly large lists of nonsense words (e.g., des, seeg, seg, geez, deez, dez) ranging from two to seven words per list (Swanson & Berninger, 1995). Cronbach’s alpha was previously reported as .82 (Swanson & Beebe-Frankenberger, 2004).

Mediating measures

Reading

Reading comprehension was assessed by the Passage Comprehension subtest of the Test of Reading Comprehension, third edition (TORC; Brown, Hammill, & Wiederholt, 1995). This measure assessed children’s comprehension of the meaning of a text’s topic or subject during reading. For each item, children were instructed to silently read a preparatory list of five questions, then read a short story presented in a brief paragraph, and finally answer the five comprehension questions (each with four multiple-choice options) about the story’s content. Coefficient alphas calculated across ages are reported at .90 or above. Test–retest reliability ranged from .79 to .88, and interrater reliabilities ranged from .87 to .98.

Calculation

The arithmetic computation subtest of the Wide Range Achievement Test, third edition (WRAT; Wilkinson, 1993) and the Numerical Operations subtest of the Wechsler Individual Achievement Test (WIAT; Psychological Corp., 1992) were administered to measure calculation ability. Both subtests required children to perform written computation on number problems that increased in difficulty, beginning with single-digit calculations and continuing up to algebra. The reported WRAT coefficient alphas range from .81 to .92, and the WIAT reliability coefficients are similar, ranging from .82 to .91.

A version of the Test of Computational Fluency (CBM), adapted from Fuchs, Fuchs, Eaton, Hamlett, and Karns (2000), was also administered. Children were required to write answers to 25 basic math calculation problems, matched to grade level, within 2 min. The dependent measure was the number of problems solved correctly. Cronbach’s alpha has previously been reported as an adequate .85 (Swanson & Beebe-Frankenberger, 2004).

Rapid automatized naming speed

The Rapid Digit Naming and Rapid Letter Naming subtests of the Comprehensive Test of Phonological Processing (CTOPP; Wagner, Torgesen, & Rashotte, 2000) were administered to assess speed in naming numbers and letters. Children received a page that contained four rows and nine columns of randomly arranged numbers (e.g., 4, 7, 8, 5, 2). Children were required to name the numbers as quickly as possible for each of two stimulus arrays containing 36 numbers, for a total of 72 numbers. The dependent measure was the total time to name both arrays. The Rapid Letter Naming subtest is identical in format and scoring to the Rapid Digit Naming subtest, except that it measures the speed with which children can name randomly arranged letters (e.g., s, t, n, a, k) rather than numbers. Coefficient alphas for the CTOPP Rapid Digit Naming subtest ranged from .75 to .96, with an average of .87, and those for the Rapid Letter Naming subtest ranged from .70 to .92, with an average of .82. Coefficient alphas for the Rapid Naming composite score (created from the Rapid Digit and Rapid Letter Naming subtests) ranged from .87 to .96, with an average of .92, indicating a consistently high level of overall reliability. Test–retest reliability was .87 for the Rapid Digit Naming subtest and .92 for the Rapid Letter Naming subtest, with an acceptable composite test–retest reliability of .90.

Fluid intelligence

The Colored Progressive Matrices (Raven, 1976) were administered to assess fluid intelligence. Children were given a booklet with patterns displayed on each page and with each pattern revealing a missing piece. Six possible replacement pattern pieces were presented, and children were required to circle the replacement piece that best completed the pattern. The patterns progressively increased in difficulty. Cronbach’s coefficient alpha was an adequate .88.

Word problem solving components

This is an experimental instrument designed to assess the ability to identify the components of word problems (Swanson & Beebe-Frankenberger, 2004; Swanson & Sachse-Lee, 2001). Each booklet contained three problems, each followed by items assessing recall of the problem’s text. To control for reading problems, the examiner orally read (a) each problem and (b) all multiple-choice response options as children followed along.

After each problem was read, children were asked to turn to the next page, on which they saw the following statement: “Without looking back at the problem, circle the question the story problem was asking on the last page.” The multiple-choice questions for the sample problem (about Darren and his pine cones) were (a) How many pine cones did Darren have in all? (b) How many pine cones did Darren start with? (c) How many pine cones did Darren keep? and (d) How many pine cones did Darren throw back? This page assessed the ability to correctly identify the question proposition of each story problem.

On the next page for each problem, the instructions were, “Without looking back at the problem, try to identify the numbers in the problem.” The multiple-choice options for the sample problem were (a) 15 and 5, (b) 5 and 10, (c) 15 and 20, and (d) 5 and 20. This page assessed the ability to correctly identify the numbers in the two assignment propositions of each story problem.

The instructions on the next page were, “Without looking back at the problem, identify what the question wants you to find.” The multiple-choice questions were (a) The total number of pine cones Darren found all together, (b) What Darren plans to do with the pine cones, (c) The total number of pine cones Darren had thrown away, and (d) The difference between the pine cones Darren kept and the ones he threw back. This page assessed the ability to correctly identify the goals in the two assignment propositions of each word problem.

The instructions for the final page were, “Without looking back at the problem, identify whether addition, subtraction, or multiplication was needed to solve the problem.” Children were directed to choose one of two or three operations: (a) addition, (b) subtraction, and (c) multiplication. After choosing an operation, children were asked to identify the number sentence they would use to solve the problem: (a) 15 × 5 =, (b) 15 + 10 =, (c) 15 – 5 =, or (d) 15 + 5 =. This page of the booklet assessed the ability to correctly identify the operation and the algorithm, respectively.

At the end of each booklet, children were read a series of true/false statements. All statements were related to the extraneous propositions for each story problem within the booklet. For example, the statement “Darren used pine cones to make ornaments” would be true, whereas the statement “Darren used pine cones to draw pictures” would be false. Based on Swanson (2004), the word problem-solving components were divided into two constructs. The problem representation construct consisted of the question, number assignment, and goals. The solution planning construct consisted of the operation and algorithm components.
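Assuming, for illustration only, that each construct is a simple count of correct responses across a booklet’s three problems (the text specifies which components form each construct, not how they were scored), the grouping can be sketched as follows. The component keys are hypothetical labels, and the extraneous-information true/false items belong to neither construct.

```python
from typing import Dict, List

# Hypothetical component labels, grouped as described in the text (after Swanson, 2004).
REPRESENTATION = ("question", "numbers", "goals")
PLANNING = ("operation", "algorithm")


def construct_scores(responses: List[Dict[str, bool]]) -> Dict[str, int]:
    """Count correct answers per construct; one dict (component -> correct?) per problem."""
    return {
        "representation": sum(r[c] for r in responses for c in REPRESENTATION),
        "planning": sum(r[c] for r in responses for c in PLANNING),
    }


booklet = [
    {"question": True, "numbers": True, "goals": False, "operation": True, "algorithm": False},
    {"question": True, "numbers": False, "goals": True, "operation": True, "algorithm": True},
    {"question": False, "numbers": True, "goals": True, "operation": False, "algorithm": False},
]
print(construct_scores(booklet))  # {'representation': 6, 'planning': 3}
```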

Statistical analysis

The Mplus 6.11 software (Muthén & Muthén, 1998–2011) was used to conduct the path analyses. Because some measures had missing data, maximum likelihood estimation was used. A preliminary screening identified 22 outliers (scores 3.5 standard deviations above or below the mean on a given measure); removing these cases yielded the final sample size of 413. The path analyses (discussed below) were conducted with and without the outliers (Hendra & Staum, 2010). Including the outliers resulted in higher skewness and kurtosis for several measures and in poorer model fit, so the final analyses were conducted without them. Examination of the descriptive statistics indicated that the remaining sample met the assumption of normality (e.g., skewness below 3, kurtosis below 4, and standard deviations not larger than the means).
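The screening rules above can be sketched in plain Python. Several details are assumptions rather than reported procedure: an entire case is dropped if any one of its scores lies beyond ±3.5 SD of that measure’s mean; means and SDs are computed from observed (non-missing) values; and skewness and kurtosis use population-moment formulas, with kurtosis expressed as excess kurtosis. The function names are hypothetical.

```python
import statistics
from typing import Dict, List, Optional, Tuple

Row = Dict[str, Optional[float]]  # one child's scores; None marks a missing value


def drop_outlier_cases(rows: List[Row], cutoff: float = 3.5) -> List[Row]:
    """Drop any case whose score on any measure lies beyond +/- cutoff SDs of that measure's mean."""
    limits = {}
    for measure in rows[0]:
        observed = [r[measure] for r in rows if r[measure] is not None]
        limits[measure] = (statistics.fmean(observed), statistics.stdev(observed))

    def is_outlier(r: Row) -> bool:
        return any(
            r[m] is not None and abs(r[m] - mean) > cutoff * sd
            for m, (mean, sd) in limits.items()
        )

    return [r for r in rows if not is_outlier(r)]


def skew_kurtosis(values: List[float]) -> Tuple[float, float]:
    """Moment-based skewness and excess kurtosis (population formulas)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


def roughly_normal(values: List[float]) -> bool:
    # Thresholds as reported in the text; interpreting kurtosis as excess kurtosis is an assumption.
    skew, kurt = skew_kurtosis(values)
    return abs(skew) < 3 and abs(kurt) < 4 and statistics.stdev(values) <= statistics.fmean(values)


rows = [{"wm": 10.0, "reading": 5.0} for _ in range(19)] + [{"wm": 100.0, "reading": None}]
clean = drop_outlier_cases(rows)
print(len(clean))  # 19
```

Removing whole cases is one reading of the text; an alternative would be to set only the extreme score to missing and let maximum likelihood estimation handle it.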

As a baseline for the analysis, two measurement (or baseline) models assessed the influence of WM components on word problem solving. Model 1 was a measurement model of the WM components and word problem solving.

Model 2 was a measurement model with the WM components and fluid intelligence predicting word problem solving. Thus, in Model 2, the three WM components and the fluid intelligence latent variables were set to correlate with each other. There are several reasons for these correlations. First, WM is traditionally conceptualized as one system with multiple components (central executive, phonological loop, and visual–spatial sketchpad), with the central executive coordinating activities between the two subsystems (i.e., the visual–spatial sketchpad and phonological loop) and also increasing the amount of information that can be stored in them (Baddeley, 2012; Baddeley & Logie, 1999). Second, although fluid intelligence may be a concept distinct from WM (to be tested later), several studies with children have shown that the two are strongly correlated (e.g., Swanson, 2004; Swanson & Beebe-Frankenberger, 2004). Third, we tested whether allowing the three WM components to correlate with one another, and with fluid intelligence, resulted in better model fit.

Mediation models

Finally, three mediation models (Models 3, 4, and 5) were tested. We assessed the mediating effects of fluid intelligence, naming speed, knowledge of word problem components (representation and planning), reading, and calculation on the relationship between components of WM and word problem solving.

In subsequent modeling of the contributions of the WM components to word problem solving, fluid intelligence was first treated as a unique independent variable and later modeled as a mediating variable. The first approach allowed us to determine whether the processes that mediate fluid intelligence are similar to those that mediate the WM components. The second allowed us to determine whether the relationship between the executive component of WM and word problem solving was completely mediated by fluid intelligence.

The first mediation model (Model 3) tested whether the relationships between the independent variables (WM components, fluid intelligence) and the dependent variable (word problem solving) were best captured by indirect effects. The mediating variables of interest were measures related to children’s knowledge bases and reading processes. Fluid intelligence was left as an exogenous variable so that we could determine whether the paths between fluid intelligence and the mediating variables were comparable to those between the WM components and the mediating variables. That is, do the same processes that mediate fluid intelligence mediate the components of WM?

The second mediation model (Model 4) allowed for both indirect and direct effects on the relationships between WM and word problem solving and between fluid intelligence and word problem solving. The model addressed the following question: Is there a direct contribution of WM to word problem solving when all mediators are considered in the model? The model directly tested the hypothesis that the components of WM contribute direct paths to word problem solving that can be differentiated from the path from fluid intelligence to word problem solving.

The final mediation model (Model 5) allowed fluid intelligence to serve as a mediating rather than an independent variable. That is, fluid intelligence might influence any relationship between the components of WM and word problem solving, because it was related to both the independent (WM components) and dependent (word problem solving) measures in the previous models. In the final model, we assessed whether the effects of the components of WM on word problem solving can be attributed to fluid intelligence. Thus, Model 5 addressed the question: Does fluid intelligence mediate the contribution of WM to math problem solving? The other mediating variables of interest were again measures of children’s knowledge bases and reading. In addition, the final model determined whether any direct WM paths would emerge to influence problem solving when the mediating effects of the child’s knowledge base, reading, and fluid intelligence were fully accounted for.

Several fit indexes were used to assess the goodness of fit of the models, including chi-square, the Bentler comparative fit index (CFI), Tucker–Lewis index (TLI), root-mean-square error of approximation (RMSEA; along with confidence intervals), and standardized root-mean-square residual (SRMR). For a model to have excellent fit, the following were required: a nonsignificant chi-square value; a CFI > .95; a TLI > .90; and an RMSEA below .05, with the left endpoint of its 90% confidence interval smaller than .05 (Raykov & Marcoulides, 2008). Because the chi-square test is sensitive to sample size and tends to reject models that are only marginally inconsistent with the data, more emphasis was placed on the other fit criteria (Raykov & Marcoulides, 2008). To compare competing or alternative mediation models, the Bayesian information criterion (BIC) and Akaike information criterion (AIC) were used; models with smaller values are preferred. The AIC is used primarily to compare competing nonhierarchical models (Kline, 2012), whereas the BIC is recommended when the sample size is large and the number of parameters is small. Furthermore, the BIC penalizes additional model parameters more heavily than the AIC does.
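The excellent-fit rule above can be written out as an explicit checklist. The Python sketch below is an illustration only, not the authors' software: it assumes that the RMSEA point estimate and the lower end of its 90% confidence interval count as two separate criteria (giving five in total), and it records but does not check SRMR, because no cutoff is stated. The names `FitStats`, `excellent_fit_criteria`, and `classify` are hypothetical, and the "good" label (every criterion met except the chi-square) is an assumption modeled on how the Results label Model 1.

```python
from dataclasses import dataclass


@dataclass
class FitStats:
    chi2_p: float        # p value of the model chi-square test
    cfi: float           # comparative fit index
    tli: float           # Tucker-Lewis index
    rmsea: float         # RMSEA point estimate
    rmsea_ci_low: float  # left endpoint of the 90% CI for RMSEA
    srmr: float          # reported, but no cutoff is stated, so it is not checked


def excellent_fit_criteria(fit: FitStats, alpha: float = 0.05) -> dict[str, bool]:
    """Evaluate each excellent-fit criterion listed in the text."""
    return {
        "chi2_nonsignificant": fit.chi2_p >= alpha,
        "cfi_above_.95": fit.cfi > 0.95,
        "tli_above_.90": fit.tli > 0.90,
        "rmsea_below_.05": fit.rmsea < 0.05,
        "rmsea_ci_low_below_.05": fit.rmsea_ci_low < 0.05,
    }


def classify(fit: FitStats) -> str:
    """Label a model 'excellent', 'good' (only chi-square fails), or 'inadequate or mixed'."""
    met = excellent_fit_criteria(fit)
    if all(met.values()):
        return "excellent"
    others = [ok for name, ok in met.items() if name != "chi2_nonsignificant"]
    if all(others):
        return "good"  # only the sample-size-sensitive chi-square test failed
    return "inadequate or mixed"


# Values reported for Model 1 in the Results.
model_1 = FitStats(chi2_p=0.04, cfi=0.984, tli=0.978, rmsea=0.031, rmsea_ci_low=0.008, srmr=0.036)
print(classify(model_1))
```

With the values reported for Model 1 (p = .04) the sketch returns "good", and with those reported for Model 2 (p = .08) it returns "excellent", matching the labels used in the Results.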

Procedures

Ten graduate students trained in test administration tested all of the participants in their schools. One session of approximately 45–60 min was required for small-group test administration, and one session of 45–60 min was required for individual test administration. During the group testing session, data were obtained from the word problem-solving process (components) booklets, the Test of Reading Comprehension, the Test of Mathematical Abilities, and the Visual Matrix task. The remaining tasks were administered individually. Test administration was counterbalanced to control for order effects, and task order was randomized across participants within each test administrator.
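The randomization described above, in which each participant receives an independently shuffled task order within each administrator's caseload, can be sketched as follows. This is a minimal illustration under stated assumptions: the task list is a hypothetical subset of the individually administered measures, the administrator and participant IDs are made up, and simple randomization is shown rather than a fully balanced scheme such as a Latin square.

```python
import random

# Hypothetical subset of the individually administered tasks; the exact set is an assumption.
INDIVIDUAL_TASKS = [
    "Conceptual Span", "Auditory Digit Sequence", "Listening Sentence Span",
    "Forward Digit Span", "Word Span", "Phonetic Memory Span",
    "Mapping/Directions Span", "Rapid Digit Naming", "Rapid Letter Naming",
]


def random_task_orders(caseloads: dict[str, list[str]], seed: int = 2024) -> dict[str, list[str]]:
    """Give every participant an independently shuffled task order.

    caseloads maps a (hypothetical) administrator ID to that administrator's participant IDs.
    Each administrator gets a separately seeded generator, so one administrator's schedule
    can be regenerated without affecting the others.
    """
    orders: dict[str, list[str]] = {}
    for admin, participants in caseloads.items():
        rng = random.Random(f"{seed}-{admin}")  # random.Random accepts string seeds
        for pid in participants:
            order = INDIVIDUAL_TASKS.copy()
            rng.shuffle(order)
            orders[pid] = order
    return orders


orders = random_task_orders({"admin_A": ["p001", "p002"], "admin_B": ["p003"]})
for pid, order in orders.items():
    print(pid, order[:3])
```

Seeding per administrator is a design choice for reproducibility; a single global generator would randomize equally well.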

Results

Descriptive statistics

The means, standard deviations, skewness, and kurtosis of the measures are shown in Table 1. After 22 outliers were excluded, the sample size varied from 405 to 413 across measures. The skewness and kurtosis values of all measures were below 3, indicating no severe departures from normality. The correlations among the measures are shown in Tables 5 and 6.
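A screen of this kind can be sketched as follows. The sketch assumes moment-based estimates and excess kurtosis (0 for a normal distribution), and it applies the cutoff of 3 to absolute values; the text does not state which estimators or sign convention were used, and statistical packages differ in their small-sample corrections. The function names are hypothetical.

```python
from statistics import fmean


def skewness(xs: list[float]) -> float:
    """Moment skewness g1 = m3 / m2**1.5 (no small-sample correction)."""
    m = fmean(xs)
    m2 = fmean((x - m) ** 2 for x in xs)
    m3 = fmean((x - m) ** 3 for x in xs)
    return m3 / m2 ** 1.5


def excess_kurtosis(xs: list[float]) -> float:
    """Moment excess kurtosis g2 = m4 / m2**2 - 3 (0 for a normal distribution)."""
    m = fmean(xs)
    m2 = fmean((x - m) ** 2 for x in xs)
    m4 = fmean((x - m) ** 4 for x in xs)
    return m4 / m2 ** 2 - 3


def passes_screen(xs: list[float], cutoff: float = 3.0) -> bool:
    """True when both |skewness| and |excess kurtosis| fall below the cutoff."""
    return abs(skewness(xs)) < cutoff and abs(excess_kurtosis(xs)) < cutoff


# Hypothetical scores for one measure, for illustration only.
scores = [12, 15, 14, 10, 18, 16, 13, 15, 14, 11]
print(round(skewness(scores), 3), round(excess_kurtosis(scores), 3), passes_screen(scores))
```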

Measurement model

Working memory components

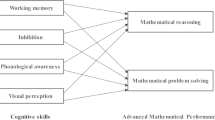

Model 1 (Fig. 1) was a measurement model of the WM components and word problem solving. The central executive component was measured by Conceptual Span, Auditory Digit Sequence, and Listening Sentence Span. The phonological loop was measured by Forward Digit Span, Word Span, and Phonetic Memory Span. The visual–spatial sketchpad was measured by the Visual Matrix and Mapping/Directions Span tasks. Word problem solving was measured by the WISC, KeyMath, TOMA, and CMAT. The fit indexes indicated a good model fit: χ2(48) = 67.057, p = .04; CFI = .984; TLI = .978; RMSEA = .031 (.008, .048); SRMR = .036. The fit was classified as good rather than excellent because the chi-square value was significant; the remaining four of the five criteria were met.
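As an arithmetic check on the reported fit statistics, the RMSEA point estimate can be recomputed from the chi-square value and its degrees of freedom with the standard formula RMSEA = sqrt(max(χ2 − df, 0) / (df(N − 1))). The sketch below assumes N = 413 for every model (the text reports that the sample size varied from 405 to 413) and the N − 1 form of the denominator; software conventions differ slightly. Under these assumptions it reproduces the RMSEA values reported in the Results to three decimals.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


N = 413  # assumed sample size for every model

# (chi-square, df, reported RMSEA) taken from the Results.
REPORTED = {
    "Model 1": (67.057, 48, 0.031),
    "Model 2": (98.300, 80, 0.024),
    "CFA": (335.396, 279, 0.022),
    "Model 3": (380.964, 289, 0.028),
    "Model 5": (368.396, 287, 0.026),
}

for name, (chi2, df, reported) in REPORTED.items():
    print(f"{name}: recomputed {rmsea(chi2, df, N):.3f}, reported {reported:.3f}")
```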

Model 2 (Fig. 2) was a path model that tested whether the WM components and fluid intelligence significantly predicted word problem-solving accuracy. The fit indexes indicated an excellent fit: χ2(80) = 98.300, p = .08; CFI = .986; TLI = .982; RMSEA = .024 (.000, .038); SRMR = .034. As shown in Fig. 2, the central executive, phonological loop, and fluid intelligence were significant predictors of word problem solving. The strongest predictor was the phonological loop (β = .373). Fluid intelligence was also a significant predictor (β = .321) and a somewhat stronger predictor than the central executive (β = .257). Fluid intelligence was also significantly correlated with all three WM components. Importantly, both the central executive and fluid intelligence contributed unique variance, indicating that they are distinct constructs. The three WM components and fluid intelligence accounted for 57% of the variance in word problem-solving accuracy. Because the measures of the visual–spatial sketchpad and fluid intelligence both relied on visual problem-solving processes, including fluid intelligence in Model 2 partialed out the influence of the visual–spatial sketchpad on word problem-solving accuracy that we had found in Model 1.

Mediation models

Next, potential mediators were added to the model. Consistent with general practice in structural equation modeling, we first examined via confirmatory factor analysis whether all the constructs were conceptually and statistically distinct. Thus, in addition to measures of the WM components, fluid intelligence, and word problem solving, measures of speed, problem representation, planning, reading, and calculation were added to the model. Speed was measured by Rapid Digit Naming and Rapid Letter Naming. Problem representation was measured by the question, number, and goal components. Problem planning was measured by the operation and algorithm components. Reading was measured by the Comprehension subtest of the TORC. Calculation was measured by the WIAT, WRAT, and CBM. The loadings from the confirmatory factor analysis are reported in Table 2. The fit indexes indicated a good model fit: χ2(279) = 335.396, p = .01; CFI = .984; TLI = .980; RMSEA = .022 (.011, .030); SRMR = .035. The results suggested that the constructs were statistically distinct. All factor loadings were above .40, except for Phonetic Memory Span (β = .33; see Table 2).

Model 3 (Fig. 3) was a path model that assessed the indirect effects of speed, representation, planning, reading, and calculation on the relationship between the components of WM and problem solving. The model did not include direct paths from the WM components to word problem solving. Table 3 shows the significant standardized coefficients when the mediating variables were regressed on the components of WM and fluid intelligence. As Fig. 3 further shows, significant paths occurred from the central executive to speed, representation, planning, and reading. Significant paths from fluid intelligence occurred for representation, planning, reading, and calculation, but not for naming speed. The phonological loop predicted only reading. The visual–spatial sketchpad did not predict any of the mediators.

As shown in Table 4, naming speed, representation, planning, reading, and calculation yielded significant paths to word problem-solving accuracy. Reading and calculation were the strongest direct predictors of word problem solving (βs = .73 and .40, respectively). The results of the Sobel tests for each significant mediator are also reported in Table 4. Of the six significant mediated relationships, the one through reading yielded the strongest path predicting word problem solving (β = .23). The fit indices for this model met four of the five criteria for excellent fit; only the chi-square was significant: χ2(289) = 380.964, p < .001; CFI = .974; TLI = .969; RMSEA = .028 (.020, .035); SRMR = .041. Together, the mediated relationships between the WM components and word problem solving accounted for 87% of the variance in word problem solving, as compared to 57% in the earlier models.
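The Sobel test mentioned above divides the product-of-coefficients indirect effect ab by its first-order (delta-method) standard error, z = ab / sqrt(b²SE_a² + a²SE_b²), where a is the path from the predictor to the mediator and b is the path from the mediator to the outcome. The sketch below implements that formula with a two-tailed normal p value. The inputs are hypothetical, since the actual path estimates and standard errors are reported in Table 4 and are not reproduced here; the function name `sobel_test` is likewise illustrative.

```python
import math


def sobel_test(a: float, se_a: float, b: float, se_b: float) -> tuple[float, float]:
    """Return the Sobel z statistic and two-tailed p value for the indirect effect a*b.

    a: predictor -> mediator path; b: mediator -> outcome path (controlling for the predictor).
    """
    se_ab = math.sqrt(b**2 * se_a**2 + a**2 * se_b**2)  # first-order (delta method) SE of a*b
    z = (a * b) / se_ab
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|)) under a standard normal
    return z, p


# Hypothetical path estimates, for illustration only.
z, p = sobel_test(a=0.50, se_a=0.10, b=0.40, se_b=0.10)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Because the Sobel test treats ab as normally distributed, it tends to be conservative when the product is skewed, which is why bootstrap confidence intervals are often used as a complement.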

To test the hypothesis that components of WM contribute direct paths to word problem solving, two alternatives to Model 3 were tested. Model 4 added to Model 3 direct paths from the three WM components to word problem solving (Fig. 4). All three paths were nonsignificant, although the path from the phonological loop approached significance (p = .06). Likewise, the direct path between fluid intelligence and word problem solving was nonsignificant.

A second alternative model (Model 5) determined whether direct paths between the WM components and word problem solving were significant if fluid intelligence was entered as a mediating variable. The previous models had shown the unique contributions of fluid intelligence relative to the WM components, whereas the final model directly tested whether fluid intelligence and WM share similar processes. Thus, the alternative model was fitted by including the direct paths from the three WM components to word problem solving (as in the previous alternative model) but removing the direct path from fluid intelligence to word problem solving (Fig. 5). The chi-square, χ2(287) = 368.396, was slightly higher than for the previous model, but the CFI (.977) and TLI (.972) were comparable, and the RMSEA was slightly better (.026 [.017, .034]). This model yielded significant direct paths from the phonological loop (p = .002) and the visual–spatial sketchpad (p = .02) to word problem solving, and the beta weight was larger for the phonological loop (β = .20) than for the visual–spatial sketchpad (β = .11). Thus, we concluded that fluid intelligence, along with other processes, mediated the contribution of the executive system to word problem solving.

Given that all three mediation models fit the data, which one provided the best fit? To answer this question, AIC and BIC values were computed for each model. The AIC values were 45,095.57, 45,088.67, and 45,087.002, and the BIC values were 45,562.29, 45,567.46, and 45,561.78, for Models 3, 4, and 5, respectively. For both indices, the smallest values occurred for Model 5, which removed fluid intelligence as an independent variable and entered it as a mediating variable to word problem solving. However, the fits for all three models were good, and the differences in AIC and BIC values (especially between Models 4 and 5) were minuscule. Thus, no model was a clear winner in terms of whether fluid intelligence is best viewed as confounding or mediating WM performance. It is important to note, however, that no significant direct path was found between fluid intelligence and problem solving (Models 3 and 4), and that when we removed this direct path (Model 5), significant direct paths from the WM storage components to word problem solving emerged. Because fluid intelligence changed the strengths of the relationships between the WM components and word problem solving in Model 5 as compared to Model 4, we assume that fluid intelligence can be viewed as a moderator between measures of WM and word problem solving (see MacKinnon, 2008, p. 11, for discussion). The significant path from the phonological loop that emerged in Model 5 aligns with the findings of Colom, Abad, et al. (2005), who argued that the storage component accounts for the contribution of WM to higher-order processing. Our results suggest that storage (i.e., short-term memory) is a significant predictor of math problem-solving performance in children.
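The model comparison can be made concrete with the reported values. The sketch below computes each model's distance from the smallest AIC and BIC, plus Akaike weights, a common way to express relative support that the authors do not report. It also uses the standard identities AIC = −2lnL + 2k and BIC = −2lnL + k ln N, which imply BIC − AIC = k(ln N − 2), to back out the number of free parameters k. Assuming N = 413, this yields 116, 119, and 118 parameters for Models 3, 4, and 5, which fits Model 4 adding three direct paths to Model 3 and Model 5 dropping one path from Model 4. The function names are hypothetical.

```python
import math

# Information criteria reported in the text for the three mediation models.
AIC = {"Model 3": 45095.57, "Model 4": 45088.67, "Model 5": 45087.002}
BIC = {"Model 3": 45562.29, "Model 4": 45567.46, "Model 5": 45561.78}
N = 413  # assumed estimation sample size (the text reports 405 to 413 across measures)


def deltas(ic: dict[str, float]) -> dict[str, float]:
    """Distance of each model from the smallest (preferred) criterion value."""
    best = min(ic.values())
    return {model: value - best for model, value in ic.items()}


def akaike_weights(aic: dict[str, float]) -> dict[str, float]:
    """Relative likelihoods exp(-delta / 2), normalized to sum to 1."""
    rel = {model: math.exp(-d / 2) for model, d in deltas(aic).items()}
    total = sum(rel.values())
    return {model: r / total for model, r in rel.items()}


def implied_parameters(aic: float, bic: float, n: int) -> float:
    """Solve BIC - AIC = k * (ln n - 2) for the number of free parameters k."""
    return (bic - aic) / (math.log(n) - 2)


d_aic, d_bic, weights = deltas(AIC), deltas(BIC), akaike_weights(AIC)
for model in AIC:
    k = implied_parameters(AIC[model], BIC[model], N)
    print(f"{model}: dAIC = {d_aic[model]:.2f}, dBIC = {d_bic[model]:.2f}, "
          f"Akaike weight = {weights[model]:.2f}, implied k = {k:.0f}")
```

Under these assumptions the Akaike weights favor Model 5 while leaving appreciable weight on Model 4, in line with the conclusion that no model was a clear winner.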

Discussion

The purpose of this study was to address the question of whether the direct effects of WM components (central executive, phonological loop, and visual–spatial sketchpad) on word problem-solving accuracy are completely mediated by reading, calculation, and fluid intelligence. Several studies with this age group have shown that entering these aptitude variables into a regression model eliminates the influence of WM on problem-solving accuracy, whereas other studies have shown that it does not. The present study addressed these conflicting findings by comparing outcomes related to nonmediation and mediation models. The results show that the storage component of WM maintains a direct influence on problem-solving accuracy, even in a fully mediated model. These findings will now be placed within the context of the questions that directed this study.

1. Is there a direct relationship between components of WM and word problem solving when the mediating roles of reading, calculation, and fluid intelligence are included in the analysis?

We did find a direct relationship between WM and problem-solving accuracy in the fully mediated model. These results are consistent with previous research showing that the phonological loop is important in math (Furst & Hitch, 2000; Noël et al., 2001). These studies showed that calculation performance suffers when the phonological loop is subjected to interference (e.g., reciting the alphabet while solving problems), suggesting that the encoding and rehearsal of operands and mental calculation are disrupted. The importance of the phonological loop is magnified in word problem solving, because solving word problems involves another function of the phonological loop, language/text processing (Baddeley, 2012; Baddeley et al., 1998; Gathercole, 1998), which is an important first step in understanding the word problem.

These results also highlight the importance of fluid intelligence in word problem solving: although WM components are important, fluid intelligence is also an important factor. This can be seen by comparing the total variance accounted for in the models with and without fluid intelligence. Without fluid intelligence, the three WM components accounted for 48% of the variance in word problem solving (Model 1); with fluid intelligence (Model 2), the model accounted for 57% of the variance. These results are in line with the view that WM and fluid intelligence are distinct concepts (Alloway, 2009; Alloway & Alloway, 2010).

As the comparison of Models 4 and 5 showed, fluid intelligence mediates whether individual differences in visual–spatial WM play an important role in predicting word problem solving. This finding is consistent with other studies (Gathercole & Pickering, 2000; Holmes & Adams, 2006; Rasmussen & Bisanz, 2005) showing that the visual–spatial sketchpad is important to math performance because it is responsible for processing visual and spatial information, such as mathematical symbols, equations, physical shapes, color, and movement (Baddeley, 2012; Baddeley & Logie, 1999). In this study, the visual–spatial sketchpad became a significant predictor when the direct path between fluid intelligence and word problem solving was removed from the model. These findings suggest that the assessments of fluid intelligence (Colored Progressive Matrices) and of the visual–spatial sketchpad (Visual Matrix and Mapping/Directions Span) essentially measure processes that share a common storage system: including fluid intelligence absorbed variance that would otherwise have been attributed to the visual–spatial sketchpad.

2. Do other processes, besides those related to reading and calculation skills, uniquely mediate the relationship between the components of WM and word problem solving?

Before answering this question, it is important to note that reading was the strongest of all the mediators between the components of WM and word problem solving. Reading mediated the relationship between the phonological loop and word problem solving (β = .23), an indirect effect stronger than the one from the central executive through speed (β = –.08). This result, together with the strong direct relationship between reading and word problem solving (β = .73), suggests that language processing (a function of the phonological loop) and understanding the story (reading comprehension) are important for solving word problems.

Interestingly, the results also indicated that reading is more important than calculation (β = .73 vs. β = .40) in predicting word problem solving, lending further support to the view that understanding the story problem is the first step in solving word problems, followed by the actual calculations. If children do not understand the story, it is more difficult for them to extract the mathematical information from the word problem, set up the equations, and solve them.

The results related to other mediating variables beyond reading, calculation, and fluid intelligence are as follows. First, knowledge of word problem representation (question, number, and goal), but not planning (operation and algorithm), had a direct relationship with word problem solving. This extended the research of Zheng et al. (2011) by separating the representation and planning components. The results suggest that in addition to understanding the word problem (i.e., reading comprehension), an additional step may be required before the actual calculations. Simply understanding the story problem is not enough; children need to be able to identify and extract the mathematical information from the story.

Second, the significant direct relationship between speed and word problem solving is worth noting. This finding is consistent with studies that found speed to be an important predictor of math performance (Berg, 2008; Geary, 2011; Swanson & Kim, 2007). In addition to predicting word problem solving, and similar to previous studies (Cirino, 2011; de Jong & van der Leij, 1999; Hecht, Torgesen, Wagner, & Rashotte, 2001; Lepola et al., 2005; Schatschneider et al., 2004), speed in this study also predicted the basic reading and calculation skills required for solving word problems. Overall, these direct relationships (speed/reading/calculation → word problem solving, and speed → reading/calculation) suggest that speed plays an important role both in basic skills (reading comprehension and calculation) and in the higher-order skills that integrate them.

Taken together, the major theoretical contribution of our findings is that the storage component of WM (especially the phonological loop) sustained a direct effect on word problem solving in the fully mediated model. We assumed that the executive component of WM was essential for the mental activities basic to children’s word problem solving, but its effects were completely mediated in the full model. Our results are consistent with findings suggesting that short-term memory eliminates the predictive contribution of executive WM to various measures (Colom, Rebollo, Abad, & Shih, 2006; Shahabi, Abad, & Colom, 2014). In addition, simple short-term storage has been found to play a significant role in accounting for the relationship between WM and several cognitive abilities (Colom, Abad, et al., 2005). Our results suggest that even when individual differences in reading and related processes are taken into consideration, individual differences in word problem solving depend primarily on the phonological loop, whereas the executive system of WM plays an indirect role.

Our findings are consistent with other studies of elementary school children showing that reading-related skills (e.g., phonological processing, naming speed) are related to math performance (e.g., numerical abilities) (Fuchs et al., 2006; Passolunghi, Lanfranchi, Altoè, & Sollazzo, 2015; Szucs, Devine, Soltesz, Nobes, & Gabriel, 2014). Consistent with Passolunghi et al. (2015; Passolunghi, Vercelloni, & Schadee, 2007), we found an indirect effect of fluid intelligence (nonverbal, in this case) on math abilities. Likewise, consistent with our findings, Passolunghi et al. (2015; Passolunghi et al., 2007) found that WM and phonological abilities have an indirect relationship with fluid (nonverbal) intelligence. Although our study differs from these studies in terms of age and methodology, the findings support a network view (Szucs et al., 2014) in which cognitive abilities such as reading, naming speed, and WM sustain math performance over and above more domain-specific abilities (e.g., calculation).

As we indicated in the introduction, not all studies have found a direct relationship between WM and problem solving. Why, then, did we find a direct relationship? There are at least two reasons why our findings might not coincide with others’. First, the majority of the studies we reviewed relied on a single task to tap each component of WM; in contrast to our study, they were therefore limited to a single task’s variance rather than latent factors. Second, of those studies with multiple measures designed to capture the components of WM and their influence on problem-solving accuracy, few have established the construct validity of the WM components (via confirmatory factor analysis) within the study sample. With those caveats in mind, we now consider three studies that bear on our findings (see Swanson & Fung, 2016, for further review of these studies).

First, Fuchs et al. (2006) studied the cognitive correlates of arithmetic computation and arithmetic word problems in third graders (N = 312). Although WM initially predicted solution accuracy when sight word efficiency was removed from the modeling, the results suggested that other academic skills and cognitive factors capture the role of WM and supersede it in predicting solution accuracy. These findings in some ways matched ours, except that we did not find that reading completely mediated the influence of WM on problem-solving accuracy. In a later study, Fuchs et al. (2012) administered a battery of nonverbal reasoning, language, attention behavior, WM, phonological processing, and processing speed measures, as well as calculation measures, to children in the second and third grades and used them to predict word problem-solving performance. Although they found that nonverbal reasoning (fluid intelligence) and oral language were related to math problem solving, no significant direct effects were found for the WM measures. However, this study used only counting recall and listening recall to assess WM. The authors suggested that the absence of direct and indirect effects of WM was related to the mediating effects of arithmetic and pattern recognition. In contrast, although we also found that calculation plays a significant role in mediation, the latent measure of reading played a substantially larger role. In addition, we did not find that measures of calculation completely mediated the effects of WM on solution accuracy.

In a recent study, Cowan and Powell (2014) administered a battery of domain-general and domain-specific measures to 258 third graders. The dependent measure, arithmetic word problem-solving accuracy, was a single measure taken from the Wechsler Individual Achievement Test. The phonological loop and the executive system of WM were measured by word recall and listening recall, respectively, and the visual–spatial sketchpad by block recall and maze memory. The authors found that the executive WM system, reasoning (fluid intelligence), and oral language (receptive vocabulary and grammar) contributed unique variance to problem-solving accuracy. Their findings related to the direct effects of WM coincide with ours, but because reading skills were not entered into their analysis, it remains possible that including them would have eliminated the direct contribution of the executive component to problem-solving accuracy.

Limitations

Our study has several limitations, of which we focus here on two. First, because the majority of the children in the sample were age 8, it was not possible to include age as a covariate in the models: 75% of the sample were 8 years old, 15% were 7 years old, 8.71% were 9 years old, and fewer than 1% were in each of the other age categories (0.24% were 6 years old; 0.72% were 10 years old). Age may nonetheless influence the effects of the three WM components and speed, because young children’s WM capacity and speed are still increasing rapidly. Furthermore, because math and reading are learned systematically throughout school and our sample included children between the ages of 6 and 10, WM capacity, speed, and math and reading skills could all be influenced by age, which in turn may affect the interpretation of the final models.

Second, although this study revealed that reading and the phonological loop have the strongest relationships with word problem solving, these relationships may differ for older children (e.g., high school students). In upper grades, the math required to solve word problems is more difficult, and thus calculation and planning (i.e., knowledge of operations and algorithms) may have stronger relationships with word problem solving. Future studies should examine this possibility in a longitudinal framework, examining changes in the relationships between WM, basic reading/calculation skills, and word problem solving.

Summary

In summary, only the storage component of WM directly predicted problem-solving accuracy in the fully mediated model. The direct effect of the executive component of WM was completely mediated by measures of reading, calculation, and fluid intelligence. These results challenge the notion that basic skills and fluid intelligence completely mediate the influence of WM on higher levels of processing, but also challenge the notion that the executive component plays the major role in higher-order processing.

References

Ackerman, P. L., Beier, M. E., & Boyle, M. O. (2005). Working memory and intelligence: The same or different constructs? Psychological Bulletin, 131, 30–60. doi:10.1037/0033-2909.131.1.30

Alloway, T. P. (2009). Working memory, but not IQ, predicts subsequent learning in children with learning difficulties. European Journal of Psychological Assessment, 25, 92–98. doi:10.1027/1015-5759.25.2.92

Alloway, T. P., & Alloway, R. G. (2010). Investigating the predictive roles of working memory and IQ in academic attainment. Journal of Experimental Child Psychology, 106, 20–29. doi:10.1016/j.jecp.2009.11.003

Anderson, J. R., Reder, L. M., & Lebiere, C. (1996). Working memory: Activation limitations on retrieval. Cognitive Psychology, 30, 221–256. doi:10.1006/cogp.1996.0007

Baddeley, A. D. (2000). The episodic buffer: A new component of working memory? Trends in Cognitive Sciences, 4, 417–422. doi:10.1016/S1364-6613(00)01538-2

Baddeley, A. (2012). Working memory: Theories, models, and controversies. Annual Review of Psychology, 63, 1–29. doi:10.1146/annurev-psych-120710-100422

Baddeley, A., Gathercole, S., & Papagno, C. (1998). The phonological loop as a language learning device. Psychological Review, 105, 158–173. doi:10.1037/0033-295X.105.1.158

Baddeley, A. D., & Logie, R. H. (1999). The multiple-component model. In A. Miyake & P. Shah (Eds.), Models of working memory: Mechanisms of active maintenance and executive control (pp. 28–61). Cambridge: Cambridge University Press. doi:10.1017/CBO9781139174909.005

Berg, D. H. (2008). Working memory and arithmetic calculation in children: The contributory roles of processing speed, short-term memory, and reading. Journal of Experimental Child Psychology, 99, 288–308. doi:10.1016/j.jecp.2007.12.002

Brown, V. L., Cronin, M. E., & McEntire, E. (1994). Test of mathematical abilities. Austin: PRO-ED.

Brown, V. L., Hammill, D., & Wiederholt, J. L. (1995). Test of reading comprehension. Austin: PRO-ED.

Cantor, J., & Engle, R. W. (1993). Working-memory capacity as long-term memory activation: An individual-differences approach. Journal of Experimental Psychology: Learning, Memory, and Cognition, 19, 1101–1114. doi:10.1037/0278-7393.19.6.1101

Cirino, P. T. (2011). The interrelationships of mathematical precursors in kindergarten. Journal of Experimental Child Psychology, 108, 713–733. doi:10.1016/j.jecp.2010.11.004

Colom, R., Abad, F. J., Rebollo, I., & Shih, P. C. (2005). Memory span and general intelligence: A latent-variable approach. Intelligence, 33, 623–642. doi:10.1016/j.intell.2005.05.006

Colom, R., Flores-Mendoza, C., Quiroga, M. Á., & Privado, J. (2005). Working memory and general intelligence: The role of short-term storage. Personality and Individual Differences, 39, 1005–1014. doi:10.1016/j.paid.2005.03.020

Colom, R., Rebollo, I., Abad, F. J., & Shih, P. C. (2006). Complex span tasks, simple span tasks, and cognitive abilities: A reanalysis of key studies. Memory & Cognition, 34, 158–171. doi:10.3758/BF03193395

Connolly, A. J. (1998). KeyMath revised-normative update. Circle Pines: American Guidance.

Conway, A. R., Cowan, N., Bunting, M. F., Therriault, D. J., & Minkoff, S. R. (2002). A latent variable analysis of working memory capacity, short-term memory capacity, processing speed, and general fluid intelligence. Intelligence, 30, 163–183. doi:10.1016/S0160-2896(01)00096-4

Conway, A. R. A., & Engle, R. W. (1994). Working memory and retrieval: A resource-dependent inhibition model. Journal of Experimental Psychology: General, 123, 354–373. doi:10.1037/0096-3445.123.4.354

Psychological Corporation. (1992). Wechsler individual achievement test. San Antonio: Harcourt Brace.

Cowan, R., & Powell, D. (2014). The contributions of domain-general and numerical factors to third-grade arithmetic skills and mathematical learning disability. Journal of Educational Psychology, 106, 214–229. doi:10.1037/a0034097

Daneman, M., & Carpenter, P. A. (1980). Individual differences in working memory and reading. Journal of Verbal Learning and Verbal Behavior, 19, 450–466. doi:10.1016/S0022-5371(80)90312-6

de Jong, P. F., & van der Leij, A. (1999). Specific contributions of phonological abilities to early reading acquisition: Results from a Dutch latent variable longitudinal study. Journal of Educational Psychology, 91, 450–476. doi:10.1037/0022-0663.91.3.450

Engle, R. W., Tuholski, S. W., Laughlin, J. E., & Conway, A. R. A. (1999). Working memory, short-term memory, and general fluid intelligence: A latent-variable approach. Journal of Experimental Psychology: General, 128, 309–331. doi:10.1037/0096-3445.128.3.309

Ericsson, K. A., & Kintsch, W. (1995). Long-term working memory. Psychological Review, 102, 211–245. doi:10.1037/0033-295X.102.2.211

Fuchs, L. S., Compton, D. L., Fuchs, D., Powell, S. R., Schumacher, R. F., Hamlett, C. L.,…Vukovic, R. K. (2012). Contributions of domain-general cognitive resources and different forms of arithmetic development to pre-algebraic knowledge. Developmental Psychology, 48, 1315–1326. doi:10.1037/a0027475

Fuchs, L. S., Fuchs, D., Compton, D. L., Powell, S. R., Seethaler, P. M., Capizzi, A. M.,…Fletcher, J. M. (2006). The cognitive correlates of third-grade skill in arithmetic, algorithmic computation, and arithmetic word problems. Journal of Educational Psychology, 98, 29–43. doi:10.1037/0022-0663.98.1.29

Fuchs, L. S., Fuchs, D., Eaton, S. B., Hamlett, C. L., & Karns, K. M. (2000). Supplementing teacher judgments of mathematics test accommodations with objective data sources. School Psychology Review, 29, 65–85.

Furst, A. J., & Hitch, G. J. (2000). Separate roles for executive and phonological components of working memory in mental arithmetic. Memory & Cognition, 28, 774–782. doi:10.3758/BF03198412

Gathercole, S. E. (1998). The development of memory. Journal of Child Psychology and Psychiatry, 39, 3–27. doi:10.1111/1469-7610.00301

Gathercole, S. E., & Pickering, S. J. (2000). Working memory deficits in children with low achievements in the national curriculum at 7 years of age. British Journal of Educational Psychology, 70, 177–194. doi:10.1348/000709900158047

Gathercole, S. E., Pickering, S. J., Ambridge, B., & Wearing, H. (2004). The structure of working memory from 4 to 15 years of age. Developmental Psychology, 40, 177–190. doi:10.1037/0012-1649.40.2.177

Geary, D. C. (2011). Cognitive predictors of achievement growth in mathematics: A 5-year longitudinal study. Developmental Psychology, 47, 1539–1552. doi:10.1037/a0025510

Gray, S., Green, S., Alt, M., Hogan, T., Kuo, T., Brinkley, S., & Cowan, N. (2017). The structure of working memory in young children and its relation to intelligence. Journal of Memory and Language, 92, 183–201. doi:10.1016/j.jml.2016.06.004

Hecht, S. A., Torgesen, J. K., Wagner, R. K., & Rashotte, C. A. (2001). The relations between phonological abilities and emerging individual differences in mathematical computation skills: A longitudinal study from second to fifth grades. Journal of Experimental Child Psychology, 79, 192–227. doi:10.1006/jecp.2000.2586

Hendra, R., & Staum, P. W. (2010). A SAS application to identify and evaluate outliers. Retrieved from www.nesug.org/Proceedings/nesug10/ad/ad07.pdf

Henry, L. A., & Millar, S. (1993). Why does memory span improve with age? A review of the evidence for two current hypotheses. European Journal of Cognitive Psychology, 5, 241–287. doi:10.1080/09541449308520119

Holmes, J., & Adams, J. W. (2006). Working memory and children’s mathematical skills: Implications for mathematical development and mathematics curricula. Educational Psychology, 26, 339–366. doi:10.1080/01443410500341056

Hresko, W., Schlieve, P. L., Herron, S. R., Swain, C., & Sherbenou, R. (2003). Comprehensive math abilities test. Austin: PRO-ED.

Kane, M. J., Hambrick, D. Z., Tuholski, S. W., Wilhelm, O., Payne, T. W., & Engle, R. W. (2004). The generality of working memory capacity: A latent-variable approach to verbal and visuospatial memory span and reasoning. Journal of Experimental Psychology: General, 133, 189–217. doi:10.1037/0096-3445.133.2.189

Kline, R. B. (2012). Assumptions of structural equation modeling. In R. Hoyle (Ed.), Handbook of structural equation modeling (pp. 111–125). New York: Guilford Press.

Kyttälä, M., & Björn, P. M. (2014). The role of literacy skills in adolescents’ mathematics word problem performance: Controlling for visuo-spatial ability and mathematics anxiety. Learning and Individual Differences, 29, 59–66. doi:10.1016/j.lindif.2013.10.010

Landerl, K., & Wimmer, H. (2008). Development of word reading fluency and spelling in a consistent orthography: An 8-year follow-up. Journal of Educational Psychology, 100, 150–161. doi:10.1037/0022-0663.100.1.150

Lee, K., Ng, S., Ng, E., & Lim, Z. (2004). Working memory and literacy as predictors of performance on algebraic word problems. Journal of Experimental Child Psychology, 89, 140–158. doi:10.1016/j.jecp.2004.07.001

Lepola, J., Poskiparta, E., Laakkonen, E., & Niemi, P. (2005). Development of and relationship between phonological and motivational processes and naming speed in predicting word recognition in grade 1. Scientific Studies of Reading, 9, 367–399. doi:10.1207/s1532799xssr0904_3

MacKinnon, D. P. (2008). Statistical mediation analysis. Mahwah: Erlbaum.

Mayer, R. E., & Hegarty, M. (1996). The process of understanding mathematical problems. In R. J. Sternberg & T. Ben-Zeev (Eds.), The nature of mathematical thinking (pp. 29–54). Mahwah: Erlbaum.

McDougall, S., Hulme, C., Ellis, A., & Monk, A. (1994). Learning to read: The role of short-term memory and phonological skills. Journal of Experimental Child Psychology, 58, 112–133. doi:10.1006/jecp.1994.1028

Meyer, M. L., Salimpoor, V. N., Wu, S. S., Geary, D. C., & Menon, V. (2010). Differential contribution of specific working memory components to mathematics achievement in 2nd and 3rd graders. Learning and Individual Differences, 20, 101–109. doi:10.1016/j.lindif.2009.08.004

Muthén, L. K., & Muthén, B. O. (1998–2011). Mplus user’s guide (6th ed.). Los Angeles, CA: Muthén & Muthén.

Noël, M. P., Désert, M., Aubrun, A., & Seron, X. (2001). Involvement of short-term memory in complex mental calculation. Memory & Cognition, 29, 34–42. doi:10.3758/BF03195738

Passolunghi, M. C., Cornoldi, C., & De Liberto, S. (1999). Working memory and intrusions of irrelevant information in a group of specific poor problem solvers. Memory & Cognition, 27, 779–790. doi:10.3758/BF03198531

Passolunghi, M. C., Lanfranchi, S., Altoè, G., & Sollazzo, N. (2015). Early numerical abilities and cognitive skills in kindergarten children. Journal of Experimental Child Psychology, 135, 25–42. doi:10.1016/j.jecp.2015.02.001

Passolunghi, M. C., & Mammarella, I. C. (2010). Spatial and visual working memory ability in children with difficulties in arithmetic word problem solving. European Journal of Cognitive Psychology, 22, 944–963. doi:10.1080/09541440903091127

Passolunghi, M. C., Vercelloni, B., & Schadee, H. (2007). The precursors of mathematics learning: Working memory, phonological ability and numerical competence. Cognitive Development, 22, 165–184. doi:10.1016/j.cogdev.2006.09.001

Rasmussen, C., & Bisanz, J. (2005). Representation and working memory in early arithmetic. Journal of Experimental Child Psychology, 91, 137–157. doi:10.1016/j.jecp.2005.01.004

Raven, J. C. (1976). Colored progressive matrices test. London: H. K. Lewis.

Raykov, T., & Marcoulides, G. A. (2008). Exploratory factor analysis. In T. Raykov & G. A. Marcoulides (Eds.), An introduction to applied multivariate analysis (pp. 241–276). New York: Routledge.

Schatschneider, C., Fletcher, J. M., Francis, D. J., Carlson, C. D., & Foorman, B. R. (2004). Kindergarten prediction of reading skills: A longitudinal comparative analysis. Journal of Educational Psychology, 96, 265–282. doi:10.1037/0022-0663.96.2.265

Shahabi, S. R., Abad, F. J., & Colom, R. (2014). Short-term storage is a stable predictor of fluid intelligence whereas working memory capacity and executive function are not: A comprehensive study with Iranian schoolchildren. Intelligence, 44, 134–141.

Swanson, H. L. (1992). Generality and modifiability of working memory among skilled and less skilled readers. Journal of Educational Psychology, 84, 473–488. doi:10.1037/0022-0663.84.4.473

Swanson, H. L. (2004). Working memory and phonological processing as predictors of children’s mathematical problem solving at different ages. Memory & Cognition, 32, 648–661. doi:10.3758/BF03195856

Swanson, H. L., & Beebe-Frankenberger, M. (2004). The relationship between working memory and mathematical problem solving in children at risk and not at risk for serious math difficulties. Journal of Educational Psychology, 96, 471–491. doi:10.1037/0022-0663.96.3.471

Swanson, H. L., & Berninger, V. (1995). The role of working memory in skilled and less skilled readers’ comprehension. Intelligence, 21, 83–108. doi:10.1016/0160-2896(95)90040-3

Swanson, H. L., Cooney, J. B., & Brock, S. (1993). The influence of working memory and classification ability on children’s word problem solution. Journal of Experimental Child Psychology, 55, 374–395. doi:10.1006/jecp.1993.1021

Swanson, H. L., & Fung, W. (2016). Working memory components and problem solving: Are there multiple pathways? Journal of Educational Psychology, 108, 1153–1177. doi:10.1037/edu0000116

Swanson, H. L., Jerman, O., & Zheng, X. (2008). Growth in working memory and mathematical problem solving in children at risk and not at risk for serious math difficulties. Journal of Educational Psychology, 100, 343–379. doi:10.1037/0022-0663.100.2.343

Swanson, H. L., & Kim, K. (2007). Working memory, short-term memory, and naming speed as predictors of children’s mathematical performance. Intelligence, 35, 151–168. doi:10.1016/j.intell.2006.07.001

Swanson, H. L., & Sachse-Lee, C. (2001). Mathematical problem solving and working memory in children with learning disabilities: Both executive and phonological processes are important. Journal of Experimental Child Psychology, 79, 294–321. doi:10.1006/jecp.2000.2587

Szucs, D., Devine, A., Soltesz, F., Nobes, A., & Gabriel, F. (2014). Cognitive components of a mathematical processing network in 9-year-old children. Developmental Science, 17, 506–524. Retrieved from search.proquest.com/docview/1611628590?accountid=14521.

Towse, J. N., Hitch, G. J., & Hutton, U. (1998). A reevaluation of working memory capacity in children. Journal of Memory and Language, 39, 195–217. doi:10.1006/jmla.1998.2574

Vilenius-Tuohimaa, P. M., Aunola, K., & Nurmi, J. E. (2008). The association between mathematical word problems and reading comprehension. Educational Psychology, 28, 409–426.

Wagner, R., Torgesen, J., & Rashotte, C. (2000). Comprehensive test of phonological processing. Austin: Pro-Ed.

Wechsler, D. (1991). Wechsler intelligence scale for children–third edition. San Antonio: Psychological Corp.

Wilkinson, G. S. (1993). The wide range achievement test. Wilmington, DE: Wide Range.

Wolf, M., & Bowers, P. G. (1999). The double-deficit hypothesis for the developmental dyslexias. Journal of Educational Psychology, 91, 415–438. doi:10.1037/0022-0663.91.3.415

Zheng, X., Swanson, H. L., & Marcoulides, G. A. (2011). Working memory components as predictors of children’s mathematical word problem solving. Journal of Experimental Child Psychology, 110, 481–498. doi:10.1016/j.jecp.2011.06.001

Author note

This article is based on a 4-year study funded by the U.S. Department of Education, Cognition and Student Learning in Special Education (USDE R324A090002), Institute of Education Sciences, awarded to H.L.S. Wenson Fung used part of this dataset for his dissertation. The authors are indebted to Loren Albeg, Catherine Tung, Dennis Sisco-Taylor, Kenisha Williams, Garett Briney, Kristi Bryant, Debbie Bonacio, Beth-Brussel Horton, Sandra Fenelon, Jacqueline Fonville, Alisha Hasty, Celeste Merino, Michelle Souter, Yiwen Zhu, and Orheta Rice for their assistance with data collection and/or task development. Appreciation is given to the Academy for Academic Excellence/Lewis Center for Educational Research (Corwin and Norton Campuses), Santa Barbara, and Goleta School District. Special appreciation is given to Chip Kling, Sandra Briney, Amber Moran, Mike Gerber, and Jan Gustafson-Corea. The report does not necessarily reflect the views of the U.S. Department of Education or the school districts.

About this article

Cite this article

Fung, W., Swanson, H.L. Working memory components that predict word problem solving: Is it merely a function of reading, calculation, and fluid intelligence? Mem Cogn 45, 804–823 (2017). https://doi.org/10.3758/s13421-017-0697-0

DOI: https://doi.org/10.3758/s13421-017-0697-0