Abstract

In three experiments, we investigated whether the information available to visual perception prior to encoding the locations of objects in a path through proprioception would influence the reference direction from which the spatial memory was formed. Participants walked a path whose orientation was misaligned to the walls of the enclosing room and to the square sheet that covered the path prior to learning (Exp. 1) and, in addition, to the intrinsic structure of a layout studied visually prior to walking the path and to the orientation of stripes drawn on the floor (Exps. 2 and 3). Despite the availability of prior visual information, participants constructed spatial memories that were aligned with the canonical axes of the path, as opposed to the reference directions primed by visual experience. The results are discussed in the context of previous studies documenting transfer of reference frames within and across perceptual modalities.

Spatial memory supports the execution of many of our everyday activities. For example, we find our way to the office every day, and we initiate movements to locate out-of-sight objects in our home because we are able to retrieve from memory information about where things are in the environment. This information is encoded in memory upon experiencing a spatial scene. Although for most people this experience is predominantly visual, other perceptual modalities (i.e., touch, audition, and proprioception) and indirect sources (e.g., language) can substitute for vision (e.g., when touching objects in the dark) or can complement it (e.g., when walking in a city neighborhood) in constructing spatial representations. In the present study, we investigated whether preexisting information acquired from one sensory modality influences the subsequent encoding of spatial locations from a different modality. Specifically, we examined whether a reference frame established from visual experience can be used to encode locations experienced through proprioception.

Much research in spatial cognition has focused on investigating the organizational structure of spatial memories, and there is now converging evidence that spatial representations derived from various inputs are orientation dependent (see Mou & McNamara, 2002; Yamamoto & Shelton, 2009; and Kelly, Avraamides, & Giudice, 2011, for locations encoded from vision, audition, and touch, respectively). According to an influential theory proposed by Mou, McNamara, and colleagues (e.g., McNamara, 2003; Mou & McNamara, 2002), people organize spatial information in memory using allocentric reference frames that maintain object-to-object relations from a small number of preferred directions. The preferred directions are determined upon experiencing a spatial scene, on the basis of available environmental information such as the intrinsic symmetry of the spatial layout and the geometric structure of the enclosing space, but also on the basis of egocentric experience and instructions. For example, in one experiment conducted by Shelton and McNamara (2001), participants memorized a spatial layout of objects that were placed on a square mat aligned with the walls of the laboratory. All participants studied the layout from two viewpoints, with the order of learning counterbalanced across participants. One viewpoint was aligned with the local and global environmental axes created, respectively, by the mat and the walls of the room, and the other was misaligned to these axes by 135º. Following learning, participants carried out a series of judgments of relative direction (JRDs; i.e., trials of the form “imagine standing at x, facing y. Point to z,” with x, y, and z being objects from the memorized layout) in a different laboratory. 
The results indicated that regardless of study order, participants were faster and more accurate at pointing from the aligned perspective, suggesting that they organized their memories around a preferred direction defined by the salient environmental information. Further studies have provided evidence that perspectives aligned with environmental axes are generally preferred, even when they are not experienced egocentrically (Mou, Liu, & McNamara, 2009; Mou & McNamara, 2002; Mou, Zhao, & McNamara, 2007; but see Richard & Waller, 2013). Moreover, studies have shown that spatial memories organized on the basis of egocentric experience are reinterpreted and reorganized if a subsequent view is aligned with salient environmental structure (e.g., Valiquette, McNamara, & Labrecque, 2007). Overall, studies supporting the orientation-dependent view of spatial memory provide convincing evidence that documents the central role of environmental structure in organizing spatial information in memory.

Furthermore, a number of recent studies have provided evidence that a preferred direction established on the basis of salient environmental information that is present when experiencing a spatial layout can be also used as the reference direction for a subsequent experience with a different layout in the same environment (Greenauer, Mello, Kelly, & Avraamides, 2013; Kelly & McNamara, 2010). For example, in a study by Kelly and McNamara (2010; see also Kelly, Avraamides, & McNamara, 2010), participants studied two spatially overlapping layouts of objects. In the two-view condition, they first studied one of the layouts from a viewpoint that was aligned with its intrinsic structure (0º) and then studied both layouts from a viewpoint that was offset by 135º. In the one-view condition, participants studied both the initial layout and subsequently the two layouts together from the misaligned 135º viewpoint. JRD trials for the second layout showed that participants in the one-view condition performed better from imagined perspectives aligned with the experienced 135º viewpoint. However, in the two-view condition, performance for the second layout was best for perspectives oriented along the four directions that were aligned with the intrinsic structure of the first layout (0º, 90º, 180º, and 270º). Thus, in this condition participants established a reference frame with a preferred direction based on the intrinsic structure of the first layout and used it to organize the second layout, as well, even though they only viewed the second layout from 135º. Reference frame transfer was also documented in a study by Greenauer et al. (2013), but only when the preferred direction for the first layout was defined by environmental cues. When participants organized the first layout on the basis of egocentric experience (i.e., when the first layout had no intrinsic structure), the egocentrically defined preferred direction was not transferred to the second layout. 
Instead, participants reorganized their initial memory around the egocentric axis of the second layout (see also Meilinger, Berthoz, & Wiener, 2011, for similar findings).

Importantly, reference frame transfer is not limited to the visual modality. A study by Kelly and Avraamides (2011) provided evidence that a preferred direction determined on the basis of visual experience can subsequently be used to organize the memory of objects encoded from touch. In one experiment, Kelly and Avraamides had participants visually study two objects placed on a round table from two viewpoints that were offset from each other by 45º. Stripes were drawn on the table in order to provide a salient environmental axis with which either the first or the second viewpoint was aligned. Following visual learning, participants were blindfolded and experienced seven new objects through touch from the second viewpoint. Results from JRDs on the haptic objects showed that participants performed better from the imagined perspective aligned with the stripes, regardless of whether the objects were encoded from a perspective aligned or misaligned with the stripes. A follow-up experiment with no visual objects confirmed that the visual preview of the salient environmental axis produced by the stripes was sufficient to induce a preferred direction for the subsequent haptic memory, even when the objects were touched from a misaligned perspective. Pasqualotto, Finucane, and Newell (2013) showed a similar facilitatory effect of a visible surrounding room on memory for haptic locations.

Kelly, Avraamides, and Giudice (2011) investigated whether a preferred direction established from haptic experience could be used to organize the spatial memory of visual objects. Blindfolded participants touched two objects placed on a table in a way that they formed an imaginary column, expected to provide a salient environmental axis. Subsequently, eight objects were added to the table, and participants studied them visually either from the same touch-aligned viewpoint or from a viewpoint that was misaligned by 45º. The results showed that the haptically defined reference frame transferred to memories of the visual objects, but only when the objects were placed on a square table and participants were encouraged to touch the table’s edges during haptic learning. When the objects were placed on a round table, reference frame selection occurred from the visual study perspective. It follows from the studies above that only a preferred direction established on the basis of stable environmental information can be transferred to a later experience and used to organize the encoding of new spatial information. This further highlights the important role that environmental cues have on forming spatial memories.

In the present study, we examined the boundaries of environmental information as an organizing principle for spatial memory. Specifically, we tested whether environmental information encoded visually can establish the preferred direction(s) for a subsequent spatial memory formed through the proprioceptive experience of walking a path without vision. Proprioceptive information acquired from blindfolded walking of a path that connects a number of objects to be memorized allows people to experience locations sequentially from orientations aligned with the direction of walking. By emphasizing these orientations, proprioceptive learning may lead to the formation of strong orientation-dependent memories that are resistant to assimilation in a preexisting reference frame.

Previous studies have established that proprioceptive memory constructed from walking a path is organized around preferred directions, just as visual memories are (Yamamoto & Shelton, 2005, 2007). In one experiment by Yamamoto and Shelton (2005, Exp. 1), participants encoded the locations of objects by viewing them or by walking the path that connected them. Subsequent performance on JRD trials showed that participants were better able to respond from the perspective experienced during viewing or walking (see Footnote 1) than from other novel perspectives. In a subsequent experiment (Exp. 2), Yamamoto and Shelton (2005) had participants experience the same layout both visually and proprioceptively, but from different perspectives. Their results showed evidence for the presence of two preferred directions, since participants, regardless of study order, performed better from the perspectives aligned with either the visual or the proprioceptive experience, as compared to novel perspectives. This result suggests that participants constructed and maintained either a single representation with two preferred directions or two distinct spatial representations, each with its own preferred direction. The fact that the same layout of objects was learned through both modalities makes it impossible to conclude from the study of Yamamoto and Shelton (2005) whether transfer of reference frames occurred across the two learning experiences.

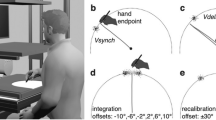

The goal of the present study was to examine whether prior environmental information acquired from vision can influence the encoding of a novel layout from proprioception, or whether proprioceptive learning is immune to cross-sensory transfer of a reference frame acquired through vision. Thus, in contrast to Yamamoto and Shelton (2005), in the present study we examined memories for spatial relations acquired exclusively from proprioception, and whether prior visual experience influences their reference frame organization. In three experiments, participants encoded the locations of objects placed on a path that they walked. The orientation of the path was misaligned to the walls of the enclosing space and the square sheet that covered the path prior to learning (Exp. 1), and also to the intrinsic structure of a separate layout that was studied visually prior to walking the path (Exps. 2 and 3). As in previous studies of spatial memory, participants were tested with JRDs in a different laboratory. If participants organized their memory for path objects using a reference frame established by visually previewing the geometry of the laboratory (and by memorizing the visual layout in Exps. 2 and 3), then faster and more accurate performance should be expected for imagined perspectives aligned with the walls of the room (0º, 90º, 180º, and 270º in Fig. 1). If, on the other hand, the proprioceptive experience was too salient to be influenced by previous visual environmental information, better performance would be expected for imagined perspectives aligned with the axes of the path (45º, 135º, 225º, and 315º). 
It was also possible that participants would organize their memories using a single preferred direction that coincided with their facing orientation at the origin of the path (225º), as had previously been shown in route navigation of real, virtual, and described environments (Richardson, Montello, & Hegarty, 1999; Wildbur & Wilson, 2008; Wilson, Wilson, Griffiths, & Fox, 2007). Finally, multiple preferred directions could be established for the three orientations experienced proprioceptively during walking (45º, 225º, and 315º; since participants never walked the path backward, they did not experience the 135º direction).

Experiment 1

In Experiment 1, blindfolded participants walked a path connecting objects that they had to memorize. The intrinsic structure of the path and the directions walked by participants were misaligned with the global reference frame implied by the walls of the enclosing laboratory that participants viewed prior to walking. This global reference frame was also aligned with a local reference frame provided by a square cover that was used to hide the path during the visual preview. Following learning, participants carried out a series of JRD trials at a different laboratory by manipulating a pointer presented on a computer screen. The question of interest was whether the memory formed for the objects of the path would be organized on the basis of the aligned global and local environmental reference frames experienced during viewing or on the basis of the intrinsic reference frame of the path experienced during walking.

Method

Participants

A group of 24 participants (nine male, 15 female) participated in the experiment in exchange for a small monetary compensation.

Materials

An eight-leg path containing only 90º turns was created by stitching together pieces of carpet. The axes of the path were misaligned to the walls of the enclosing room by 45º (i.e., they were oriented along the diagonal axes of the laboratory, as is shown in Fig. 1). Six objects with no intrinsic front–back orientations were placed at locations on the path. The form of the path and the arrangement of the objects on it were identical to those of Yamamoto and Shelton (2005, 2007). A large bed sheet was used to cover the path prior to walking, and the edges of the sheet were aligned with the room walls.

Design and procedure

After reading and signing informed consent forms, participants carried out a number of JRD trials to become familiar with the pointing task and the on-screen pointer that was used in the experiment. These practice trials required participants to imagine themselves at different positions and orientations in the campus and in the room they were in and to point from these positions to the direction of a different object/landmark. Two object/landmark names shown along with the pointer were used to convey the imagined position and orientation (e.g., “Imagine standing at the entrance of the campus facing the water fountain”), whereas a third name indicated the target to be pointed at (e.g., “Point to the library”). Participants also carried out a brief practice session that involved JRDs after walking a simple path with three objects. Following this practice, participants were blindfolded and led by the experimenter to a different laboratory. They were led to the corner of the laboratory that was adjacent to the origin of the path and faced along the diagonal axis of the room (the 315º direction in Fig. 1). At this point, they removed the blindfold and listened to the experimenter providing instructions about the task. During this time, which lasted approximately 5 min, participants had full visual access to the laboratory environment, but the path itself was covered with a square sheet that was aligned with the walls of the room. Following the instructions, participants were guided to the origin of the path and donned the blindfold when they indicated that they were ready to be guided along the path. As in Yamamoto and Shelton (2005), participants held with both hands a horizontal bar that the experimenter pulled to guide their walking. The experimenter also provided verbal walking instructions—for example, “walk straight”—during the walk. 
In contrast to the procedure of Yamamoto and Shelton (2005), participants always faced the direction of travel (i.e., no side-stepping was allowed). When participants reached an object on the path, they were asked by the experimenter to lift the blindfold a bit and to look down to their feet at the object for a few seconds. At this point, the experimenter conveyed to participants the name of the object, which they had to memorize along with its location. This procedure was repeated until participants had encoded all six objects in memory. At no stopping point were two objects visible at the same time. When participants reached the end of the path, they were guided back to the origin following a route that went around the path (i.e., from the endpoint toward the 225º corner and then to the origin) and then were invited to walk the path again. Walking the path was repeated until participants verbally indicated that they were confident of remembering all object locations, or until a maximum limit of three walks had been reached. Participants were allowed to think about the locations before telling the experimenter whether or not they wanted to walk the path again. No participant indicated difficulty remembering the objects after the three walks.

Following learning, participants were led blindfolded into a different laboratory for the testing phase. In this phase, they carried out a series of JRDs that required them to imagine themselves at different positions and orientations on the memorized path and to point toward another path object. Eight imagined perspectives, at 45º intervals, were used for the JRD trials. Participants completed a total of 64 trials (eight trials for each imagined perspective) on which they were asked to use the on-screen pointer to respond as quickly as they could without sacrificing accuracy for speed. The dependent measures were pointing error (i.e., the unsigned angular difference between participants’ pointing responses and the correct responses) and pointing latency (i.e., the time from when the trial information appeared until participants clicked the mouse to enter a pointing response).
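To make the two geometric quantities in this task concrete, the following minimal sketch shows one way the correct JRD response and the unsigned pointing error could be computed. The coordinate convention (x to the right, y forward, angles measured clockwise from the imagined heading) and both function names are assumptions for illustration; they are not taken from the software used in the experiments.

```python
import math


def jrd_correct_direction(standing, facing, target):
    """Direction of `target` in degrees, clockwise from the imagined heading.

    Each argument is a hypothetical (x, y) floor position. The heading is the
    direction from `standing` to `facing`; the result is wrapped to 0-360.
    """
    # atan2(dx, dy) gives an angle measured clockwise from the +y axis.
    heading = math.atan2(facing[0] - standing[0], facing[1] - standing[1])
    bearing = math.atan2(target[0] - standing[0], target[1] - standing[1])
    return math.degrees(bearing - heading) % 360.0


def unsigned_pointing_error(response_deg, correct_deg):
    """Unsigned angular difference between two directions, in 0-180 degrees."""
    diff = (response_deg - correct_deg) % 360.0
    # Take the shorter way around the circle.
    return min(diff, 360.0 - diff)
```

Wrapping the difference before taking the minimum matters because a response of 350º to a correct direction of 10º is a 20º error, not a 340º one.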

Results

An initial analysis showed that no speed–accuracy trade-off was present: The average within-participants correlation between pointing error and latency was positive (M = .07) and did not differ from 0, t(23) = 1.91, p = .07.
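The trade-off check described above can be sketched as follows, assuming that a Pearson correlation between error and latency is computed across trials for each participant and that the resulting coefficients are then tested against zero with a one-sample t test. The function names and the input layout are hypothetical, and the text does not specify whether the coefficients were transformed before testing.

```python
import math
import statistics


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def one_sample_t(values, mu=0.0):
    """Return (t, df) for a one-sample t test of `values` against `mu`."""
    n = len(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return (statistics.fmean(values) - mu) / standard_error, n - 1


def speed_accuracy_check(trials_by_participant):
    """`trials_by_participant`: list of (errors, latencies) pairs, one per person.

    Returns the mean within-participant correlation, the t statistic, and df.
    """
    rs = [pearson_r(errors, latencies) for errors, latencies in trials_by_participant]
    t, df = one_sample_t(rs)
    return statistics.fmean(rs), t, df
```

Under this reading, a reliably negative mean correlation (fast responses paired with large errors) would indicate a trade-off, whereas a positive or null correlation would not.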

Separate one-way analyses of variance (ANOVAs) for pointing error and latency were carried out in order to evaluate the effect of imagined perspective on performance. Planned contrasts comparing performance for perspectives aligned with the axes of the path (315º, 225º, 135º, 45º) and for perspectives aligned with the global and local environmental structure (0º, 90º, 180º, 270º) were used to determine whether spatial memories were organized on the basis of proprioceptive experience from walking the path or of the environmental reference frames implied by the enclosing environment and the cover of the path.
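One way to express the planned contrast is as a per-participant difference score that is then tested against zero. The sketch below assumes this formulation, which is compatible with the n - 1 degrees of freedom of the reported t tests but is not confirmed by the text; the function name and the dictionary-based input are hypothetical.

```python
import math
import statistics

# Imagined perspectives (degrees), grouped as in the planned contrast.
PATH_ALIGNED = (45, 135, 225, 315)
ROOM_ALIGNED = (0, 90, 180, 270)


def alignment_contrast(means_by_participant):
    """Test room-aligned minus path-aligned performance against zero.

    `means_by_participant` is a list of dicts, one per participant, mapping
    each imagined perspective to that participant's mean error or latency.
    Returns (mean difference, t, df).
    """
    diffs = []
    for means in means_by_participant:
        room = statistics.fmean([means[p] for p in ROOM_ALIGNED])
        path = statistics.fmean([means[p] for p in PATH_ALIGNED])
        diffs.append(room - path)
    n = len(diffs)
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return statistics.fmean(diffs), t, n - 1
```

A positive mean difference indicates larger errors (or longer latencies) for room-aligned perspectives, that is, an advantage for the perspectives aligned with the path.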

The analysis of pointing error revealed a significant effect of imagined perspective, F(7, 161) = 3.28, p = .003, η² = .13. As is shown in Fig. 2a, participants were more accurate for responses made from perspectives aligned with the intrinsic axes of the path (M = 22.67º, SD = 14.05º) than for those aligned with the geometry of the room and the cover (M = 28.03º, SD = 11.18º). This result was corroborated by the presence of a significant contrast, t(23) = 4.14, p < .001. Although pointing error was somewhat greater for the 45º perspective than for the other path-aligned perspectives, a separate ANOVA in which only path-aligned perspectives were included showed no reliable effect of perspective, F(3, 69) = 1.54, p = .213, η² = .06.
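The effect sizes can be related to the reported F ratios. The sketch below assumes that the η² values are partial eta squared for a one-way repeated measures design, which the text does not state. Under that assumption, partial η² = SS_effect / (SS_effect + SS_error), and this can be recovered from F and its degrees of freedom alone. The function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom.

    Follows from F = (SS_effect / df_effect) / (SS_error / df_error).
    """
    ss_ratio = f_value * df_effect  # equals SS_effect / (SS_error / df_error)
    return ss_ratio / (ss_ratio + df_error)


# Example call with the omnibus test for pointing error, F(7, 161) = 3.28.
effect_size = partial_eta_squared(3.28, 7, 161)
```

For the F ratios reported in this article, the conversion lands within about .01 of the printed effect sizes, which is consistent with, but does not confirm, the partial η² reading.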

Fig. 2. Performance as a function of imagined perspective for the path layout in Experiment 1. Panel a shows pointing errors, and panel b shows pointing latencies. Error bars indicate standard errors from the ANOVA.

As with pointing errors, an effect of perspective was also present in pointing latencies, F(7, 161) = 2.83, p = .008, η² = .11: Participants were significantly faster on trials for which they adopted perspectives aligned with the axes of the path (M = 22.67 s, SD = 7.49 s) rather than the environmental axes (M = 25.78 s, SD = 8.60 s), t(23) = 2.70, p = .013 (Fig. 2b). As with pointing errors, the latencies for responding did not differ across the four path-aligned perspectives, F(3, 69) = 0.29, p = .83, η² = .01.

Discussion

The results from Experiment 1 showed that participants organized their memories on the basis of the intrinsic axis of the path they walked. No evidence for an influence of the visually experienced environmental axes was found. The fact that performance was equivalent across the four perspectives aligned with the axes of the path suggests that participants (1) did not maintain objects in memory from the perspectives they experienced directly and (2) did not establish memories with a preferred direction using the orientation of the first leg of the path. Instead, participants seem to have used the structure of the path (i.e., both axes of the path) to organize their proprioceptive memories.

A plausible interpretation of these findings is that the intrinsic structure of the path provides strong information that participants used to establish preferred directions, just as the symmetric arrangement of objects did in the visual layouts used by Kelly and McNamara (2010) and Greenauer et al. (2013). Because this local environmental information was available during learning, it may have served as a more salient organizational principle, overriding the visual information that the room geometry and the path cover had provided prior to learning.

In Experiment 2, we attempted to increase the saliency of the environmental reference frame by adding horizontal and vertical stripes on the floor of the learning laboratory, since the study by Kelly and Avraamides (2011) had shown that stripes can effectively prime the selection of an environmental reference frame. The stripes were visible during the visual preview of the environment and when participants lifted the blindfold to observe objects during path walking. In addition, we further emphasized the environmental reference frame by having participants study a separate layout of visual objects whose intrinsic structure was aligned with the visually defined environmental reference frame. Participants carried out JRDs on the visual layout prior to walking the path in the same laboratory. Finally, in contrast to Experiment 1, in which participants were guided to an initial position in the corner of the room prior to walking the path, in Experiment 2 participants first viewed the space from a central position (adjacent to one of the sides of the room) aligned with 0º. It is possible that in Experiment 1 viewing the room from a position that was aligned with the orientation of the path further enhanced the diagonal axes rather than the orthogonal axes aligned with its geometric structure. We avoided this possibility in Experiment 2.

Experiment 2

In Experiment 2, participants walked the same path as in Experiment 1 while we increased the saliency of the visual extra-path information that was aligned with the environmental reference frame. Horizontal and vertical stripes on the floor were used to prime the axes aligned with the geometric structure of the room. Furthermore, the 0º orientation was further primed by having participants memorize from this orientation a separate visual layout with an aligned intrinsic structure. Thus, in Experiment 2, the geometric structure of the room, the orientation of the cover, the orientation of stripes on the floor, the intrinsic structure of the visual layout, and participants’ standing orientations before, during, and after visual learning were all aligned with the 0º orientation. On the basis of previous evidence that people weigh probabilistically available cues to establish preferred directions (Galati & Avraamides, 2012, 2013a), we expected that the multiple visual cues converging on the same reference direction in Experiment 2 would provide a strong test for reference frame transfer.

If participants readily assimilate path objects into a preexisting reference frame established from vision, they should now exhibit better performance for the directions aligned with the walls of the room. However, if they rely on the intrinsic structure of the path itself, the same results as in Experiment 1 would be expected.

Method

Participants

A group of 16 people (nine male, seven female) participated in the experiment and received a small monetary compensation for their participation.

Materials

The same eight-leg, six-object path as in Experiment 1 was used in the present experiment (see Fig. 1). Furthermore, a layout of seven different objects arranged on a visible 3 × 3 grid was set up in the same laboratory (Fig. 3). The grid was made up of horizontal and vertical stripes that were put on the floor with tape. The intrinsic axes of this layout were aligned with the environmental axes of the laboratory, but none of its intersections, where visual objects were placed, were on the walked path.

Fig. 3. Schematic diagram of the visual layout grid of Experiment 2. The gridlines remained during proprioceptive learning. Unfilled arrows indicate perspectives aligned with the room geometry, and filled arrows indicate misaligned perspectives. Circles represent objects to be memorized.

Design and procedure

The design was identical to that of Experiment 1: Participants carried out JRDs from eight imagined perspectives deviating 45º from each other. The procedure was the same as that of Experiment 1, with a few notable exceptions. Prior to walking the path, participants carried out a visual memory task. They first memorized a layout of seven objects from an orientation that was aligned with both the intrinsic axis of the layout and the geometry of the room (Fig. 3). They then carried out 48 JRD trials (six for each imagined perspective) testing their memory for the visual objects. The intrinsic axes of the visual layout were emphasized by adding gridlines aligned with the two axes of the global environmental reference frame. These lines remained on the floor during the subsequent walking of the path and were partially visible when participants lifted the blindfold to study an object. Prior to walking the path, participants stood at the standpoint from which they had previously studied the visual layout and faced along 0º. At this standpoint, they were instructed how the proprioceptive learning would take place. They were then blindfolded and stepped sideways to the left toward the room corner that was adjacent to the path origin. When arriving at the corner, they took about two steps forward to reach the origin of the path. Finally, they rotated in place 135º to the right in order to assume the 315º orientation. Participants carried out the movements themselves but were guided by the experimenter pulling a horizontal bar that they held.

Results

Pointing errors and latencies were analyzed separately for JRDs on the visual layout and the path. The data from one participant were discarded from all analyses due to very high pointing errors. Initial analyses indicated no speed–accuracy trade-off for either condition. The average within-participants correlations of errors and latencies were positive for both the visual and proprioception sessions (Ms = .11 and .06, respectively) and were statistically significant only for the visual session, t(14) = 2.50, p = .026, and t(14) = 1.39, p = .19, respectively.

For the JRDs on the visual targets, effects of imagined perspective were found for both errors and latencies, F(7, 98) = 7.39, p < .001, η² = .35, and F(7, 98) = 4.37, p < .001, η² = .24. As is shown in Fig. 4, participants were more accurate for imagined perspectives that were aligned with the environmental structure of the room (M = 10.51º, SD = 5.66º) than for those misaligned to the room (M = 22.72º, SD = 12.14º), t(14) = 3.89, p = .002. They were also faster to point to objects from room-aligned perspectives (M = 16.24 s, SD = 5.92 s) than from misaligned ones (M = 20.20 s, SD = 8.73 s), t(14) = 3.87, p = .002.

Fig. 4. Performance as a function of imagined perspective for the visual layout in Experiment 2. Panel a shows pointing errors, and panel b shows pointing latencies. Error bars indicate standard errors from the ANOVA.

For the path JRD trials, a significant effect of imagined perspective was present for pointing errors, F(7, 98) = 4.65, p < .001, η² = .25 (Fig. 5a). However, in contrast to trials for the visual targets, participants were less accurate when pointing to path targets from perspectives that were aligned with the environmental axes (M = 29.42º, SD = 7.05º) than from those aligned with the axes of the path (M = 22.52º, SD = 7.84º), t(14) = 3.24, p = .006. Moreover, a significant effect of imagined perspective was present when only the four path-aligned perspectives were included, F(3, 42) = 4.90, p = .005, η² = .26. The effect was caused by errors being significantly lower for the nonexperienced 135º perspective than for the 225º perspective, and marginally lower than for the 315º perspective, ps = .006 and .067, respectively.

Fig. 5. Performance as a function of imagined perspective for the path layout in Experiment 2. Panel a shows pointing errors, and panel b shows pointing latencies. Error bars indicate standard errors from the ANOVA.

The effect of perspective for pointing latencies was marginally significant, F(7, 98) = 2.08, p = .053, η² = .13. As in Experiment 1, participants were faster when pointing from path-aligned (M = 16.04 s, SD = 6.18 s) than from room-aligned (M = 17.90 s, SD = 6.35 s) perspectives, t(14) = 2.91, p = .011 (Fig. 5b). No reliable effect of imagined perspective was obtained when only path-aligned perspectives were entered in the analysis, F(3, 42) = 2.18, p = .11, η² = .14.

Discussion

Despite the changes made to increase the salience of the visual reference frames, and the 0º orientation in particular, participants in Experiment 2 were again faster and more accurate to respond from perspectives aligned with the intrinsic structure of the path. As we expected, performance for the visual layout was best for perspectives aligned with its intrinsic structure, which was aligned with the geometry of the room; however, the preferred direction established for the visual layout was not transferred to the organization of proprioceptive memory.

A caveat in Experiment 2 is that participants may have failed to perceive the misalignment of the path to the room geometry when they were guided without vision to its origin. On the basis of evidence that people efficiently update their position in space during blindfolded walking (e.g., Rieser, Guth, & Hill, 1986), we had assumed that participants in this experiment were able to keep track of their orientation when arriving at the start of the path. While guiding the participant to the origin of the path, the experimenter had also been careful to avoid any abrupt movement that could cause disorientation. Still, it is possible that participants did not update their position and orientation efficiently, and thus misperceived the path as being aligned with the room structure. This possibility was addressed in Experiment 3.

Another potential concern with Experiment 2 was that the visual and path layouts shared no common objects or locations, making it easy for participants to ignore the visual layout when learning the path locations. Although the goal of the visual layout was to provide additional visual cues converging on the reference frame of the room and the path cover, providing an interface between the two layouts might help to highlight the relationship between the visual environment and the walked path. In Experiment 3, we provided such an interface by modifying the layouts to include common objects and locations.

Experiment 3

In Experiment 3, we explicitly informed participants about the relation between the room geometry and the intrinsic orientation of the path. Prior to blindfolding participants, the experimenter pointed out to them the origin of the path and, using hand gestures, the orientations of the first and second legs. While walking the path, participants were reminded after the first turn (before walking the second leg) that their orientation was oblique to the walls of the room. Also, following the JRD testing for the path, participants drew the path within an outline of the room to verify that they were able to deduce that the main path axes were oblique to the room’s geometry. An additional modification in Experiment 3 was that the visual layout was changed to contain two objects from the path. These shared objects occupied the same locations in the room when they were presented as visual and as path objects. Furthermore, the two shared objects occupied the axis of bilateral symmetry of the visual layout (Fig. 6). We expected this change to enhance the salience of the visual layout’s intrinsic structure and its relation to the orientation of the path, and thus to increase the probability of reference frame transfer.

Visual and path layouts in Experiment 3. Filled circles indicate path objects, and dotted circles represent visual objects

Method

Participants

A group of 16 participants (five male, 11 female) carried out the experiment. Four participants received a small monetary compensation in exchange for their participation, and the remaining participants were unpaid volunteers.

Materials

The experiment used the same eight-leg, six-object path from Experiments 1 and 2 and a seven-object visual layout similar to that of Experiment 2. In contrast to Experiment 2, the path and visual layouts contained two shared objects presented at the same locations during both visual and proprioceptive learning. These objects lay on the axis of bilateral symmetry of the visual layout in order to further emphasize the intrinsic structure of the visual layout, its alignment with the environmental reference frame of the room, and the path’s misalignment to both the visual layout and the room.

Design and procedure

All aspects of the design and the procedure were identical to those of Experiment 2, with the following exceptions: (1) Before participants walked the path, the experimenter pointed to the location of the origin of the path (while the path was covered) and indicated with gestures the orientation of the first two legs of the path; (2) participants were informed that two of the visual objects would also appear at the same locations during proprioceptive learning; (3) after walking the short first leg of the path and rotating 90º to the left, participants were reminded that they were occupying an orientation that was oblique to the walls of the room; and (4) at the end of the experiment, participants were given a sheet of paper containing an outline of the room and were asked to draw the path, marking on it the locations of the memorized objects. The room door was marked to allow participants to orient the outline to the actual space.

Results

Participants’ drawings were inspected by a research assistant naïve to the purpose of the study. The research assistant was instructed to categorize the drawings as being aligned to the outline of the room, misaligned by 45º clockwise, or other. All drawings were classified as being misaligned by 45º, indicating that participants were aware of how the path was oriented in the room.

For JRD trials, initial analyses showed that no speed–accuracy trade-off was present with the visual targets. The average within-participants correlation of errors and latencies was .10, which did not differ significantly from zero, t(15) = 1.66, p = .12.
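The speed–accuracy check described above can be sketched as follows: compute one error–latency correlation per participant, then test the mean of those correlations against zero. This is a minimal illustration rather than the authors' analysis script. The data are made up, the helper names are hypothetical, and correlating across the eight imagined perspectives (rather than across individual trials) is an assumption.

```python
import math
import statistics


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def one_sample_t(values, mu=0.0):
    """t statistic and degrees of freedom for testing mean(values) against mu."""
    n = len(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return (statistics.fmean(values) - mu) / standard_error, n - 1


# Hypothetical data: (errors in degrees, latencies in seconds) per participant,
# one value per imagined perspective. These numbers are invented for illustration.
participants = [
    ([31, 22, 28, 20, 33, 25, 29, 24], [18.1, 15.2, 17.0, 14.8, 18.5, 16.0, 17.2, 15.9]),
    ([27, 25, 30, 19, 26, 23, 31, 21], [14.0, 16.5, 13.2, 15.8, 14.9, 15.1, 13.7, 16.2]),
    ([35, 24, 29, 22, 30, 27, 34, 26], [19.0, 17.5, 18.2, 18.8, 17.1, 18.0, 19.4, 17.7]),
    ([40, 33, 38, 30, 36, 31, 39, 34], [12.5, 13.0, 12.1, 13.4, 12.8, 13.3, 12.0, 12.9]),
]

correlations = [pearson_r(errors, latencies) for errors, latencies in participants]
t_value, df = one_sample_t(correlations)
print(f"mean r = {statistics.fmean(correlations):.2f}, t({df}) = {t_value:.2f}")
```

A reliably negative mean correlation would signal a trade-off, with faster responses accompanied by larger errors; a mean that does not differ from zero, as reported here, gives no evidence of one.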

For the visual layout, as in Experiment 2, the effect of imagined perspective was significant for both pointing errors and latencies, F(7, 105) = 3.72, p = .001, η 2 = .20, and F(7, 105) = 2.86, p = .009, η 2 = .16, respectively. Participants were more accurate responding from imagined perspectives aligned with the environmental structure of the room and the intrinsic structure of the layout (M = 17.57º, SD = 11.89º) than from misaligned imagined perspectives (M = 26.13º, SD = 13.12º), t(15) = 4.1, p = .001 (Fig. 7a). They were also faster to localize objects from room-aligned (M = 13.93 s, SD = 6.42 s) than from misaligned perspectives (M = 18.87 s, SD = 11.17 s), t(15) = 2.97, p = .009 (Fig. 7b).

Performance as a function of imagined perspective for the visual layout in Experiment 3. Panel a shows pointing errors, and panel b shows pointing latencies. Error bars indicate standard errors from the ANOVA

For the path layout, we first conducted an analysis to examine whether performance differed when the imagined perspective in a JRD trial was defined solely by path objects versus the two shared objects that were present in both the visual layout and the path layout. In trials that involved the shared objects, participants could have retrieved their spatial relation from their visual or their proprioceptive experiences. Since the two shared objects were used for a subset of trials involving the 0º and 180º perspectives, we compared for these two perspectives performance for trials that involved the shared objects and those that did not. To do so, we carried out a two-way repeated measures ANOVA with the factors Imagined Perspective (0º vs. 180º) and Trial Type (shared objects vs. path-only objects). For pointing errors, the analysis revealed a main effect of trial type, with participants being overall more accurate for trials involving shared objects, F(1, 15) = 6.72, p = .02, η 2 = .31. However, an interaction between trial type and imagined perspective was also present, F(1, 15) = 5.56, p = .032, η 2 = .27. For the 0º perspective, participants indeed pointed more accurately when the perspective was determined by shared (M = 23.54º, SD = 17.67º) rather than by path-only (M = 38.19º, SD = 23.01º) objects, p = .003. For the 180º perspective, performance was equal across trials with shared (M = 33.24º, SD = 13.82º) and path-only (M = 34.64º, SD = 16.14º) objects, p = .75. The latency analysis produced the same findings, with a significant main effect of trial type and a Trial Type × Perspective interaction, F(1, 15) = 7.73, p = .014, η 2 = .34, and F(1, 15) = 4.91, p = .043, η 2 = .25, respectively. Whereas participants responded faster overall for trials on which shared objects defined the imagined perspective than when they did not, this was the case mainly for the 0º perspective. For this perspective, participants were significantly faster for JRDs with shared (M = 11.36 s, SD = 4.35 s) than with path-only (M = 17.91 s, SD = 7.00 s) objects, p = .001. For the 180º perspective, participants were numerically faster for trials with shared (M = 15.59 s, SD = 5.97 s) than with path-only (M = 16.85 s, SD = 9.80 s) objects, but the difference was not significant, p = .56.

Given the advantage for adopting the imagined perspective defined by the two shared objects, especially for the 0º perspective, we carried out the analyses for the path JRDs excluding trials with shared objects. First, no speed–accuracy trade-off was present, as the average within-participants correlation of errors and latencies (M = .03) did not differ significantly from zero, t(15) = 0.95, p = .36. Second, the analysis of pointing errors revealed a significant main effect of imagined perspective, F(7, 105) = 2.73, p = .012, η 2 = .15: Participants were more accurate when pointing to targets from imagined perspectives that were path aligned (M = 30.42º, SD = 17.19º) rather than room aligned (M = 38.42º, SD = 16.70º), t(15) = 4.49, p < .001 (Fig. 8a). No differences were found across the four path-aligned perspectives, F(3, 45) = 0.33, p = .81, η 2 = .02. Third, the pointing latency findings paralleled those for pointing errors (Fig. 8b): A significant main effect of imagined perspective emerged, F(7, 105) = 2.13, p = .047, η 2 = .12, in which participants pointed more quickly from path-aligned (M = 14.63 s, SD = 5.27 s) than from room-aligned (M = 17.21 s, SD = 6.47 s) perspectives, t(15) = 3.97, p = .001. No effect of imagined perspective was obtained when only the four path-aligned perspectives were entered in the analysis, F(3, 45) = 0.22, p = .89, η 2 = .01.

Performance as a function of imagined perspective for the path layout in Experiment 3. Panel a shows pointing errors, and panel b shows pointing latencies. Error bars indicate standard errors from the ANOVA
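The path-aligned versus room-aligned contrast reported above can be illustrated with a short sketch: average each participant's scores over the four perspectives in each category, then compare the two sets of means with a paired t test. The example assumes eight imagined perspectives spaced 45º apart, with the path axes rotated 45º relative to the room walls, as the drawings indicated. The data and function names are hypothetical.

```python
import math
import statistics

PATH_OFFSET_DEG = 45  # assumed rotation of the path axes relative to the room walls


def is_path_aligned(perspective_deg: int) -> bool:
    """True for 45, 135, 225, and 315 degrees; False for 0, 90, 180, and 270."""
    return (perspective_deg - PATH_OFFSET_DEG) % 90 == 0


def alignment_means(scores_by_perspective):
    """Mean score over path-aligned and over room-aligned perspectives."""
    path = [s for p, s in scores_by_perspective.items() if is_path_aligned(p)]
    room = [s for p, s in scores_by_perspective.items() if not is_path_aligned(p)]
    return statistics.fmean(path), statistics.fmean(room)


def paired_t(first, second):
    """Paired t statistic and degrees of freedom for first minus second."""
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.fmean(diffs) / standard_error, n - 1


# Hypothetical mean pointing errors (degrees) per participant and perspective.
data = [
    {0: 31, 45: 22, 90: 28, 135: 20, 180: 33, 225: 25, 270: 29, 315: 24},
    {0: 27, 45: 25, 90: 30, 135: 19, 180: 26, 225: 23, 270: 31, 315: 21},
    {0: 35, 45: 24, 90: 29, 135: 22, 180: 30, 225: 27, 270: 34, 315: 26},
]
path_means, room_means = zip(*(alignment_means(d) for d in data))
t_value, df = paired_t(room_means, path_means)
print(f"room-aligned minus path-aligned: t({df}) = {t_value:.2f}")
```

With this ordering of the arguments, a positive t value corresponds to larger errors for room-aligned than for path-aligned perspectives, which is the direction of the effect reported for the path layout.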

Discussion

Despite our enhancing the relation between the visual layout and the path, as well as ensuring that participants were aware that the path was misaligned to the visual layout and the room geometry, the findings of Experiment 3 replicated those of the first two experiments: Participants were both more accurate and faster at responding from imagined perspectives that were aligned with the path axes rather than with the orthogonal axes implied by room geometry, the path cover, the intrinsic structure of the visual layout, and the stripes on the floor. The imagined perspective defined by the two shared objects, which were present in both the visual and path layouts, showed an advantage over the same perspective defined by path-only objects, but only for the perspective aligned with the study view and not for the contra-aligned perspective. The implications of the findings across the three experiments are discussed next.

General discussion

The aim of the present study was to examine whether environmental information that is known to influence the preferred orientation of visual memories can function as a scaffold for integrating location information subsequently acquired from proprioception. In Experiment 1, participants walked a path that was misaligned to both the global and local reference frames provided by visual inspection of the geometric structure of the enclosing laboratory and the orientation of a path cover. In Experiment 2, they walked the same path after memorizing locations and carrying out testing on a visual layout whose intrinsic structure was aligned with the global environmental reference frame of the room and their facing direction during learning. In Experiment 3, the path and the visual layout shared two objects in order to emphasize the spatial relation between them. Furthermore, participants were provided explicit information about the misalignment of the path and the room. The results from all three experiments clearly indicated that participants organized their memory for path objects on the basis of the intrinsic structure of the path itself that was encoded via proprioception, and not on information derived from prior visual experience.

Although the findings from the three experiments presented here replicate previous results that proprioceptive memories are organized around preferred directions (Yamamoto & Shelton, 2005, 2007), they contrast with those from studies documenting transfer of reference frames across experiences (Greenauer et al., 2013; Kelly & Avraamides, 2011; Kelly et al., 2011; Kelly & McNamara, 2010; Pasqualotto et al., 2013). In these previous studies, a preferred direction established on the basis of environmental information was subsequently used to organize a second memory, constructed from the same or a different modality, that lacked environmental support. In the present experiments, the coinciding direction cues implied by the geometry of the room, the rectangular cover of the path, the stripes on the floor, and the intrinsic structure of a previously learned visual layout were not sufficient to prevent the misaligned intrinsic structure of the path from being used as the reference direction for proprioceptive memory.

A plausible explanation for the discrepancy of findings is that unlike encoding spatial information using vision and touch from a stationary observation point, walking between the objects of a layout allows one to create and maintain a strong memory of the path itself that provides a salient framework for remembering object locations and deducing object-to-object relations (Yamamoto & Shelton, 2007). The idiothetic information that is available during movement (i.e., proprioceptive information, vestibular cues, and copies of efferent commands) allows a moving observer to continuously update his or her position and orientation in the environment. Maintaining in memory the series of turns and distances traveled may provide the necessary scaffolding for remembering the locations of objects on the path.

Indeed, Yamamoto and Shelton (2007) provided evidence that memorizing locations in the context of a coherent path led to better performance than learning these locations in isolation. Participants in the study by Yamamoto and Shelton (2007) encoded different spatial layouts by vision and proprioception. In each modality, locations were encoded either as a coherent path (participants walked from one location to the next or viewed the locations of objects presented in sequence) or in isolation (participants walked from a fixed origin to each location and back or viewed each object by itself). The results from JRD testing revealed that, although visual learning performance was not influenced by the type of learning, for proprioceptive learning participants performed better when the locations of objects were experienced as a coherent path rather than in isolation. These results suggested that walking the coherent path allowed participants to deduce object-to-object relations more easily. In the present experiments, the symmetric path that we used entailed walking only in directions that were aligned with the intrinsic structure of the path. Perhaps this made the structure of the path more salient and, as a result, resistant to influences from conflicting environmental information acquired earlier from vision. Even when visual cues for the environmental reference frames were provided in Experiments 2 and 3, by allowing participants to observe the orientation of gridlines, participants discarded the visual information and organized their memories relative to the path axes. Interestingly, participants were no slower and no less accurate in responding from the perspective that was aligned with the intrinsic structure of the path but not experienced directly. Also, they were no more accurate or faster in responding from the perspective aligned with the first leg of the path. This indicates that the constructed spatial memory was anchored to an environmental reference frame intrinsic to the path rather than to proprioceptive experience per se.

An important difference between this and previous studies on reference frame transfer is that here participants walked a predetermined path encoding locations in a fixed order, whereas in previous studies showing reference frame transfer (e.g., Kelly & McNamara, 2010), participants were allowed to freely explore the objects of the layout in any order that they wished. It is possible that imposing a fixed order of encoding locations further primed the path-centric reference frame. A study by Greenauer and Waller (2008) showed that verbal instructions to encode locations from a particular perspective overrode the influence of environmental structure in determining the preferred direction of a visual memory. It is possible that learning locations in a fixed order can function just like verbal instructions to prime specific perspectives.

Regardless of their exact source, the present findings indicate the limits of reference frame transfer by showing that salient environmental information that is typically sufficient to determine a preferred direction of spatial memory (e.g., Kelly & McNamara, 2010; Mou & McNamara, 2002) is not always transferred to the encoding of subsequent memories. It seems that transfer does not take place when the second experience is accompanied by cues that prime a different preferred direction. Indeed, in the studies of Greenauer et al. (2013) and Kelly and colleagues (Kelly & Avraamides, 2011; Kelly et al., 2011), the second layout had no salient intrinsic structure and was never aligned with any environmental axes. In these cases, the environmental reference frame created by stripes, the edges of the table, or the intrinsic structure of an existing memory provided a stable organizing principle for the subsequent memory.

The present study advances our understanding of how reference frames are used in the organization of spatial memory. Although previous studies have shown that reference frames acquired from vision can function as scaffolding for the subsequent encoding of visual (Kelly & McNamara, 2010) and haptic (Kelly & Avraamides, 2011; Pasqualotto et al., 2013) locations, the present study provides information about the boundaries of the role of visual reference frames by demonstrating a situation in which environmental reference frames acquired from vision were not used to organize subsequent spatial information acquired from proprioception. The findings are compatible with the idea that when selecting the preferred orientation of their spatial memories, people probabilistically combine different sources of information (Galati & Avraamides, 2013a, b). In previous studies (Galati & Avraamides, 2012, 2013b), participants were more likely to select a given direction when multiple cues (e.g., egocentric experience and intrinsic structure) converged on that direction. Although in the present experiments multiple visual cues converged on the same reference direction, the structure of the path must have been too salient to ignore; in all three experiments, the directions primed by the path structure were selected as the organizing principle. Thus, it remains to be seen whether in other situations, reference frame transfer from vision to proprioception can take place.

Notes

A fixed facing orientation was used when walking the paths, with participants moving to the left or the right by side stepping.

References

Galati, A., & Avraamides, M. N. (2012). Collaborating in spatial tasks: Partners adapt the perspective of their descriptions, coordination strategies, and memory representations. In C. Stachniss, K. Schill, & D. Uttal (Eds.), Spatial cognition (Lecture notes in artificial intelligence, vol. 7463) (pp. 182–195). Heidelberg, Germany: Springer.

Galati, A., & Avraamides, M. N. (2013a). Flexible spatial perspective-taking: Conversational partners weigh multiple cues in collaborative tasks. Frontiers in Human Neuroscience. doi:10.3389/fnhum.2013.00618

Galati, A., & Avraamides, M. N. (2013b). The intrinsic structure of spatial configurations and the partner’s viewpoint shape spatial memories and descriptions. In M. Knauff, M. Pauen, N. Sebanz, & I. Wachsmuth (Eds.), Cooperative minds: Social interaction and group dynamics. Proceedings of the 35th Annual Meeting of the Cognitive Science Society (pp. 466–471). Austin, TX: Cognitive Science Society.

Greenauer, N., Mello, C., Kelly, J. W., & Avraamides, M. N. (2013). Integrating spatial information across experiences. Psychological Research, 77, 540–554. doi:10.1007/s00426-012-0452-x

Greenauer, N., & Waller, D. (2008). Intrinsic array structure is neither necessary nor sufficient for nonegocentric coding of spatial layouts. Psychonomic Bulletin & Review, 15, 1015–1021. doi:10.3758/PBR.15.5.1015

Kelly, J. W., & Avraamides, M. N. (2011). Cross-sensory transfer of reference frames in spatial memory. Cognition, 118, 444–450. doi:10.1016/j.cognition.2010.12.006

Kelly, J. W., Avraamides, M. N., & Giudice, N. A. (2011). Haptic experiences influence visually acquired memories: Reference frames during multimodal spatial learning. Psychonomic Bulletin & Review, 18, 1119–1125. doi:10.3758/s13423-011-0162-1

Kelly, J. W., Avraamides, M. N., & McNamara, T. P. (2010). Reference frames influence spatial memory development within and across sensory modalities. In C. Hölscher, T. F. Shipley, M. O. Belardinelli, J. A. Bateman, & N. S. Newcombe (Eds.), Spatial cognition VII (Lecture notes in artificial intelligence) (pp. 222–233). Berlin, Germany: Springer. doi:10.1007/978-3-642-14749-4_20

Kelly, J. W., & McNamara, T. P. (2010). Reference frames during the acquisition and development of spatial memories. Cognition, 116, 409–420. doi:10.1016/j.cognition.2010.06.002

McNamara, T. P. (2003). How are the locations of objects in the environment represented in memory? In C. Freksa, W. Brauer, C. Habel, & K. F. Wender (Eds.), Spatial cognition III (Lecture notes in artificial intelligence) (pp. 174–191). Berlin, Germany: Springer. doi:10.1007/3-540-45004-1_11

Meilinger, T., Berthoz, A., & Wiener, J. M. (2011). The integration of spatial information across different viewpoints. Memory & Cognition, 39, 1042–1054. doi:10.3758/s13421-011-0088-x

Mou, W., Liu, X., & McNamara, T. P. (2009). Layout geometry in encoding and retrieval of spatial memory. Journal of Experimental Psychology: Human Perception and Performance, 35, 83–93. doi:10.1037/0096-1523.35.1.83

Mou, W., & McNamara, T. P. (2002). Intrinsic frames of reference in spatial memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 28, 162–170. doi:10.1037/0278-7393.28.1.162

Mou, W., Zhao, M., & McNamara, T. P. (2007). Layout geometry in the selection of intrinsic frames of reference from multiple viewpoints. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33, 145–154. doi:10.1037/0278-7393.33.1.145

Pasqualotto, A., Finucane, C. M., & Newell, F. N. (2013). Ambient visual information confers a context-specific, long-term benefit on memory for haptic scenes. Cognition, 128, 363–379. doi:10.1016/j.cognition.2013.04.011

Richard, L., & Waller, D. (2013). Toward a definition of intrinsic axes: The effect of orthogonality and symmetry on the preferred direction of spatial memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. doi:10.1037/a0032995

Richardson, A. E., Montello, D. R., & Hegarty, M. (1999). Spatial knowledge acquisition from maps and from navigation in real and virtual environments. Memory & Cognition, 27, 741–750. doi:10.3758/BF03211566

Rieser, J. J., Guth, D. A., & Hill, E. W. (1986). Sensitivity to perspective structure while walking without vision. Perception, 15, 173–188. doi:10.1068/p150173

Shelton, A. L., & McNamara, T. P. (2001). Systems of spatial reference in human memory. Cognitive Psychology, 43, 274–310. doi:10.1006/cogp.2001.0758

Valiquette, C. M., McNamara, T. P., & Labrecque, J. S. (2007). Biased representations of the spatial structure of navigable environments. Psychological Research, 71, 288–297. doi:10.1007/s00426-006-0084-0

Wildbur, D. J., & Wilson, P. N. (2008). Influences on the first-perspective alignment effect from text route descriptions. Quarterly Journal of Experimental Psychology, 61, 763–783. doi:10.1080/17470210701303224

Wilson, P. N., Wilson, D. A., Griffiths, L., & Fox, S. (2007). First-perspective spatial alignment effects from real-world exploration. Memory & Cognition, 35, 1432–1444. doi:10.3758/BF03193613

Yamamoto, N., & Shelton, A. L. (2005). Visual and proprioceptive representations in spatial memory. Memory & Cognition, 33, 140–150. doi:10.3758/BF03195304

Yamamoto, N., & Shelton, A. L. (2007). Path information effects in visual and proprioceptive spatial learning. Acta Psychologica, 125, 346–360. doi:10.1016/j.actpsy.2006.09.001

Yamamoto, N., & Shelton, A. L. (2009). Orientation dependence of spatial memory acquired from auditory experience. Psychonomic Bulletin & Review, 16, 301–305. doi:10.3758/PBR.16.2.301

Author Note

The reported research was supported by Research Grant No. 206912 (OSSMA) by the European Research Council to M.N.A. We thank Nathan Greenauer and Catherine Mello for useful comments and suggestions during the design of the experiments, and Adamantini Chatzipanayioti and Elena Averkiou for help with data collection.

About this article

Cite this article

Avraamides, M.N., Sarrou, M. & Kelly, J.W. Cross-sensory reference frame transfer in spatial memory: the case of proprioceptive learning. Mem Cogn 42, 496–507 (2014). https://doi.org/10.3758/s13421-013-0373-y

DOI: https://doi.org/10.3758/s13421-013-0373-y