Abstract

Research on patients with apraxia, a deficit in skilled action, has shown that the ability to use objects may be differentially impaired relative to knowledge about object function. Here we show, using a modified neuropsychological test, that similar dissociations can be observed in response times in healthy adults. Participants were asked to decide which two of three presented objects shared the same manipulation or the same function; triads were presented in picture and word format, and responses were made manually (button press) or with a basic-level naming response (verbally). For manual responses (Experiment 1), participants were slower to make manipulation judgments for word stimuli than for picture stimuli, while there was no difference between word and picture stimuli for function judgments. For verbal-naming responses (Experiment 2), participants were again slower for manipulation judgments over word stimuli, as compared with picture stimuli; however, and in contrast to Experiment 1, function judgments over word stimuli were faster than function judgments over picture stimuli. These data support the hypotheses that knowledge of object function and knowledge of object manipulation correspond to dissociable types of object knowledge and that simulation over motor information is not necessary in order to retrieve knowledge of object function.

Similar content being viewed by others

Introduction

Apraxia of object use (hereafter, apraxia) is a deficit in performing skilled, meaningful action that is not due to basic sensory or motor dysfunction (e.g., Liepmann, 1977; Rothi, Ochipa, & Heilman, 1991). Patients with apraxia may be impaired at imitating observed actions, grasping and using objects, pantomiming object use from visual presentation of objects, and/or gesturing from verbal command (see, e.g., Buxbaum & Saffran, 2002; Buxbaum, Veramonti, & Schwartz, 2000; Negri, Rumiati, et al., 2007; Ochipa, Rothi, & Heilman, 1989; for reviews, see Cubelli, Marchetti, Boscolo, & Della Sala, 2000; Leiguarda & Marsden, 2000; Mahon & Caramazza, 2005, Rothi et al., 1991). The patterns of spared and impaired cognitive function in patients with apraxia provide a window into interactions between motor representations associated with object use and conceptual knowledge of objects. One way to study this relationship in an experimental situation is to ask participants to make judgments about the similarity of different objects that are similar in terms of either their manner of manipulation or their function. For example, while the physical manner of manipulation associated with using a knife and scissors may be different, they ultimately can be used to cut a piece of paper. On the other hand, the motor movements associated with the use of scissors and pliers are similar despite having different functions. Thus, one may distinguish between similarity in manipulation (e.g., scissors and pliers) and similarity in function (e.g., knife and scissors). Buxbaum and collaborators (Buxbaum & Saffran, 2002; Buxbaum et al., 2000) found that some apraxic patients can be impaired for judging similarity in manner of manipulation among objects, while judging similarity in function can be relatively spared. While not tested on the same type of similarity judgment test, there are indications that it is possible for function knowledge to be disproportionately impaired relative to manipulation knowledge (Negri, Lunardelli, Reverberi, Gigli, & Rumiati, 2007; Sirigu, Duhamel, & Poncet, 1991).

Another important dimension that is relevant for understanding how the brain represents objects is the relationship between manipulation knowledge and visual structure. This relationship is captured in the notion of visual affordances (Gibson, 1979), or structural properties of objects that are “interpreted” in terms of their role in physical interactions with the objects (e.g., the handle on a teacup). The notion of a visual affordance includes within it the idea that there is a privileged relationship between manipulation knowledge and visual form. Evidence consistent with this idea is provided by the performance profile of patients with optic aphasia (Beauvois, 1982). Optic aphasia is a modality-specific deficit for naming objects from visual presentation. Interestingly, optic aphasics are able to successfully name from tactile presentation and from definition, and the classic profile in such cases is that they can successfully pantomime the appropriate object use of the same items that they cannot visually identify by name. There has been a lot of discussion about the nature of the underlying impairment in optic aphasia; one view is that, in such patients, there is a disconnection of visual information from left-hemisphere language centers (for discussions and data on optic aphasia, see Beauvois, 1982; Caramazza, Hillis, Rapp, & Romani, 1990; Coslett & Saffran, 1989; Shallice, 1988; Teixeira Ferreira, Guisiano, Ceccaldi, & Poncet, 1997). It has also been suggested that the data from optic aphasia suggest that there is a “privileged” relationship between the structural properties of tools and the actions associated with their use (Caramazza et al., 1990).

An important and unresolved issue is whether function and manipulation knowledge constitute dissociable types of information (Barsalou, 1999, 2008; Buxbaum & Saffran, 2002; Buxbaum et al., 2000; Gallese & Lakoff, 2005; Mahon & Caramazza, 2005). One theoretical view, the embodied cognition hypothesis, maintains that conceptual processing of tools necessarily involves the retrieval or simulation of motor information (Barsalou, 1999, 2008; Gallese & Lakoff, 2005). Thus, on embodied accounts, a necessary and intermediary step in the process of retrieving (or computing) function knowledge is simulation of the motor movements that are associated with using the object. In other words, motor-relevant information about how to manipulate objects is, in part, constitutive of function knowledge: Part of what it means to know an object’s function, according to the embodied cognition hypothesis, is to know how to use the object. Strong forms of the embodied hypothesis (e.g., Gallese & Lakoff, 2005) are eliminativist about “abstract” knowledge and argue that it does not persist without an active and concurrent motor simulation; other proposals within the embodied cognition framework (e.g., Barsalou, 1999) do posit abstract representations. However, all embodied hypotheses of tool representation maintain that a necessary step in conceptual analysis is motor simulation.

An alternative theoretical position maintains that while there is significant interaction and exchange of information between the systems that represent manipulation, function, and visual knowledge, they are nonetheless functionally dissociable systems and motor knowledge is not constitutive of function knowledge (e.g., Chatterjee, 2010; Mahon & Caramazza, 2005, 2008; Pelgrims, Olivier, & Andres, 2011). On this view, retrieving abstract conceptual knowledge may concomitantly activate manipulation knowledge, but critically, the retrieval of abstract conceptual knowledge (e.g., knowing an object’s function) does not logically imply the prior retrieval of an object’s motor-relevant properties (e.g., Mahon & Caramazza, 2005, 2008; Negri, Rumiati, et al., 2007; Rosci, Chiesa, Laiacona, & Capitani, 2003; see also Binder & Desai, 2011; Chatterjee, 2010; Hickok, 2009).

What is in a tool concept?

There is a broader theoretical issue that turns on how a theory models the relationship between manipulation and function knowledge. The issue is what types of information are considered to be “part of a concept” and which should be considered outside the scope of the concept. At a terminological level, there is the issue of whether one wants to include sensory and motor information within the scope of meaning of the term concept. But the terminological issue has limited implications. The terminological issue, in and of itself, cannot be empirically tested; one must simply decide how to deploy the term concept. However, there is a substantive issue of what types of information are retrieved in the chain of cognitive/neural processes that occur upon presentation of a visual object in the context of a given behavioral goal. To turn this around, one may ask: For what types of tasks can the ability to successfully complete them be taken to be diagnostic of concept possession? No theories would claim that being able to grasp an object and move it over 2 in. is diagnostic of whether one possesses the concept. However, what is the case when an object is grasped and used, or when a given object cannot be found (e.g., scissors) and so a second object is used in its place that can fulfill the same function (e.g., knife)?

The field has arrived at the consensus that being able to name a visually presented object is diagnostic of concept possession, at least in the most minimal sense. In other words, it may be possible to name objects on relatively impoverished semantic information. Indeed, it may be argued that the data from apraxia reviewed above of patients with impairments for using but not naming objects are evidence for that hypothesis. Nevertheless, observations of patients with impaired object use and spared object naming place an important constraint on theories. Those data indicate that motor simulation is not necessary in order for high-level visual object recognition, lexical semantic processing, and name retrieval to operate. Thus, the processes that are minimally involved in object naming do not include, constitutively, motor simulation, even when the objects being named are tools. We believe that those are the minimal implications of the currently available neuropsychological data and that they rule out very strong forms of the embodied cognition hypothesis (e.g., Gallese & Lakoff, 2005). The issue then becomes, positively, what model of tool representation is consistent with both the available patient evidence and the evidence that has been marshaled to support the embodied cognition hypothesis.

Here, we align our approach with the stronger claim, that part of the core of an artifact concept is knowledge of function (“what for”) and that the core of the concept does not include motor-based information.Footnote 1 The merits of the hypothesis that the core of tool concepts is abstract functional knowledge have already been discussed in the literature. Embodied cognition theorists would maintain that whatever the core of a concept is, computation of that “core” meaning involves a process of motor simulation (e.g., Barsalou, 1999, 2008; Gallese & Lakoff, 2005). Numerous studies have shown that activation of the sensory–motor systems is associated with the computation of meaning (e.g., Rueschemeyer, van Rooij, Lindemann, Willems, & Bekkering, 2010 ; Scorolli & Borghi, 2007; Zwaan, Stanfield, & Yaxley, 2002; for a review, see Pulvermüller, 2005). However, none of those data demonstrate that the activation of sensorimotor information is necessarily part of the process of retrieving the core of an artifact concept, because they do not distinguish whether sensory–motor activation occurs after core knowledge has been retrieved or before. Other theories that have been labeled as embodied cognition theories may not, in the end, be that embodied. For instance, in an elegant study, Boronat and colleagues (2005) showed that parietal regions involved in object use are more activated during manipulation judgments than during function judgments. Those authors argued that function knowledge may be represented in a verbal/declarative format and, thus, be representationally separate from motor-based knowledge about how to manipulate objects. However, we would argue that whether or not motor-based information is activated in the course of making manipulation judgments does not distinguish between embodied and nonembodied theories of artifact representation.

The present project

Buxbaum and colleagues (Buxbaum & Saffran, 2002; Buxbaum et al., 2000) first introduced the task in which manipulation and function triads are used, showing that apraxic patients can be impaired when asked to make manipulation judgments about triads (e.g., which two objects are manipulated similarly: pliers, scissors, knife), as compared with making function judgments about triads of objects (pliers, scissors, knife). Here, we modified that task to use response time (RT) and accuracy as the dependent measures. Of particular interest is whether making judgments about object function is as slow as, or slower than, making judgments about object manipulation. Specifically, if retrieving function information involves, necessarily, simulation of sensory–motor information and, hence, retrieval of manipulation knowledge, function judgments should be at least as slow as manipulation judgments. That pattern in the data would be consistent with the view that retrieving function knowledge includes an “embedded” retrieval of manipulation knowledge. If, however, function judgments are reliably faster than manipulation judgments, processes involved in the manipulation judgment are not involved in the function judgment. Because the experiments (see details below) were designed such that the only difference between function and manipulation judgments was the type of judgment, it could then be inferred that retrieving function knowledge does not involve, necessarily, retrieval of manipulation knowledge. The cleanest test of these hypotheses is when the stimuli are presented as words, since presentation in picture form is “contaminated” by the presence of object affordances. Thus, we tested both manipulation and function judgments with picture and word stimuli in the same group of participants. In Experiment 1, participants were asked to indicate their judgments about which two objects of a triad were more similar (in manipulation or function) with a manual (key-press) response. In Experiment 2, a different group of participants were asked to make the same decisions (over the same materials) with a basic-level verbal-naming response.

Experiment 1

Method

Participants

Thirty-two University of Rochester undergraduate students (8 male; all right-handed) between the ages of 18 and 24 years (M = 19.9 years, SD = 1.8 years) participated in the study in exchange for payment. They all had normal or corrected-to-normal vision and gave written informed consent in accordance with the University of Rochester Institutional Review Board.

Materials

Thirty-six grayscale photographs of man-made objects were presented using E Prime software 2.0 (Psychology Software Tools, Pittsburgh, PA). The 36 items were organized into 12 triads of 3 items (see Supplemental Online Materials for all triads). The 3 items within a triad always had the following relationship: 2 of the 3 items were related in manipulation, but not function, and 2 of the 3 items were related in function, but not manipulation. For instance, a triad might be feather duster, bell, and vacuum (feather duster and bell are similar in manipulation, while feather duster and vacuum are similar in function). The strength of this design is that the same materials (triads) appear for manipulation and function decisions. Thus, with proper counterbalancing of stimulus format, any difference between the two types of decisions cannot be attributed to properties of the words/concepts that were selected.

In the process of selecting stimuli that were both manipulable objects and optimized for the contrast of interest (manipulation vs. function), we could not find a way to avoid some orthographic similarities between targets and correct answer choices for some of the triads (see Supplemental Online Materials for the list of triads). Thus, the analyses below are restricted to items for which there are no orthographic confounds (8 of 12 triads, or 24 items) for both manipulation and function judgments. Because the principal analyses were restricted to 8 items per condition, the Supplemental Online Materials contain additional analyses where all materials were examined (12 function and manipulation triads), and where orthographic confounds were removed separately for manipulation and function decisions (3 triads shared orthographic similarities for function judgments between targets and correct answers, and 1 triad shared orthographic similarities for manipulation judgments between the target and the correct answers). The patterns observed in all supplemental analyses are the same as those reported in the main text.

Design

There were two factors in the experiment: decision type (two levels; manipulation and function) and presentation format (two levels; pictures and words). The materials were blocked by decision type, with 24 trials per block. The format of triads (picture or word) within a block was presented in an A [word] B [picture] A [word] or B [picture] A [word] B [picture] design. For instance, the first block for participant 1 might consist of WordManip(n=6) PictureManip(n=12) WordManip(n=6). The second block for participant 1 would then consist of WordFunc(n=6) PictureFunc(n=12) WordFunc(n=6). Decision type was counterbalanced across participants (for instance, for participant 2, it would be Block 1: PictureFunc(n=6) WordFunc(n=12) PictureFunc(n=6); Block 2: PictureManip(n=6) WordManip(n=12) PictureManip(n=6)). Whether the correct answer was the left or the right object on the screen was randomized within block. Each participant completed one function and one manipulation block for a total of 48 trials per participant, or 1,536 total trials. With 32 participants, the experimental design with the above-described counterbalancing scheme is completely balanced, so that no aspect of the experimental design is correlated with position in the experiment across subjects.

Procedure

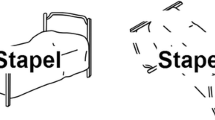

The experiment was run on a desktop computer monitor (1,920 × 1,080 pixels; monitor refresh rate = 60 Hz; viewing distance = 60 cm). Participants were told that they would see three stimuli (in word or picture form) presented in a triangular arrangement on every trial (see Fig. 1 for a schematic). Their task was to decide which of the two objects—the left object or the right object—was most similar to the target (top) object on the basis of manner of manipulation or function. They were given explicit instructions between blocks to focus solely on the type of relationship currently being tested. The verbatim instructions to participants for the two types of decisions are provided in the Supplemental Online Materials (all materials and experimental scripts are available from the corresponding author).

Schematic of trial structure. Participants were presented with three objects in a triad arrangement and were asked to decide which object—presented to the left or to the right—best matched the target object by manner of manipulation or function, respectively. Triads were presented in picture form (a) or word form (b)

Results

Only correct responses were included within the RT analyses (91.4 % of trials). RTs were cleaned by excluding responses faster than 200 ms and greater than 2 standard deviations above and below the mean for each participant, calculated across all conditions (4.9 % of correct trials were excluded according to those criteria). RTs, standard deviations, and proportions of error for each cell of the design are shown in Table 1. All statistical analyses were conducted treating both subjects and items as random factors (F 1/t 1 and F 2/t 2 analyses, respectively).

Accuracy analysis

A 2 × 2 ANOVA was performed over proportion correct data with decision type and presentation format as factors. There was no main effect of decision type, F 1(1, 31) = 1.23, MSE = .01, p > .26, \( \eta_{\text{p}}^{{2}} \) = .04; F 2 < 1, a main effect of presentation format in the subjects’ analysis, F 1(1, 31) = 4.19, MSE = .01, p < .05, \( \eta_{\text{p}}^{{2}} \) = .12; F 2(1, 15) = 1.23, MSE = .01, p > .28, \( \eta_{\text{p}}^{{2}} \) = .08, with word stimuli being more accurate than picture stimuli, and a significant interaction between the two factors, F 1(1, 31) = 10.92, MSE = .01, p < .01, \( \eta_{\text{p}}^{{2}} \) = .26; F 2(1, 15) = 8.38, MSE = .01, p < .05, \( \eta_{\text{p}}^{{2}} \) = .36. Planned contrasts (t-tests, two-tailed) showed that while there was no difference between manipulation decisions with word stimuli and manipulation decisions with picture stimuli, t 1 < 1; t 2 < 1, function decisions with words were more accurate than function decisions with pictures, t 1(31) = 3.75, p < .001; t 2(15) = 2.77, p < .05. In addition, while there was no difference between manipulation and function decisions with pictures, t 1(31) = 1.10, p > .27; t 2 < 1, function decisions with words were more accurate than manipulation decisions with words, t 1(31) = 2.99, p < .01; t 2(15) = 2.70, p < .01.

Response time analysis

A 2 × 2 ANOVA was performed contrasting the decision type and presentation format factors. There was a main effect of the decision type factor, F 1(1, 31) = 35.69, MSE = 253.37, p < .001, \( \eta_{\text{p}}^{{2}} \) = .54; F 2(1, 15) = 46.55, MSE = 989.27, p < .001, \( \eta_{\text{p}}^{{2}} \) = .76; the mean RTs for manipulation decisions, collapsing over presentation format, were longer than the mean RTs for function decisions. There was also a main effect of presentation format, F 1(1, 31) = 45.00, MSE = 111.41, p < .001, \( \eta_{\text{p}}^{{2}} \) = .59; F 2(1, 15) = 8.33, MSE = 315.99, p < .05, \( \eta_{\text{p}}^{{2}} \) = .36; the mean RTs for word stimuli were longer than the mean RTs for picture stimuli. Of particular importance, the interaction between the two factors was significant, F 1(1, 31) = 25.77, MSE = 327.74, p < .001, \( \eta_{\text{p}}^{{2}} \) = .45; F 2(1, 15) = 20.71, MSE = 745.17, p < .001, \( \eta_{\text{p}}^{{2}} \) = .58. Planned contrasts (paired t-tests, two-tailed) showed that manipulation judgments for words were slower than manipulation judgments for pictures, t 1(31) = 6.62, p < .001; t 2(15) = 3.90, p < .01, and that manipulation judgments for words were slower than function judgments for words, t 1(31) = 6.46, p < .001; t 2(15) = 10.34, p < .001. While manipulation judgments with pictures were slower than function judgments with pictures, t 1(31) = 2.65, p < .05; t 2(15) = 1.82, p < .08, there was no difference between function judgments with words and function judgments with pictures, t 1(31) = 1.34, p > .18; t 2 < 1 (see Fig. 2 for RT effects and Table 1 for accuracy effects).

Mean response times for function and manipulation judgments in picture and word form in Experiment 1. Error bars reflect standard errors of the means, across participants. *** p < .001. n.s. = not significant

Discussion

A function and manipulation judgment task was used to probe the independence of function knowledge from manipulation knowledge in healthy participants. Participants decided which two of three objects in a triad were most related in function or in manner of manipulation, and stimuli were either words or pictures. In Experiment 1, participants indicated their decision by means of a manual response (key-press). Manipulation judgments with word stimuli were slower than function judgments with word stimuli, as well as both types of judgments for picture stimuli. The accuracy and RT analysis for function decisions indicated a slight speed–accuracy trade-off: Function decisions in picture format were less accurate and faster than function decisions in word format (see Table 1). While there was no difference in RT between function decisions in word and picture format, t(31) = 1.34, p > .18, we corrected the RT data with the inverse efficiency score (IES; Townsend & Ashby, 1978; see also Bruyer & Brysbaert, 2011) and performed an identical 2 × 2 ANOVA; the IES is computed by dividing an individual participant’s RT by 1 minus the percent error (i.e., percent correct). The results from those additional analyses are detailed in the Supplemental Online Materials; importantly, all of the principal effects remain unchanged using the IES instead of raw RT. Thus, speed–accuracy trade-offs can be definitively ruled out as driving the observed pattern.

The findings in Experiment 1 support the hypothesis that retrieving knowledge of object function does not include within it an embedded simulation of the motor movement associated with the use of objects. If function knowledge is representationally distinct from motor-based knowledge, the question arises as to how function knowledge is represented. Above, we suggested that function knowledge is represented in an “abstract” format that would have a privileged relationship to lexical semantic information (see also Boronat et al., 2005; Crutch & Warrington, 2005; Shallice, 1988). That hypothesis receives some preliminary support from the results of the accuracy analyses, in which accuracy was higher for function judgments over word stimuli than for function judgments over picture stimuli. It is important, however, to distinguish the claim that function knowledge holds priority in the representation of artifact concepts, relative to manipulation knowledge, from the well-known hypothesis, known as the sensory/functional theory (SFT), that function knowledge is more important than visual knowledge for representing artifact concepts (e.g., Warrington & McCarthy, 1987; Warrington & Shallice, 1984). The SFT has not been supported by studies of patients with category-specific semantic deficits (e.g., Capitani, Laiacona, Mahon, & Caramazza, 2003). In contrast, the relevant neuropsychological domain for evaluating the idea that function knowledge holds priority over manipulation knowledge in the representation of artifact concepts is patients with selective impairments for knowledge of object function but spared knowledge of object manipulation (e.g., Negri, Lunardelli, et al., 2007; Sirigu et al., 1991).

In Experiment 2, we asked whether simply changing the way in which participants respond, from a manual key-press to a verbal basic-level naming response, would selectively change the pattern of RT effects observed for function judgments. In Experiment 2, participants indicated the correct match to the target object on every trial by naming the object at the basic level, while all other aspects of the experiment were identical to those in Experiment 1. If function knowledge is part of the core of an artifact concept, and if there is a privileged relationship between function knowledge and lexical semantics, this change in modality of response should produce a different pattern of effects relative to Experiment 1, but only for function judgments. Specifically, the prediction is that function judgments with word stimuli should be faster than function judgments with picture stimuli.

Experiment 2

Method

Participants

Thirty-two University of Rochester undergraduate students (9 male; all right-handed) between the ages of 18 and 21 years (M = 19.7 years, SD = 0.95 years) participated in the study in exchange for payment. They all had normal or corrected-to-normal vision, gave written informed consent in accordance with the University of Rochester Institutional Review Board, and had not taken part in Experiment 1.

Materials

The same materials and design as those from Experiment 1 were used in Experiment 2. Stimuli were presented with DMDX (Forster & Forster, 2003), and responses were recorded from verbal input into a microphone.

Procedure

The same desktop computer monitor as that in Experiment 1 was used in Experiment 2. In this experiment, a familiarization phase was included to ensure that participants named each object with the correct basic-level name; before the start of the experiment proper, each picture was presented with its correct name printed beneath it, and participants read the name. This familiarization was carried out twice, each time with a different random stimulus order. After familiarization, the experiment began. The directions were identical to those in Experiment 1, except that participants were instructed to speak the name of the object that matched the target into the microphone, and the resulting wav files were scored offline by the experimenter (F.E.G.). Experiment 2 was self-paced (participants hit the space bar with their right hand in between trials to continue to the next trial). All other aspects of Experiment 2 were identical to those in Experiment 1.

Results

Only correct responses were included in the RT analysis (92.3 % of trials). The data were cleaned following the same criteria as those in Experiment 1 (4.8 % of correct trials were excluded as outliers). RTs, standard deviations, and proportions of error for each cell of the design are shown in Table 2.

Accuracy analysis

A 2 × 2 ANOVA was performed contrasting the factors decision type and presentation format over the proportion correct data. There was no effect of decision type, F 1 < 1; F 2 < 1, a main effect of presentation format in the subjects’ analysis, F 1(1, 31) = 4.31, MSE = 0.01, p < .05, \( \eta_{\text{p}}^{{2}} \) = .12; F 2(1, 15) = 1.66, MSE = .01, p > .21, with word stimuli being more accurate than picture stimuli, and an interaction between decision type and presentation format, F 1(1, 31) = 6.04, MSE = 0.01, p < .05, \( \eta_{\text{p}}^{{2}} \) = .16; F 2(1, 15) = 3.79, MSE = .01, p < .07. While the accuracy of manipulation decisions was not modulated by presentation of pictures or words, t 1 < 1; t 2 < 1, function decisions with words were more accurate than function decisions with pictures, t 1(31) = 3.41, p < .01; t 2(15) = 2.16, p < .05. Function decisions with pictures and manipulation decisions with pictures were not significantly different, t 1 < 1; t 2 < 1; however, function decisions with words were more accurate than manipulation decisions with words, t 1(31) = 2.44, p < .05; t 2(15) = 1.83, p < .09.

Response time analysis

A 2 × 2 ANOVA was performed contrasting the decision type and presentation format factors. Decision type was significant, F 1(1, 31) = 25.54, MSE = 145.76, p < .001, \( \eta_{\text{p}}^{{2}} \) = .45; F 2(1, 15) = 63.25, MSE = 312.92, p < .001, \( \eta_{\text{p}}^{{2}} \) = .81: Function decisions were significantly faster than manipulation decisions. There was a main effect of presentation format in the subjects’ analysis, F 1(1, 31) = 7.96, MSE = 504.50, p < .01, \( \eta_{\text{p}}^{{2}} \) = .20; F 2(1, 15) = 1.37, MSE = 691.99, p < .26, \( \eta_{\text{p}}^{{2}} \) = .08: The mean RTs for picture stimuli were shorter than the mean RTs for word stimuli. The two-way interaction between presentation format and decision type was significant, F 1(1, 31) = 29.88, MSE = 423.08, p < .001, \( \eta_{\text{p}}^{{2}} \) = .49; F 2(1, 15) = 51.77, MSE = 114.47, p < .001, \( \eta_{\text{p}}^{{2}} \) = .78. Planned contrasts (paired t-test, two-tailed) were carried out following the same protocol as in Experiment 1. Manipulation judgments with words were slower than manipulation judgments with pictures, t 1(31) = 4.41, p < .001; t 2(15) = 3.76, p < .01; in addition, manipulation judgments with words were slower than function judgments with words, t 1(31) = 5.72, p < .001; t 2(15) = 11.22, p < .001. Manipulation judgments with pictures were slower than function judgments with pictures, t 1(31) = 2.69, p < .05; t 2(15) = 2.91, p < .05). Function judgments with pictures were slower than function judgments with words in the subjects’ analysis, t 1(31) = 3.01, p < .01; t 2(15) = 1.64, p < .12 (see Fig. 3 for RT effects and Table 2 for accuracy effects).

Mean response times for function and manipulation judgments in picture and word form in Experiment 2. Error bars reflect standard error of the mean, across participants. ** p < .01; *** p < .001

Discussion

Experiment 2 used the same experimental setup and materials as those in Experiment 1 and simply changed the way in which participants responded: In Experiment 2, participants indicated which of two objects matched a third on either manipulation or function by naming (at the basic level) the correct choice. As was observed in Experiment 1 with manual responses, manipulation judgments with words were slower than function judgments with words, as well as both types of decisions with pictures. Interestingly, the results for function judgments doubly dissociated from the pattern for manipulation judgments: While manipulation judgments were slower in word form than in picture form, function judgments were slower in picture form than in word form. These data support the hypothesis that retrieving function knowledge is independent of retrieving manipulation knowledge. In addition, these data support the hypothesis that function knowledge has a privileged relationship to lexical semantics.

An objection that may be raised against the conclusion that there exists a privileged relationship between manipulation knowledge and picture format is that in Experiment 2, RTs in the manipulation word format condition were facilitated more than RTs in the picture format condition. Thus, it may be argued that the disparity between manipulation decisions in word and picture format in Experiment 1 was due to motor interference. In this context, it is important to note that all experimental conditions were relatively faster in Experiment 2 than in Experiment 1 (function word, 441 ms; function picture, 268 ms; manipulation word, 742 ms; manipulation picture, 337 ms). This general change in RT may be due to multiple factors, including the change in response modality and the inclusion in Experiment 2 of a familiarization phase in which participants practiced naming all of the items. While the contribution of motor interference to the pattern observed in Experiment 1 cannot be ruled out entirely and would be interesting in and of itself, we do not believe that it can be the entire story, since the difference between word and picture stimuli for manipulation decisions obtained in both Experiments 1 and 2.Footnote 2

General discussion

Theories that posit that manipulation knowledge must be simulated in order to retrieve or compute function knowledge (e.g., Barsalou, 1999, 2008; Gallese & Lakoff, 2005; Kiefer & Pulvermüller, 2012; Simmons & Barsalou, 2003) have difficulty explaining the pattern of RT and error effects that we have reported. Our findings are consistent with the view that function and manipulation knowledge are functionally distinct types of object information and that retrieving function knowledge does not involve, necessarily, simulation of manipulation knowledge. This conclusion about the functional organization of object knowledge is consistent with the available neuropsychological data (Buxbaum & Saffran, 2002; Buxbaum et al., 2000; Negri, Rumiati, et al., 2007; Rosci et al., 2003; for discussions, see Kemmerer & Gonzalez Castillo, 2010; Mahon & Caramazza, 2005, 2008).

Functional neuroimaging data are also consistent with the view that function and manipulation knowledge are processed by separable systems. In healthy individuals, making manipulation decisions leads to differential BOLD contrast in left inferior parietal cortex (Boronat et al., 2005; Kellenbach, Brett, & Patterson, 2003), while function judgments leads to differential BOLD contrast in anterior temporal cortex (Canessa et al., 2008). More recently, Ishibashi, Lambon Ralph, Saito, and Pobric (2011) found that repetitive transcranial magnetic stimulation (rTMS) over inferior parietal cortex led to a selective slowing down of manipulation decisions, but not function decisions. On the contrary, when rTMS was applied to the anterior temporal lobes, there was a selective slowing down of function decisions, but not manipulation decisions (for similar TMS results, see Pelgrims et al., 2011; Pobric, Jeffries, & Lambon Ralph, 2010). These results place important constraints on a theory that posits simulation over motor information during concept retrieval. On the one hand, by hypothesis, while simulation of motor properties is important for filling out rich detail on symbolic representations (Mahon & Caramazza, 2008), data from brain-damaged patients (Buxbaum et al., 2000; Negri, Lunardelli, et al., 2007; Negri, Rumiati, et al., 2007), TMS (Ishibashi et al., 2011; Pelgrims et al., 2011; Pobric et al., 2010), functional neuroimaging (Boronat et al., 2005; Canessa et al., 2008; Kellenbach et al., 2003), and our behavioral data converge to suggest that the retrieval of manipulation knowledge is not a necessary step in retrieving function knowledge.

The data reported here help to elucidate what information is constitutive of tool concepts. Developmental work with prelinguistic children points to the role of function and shape knowledge for accessing concepts (Landau, Smith, & Jones, 1998), and neuropsychological studies indicate that function knowledge and naming impairments typically co-occur (Sirigu et al., 1991). Thus, by hypothesis, having a concept means being able to access amodal and symbolic properties of an object (e.g., it is a hammer, it is used for pounding nails, etc.), while the motor information necessary to use that object is processed by a representationally distinct, but fully interconnected, system. We have referred to this proposal elsewhere (Mahon & Caramazza, 2008) as the grounding by interaction hypothesis (see also Binder & Desai, 2011; Chatterjee, 2010; Hickok, 2009). The grounding by interaction hypothesis has been offered as an explanation of why motor information is automatically activated during conceptual tasks: Amodal conceptual knowledge and motor knowledge are representationally distinct but fully interconnected, and the dynamics of the system are such that activation of amodal conceptual knowledge leads to spreading activation of sensory–motor content that is connected and that may be relevant to, but may not be strictly necessary for, the computation of meaning.

These considerations frame a new set of questions: If motor activation is not necessary for the computation of meaning, then why is it there? Is motor system activation entirely ancillary to, and irrelevant for, the computation of meaning? Or does the computation of meaning lean on motor activation in important ways? By analogy, activation of lexical semantic representation leads to the automatic activation of phonological information, even when there is no logical need to activate phonology in order to satisfy the task requirements (e.g., Costa, Caramazza, & Sebastian-Galles, 2000; Peterson & Savoy, 1998). However, whereas phonological content bears an arbitrary (i.e., Saussarian) relation to meaning, motor knowledge about how to manipulate objects can be systematically tied to amodal meaning and function knowledge. For instance, the phonemes that make up the word “hammer” are arbitrary with respect to the meaning of the concept hammer; but the motor information that hammers are grasped in such and such a way and swung in such and such a way is very tightly tied up with what hammers are designed to accomplish (i.e., their function). It is not possible to come up with other, de novo, phonological arrangements to convey in English the meaning of the concept hammer, but other objects could be used, de novo, as hammers so long as they are manipulated in a way that satisfies the function of a hammer. Such systematic relationships between motor and conceptual knowledge for tools reduce the likelihood that motor activation is irrelevant to the computation of meaning. But the issue remains then as to exactly what functional role motor system activation plays in conceptual processing. In order to gain traction on this issue, it will be important to develop a model of the dynamics of information exchange among visual, motor, and conceptual processes; with strong hypotheses about the dynamical principles that govern how information is exchanged among functionally distinct processes, it will be possible to begin to ask how those different processes mutually depend on one another.

The tasks used in the present investigation permit a direct contrast between the two types of knowledge that were tested—function and manipulation knowledge—and allow addressing the question of whether retrieving one type of knowledge involves the retrieval of the other type. However, it is obviously the case that when making function judgments, function knowledge will be retrieved, and when making manipulation judgments, manipulation knowledge will be retrieved. A key issue to be addressed is whether when performing a “neutral” task, such as naming, function but not manipulation knowledge is automatically retrieved. More generally, another important issue to pursue is to evaluate, within the same experimental design, the relative importance of function, visual, and manipulation knowledge. The extant data from brain-damaged individuals (for a review, see Capitani et al., 2003) indicate that function knowledge does not hold priority over visual knowledge for discriminating among items within the category “tools.” However, it will be important to ask whether dissociations between those two types of knowledge can be shown as a function of the task in which participants are engaged.

Taking a step back, the grounding by interaction hypothesis can be tied into a theory of the causes of neural specificity for different classes of objects. The distributed domain-specific hypothesis (for discussions, see Mahon & Caramazza, 2009, 2011) proposes that functional specificity for a class of objects in the brain is driven not only by the local processing constraints of the region exhibiting specificity, but also by constraints imposed by connectivity with other regions that process other types of knowledge about the same class of objects. In other words, it could be hypothesized that the same channels of connectivity through which sensory–motor and conceptual knowledge are connected are also the substrate for biasing domain-specific networks to emerge, linking information about the same category of objects across different modalities of input and output.

Notes

A separate issue is whether function knowledge is differentially important as compared with high-level visual knowledge in representing artifact concepts–the so-called Sensory/Functional Theory. See below for a discussion.

Another important dimension to study in future work is perceptual similarity: Objects that are manipulated similarly share similar motor affordances (e.g., scissors, pliers) and tend to be structurally more similar. It is not clear whether perceptual similarity would slow down responses or speed them up, and how that would interact with the way in which participants are asked to respond. Available evidence (e.g., Lotto, Job, & Rumiati, 1999; for a discussion, see Navarrete, Del Prato, & Mahon, 2012) suggests that when targets must be identified, perceptual similarity of distractors impedes responding; however, it may be that when participants have to only “recognize” an object as matching or fulfilling a criterion (e.g., similar in manipulation), perceptual similarity would aid in directing attention toward that object. These will be important issues to sort out in future research.

References

Barsalou, L. W. (1999). Perceptual symbol systems. Behavioral and Brain Sciences, 22, 577–660.

Barsalou, L. W. (2008). Grounded cognition. Annual Review in Psycholology, 59, 617–645.

Beauvois, M. F. (1982). Optic aphasia: A process of interaction between vision and language. Philosophical Transactions of the Royal Society of London, 298, 35–47.

Binder, J. R., & Desai, R. H. (2011). The neurobiology of semantic memory. Trends in Cognitive Sciences, 15, 527–536.

Boronat, C. B., Buxbaum, L. J., Coslett, H. B., Tang, K., Saffran, E. M., Kimberg, D. Y., & Detre, J. A. (2005). Distinctions between manipulation and function knowledge of objects: Evidence from functional magnetic resonance imaging. Cognitive Brain Research, 23, 361–373.

Bruyer, R., & Brysbaert, M. (2011). Combining speed and accuracy in cognitive psychology: Is the Inverse Efficiency (IES) a better dependent variable than the mean reaction time (RT) and the percentage of errors (PE)? Psychologica Belgica, 51, 5–13.

Buxbaum, L. J., & Saffran, E. M. (2002). Knowledge of object manipulation and object function: Dissociations in apraxic and nonapraxic subjects. Brain and Language, 82, 179–199.

Buxbaum, L. J., Veramonti, T., & Schwartz, M. F. (2000). Function and manipulation tool knowledge in apraxia: Knowing “what for” but not “how”. Neurocase, 6, 83–97.

Canessa, N., Borgo, F., Cappa, S. F., Perani, D., Falini, A., Buccino, G., Tettamanti, M., & Shallice, T. (2008). The different neural correlates of action and functional knowledge in semantic memory: An fMRI study. Cerebral Cortex, 18, 740–751.

Capitani, E., Laiacona, M., Mahon, B., & Caramazza, A. (2003). What are the facts of category-specific disorders? A critical review of the clinical evidence. Cognitive Neuropsychology, 20, 213–261.

Caramazza, A., Hillis, A., Rapp, B. C., & Romani, C. (1990). The multiple semantics hypothesis: Multiple confusions? Cognitive Neuropsychology, 7, 161–189.

Chatterjee, A. (2010). Disembodying cognition. Language and Cognition, 2, 79–116.

Coslett, H. B., & Saffran, E. M. (1989). Preserved object recognition and reading comprehension in optic aphasia. Brain, 112, 1091–1110.

Costa, A., Caramazza, A., & Sebastian-Galles, N. (2000). The cognate facilitation effect: Implications for models of lexical access. Journal of Experimental Psychology: Learning, Memory, and Cognition, 26, 1283–1296.

Crutch, S., & Warrington, E. K. (2005). Abstract and concrete concepts have structurally different representational frameworks. Brain, 128, 615–627.

Cubelli, R., Marchetti, C., Boscolo, G., & Della Salla, S. (2000). Cognition in action: Testing a model of limb apraxia. Brain and Cognition, 44, 144–165.

Forster, K. I., & Forster, J. C. (2003). DMDX: A Windows display program with millisecond accuracy. Behavior Research Methods, Instruments, & Computers, 35, 116–124.

Gallese, V., & Lakoff, G. (2005). The brain’s concepts: The role of the sensory-motor system in conceptual knowledge. Cognitive Neuropsychology, 22, 455–479.

Gibson, J. J. (1979). The ecological approach to visual perception. Boston, MA: Houghton Mifflin.

Hickok, G. (2009). Eight problems for the mirror neuron theory of action understanding in monkeys and humans. Journal of Cognitive Neuroscience, 21, 1229–1243.

Ishibashi, R., Lambon Ralph, M. A., Saito, S., & Pobric, G. (2011). Different roles of lateral anterior temporal and inferior parietal lobule in coding function and manipulation tool knowledge: Evidence from an rTMS study. Neuropsychologia, 49, 1128–1135.

Kellenbach, M. L., Brett, M., & Patterson, K. (2003). Actions speak louder than functions: The importance of manipulability and action in tool representation. Journal of Cognitive Neuroscience, 15, 20–46.

Kemmerer, D., & Gonzalez Castillo, J. (2010). The two-level theory of verb meaning: An approach to integrating the semantics of action with the mirror neuron system. Brain and Language, 112, 54–76.

Kiefer, M., & Pulvermüller, F. (2012). Conceptual representations in mind and brain: Theoretical developments, current evidence and future directions. Cortex, 48, 805–825.

Landau, B., Smith, L., & Jones, S. (1998). Object shape, object function, and object name. Journal of Memory and Language, 38, 1–27.

Leiguarda, R. C., & Marsden, C. D. (2000). Limb apraxias: Higher-order disorders of sensorimotor integration. Brain, 123, 860–879.

Liepmann, H. (1977). The syndrome of apraxia (motor asymboly) based on a case of unilateral apraxia. (A translation from Monatschrift für Psychiatrie und Neurologie, 1900, 8, 15-44). In D. A. Rottenberg & F. H. Hockberg (Eds.), Neurological classics in modern translation. New York: Macmillan Publishing Co.

Lotto, L., Job, R., & Rumiati, R. (1999). Visual effect in picture and word categorization. Memory and Cognition, 27, 674–684.

Mahon, B. Z., & Caramazza, A. (2005). The orchestration of the sensory-motor systems: Clues from neuropsychology. Cognitive Neuropsychology, 22, 480–494.

Mahon, B. Z., & Caramazza, A. (2008). A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. Journal of Physiology – Paris, 102, 59–70.

Mahon, B. Z., & Caramazza, A. (2009). Concepts and categories: A cognitive neuropsychological perspective. Annual Reviews in Psychology, 60, 27–51.

Mahon, B. Z., & Caramazza, A. (2011). What drives the organization of object knowledge in the brain? Trends In Cognitive Science, 15, 97–103.

Navarrete, E., Del Prato, P., & Mahon, B. Z. (2012). Factors determining semantic faciliation and interference in the cyclic naming paradigm. Frontiers in Psychology, 38, 1–15.

Negri, G. A., Lunardelli, A., Reverberi, C., Gigli, G. L., & Rumiati, R. I. (2007). Degraded semantic knowledge and accurate object use. Cerebral Cortex, 43, 376–388.

Negri, G. A. L., Rumiati, R. I., Zadini, A., Ukmar, M., Mahon, B. Z., & Caramazza, A. (2007). What is the role of motor simulation in action and object recognition? Evidence from apraxia. Cognitive Neuropsychology, 24, 795–816.

Ochipa, C., Rothi, L. J. G., & Heilman, K. M. (1989). Ideational apraxia: A deficit in tool selection and use. Annals of Neurology, 25, 190–193.

Pelgrims, B., Olivier, E., & Andres, M. (2011). Dissociation between manipulation and conceptual knowledge of object use in supramarginalis gyrus. Human Brain Mapping, 32, 1802–1810.

Peterson, R. P., & Savoy, P. (1998). Lexical selection and phonological encoding during language production: Evidence for cascading processing. Journal of Experimental Psychology: Learning, Memory, and Cognition, 24, 539–557.

Pobric, G., Jeffries, E., & Lambon Ralph, A. (2010). Amodal semantic representations depend on both anterior temporal lobes: Evidence from repetitive transcranial magnetic stimulation. Neuropsychologia, 48, 1336–1342.

Pulvermüller, F. (2005). Brain mechanisms linking language and action. Nature Reviews Neuroscience, 6, 576–582.

Rosci, C., Chiesa, V., Laiacona, M., & Capitani, E. (2003). Apraxia is not associated to a disproportionate naming impairment for manipulable objects. Brain and Cognition, 53, 412–415.

Rothi, L. J. G., Ochipa, C., & Heilman, K. M. (1991). A cognitive neuropsychological model of limb praxis. Cognitive Neuropsychology, 8, 443–458.

Rueschemeyer, S.-A., van Rooij, D., Lindemann, O., Willems, R. M., & Bekkering, H. (2010). The function of words: Distinct neural correlates for words denoting differently manipulable objects. Journal of Cognitive Neuroscience, 22, 1844–1851.

Scorolli, C., & Borghi, A. M. (2007). Sentence comprehension and action: Effector specific modulation of the motor system. Brain Research, 1130, 119–124.

Shallice, T. (1988). From neuropsychology to mental structure. Cambridge: Cambridge University Press.

Simmons, W. K., & Barsalou, L. W. (2003). The similarity-in-topography principle: Reconciling theories of conceptual deficits. Cognitive Neuropsychology, 20, 451–486.

Sirigu, A., Duhamel, J. R., & Poncet, M. (1991). The role of sensorimotor experience in object recognition. Brain, 114, 2555–2573.

Teixeira Ferreira, C., Giusiano, B., Ceccaldi, M., & Poncet, M. (1997). Optic aphasia: Evidence of the contribution of different neural systems to object and action naming. Cortex, 33, 499–513.

Townsend, J. T., & Ashby, F. G. (1978). Methods of modeling capacity in simple processing systems. In J. Castellan & F. Restle (Eds.), Cognitive theory, 3, 200-239. Hillsdale, N.J.: Erlbaum.

Warrington, E. K., & McCarthy, R. A. (1987). Categories of knowledge: Further fractionations and an attempted integration. Brain, 110, 1273–1296.

Warrington, E. K., & Shallice, T. (1984). Category specific semantic impairments. Brain, 107, 829–854.

Zwaan, R. A., Stanfield, R. A., & Yaxley, R. H. (2002). Language comprehenders mentally represent the shapes of objects. Psychological Science, 13, 168–171.

Author information

Authors and Affiliations

Corresponding author

Additional information

Authors’ Note

We are grateful to Laurel Buxbaum for making the materials from Boronat and colleagues (2005) available; those materials served as a starting point for the present set of triads. F.E.G. was supported by the Psi Chi Undergraduate Research Grant. B.Z.M. was supported by NIH Grant R21NS076176-01A1. This research was supported in part by Norman and Arlene Leenhouts. The authors are grateful to Jorge Almeida and Alfonso Caramazza for discussions of these issues.

Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(DOCX 171 kb)

Rights and permissions

About this article

Cite this article

Garcea, F.E., Mahon, B.Z. What is in a tool concept? Dissociating manipulation knowledge from function knowledge. Mem Cogn 40, 1303–1313 (2012). https://doi.org/10.3758/s13421-012-0236-y

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13421-012-0236-y