Abstract

Two experiments investigated whether the triadic interaction between objects, ourselves and other persons modulates motor system activation during language comprehension. Participants were faced with sentences formed by a descriptive part referring to a positive or negative emotively connoted object and an action part composed of an imperative verb implying a motion toward the self or toward other persons (e.g., “The object is attractive/ugly. Bring it toward you/Give it to another person/Give it to a friend”). Participants judged whether each sentence was sensible or not by moving the mouse toward or away from their body. Findings showed that the simulation of a social context influenced both (1) the motor system and (2) the coding of stimulus valence. Implications of the results for theories of embodied and social cognition are discussed.

Introduction

An important ability of our species is to comprehend language, relating words to objects, entities, and situations. According to the embodied and grounded cognition approach (Barsalou, 1999; Borghi, 2005; Borghi & Pecher, 2011; Elsner & Hommel, 2001; Glenberg, 1997), understanding language implies forming a mental simulation of what is linguistically described. For example, understanding the sentence “She kicks the ball” would imply a simulation entailing the recruitment of the same neurons that are activated when actually acting or perceiving the situation expressed through the language (Borghi & Cimatti, 2010; Gallese & Lakoff, 2005; Gibbs, 2003; MacWhinney, 1999; Zwaan, 2004). Simulation thus induces a reenactment of our perceptual and interactive experience with objects and other entities, but it also has a predictive aspect, since it helps us prepare for situated action (e.g., avoiding a ball that is being kicked; see Barsalou, 2009; Borghi, 2012; Gallese, 2009).

Behavioral and TMS evidence has suggested that the simulation formed while comprehending language is quite detailed, since it is capable of activating different aspects of action, such as the effectors involved in the action-related sentences and the properties of objects mentioned in those sentences as well (see Borghi, Gianelli, & Scorolli, 2010, for a review). For example, for the sentence “She kicks the ball,” the foot, and not the hand, would be activated. In this sense, Glenberg and Kaschak (2002) demonstrated that the simulation activated while processing a sentence referring to an object motion affects motor responses. More precisely, in their experiment, participants were to judge whether sentences were sensible by pressing a button situated far from their body or close to it. The sentence stimuli referred to actions that imply a motion toward (“Open the drawer”) or away from (“Close the drawer”) the body. Results indicated that performance was faster when the movement implied by the sentence was congruent with the one actually performed by participants; that is, toward and away from the body movements were faster when performed in response to “Open the drawer” and “Close the drawer” sentences, respectively. The authors referred to this effect as the action-sentence compatibility effect (ACE).

Other evidence suggested that the neural system for emotion is engaged during language processing, showing how the motor system and the evaluation of emotional terms are strictly interwoven. Research on the approach/avoidance effect (henceforth, AAE) has shown that positive and negative words automatically trigger approach or avoidance actions (Chen & Bargh, 1999; Markman & Brendl, 2005; Niedenthal, Barsalou, Winkielman, Krauth-Gruber, & Ric, 2005; Puca, Rinkenauer, & Breidenstein, 2006; van Dantzig, Pecher, & Zwaan, 2008). Chen and Bargh found that congruent conditions—that is, pulling a lever toward the body in response to positive words (e.g., cake) and pushing a lever away from the body in response to negative words (e.g., spider)—yielded faster responses than did incongruent ones (i.e., pulling the lever toward the body in response to negative words and pushing the lever away from the body for positive words). The authors discussed their findings, claiming the following: “approach-like muscle movements are relatively faster in the presence of positive valenced stimuli and relatively slower in the presence of negatively valenced stimuli. Avoidance-like muscle movements are relatively faster in the presence of negatively valenced stimuli and relatively slower in the presence of positively valenced stimuli” (p. 221). In other words, processing positive words evokes movements toward the body (attraction), whereas processing negative words activates away-from-the-body movements (repulsion). Interestingly, van Dantzig et al. (2008) recently demonstrated that approach/avoidance actions are coded in terms of their outcomes, rather than being strictly bound to specific movements, such as those toward or away from the body. In other words, on the one hand, attraction movements are those reducing the distance between the participants and the positively connoted words presented on screen. On the other hand, movements are given the repulsion label when they increase the distance between the participants and the negatively connoted words (see also Freina, Baroni, Borghi, & Nicoletti, 2009, on this topic).

Taken together, the ACE and the AAE studies support the embodied and grounded cognition approach, providing evidence for a reenactment of the actions, objects, and entities that are linguistically described by the stimuli. It has to be pointed out, though, that the aforementioned works do not take into account the social framework in which actions occur and in which objects and entities are perceived. This lack is particularly striking given that the last years have led to a substantial increase in the number of studies adopting a social perspective to investigate cognition. This research line starts from the assumption that the interactions between perception, action, and cognition cannot be fully understood by focusing on single individuals (see, e.g., the special issue of the journal Topics in Cognitive Science edited by Galantucci & Sebanz, 2009). Even if the social perspective has been adopted in language research as well (see, e.g., Clark, 1996; Galantucci & Steels, 2008; Moll & Tomasello, 2007; Pickering & Garrod, 2004; Tanenhaus & Brown-Schmidt, 2008; Tomasello, 2005), many scholars have admitted (e.g., Semin & Smith, 2008) that the social dimension has not been dealt with sufficiently by the existing embodied theories of language in cognitive psychology and cognitive neuroscience (see Schilbach et al., in press; Singer, in press). For this reason, the aim of the present work, moving from the embodied and grounded approach outlined above, was to investigate how a social perspective would influence the relationship between language and the motor system typically found through ACE and AAE paradigms. What will happen, for instance, when we take something good for ourselves in the presence of another person? How and to what extent does the presence of another person influence our behavior?

In order to answer these questions, we implemented a modified version of the ACE and AAE paradigms, in which sentence stimuli could describe actions directed toward the self but also toward other persons. More precisely, we asked participants to perform toward-/away-from-the-body movements while evaluating sentences such as, for instance, “The object is nice (ugly). Bring it toward you (Give it to another person).” In this way, we induced participants to simulate a social context, going beyond the self-related perspective typically used in the literature so far.

Our hypothesis was twofold. First, we predicted that we would replicate the well-known ACE (Glenberg & Kaschak, 2002). Indeed, participants were expected to respond faster when toward- and away-from-the-body movements were responses to sentences describing actions directed toward the self and toward other targets, respectively. Second, we hypothesized not only finding the AAE for sentences referring to the self, as had already been found in the literature (see Chen & Bargh, 1999), but also extending the finding to other targets. The latter result would be completely new with respect to the current literature, which has typically focused on a self-related perspective.

In order to test our predictions, two experiments were conducted with the main aim of providing insights into whether and how the interaction between linguistically described objects (positive vs. negative objects) and actions directed to different targets (bringing to the self vs. giving to other targets) modulates the activation of the motor system during language comprehension.

Experiment 1

In the present experiment there were eight different conditions (see Table 1). Participants were asked to perform toward-/away-from-the-body movements when faced with sentences representing actions in which positively/negatively connoted objects could be directed toward the self or toward a generic another person target. Our hypothesis was twofold. First, we expected to replicate the ACE as in Glenberg and Kaschak (2002); that is, we expected to find an interaction between implied sentence direction and the actual response direction. However, it is worth noting that, in the present experiment, the actions described in the sentences were always directed to a given target; that is, the verb to bring was always paired with the self (i.e., “Bring it toward you”), and the verb to give was always paired with the other person (i.e., “Give it to another person”). Therefore, faster reaction times (RTs) were expected when the actual response movements were congruent with the actions and the targets mentioned in the sentence (i.e., “Bring it toward you”—toward-the-body movement, conditions 1 and 3 (Table 1); “Give it to another person”—away-from-the-body movement, conditions 6 and 8 (Table 1)) with respect to when they were incongruent (i.e., “Bring it toward you”—away-from-the-body movement, conditions 2 and 4 (Table 1); “Give it to another person”—toward-the-body movement, conditions 5 and 7 (Table 1)).

Second, with respect to the AAE paradigm, we expected to replicate the facilitation for toward-the-body movements when performed in response to sentences describing positive objects (conditions 1 and 5) with respect to negative ones (conditions 3 and 7). With regard to the away-from-the-body movements, we expected to find a similar pattern—that is, faster responses for the away-from-the-body movements–positive-objects association (conditions 2 and 6) with respect to the away-from-the-body movements–negative-objects one (conditions 4 and 8). The latter result would be new with respect to the literature, where a self-related perspective has mainly been adopted so far.

It has to be pointed out, though, that in our paradigm the response movements were not unambiguous, since participants were asked to perform different response movements for sensible/nonsensible (i.e., fillers) stimuli according to task instructions. Therefore, pulling the mouse toward the body could be matched either with sentences referring to a oneself target or with sentences referring to an another person target, and the same held for pushing the mouse away from the body. A way to get rid of this ambiguity was to verify whether the AAE would emerge within the target × valence interaction—that is, when the actions’ meaning, rather than the actual response direction, was considered. Hence, we hypothesized finding a facilitation for sentences where the oneself target was presented together with positive objects (conditions 1 and 2), with respect to negative ones (conditions 3 and 4). The same should be true for the sentences where the another person target was presented together with positive objects (conditions 5 and 6), with respect to negative ones (conditions 7 and 8).
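The eight cells of the design and the predictions attached to them can be laid out programmatically. The following is a minimal sketch, assuming that Table 1 numbers the conditions by target, then valence, then response direction; this ordering is inferred from the condition pairs cited in the text above, not copied from the table, and all names in the code are illustrative.

```python
from itertools import product

# Factor levels, in the order assumed to underlie the numbering in Table 1.
TARGETS = ["oneself", "another person"]
VALENCES = ["positive", "negative"]
DIRECTIONS = ["toward", "away"]


def build_conditions():
    """Return a dict mapping condition number (1-8) to its factor levels."""
    conditions = {}
    cells = product(TARGETS, VALENCES, DIRECTIONS)
    for number, (target, valence, direction) in enumerate(cells, start=1):
        # ACE prediction: "Bring it toward you" matches a toward-the-body
        # response; "Give it to another person" matches an away response.
        ace_congruent = (target == "oneself") == (direction == "toward")
        conditions[number] = {
            "target": target,
            "valence": valence,
            "direction": direction,
            "ace_congruent": ace_congruent,
        }
    return conditions


if __name__ == "__main__":
    for number, cell in build_conditions().items():
        print(number, cell)
```

Under this numbering, the ACE-congruent cells are conditions 1, 3, 6, and 8, and the target × valence comparison for the oneself target contrasts conditions 1 and 2 with conditions 3 and 4, as stated in the hypotheses.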

Method

Participants

Twenty-four students at the University of Bologna volunteered for the experiment. They were all right-handed, had normal or corrected-to-normal vision, and were unaware of the purpose of the experiment.

Apparatus and stimuli

The experiment took place in a dimly lit and noiseless room. The participant was seated facing a 17-in. cathode-ray tube screen driven by a 1.6-GHz processor computer, at a distance of 61 cm. Stimulus selection, response timing, and data collection were controlled by the E-Prime v1.1 software (see Stahl, 2006). A black fixation cross (1.87° × 1.87° of visual angle) was presented at the beginning of each trial. The stimuli consisted of sentences written in black ink and presented at the center of a white screen. Words were written in a 30-point Courier New font.
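As a worked check on the stimulus geometry, the physical size of the fixation cross can be recovered from the reported visual angle and viewing distance through the standard relation size = 2 × distance × tan(angle / 2). The function name below is illustrative and not part of the original setup.

```python
import math


def size_from_visual_angle(angle_deg, distance_cm):
    """Physical extent (cm) subtending angle_deg at a viewing distance of distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Values reported in the text: 1.87 degrees at a distance of 61 cm.
cross_cm = size_from_visual_angle(1.87, 61)
print(f"Fixation cross extent: {cross_cm:.2f} cm")
```

With the reported values, each arm of the cross spans roughly 2 cm on the screen.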

Half of the sentences were sensible and served as target stimuli, whereas the other half were not sensible and served as fillers. All sensible sentences described a situation where a positively/negatively connoted object had to be moved toward the oneself or toward the another person target (50 % of the sentence stimuli for each type of target), such as, for instance, “The object is useful/useless. Bring it toward you/Give it to another person.” A total of 16 adjectives were used to connote the object as positive (8 adjectives; e.g., useful) or negative (8 adjectives; e.g., useless). Each adjective was presented twice, so that it could be shown together with the oneself and with the another person target. This combination yielded 32 sentence stimuli. Half of these 32 sentences were presented in an object–target order (e.g., “The object is useful/useless. Bring it toward you/Give it to another person”), and half in a target–object order (e.g., “Bring it toward you/Give it to another person. The object is useful/useless”). We counterbalanced these two presentation orders within participants.
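The construction of the 32 sensible sentences can be sketched as follows. This is an illustration under stated assumptions: the adjective labels are placeholders (the actual adjectives are listed in the online appendix), and the rule used to assign the two presentation orders, alternating by adjective so that each target × valence cell receives both orders equally often, is hypothetical, since the text specifies only that the orders were split evenly.

```python
# Placeholder adjective labels; the real items are in the authors' appendix.
POSITIVE = [f"positive_adj_{i}" for i in range(1, 9)]
NEGATIVE = [f"negative_adj_{i}" for i in range(1, 9)]

# Action part of the sentence for each target, as quoted in the text.
ACTIONS = {
    "oneself": "Bring it toward you.",
    "another person": "Give it to another person.",
}


def build_sentences():
    """Cross 16 adjectives with 2 targets to obtain 32 sensible sentences."""
    adjectives = [(a, "positive") for a in POSITIVE] + [(a, "negative") for a in NEGATIVE]
    items = []
    for adj_index, (adjective, valence) in enumerate(adjectives):
        # Hypothetical assignment rule: alternate presentation order by adjective.
        order = "object-target" if adj_index % 2 == 0 else "target-object"
        for target, action in ACTIONS.items():
            description = f"The object is {adjective}."
            parts = [description, action] if order == "object-target" else [action, description]
            items.append({
                "sentence": " ".join(parts),
                "valence": valence,
                "target": target,
                "order": order,
            })
    return items


if __name__ == "__main__":
    for item in build_sentences()[:4]:
        print(item)
```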

The filler sentences, whose aim was to force participants to read each sentence carefully before responding, were composed following the same logic and structure as the target sentences, but one part of the sentence, randomly located, did not make sense. The fact that the sentence did not make sense could be due to the adjective (e.g., “The object is tanned [touchy], bring it toward you [give it to another person]”), to the verb (e.g., “The object is attractive [ugly], walk it to yourself [walk it to another person]”), or to the target (e.g., “The object is attractive [ugly], bring it to a tea-pot [give it to a tree]”). The number of fillers was the same as that of the target sentences (i.e., 32). A complete list of the stimuli used can be found at http://laral.istc.cnr.it/borghi/Appendix_self_others_objects.pdf.

Participants were to judge whether each sentence was sensible or not sensible (i.e., all the filler sentences) by pulling the mouse toward their body or pushing it away. In one block, the sensible sentences were associated with the toward-the-body movement and the fillers with the away-from-the-body one, whereas in the other block, the instructions were reversed. Each participant experienced both blocks, whose order was balanced among participants. The sensible and filler sentences (64 trials; i.e., 32 + 32) were the same for both blocks, for a total of 128 experimental trials. Sixteen extra stimuli (eight sensible and eight filler sentences) were used as warm-up trials before each block.

Responses were made by moving the mouse, the speed rate of which was set at a low value, along a vertically traced course drawn on the tabletop. The movements were approximately 10 cm in a toward- and an away-from-the-body direction, starting from a home position that was marked upon the course center. The mouse acceleration rate was also set to the minimum, so that the position of the cursor on the screen was related as much as possible to the position of the mouse on the table. The cursor was automatically positioned upon the fixation cross on the screen at the start of each trial, and its position was continuously tracked during the participant’s response. When the cursor reached the screen top/bottom border, the system quit recording and started a new trial. Crucially, the cursor movement was immediately followed by a congruent sentence motion on the screen (see Neumann, Förster, & Strack, 2003; Neumann & Strack, 2000). That is, after the cursor had been moved to the screen bottom, the sentence slid slightly downward, while its font size also gradually increased in order to simulate a sentence motion toward the participant. The same was true when the cursor reached the screen top, but in this case, the sentence moved upward and its font diminished, as if simulating a motion away from the participant. In case of wrong or delayed responses, the sentence color turned from black to red, in order to stress the inaccurate performance.

Procedure

The experimental procedure was as follows. Participants were required to hold the mouse with their right hand, place it upon the home position on the course drawn on the tabletop, and then read on-screen instructions. On each trial, the mouse cursor was automatically placed upon the fixation cross that appeared at the screen center. Participants were then to click the left mouse button for each sentence to replace the cross. The sentence remained on the screen until a response was given (i.e., until the cursor reached the top/bottom screen edge) or until 4,000 ms had passed. Participants were then required to reposition the mouse upon the home position on the course before starting a new trial.

The feedback “ERRORE” (i.e., “error”) or “TROPPO LENTO” (i.e., “too slow”) was given for incorrect or delayed responses, respectively, and remained on the screen for 1,500 ms. A blank screen was then presented for 500 ms before the fixation cross appeared again (see Fig. 1).

Fig. 1 (a) Sequence of events for each trial. A fixation cross appeared at the screen center, and the cursor was automatically placed upon it. When participants clicked the left mouse button, the fixation cross was replaced by a sensible or filler sentence until a response was given or until 4,000 ms had elapsed. Then a 1,500-ms feedback for wrong or belated responses appeared. After a delay of 500 ms, the next trial was initiated. Note that stimuli are not drawn to scale here. (b) Example of an experimental item. Each sentence was presented at the screen center, and participants had to move the mouse toward/away from their body, following the vertical course drawn on the tabletop, according to task instructions. When the mouse cursor reached the top/bottom screen border, the sentence slid slightly upward/downward while its font size also gradually decreased/increased. When participants performed the wrong mouse movements or when their responses were too slow, the sentence ink color turned from black to red.

As has been said, the experiment comprised 32 practice trials and 128 experimental trials split into two equal blocks (i.e., 16 practice trials + 64 experimental trials each). The training session of the first block had a threshold of at least 70 % of correct responses, while for the second block, this threshold was increased up to 80 % because we wanted to make sure that participants understood the new instructions properly. If participants scored below the threshold, a further training session was performed. If they failed for three training sessions, the experiment ended automatically. After each block, participants could take a short break. At the end of the experiment, participants were debriefed and thanked.
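The training rule described above can be expressed as a small decision function. This is a sketch only: the function and constant names are hypothetical, and the accuracy values in the usage lines are invented for illustration rather than taken from the data.

```python
# Accuracy thresholds reported in the text for the training session of each block.
THRESHOLDS = {1: 0.70, 2: 0.80}
MAX_TRAINING_SESSIONS = 3


def training_outcome(block, session_accuracies):
    """Decide what happens after the training sessions run so far.

    block: 1 or 2 (position of the block in the session).
    session_accuracies: proportion correct in each training session, in order.
    Returns "proceed", "retrain", or "abort".
    """
    threshold = THRESHOLDS[block]
    for accuracy in session_accuracies[:MAX_TRAINING_SESSIONS]:
        if accuracy >= threshold:
            return "proceed"  # threshold reached: start the experimental trials
    if len(session_accuracies) >= MAX_TRAINING_SESSIONS:
        return "abort"  # three failed sessions: the experiment ends
    return "retrain"  # below threshold: run a further training session


# Illustrative (made-up) accuracies.
print(training_outcome(1, [0.75]))
print(training_outcome(2, [0.75]))
print(training_outcome(2, [0.60, 0.70, 0.75]))
```

Note that the same accuracy (here 75 %) is sufficient in the first block but not in the second, which reflects the stricter criterion applied after the instructions were reversed.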

Our crucial dependent variable was RT, because this measure is thought to reflect central processes such as stimulus evaluation, response selection, and motor planning (Rotteveel & Phaf, 2004). We defined RT as the time between the mouse click upon the fixation cross and the initiation of the cursor movement. The start of the movement corresponded to the moment at which the cursor moved 20 pixels from its starting point in a vertical direction. This measure combined a high sensitivity to true responses with a low responsiveness to small random mouse movements.
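The RT definition above amounts to a simple onset-detection rule applied to the tracked cursor positions. The sketch below assumes that each sample is a pair of elapsed time since the mouse click (in ms) and vertical cursor position (in pixels), and that screen coordinates grow downward so that movement toward the screen bottom corresponds to pulling the mouse toward the body. The data format and function name are assumptions for illustration, not the E-Prime implementation.

```python
ONSET_THRESHOLD_PX = 20  # vertical displacement that counts as movement onset


def movement_onset(samples, threshold_px=ONSET_THRESHOLD_PX):
    """Return (rt_ms, direction) for the first sample displaced by threshold_px.

    samples: list of (time_ms_since_click, y_px) tuples; the first sample is
    taken as the starting position. Returns None if no sample qualifies.
    """
    if not samples:
        return None
    start_y = samples[0][1]
    for time_ms, y_px in samples:
        displacement = y_px - start_y
        if abs(displacement) >= threshold_px:
            # Assumption: y increases downward, so a positive displacement
            # means the cursor moves toward the screen bottom (toward the body).
            direction = "toward" if displacement > 0 else "away"
            return time_ms, direction
    return None


# Made-up trace: small jitter is ignored; onset is detected at 1,520 ms.
trace = [(0, 300), (500, 302), (1400, 310), (1520, 322), (1600, 380)]
print(movement_onset(trace))
```

Because displacements below the threshold are ignored, small tremors around the home position do not trigger a response, which is the property the 20-pixel criterion was chosen for.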

Data analysis

Considering the linguistic nature of the stimuli, the data from Experiment 1 were analyzed through a mixed-effects model, a robust analysis that allows controlling for the variability of items and participants (Baayen, Tweedie, & Schreuder, 2002; see the special issue of the Journal of Memory and Language edited by Forster & Masson, 2008, on this topic). This analysis avoids the potential lack of power of the by-participant and by-item analyses and limits the loss of information due to the prior averaging that those analyses require (Baayen et al., 2002; see also Brysbaert, 2007).

Random effects were participants and items. Fixed effects were direction of the movement (toward the body vs. away from the body), target (oneself vs. another person), and valence (negative vs. positive).
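One way to write such a model down is given below. This is a sketch under assumptions rather than the exact specification that was fitted: it assumes crossed random intercepts for participants and items, and it includes the three main effects and the three first-order interactions, leaving out the second-order interaction because the text does not report it. The symbols are introduced here for illustration.

```latex
\begin{equation}
\mathrm{RT}_{ij} = \beta_0 + \beta_1 D_{ij} + \beta_2 T_{ij} + \beta_3 V_{ij}
  + \beta_4 D_{ij} T_{ij} + \beta_5 D_{ij} V_{ij} + \beta_6 T_{ij} V_{ij}
  + u_i + w_j + \varepsilon_{ij},
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2),\;
w_j \sim \mathcal{N}(0, \sigma_w^2),\;
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
\end{equation}
```

Here $\mathrm{RT}_{ij}$ is the reaction time of participant $i$ on item $j$; $D$, $T$, and $V$ are indicator codes for direction of the movement, target, and valence; and $u_i$ and $w_j$ are the participant and item random intercepts.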

Results and discussion

Incorrect responses were removed from the analysis (2.4 %). In addition, two participants were eliminated because they made too many errors (more than 24 % of all trials). We also discarded all responses to the filler sentences. Analysis of errors revealed no evidence of a speed–accuracy trade-off, so we focused on RT analysis. RTs faster/slower than the overall participant mean minus/plus 2 standard deviations were excluded from the analyses (1.6 %).
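The RT trimming criterion can be illustrated with a short sketch. It assumes that the cutoff was computed separately for each participant from that participant's own mean and standard deviation over correct sensible trials; the text does not spell this out, so the grouping is an assumption, and the RT values below are invented for illustration.

```python
from statistics import mean, stdev


def trim_rts(rts_by_participant, n_sd=2.0):
    """Keep RTs within n_sd standard deviations of each participant's mean."""
    kept = {}
    for participant, rts in rts_by_participant.items():
        center = mean(rts)
        spread = stdev(rts)  # sample standard deviation; needs at least 2 RTs
        lower, upper = center - n_sd * spread, center + n_sd * spread
        kept[participant] = [rt for rt in rts if lower <= rt <= upper]
    return kept


# Made-up RTs (ms) for one participant, including one very slow response.
example = {"p01": [1500, 1520, 1480, 1510, 1490, 1505, 1495, 3000]}
trimmed = trim_rts(example)
print(trimmed["p01"])
```

In this example the 3,000-ms response falls above the upper cutoff and is dropped, while the remaining seven responses are retained.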

As for the random effects, the participants factor was significant, Wald Z = 3.113, p < .05, while the items one was not, Wald Z = 0.948, p = .343. When random factors (items and participants) were partialled out, the mixed-effects model analysis showed that the direction of the movement factor was not significant, F(1, 647) = 2.23, p = .136, whereas the main effects of target, F(1, 661) = 36.20, p < .001, and valence, F(1, 645) = 8.68, p < .001, were significant. Faster responses were yielded when sentences described actions directed toward the oneself target (M = 1,529 ms), with respect to the another person one (M = 1,638 ms). RTs were also faster when sentences referred to positive objects (M = 1,556 ms), with respect to negative ones (M = 1,611 ms). Interestingly, the first-order interactions were all significant [direction of the movement × target, F(1, 661) = 6.20, p < .05; direction of the movement × valence, F(1, 646) = 4.43, p < .05; and target × valence, F(1, 661) = 6.77, p < .05]. In order to better understand the differences underlying these interactions, we ran a repeated measures ANOVA with direction of the movement, target, and valence as the only within-participants factors and then conducted Fisher’s LSD post hoc tests.

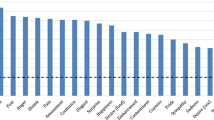

In line with the mixed-effects model analysis, all the interactions were significant [direction of the movement × target, F(1, 21) = 6.17, MSE = 13,575.52, p < .05; direction of the movement × valence, F(1, 21) = 5.68, MSE = 11,624.25, p < .05; target × valence, F(1, 21) = 9.25, MSE = 10,227.03, p < .05]. Post hoc tests showed, on the one hand, that the first interaction yielded the shortest RTs when sentences referring to the oneself target were responded to with toward-the-body movements (M = 1,497 ms, conditions 1 and 3, Table 1), ps < .05 (see Fig. 2, top panel). On the other hand, for the direction of the movement × valence interaction, the slowest combination was found when sentences describing negative objects were responded to by performing away-from-the-body movements (M = 1,647 ms, conditions 4 and 8, Table 1), ps < .001 (see Fig. 2, middle panel). Finally, for the target × valence interaction, post hoc tests indicated that the fastest RTs were yielded when sentences referred to actions where positive objects were associated with the oneself target (M = 1,480 ms, conditions 1 and 2, Table 1), ps < .01 (see Fig. 2, bottom panel). See Table 2 for a complete list of RTs and standard errors in each experimental condition.

Fig. 2 Experiment 1: results of the direction of the movement × target (top panel), direction of the movement × valence (middle panel), and target × valence (bottom panel) interactions. Values are in milliseconds, and bars represent standard errors. The values shown in this figure come from the repeated measures ANOVA on reaction times, since Fisher’s LSD post hoc tests were conducted on this analysis.

In line with our first hypothesis, our data replicated the ACE. However, this effect was yielded only when response movements consisted in pulling the mouse toward the participants’ body, and not in pushing it away. Indeed, stimuli describing actions directed toward the oneself target were responded to faster with toward-the-body movements than with away-from-the-body ones (conditions 1 and 3 vs. conditions 2 and 4, Table 1). When sentences referred to an another person target, though, we did not find a facilitation for away-from-the-body movements with respect to the toward-the-body ones (conditions 6 and 8 vs. conditions 5 and 7, Table 1; see Fig. 2, top panel).

With regard to our second hypothesis, new and interesting results were found for the AAE. Namely, in contrast with what has typically been shown in the literature, our data showed that the tendency to reduce/increase the distance with positively/negatively connoted objects emerged with respect to the linguistically described oneself target (i.e., within the target × valence interaction), and not with respect to the actual participant performing the experiment (i.e., within the direction of the movement × valence interaction). Indeed, the significant interaction between the direction-of-the-movement and valence factors was only due to the slower combination between sentences describing negative objects and away-from-the-body movements (see Fig. 2, middle panel). Conversely, with regard to the target × valence interaction, faster RTs were yielded for sentences in which actions directed toward the oneself target were associated with positive objects (conditions 1 and 2, Table 1), with respect to negative ones (conditions 3 and 4, Table 1). However, we did not find a significant facilitation for sentences describing an another person target and positive objects, with respect to negative ones (conditions 5 and 6 vs. conditions 7 and 8, Table 1; see Fig. 2, bottom panel).

Two implications can be drawn from these results: First, our experimental manipulations succeeded in making participants simulate the actions and entities described in the sentences when they referred to the oneself target. Indeed, a significant ACE and a new AAE, yielded by the target × valence interaction, emerged. Second, contrary to our hypothesis, participants did not seem to be influenced by the social context. Indeed, we failed to find (1) the ACE for sentences where the away-from-the-body movement was associated with the another person target or (2) a facilitation for sentences describing an another person target and positively connoted objects, with respect to negatively connoted ones. The absence of these effects could be attributed to the fact that a target connoted as an another person might not be familiar enough to lead participants to properly simulate a social context.

As has been indicated by recent literature on joint attention, joint action, and task sharing (Galantucci & Sebanz, 2009), the relationship shared by coactors is crucial for task execution. Of particular relevance for our work are the studies investigating the social Simon effect (SSE; see Milanese, Iani, & Rubichi, 2010; Sebanz, Bekkering, & Knoblich, 2006; Sebanz, Knoblich, & Prinz, 2003; Vlainic, Liepelt, Colzato, Prinz, & Hommel, 2010). In these studies, two participants were to respond to a nonspatial stimulus feature (e.g., color) while ignoring its location (e.g., left/right) on the screen. More precisely, one participant had to press the left key in response to the green stimulus color, and the other participant had to press the right key in response to the red color, so that each participant was performing a go/no-go task. The SSE refers to the fact that an advantage for spatially corresponding S–R pairings (i.e., left-stimulus–left-response, right-stimulus–right-response) was found only when participants performed the task together and not separately. Results also indicated that the SSE emerged only when participants interacted with a biological agent, whereas it did not when they coacted with a computer or a wooden hand, thus indicating that the joint action failed when one of the agents was nonhuman (Tsai & Brass, 2007; Tsai, Kuo, Hung, & Tzeng, 2008). Interestingly, the type of interpersonal relationship shared by coactors was also found to play a crucial role for a successful task sharing to occur. Namely, the SSE disappeared when the participants’ interaction was connoted as negative and competitive (Hommel, Colzato, & van den Wildenberg, 2009; Kourtis, Sebanz, & Knoblich, 2010). Hence, these studies demonstrated that the higher the similarities between the coactors, the easier their tuning in.
Therefore, considering the above-mentioned findings, we ran a second experiment in order to verify whether participants would simulate a relational context when targets were connoted in a closer and more familiar way.

Experiment 2

Recent evidence showed that the positive connotation of a coactor eased and improved participants’ performance during joint and shared tasks (Hommel et al., 2009; Iani, Anelli, Nicoletti, Arcuri, & Rubichi, 2011). Therefore, in the present experiment, we faced participants with new targets connoted in a positive and familiar fashion (specific positive targets; e.g., friend). We predicted that, even if only linguistically described and not actually coacting with the agent, these targets would ease the simulation of a social context and influence participants’ performance.

In order to directly compare these new specific positive targets with the targets used in the previous experiment (i.e., oneself and another person), we designed the present experiment presenting two blocks (see Table 1). In one block (block 1), we compared the specific positive targets (i.e., friend, buddy, boyfriend/girlfriend, ally) with the oneself one. In order to stress and enhance the positive connotation of these targets, we also added specific negative targets—that is, targets having a negatively connoted relationship with the agent (i.e., enemy, rival, challenger, opponent). Conversely, in the other block (block 2), we compared the oneself, specific positive, and generic targets. The latter category referred to targets having a not particularly familiar, close, or emotively connoted relationship with the agent (i.e., another person, unknown person, woman/man, dude). Our goal was to investigate the role played by the targets’ degree of specificity—that is, whether the presentation of targets being more (i.e., specific positive) or less (i.e., generic) familiar to the self would lead participants to properly simulate the social context.

To sum up, our hypothesis was twofold. First, we expected to find the ACE and AAE not only when sentences referred to the oneself target, as was shown in Experiment 1, but also when targets had a closer relation with the agent—that is, for specific positive targets. More specifically, as regards the ACE, we expected to find faster RTs when the actual response movements were congruent with the actions and the targets mentioned in the sentence (i.e., “Bring it toward you”—toward-the-body movement, conditions 1 and 3; “Give it to a friend”—away-from-the-body movement, conditions 14 and 16, Table 1) than when they were incongruent (i.e., “Bring it toward you”—away-from-the-body movement, conditions 2 and 4; “Give it to a friend”—toward-the-body movement, conditions 13 and 15, Table 1). Second, we predicted that the AAE would emerge within the target × valence interaction, and not within the direction of the movement × valence one, as was shown in Experiment 1. More specifically, we expected to find a facilitation when the oneself and specific positive targets were associated with positive objects (conditions 1 and 2 and conditions 13 and 14, Table 1, respectively) with respect to negative ones (conditions 3 and 4 and conditions 15 and 16, Table 1, respectively). Conversely, we did not expect to find a facilitation for positive objects with respect to negative ones, when targets shared a negative relationship (i.e., specific negative, block 1) or were not particularly familiar (i.e., generic, block 2) with the agent.

Method

Twenty-two new students from the same pool were selected. They were all right-handed, had normal or corrected-to-normal vision, and were unaware of the purpose of the experiment.

The apparatus and procedure were the same as those in the previous experiment. The only modification concerned the targets presented in the sentence stimuli. Unlike in Experiment 1, we chose different types of targets, and we used multiple labels for each type. In order to compare the different target types within each participant, we split those into two blocks, whose order was counterbalanced between participants (for the complete stimuli appendix, follow this link: http://laral.istc.cnr.it/borghi/Appendix_self_others_objects.pdf).

In block 1, we presented participants with sentences referring to specific positive (four items: friend, buddy, boyfriend/girlfriend, and ally) and specific negative (four items: enemy, rival, challenger, and opponent) targets. In block 2, sentences referred to generic (four items: another person, unknown person, man/woman, and dude) and specific positive (four items: friend, buddy, boyfriend/girlfriend, and ally) targets. It is worth mentioning that (1) half of the sentences of each block referred to the oneself target, whereas the other half referred to other targets; (2) the adjectives and targets were counterbalanced across two lists (half of the participants received one list, while the other half received the other list); and (3) each participant experienced both blocks, whose order was counterbalanced between participants.

As in Experiment 1, participants were to judge whether each sentence was sensible or not sensible (i.e., all the filler sentences) by pulling the mouse toward their body or pushing it away. Both block 1 and block 2 were divided into two equal parts (a and b) in order to balance the instructions. More specifically, in blocks 1a and 2a, the sensible sentences were associated with the toward-the-body movement and the fillers with the away-from-the-body one, whereas blocks 1b and 2b had the reverse assignment. Each participant experienced both block parts, whose order was counterbalanced between participants. Two intrablock training sessions were performed, following the same threshold as that in Experiment 1.
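The counterbalancing described above can be sketched as follows. This is only an illustration under stated assumptions: the text reports that block order, the order of parts a and b, and list version were balanced across the 22 participants, but not how participants were assigned. The rotation scheme and the names `balanced_cells` and `assign` are therefore hypothetical.

```python
from collections import Counter
from itertools import cycle, islice, product

BLOCK_ORDERS = (("block 1", "block 2"), ("block 2", "block 1"))
PART_ORDERS = (("a", "b"), ("b", "a"))  # a: sensible -> toward; b: sensible -> away
LISTS = (1, 2)


def balanced_cells():
    """Order the 2 x 2 x 2 design cells as complementary pairs.

    Each cell is followed by the cell that differs on all three factors,
    so any even number of consecutive assignments (counted from the
    start) is balanced on every factor.
    """
    cells = []
    for bits in product((0, 1), repeat=3):
        if bits[0] == 0:
            cells.append(bits)
            cells.append(tuple(1 - b for b in bits))
    return cells


def assign(n_participants):
    """Hypothetical assignment: rotate participants through the cells."""
    rotation = islice(cycle(balanced_cells()), n_participants)
    schedule = []
    for pid, (b, p, l) in enumerate(rotation, start=1):
        schedule.append({
            "participant": pid,
            "block_order": BLOCK_ORDERS[b],
            "part_order": PART_ORDERS[p],
            "list": LISTS[l],
        })
    return schedule


schedule = assign(22)
for factor in ("block_order", "part_order", "list"):
    print(factor, Counter(row[factor] for row in schedule))
```

With 22 participants the eight design cells cannot all be filled equally often, so the sketch only guarantees that each factor taken on its own is split 11 versus 11.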

Data analysis

As in the previous experiment, the mixed-effects model was used for data analysis. Random factors were participants and items within the list version (see Note 1). Fixed factors were direction of the movement (toward the body vs. away from the body), target (oneself–block 1 vs. specific positive–block 1 vs. specific negative vs. oneself–block 2 vs. generic vs. specific positive–block 2; see Note 2), valence (negative vs. positive), and list version (1 vs. 2; see Note 3).
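One way to write down a model with this structure is given below. It is a sketch rather than the exact specification that was fitted: the notation is ours, and we assume random intercepts only and normally distributed random terms, neither of which is stated in the text. Here $p$ indexes participants, $i$ indexes items nested within list version $L$, $D$ is direction of the movement, $\mathbf{T}$ is a vector of five dummy codes for the six target levels, and $V$ is valence.

```latex
\mathrm{RT}_{pi} = \beta_0 + \beta_D D_{pi} + \boldsymbol{\beta}_T^{\top}\mathbf{T}_{pi}
  + \beta_V V_{pi} + \beta_L L_p
  + (\text{interaction terms among } D,\ \mathbf{T},\ V)
  + u_p + w_{i(L)} + \varepsilon_{pi},
\qquad
u_p \sim \mathcal{N}(0, \sigma_u^2),\quad
w_{i(L)} \sim \mathcal{N}(0, \sigma_w^2),\quad
\varepsilon_{pi} \sim \mathcal{N}(0, \sigma_\varepsilon^2)
```

The Wald Z tests reported for the random effects would correspond to tests on $\sigma_u^2$ and $\sigma_w^2$, and the F tests to the fixed-effect terms.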

Results and discussion

As in Experiment 1, for each participant, erroneous trials (1.1 %) and outlier RTs (2.5 %) were excluded from the analyses. We also discarded RTs to the filler sentences. Analyses of errors revealed no evidence of a speed–accuracy trade-off, so we focused on RT analyses.

As for the random effects, participants, Wald Z = 3.12, p < .05, and items within list version, Wald Z = 6.27, p < .001, were significant.

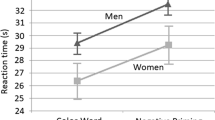

When random factors (items and participants) were partialled out, the mixed-effects model analysis showed that the direction of the movement was significant, F(1, 960) = 9.70, p < .05, since RTs were faster for the away-from-the-body movements (M = 1,381 ms) than for the toward-the-body ones (M = 1,410 ms). This result could be due to the fact that simulating the presence of multiple targets made the away-from-the-body movements more salient than the toward-the-body ones. The main effect of target was also significant, F(5, 4478) = 80.23, p < .001. Differences were explored using contrast analysis and Sidak’s correction for multiple comparisons. The analysis showed an overall facilitation of the oneself target for both block 1 and block 2 (M = 1,291 and 1,285 ms, respectively), ps < .001, and faster RTs for the specific positive–block 1 targets (M = 1,430 ms), as compared with the specific negative ones (M = 1,477 ms), p < .05 (see Fig. 3, top panel). Furthermore, the main effect of valence was significant, F(1, 352) = 8.32, p < .05, indicating that participants were faster when sentences referred to positive objects (M = 1,380 ms) than when they referred to negative ones (M = 1,411 ms). The direction of the movement × valence interaction was not significant, F(1, 4025) < 0.06, p = .810. Conversely, the direction of the movement × target, F(5, 3822) = 7.22, p < .001, and the target × valence, F(5, 4760) = 2.36, p < .05, interactions were significant.
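For readers unfamiliar with Sidak’s correction, the following sketch shows how the per-comparison threshold is obtained. It assumes, purely for illustration, that all pairwise contrasts among the six target levels were tested; the text does not state how many contrasts were run. The function names are ours.

```python
from math import comb


def sidak_alpha(alpha, m):
    """Per-comparison alpha that keeps the familywise error rate at `alpha`."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


def sidak_adjust(p, m):
    """Sidak-adjusted p value for one of `m` comparisons."""
    return 1.0 - (1.0 - p) ** m


n_levels = 6                # six target levels in the mixed-effects model
m = comb(n_levels, 2)       # assumption: every pairwise contrast is tested
per_test_alpha = sidak_alpha(0.05, m)
print(m, round(per_test_alpha, 5))  # 15 0.00341
```

Under that assumption, an uncorrected p value would need to fall below roughly .0034 to be significant at the .05 familywise level.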

Fig. 3 Experiment 2: main effect of the target factor (top panel), interaction between the direction of the movement and target factors (middle panel), and interaction between the target and valence factors (bottom panel). Values are in milliseconds, and bars represent standard errors. The values shown in this figure come from the mixed-effects model analysis for the target factor (top panel) and from a repeated measures ANOVA for the two interactions (middle and bottom panels).

In order to better understand the differences underlying these interactions, a repeated measures ANOVA with direction of the movement, target, and valence as the only within-participants factors (see Note 4) was performed, and Fisher’s LSD post hoc tests were also conducted.

In line with the mixed-effects model analysis, the interaction between the direction-of-the-movement and target factors was significant, F(5, 105) = 3.41, MSE = 16,003.653, p < .05 (see Fig. 3, middle panel). In block 1, post hoc tests showed that the oneself target yielded faster responses when associated with the toward-the-body movements (M = 1,269 ms) than with the away-from-the-body ones (M = 1,325 ms), p < .05 (conditions 1 and 3 vs. conditions 2 and 4, Table 1). A facilitation for the away-from-the-body movements over the toward-the-body ones, though, was not found for specific positive targets (1,410 vs. 1,454 ms, respectively), p = .11, or for specific negative targets (1,461 vs. 1,497 ms, respectively), p = .18. Conversely, in block 2, post hoc tests showed that both generic and specific positive targets were faster with the away-from-the-body movements (M = 1,405 and 1,415 ms, for generic and specific positive targets, respectively, conditions 18 and 20 and conditions 14 and 16, Table 1) than with the toward-the-body ones (M = 1,490 and 1,479 ms, for generic and specific positive targets, respectively, conditions 17 and 19 and conditions 13 and 15, Table 1), ps < .05. This indicates that, in block 2, response movements were modulated by generic and specific positive targets (see Fig. 3, middle panel). No facilitation was found, though, for the oneself target and toward-the-body movements (M = 1,294 ms), with respect to the away-from-the-body ones (M = 1,286 ms), p = .75 (conditions 1 and 3 vs. conditions 2 and 4, Table 1).
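The size of the congruency effect in each cell can be read off the means reported above. The short sketch below computes it as incongruent minus congruent RT, treating toward-the-body movements as congruent for the oneself target and away-from-the-body movements as congruent for all other targets. The means are copied from the text; the function and variable names are ours.

```python
# Mean RTs in ms, copied from the post hoc tests reported above.
MEANS = {
    ("block 1", "oneself"): {"toward": 1269, "away": 1325},
    ("block 1", "specific positive"): {"toward": 1454, "away": 1410},
    ("block 1", "specific negative"): {"toward": 1497, "away": 1461},
    ("block 2", "oneself"): {"toward": 1294, "away": 1286},
    ("block 2", "generic"): {"toward": 1490, "away": 1405},
    ("block 2", "specific positive"): {"toward": 1479, "away": 1415},
}


def congruent_direction(target):
    """Movement direction that matches the action described in the sentence."""
    return "toward" if target == "oneself" else "away"


def ace_ms(block, target):
    """Incongruent minus congruent mean RT; positive values indicate an ACE."""
    cell = MEANS[(block, target)]
    congruent = congruent_direction(target)
    incongruent = "away" if congruent == "toward" else "toward"
    return cell[incongruent] - cell[congruent]


effects = {key: ace_ms(*key) for key in MEANS}
for (block, target), diff in effects.items():
    print(f"{block:8s} {target:18s} {diff:+4d} ms")
```

These are descriptive differences only. According to the post hoc tests, the 56-ms (oneself, block 1), 85-ms (generic, block 2), and 64-ms (specific positive, block 2) differences were reliable, whereas the others were not.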

The interaction between the direction of the movement and valence factors was not significant, F(1, 21) = 0.07, MSE = 8,340.011, p = .80. Conversely, the interaction between the target and valence factors was significant, F(5, 105) = 2.64, MSE = 6,328.679, p < .05. In block 1, the oneself-target–positive-objects combination (M = 1,266 ms) was faster than the oneself-target–negative-objects one (M = 1,328 ms), p < .001 (conditions 1 and 2 vs. conditions 3 and 4, Table 1). No facilitation was found, though, when specific positive targets were associated with positive objects (M = 1,428 ms) with respect to negative ones (M = 1,436 ms; see conditions 13 and 14 vs. 15 and 16, Table 1), p = .63. The same held for specific negative targets (M = 1,479 vs. 1,480 ms, for positive and negative objects, respectively, p = .94; see conditions 9 and 10 vs. 11 and 12, Table 1).

The results of block 2 showed that the oneself target was faster when combined with positive objects (M = 1,257 ms) than with negative ones (M = 1,323 ms), p < .001 (conditions 1 and 2 vs. conditions 3 and 4, Table 1). Crucially, specific positive targets showed a significant facilitation when associated with positive objects (M = 1,429 ms), as compared with negative ones (M = 1,464 ms), p < .05 (conditions 13 and 14 vs. 15 and 16, Table 1). A facilitation was not found, though, for generic targets, since no difference emerged when sentences referred to positive objects (M = 1,439 ms, conditions 17 and 18, Table 1) or negative objects (M = 1,455 ms, conditions 19 and 20, Table 1; see Fig. 3, bottom panel), p = .34. See Table 2 for a complete list of RTs and standard errors in each experimental condition.
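The valence effect for each target can likewise be computed from the reported means as negative-object minus positive-object RT. The sketch below only restates the numbers given in the two preceding paragraphs; the names are ours.

```python
# Mean RTs in ms for positive vs. negative objects, copied from the text.
VALENCE_MEANS = {
    ("block 1", "oneself"): {"positive": 1266, "negative": 1328},
    ("block 1", "specific positive"): {"positive": 1428, "negative": 1436},
    ("block 1", "specific negative"): {"positive": 1479, "negative": 1480},
    ("block 2", "oneself"): {"positive": 1257, "negative": 1323},
    ("block 2", "specific positive"): {"positive": 1429, "negative": 1464},
    ("block 2", "generic"): {"positive": 1439, "negative": 1455},
}

# Positive values mean faster responses to sentences about positive objects.
valence_effects = {
    key: cell["negative"] - cell["positive"]
    for key, cell in VALENCE_MEANS.items()
}

for (block, target), diff in valence_effects.items():
    print(f"{block:8s} {target:18s} {diff:+4d} ms")
```

Only the differences for the oneself target (62 and 66 ms) and for the specific positive targets in block 2 (35 ms) were significant in the post hoc tests.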

Taken together, our data were in line with our hypothesis and also yielded new and interesting results. A significant ACE emerged in both block 1 and block 2. On the one hand, in block 1, the oneself target sentences yielded faster RTs when participants had to pull the mouse toward their body instead of pushing it away (conditions 1 and 3 vs. 2 and 4, Table 1). On the other hand, in block 2, sentences referring to both specific positive and generic targets yielded faster RTs when the mouse had to be pushed away from the body rather than pulled toward it (conditions 14 and 16 vs. 13 and 15 and conditions 18 and 20 vs. 17 and 19, Table 1, for specific positive and generic targets, respectively). Two things are worth discussing. First, the absence of an ACE for the oneself target in block 2 is in line neither with the results typically found in the literature nor with our own data obtained so far. Second, the ACE for generic targets is new with respect to Experiment 1 and was not predicted by our hypothesis. These two results, however, may have a common explanation: Presenting participants with targets having different degrees of specificity to the agent, from nonfamiliar (e.g., another person; generic targets) to highly familiar (e.g., friend; specific positive targets), may have led them to focus more on these targets than on the oneself one.

A significant AAE emerged, consistent with Experiment 1, within the target × valence interaction, and not within the direction of the movement × valence one, which was nonsignificant. As predicted, a facilitation for sentences describing positive objects, with respect to negative ones, emerged for the oneself target in both block 1 and block 2 (conditions 1 and 2 vs. 3 and 4, Table 1) and for the specific positive targets in block 2 only (conditions 13 and 14 vs. 15 and 16, Table 1). One may argue that the latter result is counterintuitive and that a significant AAE for specific positive targets should have been expected in block 1 as well. However, our data seem to indicate that a social context was properly simulated only when specific positive and generic targets were presented together in the same block (i.e., block 2). It is worth noting that two different explanations might account for the fact that the ACE and AAE for specific positive targets were found only in block 2. First, the positive and negative target connotations in block 1 might have been so relevant and prevalent as to prevent the ACE and AAE from emerging. Alternatively, it is possible that participants properly simulated a social context only when faced with sentences describing targets having different degrees of specificity (i.e., specific positive vs. generic targets, block 2). This explanation might also account for the fact that the ACE for the oneself target emerged only in block 1: The simulation of a social context in block 2 yielded faster RTs for away-from-the-body movements with respect to toward-the-body ones, thus preventing the facilitation for pulling the mouse with the oneself target from emerging. Although the present experiment does not allow us to disentangle these accounts, they could be relevant avenues for further research.

General discussion

In the present study, we tackled the issue of whether and how a social context would influence the relationship between language comprehension and the activation of the motor system. Specifically, we investigated how presenting sentences that refer to a social context would modify the ACE and AAE, which have typically been studied by adopting a self-related perspective. Four main conclusions can be drawn from our results.

First, in line with our hypothesis, our data show that both the ACE and AAE are shaped by the simulation of other targets besides the oneself one and, in particular, by those sharing a familiar and positive relationship with us. Along the same lines, Rueschemeyer, Glenberg, Kaschak, Muller, and Friederici (2010) demonstrated that the self versus other perception is relevant for language comprehension. Indeed, the authors found an increased level of activation along the cortical midline structures when participants processed sentences describing objects in motion toward oneself or another person, as compared with those describing motion away from the body.

Second, our results for the AAE are new with respect to the current literature, since they show a tendency to reduce/increase the distance with positively/negatively connoted objects with respect to the target mentioned in the sentence (target × valence interaction), and not with respect to the agent performing the experiment (direction of the movement × valence interaction). This indicates that processing a sentence referring to an object reflects not only directional information, but also the information related to the addressee of the object.

Ideomotor theories assign a crucial role to the goal-directedness of action, as compared with the kinematic aspects of movements (Hommel, Musseler, Aschersleben, & Prinz, 2001). Even if there is evidence in favor of the role of goals for linguistic simulation, in previous studies, goal-related and kinematic information have often been confounded. In the present study, however, our paradigm allowed us to disentangle these two kinds of information and, thus, to better understand the role played by the social context. Crucially, in our experiments, the action meaning of the sentence (i.e., “Bring it to you/Give it to another person/a friend”) and the kinematic meaning of the response movements (i.e., move the mouse toward/away from the body) were dissociated. Indeed, the goal-related information was represented by means of both the linguistically described action (bring vs. give) and the types of target. In line with ideomotor theories, our results showed that aspects related to the sentence goal have more relevance than the kinematic ones.

Third, our findings allowed us to tackle the issue of the automatic link between valence and motor responses. From current evidence, it is unclear whether the link between valence coding and behavior is automatic. Chen and Bargh (1999) interpreted the AAE as if positive and negative words automatically triggered approach or avoidance actions. This interpretation adheres to a traditional view based on the idea that valence-specific automatic mechanisms are developed to facilitate the survival of the organism. Along the same lines, Krieglmeyer, Deutsch, De Houwer, and De Raedt (2010) showed that valence facilitated congruent approach/avoidance responses even when participants had no intention to approach/avoid the stimuli, when they were not required to process the stimulus valence, and also when behavior was not labeled in approach/avoidance terms. Hence, the authors claimed that their findings “support the notion of a unique, automatic link between the perception of valence and approach–avoidance behaviour” (p. 607). In their matching account, Zhang, Proctor, and Wegener (2012) also supported an automatic valence coding. The authors embraced a dual-route model (see De Jong, Liang, & Lauber, 1994), claiming that through the automatic, or direct, route, “a matched stimulus–referent pair automatically activates a positive response ‘toward’ and a mismatched stimulus–referent pair automatically activates a negative response ‘away’” (p. 615). What the authors called a referent was a picture or a word, presented on the screen, which could be connoted in a positive or negative fashion (i.e., the picture/written name of Albert Einstein or Adolf Hitler, respectively). Participants were to move a positive or negative adjective-word (the stimulus; e.g., healthy vs. brutal), appearing below or above the referent, toward or away from it by means of a joystick device.

This automatic link between the perception of valence and behavior has been questioned by scholars who suggested that the AAE shares the same mechanisms underlying other action control phenomena, such as spatial compatibility effects, and that these effects can be explained by an extension of the theory of event coding (Hommel et al., 2001; see also Eder & Rothermund, 2008; Lavender & Hommel, 2007). This view is more in line with an embodied perspective, since it does not imply a rigid separation between cognition and emotion. Our findings support the latter view. Indeed, in our experiments, the positive/negative stimulus connotation turned out to be coded in an action- (or goal-) specific way; that is, the valence coding emerged in relation to the social context (Rueschemeyer, Lindemann, van Elk, & Bekkering, 2010), and not in relation to the response movements. Hence, as for the automaticity of the valence coding, our findings seem to indicate a primacy of the target factor with respect to the direction-of-the-movement one, a result that is new with respect to the existing literature. Indeed, in the present experiments, the target factor merged together the action meaning and the target the action was directed to, that is, the social context.

The fourth and last point concerns the influence of the type of targets on performance. Our results for the AAE showed that the similarities between the target and the agent are crucial in shaping performance. Indeed, although the well-documented bias for the positive objects related to the self was always present, the most interesting result concerned the specific positive targets. In line with brain-imaging and behavioral studies on motor resonance (e.g., Calvo-Merino, Grèzes, Glaser, Passingham, & Haggard, 2006; Liuzza, Setti, & Borghi, 2012), our results indicated that we tend to reduce the distance and the distinction between the self and a person perceived as similar and close to us (Aron, Aron, Tudor, & Nelson, 1991; Hommel et al., 2009). Furthermore, since a familiar person is linked to the participant’s memory (e.g., Kesebir & Oishi, 2010; Kuiper, 1982) and to the participant’s self-reference context (see the “inclusion-of-other-in-the-self approach” of Aron et al., 1991), the distance between the self and a specific positive target is then reduced. These findings extend previous studies on joint action and task sharing. It has been shown that the more similar coactors are, the more easily they tune in to each other and carry out the subsequent task (Hommel et al., 2009; Iani et al., 2011; Kourtis et al., 2010). Our results demonstrated that this tuning occurred not only when participants were sharing a task and a common goal, but also when they were simulating the presence of a person with a familiar and positive relationship to them.

In conclusion, our findings clearly demonstrated that the simulation of a social context influences performance. New research is needed, though, to further explore the fascinating issue of how far human behavior implies social attention and how far language, due to its social character, reflects this very fact.

Notes

1. We included list version in the analyses to reduce error variance (Raaijmakers, Schrijnemakers, & Gremmen, 1999). However, results due to list version are not reported, since this factor was not of theoretical interest.

2. The block (1 vs. 2) factor was not included in the analysis, since this variable overlapped with the target one. Since the target factor had six levels, that is, the six types of target presented (oneself–block 1 vs. specific positive–block 1 vs. specific negative vs. oneself–block 2 vs. generic vs. specific positive–block 2), the block variable would be redundant with respect to the target one.

3. See note 1.

4. Since, in the mixed-effects model analysis, the main effect of the list version factor was not significant, F(1, 20) = 0.387, p = .54, we did not include that factor in this analysis.

References

Aron, A., Aron, E. N., Tudor, M., & Nelson, G. (1991). Close relationships as including other in the self. Journal of Personality and Social Psychology, 60, 241–253.

Baayen, R. H., Tweedie, F. J., & Schreuder, R. (2002). The subjects as a simple random effect fallacy: Subject variability and morphological family effects in the mental lexicon. Brain and Language, 81, 55–65.

Barsalou, L. W. (1999). Perceptual symbol systems. Behavioral and Brain Sciences, 22, 577–660.

Barsalou, L. W. (2009). Simulation, situated conceptualization, and prediction. Philosophical Transactions of the Royal Society B, 364, 1281–1289.

Borghi, A. M. (2005). Object concepts and action. In D. Pecher & R. A. Zwaan (Eds.), Grounding cognition: The role of perception and action in memory, language, and thinking (pp. 8–34). Cambridge: Cambridge University Press.

Borghi, A. M. (2012). Action language comprehension, affordances and goals. In Y. Coello & A. Bartolo (Eds.), Language and action in cognitive neuroscience. Hove, U.K.: Psychology Press.

Borghi, A. M., & Cimatti, F. (2010). Embodied cognition and beyond: Acting and sensing the body. Neuropsychologia, 48, 763–773.

Borghi, A. M., Gianelli, C., & Scorolli, C. (2010). Sentence comprehension: Effectors and goals, self and others. An overview of experiments and implications for robotics. Frontiers in Neurorobotics, 4, 3.

Borghi, A. M., & Pecher, D. (2011). Introduction to the special topic embodied and grounded cognition. Frontiers in Psychology, 2, 187.

Brysbaert, M. (2007). “The language-as-fixed-effect fallacy”: Some simple SPSS solutions to a complex problem (Version 2.0). London, Royal Holloway: University of London.

Calvo-Merino, B., Grèzes, J., Glaser, D. E., Passingham, R. E., & Haggard, P. (2006). Seeing or doing? Influence of visual and motor familiarity in action observation. Current Biology, 16, 1905–1910.

Chen, M., & Bargh, J. A. (1999). Consequences of automatic evaluation: Immediate behavioral predispositions to approach or avoid the stimulus. Personality and Social Psychology Bulletin, 25, 215–224.

Clark, H. H. (1996). Using language. Cambridge: Cambridge University Press.

De Jong, R., Liang, C.-C., & Lauber, E. (1994). Conditional and unconditional automaticity: A dual-process model of effects of spatial stimulus–response correspondence. Journal of Experimental Psychology: Human Perception and Performance, 20, 731–750.

Eder, A. B., & Rothermund, K. (2008). When do motor behaviors (mis)match affective stimuli? An evaluative coding view of approach and avoidance reactions. Journal of Experimental Psychology: General, 137, 262–281.

Elsner, B., & Hommel, B. (2001). Effect anticipation and action control. Journal of Experimental Psychology: Human Perception and Performance, 27, 229–240.

Forster, K., & Masson, M. (2008). Emerging data analysis [Special issue]. Journal of Memory and Language, 59, 387–556.

Freina, L., Baroni, G., Borghi, A. M., & Nicoletti, R. (2009). Emotive concept-nouns and motor responses: Attraction or repulsion? Memory & Cognition, 37, 493–499.

Galantucci, B., & Sebanz, N. (2009). Joint action: Current perspectives. Topics in Cognitive Science, 1, 255–259.

Galantucci, B., & Steels, L. (2008). The emergence of embodied communication in artificial agents and humans. In I. Wachsmuth, M. Lenzen, & G. Knoblich (Eds.), Embodied communication in humans and machines (pp. 229–256). Oxford: Oxford University Press.

Gallese, V. (2009). Motor abstraction: A neuroscientific account of how action goals and intentions are mapped and understood. Psychological Research, 73, 486–498.

Gallese, V., & Lakoff, G. (2005). The brain’s concepts: The role of the sensorimotor system in reason and language. Cognitive Neuropsychology, 22, 455–479.

Gibbs, R. W. (2003). Embodied experience and linguistic meaning. Brain and Language, 84, 1–15.

Glenberg, A. M. (1997). What memory is for. Behavioral and Brain Sciences, 20, 1–55.

Glenberg, A. M., & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin & Review, 9, 558–565.

Hommel, B., Colzato, L. S., & van den Wildenberg, W. P. M. (2009). How social are task representations? Psychological Science, 20, 794–798.

Hommel, B., Musseler, J., Aschersleben, G., & Prinz, W. (2001). The theory of event coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24, 849–878.

Iani, C., Anelli, F., Nicoletti, R., Arcuri, L., & Rubichi, S. (2011). The role of group membership on the modulation of joint action. Experimental Brain Research, 211, 439–445.

Kesebir, S., & Oishi, S. (2010). A spontaneous self-reference effect in memory: Why some birthdays are harder to remember than others. Psychological Science, 21, 1525–1531.

Kourtis, D., Sebanz, N., & Knoblich, G. (2010). Favouritism in the motor system: Social interaction modulates action simulation. Biology Letters, 6, 758–761.

Krieglmeyer, R., Deutsch, R., De Houwer, J., & De Raedt, R. (2010). Being moved: Valence activates approach–avoidance behavior independently of evaluation and approach–avoidance intentions. Psychological Science, 21, 607–613.

Kuiper, N. A. (1982). Processing personal information about well-known others and the self: The use of efficient cognitive schemata. Canadian Journal of Behavioral Science, 14, 1–12.

Lavender, T., & Hommel, B. (2007). Affect and action: Towards an event-coding account. Cognition and Emotion, 21, 1270–1296.

Liuzza, M. T., Setti, A., & Borghi, A. M. (2012). Kids observing other kids’ hands: Visuomotor priming in children. Consciousness & Cognition, 21, 383–392.

MacWhinney, B. (1999). The emergence of language from embodiment. In B. MacWhinney (Ed.), The emergence of language (pp. 213–256). Mahwah, NJ: Erlbaum.

Markman, A. B., & Brendl, C. M. (2005). Constraining theories of embodied cognition. Psychological Science, 16, 6–10.

Milanese, N., Iani, C., & Rubichi, S. (2010). Shared learning shapes human performance: Transfer effects in task sharing. Cognition, 116, 15–22.

Moll, H., & Tomasello, M. (2007). Co-operation and human cognition: The Vygotskian intelligence hypothesis. Philosophical Transactions of the Royal Society, 362, 639–648.

Neumann, R., Förster, J., & Strack, F. (2003). Motor compatibility: The bidirectional link between behavior and evaluation. In J. Musch & K. C. Klauer (Eds.), The psychology of evaluation: Affective processes in cognition and emotion (pp. 371–391). Mahwah, NJ: Erlbaum.

Neumann, R., & Strack, F. (2000). Approach and avoidance: The influence of proprioceptive and exteroceptive cues on encoding of affective information. Journal of Personality and Social Psychology, 79, 39–48.

Niedenthal, P. M., Barsalou, L. W., Winkielman, P., Krauth-Gruber, S., & Ric, F. (2005). Embodiment in attitudes, social perception, and emotion. Personality and Social Psychology Review, 9, 184–211.

Pickering, M. J., & Garrod, S. (2004). Towards a mechanistic psychology of dialogue. Behavioral and Brain Sciences, 27, 169–226.

Puca, R. M., Rinkenauer, G., & Breidenstein, C. (2006). Individual differences in approach and avoidance movements: How the avoidance motive influences response force. Journal of Personality, 74, 979–1014.

Raaijmakers, J. G. W., Schrijnemakers, J. M. C., & Gremmen, F. (1999). How to deal with “language-as-fixed-effect fallacy”: Common misconceptions and alternative solutions. Journal of Memory and Language, 41, 416–426.

Rotteveel, M., & Phaf, R. H. (2004). Automatic affective evaluation does not automatically predispose for arm flexion and extension. Emotion, 4, 156–172.

Rueschemeyer, S. A., Glenberg, A. M., Kaschak, M. P., Muller, K., & Friederici, A. D. (2010). Top-down and bottom-up contributions to understanding sentences describing objects in motion. Frontiers in Psychology, 1, 183.

Rueschemeyer, S. A., Lindemann, O., van Elk, M., & Bekkering, H. (2010). Embodied cognition: The interplay between automatic resonance and selection-for-action mechanisms. European Journal of Social Psychology, 39, 1180–1187.

Schilbach, L., Timmermans, B., Reddy, V., Costall, A., Bente, G., Schlicht, T., & Vogeley, K. (in press). Toward a second-person neuroscience. Behavioral and Brain Sciences.

Sebanz, N., Bekkering, H., & Knoblich, G. (2006). Joint action: Bodies and minds moving together. Trends in Cognitive Sciences, 10, 70–76.

Sebanz, N., Knoblich, G., & Prinz, W. (2003). Representing others' actions: Just like one's own? Cognition, 88, B11–B21.

Semin, G. R., & Smith, E. R. (Eds.). (2008). Embodied grounding: Social, cognitive, affective, and neuroscientific approaches. New York: Cambridge University Press.

Singer, T. (in press). The past, present and future of social neuroscience: A European perspective. NeuroImage.

Stahl, C. (2006). Software for generating psychological experiments. Experimental Psychology, 53, 218–232.

Tanenhaus, M. K., & Brown-Schmidt, S. (2008). Language processing in the natural world. Philosophical Transactions of the Royal Society B: Biological Sciences, 363, 1105–1122.

Tomasello, M. (2005). Uniquely human cognition is a product of human culture. In S. Levinson & P. Jaisson (Eds.), Evolution and culture (pp. 203–218). Cambridge, MA: MIT Press.

Tsai, C. C., & Brass, M. (2007). Does the human motor system simulate Pinocchio’s actions? Co-acting with a human hand vs. a wooden hand in a dyadic interaction. Psychological Science, 18, 1058–1062.

Tsai, C. C., Kuo, W. J., Hung, D. L., & Tzeng, O. J. (2008). Action co-representation is tuned to other humans. Journal of Cognitive Neuroscience, 20, 2015–2024.

van Dantzig, S., Pecher, D., & Zwaan, R. A. (2008). Approach and avoidance as action effect. Quarterly Journal of Experimental Psychology, 61, 1298–1306.

Vlainic, E., Liepelt, R., Colzato, L. S., Prinz, W., & Hommel, B. (2010). The virtual co-actor: The social Simon effect does not rely on online feedback from the other. Frontiers in Psychology, 1, 208.

Zhang, Y., Proctor, R. W., & Wegener, D. T. (2012). Approach–avoidance actions or categorization? A matching account of reference valence effects in affective S–R compatibility. Journal of Experimental Social Psychology, 48, 609–616.

Zwaan, R. A. (2004). The immersed experiencer: Toward an embodied theory of language comprehension. In B. H. Ross (Ed.), Psychology of learning and motivation (Vol. 44, pp. 35–62). New York: Academic Press.

Acknowledgments

Many thanks to the members of the EMCO group (www.emco.unibo.it) for helpful comments and discussions on this work. We would also like to thank Dr. Yanmin Zhang and Dr. Elena Gherri for helpful comments on data interpretation and discussion and Prof. Roberto Bolzani for his substantial help with the data analysis and the discussion of the results.

This work was supported by the MIUR (Ministero Italiano dell’Istruzione, dell’Università e della Ricerca) and by the European Community, FP7 project ROSSI (www.rossiproject.eu), Emergence of Communication in Robots through Sensorimotor and Social Interaction.

Cite this article

Lugli, L., Baroni, G., Gianelli, C. et al. Self, others, objects: How this triadic interaction modulates our behavior. Mem Cogn 40, 1373–1386 (2012). https://doi.org/10.3758/s13421-012-0218-0
