Abstract

Cognitive effort has been implicated in numerous theories regarding normal and aberrant behavior and the physiological response to engagement with demanding tasks. Yet, despite broad interest, no unifying, operational definition of cognitive effort itself has been proposed. Here, we argue that the most intuitive and epistemologically valuable treatment is in terms of effort-based decision-making, and advocate a neuroeconomics-focused research strategy. We first outline psychological and neuroscientific theories of cognitive effort. Then we describe the benefits of a neuroeconomic research strategy, highlighting how it affords greater inferential traction than do traditional markers of cognitive effort, including self-reports and physiologic markers of autonomic arousal. Finally, we sketch a future series of studies that can leverage the full potential of the neuroeconomic approach toward understanding the cognitive and neural mechanisms that give rise to phenomenal, subjective cognitive effort.

Phenomenal cognitive effort is ubiquitous. We all know what it is like to feel that a cognitive task is effortful, or to decide between engaging in a demanding task and daydreaming. Life is full of choices between expending cognitive effort in pursuit of often highly valuable outcomes, or instead withholding effort, for reasons that are often unclear beyond “just not feeling like it.” Intuition aside, there is ample evidence for a trade-off. Cognitive effort can impact task performance in a wide variety of tasks, ranging from arithmetic to political attitude formation (Cacioppo, Petty, Feinstein, & Jarvis, 1996). Moreover, it impacts economic decision-making quality (Franco-Watkins, Pashler, & Rickard, 2006; Garbarino & Edell, 1997; Payne, Bettman, & Johnson, 1988; Shah & Oppenheimer, 2008; Smith & Walker, 1993; Verplanken, Hazenberg, & Palenewen, 1992) and features prominently among the symptomatology of motivational and mood disorders and schizophrenia (Cohen, Lohr, Paul, & Boland, 2001; Fervaha, Foussias, Agid, & Remington, 2013). Finally, cognitive effort is fundamentally implicated in the regulation of cognitive control during goal pursuit (Dayan, 2012; Kurzban, Duckworth, Kable, & Myers, 2013; Shenhav, Botvinick, & Cohen, 2013).

Yet despite these numerous implications, little is known about cognitive effort beyond the first principle that decision-makers seek to minimize it (Hull, 1943). It is not clear why some tasks are effortful while others are not, what causes someone to withhold their effort or engage, or why we would even have a bias against effort in the first place. Likewise, subjective effort may be highly context-dependent for reasons that are not understood: In some cases, or among some individuals, cognitive effort may be sought out rather than avoided (Cacioppo & Petty, 1982). Currently, we know very little about the neural systems mediating the decision to expend effort. Part of the problem is that most studies either treat effort indirectly (invoking it post hoc, for example) or neglect it entirely. This limited treatment has yielded a construct that is widely implicated, yet poorly defined, impeding theoretical development.

Understanding the decision to expend cognitive effort, as with any decision, comes down to investigating the relevant costs and benefits: how they are perceived, represented, and ultimately drive action selection. A cost–benefit framing of behavior is the bedrock of economic decision-making. In particular, behavioral economics offers a rich methodology for investigating decision processes that cross the dimensions of choice, such as risk, delay, and uncertainty. Neuroeconomists have, in turn, applied behavioral economic methods to reveal the cognitive and neural mechanisms underlying decision processes. The successes of these fields argue strongly for leveraging this approach toward a neuroeconomics of cognitive effort.

This article is intended to lay the foundation for a program of research to clarify the descriptive and mechanistic principles of cognitive effort. The structure of the article is as follows. First, we review studies that have investigated cognitive effort directly, or implicated it in a diverse array of emerging theories on behavior and cognitive control; coverage is also given to the literature on the neural systems that support effort-based decision-making. Second, we provide a brief summary highlighting the utility of behavioral and neuroeconomic methods, including our own initial work incorporating such approaches toward the investigation of cognitive effort. Finally, we develop an integrated proposal for a future program of research by delineating major questions about cognitive effort and discussing how they might be addressed with neuroeconomic methods.

A brief review of cognitive effort

Why study cognitive effort?

The decision to expend cognitive effort can impact numerous and diverse outcomes. Academic achievement appears to be largely determined by a combination of intelligence, conscientiousness, and “typical intellectual engagement,” the latter two of which arguably pertain to effort (von Stumm, Hell, & Chamorro-Premuzic, 2011). Trait disposition to engage with and enjoy cognitively demanding tasks, such as is measured by the Need for Cognition Scale, predicts higher academic achievement and standardized testing scores, along with higher performance on mathematic, problem solving, memory, and other cognitive tasks and judgments (Cacioppo et al., 1996). Rational reasoning (Shah & Oppenheimer, 2008; Toplak, West, & Stanovich, 2011) and economic decision-making (Payne et al., 1988; Smith & Walker, 1993; Venkatraman, Payne, Bettman, Luce, & Huettel, 2009; Verplanken et al., 1992) may also depend on the willingness to expend effort.

Moreover, cognitive effort appears to be a central, but as yet understudied, dimension of mental illness and other clinical disorders. A lack of cognitive effort has been implicated in the symptomatology of syndromes for which anergia, avolition, and anhedonia feature prominently, including depression (Cohen et al., 2001; Hammar, 2009; Hartlage, Alloy, Vázquez, & Dykman, 1993; Treadway, Bossaller, Shelton, & Zald, 2012; Zakzanis, Leach, & Kaplan, 1998) and schizophrenia (Fervaha et al., 2013; Gold et al., 2013; though see Gold et al., 2014). For example, deficient effort has been invoked to explain the observation that depressed patients underperform on cognitively demanding tasks, despite matching control participants on less demanding tasks (Cohen et al., 2001; Hammar, 2009; Hartlage et al., 1993; Zakzanis et al., 1998).

Psychological theories of cognitive effort

Numerous and diverse theories implicate cognitive effort as mediating behavior. Yet direct treatment is rare, leaving the construct both underdeveloped and widely cited. Here we review a selected set of emerging and longstanding theories that invoke the construct of cognitive effort.

What is cognitive effort?

Prior to reviewing the theory, it is important to consider what is meant by “cognitive effort.” To serve a valuable epistemological purpose, “cognitive effort” must carry explanatory weight while not being redundant with existing constructs. This section begins with a brief consideration, motivated by this principle, of what should and should not be considered cognitive effort.

At a coarse level, “effort” refers to the degree of engagement with demanding tasks. High engagement may enhance performance by way of attention. However, effort is not redundant with attention. Consider the distinction between top-down, endogenous, voluntary attention, which may be subjectively effortful, and bottom-up, exogenous, involuntary attention, which is not (Kaplan & Berman, 2010).

Cognitive effort is also not motivation, though the effects of increased motivation on performance may be mediated by increased effort. A research participant, for example, may be motivated either to expend cognitive effort, as when trying to enhance performance on a difficult task, or instead to minimize cognitive effort, as when complying with instructions to rest during a resting-state fMRI scan.

Effort and difficulty are also closely coupled. Some have posited difficulty as being the primary determinant of effort (Brehm & Self, 1989; Gendolla, Wright, & Richter, 2012; Kahneman, 1973). Yet, “effort” and “difficulty” are also not identical. Their distinction is readily apparent in the lines classically drawn between tasks that are “data-limited” and “resource-limited” (Norman & Bobrow, 1975). In short, tasks are resource-limited if performance can be improved by allocating more cognitive resources to their execution, and data-limited if performance is constrained by data quality, such that additional cognitive resources would not enhance performance. Consider, for example, the data-limited task of reading visually degraded (low-contrast or partially occluded) words. The task can be both very difficult and also not impacted by the degree of effort exerted toward it. The key distinction is that an observer might rate a task as being difficult, in the sense of being unlikely to succeed, while also not rating it as effortful.

As numerous authors have noted, one resource-limited function linked closely with effort is cognitive control (Mulder, 1986). It has long been recognized that effortful tasks are complex and nonautomatic, requiring controlled responses, and involve sequential and capacity-limited processes (Hasher & Zacks, 1979; Schneider & Shiffrin, 1977)—all features that characterize cognitive control. Moreover, mounting evidence is linking both the behavioral and physiological markers of control with phenomenal effort. However, cognitive control and effort should not be considered redundant. On the one hand, there is the possibility of control-demanding engagement that is not effortful. “Flow,” for example, is described as a state of effortless control that may characterize high proficiency at demanding tasks (Nakamura & Csikszentmihalyi, 2002). This phenomenon is not well understood, however, and may reflect increased reliance on automatic over controlled processing. On the other hand, rather than being synonymous with “control,” “effort” may be primarily implicated in the decision to engage control resources—a topic of recent theoretical (Inzlicht, Schmeichel, & Macrae, 2014; Kaplan & Berman, 2010; Kurzban et al., 2013; Shenhav et al., 2013) and empirical (Dixon & Christoff, 2012; Kool, McGuire, Rosen, & Botvinick, 2010; Westbrook, Kester, & Braver, 2013) work.

Cognitive effort is thus not identical with difficulty, motivation, attention, or cognitive control. These features may be necessary, but are yet insufficient to cover the concept. Importantly, effortful tasks are also motivating, sometimes drawing greater engagement when counteracting boredom, or more often causing aversion to engagement, due to some as-yet-unidentified causes. The motivational quality and volitional nature of cognitive effort suggest that an epistemologically useful definition should focus on decision-making: decisions about whether to engage, and also about the intensity of engagement.

In the sections that follow, we describe theories in which effort is treated as a variable in decisions to engage cognitive control and describe a program of research to investigate that decision. First, however, we review selected literature with theories implicating cognitive effort in mediating behavioral performance and physiological response during task engagement.

Effort as a mediator

Many theories point to cognitive effort as mediating the behavioral or physiological consequences of motivation. Cognitive fatigue—declining performance and physiological responses to task events over extended engagement with demanding tasks—is thought to be partly volitional, since motivational incentives can counteract fatigue effects (Boksem & Tops, 2008). Similarly, augmenting motivation can counteract “depletion” effects—in which willingness to exert self-control declines with protracted exertion of self-control (Muraven & Slessareva, 2003). Mind-wandering and task-unrelated thoughts are another active area of research with a potentially central role for cognitive effort (McVay & Kane, 2010; Schooler et al., 2011).

Cognitive effort has also been implicated in various theories on strategy selection in which high-effort, high-performance strategies compete with lower-effort, lower-performance ones. Mnemonic performance, for example, is thought to improve with more effortful encoding (Hasher & Zacks, 1979). Likewise, the quality of multi-attribute decision-making may depend on the extent to which a decision-maker uses more effortful, though higher-quality, strategies for comparing the alternatives (Payne et al., 1988). Effort, according to these theories, is treated as a cost offsetting the value of otherwise desirable cognitive strategies.

Cognitive effort is also implicated in more mechanistic accounts. The balance between model-free (habitual) and model-based control of behavior, for example, may be partly determined by cognitive effort (see, e.g., Daw, Gershman, Seymour, Dayan, & Dolan, 2011; Lee, Shimojo, & O’Doherty, 2014). According to this theory, behavior is either organized by simple, model-free associations between stimuli or states and their expected outcome values, or by model-based control, in which the organism explicitly represents (i.e., simulates) potential action–outcome sequences and selects the optimal sequences. Although model-based control can yield more globally optimal selections, individuals may default to model-free habits because model-based selection is computationally expensive.
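The contrast between the two controllers can be sketched in a few lines of Python. This is a toy illustration only, not a model from the cited studies: the two-step task, the reward values, and all names (`TRANSITIONS`, `CACHED_Q`, `model_free_choice`, `model_based_choice`) are hypothetical. The cached values are assumed to reflect only immediate reward, standing in for incompletely learned habits. The model-based controller simulates every action-outcome sequence, and the count of model lookups serves as a crude proxy for computational expense.

```python
from typing import Dict, List, Tuple

ACTIONS: List[str] = ["left", "right"]

# Hypothetical world model: (state, action) -> (next_state, immediate_reward).
TRANSITIONS: Dict[Tuple[str, str], Tuple[str, float]] = {
    ("start", "left"): ("A", 1.0),
    ("start", "right"): ("B", 0.0),
    ("A", "left"): ("end", 0.0),
    ("A", "right"): ("end", 0.0),
    ("B", "left"): ("end", 0.0),
    ("B", "right"): ("end", 5.0),
}

# Hypothetical cached action values (assumed to capture immediate reward only).
CACHED_Q: Dict[Tuple[str, str], float] = {
    ("start", "left"): 1.0,
    ("start", "right"): 0.0,
}


def model_free_choice(state: str) -> str:
    """Pick the action with the highest cached value; no simulation is run."""
    return max(ACTIONS, key=lambda a: CACHED_Q.get((state, a), 0.0))


def simulate(state: str, lookups: List[int]) -> float:
    """Return the best total future reward from `state` by exhaustive search."""
    if state == "end":
        return 0.0
    best = float("-inf")
    for action in ACTIONS:
        next_state, reward = TRANSITIONS[(state, action)]
        lookups[0] += 1  # one query of the world model
        best = max(best, reward + simulate(next_state, lookups))
    return best


def model_based_choice(state: str) -> Tuple[str, int]:
    """Pick the action with the best simulated outcome; also report lookups."""
    lookups = [0]
    values: Dict[str, float] = {}
    for action in ACTIONS:
        next_state, reward = TRANSITIONS[(state, action)]
        lookups[0] += 1
        values[action] = reward + simulate(next_state, lookups)
    best_action = max(ACTIONS, key=lambda a: values[a])
    return best_action, lookups[0]
```

In this toy task the habitual controller takes the immediately rewarding action, whereas the planner finds the larger delayed reward at the price of several model queries. The number of queries grows with the depth and branching of the task, which is one way to picture why model-based selection is expensive.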

A similar argument is found in dual-mechanisms-of-control theory (Braver, 2012), which describes two modes of cognitive control with dissociable temporal dynamics: proactive task set preparation and maintenance, which is effortful but potentially performance-enhancing, and a less effortful reactive mode. Intriguingly, a recent report provides evidence of an individual-differences correlation between model-based decision-making and proactive cognitive control, suggesting a common underlying process, such as effort-based decision-making (A. R. Otto, Skatova, Madlon-Kay, & Daw, 2014).

A distinction should be drawn between accounts of effort based on computational costs and accounts based on metabolic costs. A bias against proactive and model-based control is explained by appealing to computational costs. These modes of control are costly because the working memory circuits supporting cognitive control are capacity-limited (Feng, Schwemmer, Gershman, & Cohen, 2014; Haarmann & Usher, 2001; Just & Carpenter, 1992; Oberauer & Kliegl, 2006). Thus, working memory constitutes a precious resource. As we describe in greater detail below, recent models have posited that phenomenal effort, or the bias against effort expenditure, is due to the opportunity cost of allocating that precious resource (Inzlicht et al., 2014; Kaplan & Berman, 2010; Kurzban et al., 2013; Shenhav et al., 2013). By contrast, metabolic accounts posit metabolic inputs as the precious resource. There is evidence of blood glucose depletion following extended engagement with demanding self-control tasks (see Gailliot & Baumeister, 2007, for a review). There is also evidence that blood glucose concentrations decrease with time spent specifically on cognitive-control-demanding tasks (Fairclough & Houston, 2004; though see Marcora, Staiano, & Manning, 2009). Glucose depletion, according to the metabolic model, is evidence that glucose is used by, and thus needed for, control processes. If blood glucose is necessary for control, then phenomenal effort may help prevent overuse of glucose by biasing disengagement.

However, there are several reasons to be skeptical of this metabolic account. Unlike physical effort, there does not appear to be a global metabolic cost for utilizing cognitive control relative to automatic (noncontrolled) behavior, or even relative to rest (but see Holroyd, in press). Indeed, the brain’s overall metabolic demands change little during task engagement (Gibson, 2007; Kurzban, 2010; Raichle & Mintun, 2006). Although cortical spiking is metabolically costly, consuming large amounts of ATP (Lennie, 2003), the brain’s intrinsic “resting” dynamics are also costly, and global glucose consumption may increase no more than 1% during vigorous task engagement (Raichle & Mintun, 2006). Moreover, astrocytes contain stores of glycogen, and consequently are much better positioned to provide for the rapid and transient increases in the local metabolic demands of task-engaged cortical processing (Gibson, 2007; Raichle & Mintun, 2006). Hence, a tight coupling between blood glucose and local metabolic demands is unlikely. Finally, the frequently observed link between blood glucose depletion and self-control may have other explanations. Specifically, it is possible that glucose provides one of many internal signals that track protracted engagement with demanding tasks that may jointly factor into the decision to expend further effort. In a recent and striking study, simply altering a participant’s beliefs about willpower determined whether or not changes in blood glucose affected effortful self-control (Job, Walton, Bernecker, & Dweck, 2013). This belief effect suggests that blood glucose can impact, but does not determine, the decision to expend effort.

An association between blood glucose and effort might also be explained by changes in peripheral metabolism that support the sympathetic arousal occurring during effortful task performance. Numerous markers of arousal have been found to track engagement with effortful tasks. These include cardiovascular changes: increased peripheral vasoconstriction and decreased pulse volume, decreased heart rate variability, and increased systolic blood pressure (Capa, Audiffren, & Ragot, 2008; Critchley, 2003; Fairclough & Roberts, 2011; Gendolla et al., 2012; Hess & Ennis, 2011; Iani, Gopher, & Lavie, 2004; Wright, 1996). These cardiovascular indicators, along with other sympathetic response variables, including skin conductance (Naccache et al., 2005; Venables & Fairclough, 2009) and pupil dilation (Beatty & Lucero-Wagoner, 2000; van Steenbergen & Band, 2013), have all been used as indices of cognitive effort. Though sympathetic arousal may accompany cognitive effort, it also responds to numerous other processes that are unrelated to effort. For this reason, and reasons explained later, arousal may provide evidence of effort, but it should not be equated with cognitive effort.

Theories about cognitive effort

Relative to the number of theories implicating effort as a mediator, fewer have aimed to explain cognitive effort directly. Theoretical development, however, has begun to address longstanding questions about why tasks are effortful, or, alternatively, why a bias against task engagement exists in the first place, and also how that bias is regulated and overcome.

Earlier theories (Kahneman, 1973; Robert & Hockey, 1997) posited effort, reasonably, though broadly, as a phenomenal experience consequent to the deployment of resources to boost performance. In the cognitive–energetic framework, for example, a control loop increases both resource deployment and phenomenal effort when a monitor identifies flagging performance (Robert & Hockey, 1997). “Energetics” implies a cost associated with resource deployment, but does not narrow down the nature of this resource or of the cost.

A recent metabolic-type proposal is concerned more with the accumulation of metabolic waste products than metabolic inputs. According to this proposal, the production-to-clearance ratio of amyloid beta increases during control-demanding tasks, and phenomenal effort reflects the progressive accumulation of amyloid beta proteins (Holroyd, in press). Multiple lines of evidence suggest this hypothesis, including that effortful task engagement may particularly increase cortical norepinephrine release, driving physiological changes that both increase amyloid beta production and decrease clearance rates. Also, sleep appears to play an important role in amyloid beta removal by boosting clearance rates (Xie et al., 2013), and sleep deprivation was recently shown to increase phenomenal effort (Libedinsky et al., 2013). Much more evidence is needed, however, that subjective effort maps onto the specific metabolic processes described in this proposal. Phenomenal effort may have nothing to do with accumulating amyloid beta, even if it does co-occur with demanding task engagement. Alternatively, amyloid accumulation may relate more to the progressive phenomenon of fatigue than to momentary or prospective effort.

Multiple authors have commented on potential connections between cognitive fatigue and cognitive effort. Fatigue is thought to interact with motivation to regulate effort expenditure (Boksem, Meijman, & Lorist, 2006; Hockey & Robert, 2011; Hopstaken, van der Linden, Bakker, & Kompier, 2014). Fatigue may well influence effort, but the two are likely dissociable. One may anticipate or experience subjective effort associated with particular tasks even at the beginning of a workday, and other tasks may seem relatively effortless, even when one is fatigued. Conversely, fatigue seems to be associated with clear performance declines that may be due to a source other than effort expenditure. This suggests that fatigue is neither necessary nor sufficient to account for phenomenal effort.

As was suggested above, a growing literature proposes that effort reflects the opportunity costs of working memory allocation. The justification starts with the observation that cognitive control and working memory are valuable, in that they promote optimally goal-directed behaviors. Thus, a bias against their use appears maladaptive. The apparent paradox is resolved by taking into account the opportunity costs incurred by resource allocation.

Cognitive-control resources are valuable because they promote behavior in pursuit of valuable goals. According to an influential model, cognitive control involves the maintenance of task sets in prefrontal working memory circuits, from which top-down signals bias the processing pathways that connect stimuli to goal-directed responses (Miller & Cohen, 2001). Although these circuits can be flexibly reconfigured to subserve multiple task goals (Cole et al., 2013), their ability to implement multiple goals simultaneously is sharply limited (Feng et al., 2014; Haarmann & Usher, 2001; Just & Carpenter, 1992; Oberauer & Kliegl, 2006). Allocation toward any goal precludes allocation to other available goals, thus incurring opportunity costs. Optimizing behavior, therefore, means optimizing goal selection.

One way to optimize goal selection would be to make working memory allocation value-based. Early mechanistic models incorporated this principle by proposing that items are gated into working memory by value-based reinforcement signals encoded in phasic dopamine release to the (prefrontal) cortex and striatum (Braver & Cohen, 2000; Frank, Loughry, & O’Reilly, 2001). Subsequent reinforcement-learning models have advanced the position that just as reward can be used to train optimal behavior selection, it can be used to train optimal working memory allocation (Dayan, 2012). In an empirical demonstration, a reinforcement-learning model predicted trial-wise activation in the striatum (implicated in gating) corresponding to the predictive utility of items in working memory (Chatham & Badre, 2013). The result was interpreted as evidence that gating signal strength varies with the value of the items in working memory.

Building on the hypothesis that working memory allocation is value-based, a recent hypothesis has formalized the allocation of control resources in terms of economic decision-making (Shenhav et al., 2013). According to this proposal, task sets are selected and the intensity of their implementation is regulated as a function of the expected value of the goals to which they correspond. If the expected value of a goal is high, control is implemented more intensely. If the expected value drops because, for example, attainment becomes unlikely, or it becomes somehow too costly to pursue, intensity decreases, and at the limit, control resources are withdrawn. Importantly, the cost–benefit computation includes both the value of the goal, discounted by its likelihood of attainment and delay to outcome, and the costs related to the maintenance of task sets.
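The cost-benefit computation just described can be written compactly. The notation below is an illustrative shorthand assumed for this sketch, not the formalism of Shenhav et al. (2013): g indexes a candidate goal (task set), s is the intensity of control, V(g) is the value of the goal, p(g | s) is its likelihood of attainment at that intensity, d(t_g) is a discount factor between 0 and 1 that decreases with the delay t_g to the outcome, and C(s) is the cost of maintaining the task set.

```latex
\[
\mathrm{EV}(g, s) \;=\; p(g \mid s)\, d(t_g)\, V(g) \;-\; C(s),
\qquad
(g^{*}, s^{*}) \;=\; \arg\max_{g,\, s}\; \mathrm{EV}(g, s)
\]
```

Under this sketch, lowering p(g | s) or raising C(s) reduces the expected value and hence the selected intensity. If the value of disengagement is assumed to be zero, control is withdrawn once the maximum expected value is no longer positive.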

A number of recent proposals tie phenomenal cognitive effort either to maintenance costs in the expected value equation or to similar constructs (Inzlicht et al., 2014; Kaplan & Berman, 2010; Kurzban et al., 2013; Shenhav et al., 2013). Phenomenal effort is proposed to be the felt representation of opportunity costs of allocated resources. Cost signals need not always be conscious to influence behavior, but they may become conscious when the costs are sufficiently high. In any case, their effect is to bias disengagement and thereby conserve control resources. Performance decline may accompany disengagement, by way of attentional distraction, or an increasing tendency for goal switching rather than goal maintenance.

A number of variables have been proposed to affect subjective effort, including an individual’s working memory capacity, perceived efficacy, fatigue, and motivational state. Yet, little is known about how these variables influence subjective effort psychologically or mechanistically. Even less is known about the external variables that have been proposed to influence opportunity costs. For example, in computing opportunity costs, does the brain track the value of other available goals, or does it rely only on information about the current goal (Kurzban et al., 2013)? If it tracks other available goals, how is it determined what set of goals will be tracked? Also, how do these come to influence either prospective or ongoing decisions about effort?

Inspiration for future hypotheses may come from other models that have been developed to address the opportunity costs of resource allocation. In a model of physical vigor, for example, regulation is achieved by computing local information about the average rate of reward, which is reported by striatal dopamine tone (Niv, Daw, Joel, & Dayan, 2007). According to the model, vigor (the inverse latency to responding) is determined by a trade-off between the energetic costs of rapid responding and the opportunity costs (in terms of forgone rewards) incurred by sluggish or infrequent responding. If the average reward rate increases, the animal pursues the current goal with greater vigor, and if the reward rate decreases, vigor does as well.
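The trade-off in the vigor model can be illustrated with a minimal Python sketch. It assumes a simplified cost function in the spirit of Niv et al. (2007): an energetic cost that grows as latency shrinks, plus an opportunity cost equal to the average reward rate multiplied by the time taken. The functional form, the function names, and the parameter names `vigor_cost` and `avg_reward_rate` are assumptions made for illustration.

```python
import math


def cost_per_response(latency: float, vigor_cost: float,
                      avg_reward_rate: float) -> float:
    """Total cost of responding after `latency` time units.

    vigor_cost / latency       : energetic cost, higher for faster responses
    avg_reward_rate * latency  : opportunity cost of reward forgone while waiting
    """
    return vigor_cost / latency + avg_reward_rate * latency


def optimal_latency(vigor_cost: float, avg_reward_rate: float) -> float:
    """Latency minimizing cost_per_response.

    Setting the derivative -vigor_cost / latency**2 + avg_reward_rate to zero
    gives latency = sqrt(vigor_cost / avg_reward_rate).
    """
    return math.sqrt(vigor_cost / avg_reward_rate)
```

Under these assumptions, a higher average reward rate shortens the optimal latency, which corresponds to greater vigor, and a lower rate lengthens it.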

An analogy may be drawn between effort and vigor. Speed–accuracy trade-offs in perceptual decision-making (Bogacz, Brown, Moehlis, Holmes, & Cohen, 2006; Simen et al., 2009) suggest that, like physical behavior, cognitive speed is also optimized in task performance. Extending the analogy, the opportunity costs for cognitive effort might also reflect the average rate of experienced reward, indexed by striatal dopamine tone. Furthermore, the experienced reward could include not only external rewards, but also internal, self-generated “pseudorewards” (Botvinick, Niv, & Barto, 2009; Dietterich, 2000) that are registered following successful completion of subgoals in the pursuit of external reward.

Vigor, however, may differ from cognitive effort in key ways. Critically, it is not clear that a model of physical energy costs has an analogue in terms of cognitive energy costs, as we discussed above. Also, whereas current models of effort specify the goal to which the effort is directed, the average reward rate in vigor pertains to all experienced reward, and vigor thus generalizes to all ongoing behaviors (Niv et al., 2007). Finally, there is potential disagreement with respect to the impact of errors. Classic models of control hold that control intensity increases when errors are detected (Holroyd & Coles, 2002). In contrast, vigor should decrease when errors accumulate (because the errors imply a drop in average reward rate).

Another model that may provide inspiration for future theories on effort concerns the regulation of task engagement and the exploration–exploitation trade-off (Aston-Jones & Cohen, 2005). According to adaptive gain theory (AGT), the degree of task engagement (the speed and acuity of responses driven by norepinephrine-induced modulation of neuronal gain) is regulated by task utility (benefits minus costs), such that increasing utility yields greater engagement and decreasing utility yields distractibility and disengagement. Distractibility is considered adaptive, in the case of declining utility, because it frees up perceptual resources from exploitation of the current goal, toward exploration of other potential goals. AGT, unlike vigor, articulates a mechanism for response, and therefore goal specificity: gain modulation, time-locked with selected responses.

The AGT–effort link is intuitive and compelling on a number of dimensions. First, higher effort may correspond to higher engagement, whereas lower effort corresponds to distractibility, mind-wandering, and lack of focus. Second, norepinephrine, the chief neuromodulator of gain, is implicated in a host of cognitive-control functions including working memory, task switching, and response inhibition via impacts on neural dynamics in the prefrontal cortex (PFC; Robbins & Arnsten, 2009). Finally, the anterior cingulate cortex (ACC), which sends direct projections to the locus coeruleus by which it may drive norepinephrine release (Aston-Jones & Cohen, 2005), has been proposed to play a key role in the selection and maintenance of effortful actions (discussed below).

AGT may prove a rich source of hypotheses on opportunity-cost-based decision models and the neural mechanisms of cognitive effort. However, there is much that it does not address. For example, AGT is primarily concerned with the mechanisms regulating engagement rather than the determination of utility. As such, AGT does not specify what costs are included in the computation of utility or how they arise. Also, AGT is well-suited to address the regulation of ongoing task engagement, but not prospective decision-making or the estimation of future projected effort.

In summary, recent theories of cognitive effort emphasize the decision to expend effort and treat effort as a cost, and specifically as the opportunity cost of cognitive control. However, many questions remain. Does effort reflect the allocation of control resources? What is the exact nature of those resources? Relatedly, do certain control functions (e.g., task switching, maintenance, updating, inhibition, etc.) drive effort in particular? What variables contribute to the computation of opportunity costs? Also, what neural systems track those costs? Do similar or different systems support effort-based decision-making? As we describe next, these and many other questions may be best addressed by investigating decision-making processes and mechanisms directly.

Behavioral and neuroeconomic tools

Cognitive effort is a subjective, psychological phenomenon. It may covary with objective dimensions of task demands or incentive magnitude, but it cannot be described purely in these objective terms. Instead, it must be studied subjectively, either by self-report or as a psychophysical phenomenon: that is, in terms of decision-making. Free-choice decision-making emphasizes psychophysical intensity by maximizing the degree to which participants, rather than the task parameters, determine responses, and it may do so with greater reliability than self-reports. Moreover, decision-making about whether to initiate or maintain engagement with demanding tasks is, as we argued above, the most potentially valuable framing of cognitive effort. This framing emphasizes the volitional aspects unique to the notion of effort, rather than casting effort in terms that are merely redundant with existing constructs such as motivation, attention, or cognitive control. Furthermore, it facilitates investigation into unanswered questions related to how decisions about effort are made. More fundamental than questions of how are the questions of why: why certain tasks are effortful, or why they engender a bias against engagement. As we described above, recent theories on cognitive effort have attempted to address why by appealing to opportunity costs—a description in terms of cost–benefit decision-making.

Behavioral and neuroeconomic approaches offer a wealth of normative theory, experimental paradigms, and analytical formalisms that may be brought to bear on investigating decision-making about cognitive effort. The economic interpretation of behavior encompasses cost–benefit evaluation, preference formation, and ultimately decision-making.

Recent work has established that preferences with regard to effort are systematic, and therefore amenable to economic analysis (Kool & Botvinick, 2014; Kool et al., 2010). In a free-choice paradigm, participants were allowed to select blocks of trials that were identical except in the frequency of task-switching (i.e., higher or lower cognitive-control demand). Critically, most participants developed a bias against frequent task-switching, revealing that they preferred to avoid this cognitive-control operation (Kool et al., 2010). In a follow-up study, participants were given the chance to earn reward by selecting more-frequent task-switching. Their choice patterns revealed that cognitive labor is traded off with leisure as a normal, economic good (Kool & Botvinick, 2014). Similarly, another free-choice study offered participants the chance to earn more money by electing to complete blocks of trials using effortful, controlled responding, over relatively effortless, habitual responding, and the researchers found that binary choice bias was modulated by the monetary difference between the offers (Dixon & Christoff, 2012).

Establishing that preferences are systematic is a necessary first step, but much more can be done. Although binary choice bias can reveal the existence of preference, it does not reveal the strength of that preference, limiting inference about the factors that affect it, and ultimately what causes it. In contrast, the construction of preference functions offers more richly detailed, descriptive accounts of, for example, risky decision-making and intertemporal choice. These functions describe well-known violations of normative decision-making, as in asymmetric prospect theory (Kahneman & Tversky, 1979) and nonexponential discounting in intertemporal choice (Loewenstein & Prelec, 1992). They have also been used extensively in neuroeconomics to reveal both the computational and neural mechanisms of decision-making, informing psychology, systems neuroscience, and economic theory (for a review, see Loewenstein, Rick, & Cohen, 2008).

Preference functions describe choice dimensions in terms of a common currency (subjective value, utility, etc.), reflecting the assumption that all economic goods are exchangeable. Developing these functions requires accurate quantification of the exchange. Thus, the development of precise and reliable methods for eliciting subjective values, referred to as “mechanism design,” has been a chief concern of behavioral economists. Becker–DeGroot–Marschak (BDM) auctions, for example, were designed specifically to ensure “incentive compatibility,” or that the true valuation is revealed, absent strategic influences arising when individuals are asked to state values. In a BDM auction, a buyer is asked to state the maximum he or she would be willing to pay to attain a desirable outcome, considering that this outcome will be attained if and only if the bid is larger than a randomly generated number, thus incentivizing the buyer to reveal the true, maximum price point. In well-designed valuation procedures, such as BDM auctions, all of the relevant features of a decision are rendered to be quantifiable, explicit, and therefore transparent to both the experimenter and the decision-maker.
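The incentive-compatibility argument for BDM auctions can be illustrated with a minimal sketch. It assumes the standard payment rule, which the text above does not spell out: a winning buyer pays the randomly drawn price rather than the stated bid. The function names, the price grid, and the dollar values are hypothetical and chosen only for illustration.

```python
def expected_surplus(bid, true_value, max_price, n_prices=1001):
    """Average surplus over an evenly spaced grid of prices in [0, max_price].

    Assumed rule: the buyer obtains the outcome only if the bid exceeds the
    drawn price, and then pays the drawn price (not the bid).
    """
    prices = [max_price * i / (n_prices - 1) for i in range(n_prices)]
    gains = [(true_value - p) if bid > p else 0.0 for p in prices]
    return sum(gains) / n_prices


def best_bid(true_value, max_price, candidate_bids):
    """Return the candidate bid with the highest expected surplus."""
    return max(candidate_bids,
               key=lambda b: expected_surplus(b, true_value, max_price))


# Hypothetical buyer who privately values the outcome at $3.00.
true_value = 3.0
candidate_bids = [0.5 * i for i in range(11)]  # $0.00 to $5.00 in $0.50 steps
optimal = best_bid(true_value, 5.0, candidate_bids)
print(f"surplus-maximizing bid: ${optimal:.2f}")
```

Under these assumptions, bidding below the true value forfeits profitable purchases and bidding above it risks purchases at a loss, so the stated bid that maximizes expected surplus coincides with the private valuation.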

The cognitive effort discounting (COGED) paradigm

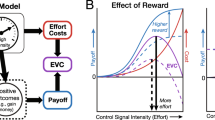

Recently, we adapted another widely used valuation procedure (referred to by economists as a “choice experiment”) to show that preferences regarding cognitive effort can be measured in terms of subjective values (Westbrook et al., 2013). In our paradigm, participants are familiarized with multiple levels of a demanding cognitive task (we used N = 1–6 in the N-back task), after which a series of paired offers are made to repeat either a more demanding level for more money or a less demanding level for less money. These offers are step-wise titrated (Fig. 1) until participants are indifferent between a baseline low-demand level (one-back) and each of the higher-demand levels. Indifference points are critical because they indicate a psychophysical equivalence between greater reward (e.g., dollars) and greater effort, thereby rendering effort in terms of an explicit metric.
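The step-wise titration can be sketched as a simple staircase. This is an illustration under stated assumptions, not the published procedure: the starting offer (half the large amount), the halving step size, the number of steps, and the deterministic simulated participant are all hypothetical.

```python
def titrate_indifference(prefers_harder, large_amount=2.0, n_steps=6):
    """Adjust the easy-task offer until it approaches indifference.

    prefers_harder(small_offer, large_amount) returns True when the
    participant picks the harder task for the larger amount.
    """
    small_offer = large_amount / 2  # assumed starting offer
    step = large_amount / 4         # assumed initial adjustment
    for _ in range(n_steps):
        if prefers_harder(small_offer, large_amount):
            small_offer += step     # easy offer too low to compete: raise it
        else:
            small_offer -= step     # easy offer too attractive: lower it
        step /= 2                   # halve the adjustment after every choice
    return small_offer


def simulated_participant(true_indifference):
    """Hypothetical noise-free chooser with a fixed indifference point."""
    def prefers_harder(small_offer, large_amount):
        return small_offer < true_indifference
    return prefers_harder


estimate = titrate_indifference(simulated_participant(1.40))
print(f"estimated indifference point: ${estimate:.2f}")
```

Because the adjustment halves after each choice, a handful of choices per load level is enough to bracket the indifference point to within a few cents in this noise-free case. Real choices are noisy, so an actual implementation would need to handle inconsistent responses.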

Cognitive effort discounting (COGED) refers to the observation that the subjective value (SV) of the larger offer is reduced when it is paired with more demanding tasks. The paradigm thus quantifies effort as a cost, or conversely, how willing a decision-maker is to engage in a more demanding task. For example, if a participant were indifferent between being paid $2 to perform the three-back and $1.40 to perform the one-back task, then the subjective cost of the harder relative to the easier task is $0.60, and the SV of the $2 reward for completing the three-back task is $1.40. By holding both the baseline low-demand task and the large reward amount constant, we can examine indifference points across load levels, normalized by the reward magnitude (e.g., $1.40/$2.00 = 0.70), and thus can define the SV of a reward for task engagement across a range of specific load levels (a preference function, as in Fig. 2). The ability to measure effort in terms of a cost holds great promise, enabling a direct investigation of, for example, opportunity cost models of effort. It also yields a novel measure of cognitive motivation that can be used to predict cognitive performance, or to characterize individuals according to how costly they find an effort.
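The arithmetic in the example above can be written out as a short sketch. The function names are hypothetical; the dollar amounts are the ones used in the text.

```python
def effort_cost(large_amount, indifference_amount):
    """Subjective cost of the harder task relative to the baseline task."""
    return large_amount - indifference_amount


def normalized_sv(large_amount, indifference_amount):
    """Indifference point as a proportion of the large reward (unitless)."""
    return indifference_amount / large_amount


# Indifferent between $2.00 for the three-back and $1.40 for the one-back.
cost = effort_cost(2.00, 1.40)
sv = normalized_sv(2.00, 1.40)
print(f"effort cost = ${cost:.2f}, normalized SV = {sv:.2f}")
```

Repeating the calculation at each load level, with the baseline task and large reward held constant, yields the points of the preference function.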

A number of critical findings support the content and predictive validity of the COGED as a novel measure of cognitive effort. First, indifference points varied as a monotonic function of working memory (N-back) load: Participants discounted rewards more with increasing demand (see Fig. 2). Given that all conditions were equated for duration and that payment was not contingent on performance, the most ready interpretation is that discounting reflects rising effort costs or, conversely, the falling SV of rewards obtained by greater effort. Beyond simply showing that participants prefer lower over higher demands, SV quantifies the degree of preference. Critically, preference was sensitive to objective cognitive load, a core determinant of state cognitive effort.

A second important finding relates to the subjectivity of subjective value: Sizeable individual differences in SV reflected trait cognitive motivation. Specifically, we found that SV correlated positively with need for cognition, a measure of the degree to which individuals engage with demanding activities in their daily life. This result supports both the hypothesis that individuals differ in trait cognitive motivation and the use of the COGED paradigm for measuring those differences. We also found that the same tasks were more costly for older than for younger adults (Fig. 2). Critically, this was true even when older and younger adults were matched not just on task features, but also on performance: Older adults dispreferred demanding tasks to a greater extent, even when they performed as well as younger adults. Given that performance matching is a common strategy in the cognitive aging literature, our work suggests that group differences in cognitive motivation may explain some of the differences in physiological arousal or performance that are commonly attributed to differences in processing capacity (Park & Reuter-Lorenz, 2009).

Benchmarking COGED against well-established delay discounting provided further validation for the novel paradigm. A common finding in the delay-discounting literature is the amount effect: Larger rewards are discounted proportionally less than smaller rewards (Green, Myerson, Oliveira, & Chang, 2013; Thaler, 1981). This same amount effect was obtained with COGED, suggesting that, as amounts grow larger, the psychological influence of reward becomes proportionally more important than the influence of the task demand on willingness to expend effort.

We also found a positive individual-difference correlation between COGED and delay discounting, such that those who find cognitive effort more costly are also more impulsive delay discounters, whereas those who find effort inexpensive vary widely in their impulsivity (Fig. 3). This correlation suggests that shared mechanisms underlie subjective effort and self-control in intertemporal choice (cf. Kool, McGuire, Wang, & Botvinick, 2013).

Benchmarking against delay discounting also provided extra evidence that the group difference in COGED did not reflect a simple wealth effect. Namely, although age differences in delay discounting were accounted for by self-reported income (replicating other reports; Green, Myerson, Lichtman, Rosen, & Fry, 1996), age differences in COGED remained, even when controlling for self-reported income.

The objective value of subjective value

The quantification of cognitive effort in terms of SV, using paradigms such as COGED, offers numerous conceptual and methodological advantages. Examples of each of these advantages and their relevance to cognitive effort-based decision-making follow. First, SV, unlike reward, provides a direct measure of effort costs. Thus, it avoids the pitfalls of equating reward with effort—an approach that is common in the nascent cognitive-effort literature. Second, as we discuss further below, a remarkable correspondence between SV and the neural dynamics during decision-making can be exploited to investigate the mechanisms of effort-based decision-making. Third, systematic analyses of SV functions may prove useful for dissociating overlapping constructs (e.g., effort vs. delay). Fourth, individual differences in discounting can predict such psychologically important variables as substance abuse and other forms of psychopathology. Fifth, SVs can be inferred from a decision-making phase that is separated from task engagement and passive evaluation, thus enabling investigation of the decision-making process in isolation.

Reward is not subjective value

Inference in decision-making research should be based on SV and not reward magnitude, yet the distinction is often overlooked. A common strategy is to manipulate cash reward and assume that effort expenditure is modulated in direct proportion (Croxson, Walton, O’Reilly, Behrens, & Rushworth, 2009; Krebs, Boehler, Roberts, Song, & Woldorff, 2012; Kurniawan et al., 2010; Pochon et al., 2002; Schmidt, Lebreton, Cléry-Melin, Daunizeau, & Pessiglione, 2012; Vassena et al., 2014). Meta-analyses, however, have shown that monetary incentives increase performance in only a fraction of laboratory studies (55%, by one estimate), and in others they either make no difference or make performance worse (Bonner, Hastie, Sprinkle, & Young, 2000; Camerer & Hogarth, 1999). Note that at least one fMRI study investigating the effects of reward magnitude on working memory (using the N-back task) showed that larger reward magnitude corresponded with greater prefrontal recruitment, but it failed to show any effects on task performance measures (Pochon et al., 2002). These results suggest that the relationship between reward and effort is complicated, at best.

How participants construe the value of incentives relative to the value of their participation may be part of the complication. In one study, participants’ performance improved with higher payment, but those who were offered no cash performed better still (Gneezy & Rustichini, 2000). The authors’ interpretation of this result was that payment for participation in a laboratory study is construed as an incomplete contract (each additional instruction fulfills part of the contract) and that pay schedules help participants determine the value of their effort (which will be low if payment is low). Evidence that decision-makers construe an implied contract with respect to cognitive effort, and cognitive control in particular, has come from a recent study showing that participants optimize the allocation of their time between cognitive labor and leisure, according to the structure of the pay schedule (Kool & Botvinick, 2014). If participants construe an implied contract considering the value of their effort in the context of incentives for performance, paradigms intended to investigate effort-based decision-making may instead generate data on social-fairness-based decision-making.

Furthermore, incentives may make a participant more willing to engage with a task, but not necessarily to expend effortful control. Consider that in one meta-analysis, the studies that least often exhibited monetary-incentive-related increases in performance used complex reasoning and problem-solving tasks, whereas the studies that most often showed incentive-related increases in performance used noncomplex vigilance and detection tasks (Bonner et al., 2000). Complex problem-solving is more likely than detection tasks to demand effortful cognitive control. The fact that incentive effects were stronger for less complex tasks suggests that typical incentive schemes have a higher likelihood of modulating effortless than effortful forms of engagement.

Effort may be an important decision variable, but only if a participant deems it so. Relatedly, incentives are a way for researchers to indicate what they think is important about a task, but do not determine what the participant thinks is important. Consider boredom. The assumption that demanding tasks are aversive is incorrect if participants are motivated more to counteract boredom than to avoid cognitive demand. The counterassumption, that demanding tasks may be appetitive rather than aversive, is the premise of the Need for Cognition Scale. Furthermore, the possibility of boredom suggests that the preference for effort may be not only trait- but also state-dependent. Rather than making potentially inaccurate assumptions about willingness to engage with a task, preferences for task engagement can be measured explicitly. Recently, we measured such preferences with a reverse COGED procedure, starting with equivalent offers for harder and easier tasks and subsequently allowing participants to discount in either direction. Of 85 participants, only two accepted a smaller offer for a more demanding N-back level, suggesting that the N-back is almost universally costly and that chiefly reward, not boredom, drives participants to select higher N-back loads.

Behavioral economic choice experiments—for example, offering a harder task for more reward versus an easier task for less reward—make clear to participants that their choice should depend on their own preferences. They also make clear the dimensions of choice on which the preferences should be based (e.g., difficulty and reward, and not unseen features of an implied contract). When SV is inferred from choice experiments, participants’ preferences are made clear. The factors underlying incentive effects, by contrast, are not.

Another benefit of SV over reward is that SV can be used to investigate choice difficulty. Some choices are harder to make than others; harder choices can reveal subtle decision-making biases (Dshemuchadse, Scherbaum, & Goschke, 2013; Krajbich, Armel, & Rangel, 2010) and elucidate the potential neural mechanisms that are most important when decisions are difficult (Basten, Biele, Heekeren, & Fiebach, 2010; Papale, Stott, Powell, Regier, & Redish, 2012; Pine et al., 2009). However, choice difficulty (proximity to indifference) cannot be inferred from reward alone. With knowledge of an individual’s private SV function, difficulty can be investigated by using options tailored to isolate and manipulate the reward magnitude, control demands, and choice difficulty.
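As a minimal sketch of how offers might be tailored once an individual's indifference point is known, choice difficulty can be treated as the distance between the easy-task offer and that indifference point. The function names, the distance metric, and the example distances are assumptions made for illustration only.

```python
def choice_difficulty(easy_offer, indifference_amount):
    """Distance from indifference; values near 0 mark the hardest choices."""
    return abs(easy_offer - indifference_amount)


def tailored_offers(indifference_amount, distances):
    """Easy-task offers placed on both sides of one person's indifference point."""
    offers = []
    for d in distances:
        offers.extend([indifference_amount - d, indifference_amount + d])
    # Keep only positive dollar amounts, ordered from smallest to largest.
    return sorted(o for o in offers if o > 0)


# Hypothetical participant whose indifference point for a given load is $1.40.
offers = tailored_offers(1.40, distances=[0.05, 0.40])
for offer in offers:
    print(f"${offer:.2f} -> distance {choice_difficulty(offer, 1.40):.2f}")
```

The same nominal offer can therefore be an easy choice for one participant and a hard one for another, which is why difficulty has to be defined relative to each individual's SV function.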

SV can be used to investigate the neural mechanisms of decision-making

As numerous authors have noted, SV is not only an “as if” abstraction describing choice patterns, but actually appears to be computed by the brain “in fact,” in the process of decision-making (Kable & Glimcher, 2009; Montague, King-Casas, & Cohen, 2006; Padoa-Schioppa, 2011). Neuroeconomists have probed the encoding of SV, and the choice dimensions impacting SV, revealing a great deal about the computations and mechanisms of decision-making. fMRI studies of intertemporal choice, for example, have revealed a “ventral valuation network” that encodes SV, including ventromedial prefrontal and orbitofrontal cortex and ventral striatum (Kable & Glimcher, 2007, 2010; McClure, Laibson, Loewenstein, & Cohen, 2004; Peters & Buchel, 2010a; Pine et al., 2009). Moreover, this work has investigated the role of SV representations—for example, adjudicating between a model in which a unified representation of SV drives choice, or instead one in which dual competing systems lead to characteristically patient or impulsive choice (Kable & Glimcher, 2007, 2010; McClure et al., 2004). Additionally, the neuroeconomic approach has been used to demonstrate that (a) reward magnitudes are transformed into SV on the basis of declining marginal utility (Pine et al., 2009) and (b) the SV of delayed rewards decreases hyperbolically, relative to the most immediately available option (Kable & Glimcher, 2010). Recent studies examining the encoding of SV in the BOLD signal have also begun to reveal the mechanisms involved in down-regulating impulsivity. Specifically, they have pointed to mechanisms by which episodic future thought (Peters & Buchel, 2010a) and a countermanding representation of anticipatory utility (Jimura, Chushak, & Braver, 2013) might reduce impulsivity.

SV not only correlates with fMRI data but is also encoded at much finer spatial and temporal resolutions. For example, single neurons in the monkey orbitofrontal cortex have been categorized as encoding either choice identity, the economic value of options in a menu, or the value of selected options in two-alternative forced choice tasks (Padoa-Schioppa & Assad, 2006). Also, model-based magnetoencephalography has revealed high-frequency parietal and prefrontal dynamics corresponding with a cortical attractor network model, with two competing pools driven by the SVs of either of two risky options (Hunt et al., 2012). All of these studies relied on estimates of SV that were revealed by behavioral economic methods.

We note, however, an emerging debate about how, and even whether, the brain actually calculates SV to make decisions (Slovic, 1995; Tsetsos, Chater, & Usher, 2012; Vlaev, Chater, Stewart, & Brown, 2011). The SV framework assumes that first SVs are computed by a weighted sum of choice features, and then the SVs of all options are compared. A counterproposal suggests instead that decisions are based on the relative values of the options (or of their features) and represent the comparative value differences (Vlaev et al., 2011). According to another proposal, preference formation can be described by a dynamic, leaky accumulator, driven by feature salience, in which salience is determined by recency and a moment-to-moment preference rank among the options (Tsetsos et al., 2012). This dynamic form of decision-making can, under the right conditions, predict nonnormative choice patterns, including preference reversals and framing biases. If decision-making is best described as a dynamic process of this kind, cognitive effort-based decision-making will also be subject to unstable preferences. Consider the apparent inconsistency between prospective judgments of effort, as when trying to decide whether to tackle a difficult writing project, and the subsequent experience of effort, as when deciding to stay engaged with the writing project.

Nevertheless, even if decision-making turns out not to involve separable stages of stable SV computation and comparison, the SV-based research strategy is still useful. SV reflects the weighted combination of decision features, and SV representations can still be used to identify the brain regions implicated in processing those features. However, one consequence of the alternative models is that they would necessitate weaker claims about the causal role of SV computations in decision-making. Rather than organizing a model of effort-based decision-making around a final SV comparator with concomitant inputs and outputs, a model may be organized around a thresholded accumulator or comparator of the features driving opportunity costs. In that case, an SV-based research strategy could identify relevant neural systems, but SV correlates should not be interpreted as computations of SV per se.

SV can be used to dissociate choice dimensions

Choice dimensions may overlap conceptually, but systematic analysis of SV functions over those dimensions can dissociate them. Consider, for example, delay and risk. Decision-makers discount both delayed and risky rewards (Green & Myerson, 2004; Prelec & Loewenstein, 1991; Rachlin, Brown, & Cross, 2000). Some have argued that delay discounting simply reflects the fact that temporal distance from a reward is associated with increased risk that the reward will not be obtained, and delay discounting is thus another form of risk discounting. Alternatively, low-probability rewards may simply imply lower frequencies of delivery (over repeated instances), and thus greater delay (Rachlin et al., 2000). Investigators have found evidence that these possibilities are dissociable, however, by examining the impact of reward magnitude manipulations on SV functions: Namely, larger delayed rewards are discounted less, whereas larger probabilistic rewards are discounted more (Green & Myerson, 2004). Also, although the same two-parameter hyperboloid function describes both delay and probabilistic discounting, magnitude independently affects one parameter in delay and the other in probability (Green et al., 2013).
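The two-parameter hyperboloid referred to above is commonly written as V = A / (1 + kD)^s for delay D, and as V = A / (1 + hΘ)^s for probabilistic rewards, where Θ = (1 − p)/p is the odds against receipt (Green & Myerson, 2004). The sketch below implements that form; the parameter values are hypothetical and serve only to show the shape of the functions.

```python
def hyperboloid(amount, x, rate, exponent):
    """Two-parameter hyperboloid: amount / (1 + rate * x) ** exponent."""
    return amount / (1.0 + rate * x) ** exponent


def delay_discounted_value(amount, delay, k, s):
    """SV of a reward received after a delay (k: rate, s: scaling exponent)."""
    return hyperboloid(amount, delay, k, s)


def odds_against(p):
    """Odds against receiving a reward that is delivered with probability p."""
    return (1.0 - p) / p


def probability_discounted_value(amount, p, h, s):
    """SV of a reward received with probability p (h: rate, s: exponent)."""
    return hyperboloid(amount, odds_against(p), h, s)


# Hypothetical parameter values, for illustration only.
for delay in (0, 30, 180):
    print(delay, round(delay_discounted_value(100.0, delay, k=0.05, s=0.8), 2))
for p in (1.0, 0.5, 0.1):
    print(p, round(probability_discounted_value(100.0, p, h=1.0, s=0.8), 2))
```

Fitting the rate and exponent separately at each reward magnitude is what allows the opposite amount effects for delay and probability to be expressed as changes in different parameters.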

The issue of construct dissociability is critical for cognitive effort. Rewards that require greater effort to obtain also typically take longer to obtain, or are less likely, or both. The close relationship between difficulty and effort suggests that effort-based decision-making may be reduced to delay- or risk-based decision-making. But, just as systematic investigation of SV functions has been used to dissociate delay and probability, an SV formulation can also provide a means by which to test the dissociability of effort from these related dimensions.

Considerable behavioral economic evidence already indicates that effort, at least in the case of physical effort, is dissociable from delay and risk (Burke, Brunger, Kahnt, Park, & Tobler, 2013; Denk et al., 2004; Floresco, Tse, & Ghods-Sharifi, 2007; Prévost, Pessiglione, Météreau, Cléry-Melin, & Dreher, 2010; Rudebeck, Walton, Smyth, Bannerman, & Rushworth, 2006). One study dissociated physical effort by independently manipulating the dopaminergic and glutamatergic systems (Floresco et al., 2007). A dopamine antagonist caused a rat to reduce the vigor of lever-pressing for food, even when the delay to reward was held constant, indicating a selective impact on effort discounting. Conversely, an NMDA receptor antagonist increased delay discounting, but had no effect on effort discounting when delays were held constant, indicating a selective impact on delay discounting. Furthermore, support for a dissociation of physical effort and risk in humans has come from fMRI data showing representations of cost related to risk in the anterior insula, and representations of costs related to physical effort in the supplementary motor area (Burke et al., 2013).

Of course, whether these dissociations from physical effort apply to cognitive effort remains to be seen. In attempts to dissociate cognitive effort-based decision-making from delay- or risk-based decision-making, recent studies have introduced methodological and analytical controls for time-on-task and likelihood of reward, and still have observed both demand avoidance (Kool et al., 2010) and cognitive effort discounting (Westbrook et al., 2013). For example, in the COGED paradigm, delay is dissociated from effort, because the task duration is fixed. Participants spend just as long on the two-back as on the three-back task, yet they consistently discount rewards for the three-back more steeply, indicating that effort costs are dissociable from delay. Similarly, risk is also fixed, because participants are instructed that their pay is not contingent on their performance (instead, it depends on their willingness to “maintain their effort”). Thus, effort-based discounting is not reducible to probability-based discounting. Yet more work needs to be done to investigate the dissociability of effort from risk-based or intertemporal choice. For example, if, as described above, cognitive effort stems from opportunity costs associated with the recruitment of cognitive-control resources, factors that make current goals less likely (i.e., more risky) or make them take longer to obtain (i.e., delayed) should influence opportunity costs and subjective effort.

Individual differences in SV as a clinical predictor

Longstanding philosophical concerns argue against interindividual utility comparisons: Simply put, there is no meaningful way to assess whether one person values a commodity as much as another. Two sellers may agree on a dollar price, but this does not guarantee equivalent valuations, since there is no way to ensure that they value dollars equally. Philosophical concerns aside, interindividual SV comparisons are demonstrably useful. Individual differences in delay and probability discounting have predicted substance abuse, obesity, and problem gambling (Alessi & Petry, 2003; Bickel et al., 2007; Holt, Green, & Myerson, 2003; Kollins, 2003; Madden, Petry, & Johnson, 2009; Weller, Cook, Avsar, & Cox, 2008), for example. Physical effort-based decision-making correlates with anhedonia and depression, and also with schizophrenia symptom severity, indicating that it may serve as an important diagnostic criterion (Cléry-Melin et al., 2011; Gold et al., 2013; Treadway, Bossaller, et al., 2012).

Cognitive-effort discounting may also have predictive utility. As we mentioned above, individual differences in effort discounting correlate with delay discounting (Westbrook et al., 2013), suggesting that effort discounting may predict multiple clinical outcomes. Also, if cognitive effort is more subjectively costly in depression and schizophrenia, it is reasonable to anticipate that the SV of effort can predict symptom severity in these disorders. Although self-report measures of trait cognitive motivation exist (e.g., the Need for Cognition Scale; Cacioppo & Petty, 1982), our prior work provides initial evidence that discounting is more sensitive to group differences (in cognitive aging) than are such self-report measures (Westbrook et al., 2013), potentially indicating the superior reliability of this behavioral index.

Isolating decision-making from task engagement and passive evaluation

Behavioral economic valuation procedures isolate decision-making from task experience. This is critical, because decision-related processes may differ from those associated with the processing of valenced stimuli. For example, prospective decision-making about a delayed reward and postdecision waiting for a chosen delayed reward have distinct and overlapping spatial profiles and temporal dynamics (Jimura et al., 2013). Valuation procedures engage participants in active decision-making, providing an excellent opportunity to investigate active cognitive effort-based decision-making apart from passive evaluation.

During task engagement, effort is typically inferred from task performance or from autonomic arousal. Yet both performance and arousal are indirect, and moreover, confounded indices of effort. Either may be jointly determined by numerous factors, including capacity, motivation, difficulty, and noneffortful forms of engagement, making inferences about effort indirect, at best. A dynamic interplay between these factors complicates matters further. Consider that false feedback of failure can make tasks seem more effortful, diminishing motivation, and driving actual performance lower (Venables & Fairclough, 2009). Such interactions complicate inferences about effort-based decision-making during ongoing performance and, further still, about the link with prospective effort-based decision-making.

It is also unclear how physiological and performance dynamics arising during task engagement relate to effort-based decision-making. Does increasing arousal reflect an input to or a consequence of the decision to expend effort, for example? Consider a study measuring effort in terms of systolic blood pressure (SBP) reactivity (Hess & Ennis, 2011). In the two-phase study, younger adults with higher SBP during a prior cognitive task elected to solve more math problems when given an unstructured period to solve as many problems as desired. The interpretation—that higher SBP reflects increased willingness to expend effort—suggests that SBP reflects the outcome of an effort-based decision. On the other hand, older adults with the highest SBP elected to solve fewer problems than other older adults. The interpretation there was that, for these older adults, the cost of effort was too high. Thus, SBP was also interpreted as tracking an input to, rather than an output from, a decision to expend effort. Because valuation procedures involve making decisions prior to engagement, one can safely infer that SV represents inputs to a prospective engagement decision.

Valuation procedures can also be structured to isolate such specific decision-related processes as passive stimulus evaluation, active selection, or outcome evaluation. This is critical, given the existence of behaviorally and neurally dissociable systems. In a notable example, valuation procedures have been used in conjunction with single-cell recordings in monkeys to dissociate a few specific forms of value representations in the orbitofrontal cortex: an offer value (encoding the value of one decision alternative), a chosen value (encoding the value of the alternative that the monkey chooses), and taste (encoding choice identity; Padoa-Schioppa & Assad, 2006). Note that different forms of information are encoded at different times with respect to the moment of decision.

Neuroeconomists have further dissociated decision values (which may include effort costs, where relevant) from outcome values (which do not include effort costs). Whereas fMRI evidence implicates medial and lateral orbitofrontal cortex in the representation of both decision values and outcome values, the ventral striatum appears to encode decision, but not outcome, values (Peters & Buchel, 2010b). Whether these distinctions hold for cognitive effort is unknown. One study on cognitive effort has revealed ventral striatal value representations appearing to reflect cognitive effort discounting, at the time when reward cues were delivered—after the period of effort expenditure (Botvinick, Huffstetler, & McGuire, 2009; cf. Schmidt et al., 2012; Vassena et al., 2014). This suggests either that outcome values are sensitive to cognitive effort or that they can obtain in the ventral striatum, in contradiction to other neuroeconomic studies. At present, no studies have examined cognitive effort-based decision-making using valuation procedures, so it is currently unknown how and where cognitive-effort-sensitive decision values are represented.

Decision-making has also been dissociated into three separate systems with different neural substrates and behavioral correlates: a Pavlovian system selecting from a restricted range of behaviors, based on automatic stimulus–response associations (often innate responses to natural stimuli); a habitual system selecting among an arbitrary set of behaviors learned through repeated experiences; and a goal-directed system selecting behaviors on the basis of action–outcome associations (reviewed in Rangel, Camerer, & Montague, 2008). These decision-making systems are not only behaviorally, but also neurally, dissociable, with learning for Pavlovian responses implicating the basolateral amygdala, orbitofrontal cortex, and ventral striatum; habitual responding engaging corticostriatal loops routed through the dorsolateral striatum; and goal-directed responding appearing to more selectively engage the dorsomedial striatum. These dissociations may be relevant to cognitive effort-based decision-making in at least two ways. First, it is likely that decisions about cognitive effort, especially regarding nonoverlearned tasks, will be mediated by the goal-directed system. Action–outcome associations, for example, are used to regulate the intensity of cognitive control according to the expected-value-of-control model (Shenhav et al., 2013). Second, the balance of goal-directed and habitual responding may depend on an individual’s tolerance for the relatively higher computational cost of goal-directed responding. Thus, the effort-based SV may predict reliance on goal-directed versus habitual decision-making.

In summary, examining cognitive effort-based decision-making in the context of a valuation procedure not only yields a reliable measure of effort (SV), but also provides the opportunity to investigate the decision to expend effort in isolation. This creates an opportunity to draw unconfounded inferences about decision inputs versus outputs and to emphasize active decision-making mechanisms. Systematic manipulations of choice dimensions will help resolve representations of outcome values versus decision values. This will also elucidate the interplay between effort-based decision-making and goal-directed versus habitual and Pavlovian systems.

Cognitive effort-based decision-making: An integrated research proposal

In the following section, we propose future work that could investigate the principles and neural mechanisms of cognitive effort-based decision-making. The common approach involves using behavioral economic methods to measure the SVs of rewards obtained by engaging in a demanding cognitive task, and taking this measure to represent the cost of cognitive effort, or, conversely, the willingness to engage with the task.
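As a minimal sketch of how such a measure might be obtained, the following Python example titrates the offer for a low-demand task until a simulated chooser is indifferent between it and a fixed offer for a high-demand task. The function names, the halving step rule, the offer amounts, and the simulated chooser are all illustrative assumptions rather than a description of any published procedure.

```python
def titrate_indifference(chooses_hard, hard_amount=2.0, n_steps=6):
    """Adjust the easy-task offer until choices bracket an indifference point.

    chooses_hard(easy_offer, hard_amount) -> bool is a hypothetical choice
    function standing in for a participant's response on each trial.
    """
    easy_offer = hard_amount / 2.0   # start midway (assumed starting point)
    step = hard_amount / 4.0         # assumed initial adjustment size
    for _ in range(n_steps):
        if chooses_hard(easy_offer, hard_amount):
            easy_offer += step       # hard task preferred: sweeten the easy offer
        else:
            easy_offer -= step       # easy task preferred: reduce the easy offer
        step /= 2.0                  # halve the adjustment after every choice
    return easy_offer


def subjective_value(indifference_offer, hard_amount):
    """SV of the hard-task reward, as a proportion of its nominal amount."""
    return indifference_offer / hard_amount


def make_simulated_chooser(true_sv):
    """Hypothetical chooser who picks the hard task when its discounted value is larger."""
    def chooses_hard(easy_offer, hard_amount):
        return true_sv * hard_amount > easy_offer
    return chooses_hard


chooser = make_simulated_chooser(true_sv=0.7)
indifference = titrate_indifference(chooser)
sv = subjective_value(indifference, hard_amount=2.0)
effort_cost = 1.0 - sv               # proportion of reward forgone to avoid effort
print(f"estimated SV = {sv:.3f}, implied effort cost = {effort_cost:.3f}")
```

Under these assumptions, a lower SV corresponds to a higher subjective cost of the demanding task, and the precision of the estimate is set by the number of titration steps.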

Open questions and research strategies

Why is cognitive effort aversive?

The lack of global metabolic constraints suggests that other factors justify the apparently aversive qualities of cognitive effort. Recent proposals posit that phenomenal effort reflects opportunity costs. Full development of a formal opportunity cost model will require explicit information on the subjective costs and benefits of goal pursuit—precisely what behavioral economic paradigms yield. SV data could thus elucidate the variables that determine opportunity costs. According to one recent proposal, opportunity costs reflect the “value of the next-best use” of cognitive resources (Kurzban et al., 2013). Evidence for such a proposal could be provided by investigating whether manipulating the value of the next-best option influences the subjective effort associated with a given task. For example, one could test whether presenting incidental cues about alternative rewarding activities (e.g., a ringtone indicating text message delivery) that signal opportunity costs versus equally distracting, but value-neutral, cues during performance of a demanding task makes the task more subjectively effortful. The test would be whether the SV of the task measured in a subsequent valuation phase was lower for participants receiving the opportunity cost cues, indicating that engagement was more costly for them.
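One way the proposed comparison might be analyzed is sketched below: a one-sided permutation test of whether SVs are lower in the group that received opportunity cost cues than in the group that received value-neutral cues. The SV values are invented placeholders, and the choice of a permutation test, the function name, and the number of permutations are assumptions made only for illustration.

```python
import random
from statistics import mean


def permutation_test(cue_svs, neutral_svs, n_perm=2000, seed=0):
    """One-sided test that mean SV is lower under opportunity cost cues.

    Returns the observed mean difference (cue minus neutral) and the
    proportion of label shuffles yielding a difference at least as negative.
    """
    rng = random.Random(seed)
    observed = mean(cue_svs) - mean(neutral_svs)
    pooled = list(cue_svs) + list(neutral_svs)
    n_cue = len(cue_svs)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)                       # reassign group labels at random
        diff = mean(pooled[:n_cue]) - mean(pooled[n_cue:])
        if diff <= observed + 1e-12:              # tolerance for float rounding
            hits += 1
    p_value = (hits + 1) / (n_perm + 1)           # add-one correction avoids p = 0
    return observed, p_value


# Hypothetical SVs (proportion of the nominal reward) from a valuation phase.
cue_group = [0.40, 0.45, 0.50, 0.42, 0.48, 0.44]
neutral_group = [0.70, 0.65, 0.72, 0.68, 0.75, 0.66]
observed_diff, p = permutation_test(cue_group, neutral_group)
print(f"mean SV difference = {observed_diff:.3f}, one-sided p = {p:.4f}")
```

A reliably negative difference would be consistent with the opportunity cost account, provided the two kinds of cues are matched for distraction as described above.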

Another proposal is that phenomenal effort reflects the drive for continued exploitation versus exploration, and that this may relate to experienced reward. One could thus test whether the average rate of the incidental rewards experienced by a participant affects his or her willingness to expend effort. As we have described above, the average reward rate associated with a task context has been proposed to regulate vigor in relation to physical effort (Niv et al., 2007). Pharmacological manipulations could also be used to test whether, for example, dopamine tone, proposed to regulate vigor, also affects the willingness to expend effort (Niv, Joel, & Dayan, 2006; Phillips, Walton, & Jhou, 2007).

Another key assumption is that subjective effort is linked to demands for working memory and cognitive control, which are capacity-limited. Thus, one could test whether SV is impacted by the difficulty of tasks that do not require cognitive control (e.g., “data-limited” tasks). Another test might be whether manipulations to diminish control capacity—for instance, working memory loads unrelated to goal pursuit, or lesions to the neural systems supporting cognitive control—yield systematic influences over effort-based decision-making.

Relatedly, hypotheses explaining the putative link between glucose depletion and self-control could also be tested. If blood glucose constitutes a necessary fuel for self-control, then glucose levels (and prior depletion) should determine performance levels in a given self-control task, potentially even independently of the SV associated with it (estimated from a valuation phase).

Alternatively, volitional factors may mediate the relationship between blood glucose and self-control, such that the glucose–self-control relationship could be decoupled. In particular, a motivational account predicts that decrements in self-control task performance following a depletion phase would be directly mediated by associated reductions in the SV for such tasks. Such a motivational account also predicts that a manipulation of incentives (e.g., amount effects) could alter both the SV of a prospective self-control task (following a depletion phase) and performance of that task, even without an associated influence on blood glucose levels. Likewise, the motivational account, but not the depletion account, suggests that it may be possible to manipulate blood glucose without altering willingness to engage (i.e., SV levels). In short, tests of these two accounts should examine whether blood glucose or SV is a better predictor of performance of self-control-demanding tasks.
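The comparison of predictors could be sketched, in its simplest form, as a comparison of the variance in task performance explained by each variable. The example below uses squared Pearson correlations as a stand-in; a full test of the motivational account would more plausibly use a formal mediation analysis. All data values and variable names are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def variance_explained(predictor, outcome):
    """Proportion of outcome variance explained by a single linear predictor."""
    return pearson_r(predictor, outcome) ** 2


# Hypothetical per-participant data following a depletion phase.
glucose = [5.1, 4.8, 5.5, 5.0, 4.6, 5.3]          # blood glucose, mmol/L (invented)
sv = [0.80, 0.55, 0.60, 0.90, 0.40, 0.70]         # SV from a valuation phase (invented)
accuracy = [0.85, 0.70, 0.72, 0.91, 0.62, 0.78]   # self-control task accuracy (invented)

r2_glucose = variance_explained(glucose, accuracy)
r2_sv = variance_explained(sv, accuracy)
print(f"R^2 glucose = {r2_glucose:.3f}, R^2 SV = {r2_sv:.3f}")
```

In these invented data SV is the better predictor, which is the pattern the motivational account would anticipate; the depletion account would anticipate the reverse.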

Valuation procedures can additionally be used to measure the influence of the many factors that are purported to affect subjective effort beyond simple demands for cognitive control, including sleep deprivation, fatigue, perceived capacity, real capacity, mood, and so forth. Evidence that manipulating any of these factors affected the SV of the rewards for task engagement would demonstrate that they contribute to subjective cognitive effort.

For what behaviors is sensitivity to cognitive effort an explanatory trait variable?

Valuation procedures could be used to test whether cognitive effort impacts outcomes across the numerous domains putatively mediated by effort-based decisions. For example, valuation procedures could be used to test whether individual differences in cognitive effort sensitivity predict reliance on model-based versus model-free control of behavior. Likewise, they may determine an individual’s reliance on proactive versus reactive control strategies, the selection of complex decision-making strategies in multi-attribute decision-making, or the selection of effortful encoding strategies during tasks requiring long-term memory. In any of these domains, valuation procedures can enable tests of whether (a) individual differences in participants’ sensitivity to cognitive effort predict the selection of effortful task strategies, or (b) manipulations of state effort costs, via manipulations of task demands or motivation, can influence the selection of effortful strategies.

What peripheral physiological variables track effort?

To examine claims about peripheral physiological markers of cognitive effort, valuation procedures could be used to test whether physiological dynamics correlate with the SVs of rewards for effort across different kinds of tasks. For example, it would be useful to know whether pupil dilation, a historically important index of cognitive effort (Beatty & Lucero-Wagoner, 2000; Kahneman, 1973; Wierda, van Rijn, Taatgen, & Martens, 2012), tracks the SVs of cognitive effort estimated across different levels of control demands (e.g., N-back load in the COGED). Other markers of sympathetic arousal previously associated with cognitive effort include skin conductance response (Naccache et al., 2005; Venables & Fairclough, 2009), peripheral vasoconstriction (Iani et al., 2004), decreased heart rate variability (Critchley, 2003; Fairclough & Mulder, 2011), and increased systolic blood pressure (Critchley, 2003; Gendolla et al., 2012; Hess & Ennis, 2011; Wright, 1996). Systematic evaluation of the relationship between SVs and these markers could also clarify whether they should be thought of as inputs to (perhaps driving subjective costs) or outputs from a decision to expend cognitive effort.

Physiological arousal may diverge from effort in key ways, however, so it also would be important to delineate instances of when indices of arousal track effort and when they do not. The dynamics of pupil dilation, in particular, have recently been linked to a number of dissociable processes that may or may not influence subjective effort, including the regulation of the exploration–exploitation trade-off via neuronal gain modulation, and the processing of multiple forms of uncertainty (Aston-Jones & Cohen, 2005; Nassar et al., 2012). Testing for correlations of pupil dilation and the SVs of rewards for effort will determine the extent to which dilation indexes effort, and can also yield insights on the extent to which the cognitive processes tracked by pupil dilation may also regulate effort.

What neural systems are involved in effort-based decision-making?

Valuation procedures provide an ideal context in which to investigate neural decision-making processes. The encoding of SV has been frequently examined during physical effort-based decision-making (Croxson et al., 2009; Floresco & Ghods-Sharifi, 2006; Hillman & Bilkey, 2010; Kennerley, Behrens, & Wallis, 2011; Kennerley, Dahmubed, Lara, & Wallis, 2009; Kennerley, Walton, Behrens, Buckley, & Rushworth, 2006; Kurniawan et al., 2010; Pasquereau & Turner, 2013; Prévost et al., 2010; Rudebeck et al., 2006; Skvortsova, Palminteri, & Pessiglione, 2014; Walton, Bannerman, Alterescu, & Rushworth, 2003; Walton, Bannerman, & Rushworth, 2002; Walton, Croxson, Rushworth, & Bannerman, 2005; Walton et al., 2009). Whether or not the neural systems underlying decisions about physical effort are used to decide about cognitive effort is an open question (addressed in the next section). In any case, the physical-effort literature offers a theory and methodology for investigating cognitive effort.

Numerous candidate regions have been implicated in effort-based decision-making. Chief among these is the ACC. The ACC has been proposed to promote the selection and maintenance of effortful sequences of goal-directed behaviors by representing action–outcome associations (Holroyd & Yeung, 2012). Accordingly, the ACC has recently been proposed to be the nexus of hierarchical reinforcement learning as to the value of a temporally extended, effortful sequence of actions (“options”) that can supersede the value of individually costly actions at a lower level (Holroyd & McClure, 2015). The expected-value-of-control proposal holds that the ACC should encode something like the SV, taking into account reward value and the cost of cognitive control to regulate control intensity (Shenhav et al., 2013). If, as claimed, the ACC is responsible for overcoming a bias against effort, ACC activity should increase when the demands for cognitive effort are particularly high. Alternatively, ACC activity may reflect the difficulty of decisions about effort, so that ACC responds most vigorously when the SVs of options that vary in reward magnitude and effort demands are closest (cf. Pine et al., 2009, for a choice difficulty effect in intertemporal choice). Careful investigation of choice difficulty effects has also implicated the intraparietal sulcus (Basten et al., 2010).
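The two accounts of ACC activity imply different trial-wise predictors, which could be constructed along the following lines. This is only a sketch: the dictionary keys, the assumption that SVs are normalized to the range 0 to 1, and the definition of difficulty as one minus the absolute SV difference are illustrative choices, not an established model.

```python
def candidate_regressors(trials):
    """Build one trial-wise predictor per account of ACC activity.

    Each trial is a dict with hypothetical keys:
      'sv_easy' - SV of the low-demand option (0 to 1)
      'sv_hard' - SV of the high-demand option (0 to 1)
      'load'    - cognitive demand of the high-demand option
    """
    # Effort-demand account: activity scales with the demand on offer.
    demand = [t["load"] for t in trials]
    # Choice-difficulty account: activity peaks when the options' SVs are closest.
    difficulty = [1.0 - abs(t["sv_easy"] - t["sv_hard"]) for t in trials]
    return demand, difficulty


# Hypothetical trials from a valuation procedure.
trials = [
    {"sv_easy": 0.9, "sv_hard": 0.4, "load": 3},   # high demand, easy decision
    {"sv_easy": 0.6, "sv_hard": 0.6, "load": 2},   # moderate demand, hardest decision
    {"sv_easy": 0.5, "sv_hard": 0.8, "load": 1},   # low demand, intermediate decision
]
demand, difficulty = candidate_regressors(trials)
print("demand:", demand)
print("difficulty:", [round(d, 2) for d in difficulty])
```

Because the two predictors can be decorrelated by design, as in these invented trials, their relative fit to ACC activity could help adjudicate between the accounts.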

Physical-effort studies have also strongly implicated the nucleus accumbens (NAcc; Croxson et al., 2009; Kurniawan et al., 2010; Kurniawan, Guitart-Masip, Dayan, & Dolan, 2013; Schmidt et al., 2012) and NAcc dopamine (DA; reviewed in Salamone, Correa, Nunes, Randall, & Pardo, 2012). NAcc DA has been implicated in regulating response vigor (Niv et al., 2007; Phillips et al., 2007; Wardle, Treadway, Mayo, Zald, & De Wit, 2011), which may or may not relate to cognitive effort. Moreover, lesions to the NAcc (as well as to the ACC) lead to a bias against physical effort in valuation procedures (e.g., Ghods-Sharifi & Floresco, 2010), suggesting that NAcc and NAcc DA lesions may also alter the preference for cognitive effort. Finally, the NAcc has been identified as part of the core ventral valuation network, encoding the value of reward cues (reviewed in Montague et al., 2006). It is an open question whether the NAcc encodes decision values, integrating the costs of effort during decision-making. Evidence of SV encoding during effort-based decision-making would support the idea that valuation regions like the NAcc are sensitive not only to reward magnitude and task difficulty, but also to decision values.

This same logic could be applied to test the hypothesized role of other key valuation regions, including the orbitofrontal cortex and ventromedial PFC. It is unknown whether decision value signals in these regions integrate the subjective cost of effort. Both are part of the ventral valuation network, yet they appear to play subtly different roles in decision-making. For example, there is evidence that the two regions may be dissociated along the lines of sensitivity to internally (interoceptive) versus externally driven value signals (Bouret & Richmond, 2010). Hence, they may differentially encode subjective effort to the extent that subjective effort reflects interoceptive signals—as from, for example, autonomic arousal.