Abstract

Discriminating personally significant from nonsignificant sounds is of high behavioral relevance and appears to be performed effortlessly outside of the focus of attention. Although there is no doubt that we automatically monitor our auditory environment for unexpected, and hence potentially significant, events, the characteristics of detection mechanisms based on individual memory schemata have been far less explored. The experiments in the present study were designed to measure event-related potentials (ERPs) sensitive to the discrimination of personally significant and nonsignificant nonlinguistic sounds. Participants were presented with random sequences of acoustically variable sounds, one of which was associated with personal significance for each of the participants. In Experiment 1, each participant’s own mobile SMS ringtone served as his or her significant sound. In Experiment 2, a nonsignificant sound was instead trained to become personally significant to each participant over a period of one month. ERPs revealed differential processing of personally significant and nonsignificant sounds from about 200 ms after stimulus onset, even when the sounds were task-irrelevant. We propose the existence of a mechanism for the detection of significant sounds that does not rely on the detection of acoustic deviation. From a comparison of the results from our active- and passive-listening conditions, this discriminative process based on individual memory schemata seems to be obligatory, whereas the impact of individual memory schemata on further stages of auditory processing may require top-down guidance.

Similar content being viewed by others

Sounds of personal significance seem to have a privileged role in auditory processing. In everyday life, this becomes most obvious when certain auditory events—such as the utterance of one’s own name, a familiar music piece, or the ringing of one’s own mobile phone—are distracting, whereas other unfamiliar, yet acoustically similar, sounds can remain unnoticed. Empirical evidence for this often-reported phenomenon suggests that significant sounds capture attention preferentially (Moray, 1959; Treisman, 1960; Wood & Cowan, 1995) and elicit a specific pattern of physiological reactions (cf. the orienting response; Gati & Ben-Shakhar, 1990; Öhman, 1979). Although these results can be interpreted with regard to behavioral consequences, they also allow for limited inferences regarding the stage of processing at which the acoustic input is matched involuntarily with existing memory schemata (Bregman, 1990) that represent the personal significance of a particular sound pattern.

Within the present article, we will stress the concept of “personal significance” and aim to distinguish it from the concept of “familiarity.” Perceptual familiarity is necessary but not sufficient to characterize a stimulus as being personally significant. As compared with familiarity, significance is regarded as a result of additional qualitative components, such as emotional and behavioral relevance. Hence, a stimulus will be considered of significance when it has affective meaning or when the stimulus carries a sufficient amount of informational value and behavioral relevance. Thus, the significant stimulus is prone to provoke behavior following its occurrence (e.g., Sokolov, 1963). To a certain degree, a stimulus’s significance applies regardless of the situational context (Gronau, Cohen, & Ben-Shakhar, 2003), and the physiological sensitivity to react is higher for significant sounds, even when no reaction is required in the current situation (Elaad & Ben-Shakhar, 1989), as is measurable by an enhanced orienting reaction and slower habituation (Sokolov, 1963). In the present article, the concept of significance is used with additional emphasis on its subjectivity. It is generally assumed that some stimuli are of biologically inherited significance, and thus are universally perceived as being significant (e.g., a scream), but here we will focus on the processing of previously arbitrary sounds that have become personally significant within an individual’s learning history.

To study involuntary detection mechanisms for significant sounds, electrophysiological methods, in particular event-related potentials (ERPs), seem to provide a valuable tool, since they track brain processes with high temporal resolution and are partially independent of a given behavioral response. Most of the ERP studies, so far, that have aimed to explore involuntary access to long-term memory schemata have not addressed the access to personal significance. Usually they have been based on varied general and unspecific representations within the classic oddball paradigm (e.g., using meaningful familiar environmental sounds vs. unfamiliar meaningless sounds: cf. Escera, Yago, Corral, Corbera, & Nuñez, 2003; Frangos, Ritter, & Friedman, 2005; Jacobsen, Schröger, Winkler, & Horvàth, 2005; using familiar vs. unknown voices: Beauchemin et al., 2006; Holeckova, Fischer, Giard, Delpuech, & Morlet, 2006; or using familiar vs. unfamiliar speech stimuli: see Näätänen, 2001, for a review). These studies utilized the mismatch negativity (MMN) and the P3a component of the human auditory ERPs as indexes of involuntary or automatic sound processing (for a recent review, see Näätänen, Kujala, & Winkler, 2011). Although these results clearly reflect the involuntary access to existing long-term memory representations, the data are not conclusive with respect to the question of whether the particular memory access happens independently of the concurrent mismatch detection. The acoustic change from a sequence of repeating standard stimuli to an irregular sound—that is, one that mismatches the established regularity—may have triggered the switch of attention that gives rise to further evaluation (cf. Escera et al., 2003; see also Roye, Jacobsen, & Schröger, 2007). To exclude the possibility of a potential confound of memory access and mismatch detection, it seems necessary to apply paradigms that can allow us to dissociate these two processes, and hence to present stimuli with equal probabilities (cf. Müller-Gass et al., 2007; Ofek & Pratt, 2005; Perrin, Garcia-Larrea, Mauguiere, & Bastuji, 1999; Perrin et al., 2006).

Also, from an ecological point of view, the impact of schemata often becomes evident in dynamic acoustic environments where a purely acoustic pop-out effect cannot take place. One’s own name, for instance, seems to capture our attention even in a stream of unattended speech sounds, in which this particular sound does not acoustically mismatch the other sounds (same voice, same acoustic source). Electropyhsiological studies in which actual names were used as the experimental stimuli have reported sensitivity of the P3 response of the event-related potential to existing memory schemata also without a concomitant acoustic regularity violation (Perrin et al., 2005, 2006). Although this effect was interpreted as reflecting an inherent target detection mechanism based on semantic word categorization, nonlinguistic stimuli may undergo an even faster involuntary tagging mechanism based on memory representations of the specific acoustic patterns—that is, on existing schemata. This hypothesis seems feasible when we consider the results from studies that have demonstrated fast selective processing mechanisms for emotionally charged stimuli (for reviews, see, e.g., LeDoux, 2000; Vuilleumier & Huang, 2009). Primarily, studies from the visual domain have suggested that stimuli of positive or negative valence are discriminated from less arousing stimuli involuntarily and compete for processing resources in an, at least partially, automatic fashion (Junghöfer, Bradley, Elbert, & Lang, 2001; Müller, Andersen, & Keil, 2008; Schupp, Junghöfer, Weike, & Hamm, 2003a; Schupp et al., 2007). If emotional valence is seen as one aspect of a more general concept of significance (or relevance), then these fast selective processing mechanisms should also operate for personally significant stimuli of neutral valence that share motivational relevance with emotional stimuli (cf. D. Sander, Grafman, & Zalla, 2003, p. 311).

In the present ERP study, we aimed to address the fast selective processing of neutral, nonlinguistic sounds that have attained behavioral relevance. We used a paradigm in which the personal significance of sounds was varied equiprobably. Within an individual (quasi-experimental) training approach (Exp. 1), self-chosen personally significant sounds—that is, a participant’s short message (SMS) ringtone—and nonsignificant sounds—that is, the SMS ringtones of 11 other participants—served as the experimental stimuli (see also Roye et al., 2007). The same experimental paradigm was used in Experiment 2, except that an experimental training approach was applied in which participants learned a specific sound as being personally significant, in order to demonstrate the causal relationship between personal significance and the previous ERP effects.

Since we applied a yoked experimental design (a significant sound of one participant served as the nonsignificant sound for a paired associate, and vice versa), differences in ERPs could only be due to the difference in personal significance. Effects due to differences in the acoustic stimulus characteristics should cancel out over all participants. In an earlier study (Roye et al., 2007), personally significant sounds elicited a negativity relative to nonsignificant sounds. This negativity occurred around 150 to 250 ms after stimulus onset and had a posterior distribution. In the present study, we expected to find the same negativity. However, here we utilized a paradigm in which this brain signature of personal significance should be elicited without preceding detection of a physical deviation.

Furthermore, although this negativity related to significant sound detection was of main interest, effects in earlier and later time windows also seemed likely. For example, the N1 has been shown to differ with acoustic familiarity (e.g., Bosnyak, Eaton, & Roberts, 2004; Kirmse, Jacobsen, & Schröger, 2009), and hence may also be augmented for a personally significant sound. Moreover, the P3, which is known to reflect evaluative processes and is elicited in situations in which stimuli have to be categorized (Comerchero & Polich, 1999; Polich, 2007), may likewise be responsive to personal significance, at least when sounds are attended. Indeed, in our previous study, the P3 was enhanced for personally significant deviant sounds, as compared to the P3 elicited by nonsignificant deviant sounds (Roye et al., 2007). We expected to find a similar effect on the P3 even when task-relevant personally significant sounds did not constitute a physical, but only a categorical, deviation.

Experiment 1

In the first experiment, personal significance of reasonable external validity was varied by using individual SMS ringtones as experimental stimuli that were recorded prior to the experimental session. ERPs to a participant’s own ringtone were compared with those to the ringtone of another, paired participant. The latter ringtone shared the same general meaning—that is, it would generally be classified as a ringtone—but had not been associated with any significance in the first participant’s individual past.

Method

Participants and stimuli

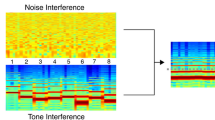

A group of 12 volunteers participated in the study (seven female, five male; 18 to 31 years of age). They reported normal auditory acuity and no medical problems, and gave informed consent prior to participation. All participants were treated according to the ethical principles of the World Medical Association (1996; Declaration of Helsinki) and received course credit or monetary compensation. The personal SMS ringtones of the participants were used as stimuli. These were recorded prior to the experimental session, were root-mean square (RMS) normalized in intensity, and had durations ranging from 84 to 1,689 ms (mean = 799 ms, SD = 60). Participants were chosen on the basis of their ringtones, to assure that all of the experimental stimuli were sufficiently distinguishable from each other from sound onset. Figure 1a shows the spectrograms of all 12 stimuli, confined to the first 50 ms of each sound, and illustrates that all of them differed in their spectral characteristics. Thus, the initial part of the sounds already delivered sufficient information to identify the personally significant sound within the present set of sounds. After the experimental session, participants were asked to listen again to all of the experimental stimuli and to tell whether any other than their own ringtone was of particular relevance or high familiarity (e.g., was the ringtone of a close relative or friend, was their own previous ringtone, etc.). None of the 12 participants reported that any other experimental stimuli fell in this category. For analysis purposes only, participants were grouped into pairs (see below).

Spectrograms of the experimental stimuli. a Experiment 1. Shown are spectrograms of all experimental stimuli, confined to the first 50 ms after sound onset. For purposes of the analysis, participants, and hence the contrasted stimuli, were yoked into pairs, here shown as one stimulus below its pair. The spectrograms illustrate that all of the contrasted stimuli differed in their spectral characteristics from the initial part. b Experiment 2. Shown are spectrograms of the contrasted experimental stimuli used in Experiment 2, over the whole 500-ms duration of the sounds. One group of participants was trained on the harpsichord sound, the other group was trained on the organ sound. These two sounds were presented among ten other instrumental sounds

Experimental design and procedure

Participants were continuously presented with a mixed, randomly played sequence of the 12 SMS ringtones of all participants (70 dB SPL, stimulus onset asynchrony = 1,800 ms) using the following constraints. The first five stimuli of a sequence did not include both the participant’s own ringtone and the nonsignificant comparison ringtone (see the yoked design, described below), and three sequential sounds had to be different. Each stimulus within the auditory sequence occurred with the same probability. Thus, the participant’s own ringtone occurred among all other ringtones with a probability of 8.3 % and deviated from the sequence solely on the stimulus dimension “personal significance.” Since all participants received identical stimulation, with only the personal significance of the stimuli changing, a yoked design could be applied when analyzing the data. Hence, within participants, measurements to the personally significant sound were compared with measurements to a nonsignificant sound of the paired associate, and the roles of these sounds were reversed for the yoked participant. Importantly, the yoked design guaranteed that responses to acoustically identical stimuli were compared across participants. Thus, it could be excluded that ERP effects were subject to acoustic differences between conditions. Further, instead of comparing the response to the participant’s own ringtone to responses to all other ringtones, this procedure assured similar signal-to-noise ratios within the analyzed electrophysiological responses.

To study the primarily involuntary characteristics of auditory processing, first, a passive-listening condition was applied, in which participants were instructed to watch a self-chosen muted and subtitled movie, during which the auditory stimulation was irrelevant and should be ignored. During this passive condition, a total of 150 trials per stimulus were presented. In two separate active-listening conditions (sequential order balanced), participants were instructed to respond to either their own ringtone (personally significant target/nonsignificant nontarget condition, 840 trials; i.e., 70 for each ringtone) or to the ringtone of the yoked participant (nonsignificant target/personally significant nontarget condition, 840 trials). Instead of watching a movie, during the active-listening condition, participants were instructed to fixate a cross on a blank screen in order to avoid excessive artifacts caused by eye movements.

Electrophysiological recordings and analysis

The electroencephalogram (EEG, measured via Ag/AgCl electrodes; BioSemi) was recorded continuously from 64 standard scalp locations according to the extended 10–20 system, with a sampling rate of 512 Hz. An anti-aliasing filter was applied during recording (fifth-order sync response with a −3-dB point at 102.4 Hz; see www.biosemi.com). Additional electrodes were placed on the tip of the nose, two electrodes at the left and right mastoid positions, and two facial bipolar electrode pairs in order to record electroocular activity (EOG). The vertical EOG was recorded from the right eye by one supratemporal and one infraorbital electrode, and the horizontal EOG from two electrodes at positions lateral to the outer canthi of the two eyes. The data were band-pass filtered offline using a finite impulse response filter (0.5- and 25-Hz half-amplitude cutoffs, window type Kaiser, filter length 1,857 points, transition band width 1 Hz). The continuous data were reconstructed into epochs of 1,100-ms length, including a 100-ms baseline before stimulus onset. If changes in the EEG signal did not exceed the rejection criterion of 100 μV on any channel within an epoch, these epochs were averaged separately for each participant for the personally significant versus nonsignificant sounds and the passive versus active conditions. Within the active condition, targets and nontargets were averaged separately. Mean amplitudes of the ERPs to the personally significant sound and to the nonsignificant sound in the passive- and active-listening conditions were compared in time windows centered on the mean peak latency of the grand-average ERP difference wave. Statistically, the data were analyzed via three-way repeated measures analyses of variance (ANOVAs) for the passive-listening condition, with the factors Personal Significance (yes vs. no), Anterior–Posterior (frontal, central, or parietal), and Laterality (7, 3, z, 4, and 8 lines of the electrodes). For the statistical analysis of the active-listening condition, the factor Target was added as well. For effects with more than one degree of freedom, the original degrees of freedom are reported along with the corrected probability (Greenhouse–Geisser). Bonferroni corrections were applied where necessary.

The same data set was also analyzed in the time-frequency domain. However, the present article focuses on ERPs that reflect the discrimination between personally significant and nonsignificant sounds and between training and nontraining variants, discussing the comparison to ERPs that are known to reflect deviance detection. Effects in the evoked gamma-band response (eGBR) can be found elsewhere (Roye, Schröger, Jacobsen, & Gruber, 2010).

Results

Passive-listening condition

All sounds elicited obligatory long-latency auditory evoked responses—that is, P1, N1, and P2 (see Fig. 2). Whereas P1 and N1 did not differ between the personally significant and nonsignificant sounds, a statistically significant effect was observed between 190 and 230 ms. That is, within a time window corresponding to the P2 and N2 evoked potentials, the participant’s own ringtone elicited a more negative-going deflection than did the nonsignificant sound characterized by a predominant posterior distribution [see Fig. 2; Personal Significance × Anterior–Posterior interaction, F(2, 22) = 4.33, p = .026]. Amplitudes were maximal at the right temporo-central and temporo-parietal electrode positions [at electrode T8, t(11) = −4.49, p < .01; at electrode P8, t(11) = −3.33, p < .01]. Although visual inspection suggested that effects due to the personal significance of the presented sounds also occurred in later time windows—that is, a positivity at posterior electrodes after around 400 ms, and a late frontal negativity after around 600 ms—none of these later effects reached statistical significance.

Experiment 1, passive listening. Grand-average ERPs of the passive-listening paradigm at nine representative electrode positions, elicited by both the personally significant stimulus and the nonsignificant stimulus. Difference waves (black lines) were calculated by subtracting the ERPs to the nonsignificant stimulus from the ERPs to the personally significant stimulus. Dotted gray outline bars mark the time window of the statistically significant effect. A mean scalp potential map of the effect related to personal significance can be seen in the lower right corner

Since the number of trials could have varied between conditions and, in turn, one could argue that the results could be explained by the different numbers of averaged trials, we applied a paired t test to test this hypothesis. The analysis revealed that the number of averaged—that is, not rejected—trials, did not differ depending on whether a personally significant (M = 106.42, SD = 24.20) or a nonsignificant sound (M = 106.00, SD = 21.04) was presented [t(11) = .25, p > .50].

Active-listening condition

Within the active-listening condition, responses to personally significant and nonsignificant sounds differed in three time windows, regardless of whether the respective sounds served as the nontarget (Fig. 3) or as the target (Fig. 4) sound in the current experimental block. First, N1 amplitude was enhanced for the personally significant sound (107–127 ms) [main effect of personal significance, F(1, 11) = 5.64, p = .04]. The effect was largest at fronto-central electrodes, and decreased toward more lateral electrodes [Personal Significance × Laterality, F(4, 44) = 3.95, p = .04; Personal Significance × Laterality × Anterior–Posterior, F(8, 88) = 4.62, p = .006]. A significant interaction involving the Target factor revealed that the N1 effect related to personal significance was even larger and extended to fronto-lateral electrode positions when target ERPs were contrasted, as compared with when nontarget ERPs were contrasted [Personal Significance × Target × Laterality, F(4, 44) = 3.85, p = .04; Personal Significance × Target × Anterior–Posterior × Laterality, F(8, 88) = 6.40, p = .001]. Second, as in the passive-listening condition, within the time range of P2 and N2, a participant’s own ringtone elicited a more negative-going deflection than did the nonsignificant sound, with a predominant right posterior distribution (188 to 228 ms) [Personal Significance × Anterior–Posterior, F(2, 22) = 5.84, p = .01; Personal Significance × Laterality, F(4, 44) = 3.47, p < .05; Personal Significance × Anterior–Posterior × Laterality, F(8, 88) = 3.58, p = .02]. Third, a participant’s own ringtone elicited an enhanced P3 amplitude (377–417 ms). This effect was largest at midline electrode positions [main effect of personal significance, F(1, 11) = 12.01, p = .005; Personal Significance × Laterality, F(4, 44) = 15.70, p < .001].

Experiment 1, active target detection. (Upper panels) Grand-average ERPs to nontarget stimuli during active listening at nine representative electrode positions, elicited by both the personally significant stimulus and the nonsignificant stimulus. Difference waves (black lines) were calculated by subtracting the ERPs to the nonsignificant stimulus from the ERPs to the personally significant stimulus. Dotted gray outline bars mark the time windows of statistically significant effects. (Lower panels) Mean scalp potential maps of the effects related to the personal significance of nontarget stimuli

Experiment 1, active target detection. (Upper panels) Grand-average ERPs to target stimuli during active listening at nine representative electrode positions, elicited by both the personally significant stimulus and the nonsignificant stimulus. Difference waves (black lines) were calculated by subtracting the ERPs to the nonsignificant stimulus from the ERPs to the personally significant stimulus. Dotted gray outline bars mark the time windows of statistically significant effects. (Lower panels) Mean scalp potential maps of the effects related to the personal significance of target stimuli

Although all target ERPs had higher amplitudes than did nontarget ERPs within the time ranges of the P2 and N2 [main effect of target, F(1, 11) = 6.86, p = .02] and the time range of the P3 [F(1, 11) = 47.65, p < .001], no interaction involving both factors—that is, Personal Significance and Target—reached statistical significance.

See Fig. 3 for the ERPs for significant and nonsignificant sounds when they served as nontargets within the sequence, and see Fig. 4 for the comparison when the respective sounds served as targets that had to be detected within the sequence.

Behavioral data

The two-paired t test revealed no significant reaction time difference between a participant’s own versus the other’s ringtone (own, 514.25 ± 36.20 ms [M ± SE]; other, 557.94 ± 24.79 ms) [t(11) = −1.21, p = .25].

Discussion for Experiment 1

In Experiment 1, we varied the personal significance of sounds by presenting each participant’s own mobile phone ringtone among a random sequence of other persons’ ringtones. The earliest effect associated with this manipulation occurred within the time range of the P2 and N2 evoked responses as an enhanced negativity to the participant’s own ringtone, characterized by a posterior, right-lateralized distribution over the scalp, that we will refer to below as the N2p. The effect was present irrespective of whether or not the auditory sequence in general was task-relevant (active-listening vs. passive-listening task), and it also remained present in the experimental situation when another sound had become behaviorally relevant (active listening, detection of a previously nonsignificant sound). Within that particular design, this observed ERP effect can only be ascribed to the processing of personal significance, because potential confounds were kept to a minimum. That is, due to the yoked design, acoustically identical stimuli contributed to the experimental conditions over all participants, and hence, differences in ERPs can solely be attributed to individual memory schemata. Furthermore, as compared with previous studies that have applied a classic oddball paradigm, here, no pop-out effect driven by context-dependent deviations from acoustic regularities could have triggered this schema-driven processing (cf. Beauchemin et al., 2006; Frangos et al., 2005; Jacobsen et al., 2005; Roye et al., 2007). Only personal significance made a particular sound in the sequence stand out. However, it needs to be noted that the participant’s own ringtone has been presented as a rare stimulus among several other nonsignificant sounds. Thus, it cannot be argued that the ERP effects in the present study reflect context-independent mechanisms. Previous studies, even more than the present one, have pointed to the highly context-dependent nature of auditory processing (e.g., Jacobsen et al., 2005; Lipski & Mathiak, 2008; Sussman & Steinschneider, 2006). It seems feasible that a significant sound among other significant sounds would not have evoked the same pattern of activations, because the stimulus would match established predictions about events that are likely to occur, similar to mechanisms that have been proposed for mismatch detection based on auditory regularities (e.g., Winkler, Denham, & Nelken, 2009). However, this context dependence in the schema-driven processing of significant sounds requires further examination.

The N2p effect in the present study appears to be the first evidence for a nonlinguistic detection process of personally significant events in the auditory environment. It should be noted that the effect, however, shares some similarities, such as polarity, latency, and topography, with the early posterior negativity (EPN) reported for the processing of emotional stimuli (~150–300 ms). This ERP component is elicited by arousing pictures of negative or positive valence (Junghöfer et al., 2001; Schupp et al., 2003a, 2007). The present N2p effect may be interpreted as an auditory analogue of the visual EPN. This view is supported by two auditory studies in which a posterior negativity to emotional stimuli was revealed within the same time range, relative to both neutral environmental sounds (Czigler, Cox, Gyimesi, & Horvath, 2007) and pure tones (Mittermeier et al., 2011). Hence, the present findings extend previous results, because here acoustic factors were controlled. In the present study, rather than emotional valence, we varied personal significance, in terms of emotional and behavioral relevance in an individual’s past. Thus, the data suggest that the brain quickly distinguishes stimuli that are represented in memory as being personally significant. This significance does not necessarily require emotional relevance that has been evolutionarily associated with dangers or high rewards. The data from previous functional imaging studies let us propose that an unspecific neural network of “relevance detectors” accounts for the likely automatic prioritized processing of personally significant information (Ousdal et al., 2008; D. Sander et al., 2003). This might involve the amygdala, but also structures of the insula, the orbitofrontal cortex, and the superior temporal sulcus (e.g., K. Sander & Scheich, 2001; Scharpf, Wendt, Lotze, & Hamm, 2010). An increase in neural activation primarily in the right superior temporal sulcus, as has been reported for affectively salient sounds, might also have contributed to the right-lateralized distribution of the N2p effect driven by the personal significance of the sound in the present study (cf. Grandjean et al., 2005). Future studies will be required in order to elucidate the underlying neural basis for individual schema-driven auditory processing in more detail.

Although it has been shown that the EPN for visual stimuli reflects stimulus-driven selective processing of emotional stimuli (Schupp, Junghöfer, Weike, & Hamm, 2003a, b), that process works on the basis of a capacity-limited mechanism (Schupp et al., 2007). The present study was not designed to answer the question of resource dependency. However, what can be stated at the moment is that the significance detection process was not restricted to the active-listening condition, in which the auditory stimulation was actively monitored (cf. Czigler et al., 2007; Mittermeier et al., 2011). The discriminative significance detection process even took place when the auditory stimulation was irrelevant to the task at hand. Thus, one can assume that it is an obligatory process, at least under the present task requirements. Whether or not it nonetheless is a resource-dependent process, as has been discussed for acoustic deviance detection processes (Müller-Gass, Stelmack, & Campbell, 2006; Rinne, Antila, & Winkler, 2001; Sussman, Winkler, & Schröger, 2003), remains to be shown in future studies.

The N2p effect was the only significant difference in ERPs between the personally significant and nonsignificant sounds during our passive-listening task. However, results from the active-listening condition suggest that more memory-related processing mechanisms, probably reflecting more resource-dependent processing, are initiated with occurrence of the personally significant sound when the auditory stimulation is intentionally monitored. Besides the N2p effect, which was present during all active conditions as well, the results from active listening showed an enhanced N1 component with a classic fronto-central distribution (Näätänen & Picton, 1987) and a larger P3 component over midline electrodes (for a review, see, e.g., Polich, 2007), elicited by the participant’s own ringtone. Interestingly, this effect occurred regardless of whether the presented stimulus, the participant’s own or another ringtone, served as a target or a nontarget in the current active experimental block—and thus, regardless of the task-specific stimulus relevance.

A modulation of the N1 amplitude depending on a representation of the sound in long-term memory has been reported in previous studies. There, the stored feature may best be specified with the term “acoustic familiarity.” Amplitude enhancements within the N1/N1m or N1–P2 time range have been shown for music sounds in musicians compared with nonmusicians (Pantev et al., 1998; Pantev, Roberts, Schulz, Engelien, & Ross, 2001; Shahin, Bosnyak, Trainor, & Roberts, 2003), for familiar language sounds (e.g., Ylinen & Huotilainen, 2007), for sounds following discrimination training (Atienza, Cantero, & Dominguez-Marin, 2002; Bosnyak et al., 2004; Menning, Roberts, & Pantev, 2000), and for complex environmental sounds (Kirmse et al., 2009), all of which were compared with less familiar, untrained counterparts. The present results augment these findings. The participant’s own ringtone, a personally significant, highly familiar sound also elicited an enhanced N1 amplitude. This effect can be attributed to the acoustic familiarity of one’s own ringtone being an aspect of personal significance, since the mentioned studies—in which basically only familiarity was manipulated—had already revealed the effect. At a neuronal level, one may assume that an elaborated sound pattern recruits more neuronal populations even at the stage of sensory processing, and that the occurrence of this sound activates more neurons to fire in synchrony. This interpretation, suggested by Pantev and colleagues (Pantev, Engelien, Candia, & Elbert, 2001), complements the previously reported eGBR effect, which temporally overlaps with the effect in the N1 time range (Roye et al., 2010). In the present study, N1 modulation by long-term memory representations was shown during the active condition only. It is known that the basic encoding of features is affected by attention (e.g., Woldorff & Hillyard, 1991). Here, this finding suggests that the attentional setting may mediate which experience-dependent tuned traces become effective at the stage of sensory transient stimulus detection. These results are in line with the idea of attentional sensitization, stating that a task can modulate the sensitivity of different processing stages, and thus allows for a more flexible interaction with the current demands (Kiefer & Martens, 2010).

In addition to the enhanced N1 component, the participant’s own ringtone resulted in an augmented P3 amplitude during the active-listening condition. As the P3 is related to postperceptual evaluation processes (e.g., Comerchero & Polich, 1999; Polich, 2007), this finding suggests prioritized processing, and in particular, deeper evaluation, following the discrimination of a personally significant sound from nonsignificant sounds. The topographical distribution of this effect, which spread from anterior to posterior electrode positions, suggests that this P3 enhancement is due to at least two cognitive mechanisms. First, an enhancement of a fronto-central P3a component is likely to have contributed to the effect, reflecting an augmented involuntary shift of attention to the personally significant sound (for reviews, see Escera, Alho, Schröger, & Winkler, 2000; Polich, 2007). Second, the P3b, usually with a predominant posterior distribution and related to target-related processing, had also previously been shown to depend on the meaning of a stimulus (among many other factors; e.g., Johnson, 1986).

To sum up, top-down mechanisms mediate different aspects of schema-driven auditory processing. That is, particularly the role of personal memory schemata in sensory processing and stimulus evaluation appear to partially rely on attention. The N2p effect, however—which has been interpreted to reflect a schema-driven discrimination process—was assumed to involuntarily promote a fast dissociation between personally significant and nonsignificant sounds in our acoustic environment.

Experiment 2

The use of personal mobile phone ringtones in Experiment 1 guaranteed a successful manipulation of personal significance. However, a quasi-experimental approach implies disadvantages, such as the possibility that participants in Experiment 1 selected their ringtones with respect to certain preferred acoustic stimulus characteristics, which, in turn, might have contributed to the discriminative N2p response. Experiment 2 was designed to replicate the N2p effect within an experimental training approach in which a causal relation between an acquisition period and the measured effect would be established. Therefore, ERP measurements were conducted before and after a training period of one month. During the training period, all participants were asked to use a randomly assigned sound as their personal mobile phone ringtone. Instrumental sounds that all had the same envelope and duration served as the experimental stimuli. As compared with the real ringtones used in Experiment 1, these were of comparable significance for all participants at the beginning of the experiment. Furthermore, due to the enhanced acoustic similarity between the stimuli relative to the real ringtones used in Experiment 1, the trained sound would then also probably be harder to differentiate, and less acoustic pop-out of the personally significant sound might make the discrimination process even more difficult. Hence, Experiment 2 tested the effect of personal significance detection more conservatively than had Experiment 1. Again, a yoked design was applied. Two groups of participants were trained, respectively, on two different sounds. Thus, in both conditions, measurements to acoustically identical stimuli could be compared, in order to ensure that the reported effects were exclusively due to the experimental variation.

Method

Participants and stimuli

A group of 16 volunteers participated in the study (11 female, five male; mean age 24 years, range 19–29 years), reported normal auditory acuity, no medical problems, and gave informed consent prior to participation. They were treated according to the ethical principles of the World Medical Association (1996; Declaration of Helsinki) and received course credit or monetary compensation.

Twelve different instrumental sounds were used as the experimental stimuli (Sony ACID Music Studio 7.0). Thus, all of the experimental stimuli shared the same meaning and were of comparable familiarity for all participants, since no musical experts participated. The stimuli were manipulated to have a 500-ms duration, including 50-ms rise and fall times, and to have equal mean intensities (RMS) and similar envelope characteristics. The following instrumental sounds were chosen: harpsichord and organ (serving as the trained stimuli for each of two yoked groups), as well as horn, clarinet, trumpet, oboe, violin, piano, guitar, chimes, harp, and cello (presented as filler sounds within the random sequence). Two of the 16 scheduled participants had to be excluded from the final analysis due to bad EEG signals. However, since each of the two excluded participants was from a different yoked group, this exclusion did not affect the balanced yoked design.

Experimental design and procedure

EEGs were measured before and after the training intervention. After the pretraining measurements, one half of the participants were trained on the harpsichord stimulus, and one half on the organ sound (Fig. 1b). To train the respective sounds and associate them with behavioral relevance, all participants were asked to change their mobile phone ringtone to the assigned experimental stimulus for one month (±3 days). During the experimental sessions, participants were continuously presented with a mixed, randomly played sequence of the 12 instrumental sounds (70 dB SPL, stimulus onset asynchrony 1,800 ms). Thus, as in Experiment 1, every sound occurred with a probability of 8.3 % (150 trials per stimulus, in sum). After the second experimental session, participants gave information about the average amounts that they had heard the sound during the training interval. According to the information they gave, they heard the sound from three to 20 times a day (mean = 7, SD = 6). The reported data were recorded during a passive-listening condition during which participants were watching a muted and subtitled movie. Experiment 2 was part of a study that included further active measures (signal detection thresholds). The present article focuses only on the ERP data relevant for the present question.

Electrophysiological recordings and analysis

Details of the recordings match the descriptions given for Experiment 1. For the analysis of ERPs, data were filtered offline using a 0.5- to 25-Hz band-pass finite impulse response filter (1,856 points). Continuous data were reconstructed into epochs of 800-ms length, including a 100-ms baseline before stimulus onset. If changes in the EEG signal did not exceed the rejection criterion of 100 μV on any channel, these epochs were averaged separately for each level of session (1, 2) and training (trained, untrained) for each participant. Mean amplitudes of the ERPs in Sessions 1 and 2 to the trained and untrained stimuli were compared in a time window centered on the mean peak latency of the difference between the grand-average ERP difference waves (i.e., Session 2 minus Session 1 trained sounds and Session 2 minus Session 1 untrained sounds). The analysis for the N2p effect was calculated at ad-hoc-selected left and right posterior electrode positions (P7, P8), since the effect had been largest at right posterior positions in Experiment 1. It should be tested whether the effect itself and its right lateralization will replicate. A repeated measures ANOVA was calculated accordingly, involving the factors Session (1, 2), Training (trained, untrained), and Position (P7, P8). For effects with more than one degree of freedom, the original degrees of freedom are reported, along with the corrected probability (Greenhouse–Geisser). Bonferroni corrections were applied where necessary.

Results

A significant effect of the training intervention was revealed in the time window from 160 to 200 ms at electrodes P7 and P8, showing an enhanced negativity in Session 2 relative to Session 1 for the trained sound only (see Fig. 5). The ANOVA revealed a significant Session × Training × Laterality interaction [F(1, 13) = 5.39, p = .04]. Post hoc analyses separately for the trained and the untrained sounds at each lateralized position revealed that a significant difference between Session 1 and Session 2 was confined to the trained sound [for the trained sound, we found main effects of session, F(1, 13) = 9.61, p = .008; electrode P7, t(13) = 2.71, p = .036; and electrode P8, t(13) = 3.10, p = .018; for the untrained sounds, the effects were not significant: electrode P7, t(13) = 0.54, p > .1; electrode P8, t(13) = 2.48, p > .05]. A visually inspected ERP difference between Sessions 1 and 2 at around 500 ms was analyzed in an overall repeated measures ANOVA, but it did not reach statistical significance for any interaction involving the factors Session and Training. Figure 5a shows the ERPs in Sessions 1 and 2 for the trained sound, and Fig. 5b shows ERPs for the untrained sounds, including scalp distributions of the differences between Sessions 1 and 2.

Experiment 2, training approach. Grand-average ERPs at nine representative electrode positions elicited in Sessions 1 and 2, plotted separately for the trained, personally significant stimulus (a) and the untrained, nonsignificant stimulus (b). Difference waves (black lines) were calculated by subtracting the ERPs conducted in Session 1 from the ERPs conducted in Session 2. Dotted gray outline bars mark the time window of the statistically significant effects due to the training intervention. Mean scalp potential maps of the differences between the two sessions for the trained and the untrained stimuli can be found below their respective panels

Discussion of Experiment 2

In contrast to Experiment 1, in which personal ringtones had been used as stimuli, in Experiment 2 the experimental stimuli were not self-selected, but randomly assigned by the experimenter. Thus, the results cannot stem from a processing mechanism associated with specific, individually preferred stimulus characteristics. Thus, this electrophysiological index of significant sound detection (the N2p reported in Exp. 1) could be replicated using a conservative training approach. The trained sound elicited a more negative-going deflection at posterior electrodes after around 200 ms in Session 2 as compared with Session 1, whereas this negativity was not revealed for the untrained sounds. Therefore, this appears to be a reliable effect reflecting the discrimination between sounds associated with personal significance and sounds that lack this significance. Furthermore, the result suggests that the training period of one month was long enough to associate the arbitrary instrumental sound with significance. Again, all sounds were presented in the experiment with the same probability. The trained sound even popped out less in Experiment 2 than in Experiment 1, since the instrumental sounds that we used varied less in their acoustic characteristics (same duration, same envelope). Thus, the data of the present experiment provide more evidence for the interpretation that the discrimination mechanism suggested to underlie the N2p effect does not require an acoustic mismatch detection process to trigger its elicitation. We propose that the detection of potentially significant sounds can happen on two separate routes, one relying on context-based acoustic mismatch detection, and the other on match detection between the current sound and personal long-term memory representations (see Fig. 6; see also Parmentier, Turner, & Perez, 2013).

Proposed two-route model of the detection of potentially relevant sounds. The model illustrates the notion that the discrimination of personally significant sounds from nonsignificant sounds is achieved in parallel with acoustic deviance detection. Both mechanisms rely on acoustic stimulus detection, reflected in early responses such as the evoked gamma-band response (eGBR) and the N1 of the auditory ERP. The present data suggest that the significance detection mechanism reflected in the N2p does not rely on acoustic deviance detection, reflected in the mismatch negativity (MMN), but rather that these are two independent processes. Both may lead to attentional orienting to initiate further cognitive and behavioral consequences if a certain threshold is surpassed. The threshold may depend on factors such as the saliency of the acoustic deviation, the stimulus’s personal significance, the processing resources that are currently available, and executive control mechanisms

The data analysis of oscillatory activity reported by Roye et al. (2010) showed an enhanced eGBR to the personally significant sound. Although future experiments will need to dissociate the eGBR and ERP effects, we argue that at least the eGBR and N2p effects reflect different functional processes; our N2p effect occurred about 100 ms after the eGBR effect. On the basis of previous research, we suggest that the eGBR effect reflects a first, context-independent match with long-term memory representations, which could also be described in terms of priming or memory-based anticipation (Herrmann, Munk, & Engel, 2004; Lenz, Schadow, Thaerig, Busch, & Herrmann, 2007; Oppermann, Hassler, Jescheniak, & Gruber, 2012; Roye et al., 2010). The later N2p effect, on the other hand, is interpreted as a subsequent detection process that may be context-dependent and that relies on higher-order categorization mechanisms (see also Roye et al., 2007).

General conclusion

We continuously monitor our auditory environment for potentially significant events. A wide range of research has shown that this is performed involuntarily, on the basis of inferred regularities and predictive modeling, utilizing acoustic cues held in short-term memory (e.g., Bendixen, SanMiguel, & Schröger, 2012; Schröger, 2007; Winkler et al., 2009). Much less is known about the role of individual long-term memory schemata in this monitoring process. The present experiments have revealed a discriminative response in human ERPs after about 200 ms that indicates that the auditory system detects a personally significant event among nonsignificant events, even without concurrent acoustic cues that would trigger selective attentional orienting. Although this response seems to be obligatory, the involvement of memory schemata at further stages of auditory processing might be subject to top-down mechanisms.

References

Atienza, M., Cantero, J. L., & Dominguez-Marin, E. (2002). The time course of neural changes underlying auditory perceptual learning. Learning and Memory, 9, 138–150.

Beauchemin, M., De Beaumont, L., Vannasing, P., Turcotte, A., Arcand, C., Belin, P., & Lassonde, M. (2006). Electrophysiological markers of voice familiarity. European Journal of Neuroscience, 23, 3081–3086. doi:10.1111/j.1460-9568.2006.04856.x

Bendixen, A., SanMiguel, I., & Schröger, E. (2012). Early electrophysiological indicators for predictive processing in audition: A review. International Journal of Psychophysiology, 83, 120–131. doi:10.1016/j.ijpsycho.2011.08.003

Bosnyak, D. J., Eaton, R. A., & Roberts, L. E. (2004). Distributed auditory cortical representations are modified when non-musicians are trained at pitch discrimination with 40 Hz amplitude modulated tones. Cerebral Cortex, 14, 1088–1099.

Bregman, A. S. (1990). Auditory scene analysis: The perceptual organization of sound. Cambridge, MA: MIT Press.

Comerchero, M. D., & Polich, J. (1999). P3a and P3b from typical auditory and visual stimuli. Clinical Neurophysiology, 110, 24–30.

Czigler, I., Cox, T. J., Gyimesi, K., & Horvath, J. (2007). Event-related potential study to aversive auditory stimuli. Neuroscience Letters, 420, 251–256.

Elaad, E., & Ben-Shakhar, G. (1989). Effects of motivation and verbal-response type on psychophysiological detection of information. Psychophysiology, 26, 442–451. doi:10.1111/j.1469-8986.1989.tb01950.x

Escera, C., Alho, K., Schröger, E., & Winkler, I. (2000). Involuntary attention and distractibility as evaluated with event-related brain potentials. Audiology & Neuro-Otology, 5, 151–166.

Escera, C., Yago, E., Corral, M. J., Corbera, S., & Nuñez, M. I. (2003). Attention capture by auditory significant stimuli: Semantic analysis follows attention switching. European Journal of Neuroscience, 18, 2408–2412.

Frangos, J., Ritter, W., & Friedman, D. (2005). Brain potentials to sexually suggestive whistles show meaning modulates the mismatch negativity. NeuroReport, 16, 1313–1317.

Gati, I., & Ben-Shakhar, G. (1990). Novelty and significance in orientation and habituation: A feature-matching approach. Journal of Experimental Psychology, 119, 251–263.

Grandjean, D., Sander, D., Pourtois, G., Schwartz, S., Seghier, M. L., Scherer, K. R., & Vuilleumier, P. (2005). The voices of wrath: Brain responses to angry prosody in meaningless speech. Nature Neuroscience, 8, 145–146. doi:10.1038/nn1392

Gronau, N., Cohen, A., & Ben-Shakhar, G. (2003). Dissociations of personally significant and task-relevant distractors inside and outside the focus of attention: A combined behavioral and psychophysiological study. Journal of Experimental Psychology. General, 132, 512–529. doi:10.1037/0096-3445.132.4.512

Herrmann, C. S., Munk, M. H., & Engel, A. K. (2004). Cognitive functions of gammaband activity: Memory match and utilization. Trends in Cognitive Sciences, 8, 347–355.

Holeckova, I., Fischer, C., Giard, M. H., Delpuech, C., & Morlet, D. (2006). Brain responses to a subject’s own name uttered by a familiar voice. Brain Research, 1082, 142–152.

Jacobsen, T., Schröger, E., Winkler, I., & Horvàth, J. (2005). Familiarity affects the processing of task-irrelevant auditory deviance. Journal of Cognitive Neuroscience, 17, 1704–1713.

Johnson, R. (1986). A triarchic model of P300 amplitude. Psychophysiology, 23, 367–384.

Junghöfer, M., Bradley, M. M., Elbert, T. R., & Lang, P. J. (2001). Fleeting images: A new look at early emotion discrimination. Psychophysiology, 38, 175–178.

Kiefer, M., & Martens, U. (2010). Attentional sensitization of unconscious cognition: Task sets modulate subsequent masked semantic priming. Journal of Experimental Psychology. General, 139, 464–489.

Kirmse, U., Jacobsen, T., & Schröger, E. (2009). Familiarity affects environmental sound processing outside the focus of attention: An event-related potential study. Clinical Neurophysiology, 120, 887–896.

LeDoux, J. E. (2000). Emotion circuits in the brain. Annual Reviews in Neuroscience, 23, 155–184. doi:10.1146/annurev.neuro.23.1.155

Lenz, D., Schadow, J., Thaerig, S., Busch, N. A., & Herrmann, C. S. (2007). What’s that sound? Matches with auditory long-term memory induce gamma activity in human EEG. International Journal of Psychophysiology, 64, 31–38.

Lipski, S. C., & Mathiak, K. (2008). Auditory mismatch negativity for speech sound contrasts is modulated by language context. NeuroReport, 19, 1079–1083.

Menning, H., Roberts, L. E., & Pantev, C. (2000). Plastic changes in the auditory cortex induced by intensive frequency discrimination training. NeuroReport, 11, 817–822.

Mittermeier, V., Leicht, G., Karch, S., Hegerl, U., Möller, H. J., Pogarell, O., & Mulert, C. (2011). Attention to emotion: Auditory-evoked potentials in an emotional choice reaction task and personality traits as assessed by the NEO FFI. European Archives of Psychiatry and Clinical Neuroscience, 261, 111–120. doi:10.1007/s00406-010-0127-9

Moray, N. (1959). Attention in dichotic-listening—Affective cues and the influence of instructions. Quarterly Journal of Experimental Psychology, 11, 56–60.

Müller, M. M., Andersen, S. K., & Keil, A. (2008). Time course of competition for visual processing resources between emotional pictures and foreground task. Cerebral Cortex, 18, 1892–1899. doi:10.1093/cercor/bhm215

Müller-Gass, A., Roye, A., Kirmse, U., Saupe, K., Jacobsen, T., & Schröger, E. (2007). Automatic detection of lexical change: An auditory event-related potential study. NeuroReport, 18, 1747–1751.

Müller-Gass, A., Stelmack, R. M., & Campbell, K. B. (2006). The effect of visual task difficulty and attentional direction on the detection of acoustic change as indexed by the mismatch negativity. Brain Research, 1078, 112–130.

Näätänen, R. (2001). The perception of speech sounds by the human brain as reflected by the mismatch negativity (MMN) and its magnetic equivalent (MMNm). Psychophysiology, 38, 1–21.

Näätänen, R., Kujala, T., & Winkler, I. (2011). Auditory processing that leads to conscious perception: A unique window to central auditory processing opened by the mismatch negativity and related responses. Psychophysiology, 48, 4–22. doi:10.1111/j.1469-8986.2010.01114.x

Näätänen, R., & Picton, T. (1987). The N1 wave of the human electric and magnetic response to sound: A review and an analysis of the component structure. Psychophysiology, 24, 375–425.

Ofek, E., & Pratt, H. (2005). Neurophysiological correlates of subjective significance. Clinical Neurophysiology, 116, 2354–2362.

Öhman, A. (1979). The orienting response, attention, and learning: An information processing perspective. In H. D. Kimmel, E. H. van Olst, & J. F. Orlebeke (Eds.), The orienting reflex in humans (pp. 443–472). Hillsdale, NJ: Erlbaum.

Oppermann, F., Hassler, U., Jescheniak, J. D., & Gruber, T. (2012). The rapid extraction of gist—Early neural correlates of high-level visual processing. Journal of Cognitive Neuroscience, 24, 521–529.

Ousdal, O. T., Jensen, J., Server, A., Hariri, A. R., Nakstad, P. H., & Andreassen, O. A. (2008). The human amygdala is involved in general behavioral relevance detection: Evidence from an event-related functional magnetic resonance imaging Go–NoGo task. Neuroscience, 156, 450–455. doi:10.1016/j.neuroscience.2008.07.066

Pantev, C., Engelien, A., Candia, V., & Elbert, T. (2001a). Representational cortex in musicians. Plastic alterations in response to musical practice. Annals of the New York Academy of Sciences, 930, 300–314.

Pantev, C., Oostenveld, R., Engelien, A., Ross, B., Roberts, L. E., & Hoke, M. (1998). Increased auditory cortical representation in musicians. Nature, 392, 811–814.

Pantev, C., Roberts, L. E., Schulz, M., Engelien, A., & Ross, B. (2001b). Timbre-specific enhancement of auditory cortical representations in musicians. NeuroReport, 12, 169–174.

Parmentier, F. B. R., Turner, J., & Perez, L. (2013). A dual contribution to the involuntary semantic processing of unexpected spoken words. Journal of Experimental Psychology. General. doi:10.1037/a0031550. Advance online publication.

Perrin, F., Garcia-Larrea, L., Mauguiere, F., & Bastuji, H. (1999). A differential brain response to the subject’s own name persists during sleep. Clinical Neurophysiology, 110, 2153–2164.

Perrin, F., Maquet, P., Peigneux, P., Ruby, P., Degueldre, C., Balteau, E., & Laureys, S. (2005). Neural mechanisms involved in the detection of our first name: A combined ERPs and PET study. Neuropsychologia, 43, 12–19. doi:10.1016/j.neuropsychologia.2004.07.002

Perrin, F., Schnakers, C., Schabus, M., Degueldre, C., Goldman, S., Brédart, S., & Laureys, S. (2006). Brain response to one’s own name in vegetative state, minimally conscious state, and locked-in syndrome. Archives of Neurology, 63, 562–569. doi:10.1001/archneur.63.4.562

Polich, J. (2007). Updating P300: An integrative theory of P3a and P3b. Clinical Neurophysiology, 118, 2128–2148.

Rinne, T., Antila, S., & Winkler, I. (2001). Mismatch negativity is unaffected by top-down predictive information. NeuroReport, 12, 2209–2213.

Roye, A., Jacobsen, T., & Schröger, E. (2007). Personal significance is encoded automatically by the human brain: An event-related potential study with ringtones. European Journal of Neuroscience, 26, 784–790.

Roye, A., Schröger, E., Jacobsen, T., & Gruber, T. (2010). Is my mobile ringing? Evidence for rapid processing of a personally significant sound in humans. Journal of Neuroscience, 30, 7310–7313.

Sander, D., Grafman, J., & Zalla, T. (2003). The human amygdala: An evolved system for relevance detection. Reviews in the Neurosciences, 14, 303–316.

Sander, K., & Scheich, H. (2001). Auditory perception of laughing and crying activates human amygdala regardless of attentional state. Cognitive Brain Research, 12, 181–198.

Scharpf, K. R., Wendt, J., Lotze, M., & Hamm, A. O. (2010). The brain’s relevance detection network operates independently of stimulus modality. Behavioural Brain Research, 210, 16–23.

Schröger, E. (2007). Mismatch negativity: A microphone into auditory memory. Journal of Psychophysiology, 21, 138–146.

Schupp, H. T., Junghöfer, M., Weike, A. I., & Hamm, A. O. (2003a). Attention and emotion: An ERP analysis of facilitated emotional stimulus processing. NeuroReport, 14, 1107–1110. doi:10.1097/00001756-200306110-00002

Schupp, H. T., Junghöfer, M., Weike, A. I., & Hamm, A. O. (2003b). Emotional facilitation of sensory processing in the visual cortex. Psychological Science, 14, 7–13. doi:10.1111/1467-9280.01411

Schupp, H. T., Stockburger, J., Bublatzky, F., Junghöfer, M., Weike, A. I., & Hamm, A. O. (2007). Explicit attention interferes with selective emotion processing in human extrastriate cortex. BMC Neuroscience, 8, 16.

Shahin, A., Bosnyak, D. J., Trainor, L. J., & Roberts, L. E. (2003). Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. Journal of Neuroscience, 23, 5545–5552.

Sokolov, E. N. (1963). Perception and the conditioned reflex. Oxford, UK: Pergamon.

Sussman, E., & Steinschneider, M. (2006). Neurophysiological evidence for context-dependent encoding of sensory input in human auditory cortex. Brain Research, 1075, 165–174.

Sussman, E., Winkler, I., & Schröger, E. (2003). Top-down control over involuntary attention switching in the auditory modality. Psychonomic Bulletin & Review, 10, 630–637.

Treisman, A. M. (1960). Contextual cues in selective listening. Quarterly Journal of Experimental Psychology, 12, 242–248.

Vuilleumier, P., & Huang, Y. M. (2009). Emotional attention: Uncovering the mechanisms of affective biases in perception. Current Directions in Psychological Science, 18, 148–152.

Winkler, I., Denham, S. L., & Nelken, I. (2009). Modeling the auditory scene: Predictive regularity representations and perceptual objects. Trends in Cognitive Sciences, 13, 532–540.

Woldorff, M. G., & Hillyard, S. A. (1991). Modulation of early auditory processing during selective listening to rapidly presented tones. Electroencephalography and Clinical Neurophysiology, 79, 170–191.

Wood, N. L., & Cowan, N. (1995). The cocktail party phenomenon revisited: How frequent are attention shifts to one’s name in an irrelevant auditory channel? Journal of Experimental Psychology: Learning, Memory, and Cognition, 21, 255–260.

Ylinen, S., & Huotilainen, M. (2007). Is there a direct neural correlate for memory-trace formation in audition? NeuroReport, 18, 1281–1284.

Author note

A.R.’s work was supported by a PhD fellowship from the Evangelisches Studienwerk e.V. Villigst. E.S.’s work was supported by a DFG-Reinhart-Koselleck project. We are grateful to Martin Reiche for his help in data collection, and thank the anonymous reviewers, who gave helpful comments on this article and on previous versions of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Roye, A., Jacobsen, T. & Schröger, E. Discrimination of personally significant from nonsignificant sounds: A training study. Cogn Affect Behav Neurosci 13, 930–943 (2013). https://doi.org/10.3758/s13415-013-0173-7

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13415-013-0173-7