Abstract

In past years, an extensive amount of research has focused on how past experiences guide future attention. Humans automatically attend to stimuli previously associated with reward and stimuli that have been experienced during visual search, even when it is disadvantageous in present situations. Recently, the relationship between “reward history” and “search history” has been discussed critically. We review results from research on value-driven attentional capture (VDAC) with a focus on these two experience-based attentional selection processes and their distinction. To clarify inconsistencies, we examined VDAC within a design that allows a direct comparison with other mechanisms of attentional selection. Eighty-four healthy adults were trained to incidentally associate colors with reward (10 cents, 2 cents) or with no reward. In a subsequent visual search task, distraction by reward-associated and unrewarded stimuli was contrasted. In the training phase, reward signals facilitated performance. When these value-signaling stimuli appeared as distractors in the test phase, they continuously shaped attentional selection, despite their task irrelevance. Our findings clearly cannot be attributed to a history of target search. We conclude that once an association is established, value signals guide attention automatically in new situations, which can be beneficial or not, depending on the congruency with current goals.

Given that processing resources are limited, the brain has to filter information from the environment to ensure adaptive behavior and promote well-being. The cognitive mechanism enabling an organism to process relevant, while ignoring irrelevant, information is called selective attention (Gazzaniga, Ivry, & Mangun, 2002). This ability is fundamental for higher order cognitive processes such as learning, memory, decision-making, and planning, as well as the generation of actions. Attending or not attending to a specific stimulus in the environment may result in pleasant or unpleasant experiences, averted danger, or a missed chance. To optimize survival in complex environments, stimuli or contexts that have been previously classified as relevant should not only have an immediate effect but also guide attention and behavior in future situations. Therefore, learning associations between meaningful stimuli or contexts and beneficial outcomes is adaptive. Reward-based learning can occur in the form of an effortless association between stimuli and value due to classical conditioning or between consequences and prior action due to operant conditioning (for a review, see Edelmann & Wittmann, 2012). From an evolutionary and learning theoretical perspective, it seems sensible that the brain’s attentional system is partly guided by previous rewards. Understanding the influence of past rewarding experiences on attentional selection in future situations was the focus of many publications in the past few years. A series of experiments demonstrated that originally neutral contexts that have been associated with reward compete for attentional priority with other sources of selection at a later time period. This was termed value-driven attentional capture (VDAC; Anderson, 2013), an observation that questions the previously assumed dichotomy of selective attention processes.

Research on selective attention has traditionally focused on two mechanisms by which information is prioritized (e.g., Corbetta & Shulman, 2002; Desimone & Duncan, 1995; Kastner & Ungerleider, 2000; Posner, 1980). On the one hand, the focus of attention can be directed voluntarily in accordance with current goals (top-down). On the other hand, attention can be activated automatically by sensory stimulation, for example, by the perceptual salience of a stimulus in contrast to its environment (bottom-up). Brain networks involved in endogenous attentional selection are thought to be distinct but to flexibly interact with brain regions activated by exogenous input (Vossel, Geng, & Fink, 2014). Accumulating evidence in past years has challenged this dichotomous view of human attentional selection. The top-down (goal-driven) versus bottom-up (salience-driven) dichotomy was recognized as an oversimplification (Anderson, 2013; Awh, Belopolsky, & Theeuwes, 2012) because there are phenomena of attentional selection biases that are not well explained by task goals or by the physical salience of stimuli, but instead rely on past experiences. First, previously attended stimuli influence subsequent visual search. One example of this is enhanced performance during visual search in trials that are similar to the previous trial. Such priming effects remain robust even when contradicting voluntary goals (e.g., Kristjánsson, Wang, & Nakayama, 2002; Lamy & Kristjánsson, 2013, for review; Müller, Reimann, & Krummenacher, 2003). Visual search is speeded when targets appear in repeated configurations, a phenomenon called contextual cueing (e.g., Chun, 2000; Chun & Jiang, 1998; Jiang, Swallow, Rosenbaum, & Herzig, 2013). Attention is also biased by (temporal) regularities, even if they provide no useful information for target detection and only depend on internal representations of experiences but not on stimulus features (Zhao, Al-Aidroos, & Turk-Browne, 2013).
Second, monetary rewards have been shown to affect subsequent attentional selection despite contrary task goals. On a trial-by-trial basis, humans preferentially attend to objects associated with reward, even when the feature associated with monetary outcome is irrelevant and attentional bias toward such features has detrimental effects (Hickey, Chelazzi, & Theeuwes, 2010). Reward-associated stimuli have also been shown to distract attention when competing with salient and goal-relevant stimuli (Anderson, Laurent, & Yantis, 2011a). As a consequence of these observations, another mechanism of attentional selection was proposed involving past experiences (also termed “history” effects; Awh et al., 2012), and the role of memory processes in the deployment of attentional resources was emphasized (e.g., Hutchinson & Turk-Browne, 2012; Kiyonaga & Egner, 2013; Olivers, Peters, Houtkamp, & Roelfsema, 2011; Zhao et al., 2013). In this context, a strong line of research emerged that reports effects of “reward history” (Anderson, 2013) on attention and debates their relation to, and potential independence from, classical mechanisms known to guide attention (volition/goals, physical salience) and other forms of history effects known to modulate attentional priority (“selection history”; Awh et al., 2012).

In short, this research demonstrates that humans build associations between irrelevant contextual stimuli and the more-or-less rewarding experience they are connected to. Importantly, these value-signaling stimuli have the potential to modulate attentional priority and influence subsequent actions (Anderson, 2016, 2017; Failing & Theeuwes, 2017b). Reward history competes with other factors for attentional priority and robustly counteracts goal-directed attention (Anderson & Halpern, 2017; Failing, Nissens, Pearson, Le Pelley, & Theeuwes, 2015; Failing & Theeuwes, 2017a; Le Pelley, Pearson, Griffiths, & Beesley, 2015; Pearson, Donkin, Tran, Most, & Le Pelley, 2015). Value-driven attentional selection is also found if subjects are informed about the potentially distracting effect, even if subjects are rewarded to ignore the valuable distractors and have to search for a highly salient target stimulus (Failing & Theeuwes, 2017a; Le Pelley et al., 2015). These findings represent strong evidence that VDAC cannot be explained in terms of classical mechanisms of attentional selection (goal-relevance or physical salience). They suggest that after repeated pairing of stimuli with reward, value-signaling stimuli draw attention in an automatic, habit-like manner against willful control and full awareness of potentially adverse outcomes. These observations are striking because they imply that humans learn relevant information incidentally, and that this information then guides attention automatically (Anderson, 2016) and cannot easily be modified even if the brain’s calculation of attentional priority is adverse in the current context (Failing & Theeuwes, 2017a).

Research focusing on value-driven attentional biases helps to explain how our brain calculates priorities to overcome its limited attentional resources. Recent findings shape the picture that past rewarding experiences impact present attentional selection just like current goals and physical salience. In addition, previously searched but unrewarded stimuli are also capable of influencing attention. Such search history effects on attentional selection and their relation to effects of reward history on attention have received less notice. It was debated whether value dependency of attentional selection is truly driven by value or by a more general process like search history of previous target stimuli. Sha and Jiang (2016) demonstrated that previously searched goal-relevant stimuli influence attentional processes in a subsequent task. The presence of reward-signaling stimuli slowed down responses compared with their absence, but there was no difference toward lower reward in their first experiment or an unrewarded condition in their second experiment. These and other findings confirm that stimuli that appeared simultaneously with nonrewarded target stimuli can bias attention later (Anderson, Chiu, DiBartolo, & Leal, 2017; Sha & Jiang, 2016; Wang, Yu, & Zhou, 2013). On the other hand a number of experiments did not find any search-driven attentional capture (Anderson et al., 2011a, 2014b; Laurent, Hall, Anderson, & Yantis, 2015; Qi, Zeng, Ding, & Li, 2013). It is, however, necessary to separate these effects to get a complete picture of when and how our attentional system is guided by value (see Sha & Jiang, 2016; Anderson & Halpern, 2017, for more details on this debate).

Different approaches have been used to differentiate value-driven effects on attention from other sources of attentional selection (search history, goal, physical salience). A few studies either did not contrast differently valuable stimuli or used an unrewarded experimental condition (Anderson, Faulkner, Rilee, Yantis, & Marvel, 2013a; Anderson, Leal, Hall, Yassa, & Yantis, 2014b; Sali, Anderson, & Yantis, 2014); in these cases, it is impossible to determine the nature of the observed effects. Most frequently, gradual manipulations of value, typically high and low monetary feedback, were compared. A number of studies failed to find a difference in distraction by stimuli associated with high and low reward (e.g., Anderson et al., 2011a, 2013b; Anderson & Yantis, 2012; Laurent et al., 2015), which is critical when attentional capture is interpreted as value driven. Sometimes researchers included a separate control experiment in their design to rule out alternative explanations when a gradual modulation of attention by reward was not observed or when there was no difference compared with distractor-absent trials (Anderson et al., 2011a, 2012, 2014a; Laurent et al., 2015; Qi et al., 2013). It should be noted, however, that in some of these control experiments, sample sizes were relatively small (between n = 10 and n = 20). In these studies, previously searched but unrewarded stimuli did not impact attention allocation (Anderson et al., 2011a, 2014a; Laurent et al., 2015; Qi et al., 2013) or working memory performance (Gong & Li, 2014), whereas some researchers reported search-history-driven distraction in subsequent visual search (Wang et al., 2013).

Stronger evidence for the value-based nature of the effect comes from a number of studies that observed value-driven attentional capture independent of potential search history effects. For example, in some studies, the magnitude of value associated with stimuli was gradually related to attentional capture caused by these stimuli (Anderson & Halpern, 2017; Anderson et al., 2011b; Anderson & Yantis, 2012; Jiao, Du, He, & Zhang, 2015). Further, a few studies have contrasted a rewarded with an unrewarded feedback condition in a within-subjects design (Anderson, 2015a; Failing & Theeuwes, 2014; Mine & Saiki, 2015; Pool, Brosch, Delplanque, & Sander, 2014; Sha & Jiang, 2016). These studies demonstrated VDAC by colored stimuli (Failing & Theeuwes, 2014; Mine & Saiki, 2015), spatial location (Anderson, 2015a), as well as nonspatial pictorial scenes (Failing & Theeuwes, 2015). One study modulated motivation within subject and used different training methods (Pavlovian conditioning) as well as primary reward (food odor; Pool et al., 2014). However, sometimes VDAC reflected a combined effect of physical salience and value (Mine & Saiki, 2015). In other cases, goal-driven attentional processes could at least partly account for the observed attentional capture (Failing & Theeuwes, 2014, 2015). Thus, search-driven and value-driven effects on attention were directly contrasted in these studies, while VDAC was not always viewed in isolation from other mechanisms of attentional selection.

Finally, a different approach in distinguishing search and reward history effects on attention should be mentioned. Following evidence of a previous study showing blunted VDAC in depressed subjects (Anderson et al., 2014b), researchers compared depressed and healthy participants in two separate experiments. Both groups showed attentional capture by previously searched targets, but only the healthy group developed an attentional bias toward previously rewarded targets (Anderson et al., 2017). This was interpreted in terms of a qualitative distinction between the way internal and external reward signals shape attention. This result contradicts other findings, including the latest replication of VDAC in a larger sample (Anderson & Halpern, 2017) where healthy volunteers did not show signs of attentional capture after training without monetary feedback. This could be due to differences in the length of training and highlights the importance of reporting and relating training effects to attentional capture in subsequent tasks.

Besides the important issue concerning the nature of VDAC effects, there are other aspects within this growing research field that are critically discussed. One issue is related to small sample sizes and a potential lack of power (Anderson & Halpern, 2017; Sha & Jiang, 2016). The two studies that addressed this by examining VDAC in larger samples (Anderson & Halpern, 2017: N = 40; Sha & Jiang, 2016: N = 24 + 24) show contradictory results. Another issue concerns the counterintuitive finding that many studies reporting a significant modulation of attention by previously rewarded stimuli in a separate test phase did not find training effects (Anderson, 2015a, b; Anderson et al., 2011b, 2012, 2013a, b, 2014b; Mine & Saiki, 2015; Roper, Vecera, & Vaidya, 2014; Wang et al., 2013), while in other experiments value-driven attentional capture was evident in the training (Anderson, Laurent, & Yantis, 2014a; Anderson & Yantis, 2012; Failing & Theeuwes, 2014; Sha & Jiang, 2016) and in some publications results from the training phase were not reported (Anderson et al., 2011a; Jiao et al., 2015; Qi et al., 2013; Sali et al., 2014). These inconsistencies need to be considered when conclusions are drawn about training effects on subsequent attentional selection (see also Sha & Jiang, 2016).

In summary, these findings strongly indicate that value influences subsequent attentional selection, competing with other sources of attentional selection. However, despite the growing interest in this research field, several inconsistencies in the reported results still exist, which make it difficult to evaluate the nature and the underlying processes of VDAC. In the current study, we aimed to shed light on the value-based nature of VDAC. Therefore, we adapted the classical VDAC paradigm (see also Anderson et al., 2011a) by including an unrewarded condition in the training and test phase. This design allows a clear distinction of reward-driven attentional processes from other sources guiding attention (physical salience, goals, search history). To our knowledge, different nuances of value as well as a searched but unrewarded condition have never been implemented in a single experiment for direct comparison. This is advantageous because it facilitates direct comparisons between different magnitudes of value as well as a potentially qualitatively different unrewarded condition, which helps to distinguish the nature of the observed effects. To allow a reliable comparison between these conditions, we examined a much larger number of participants than commonly used in VDAC research. We hypothesized that both monetary feedback and successful response selection would bias attention, but that the delivery of generalized extrinsic rewards would yield comparably higher attentional priority. To preview the results, we found compelling evidence for the value-driven nature of the capture effect, while VDAC did not clearly vary with the magnitude of reward, and searched stimuli did not impair task performance.

Method

Ethical statement

The experiment was approved by the ethical review committee of the Medical Faculty of the Otto-von-Guericke University Magdeburg, in line with the Declaration of Helsinki. All participants were informed about the procedures and gave their written consent prior to the start of the experiment.

Participants

Eighty-six university students took part in the experiment. Due to low overall performance, two subjects were excluded from statistical analysis, resulting in a sample of N = 84 (mean age: 23.95 years, age range: 18–35 years; 53 females and 31 males). Participants had no record of psychiatric or neurological illness (including abuse of nicotine, alcohol, drugs, or medication). All participants had a normal Beck Depression Inventory (BDI-II) score (sum < 14; Hautzinger, Keller, & Kühner, 2006) on the day of measurement as well as normal color vision (Ishihara, 2010) and (corrected to) normal visual acuity. Participants were naïve to the purpose of the study. A subsequent questionnaire revealed that 12 participants were aware of the stimulus–reward associations.

Equipment

Computer-based tasks were run on a Linux machine and displayed on a Samsung S24C450 monitor (24-in., TN panel, 1920 × 1080 pixels, 60 Hz refresh rate) positioned on a desk at a viewing distance of approximately 60 cm. All experimental tasks were programmed and run using MATLAB (The MathWorks, Inc., 2012) and the Psychophysics Toolbox 3.0.12 (Brainard, 1997; Kleiner, Brainard, & Pelli, 2007; Pelli, 1997). Participants were seated in an office chair at a desk in separate, black-painted experimental cabins. Responses were given on a standard keyboard.
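Stimulus sizes in this study are reported in degrees of visual angle, so it may help to see how such a value maps onto screen pixels for the setup described above. The following is a minimal sketch in Python (not the authors' MATLAB code). It assumes a nominal 24-in. diagonal with a 16:9 aspect ratio and square pixels; the true viewable area of the monitor may differ slightly, and the function names are hypothetical.

```python
import math

def screen_width_from_diagonal(diagonal_in, aspect_w=16, aspect_h=9):
    """Physical screen width in cm, derived from the nominal diagonal (assumption)."""
    diagonal_cm = diagonal_in * 2.54
    return diagonal_cm * aspect_w / math.hypot(aspect_w, aspect_h)

def visual_angle_to_pixels(angle_deg, distance_cm, screen_width_cm, screen_width_px):
    """Convert a size in degrees of visual angle to pixels at a given viewing distance."""
    # Size on the screen that subtends angle_deg when centered on the line of sight.
    size_cm = 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)
    return size_cm * screen_width_px / screen_width_cm

# Values taken from the text: 24-in. monitor, 1920 px wide, viewed from about 60 cm.
width_cm = screen_width_from_diagonal(24)
stimulus_px = visual_angle_to_pixels(2.58, 60.0, width_cm, 1920)
print(f"screen width ~{width_cm:.1f} cm, 2.58 deg ~{stimulus_px:.0f} px")
```

Because the viewing distance is only approximate, the resulting pixel values should be read as rough guides rather than exact stimulus dimensions.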

General procedure

The entire experimental session took about 2 hours. After giving written informed consent, participants completed a test for color deficiency (Ishihara, 2010), and a screening for acute depressive symptoms (Hautzinger et al., 2006). After completion of the training phase, participants were asked to answer additional questionnaires and perform cognitive tests. This was followed by the test phase of the VDAC task. Training and test trials were explained and practiced for 30 or 15 trials, respectively, directly prior to the start of the tasks. After completion of the test session, subjects answered a short questionnaire assessing the awareness of the reward–color associations (following Anderson et al., 2011a).

Value-driven attentional capture (VDAC) task

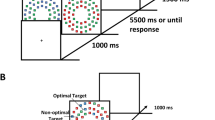

We modified the VDAC task used by Anderson, Faulkner, and colleagues (2013a) to first train stimulus–reward associations in an incidental learning task and later measure distraction from goal-driven and salience-driven attention by previously encountered rewarded and unrewarded stimuli (see Fig. 1). Importantly, an unrewarded (“neutral”) condition was added to both phases of the task to distinguish effects of reward and search history on allocation of attention.

Fig. 1 Sequence of events of the training phase (a) and test phase (b). (a) Participants searched for a green, red, or dark blue colored circle surrounding a horizontal or vertical target line and reported its orientation. Correct answers were followed by a high reward (10 cents), low reward (2 cents), or a neutral feedback (e t0 C+n0). The reward delivery was probabilistic (20% vs. 80%). (b) In the test phase, participants searched for the different shape (square among circles or circle among squares) and reported the orientation of the white target line. In 75% of trials, equally distributed over conditions (high, low, no reward), one distractor was presented among the nontarget shapes; in the other 25%, distractors were absent. In the test phase, neither reward nor performance feedback was given. (Color figure online)

Materials and stimuli

All stimuli of the experimental tasks were presented against a gray background. The search array consisted of six colored forms (red, green, blue, magenta, cyan, orange, yellow, lilac or white—each 2.58° × 2.58° visual angle) placed on an imaginary circle with a radius of 5°. The colors (red, green, blue) associated with the experimental conditions (high reward, low reward, neutral) were counterbalanced across participants (12 subjects per six randomization groups) to control for potential differences in perceptual salience. Inside each form, a white line was presented. One of the lines had either a horizontal or vertical orientation and represented the target stimulus participants had to search for. The other lines were randomly presented at different angles (rotation by 45° counterclockwise and clockwise).
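The counterbalancing of the three experimental colors across the three feedback conditions yields six possible mappings, one per randomization group. A minimal Python sketch of how such groups could be enumerated is given below. The function names and the modulo-based group assignment are illustrative assumptions, not the procedure reported by the authors.

```python
from itertools import permutations

COLORS = ("red", "green", "blue")
CONDITIONS = ("high reward", "low reward", "neutral")

def color_assignments():
    """Return all six condition-to-color mappings (one per randomization group)."""
    return [dict(zip(CONDITIONS, perm)) for perm in permutations(COLORS)]

def assignment_for(participant_index):
    """Hypothetical rule: cycle through the six groups in order of enrollment."""
    groups = color_assignments()
    return groups[participant_index % len(groups)]

for group_number, mapping in enumerate(color_assignments(), start=1):
    print(group_number, mapping)
```

Across the six mappings, each color serves as the high-reward, low-reward, and neutral color equally often, which is what allows perceptual salience differences between colors to average out.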

During training, the search display consisted of six circles. In each trial, a target stimulus was shown, and the circle surrounding the target was presented in one of the reward (high/low reward feedback) or neutral (no reward feedback) condition colors. During the test phase of this experiment, the search array consisted in half of the trials of five colored circles and one square, or, in the other half of the trials, of five squares and one circle, with the different-looking form enclosing the target stimulus. On distractor-present trials, one of the five equal geometrical forms was presented in one of the experimental colors.

Procedure

Figure 1 illustrates the task. Both parts of the task started with a white fixation cross (1.05° × 1.05° visual angle) presented for a variable interstimulus interval (ISI) of 400, 500, or 600 milliseconds (ms). Afterwards, the search array was shown until a response was made or until response-time maximum (1,200 ms during training and 1,500 ms during test phase) elapsed. This was followed by the presentation of the fixation cross for 1,000 ms during training and 800 ms during test phase. Only during training, a feedback screen was presented for 1,500 ms. The end of a trial in the test phase was marked by the appearance of an empty screen for 200 ms.

During training, monetary feedback was used to incidentally establish unconscious color-reward associations (high reward, low reward). An unrewarded feedback condition was introduced to assess the influence of searching for a neutral target context. In the training phase, participants were instructed to search for the horizontal or vertical line in either the red, green, or blue colored circle and report its orientation as fast and accurately as possible. Participants received feedback after each trial. In the reward conditions, they earned either 2 euro cents (+2 cents; low-reward condition) or 10 euro cents (+10 cents; high-reward condition). In the neutral condition, a screen with a nonsense letter string was presented (i.e., e t0 C+n0). Additionally, the sum of the total money gained was presented on-screen. The letters presented for the neutral condition consisted of a mix of letters used for the reward feedback (i.e., mst:meGmsuae rEou). Answers that were incorrect or too slow were presented with a feedback indicating a mistake (i.e., Fehler—the German word for error). To reduce the likelihood that the subjects would realize the color–reward associations, we used a probabilistic reward scheme. Trials in the high-reward condition were only rewarded with 10 cents in 80% of the trials and with 2 cents in 20% of the trials. For the low-reward condition, the scheme was reversed.

Participants were instructed that they could win a maximum of 14 euros during the first phase of the experiment and that they would receive half of their winnings in addition to their basic compensation of 14 euros right after the experiment. The training phase consisted of 120 trials per block and per experimental condition (high reward, low reward, neutral feedback), resulting in 360 trials in total.
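The probabilistic reward scheme and the resulting expected earnings can be illustrated with a short Python sketch. It assumes that every trial is answered correctly and in time, and that reward draws are independent across trials; the names `draw_reward` and `expected_reward` are hypothetical and do not come from the original experiment code.

```python
import random

# (likely outcome, unlikely outcome) in euro cents for each rewarded condition
REWARD_CENTS = {"high": (10, 2), "low": (2, 10)}
P_LIKELY = 0.8  # probability of the likely outcome, as described in the text
TRIALS_PER_CONDITION = 120

def draw_reward(condition, rng):
    """Sample the feedback (in cents) for one correct trial."""
    if condition == "neutral":
        return 0  # neutral trials show a nonsense string instead of money
    likely, unlikely = REWARD_CENTS[condition]
    return likely if rng.random() < P_LIKELY else unlikely

def expected_reward(condition):
    """Expected payout (in cents) of one correct trial."""
    if condition == "neutral":
        return 0.0
    likely, unlikely = REWARD_CENTS[condition]
    return P_LIKELY * likely + (1.0 - P_LIKELY) * unlikely

expected_total_cents = sum(
    TRIALS_PER_CONDITION * expected_reward(c) for c in ("high", "low", "neutral")
)
print(f"expected total with perfect performance: {expected_total_cents / 100:.2f} euros")
```

Under these assumptions, the expected total for flawless performance is roughly 14.40 euros, which is close to the maximum of 14 euros communicated to participants.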

The test phase allowed the comparison of four experimental conditions: high-reward and low-reward distractor, target-search-history distractor, and no distractor. In the test phase of the task, participants were informed to search for the different geometrical form in the search array and again report the orientation of the enclosed target line. No feedback was presented. The same colors were used for both phases of the experiment, but in the test phase, the experimental colors from the training phase always appeared in one of the nonsalient shapes, never in the location of the physically salient target shape. The test phase consisted of four blocks with 64 trials in each block, resulting in 64 trials per experimental condition (high reward, low reward, neutral feedback, no distractor) and 256 trials in total.

Experimental conditions in both phases were distributed equally over blocks and the total amount of trials. Within each block and for each experimental condition, target side (left/right), distractor side (left/right), type of object screen (circle among squares or vice versa), and target angle (vertical/horizontal) were counterbalanced. Trials were presented pseudorandomized per block so that the same experimental condition and type of target did not appear successively for more than three trials. Participants were equally distributed over the six color randomization groups to control for salience-driven differences due to perceptual differences of the three target colors.
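The run-length constraint described above (no more than three successive trials of the same kind) can be sketched with simple rejection sampling. This Python example is an assumption about one possible implementation, not the authors' procedure. It constrains only the condition label of a test-phase block (four conditions, 16 trials each), and all names are hypothetical.

```python
import random

def max_run_length(sequence):
    """Length of the longest run of identical consecutive items."""
    longest = current = 0
    previous = object()  # sentinel that equals no trial label
    for item in sequence:
        current = current + 1 if item == previous else 1
        longest = max(longest, current)
        previous = item
    return longest

def pseudorandom_block(conditions, trials_per_condition, max_run=3,
                       rng=None, max_tries=10000):
    """Shuffle a balanced block until no condition repeats more than max_run times."""
    rng = rng or random.Random()
    trials = [c for c in conditions for _ in range(trials_per_condition)]
    for _ in range(max_tries):
        rng.shuffle(trials)
        if max_run_length(trials) <= max_run:
            return list(trials)
    raise RuntimeError("no valid trial order found within max_tries")

# One test-phase block: 64 trials, 16 per condition (seed chosen arbitrarily).
block = pseudorandom_block(["high", "low", "neutral", "absent"], 16,
                           rng=random.Random(1))
print(block[:12])
```

Rejection sampling is easy to verify but can become slow for long blocks with few conditions; a constructive algorithm would be preferable in that case. Counterbalancing of target side, distractor side, display type, and target orientation is not covered by this sketch.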

Additional measures

Working memory

In addition to the main experimental tasks, participants performed a color-change-detection task prior to the training phase to examine visual working memory capacity (WMT; Luck & Vogel, 1997). Subjects perceived four, six, or eight colored squares randomly distributed on the screen to measure performance at low, medium, and large working memory load. Afterwards, one square was presented in one of the previous stimulus locations. Participants were instructed to detect the change of color and press the left arrow for change and right arrow for no change in color.

Impulsivity and depression

Previous findings of VDAC were more robust in more impulsive and less depressed populations, which is why it has been advised to measure these accessory characteristics and potentially use them as a covariate (see study sample considerations by Anderson & Halpern, 2017). Participants performed a paper–pencil test to measure trait impulsivity (German translation of the Barratt Impulsiveness Scale–Short Version; Meule, Vögele & Kübler, 2011). To check inclusion criteria and to build a score of acute depressive symptoms, the BDI-II (Hautzinger et al., 2006) was performed prior to the experimental tasks.

Awareness

After all measurements, subjects answered a short questionnaire assessing the awareness of color–reward associations. Participants were asked which statement best described the first part of the experiment, in which they could win money (training phase). They had to choose between the following options: (1) One color was worth more than the others, and participants were then asked to pick the reward color out of a list of all nine colors used in the training phase. (2) One of the white lines was worth more than the other, and participants were then asked to indicate which one (vertical/horizontal). (3) Reward for colored circles was equivalent overall, but one color was worth more when presented together with a vertical versus a horizontal line. (4) The amount of money that could be gained was independent of color and orientation of the stimuli. Afterwards, subjects indicated how certain they were of their answer (I am sure/I am not sure, but I have a hypothesis/I guessed).

A priori power calculations

Value-driven attention studies have frequently failed to find differences between the low-reward distractor condition and other experimental conditions, which was considered to be due to underpowering (Anderson & Halpern, 2017) and is important when interpreting results in terms of being value driven. Power calculations were performed a priori on previous data using G*Power 3 (Faul, Erdfelder, Lang, & Buchner, 2007) to determine an appropriate sample size for the experiment, setting α = .05 and a minimum power (1 − β) of .85. We explored data from the test phase of the experiment focusing on contrasts indicating a difference between value and search history (high vs. neutral distractor) as well as gradual changes depending on the magnitude of reward (high vs. low distractor). Expecting a small effect size of f = 0.10, the first analysis indicated that a sample of N = 80 participants would be sufficient to find a reliable difference between the high-reward and neutral feedback conditions (ηp2 = .072; r = .89, p [two-tailed] < .001), with an actual power (1 − β) of 0.85. The second calculation suggested a sample size of N = 48 with an estimated power (1 − β) of 0.97 for the high and low reward contrast (f = 0.10; ηp2 = .099; r = .92, p [two-tailed] < .001). Consistently, Anderson and Halpern (2017) found a significant difference between high and low reward conditions in a sample of 50 subjects when reanalyzing data from two previous experiments.

Results

Training phase

Reaction times for correct trials of each subject were cleaned separately for the three experimental conditions by removing values more than three standard deviations above or below the individual mean. In total, 0.6% of responses were removed from the data. Two participants were excluded from further statistical analysis because of low performance in one of the experimental phases (less than 70% correct responses). In the remaining 84 subjects, accuracy in the training phase was 89.0%. The six color-randomization groups were fully counterbalanced in the sample. Reaction times for correct responses and accuracy of responses were analyzed using repeated-measures analysis of variance (ANOVA), including within-subjects factors feedback type and experimental block. Planned post hoc pairwise comparisons were calculated via the Bonferroni method to control for Type I error rate in multiple comparisons.
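The reaction time cleaning step can be illustrated with a small Python sketch that would be applied once per participant and condition. It assumes the sample standard deviation and a single (non-iterative) trimming pass, neither of which is specified in the text; the function name and the example values are made up for illustration.

```python
from statistics import mean, stdev

def trim_reaction_times(rts, n_sd=3.0):
    """Keep reaction times within n_sd standard deviations of the mean."""
    center = mean(rts)
    spread = stdev(rts)  # sample SD (assumption; the text does not specify)
    lower, upper = center - n_sd * spread, center + n_sd * spread
    return [rt for rt in rts if lower <= rt <= upper]

# Made-up correct-trial reaction times (ms) for one participant and condition.
example = [640, 650, 660] * 10 + [3000]
trimmed = trim_reaction_times(example)
print(len(example), "->", len(trimmed), "trials kept")
```

Because the mean and standard deviation are themselves inflated by extreme values, this criterion is conservative and tends to remove only a small share of trials, in line with the low removal rate reported above.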

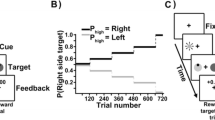

We found a significant main effect for the factor feedback type on response times, F(1.80, 149.68) = 5.14, p = .009, ηp2 = .06. Post hoc comparisons using Bonferroni correction indicated that reaction times for high-reward feedback (M = 669 ms, SD = 7 ms) were significantly shorter than response times in nonreward feedback trials (M = 688 ms, SD = 8 ms, p = .005). No other condition significantly differed from another after correcting for multiple comparisons (p > .1; low-reward feedback: M = 678 ms, SD = 8 ms). We also observed a significant main effect for block on response times, F(1.61, 133.70) = 97.47, p < .001, ηp2 = 0.54. Post hoc tests indicated that participants responded faster with more experience (Block 1: M = 714 ms, SD = 8 ms; Block 2: M = 672 ms, SD = 7 ms; Block 3: M = 650 ms, SD = 7 ms; all pairwise comparisons: p < .001). Importantly, we observed a significant interaction between feedback type and experimental block, F(4, 332) = 5.02, p = .001, ηp2 = .06, with a steeper decline for reward conditions. To ensure that conditioning was sufficient at the end of the learning phase, we conducted a separate analysis only for the last block of the training phase. Factor feedback type was significant in the last block, F(1.75, 145.41) = 8.50, p = .001, ηp2 = .09. Post hoc comparisons of response times revealed significant differences between high-reward feedback trials (M = 635 ms, SD = 8 ms) and nonreward feedback (M = 664 ms, SD = 10 ms, p = .001) as well as between high-reward feedback trials and low-reward feedback (M = 649 ms, SD = 8 ms, p = .039). These findings suggest that training was long enough to establish an attentional bias for colors associated with a higher reward. However, the lower reward feedback only differed from higher monetary feedback but not from the nonreward feedback type. Figure 2 illustrates reaction times (a) and accuracy (b) over the time course of the training phase.

Unlike many other studies on VDAC, we found similar results for accuracy of responses. A repeated-measures ANOVA for percentage of correct answers showed a significant main effect for feedback type, F(2, 166) = 4.84, p < .01, ηp2 = .06. Post hoc comparisons reflected results for response times: Performance in highly rewarded trials (M = 90.1% correct responses, SD = 0.7%) was more accurate than in trials followed by no reward (M = 87.9%, SD = 0.9%, p = .01), but no other contrast yielded statistical significance when corrected for multiple comparisons (p > .1; low-reward feedback: M = 88.9%, SD = 0.8%). There was also a main effect of block, F(1.58, 130.99) = 102.36, p < .001, ηp2 = .55, on accuracy of responses. Pairwise Bonferroni-corrected post hoc tests showed that performance improved over all blocks (Block 1: M = 83.9%, SD = 0.9%; Block 2: M = 90.6%, SD = 0.7%; Block 3: M = 92.5%, SD = 0.6%; all pairwise comparisons: p < .001). Most importantly, the interaction between feedback type and block also reached significance for accuracy, F(3.25, 269.72) = 3.32, p < .05, ηp2 = .04. Figure 2b indicates that percentage of correct responses reflects value-driven attentional bias later in time compared with response times. As for response times, we conducted an additional ANOVA for the last block, which demonstrated that the factor feedback type was significant at the end of the training phase, F(1.66, 137.72) = 13.63, p < .001, ηp2 = .14. Post hoc tests revealed that accuracy of responses was lower in the last training block for nonrewarded trials (M = 90.1%, SD = 1.0%) compared with both high-reward (M = 94.1%, SD = 0.6%, p < .001) and low-reward trials (M = 93.3%, SD = 0.6%, p = .002), while we did not observe a reliable difference between the two reward conditions.

Test phase

Average accuracy in the test phase was 90.7%. Reaction time data was cleaned using the same procedure as for the training-phase data. In total, 0.8% of the responses were removed from the test-phase data. Repeated-measures ANOVAs, with the factors condition and block, were calculated for both reaction times and accuracy.

We observed a significant main effect of experimental condition, F(3, 249) = 4.681, p < .01, ηp2 = .05, and block, F(2.63, 218.30) = 14.35, p < .001, ηp2 = .15, on response time. Bonferroni-corrected post hoc comparisons revealed that stimuli previously associated with high reward (M = 729 ms, SD = 9 ms) prolonged responses compared with distractor-absent trials (M = 718 ms, SD = 9 ms, p = .024) and compared with trials with previously searched but unrewarded distractors (M = 717 ms, SD = 9 ms, p = .008). The other pairwise comparisons indicated that there was no statistically reliable difference between the contrasted conditions (p > .1; low-reward distractor: M = 722 ms, SD = 9 ms). Post hoc analysis of responses over time suggests that participants remained largely motivated throughout the unrewarded test phase, as reaction times became faster over the four blocks (1 vs. 2: p = .01; 1 vs. 3: p = .002; 1 vs. 4: p < .001; 2 vs. 3: p > .1; 2 vs. 4: p = .007; 3 vs. 4: p = .029). There was no interaction between block and experimental condition, F(7.81, 648.59) = 1.33, p > .1, ηp2 = .02, which indicates that the effects remained relatively stable throughout the experiment. In other words, subjects were not able to suppress and did not learn to overcome distraction from stimuli signaling a high reward, although reward signals were irrelevant for the task and although feedback was omitted. Figure 3 displays differences in response times per condition. To investigate more closely potential capture effects of the lower reward condition, we conducted a second analysis and estimated effects for the first experimental block of the test phase separately. This analysis showed a main effect for the experimental condition, F(2.58, 214.26) = 6.15, p = .001, ηp2 = .07.
Similarly to the ANOVA including all blocks, high-reward distractors (M = 748 ms, SD = 11 ms) prolonged responses compared with distractor-absent trials (M = 728 ms, SD = 10 ms, p = .034) and compared with trials including a previously nonrewarded color stimulus (M = 717 ms, SD = 8 ms, p = .008), as indicated by post hoc comparisons. Post hoc tests further revealed that distraction was also stronger in trials containing a low-reward distractor (M = 742 ms, SD = 11 ms, p = .044) compared with trials containing a previously searched but unrewarded distractor. These findings mirror the effects we observed at the end of the training phase: Reaction times are influenced by stimuli associated with value, which is not simply an effect of search history. However, we found no reliable evidence that the magnitude of monetary feedback was relevant in the computation of attentional priority.

Comparable to most other studies, we did not find a significant effect of experimental condition on accuracy of responses, F(3, 249) = 1.39, p > .1, ηp2 = .02, and no interaction with block, F(7.49, 621.80) = 0.82, p > .1, ηp2 = .01, during the test phase. The factor block reached significance, F(2.69, 223.07) = 6.46, p = .001, ηp2 = .07. Post hoc comparisons of the four experimental blocks indicated that accuracy of responses increased throughout the test phase (1 vs. 2: p < .1; 1 vs. 3: p = .029; 1 vs. 4: p = .003; all other pairs: p > .1), which indicates that participants remained motivated for the task.

Correlations between training and test phase

We were interested in whether learning in the training phase, and the resulting bias of attention toward value-signaling stimuli, was related to the VDAC observed in the test phase of the experiment. As a measure of attentional bias during training, we calculated two value-based difference scores for response times in the training phase by subtracting the mean of each reward condition from the mean of the nonreward condition for each subject. The magnitude of VDAC in the test phase was calculated by computing the difference between rewarded trials and the neutral condition, as well as between rewarded trials and distractor-absent trials. Afterwards, we conducted correlation analyses comparing conditions that were calculated by subtraction of the same value.

Attentional bias resulting from stimuli signaling a high reward during training correlated positively with attentional capture driven by high-reward distractors compared with distractor-absent trials (r = .22, p [two-tailed] < .05) and compared with nonrewarded trials (r = .33, p [two-tailed] < .01). The same pattern was observed for the low-reward condition: The difference score during training correlated positively with VDAC reflecting the difference toward distractor-absent trials (r = .26, p [two-tailed] < .05) and previously searched but not rewarded trials (r = .35, p [two-tailed] = .001). This finding supports the view that reward-signaling stimuli affect performance beneficially when they match current goals, but that they also misguide attention when they are presented in competition with goal-relevant or physically salient information. Conducting the same correlation analysis for percentage of correct answers did not yield significant results.

Correlations with additional measures

We correlated the magnitude of attentional capture in the test phase of the experiment with working memory capacity and self-ratings of impulsiveness and depression symptoms. Unlike other studies, feedback type influenced both reaction times and accuracy of responses in our experiment. Therefore, we analyzed difference scores for both measures. Attentional capture reflected by response times did not correlate with the additional measures. Instead, we found that difference scores built from accuracy of responses correlated with impulsiveness: VDAC defined as the difference between high-reward and distractor-absent trials (r = −.24, p [two-tailed] < .05), as well as between high-reward and not-rewarded trials (r = −.26, p [two-tailed] < .05), was related to impulsiveness in our sample. Participants who rated themselves as more impulsive also committed more value-driven mistakes in the test phase. Other correlations with working memory and depression were not significant.

Discussion

Observations of attentional biases arising from formerly searched and previously rewarded stimuli gave rise to new perspectives on mechanisms of attentional selection. Traditional models of attentional selection assuming a dichotomy between salience-driven (bottom-up) and goal-driven (top-down) attention cannot convincingly explain why formerly experienced stimuli cause attentional capture under some circumstances. Such history effects have been conceptualized as an additional mechanism of the human attentional system (Anderson, 2013; Awh et al., 2012). Whether attentional biases caused by reward history and by search history share a common mechanism is still a matter of debate (Anderson et al., 2017; Awh et al., 2012; Sha & Jiang, 2016; Stankevich & Geng, 2014).

We examined whether previously rewarded stimuli guide attention in later visual search when competing with other physically salient and goal-relevant stimuli. Our experimental design allows a direct comparison both of attentional capture by different magnitudes of reward and of attentional capture by value-signaling versus unrewarded stimuli. To our knowledge, this design has never been used before, and our study also provides the largest sample investigating VDAC effects so far. We expected that both successfully searched but not rewarded stimuli and reward-associated stimuli would cause distraction in later visual search, but that formerly rewarded items would cause a stronger bias.

Value-signaling stimuli captured attention in both phases of our experiment. We demonstrated that prioritization of value signals in visual search can both impede and facilitate performance, depending on their congruency with current goals. Unlike a number of other studies (e.g., Anderson, 2015a, b; Anderson et al., 2011a, b, 2012, 2013a, b, 2014b; Anderson & Halpern, 2017; Anderson & Yantis, 2012; Gong & Li, 2014; Jiao et al., 2015; Mine & Saiki, 2015; Qi et al., 2013; Roper et al., 2014; Sali et al., 2014; Wang et al., 2013), the prospect of reward influenced both response times and error rates beneficially during the training of stimulus–reward associations. This finding contributes to the evidence that the prioritization of value signals in attentional selection does facilitate performance (Anderson et al., 2014a; Anderson & Yantis, 2012; Failing & Theeuwes, 2014; Kiss et al., 2009; Krebs, Boehler, Egner, & Woldorff, 2011; Kristjansson, Sigurjonsdottir, & Driver, 2010; Sha & Jiang, 2016). Prioritization of reward emerged gradually over time, but was only reliable for the higher reward condition. A correlation analysis revealed that participants who developed a larger attentional bias during training were also more distracted in the later test phase, which was the case for both reward feedback conditions. This finding implies that the presented effects for the test phase are truly induced by a learning process in the training phase.

In the test phase of the experiment, the participants’ attention was preferentially drawn to stimuli they had experienced as a signal for potential reward. The observed slowing of response times in the test phase in the presence of a high-reward distractor was undoubtedly driven by value and not by the history of target search, as comparisons with searched but unrewarded as well as distractor-absent trials were significant. VDAC for the higher monetary value did not diminish over time. Although reward feedback was omitted in the test phase, and attending reward-signaling stimuli could potentially decrease performance, participants did not learn to ignore the high-reward distractors over the course of the test phase. This finding is in line with observations of other researchers displaying the automatic or habit-like nature of VDAC and its robustness against willful control (Anderson, 2016; Failing et al., 2015; Failing & Theeuwes, 2017a; Le Pelley et al., 2015; Pearson et al., 2015). Our results offer strong evidence for the value-based nature of the attentional capture effect, which seems to independently contribute to the calculation of attentional priority.

Effects for the lower reward condition were present but comparably weaker in our study, as capture by low-reward distractors was only observed in the first block of the test phase, where the capture effect was comparable in the two reward conditions. Although our results suggest that VDAC is truly value driven and we found that smaller rewards can also impact the computation of attentional priorities, the role of the magnitude of value remains less clear. Because at the end of the training phase the prospect of a lower reward did not improve performance, it could be argued that for the lower reward condition, the established stimulus–reward associations were weaker due to insufficient training. We implemented the same training length for all feedback types, which was comparable to other studies reporting VDAC as reviewed by Anderson and Halpern (2017). Specifically, the training procedure was identical to studies that reported no attentional bias during training, but distraction by reward-associated stimuli as a function of the magnitude of value in the test phase (e.g., compare Anderson & Halpern, 2017, Experiment 1). In direct comparison with unrewarded stimuli, the impact of the size of reward on the computation of attentional priority seems less powerful than the type of feedback. Besides the number of repetitions, two other factors could have contributed to these inconsistencies: First, it is a well-documented phenomenon that the actual value of rewards depends on the context they are perceived in (Saez, Saez, Paton, Lau, & Salzman, 2017; Seymour & McClure, 2008; Tremblay & Schultz, 1999). Contrasting higher monetary reward with a relatively low reward in the context of an unrewarded condition may be less rewarding compared with a situation in which a smaller reward itself represents the lowest reference point.
Second, in our experiment, the working memory load was higher compared with other studies on VDAC that reported a difference between the higher and lower reward, as participants had to differentiate and remember one more condition. We can only speculate about the impact of the overall working memory load in our study. However, both attention and working memory are assumed to operate interdependently and to share the same limited resource (Kiyonaga & Egner, 2013). Adding more information could exceed working memory capacity, making it more difficult to form associations in the training phase and also to resolve them in the test phase, because of interferences between the items maintained in memory (Farrell et al., 2016).

Whether the underlying mechanisms of reward and search history are shared or critically different, and how the different mechanisms of attentional selection are computed toward guiding attention is currently debated (Anderson et al., 2017; Awh et al., 2012; Sha & Jiang, 2016; Stankevich & Geng, 2014). Some authors hypothesize two distinct processes, one being based on reward feedback processing, the other being the result of repeated stimulus selection, which is thought to increase orientation towards selected stimuli (Anderson et al., 2017). Our findings support the idea that reward-signaling information has a comparably higher potential in influencing encoding of stimulus–reward associations and attentional selection because of its meaning for an organism. Our observations also suggest that factors like physical salience, the number of repetitions, and the motivational impact of the experiences all contribute to the strength of the stimulus–reaction associations and to its robustness against reward omission. Value-signaling stimuli seem to represent a particularly potent class of information in (mis)guiding attention and our findings indicate that valuable stimuli operate independently from other stimuli also carrying motivationally relevant information as well as from the classical mechanisms of attentional selection (see, e.g., Stankevich & Geng, 2014).

An interesting additional perspective on this topic comes from theories of perceptual learning. Attentional bias for task-irrelevant stimuli is herein explained by reinforcement signals resulting from attentional orientation toward task-relevant features and successful task performance and the simultaneous perception of task-irrelevant features (Seitz & Watanabe, 2005; Watanabe & Sasaki, 2015). Effects of search history on attention might develop through the intrinsically rewarding experience of achieving task goals. From this perspective, both search and reward history effects are based on reward learning and thus do not differ fundamentally in the underlying process itself, but rather in the type of motivational state that is induced (intrinsic vs. extrinsic). Positive reactions during achievement of task goals might trigger enhancement of motivational-affective relevance through classical conditioning (Le Pelley et al., 2015) or increase salience (Schultz, 2015) and influence subsequent attentional behavior due to the experience of intrinsic reward. In fact, there is evidence that allocation of attention depends on the subjective, motivational-affective relevance of the stimulus that is transferred during learning (Pool et al., 2014). Neuroimaging studies demonstrate that dopaminergic projection sites like the ventral striatum (VS) respond to a wide range of reinforcers, including monetary reward and performance feedback (Daniel & Pollmann, 2014; Pascucci, Hickey, Jovicich, & Turatto, 2017). Diverse types of reinforcers seem to activate common brain regions (Sescousse, Caldú, Segura, & Dreher, 2013). Some evidence exists for differential processing of monetary and performance feedback. For example, neural activity in the VS differs in relation to intrinsic versus extrinsic motivational states (Daniel & Pollmann, 2010) and the strength of the neural response in the VS varies with the type of reward (Daniel & Pollmann, 2010; Sescousse et al., 2013).
However, evidence for a specialisation was not found in the VS but instead in regions like the dorsal striatum and frontal and posterior cingulate (Pascucci et al., 2017). The question cannot be answered with the present study, but we would like to outline that attentional capture induced by successful visual search and by monetary reward feedback may not differ fundamentally in the way neural representations are computed.

In line with some previous studies (e.g., Anderson & Halpern, 2017; Anderson et al., 2011a, 2014b; Laurent et al., 2015; Qi et al., 2013), in our sample, response times did not reveal attentional capture by previous target-related stimuli that were searched but not rewarded. This is unexpected, because in humans stimuli encountered repeatedly in relation to targets in visual search can affect the computation of attentional priorities. For example, probabilities of events that occur at a certain location are learned and shape attentional selection (Wang & Theeuwes, 2018). The question arises why we did not observe such an influence of search history on attention allocation. One answer may be connected to the number of repetitions needed to build a strong enough association to influence attentional priority. Experiments in which effects of search history occurred used a much higher number of training trials (e.g., Anderson et al., 2017, used 1,008 training trials per four training sessions; Sha & Jiang, 2016, used 384 training trials; compared with 120 unrewarded training trials in the current study). It can be speculated that receiving performance feedback may be less potent in inducing attentional capture and thus may require more repetitions.

Taken together, our findings imply that the value component differentially influences the computation of attentional priority maps compared with other motivationally relevant experiences; in contrast, the magnitude of monetary reward did not clearly modulate attentional selection. We present strong evidence for the value-based nature of the VDAC effect that cannot be explained in terms of a history of target search. Learned stimulus–reward associations capture attention. This can be beneficial, as in the training phase, or impede performance when the acquired attentional bias is inflexibly transferred to new situations in which it no longer matches the task requirements. We conclude that rewarding experiences guide attention in future situations, robustly over time and in competition with other sources of attentional selection.

References

Anderson, B. A. (2013). A value-driven mechanism of attentional selection. Journal of Vision, 13(3), 7. https://doi.org/10.1167/13.3.7

Anderson, B. A. (2015a). Value-driven attentional priority is context specific. Psychonomic Bulletin & Review, 22(3), 750–756. https://doi.org/10.3758/s13423-014-0724-0

Anderson, B. A. (2015b). Value-driven attentional capture is modulated by spatial context. Visual Cognition, 23(1/2), 67–81. https://doi.org/10.1080/13506285.2014.956851

Anderson, B. A. (2016). The attention habit: How reward learning shapes attentional selection. Annals of the New York Academy of Sciences, 1369(1), 24–39. https://doi.org/10.1111/nyas.12957

Anderson, B. A. (2017). Going for it: The economics of automaticity in perception and action. Current Directions in Psychological Science, 26(2), 140–145.

Anderson, B. A., & Halpern, M. (2017). On the value-dependence of value-driven attentional capture. Attention, Perception, & Psychophysics, 79(4), 1001–1011. https://doi.org/10.3758/s13414-017-1289-6

Anderson, B. A., & Yantis, S. (2012). Value-driven attentional and oculomotor capture during goal-directed, unconstrained viewing. Attention, Perception, & Psychophysics, 74(8), 1644–1653. https://doi.org/10.3758/s13414-012-0348-2

Anderson, B. A., Laurent, P. A., & Yantis, S. (2011a). Value-driven attentional capture. Proceedings of the National Academy of Sciences, 108(25), 10367–10371. https://doi.org/10.1073/pnas.1104047108

Anderson, B. A., Laurent, P. A., & Yantis, S. (2011b). Learned value magnifies salience-based attentional capture. PLOS ONE, 6(11), e27926. https://doi.org/10.1371/journal.pone.0027926

Anderson, B. A., Laurent, P. A., & Yantis, S. (2012). Generalization of value-based attentional priority. Visual Cognition, 20(6), 647–658. https://doi.org/10.1080/13506285.2012.679711

Anderson, B. A., Faulkner, M. L., Rilee, J. J., Yantis, S., & Marvel, C. L. (2013a). Attentional bias for nondrug reward is magnified in addiction. Experimental and Clinical Psychopharmacology, 21(6), 499–506. https://doi.org/10.1037/a0034575

Anderson, B. A., Laurent, P. A., & Yantis, S. (2013b). Reward predictions bias attentional selection. Frontiers in Human Neuroscience, 7. https://doi.org/10.3389/fnhum.2013.00262

Anderson, B. A., Laurent, P. A., & Yantis, S. (2014a). Value-driven attentional priority signals in human basal ganglia and visual cortex. Brain Research, 1587, 88–96. https://doi.org/10.1016/j.brainres.2014.08.062

Anderson, B. A., Leal, S. L., Hall, M. G., Yassa, M. A., & Yantis, S. (2014b). The attribution of value-based attentional priority in individuals with depressive symptoms. Cognitive, Affective, & Behavioral Neuroscience, 14(4), 1221–1227. https://doi.org/10.3758/s13415-014-0301-z

Anderson, B. A., Chiu, M., DiBartolo, M. M., & Leal, S. L. (2017). On the distinction between value-driven attention and selection history: Evidence from individuals with depressive symptoms. Psychonomic Bulletin & Review, 24(5), 1–7. https://doi.org/10.3758/s13423-017-1240-9

Awh, E., Belopolsky, A. V., & Theeuwes, J. (2012). Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends in Cognitive Sciences, 16(8), 437–443. https://doi.org/10.1016/j.tics.2012.06.010

Brainard, D. H. (1997). The Psychophysics Toolbox. Spatial Vision, 10, 433–436.

Chun, M. M. (2000). Contextual cueing of visual attention. Trends in Cognitive Sciences, 4(5), 170–178. https://doi.org/10.1016/S1364-6613(00)01476-5

Chun, M. M., & Jiang, Y. (1998). Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive Psychology, 36(1), 28–71. https://doi.org/10.1006/cogp.1998.0681

Corbetta, M., & Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience, 3(3), 215–229. https://doi.org/10.1038/nrn755

Daniel, R., & Pollmann, S. (2010). Comparing the neural basis of monetary reward and cognitive feedback during information-integration category learning. The Journal of Neuroscience, 30(1), 47–55. https://doi.org/10.1523/JNEUROSCI.2205-09.2010

Daniel, R., & Pollmann, S. (2014). A universal role of the ventral striatum in reward-based learning: Evidence from human studies. Neurobiology of Learning and Memory, 114, 90–100. https://doi.org/10.1016/j.nlm.2014.05.002

Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18(1), 193–222. https://doi.org/10.1146/annurev.ne.18.030195.001205

Edelmann, W., & Wittmann, S. (2012). Lernpsychologie: mit Online-Materialien (7., vollständig überarbeitete Auflage) [Learning psychology: With online materials]. Weinheim Basel: Beltz.

Failing, M., Nissens, T., Pearson, D., Le Pelley, M., & Theeuwes, J. (2015). Oculomotor capture by stimuli that signal the availability of reward. Journal of Neurophysiology, 114(4), 2316–2327. https://doi.org/10.1152/jn.00441.2015

Failing, M., & Theeuwes, J. (2017a). Don’t let it distract you: How information about the availability of reward affects attentional selection. Attention, Perception, & Psychophysics, 79(8), 2275–2298. https://doi.org/10.3758/s13414-017-1376-8

Failing, M., & Theeuwes, J. (2017b). Selection history: How reward modulates selectivity of visual attention. Psychonomic Bulletin & Review. https://doi.org/10.3758/s13423-017-1380-y

Failing, M. F., & Theeuwes, J. (2014). Exogenous visual orienting by reward. Journal of Vision, 14(5), 6. https://doi.org/10.1167/14.5.6

Failing, M. F., & Theeuwes, J. (2015). Nonspatial attentional capture by previously rewarded scene semantics. Visual Cognition, 23(1/2), 82–104. https://doi.org/10.1080/13506285.2014.990546

Farrell, S., Oberauer, K., Greaves, M., Pasiecznik, K., Lewandowsky, C. J., & Jarrold, C. (2016). A test of interference versus decay in working memory: Varying distraction within lists in a complex span task. Journal of Memory and Language, 90, 66–87. https://doi.org/10.1016/j.jml.2016.03.010

Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191.

Gazzaniga, M. S., Ivry, R. B., & Mangun, G. R. (2002). Cognitive neuroscience: The biology of the mind (2nd ed.). New York: Norton.

Gong, M., & Li, S. (2014). Learned reward association improves visual working memory. Journal of Experimental Psychology: Human Perception and Performance, 40(2), 841–856. https://doi.org/10.1037/a0035131

Hautzinger, M., Keller, F., & Kühner, C. (2006). BDI II—Beck Depressions-Inventar–Manual. Frankfurt: Harcourt Test Services.

Hickey, C., Chelazzi, L., & Theeuwes, J. (2010). Reward changes salience in human vision via the anterior cingulate. Journal of Neuroscience, 30(33), 11096–11103. https://doi.org/10.1523/JNEUROSCI.1026-10.2010

Hutchinson, J. B., & Turk-Browne, N. B. (2012). Memory-guided attention: Control from multiple memory systems. Trends in Cognitive Sciences, 16(12), 576–579. https://doi.org/10.1016/j.tics.2012.10.003

Ishihara, S. (2010). Ishihara's Tests for Colour Deficiency: 24 Plates. Tokyo: Kanehara Shuppan.

Jiang, Y. V., Swallow, K. M., Rosenbaum, G. M., & Herzig, C. (2013). Rapid acquisition but slow extinction of an attentional bias in space. Journal of Experimental Psychology: Human Perception and Performance, 39(1), 87–99. https://doi.org/10.1037/a0027611

Jiao, J., Du, F., He, X., & Zhang, K. (2015). Social comparison modulates reward-driven attentional capture. Psychonomic Bulletin & Review, 22(5), 1278–1284. https://doi.org/10.3758/s13423-015-0812-9

Kastner, S., & Ungerleider, L. G. (2000). Mechanisms of visual attention in the human cortex. Annual Review of Neuroscience, 23(1), 315–341. https://doi.org/10.1146/annurev.neuro.23.1.315

Kiss, M., Driver, J., & Eimer, M. (2009). Reward priority of visual target singletons modulates event-related potential signatures of attentional selection. Psychological Science, 20, 245–251. https://doi.org/10.1111/j.1467-9280.2009.02281.x

Kiyonaga, A., & Egner, T. (2013). Working memory as internal attention: Toward an integrative account of internal and external selection processes. Psychonomic Bulletin & Review, 20(2), 228–242. https://doi.org/10.3758/s13423-012-0359-y

Kleiner, M., Brainard, D., & Pelli, D. (2007). What’s new in Psychtoolbox-3. Perception, 36(14), 1.

Krebs, R. M., Boehler, C. N., Egner, T., & Woldorff, M. G. (2011). The neural underpinnings of how reward associations can both guide and misguide attention. Journal of Neuroscience, 31(26), 9752–9759. https://doi.org/10.1523/JNEUROSCI.0732-11.2011

Kristjansson, A., Sigurjonsdottir, O., & Driver, J. (2010). Fortune and reversals of fortune in visual search: Reward contingencies for pop-out targets affect search efficiency and target repetition effects. Attention, Perception, & Psychophysics, 72(5), 1229–1236. https://doi.org/10.3758/APP.72.5.1229

Kristjánsson, A., Wang, D., & Nakayama, K. (2002). The role of priming in conjunctive visual search. Cognition, 85(1), 37–52.

Lamy, D. F., & Kristjansson, A. (2013). Is goal-directed attentional guidance just intertrial priming? A review. Journal of Vision, 13(3), 14. https://doi.org/10.1167/13.3.14

Laurent, P. A., Hall, M. G., Anderson, B. A., & Yantis, S. (2015). Valuable orientations capture attention. Visual Cognition, 23(1/2), 133–146. https://doi.org/10.1080/13506285.2014.965242

Le Pelley, M. E., Pearson, D., Griffiths, O., & Beesley, T. (2015). When goals conflict with values: Counterproductive attentional and oculomotor capture by reward-related stimuli. Journal of Experimental Psychology: General, 144(1), 158–171. https://doi.org/10.1037/xge0000037

Luck, S. J., & Vogel, E. K. (1997). The capacity of visual working memory for features and conjunctions. Nature, 390(6657), 279–281.

Mine, C., & Saiki, J. (2015). Task-irrelevant stimulus-reward association induces value-driven attentional capture. Attention, Perception, & Psychophysics, 77(6), 1896–1907. https://doi.org/10.3758/s13414-015-0894-5

Meule, A., Vögele, C., & Kübler, A. (2011). Psychometrische Evaluation der deutschen Barratt Impulsiveness Scale – Kurzversion (BIS-15). Diagnostica, 57(3), 126–133. https://doi.org/10.1026/0012-1924/a000042

Müller, H. J., Reimann, B., & Krummenacher, J. (2003). Visual search for singleton feature targets across dimensions: Stimulus- and expectancy-driven effects in dimensional weighting. Journal of Experimental Psychology: Human Perception and Performance, 29(5), 1021–1035. https://doi.org/10.1037/0096-1523.29.5.1021

Olivers, C. N. L., Peters, J., Houtkamp, R., & Roelfsema, P. R. (2011). Different states in visual working memory: When it guides attention and when it does not. Trends in Cognitive Sciences, 15(7), 327–334. https://doi.org/10.1016/j.tics.2011.05.004

Pascucci, D., Hickey, C., Jovicich, J., & Turatto, M. (2017). Independent circuits in basal ganglia and cortex for the processing of reward and precision feedback. NeuroImage, 162, 56–64. https://doi.org/10.1016/j.neuroimage.2017.08.067

Pearson, D., Donkin, C., Tran, S. C., Most, S. B., & Le Pelley, M. E. (2015). Cognitive control and counterproductive oculomotor capture by reward-related stimuli. Visual Cognition, 23(1/2), 41–66.

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10(4), 437–442.

Pool, E., Brosch, T., Delplanque, S., & Sander, D. (2014). Where is the chocolate? Rapid spatial orienting toward stimuli associated with primary rewards. Cognition, 130(3), 348–359. https://doi.org/10.1016/j.cognition.2013.12.002

Posner, M. I. (1980). Orienting of attention. Quarterly Journal of Experimental Psychology, 32(1), 3–25. https://doi.org/10.1080/00335558008248231

Qi, S., Zeng, Q., Ding, C., & Li, H. (2013). Neural correlates of reward-driven attentional capture in visual search. Brain Research, 1532, 32–43. https://doi.org/10.1016/j.brainres.2013.07.044

Roper, Z. J. J., Vecera, S. P., & Vaidya, J. G. (2014). Value-driven attentional capture in adolescence. Psychological Science, 25(11), 1987–1993. https://doi.org/10.1177/0956797614545654

Saez, R. A., Saez, A., Paton, J. J., Lau, B., & Salzman, C. D. (2017). Distinct roles for the amygdala and orbitofrontal cortex in representing the relative amount of expected reward. Neuron, 95(1), 70–77.e3. https://doi.org/10.1016/j.neuron.2017.06.012

Sali, A. W., Anderson, B. A., & Yantis, S. (2014). The role of reward prediction in the control of attention. Journal of Experimental Psychology: Human Perception and Performance, 40(4), 1654–1664. https://doi.org/10.1037/a0037267

Schultz, W. (2015). Neuronal reward and decision signals: From theories to data. Physiological Reviews, 95(3), 853–951. https://doi.org/10.1152/physrev.00023.2014

Seitz, A., & Watanabe, T. (2005). A unified model for perceptual learning. Trends in Cognitive Sciences, 9(7), 329–334. https://doi.org/10.1016/j.tics.2005.05.010

Sescousse, G., Caldú, X., Segura, B., & Dreher, J.-C. (2013). Processing of primary and secondary rewards: A quantitative meta-analysis and review of human functional neuroimaging studies. Neuroscience and Biobehavioral Reviews, 37(4), 681–696. https://doi.org/10.1016/j.neubiorev.2013.02.002

Seymour, B., & McClure, S. M. (2008). Anchors, scales and the relative coding of value in the brain. Current Opinion in Neurobiology, 18(2), 173–178. https://doi.org/10.1016/j.conb.2008.07.010

Sha, L. Z., & Jiang, Y. V. (2016). Components of reward-driven attentional capture. Attention, Perception, & Psychophysics, 78(2), 403–414. https://doi.org/10.3758/s13414-015-1038-7

Stankevich, B. A., & Geng, J. J. (2014). Reward associations and spatial probabilities produce additive effects on attentional selection. Attention, Perception, & Psychophysics, 76(8), 2315–2325. https://doi.org/10.3758/s13414-014-0720-5

The MathWorks, Inc. (2012). Matlab 2012b, Global Optimization Toolbox: User’s Guide (2017b). Retrieved from www.mathworks.com/help/pdf_doc/gads/gads_tb.pdf.

Tremblay, L., & Schultz, W. (1999). Relative reward preference in primate orbitofrontal cortex. Nature, 398(6729), 704–708. https://doi.org/10.1038/19525

Vossel, S., Geng, J. J., & Fink, G. R. (2014). Dorsal and ventral attention systems: Distinct neural circuits but collaborative roles. The Neuroscientist, 20(2), 150–159. https://doi.org/10.1177/1073858413494269

Wang, L., Yu, H., & Zhou, X. (2013). Interaction between value and perceptual salience in value-driven attentional capture. Journal of Vision, 13(3), 5. https://doi.org/10.1167/13.3.5

Wang, Z., & Theeuwes, J. (2018). Statistical regularities modulate attentional capture. Journal of Experimental Psychology: Human Perception and Performance, 44(1), 13–17. https://doi.org/10.1037/xhp0000472

Watanabe, T., & Sasaki, Y. (2015). Perceptual learning: Toward a comprehensive theory. Annual Review of Psychology, 66(1), 197–221. https://doi.org/10.1146/annurev-psych-010814-015214

Zhao, J., Al-Aidroos, N., & Turk-Browne, N. B. (2013). Attention is spontaneously biased toward regularities. Psychological Science, 24(5), 667–677. https://doi.org/10.1177/0956797612460407

Acknowledgements

This work was funded by the federal state of Saxony-Anhalt and the European Regional Development Fund (ERDF 2014-2020), Project: Center for Behavioral Brain Sciences (CBBS), FKZ: ZS/2016/04/78113. We thank Juliane Röher for her help with conducting the experimental sessions.

Cite this article

Marchner, J.R., Preuschhof, C. Reward history but not search history explains value-driven attentional capture. Atten Percept Psychophys 80, 1436–1448 (2018). https://doi.org/10.3758/s13414-018-1513-z

DOI: https://doi.org/10.3758/s13414-018-1513-z