Abstract

In research on psychological time, it is important to examine the subjective duration of entire stimulus sequences, such as those produced by music (Teki, Frontiers in Neuroscience, 10, 2016). Yet research on the temporal oddball illusion (according to which oddball stimuli seem longer than standard stimuli of the same duration) has examined only the subjective duration of single events contained within sequences, not the subjective duration of sequences themselves. Does the finding that oddballs seem longer than standards translate to entire sequences, such that entire sequences that contain oddballs seem longer than those that do not? Is this potential translation influenced by the mode of information processing—whether people are engaged in direct or indirect temporal processing? Two experiments aimed to answer both questions using different manipulations of information processing. In both experiments, musical sequences either did or did not contain oddballs (auditory sliding tones). To manipulate information processing, we varied the task (Experiment 1), the sequence event structure (Experiments 1 and 2), and the sequence familiarity (Experiment 2) independently within subjects. Overall, in both experiments, the sequences that contained oddballs seemed shorter than those that did not when people were engaged in direct temporal processing, but longer when people were engaged in indirect temporal processing. These findings support the dual-process contingency model of time estimation (Zakay, Attention, Perception & Psychophysics, 54, 656–664, 1993). Theoretical implications for attention-based and memory-based models of time estimation, the pacemaker accumulator and coding efficiency hypotheses of time perception, and dynamic attending theory are discussed.


Psychological representations of time exist on many scales, ranging from the very short to the very long, yet the mechanisms underlying each are often studied separately. Matthews and Meck’s (2016) integrative review, for instance, is limited to temporal intervals no longer than a few seconds. Teki (2016) suggests expanding beyond single events in time-related research by using stimuli comprising entire event sequences, such as music. One time perception phenomenon that has been studied using single events—but not yet studied using entire event sequences—is the temporal oddball illusion. The temporal oddball illusion refers to the finding that a single oddball (low-probability event) seems longer than a single standard (high-probability event) of the same duration (Tse, Intriligator, Rivest, & Cavanagh, 2004; see also Birngruber, Schröter, & Ulrich, 2014; Matthews & Gheorghiu, 2016; McAuley & Fromboluti, 2014; Pariyadath & Eagleman, 2012).Footnote 1

We aimed to discover first whether the temporal oddball illusion translates to entire stimulus sequences—whether entire sequences that contain oddballs seem longer than entire sequences that do not contain oddballs—and second whether such a translation is influenced by the mode of information processing. The mode of information processing could be direct temporal processing (deliberately completing an experimental time-estimation task with explicit instructions to estimate time) or indirect temporal processing (completing an experimental task with instructions that exclude time-related language, providing measures of subjective duration only incidentally). To answer the first question, we composed musical chord sequences such that half contained and half did not contain oddballs (Experiments 1 and 2). The oddball consisted of an auditory sliding tone that glided over a large range of pitches (following Tse et al., 2004); the chords consisted of stable pitches. To answer the second question, we manipulated the degree to which people engaged in direct temporal or indirect temporal processing while listening to the sequences. To do this, we varied the task people completed (Experiment 1), the event structure of the sequences (Experiments 1 and 2), and the familiarity of the sequences (Experiment 2). Using different methods to induce different modes of information processing ensures more robust and generalizable results.

We conceptualize direct temporal and indirect temporal processing as existing along a continuum—certain amounts of temporal information, and certain amounts of nontemporal information, are processed in every situation (Zakay, 1989, 1993). Indirect temporal processing, as used in this article, can be considered analogous to nontemporal processing as stated in Zakay (1993). As Zakay explained, “Temporal information processing takes place at all times, but it is done intermittently when cognitive capacity is not directly focused at P(t) [the temporal processor]” (p. 658). When people engage in direct temporal processing, they allocate the majority of their attentional resources to the passage of time (e.g., counting seconds). In contrast, when people engage in indirect temporal processing, they allocate the majority of their attentional resources to information unrelated to time, by attending to some other aspect of a stimulus. When attention is not captured by stimulus properties, it is free to focus on time, permitting direct temporal processing, but the opposite is true when attention is captured by stimulus properties. In the present research, our manipulations of direct temporal and indirect temporal processing were used to shift the allocation of attentional resources along the information processing continuum proposed by Zakay (1993). By varying task, event structure, and familiarity, we aimed to shift the majority of people’s attentional resources from counting seconds (direct temporal processing) to listening to music (indirect temporal processing).

Information processing manipulations

Task

One typical way researchers manipulate whether people engage in direct or indirect temporal processing is by using either the prospective or retrospective time estimation paradigm (e.g., Block, George, & Reed, 1980; Zakay, 1993; Zakay, Tsal, Moses, & Shahar, 1994). In prospective experiments, participants are told before the to-be-judged interval that they will be asked to judge its duration; in retrospective experiments, participants are asked to judge duration only after the to-be-judged interval, without prior warning. In Experiment 1 of the present research, however, we used a different strategy to manipulate information processing. We had people complete either a verbal estimation or a musical imagery reproduction task. Verbal estimation tasks induce direct temporal processing, whereas musical imagery reproduction tasks induce indirect temporal processing.

Verbal estimation is a standard method for measuring subjective duration (Grondin, 2008) that makes use of direct temporal processing. It requires that people focus on the passage of time during an interval and, following its completion, directly report its duration using numerical units (e.g., seconds). Musical imagery reproduction, on the other hand, is a novel method for measuring subjective duration that makes use of indirect temporal processing. Participants are asked to imagine an excerpt they previously heard, pressing one button when they start imagining the sounds, and another when they finish. Importantly, participants completing a musical imagery reproduction are not explicitly asked to estimate duration or time. Thus, making a musical imagery reproduction involves focusing attention on music and its inherent properties, such as pitch and timbre, rather than the duration of an interval.

All of the verbal estimates in Experiment 1 were prospective. We always warned participants they would be making a verbal estimate of duration before presenting the to-be-judged interval. It is difficult to characterize musical imagery reproductions as prospective or retrospective because they involve indirect temporal processing—participants are never explicitly asked to judge time, either before or after the relevant interval. Musical imagery reproductions function like retrospective judgments, in that participants are unaware they will be asked to judge time while listening to the stimulus, but take the indirect aspect even further—participants are never told that duration is the parameter of interest, not even afterwards.

Experimental instructions have been identified as a crucial factor in determining whether measures of subjective duration are generated from direct (explicit) temporal or indirect (implicit) temporal processing—the key factor is whether the experimental instructions use durational language, or not (Coull & Nobre, 2008). Direct temporal processing can be induced by instructing participants to judge duration—directly stating in the experimental instructions that the task is to estimate duration. Indirect temporal processing, on the other hand, can be induced by excluding time-related language from the experimental instructions but nonetheless recording the amount of time it takes people to make responses—participants are not directly told that their responses will be treated as measurements of subjective duration.

Measures of direct temporal processing can be collected using traditional methods of time estimation, such as verbal estimation, duration reproduction, duration production, method of comparison, and magnitude estimation (Grondin, 2008). Indirect temporal processing, on the other hand, can be measured by recording the emergent temporal intervals generated while participants make responses (Turvey, 1977; Zelaznik, Spencer, & Ivry, 2002). Researchers can record the amount of time it takes people to perform a behavior and then treat that emergent temporal interval as an indirect measure of subjective duration (Grondin, 2010).

Direct temporal and indirect temporal processing are not synonymous with the prospective and retrospective paradigms. Both paradigms, whether the judgment is announced before or after the to-be-judged interval, ask participants to make direct duration judgments. Participants in the retrospective paradigm are not aware of the time-related experimental purpose before or during the to-be-judged interval but become aware of it afterwards, when explicitly asked to judge duration. A better paradigm would never reveal the experimenter's interest in subjective duration to participants at all.

Musical imagery reproductions work exactly in this way. Musical imagery reproduction is a novel method for measuring subjective duration that is similar to the more commonly used duration reproduction method (Grondin, 2008). Both involve marking the beginning and ending of mentally rehearsed information. Duration reproductions, however, explicitly state “duration” or “time,” for example, in the experimental instructions, while musical imagery reproductions do not. Boltz (1998), for example, asked participants to “mentally imagine the duration of the melody until it reaches its original ending point” (p. 1094). In contrast, we asked participants to “imagine the music playing back in your head. Replay the music the same way you heard it, from beginning to end. Left-click to begin your imagined clip, then right-click when it ends.” Excluding time-related words ensured that the participants would stay naïve to the true purpose of the experiment, and engage in indirect (rather than direct) temporal processing. Using the phrase “Replay the music the same way you heard it” indirectly encouraged participants to imagine the music at the same tempo they heard it. But we could not directly ask them to preserve the tempo, because it would have disclosed the time-related purpose of the task, and risked contaminating it with more direct than indirect temporal processing.

Evidence suggests that musical imagery reproductions produce valid and accurate measures of subjective duration. Halpern and Zatorre (1999) instructed people to imagine familiar melodies playing back through their head, pressing a button when the melody reached its actual ending point. The durations of the musical imaginings conformed to the actual durations of the melodies. Grondin and Killeen (2009) found similar patterns of results. Duration reproductions created both while singing and imagining familiar songs were as accurate as those created while counting seconds. Weber and Brown (1986) showed that the durations of temporal intervals created while imagining songs were the same as those created while singing those songs. These findings make sense, considering that people tend to imagine songs at their actual tempos (Halpern, 1988b; Levitin & Cook, 1996), and auditory images likely represent the temporal extent of the actual stimuli (Halpern, 1988a).

Event structure

In both Experiments 1 and 2, we manipulated information processing by varying the event structure of musical chord sequences. We chose musical chord sequences as stimuli because they produce strong, well-theorized manipulations of expectation about what events will occur in a sequence, and when (see, e.g., Regnault, Bigand, & Besson, 2001). Music theorists and music psychologists have outlined the principles that govern relationships between musical events in the Western tonal system (Lerdahl & Jackendoff, 1983). Expectations about those relationships are fundamental to musical listening (Huron & Margulis, 2010).

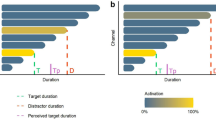

Jones and Boltz (1989) have shown that variations in event structure affect attending in real time. For the purposes of the present research, we used variations in event structure to encourage direct temporal or indirect temporal processing based on the degree to which more or less predictable stimulus properties capture attention. We used three variations of event structure (repeated, coherent, and incoherent), each designed to capture more attention than the one before it. Figure 1 illustrates the hypothesized influence of event structure on information processing.

Chord sequences composed of repetitions of a single chord (repeated chord sequences) confirm low-level expectations (Dehaene et al., 2001), permit efficient processing and memory storage (Saffran, Aslin, & Newport, 1996), and free attentional resources to monitor the passage of time (Zakay, 1993), encouraging direct temporal processing. Chord sequences composed of chord progressions that follow the rules of Western tonal harmony (coherent chord sequences) capture more attention than the mere repetition of a single chord, encouraging more indirect temporal processing (Cai, Eagleman, & Ma, 2015; Huettel, Mack, & McCarthy, 2002). Chord sequences composed of chord progressions that violate the rules of Western tonal harmony (incoherent chord sequences) violate deeply ingrained musical expectations, resist predictability, and capture attentional resources (Boltz, 1998; Brown & Boltz, 2002). When listening to or imagining incoherent music, people are too occupied trying to make sense of the musical twists, turns, and violations to devote meaningful amounts of attention to monitoring the passage of time. By capturing more attention, incoherent chord sequences encourage even more indirect temporal processing than coherent sequences.

Familiarity

In Experiment 2, we manipulated information processing by varying stimulus familiarity (see also Zakay, 1989). We presented one half of the chord sequences in a pretrial exposure phase and excluded the other half of the chord sequences from the pretrial exposure phase. Familiar sequences encourage direct temporal processing more than unfamiliar sequences because familiar sequences are more predictable (Zakay, 1993). Familiar events consume fewer attentional resources (Zakay, 1993) and facilitate earlier stages of processing than unfamiliar events (Avant, Lyman, & Antes, 1975). Reductions in nontemporal information processing load free attentional resources to engage in direct temporal processing (Zakay & Block, 1995). Thus, participants could engage in more direct temporal processing when listening to familiar sequences, but more indirect temporal processing when listening to unfamiliar ones.

Competing predictions

Three accounts make competing predictions about the relationship between the subjective duration of sequences that do and do not contain oddballs, and about how information processing might influence this relationship: the pacemaker accumulator hypothesis, the coding efficiency hypothesis, and the dual-process contingency model.

Pacemaker accumulator hypothesis

The pacemaker accumulator hypothesis, proposed by Tse et al. (2004), asserts that a pacemaker determines the number of subjective temporal units accumulated in a cognitive timer (see, e.g., Zakay & Block, 1995). Tse et al. posit that oddballs seem longer than standards because oddballs increase the amount of attention captured, the rate of information processing, and the number of subjective temporal units registered by the cognitive timer. If this is true, then more subjective temporal units should be registered in the cognitive timer during a sequence that contains oddballs than during a sequence that does not contain oddballs. Thus, the pacemaker accumulator hypothesis predicts that the sequences that contain oddballs will seem longer than the sequences that do not contain oddballs, regardless of the event structure of the sequences.

Coding efficiency hypothesis

The coding efficiency hypothesis, proposed by Pariyadath and Eagleman (2007, 2012), asserts that repeated stimuli increase neural efficiency (see Summerfield & de Lange, 2014). According to this account, oddballs (unpredictable events) seem longer than standards (predictable events) to the extent that the stimulus sequences containing those events have predictable event structures. Oddballs that occur in a sequence with an unpredictable event structure should seem no longer than their adjacent events. To illustrate this point, Pariyadath and Eagleman (2007) ran an experiment using sequences of numerical units that varied in event structure, from highly predictable or repeated (e.g., 1, 1, 1, 1, 1) to moderately predictable or coherent (e.g., 1, 2, 3, 4, 5) to unpredictable or incoherent (e.g., 4, 1, 3, 2, 5). Pariyadath and Eagleman (2007) explained that there was no oddball, per se, in these sequences. Rather, the first number was novel, and thus less predictable than the numbers that followed. The first number in the sequences with highly predictable and moderately predictable event structures seemed longer than each of the numbers that followed. But the first number in the sequences with unpredictable event structures seemed no different in duration from the numbers that followed.

If the coding efficiency hypothesis is correct, then the sum of neural responses evoked during a sequence that contains oddballs should be larger than the sum of neural responses evoked during a sequence that does not contain oddballs, but only for the sequences with predictable event structures. Thus, the coding efficiency hypothesis predicts that the sequences with predictable event structures that contain oddballs will seem longer than those that do not contain oddballs, but no effect of oddball will emerge when the sequences have unpredictable event structures. In addition, the coding efficiency hypothesis predicts that repetition suppression will lead the repeated sequences to seem shorter than the coherent and incoherent sequences.

Dual-process contingency model

Zakay (1993) proposed the dual-process contingency model (originally termed the resource allocation model; Zakay, 1989). The dual-process contingency model integrates the tenets of both attention-based and memory-based models of time estimation, giving it the ability to explain the findings of both prospective and retrospective experiments (see Block & Zakay, 1997). Attention-based models posit that subjective duration is a function of the amount of attention allocated to time (e.g., E. A. C. Thomas & Weaver, 1975; E. C. Thomas & Brown, 1974). The attentional gate model (one variety of attention-based model) theorizes that subjective temporal units, or pulses, created by a pacemaker pass through a cognitive gate and accumulate in a cognitive counter (Zakay & Block, 1995). The more attentional resources allocated to the passage of time during an interval, the wider the gate opens, the more pulses are counted, and the longer subjective duration should become. Memory-based models, on the other hand, posit that subjective duration is a function of the amount of meaningful information stored in and retrieved from memory. For instance, the storage-size hypothesis (Ornstein, 1969) and change-based hypotheses (e.g., Block, 1989; Poynter, 1989) posit that the greater the amount of information or perceptual changes stored in memory, the longer subjective duration should become.
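The attentional gate mechanism described above can be illustrated with a toy simulation. This is a minimal sketch, not Zakay and Block's (1995) formal model: it assumes a pacemaker emitting pulses at a fixed, hypothetical rate and treats the gate as passing each pulse with a probability equal to the share of attention allocated to time. The function name and the parameters `pacemaker_hz` and `attention_to_time` are illustrative.

```python
import random


def gated_pulse_count(duration_s: float, pacemaker_hz: float,
                      attention_to_time: float, seed: int = 0) -> int:
    """Toy attentional-gate counter (illustrative parameters only).

    A pacemaker emits pulses at a fixed rate. Each pulse passes the gate
    with probability equal to the share of attention allocated to time,
    so a wider gate (more attention to time) yields a larger count.
    """
    if not 0.0 <= attention_to_time <= 1.0:
        raise ValueError("attention_to_time must lie in [0, 1]")
    rng = random.Random(seed)
    emitted = int(duration_s * pacemaker_hz)
    # rng.random() lies in [0, 1), so attention 1.0 passes every pulse
    # and attention 0.0 passes none.
    return sum(1 for _ in range(emitted) if rng.random() < attention_to_time)


# Same interval and same random draws, different attention to time.
focused = gated_pulse_count(7.0, pacemaker_hz=20, attention_to_time=0.9)
distracted = gated_pulse_count(7.0, pacemaker_hz=20, attention_to_time=0.4)
print(focused, distracted)
```

Because both calls share a seed, the draws are identical and the count can only grow as the gate widens, which mirrors the attention-based claim that more attention to time lengthens subjective duration.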

The dual-process contingency model asserts that people have both a temporal processor and a nontemporal processor, each competing for limited attentional resources (Kahneman, 1973). The temporal processor is an attentional timer that registers temporal information, such as the number of seconds counted. The nontemporal processor, on the other hand, is a memory-based mechanism that registers nontemporal information, such as colors, textures, or anything else that can take attention away from counting seconds or monitoring the passage of time. Importantly, nontemporal information is used to infer subjective duration when the temporal processor has registered no meaningful amount of temporal information (from which duration could otherwise have been estimated directly).

According to the dual-process contingency model, when people are engaged in direct temporal processing (focusing on time-related aspects of the environment), sequences that contain oddballs should seem shorter than sequences that do not contain oddballs. This is because when people are engaged in direct temporal processing, oddballs should serve to distract attention from counting seconds (Debener, Kranczioch, Herrmann, & Engel, 2002), increase information processing load, and decrease the number of subjective temporal units registered in the temporal processor (on which duration is directly based). Conversely, when people are engaged in indirect temporal, or nontemporal, processing (focusing on the inherent time-unrelated aspects of stimuli), sequences that contain oddballs should seem longer than sequences that do not contain oddballs. This is because when people are engaged in indirect temporal processing, oddballs should serve to focus attention on the inherent nontemporal properties of the oddballs themselves, increase the number of changes perceived, and increase the amount of nontemporal information remembered (from which duration is indirectly measured). Thus, the dual-process contingency model predicts that the sequences that contain oddballs will seem shorter than the sequences that do not contain oddballs when people are engaged in direct temporal processing, but longer than sequences that do not contain oddballs when people are engaged in indirect temporal processing.

The present research

Past research on the temporal oddball illusion has been limited to comparing the subjective duration of individual standards to individual oddballs. In the present research, we compared the subjective duration of entire sequences containing oddballs to entire sequences not containing oddballs. We also examined how information processing might influence this potential translation of the temporal oddball illusion. In both Experiments 1 and 2, musical chord sequences did or did not contain auditory oddballs, and people listened to the sequences while engaged in direct temporal or indirect temporal processing. We manipulated information processing by independently varying the task (Experiment 1), the sequence event structure (Experiments 1 and 2), and the sequence familiarity (Experiment 2). The task was to complete either a verbal estimation or a musical imagery reproduction; the sequence event structure was either repeated, coherent, or incoherent; the sequence familiarity was either familiar or unfamiliar. The two manipulations in each experiment were crossed: In Experiment 1, people made both musical imagery reproductions and verbal estimations about repeated, coherent, and incoherent sequences. In Experiment 2, people made musical imagery reproductions about both familiar and unfamiliar repeated, coherent, and incoherent sequences (see Table 1). Completing a verbal estimation task and listening to repeated and familiar sequences induce direct temporal processing. Completing a musical imagery reproduction task and listening to incoherent and unfamiliar sequences induce indirect temporal processing; coherent sequences fall between repeated and incoherent sequences on this continuum.

The pacemaker accumulator hypothesis predicts that the sequences containing oddballs will seem longer than those not containing oddballs, regardless of the sequence event structure. The coding efficiency hypothesis predicts that the sequences containing oddballs will seem longer than those not containing oddballs when the sequences have predictable event structures, but not when they have unpredictable event structures. The dual-process contingency model predicts that the sequences containing oddballs will seem shorter than the sequences not containing oddballs when people are engaged in direct temporal processing, but longer than the sequences not containing oddballs when people are engaged in indirect temporal processing.
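The three sets of predictions can be summarized as a lookup of the predicted sign of the oddball effect. This sketch only encodes the statements above. The function name and string labels are hypothetical, and treating coherent sequences as "predictable" for the coding efficiency hypothesis is an assumption drawn from Pariyadath and Eagleman's (2007) moderately predictable condition.

```python
# Assumption: repeated and coherent sequences count as predictable,
# incoherent sequences as unpredictable (after Pariyadath & Eagleman, 2007).
PREDICTABLE = {"repeated": True, "coherent": True, "incoherent": False}


def predicted_oddball_effect(model: str, processing: str, event_structure: str) -> int:
    """Predicted sign of (oddball sequence minus no-oddball sequence) duration.

    Returns +1 if sequences with oddballs should seem longer, -1 if shorter,
    and 0 if no difference is predicted.
    """
    if processing not in ("direct", "indirect"):
        raise ValueError(f"unknown processing mode: {processing!r}")
    predictable = PREDICTABLE[event_structure]  # KeyError for unknown structures
    if model == "pacemaker_accumulator":
        return 1  # longer regardless of event structure or processing
    if model == "coding_efficiency":
        return 1 if predictable else 0  # effect only for predictable structures
    if model == "dual_process_contingency":
        return -1 if processing == "direct" else 1  # sign flips with processing mode
    raise ValueError(f"unknown model: {model!r}")


for model in ("pacemaker_accumulator", "coding_efficiency", "dual_process_contingency"):
    row = [predicted_oddball_effect(model, p, s)
           for p in ("direct", "indirect")
           for s in ("repeated", "coherent", "incoherent")]
    print(f"{model:26s}", row)
```

In this encoding, only the dual-process contingency prediction depends on the processing mode, whereas the coding efficiency prediction depends only on event structure.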

Experiment 1

The two manipulations of information processing in Experiment 1 were task and event structure. We varied the type of task that people completed (verbal estimation or musical imagery reproduction), and the event structure of the chord sequences (repeated, coherent, or incoherent chord patterns).

Method

Participants

Fifty-five undergraduate students enrolled in General Psychology at the University of Arkansas volunteered to participate in this experiment in exchange for course credit. We determined sample size based on previous research demonstrating the influence of information processing on the subjective duration of musical sequences (Ziv & Omer, 2010). We excluded the data of three participants from the analysis (two for having abnormal hearing, and one for disregarding the instructions); we confirmed that the analyses with and without their data yielded the same pattern of results. The ages of the remaining 52 participants (31 females) ranged from 18 to 39 years (M = 19.88, SD = 3.45). None were music majors, but nine had received formal musical training for at least 1 year, ranging from 1 to 8 years (M = 3.60, SD = 2.61). All of the participants gave informed consent before participating in this experiment. This experiment was approved by the University of Arkansas Institutional Review Board.

Stimuli

To isolate the experimental variables of interest and to ensure participants had no prior familiarity with the stimuli, we composed original chord sequences using the Finale (2012; MakeMusic®, Inc.) music notation software. All of the chord sequences comprised isochronous 4-voice (SATB) piano chords (no rests or silences). The overall pitch frequency range of the piano chords was 55 Hz to 1047 Hz. All of the chord sequences were in 4:4 metric time. The chord sequences varied in duration, tempo, and rhythm to sustain interest (as detailed below), with these variations counterbalanced among the experimental factors of oddball and event structure. We normalized the amplitude of the chord sequences using the Audacity (2.0.6) recording and editing software.

We modeled the auditory oddball on the one used by Tse et al. (2004): a sliding tone that rose from 82 Hz to 1480 Hz. The sliding motion of the ocarina-timbre oddball contrasted with the chord sequences, which were composed of stationary tones in a piano timbre. Each oddball occupied the same duration as a quarter note within the chord sequences (either 700 ms or 850 ms, as described below).

Crossing the experimental factor of oddball (contained oddballs or did not contain oddballs) with the experimental factor of event structure (repeated, coherent, or incoherent) produced six Oddball × Event Structure versions. To broaden the generalizability of the findings and maintain participant interest, we composed 12 base chord progressions using three different lengths, two different tempi, and two different note-duration rhythms. The three sequence lengths were 3.5 s, 7 s, and 11.9 s. The two tempi were 86 quarter notes per minute (700-ms quarter-note interonset intervals) and 71 quarter notes per minute (850-ms quarter-note interonset intervals). The two note-duration rhythms were composed of quarter notes (four per measure), eighth notes (eight per measure), and triplets (12 per measure); where eighth notes occurred in Rhythm I, triplets occurred in Rhythm II, and vice versa. Crossing each of the 12 base chord progressions with each of the six Oddball × Event Structure versions produced a total of 72 unique chord sequences. Each participant heard each of the 72 unique chord sequences twice over the course of the experiment, amounting to 622 chords and 74 oddballs in total. Thus, the overall probability of an oddball occurring was 10.6% (74 of 696 events; comparable to Tse et al., 2004).
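The design arithmetic in this paragraph can be checked with a short sketch. It assumes that the oddball probability is computed over all events (chords plus oddballs) and that a quarter-note interonset interval equals 60,000 ms divided by the tempo in quarter notes per minute; the variable names are illustrative.

```python
from itertools import product

LENGTHS_S = (3.5, 7.0, 11.9)     # sequence lengths
TEMPI_QPM = (86, 71)             # quarter notes per minute
RHYTHMS = ("I", "II")            # note-duration rhythms
ODDBALL_LEVELS = ("oddball", "no oddball")
STRUCTURES = ("repeated", "coherent", "incoherent")


def quarter_note_ioi_ms(qpm: float) -> float:
    """Interonset interval of a quarter note: 60,000 ms per minute / tempo."""
    return 60_000 / qpm


base_progressions = list(product(LENGTHS_S, TEMPI_QPM, RHYTHMS))   # 3 x 2 x 2
versions = list(product(ODDBALL_LEVELS, STRUCTURES))                # 2 x 3
unique_sequences = list(product(base_progressions, versions))      # 12 x 6

# Oddball probability from the reported totals, taken over all events.
N_CHORDS, N_ODDBALLS = 622, 74
p_oddball = N_ODDBALLS / (N_CHORDS + N_ODDBALLS)

for qpm in TEMPI_QPM:
    print(qpm, "qpm ->", round(quarter_note_ioi_ms(qpm)), "ms")
print(len(base_progressions), len(versions), len(unique_sequences))
print(f"p(oddball) = {p_oddball:.1%}")
```

The tempo conversion gives 698 ms at 86 quarter notes per minute and 845 ms at 71, close to the nominal 700-ms and 850-ms intervals, and the crossed factors yield 12 base progressions and 72 unique sequences.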

For each of the 72 unique chord sequences, we created 12 oddball position variations, in which the oddballs occupied different sequential positions; we crossed these 12 variations in a between-subjects Latin-square design. We created these 12 variations so that the oddballs could be presented quasirandomly both within and between subjects. Doing so allowed us to control for effects of beat perception, such as the perceptual accenting of the first and third beats in a 4:4 metric structure (Grahn & Brett, 2007). We placed oddballs on different quarter note beats within the 4:4 metric structure of the chord sequences. This enabled us to discount the explanation that any potential findings were driven by the serial locations of the oddballs, rather than by the presence of the oddballs themselves.
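One common way to build such a between-subjects Latin square is a cyclic construction. The text does not state how rows and columns were mapped, so this sketch assumes that participants are cycled through 12 groups (rows) and that columns correspond to the 12 base chord progressions. Both the mapping and the function names are hypothetical.

```python
N_VARIATIONS = 12  # oddball-position variations per chord sequence


def cyclic_latin_square(n: int) -> list[list[int]]:
    """Row r, column c holds (r + c) mod n, so every value appears exactly
    once in each row and once in each column."""
    return [[(r + c) % n for c in range(n)] for r in range(n)]


def assigned_variation(participant: int, base_progression: int,
                       n: int = N_VARIATIONS) -> int:
    """Hypothetical assignment: participant groups cycle through the rows,
    and columns index the 12 base chord progressions."""
    return cyclic_latin_square(n)[participant % n][base_progression % n]


square = cyclic_latin_square(N_VARIATIONS)
print(assigned_variation(0, 0), assigned_variation(13, 2))  # prints 0 3
```

Under this construction every oddball-position variation is heard by one participant group per base progression, and each group hears every variation once across the base progressions.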

The repeated chord sequences started with a root position C major chord and used only this chord (see Fig. 2). The coherent chord sequences started with a root position C major chord and continued with chords drawn from the seven harmonies diatonic to C major, following the rules of Western tonal harmony (see Fig. 3). The incoherent chord sequences were formed by scrambling the order of the chords in the coherent sequences (following Pariyadath & Eagleman, 2007), violating the rules of Western tonal harmony (see Fig. 4). Half of the chord sequences contained oddballs placed quasirandomly after every two to six chords (following Pariyadath & Eagleman, 2012), as well as on the final beat. In all other respects, the chord sequences that contained oddballs were identical to those that did not (see Fig. 5).
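The two construction rules in this paragraph (scrambling coherent sequences, and placing oddballs after every two to six chords plus on the final beat) can be sketched as follows. This is an illustrative reading, not the authors' procedure: it assumes that each oddball replaces a chord in a beat slot (so sequence length is unchanged), represents chords and the oddball as hypothetical string tokens, and ignores meter and note duration.

```python
import random

ODDBALL = "SLIDE"  # hypothetical token standing for the sliding-tone oddball


def scramble(chords: list[str], rng: random.Random) -> list[str]:
    """Incoherent version: the chords of a coherent sequence in shuffled order."""
    shuffled = list(chords)
    rng.shuffle(shuffled)
    return shuffled


def place_oddballs(chords: list[str], rng: random.Random,
                   min_run: int = 2, max_run: int = 6) -> list[str]:
    """Replace beat slots so that each oddball follows a run of min_run..max_run
    chords and the final beat is an oddball."""
    if max_run < 2 * min_run:
        raise ValueError("max_run must be at least 2 * min_run")
    if len(chords) < min_run + 1:
        raise ValueError("sequence too short for the placement rule")
    events = list(chords)
    last = len(events) - 1
    events[last] = ODDBALL   # oddball on the final beat
    pos = -1                 # index of the previous oddball (none yet)
    remaining = last         # chords between the previous oddball and the final beat
    while remaining > max_run:
        # Cap the run so that at least min_run chords precede the final oddball.
        run = rng.randint(min_run, min(max_run, remaining - min_run - 1))
        pos += run + 1
        events[pos] = ODDBALL
        remaining = last - pos - 1
    return events


rng = random.Random(7)
coherent = ["I", "IV", "V", "vi", "ii", "V", "I", "IV", "V", "I"]  # illustrative
print(place_oddballs(coherent, rng))
print(scramble(coherent, rng))
```

Capping each run leaves room for a valid final run, so every stretch of chords between oddballs, including the one before the final beat, stays within two to six chords.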

Procedure

Participants were tested individually in a 4-ft × 4-ft WhisperRoom Sound Isolation Enclosure (MDL 4848E/ENV). They wore Sennheiser HD 600 headphones facing a 22-in. Dell P2212H monitor, and made responses using a computer keyboard, mouse, and DirectIN Rotary Controller (PCB v2014). The auditory stimuli were presented binaurally at a comfortable listening level. The experiment was presented using DirectRT (Version 2014.1.127; Jarvis, 2014) on a Dell OptiPlex 7010 desktop computer running Windows 7.

The experiment consisted of two blocks. Each consisted of three practice trials followed by 72 randomly presented experimental trials. Each trial proceeded as follows: Participants pressed a button to start the presentation of a chord sequence. After its completion, they made a response. The response was a musical imagery reproduction in the first block and a verbal estimation in the second block. We did not randomize the order of the blocks in order to protect the integrity of the Task manipulation; fixing the order in which participants make verbal estimations and duration reproductions is a common procedure (e.g., Block et al., 1980; Brown, 1985). The order of the blocks—first musical imagery reproduction, then verbal estimation—ensured that the musical imagery reproduction task induced indirect temporal processing rather than direct temporal processing. If verbal estimations had been called for first, they would have contaminated the subsequent musical imagery reproductions with knowledge of the experimental purpose, an increased awareness of duration, and an increased level of direct temporal processing.

In the first block, immediately upon the closure of the chord sequence in each trial, participants were presented with the on-screen instructions: “Imagine that excerpt playing back in your head. Replay it through your head the exact way you heard it play through the headphones, from start to finish. Press the green button to mark the start of the excerpt you’re imagining. Press the red button to mark the finish of the excerpt you’re imagining.”

In the second block, immediately upon the closure of the chord sequence in each trial, participants were presented with the on-screen question: “What is the duration of this excerpt? In other words, how many seconds passed from the moment it started to the moment it finished? Please try to be as specific and accurate as possible by rounding to the tenths decimal place.”

The experiment concluded with a brief demographic questionnaire that asked participants to describe the extent of any formal musical training they had received. We included formal musical training in the analysis because it has been shown to affect how people perceive and process music (e.g., Schmithorst & Holland, 2003). Participants progressed through the experiment at their own pace. The experiment lasted about 50 min.

Data analysis

We ran a linear mixed model to account for random effects within participants (see Baayen, Davidson, & Bates, 2008). The within-subjects fixed effects were oddball (did or did not contain oddballs), task (verbal estimation or musical imagery reproduction), event structure (repeated, coherent, or incoherent), and duration (3.5 s, 7 s, or 12 s). The between-subjects fixed effects were formal musical training (had or had not received musical training for at least 1 year; following Janata & Paroo, 2006) and oddball position (12 variations).Footnote 2 The random effects were subject and item (12 base chord progressions).

To obtain a standardized measure of subjective duration, we divided the raw (ms) response data by the actual durations of the chord sequences. This produced ratio scores. Ratio scores represent directional bias, or constant error; values above and below 1.0 represent overestimations and underestimations, respectively (see Hornstein & Rotter, 1969). We reported 95% confidence intervals (CIs) as variability measures around the mean ratio scores. We did not report effect sizes because there is currently no appropriate or agreed-upon method for calculating effect size in linear mixed models (see Orelien & Edwards, 2008). The data consisted of 7,488 ratio scores (accessible at https://osf.io/v8jnb/), 31 of which were identified as outliers using the generalized extreme studentized deviate (ESD) method (Rosner, 1983) and excluded from the analysis; the analyses with and without the outliers yielded the same pattern of results. The resultant distribution was approximately normal (skewness = 0.46; kurtosis = 0.85).
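The two preprocessing steps just described, ratio scoring and outlier screening, can be sketched as follows. This is an illustrative Python sketch, not the analysis code used in the study. It implements Rosner's generalized ESD procedure as it is commonly stated; the maximum number of candidate outliers (`max_outliers`) and α = .05 are assumptions, since neither is reported here, and the Student-t critical value is approximated with a Cornish–Fisher expansion around the normal quantile, which is accurate only when the degrees of freedom are large (as they are with thousands of ratio scores).

```python
import math
import statistics


def ratio_score(response_ms, actual_ms):
    """Subjective / actual duration: > 1.0 is overestimation, < 1.0 underestimation."""
    return response_ms / actual_ms


def t_quantile(p, df):
    """Approximate Student-t quantile from the normal quantile (Cornish-Fisher
    expansion, first two correction terms); adequate only for large df."""
    z = statistics.NormalDist().inv_cdf(p)
    g1 = (z**3 + z) / 4
    g2 = (5 * z**5 + 16 * z**3 + 3 * z) / 96
    return z + g1 / df + g2 / df**2


def generalized_esd(values, max_outliers, alpha=0.05):
    """Rosner's generalized ESD test; returns indices of the detected outliers."""
    n = len(values)
    remaining = list(enumerate(values))
    candidates, n_outliers = [], 0
    for i in range(1, max_outliers + 1):
        xs = [v for _, v in remaining]
        mean, sd = statistics.mean(xs), statistics.stdev(xs)
        if sd == 0:
            break
        # Test statistic R_i: the most extreme remaining value, in SD units.
        idx, val = max(remaining, key=lambda iv: abs(iv[1] - mean))
        r_i = abs(val - mean) / sd
        # Critical value lambda_i for step i.
        t = t_quantile(1 - alpha / (2 * (n - i + 1)), n - i - 1)
        lam = (n - i) * t / math.sqrt((n - i - 1 + t**2) * (n - i + 1))
        candidates.append(idx)
        remaining.remove((idx, val))
        # The number of outliers is the largest i with R_i > lambda_i.
        if r_i > lam:
            n_outliers = i
    return candidates[:n_outliers]


# Usage with hypothetical reproductions of a 7-s sequence.
responses_ms = [6300, 7000, 7700] * 10 + [21000]
ratios = [ratio_score(r, 7000) for r in responses_ms]
print(generalized_esd(ratios, max_outliers=3))  # → [30]
```

Removing candidates one at a time and recomputing the mean and SD is what lets the generalized ESD procedure avoid the masking that affects single-pass z-score cutoffs.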

To account for random slope variance and obtain model convergence, we first ran the maximal model including all factors, interactions, and random slopes with the subject and item grouping variables (see Barr, Levy, Scheepers, & Tily, 2013). The final converged omnibus model included the full Oddball × Task × Event Structure × Duration interaction, in addition to formal musical training and oddball position; the random slopes of oddball, event structure, and task were included within both the subject and item grouping variables (see Appendix). Pseudo-R² was .388, indicating that the final model explained (modeled) 38.8% more variance than the model including only the subject grouping variable. The intraclass correlation coefficient was .295 (substantial clustering) for subject, and .060 (mild clustering) for item, confirming that these data were appropriate for linear mixed modeling.
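The formulas behind the intraclass correlation coefficients and pseudo-R² are not spelled out above. The sketch below uses common definitions as an assumption: each ICC is one random-intercept variance divided by the total variance (both random-intercept variances plus the residual variance), and pseudo-R² is the proportional reduction in residual variance relative to a baseline model, here the model with only the subject grouping variable. The variance components in the usage lines are hypothetical, not estimates from these data.

```python
def icc(var_target, *other_vars):
    """Share of total variance due to one grouping factor (random intercept).

    other_vars: the remaining variance components, e.g., the other grouping
    factor's intercept variance and the residual variance.
    """
    return var_target / (var_target + sum(other_vars))


def pseudo_r2(resid_var_baseline, resid_var_full):
    """Proportional reduction in residual variance relative to a baseline model."""
    return (resid_var_baseline - resid_var_full) / resid_var_baseline


# Hypothetical variance components (not estimates from the present data).
var_subject, var_item, var_resid = 0.020, 0.004, 0.044
print(round(icc(var_subject, var_item, var_resid), 3))  # ICC for subject
print(round(icc(var_item, var_subject, var_resid), 3))  # ICC for item
print(round(pseudo_r2(0.072, 0.044), 3))  # baseline vs. full residual variance
```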

We ran the linear mixed model with restricted maximum likelihood, using the lmer function of the lme4 package (Bates, Maechler, Bolker, & Walker, 2015) in R (R Core Team, 2016). We obtained regression weights using the summary function of the lme4 package, F statistics and p values for omnibus effects (Satterthwaite approximation) using the anova function of the car and lmerTest packages (Fox & Weisberg, 2010), and normed means, standard deviations, and standard errors using the summarySEwithin function of the Rmisc package (Morey, 2008).

Although the omnibus main effects and interactions were obtained by running the model in the anova() function, the investigation of simple effects and interactions required a combination of Helmert and dummy coding. Helmert coding allowed us to decompose the omnibus main effects of event structure and of duration into specific comparisons. The Helmert-coding contrast matrix for event structure had three rows, one for each level (coherent, incoherent, repeated), and two columns:

$$C_{\text{event}} = \begin{bmatrix} 1/2 & -1/3 \\ -1/2 & -1/3 \\ 0 & 2/3 \end{bmatrix}$$

In this coding scheme, the first column compared the coherent sequences to the incoherent sequences, and the second column compared the repeated sequences to the average of the coherent and incoherent sequences. The Helmert-coding contrast matrix for duration (rows: 12 s, 3.5 s, 7 s) was

$$C_{\text{duration}} = \begin{bmatrix} 2/3 & 0 \\ -1/3 & -1/2 \\ -1/3 & 1/2 \end{bmatrix}$$

so that the 3.5-s sequences were compared to the 7-s sequences, and the 12-s sequences were compared to the average of the 7-s and 3.5-s sequences.
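To see why these particular weights are convenient: in a balanced design with zero-sum, mutually orthogonal contrast columns, the ordinary least squares coefficient for a column c is Σ c_k μ_k / Σ c_k², where μ_k are the level means. The Python sketch below (exact fractions) checks that both Helmert matrices have zero-sum, orthogonal columns and that, with these weights, each coefficient equals the mean difference described in the text. The row order (coherent, incoherent, repeated; 12 s, 3.5 s, 7 s) is inferred from the comparisons described and matches R's default alphabetical ordering of factor levels; the level means are hypothetical. With unbalanced data and random effects, the mixed-model estimates would not reduce exactly to this formula.

```python
from fractions import Fraction as F

# Helmert contrast matrices; rows are factor levels in the assumed order.
EVENT_LEVELS = ["coherent", "incoherent", "repeated"]
EVENT_HELMERT = [[F(1, 2), F(-1, 3)],
                 [F(-1, 2), F(-1, 3)],
                 [F(0), F(2, 3)]]
DURATION_LEVELS = ["12 s", "3.5 s", "7 s"]
DURATION_HELMERT = [[F(2, 3), F(0)],
                    [F(-1, 3), F(-1, 2)],
                    [F(-1, 3), F(1, 2)]]


def column(matrix, j):
    return [row[j] for row in matrix]


def is_orthogonal_contrast(matrix):
    """True if both columns sum to zero and are orthogonal to each other."""
    c0, c1 = column(matrix, 0), column(matrix, 1)
    return sum(c0) == 0 and sum(c1) == 0 and sum(a * b for a, b in zip(c0, c1)) == 0


def contrast_coefficient(matrix, j, level_means):
    """OLS coefficient of contrast column j in a balanced design: sum(c*mu) / sum(c^2)."""
    c = column(matrix, j)
    return sum(ck * mk for ck, mk in zip(c, level_means)) / sum(ck * ck for ck in c)


# Hypothetical level means of ratio scores (coherent, incoherent, repeated).
means = [F(95, 100), F(93, 100), F(98, 100)]
print(contrast_coefficient(EVENT_HELMERT, 0, means))  # coherent - incoherent → 1/50
print(contrast_coefficient(EVENT_HELMERT, 1, means))  # repeated - mean(others) → 1/25
```

The ±1/2 and 2/3, −1/3 weights are what make each coefficient read directly as a difference between means rather than a rescaled one.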

The combination of Helmert and dummy coding allowed us to characterize the omnibus interactions by observing the effect of one factor at each level of another factor. For example, to characterize the Oddball × Event Structure interaction, we observed the simple effect of oddball at each level of event structure. To do this, we Helmert-coded oddball using the contrast matrix [−.5, .5] and dummy-coded event structure in three different ways, making each of its levels the reference group in turn. With rows ordered coherent, incoherent, repeated, the three dummy-coding matrices were

$$D_{\text{repeated}} = \begin{bmatrix} 0 & 1 \\ 1 & 0 \\ 0 & 0 \end{bmatrix}, \quad D_{\text{coherent}} = \begin{bmatrix} 0 & 0 \\ 1 & 0 \\ 0 & 1 \end{bmatrix}, \quad D_{\text{incoherent}} = \begin{bmatrix} 1 & 0 \\ 0 & 0 \\ 0 & 1 \end{bmatrix}$$

When the repeated-sequence level was the reference group, the model revealed the effect of oddball for only the repeated sequences; when the coherent-sequence level was the reference group, it revealed the effect of oddball for only the coherent sequences; and when the incoherent-sequence level was the reference group, it revealed the effect of oddball for only the incoherent sequences. By keeping oddball Helmert-coded while making each level of event structure the reference group in turn, we revealed the differential effects of oddball when the sequences were repeated, coherent, or incoherent.
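The logic of switching reference groups can be checked on cell means. The Python sketch below fits the saturated Oddball × Event Structure regression to six hypothetical cell means, with oddball coded −.5/.5 and event structure dummy-coded with each of the three matrices given in the text (rows assumed to be ordered coherent, incoherent, repeated). Because the model is saturated, the fit is exact, and the oddball coefficient equals the oddball effect (present minus absent) at whichever level is the reference group. This illustrates the coding only; it is not a substitute for the mixed model with random effects used in the study, and the small Gauss–Jordan solver is a generic helper written for the example.

```python
from fractions import Fraction as F

LEVELS = ["coherent", "incoherent", "repeated"]  # assumed row order
# Dummy-coding matrices from the text; the reference level's row is all zeros.
DUMMY = {
    "repeated": [[0, 1], [1, 0], [0, 0]],
    "coherent": [[0, 0], [1, 0], [0, 1]],
    "incoherent": [[1, 0], [0, 0], [0, 1]],
}
ODDBALL = {"absent": F(-1, 2), "present": F(1, 2)}  # the [-.5, .5] coding


def solve(a, b):
    """Solve a square, nonsingular linear system exactly (Gauss-Jordan)."""
    n = len(a)
    m = [list(map(F, row)) + [F(v)] for row, v in zip(a, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [row[-1] for row in m]


def oddball_coefficient(cell_means, reference):
    """Fit intercept, oddball, 2 dummies, and 2 interaction terms to the 2 x 3
    cell means; return the oddball coefficient (its effect at `reference`)."""
    rows, targets = [], []
    for i, level in enumerate(LEVELS):
        d1, d2 = DUMMY[reference][i]
        for label, o in ODDBALL.items():
            rows.append([1, o, d1, d2, o * d1, o * d2])
            targets.append(cell_means[(label, level)])
    return solve(rows, targets)[1]


# Hypothetical cell means of ratio scores.
means = {
    ("absent", "coherent"): F(94, 100), ("present", "coherent"): F(97, 100),
    ("absent", "incoherent"): F(93, 100), ("present", "incoherent"): F(97, 100),
    ("absent", "repeated"): F(97, 100), ("present", "repeated"): F(95, 100),
}
for ref in LEVELS:
    print(ref, oddball_coefficient(means, ref))
```

Because the oddball code is centered rather than 0/1, the same model parameterization yields the simple effect of oddball at the reference level of event structure, which is exactly what re-leveling exploits.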

Results and discussion

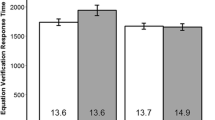

Figure 6 shows the Oddball × Task interaction, F(1, 7190) = 37.47, p < .0001. People verbally estimated the sequences that contained oddballs (M = 0.93, SD = 0.24, 95% CI [0.92, 0.94]) as shorter than the sequences that did not contain oddballs (M = 0.96, SD = 0.24, 95% CI [0.95, 0.97]), t(161) = −3.99, p = .0001. In contrast, people reproduced with musical imagery the sequences that contained oddballs (M = 0.97, SD = 0.29, 95% CI [0.96, 0.98]) as longer than the sequences that did not contain oddballs (M = 0.94, SD = 0.28, 95% CI [0.93, 0.96]), t(161) = 4.12, p < .0001. These results are in line with the dual-process contingency model, suggesting that the effect of oddballs on the subjective duration of sequences is contingent upon whether people are engaged in direct temporal processing (making verbal estimations) or indirect temporal processing (making musical imagery reproductions).

Fig. 6 Mean ratio scores (± 1 SEM) for the chord sequences that did and did not contain oddballs as a function of task in Experiment 1

As Fig. 6 illustrates, the mean ratio scores produced by the verbal estimations and the musical imagery reproductions differed, but both were below 1.0, indicating that the subjective durations of the sequences were shorter than their actual durations. Our primary research question, however, concerned relative subjective duration: whether the sequences that contained oddballs seemed shorter or longer than the sequences that did not contain oddballs, rather than whether the sequences seemed shorter or longer than their actual durations. We found that verbal estimations of the sequences that contained oddballs were shorter (more underestimated) than the verbal estimations of the sequences that did not contain oddballs, but the musical imagery reproductions of the sequences that contained oddballs were longer (less underestimated) than the musical imagery reproductions of the sequences that did not contain oddballs.

Figure 7 shows the omnibus Oddball × Event Structure interaction, F(2, 7189) = 3.04, p = .048. The effect of oddballs was more negatively related to the repeated sequences than the averaged coherent and incoherent sequences, β = −0.021, t(7241) = −2.46, SE = 0.009, p = .014. The difference between the subjective duration of the repeated sequences that contained oddballs (M = 0.95, 95% CI [0.94, 0.97]) and those that did not contain oddballs (M = 0.97, 95% CI [0.95, 0.98]) was not significant, t(329) = −1.86, p = .064. These results further support the dual-process contingency model, showing that information processing has a significant influence on how oddballs distort the subjective duration of sequences.

Fig. 7 Mean ratio scores (± 1 SEM) for the chord sequences that did and did not contain oddballs as a function of event structure in Experiment 1

Although the Oddball × Event Structure × Task interaction was not significant, F(2, 7189) = 1.71, p = .182, the influence of event structure on the effect of oddballs appeared to be isolated to the musical imagery reproduction block (see Fig. 8). When making musical imagery reproductions, the effect of oddballs was more negatively related to the repeated sequences than the averaged coherent and incoherent sequences, β = −0.032, t(7241) = −2.63, SE = 0.012, p = .009. People reproduced with musical imagery the incoherent sequences that contained oddballs as longer than those that did not contain oddballs, t(1113) = 4.12, p < .0001, and the coherent sequences that contained oddballs as longer than those that did not contain oddballs, t(1107) = 2.93, p = .003 (see also the Supplemental Materials at https://osf.io/v8jnb/).

Fig. 8 Mean ratio scores (± 1 SEM) for the chord sequences that did and did not contain oddballs as a function of task and event structure in Experiment 1

The analysis also yielded a main effect of event structure, F(2, 27) = 3.99, p = .030, and an Event Structure × Task interaction, F(1, 7190) = 9.81, p < .0001. People reproduced with musical imagery the repeated sequences (M = 0.98, SD = 0.27, 95% CI [0.96, 0.99]) as longer than the averaged coherent and incoherent sequences (M = 0.95, SD = 0.27, 95% CI [0.93, 0.96]), t(221) = 4.99, p < .0001; no significant differences emerged between the subjective duration of the repeated, coherent, and incoherent sequences when people were making verbal estimations. These results highlight the fact that the coherent and incoherent chord sequences that contained oddballs were reproduced with musical imagery as longer than those that did not contain oddballs, but no such effect occurred for the repeated sequences (see Fig. 8).

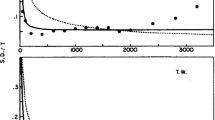

Finally, the analysis yielded a main effect of duration, F(2, 9) = 19.52, p < .001, and a Duration × Task interaction, F(2, 12) = 36.32, p < .0001. When making verbal estimations, the 12-s sequences (M = 0.90, SD = 0.22, 95% CI [0.89, 0.92]) were more underestimated than the averaged 3.5-s and 7-s sequences (M = 0.97, SD = 0.23, 95% CI [0.95, 0.98]), t(9) = 3.74, p = .005; the 7-s sequences (M = 0.94, 95% CI [0.92, 0.95]) were more underestimated than the 3.5-s sequences, t(9) = 3.14, p = .012. Similarly, when making musical imagery reproductions, the 12-s sequences (M = 0.90, SD = 0.22, 95% CI [0.89, 0.92]) were more underestimated than the averaged 3.5-s and 7-s sequences (M = 1.01, SD = 0.27, 95% CI [1.00, 1.02]), t(9) = −6.76, p < .0001; the difference between the 7-s sequences (M = 0.94, SD = 0.21, 95% CI [0.92, 0.95]) and the 3.5-s sequences was not significant, t(9) = −2.21, p = .054. These results exemplify Vierordt’s law (Lejeune & Wearden, 2009), showing that underestimations become more pronounced (ratio scores fall further below 1.0) as the actual durations of intervals lengthen. Furthermore, this tendency was more pronounced for the musical imagery reproductions than for the verbal estimations.

Overall, in Experiment 1, the task manipulation yielded a robust interaction in the direction predicted by the dual-process contingency model. The event structure manipulation yielded a similar pattern of effects, but it appeared to influence the effect of oddball only when people were making musical imagery reproductions.

Experiment 2

In Experiment 1, the influence of event structure on the effect of oddball appeared to be isolated to the musical imagery reproduction block: only when people were making musical imagery reproductions did an effect of event structure appear to emerge. To investigate this further, we conducted a second experiment that isolated the influence of event structure on the effect of oddball by having people make only musical imagery reproductions, not verbal estimations. Because we excluded verbal estimations from Experiment 2, we could not use task as a manipulation of information processing, as we did in Experiment 1. Instead, we manipulated information processing by varying the familiarity of the chord sequences, including half of them in a pretrial exposure phase. As described below, we ran a preliminary study to determine the number of times that each chord sequence needed to be presented in the exposure phase to become sufficiently familiar.

Familiar, predictable chord sequences encourage more direct temporal processing than unpredictable ones. We familiarized people with half of the sequences, making the highly predictable (repeated) and moderately predictable (coherent) ones even more predictable, and the unpredictable (incoherent) ones less unpredictable. We thus expected that the effect of familiarity would compound with the effect of event structure: that the subjective duration-distorting effects of the repeated and coherent sequences would be stronger when they were familiar than when they were unfamiliar, but the subjective duration-distorting effects of the incoherent sequences would be stronger when they were unfamiliar than when they were familiar.

Method

Participants

Fifty-seven undergraduate students enrolled in General Psychology at the University of Arkansas volunteered to participate in this experiment in exchange for course credit. We excluded from the analysis the data of one participant for having abnormal hearing. The ages of the remaining 56 participants (38 females) ranged from 18 to 23 years (M = 19.66, SD = 1.25). None were music majors, but 10 had received formal musical training for at least 1 year, ranging from 1 to 11 years (M = 3.35, SD = 3.27). None had participated in Experiment 1. All of the participants gave informed consent before participating in this experiment. This experiment was approved by the University of Arkansas Institutional Review Board.

Stimuli

To preserve the overall duration of Experiment 1 (about 50 min), we shortened the actual durations of the 12-s and 7-s chord sequences to 8.5 s and 6 s, respectively, and excluded the 3.5-s ones. Whereas Experiment 1 included 72 unique chord sequences, Experiment 2 included 48. Half of the chord sequences were presented in the pretrial exposure phase, and the other half were not presented in the pretrial exposure phase (all other factors were counterbalanced). All other aspects of the stimuli in Experiment 2 were identical to those in Experiment 1.

Procedure

The first block of Experiment 2 was an exposure phase. Participants were presented with the on-screen instructions: “You will be presented with a series of musical clips. Please listen carefully to each one. Important tasks will follow.” We then presented 24 unique chord sequences played 12 times each in random order (totaling 288 chord sequences).
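The counterbalanced familiar/unfamiliar split and the randomized exposure list can be sketched as follows. This Python sketch is a hypothetical reconstruction: it assumes the 48 sequences form 12 cells of four (3 event structures × 2 oddball conditions × 2 durations) and that "counterbalanced" means half of each cell was assigned to the exposure phase. The sequence IDs and helper names are illustrative only; the study's actual assignment procedure is not reported.

```python
import itertools
import random

EVENTS = ["repeated", "coherent", "incoherent"]
ODDBALL = [False, True]
DURATIONS_S = [6.0, 8.5]
PER_CELL = 4  # hypothetical: 48 sequences / 12 design cells


def build_sequences():
    """One record per unique chord sequence, with a hypothetical ID."""
    return [
        {"id": f"{e}-{o}-{d}-{k}", "event": e, "oddball": o, "duration_s": d}
        for e, o, d in itertools.product(EVENTS, ODDBALL, DURATIONS_S)
        for k in range(PER_CELL)
    ]


def split_familiar(sequences, rng):
    """Pick half of each design cell for the exposure phase."""
    cells = {}
    for s in sequences:
        cells.setdefault((s["event"], s["oddball"], s["duration_s"]), []).append(s)
    familiar = []
    for members in cells.values():
        familiar.extend(rng.sample(members, len(members) // 2))
    return familiar


def exposure_playlist(familiar, repetitions=12, rng=None):
    """Each familiar sequence `repetitions` times, in random order."""
    rng = rng or random.Random()
    playlist = [s["id"] for s in familiar for _ in range(repetitions)]
    rng.shuffle(playlist)
    return playlist


rng = random.Random(2024)
familiar = split_familiar(build_sequences(), rng)
playlist = exposure_playlist(familiar, rng=rng)
print(len(familiar), len(playlist))
```

Sampling within cells rather than across the whole set keeps familiarity from being confounded with event structure, oddball, or duration.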

In a preliminary study, we presented participants with 12 unique chord sequences played 12 times each in random order. Following the exposure phase, participants made familiarity ratings for each of 24 unique chord sequences (half of which had been presented in the exposure phase). The chord sequences presented in the exposure phase were rated as significantly more familiar than the chord sequences that were not presented in the exposure phase. We interpreted this finding as evidence that 12 repetitions were sufficient to elevate familiarity. We thus used 12 repetitions in Experiment 2.

In the second block of Experiment 2, participants reproduced with musical imagery each of the 48 unique chord sequences (half of which had been presented in the exposure phase). Immediately upon the closure of the chord sequence in each trial, participants were presented with the on-screen instructions: “Imagine that same clip playing back in your head. Replay it the same way you heard it, from beginning to end. Left-click to begin your imagined clip, then right-click when it ends.” All other aspects of the procedure were identical to those in Experiment 1.

Data analysis

The within-subjects fixed effects were oddball, event structure, familiarity, and duration. The between-subjects fixed effect was formal musical training. The random effects were subject and item. After excluding 15 outliers using the ESD method, the data consisted of 2,673 approximately normally distributed ratio scores (skewness = −0.05; kurtosis = 1.78). The final converged omnibus model included the random slope of oddball with the subject grouping variable, and the random slopes of event structure and familiarity with the item grouping variable (see Appendix). Pseudo-R² was .050, and the intraclass correlation coefficients for subject and item were .352 and .045, respectively. All other aspects of the data analysis were identical to those in Experiment 1.

Results and discussion

Figure 9 shows the omnibus Oddball × Event Structure interaction, F(2, 2536) = 8.02, p < .001. The effect of oddballs was more negatively related to the repeated sequences than the averaged coherent and incoherent sequences, β = −0.058, t(2535) = −3.99, SE = 0.015, p < .0001. People reproduced with musical imagery the repeated sequences that contained oddballs (M = 0.91, 95% CI [0.89, 0.93]) as shorter than those that did not contain oddballs (M = 0.95, 95% CI [0.93, 0.96]), t(329) = −2.60, p = .014. In contrast, people reproduced with musical imagery the incoherent sequences that contained oddballs (M = 0.93, 95% CI [0.91, 0.95]) as longer than those that did not contain oddballs (M = 0.90, 95% CI [0.88, 0.92]), t(329) = 2.14, p = .040. The difference between the subjective duration of the coherent sequences that did and did not contain oddballs was not significant, t(327) = 1.60, p = .120. This omnibus Oddball × Event Structure interaction is similar to that found in Experiment 1, showing that the effect of oddball was shaped by the mode of information processing people were engaged in, and further supporting the dual-process contingency model.

Fig. 9 Mean ratio scores (± 1 SEM) for the chord sequences that did and did not contain oddballs as a function of event structure in Experiment 2

We also found a main effect of duration, F(1, 2) = 35.53, p = .026. The 8.5-s sequences (M = 0.88, 95% CI [0.87, 0.89]) were more underestimated than the 6-s sequences (M = 0.97, 95% CI [0.95, 0.98]). This result is similar to the main effect of duration found in Experiment 1, showing that underestimations become more exaggerated as the actual durations of intervals lengthen.

General discussion

Until now, the temporal oddball illusion has been studied only with respect to the subjective duration of single events. The present research asked: Does the finding that individual oddballs seem longer than individual standards translate to entire event sequences? Is this potential translation influenced by whether people are engaged in direct temporal or indirect temporal processing? In two experiments, we tested whether chord sequences that contain oddballs seem longer than chord sequences that do not contain oddballs, and we manipulated whether people were engaged in direct temporal or indirect temporal processing while listening to the chord sequences by independently varying the task people completed (Experiment 1), the event structure of the sequences (Experiments 1 and 2), and the familiarity of the sequences (Experiment 2). Overall, the present findings suggest that the answer to the first question is maybe, and to the second question, yes. The sequences that contained oddballs sometimes seemed longer, and at other times shorter, than the sequences that did not contain oddballs, and this depended on the type of information processing people were engaged in. When people were engaged in direct temporal processing, the sequences that contained oddballs seemed shorter than the sequences that did not contain oddballs. But the opposite was true when people were engaged in indirect temporal processing: the sequences that contained oddballs seemed longer than the sequences that did not contain oddballs.

Experiment 1 yielded a robust Oddball × Task interaction: When people were making verbal estimates, the sequences that contained oddballs seemed shorter than the sequences that did not contain oddballs, but when people were making musical imagery reproductions, the sequences that contained oddballs seemed longer than the sequences that did not contain oddballs. Experiment 1 also yielded an Oddball × Event Structure interaction, which showed that people were more likely to experience the sequences that contained oddballs as shorter than the sequences that did not contain oddballs when the sequences were repeated than when they were coherent or incoherent. Experiment 2 yielded a similar Oddball × Event Structure interaction. The repeated sequences that contained oddballs seemed shorter than those that did not, but the incoherent sequences that contained oddballs seemed longer than those that did not. It is important to note that the presence or absence of oddballs affected only the degree to which people underestimated the sequences, as evidenced by mean ratio scores below 1.0.

Information processing manipulations

Task

In Experiment 1, we induced direct temporal processing by asking people to make verbal estimations and indirect temporal processing by having them make musical imagery reproductions. The robust Oddball × Task interaction shown in Fig. 6 indicates that this manipulation altered the subjective duration of the musical excerpts. The chord sequences containing oddballs seemed shorter than those that did not when reported by verbal estimation, but longer when reproduced with musical imagery. One limitation of the task manipulation was that we could not randomize the order of the blocks in Experiment 1. Asking some participants to complete verbal estimations first would have alerted them to our interest in subjective time, contaminating their performance on the musical imagery reproduction task. Future research could determine whether order serves as a confound by using a fully between-subjects design, assigning only one task to each participant. Two factors, in any case, make it unlikely that the present results are attributable to an order effect. First, the shift in the oddball slopes between tasks in Experiment 1 was particularly robust. Second, the musical imagery reproductions in Experiment 1 were made in the first experimental block, whereas those in Experiment 2 were made after a 33-min exposure phase, yet both sets of musical imagery reproductions yielded the same overall pattern of effects: an Event Structure × Oddball interaction.

Event structure

We manipulated information processing by varying event structure in both Experiments 1 and 2 (see Fig. 1; see also Brown & Boltz, 2002; Cai et al., 2015; Zakay, 1993). This manipulation affected the subjective duration of sequences with oddballs, as evidenced by an Event Structure × Oddball interaction in both experiments. We used repeated sequences to encourage direct temporal processing because they confirm low-level expectations (Dehaene et al., 2001) and are processed more efficiently than coherent and incoherent sequences (Zakay, 1993), leaving more attentional resources available to track the passage of time. The repeated sequences that did not contain oddballs were reproduced with musical imagery as longer than the coherent and incoherent ones, as evidenced by the Event Structure × Task interaction in Experiment 1. One possible explanation for this Event Structure × Task interaction is that the repeated chord sequences without oddballs seemed to last too long, or end too late (see Jones & Boltz, 1989), given their impoverished content. Another potential explanation is that the repeated sequences without oddballs thwarted musical expectations about harmonic change (we generally expect music to progress and develop; Bharucha, 1987; Margulis, 2005, 2014). This stasis could also have enhanced feelings of frustration, which have been shown to lengthen subjective duration (Miller, 1978). In Experiment 2, the repeated sequences that contained oddballs were reproduced with musical imagery as shorter than the repeated sequences that did not contain oddballs. One reason the same task did not produce this difference in Experiment 1 could be that Experiment 2’s exposure phase, which lasted approximately 33 min, made people bored. Given this preexisting state, the repeated sequences without oddballs might have seemed especially tedious, further inducing direct temporal processing.

Familiarity

In Experiment 2, we familiarized participants with half of the chord sequences in a pretrial exposure phase to enhance the predictability or expectedness of the chord sequences, reasoning that it would facilitate stimulus processing, free attention to focus on time, and increase direct temporal processing (Avant et al., 1975; Zakay, 1993). In contrast to our manipulations of task and event structure, familiarity did not interact with the effect of oddball. Familiarity also failed to impact time estimation in a number of previous experiments (e.g., Block, Hancock, & Zakay, 2010; Schiffman & Bobko, 1977). Past research suggests that large numbers of exposures are required for effects of preexposure, latent inhibition, and similarity to emerge (Kowal, 1987; Zakay, 1989). Experimental exposure phases, in our study and in other related work, might not provide enough “exposures” to produce reliable effects for familiarity. People ordinarily become deeply familiar with music over many listenings, over many years; we were limited to a single laboratory session. Future research might benefit from using preexisting music with which people are maximally familiar.

Theoretical implications

Dual-process contingency model

The main findings of the present research—the Oddball × Task and Oddball × Event Structure interactions—support the dual-process contingency model (Zakay, 1989, 1993). The dual-process contingency model predicted that the sequences containing oddballs would seem shorter than those not containing oddballs when people were engaged in direct temporal processing, but longer when people were engaged in indirect temporal processing. This is what we found. The sequences containing oddballs seemed shorter than those not containing oddballs when people were engaged in direct temporal processing—when making verbal estimates in Experiment 1, and when reproducing with musical imagery the repeated sequences in Experiment 2. In contrast, the sequences containing oddballs seemed longer than those not containing oddballs when people were engaged in indirect temporal processing—when reproducing with musical imagery the incoherent sequences in both Experiments 1 and 2. The dual-process contingency model asserts that temporal and nontemporal information are tightly linked, and that people engage in an interdependent level of temporal (direct temporal) and nontemporal (indirect temporal) information processing. The model defines engaging in temporal processing as attending to high amounts of temporal information relative to nontemporal information. Because certain amounts of temporal and nontemporal information are always processed (Zakay, 1989, 1993), we treated the distinction between temporal and nontemporal information processing as relative, a framework with which our results are consistent.

Attention-based and memory-based models

Although the dual-process contingency model integrates the tenets of both attention-based and memory-based models, they can also be considered separately in light of the present research. Attention-based and memory-based models both account for the findings of time estimation experiments, but in different contexts: attention-based models are used to explain prospective findings (involving direct temporal processing), whereas memory-based models are used to explain retrospective findings (involving indirect temporal processing; Block & Zakay, 1997). In the present experiments, attention-based models predicted that the sequences containing oddballs would seem shorter than those not containing oddballs, because oddballs should distract people from time and disrupt time-keeping behaviors. Memory-based models, on the other hand, predicted that sequences containing oddballs would seem longer than those not containing oddballs, because oddballs should increase the amount of nontemporal information encoded in and retrieved from memory. Our principal findings, the Oddball × Task and Oddball × Event Structure interactions, support the predictions of both attention-based and memory-based models, depending on which mode of information processing people were engaged in. Attention-based models were supported when people were engaged in direct temporal processing; memory-based models were supported when people were engaged in indirect temporal processing. These findings are in line with the meta-analytic findings of Block and Zakay (1997) and reflect the pattern of effects that motivated the dual-process contingency (and resource allocation) model (Zakay, 1989, 1993).

Pacemaker accumulator hypothesis

The present findings do not support the pacemaker accumulator hypothesis (Tse et al., 2004). The pacemaker accumulator hypothesis predicted that the sequences containing oddballs would seem longer than those not containing oddballs, regardless of event structure. But we found that the sequences containing oddballs seemed longer than those not containing oddballs only when people were reproducing with musical imagery the incoherent chord sequences, or engaged in indirect temporal (nontemporal information) processing. Considered in isolation, this finding raises the possibility that the temporal oddball illusion is driven by nontemporal (in contrast to temporal) information processing—that oddballs seem longer than standards because oddballs draw attention to their inherent nontemporal rather than temporal stimulus properties. This possibility is in line with the processing principle (Matthews & Meck, 2016), which emphasizes that the subjective duration of an individual stimulus event lengthens as a function of the amount of perceptual information extracted from the stimulus. Together with Matthews and Meck (2016), the present research reinforces the possibility that subjective durations of relatively short individual events are distorted by stimulus properties that are, in essence, nontemporal.

Coding efficiency hypothesis

The present findings do not support the coding efficiency hypothesis (Pariyadath & Eagleman, 2007, 2012), which predicted that the predictable (repeated) sequences containing oddballs would seem longer than those not containing oddballs, but that the subjective durations of the unpredictable (incoherent) sequences with and without oddballs would not differ. Instead, we found that the repeated sequences containing oddballs seemed shorter than those not containing oddballs, and that the incoherent sequences containing oddballs seemed longer than those not containing oddballs. The coding efficiency hypothesis also predicted that repetition suppression would make the repeated sequences seem shorter than the coherent and incoherent sequences. Contrary to this prediction, the Event Structure × Task interaction in Experiment 1 revealed that in the musical imagery reproduction block, the repeated sequences seemed longer than the coherent and incoherent ones.

Dynamic attending theory

Jones and Boltz (1989) proposed dynamic attending theory to explain how the structural compatibility of temporal and nontemporal stimulus information determines whether people attend to low-level characteristics (e.g., inherent properties of stimulus events) or high-level ones (e.g., anticipated ending times of sequences). Manipulating these expectations makes sequences with incoherent event structures that sound as though they end too early seem relatively short, and those that sound as though they end too late seem relatively long. Studies testing the tenets of dynamic attending theory have altered the structural coherence of musical sequences by varying both temporal accents (lengthening note durations) and nontemporal accents (varying pitch) to show how sequence event structure affects subjective duration. By contrast, we manipulated the structural coherence of sequences by varying nontemporal accents (harmonic changes) to show how sequence event structure interacts with attention-capturing expectancy violations (oddballs) to affect subjective duration. As in Brown and Boltz (2002), the coherent musical sequences did not seem longer than the incoherent ones, suggesting that temporal accents might need to be manipulated for differences to arise between the subjective durations of coherent and incoherent sequences. In both of the present experiments, we counterbalanced the two rhythmic patterns of the chord sequences (comprising quarter notes, eighth notes, and triplets) across all of the sequences and experimental factors in order to control for effects of entrainment. Nonetheless, entrainment could have played a role if people entrained to a slower beat in the musical imagery reproduction block than in the verbal estimation block. In this case, oddballs could have served to speed up people's relatively slow internal beat in the musical imagery reproduction block, but to slow down their relatively fast internal beat in the verbal estimation block.

Conclusion

Taken together, the present findings support the dual-process contingency model more than the pacemaker accumulator and coding efficiency hypotheses. The dual-process contingency model performs better because it accounts for the impact of information processing on subjective duration. By integrating the tenets of both attention-based and memory-based models of time estimation, it can explain effects on subjective duration in a variety of contexts. Moreover, the dual-process contingency model was developed to address subjective time at the scale of seconds to minutes, consistent with the stimulus lengths in our study, whereas the pacemaker accumulator and coding efficiency hypotheses were developed to address subjective time at shorter time scales (e.g., milliseconds). That is, the dual-process contingency model originated in the time estimation literature, whereas the pacemaker accumulator and coding efficiency hypotheses originated in the time perception literature.

Time estimation generally involves intervals longer than about 3 s, and time perception involves intervals shorter than about 3 s (Pöppel, 1997; see Fraisse, 1984, for a discussion of the distinctions between time estimation and time perception). Evidence suggests that common processes underlie the temporal representations of intervals longer and shorter than 3 s (Lewis & Miall, 2009; Rammsayer & Troche, 2014), and that different aspects of psychological time share neural pathways (Teki, Grube, & Griffiths, 2011; cf. Teki, Grube, Kumar, & Griffiths, 2011). Nonetheless, the majority of studies on psychological time have examined time perception in isolation, using as stimuli single events ranging only from milliseconds to a few seconds (Matthews & Meck, 2016). Further research should clarify the relationship between time estimation and time perception.

The present research tested an implementation of the temporal oddball illusion in a novel context using a novel methodology. We investigated whether the temporal oddball illusion translates from single events to multiple-event sequences, and whether this potential translation depends on information processing. The main findings were that the sequences containing oddballs could seem shorter or longer than those not containing oddballs, depending on whether people were engaged in direct or indirect temporal processing. These results support the dual-process contingency model and can be explained using the notion of an information processing continuum (Zakay, 1989, 1993): As attention shifted from counting seconds (direct temporal processing) to listening to music (indirect temporal processing), for example, the effect of oddballs shifted from decreasing the number of seconds counted to increasing the amount of music remembered.

Notes

An oddball is a deviant event (e.g., a sliding tone presented after a series of nonsliding tones); a standard is a repeated event (e.g., the nonsliding tone).

The omnibus model yielded no effects of formal musical training or oddball position, so these factors were excluded from subsequent contrasts.

References

Avant, L. L., Lyman, P. J., & Antes, J. R. (1975). Effects of stimulus familiarity upon judged visual duration. Attention, Perception, & Psychophysics, 17, 253–262. https://doi.org/10.3758/BF03203208

Baayen, R. H., Davidson, D. J., & Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59, 390–412. https://doi.org/10.1016/j.jml.2007.12.005

Barr, D. J., Levy, R., Scheepers, C., & Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68, 255–278. https://doi.org/10.1016/j.jml.2012.11.001

Bates, D., Maechler, M., Bolker, B., Walker, S., Christensen, R. H. B., & Singmann, H. (2015). lme4: Linear mixed-effects models using Eigen and S4 (R package version 1).

Bharucha, J. J. (1987). Music cognition and perceptual facilitation: A connectionist framework. Music Perception, 5, 1–30. https://doi.org/10.2307/40285384

Birngruber, T., Schröter, H., & Ulrich, R. (2014). Duration perception of visual and auditory oddball stimuli: Does judgment task modulate the temporal oddball illusion? Attention, Perception, & Psychophysics, 76, 814–828. https://doi.org/10.3758/s13414-013-0602-2

Block, R. A. (1989). Experiencing and remembering time: Affordances, context, and cognition. Advances in Psychology, 59, 333–363. https://doi.org/10.1016/S0166-4115(08)61046-8

Block, R. A., & Zakay, D. (1997). Prospective and retrospective duration judgments: A meta-analytic review. Psychonomic Bulletin & Review, 4, 184–197. https://doi.org/10.3758/BF03209393

Block, R. A., George, E. J., & Reed, M. A. (1980). A watched pot sometimes boils: A study of duration experience. Acta Psychologica, 46, 81–94. https://doi.org/10.1016/0001-6918(80)90001-3

Block, R. A., Hancock, P. A., & Zakay, D. (2010). How cognitive load affects duration judgments: A meta-analytic review. Acta Psychologica, 134, 330–343. https://doi.org/10.1016/j.actpsy.2010.03.006

Boltz, M. G. (1998). The processing of temporal and nontemporal information in the remembering of event durations and musical structure. Journal of Experimental Psychology: Human Perception and Performance, 24, 1087. https://doi.org/10.1037/0096-1523.24.4.1087

Brown, S. W. (1985). Time perception and attention: The effects of prospective versus retrospective paradigms and task demands on perceived duration. Attention, Perception, & Psychophysics, 38, 115–124. https://doi.org/10.3758/BF03198848

Brown, S. W., & Boltz, M. G. (2002). Attentional processes in time perception: Effects of mental workload and event structure. Journal of Experimental Psychology: Human Perception and Performance, 28, 600. https://doi.org/10.1037/0096-1523.28.3.600

Cai, M. B., Eagleman, D. M., & Ma, W. J. (2015). Perceived duration is reduced by repetition but not by high-level expectation. Journal of Vision, 15(13), 1–17. https://doi.org/10.1167/15.13.19

Coull, J. T., & Nobre, A. C. (2008). Dissociating explicit timing from temporal expectation with fMRI. Current Opinion in Neurobiology, 18, 137–144. https://doi.org/10.1016/j.conb.2008.07.011

Debener, S., Kranczioch, C., Herrmann, C. S., & Engel, A. K. (2002). Auditory novelty oddball allows reliable distinction of top-down and bottom-up processes of attention. International Journal of Psychophysiology, 46, 77–84. https://doi.org/10.1016/S0167-8760(02)00072-7

Dehaene, S., Naccache, L., Cohen, L., Le Bihan, D., Mangin, J. F., Poline, J. B., & Rivière, D. (2001). Cerebral mechanisms of word masking and unconscious repetition priming. Nature Neuroscience, 4, 752–758. https://doi.org/10.1038/89551

Fox, J., & Weisberg, S. (2010). Time-series regression and generalized least squares in R, an Appendix to An R Companion to Applied Regression (2nd ed.). New York, NY: Sage. Available: http://socserv.mcmaster.ca/jfox/Books/Companion/appendix/Appendix-Timeseries-Regression.pdf

Fraisse, P. (1984). Perception and estimation of time. Annual Review of Psychology, 35, 1–36. https://doi.org/10.1146/annurev.ps.35.020184.000245

Grahn, J. A., & Brett, M. (2007). Rhythm and beat perception in motor areas of the brain. Journal of Cognitive Neuroscience, 19, 893–906. https://doi.org/10.1162/jocn.2007.19.5.893

Grondin, S. (2008). Methods for studying psychological time. In S. Grondin (Ed.), Psychology of Time (pp. 51–74). Bingley: Emerald.

Grondin, S. (2010). Timing and time perception: A review of recent behavioral and neuroscience findings and theoretical directions. Attention, Perception, & Psychophysics, 72, 561–582. https://doi.org/10.3758/APP.72.3.561

Grondin, S., & Killeen, P. R. (2009). Tracking time with song and count: Different Weber functions for musicians and nonmusicians. Attention, Perception, & Psychophysics, 71, 1649–1654. https://doi.org/10.3758/APP.71.7.1649

Halpern, A. R. (1988a). Mental scanning in auditory imagery for songs. Journal of Experimental Psychology: Learning, Memory, and Cognition, 14, 434. https://doi.org/10.1037/0278-7393.14.3.434

Halpern, A. R. (1988b). Perceived and imagined tempos of familiar songs. Music Perception, 6, 193–202. https://doi.org/10.2307/40285425

Halpern, A. R., & Zatorre, R. J. (1999). When that tune runs through your head: A PET investigation of auditory imagery for familiar melodies. Cerebral Cortex, 9, 697–704. https://doi.org/10.1093/cercor/9.7.697

Hornstein, A. D., & Rotter, G. S. (1969). Research methodology in temporal perception. Journal of Experimental Psychology, 79, 561. https://doi.org/10.1037/h0026870

Huettel, S. A., Mack, P. B., & McCarthy, G. (2002). Perceiving patterns in random series: Dynamic processing of sequence in prefrontal cortex. Nature Neuroscience, 5, 485–490. https://doi.org/10.1038/nn841

Huron, D. B., & Margulis, E. H. (2010). Musical expectancy and thrills. In P. N. Juslin & J. A. Sloboda (Eds.), Handbook of music and emotion: Theory, research, applications (pp. 575–604). New York: Oxford University Press. https://doi.org/10.1093/acprof:oso/9780199230143.003.0021

Janata, P., & Paroo, K. (2006). Acuity of auditory images in pitch and time. Attention, Perception, & Psychophysics, 68, 829–844. https://doi.org/10.3758/BF03193705

Jarvis, B. G. (2014). DirectRT (Version 2014.1.127). New York: Empirisoft Corporation.

Jones, M. R., & Boltz, M. (1989). Dynamic attending and responses to time. Psychological Review, 96, 459. https://doi.org/10.1037/0033-295X.96.3.459

Kahneman, D. (1973). Attention and effort. Englewood Cliffs: Prentice Hall.

Kowal, K. H. (1987). Apparent duration and numerosity as a function of melodic familiarity. Attention, Perception, & Psychophysics, 42, 122–131. https://doi.org/10.3758/BF03210500

Lejeune, H., & Wearden, J. H. (2009). Vierordt’s The experimental study of the time sense (1868) and its legacy. European Journal of Cognitive Psychology, 21, 941–960. https://doi.org/10.1080/09541440802453006

Lerdahl, F., & Jackendoff, R. (1983). A generative theory of tonal music. Cambridge: MIT Press.

Levitin, D. J., & Cook, P. R. (1996). Memory for musical tempo: Additional evidence that auditory memory is absolute. Attention, Perception, & Psychophysics, 58, 927–935. https://doi.org/10.3758/BF03205494

Lewis, P. A., & Miall, R. C. (2009). The precision of temporal judgement: Milliseconds, many minutes, and beyond. Philosophical Transactions of the Royal Society of London Series B, Biological Sciences, 364, 1897–1905. https://doi.org/10.1098/rstb.2009.0020

Margulis, E. H. (2005). A model of melodic expectation. Music Perception, 22, 663–714. https://doi.org/10.1525/mp.2005.22.4.663

Margulis, E. H. (2014). On repeat: How music plays the mind. New York: Oxford University Press.

Matthews, W. J., & Gheorghiu, A. I. (2016). Repetition, expectation, and the perception of time. Current Opinion in Behavioral Sciences, 8, 110–116. https://doi.org/10.1016/j.cobeha.2016.02.019

Matthews, W. J., & Meck, W. H. (2016). Temporal cognition: Connecting subjective time to perception, attention, and memory. Psychological Bulletin, 142, 865–907. https://doi.org/10.1037/bul0000045

McAuley, J. D., & Fromboluti, E. K. (2014). Attentional entrainment and perceived event duration. Philosophical Transactions of the Royal Society of London B: Biological Sciences, 369. https://doi.org/10.1098/rstb.2013.0401