Abstract

A number of studies have recently examined the link between individual differences in media multitasking (as measured by the Media Multitasking Index [MMI]) and performance on working memory paradigms. However, these studies have yielded mixed results. Here we examine the relation between media multitasking and one particular working memory paradigm, the n-back (2- and 3-back), improving upon previous research by (a) treating media multitasking as a continuous variable and adopting a correlational approach and (b) using a large sample of participants. First, we found that higher scores on the MMI were associated with a greater proportion of omitted trials on both the 2-back and 3-back, indicating that heavier media multitaskers were more disengaged during the n-back. In line with this claim, heavier media multitaskers were also more likely to admit to responding randomly during various portions of the experiment and to report media multitasking during the experiment itself. Importantly, when controlling for the relation between MMI scores and omissions, higher scores on the MMI were associated with an increase in false alarms, but not with a change in hits. These findings refine the extant literature on media multitasking and working memory performance (specifically, performance on the n-back) and suggest that media multitasking may be related to the propensity to disengage from ongoing tasks.


Over the past decade, there has been a mounting interest in the common, everyday behavior known as media multitasking. Media multitasking refers to the concurrent use of multiple forms of media (such as listening to music while writing a manuscript; Ophir, Nass, & Wagner, 2009; Rideout, Foehr, & Roberts, 2010) and has typically been explored using an individual-differences approach wherein people’s tendency to engage in media multitasking in daily life is assessed via the Media Multitasking Index (MMI; Ophir et al., 2009). This research has tended to focus on determining whether higher levels of media multitasking (i.e., higher scores on the MMI) are associated with benefits or deficits to various cognitive traits (Alzahabi & Becker, 2013; Cain, Leonard, Gabrieli, & Finn, 2016; Cain & Mitroff, 2011; Cardoso-Leite, Kludt, Vignola, Ma, Green, & Bavelier, 2016; Lui & Wong, 2012; Minear, Brasher, McCurdy, Lewis, & Younggren, 2013; Ophir et al., 2009; Ralph, Thomson, Seli, Carriere, & Smilek, 2015; Sanbonmatsu, Strayer, Medeiros-Ward, & Watson, 2013; Uncapher, Thieu, & Wagner, 2016) and personality traits (e.g., Pea et al., 2012; Sanbonmatsu et al., 2013; Shih, 2013; Wilmer & Chein, 2016). One such cognitive trait that researchers have examined in the context of media multitasking, and one that we focus on in the current paper, is working-memory capacity.

To date, when examining the association between media multitasking and working memory, researchers have employed a number of ostensible working-memory paradigms including the n-back (Cain et al., 2016; Cardoso-Leite et al., 2016; Ophir et al., 2009), Vogel, McCollough, and Machizawa’s (2005) filtering task (Cardoso-Leite et al., 2016; Ophir et al., 2009; Uncapher et al., 2016; see Cain et al., 2016, for a similar version), the AX-continuous performance task (AX-CPT; Cardoso-Leite et al., 2016; Ophir et al., 2009), a probed item-recognition task (Minear et al., 2013), as well as the operation span task (OSPAN; Sanbonmatsu et al., 2013) and reading span task (RSPAN; Minear et al., 2013). The extant studies are in full agreement that heavy media multitaskers do not perform better on working memory tasks than do light media multitaskers. Unfortunately, aside from this general point, there has been considerable variability in the findings so far. Specifically, there have been discrepancies in conclusions drawn across studies using different working memory tasks as well as discrepancies in results across studies using the same working memory task.

In terms of inconsistencies in conclusions drawn from studies using different working memory tasks, performance on some tasks suggests that higher levels of media multitasking are associated with poorer working memory, whereas performance on other tasks suggests no such relation. For instance, Ophir et al. (2009) initially found that heavier media multitaskers (as identified by the MMI) generally performed more poorly on the n-back, a filtering task, and the AX-CPT than did lighter multitaskers. In contrast, Minear et al. (2013) found no such differences when indexing working memory via the RSPAN or a probed item-recognition task.

Variable findings with regard to associations between media multitasking and different working memory paradigms may not be all that surprising. After all, each working memory paradigm is unique in some way, relying at least to some extent on a different subset of cognitive processes. For example, while complex span tasks such as the OSPAN and serial-recognition tasks such as the n-back are both well-known putative tests of working memory, the two measures have only been found to weakly correlate, with more unique than shared variance when predicting general fluid intelligence (e.g., Kane, Conway, Miura, & Colflesh, 2007; Oberauer, 2005). The result is that variable relations between media multitasking and working memory across different working-memory paradigms become difficult to interpret. It is unclear whether the apparent inconsistencies—for example, between Ophir et al. (2009) and Minear et al. (2013)—are due to (a) the different types of cognitive processes engaged by the various working-memory paradigms (e.g., filtering vs. updating vs. recall vs. recognition) and/or to (b) spurious results in one of the studies.

Variable findings when the same working-memory paradigm is used are substantially more problematic. Consider the n-back, a putative test of the ability to maintain and update information within working memory, wherein participants are required to indicate whether the current stimulus matches the stimulus presented n items back. In three different studies (Cain et al., 2016; Cardoso-Leite et al., 2016; Ophir et al., 2009), individual differences in media multitasking were measured using the MMI (Ophir et al., 2009), and in each study, n-back performance was assessed via hits and false alarms across various cognitive loads. Yet each of the three studies arrived at a different conclusion based on a different pattern of results. Whereas Ophir et al. (2009) found that heavier multitaskers produced more false alarms than did light multitaskers, Cain et al. (2016) and Cardoso-Leite et al. (2016) found no such differences. At the same time, whereas Cain et al. (2016) found that heavier multitaskers produced fewer hits than did light multitaskers, this result was not observed by Ophir et al. (2009) or Cardoso-Leite et al. (2016). Finally, although both Cain et al. (2016) and Ophir et al. (2009) found differences in hit or false-alarm rates between heavy and light media multitaskers, Cardoso-Leite et al. (2016) found no differences in either measure. Thus, according to Ophir et al. (2009), heavy media multitasking is associated with a deficit in inhibiting responses to familiar stimuli, but not with a deficit in the ability to maintain and update information in working memory. In contrast, Cain et al. (2016) posit that heavy media multitasking is associated with deficits in maintaining and updating information within working memory, but not with deficits in inhibiting responses to familiar stimuli. Lastly, Cardoso-Leite et al. (2016) found no clear link between media multitasking and either the inhibition or the updating difficulties reported by the other two groups of researchers.

One perhaps obvious question at this point is: Why might these studies have found such variable results, especially when using the same measures of media multitasking (the MMI) and working memory (e.g., the n-back)? Such inconsistent results are certainly not unique to the media-multitasking literature; in fact, the media-multitasking literature appears to be facing a problem similar to that recently experienced by the video-gaming literature (see Unsworth et al., 2015). In particular, many investigations of media multitasking suffer from two important shortcomings: (1) the use of an extreme-groups approach, and (2) the use of small sample sizes, which tend to produce overestimated effect sizes and to capitalize on chance findings.

With respect to the first shortcoming (i.e., the use of an extreme-groups approach), it is interesting to note that in each investigation of media multitasking, researchers have consistently found no bimodal distribution of “heavy media multitaskers” and “light media multitaskers.” Despite this observation, many researchers in the media-multitasking literature have adopted an extreme-groups approach (as in the video-game literature), comparing “heavy” and “light” media multitaskers (HMMs vs. LMMs), that is, participants whose MMI scores fall one standard deviation above or below the mean (Cardoso-Leite et al., 2016; Ophir et al., 2009; Uncapher et al., 2016) or in the upper and lower quartiles of the MMI distribution (Alzahabi & Becker, 2013; Cain & Mitroff, 2011; Minear et al., 2013). One problem with this extreme-groups approach is that participants within each extreme group (i.e., HMMs vs. LMMs) are all treated as equivalent, when they likely are not. Indeed, the range in MMI scores between the upper bound of the light media-multitasking group (LMM) and the lower bound of the heavy media-multitasking group (HMM) has been reported to be smaller than the possible range within a particular extreme group (e.g., Cain & Mitroff, 2011; Cardoso-Leite et al., 2016; Minear et al., 2013; Ophir et al., 2009). A second problem with the extreme-groups approach is that a large amount of information is lost from the middle portion of the distribution. To reiterate, given that MMI scores are consistently found to be relatively normally distributed, there is no clear reason to discard data from individuals whose scores fall in the middle of the distribution when one could examine the entire distribution.
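To make the information loss concrete, here is a minimal Python sketch of a ±1 SD extreme-groups split. The function name `extreme_groups` and the toy scores are hypothetical; the last lines use the standard normal distribution to show roughly how much of a normally distributed sample such a split retains.

```python
import statistics

def extreme_groups(scores, k=1.0):
    """Split scores into light (LMM), middle, and heavy (HMM) groups at mean +/- k SD."""
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample standard deviation
    low_cut, high_cut = mean - k * sd, mean + k * sd
    groups = {"LMM": [], "middle": [], "HMM": []}
    for s in scores:
        if s <= low_cut:
            groups["LMM"].append(s)
        elif s >= high_cut:
            groups["HMM"].append(s)
        else:
            groups["middle"].append(s)  # discarded in an extreme-groups design
    return groups

scores = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]  # hypothetical MMI scores
groups = extreme_groups(scores)
discarded = len(groups["middle"]) / len(scores)
print({name: len(members) for name, members in groups.items()}, discarded)
# {'LMM': 2, 'middle': 6, 'HMM': 2} 0.6

# For normally distributed scores, a +/-1 SD split keeps only about 32% of the sample.
kept_if_normal = 2 * statistics.NormalDist().cdf(-1.0)
print(round(kept_if_normal, 3))  # 0.317
```

Under normality, roughly two thirds of participants fall between the cutoffs and would be dropped from an extreme-groups comparison.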

The second, perhaps more troubling and pervasive, shortcoming of many media-multitasking studies is that they often rely on small sample sizes. For example, in the n-back studies discussed earlier, Ophir et al. (2009) compared 15 HMMs to 15 LMMs, Cardoso-Leite et al. (2016) compared 20 LMMs to 12 HMMs, and Cain et al. (2016) conducted a correlational analysis on a sample of 58. Extending to media-multitasking papers using other paradigms, Minear et al. (2013) compared 36 LMMs to 33 HMMs on the RSPAN and, in another experiment, compared 27 HMMs to 26 LMMs on a recent-probes item-recognition task. In their singleton-distractor study, Cain and Mitroff (2011) also compared 21 HMMs to 21 LMMs. The core issue with such small sample sizes is that they can lead to gross overestimates of effect sizes, increasing the likelihood of finding spurious significant effects and thus decreasing the reproducibility of results (Button et al., 2013).
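To illustrate why samples of this size are a concern, a rough power calculation for a correlational design can use the Fisher z approximation, n ≈ ((z(1 - alpha/2) + z(power)) / atanh(r))² + 3. The minimal Python sketch below assumes a hypothetical true correlation of r = .20 (an illustrative value, not an estimate from any of the cited studies) and uses only the standard library; `required_n` and `approx_power` are hypothetical helper names.

```python
import math
from statistics import NormalDist

def required_n(r, alpha=0.05, power=0.80):
    """Approximate N to detect a true correlation r (two-tailed), via Fisher's z."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / math.atanh(r)) ** 2 + 3)

def approx_power(r, n, alpha=0.05):
    """Approximate two-tailed power for true correlation r and sample size n
    (ignores the negligible probability of significance in the opposite tail)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    return NormalDist().cdf(math.atanh(r) * math.sqrt(n - 3) - z_alpha)

print(required_n(0.20))  # 194
print(round(approx_power(0.20, 58), 2))
```

Under these assumptions, detecting r = .20 with 80% power requires roughly 194 participants, whereas a sample of 58 has only about a one-in-three chance of detecting it.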

In this study, we attempted to resolve the conflicting findings regarding the relation between media multitasking and one index of working memory: the n-back. We focused on the n-back because it (a) has shown considerable variability in its relation to media multitasking (as noted above) and (b) has been explored only with small samples and, in most cases, an extreme-groups approach. Given these limitations of prior media multitasking studies using the n-back, we were mindful to (1) collect a large sample (over 300 participants) and (2) treat media multitasking as a continuous variable, using a correlational approach to analyze the performance variables of interest (hits and false alarms). Last, we used two different, albeit highly similar, indices of media multitasking via two alternate versions of Ophir et al.’s (2009) MMI: one that has been used in most media multitasking studies (e.g., Cain et al., 2016; Cardoso-Leite et al., 2016; Minear et al., 2013; Ophir et al., 2009), and another that has been used less frequently (Ralph et al., 2015).

Method

Participants

Three hundred and seventeen participants (163 male, 154 female), ranging in age from 19 to 64 years (M = 32.86, SD = 9.11), signed up for the study via Amazon Mechanical Turk and received $3.00 as compensation for their time.

Stimuli and procedure

When participants signed up for the study, they first completed a demographics questionnaire as well as the MMI. Afterwards, participants performed a 2-back, followed by a 3-back in fixed order. Following the n-back, participants were asked to complete a second, alternate version of the MMI, as well as a few questions designed as compliance checks at the conclusion of the study.

Demographic information

The first part of the experiment involved participants responding to a few basic demographic questions, indicating their biological gender, age, highest level of education, combined annual household income, and whether or not they were currently employed (full time or part time) outside of Mechanical Turk. These questions are provided as supplementary material in Appendix A.

First media use questionnaire (MMI-1)

Participants then completed the original Media Multitasking Index (here referred to as MMI-1) as per Ophir et al. (2009). This survey, provided as supplementary material in Appendix B, is divided into two parts. In the first part, participants are asked to indicate, on average, how many hours per week they spend using 12 different forms of media: (1) print media, (2) television, (3) computer-based video (e.g., YouTube), (4) music, (5) nonmusic audio, (6) video/computer games, (7) telephone/cell phone voice calls, (8) instant messaging, (9) text messaging (SMS), (10) email, (11) Web surfing, and (12) other computer-based applications (e.g., Word). In the second part, participants complete a “multitasking matrix” in which they indicate, for each of the 12 media, how often they simultaneously use each of the other 11 media. Participants were told to enter 0 for never, 1 for a little of the time, 2 for some of the time, and 3 for most of the time. Each response is divided by three (so that each value is 0, .33, .67, or 1); for each of the 12 primary media, these values are summed across the other 11 media, and the sum is multiplied by the number of hours spent with that medium, as indicated in part one. The sum of these 12 weighted multitasking scores is then divided by the total number of hours spent engaging in all media (i.e., the sum of the hours indicated in part one) to produce the MMI score.

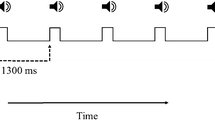
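The scoring steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the original authors' scoring script: `mmi` is a hypothetical function name, diagonal entries of the matrix (a medium paired with itself) are assumed to be ignored, and the toy example uses 3 media instead of 12 purely for readability.

```python
def mmi(hours, matrix):
    """
    Media Multitasking Index following the scoring steps described above.

    hours:  hours per week spent with each medium i.
    matrix: matrix[i][j] is the 0-3 response for how often medium j is used while
            using medium i (0 = never ... 3 = most of the time); matrix[i][i] is ignored.
    """
    total_hours = sum(hours)
    if total_hours <= 0:
        raise ValueError("total media hours must be positive")
    weighted_sum = 0.0
    for i, h_i in enumerate(hours):
        # Rescale each response to 0, 1/3, 2/3, or 1 and sum over the other media.
        m_i = sum(resp / 3 for j, resp in enumerate(matrix[i]) if j != i)
        weighted_sum += m_i * h_i
    return weighted_sum / total_hours

# Toy example with 3 media instead of 12 (hypothetical responses).
hours = [10, 5, 5]
matrix = [
    [0, 3, 0],  # while using medium 0: medium 1 "most of the time"
    [3, 0, 3],  # while using medium 1: media 0 and 2 "most of the time"
    [0, 0, 0],  # medium 2 is never combined with anything
]
print(mmi(hours, matrix))  # 1.0
```

Because the weights are hours, a medium that a person uses heavily contributes more to the index than one used only occasionally.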

N-back

After completing the MMI-1, participants performed the n-back. Our n-back consisted of a 2-back and a 3-back and was constructed following the design and specific stimulus sets used by Kane et al. (2007). As in Kane et al., we manipulated three factors: memory load (2-back vs. 3-back), stimulus type (target vs. nontarget), and sequence type (lure vs. nonlure). In the 2-back, targets were trials on which the current letter matched the letter presented two items back, whereas nontargets were trials on which it did not. Nontargets could be either lures or nonlures. For the 2-back, lures consisted of 1-back lures only (i.e., trials on which the current letter matched the letter presented one item back). Targets in nonlure sequences were not preceded by a 1-back lure, whereas targets in lure sequences were. Similarly, in the 3-back, targets were letters that matched the letter presented three items back, whereas nontargets were letters that did not. For the 3-back, lures consisted mostly of 2-back lures (i.e., the current letter matched the letter presented two items back), with one 1-back lure included per block of 48 trials. Targets in nonlure sequences were not preceded by a lure (1-back or 2-back), whereas targets in lure sequences were preceded by either a 1-back or a 2-back lure.
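The trial definitions above can be made concrete with a short Python sketch that labels each trial of a letter sequence. It assumes that a trial matching the letter n back is a target even if it also matches a more recent letter, and it labels any nontarget that matches a letter 1 to n - 1 positions back as a lure. The function name `classify_trials` is hypothetical, and the sketch does not separately flag targets preceded by a lure.

```python
def classify_trials(letters, n):
    """
    Label each trial of an n-back sequence as 'target', 'lure', or 'nonlure'.
    Target: the letter matches the one presented n positions back.
    Lure: a nontarget that matches a letter 1..n-1 positions back
          (e.g., a 1-back lure in the 2-back; 1- or 2-back lures in the 3-back).
    """
    labels = []
    for i, letter in enumerate(letters):
        if i >= n and letter == letters[i - n]:
            labels.append("target")
        elif any(i >= k and letter == letters[i - k] for k in range(1, n)):
            labels.append("lure")
        else:
            labels.append("nonlure")
    return labels

print(classify_trials(list("BFBKK"), 2))
# ['nonlure', 'nonlure', 'target', 'nonlure', 'lure']
```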

Participants completed four blocks of 48 trials for both the 2-back and 3-back. Stimuli consisted of eight phonologically distinct letters: B, F, K, H, M, Q, R, and X. Within each block of 48 trials, there were eight targets (16.67%) and 40 nontargets. Six of these targets were “nonlure targets” (i.e., they were not preceded by a 1-back lure), and two were “lure targets” (i.e., they were preceded by a 1-back lure). Of the 40 nontarget trials, 34 were nonlures and six were lures (i.e., 1-back lures). Each letter appeared six times within each block, including once as a target. All participants first completed four blocks of 2-back trials, followed by four blocks of 3-back trials.

Second media use questionnaire (MMI-2)

After completing the n-back, participants responded to a second version of the Media Multitasking Index (referred to as MMI-2). This version of the MMI has been used by our research team in previous papers (e.g., Ralph et al., 2015) and was obtained through Clifford Nass’s research website at Stanford University in December of 2012, shortly before his passing in 2013. When we originally accessed this version of the MMI, it was linked to the original paper, so we assumed that it was the measure used by Ophir et al. (2009). Having since become more familiar with the tools that other researchers use, we remain unsure how widely this version is used; in fact, we have not seen it used anywhere beyond our own research. However, it includes slightly different items and alternative groupings of items relative to the original MMI, which may be useful for comparing the predictive value of various forms of multitasking. A copy of the MMI-2 is provided as supplementary material in Appendix C. The MMI-2 addresses 10 groupings of activities (nine of them media-based): (1) face-to-face communication; (2) using print media (including print books, print newspapers, etc.); (3) texting, instant messaging, or emailing; (4) using social sites (e.g., Facebook, Twitter; excluding games); (5) using nonsocial text-oriented sites (e.g., online news, blogs, eBooks); (6) talking on the telephone or video chatting (e.g., Skype, iPhone video chat); (7) listening to music; (8) watching TV and movies (online and off-line) or YouTube; (9) playing video games or online games; and (10) doing homework/studying/writing papers.
For each activity, participants first indicate (a) how many hours they spend engaging in it on an average day (rather than an average week, as in the MMI-1), and then (b) the extent to which they simultaneously engage in each of the remaining nine activities (e.g., using a social site and listening to music), as well as the extent to which they perform a second activity of the same type (e.g., using a social site and using a second social site). Responses to the (b) component use the same scale as the original MMI, and MMI-2 scores are computed in the same fashion, except across 10 activities instead of 12.

Compliance checks

At the end of the study, participants responded to four final questions about their compliance with study instructions: (1) “Did you respond randomly at any point during the first survey of media use?” (2) “Did you respond randomly at any point during the second survey of media use?” (3) “Did you respond randomly at any point during the attention task (i.e., the n-back)?” and (4) whether or not they were in fact multitasking while they performed our study on multitasking (“I was multitasking during this study” vs. “I was not multitasking during this study”). These questions are provided in full in Appendix D.

Results

Before analyzing the data, we decided to remove data from participants who reported that they had responded randomly to either of the MMI surveys. This led to the removal of 52 participants. However, we included data from those who indicated they responded randomly at some point during the n-back because the implication of this response is less clear: It may be a sign of noncompliance or it may be that participants were compliant with task instructions, yet admitted to responding randomly following errors, while they reconstructed their working-memory stream. Thus, we report the following analyses on a dataset of 265 participants (128 male, 137 female).

Our primary goal was to assess whether media multitasking is correlated with performance on the n-back (2-back and 3-back) using our full dataset of 265 participants. Performance on the n-back was assessed in terms of hits (the proportion of target trials that were correctly identified as targets), false alarms (the proportion of nontarget trials that were incorrectly identified as targets), and omissions (the proportion of trials for which no response was provided). Media multitasking was indexed by two related but slightly different versions of the MMI (MMI-1 and MMI-2).
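As a minimal sketch of how these three proportions might be computed for one participant, the Python function below assumes that omitted trials remain in the denominators (so an omission lowers both the hit rate and the false-alarm rate) and that responses are coded as True (match), False (nonmatch), or None (no response). The function name and data layout are hypothetical.

```python
def nback_scores(trials):
    """
    trials: list of (is_target, response) pairs, where response is True ("match"),
            False ("nonmatch"), or None (no response, i.e., an omission).
    Returns hits, false alarms, and omissions as proportions; omitted trials
    are kept in the denominators.
    """
    targets = [resp for is_target, resp in trials if is_target]
    nontargets = [resp for is_target, resp in trials if not is_target]
    hits = sum(resp is True for resp in targets) / len(targets)
    false_alarms = sum(resp is True for resp in nontargets) / len(nontargets)
    omissions = sum(resp is None for _, resp in trials) / len(trials)
    return {"hits": hits, "false_alarms": false_alarms, "omissions": omissions}

# Hypothetical trials: 2 targets and 4 nontargets, with 2 omissions.
trials = [(True, True), (True, None), (False, True),
          (False, False), (False, None), (False, False)]
print(nback_scores(trials))
```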

Performance on the n-back

Before examining the relation between the MMIs and performance on the n-back, we first report participants’ general performance on the n-back. To reiterate, the n-back included a manipulation of memory load (2-back, 3-back), stimulus type (target, nontarget), and sequence type (lure, nonlure; as per Kane et al., 2007). As such, we conducted two repeated-measures analyses of variance, for hits and false alarms separately, with memory load (2-back, 3-back) and sequence type (lure, nonlure) entered as within-subjects variables (see Table 1). First, in terms of hits, there was a main effect of memory load such that participants scored fewer hits in the 3-back (M = .46, SD = .24) than in the 2-back (M = .64, SD = .27), F(1, 264) = 226.49, p < .001, ηp² = .46. There was also a main effect of sequence type on hits, whereby participants scored more hits when targets were preceded by lures (M = .58, SD = .25) than by nonlures (M = .54, SD = .24), F(1, 264) = 16.48, p < .001, ηp² = .06, as well as a Memory Load × Sequence Type interaction, F(1, 264) = 45.55, p < .001, ηp² = .15. Second, in terms of false alarms, there was a main effect of memory load whereby participants had more false alarms in the 3-back (M = .16, SD = .14) than in the 2-back (M = .11, SD = .12), F(1, 264) = 51.54, p < .001, ηp² = .16. There was also a main effect of sequence type on false alarms whereby participants had a higher false-alarm rate on nontarget lure trials (M = .24, SD = .18) than on nonlure trials (M = .11, SD = .11), F(1, 264) = 250.39, p < .001, ηp² = .49, as well as a Memory Load × Sequence Type interaction, F(1, 264) = 18.90, p < .001, ηp² = .07.
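A quick consistency check on the reported effect sizes: partial eta squared can be recovered from an F statistic as ηp² = (F × df_effect) / (F × df_effect + df_error). With 265 participants, each within-subjects effect in this 2 × 2 design has df_effect = 1 and df_error = 264. The Python sketch below (helper name hypothetical) recomputes each ηp² from the F values reported above.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)

# (effect, F, reported partial eta squared); every effect has df = (1, 264).
reported = [
    ("Load on hits", 226.49, 0.46),
    ("Sequence on hits", 16.48, 0.06),
    ("Load x Sequence on hits", 45.55, 0.15),
    ("Load on false alarms", 51.54, 0.16),
    ("Sequence on false alarms", 250.39, 0.49),
    ("Load x Sequence on false alarms", 18.90, 0.07),
]
for effect, f_stat, eta in reported:
    recovered = partial_eta_squared(f_stat, 1, 264)
    print(f"{effect}: recovered {recovered:.2f}, reported {eta:.2f}")
```

Each recovered value rounds to the reported one when df_error = 264.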

MMIs and n-back performance

In Table 2, we present descriptive statistics for the MMI-1 (the original MMI discussed in Ophir et al., 2009), the MMI-2 (an alternative version of the MMI downloaded from Clifford Nass’s website), and overall hits, false alarms, and omissions for both the 2-back and 3-back. Both indices of media multitasking (MMI-1 and MMI-2) showed acceptable skewness and kurtosis, with values below 2 and 4, respectively (Kline, 1998). The two MMIs were also reasonably well correlated with one another (see Table 3), although not as strongly as one might expect given that they supposedly tap the same underlying construct. Accordingly, we present the following analyses separately for each of the two indices of media multitasking.
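The skewness and kurtosis screen can be sketched as follows. This assumes moment-based sample estimators, excess kurtosis (0 for a normal distribution), and absolute-value cutoffs of 2 and 4; the text does not specify which estimators were used, and the helper names are hypothetical.

```python
def skewness_kurtosis(xs):
    """Moment-based sample skewness (g1) and excess kurtosis (g2; 0 for a normal distribution)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3

def within_limits(xs, skew_limit=2.0, kurt_limit=4.0):
    """True if |skewness| < skew_limit and |excess kurtosis| < kurt_limit."""
    g1, g2 = skewness_kurtosis(xs)
    return abs(g1) < skew_limit and abs(g2) < kurt_limit

toy = [1, 1, 1, 1, 10]  # hypothetical, right-skewed scores
g1, g2 = skewness_kurtosis(toy)
print(round(g1, 2), round(g2, 2), within_limits(toy))  # 1.5 0.25 True
```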

Pearson product-moment correlations between both indices of media multitasking (MMI-1 and MMI-2) and performance measures on the n-back (overall hits, false alarms, and omissions) are presented in Table 3 (above the diagonal). Given that age was found to correlate with both indices of media multitasking (both rs = -.20, ps = .001), and age is known to be related to working memory performance (e.g., Mattay et al., 2006; Oberauer, 2005), we also report partial correlations controlling for age (below the diagonal).
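For a single covariate such as age, the first-order partial correlation can be computed directly from the three zero-order correlations. The Python sketch below implements the standard formula; the correlation values in the example are hypothetical, not values from Table 3.

```python
import math

def partial_corr(r_xy, r_xz, r_yz):
    """First-order partial correlation between x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

# Hypothetical values: x = MMI score, y = a performance measure, z = age.
print(round(partial_corr(0.30, -0.20, -0.30), 3))  # 0.257
```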

Overall hits and false alarms

At first glance, heavier media multitasking appears to predict generally poorer performance on both the 2-back and 3-back. When age is not taken into account (Table 3, above the diagonal; see also Fig. 1), higher scores on the MMI-1 and MMI-2 predict fewer hits in both the 2-back and 3-back. Meanwhile, higher scores on the MMI-2 (but not the MMI-1) predict more false alarms, again in both the 2-back and 3-back. Because the relations between the MMI-2 and n-back performance measures were nominally stronger than those of the MMI-1, we visualize a subset of these relations in Fig. 1. When controlling for the association of age with media multitasking and working memory (Table 3, below the diagonal), which is likely the more appropriate set of relations to focus on, the pattern of results changes slightly: the association of the MMI-2 with false alarms (in both the 2- and 3-back) remains, but the association of both MMIs with hits is restricted to the 2-back.

Omissions

Our third performance metric obtained from the n-back was omissions (the proportion of trials to which participants did not make a response). In both the 2-back and 3-back, regardless of whether age was controlled, scores on both media multitasking indices (MMI-1 and MMI-2) were positively correlated with omissions. On the one hand, the analysis of overall hits and false alarms seems in line with the general claim that heavier media multitaskers have poorer working memory than their light-multitasking counterparts, with some clear nuances as to the specific relations with hits versus false alarms. On the other hand, these data also point to a dispositional account: heavier media multitaskers may be less likely, or less willing, to comply with task instructions. In addition to the positive and significant correlation between the media multitasking indices (MMI-1 and MMI-2) and the proportion of omitted trials on both the 2-back and 3-back, we found that heavier multitaskers were more likely to be multitasking while completing the experiment (as indicated by Compliance Check question 4: “I was multitasking during this study” vs. “I was not multitasking during this study”), rs = .22 and .21, ps ≤ .001 (for the MMI-1 and MMI-2, respectively; see Footnote 1). Furthermore, perhaps owing to such multitasking, there was also a marginal trend whereby heavier media multitaskers were slightly more likely to admit to responding randomly during the n-back, rs = -.114, p = .065.

Another important observation shown in Table 3 is that omissions and hits were strongly and inversely correlated. Intuitively, this makes good sense: the less a participant responds, the poorer their performance on measures that are contingent on a response. A lack of response (an omission) lowers hits while simultaneously lowering false alarms. Given that both media multitasking indices (MMI-1 and MMI-2) positively predicted omissions, and omissions were strongly tied to other performance metrics on the n-back, we reanalyzed our data while controlling for differences in omissions (see Table 4).

Hits and false alarms when controlling for omissions

When controlling for the influence of omissions and age (shown below the diagonal in Table 4; see also Fig. 2) we find that heavier media multitasking is associated with a higher false alarm rate in both the 2-back and 3-back. Importantly, however, we no longer find any evidence that media multitasking is associated with a change in hits (although we do note a modest correlation between the MMI-2 and hits in the 2-back, when not controlling for differences in age). Figure 2 depicts the relations between MMI-2 (given that it was nominally more strongly tied to performance metrics) and n-back hits and false alarms after removing variance associated with age and overall omissions by using unstandardized residuals.
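One way to obtain such unstandardized residuals, sketched here with NumPy (an assumption; the text does not name the software used), is to regress each variable on the covariates (age and omissions) with ordinary least squares and keep what is left over. Correlating two sets of residuals from the same covariates yields the partial correlation. The helper names are hypothetical, and the example data are simulated, not the study's data.

```python
import numpy as np

def residualize(y, covariates):
    """Unstandardized OLS residuals of y after regressing it on the covariates plus an intercept."""
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(len(y))] + [np.asarray(c, dtype=float) for c in covariates])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta

def residual_correlation(x, y, covariates):
    """Correlation between x and y after removing variance shared with the covariates."""
    return np.corrcoef(residualize(x, covariates), residualize(y, covariates))[0, 1]

# Simulated data for illustration only (not the study's data).
rng = np.random.default_rng(0)
n = 265
age = rng.normal(33, 9, n)
omissions = rng.beta(1, 10, n)
mmi = 4 - 0.02 * age + 3 * omissions + rng.normal(0, 1, n)
false_alarms = 0.1 + 0.02 * mmi - 0.1 * omissions + rng.normal(0, 0.05, n)
print(round(residual_correlation(mmi, false_alarms, [age, omissions]), 2))
```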

Lure versus nonlure sequences

As mentioned earlier, our n-back also contained a manipulation of sequence type, that is, whether nontargets were presented as lures versus nonlures or, in the case of targets, whether a target was preceded by a lure or a nonlure nontarget. First, as shown in Table 5, there was no strong evidence that the media multitasking indices (MMI-1 and MMI-2) were specifically associated with hits to targets preceded by lures versus nonlures. Furthermore, as noted earlier, any significant correlations observed between the media multitasking indices and hits were reduced once the associations with age and omissions were controlled (Table 5, parentheses). Second, as shown in Table 6, although the media multitasking indices were again correlated with false alarms, there was no strong evidence that these associations were driven by false alarms to lures rather than nonlures.

Sensitivity versus bias

We end with an examination of the relation between scores on the media multitasking indices and the signal detection measures of sensitivity (dL) and response bias (CL); computing these metrics is common practice both in the working memory literature and in the literature linking media multitasking and working memory performance. Sensitivity (dL) and response bias (CL) were calculated following Kane et al. (2007, p. 617), who in turn followed the recommendation of Snodgrass and Corwin (1988). Interestingly, in both the 2-back and 3-back, higher scores on each of the media multitasking indices (MMI-1 and MMI-2) were significantly associated with decreased sensitivity (dL) but not with a change in response bias (CL; see Table 7). As also shown in Table 7, and consistent with the general pattern of results discussed thus far, the relation between media multitasking and sensitivity was nominally stronger for the MMI-2 than for the MMI-1. Last, most of these associations remain statistically significant after controlling for the shared association with age; only the relation between the MMI-1 and sensitivity on the 3-back becomes nonsignificant.
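Snodgrass and Corwin's (1988) logistic measures can be written as dL = ln[H(1 - F) / ((1 - H)F)] and CL = 0.5 ln[(1 - F)(1 - H) / (HF)], where H and F are the hit and false-alarm rates; larger dL indicates better discrimination, and positive CL indicates a conservative bias. The Python sketch below assumes the commonly used correction H = (hits + 0.5) / (targets + 1) and F = (false alarms + 0.5) / (nontargets + 1) to avoid rates of 0 or 1; whether Kane et al. (2007) applied exactly this correction should be checked against their p. 617. The function name and the example counts (one block of 8 targets and 40 nontargets) are hypothetical.

```python
import math

def logistic_sdt(hits, n_targets, false_alarms, n_nontargets):
    """
    Logistic sensitivity (dL) and bias (CL), using corrected rates
    H = (hits + 0.5) / (n_targets + 1) and F = (false_alarms + 0.5) / (n_nontargets + 1).
    """
    h = (hits + 0.5) / (n_targets + 1)
    f = (false_alarms + 0.5) / (n_nontargets + 1)
    d_l = math.log((h * (1 - f)) / ((1 - h) * f))
    c_l = 0.5 * math.log(((1 - f) * (1 - h)) / (h * f))
    return d_l, c_l

d_l, c_l = logistic_sdt(hits=6, n_targets=8, false_alarms=4, n_nontargets=40)
print(round(d_l, 3), round(c_l, 3))
```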

General discussion

The purpose of the current investigation was to reach a firm conclusion as to how individual differences in media multitasking relate to performance on the n-back. We sought to reach such a firm conclusion by (1) treating media multitasking as a continuous variable, using the entire media multitasking spectrum (rather than extreme-groups), and (2) collecting a large sample of participants. One important observation we made was that individuals who scored higher on the MMIs also omitted a greater proportion of trials in the n-back—a behavioral measure that has not been reported in previous media multitasking studies using the n-back. Given that participants were instructed to provide a response on each trial of the n-back (i.e., to indicate whether the current letter matched the letter presented n items ago by pressing one of two response keys, match vs. nonmatch), omissions appear to be reflective of a disengagement from the task. One obvious implication is that heavier media multitaskers might be less engaged while completing the n-back. Whether this is due to explicit noncompliance or implicit differences in attentional mechanisms remains an open question. However, it is clear that such omissions have an impact on other performance measures such as hits and false alarms. For instance, the less a participant responds, the fewer false alarms they will commit, but also the fewer hits they will achieve. Indeed, we noted a substantial relation between omissions and hits (more omissions were linked with fewer hits) as well as false alarms (more omissions were linked with fewer false alarms). It therefore seems most reasonable to assess the relation between media multitasking and performance on the n-back when controlling for the proportion of omitted trials.

When controlling for omissions (and age effects), we found a robust relation between media multitasking and false alarms on both the 2-back and 3-back, wherein higher levels of media multitasking were associated with an increase in false alarms. Furthermore, no strong evidence was found for a link between media multitasking and hits (contrary to Cain et al., 2016). Independent of cognitive load (2-back, 3-back), heavier media multitaskers are thus more likely to endorse nontargets as targets, but do not differ from lighter media multitaskers in their ability to correctly identify targets as targets. This finding is broadly consistent with that initially reported by Ophir et al. (2009), although cognitive load influenced the outcome in their work but not in ours. Last, when we calculated measures of sensitivity and response bias, scores on the MMIs were associated with changes in sensitivity (on both the 2-back and 3-back) but not in response bias, with higher levels of media multitasking associated with poorer sensitivity.
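
One common way to "control for" covariates such as omissions and age is a partial correlation. The sketch below assumes that approach (the analyses reported here may instead have used regression or another model) and computes a partial correlation with the standard recursive formula, removing one covariate per step. The toy numbers are hypothetical and were chosen so that a zero-order correlation of .5 between x and y is carried entirely by a shared covariate z, mirroring how a relation with hits can emerge or vanish depending on whether omissions are taken into account.

```python
import math


def pearson(x, y):
    """Zero-order Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_r(x, y, covariates):
    """Correlation of x and y with every sequence in `covariates` partialled
    out, using the recursive formula. Assumes no covariate is perfectly
    correlated with x or y (that would divide by zero)."""
    if not covariates:
        return pearson(x, y)
    *rest, w = covariates
    r_xy = partial_r(x, y, rest)
    r_xw = partial_r(x, w, rest)
    r_yw = partial_r(y, w, rest)
    return (r_xy - r_xw * r_yw) / math.sqrt((1 - r_xw ** 2) * (1 - r_yw ** 2))


# Hypothetical toy data: x and y correlate only because both track z.
x = [0, -2, 2, 0]
y = [0, -2, 0, 2]
z = [-1, -1, 1, 1]
print(round(pearson(x, y), 3))              # 0.5
print(round(abs(partial_r(x, y, [z])), 3))  # 0.0
```

With real data, a call such as `partial_r(mmi, false_alarms, [omissions, age])` (hypothetical variable names) would remove both covariates in turn.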

When omissions are not taken into consideration, significant associations between media multitasking and hits (as found by Cain et al., 2016) begin to emerge. A likely possibility is that these emergent relations between media multitasking and hits are due to the shared association with omissions. This divergence of results depending on the treatment of omissions also highlights the importance of taking into account omitted trials (see Cheyne, Solman, Carriere, & Smilek, 2009), and points towards one possible source of previous, discrepant findings (e.g., Cain et al., 2016; Ophir et al., 2009).

Another interesting implication of the current results is that individual differences in media multitasking might be linked with individual differences in the propensity to overtly disengage from ongoing tasks. In addition to omitting a greater proportion of trials, heavier media multitaskers reported being more likely to media multitask during the n-back, and were marginally more likely to report that they responded randomly at some point during the n-back. Taken together, these findings suggest that, in addition to the potential differences in cognitive control that have thus far been the focus of much research, media multitasking may be associated with differences in how individuals approach or engage with tasks (see Ralph et al., 2015). However, as alluded to earlier, it remains unclear whether such a tendency towards disengagement and inattention reflects a deficit in the ability to remain attentive or, alternatively, insufficient interest or motivation to remain attentive.

Thus far, several studies have been conducted to determine whether media multitasking is associated with differences in various aspects of cognitive control. It is worth taking a step back to consider why this topic is of such interest. One clear concern is that habitually attending to multiple streams of information might erode executive control systems (see Cain & Mitroff, 2011); we refer to this as an ability hypothesis of media multitasking. For example, heavier media multitaskers in Ophir et al.’s (2009) and Cain and Mitroff’s (2011) experiments may be fundamentally less able to ignore distracting information as a result of their media use tendencies, a possibility that is quite troubling. An alternative formulation of the ability hypothesis is, of course, that individuals find themselves in media multitasking scenarios because of fundamental differences in cognitive control (i.e., those who are less able to ignore distracting information on the aforementioned laboratory tasks are also less likely to ignore the multiple media streams available in the environment).

We suggest that it is important to consider yet another possibility, a strategic hypothesis of media multitasking, wherein individual differences in media multitasking may reflect how individuals choose to engage with their environment, without involving particular underlying differences in ability (discussed in Ralph et al., 2015). That is, it is not that heavier media multitaskers cannot ignore distracting information; it is that they choose not to, a scenario that is clearly much less concerning. But what might drive such strategic differences in task engagement? One factor might be individual differences in thresholds of engagement, whereby heavier media multitaskers may need more stimulation to be motivated to engage with a task. In prior work, higher levels of media multitasking have been linked with greater reports of impulsivity and sensation seeking (Sanbonmatsu et al., 2013; Wilmer & Chein, 2016), greater discounting of delayed rewards (Wilmer & Chein, 2016), the endorsement of intuitive yet incorrect answers on the Cognitive Reflection Test (CRT; Schutten, Stokes, & Arnell, 2016), and a speeding of responses at the expense of accuracy on difficult Raven’s Matrices problems (Minear et al., 2013). In this study, we found that higher levels of media multitasking were further associated with a greater propensity to omit trials, to media multitask during the experiment itself and, to a lesser extent, to respond randomly. Collectively, these findings may suggest that heavier media multitasking is associated with a higher threshold of engagement (craving greater and more immediate stimulation), a lower willingness to commit effort to complete tasks (e.g., the CRT and difficult Raven’s Matrices), and accordingly a greater propensity to disengage from ongoing tasks in favor of pursuing alternative actions (such as, as found here, consuming additional media).

Unfortunately, ability and strategy choices are often tightly linked. At present, the findings in the media multitasking literature are insufficient for conclusively assessing whether heavier media multitaskers are less able to, for instance, exert top-down control over attention (the ability hypothesis), or are simply less willing to exert such control (the strategic hypothesis). Even in the current experiment, it is unclear whether the higher omission rates of heavier media multitaskers reflect a failure of sustained attention or a loss of interest in, and motivation to perform well on, the task. We hope that future work will make headway in separating the influences of ability from those of motivation and strategy when examining the relation between media multitasking tendencies and performance measures.

Before we conclude, it is also worthwhile to consider how the two MMIs compared in our investigation. Here we used two similar, albeit slightly different, versions of the MMI. For example, while the MMI-2 contains items referencing homework, studying, and writing papers, the MMI-1 does not. Similarly, while the MMI-1 contains questions about computer applications (e.g., Word), the MMI-2 does not. Furthermore, while the MMI-1 asks participants to aggregate their media multitasking behavior over the course of a typical week, the MMI-2 asks participants to estimate their media multitasking tendencies over the course of a typical day. Although the MMI-1 and MMI-2 were highly correlated and often predicted the same performance measures, these structural differences might explain why the MMI-2 was often a significantly stronger predictor of performance measures than the MMI-1. First, in terms of item composition, the MMIs are aggregates of a wide array of activities that differ between the questionnaires. Within any given sample, how heavily participants load on particular activities may have consequences for correlations with behavioral measures. For example, media multitasking while doing homework, studying, or writing papers might be a particularly important or representative media multitasking behavior that is included only in the MMI-2 (hence the stronger associations with performance metrics). After all, it is reasonable to assume that not all media multitasking behaviors are equal. Second, in terms of the temporal window over which the MMIs require participants to estimate their multitasking behavior, aggregating one’s behavior over the shorter span of a typical day may be easier than aggregating it over the longer span of a typical week. Last, all participants completed the MMI-1 near the beginning of the study and the MMI-2 near the end, so it is conceivable that the order in which the MMIs were completed played a role in how strongly they were associated with performance metrics.
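
To illustrate why item composition and time allocation matter, the sketch below implements the MMI scoring formula as it is commonly described for Ophir et al. (2009): MMI = Σ (m_i × h_i / h_total), where h_i is the time spent with primary medium i, h_total is the total time across media, and m_i is the number of other media used concurrently with medium i, with responses weighted 1 ("most of the time"), 0.67 ("some of the time"), 0.33 ("a little of the time"), and 0 ("never"). Whether both MMIs used here are scored exactly this way is an assumption, and the function name, media labels, and hours are hypothetical. Because each h_i is divided by h_total, the time unit (week vs. day) cancels out of the arithmetic; on this formula, the temporal window would affect the accuracy of participants’ estimates rather than the computation itself.

```python
# Response weights as commonly described for the MMI (Ophir et al., 2009).
WEIGHTS = {"most": 1.0, "some": 0.67, "a little": 0.33, "never": 0.0}


def media_multitasking_index(hours, concurrent):
    """hours: {primary medium: time spent with it}
    concurrent: {primary medium: {other medium: frequency label}}
    Returns the sum over media of m_i * h_i / h_total."""
    h_total = sum(hours.values())
    mmi = 0.0
    for medium, h_i in hours.items():
        # m_i: weighted count of other media used while using `medium`
        m_i = sum(WEIGHTS[label] for label in concurrent.get(medium, {}).values())
        mmi += m_i * h_i / h_total
    return mmi


# Two hypothetical respondents with the same concurrency pattern (they browse
# the web "most" of the time while watching TV, but never watch TV while
# browsing) who allocate their time differently.
pattern = {"tv": {"web": "most"}, "web": {"tv": "never"}}
print(media_multitasking_index({"tv": 10, "web": 30}, pattern))  # 0.25
print(media_multitasking_index({"tv": 30, "web": 10}, pattern))  # 0.75
```

The same answers about concurrent use yield different scores depending on which activities dominate a respondent’s time, which is one way the mix of activities in a sample could shift correlations with behavioral measures.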

Concluding comments

By treating media multitasking as a continuous variable and using a large sample of participants, we were able to conclude with reasonable confidence that higher levels of media multitasking (as indexed by two different versions of the MMI) are associated with poorer performance on the n-back. These findings are important because they begin to adjudicate between several sets of seemingly inconsistent findings within the media multitasking literature. Moving forward, we encourage the field to treat media multitasking as the continuous variable it is consistently found to be, and to use larger samples of participants, so that we may gain a better understanding of expected effect sizes and improve our ability to find consistent results.
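
The value of larger samples for estimating effect sizes can be illustrated with the Fisher z approximation for a confidence interval around a correlation. In the sketch below, r = .20 is a hypothetical effect size (not a result from this study) and n = 265 matches the present sample; under these assumptions, the interval for n = 40 spans zero, whereas the interval for n = 265 does not. The function name is hypothetical.

```python
import math


def correlation_ci(r, n, z_crit=1.96):
    """Approximate 95% CI for a Pearson r via the Fisher z transformation:
    z = atanh(r), SE = 1 / sqrt(n - 3), then back-transform with tanh."""
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


# Hypothetical effect size, evaluated at a small and at the present sample size.
for n in (40, 265):
    lo, hi = correlation_ci(0.20, n)
    print(f"n = {n}: r = .20, 95% CI [{lo:.2f}, {hi:.2f}]")
```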

Notes

Thirty-four of our 265 participants (12.8%) indicated that they were multitasking during the experiment.

References

Alzahabi, R., & Becker, M. W. (2013). The association between media multitasking, task-switching, and dual-task performance. Journal of Experimental Psychology: Human Perception and Performance, 39(5), 1485–1495. doi:10.1037/a0031208

Cain, M. S., Leonard, J. A., Gabrieli, J. D. E., & Finn, A. S. (2016). Media multitasking in adolescence. Psychonomic Bulletin & Review. Advance online publication. doi:10.3758/s13423-016-1036-3

Cain, M. S., & Mitroff, S. R. (2011). Distractor filtering in media multitaskers. Perception, 40(10), 1183–1192. doi:10.1068/p7017

Cardoso-Leite, P., Kludt, R., Vignola, G., Ma, W. J., Green, C. S., & Bavelier, D. (2016). Technology consumption and cognitive control: Contrasting action video game experience with media multitasking. Attention, Perception & Psychophysics, 78(1), 218–241. doi:10.3758/s13414-015-0988-0

Cheyne, J. A., Solman, G. J. F., Carriere, J. S. A., & Smilek, D. (2009). Anatomy of an error: A bidirectional state model of task engagement/disengagement and attention-related errors. Cognition, 111(1), 98–113. doi:10.1016/j.cognition.2008.12.009

Kane, M. J., Conway, A. R. A., Miura, T. K., & Colflesh, J. H. (2007). Working memory, attention control, and the n-back task: A question of construct validity. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33(3), 615–622. doi:10.1037/0278-7393.33.3.615

Kline, R. B. (1998). Principles and practice of structural equation modeling. New York: Guilford Press.

Lui, K. F. H., & Wong, A. C. N. (2012). Does media multitasking always hurt? A positive correlation between multitasking and multisensory integration. Psychonomic Bulletin & Review, 19(4), 647–653. doi:10.3758/s13423-012-0245-7

Mattay, V. S., Fera, F., Tessitore, A., Hariri, A. R., Berman, K. F., Das, S., & Weinberger, D. R. (2006). Neurophysiological correlates of age-related changes in working memory capacity. Neuroscience Letters, 392, 32–37. doi:10.1016/j.neulet.2005.09.025

Minear, M., Brasher, F., McCurdy, M., Lewis, J., & Younggren, A. (2013). Working memory, fluid intelligence, and impulsiveness in heavy media multitaskers. Psychonomic Bulletin & Review, 20(6), 1274–1281. doi:10.3758/s13423-013-0456-6

Oberauer, K. (2005). Binding and inhibition in working memory: Individual and age differences in short-term recognition. Journal of Experimental Psychology: General, 134(3), 368–387. doi:10.1037/0096-3445.134.3.368

Ophir, E., Nass, C., & Wagner, A. D. (2009). Cognitive control in media multitaskers. Proceedings of the National Academy of Sciences of the United States of America (PNAS), 106(37), 15583–15587. doi:10.1073/pnas.0903620106

Pea, R., Nass, C., Meheula, L., Rance, M., Kumar, A., Bamford, H., & Zhou, M. (2012). Media use, face-to-face communication, media multitasking, and social well-being among 8- to 12-year-old girls. Developmental Psychology, 48(2), 327–336. doi:10.1037/a0027030

Ralph, B. C. W., Thomson, D. R., Seli, P., Carriere, J. S., & Smilek, D. (2015). Media multitasking and behavioral measures of sustained attention. Attention, Perception & Psychophysics, 77(2), 390–401. doi:10.3758/s13414-014-0771-7

Rideout, V. J., Foehr, U. G., & Roberts, D. F. (2010). Generation M2: Media in the lives of 8- to 18-year-olds. Henry J. Kaiser Family Foundation. Retrieved from http://kff.org/other/poll-finding/report-generation-m2-media-in-the-lives/

Sanbonmatsu, D. M., Strayer, D. L., Medeiros-Ward, N., & Watson, J. M. (2013). Who multi-tasks and why? Multi-tasking ability, perceived multi-tasking ability, impulsivity, and sensation seeking. PLoS ONE, 8(1), e54402. doi:10.1371/journal.pone.0054402

Schutten, D., Stokes, K. A., & Arnell, K. M. (2016). I want to media multitask and I want to do it now: Individual differences in media multitasking predict delay of gratification and system 1 thinking.

Shih, S.-I. (2013). A null relationship between media multitasking and well-being. PLoS ONE, 8(5), e64508. doi:10.1371/journal.pone.0064508

Snodgrass, J. G., & Corwin, J. (1988). Pragmatics of measuring recognition memory: Applications to dementia and amnesia. Journal of Experimental Psychology: General, 117(1), 34–50. doi:10.1037/0096-3445.117.1.34

Uncapher, M. R., Thieu, M. K., & Wagner, A. D. (2016). Media multitasking and memory: Differences in working memory and long-term memory. Psychonomic Bulletin & Review, 23, 483–490. doi:10.3758/s13423-015-0907-3

Vogel, E. K., McCollough, A. W., & Machizawa, M. G. (2005). Neural measures reveal individual differences in controlling access to working memory. Nature, 438, 500–503. doi:10.1038/nature0417

Wilmer, H. H., & Chein, J. M. (2016). Mobile technology habits: Patterns of association among device usage, intertemporal preference, impulse control, and reward sensitivity. Psychonomic Bulletin & Review. Advance online publication. doi:10.3758/s13423-016-1011-z

Acknowledgments

This research was supported by a Natural Sciences and Engineering Research Council of Canada (NSERC) Discovery Grant to Daniel Smilek and an NSERC Postgraduate Doctoral Scholarship to Brandon Ralph.

Electronic supplementary material

ESM 1

(DOCX 33 kb)

Cite this article

Ralph, B.C.W., Smilek, D. Individual differences in media multitasking and performance on the n-back. Atten Percept Psychophys 79, 582–592 (2017). https://doi.org/10.3758/s13414-016-1260-y

DOI: https://doi.org/10.3758/s13414-016-1260-y