Abstract

Technology has the potential to impact cognition in many ways. Here we contrast two forms of technology usage: (1) media multitasking (i.e., the simultaneous consumption of multiple streams of media, such as texting while watching TV) and (2) playing action video games (a particular subtype of video games). Previous work has outlined an association between high levels of media multitasking and specific deficits in handling distracting information, whereas playing action video games has been associated with enhanced attentional control. Because these two factors are linked with largely opposing effects, failing to take them jointly into account may lead to inappropriate conclusions about the impact of technology use on attention. Across four tasks (AX-continuous performance, N-back, task-switching, and filter tasks), testing different aspects of attention and cognition, we showed that heavy media multitaskers perform worse than light media multitaskers. Contrary to previous reports, though, the performance deficit was not specifically tied to distractors, but was instead more global in nature. Interestingly, participants with intermediate levels of media multitasking sometimes performed better than both light and heavy media multitaskers, suggesting that the effects of increasing media multitasking are not monotonic. Action video game players, as expected, outperformed non-video-game players on all tasks. However, surprisingly, this was true only for participants with intermediate levels of media multitasking, suggesting that playing action video games does not protect against the deleterious effect of heavy media multitasking. Taken together, these findings show that media consumption can have complex and counterintuitive effects on attentional control.


Nearly every time a large portion of society begins to adopt a new form of technology, there is a concomitant increase in interest in the potential effects of that technology on the human body, brain, and/or behavior. For instance, over a period of approximately 20 years (starting in 1941), the percentage of American homes owning a television set went from 0% to more than 90% (Gentzkow & Shapiro, 2008). This trend resulted in a vast body of research investigating the effects of television viewing on everything from learning and scholastic performance (Greenstein, 1954), to the development of social relationships and social norms (Riley, Cantwell, & Ruttiger, 1949; Schramm, 1961), to basic visual and motor skills (Guba et al., 1964), to voting patterns (Glaser, 1965; Simon & Stern, 1955).

The forms of technology of most significant interest today include Internet access, cellphone possession, and computer/video game exposure. The amount of time the average American spends consuming multimedia today is substantial—in 2009, 8- to 18-year-olds in the US consumed more than 7.5 h of media daily. Moreover, this number is increasing rapidly—by more than 1 h/day over the preceding 5 years (Rideout, Foehr, & Roberts, 2010; Roberts, Foehr, & Rideout, 2005). Although the literature on these newer forms of multimedia is only in its relative infancy, a few key lessons have been gleaned from the broader literature. First, the effects of media on human cognition can depend on the medium, even when the content is held constant. For example, 9-month-olds learn to discriminate Mandarin Chinese phonetic contrasts from live exposure to a human tutor, but not from exposure to a tutor presented on television (Kuhl, 2007; see also DeLoache et al., 2010). Second, the effects of media can depend on individual differences in the consumers. For example, the cognitive benefits gained via playing certain types of video games are larger in females than in males (Feng, Spence, & Pratt, 2007) and in individuals with certain genetic traits than in other people (Colzato, van den Wildenberg, & Hommel, 2014). Third, the effects of media can depend on the specific forms of content within the medium. For example, “violent” video games and “heroic” video games are both “types of video games”—however, playing violent video games has been linked with increases in antisocial behavior, whereas playing heroic video games has been linked to increases in pro-social behavior (Gentile et al., 2009). Finally, the effects of media can depend on how a person engages with the medium (e.g., active vs. passive). 
For example, playing the exact same real-time strategy game may or may not lead to improved cognitive flexibility, depending on whether the game is played in a challenging or a nonchallenging way (e.g., Glass, Maddox, & Love, 2013). It therefore follows that in order to make sensible claims about the impact of technology on cognition, it is necessary to focus on clearly defined forms of media. Following Cardoso-Leite, Green, and Bavelier (2015), we decided to contrast two groups of media users: action video game players and heavy media multitaskers.

Impact of different technology usage on cognition

Action video game players

Action video games are fast-paced interactive systems that place a heavy load on divided attention, peripheral processing, information filtering, and motor control. A large load is also placed on decision making, via the nesting of goals and subgoals at multiple time scales. Action video games—in contrast to other types of games, such as life simulations—have been shown to produce a variety of benefits in vision, attention, and decision making (for reviews, see Green & Bavelier, 2012; Latham, Patston, & Tippett, 2013; Spence & Feng, 2010). In the domain of attention, action video games have been shown to improve the top-down attentional system, which underlies abilities such as focusing attention on some elements at the expense of others (i.e., selective attention), maintaining attention over longer periods of time (i.e., sustained attention), and sharing attention in time, in space, or across tasks (i.e., divided attention; for reviews, see Green & Bavelier, 2012; Hubert-Wallander, Green, & Bavelier, 2011). Action video game players (AVGP)—typically defined as individuals who have played 5 h or more of action video games per week for the last 6 months—have better spatial selective attention, since they can more accurately locate stimuli in space, either when the stimuli are presented in isolation (Buckley, Codina, Bhardwaj, & Pascalis, 2010) or when the stimuli are presented alongside distractors (Spence & Feng, 2010; Green & Bavelier, 2003, 2006a; Spence, Yu, Feng, & Marshman, 2009; West, Stevens, Pun, & Pratt, 2008). AVGP also show an increased ability to direct attention in time, as evidenced by a shorter interference window in backward masking (Li, Polat, Scalzo, & Bavelier, 2010) and a reduced attentional blink (Cohen, Green, & Bavelier, 2007; Green & Bavelier, 2003). 
They can also visually track a greater number of targets (Boot et al., 2008; Cohen et al., 2007; Green & Bavelier, 2003, 2006b; Trick, Jaspers-Fayer, & Sethi, 2005), which suggests increased attentional resources or improved efficiency of attention allocation (Ma & Huang, 2009; Vul, Frank, Tenenbaum, & Alvarez, 2009; see also Chisholm, Hickey, Theeuwes, & Kingstone, 2010; Chisholm & Kingstone, 2012). However, not all aspects of perception and cognition appear to be enhanced in AVGP. For instance, bottom-up attention appears to be unaffected by action video game experience (Castel, Pratt, & Drummond, 2005; Dye, Green, & Bavelier, 2009a; but see West et al., 2008). Importantly, long-term training studies, wherein individuals who do not naturally play action video games undergo training on either an action video game or a control video game for many hours (e.g., 50 h spaced over the course of about 10 weeks), have confirmed that action video game play has a causal role in the observed effects (Bejjanki et al., 2014; Feng et al., 2007; Green & Bavelier, 2003; Green, Pouget, & Bavelier, 2010; Green, Sugarman, Medford, Klobusicky, & Bavelier, 2012; Oei & Patterson, 2013; Strobach, Frensch, & Schubert, 2012; Wu et al., 2012; Wu & Spence, 2013; for reviews, see Bediou, Adams, Mayer, Green, & Bavelier, submitted; Green & Bavelier, 2012).

Media multitasking

Media multitasking is the simultaneous consumption of multiple streams of media: for instance, reading a book while listening to music, texting while watching television, or viewing Web videos while e-mailing (Ophir, Nass, & Wagner, 2009). It is important to note that extensive media multitasking is different from extensive media use. An individual may consume a large amount of media in many different forms, but if those forms are not consumed concurrently, the person would not be considered a media multitasker. To study the potential impact of engaging in large amounts of media multitasking, Ophir et al. first devised a media multitasking questionnaire from which they computed an index (the media multitasking index: MMI) reflecting the extent to which a respondent self-reports media multitasking. Respondents scoring at least one standard deviation above the population mean were termed heavy media multitaskers (HMM), whereas those scoring at least one standard deviation below the mean were termed light media multitaskers (LMM).
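The section does not spell out how the MMI is computed. As we understand Ophir et al.'s (2009) definition, it is an hours-weighted average: for each primary medium i, the expected number of other media used concurrently (m_i) is weighted by the weekly hours spent on that medium (h_i) and divided by the total weekly hours across all media, MMI = Σ m_i h_i / h_total. The Python sketch below assumes that formula and the response weights in `FREQUENCY_WEIGHTS`; the class and function names are hypothetical, and both the formula and the weights should be checked against the original questionnaire before any real use.

```python
from dataclasses import dataclass, field

# Assumed numeric weights for the frequency labels on the questionnaire
# (illustrative only; check against the original instrument).
FREQUENCY_WEIGHTS = {
    "most of the time": 1.0,
    "some of the time": 0.67,
    "a little of the time": 0.33,
    "never": 0.0,
}


@dataclass
class PrimaryMedium:
    """Self-report for one primary medium (hypothetical structure)."""
    name: str
    hours_per_week: float
    # Other medium -> how often it is used while using this primary medium.
    concurrent: dict = field(default_factory=dict)


def media_multitasking_index(media):
    """Hours-weighted mean number of media used concurrently with each primary medium."""
    total_hours = sum(m.hours_per_week for m in media)
    if total_hours == 0:
        return 0.0  # no reported media use, hence no multitasking
    index = 0.0
    for m in media:
        # m_i: expected number of other media used while using medium i.
        m_i = sum(FREQUENCY_WEIGHTS[label] for label in m.concurrent.values())
        index += m_i * m.hours_per_week / total_hours
    return index


tv = PrimaryMedium("TV", 10, {"print": "some of the time", "music": "never"})
music = PrimaryMedium("music", 10, {"print": "most of the time"})
print(round(media_multitasking_index([tv, music]), 3))  # 0.835
```

Weighting by hours means that heavy concurrent use of a rarely used medium contributes little to the index, which matches the distinction drawn above between media multitasking and sheer media use.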

Intuitively, because HMM perform multiple tasks at the same time and switch more frequently between tasks or media than do LMM, one might expect HMM to outperform LMM in multitasking. Indeed, practice typically leads to performance improvements. Contrary to this intuition, however, Ophir and colleagues (2009) reported that HMM were worse—not better—than LMM in a task-switching experiment. HMM were also worse in other tasks, including the filter task (which requires participants to recall briefly presented visual elements while ignoring distractors), the N-back task (which requires participants to keep a series of recently presented items in working memory and to update that list as new items appear), and the AX-continuous performance task (AX-CPT, which requires participants to respond differently to a target letter “X” depending on whether or not it was preceded by the letter “A”; note that all of these tasks are presented in greater detail below). Furthermore, the performance decrements observed when contrasting HMM and LMM were not global in nature (i.e., with the HMM being generally slower/less accurate on all tasks and all conditions within tasks), but instead appeared to be specific to situations that involved distractors. For example, in the AX-CPT, HMM and LMM performed equally well in the distractor-absent condition. However, HMM were more negatively affected (i.e., slower response times [RTs]) by the presence of distractors than were LMM.

To account for these results, the authors argued that media multitasking might have negative effects on cognitive control, thus leading to weaker resistance to distractors. Alternatively, because the study was purely cross-sectional in nature, it is also possible that those participants who are less resistant to distractors or who privilege breadth over depth with regard to cognitive control might more frequently engage in media multitasking.

Recent studies have lent some support to this final possibility. Ralph, Thomson, Seli, Carriere, and Smilek (2015), for instance, observed a negative impact of media multitasking on some, but not all, measures of sustained attention, unlike what might be expected if HMM were generally less resistant to distractors. In contrast, Cain and Mitroff (2011) used the additional-singleton task to test the idea of breadth-biased attention. In this task, participants are presented with an array of shapes (squares and circles) and asked to report which symbol is presented in the unique target shape (circle) while ignoring the remaining, nontarget shapes (squares). On half of the trials, all of the shapes are colored green, whereas on the remaining half, one of the shapes is colored red (the additional singleton). Participants were informed that there were two experimental conditions: In the “sometimes” condition the color singleton could be the target, whereas in the “never” condition this was never the case. The difference in performance across these two conditions reflects the ability to take instructions or prior knowledge into account when spatially distributing attention. HMM, unlike LMM, did not modulate their responses between these two conditions, suggesting that they maintained a broader attentional scope despite the explicit task instructions.

Lui and Wong (2012) went a step further and reasoned that there might be situations in which a broader attentional scope could in fact be advantageous. These authors used the pip-and-pop paradigm, in which participants search for a vertical or horizontal line (target) among an array of both red and green distractor lines with multiple orientations. The colors of the lines changed periodically within a trial, with target and distractor lines changing their colors at different frequencies (the orientations of the lines were kept constant within each trial). In some conditions, a tone was presented in synchrony with the flickering of the target line; participants were not explicitly informed about the meaning of the tones. The results showed that target detection performance increased in the presence of the tones and that this benefit correlated positively with the MMI score. The authors concluded that HMM, perhaps because of their breadth-biased attentional processes, are better able to integrate multisensory information.

Finally, a recent voxel-based morphometry study reported that participants with higher levels of media multitasking had lower gray matter density in the anterior cingulate cortex than did individuals with lower levels of media multitasking (Loh & Kanai, 2014).

Although these results are suggestive, the available literature remains somewhat unsettled. Minear, Brasher, McCurdy, Lewis, and Younggren (2013), for example, compared HMM and LMM in two task-switching experiments but found no significant difference between these two groups, whereas Alzahabi and Becker (2013) found that HMM actually performed better at task switching than LMM, not worse, as Ophir and colleagues (2009) had reported. Given these inconsistent results, further replications are clearly needed.

Comparing AVGP to media multitaskers

Media multitasking and playing action video games are two common forms of technology usage; as such, they are likely to co-occur in the same participants. Yet, little is known about their joint impact or interaction. Media multitaskers and AVGP, though, have been independently evaluated using the same experimental paradigms across different studies. For example, HMM have not been seen to differ from LMM on the attentional network test (ANT; Minear et al., 2013)—which measures three components of attention (alerting, orienting, and executive control)—or in terms of dual-task performance (Alzahabi & Becker, 2013), whereas AVGP differ from non-video-game players (NVGP) both on the ANT (Dye, Green, & Bavelier, 2009a; but see Wilms, Petersen, & Vangkilde, 2013) and in terms of dual-tasking (Strobach et al., 2012). HMM appear to be more impulsive and sensation-seeking than LMM (Minear et al., 2013; Sanbonmatsu, Strayer, Medeiros-Ward, & Watson, 2013) and to score lower on fluid intelligence tests (Minear et al., 2013). AVGP, on the other hand, have been shown to be no more impulsive than NVGP (Dye, Green, & Bavelier, 2009b) and do not differ from NVGP on measures of fluid intelligence (Boot, Kramer, Simons, Fabiani, & Gratton, 2008; Colzato, van den Wildenberg, Zmigrod, & Hommel, 2013). Finally, some abilities seem to be unchanged by both media multitasking and action video game play, such as the ability to abort an imminent motor response, as measured with the stop-signal task (Colzato et al., 2013; Ophir et al., 2009).

So far, only one study has investigated both gaming and media multitasking. The additional-singleton experiment by Cain and Mitroff (2011), described earlier, reported that HMM did not take into account information about the task structure. These authors classified the same set of participants into AVGP and NVGP on the basis of their self-reported gaming experience. While both gaming groups’ performance was modulated by task structure, there was no difference between these two groups. Unfortunately, this study did not consider that media multitasking and gaming experience might interact. For example, action game play could serve as a protective mechanism against the negative effects seen with extensive media multitasking, or conversely, the negative effects of media multitasking could overwhelm the positive benefits of action video game play. One of the aims of the present study was to evaluate the potential interactions between these two types of technology experiences, and thus gain a fuller picture of their effects.

The primary focus of this study was to contrast the effects of these two types of media consumption on tasks measuring attentional or cognitive control. To this end, we utilized the exact same four tasks used by Ophir et al. (2009). Below we review, for each of the four tasks utilized in the present study, the existing literature for both action video gaming and media multitasking.

AX-continuous performance task

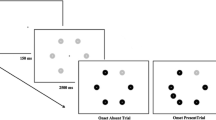

The AX-CPT (see Fig. 1) measures proactive cognitive control—as opposed to the reactive control that is required, for example, in the Stroop task. In the AX-CPT, participants have to first encode a stimulus (the cue) that signifies a context and allows them to prepare a response to a second stimulus (the probe).

AX-continuous performance task. Participants are presented with sequences of red letters and are required to press a specific key (the “YES” key) if and only if the letter presented is a red “X” AND the previous red letter was an “A.” In all other situations (e.g., the letter that is presented is not a red “X,” or the letter is a red “X” but the previous red letter was not an “A”), participants are to press a different key (the “NO” key). There are two experimental conditions: the “no-distractors” condition (top panel) and the “distractors” condition (bottom panel). The latter differs from the former in the presentation of three white letters (the distractors) sandwiched between the red letters. Participants are to press the “NO” key in response to each of these distractor elements

Ophir and colleagues (2009) compared HMM and LMM on two versions of the AX-CPT: the distractor-absent condition and the distractor-present condition. In the distractor-present condition, additional, irrelevant and clearly identifiable stimuli were presented between the cue and the probe. Ophir and colleagues’ results showed that HMM and LMM did not differ in the distractor-absent condition, but that HMM were slower than LMM to respond in the distractor-present condition. This pattern led these authors to conclude that HMM were more affected by distracting information than LMM.

The AX-CPT has not been used to contrast AVGP and NVGP. Some studies, however, suggest that AVGP might outperform NVGP on this type of cognitive control task. Dye, Green, and Bavelier (2009b) assessed the performance of AVGP and NVGP on the test of variables of attention (TOVA). The TOVA is in essence a go/no-go task, wherein participants press a button whenever a target shape appears in one location and withhold a response when the target appears in a different location. In that study, AVGP responded more quickly than NVGP with no difference in accuracy; that is, the faster responses were not the result of a speed–accuracy trade-off. Bailey, West, and Anderson (2010) used different subsets of trials on the Stroop task to extract indices of proactive and reactive cognitive control in AVGP and NVGP. In this setup, video game experience was not found to modulate reactive cognitive control. Proactive control, as measured by RT analyses, also did not differ as a function of video game experience, except for a tendency toward a weaker influence of the previous trial in more-experienced gamers, which the authors interpreted as deficient maintenance of proactive control with more video game experience. More recently, McDermott, Bavelier, and Green (2014), using a “recent-probes” proactive interference task, found different speed–accuracy trade-offs in AVGP and NVGP (AVGP were faster but less accurate), but no genuine difference in proactive interference. It remains to be seen whether these results can be replicated when using a more direct measure of proactive control (the AX-CPT) and controlling for media multitasking.

N-back task

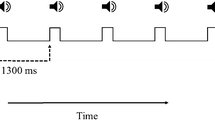

In the N-back task, participants are shown a sequence of letter stimuli and have to decide for each of them whether it matches the stimulus presented N items ago (see Fig. 2). Thus, to perform the task well, participants must remember the N most recently presented items and update that list as new items are presented. The N-back task taps the process of “updating”—one of the three major functions of cognitive control (Miyake et al., 2000; the remaining components being shifting/flexibility and inhibition).

N-back task. Participants face a constant stream of letters presented for 500 ms and separated by 3,000-ms blank screens. For each new letter, participants report via keypresses (“YES” and “NO” keys) whether it is the same as the letter presented N steps back. The left and right panels illustrate sequences of such trials in the two-back and the three-back conditions, respectively
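The N-back decision rule, and the hit and false-alarm rates used to score it, can be sketched in a few lines of Python. The function names are hypothetical, and treating the first N items of a block (which have no item N steps back) as non-targets is our assumption rather than a detail given in the text.

```python
def nback_targets(letters, n):
    """For each position, True if the letter matches the one presented n steps back."""
    return [i >= n and letters[i] == letters[i - n] for i in range(len(letters))]


def hit_and_false_alarm_rates(letters, n, responses):
    """responses: one boolean per letter, True for a 'YES' keypress.

    Hit rate = YES responses on targets / number of targets.
    False-alarm rate = YES responses on non-targets / number of non-targets.
    """
    targets = nback_targets(letters, n)
    hits = sum(t and r for t, r in zip(targets, responses))
    false_alarms = sum((not t) and r for t, r in zip(targets, responses))
    n_targets = sum(targets)
    n_nontargets = len(targets) - n_targets
    hit_rate = hits / n_targets if n_targets else float("nan")
    fa_rate = false_alarms / n_nontargets if n_nontargets else float("nan")
    return hit_rate, fa_rate


# Two-back example: items 3 and 4 ("A", "B") are targets.
letters = list("ABABCA")
responses = [False, False, True, False, True, False]
print(hit_and_false_alarm_rates(letters, 2, responses))  # (0.5, 0.25)
```

Keeping hits and false alarms separate matters here because, as described next, HMM and LMM differed mainly in false alarms rather than hits.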

Ophir and colleagues (2009) compared HMM and LMM on two-back and three-back versions of the N-back task. Performance was assessed only in terms of accuracy, not RTs. The results showed that HMM and LMM differed only in the three-back condition, in which HMM had approximately the same hit rate as LMM, but made substantially more false alarms. Furthermore, this increased false alarm rate appeared to build up during the course of the experiment, as if HMM had increasing difficulty filtering out previously encountered but now irrelevant items.

In the AVGP/NVGP literature, Boot, Kramer, Simons, Fabiani, and Gratton (2008) used a spatial version of the two-back task in two experiments. In the first experiment, they observed that self-reported AVGP tended to be faster, but not more accurate, than NVGP. In the second experiment, NVGP were trained on either an action video game or a control game. All participants improved on the task, but there were no significant differences between the two training groups.

More recently, Colzato, van den Wildenberg, Zmigrod, and Hommel (2013) compared AVGP and NVGP in one-back and two-back conditions—similar to those used by Ophir et al. (2009). Performance was assessed using both d' and RTs on correct trials. Their results showed that in both of these conditions, AVGP were faster and more accurate than NVGP. Specifically, AVGP had both higher hit rates and lower false alarm rates than NVGP, which is compatible with the hypothesis of improved cognitive control (rather than a specific improvement in distractor filtering). Finally, McDermott et al. (2014) measured both accuracy and RTs for AVGP and NVGP in a spatial N-back task in which N varied from one to seven. AVGP were faster overall than NVGP while keeping the same level of accuracy. These group differences were particularly salient when the task difficulty was increased.
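Sensitivity (d') combines hits and false alarms into a single signal-detection measure, d' = z(H) − z(FA), where z is the inverse of the standard normal cumulative distribution function. A minimal sketch using Python's standard library follows. Because z is undefined at rates of exactly 0 or 1, the count-based helper applies a log-linear correction; that correction is one common choice and an assumption here, not necessarily what Colzato et al. (2013) used.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def d_prime(hit_rate, fa_rate):
    """Signal-detection sensitivity: z(hit rate) - z(false-alarm rate)."""
    return _z(hit_rate) - _z(fa_rate)


def d_prime_from_counts(hits, n_targets, false_alarms, n_nontargets):
    """d' from raw counts with a log-linear correction (add 0.5 to each count
    and 1 to each total) so that neither rate reaches exactly 0 or 1."""
    hit_rate = (hits + 0.5) / (n_targets + 1)
    fa_rate = (false_alarms + 0.5) / (n_nontargets + 1)
    return d_prime(hit_rate, fa_rate)


print(round(d_prime(0.8, 0.2), 2))  # 1.68
```

Higher hit rates and lower false-alarm rates both raise d', which is why the pattern reported by Colzato et al. translates into higher sensitivity for AVGP.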

Task switching

Task-switching experiments require participants to keep multiple task sets active in memory and to flexibly switch among them across trials. In a typical task-switching experiment, participants are presented with a sequence of stimuli on which they have to perform one of two tasks, depending on a previously presented cue (see Fig. 3 for an example). The decrement in performance (slower or less accurate responses) on trials on which the task changes, relative to trials on which it repeats, is termed the task-switch cost. Ophir et al. (2009) reported that HMM had a significantly larger task-switch cost than LMM, suggesting that HMM are less able to filter out irrelevant task sets.

Task switching. At the beginning of each trial, participants are presented with a task cue indicating whether they should perform a NUMBER (“odd vs. even,” as in the example depicted above) or a LETTER (“vowel vs. consonant”) discrimination task on the subsequently presented stimulus, which comprises both a letter and a number (e.g., “e7”)
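As a concrete illustration of the task-switch cost, the RT version can be computed by labeling each trial as a switch or a repeat relative to the preceding trial and comparing mean RTs on correct trials. This is a hypothetical sketch: the `(task, rt_ms, correct)` trial format is our assumption, the first trial is dropped because it has no predecessor, and the common practice of also excluding trials that follow an error is omitted for brevity.

```python
from statistics import mean


def switch_cost(trials):
    """Mean RT on correct switch trials minus mean RT on correct repeat trials.

    trials: list of (task, rt_ms, correct) tuples in presentation order
    (a hypothetical format). The first trial has no predecessor and is skipped.
    """
    switch_rts, repeat_rts = [], []
    for (prev_task, _, _), (task, rt, correct) in zip(trials, trials[1:]):
        if not correct:
            continue  # RT costs are usually computed on correct trials only
        if task != prev_task:
            switch_rts.append(rt)
        else:
            repeat_rts.append(rt)
    return mean(switch_rts) - mean(repeat_rts)


trials = [
    ("number", 600, True),
    ("number", 550, True),   # repeat
    ("letter", 700, True),   # switch
    ("letter", 560, True),   # repeat
    ("number", 720, True),   # switch
]
print(switch_cost(trials))  # 155
```

A smaller value indicates that changing tasks is less costly, which is the sense in which the studies below report "reduced task-switch costs."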

Task switching has been repeatedly investigated in AVGP (Andrews & Murphy, 2006; Boot et al., 2008; Cain, Landau, & Shimamura, 2012; Colzato et al., 2014; Colzato, van Leeuwen, van den Wildenberg, & Hommel, 2010; Green, Sugarman, Medford, Klobusicky, & Bavelier, 2012; Karle, Watter, & Shedden, 2010; Strobach et al., 2012). Most of these studies have reported enhanced task-switching abilities. This is true both for studies that selected individuals on the basis of their self-reported action video game experience (i.e., cross-sectional studies: Andrews & Murphy, 2006; Boot et al., 2008; Cain et al., 2012; Colzato et al., 2010; Green et al., 2012; Karle et al., 2010; Strobach et al., 2012; but see Gaspar et al., 2014) and for studies that had participants train on action video games (i.e., training studies: Colzato et al., 2013; Green et al., 2012; Strobach et al., 2012; but see Boot et al., 2008). For example, Andrews and Murphy, using a number and letter discrimination task, observed a reduced task-switching cost for AVGP relative to NVGP. This was true for short intertrial intervals (150 ms) but not for longer ones (600 and 1,200 ms). Boot et al. required participants to classify numbers as either greater/smaller than 5 or even/odd. AVGP showed a reduced task-switch cost (although this effect was not found in their training experiment). More recently, Green et al. (2012) contrasted AVGP and NVGP in four different task-switching experiments that varied in terms of response mode (vocal vs. manual), the nature of the task (perceptual vs. conceptual), and the level at which the switches were applied (switching among goals or among stimulus–response mappings). The researchers also trained participants on either an action video game or a control game for 50 h. In all of these conditions, AVGP showed reduced task-switch costs relative to NVGP. Overall, the vast majority of this literature suggests that action video game play improves cognitive flexibility.

Filter task

In the filter task, participants are successively presented with two arrays of oriented lines that are colored red or blue (see Fig. 4). On half of the trials, one of the red lines from the first display changes orientation in the second display. Participants are asked to report whether there was an orientation change in any of the red lines and to ignore the blue lines. By varying the numbers of targets (red lines) and distractors (blue lines) in the display, this task assesses both visual short-term memory capacity and resistance to distractors. Ophir and colleagues (2009) measured performance in ten different combinations of target number/distractor number, but because their focus was on the effect of distractors, they reported data only from the four conditions that kept the target number constant at two and varied the distractor number. Their results showed that for LMM, performance did not vary with the number of distractors. However, for HMM, performance decreased when the number of distractors was high, suggesting that HMM might be specifically impaired in filtering out task-irrelevant information.

Filter task. Participants fixate the central cross during the entire trial. Two images separated by a blank screen are presented in succession, depicting an array of randomly oriented red (target) and blue (distractor) lines (different shades of gray in the print figure). From the first to the second exposure, these images are either exactly identical (“same” response) or one (and only one) of the targets changes its orientation (“different” response). The numbers of targets and distractors vary across trials
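The section does not state which capacity measure was derived from the filter task. One common estimate for change-detection data is Cowan's K = S × (H − FA), where S is the number of targets, H the hit rate (correctly reporting a change), and FA the false-alarm rate (reporting a change when there was none). The sketch below assumes that measure and computes it for each distractor level at a fixed target number of two, mirroring the four conditions Ophir and colleagues reported; the input values in the usage line are illustrative, not data.

```python
def cowans_k(n_targets, hit_rate, fa_rate):
    """Cowan's K: estimated number of target items held in visual short-term memory."""
    return n_targets * (hit_rate - fa_rate)


def k_by_distractor_count(rates, n_targets=2):
    """rates: {number_of_distractors: (hit_rate, fa_rate)} at a fixed target count.

    Returns K for each distractor level, ordered by distractor count. A drop in K
    as distractors increase would indicate poorer filtering of irrelevant items.
    """
    return {d: cowans_k(n_targets, h, fa) for d, (h, fa) in sorted(rates.items())}


# Illustrative (made-up) rates for two targets with 0 and 6 distractors.
print(k_by_distractor_count({6: (0.7, 0.2), 0: (0.9, 0.1)}))
```

Holding the number of targets constant isolates the effect of distractors on K, which is the comparison that led Ophir and colleagues to their filtering account.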

Some studies have suggested that AVGP have better visual short-term memory (e.g., Appelbaum, Cain, Darling, & Mitroff, 2013) and are better at filtering out distractors (e.g., Mishra, Zinni, Bavelier, & Hillyard, 2011) than NVGP. More recently, Oei and Patterson (2013) investigated the impact of 20 h of action video game training on the filter task. They measured the same ten target/distractor number conditions as Ophir and colleagues (2009) but reported only the “two-targets six-distractors” (maximal number of distractors) and the “eight-targets no-distractors” (maximal number of targets) conditions. Playing action video games, in comparison to other forms of video games, improved performance in both of these conditions, suggesting that action video game experience improves both visual capacity and distractor suppression (see also Oei & Patterson, 2015).

Method

Recruitment procedure

The participants were students recruited at the University of Rochester and the University of Wisconsin using a mixture of procedures. At the University of Wisconsin, students filled out a battery of questionnaires that included the media multitasking and video game experience questionnaires as part of a general screening procedure to enroll in psychology experiments (and were recruited on the basis of their responses to these questionnaires). In addition, flyers were distributed at both universities to overtly and specifically recruit students with or without extensive action video gaming experience.

Participants

Of the 68 students who were initially enrolled in the study, 60 completed all the experiments and questionnaires (the partial data from the eight incomplete participants were excluded from further analysis). Of these, 35 were recruited and tested at the University of Rochester and 25 at the University of Wisconsin.

Participants filled in the same media multitasking questionnaire as in Ophir et al. (2009) and the same video game usage questionnaire that is used in the Bavelier lab (see the Appendix). In the media multitasking questionnaire, participants are presented with a list of media (e.g., TV, print) and asked to report how much time they spend on each medium and to what extent they simultaneously consume each of the media combinations (e.g., TV and print at the same time). In the video game usage questionnaire, participants are asked to report their weekly consumption of video games separately for a set of game genres (e.g., action games, role playing games). For media multitasking, participants were classified as HMM or LMM using the same boundary values as Ophir et al. (i.e., media multitasking indices <2.86 defined LMM, and indices >5.90 defined HMM). All participants falling between these two extremes were termed intermediate media multitaskers (IMM). For gaming, we used the same criteria previously used in our work (Hubert-Wallander, Green, Sugarman, & Bavelier, 2011): those who played first-person shooter or other action games for more than 5 h per week (and at most 3 h of turn-based, role playing, or music games) were classified as AVGP; those who played less than 1 h per week of first-person shooters, action or sports games, and real-time strategy games were classified as NVGP. Figure 5 reports the sample sizes across the media multitasking and gaming groups.

Distribution of the media multitasker index (MMI) among the action video game players (AVGP, top row) and non-video-game players (NVGP, bottom row). Vertical solid lines represent the criterion values to be considered either light (LMM) or heavy (HMM) media multitaskers (with individuals in between being categorized as intermediate media multitaskers—IMM). These values are identical to those used by Ophir et al. (2009), based on their large sample of college students
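The grouping rules described above can be written as two small classification functions. This is a sketch under our own assumptions: the genre keys are hypothetical, the "more than 5 h" action criterion and the "at most 3 h" criterion are each treated as totals summed across the listed genres, and the NVGP criterion is applied to each listed genre separately. Because the text uses strict inequalities, scores of exactly 2.86 or 5.90 fall into the IMM group.

```python
def media_multitasking_group(mmi):
    """Classify an MMI score with the boundaries taken from Ophir et al. (2009)."""
    if mmi < 2.86:
        return "LMM"
    if mmi > 5.90:
        return "HMM"
    return "IMM"  # everyone between the two boundaries, inclusive


def gaming_group(weekly_hours):
    """Classify gaming status from weekly hours per genre (hypothetical genre keys).

    Assumptions: the action and 'other genre' criteria use summed hours,
    and the NVGP criterion must hold for each listed genre separately.
    """
    def h(genre):
        return weekly_hours.get(genre, 0.0)

    action = h("first_person_shooter") + h("action")
    other = h("turn_based") + h("role_playing") + h("music")
    if action > 5 and other <= 3:
        return "AVGP"
    nvgp_genres = ("first_person_shooter", "action", "sports", "real_time_strategy")
    if all(h(g) < 1 for g in nvgp_genres):
        return "NVGP"
    return None  # meets neither criterion; not assigned to a gaming group


print(media_multitasking_group(6.2), gaming_group({"first_person_shooter": 8}))  # HMM AVGP
```

Crossing the two functions yields the six cells (AVGP or NVGP by LMM, IMM, or HMM) whose sample sizes are shown in Figure 5.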

Participants were on average 20.68 years old (SEM = 0.43), and most were male (52/60); the eight females were all NVGP, distributed across the media multitasking groups as follows: zero HMM, four IMM, and four LMM. All participants gave written consent and received either $10/h or course credit for their participation.

Apparatus

Participants were seated at an approximate distance of 57 cm from the screen. All measurements were made on the same Dell Optiplex computer running E-Prime 2.0 software, with stimuli presented on a 19-in. Dell TFT monitor.

Procedure

All participants completed three questionnaires: the Adult ADHD Self-Report Scale (ASRS, version 1.1, six items; Kessler et al., 2005), the Cognitive Failure Questionnaire (CFQ, 25 items; Broadbent, Cooper, FitzGerald, & Parkes, 1982), and the Work and Family Orientation Questionnaire (WOFO, 19 items distributed across three subscales—work, mastery, and competitiveness; Helmreich & Spence, 1978).

The four tasks presented in this study (i.e., AX-CPT, N-back, filter task, and task switching) were identical to those of Ophir et al. (2009) and were administered over two sessions. Half of the participants completed the N-back and filter tasks in their first session and the AX-CPT and task-switching task during the second session. This order was reversed for the remaining participants. The individual task procedures are described below.

AX-continuous performance task

Figure 1 illustrates the time course of typical trials in the distractor-absent and distractor-present conditions of the AX-CPT. Participants were exposed to a sequence of colored letters and were instructed to press the “Yes” key only when the current letter was a red “X” and the previous red letter (regardless of any intervening white letters; see below) was an “A.” In all other cases, participants were to press the “No” key. Two experimental conditions were run in succession: the distractor-absent and distractor-present conditions. In the distractor-absent condition, all letters that appeared were red (with approximately 5 s between presentations; see Fig. 1). In the distractor-present condition, by contrast, three different white distractor letters were presented within the 5-s period between red letter presentations. Distractors were presented for 300 ms and separated by 1-s intervals. Participants were to press the “No” key in response to each of these distractor stimuli.

All participants first completed the distractor-absent condition and then the distractor-present condition. Each of these conditions contained five blocks of 30 trials—21 trials were of the type “AX,” three had a cue “A” but a non-“X” probe (generically termed the “AY” trial type), three had an “X” probe but a non-“A” cue (the “BX” trial type), and three trials had neither an “A” as a cue nor an “X” as a probe (“BY” trials). The complete experiment lasted about 30 min.
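The response rule and the trial-type labels can be made concrete with a minimal Python sketch. It assumes each stimulus is represented as a hypothetical `(letter, color)` pair and covers only the scoring logic, not stimulus timing.

```python
from collections import Counter

# Trial-type composition of one 30-trial block, as described above.
BLOCK_COMPOSITION = Counter({"AX": 21, "AY": 3, "BX": 3, "BY": 3})


def trial_type(cue: str, probe: str) -> str:
    """Label a cue/probe pair of red letters as AX, AY, BX, or BY."""
    return ("A" if cue == "A" else "B") + ("X" if probe == "X" else "Y")


def expected_responses(sequence):
    """Correct key for each (letter, color) item in presentation order.

    'Yes' only for a red 'X' whose previous red letter was an 'A'.
    White distractors always require 'No' and do not change which red
    letter serves as the cue.
    """
    responses = []
    previous_red = None
    for letter, color in sequence:
        if color == "red":
            is_target = letter == "X" and previous_red == "A"
            responses.append("Yes" if is_target else "No")
            previous_red = letter
        else:
            responses.append("No")
    return responses
```

For example, the distractor-present sequence red A, white K, white M, white P, red X calls for "No" on the first four items and "Yes" on the final red X.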

N-back

Figure 2 illustrates the time course of typical trials in the two-back and three-back conditions. Participants were presented with sequences of letters (selected from the set B C D F G H J K L M N P Q R S T V W Y Z). Letters were presented for 500 ms and interleaved with 3-s periods of a blank screen, during which participants were to press one of two keyboard keys to indicate whether the just-presented letter was the same as the letter that had been presented two letters (in the two-back condition) or three letters (in the three-back condition) earlier.

All participants first completed the two-back condition before moving on to the three-back condition. For each of these conditions, participants started with a practice block of 20 trials before completing three blocks of 30 trials of the N-back task. One third of the trials in each of these blocks required a “same as N-back” response.
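A minimal Python sketch of how target trials and false alarms can be scored in the N-back task. The function names are hypothetical, and the false alarm rate is taken here as the proportion of non-target trials that received a "same" response.

```python
def nback_targets(letters, n):
    """True where a letter matches the letter presented n positions earlier."""
    return [i >= n and letters[i] == letters[i - n] for i in range(len(letters))]


def false_alarm_rate(targets, said_same):
    """Proportion of non-target trials that received a 'same' response."""
    nontarget = [resp for is_target, resp in zip(targets, said_same) if not is_target]
    return sum(nontarget) / len(nontarget)
```

With one third of the trials being targets, each 30-trial block contains 10 target and 20 non-target trials.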

Task switching

Figure 3 illustrates a typical trial in the task-switching experiment. In this experiment, participants were presented with a stimulus composed of both a letter (from the set a e i k n p s u) and a number (from 2 to 9; e.g., “e7”) and asked to perform either a letter discrimination task (“vowel vs. consonant”) or a number discrimination task (“odd vs. even”) by pressing one of two keys. The same two keys were used for both tasks (left button for odd/vowel and right button for even/consonant). A task cue (NUMBER vs. LETTER) was displayed for 200 ms at the beginning of each trial and indicated which task to perform on the stimulus presented 226 ms later. The intertrial interval was 950 ms.

Participants first performed three practice blocks (both tasks in isolation, and then a shortened version of the full task). Each of these blocks contained 30 trials. After the practice blocks, participants performed four blocks of the task-switching experiment. Each of these blocks contained 80 trials, 40% of which were randomly interleaved switch trials.
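The response mapping and the task-switch cost can be sketched in Python as follows. This is a hypothetical illustration: stimuli are assumed to be two-character strings such as "e7", task cues are the strings "LETTER" and "NUMBER", and the switch cost is taken as the median RT on switch trials minus the median RT on repeat trials, using correct trials that do not follow an error (the exclusion described under Response speed).

```python
from statistics import median

VOWELS = set("aeiu")  # vowels within the letter set a e i k n p s u


def correct_key(stimulus: str, task: str) -> str:
    """Left = vowel/odd, right = consonant/even."""
    letter, digit = stimulus[0], int(stimulus[1])
    if task == "LETTER":
        return "left" if letter in VOWELS else "right"
    return "left" if digit % 2 == 1 else "right"


def switch_cost(tasks, rts, correct):
    """Median switch RT minus median repeat RT (ms).

    The first trial has no predecessor and is skipped, as is any trial
    that was incorrect or immediately followed an incorrect trial.
    """
    switch_rts, repeat_rts = [], []
    for i in range(1, len(tasks)):
        if not (correct[i] and correct[i - 1]):
            continue
        if tasks[i] != tasks[i - 1]:
            switch_rts.append(rts[i])
        else:
            repeat_rts.append(rts[i])
    return median(switch_rts) - median(repeat_rts)
```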

Filter task

Figure 4 illustrates the spatial layout and time course of a typical trial in the filter task. Participants were asked to fixate a central cross that appeared at the beginning of each trial on a gray background. Then, 200 ms after this onset, an array of randomly oriented, evenly distributed, nonoverlapping red and blue lines was displayed for 100 ms. The participants’ task was to encode the orientations of the red target lines. The blue lines were task-irrelevant and therefore called “distractors.” A second array, displayed for 2,000 ms, was presented 900 ms after the offset of the first display. Participants reported whether or not one of the red target lines had changed orientation (±45°) across these two arrays. An orientation change occurred on 50% of the trials, and the numbers of targets and distractors varied from trial to trial. There could be two, four, six, or eight targets and zero, two, four, or six distractors, with the additional constraint that the total number of elements in an array could not exceed eight (creating a total of ten target–distractor conditions). Participants first performed 20 practice trials (two trials per condition) before completing a single block of 200 trials (ten trials per condition).
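The count of ten target–distractor conditions follows from the constraint that an array holds at most eight elements; a short Python check (illustrative only) enumerates them.

```python
from itertools import product

TARGET_COUNTS = (2, 4, 6, 8)
DISTRACTOR_COUNTS = (0, 2, 4, 6)
MAX_ELEMENTS = 8  # total items in one array may not exceed eight

conditions = [
    (targets, distractors)
    for targets, distractors in product(TARGET_COUNTS, DISTRACTOR_COUNTS)
    if targets + distractors <= MAX_ELEMENTS
]

for targets, distractors in conditions:
    print(f"{targets} targets, {distractors} distractors")
```

Four of these conditions have two targets, three have four, two have six, and one has eight.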

Data analysis

Software package

The data were analyzed using R (version 3.0.1).

MMI scores

The media multitasking questionnaire (Ophir et al., 2009) assesses both the total amount of media use (across 12 distinct forms of media) and the joint usage of all pairs of media. From these responses we computed an MMI, which reflects the mean number of simultaneously used media, weighted by the total use of each form of media (see the supplementary methods for further details). Ophir and colleagues observed that MMIs measured on 262 university students produced an approximately Gaussian distribution with a mean of 4.38 and a standard deviation of 1.52. They classified participants who were at least 1 SD above or below the mean as HMM or LMM, respectively; the remaining participants were not included in their study. Unlike Ophir et al., we included these participants as a third group, which we called the intermediate media multitaskers.
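As a sketch, the weighting described above can be written as a weighted sum in which each medium's mean number of concurrently used media is weighted by that medium's share of total media hours, following our reading of Ophir et al. (2009). The per-medium concurrency values are taken as given inputs here; how they are scored from the questionnaire responses is specified in the supplementary methods and is not reproduced. Medium names in any call are hypothetical.

```python
def media_multitasking_index(hours, concurrent):
    """Weighted mean number of media used at the same time.

    hours[m]: weekly hours spent with medium m as the primary medium
    concurrent[m]: mean number of other media used while using medium m
    Each medium's concurrency is weighted by its share of total hours.
    """
    total = sum(hours.values())
    return sum(concurrent[m] * h / total for m, h in hours.items())
```

Because the weights are shares of total media time, a heavily used medium that is often combined with others raises the index more than a rarely used one.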

In the present study, we measured MMIs on 60 participants, some of whom were overtly recruited for being AVGP or NVGP. Figure 5 illustrates the distribution of MMIs as a function of gaming group. MMI again appeared to be approximately normally distributed, with a mean of 3.98 and a standard deviation of 1.99. AVGP had higher scores than NVGP (4.45 vs. 3.62), but this difference was not statistically significant [two-sided t test: t(58) = 1.63, p = .109].

To classify our participants into HMM, LMM, and IMM, we used the same numeric values obtained by Ophir and colleagues (2009), rather than ±1 SD from the mean of our own sample, for three reasons. First, their sample more closely matches the distribution of MMI scores in the general population, because they used a larger sample of participants from a homogeneous population, whereas our sample dramatically overrepresented AVGP relative to their prevalence in the general population. Second, we wanted to be able to compare and contrast our results directly with those of Ophir and colleagues. Finally, because AVGP in our sample tended to have larger MMI scores than NVGP, using a cutoff of 1 SD below our sample mean would have led to a very small sample of AVGP among the LMM (it would, however, have had no effect on the composition of the HMM group).
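The arithmetic behind the last point can be checked directly: mean ± 1 SD gives 2.86 and 5.90 for Ophir et al.'s sample, but 1.99 and 5.97 for ours, so the upper (HMM) boundary barely moves while the lower (LMM) boundary would drop by almost 0.9. A minimal Python sketch:

```python
def sd_cutoffs(mean, sd, k=1.0):
    """Lower and upper boundaries at mean - k*SD and mean + k*SD."""
    return round(mean - k * sd, 2), round(mean + k * sd, 2)


ophir = sd_cutoffs(4.38, 1.52)    # Ophir et al. (2009) sample
present = sd_cutoffs(3.98, 1.99)  # present sample
print(ophir, present)  # (2.86, 5.9) (1.99, 5.97)
```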

Accuracy

Trials without any response, or with aberrant RTs either so short that they could not have been based on processing the display (less than 120 ms) or so long that participants were likely not performing the task (greater than 5 s), were excluded from further accuracy analyses (on average, 0.6% of the data: AX-CPT, 1.81%; N-back, 0.41%; task switching, 0.11%; filter task, 0.15%). Accuracy was assessed as the percentage of correct responses in all but the filter task. Following previous studies, performance in the filter task was assessed using the index of visual capacity K = S(H – F), where S is the number of targets in the display, H the hit rate, and F the false alarm rate (Marois & Ivanoff, 2005; Vogel, McCollough, & Machizawa, 2005). In addition, performance in the filter task was evaluated using an alternative model (Ma, Husain, & Bays, 2014; van den Berg, Shin, Chou, George, & Ma, 2012); in this case, accuracy was assessed in terms of numbers of hits and false alarms.
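The trial-exclusion rule and the capacity index can be sketched in Python as below. The function names are hypothetical, `None` stands for a trial without a response, and hit and false alarm rates are taken, as usual, from change and no-change trials, respectively.

```python
def is_valid_trial(rt_ms):
    """Keep trials with a response and an RT between 120 ms and 5 s."""
    return rt_ms is not None and 120 <= rt_ms <= 5000


def hit_and_false_alarm_rates(change_present, said_change):
    """Hit rate on change trials and false alarm rate on no-change trials."""
    hits = [r for c, r in zip(change_present, said_change) if c]
    false_alarms = [r for c, r in zip(change_present, said_change) if not c]
    return sum(hits) / len(hits), sum(false_alarms) / len(false_alarms)


def capacity_k(n_targets, hit_rate, false_alarm_rate):
    """Visual capacity K = S(H - F), with S the number of targets."""
    return n_targets * (hit_rate - false_alarm_rate)
```

For instance, with four targets, a hit rate of .75, and a false alarm rate of .25, K = 4 × 0.5 = 2.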

Response speed

Response speed was assessed on correct trials only. Because RT distributions are known to be skewed, we screened the RTs for outliers by first log-transforming them, then removing as outliers all trials on which the log(RT) was more than 2 SDs from the mean of its respective condition (computed separately for each task and participant), and finally transforming back to RTs. This procedure excluded 4.5% of the data across tasks and groups. The percentages of trials removed were comparable across tasks (AX-CPT and N-back, 4.6%; task switching, 4.5%; filter task, 4.2%) and across groups (LMM, 4.34%; IMM, 4.53%; HMM, 4.54%; AVGP, 4.35%; NVGP, 4.59%). Analyses were then carried out on the median RTs computed for each condition and each participant. In the task-switching experiment, as is customary, all trials that immediately followed an error trial were excluded from the median RT computation. We note that analyses carried out on the median RT without the outlier-trimming procedure described above led to virtually identical results. However, because the p values were overall smaller without outlier removal, we chose to report the results from the more conservative approach with outlier removal.
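A minimal Python sketch of this trimming procedure for one participant and condition. Whether the sample or the population SD was used is not stated, so the use of the sample SD here is an assumption.

```python
import math
from statistics import mean, median, stdev


def trimmed_median_rt(rts, n_sd=2.0):
    """Median of correct-trial RTs after log-scale outlier removal.

    Trials whose log(RT) lies more than n_sd sample SDs from the mean
    log(RT) are dropped; the median is taken on the untransformed RTs,
    which is equivalent to back-transforming the retained log values.
    """
    logs = [math.log(rt) for rt in rts]
    m, s = mean(logs), stdev(logs)
    kept = [rt for rt, lg in zip(rts, logs) if abs(lg - m) <= n_sd * s]
    return median(kept)
```

Working on the log scale keeps a single very slow trial from inflating the SD as much as it would on the raw scale, so the cutoff is less dominated by the long right tail.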

Combined RT/accuracy analyses

In order to assess group differences in terms of both speed and accuracy, we computed inverse efficiency scores, which combine response speed and accuracy in a single variable (i.e., RT divided by the proportion of correct responses). This method is often used in the developmental and aging literatures when groups with different baseline RTs have to be compared (see, e.g., Akhtar & Enns, 1989; McDermott et al., 2014). The inverse efficiency scores were then submitted to statistical analysis for all tasks except the filter task, in which response speed is not emphasized and the typical measure of interest is K.
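The computation is a single division per participant and condition. A minimal Python sketch follows, assuming the RT entered is the condition's median correct RT, as used elsewhere in this study; the example values are illustrative, not data from the study.

```python
def inverse_efficiency(rt_ms, proportion_correct):
    """Inverse efficiency score: RT divided by proportion correct (lower is better)."""
    if not 0 < proportion_correct <= 1:
        raise ValueError("proportion_correct must be in (0, 1]")
    return rt_ms / proportion_correct


# A faster but less accurate participant can end up less efficient overall.
print(inverse_efficiency(600, 0.95))  # slower, more accurate
print(inverse_efficiency(570, 0.80))  # faster, less accurate
```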

Data analysis plan

For each of the tasks, the omnibus analysis of variance (ANOVA) with the between-group factors Media Multitasking Group (three levels: HMM, IMM, LMM) and Gaming Group (two levels: AVGP, NVGP) is presented first. Next, because previously published studies on media multitasking contrasted only LMM and HMM, we present an ANOVA that includes only HMM and LMM, with the factors Media Multitasking Group (two levels: HMM, LMM) and Gaming Group (two levels: AVGP, NVGP). Then, because the bulk of the action video game literature has not selected for either HMM or LMM, and thus the IMM group is likely to be most similar to previously published studies contrasting AVGP to NVGP, an ANOVA focused only on IMM from the different gaming groups (two levels: AVGP, NVGP) is presented. Because the numbers of AVGP and NVGP within the HMM and LMM groups were limited, it was not possible to reliably assess the effects of video game experience within each of these two groups. As an alternative, we decided to collapse these two groups, to contrast the effects of gaming among these more “extreme” media multitaskers with those observed within the IMM. Finally, when contrasting two conditions or groups, we used Welch’s t test, which does not assume the samples to have equal variances.
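For reference, Welch's statistic and its Welch–Satterthwaite degrees of freedom (which account for the fractional df reported below, e.g., t(25.1)) can be computed as in this Python sketch; `scipy.stats.ttest_ind(x, y, equal_var=False)` returns the same statistic together with its p value.

```python
import math
from statistics import mean, variance


def welch_t(x, y):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    vx = variance(x) / len(x)  # squared standard error of mean(x)
    vy = variance(y) / len(y)  # squared standard error of mean(y)
    t = (mean(x) - mean(y)) / math.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return t, df
```

When the two groups have equal variances and sizes, the df reduces to n_x + n_y − 2, the value used by Student's t test.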

Results

Questionnaires

Scores on the ASRS, the CFQ, and the WOFO did not differ across gaming and media multitasking groups (ANOVA: all ps > .19), with the exception of the Mastery subscale of the WOFO questionnaire. High scores on this scale reflect a strong “preference for difficult, challenging tasks and for meeting internally prescribed standards of performance excellence.” We observed a significant difference in WOFO–Mastery across media multitasking levels [F(2, 54) = 5.78, p = .0053], as well as a significant Media Multitasking × Gaming Group interaction [F(2, 54) = 3.44, p = .04]. Mastery scores were higher for HMM (3.65 ± 0.1; p < .02) than for both IMM (3.25 ± 0.07; p = .0016) and LMM (3.37 ± 0.11; p = .06); these last two groups were not different from each other (p = .35). The gaming by media multitasking interaction was more difficult to interpret: Among the LMM group, Mastery scores did not differ between AVGP and NVGP (p = .75); among the IMM group, AVGP had lower Mastery scores than NVGP (p = .02), whereas among the HMM, the opposite tended to be true (p = .07).

AX-CPT

The average speed and accuracy for each group and each condition of the experiment are displayed in Table 1.

Overall performance was assessed using inverse efficiency scores (see Fig. 6). The 2×3×2 omnibus ANOVA on inverse efficiency scores with the factors Condition (i.e., distractor present vs. absent), Media Multitasking (i.e., HMM, IMM, LMM), and Gaming (i.e., AVGP vs. NVGP) revealed a main effect of condition [F(1, 108) = 11.73, p = .00087], a main effect of media multitasking [F(2, 108) = 4.01, p = .02], and no significant effect of gaming [F(1, 108) = 0.94, p = .34]. Condition did not interact with gaming group and/or media multitasking group (all ps > .25). The gaming by media multitasking group interaction was significant [F(2, 108) = 3.83, p = .02], suggesting different impacts of video game group as a function of media multitasking group. Given the low Ns in the extreme media multitasking cells (HMM and LMM), we regrouped these two into an extreme media multitasking group and carried out a 2×2×2 ANOVA with the factors Condition (i.e., distractor present vs. absent), Media Multitasking (i.e., extreme vs. intermediate), and Gaming (i.e., AVGP vs. NVGP). The results confirmed a main effect of condition [F(1, 112) = 8.53, p < .005] and a significant gaming group by media multitasking interaction [F(1, 112) = 8.44, p < .005]. No significant effect of gaming emerged [F(1, 112) = 3.07, p = .08], and there was no effect of media multitasking [IMM vs. extreme MM: F(1, 112) = 1.32, p = .25; all other ps > .14].

AX-CPT: Mean inverse efficiency as a function of group and condition. Overall performance is illustrated in terms of inverse efficiency. Note that a smaller inverse efficiency score means better performance. The left, middle, and right panels represent the data from participants classified as light (LMM), intermediate (IMM), and heavy (HMM) media multitaskers. Data from the action video game players (AVGP) and non-video-game players (NVGP) are displayed as empty and filled circles, respectively. Performance decreased with increasing levels of media multitasking. AVGP outperformed NVGP, but only among the IMM group. Error bars indicate 1 SEM

The 2×2×2 ANOVA focusing on the impact of media multitasking and contrasting HMM and LMM revealed a main effect of condition [F(1, 56) = 15, p = .00028], a main effect of media multitasking [F(1, 56) = 9.04, p = .0039] due to worse performance in HMM, and no significant main effect of gaming group [F(1, 56) = 0.4, p = .5]. None of the other effects were significant (for interactions with condition, ps > .2; for the interaction between gaming and media multitasking group, p = .99). Thus, unlike what was reported by Ophir et al. (2009), condition and media multitasking group did not interact.

Finally, the 2×2 ANOVA with condition and gaming group including only IMM individuals yielded a main effect of gaming group [F(1, 52) = 8.16, p = .006], no significant main effect of condition, and no significant gaming group by condition interaction (p > .37).

AX-CPT: Discussion

As in previous work, overall performance in this proactive cognitive control task was better in the distractor-present condition than in the distractor-absent condition. Indeed, it has been reported that the presence of differently colored distractors between prime and target tends to facilitate processing, possibly by maintaining the engagement of participants over time as they wait for the next target. A main effect of media multitasking group indicated differences in performance as a function of media multitasking, and a media multitasking by gaming group interaction suggested different impacts of gaming as a function of media multitasking group.

A closer look at the impact of media multitasking group confirmed worse performance in HMM than in LMM. Although this effect is in the same direction as that reported previously by Ophir et al. (2009), in that study, HMM differed from LMM only when distractors were added to the task. In the present study, we did not observe a specific impairment of the HMM group relative to the LMM group in the presence of distractors. Instead, we noted that HMM performed worse overall than LMM, with IMM falling numerically between the two groups (see Table 1).

Finally, the effects of action video game play on proactive control depended on the media multitasking group: Only in the IMM group did AVGP outperform NVGP, and they did so in terms of both accuracy and response speed (see Table 1), which rules out a speed–accuracy trade-off as an explanation for the difference between AVGP and NVGP. Importantly, gaming group was not a differentiating variable when considering the extreme media multitasking groups, whether LMM or HMM, as was confirmed by the lack of a significant interaction between gaming status and media multitasking status in the HMM versus LMM analysis.

N-back

The average speed and accuracy for each group and each condition of the experiment are displayed in Table 2.

Overall performance was assessed using inverse efficiency scores (see Fig. 7). The 2×3×2 omnibus ANOVA on inverse efficiency scores with the factors Condition (i.e., two- vs. three-back), Media Multitasking (i.e., HMM, IMM, LMM), and Gaming (i.e., AVGP vs. NVGP) revealed a main effect of condition [F(1, 108) = 5.77, p = .02]. There was no significant main effect of gaming group [F(1, 108) = 0.31, p = .58], and only a marginal effect of media multitasking group [F(2, 108) = 2.7, p = .07]. Condition did not interact with gaming or media multitasking (all ps > .5). However, we did find a significant gaming by media multitasking group interaction [F(2, 108) = 4.05, p = .02], again indicating a different impact of action video game play as a function of media multitasking. To further understand this effect, a 2 (two- vs. three-back) × 2 (extreme vs. intermediate) × 2 (AVGP vs. NVGP) ANOVA was carried out, grouping both HMM and LMM participants as an extreme media multitasking group. This analysis showed a main effect of condition [F(1, 112) = 6.3, p < .02] and a significant gaming group by media multitasking interaction [F(1, 112) = 7.2, p < .009]. We observed no main effect of gaming group [F(1, 112) = 1.26, p = .26], but there was a significant effect of media multitasking [F(1, 112) = 4, p < .05], suggesting that in this task IMM performed better overall than the combined HMM and LMM groups. No other effects were significant (ps > .6).

N-back: Mean inverse efficiency as a function of group and condition. Overall performance was assessed with inverse efficiency. The left, middle, and right panels represent data from the light (LMM), intermediate (IMM), and heavy (HMM) media multitaskers. Data from the action video game players (AVGP) and non-video-game players (NVGP) are displayed as empty and filled circles, respectively. Data points are group averages, and error bars indicate 1 SEM

The 2×2×2 ANOVA contrasting HMM and LMM on inverse efficiency scores revealed only a nonsignificant trend for condition [F(1, 56) = 2.69, p = .11; all other ps > .32] and no significant difference between HMM and LMM [F(1, 56) = 0.78, p = .38]. Because Ophir et al. (2009) reported increased false alarms in HMM relative to LMM, but only in the three-back condition, we ran generalized linear mixed-effects models on the false alarm rates and computed likelihood ratio tests to compare the models with and without media multitasking group. We found no significant difference between HMM and LMM in terms of overall false alarm rates [Condition vs. Condition + Media Multitasking Group: χ²(1) = 1.02, p = .31], and also no evidence for an interaction between media multitasking group and condition [Condition + Media Multitasking Group vs. Condition × Media Multitasking Group: χ²(1) = 0.93, p = .33].
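A likelihood ratio test compares the maximized log-likelihoods of two nested models: the statistic D = 2(ℓ_full − ℓ_reduced) is referred to a χ² distribution whose degrees of freedom equal the number of extra parameters in the fuller model. The Python sketch below implements that reference distribution for integer degrees of freedom using the standard recurrence for the χ² upper tail. It takes the two log-likelihoods as inputs and does not fit the mixed-effects models themselves; the function names are hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) of a chi-square variable, integer df >= 1."""
    if df % 2 == 1:
        q, k = math.erfc(math.sqrt(x / 2)), 1  # tail for df = 1
    else:
        q, k = math.exp(-x / 2), 2  # tail for df = 2
    while k < df:
        # Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)
        q += (x / 2) ** (k / 2) * math.exp(-x / 2) / math.gamma(k / 2 + 1)
        k += 2
    return q


def likelihood_ratio_test(loglik_reduced, loglik_full, extra_params):
    """Deviance D = 2 * (loglik_full - loglik_reduced) and its chi-square p value."""
    deviance = 2 * (loglik_full - loglik_reduced)
    return deviance, chi2_sf(deviance, extra_params)
```

As a consistency check, `chi2_sf(1.02, 1)` is about .31, matching the p value reported for the media multitasking group term.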

Finally, the 2×2 ANOVA restricted to IMM with Condition and Gaming Group as factors showed a marginally significant effect of condition [F(1, 52) = 3.84, p = .06] and a significant effect of gaming group [F(1, 52) = 12.9, p = .0007], with no significant interaction (p > .8). Numerically, AVGP were both more accurate and faster than NVGP (see Table 2).

N-back: Discussion

Poorer performance was found on three-back than on two-back trials, as expected. The main effect of media multitasking group was only marginally significant, driven by greater efficiency in IMM than in both HMM and LMM (faster responses at equivalent accuracy—see Table 2). Within the IMM group, the AVGP exhibited significantly better performance than nongamers, replicating and extending previous work on action video games. In contrast, action video game play did not have any effect when considering HMM and LMM participants.

Finally, although numerically HMM tended to be less efficient than LMM (less accurate and slower), no significant differences were observed between HMM and LMM, and unlike in Ophir et al. (2009), HMM were not specifically impaired in the three-back condition.

Interestingly, the effects of action video gaming on N-back performance depended on the media multitasking group; only in the IMM group did AVGP outperform NVGP. Gaming group was not a differentiating variable when considering the extreme media multitasking groups, whether LMM or HMM, as confirmed by the lack of significant interactions between gaming status and media multitasking status.

Task switching

The average speed and accuracy for each group and each condition of the experiment are displayed in Table 3.

Overall performance was assessed using inverse efficiency scores (see Fig. 8). The 2×3×2 omnibus ANOVA on inverse efficiency scores with the factors Trial Type (two levels: task-repeat and task-switch trials), Media Multitasking (three levels), and Gaming (two levels) revealed only a significant effect of trial type [F(1, 108) = 16.5, p = .00009] due to greater efficiency on task-repeat than on task-switch trials. None of the other effects were significant (all ps > .12). As before, a 2×2×2 ANOVA grouping participants from both HMM and LMM as an extreme media multitasking group was carried out. The results showed a main effect of trial type [F(1, 112) = 17, p = .00007] and a significant effect of gaming group [F(1, 112) = 4.4, p < .04], with AVGP being overall more efficient than NVGP (809 ± 32 vs. 910 ± 40). No other effects were significant (ps > .1).

Task switching: Mean inverse efficiency as a function of group and trial type (i.e., task-repeat and task-switch trials). Overall performance was assessed with inverse efficiency. The left, middle, and right panels show the group averages for the light (LMM), intermediate (IMM), and heavy (HMM) media multitasking groups, respectively. Data from the action video gamers (AVGP) are depicted as empty circles; those from the non-video-gamers (NVGP) are shown as filled circles. Error bars indicate 1 SEM

The 2×2×2 ANOVA on inverse efficiency scores comparing LMM and HMM again revealed a main effect of trial type [F(1, 56) = 9, p = .004]. None of the other effects were significant (ps > .2), except for a marginal interaction between gaming and media multitasking group [F(1, 56) = 2.93, p = .09], most likely reflecting a trend for AVGP to be more efficient than NVGP among LMM but less efficient among HMM.

The 2×2 ANOVA restricted to IMM participants showed main effects of trial type [F(1, 52) = 7.53, p = .008] and gaming group [F(1, 52) = 5.83, p = .02], with no interaction (p > .7). The AVGP appeared to be numerically both more accurate and faster than NVGP (see Table 3).

Task switching: Discussion

As expected, performance was poorer on task-switch than on task-repeat trials, no significant effects of media multitasking were observed, and AVGP tended to show better performance overall than NVGP.

Several previous studies have compared the abilities of HMM and LMM to flexibly switch between tasks. Ophir et al. (2009) reported that HMM were less flexible than LMM. Subsequent studies, however, either observed no difference between these two groups (Minear et al., 2013) or a difference in the opposite direction (Alzahabi & Becker, 2013). In the present study, we observed no reliable difference across these two media multitasking groups. One possible explanation for these discrepancies may reside in an interaction effect between media multitasking, video game play, and trial type (i.e., task switch vs. task repeat). For illustrative purposes only, if we consider the NVGP within the HMM and LMM groups, we see a pattern similar to that of Alzahabi and Becker (i.e., smaller task-switch costs for HMM), whereas the AVGP within those same groups present a pattern similar to that of Ophir et al. (i.e., larger task-switch costs for HMM). Clearly, our data lack the power to test such a claim, and we leave it to future studies with increased sample sizes to resolve this issue.

A large number of studies have investigated whether action video game play is associated with improved task-switching ability, and most have observed this to be the case in both cross-sectional studies (Andrews & Murphy, 2006; Boot et al., 2008; Cain et al., 2012; Colzato et al., 2010; Green et al., 2012; Karle et al., 2010; Strobach et al., 2012; but see Gaspar et al., 2014) and experimental studies (i.e., training—Colzato et al., 2013; Green et al., 2012; Strobach et al., 2012; but see Boot et al., 2008). In the present study, we observed that AVGP were indeed faster than NVGP, especially when considering IMM participants. However, although task-switch costs were numerically smaller in AVGP than in NVGP—and by an amount that was similar to that previously observed in the literature—the variance was such that the reduction in task-switch costs proper was not statistically significant.

Finally, the interaction between media multitasking status and gaming status was less clear for this measure than for the previous two measures, although numerically a trend was noted for worse AVGP performance in the HMM group.

Filter task

Analyses were carried out on the index of visual capacity K (see Fig. 9). Because target and distractor numbers are not independent but rather are negatively correlated, the analysis was performed with target number as the variable of interest, collapsing across distractor numbers. We note that K is expected to increase as target numbers increase, since K by definition is limited by target number.

Filter task: Mean visual capacity (K) as a function of group and condition. K is plotted as a function of the number of distractors (x-axis), the number of targets (rows of panels), the media multitasking group (i.e., LMM, IMM, or HMM, columns of panels), and the gaming group (i.e., AVGP and NVGP, colors). Error bars indicate 1 SEM

The 4×3×2 omnibus ANOVA on the K index, with the factors Target Number (four levels: two, four, six, or eight targets), Media Multitasking (three levels), and Gaming (two levels), revealed main effects of target number [F(3, 216) = 3.87, p = .01] and media multitasking [F(2, 216) = 4.3, p = .01], and a significant gaming group by media multitasking interaction [F(2, 216) = 5.8, p = .003; all other ps > .16]. As before, we ran the same ANOVA after grouping participants from both the HMM and LMM as an extreme group to better characterize the interaction between gaming status and media multitasking. This 4×2×2 omnibus ANOVA showed a main effect of gaming [F(1, 224) = 7.1, p < .01], a main effect of media multitasking (intermediate vs. extreme) [F(1, 224) = 6.4, p < .02]—with IMM performing better than the extreme group (K: 2.1 ± 0.12 vs. 1.8 ± 0.10)—and a significant gaming group by media multitasking interaction [F(1, 224) = 12, p = .0006]. Apart from the main effect of the number of targets [F(3, 224) = 6, p = .0006], no other effects were significant (ps > .14).

When contrasting HMM and LMM in a 4×2×2 ANOVA, no significant effect was found (all ps > .13). Yet, because Ophir et al. (2009) reported a rather specific deficit in HMM, whereby those individuals showed worse performance than LMM as distractors increased when the target number was held at two, we ran the equivalent analysis. For each participant we regressed K on the number of distractors in the two-targets condition. We then contrasted the estimated slopes and intercepts across the media multitasking (LMM/HMM) and gaming (AVGP/NVGP) groups. Numerically, HMM were more affected by the number of distractors than were LMM (HMM: –0.027 ± 0.02 vs. LMM: –0.018 ± 0.01) and had lower intercepts (HMM: 1.43 ± 0.11 vs. LMM: 1.59 ± 0.05), but these differences were not statistically reliable (in t tests on intercepts or slopes, all ps > .22). The equivalent analysis on AVGP and NVGP also showed no significant difference (ps > .16), except when focusing on the IMM group, in which AVGP had numerically higher intercepts than NVGP (1.73 ± 0.04 vs. 1.52 ± 0.09) and smaller distractor effects (–0.018 ± 0.02 vs. –0.035 ± 0.02). The effect on intercepts was significant [t(19) = 2.1, p = .05], but the distractor effect was not [t(25.1) = 0.57, p = .57].
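The per-participant regression amounts to an ordinary least-squares line of K on distractor number in the two-target condition, whose slope indexes the distractor effect and whose intercept indexes performance without distractors. A minimal Python sketch follows (Python 3.10+ also offers `statistics.linear_regression`, which returns the same slope and intercept); the K values are hypothetical.

```python
from statistics import mean


def ols_slope_intercept(x, y):
    """Least-squares slope and intercept of y regressed on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx


distractors = [0, 2, 4, 6]            # distractor counts paired with two targets
k_values = [1.60, 1.55, 1.50, 1.45]   # hypothetical K values for one participant
slope, intercept = ols_slope_intercept(distractors, k_values)
```

The resulting slopes and intercepts, one pair per participant, would then be compared across groups with Welch's t test.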

The 4×2 ANOVA restricted to IMM with Target Number and Gaming as factors revealed main effects of gaming group [F(1, 104) = 19.2, p = .00003] and of target number [F(3, 104) = 6.4, p = .0005], as well as a significant gaming group by target number interaction [F(3, 104) = 4.4, p = .005], suggesting that the effect of gaming group within the IMM varied with target number. Following Oei and Patterson (2013, 2015), who compared AVGP and NVGP visual capacity in this same task, we ran analyses on just the “two-targets six-distractors” condition and just the “eight-targets no-distractors” condition, as they did. Within the IMM group, AVGP did outperform NVGP on the “two-targets six-distractors” condition [K: AVGP, 1.650 ± 0.118; NVGP, 1.346 ± 0.113; one-sided t test: t(25) = 1.83, p = .04] and on the “eight-targets no-distractors” condition [AVGP, 3.852 ± 0.436; NVGP, 1.685 ± 0.413; t(24.9) = 3.6, p = .0007], confirming enhanced visual memory in AVGP. The equivalent analysis on HMM and LMM showed no significant difference on the “eight-targets no-distractors” condition [t(26.7) = 1.15, p = .13], but there was a trend for LMM to perform better in the “two-targets six-distractors” condition [K: LMM, 1.56 ± 0.09; HMM, 1.30 ± 0.13; one-sided t(20.6) = 1.6, p = .06].

Although it is conventional to use capacity K to characterize individuals’ visual working memory, it has recently been pointed out that using this measure implies a commitment to the underlying slot model of working memory limitations. In this model, a fixed number of items is stored perfectly and any others are forgotten completely (Ma et al., 2014; van den Berg et al., 2012; for reviews of the slot model, see Cowan, 2001; Fukuda, Awh, & Vogel, 2010). This account contrasts with the so-called “noise-based” or “resource” models of visual memory (Bays & Husain, 2008; Ma et al., 2014; van den Berg et al., 2012; Wilken & Ma, 2004), in which performance declines with set size solely because the noise per item increases as set size increases. To make sure that our conclusions did not depend on model assumptions, we reanalyzed the data under the variable-precision model of visual short-term memory (van den Berg et al., 2012). Figure 10 shows the hits (circles) and false alarms (squares) for each of the media multitasking (panels) and gaming (color) groups as a function of the number of targets. Symbols are group averages, and lines represent the model’s best fit of the within-group pooled data.
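The single-probe change detection account commonly used to justify Cowan’s formula (Cowan, 2001) shows why K presupposes a slot model. The derivation below is illustrative and rests on stated assumptions. When N items are to be remembered, the probed item is in memory with probability K/N. A stored item always yields a correct response. Otherwise the observer guesses “change” with rate g. The text does not state which K formula was used in this study. With H the hit rate and F the false alarm rate:

```latex
\begin{aligned}
H &= \frac{K}{N} + \left(1 - \frac{K}{N}\right) g, \\
F &= \left(1 - \frac{K}{N}\right) g, \\
H - F &= \frac{K}{N} \quad\Longrightarrow\quad K = N\,(H - F).
\end{aligned}
```

The guessing terms cancel only because stored items are assumed to be perfect and unstored items are assumed to be entirely absent. Under a resource or variable-precision account, every item is stored with some noise. N(H − F) can still be computed under such an account, but it no longer counts stored items.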

Filter task: Data and model fits of the hit and false alarm rates. Hit and false alarm rates are plotted (circles and squares, respectively) as a function of the number of targets (x-axis), the media multitasking group (i.e., LMM, IMM, or HMM, columns of panels), and the gaming group (i.e., AVGP [empty symbols] and NVGP [filled symbols]). Points are group averages, and error bars indicate 1 SEM. Lines illustrate the model fits.

To assess group differences quantitatively, we fit the pooled data within each subgroup (e.g., only data from the AVGP within the IMM group) or across groups (e.g., combining data across all IMM participants, irrespective of their gaming group). We then computed nested likelihood ratio tests to determine whether the additional parameters required to fit each subgroup separately (e.g., AVGP and NVGP within IMM) improved the fits enough to justify them. As in the previous analyses, we first looked at media-multitasking-related group differences. We found a significant difference between HMM and LMM (D = 19, df = 3, p = .0003, Akaike information criterion [AIC] difference = 13) and between HMM and IMM (D = 22, df = 3, p = .0001, AIC difference = 16), but no reliable difference between IMM and LMM (D = 3.8, df = 3, p = .28, AIC difference = –2.2). Numerically, HMM had the lowest average performance (d' = 1.2), followed by LMM (d' = 1.5) and IMM (d' = 1.6). Next, we looked at gaming-related group differences. As in the previous analyses, we observed a clear difference between AVGP and NVGP within the IMM group (D = 53, df = 3, p = 1.7e-13, AIC difference = 47.1). There was no significant difference between AVGP and NVGP within the LMM group (D = 2.9, df = 3, p = .41, AIC difference = –3.1), and only a small difference within the HMM group (D = 9.4, df = 3, p = .02, AIC difference = 3.4). Numerically, AVGP outperformed NVGP within the IMM group (AVGP, d' = 1.8; NVGP, d' = 1.3), but not among the LMM (AVGP, d' = 1.5; NVGP, d' = 1.5) or HMM (AVGP, d' = 1.1; NVGP, d' = 1.3) participants.
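The nested comparisons above can be reproduced from fitted log-likelihoods with a few lines of standard-library Python. This sketch assumes the usual definitions, D = 2(log L_separate − log L_pooled) and AIC = 2k − 2 log L. It is not the authors' fitting code, and the function names and log-likelihood values are hypothetical. Under these definitions the AIC difference equals D − 2·df, which matches the values reported above (e.g., 19 − 2 × 3 = 13 and 3.8 − 6 = −2.2). Every comparison here has df = 3, so the chi-square tail is computed with the closed form for three degrees of freedom instead of a general statistics library.

```python
from math import erfc, exp, pi, sqrt


def chi2_sf_3df(d):
    """P(X > d) for a chi-square variable with 3 degrees of freedom (closed form)."""
    return erfc(sqrt(d / 2)) + sqrt(2 * d / pi) * exp(-d / 2)


def nested_lrt(loglik_pooled, loglik_separate, extra_params=3):
    """Compare one pooled fit against separate per-subgroup fits.

    Returns (D, p, AIC difference), where D = 2 * (logL_separate - logL_pooled)
    and AIC difference = AIC_pooled - AIC_separate = D - 2 * extra_params.
    Positive AIC differences favour fitting the subgroups separately.
    """
    if extra_params != 3:
        raise ValueError("this sketch only implements the 3-df chi-square tail")
    d = 2 * (loglik_separate - loglik_pooled)
    return d, chi2_sf_3df(d), d - 2 * extra_params


if __name__ == "__main__":
    # Hypothetical log-likelihoods chosen so that D = 19.
    d, p, aic_diff = nested_lrt(loglik_pooled=-100.0, loglik_separate=-90.5)
    print(f"D = {d:.1f}, p = {p:.4f}, AIC difference = {aic_diff:.1f}")
```

For example, `chi2_sf_3df(3.8)` returns roughly .28, in line with the IMM versus LMM comparison.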

Filter task: Discussion

As expected, capacity in this visual memory task increased with the number of targets to be remembered. With regard to media effects, we observed an interaction between media multitasking group and gaming group, again suggesting that the impact of gaming group differs across media multitasking groups. Indeed, when considering the IMM group only, AVGP showed greater visual capacity than NVGP, reflecting enhanced visual memory abilities. This effect seems mostly due to more hits rather than to differences in false alarms (Fig. 10). Overall, then, we replicated previous work suggesting that AVGP have a greater ability to encode targets in memory (Green & Bavelier, 2006b; Oei & Patterson, 2013). A main effect of media multitasking was observed, with HMM performing worse than both IMM and LMM. IMM showed a numerical tendency toward better performance, as measured by either K or d', although this seems mostly driven by the AVGP in that subpopulation. When contrasting HMM and LMM, we found no reliable differences in distractor effects and only modest differences in visual capacity. This contrasts with Ophir et al.’s (2009) report that HMM, unlike LMM, were affected by the number of distractors presented in the display but did not differ across conditions in their ability to encode targets.
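The textbook equal-variance signal detection measure, d' = z(H) − z(FA), connects hit and false alarm rates to a sensitivity index. The d' values reported above may instead have been derived from the model fits, so this sketch is illustrative only. It uses Python’s `statistics.NormalDist`. The helper names are hypothetical, and the log-linear correction for rates of exactly 0 or 1 is one common convention, not a procedure described in this study. The sketch also shows why an advantage carried mostly by hits, with similar false alarm rates, raises sensitivity.

```python
from statistics import NormalDist

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF; needs 0 < p < 1


def loglinear_rate(count, n_trials):
    """Response rate with a log-linear correction so it never equals exactly 0 or 1."""
    return (count + 0.5) / (n_trials + 1)


def d_prime(hit_rate, fa_rate):
    """Equal-variance signal detection sensitivity: z(H) - z(FA)."""
    return _z(hit_rate) - _z(fa_rate)


if __name__ == "__main__":
    # Hypothetical rates: identical false alarms, more hits, so d' goes up.
    print(round(d_prime(0.70, 0.20), 2), round(d_prime(0.80, 0.20), 2))
    # Hypothetical perfect hit count, corrected before taking z.
    print(round(d_prime(loglinear_rate(20, 20), loglinear_rate(4, 20)), 2))
```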

General discussion

The goal of the present study was to investigate the impact of diverse forms of media consumption on cognitive control functions. Previous studies have reported that people who consume many forms of media simultaneously, known as heavy media multitaskers, have a heightened and specific difficulty in handling distracting information relative to people who media multitask less intensively. A different line of research has investigated the effects of action video game play on cognition and has generally reported cognitive benefits. These two groups of participants are therefore interesting candidates for the study of the impact of media on cognition. Moreover, gaming and media multitasking are not mutually exclusive. In fact, because they appear to be associated with opposite effects, failing to control for one while studying the other may lead to improper conclusions about one or both effects. In the present study, we measured cognitive performance on four different tasks requiring cognitive control in the same set of participants, for whom we also recorded self-reported media multitasking activity and action video game experience. Our results demonstrate that it is indeed important to take both of these factors into account. However, this study is purely correlational and is thus agnostic about the causal relationship between these forms of media consumption and cognitive performance. Numerous training studies have shown that playing action video games can cause cognitive benefits, but no study has yet attempted to manipulate the media multitasking habits of randomly selected participants and to assess the cognitive consequences.

In the present study, participants’ performance was measured in tasks that tap proactive cognitive control (the AX-continuous performance task), working memory (the N-back task), cognitive flexibility (task switching), and visual short-term memory (the filter task). For each of these tasks, we asked what differences in cognitive ability are associated with media multitasking and with action video game play, and to what extent these two factors interact.

Media multitasking effects

The same four tasks were used by Ophir and colleagues (2009), who reported HMM to be specifically impaired in the presence of distractors (as compared to LMM). The present results do not fully replicate their findings. In our data, no specific impairment emerged for HMM in conditions containing distractors. Instead, the HMM in our data set appeared to be less efficient than LMM overall: they were slower, less accurate, or both. This difference was significant in the AX-CPT and in the filter task.

Interestingly, most previous studies have focused only on HMM and LMM, ignoring intermediate participants. This choice presumably rests on the assumption that the dependent variable of interest is a monotonic function of media multitasking. In other words, IMM performance was expected to lie somewhere between HMM and LMM performance. Our data contradict this assumption. In the AX-CPT, IMM did indeed perform better than HMM and worse than LMM, but in the N-back and filter tasks, IMM performed best. This pattern may suggest that performance follows an inverted-U-shaped function of media multitasking, with intermediate levels of media multitasking being associated with enhanced cognitive control. However, the inclusion of action video game status sheds a rather different light on this pattern.

Action video game effects

Research on the effects of action video games has repeatedly shown that AVGP outperform NVGP on a number of cognitive dimensions, although some studies have reported no such group differences. On the basis of that literature, one might have expected AVGP to outperform NVGP in all four tasks in this study. Yet the omnibus ANOVAs revealed no main effect of gaming in any of the four tasks. Rather, our results show that AVGP outperformed NVGP in all four tasks, but only when those participants had an intermediate level of media multitasking. Thus, the effects of action video games described in the literature may depend on a variety of individual-difference factors, including media multitasking experience (see also the individual differences in genetic composition examined by Colzato et al., 2014). This study replicated previous reports of attentional and cognitive benefits in action game players, but it did so only in the IMM group, and the main effect of action game play vanished when the whole sample was considered. This pattern of results has interesting implications for several criticisms raised about the field. For instance, some researchers have argued that AVGP outperform NVGP in cognitive tasks because AVGP, but not NVGP, may believe that they have above-average cognitive abilities, and that this belief is what actually drives the observed cognitive benefits (Boot, Blakely, & Simons, 2011; Boot, Simons, Stothart, & Stutts, 2013). Although we did not ask our participants about their expectations, our results nonetheless suggest that this hypothesis is unlikely. Indeed, across our four tasks AVGP outperformed NVGP only within the intermediate group of media multitaskers, showing that being an AVGP is not sufficient for observing the effect.
It is also worth mentioning that HMM did not perform better but, if anything, performed worse. This is despite the fact that HMM have been documented to be quite self-confident in their cognitive abilities (Sanbonmatsu et al., 2013) and showed the highest Mastery scores on the WOFO in the present study. Overall, this pattern of results is inconsistent with the idea that the effects of media on cognitive performance can be accounted for by “expectation effects.”

Media multitasking by action gaming interactions

When considering the relative impacts of media multitasking and action gaming, omnibus ANOVAs indicated significant interactions between gaming and media multitasking status in three of the four tasks (AX-CPT, N-back, and filter tasks). Such interactions highlight the importance of considering participants’ media multitasking status when assessing the impact of other experiential factors, such as video game experience, on performance. Experiments that have sampled AVGP and NVGP from the higher or lower ranges of media multitasking may have difficulty observing group differences (Cain & Mitroff, 2011). Among the three media multitasking groups, HMM performed quite poorly overall, and within the extreme media multitasking groups (HMM and LMM), we saw no effect of playing action video games. Thus, playing action video games appears to be beneficial only in the IMM group, suggesting that any benefit it confers may offer no protection to HMM. This suggestion comes with critical caveats. First, there is as yet no conclusive evidence that the relationship between heavy media multitasking and poor attentional performance is causal, since all research in the domain thus far has been correlational. Second, the numbers of participants in the relevant cells (e.g., AVGP + HMM) were small.

Substantiating these suggestive interaction effects will require much larger samples to reach adequate statistical power. The typical recruitment procedure used in the field (and in the present article) is ill-suited to this aim, given the scarcity of these extreme populations. Indeed, we currently estimate that AVGP and NVGP each represent less than 10% of the student population on US campuses, given our selection criteria (and even fewer if the goal is to match perfectly for sex). Similarly, LMM and HMM, by definition, each represent approximately 16% of the student population on US campuses. Because action gaming and media multitasking behavior are not strongly correlated, the intersection of these extremes is quite rare (i.e., 1%–2% of the population). For such rare populations, recruitment may best be achieved by implementing online versions of these tasks and recruiting participants via crowdsourcing platforms (see, e.g., Shapiro, Chandler, & Mueller, 2013). Beyond increasing certainty about the observed effects, substantially larger samples would also allow more sophisticated statistical tools, such as exploratory and confirmatory factor analysis and structural equation modeling, to be employed. These, in turn, could provide much-needed clarity regarding the sometimes counterintuitive effects of media consumption on attentional control.
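The rarity argument is simple arithmetic. For illustration only, assume that gaming status and media multitasking status are independent; the text says only that they are not strongly correlated. Taking 10% as an upper bound for AVGP prevalence and 16% for HMM, the expected share of AVGP + HMM participants is 0.10 × 0.16 = 0.016, inside the 1%–2% range given above. The sketch below turns that share into the number of people one would need to screen. The target cell size of 20 and the function names are hypothetical, not recommendations from this study.

```python
import math


def joint_prevalence(p_gaming, p_media):
    """Expected share of people in both extreme groups, assuming independence."""
    return p_gaming * p_media


def screening_needed(target_n, prevalence):
    """People to screen so that the EXPECTED number of eligible participants reaches target_n."""
    return math.ceil(target_n / prevalence)


if __name__ == "__main__":
    p = joint_prevalence(0.10, 0.16)  # AVGP upper bound x approximate HMM share
    print(f"joint prevalence ~ {p:.3f}; screen ~ {screening_needed(20, p)} people for 20 AVGP + HMM")
```

This yields only the expected count. A real recruitment plan would screen more people to allow for sampling variability, exclusions and attrition.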

Conclusions