Abstract

Growing evidence suggests that visual information is processed differently in the near-hand space, relative to the space far from the hands. To account for the existing literature, we recently proposed that the costs and benefits of hand proximity may be due to differential contributions of the action-oriented magnocellular (M) and the perception-oriented parvocellular (P) pathways. Evidence suggests that, relative to the space far from the hands, in near-hand space the contribution of the M pathway increases while the contribution of the P pathway decreases. The present study tested an important consequence of this account for visual representation. Given the P pathway’s role in feeding regions in which visual representations of unified objects (with bound features) are formed, we predicted that hand proximity would reduce feature binding. Consistent with this prediction, two experiments revealed signs of reduced feature binding in the near-hand space, relative to the far-hand space. We propose that the higher contribution of the M pathway, along with the reduced contribution of the P pathway, shifts visual perception away from an object-based perceptual mode toward a feature-based mode. These results are discussed in light of the distinction between action-oriented and perception-oriented vision.

One of the most fundamental and ubiquitous aspects of human existence is a continuous reciprocal cycle of perception and action that shapes our daily behaviors. Examples of perception influencing action are common and easily observed; simply closing your eyes while reaching for your coffee cup will show ample evidence of this direction of the perception–action cycle. The effect of action on perception, however, is more subtle, but nevertheless profound. Indeed, numerous experiments have shown that various types of action affect various types of perceptual phenomena; for instance, rotating a crank arm modulates the direction in which the ambiguous motion of flickering lights is perceived (Wohlschläger, 2000), better golf putters perceive larger holes (Witt, Linkenauger, Bakdash, & Proffitt, 2008), and even performing a simple keypress can influence stimulus identification, depending on the shared features of stimulus and response (Müsseler & Hommel, 1997). These studies are just a few instances of how planning or producing an action influences how we perceive information from the environment.

Over the last five years, one particularly interesting and robust effect of action on perception has emerged. Over a series of experiments, Abrams, Davoli, Du, Knapp, and Paull (2008) explored three common visual attention phenomena: visual search, peripheral cueing, and the attentional blink. In one condition, participants performed these tasks with their hands on two response buttons, far away from the visual stimuli, and in a position similar to that of the keyboard responses that overwhelmingly dominate cognitive psychology studies. Critically, in the other condition, the two buttons were placed on either side of the monitor, such that participants’ hands were very near the visual stimuli. The differences brought about by hand posture were striking: Steeper search slopes, less inhibition of return, and greater attentional blinks were found in the near-hand condition. Moreover, the effect of hand position is not limited to visual attention tasks, since Davoli, Du, Montana, Garverick, and Abrams (2010) found reduced Stroop interference at a near-hand position relative to a far-hand position. Indeed, the effect appears in a variety of tasks, since Tseng and Bridgeman (2011) noted better performance on a change detection task with the hands near the display.

In order to account for how hand position affects perception, Gozli, West, and Pratt (2012) hypothesized that positioning both hands near visual stimuli may increase the contribution of the magnocellular (M) visual pathway, while decreasing the contribution of the parvocellular (P) pathway. The M-pathway account was originally envisioned by Previc (1998) and is grounded in the notion that the near-hand space is highly relevant for action (e.g., Abrams et al., 2008). If true, then visual information processing would be biased toward the action-based dorsal pathway, which receives the majority of its input from M channels. Likewise, positioning both hands far from visual stimuli takes the stimuli out of any immediate action space and biases processing toward the perception-based ventral pathway, which receives the majority of its input from P channels. Note, furthermore, that activity in the two pathways is thought to be mutually inhibitory (e.g., Yeshurun, 2004). Gozli et al. (2012) tested these interconnected predictions by taking advantage of the unique perceptual sensitivity of each pathway. Cells along the M pathway are known to have high temporal resolution but poor spatial resolution, whereas cells along the P pathway are known to have low temporal resolution but high spatial resolution (Derrington & Lennie, 1984; Livingstone & Hubel, 1988). Thus, Gozli et al. had participants perform a temporal gap detection task (i.e., flicker vs. continuous presence of a circle) and a spatial gap detection task (i.e., broken circle vs. full circle) with their hands either on the monitor (near hands) or on a keyboard (far hands). Measures of d' supported their hypothesis; participants were more sensitive to temporal gaps in the near-hand condition, and more sensitive to spatial gaps in the far-hand condition. Further support for the visual-pathway account has come from two recent studies. Chan, Peterson, Barense, and Pratt (2013) found that the advantage in identifying rapidly presented low-spatial-frequency images over high-spatial-frequency images reported by Kveraga, Boshyan, and Bar (2007) is exacerbated when the hands are placed near the stimuli. Similarly, Abrams and Weidler (2014) found that processing low-spatial-frequency Gabor patches is faster near the hands than far from the hands, and further showed that this effect can be removed in the presence of diffuse red light, which is known to inhibit M-channel activity (e.g., Breitmeyer & Breier, 1994; West, Anderson, Bedwell, & Pratt, 2010).

In the present study, we are concerned with the possibility that hand position could modulate later stages of visual processing due to the increased M contribution and a decreased P contribution. Particularly, considering that the M and P pathways roughly correspond to the dorsal and ventral visual streams (Livingstone & Hubel, 1988; Ungerleider & Mishkin, 1982) leads us to a specific prediction regarding near-hand and far-hand visual representations. Whereas the ventral pathway plays an essential role in forming representations of complex and multifeatured objects (e.g., Barense et al., 2005; Barense, Gaffan, & Graham, 2007), the dorsal pathway is specialized for action-oriented processing that (a) does not rely on the formation of visual memory (Goodale, Cant, & Króliczak, 2006) and (b) proceeds with feature-based processing without requiring the construction of multifeatured items (e.g., Ganel & Goodale, 2003). Therefore, hand proximity may cause a reduced tendency for forming representations of multifeatured visual objects.

A more recent study by Qian, Al-Aidroos, West, Abrams, and Pratt (2012) provides indirect support for the prediction that object-based visual processing might be reduced near the hands. In this study, participants were presented with small red or blue dots (relevant feature) that appeared in circular black and white checkerboards (irrelevant feature). Importantly, the visually evoked potentials were time-locked to changes in the irrelevant feature, and could therefore reflect observers’ tendency to allocate some processing resources to irrelevant features of the object (Duncan, 1984). Indeed, Qian et al. found that the near-hand condition produced a decrement in the P200 waveform when compared to the far-hand condition. The visual P200 is thought to reflect feature-based processing (e.g., Luck & Hillyard, 1994), and a reduction in response to the irrelevant feature suggests that observers were less inclined to group the relevant and irrelevant features into a unified object representation in the near-hand space, consistent with a reduced P contribution. This finding, in the context of the visual-pathway account of the near-hand effect, forms the starting point for the present investigation.

In the present study, we attempted to directly test for changes in visual object representation as a function of hand proximity. With regard to object representation, perhaps the most influential research on this question has been conducted by Kahneman and Treisman. On the basis of the notion that features are bound into objects through attention (Treisman & Gelade, 1980), Kahneman and Treisman (1984) speculated that the end product of the feature integration process is an “object file,” which is a representation that temporarily stores all of the physical characteristics of an object (e.g., location, shape, size), as well as information such as the relationship between various features. By creating object files, it is possible to maintain the representation of an object when it changes location by simply updating the object file rather than updating every piece of feature-specific information incorporated into the object file. Later, the initial object-file notion was extended by Hommel (1998) to include action-based information, and he termed these bound action–perception representations “event files.” In terms of the present study, our predictions are straightforward; placing the hands near visual stimuli should detrimentally affect the binding of information into object files (Exp. 1) and event files (Exp. 2).

Experiment 1

In developing an experimental test for the notion of object files, Kahneman, Treisman, and Gibbs (1992) introduced the object-specific preview task. The authors reasoned that the effect of a visual prime on a subsequent target should depend, not only on their visual similarity, but also on whether the two are perceived as two presentations of the same object or as two different objects. On the basis of the concept of object files, they predicted a larger preview benefit when the prime and the target are perceived as the same object. In one experiment, a preview display consisted of two placeholders, each containing a letter. After the letters disappeared, the two placeholders moved to new positions, and then one letter appeared in one of the placeholders. Participants were instructed to identify the letter as quickly as possible. The experiment consisted of three types of trials: same-object repetition (a letter reappeared in its original placeholder), different-object repetition (a letter reappeared but in the alternative placeholder), and switch (an altogether new letter appeared in a placeholder). Across different levels of motion duration (fast or slow), preview display duration (20 or 500 ms), and cue position (early or late), response times (RTs) were consistently shorter for same-object repetition than for different-object repetition, by amounts from 13 to 41 ms. These object-specific preview effects, argued Kahneman et al., are evidence of binding the placeholder and the letter into a unified visual representation, an object file. Whereas with same-object repetition observers could rely on the object files that they had already formed during preview, different-object repetition violated the object files and required observers to update those representations, causing slower and less accurate performance.

To test our notion that positioning the hands near visual stimuli will adversely affect object files, we turned to Kahneman et al.’s (1992) object-specific preview effect. The logic of the experiment was fundamentally similar to the original, in that we compared the effects of two prime–target relationships; the two could match either in their shape alone or in objecthood. To simplify the task, we did not manipulate motion duration or preview display duration, and we did not use cues to manipulate location salience. Importantly, the task involved two hand positions: near hands (hands on both sides of the monitor) and far hands (hands on the keyboard in front of the monitor). Our prediction was straightforward; if placing the hands near visual stimuli activates the M pathway and inhibits the P pathway, which impedes the construction of object files, the object-specific preview effect should be smaller in the near-hand condition, and larger in the far-hand condition.

Method

Participants

A group of 16 University of Toronto undergraduate students (13 female, three male; mean age = 18.4 years, SD = 0.7) participated in the experiment in exchange for course credit. Participants reported normal or corrected-to-normal vision, and they were unaware of the purpose of the study. All experimental protocols were approved by the Research Ethics Board of the University of Toronto.

Apparatus and stimuli

The experiment was run in MATLAB (MathWorks, Natick, MA) using the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997), version 3.0.8. The stimuli were presented on a 19-in. CRT monitor set at 1,024 × 768 pixels resolution and an 85-Hz refresh rate. The viewing distance from the monitor was fixed at 45 cm, using a chin/head rest.

Visual stimuli were presented in white against a black background. Recognition stimuli were from a set of 16 distinct symbols (#, @, $, ^, %, ?, >, <, ], ~, +, =, \, |, /, and *), presented inside rectangular placeholders subtending 2º × 2º of visual angle, whose centers deviated by 5º from the screen center.
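The stimulus sizes above are given in degrees of visual angle at a fixed 45-cm viewing distance. As a minimal sketch of how such values map onto physical sizes on the screen, the standard trigonometric relations can be applied as follows. The function names are illustrative and are not taken from the experiment code. Converting further to pixels would require the physical dimensions of the display area, which are not reported here.

```python
import math

VIEWING_DISTANCE_CM = 45.0  # viewing distance reported in the Apparatus section


def centered_extent_cm(angle_deg: float, distance_cm: float) -> float:
    """Physical size of a stimulus centered on the line of sight
    that subtends angle_deg at the given viewing distance."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def offset_from_center_cm(angle_deg: float, distance_cm: float) -> float:
    """Physical distance from the screen center of a point located
    angle_deg away from the line of sight (flat screen assumed)."""
    return distance_cm * math.tan(math.radians(angle_deg))


# 2-degree placeholder side and 5-degree center offset from the text.
placeholder_side_cm = centered_extent_cm(2.0, VIEWING_DISTANCE_CM)
placeholder_offset_cm = offset_from_center_cm(5.0, VIEWING_DISTANCE_CM)

print(f"placeholder side: {placeholder_side_cm:.2f} cm")
print(f"center offset:    {placeholder_offset_cm:.2f} cm")
```

Under these assumptions, a 2º placeholder is roughly 1.6 cm wide and a 5º offset is roughly 3.9 cm from the screen center.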

Procedure

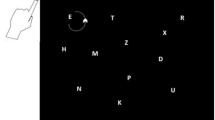

Figure 1 shows the sequence of events in a sample trial. Each trial began with the presentation of the central fixation cross (0.6º × 0.6º) and the horizontally aligned placeholders, displayed for 1,000 ms. Next, two symbols appeared in the placeholders (sample display), remaining for 400 ms, followed by a 200-ms delay. Participants were instructed to remember both symbols for the upcoming recognition test. The two placeholders began to move, with constant velocity, on every trial (in a clockwise or counterclockwise way) until they were vertically aligned. The motion display lasted for 175 ms, and one symbol was immediately presented in one of the placeholders, remaining on display until a response was recorded. Participants were instructed to make speeded responses depending on whether they recognized the symbol from the sample display (“repeat”), regardless of its location, or whether the symbol had not been presented in the sample display (“new item”). For “new-item” and “repeat” responses, respectively, the “Z” and “/?” buttons of a keyboard were used in the far-hand condition, and mouse buttons attached to the left and right sides of the screen in the near-hand condition. Participants were given feedback immediately when pressing the wrong key (“MISTAKE!,” displayed for 2,000 ms).

Fig. 1 (a) Sequence of events on a sample trial in Experiment 1. (b) Response time (RT) data from Experiment 1, graphed as a function of hand position (near vs. far) and trial type (new item, same-frame repetition, or different-frame repetition). Error bars represent within-subjects 95% confidence intervals

Design

Each participant completed ten practice trials and 160 experimental trials. Trials were divided equally into repeat and new-item trials. A repeat trial was one in which the recognition stimulus had been presented in the sample display, regardless of its location. A new-item trial was one in which the recognition stimulus differed from both items presented in the sample display. Repeat trials were further divided equally into same-object and different-object trials. On same-object trials, the repeated item preserved its original frame, whereas on different-object trials, the repeated item switched frames. The location of the recognition stimulus (above vs. below) and the direction of frame motions (clockwise vs. counterclockwise) were randomized and equiprobable.
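The trial structure described above can be sketched as a small trial-list generator. This is an illustrative reconstruction, not the authors' MATLAB code: the function name, the dictionary keys, and the simple random draws for target location and motion direction are assumptions. The mapping from motion direction and final target location to the original frame follows from the geometry of a quarter-turn rotation (a frame starting on the left ends up above fixation after clockwise motion).

```python
import random

# The 16 recognition symbols listed in the Apparatus and stimuli section.
SYMBOLS = ["#", "@", "$", "^", "%", "?", ">", "<",
           "]", "~", "+", "=", "\\", "|", "/", "*"]

# Which original frame (left/right) ends up at the target location
# after a quarter-turn in the given direction.
FRAME_AT_TARGET = {
    ("clockwise", "above"): "left",
    ("clockwise", "below"): "right",
    ("counterclockwise", "above"): "right",
    ("counterclockwise", "below"): "left",
}


def build_trials(n_trials=160, seed=None):
    """Half new-item trials; repeat trials split equally into
    same-object and different-object trials."""
    rng = random.Random(seed)
    n_repeat = n_trials // 2
    n_same = n_repeat // 2
    trial_types = (["new_item"] * (n_trials - n_repeat)
                   + ["same_object"] * n_same
                   + ["different_object"] * (n_repeat - n_same))
    rng.shuffle(trial_types)

    trials = []
    for trial_type in trial_types:
        left, right, unseen = rng.sample(SYMBOLS, 3)  # three distinct symbols
        sample = {"left": left, "right": right}
        motion = rng.choice(["clockwise", "counterclockwise"])
        position = rng.choice(["above", "below"])
        target_frame = FRAME_AT_TARGET[(motion, position)]
        other_frame = "right" if target_frame == "left" else "left"
        if trial_type == "new_item":
            target = unseen
        elif trial_type == "same_object":
            target = sample[target_frame]   # symbol stays with its frame
        else:
            target = sample[other_frame]    # symbol appears in the other frame
        trials.append({"trial_type": trial_type, "sample": sample,
                       "motion": motion, "target_position": position,
                       "target_frame": target_frame, "target": target})
    return trials


trials = build_trials(seed=1)
```

Drawing location and direction independently on each trial makes them equiprobable in expectation only; a fully balanced design would cross them within each trial type instead.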

Results and discussion

Mean recognition RTs from the correct trials (Fig. 1b) were submitted to a 3 × 2 repeated measures analysis of variance (ANOVA) based on trial type (new item, same-object repeat, or different-object repeat), and hand position (near or far). We found a main effect of trial type [F(2, 30) = 5.29, p = .011, $\eta_p^2$ = .260], with same-object trials having shorter RTs than the other two types, but no main effect of hand position [F(1, 15) < 1]. Most importantly, the two-way interaction reached significance [F(2, 30) = 4.71, p = .017, $\eta_p^2$ = .238]. As expected, in the far-hand condition, we observed an advantage of presenting a repeated symbol within the same object [i.e., an advantage of preserving the object file far from the hands, t(15) = 3.71, SE = 14.91, p = .002]. By contrast, in the near-hand condition, there was no advantage to presenting a repeated symbol within the same object [t(15) < 1].

The error data were also submitted to the same 3 × 2 ANOVA, which revealed only a marginally significant effect of trial type [F(2, 30) = 2.82, p = .076, $\eta_p^2$ = .158]. Consistent with the RT findings, a trend was apparent toward better performance with same-object repeat trials (error rate = 3.1%), as compared with different-object repeat trials (error rate = 5.1%).
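For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom through the standard identity $\eta_p^2 = (F \cdot df_{effect}) / (F \cdot df_{effect} + df_{error})$. The sketch below applies it to the F values reported above. The function name is illustrative. Because the inputs are rounded F values, the results may differ from the reported effect sizes in the third decimal place.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F ratios reported for Experiment 1 (RT analysis and error analysis).
reported = {
    "trial type (RT)": (5.29, 2, 30),
    "trial type x hand position (RT)": (4.71, 2, 30),
    "trial type (errors)": (2.82, 2, 30),
}

for label, (f_value, df_effect, df_error) in reported.items():
    value = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: partial eta squared ~ {value:.3f}")
```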

As predicted, positioning the hands near the stimuli had a detrimental effect on forming object representations. Whereas the far-hand condition produced a same-object advantage similar to that found by Kahneman et al. (1992), no such advantage was incurred in the near-hand condition. It is important to note that the method used in Experiment 1 involved changes to visual stimuli across two dimensions only (shape and spatiotemporal continuity). To confirm this conclusion in a more complex setting, a second experiment was conducted using visual stimuli that varied across multiple dimensions.

Experiment 2

Following the initial work on object files, Hommel (1998) suggested that the classic view of an object file could be expanded to include, not only perceptual features of an event, but also features of the observer’s action. He introduced the concept of an event file, to refer to the unified representation of a multifeatured event consisting of perceptual and response features (Hommel, 2004). The underlying notion is that the attention systems evolved to support the action systems, and as such, any mechanism that binds features into objects must contain information about the responses made toward such bound stimuli (Hommel, 1997).

To empirically demonstrate the notion of event files, Hommel (1998) created a new version of the object preview paradigm (now known as the partial repetition cost [PRC] paradigm) that included the participants’ motor responses as a feature, along with the visual features that could vary from preview to target. The PRC paradigm consisted of three key sequential stimuli: a response cue (left or right arrows, indicating which response to prepare), the first stimulus (S1, to which the prepared response was produced), and the second stimulus (S2, which was the identification target). In other words, the response to S1 is a detection response, whereas the response to S2 is a discrimination response (e.g., left key for “O” and right key for “X”). Both S1 and S2 could vary in shape (X or O), color (red or green), and location (above or below fixation). In relation to S1, S2 could repeat or switch along any of the three visual dimensions. In addition, the response feature coinciding with S1 and S2 could also be switched or repeated. Thus, sometimes the two events were exactly the same (full repetition), sometimes they were entirely different (full switch), and sometimes they differed in a subset of features (partial repetition). Three observations have come from the PRC paradigm: First, repeating a single visual feature or response does not have a benefit if other features are switched. In other words, the S1–S2 priming effect is not feature-based, but an effect driven by bound features. Second, repeating a subset of features (i.e., partial repetition) is often equally costly as, or more costly than, a full switch, presumably because partial repetition demands updating an already-existing event file. Third, the pattern of PRC findings indicates that not all features participate in the construction of an event file. For instance, color and shape are often bound only when they are task-relevant (Hommel, 1998).

Since our contention is that a near-hand location impairs object-based processing, we predicted that placing the hands near the stimuli would bring about two atypical patterns in the PRC experiment—namely (1) feature-based priming and (2) a reduced cost of partial repetition. Specifically, if event file representation is reduced near the hands, then the activation of a feature (e.g., the shape “X” in S1) should facilitate processing this feature a second time (e.g., repetition of shape “X” in S2), regardless of the repetition of other features (e.g., color or location). Consequently, main effects of feature repetition should emerge, indicative of priming that is based on the activation of individual features. Importantly, such feature-based priming would eliminate the advantage of the full-switch condition over partial repetition, because now the partial repetition would include more feature-based repetition than would a full switch. In addition, this paradigm would also allow us to examine how hand position affects the binding of the response feature and visual features. If the near-hand condition only affects forming a representation of visual object files, then binding across response and visual features should not be affected. If hand position affects binding across all representations, then the binding between response and visual features should also be weakened.

Method

Participants

A group of 16 new University of Toronto undergraduate students (10 female, six male; mean age = 18.6 years, SD = 1.1) participated in the experiment in exchange for course credit. Participants reported normal or corrected-to-normal vision, and they were unaware of the purpose of the study.

Apparatus and stimuli

The same apparatus was used as in Experiment 1. Visual stimuli were presented against a black background; all of them were presented in white, except for the visual target stimuli. The targets (S1 and S2) were red or green letters “X” and “O” printed in Arial font, fitting inside a 1.6º × 1.6º frame. A target was always presented along the vertical midline, but deviated 3.2º vertically from the horizontal midline. Participants used the “Z” (left response) and “/?” (right response) buttons on a keyboard in the far-hand condition, whereas in the near-hand condition they used buttons on computer mice attached on the left and right sides of the monitor.

Procedure

Figure 2 shows the sequence of events in a sample trial. Each trial began with the presentation of the central fixation cross (0.6º × 0.6º), displayed for 1,000 ms and followed by a blank screen (500 ms). Next, the response cue, consisting of three arrow heads (e.g., “>>>”) appeared at fixation, indicating the correct response to the first target stimulus (S1). The response cue remained on display for 1,000 ms, followed by another blank screen (800–1,200 ms). Next, S1 appeared above or below fixation and remained on display until the first response (R1) was recorded. Participants were instructed to respond using the key indicated by the response cue (e.g., a left response following “<<<”) as soon as they detected S1, regardless of S1’s features. Given a correct R1, a blank screen (500 ms) followed S1 offset, after which the second target stimulus (S2) appeared above or below fixation, remaining on display until the second response (R2) was recorded. A correct R2 depended on the shape of S2 (a right response for “X,” a left response for “O”). The mapping between response locations (left/right) and S2 shapes did not change across the near-hand and far-hand conditions. Participants were given feedback immediately when pressing the wrong key for either R1 or R2 (“MISTAKE!”; 2,000 ms). An error in response to S1 terminated the trial without S2 presentation.

Fig. 2 Sequence of events on a sample trial in Experiment 2

Design

Participants began by performing a block of 30 practice trials (ten trials with R1 alone, ignoring S2; ten trials with R2 alone, ignoring S1; and ten trials with both R1 and R2 combined). All participants performed the task in both the near-hand and far-hand conditions. The two conditions were blocked (each block consisted of 256 trials) and counterbalanced across participants. That is, eight participants performed the practice block and the first half of the test trials with hands near the display, whereas the other eight performed the practice and the first half of the test trials with their hands far from the display. Participants were given the opportunity to take a short break after every 64 experimental trials.

The features of the two events (stimulus location, color, shape, and response) were randomized, and each feature, as well as each combination of features, was equally probable. The primary dependent measure was the speed of the second response, as a function of S1–S2 relationship. The relation between the two events along each dimension gave rise to four factors (Location, Color, Shape, and Response), each having two levels (repetition vs. switch). Accordingly, a main effect of each of these four factors would indicate a priming effect unique to that feature (e.g., a main effect of shape would indicate shape priming). Furthermore, two-way interactions would indicate binding between two features (e.g., a Shape × Location interaction could indicate that repeating shape alone did not facilitate performance if the location was switched). Similarly, three-way and four-way interactions could reveal binding among three and four features, respectively (Hommel, 1998). With the additional factor of Hand Position (near vs. far), the RT data for R2 could be examined as a function of all five factors.
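The coding of each trial into the four repetition factors can be sketched as follows. This is an illustrative reconstruction rather than the authors' analysis code; the function and variable names are assumptions. It uses the shape-to-response mapping stated in the Procedure (right for “X,” left for “O”), so that the second response is fully determined by the shape of S2.

```python
from collections import Counter
from itertools import product

# R2 mapping from the Procedure: right response for "X", left for "O".
R2_FOR_SHAPE = {"X": "right", "O": "left"}


def code_trial(s1, r1, s2):
    """Label each dimension of an S1-S2 pair as 'repeat' or 'switch'."""
    r2 = R2_FOR_SHAPE[s2["shape"]]

    def label(same):
        return "repeat" if same else "switch"

    return {
        "location": label(s1["location"] == s2["location"]),
        "color": label(s1["color"] == s2["color"]),
        "shape": label(s1["shape"] == s2["shape"]),
        "response": label(r1 == r2),
    }


def all_feature_combinations():
    """Every combination of S1 features, cued R1, and S2 features."""
    locations = ("above", "below")
    colors = ("red", "green")
    shapes = ("X", "O")
    responses = ("left", "right")
    for l1, c1, sh1, r1, l2, c2, sh2 in product(
            locations, colors, shapes, responses, locations, colors, shapes):
        s1 = {"location": l1, "color": c1, "shape": sh1}
        s2 = {"location": l2, "color": c2, "shape": sh2}
        yield s1, r1, s2


# Count how many feature combinations fall into each of the 16 design cells.
cell_counts = Counter(
    tuple(code_trial(s1, r1, s2).values())
    for s1, r1, s2 in all_feature_combinations()
)
print(len(cell_counts), set(cell_counts.values()))
```

Under this enumeration there are 128 feature combinations, spread evenly over the 16 cells of the Location × Color × Shape × Response design, which is consistent with every combination being equally probable.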

Results and discussion

R1

Mean RTs for the detection response to S1 (mean ± SE, 398 ± 26 ms) did not vary significantly as a function of hand position, t(15) = 0.43, SE = 22.54, p = .70. Neither did the error rates (1.07% ± 0.2%) differ across the two hand conditions, t(15) = 1.32, SE = .002, p = .21. Overall, these data suggest good performance in the detection component of the task.

R2

Mean RTs for the discrimination response to S2 were submitted to a five-way repeated measures ANOVA, using Hand Position (near or far), Stimulus Location (repeat or switch), Stimulus Shape (repeat or switch), Stimulus Color (repeat or switch), and Response (repeat or switch) as factors (α = .05). Because this ANOVA included 31 tests, we report only the significant findings and those null findings that are relevant to our hypothesis. Among the main effects, only the effect of shape was significant [F(1, 15) = 7.08, p = .018, $\eta_p^2$ = .321], with shorter RTs for repeated shapes than for switched shapes. Importantly, the effect of shape interacted with hand position [F(1, 15) = 5.09, p = .039, $\eta_p^2$ = .253]. As Fig. 3a indicates, repetition of shape had a significant benefit in the near-hand condition, t(15) = 3.13, SE = 7.18, p = .007, whereas it did not have a significant benefit in the far-hand condition, t(15) = 1.18, SE = 6.96, p = .26. Given that repetition of shape alone benefited performance only in the near-hand condition, regardless of other features, this indicates that feature binding may be weaker with the hands near the display.

Fig. 3 Data from Experiment 2. (a) Shape repetition benefit in Experiment 2 (i.e., the response time [RT] disadvantage of shape-switch over shape-repeat trials), graphed as a function of hand position. (b) RT data, graphed as a function of hand position and the shape–location compound variable. In this figure, “full repetition” means that shape and location are both repeated; “partial repetition” means either shape or location, but not both, are repeated; and “full switch” means that shape and location are both switched. (c) Shape repetition benefit, graphed as a function of hand position and the response–location compound variable. In this figure, “full repetition” means that response and location are both repeated; “partial repetition” means that either response or location, but not both, are repeated; and “full switch” means that response and location are both switched. Error bars represent within-subjects 95% confidence intervals

Evidence of shape–location binding was found, in the form of a two-way interaction between shape and location [F(1, 15) = 9.87, p = .007, $\eta_p^2$ = .397]. We found an RT benefit with full shape–location repetition (508 ± 36 ms), relative to either a partial repetition (532 ± 36 ms), t(15) = 4.76, SE = 4.91, p < .001, or a full switch (532 ± 37 ms), t(15) = 2.78, SE = 8.41, p = .014. Surprisingly, however, the full shape–location switch did not have an advantage over partial repetition (t ≈ 0, SE = 5.40, p ≈ 1). This was likely driven by the data from the near-hand condition, although the trend toward a three-way interaction between hand, shape, and location did not reach significance [F(1, 15) = 3.28, p = .090, $\eta_p^2$ = .179]. The pattern found in the far-hand condition resembled typical PRC findings, in that RTs peaked at partial repetition (Fig. 3b). By contrast, with hands near the display, RTs increased linearly with increasing feature switches, peaking at full switch. Indeed, the cost of a full switch over a partial repetition with hands near (9 ± 7 ms) differed significantly from the far-hand condition (–9 ± 7 ms), t(15) = 2.72, SE = 6.76, p = .016, suggesting that location and shape had become separate sources of priming in the near-hand condition, which is indicative of weaker shape–location binding near the hands.

Furthermore, a two-way interaction between shape and response [F(1, 15) = 26.22, p < .001, $\eta_p^2$ = .636] indicated shape–response binding; responses were slower with partial repetition (554 ± 39 ms) than with either a full repetition (494 ± 35 ms), t(15) = 5.56, SE = 10.70, p < .001, or a full switch (511 ± 34 ms), t(15) = 3.83, SE = 11.14, p = .002 (i.e., a partial repetition cost). Interestingly, this shape–response binding was not modulated by hand position (F < 1, for the three-way interaction).

Finally, the only remaining significant effect was a four-way interaction between hand position, shape, location, and response [F(1, 15) = 8.71, p = .010, $\eta_p^2$ = .367; see Footnote 1]. In order to simplify this interaction and to shed further light on the main effect of shape repetition, we used shape repetition benefit as the new dependent variable. In addition, we combined location and response to construct a single, three-level factor of Location–Response (full repetition, partial repetition, and full switch). Figure 3c demonstrates the shape repetition benefit as a function of location–response repetition and hand position. Most importantly, consider the shape repetition benefit with partial repetition of location and response. In the near-hand condition, this benefit was observed (31 ± 10 ms), despite the partial repetition. By contrast, with hands far, the partial repetition completely eliminated the benefit (–5 ± 6 ms). The elimination of the shape repetition benefit suggests that shape did not act as an independent source of priming in the far-hand condition, consistent with reliable shape–location–response binding. By contrast, in the near-hand condition the shape repetition benefit was preserved despite partial repetition of other features, consistent with reduced shape–location–response binding.
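The two derived measures used in this analysis, the three-level compound factor and the shape repetition benefit, can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the trial dictionary keys, and the RT values in `demo_trials` are all hypothetical and are not data from the experiment.

```python
from statistics import mean


def compound_level(first_repeated: bool, second_repeated: bool) -> str:
    """Collapse two repeat/switch factors into one three-level factor."""
    n_repeated = int(first_repeated) + int(second_repeated)
    return {2: "full repetition",
            1: "partial repetition",
            0: "full switch"}[n_repeated]


def shape_repetition_benefit(trials) -> float:
    """Mean RT on shape-switch trials minus mean RT on shape-repeat trials (ms)."""
    switch_rts = [t["rt"] for t in trials if not t["shape_repeated"]]
    repeat_rts = [t["rt"] for t in trials if t["shape_repeated"]]
    return mean(switch_rts) - mean(repeat_rts)


def benefit_by_compound_level(trials) -> dict:
    """Shape repetition benefit within each Location-Response level."""
    groups = {}
    for t in trials:
        level = compound_level(t["location_repeated"], t["response_repeated"])
        groups.setdefault(level, []).append(t)
    return {level: shape_repetition_benefit(g) for level, g in groups.items()}


# Hypothetical RTs (ms), for illustration only.
demo_trials = [
    {"location_repeated": True, "response_repeated": False,
     "shape_repeated": True, "rt": 510},
    {"location_repeated": False, "response_repeated": True,
     "shape_repeated": False, "rt": 540},
    {"location_repeated": True, "response_repeated": True,
     "shape_repeated": True, "rt": 490},
    {"location_repeated": True, "response_repeated": True,
     "shape_repeated": False, "rt": 500},
]

demo_benefits = benefit_by_compound_level(demo_trials)
print(demo_benefits)
```

A positive value means that repeating the shape sped up responses within that level. In practice the benefit would be computed per participant and hand position before averaging, and each level needs at least one shape-repeat and one shape-switch trial, since `mean` raises an error on an empty list.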

It is worthwhile to highlight that the response–shape binding did not seem to depend on hand position. This might indicate a distinction between binding perceptual features and binding across perceptual and motor features. Although hand proximity seems to interfere with forming multifeatured representations of visual objects, it might leave perceptual–motor binding unaffected. A second possibility is that cognitive control over processing of the two highly relevant features (shape and response) obstructs the effect of hand proximity (see Note 2). This seems especially plausible in light of the observations that binding among highly task-relevant features is sensitive to cognitive control (Hommel, Kray, & Lindenberger, 2011; Keizer, Verment, & Hommel, 2010). Regardless, in line with Experiment 1, the findings of this experiment are consistent with an object-based processing tendency in the far-hand condition, and a feature-based processing tendency in the near-hand condition.

General discussion

The present two experiments were designed to test the hypothesis that positioning the hands near visual stimuli, which activates the M pathway and inhibits the P pathway, will impair the binding of visual features into object or event files. Experiment 1 revealed Kahneman et al.’s (1992) object-specific preview effect in the far-hand condition, but no such effect in the near-hand condition. Similarly, Experiment 2 showed a simple priming effect by shape in the near-hand condition, which is rather atypical in the PRC paradigm. By contrast, in the far-hand condition, the priming effect of shape depended on the repetition of other features, which is indicative of stronger feature binding in the space far from the hands.

We propose that the reduced tendency toward feature binding is a high-level consequence of the increased contribution of the M pathway, and the decreased contribution of the P pathway, in processing stimuli near the hands (Abrams & Weidler, 2014; Gozli et al., 2012). Even though our claim with regard to the neural basis of these findings is inferential, the role of the P pathway in feature binding is supported by studies of patients with lesions to the ventral visual pathway. Barense et al. (2007) found that damage to the perirhinal cortex (a region situated late along the ventral stream) impaired patients’ performance on a visual task involving multifeatured objects, whereas performance was intact with single-featured objects (see also Barense et al., 2005). Despite this consistency between our proposal and the lesion studies, the neural basis of the present findings remains open to empirical test.

Although this is the first study to directly show that hand proximity can weaken object-based binding processes, this conclusion is also consistent with earlier studies. For example, Davoli et al. (2010) observed a reduced Stroop effect in the near-hand condition, which also fits well with an increased tendency toward feature-based processing. If observers are better able to process the color dimension without including the word stimulus in the same event file, a reduction in the Stroop effect would be expected. Besides color–word binding, the grouping of letters into word units, which is known to influence the Stroop effect (Reynolds, Kwan, & Smilek, 2010), may have been reduced near the hands in the study reported by Davoli et al. In the study reported by Tseng and Bridgeman (2011), working memory estimates were larger in the near-hand condition. But this could also be attributed to the way in which the authors assessed working memory capacity, that is, in terms of remembering features. It is possible that a tendency toward feature-based processing (away from object-based processing) would increase the capacity to encode features. Had the authors tested visual working memory in a task that required feature binding, perhaps a cost would have been observed for the near-hand condition.

In a recent report, Weidler and Abrams (2014) found that placing the hands near visual stimuli reduced the flanker compatibility effect as well as the task-switching cost, and they proposed that the near-hand posture results in higher cognitive control than does the far-hand posture. Both of these findings, however, are also consistent with the perceptual account proposed here. The flanker compatibility effect has been shown to be sensitive to perceptual binding (Kramer & Jacobson, 1991), and therefore, a reduced tendency to bind targets and flankers into the same object file would also reduce the effect of flankers. Moreover, the task-switching experiment used by Weidler and Abrams required observers to alternate between the perceptual dimensions of color and shape. This task shares an important resemblance to the PRC task, because on each trial, the perceptual event is represented in terms of an integration of a relevant feature, an irrelevant feature, and a response. A reduced tendency to bind these features would reduce the cost of the preceding trial on a current trial. Furthermore, this task could generally benefit from a feature-based processing mode, which would facilitate attending to the relevant feature and ignoring the irrelevant feature on each trial. Indeed, the authors also found a marginally significant overall benefit of hand proximity for this task.

Also consistent with the present study are the findings of Ganel and Goodale (2003), who compared the influence of an irrelevant dimension (length) on judgments of a relevant dimension (width). They found that when participants simply viewed the objects, without acting upon them (corresponding to our far-hand condition), the irrelevant perceptual dimension biased performance, indicative of object-based processing. By contrast, when participants grasped the objects (corresponding to our near-hand condition), the irrelevant dimension no longer biased performance, indicative of feature-based processing.

By showing evidence for the influence of hand posture on vision, this study also adds to the growing literature indicating that one of the underlying mechanisms of such effects is the differentiation of activity in the P and M pathways. Indeed, evidence now suggests that having the hands near visual stimuli improves temporal acuity (Gozli et al., 2012), reduces object substitution masking (Goodhew, Gozli, Ferber, & Pratt, 2013), improves rapid “gist” processing (Chan et al., 2013), and renders performance more susceptible to red diffuse light (Abrams & Weidler, 2014), all of which are associated with the M pathway. Likewise, having the hands at some distance from visual stimuli improves spatial acuity (Abrams & Weidler, 2014; Gozli et al., 2012) and improves object-based processing (present study), both of which rely on the contribution of the P pathway. Although these variations in the contributions of the two pathways may not be the only factor at play, they certainly appear to be one of the factors that must be considered when defining how hand position influences vision.

By specifically demonstrating that hand position can alter how features are bound into objects and events, this study also shows the depth of the interaction between action and perception. Creating, maintaining, and modifying object representations is critical to our successful interactions with the world, since objects are what we ultimately interact with. At a distance, beyond our immediate action space, it is important that objects keep their continuity in the visual field, and binding features together is an important mechanism that supports object perception. But within our action space, it may well be that object consistency is less necessary than providing the action system with featural information useful to the manipulation of objects (e.g., orientation, texture, size, and shape). By biasing activity toward either of the visual pathways, the action and perception systems can interact to recover the visual information needed to generate appropriate behaviors for the task at hand.

Notes

1. Neither the main effect of color nor its interaction with other factors reached significance (cf. Hommel, 1998). Therefore, we discarded the color variable from all post-hoc analyses.

2. We thank Bernhard Hommel for pointing out this possibility.

References

Abrams, R. A., Davoli, C. C., Du, F., Knapp, W. H., III, & Paull, D. (2008). Altered vision near the hands. Cognition, 107, 1035–1047. doi:10.1016/j.cognition.2007.09.006

Abrams, R. A., & Weidler, B. J. (2014). Trade-offs in visual processing for stimuli near the hands. Attention, Perception, & Psychophysics. doi:10.3758/s13414-013-0583-1

Barense, M. D., Bussey, T. J., Lee, A. C. H., Rogers, T. T., Davies, R. R., Saksida, L. M., & Graham, K. S. (2005). Functional specialization in the human medial temporal lobe. Journal of Neuroscience, 25, 10239–10246. doi:10.1523/JNEUROSCI.2704-05.2005

Barense, M. D., Gaffan, D., & Graham, K. S. (2007). The human medial temporal lobe processes online representations of complex objects. Neuropsychologia, 45, 2963–2974.

Brainard, D. H. (1997). The Psychophysics Toolbox. Spatial Vision, 10, 433–436. doi:10.1163/156856897X00357

Breitmeyer, B. G., & Breier, J. I. (1994). Effects of background color on reaction time to stimuli varying in size and contrast: Inferences about human M channels. Vision Research, 34, 1039–1045.

Chan, D., Peterson, M. A., Barense, M. D., & Pratt, J. (2013). How action influences object perception. Frontiers in Psychology, 4, 462. doi:10.3389/fpsyg.2013.00462

Davoli, C. C., Du, F., Montana, J., Garverick, S., & Abrams, R. A. (2010). When meaning matters, look but don’t touch: The effects of posture on reading. Memory & Cognition, 38, 555–562. doi:10.3758/MC.38.5.555

Derrington, A. M., & Lennie, P. (1984). Spatial and temporal contrast sensitivities of neurones in lateral geniculate nucleus of macaque. Journal of Physiology, 357, 219–240.

Duncan, J. (1984). Selective attention and the organization of visual information. Journal of Experimental Psychology: General, 113, 501–517. doi:10.1037/0096-3445.113.4.501

Ganel, T., & Goodale, M. A. (2003). Visual control of action but not perception requires analytical processing of object shape. Nature, 426, 664–667. doi:10.1038/nature02156

Goodale, M. A., Cant, J. S., & Króliczak, G. (2006). Grasping the past and present: When does visuomotor priming occur? In H. Öğmen & B. G. Breitmeyer (Eds.), The first half second: The microgenesis and temporal dynamics of unconscious and conscious visual processes (pp. 51–72). Cambridge, MA: MIT Press.

Goodhew, S. C., Gozli, D. G., Ferber, S., & Pratt, J. (2013). Reduced temporal fusion in near-hand space. Psychological Science, 24, 891–900. doi:10.1177/0956797612463402

Gozli, D. G., West, G. L., & Pratt, J. (2012). Hand position alters vision by biasing processing through different visual pathways. Cognition, 124, 244–250.

Hommel, B. (1997). Toward an action-concept model of stimulus–response compatibility. Advances in Psychology, 118, 281–320.

Hommel, B. (1998). Event files: Evidence for automatic integration of stimulus–response episodes. Visual Cognition, 5, 183–216. doi:10.1080/713756773

Hommel, B. (2004). Event files: Feature binding in and across perception and action. Trends in Cognitive Sciences, 8, 494–500. doi:10.1016/j.tics.2004.08.007

Hommel, B., Kray, J., & Lindenberger, U. (2011). Feature integration across the lifespan: Stickier stimulus–response bindings in children and older adults. Frontiers in Psychology, 2, 268. doi:10.3389/fpsyg.2011.00268

Kahneman, D., & Treisman, A. (1984). Changing views of attention and automaticity. In R. Parasuraman & D. A. Davies (Eds.), Varieties of attention (pp. 29–61). New York, NY: Academic Press.

Kahneman, D., Treisman, A., & Gibbs, B. J. (1992). The reviewing of object files: Object-specific integration of information. Cognitive Psychology, 24, 175–219. doi:10.1016/0010-0285(92)90007-O

Keizer, A. W., Verment, R., & Hommel, B. (2010). Enhancing cognitive control through neurofeedback: A role of gamma-band activity in managing episodic retrieval. NeuroImage, 49, 3404–3413.

Kramer, A. F., & Jacobson, A. (1991). Perceptual organization and focused attention: The role of objects and proximity in visual processing. Perception & Psychophysics, 50, 267–284. doi:10.3758/BF03206750

Kveraga, K., Boshyan, J., & Bar, M. (2007). Magnocellular projections as the trigger of top-down facilitation in recognition. Journal of Neuroscience, 27, 13232–13240.

Livingstone, M., & Hubel, D. (1988). Segregation of form, color, movement, and depth: Anatomy, physiology, and perception. Science, 240, 740–749. doi:10.1126/science.3283936

Luck, S. J., & Hillyard, S. A. (1994). Electrophysiological correlates of feature analysis during visual search. Psychophysiology, 31, 291–308.

Müsseler, J., & Hommel, B. (1997). Blindness to response-compatible stimuli. Journal of Experimental Psychology: Human Perception and Performance, 23, 861–872. doi:10.1037/0096-1523.23.3.861

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10, 437–442. doi:10.1163/156856897X00366

Previc, F. H. (1998). The neuropsychology of 3-D space. Psychological Bulletin, 124, 123–164. doi:10.1037/0033-2909.124.2.123

Qian, C., Al-Aidroos, N., West, G., Abrams, R. A., & Pratt, J. (2012). The visual P2 is attenuated for attended objects near the hands. Cognitive Neuroscience, 3, 98–104.

Reynolds, M., Kwan, D., & Smilek, D. (2010). To group or not to group. Experimental Psychology, 57, 275–291.

Treisman, A. M., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology, 12, 97–136. doi:10.1016/0010-0285(80)90005-5

Tseng, P., & Bridgeman, B. (2011). Improved change detection with nearby hands. Experimental Brain Research, 209, 257–269.

Ungerleider, L. G., & Mishkin, M. (1982). Two cortical visual systems. In D. J. Ingle, M. A. Goodale, & R. J. W. Mansfield (Eds.), Analysis of visual behavior (pp. 549–586). Cambridge, MA: MIT Press.

Weidler, B. J., & Abrams, R. A. (2014). Enhanced cognitive control near the hands. Psychonomic Bulletin & Review. doi:10.3758/s13423-013-0514-0

West, G. L., Anderson, A. K., Bedwell, J. S., & Pratt, J. (2010). Red diffuse light suppresses the accelerated perception of fear. Psychological Science, 21, 992–999. doi:10.1177/0956797610371966

Witt, J. K., Linkenauger, S. A., Bakdash, J. Z., & Proffitt, D. R. (2008). Putting to a bigger hole: Golf performance relates to perceived size. Psychonomic Bulletin & Review, 15, 581–585. doi:10.3758/PBR.15.3.581

Wohlschläger, A. (2000). Visual motion priming by invisible actions. Vision Research, 40, 925–930. doi:10.1016/S0042-6989(99)00239-4

Yeshurun, Y. (2004). Isoluminant stimuli and red background attenuate the effects of transient spatial attention on temporal resolution. Vision Research, 44, 1375–1387. doi:10.1016/j.visres.2003.12.016

Author note

This study was supported by a Discovery grant from the Natural Sciences and Engineering Research Council of Canada (NSERC) to J.P., and by a postgraduate NSERC scholarship awarded to D.G.G.

Cite this article

Gozli, D.G., Ardron, J. & Pratt, J. Reduced visual feature binding in the near-hand space. Atten Percept Psychophys 76, 1308–1317 (2014). https://doi.org/10.3758/s13414-014-0673-8

DOI: https://doi.org/10.3758/s13414-014-0673-8