Abstract

Similarities have been observed in the localization of the final position of moving visual and moving auditory stimuli: Perceived endpoints that are judged to be farther in the direction of motion in both modalities likely reflect extrapolation of the trajectory, mediated by predictive mechanisms at higher cognitive levels. However, actual comparisons of the magnitudes of displacement between visual tasks and auditory tasks using the same experimental setup are rare. As such, the purpose of the present free-field study was to investigate the influences of the spatial location of motion offset, stimulus velocity, and motion direction on the localization of the final positions of moving auditory stimuli (Experiments 1 and 2) and moving visual stimuli (Experiment 3). To assess whether auditory performance is affected by dynamically changing binaural cues that are used for the localization of moving auditory stimuli (interaural time differences for low-frequency sounds and interaural intensity differences for high-frequency sounds), two distinct noise bands were employed in Experiments 1 and 2. In all three experiments, less precise encoding of spatial coordinates in paralateral space resulted in larger forward displacements, but this effect was superseded by the underestimation of target eccentricity in the extreme periphery. Furthermore, our results revealed clear differences between visual and auditory tasks. Displacements in the visual task were dependent on velocity and the spatial location of the final position, but an additional influence of motion direction was observed in the auditory tasks. Together, these findings indicate that the modality-specific processing of motion parameters affects the extrapolation of the trajectory.

Location cues in moving objects change at each point in time. The visual system senses motion from the pattern of displaced retinal image features (Albright & Stoner, 1995), whereas the auditory system is provided with dynamically changing interaural time or interaural intensity differences (Middlebrooks & Green, 1991). The analysis of these cues requires highly developed neuronal circuits that enable us to integrate motion features and to perceive dynamic aspects of our environment.

A number of studies have dealt with several effects that are specific either to motion onset (the Fröhlich effect: Getzmann, 2005b; Kerzel & Gegenfurtner, 2004; Müsseler & Aschersleben, 1998), to a time point within the trajectory (the flash-lag effect: Alais & Burr, 2003; Bachmann, Murd, & Põder, 2012; Linares, López-Moliner, & Johnston, 2007; Nijhawan, 2001; Vreven & Verghese, 2005), or to the final position of motion (representational momentum: Freyd & Finke, 1984; Getzmann, Lewald, & Guski, 2004; Hubbard, Kumar, & Carp, 2009; Mateeff & Hohnsbein, 1988).

Representational momentum describes a forward displacement at motion offset: The final position of motion is typically overestimated, that is, remembered as lying farther in the direction of motion. Thus far, the most detailed descriptions of this motion-specific effect have been provided for the visual domain (e.g., Freyd & Finke, 1984; Hayes & Freyd, 2002; Hubbard & Motes, 2002; Kerzel, 2002; Maus & Nijhawan, 2009). Representational momentum in spatial hearing was first described by Getzmann et al. (2004), who observed a forward displacement of the final position of a sound source moving through space. This was comparable to what had previously been reported in the perception of moving visual stimuli. Getzmann (2005a) thus suggested that representational momentum is a phenomenon that applies to motion perception in general.

To date, the underlying mechanisms of representational momentum are still a matter of debate. Several theories considering low-level sensory processes, higher-level cognitive processes, and network models were critically examined by Hubbard (2010). At cognitive levels, the effect was initially explained by internalized principles of physical rules (Freyd, 1987). The physical-momentum hypothesis states that a moving object cannot halt immediately, and therefore the representation of perceived motion is briefly sustained following motion offset. More recent studies have discussed the observed forward displacement in terms of the concept of mental extrapolation of the trajectory at higher cognitive levels (Hubbard, 2005; Hubbard & Bharucha, 1988; Taya & Miura, 2010). That is, when a moving object vanishes abruptly, the predicted course of target motion is beyond the actual position of the trajectory’s termination point, resulting in mislocalization farther in the direction of motion. The crucial role of higher cognitive mechanisms in the emergence of the representational momentum effect has been supported by the findings of an fMRI study that revealed the involvement of prefrontal areas (Rao et al., 2004). In contrast, low-level theories explain forward displacements with mechanisms that occur at the perceptual level. For instance, it has been proposed that ocular tracking of target motion induces an overshoot of the final position (Kerzel, 2000; Kerzel, Jordan, & Müsseler, 2001). Furthermore, since no forward displacement was observed when a fixation point was used during stimulus presentation, Kerzel (2005) argued that a pursuit of the target motion is required in order to predict the course of motion. However, several studies that employed visual stimuli have provided evidence against such an explanation (see Hubbard, 2005, for a review). 
For auditory motion as well, Getzmann (2005a) reported mislocalization of the final position, irrespective of visual fixation or target pursuit.

Far better understood than the underlying mechanisms of representational momentum are the influences of target-, display-, context-, and observer-related characteristics on the magnitude of displacement. Such characteristics are, for example, target velocity, attention, landmarks, or response measure (see Hubbard, 2005, for a review).

To date, the influence of these characteristics on the magnitude of displacement has mainly been investigated separately for the visual and auditory modalities, and direct comparisons between the two modalities are rare. The main goal of the present study was to compare the influences of different target characteristics (i.e., various motion parameters) on visual and auditory localization performance using the same experimental setup and response measure. To this end, three experiments were conducted in which either moving visual or moving auditory targets were presented in the free field, while participants maintained fixation at a central location. The participants were asked to indicate the final position of moving targets by using a pointing device, and the moving stimuli were systematically varied in terms of the spatial location of their final position, motion direction, and velocity. We extended the range of spatial locations of the final position to the extreme periphery, which allowed for the evaluation of localization performance across the entire frontal field. The employed stimulus velocities varied from 13°/s to 160°/s.

To investigate whether binaural cues have an influence on the auditory representational-momentum effect, low-frequency (Exp. 1: 250–1000 Hz) and high-frequency (Exp. 2: 2000–8000 Hz) noise bursts were employed as the stimuli. In the free field, localization of moving low-frequency sound sources is based on a dynamic comparison of interaural time differences, whereas dynamically changing interaural intensity differences are cues for the localization of moving high-frequency sound sources (Middlebrooks & Green, 1991). Both noise bands were matched in consideration of the logarithmic frequency scaling on the basilar membrane, so that bursts in the respective noise bands activated sections of equal length on the basilar membrane.
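One simple way to read the matching of the two noise bands on a logarithmic frequency scale is to compare their widths in octaves. The sketch below does only that; treating "equal length on the basilar membrane" as "equal width in octaves" is an assumption made here for illustration, and the function name is hypothetical.

```python
import math

def octave_width(f_low_hz: float, f_high_hz: float) -> float:
    """Bandwidth in octaves: log2 of the ratio of the upper to the lower band edge."""
    return math.log2(f_high_hz / f_low_hz)

# Band edges as given in the text.
low_band_octaves = octave_width(250.0, 1000.0)    # Exp. 1
high_band_octaves = octave_width(2000.0, 8000.0)  # Exp. 2

print(low_band_octaves, high_band_octaves)
```

Under this reading, both bands span the same number of octaves, which is consistent with the stated matching.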

In the present study, we tested the hypothesis that the extrapolation of trajectory is affected by the processing of motion parameters, such as the accuracy in detecting positional changes of the moving stimulus and the encoding of motion direction and motion velocity. If the processing of these target characteristics is a crucial factor, then the magnitudes of displacement of the final position should vary as a function of the employed motion parameters in both the visual and auditory tasks. We presented above-threshold stimuli in both modalities to test the representational-momentum effect under optimal stimulus conditions. The participants’ performance should thus be dependent on intrinsic position uncertainty (Michel & Geisler, 2011)—that is, inherent limitations in the processing of a stimulus that result, for example, from the spatial resolution of a sensory modality.

Previous studies in which position uncertainty was induced either by the blurring of target intensity and contrast (Fu, Shen, & Dan, 2001) or by peripheral stimulus presentation (Kanai, Sheth, & Shimojo, 2006) have shown that less accurate positional information results in larger forward displacements. On the basis of the latter findings, we hypothesized that less precise encoding of spatial coordinates in paralateral and lateral space leads to greater position uncertainty and should be reflected in an increase in the magnitude of forward displacement.

Furthermore, we hypothesized that fundamental differences in the encoding of the visual space and the acoustic space would affect the extrapolation of the trajectory differently. Visual motion perception is based on a direct, topographically organized representation, whereas the auditory system infers motion indirectly by calculating location-dependent time and intensity differences between the two ears (Wilson & O’Neill, 1998). Previous studies have shown that both the visual system (Finlay, 1982; To, Regan, Wood, & Mollon, 2011) and the auditory system (Grantham, 1986) are most accurate in tracking motion parameters in the central field, and that this accuracy decreases in both modalities when attempting to track moving targets in paralateral and lateral space. However, accuracy across space is not comparable between the two modalities (Perrott, Ambarsoom, & Tucker, 1987; Perrott, Saberi, Brown, & Strybel, 1990) because visual resolution is superior to auditory resolution in central space, but declines more severely than auditory resolution toward lateral space. That is, the visual function crosses below the shallower slope of the auditory function.

Additionally, differences between the two modalities in the preference for any motion direction and/or any velocity should be reflected in distinct magnitudes of displacement.

General method

Participants

The participants were volunteers recruited from the electronic bulletin board of the University of Leipzig (for further details, see the descriptions for each experiment). They were naïve as to the purpose of the study, and each participant took part in no more than one of the three experiments. All participants gave informed written consent and were compensated for taking part in the study. This study conformed to the Code of Ethics of the World Medical Association and was approved by the local ethics committee of the University of Leipzig.

Apparatus and stimuli

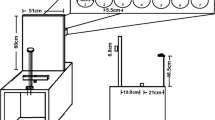

The experiments were conducted in an anechoic, sound-attenuated free-field laboratory (45 m², Industrial Acoustics Company [IAC], Niederkrüchten, Germany). Forty-seven broadband loudspeakers (Visaton, FRS8 4 W, Haan, Germany) in steps of 4.3° and 188 white light-emitting diodes (LEDs) in steps of 1° were mounted in an azimuthal, semicircular array, covering a plane from −98° (to the left) to +98° (to the right; see Fig. 1). In combination with 204 infrared (IR)-sensitive phototransistors that were arranged in steps of 1°, the LED array was used to provide visual feedback on the angular positions pointed to by the participants. A customized IR torch (Solarforce L2 with a 3-W NVG LED, Fulidat Electronics Limited, Kowloon, Hong Kong) served as a pointing device. The subtended angle of the IR-light beam covered a maximum of eight IR-sensitive phototransistors. Online calculation of the mean position across all activated phototransistors led to a flashing of the corresponding LED as visual feedback in all three experiments.
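The online feedback step described above (mean position across the activated phototransistors, then flashing the corresponding LED) can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code: the function name is hypothetical, and it assumes that sensors and LEDs share a 1° grid at integer angles, which simplifies the actual array.

```python
import math

def feedback_led_angle(active_sensor_angles_deg):
    """Return the LED angle (degrees) nearest to the mean of the activated sensors.

    Assumption: sensors and LEDs sit at integer angles on a shared 1-degree grid.
    """
    if not active_sensor_angles_deg:
        return None  # no phototransistor activated, so no feedback LED
    mean_angle = sum(active_sensor_angles_deg) / len(active_sensor_angles_deg)
    # Round half up to the nearest whole degree (avoids banker's rounding).
    return math.floor(mean_angle + 0.5)

# Hypothetical reading: the beam hits five neighboring sensors around 30 degrees.
print(feedback_led_angle([28, 29, 30, 31, 32]))
```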

Experimental setup: Array of 47 loudspeakers and 188 LEDs (dots in front of the loudspeaker symbols) mounted in an azimuthal, semicircular arrangement at a distance of 2.35 m from the participant’s head. Locations in the left and right hemifields are denoted by negative and positive signs, respectively. Arrows indicate the trajectories of the auditory or visual stimuli (black indicates trajectories toward the midline, and gray, trajectories toward the periphery). The final positions of moving stimuli are shown by black loudspeaker and LED symbols. Note that for the trajectories terminating at 30° and 47°, the stimuli moved toward the final position from either side (i.e., either toward the midline or toward the periphery).

The participants were seated in a comfortable, fixed chair at a constant distance of 2.35 m from the semicircular array. The loudspeakers and LEDs were hidden behind acoustically transparent gauze, which did not affect the visibility of the LEDs. An IR camera in the test chamber allowed for the monitoring of the participants’ performance during the experimental sessions. The timing and order of the stimulus presentation, as well as the recording of the indicated angular positions, were controlled by custom-written MATLAB scripts (R2007b, The MathWorks Inc., Natick, USA). The auditory and visual stimuli were digitally generated using RPvdsEx (real-time processor visual design studio, Tucker Davis Technologies [TDT]) and delivered to two multichannel signal processors (RX8, System3, TDT). A more detailed description of the experimental setup and the calibration of the loudspeakers is given in Schmiedchen, Freigang, Nitsche, and Rübsamen (2012).

The auditory stimuli were narrow-band Gaussian noise bursts centered at 500 Hz (Exp. 1: 250–1000 Hz; low frequency) and at 4000 Hz (Exp. 2: 2000–8000 Hz; high frequency). These two distinct noise bands were employed in order to examine whether auditory performance is affected by the types of dynamically changing binaural cues (interaural time or intensity differences) that are used for the localization of moving sound sources.

Moving auditory stimuli were generated by the successive activation of adjacent loudspeakers. Each trial was created afresh. To obtain a smooth and continuous motion, the ratio of sound intensities between two adjacent loudspeakers was adjusted by linear crossfading of the output voltage. The stimuli were presented at 40 dB SL (sensation level). To minimize the probability that localization performance would be affected by adaptation to loudness-related localization cues, a level roving (variability in sound intensity around presentation level) of ±3 dB was employed between trials. Moving visual stimuli were simulated by the successive activation of adjacent LEDs (luminance: 2.5 lux). The small distance of 1° between two adjacent LEDs was sufficient to induce an apparent motion percept.
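The linear crossfade between two adjacent loudspeakers can be sketched as a pair of voltage gains that depend on where the virtual source lies between them. This is an illustrative sketch only: the function name and the choice to express position relative to the nearer-left loudspeaker are assumptions, while the 4.3° spacing comes from the apparatus description.

```python
def crossfade_gains(position_deg, left_speaker_deg, spacing_deg=4.3):
    """Linear voltage gains for two adjacent loudspeakers.

    position_deg      -- current angle of the virtual source
    left_speaker_deg  -- angle of the loudspeaker on the left of the pair
    spacing_deg       -- angular distance between adjacent loudspeakers
    Returns (gain_left, gain_right), which always sum to 1.
    """
    fraction = (position_deg - left_speaker_deg) / spacing_deg
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("position must lie between the two loudspeakers")
    return 1.0 - fraction, fraction

# Halfway between the two loudspeakers, both receive half the voltage.
print(crossfade_gains(2.15, 0.0))
```

As the source moves from one loudspeaker to the next, the left gain falls linearly from 1 to 0 while the right gain rises from 0 to 1.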

The stimuli traveled an angular range of either 38° or 98° in the horizontal plane. Stimulus durations varied among 0.25, 0.5, 1.0, 2.0, and 3.0 s (including 10-ms rise and decay times). The systematic variation of stimulus velocities as a function of stimulus duration is given for 38° stimuli in Table 1. The final positions of the moving stimuli were located at −8°, −30°, −47°, −68°, −85°, +8°, +30°, +47°, +68°, or +85°. The black loudspeaker and LED symbols in Fig. 1 illustrate the spatial locations of the final positions, and arrows indicate the corresponding trajectories, which were divided into two groups: motion toward the midline and motion toward the periphery.

The final positions of trajectories toward the midline were located at ±8°, ±30°, or ±47°, and the trajectories toward the periphery terminated at ±30°, ±47°, ±68°, or ±85°. The termination points located at ±30° and ±47° were used for trajectories toward both the midline and the periphery (Table 2). A total of 70 moving stimulus combinations traveling an angular range of 38° were presented during each test session. Another 30 stimulus combinations with trajectories covering an angular range of 98° (+68° to –30°, –68° to +30°, +51° to –47°, –51° to +47°, +30° to –68°, and –30° to +68°) were randomly interspersed to make the total lengths of the trajectories unpredictable. Responses to the latter stimuli were not considered in the analysis.
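The counts of 70 and 30 stimulus combinations follow from crossing the trajectories with the five stimulus durations. The enumeration below is a sketch; the variable names are hypothetical, and it assumes that each of the six 98° trajectories was presented at all five durations, which the text implies but does not state outright.

```python
from itertools import product

DURATIONS_S = (0.25, 0.5, 1.0, 2.0, 3.0)

# Eccentricities of the final positions, per motion direction (from the text).
ENDPOINTS_DEG = {
    "toward_midline": (8, 30, 47),
    "toward_periphery": (30, 47, 68, 85),
}

# (start, end) of the 98-degree filler trajectories listed in the text.
FILLER_TRAJECTORIES_DEG = (
    (68, -30), (-68, 30), (51, -47), (-51, 47), (30, -68), (-30, 68),
)

# Analyzed 38-degree conditions: endpoint x hemifield x duration, per direction.
analyzed = [
    (sign * eccentricity, direction, duration)
    for direction, eccentricities in ENDPOINTS_DEG.items()
    for eccentricity, sign, duration in product(eccentricities, (-1, 1), DURATIONS_S)
]

# Filler conditions: trajectory x duration (assumed full crossing).
fillers = [
    (start, end, duration)
    for (start, end), duration in product(FILLER_TRAJECTORIES_DEG, DURATIONS_S)
]

print(len(analyzed), len(fillers))
```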

Furthermore, stationary stimuli at a duration of 0.5 s (in Exp. 1, noise bursts at 250–1000 Hz; in Exp. 2, noise bursts at 2000–8000 Hz; in Exp. 3, flashes at 2.5 lux) were presented from spatial locations that matched the ten different final positions of the trajectories.

Procedure

The experimental protocol (including the recording of hearing thresholds, the participants’ task, and the pointing procedure) was adapted from our previous localization study (Schmiedchen et al., 2012). Participants were tested in complete darkness. Prior to Experiments 1 and 2, the individual hearing thresholds for the moving low-frequency or moving high-frequency auditory stimuli were obtained for each participant in order to adjust the presentation level during the tests to 40 dB SL. Starting with an initial sound level of 63 dB SPL, the participants indicated in a one-up/one-down procedure (step size: 2.5 dB) whether they heard a sound in the left hemifield moving from –38° to 0°. When they were confident of their hearing threshold—that is, the minimum sound pressure level that was required in order to detect the moving stimulus—participants terminated the run by a buttonpress. Individual detection thresholds for moving visual stimuli were not determined prior to Experiment 3; the luminance of the visual stimuli was constant for each participant.
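A one-up/one-down track with the stated starting level and step size can be sketched as below. The mapping of responses to level changes (down after "heard", up after "not heard") is the usual convention for detection tasks and is assumed here; the text does not specify how the threshold was read off the track, so the sketch takes the threshold as a given input. All names are hypothetical.

```python
def staircase_levels(responses, start_db_spl=63.0, step_db=2.5):
    """Return the sequence of presented levels for a one-up/one-down track.

    responses -- iterable of booleans, True if the participant heard the sound.
    The level is lowered after 'heard' and raised after 'not heard' (assumed rule).
    """
    levels = [start_db_spl]
    for heard in responses:
        change = -step_db if heard else step_db
        levels.append(levels[-1] + change)
    return levels

def presentation_level_db_spl(threshold_db_spl, sensation_level_db=40.0):
    """Sensation level is defined relative to the individual threshold."""
    return threshold_db_spl + sensation_level_db

# Hypothetical run: heard twice, then missed once.
print(staircase_levels([True, True, False]))
print(presentation_level_db_spl(20.5))
```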

All participants completed a practice run consisting of 20 stimuli in order to familiarize themselves with the task and the IR pointing device. The experiments were divided into ten blocks with ten moving stimulus combinations per block (seven stimulus combinations traveling an angular range of 38°, and three stimulus combinations traveling an angular range of 98°). Each stimulus combination was repeated three times. The stimuli within an experimental block were presented in randomized order. Additionally, the order of the ten experimental blocks was counterbalanced across participants.

The participants were instructed to look straight ahead during stimulus presentation and were asked not to pursue the moving stimuli with their eyes or with their head. A fixation point was not provided, to ensure that participants could not make use of it as a reference for the midline position in the darkened test chamber and that it would not bias localization of the final position of the moving stimuli, as had previously been demonstrated by Hubbard and Ruppel (1999), who investigated the effect of a stationary landmark.

The participants’ task was to indicate the final position of moving targets by pointing to the perceived position with a handheld IR torch after the visual or the auditory stimulus had disappeared. During pointing, the participants were allowed to move their head and to elevate their hand in order to facilitate the behavioral response. Following the successful registration of the indicated angular position, an intertrial interval (ITI) of 3.0 s allowed participants to reorient to straight ahead.

In a control block, the participants were asked to indicate the perceived locations of stationary auditory stimuli or stationary visual stimuli that corresponded to the final positions of the trajectories. Stationary stimuli from each spatial location were repeated three times within the control block, and the stimuli were presented in a randomized order.

Data analysis

Localization performance for moving stimuli was analyzed only for trajectories covering an angular range of 38°. The difference between the indicated final position and the actual final position of motion was calculated for each stimulus condition and each participant. To assess whether the target locations for moving and stationary stimuli were either over- or underestimated, the actual final position was subtracted from the indicated final position. For leftward motion and stationary stimuli in the left hemifield, the resulting values were multiplied by the factor −1. Doing so ensured that the displacement would always be positive if targets were overestimated, and negative if targets were underestimated. Stimulus repetitions were then averaged for each participant. In all three experiments, participants’ performance did not differ between the two hemifields, so data were collapsed for the respective locations in the left and right hemifields.
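The sign convention described above can be sketched as follows. The function names and the example values are hypothetical; only the rule itself (indicated minus actual position, multiplied by −1 for leftward motion and for stationary stimuli in the left hemifield) is taken from the text.

```python
from statistics import fmean

def signed_displacement(indicated_deg, actual_deg, flip_sign):
    """Displacement that is positive for overestimation and negative for underestimation.

    flip_sign -- True for leftward motion, or for stationary stimuli
                 in the left hemifield (the cases multiplied by -1 in the text).
    """
    raw = indicated_deg - actual_deg
    return -raw if flip_sign else raw

def mean_displacement(trials):
    """Average the repetitions of one condition for one participant.

    trials -- list of (indicated_deg, actual_deg, flip_sign) tuples.
    """
    return fmean(signed_displacement(*trial) for trial in trials)

# Hypothetical leftward motion ending at -30 deg, pointed to at -35 deg:
# the raw error is -5 deg, which becomes a forward displacement of +5 deg.
print(signed_displacement(-35.0, -30.0, flip_sign=True))
```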

All statistical analyses were based on pointing errors. To establish whether over- or underestimation occurred, one-sample, two-tailed t tests against zero were performed for each stimulus condition. Comparisons between stimulus conditions were performed using repeated measures analyses of variance (ANOVAs).
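The test statistic for a one-sample t test against zero can be sketched with the standard library alone. This is an illustration with made-up numbers, not the authors' analysis code; the two-tailed p value would additionally require the t distribution (for example via `scipy.stats.ttest_1samp`), which is left out here to keep the sketch dependency-free.

```python
import math
from statistics import fmean, stdev

def one_sample_t(values, popmean=0.0):
    """Return (t, df) for a one-sample t test of the mean against popmean."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    standard_error = stdev(values) / math.sqrt(n)  # stdev uses n - 1
    t_value = (fmean(values) - popmean) / standard_error
    return t_value, n - 1

# Hypothetical per-participant mean displacements (degrees) for one condition.
t_value, df = one_sample_t([1.0, 2.0, 3.0])
print(t_value, df)
```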

The data for each experiment were submitted to three separate ANOVAs. First, a 3 × 5 (Spatial Location of the Final Position [8°, 30°, or 47°] × Stimulus Velocity [13°/s, 20°/s, 40°/s, 80°/s, or 160°/s]) ANOVA was conducted in order to evaluate localization performance for stimuli traveling toward the midline. A second, 4 × 5 (Spatial Location of the Final Position [30°, 47°, 68°, or 85°] × Stimulus Velocity [13°/s, 20°/s, 40°/s, 80°/s, or 160°/s]) ANOVA was performed for stimuli traveling toward the periphery. Finally, to further evaluate the influence of motion direction on localization performance, the data obtained at 30° and 47° were submitted to a 2 × 2 × 5 (Spatial Location of the Final Position [30° or 47°] × Motion Direction [toward the periphery or toward the midline] × Stimulus Velocity [13°/s, 20°/s, 40°/s, 80°/s, or 160°/s]) ANOVA, as both trajectories toward the midline and trajectories toward the periphery terminated at 30° and 47°.

Finally, the results of Experiments 1–3 were compared in three mixed factorial ANOVAs. The same factorial designs as described above were used, including the between-subjects factor Stimulus Type (moving low-frequency auditory stimuli, moving high-frequency auditory stimuli, or moving visual stimuli).

Experiment 1: Localization of moving low-frequency auditory stimuli

In the first experiment, participants were presented with moving low-frequency auditory stimuli whose localization in space is based on the analysis of interaural time differences.

Method

A group of 22 volunteers participated in this experiment (15 female, seven male, three left-handed; mean age: 23.9 years; age range 20–30 years). None of them reported hearing difficulties or any other neurological disorder. The auditory stimuli were low-frequency noise bursts (250–1000 Hz).

Results

One-sample, two-tailed t tests revealed that participants significantly overestimated the final positions of moving low-frequency auditory stimuli (Fig. 2A) at central (8°), paracentral (30°), and paralateral (47°) locations. The magnitude of forward displacement was reduced at lateral locations (68° and 85°), and in the outermost periphery (85°), participants even tended to underestimate the actual endpoints. Also, the location of laterally presented stationary auditory stimuli was underestimated, whereas the localization of stationary auditory stimuli was more accurate at central and paralateral positions (Fig. 2A, open symbols). In all cases, however, the deviation from the target location in the localization of moving auditory stimuli was less negative than that of stationary auditory stimuli.

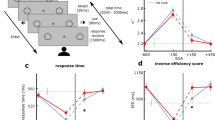

Mean displacements of the final positions of (A) moving low-frequency auditory stimuli, (B) moving high-frequency auditory stimuli, and (C) moving visual stimuli. The data were collapsed across the two hemifields and are plotted as a function of the spatial location of the final position of motion, stimulus velocity, and motion direction. The displacements are given as differences between the actual target location and the indicated location. Mean values differing from zero (one-sample, two-tailed t tests, p < .05) are denoted by asterisks (*). Gray symbols indicate trajectories toward the periphery, and black symbols indicate trajectories toward the midline. Localization performance can be taken from the direction of the deviation (arrows on the right side of the graph). That is, for gray symbols, mean values in the panel “toward the periphery” denote forward displacements, and mean values in the panel “toward the midline” denote backward displacements. For black symbols, mean values in the panel “toward the midline” denote forward displacements, whereas mean values in the panel “toward the periphery” denote backward displacements. Additionally, the participants’ performance in localizing stationary stimuli that matched the final positions of the trajectories is indicated by open symbols. The lower and upper error bars represent the 25th and the 75th percentiles, respectively. Data for the localization of stationary visual stimuli are taken from Freigang et al., manuscript in preparation.

The repeated measures ANOVA for stimuli moving toward the midline (Fig. 2A, black symbols) revealed that the magnitude of overestimation of the final position was largest for the fastest velocity and decreased with the slowing of stimulus velocity [F(4, 84) = 16.19, p < .001, η² = .44]. The gradual decrease of displacements as a function of decreases in stimulus velocity was more pronounced when the stimuli terminated at 30° and 47° rather than at the central location of 8° [F(8, 168) = 2.43, p = .042, η² = .10].
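The degrees of freedom and effect size reported for the main effect of stimulus velocity can be cross-checked from the F ratio. The sketch below assumes that the reported effect size is partial eta squared, which the text does not state explicitly; the function names are hypothetical.

```python
def within_subject_error_df(n_participants, df_effect):
    """Error degrees of freedom for a fully within-subject effect."""
    return (n_participants - 1) * df_effect

def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Main effect of stimulus velocity, Experiment 1, motion toward the midline:
# 22 participants, five velocities (df_effect = 4), F = 16.19.
df_error = within_subject_error_df(22, 4)
effect_size = partial_eta_squared(16.19, 4, df_error)
print(df_error, round(effect_size, 2))
```

Under this assumption, the recomputed value rounds to the effect size reported for that test.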

The same analysis was conducted for conditions in which the stimuli moved toward the periphery (Fig. 2A, gray symbols). A main effect of spatial location of the final position confirmed that the overall magnitude of forward displacement was largest at paralateral locations, but was reduced at lateral locations [F(3, 63) = 45.31, p < .001, η² = .68]. The motion offsets at the outermost spatial location (85°) were mainly underestimated. Unlike for stimuli moving toward the midline, stimulus velocity had a reverse effect on localization performance, with the magnitude of forward displacement increasing with the slowing of stimulus velocity [F(4, 84) = 9.24, p < .001, η² = .31]. The effect of stimulus velocity was relatively weak at 30°, but it was prominent at paralateral (47°) and lateral (68°, 85°) locations, which was supported by an interaction of spatial location of the final position and stimulus velocity [F(12, 252) = 5.73, p < .001, η² = .21].

The locations at 30° and 47° were termination points for both trajectories directed toward the periphery and trajectories directed toward the midline. This allowed for the direct evaluation of the role of motion direction on localization performance (Fig. 2A, second and third columns; gray symbols indicate motion toward the periphery, and black symbols, motion toward the midline). Overall, the magnitude of forward displacement was largest for fast stimuli [F(4, 84) = 5.48, p = .014, η² = .21]. The decrease in the magnitude of forward displacement with the slowing of stimulus velocity was more pronounced at 47° than at 30° [F(4, 84) = 4.59, p = .007, η² = .18]. Localization performance was also influenced by an interaction of stimulus velocity and motion direction: For auditory motion traveling toward the midline, the largest overestimations of the final position were observed for fast stimuli, whereas for stimuli moving toward the periphery, the magnitude of overestimation was largest for slow stimuli [F(4, 84) = 20.44, p < .001, η² = .49].

Taken together, all three motion parameters—spatial location of motion offset, velocity, and motion direction—affected localization performance for moving low-frequency auditory stimuli. On the one hand, the results revealed a gradual increase in the magnitude of forward displacement from central to paralateral locations, but a clear reduction of the effect at lateral locations. On the other hand, we observed inverse effects of stimulus velocity between motion toward the midline and motion toward the periphery.

Experiment 2: Localization of moving high-frequency auditory stimuli

Fundamentally different neuronal mechanisms underlie the processing of acoustic space at low and high frequencies (Blauert, 1997): Whereas interaural time differences are the main cue for the localization of low-frequency sounds, high-frequency sound localization is predominantly based on interaural intensity differences. To examine whether these localization cues contribute differently to displacement, the same experiment was performed with moving auditory stimuli for which localization would be based on dynamically changing interaural intensity differences.

Method

Nineteen volunteers participated in this experiment (nine female, ten male, two left-handed; mean age: 24.7 years; age range 20–29 years). None of them reported hearing difficulties or any other neurological disorder. The auditory stimuli were high-frequency noise bursts (2000–8000 Hz).

Results

As with the moving low-frequency auditory stimuli, the perception of the final position of moving high-frequency auditory stimuli was significantly shifted farther in the direction of motion at central (8°), paracentral (30°), and paralateral (47°) locations (Fig. 2B), as was revealed by one-sample, two-tailed t tests. In contrast, the forward displacements for motion offsets in lateral space were clearly reduced (68°), and locations in the outermost periphery (85°) were predominantly underestimated by the participants. Stationary high-frequency auditory stimuli presented in lateral space were also underestimated—that is, localization was biased toward the midline. However, as we had observed for low-frequency auditory stimuli, the displacements for moving high-frequency auditory stimuli were less negative than the displacements that were observed for stationary auditory stimuli.

The repeated measures ANOVA for stimuli moving toward the midline (Fig. 2B, black symbols) confirmed a gradual decrease in the magnitude of overestimation with the slowing of stimulus velocity [F(4, 72) = 20.65, p < .001, η² = .53].

An effect of stimulus velocity on localization performance [F(4, 72) = 6.64, p = .003, η² = .21] was also revealed for stimuli moving toward the periphery (Fig. 2B, gray symbols). However, stimulus velocity had an inverse effect, and the localization of the final positions was shifted farther in the direction of motion with the slowing of stimulus velocity. Moreover, the deviation from the target location depended on the location of the final position in space [F(3, 54) = 37.26, p < .001, η² = .67]. That is, forward displacements were largest at paralateral locations and were clearly reduced in lateral space. Additionally, locations in the outermost periphery were more likely to be underestimated by the participants. The interaction of spatial location of the final position and stimulus velocity is explained by the fact that stimulus velocity had only a comparatively weak influence on localization performance at 30° [F(12, 216) = 2.93, p = .013, η² = .14].

An additional analysis that also included motion direction as a factor was performed for the locations of 30° and 47° (Fig. 2B, second and third columns; gray symbols indicate motion toward the periphery, and black symbols, motion toward the midline). The analysis revealed a main effect of stimulus velocity [F(4, 72) = 3.44, p = .046, η² = .16]. That is, the overall magnitude of displacement of the final position was largest for fast stimuli and gradually decreased with the slowing of stimulus velocity. Analogously to what we found for moving low-frequency auditory stimuli, the highly significant interaction of motion direction and stimulus velocity [F(4, 72) = 9.22, p < .001, η² = .34] suggests an inverse influence of stimulus velocity on localization performance between auditory motion traveling toward the midline and toward the periphery. That is, the magnitude of forward displacement gradually decreased with the slowing of stimulus velocity for auditory motion toward the midline, whereas an opposite trend was observed for auditory motion toward the periphery.

Thus, the influences of the motion parameters that we employed on the localization of the final position of moving high-frequency auditory stimuli were similar to those on the localization performance observed for moving low-frequency auditory stimuli in Experiment 1.

Experiment 3: Localization of moving visual stimuli

The fundamental differences between the visual and auditory systems in the encoding of motion might have consequences for modality-specific localization performance. To test this hypothesis, we performed an experiment in which visual stimuli moved along the same trajectories and with the same velocities as the auditory stimuli used in Experiments 1 and 2.

Method

Twenty volunteers with normal or corrected-to-normal vision participated in this experiment (12 female, eight male, all right-handed; mean age: 25.8 years, age range 21–32 years).

Results

One-sample, two-tailed t tests confirmed that the final positions of moving visual stimuli (Fig. 2C) were significantly overestimated by the participants at central (8°), paracentral (30°), and paralateral (47°) locations, whereas in the periphery, forward displacements were reduced (68°) or the final positions were most likely to be underestimated (85°). The eccentricity of stationary visual stimuli in lateral space was also underestimated (i.e., biased toward the midline). However, the displacements for moving visual stimuli were less negative than those for stationary stimuli.
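As an illustration of the test logic only, a one-sample, two-tailed t test of signed displacements against zero can be sketched as follows. The displacement values, the function name `one_sample_t`, and the sample size of ten are hypothetical and are not the data of the present study; the critical value is the standard tabulated value for df = 9.

```python
import math
import statistics


def one_sample_t(values, mu0=0.0):
    """Return the t statistic and degrees of freedom for H0: mean == mu0."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)          # sample SD (n - 1 in the denominator)
    t = (mean - mu0) / (sd / math.sqrt(n))
    return t, n - 1


# Hypothetical signed displacements in degrees (positive = overestimation).
displacements = [4.0, 6.5, 3.0, 7.5, 5.0, 2.5, 6.0, 4.5, 8.0, 3.5]

t_value, df = one_sample_t(displacements)

# Tabulated two-tailed critical value for alpha = .05 and df = 9.
T_CRIT_DF9 = 2.262
significant = abs(t_value) > T_CRIT_DF9
print(f"t({df}) = {t_value:.2f}, significant at .05: {significant}")
```

A positive and significant t would correspond to an overestimation of the final position, and a negative one to an underestimation.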

The repeated measures ANOVA for stimuli moving toward the midline (Fig. 2C, black symbols) revealed a main effect of the spatial location of the final position [F(2, 38) = 6.68, p = .007, η 2 = .26], indicating that the largest magnitude of overestimation occurred at paralateral locations, whereas the magnitude of overestimation decreased toward central locations. Also, stimulus velocity affected visual localization performance, with the magnitude of overestimation gradually decreasing with the slowing of stimulus velocity [F(4, 76) = 9.86, p < .001, η 2 = .34].
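The effect sizes reported alongside the F ratios can be cross-checked from the F value and its degrees of freedom. The sketch below assumes that the reported η 2 values are partial eta squared (this section does not state so explicitly); the function name `partial_eta_squared` is ours.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Values taken from the analysis of visual stimuli moving toward the midline.
location_effect = partial_eta_squared(6.68, 2, 38)
velocity_effect = partial_eta_squared(9.86, 4, 76)

print(f"{location_effect:.2f} {velocity_effect:.2f}")  # prints "0.26 0.34"
```

Under this assumption, both recomputed values agree with the effect sizes given in the text for the spatial location and stimulus velocity effects.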

The second analysis, which was performed for stimuli moving toward the periphery (Fig. 2C, gray symbols), confirmed that the magnitude of forward displacement was most pronounced at paralateral locations but was reduced at lateral locations, and that final positions in the outermost periphery were mainly underestimated [F(3, 57) = 21.82, p < .001, η 2 = .54]. The magnitude of displacement also varied as a function of stimulus velocity; that is, the largest forward displacements were observed for fast velocities, whereas the smallest forward displacements occurred for slow velocities [F(4, 76) = 27.86, p < .001, η 2 = .60].

To evaluate the influence of motion direction on the perception of the final position of motion, an additional analysis was performed for the results obtained at 30° and 47° (Fig. 2C, second and third columns; gray symbols indicate motion toward the periphery, and black symbols, motion toward the midline). The analysis revealed a main effect of stimulus velocity [F(4, 76) = 27.86, p < .001, η 2 = .60], which was due to a gradual decrease of forward displacement with the slowing of stimulus velocity. Furthermore, the influence of stimulus velocity on localization performance was more pronounced at 47° than at 30° [F(4, 76) = 3.61, p = .014, η 2 = .16]. The interaction of motion direction and stimulus velocity [F(4, 76) = 5.18, p = .012, η 2 = .21] is explained by the steeper decrease in the magnitude of forward displacement as a function of stimulus velocity for stimuli moving toward the periphery. In addition, the overall magnitude of overestimation at 47° was largest for stimuli traveling toward the midline. Motion direction did, however, have a lesser influence on localization performance at 30° [F(1, 19) = 14.60, p = .001, η 2 = .44].

In summary, visual localization performance was mainly influenced by the spatial location of the final position and by stimulus velocity, but was hardly affected by the interaction of stimulus velocity and the direction of the moving visual stimulus. The results indicate a gradual increase in the magnitude of forward displacement from central to paralateral locations and a reduction of the magnitude of displacement at lateral locations. Furthermore, displacements of the final position of motion decreased with the slowing of stimulus velocity.

Combined comparisons

The identical experimental designs allowed for a comparison of localization performance between all three stimulus types (moving low-frequency auditory stimuli vs. moving high-frequency auditory stimuli vs. moving visual stimuli). The reports of statistical significance below are limited to main effects or interactions involving the between-subjects factor stimulus type.

The analysis for stimuli moving toward the midline revealed an interaction of stimulus velocity and stimulus type [F(8, 232) = 3.78, p = .007, η 2 = .12], which is explained by the fact that the final positions of moving visual stimuli were overestimated to a higher degree than those of moving low-frequency auditory stimuli for all velocities except the fastest [F(4, 160) = 6.15, p = .004, η 2 = .13]. The final positions of moving high-frequency auditory stimuli were overestimated to a higher degree for the slowest and the fastest stimulus velocities, whereas the final positions of moving visual stimuli were overestimated to a higher degree in trials with moderate velocities [F(4, 148) = 5.46, p = .002, η 2 = .13].

The analysis for stimuli moving toward the periphery confirmed an inverse effect of stimulus velocity on visual and auditory localization performance [F(8, 232) = 22.69, p < .001, η 2 = .44]: Whereas for auditory motion the magnitude of forward displacement increased with the slowing of stimulus velocity, the magnitude of forward displacement decreased with the slowing of stimulus velocity for visual stimuli [moving low-frequency auditory stimuli vs. moving visual stimuli, F(4, 160) = 36.40, p < .001, η 2 = .48; moving high-frequency auditory stimuli vs. moving visual stimuli, F(4, 148) = 29.60, p < .001, η 2 = .44]. The differential effects of stimulus velocity on visual and auditory localization performance were most pronounced at paralateral and lateral locations [F(24, 696) = 2.13, p = .006, η 2 = .07].

The inclusion of the motion direction factor in the analysis of localization performance at 30° and 47° yielded an interaction of motion direction, stimulus velocity, and stimulus type [F(8, 232) = 12.54, p < .001, η 2 = .30]. Subsequent pair-wise comparisons showed that this interaction resulted from differences between the visual task and both auditory tasks. The localization performance for moving low-frequency and moving high-frequency auditory stimuli was strongly influenced by an interaction of stimulus velocity and motion direction. That is, opposite effects on the magnitude of displacement were observed with the slowing of stimulus velocity between the two motion directions. In contrast, for moving visual stimuli, the magnitude of overestimation gradually decreased with the slowing of stimulus velocity, irrespective of motion direction [moving low-frequency auditory stimuli vs. moving visual stimuli, F(4, 160) = 6.90, p < .001, η 2 = .15; moving high-frequency auditory stimuli vs. moving visual stimuli, F(4, 148) = 13.60, p < .001, η 2 = .27].

In summary, the analyses revealed that the magnitudes of displacement were comparable between moving low-frequency auditory stimuli and moving high-frequency auditory stimuli, but not between moving visual stimuli and both types of moving auditory stimuli. The results of the visual task and the auditory tasks mainly differed with respect to the influences of stimulus velocity and motion direction. Whereas localization performance for moving low-frequency and moving high-frequency auditory stimuli was affected by an interaction of stimulus velocity and motion direction as well as by the spatial location of motion offset, visual performance was mainly dependent on stimulus velocity and the spatial location of motion offset.

Discussion

In the present study, we aimed to assess the influences of spatial location, stimulus velocity, and motion direction on the localization of the final position of moving visual and moving auditory targets. To date, only one study has compared the magnitudes of displacement between visual and auditory tasks (Getzmann & Lewald, 2009). Those authors presented moving stimuli that terminated in central space and found similar displacements in both modalities when motion velocities did not vary within the stimulus, which led them to conclude that accurate extrapolation requires an invariant amount of information.

Here, we replicated previous findings by showing that participants predominantly overshoot the trajectories’ termination points in the two modalities. The mean displacements in the visual task and the auditory tasks were about 4°–8° at central locations. Additionally, our results demonstrated that the magnitude of forward displacement gradually increased from central to paralateral locations, but forward displacements were clearly reduced in lateral space. At 85°, participants even tended to underestimate the final positions of moving auditory and moving visual stimuli.

Furthermore, we found localization performance in each modality to be crucially dependent on the spatial location of the final position, stimulus velocity, and motion direction. Most importantly, the present study revealed significant differences in the magnitudes of displacement between the visual task and the auditory tasks.

These findings support the hypothesis that the processing of motion parameters influences the extrapolation of trajectory. Differences in the magnitudes of displacement between the visual task and the auditory tasks likely resulted from differences in the modality-specific processing of motion parameters.

Processing of motion parameters likely interacts with predictive mechanisms

A general trend observed in both the visual task and the auditory tasks was a gradual increase in forward displacements from central to paralateral locations, but a clear reduction of the effect in lateral space. Previous studies by Fu et al. (2001) and Kanai et al. (2006) demonstrated that the extrapolation of visual targets farther in the direction of motion was larger for spatially blurred targets (i.e., for targets with a high degree of position uncertainty). Our results at paralateral spatial locations are in accord with these earlier findings and confirm that position uncertainty due to less precise encoding of spatial coordinates leads to larger forward displacements. Consequently, one might expect to find even larger forward displacements in lateral space, but instead we observed a reduction of the effect, and even backward displacements in the extreme periphery. Although extrapolation per se may not be affected in lateral space, systematic forward displacements have likely been drowned out by much larger localization biases of perceived position toward the midline (i.e., underestimation of eccentricity). In our control task, we also found a localization bias of stationary stimuli toward the midline; that is, the eccentricity of stationary stimuli was particularly underestimated at lateral locations, which is in line with previous studies that have reported similar effects for stationary visual stimuli (Fortenbaugh & Robertson, 2011; Mateeff & Gourevich, 1983) and stationary auditory stimuli (Ihlefeld & Shinn-Cunningham, 2011; Mateeff & Hohnsbein, 1988). However, when participants were asked to localize moving stimuli, the displacements of the final positions were less negative than those observed when participants localized the corresponding stationary stimuli. This finding suggests that localization biases differ between moving and stationary events, and further indicates that localization of an event at one and the same position in space depends on whether the event is stationary or part of a trajectory (e.g., the final position of motion).

The magnitude of displacement of the final position of motion is thus affected by localization biases toward the midline and, more importantly, by accuracy in the encoding of spatial coordinates. The latter finding is consistent with our hypothesis that mechanisms involved in the processing of motion parameters and predictive mechanisms inducing extrapolation of the trajectory interact in the determination of displacements at motion offset. This hypothesis is also supported by previous evidence from neuroimaging studies. Using transcranial magnetic stimulation (TMS) in a visual task on implied motion perception, Senior et al. (2000) found that a disruption of processing in area MT/V5 eliminated the visual representational momentum effect, which argues for the critical role of the processing of motion parameters. The activation of cortical areas outside of the visual cortex in response to implied-motion stimuli has been related to the establishment of predictions on the further course of the trajectory. Schubotz and von Cramon (2001, 2002) reported that perceptual predictions activate the intraparietal sulcus and the premotor cortex; they interpreted this activation in terms of action planning in response to motion. Amorim et al. (2000) also found activity in the fronto-parietal networks, suggesting that the extrapolation of trajectory involves working memory functions.

Along with the spatial location of the final position, stimulus velocity and motion direction also had direct influences on the magnitude of displacement when considering modality-specific performance. As stimulus duration and velocity are inherently linked for constant trajectories, one has to take into account that the observed effects might to some extent also be related to increased uncertainty for short stimulus durations. However, the shortest stimulus duration employed in the present study was 250 ms, which exceeded the temporal window for the formation of both auditory (Sussmann, Winkler, Ritter, Alho, & Näätänen, 1999) and visual (Czigler et al., 2006) events.

Auditory localization performance strongly depended on the interaction of stimulus velocity and motion direction. The magnitude of forward displacement increased with the slowing of stimulus velocity for auditory motion toward the periphery, and decreased with the slowing of stimulus velocity for auditory motion toward the midline. Getzmann et al. (2004) reported the largest forward displacements for continuous auditory targets at slow velocities. However, they employed stimulus velocities that matched only the slowest stimulus velocities in our study. Furthermore, the authors did not consider velocity effects in interaction with the direction of motion. Our results suggest that neither a directional sensitivity nor a velocity preference can explain the observed displacements in the auditory task, but rather, the interaction between the two factors is what best explains them. This holds true irrespective of the binaural cues that the auditory system uses for the localization of auditory motion. The performance was comparable across all conditions when participants relied either on dynamically changing interaural time differences (low-frequency noise bursts in Exp. 1) or on dynamically changing interaural intensity differences (high-frequency noise bursts in Exp. 2).

In the visual localization task, the magnitude of forward displacement was generally largest for stimuli at high velocities. This finding is in good agreement with previous reports on velocity effects in the perception of visual motion offsets (Finke, Freyd, & Shyi, 1986; Freyd & Finke, 1985; Hubbard & Bharucha, 1988). We extended these findings by showing that the most pronounced forward displacements for high stimulus velocities occur at paralateral locations. This is likely the result of the fact that forward displacements are generally larger when spatial coordinates are less precisely encoded. Furthermore, the magnitude of displacement in the visual task was less influenced by the interaction of motion direction and stimulus velocity than were those in the auditory tasks. Although directional sensitivity in terms of detection or discrimination thresholds to motion onset has been studied extensively in the visual domain (Ball & Sekuler, 1980; Naito, Sato, & Osaka, 2010; Raymond, 1994), there is no general agreement regarding whether visual motion detectors are superior in encoding centripetal (toward the midline) or centrifugal (toward the periphery) motion. Our findings suggest at least that directional sensitivity only marginally affects the localization of visual motion offsets in the horizontal plane.

Importantly, when comparing the results of the visual task and the auditory tasks, the interaction of motion parameters affected the magnitudes of displacement differently. If one assumes that predictions on the further course of the trajectories of visual and auditory stimuli are established in shared cortical areas, differences between visual and auditory localization performance can again be explained in terms of an interaction between the processing of motion parameters and predictive mechanisms that induce forward displacements of the final position of motion. Dynamically changing interaural differences and the spectral content of the arriving information are cues for the indirect computation of motion by the auditory system. The variation of binaural cues across space, however, does not follow a linear relationship (Feddersen, Sandel, Teas, & Jeffress, 1957; Macpherson & Middlebrooks, 2002). As such, the available information differs depending on whether the auditory stimulus moves toward the periphery or toward the midline. In contrast, visual motion activates a retinotopic-based pathway. This direct representation implies a constant amount of available motion information across space, which might explain the different effects of motion parameters on visual and auditory localization performance. Accordingly, the fundamental differences in how the two modalities encode motion are likely reflected in the different magnitudes of displacement.
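The nonlinear variation of binaural cues across space can be illustrated with a textbook approximation. The sketch below uses Woodworth's spherical-head formula for the interaural time difference, ITD = (a/c)(θ + sin θ), which is not a model used in the present study; the head radius of 8.75 cm and the speed of sound of 343 m/s are assumed values, and the function names are ours.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air


def itd_seconds(azimuth_deg):
    """Woodworth approximation of the ITD for azimuths between 0 and 90 degrees."""
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


def itd_change_per_degree(azimuth_deg, step=1.0):
    """Change in ITD over a one-degree step centered on the given azimuth."""
    return itd_seconds(azimuth_deg + step / 2) - itd_seconds(azimuth_deg - step / 2)


# Final positions used in the experiments.
for azimuth in (8, 30, 47, 68, 85):
    itd_us = itd_seconds(azimuth) * 1e6
    slope_us = itd_change_per_degree(azimuth) * 1e6
    print(f"{azimuth:2d} deg: ITD = {itd_us:5.0f} us, change = {slope_us:4.1f} us/deg")
```

Under these assumptions, the change in ITD per degree of azimuth near the midline is almost twice that in the far periphery, so that a sound moving at constant angular velocity produces a cue that changes more slowly the more lateral its position.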

Moreover, our results on the localization of auditory and visual endpoints are not in accord with a study by Shi and Nijhawan (2008). Those authors investigated the effect of motion direction on the localization of moving visual objects and found larger forward displacements for foveopetal motion (i.e., from the periphery to central vision). They interpreted their data in terms of biological relevance and suggested that foveopetal motion is of more significance than motion in the opposite direction. Our results provide evidence for a more complex relationship between displacements and motion parameters. Evidently, the magnitude of displacement in spatial tasks is determined by a combination of motion direction, stimulus velocity, and spatial location in the auditory task, but by a combination of stimulus velocity and spatial location in the visual task.

Ocular tracking and foreknowledge do not explain the displacements

In the present study, participants were instructed to look straight ahead during stimulus presentation and not to pursue the stimuli with their eyes or their heads. Nonetheless, displacements were observed in the localization of both moving auditory stimuli and moving visual stimuli. Although we cannot rule out that participants occasionally pursued the moving targets reflexively, it seems unlikely that purely perceptual processes induced by ocular tracking of the trajectory (Kerzel, 2003, 2005) might explain the present results. Our findings, rather, are in line with the notion that the extrapolation of trajectory is independent of eye movements in both visual and auditory tasks, as has previously been proposed by Getzmann (2005a).

Also, foreknowledge or expectations (Hubbard, 1994; Kerzel, 2002; Verfaillie & d’Ydewalle, 1991) with regard to the position of motion offset may not explain the observed displacements. Participants were largely unable to predict the location of the final position of motion (although there was some limited prediction, because they knew that the stimuli would not go behind them), as the trajectories differed in their angular ranges. About a third of the moving stimuli traveled an angular range of 98°, and those stimuli were randomly interspersed among stimuli traveling an angular range of 38° in order to ensure that participants could not adapt to the length of the trajectory after a number of presented trials.
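The interspersing of long and short trajectories described above can be sketched as a shuffled trial list. The number of trials, the exact fraction of one third, the seed, and the function name `build_trial_ranges` are hypothetical; the text specifies only the two angular ranges and that about a third of the stimuli traveled the longer one.

```python
import random


def build_trial_ranges(n_trials, long_fraction=1 / 3, seed=None):
    """Return a randomly ordered list of angular ranges (in degrees), one per trial."""
    n_long = round(n_trials * long_fraction)
    ranges = [98] * n_long + [38] * (n_trials - n_long)
    rng = random.Random(seed)   # local generator so the order is reproducible
    rng.shuffle(ranges)
    return ranges


trial_ranges = build_trial_ranges(30, seed=1)
print(trial_ranges.count(98), trial_ranges.count(38))  # prints "10 20"
```

Because the order is randomized, the length of the trajectory on a given trial cannot be inferred from the preceding trials.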

Furthermore, the occurrence of forward displacements in the two modalities does not support the hypothesis that mislocalization of the final position of motion is due to a compensation for the neuronal delay in visual signal processing (Alais & Burr, 2003; Nijhawan, 1994). Signal transmission is much faster in the auditory than in the visual system (Fain, 2003), and it is therefore unlikely that the observed mislocalizations in the auditory task were induced by a mechanism that compensates for neuronal delay.

Taken together, the results of the present study show that mislocalizations of the final position of motion in each modality vary as a function of motion parameters, namely the spatial location of the final position, the stimulus velocity, and the motion direction. Position uncertainty resulting from less precise encoding of spatial coordinates led to larger forward displacements in paralateral space, but this effect was drowned out by underestimation of the target eccentricity in the extreme periphery. Crucially, the comparison between the visual and auditory tasks revealed that the interaction of motion parameters affected the magnitudes of displacement differently. If extrapolation of the trajectory is considered to be the underlying mechanism for the observed mislocalizations in previous studies and in the present study, the magnitude of displacement likely reflects the interplay between the modality-specific processing of motion parameters and predictive mechanisms that rely on higher cognitive functions.

References

Alais, D., & Burr, D. (2003). The “flash-lag” effect occurs in audition and cross-modally. Current Biology, 13, 59–63. doi:10.1016/S0960-9822(02)01402-1

Albright, T. D., & Stoner, G. R. (1995). Visual motion perception. Proceedings of the National Academy of Sciences, 92, 2433–2440. doi:10.1073/pnas.92.7.2433

Amorim, M. A., Lang, W., Lindinger, G., Mayer, D., Deecke, L., & Berthoz, A. (2000). Modulation of spatial orientation processing by mental imagery instructions: A MEG study of representational momentum. Journal of Cognitive Neuroscience, 12, 569–582. doi:10.1162/089892900562345

Bachmann, T., Murd, C., & Põder, E. (2012). Flash-lag effect: Complicating motion extrapolation of the moving reference-stimulus paradoxically augments the effect. Psychological Research, 76, 654–666. doi:10.1007/s00426-011-0370-3

Ball, K., & Sekuler, R. (1980). Human vision favors centrifugal motion. Perception, 9, 317–325. doi:10.1068/p090317

Blauert, J. (1997). Spatial hearing: The psychophysics of human sound localization. Cambridge, MA: MIT Press.

Czigler, I., Winkler, I., Pató, L., Várnagy, A., Weisz, J., & Balázs, L. (2006). Visual temporal window of integration as revealed by the visual mismatch negativity event-related potential to stimulus omissions. Brain Research, 1104, 129–140. doi:10.1016/j.brainres.2006.05.034

Fain, G. (2003). Sensory transduction. Sunderland, MA: Sinauer.

Feddersen, W. E., Sandel, T. T., Teas, D. C., & Jeffress, L. A. (1957). Localization of high-frequency tones. Journal of the Acoustical Society of America, 29, 988–991. doi:10.1121/1.1909356

Finke, R. A., Freyd, J. J., & Shyi, G. C. (1986). Implied velocity and acceleration induce transformations of visual memory. Journal of Experimental Psychology: General, 115, 175–188. doi:10.1037/0096-3445.115.2.175

Finlay, D. (1982). Motion perception in the peripheral visual field. Perception, 11, 457–462. doi:10.1068/p110457

Fortenbaugh, F. C., & Robertson, L. C. (2011). When here becomes there: Attentional distribution modulates foveal bias in peripheral localization. Attention, Perception, & Psychophysics, 73, 809–828. doi:10.3758/s13414-010-0075-5

Freyd, J. J. (1987). Dynamic mental representations. Psychological Review, 94, 427–438. doi:10.1037/0033-295X.94.4.427

Freyd, J. J., & Finke, R. A. (1984). Representational momentum. Journal of Experimental Psychology: Learning, Memory, and Cognition, 10, 126–132. doi:10.1037/0278-7393.10.1.126

Freyd, J. J., & Finke, R. A. (1985). A velocity effect for representational momentum. Bulletin of the Psychonomic Society, 23, 443–446. Retrieved from http://dynamic.uoregon.edu/~jjf/repmo.html

Fu, Y., Shen, Y., & Dan, Y. (2001). Motion-induced perceptual extrapolation of blurred visual targets. Journal of Neuroscience, 21(RC172), 1–5. Retrieved from www.jneurosci.org/content/21/20/RC172.full.pdf

Getzmann, S. (2005a). Representational momentum in spatial hearing does not depend on eye movements. Experimental Brain Research, 165, 229–238. doi:10.1007/s00221-005-2291-0

Getzmann, S. (2005b). Shifting the onset of a moving sound source: A Fröhlich effect in spatial hearing. Hearing Research, 210, 104–111. doi:10.1016/j.heares.2005.08.003

Getzmann, S., & Lewald, J. (2009). Constancy of target velocity as a critical factor in the emergence of auditory and visual representational momentum. Experimental Brain Research, 193, 437–443. doi:10.1007/s00221-008-1641-0

Getzmann, S., Lewald, J., & Guski, R. (2004). Representational momentum in spatial hearing. Perception, 33, 591–599. doi:10.1068/p5093

Grantham, D. W. (1986). Detection and discrimination of simulated motion of auditory targets in the horizontal plane. Journal of the Acoustical Society of America, 79, 1939–1949. doi:10.1121/1.393201

Hayes, A. E., & Freyd, J. J. (2002). Representational momentum when attention is divided. Visual Cognition, 9, 8–27. doi:10.1080/13506280143000296

Hubbard, T. L. (1994). Judged displacement: A modular process? The American Journal of Psychology, 107, 359. doi:10.2307/1422879

Hubbard, T. L. (2005). Representational momentum and related displacements in spatial memory: A review of the findings. Psychonomic Bulletin and Review, 12, 822–851. doi:10.3758/BF03196775

Hubbard, T. L. (2010). Approaches to representational momentum: Theories and models. In R. Nijhawan & B. Khurana (Eds.), Space and time in perception and action (pp. 338–365). Cambridge, UK: Cambridge University Press.

Hubbard, T. L., & Bharucha, J. J. (1988). Judged displacement in apparent vertical and horizontal motion. Perception & Psychophysics, 44, 211–221. doi:10.3758/BF03206290

Hubbard, T. L., Kumar, A. M., & Carp, C. L. (2009). Effects of spatial cueing on representational momentum. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35, 666–677. doi:10.1037/a0014870

Hubbard, T. L., & Motes, M. A. (2002). Does representational momentum reflect a distortion of the length or the endpoint of a trajectory? Cognition, 82, B89–B99. doi:10.1016/S0010-0277(01)00156-1

Hubbard, T. L., & Ruppel, S. E. (1999). Representational momentum and landmark attraction effects. Canadian Journal of Experimental Psychology, 53, 242–256. doi:10.1037/h0087313

Ihlefeld, A., & Shinn-Cunningham, B. G. (2011). Effect of source spectrum on sound localization in an everyday reverberant room. Journal of the Acoustical Society of America, 130, 324–333. doi:10.1121/1.3596476

Kanai, R., Sheth, B. R., & Shimojo, S. (2006). Stopping the motion and sleuthing the flash-lag effect: Spatial uncertainty is the key to perceptual mislocalization. Vision Research, 44, 2605–2619. doi:10.1016/j.visres.2003.10.028

Kerzel, D. (2000). Eye movements and visible persistence explain the mislocalization of the final position of a moving target. Vision Research, 40, 3703–3715. doi:10.1016/S0042-6989(00)00226-1

Kerzel, D. (2002). A matter of design: No representational momentum without predictability. Visual Cognition, 9, 66–80. doi:10.1080/13506280143000322

Kerzel, D. (2003). Mental extrapolation of target position is strongest with weak motion signals and motor responses. Vision Research, 43, 2623–2635. doi:10.1016/S0042-6989(03)00466-8

Kerzel, D. (2005). Representational momentum beyond internalized physics: Embodied mechanisms of anticipation cause errors in visual short-term memory. Current Directions in Psychological Science, 14, 180–184. doi:10.1111/j.0963-7214.2005.00360.x

Kerzel, D., & Gegenfurtner, K. R. (2004). Spatial distortions and processing latencies in the onset repulsion and Fröhlich effects. Vision Research, 44, 577–590. doi:10.1016/j.visres.2003.10.011

Kerzel, D., Jordan, J. S., & Müsseler, J. (2001). The role of perception in the mislocalization of the final position of a moving target. Journal of Experimental Psychology: Human Perception and Performance, 27, 829–840. doi:10.1037/0096-1523.27.4.829

Linares, D., López-Moliner, J., & Johnston, A. (2007). Motion signal and the perceived positions of moving objects. Journal of Vision, 7(7):1, 1–7. doi:10.1167/7.7.1

Macpherson, E. A., & Middlebrooks, J. C. (2002). Listener weighting of cues for lateral angle: The duplex theory of sound localization revisited. Journal of the Acoustical Society of America, 111, 2219–2236. doi:10.1121/1.1471898

Mateeff, S., & Gourevich, A. (1983). Peripheral vision and perceived visual direction. Biological Cybernetics, 49, 111–118. doi:10.1007/BF00320391

Mateeff, S., & Hohnsbein, J. (1988). Dynamic auditory localization: Perceived position of a moving sound source. Acta Physiologica et Pharmacologica Bulgarica, 14, 32–38.

Maus, G. W., & Nijhawan, R. (2009). Going, going, gone: Localizing abrupt offsets of moving objects. Journal of Experimental Psychology: Human Perception and Performance, 35, 611–626. doi:10.1037/a0012317

Michel, M., & Geisler, W. S. (2011). Intrinsic position uncertainty explains detection and localization performance in peripheral vision. Journal of Vision, 11(1):18, 1–18. doi:10.1167/11.1.18

Middlebrooks, J. C., & Green, D. M. (1991). Sound localization by human listeners. Annual Review of Psychology, 42, 135–159. doi:10.1146/annurev.ps.42.020191.001031

Müsseler, J., & Aschersleben, G. (1998). Localizing the first position of a moving stimulus: The Fröhlich effect and an attention-shifting explanation. Perception & Psychophysics, 60, 683–695. doi:10.3758/BF03206055

Naito, T., Sato, H., & Osaka, N. (2010). Direction anisotropy of human motion perception depends on stimulus speed. Vision Research, 50, 1862–1866. doi:10.1016/j.visres.2010.06.007

Nijhawan, R. (1994). Motion extrapolation in catching. Nature, 370, 256–257. doi:10.1038/370256b0

Nijhawan, R. (2001). The flash-lag phenomenon: Object motion and eye movements. Perception, 30, 263–282. doi:10.1068/p3172

Perrott, D. R., Ambarsoom, H., & Tucker, J. (1987). Changes in head position as a measure of auditory localization performance: Auditory psychomotor coordination under monaural and binaural listening conditions. Journal of the Acoustical Society of America, 82, 1637–1645. doi:10.1121/1.395155

Perrott, D. R., Saberi, K., Brown, K., & Strybel, T. Z. (1990). Auditory psychomotor coordination and visual search performance. Perception & Psychophysics, 48, 214–226. doi:10.3758/BF03211521

Rao, H., Han, S., Jiang, Y., Xue, Y., Gu, H., Cui, Y., & Gao, D. (2004). Engagement of the prefrontal cortex in representational momentum: An fMRI study. NeuroImage, 23, 98–103. doi:10.1016/j.neuroimage.2004.05.016

Raymond, J. E. (1994). Directional anisotropy of motion sensitivity across the visual field. Vision Research, 34, 1029–1037. doi:10.1016/0042-6989(94)90007-8

Schmiedchen, K., Freigang, C., Nitsche, I., & Rübsamen, R. (2012). Crossmodal interactions and multisensory integration in the perception of audio-visual motion—A free-field study. Brain Research, 1466, 99–111. doi:10.1016/j.brainres.2012.05.015

Schubotz, R. I., & von Cramon, D. Y. (2001). Functional organization of the lateral premotor cortex: fMRI reveals different regions activated by anticipation of object properties, location and speed. Cognitive Brain Research, 11, 97–112. doi:10.1016/S0926-6410(00)00069-0

Schubotz, R. I., & von Cramon, D. Y. (2002). Predicting perceptual events activates corresponding motor schemes in lateral premotor cortex: An fMRI study. NeuroImage, 15, 787–796. doi:10.1006/nimg.2001.1043

Senior, C., Barnes, J., Giampietro, V., Simmons, A., Bullmore, E. T., Brammer, M., & David, A. S. (2000). The functional neuroanatomy of implicit-motion perception or representational momentum. Current Biology, 10, 16–22. doi:10.1016/S0960-9822(99)00259-6

Shi, Z., & Nijhawan, R. (2008). Behavioral significance of motion direction causes anisotropic flash-lag, flash-drag, flash-repulsion, and movement-mislocalization effects. Journal of Vision, 8(7):24, 1–14. doi:10.1167/8.7.24

Sussman, E., Winkler, I., Ritter, W., Alho, K., & Näätänen, R. (1999). Temporal integration of auditory stimulus deviance as reflected by the mismatch negativity. Neuroscience Letters, 264, 161–164. doi:10.1016/S0304-3940(99)00214-1

Taya, S., & Miura, K. (2010). Cast shadow can modulate the judged final position of a moving target. Attention, Perception, & Psychophysics, 72, 1930–1937. doi:10.3758/APP.72.7.1930

To, M. P. S., Regan, B. C., Wood, D., & Mollon, J. D. (2011). Vision out of the corner of the eye. Vision Research, 51, 203–214. doi:10.1016/j.visres.2010.11.008

Verfaillie, K., & d’Ydewalle, G. (1991). Representational momentum and event course anticipation in the perception of implied periodical motions. Journal of Experimental Psychology: Learning, Memory, and Cognition, 17, 302–313. doi:10.1037/0278-7393.17.2.302

Vreven, D., & Verghese, P. (2005). Predictability and the dynamics of position processing in the flash-lag effect. Perception, 34, 31–44. doi:10.1068/p5371

Wilson, W. W., & O’Neill, W. E. (1998). Auditory motion induces directionally dependent receptive field shifts in inferior colliculus neurons. Journal of Neurophysiology, 79, 2040–2062. Retrieved from http://jn.physiology.org/content/79/4/2040.full

Author note

This research was supported by the Deutsche Forschungsgemeinschaft, through the graduate program “Function of Attention in Cognition” (GRK 1182). The authors thank Ingo Kannetzky, Jörg Eckebrecht, and Matthias Freier for technical assistance, and Patrice Voss for proofreading the manuscript.

Correspondence concerning this article should be addressed to Kristina Schmiedchen, Faculty of Biosciences, Pharmacy and Psychology, University of Leipzig, Talstr. 33, 04103 Leipzig, Germany. E-mail: k.schmiedchen@uni-leipzig.de.

Cite this article

Schmiedchen, K., Freigang, C., Rübsamen, R. et al. A comparison of visual and auditory representational momentum in spatial tasks. Atten Percept Psychophys 75, 1507–1519 (2013). https://doi.org/10.3758/s13414-013-0495-0

DOI: https://doi.org/10.3758/s13414-013-0495-0