Abstract

We investigated the effects of seen and unseen within-hemifield posture changes on crossmodal visual–tactile links in covert spatial attention. In all experiments, a spatially nonpredictive tactile cue was presented to the left or the right hand, with the two hands placed symmetrically across the midline. Shortly after the tactile cue, a visual target appeared at one of two eccentricities within either hemifield. For half of the trial blocks, the hands were aligned with the inner visual target locations, and for the remainder, the hands were aligned with the outer target locations. In Experiments 1 and 2, the inner and outer eccentricities were 17.5º and 52.5º, respectively. In Experiment 1, the arms were completely covered, and visual up–down judgments were better for targets on the same side as the preceding tactile cue. Cueing effects were not significantly affected by hand or target alignment. In Experiment 2, the arms were in view, and now some target responses were affected by cue alignment: Cueing for outer targets was significant only when the hands were aligned with them. In Experiment 3, we tested whether unseen posture changes could alter the cueing effects at all, by widely separating the inner and outer target eccentricities (now 10º and 86º). In this case, hand alignment did affect some of the cueing effects: Cueing for outer targets was now significant only when the hands were in the outer position. Although these results confirm that proprioception can, in some cases, influence tactile–visual links in exogenous spatial attention, they also show that spatial precision is severely limited, especially when posture is unseen.


The body of work investigating the perception of more than one sense at a time has pointed toward several guiding principles of multisensory interaction (for recent reviews, see Alais, Newell, & Mamassian, 2010; Macaluso & Maravita, 2010). One such principle is that the spatial coordination of the senses of touch and vision is maintained across changes in body position. This conclusion has arisen from convergent evidence provided by investigations of monkeys’ tactile–visual bimodal cells (e.g., Graziano & Gross, 1994), studies of phenomena in brain-injured patients (e.g., di Pellegrino, Làdavas, & Farnè, 1997; Spence, Shore, Gazzaniga, Soto-Faraco, & Kingstone, 2001), brain-imaging experiments using fMRI (e.g., Macaluso, Frith, & Driver, 2002) or electroencephalography (e.g., Kennett, Eimer, Spence, & Driver, 2001), and behavioral/psychophysical research (e.g., Spence, Pavani, & Driver, 2000). One influential image is of zones of visual space that surround body parts, with visual events/objects within a zone interacting with touches on that zone’s body part (e.g., Macaluso & Maravita, 2010). These zones are imagined to follow their respective body parts around in space as they move (Spence et al., 2001).

Single-cell recording

The evidence that the links between touch and vision can remap across changes of body position is compelling. For example, single-cell recording in monkeys has shown that visual events close to hands cause tactile–visual bimodal cells to fire (Fogassi et al., 1996; Graziano & Gross, 1998; Iriki, Tanaka, & Iwamura, 1996). These visual receptive fields are coded in arm-centered coordinates. That is, these visual receptive fields move, in retinotopic coordinates, as the relative positions of the arms and eyes change (Graziano, Yap, & Gross, 1994), even when that relative position is occluded from view (Graziano, 1999). Similarly, visual receptive fields can follow a false arm in the absence of a view of the real arm (Graziano, Cooke, & Taylor, 2000). Moreover, visual receptive fields of some tactile–visual cells apparently extend to incorporate tools that are held within the hand containing the tactile receptive field (Iriki et al., 1996) and can even remap to take account of indirect views of hands, such as those provided via a camera–monitor system (Iriki, Tanaka, Obayashi, & Iwamura, 2001). However, not all recorded tactile–visual cells show this remapping (e.g., only five of 25 arm-centered bimodal cells in Graziano & Gross, 1994), and even some that do remap show imprecise co-location of visual and tactile receptive fields (as in Graziano et al., 1994, when the hand moves in their Fig. 1C).

Brain-injured patients

Following unilateral, typically parietal, brain injury, some patients exhibit the phenomenon of unilateral extinction to double simultaneous stimulation: A contralesional event is perceived when it is presented alone, but is “extinguished” from awareness when it is presented simultaneously with an ipsilesional event (e.g., Bender, 1952). Such extinction can occur between a tactile event on one side and a visual event on the other (e.g., Mattingley, Driver, Beschin, & Robertson, 1997). However, the degree to which a contralesional event is extinguished by an ipsilesional one depends on a number of spatial and postural factors. For example, whereas a right-visual-field event can extinguish a left-hand tactile one, in some right-brain-injured patients this extinction is markedly reduced if the unstimulated right hand is moved away from the right visual event (di Pellegrino et al., 1997; Làdavas, di Pellegrino, Farnè, & Zeloni, 1998), yet it returns if a false/rubber hand is then placed in the empty space close to the right visual event (Farnè, Pavani, Meneghello, & Làdavas, 2000). Extinction is abolished if the head and eyes are turned so that the right-visual-field event is now located close to the previously extinguished left tactile event (Kennett, Rorden, Husain, & Driver, 2010). As in the single-cell work outlined above, the use of tools by the right hand can bring more distant visual events within range such that they now also extinguish left tactile events (Farnè & Làdavas, 2000; Maravita, Husain, Clarke, & Driver, 2001; see Farnè, Serino, & Làdavas, 2007, for a review). Conversely, the use of a tool by the left hand can bring right visual events within the same “bimodal representation” as the previously extinguished left tactile event, thereby reducing extinction (Maravita, Clarke, Husain, & Driver, 2002).
Taken as a whole, these results are accounted for well by visual zones of space that coordinate visual events close to the body with tactile events on the body.

Brain-imaging experiments

Tactile–visual interactions revealed using fMRI have also pointed to spatial coordination across the senses. For example, activity in unimodal visual cortex following a visual event is markedly increased when a simultaneous tactile event occurs close by (Macaluso, Frith, & Driver, 2000). Analogously, unimodal somatosensory activation increases when an activating tactile stimulus is close to a visual stimulus (Macaluso, Frith, & Driver, 2005). These tactile–visual interactions take account of the relative positions in space of visual and tactile events, as has been shown by the effects that different eye positions have on the results (Macaluso et al., 2002).

Event-related potential (ERP) studies also suggest that tactile and visual events are spatially coordinated in a way that takes the current eye and/or hand posture into account (Eimer, Cockburn, Smedley, & Driver, 2001; Kennett, Eimer, et al., 2001; Macaluso, Driver, van Velzen, & Eimer, 2005). Visual ERP components (N1 and Nd effects) are enhanced by preceding nearby, yet spatially nonpredictive, tactile events, and this enhancement follows the hand when it is crossed over and placed next to visual events in the opposite visual field (Kennett, Eimer, et al., 2001). Similarly, directing endogenous tactile attention led to enhanced N1 and P2 responses to co-located visual stimuli, even when the hands were crossed (Eimer et al., 2001). When gaze shifts were used to change the alignment of visual and tactile stimuli during shifts of endogenous tactile attention, visual N1 components were affected by this change in alignment (Macaluso, Driver, et al., 2005). However, Macaluso, Driver, et al. also found limitations in the coordination between the senses. Specifically, visual P1 components showed no remapping across changes in posture, and even the remapping shown by the visual N1 was modulated by purely anatomical factors.

Behavioral and psychophysical experiments

Flexible spatial coordination of touch and vision has been demonstrated using a number of different behavioral paradigms. Endogenous spatial attention experiments have shown the largest cueing effects for both tactile and visual targets when attention is directed at the same external spatial location for both modalities, even when the hands are placed in a crossed position (Spence et al., 2000). Similarly, for exogenous spatial attention, spatially nonpredictive tactile events lead to faster and more accurate responses for following visual events on the same side (Spence, Nicholls, Gillespie, & Driver, 1998), even when the hands are crossed (Kennett, Eimer, et al., 2001; Kennett, Spence, & Driver, 2002). The same effect is found when the roles of the modalities are swapped, so that the cues are now visual and the targets are tactile (Kennett et al., 2002).

Crossmodal-congruence paradigms have revealed a number of spatial and postural effects with respect to tactile–visual perception. Typically, these experiments require participants to discriminate rapidly between upper and lower events within one imperative modality (touch or vision). Meanwhile, a simultaneous distractor event in the other modality (vision or touch, respectively) is presented in an upper or lower location. Half of the time the upper/lower location of the target and distractor match (congruent trials), and half of the time they mismatch (incongruent trials; for a review, see Spence, Pavani, Maravita, & Holmes, 2004). Target responses are faster and more accurate on congruent than on incongruent trials. This difference is termed a congruence effect and is a measure of the interference between the senses (Spence et al., 2004). Congruence tasks have been used to map the spatial extent within which tactile and visual interference is maximal. The findings fit well with those summarized above. Namely, maximal interference zones cross with the hands in space (Spence, Pavani, & Driver, 2004), extend to incorporate used tools (Maravita, Spence, Kennett, & Driver, 2002), and are influenced by misleading apparent locations of the arms conveyed by false/rubber arms (Pavani, Spence, & Driver, 2000; though see Spence & Walton, 2005).

However, a cautionary note has been sounded about such crossmodal-congruence paradigms (Spence, Pavani, & Driver, 2004; Spence & Walton, 2005). According to this caveat, congruence effects are guided less by overlapping perceptual coding of the competing stimuli, and more by competing response codes. Nevertheless, the ways in which the congruence effects vary with posture, tool, or rubber hand manipulations still require that the intensity of the response competition be influenced by spatial/perceptual, rather than purely by response, manipulations (Spence, Pavani, & Driver, 2004). Thus, the body of results produced with this paradigm remains informative with respect to the spatial coordination of tactile and visual perception.

Are multisensory zones spatially constrained?

The body of work investigating humans, cited above, has tended to investigate only one visual position on either side of a central fixation, with the posture manipulations tending to be an offset of the whole visual field to one side (Kennett et al., 2010; Macaluso, Driver, et al., 2005; Macaluso et al., 2002), a crossing of the arms (Kennett, Eimer, et al., 2001; Kennett et al., 2002; Spence et al., 2000; Spence, Pavani, & Driver, 2004), or a movement of one of the arms (di Pellegrino et al., 1997; Làdavas et al., 1998; Spence, Pavani, & Driver, 2004). Therefore, conclusions about the spatial specificity of interactions between the senses may be limited to the scale of a whole visual hemifield. Even the cited single-cell studies, which showed more restricted visual receptive fields surrounding body parts, have presented no data on the extent or the sharpness of the boundaries of these fields. Moreover, as we discussed above, these same studies showed that not all tactile–visual bimodal cells take the current posture into account (e.g., Graziano & Gross, 1994), and that even some of the cells that do show such modulation fail to keep the senses in strict spatial register (e.g., Graziano et al., 1994). Across populations of bimodal cells, visual receptive fields can also vary widely in size, from small fields close to the body to larger fields extending away from their respective tactile fields on the hand/arm (Graziano & Gross, 1994) or face (Duhamel, Colby, & Goldberg, 1998). It follows that the widely held view of tightly restricted zones of visual space attached to specific body parts may be a partly misleading model of tactile–visual interactions.

In the present article, we investigate the extent of visual space that is influenced by specific tactile events. By modifying a cueing paradigm previously used to reveal links in exogenous spatial attention between touch and vision (Kennett, Eimer, et al., 2001; Kennett et al., 2002; Spence et al., 1998), we employed eight possible visual locations following spatially nonpredictive tactile cues. Participants performed a speeded up/down judgment on visual targets that could appear at either of two widely separated eccentricities on either side of a central fixation (thus, four horizontally arrayed positions by two elevations). Tactile cues were presented to either the left or the right index finger, and, for each block of trials, the hands were placed in alignment with either the inner or the outer pair of visual eccentricities. If such tactile events lead to strictly spatially specific visual performance advantages for the cued side, visual cueing effects should be found only for the visual targets with which the hands are aligned. Conversely, if a cue to a hand placed within, say, the left visual field produces an advantage for visual targets within its whole hemifield, the change in hand alignment should leave cueing effects unaltered.

Experiment 1

In the present experiment, we sought to test whether a spatially uninformative tactile event to the left or the right hand would affect visual judgments in any of four lateral target positions. Visual targets were always equally likely to appear at either of two eccentricities in either hemifield (i.e., at any of four lateral target positions). Participants were required to direct their gaze centrally and to maintain this central fixation throughout. In half of the blocks, participants’ hands (and hence the possible positions of the tactile cues) were placed next to the two inner target positions, one on either side of fixation (see Fig. 1A). In the remaining blocks, the hands were placed farther apart, next to the two outer target positions, again one on either side of fixation (see Fig. 1B). At each of the four lateral target positions, a pair of lights was positioned, one placed slightly above the other. A visual target consisted of the illumination of a single light at either elevation in any of the four possible lateral target locations. Participants’ task was to discriminate as quickly as possible whether the upper or the lower light of a pair was illuminated, regardless of its lateral position. Participants’ arms were completely covered to ensure that sensory information about their current posture could come only from proprioception, not from vision.

If tactile–visual spatial exogenous links are the result of a hemifield-wide advantage on the same side as the irrelevant tactile cue, the pattern of spatial cueing effects found in the present experiment should not depend on whether the hands were positioned next to the inner or the outer two possible lateral target positions. However, if the cued-side advantage was the result of a more spatially specific mechanism giving an advantage for visual targets appearing close to the current location of the cued hand, the pattern of spatial cueing effects found here should depend crucially on the current posture. For example, the cued-side advantage for outer targets appearing on the same side as the preceding tactile cue, relative to targets on the opposite side, would be predicted to be larger when the hands were aligned with the outer two possible lateral target positions, as compared with when the hands were placed next to the inner positions (even though the tactile stimulation itself was unchanged by the hand location).

Method

Participants

A group of 24 naive healthy volunteers (15 female, nine male; 20 right-handed) 19–38 years of age (M = 23 years) were reimbursed £5 to participate in this study. All had normal or corrected-to-normal vision and normal touch, all by self-report.

Stimuli and apparatus

Figure 1 shows the experimental layout. Each participant sat at a table and used an adjustable chinrest. Dim lighting was sufficient to see the experimental array and the participant’s own arms. One tactile stimulator was attached to each index finger so that a small, blunt, metal rod could strike the medial surface of the middle phalanx (with the hand positioned palm-down on the tabletop). The sensation of a firm tap resulted from the metal rod (diameter: 1.5 mm) being propelled by a computer-activated 12-V solenoid. On activation, the rod struck an area of skin measuring 1.8 mm² with a momentum of approximately 3–4 g ⋅ m/s. The hands were placed on the tabletop, one to each side of the participant’s midline, at one of two possible eccentricities. In “inner-cues” blocks, the hands were placed so that tactile stimulation was about 390 mm from the participant’s eyes and 18º to the left or right of central fixation (see Fig. 1A). In “outer-cues” blocks, the hands were placed so that tactile stimulation was approximately 550 mm from the participant’s eyes and 53º to either side of fixation (see Fig. 1B).

Eight green, 5-mm light-emitting diodes (LEDs), arranged in four vertical pairs, served as the potential visual targets. One pair was placed next to each of the four possible tactile cue locations, with one LED above the tactile position and one below (i.e., the four LED pairs were placed at 18º and 53º to the left and right of fixation). These LEDs were 60 mm closer to the participant’s eyes than the stimulators, to leave a small space for the hands and apparatus. The inner pairs of LEDs were vertically separated by 7.5º, whereas the outer LED pairs were 11.5º apart vertically. This larger vertical separation for the more eccentric target pairs allowed participants to perform the elevation discrimination task (up vs. down for the visual targets; see the Procedure section) with sufficient ease. A black occluding sheet extended from behind the participant’s head to beyond their hands. The sheet was positioned immediately below the target lights and above the hands and their tactile stimulators.
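The stated angles and distances can be converted into approximate physical offsets with basic trigonometry. The sketch below is only an approximation under stated assumptions: the quoted distances are taken as straight-line distances from the eyes, each LED pair is assumed to straddle the line of sight symmetrically, and the LEDs are assumed to sit 60 mm nearer than the stimulators along that line (about 330 and 490 mm). The helper names are hypothetical.

```python
import math


def lateral_offset_mm(distance_mm: float, eccentricity_deg: float) -> float:
    """Left-right offset of a point lying `distance_mm` from the eyes,
    `eccentricity_deg` away from straight ahead (approximation)."""
    return distance_mm * math.sin(math.radians(eccentricity_deg))


def vertical_separation_mm(distance_mm: float, separation_deg: float) -> float:
    """Physical separation of two points subtending `separation_deg` at
    `distance_mm`, assuming they straddle the line of sight symmetrically."""
    return 2 * distance_mm * math.tan(math.radians(separation_deg) / 2)


# Tactile cue positions (distances and angles from the text).
for label, dist, ecc in [("inner cue", 390, 18), ("outer cue", 550, 53)]:
    print(f"{label}: ~{lateral_offset_mm(dist, ecc):.0f} mm from the midline")

# LED pairs, assumed 60 mm nearer than the stimulators (390 - 60, 550 - 60).
for label, dist, sep in [("inner LEDs", 330, 7.5), ("outer LEDs", 490, 11.5)]:
    print(f"{label}: ~{vertical_separation_mm(dist, sep):.0f} mm apart vertically")
```

Under these assumptions the inner and outer cue positions lie roughly 120 mm and 440 mm from the midline, which illustrates how far the hands moved within each hemifield between block types.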

Throughout the experimental blocks, participants were required to look straight ahead at a red 2-mm fixation LED, positioned 25º–35º below eye level (depending on the chinrest height). Right-eye position was monitored using a Skalar Iris 6500 infrared eyetracker, which was interfaced with the controlling computer via a 12-bit analog-to-digital converter to provide online feedback to both the experimenter and the participant. This device was recalibrated before each block to ensure an accurate signal. Error feedback to the participant (see the Procedure section) was provided by a yellow 5-mm LED placed immediately below fixation.

Each participant provided speeded responses by pressing a foot pedal placed either under the right heel or under the right toes. The participant’s right foot rested on a hinged pivot-board that could be tilted by flexing the foot to make these responses. Throughout the experiment, white noise was presented through whole-ear headphones (80 dB[A] to each ear) to mask any sounds made by the tactile stimuli or foot pedals.

A tactile cue consisted of three 50-ms taps, separated by 20 ms, to one or the other index finger (total duration: 190 ms). A visual target was the illumination of any one of the eight green LEDs for 100 ms. The central yellow feedback LED switched on for 350 ms at the end of any trial in which the response was incorrect, was made before target onset, or was too slow (i.e., not made within 2,000 ms of target onset). Horizontal eye-position deviations greater than ±3º, and blinks, were signaled at the end of the trial, after any response-error feedback, by the feedback LED flashing four times (50 ms on, 50 ms off).

Procedure

One practice block of 50 trials was followed by eight experimental blocks of 96 trials. Illumination of the fixation light started each trial. After a variable delay (380–580 ms), a tactile cue was presented (lasting 190 ms) with equal likelihood to either the left or the right index finger. Following an interstimulus interval (equiprobably 10 or 160 ms), a single visual target was presented (making the stimulus onset asynchrony [SOA] either 200 or 350 ms). This visual target could unpredictably appear in any of the eight possible locations, rendering tactile cues spatially nonpredictive. Thus, cues and targets were presented in the same hemifield on half of the trials and in opposite hemifields on the remainder. A target appeared at the specific lateral position of the cue on a quarter of trials. Participants were instructed to provide a speeded judgment of the visual target’s elevation (up vs. down) by pressing the toe pedal for an upper light or the heel pedal for a lower light. Importantly, the response dimension (up vs. down) was entirely orthogonal to the dimension cued (left vs. right; cf. Spence & Driver, 1994, 1996). Note also that the cue provided no information about the subsequent target location or response type. Participants were informed of this and instructed simply to ignore the tactile cues. The fixation light was extinguished, and any error feedback was given, as soon as a pedal response was recorded or if no response was made within 2,000 ms of target onset. After a 400-ms intertrial interval, the next trial began with the illumination of the fixation light.
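The trial timing and the nonpredictive cue–target design can be sketched as a block builder. This is a hypothetical illustration, not the software used in the study: it assumes that each 96-trial block fully crosses cue side (2), target location (2 sides × 2 eccentricities × 2 elevations), and ISI (2), giving 32 cells repeated three times, whereas the text states only that target locations were unpredictable. The SOA is simply the 190-ms cue duration plus the ISI.

```python
import itertools
import random
from dataclasses import dataclass

# Cue: three 50-ms taps separated by two 20-ms gaps.
CUE_DURATION_MS = 3 * 50 + 2 * 20   # 190 ms
ISIS_MS = (10, 160)                 # equiprobable interstimulus intervals


@dataclass(frozen=True)
class Trial:
    cue_side: str      # "left" or "right" index finger
    target_side: str   # hemifield of the visual target
    target_ecc: str    # "inner" or "outer" lateral position
    target_elev: str   # "up" (toe pedal) or "down" (heel pedal)
    soa_ms: int        # cue onset to target onset = cue duration + ISI


def make_block(rng: random.Random, reps: int = 3) -> list[Trial]:
    """Hypothetical builder: 2 cue sides x 8 target locations x 2 ISIs
    = 32 cells, each repeated `reps` times (3 x 32 = 96), then shuffled,
    so the cue predicts neither target location nor response."""
    sides = ("left", "right")
    cells = itertools.product(sides, sides, ("inner", "outer"),
                              ("up", "down"), ISIS_MS)
    trials = [Trial(cue, side, ecc, elev, CUE_DURATION_MS + isi)
              for cue, side, ecc, elev, isi in cells] * reps
    rng.shuffle(trials)
    return trials


block = make_block(random.Random(1))
same_side = sum(t.cue_side == t.target_side for t in block) / len(block)
print(len(block), sorted({t.soa_ms for t in block}), same_side)  # 96 [200, 350] 0.5
```

Under this assumed crossing, exactly half of the trials place cue and target in the same hemifield, and a quarter place the target at the cued hand’s lateral position (same side, at the eccentricity the hands are aligned with), matching the proportions stated above.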

Hand position was changed after each experimental block. Hands were placed at the inner two cue locations, aligned with the inner two pairs of target lights, in half of the experimental blocks and at the outer two cue locations in the remainder. The hand alignment for the first block was counterbalanced across participants and was also used for the practice block.
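The alternating-alignment schedule can be expressed in a few lines. The specific counterbalancing rule used here (even-numbered participants start with the inner alignment, odd-numbered participants with the outer) is an assumption for illustration, as is the function name.

```python
def alignment_schedule(participant: int, n_blocks: int = 8) -> list[str]:
    """Hypothetical counterbalancing: hand alignment alternates after every
    block; even-numbered participants start "inner", odd-numbered "outer".
    Element 0 is also the alignment used for the practice block."""
    first, second = (("inner", "outer") if participant % 2 == 0
                     else ("outer", "inner"))
    return [first if b % 2 == 0 else second for b in range(n_blocks)]


print(alignment_schedule(0))
print(alignment_schedule(1))
```

With eight blocks, each participant receives four blocks in each alignment regardless of starting position.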

Results

Responses that were too fast or too slow (i.e., outside the range of 100–2,000 ms after target onset) were excluded (2.8 % of all trials). Trials were also excluded if eye-position recordings were beyond ±3º from fixation (a further 5.9 % of all trials). Data were then collapsed across target elevation (up vs. down). Response errors in the up/down visual judgments were recorded as a percentage of the remaining trials for each condition, and error trials were discarded from the reaction time (RT) calculations. Median RTs for each condition and participant were calculated. Initial analyses included target side as a factor. However, no significant effects involving this factor were found in this experiment or in either subsequent experiment. Consequently, the data were collapsed across target sides (left vs. right), and the resulting analyses are presented below.
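The exclusion and summary steps can be sketched for one participant in one condition. This is a minimal sketch under assumptions: the `TrialRecord` fields are hypothetical, blinks are not modeled separately (they would need to be flagged as eye-position exclusions), and pooling trials across target elevation before calling the function implements the collapse described in the text.

```python
from statistics import median
from typing import NamedTuple, Optional


class TrialRecord(NamedTuple):
    rt_ms: Optional[float]   # None if no response within 2,000 ms
    correct: bool            # was the up/down judgment correct?
    eye_dev_deg: float       # largest horizontal deviation from fixation


def summarize_condition(trials):
    """Return (median RT of correct trials, error % of retained trials)
    for one participant in one condition, pooled over target elevation."""
    kept = [t for t in trials
            if t.rt_ms is not None and 100 <= t.rt_ms <= 2000  # RT window
            and abs(t.eye_dev_deg) <= 3.0]                      # fixation window
    if not kept:
        raise ValueError("no retained trials in this condition")
    correct_rts = [t.rt_ms for t in kept if t.correct]
    error_pct = 100.0 * (len(kept) - len(correct_rts)) / len(kept)
    # median() raises StatisticsError if every retained trial was an error.
    return median(correct_rts), error_pct


example = [TrialRecord(450, True, 0.5), TrialRecord(500, True, 1.0),
           TrialRecord(520, False, 0.2), TrialRecord(90, True, 0.0),
           TrialRecord(None, False, 0.0), TrialRecord(480, True, 4.0)]
print(summarize_condition(example))
```

In this invented example, three of the six trials survive exclusion (one anticipation, one miss, and one fixation break are dropped), and one of the three retained trials is an error.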

The interparticipant means of the median RTs, with the respective error rates, are shown in Table 1 (top section), along with those from the other experiments in this article. Given that better performance in this choice RT experiment can be reflected in faster or more accurate responses, or some mixture of both, a combined measure was used: the inverse efficiency measure suggested by Townsend and Ashby (1978, 1983) and used by previous authors (Akhtar & Enns, 1989; Christie & Klein, 1995; Kennett, Eimer, et al., 2001; Murphy & Klein, 1998). Inverse efficiency was calculated by dividing the median RT by the accuracy rate (proportion of correct responses) for each participant and each condition. The interparticipant means of these values are shown in Table 1 (top). In this article, we present analyses of inverse efficiency data throughout. However, the findings are closely mirrored by those that arose from separate analyses of the RT and error data.
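For a single condition, inverse efficiency reduces to one division. In this minimal sketch, accuracy is taken as the proportion of correct responses among the retained trials, so an error rate given in percent is converted first; the function name is ours, not the authors’.

```python
def inverse_efficiency(median_rt_ms: float, error_pct: float) -> float:
    """Median correct RT divided by proportion correct (Townsend & Ashby).
    Result is in RT units (ms); larger values mean less efficient performance."""
    accuracy = 1.0 - error_pct / 100.0
    if accuracy <= 0.0:
        raise ValueError("inverse efficiency is undefined at 0% accuracy")
    return median_rt_ms / accuracy


print(inverse_efficiency(450.0, 10.0))
```

For example, a median RT of 450 ms with 10 % errors gives an inverse efficiency of about 500 ms, so an accuracy cost is expressed as an equivalent slowing in RT units.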

Tactile–visual exogenous spatial cueing would be reflected by better performance when the visual targets were on the same side of space as the tactile cues. Moreover, if the exogenous tactile cues operated in a spatially specific way, we would expect the cued-side advantage for specific target locations to be largest when the hands were aligned with those targets. Figure 2A plots the cueing effects (the cued-side performance advantage, calculated by subtracting inverse efficiency for cued-side targets from that for targets on the uncued side) for each combination of target eccentricity and hand alignment. In a four-way within-participants analysis of variance (ANOVA) on the inverse efficiency data, we investigated the factors Cue Side (target ipsilateral vs. contralateral to cue), Hand Alignment (inner [18º] vs. outer [53º]), Target Eccentricity (inner [18º] vs. outer [53º]), and SOA (200 vs. 350 ms). The terms involving the factor Cue Side were the main focus of this study. Among those, only the main effect of cue side was significant [F(1, 23) = 21.9, p < .001], as performance was better overall for cued-side targets (M = 452 ms) than for targets contralateral to the cue (M = 466 ms). The key Cue Side × Hand Alignment × Target Eccentricity interaction very narrowly missed significance [F(1, 23) = 4.3, p = .0502]. Planned t tests treating each target eccentricity separately showed that cueing effects did not depend on whether the hands were aligned with the targets [inner targets, t(23) = 1.3, p = .2; outer targets, t(23) = 1.3, p = .2].
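The cueing effect and the associated t tests can be sketched as follows, assuming one inverse-efficiency score per participant for the cued and uncued sides of a given condition. Because Cue Side has only two levels and is manipulated within participants, its ANOVA F(1, n − 1) equals the square of this one-sample t on the per-participant cueing effects (averaged over the remaining factors). The helper names and the numbers in the usage lines are invented for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def cueing_effects(uncued_ie, cued_ie):
    """Per-participant cueing effect: uncued-side minus cued-side inverse
    efficiency (positive = advantage for targets on the cued side)."""
    if len(uncued_ie) != len(cued_ie):
        raise ValueError("need one cued and one uncued score per participant")
    return [u - c for u, c in zip(uncued_ie, cued_ie)]


def one_sample_t(diffs):
    """t (df = n - 1) testing whether the mean difference is zero; this is
    the same statistic as a paired t test of uncued vs. cued scores."""
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


# Invented inverse-efficiency scores (ms) for four hypothetical participants.
effects = cueing_effects([470, 480, 460, 455], [450, 470, 455, 440])
t, df = one_sample_t(effects)
print(effects, f"t({df}) = {t:.2f}, F(1, {df}) = {t * t:.2f}")
```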

Fig. 2 Interparticipant mean cueing effects of inverse efficiency scores for (A) Experiment 1, (B) Experiment 2, and (C) Experiment 3. Each bar represents one of the four conditions made by crossing target eccentricity with hand alignment. Error bars show within-participants 95 % confidence intervals for each respective cueing effect.
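One common way to obtain a within-participants 95 % confidence interval for a cueing effect is to build it from the per-participant difference scores, which removes between-participant differences in overall speed. The sketch assumes this approach (the exact method behind the figure is not specified here) and hard-codes the two-tailed critical t for df = 23, appropriate for 24 participants; other sample sizes need a different critical value.

```python
from math import sqrt
from statistics import mean, stdev

# Two-tailed .05 critical t for df = 23 (24 participants), from standard tables.
T_CRIT_DF23 = 2.069


def cueing_effect_ci(diffs, t_crit=T_CRIT_DF23):
    """95% CI for the mean of per-participant cueing effects. Built from
    difference scores, so between-participant speed differences cancel.
    With the matching t_crit, the CI excludes 0 when (up to rounding of
    t_crit) a two-tailed one-sample t test on the same scores is significant."""
    m = mean(diffs)
    half_width = t_crit * stdev(diffs) / sqrt(len(diffs))
    return m - half_width, m + half_width


print(cueing_effect_ci([10, 20] * 12))
```

On this reading, a bar whose interval excludes zero corresponds to a cueing effect that is reliable in the one-sample test.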

Other significant terms, not involving spatial cueing, included the main effect of SOA [F(1, 23) = 14.0, p = .001], due to a long-SOA advantage, and the main effect of target eccentricity [F(1, 23) = 148.3, p < .001], due to a clear advantage for inner targets. The Hand Alignment × Target Eccentricity × SOA interaction was also significant [F(1, 23) = 4.4, p = .048]. No other terms were significant (all other terms, Fs < 2.8, ps > .10).

Spatially nonpredictive tactile cues led to systematic performance benefits for closely following visual targets on the same side. However, no robust evidence was shown here for a modulation of cueing effects by changes in alignment between cues and targets. These results are consistent with a hemifield-wide model of exogenous spatial cueing.

Discussion

Spatially nonpredictive tactile stimulation of one hand led to reliable performance advantages for visual events within the same visual hemifield. This confirms the standard finding of tactile–visual links in exogenous spatial attention (Kennett, Eimer, et al., 2001; Kennett et al., 2002; Spence et al., 1998). This ipsilateral performance advantage for either target eccentricity was not reliably affected by whether the hands were aligned with the inner or the outer targets. Therefore, these results are inconsistent with the prevailing view that vision and touch are coordinated in a spatially precise way. In particular, information about the current posture does not seem to influence tactile–visual links in exogenous spatial attention, at least when posture is signaled by proprioception alone.

The present experiment completely prevented participants from viewing their current posture, to ensure that only proprioception was used to sense current posture. This restriction has not previously prevented the crossing of the hands from influencing tactile–visual exogenous cueing effects (Kennett et al., 2002) or tactile–visual congruence effects (Spence, Pavani, & Driver, 2004). However, in those studies, posture changes moved tactile stimuli from one visual hemifield to the other, unlike here, where posture changes did not cross the body midline. A view of the arms has been suggested to be crucial for tactile–visual interactions (see also Kennett, Taylor-Clarke, & Haggard, 2001; Làdavas, Farnè, Zeloni, & di Pellegrino, 2000; Sambo, Gillmeister, & Forster, 2009) within the context of crossmodal extinction following unilateral brain injury (though see also Kennett et al., 2010, for a contrary view). Consequently, a replication of the present experiment that allowed participants to view their arms might reveal how important this extra sensory input is in defining the spatial specificity of tactile–visual interactions.

Experiment 2

This study sought to test whether the lack of postural influences on exogenous cueing effects found in Experiment 1 would be replicated even when the hands and arms were in plain view. If tactile cue location is precisely coded only when proprioception and vision together provide sensory information about the current posture, spatially specific tactile–visual links might be revealed here; in that case, cueing effects should depend on the current hand alignment. However, if tactile–visual links in exogenous spatial attention are not spatially precise, contrary to the dominant notion described in the Introduction, the extra visual information should not change the pattern found in Experiment 1, and changing posture should not influence crossmodal spatial cueing effects.

Method

Participants

A group of 24 new, naive, healthy volunteers (16 female, eight male; 20 right-handed) 19–33 years of age (M = 26 years) were reimbursed £5 to participate in this study. All had normal or corrected-to-normal vision and normal touch, by self-report.

Stimuli, apparatus, and procedure

All stimulus, layout, and procedural details were exactly as in Experiment 1 (see Fig. 1), with one exception: The occluding sheet was removed, so that participants could now see their own hands and arms. Only participants’ index fingers remained hidden, to avoid any possibility that the tactile cues had a visual component.

Results

Data processing and analysis were exactly as in Experiment 1. A total of 3.6 % of all trials were excluded for being outside acceptable time limits and a further 6.1 % due to eye-position errors. Table 1 (middle section) shows interparticipant means of the RT, error, and inverse-efficiency data for each condition. Figure 2B plots the cued-side advantage for each combination of target eccentricity and hand alignment. As before, a four-way within-participants ANOVA with the factors Cue Side (2), Hand Alignment (2), Target Eccentricity (2), and SOA (2) was performed on the inverse efficiency data. The three-way Cue Side × Hand Alignment × Target Eccentricity interaction was significant [F(1, 23) = 9.6, p = .005]. This term subsumed a significant main effect of cue side [F(1, 23) = 13.8, p = .001], a significant Cue Side × Hand Alignment interaction [F(1, 23) = 9.7, p = .005], and a significant Hand Alignment × Target Eccentricity interaction [F(1, 23) = 6.9, p = .01]. Planned t tests explored the cueing effects for each target eccentricity and hand alignment. These revealed that the significant ANOVA terms above were due to outer-target cueing effects being significantly larger when hands were aligned with targets [t(23) = 3.6, p = .002], whereas inner-target cueing effects did not reliably differ between hand alignments [t(23) = 0.4]. The within-participants error bars in Fig. 2B show the reliability of cueing effects for each target eccentricity at each hand alignment. Specifically, cueing effects were significant in the outer posture for both target eccentricities [inner targets, t(23) = 2.6, p = .02; outer targets, t(23) = 4.0, p < .001], but were not significant in the inner posture for either target eccentricity [inner targets, t(23) = 1.7, p = .1; outer targets, t(23) = 1.4, p = .2].
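A 1-df within-participants interaction such as Cue Side × Hand Alignment × Target Eccentricity can be reduced to one contrast score per participant (a difference of differences between cueing effects), and its F(1, n − 1) equals the squared one-sample t on those scores. The sketch assumes cueing effects already averaged over SOA and stored in a dictionary keyed by (target eccentricity, hand alignment); the names and example values are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def interaction_contrast(ce):
    """`ce` maps (target_ecc, hand_alignment) -> one participant's cueing
    effect, averaged over SOA. Returns the difference of differences tested
    by the Cue Side x Hand Alignment x Target Eccentricity term."""
    outer_targets = ce[("outer", "outer")] - ce[("outer", "inner")]
    inner_targets = ce[("inner", "outer")] - ce[("inner", "inner")]
    return outer_targets - inner_targets


def f_from_contrasts(contrasts):
    """F(1, n - 1) for a 1-df within-participants effect: the squared
    one-sample t of the per-participant contrast scores against zero."""
    n = len(contrasts)
    t = mean(contrasts) / (stdev(contrasts) / sqrt(n))
    return t * t, (1, n - 1)


example = {("outer", "outer"): 30, ("outer", "inner"): 10,
           ("inner", "outer"): 12, ("inner", "inner"): 8}
print(interaction_contrast(example))
```

The planned comparison for outer targets corresponds to testing only the `outer_targets` difference against zero in the same way.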

As in Experiment 1, the main effects of SOA [F(1, 23) = 23.1, p < .001] and target eccentricity [F(1, 23) = 108.8, p < .001] were significant. Once more, these effects reflect performance advantages for the long SOA and the inner targets, respectively. The Target Eccentricity × SOA interaction was also significant [F(1, 23) = 7.3, p = .01]. No other terms were significant, including the Cue Side × SOA interaction [F(1, 23) = 4.0, p = .06; all other terms, Fs < 2.3, ps > .14].

As in Experiment 1, spatially nonpredictive tactile cues generally led to cued-side performance advantages for closely following visual targets. Unlike Experiment 1, however, the present experiment provides clear evidence that the alignment of the hands, and hence of the tactile cues, with the visual targets can affect cueing effects. In particular, outer targets were cued only when the hands, and therefore the cues, were aligned with them. The effect of hand alignment on the spatial cueing of inner targets was unreliable.

Comparing Experiment 1 with Experiment 2

A direct statistical comparison of the two experiments was performed to determine whether the apparent differences between their findings were reliable. Note that, although only Experiment 2 showed a significant Cue Side × Hand Alignment × Target Eccentricity interaction in the ANOVA, this term only narrowly missed significance in Experiment 1. Moreover, in both experiments outer targets were reliably cued only when the hands were aligned with them.

A five-way mixed ANOVA was performed on the inverse-efficiency data from Experiments 1 and 2 to compare them directly. The four within-participants factors were Cue Side (2), Hand Alignment (2), Target Eccentricity (2), and SOA (2). The single between-participants factor was Experiment, corresponding to a manipulation of arm view (i.e., Exp. 1 vs. Exp. 2, or arms occluded vs. arms visible). The only significant term involving both spatial cueing and the Experiment factor was the three-way Cue Side × Hand Alignment × Experiment interaction [F(1, 46) = 5.0, p = .03]. This interaction reflects the fact that only in Experiment 2 was cueing larger when the hands were in the outer rather than the inner position, regardless of target eccentricity. The only other significant term involving Experiment was the Target Eccentricity × Hand Alignment × Experiment interaction [F(1, 46) = 6.0, p = .02]. Because this term does not involve Cue Side, it is not directly relevant to exogenous spatial cueing. Nonetheless, it reflects the fact that for outer targets only, and only in Experiment 2 (hands visible), the outer-hand alignment led to more efficient performance than the inner-hand alignment, regardless of cue side. All other terms involving Experiment were nonsignificant [Fs < 2.0, ps > .16]. Several terms that did not differ between experiments were also significant, including the important three-way Cue Side × Hand Alignment × Target Eccentricity interaction [F(1, 46) = 13.8, p < .001]. This term was the crucial finding in Experiment 2, reflecting the fact that cueing effects for outer targets depended on hand alignment. Because this term did not itself interact with Experiment, the analysis provides no evidence of a robust difference between the experiments in how the alignment of cues and targets affected cueing effects.
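The error degrees of freedom in this mixed ANOVA follow directly from the design. As a worked check, assume 24 participants per experiment, as implied by the F(1, 23) tests within Experiment 2 and the 46 error df reported here. Every term built from two-level within-participants factors, including its interaction with Experiment, is then tested against the participants-within-groups error term:

$$
df_{\text{effect}} = (g - 1)^{e}\prod_{j}(k_j - 1) = 1, \qquad
df_{\text{error}} = (N - g)\prod_{j}(k_j - 1) = (48 - 2)\times 1 = 46,
$$

where N is the total number of participants, g = 2 is the number of experiments, e is 1 if the term includes Experiment and 0 otherwise, and the k_j (all equal to 2) are the numbers of levels of the within-participants factors in the term.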

The remaining significant terms were either subsumed by the previously mentioned factors or did not include the Cue Side factor. These were the main effects of cue side, target eccentricity, and SOA, as well as the Target Eccentricity × SOA and Cue Side × Hand Alignment interactions [all terms, Fs > 6.1, ps < .02]. No other terms were significant [for all terms, Fs < 3.8, ps > .06].

Discussion

This experiment extended Experiment 1 by allowing a clear view of the arms during the same tactile–visual exogenous cueing paradigm. Significant cueing effects for visual targets on the same versus the opposite side as a spatially uninformative tactile cue were again found. However, unlike in Experiment 1, these cueing effects reliably depended on the current posture (at least for the outer visual targets). This might appear to suggest that when vision cannot inform the system of the current arm position (Exp. 1), tactile–visual exogenous cueing operates via a hemifield-wide mechanism, whereas when vision adds to proprioception in sensing arm position (Exp. 2), tactile–visual exogenous cueing operates in a spatially specific way.

Before settling on this two-mechanism account, we consider the roles of proprioception and vision in localizing our hands. Behavioral experiments have shown that we reconstruct the location of the hands and fingers by combining proprioceptive and (where available) visual information (Plooy, Tresilian, Mon-Williams, & Wann, 1998; van Beers, Sittig, & Denier van der Gon, 1999), and single cells in monkey premotor cortex have been found to combine visual and proprioceptive information about the location of the arm (Graziano, 1999). This combination of visual and proprioceptive information was available to participants in Experiment 2, whereas only proprioception was available to localize the hands in Experiment 1. The present results suggest that proprioception alone was not sufficient to encode the posture change. If this was because the posture manipulation was dwarfed by the error in the coded location of the tactile cue, a larger posture manipulation might reveal posture effects even when posture is sensed only by proprioception. The final experiment tests this suggestion by covering the arms, as in the first experiment, but using a much larger posture manipulation between blocks.

Experiment 3

Experiment 1 found no evidence that proprioception alone was sufficient to allow posture changes to modulate tactile–visual spatial cueing. This might be due to the degraded ability to localize the hand in space when it is unseen. If the perceptual system is to remap a given somatotopic signal to a different external spatial location according to the current position of the stimulated skin, it must have accurate information about the location of the body part. Finger localization accuracy depends on both visual and proprioceptive information (Plooy et al., 1998; van Beers et al., 1999). Furthermore, in the absence of vision, proprioceptive information becomes increasingly inaccurate once a supported limb becomes static (as in the present experiments), and the perceived limb position can even drift toward the body over time (Wann & Ibrahim, 1992). In Experiment 1, information about current arm position was provided by proprioception only, whereas in Experiment 2 it was provided by both proprioception and vision. Even if the proprioceptive system had been allowed to operate optimally (e.g., through frequent arm movements, which were not allowed in the present experiments), localization of the arm and hand should have been somewhat less accurate in Experiment 1 than in Experiment 2. This likely difference would have been accentuated in the present experimental context, since the arms were held static for approximately 1 min before the start of each block and for over 3.5 min by the end of a block, probably reducing the precision of proprioceptive information (Wann & Ibrahim, 1992). It would therefore be reasonable to expect any effects of posture change on tactile–visual cueing to be somewhat larger in Experiment 2, in which a view of the arms was allowed, than in Experiment 1.
The postural manipulation employed in Experiment 1 might have been rather small relative to the accuracy of proprioceptive localization of the unseen static arm, whereas the same postural manipulation in Experiment 2 could have been large enough given the superior arm localization. If a larger postural manipulation were employed in a study otherwise identical to Experiment 1, then evidence for an effect of unseen hand posture on tactile–visual links in exogenous covert spatial attention might be found. Accordingly, in the present experiment we changed the two possible cue (and target) eccentricities from the values of 17.5º and 52.5º used in Experiments 1 and 2 to 10º and 86º, respectively, moving the inner locations farther inward and the outer locations farther outward. With this postural manipulation, the hands were moved through 76º (rather than 35º) between the inner and outer postures (see Fig. 3). It was hoped that if any relationship exists between unseen posture and exogenous links in spatial attention between touch and vision, this extreme posture manipulation would be sensitive enough to measure it reliably.

Schematic diagram showing the setup used in Experiment 3. The inner hand alignment is depicted by solid lines, and the outer hand alignment by dotted lines.

If covered arms, and posture sensed only via proprioception, lead to a qualitatively different mechanism for tactile–visual links in exogenous spatial attention, such as hemifield-wide cueing, this larger posture manipulation should not change the pattern of cueing effects. However, if the previous posture manipulation was too small to reveal effects of unseen hand and target alignment, the much larger manipulation should now lead to spatial cueing effects that do depend on the relative external spatial position of tactile cues and visual targets.

Method

Participants

A group of 29 new, naive, healthy volunteers were reimbursed £5 to participate in this study. Seven of these were excluded from the analysis: Two completed fewer than two of the eight experimental blocks, and a further five responded accurately on fewer than 25 trials (out of a possible 48) in at least one condition. The same criteria would have led to exclusion had they applied to any participant in the previous experiments. The exclusion rate was high in the present experiment for two reasons: The extreme eccentricity of the outer targets, compared with the previous two studies, resulted in more choice-response errors, and the stricter eye-position criterion increased the number of rejected trials (see the Results section below). The remaining 22 participants (11 female, 11 male; 21 right-handed) were 17–34 years of age (M = 24 years). All reported normal or corrected-to-normal vision and normal touch.
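The participant-level exclusion rules can be expressed as a small filter. In this minimal sketch, the thresholds come from the criteria stated in the text, while the `Participant` record, the condition labels, and the function names are hypothetical illustrations rather than the authors' procedure.

```python
from dataclasses import dataclass, field

# Thresholds mirror the criteria stated in the text; names are hypothetical.
MIN_BLOCKS_COMPLETED = 2         # of 8 experimental blocks
MIN_CORRECT_PER_CONDITION = 25   # of a possible 48 trials per condition


@dataclass
class Participant:
    pid: str
    blocks_completed: int
    # Hypothetical mapping: condition label -> number of accurate trials.
    correct_per_condition: dict = field(default_factory=dict)


def exclusion_reason(p: Participant):
    """Return a reason string if the participant should be excluded, else None."""
    if p.blocks_completed < MIN_BLOCKS_COMPLETED:
        return "completed fewer than two blocks"
    if any(n < MIN_CORRECT_PER_CONDITION for n in p.correct_per_condition.values()):
        return "fewer than 25 accurate trials in at least one condition"
    return None


def split_sample(participants):
    """Partition participants into (kept, excluded) lists."""
    kept, excluded = [], []
    for p in participants:
        (excluded if exclusion_reason(p) else kept).append(p)
    return kept, excluded


# Usage with made-up participants:
sample = [
    Participant("p01", 8, {"inner/cued": 44, "outer/uncued": 30}),
    Participant("p02", 1, {"inner/cued": 10}),
    Participant("p03", 8, {"inner/cued": 45, "outer/uncued": 24}),
]
kept, excluded = split_sample(sample)
print([p.pid for p in kept], [p.pid for p in excluded])  # ['p01'] ['p02', 'p03']
```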

Stimuli, apparatus, and procedure

The experimental layout is shown in Fig. 3. Most stimulus, layout, and procedural details were as in Experiment 1. As in that experiment, the hands were occluded by a sheet. The only substantive modifications were the positioning of the target lights and the corresponding placement of the hands, and with them the tactile cues. Now the hands were placed such that the tactile cues occurred either 10º or a full 86º to either side of the center (about 530 or 680 mm from the participant's eyes, respectively). Again, the four vertical pairs of green target LEDs were placed next to the four possible cue locations, at the same visual angle but 50 mm closer to the participants' eyes. Thus, these were now 10º or 86º to the left and right of central fixation. Because the inner target positions were now closer to the central fixation point, a stricter eye-position criterion for trial rejection was applied (2.5º vs. 3.0º; see the Results). The LEDs within pairs were vertically separated by either 4.8º (inner targets) or 11.7º (outer targets) of visual angle. The larger vertical separation for the more eccentric target pairs was intended to keep the elevation discrimination task feasible. Nevertheless, this visual task remained difficult at a retinal eccentricity of 86º, as shown by the higher error rates and slower RTs for these targets.
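As a rough arithmetic check on this layout, the sketch below converts each angle and viewing distance into approximate physical positions and LED spacings. It assumes that distances are measured from a point midway between the eyes, that each LED pair sits 50 mm nearer than its cue (about 480 and 630 mm), and that each vertical separation is centered on the line of sight. The resulting millimetre values are illustrative and are not reported in the text.

```python
import math


def position_mm(eccentricity_deg, distance_mm):
    """Lateral (x) and straight-ahead (y) offsets, in mm, of a point at the
    given horizontal eccentricity and straight-line distance from the eyes."""
    theta = math.radians(eccentricity_deg)
    return distance_mm * math.sin(theta), distance_mm * math.cos(theta)


def separation_mm(angle_deg, distance_mm):
    """Physical extent subtending angle_deg at distance_mm, assuming the
    extent is centered on (and perpendicular to) the line of sight."""
    return 2 * distance_mm * math.tan(math.radians(angle_deg) / 2)


# Cue distances from the text; target distances assume "50 mm closer".
layout = {"inner": (10.0, 530.0, 4.8), "outer": (86.0, 680.0, 11.7)}
for name, (ecc, cue_dist, vert_sep) in layout.items():
    target_dist = cue_dist - 50.0
    x, y = position_mm(ecc, cue_dist)
    print(f"{name}: cue x={x:.0f} mm, y={y:.0f} mm; "
          f"LED pair spacing ~{separation_mm(vert_sep, target_dist):.0f} mm")

# Angular sweep of the hands between postures in each experiment.
print("Exps. 1-2:", 52.5 - 17.5, "deg; Exp. 3:", 86.0 - 10.0, "deg")
```

Under these assumptions, the outer cues in Experiment 3 lie almost directly lateral to the eyes, with only about 47 mm of forward offset.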

Central fixation was now provided by a yellow LED and error feedback by a red LED. Participants now continuously depressed both foot pedals by resting their right foot on them. Responses were the speeded release of one of the pedals (the toe pedal for upper targets, the heel pedal for lower targets).

Results

The data processing and analysis were almost exactly as in Experiments 1 and 2; the only change was the stricter eye-position criterion. A total of 0.5 % of all trials were excluded for being outside acceptable time limits, and a further 13.2 % because eye position deviated by more than ±2.5º. Table 1 (bottom) shows interparticipant means of the RT, error, and inverse-efficiency data for each condition. Figure 2C plots the cued-side advantage for each combination of target eccentricity and hand alignment. As before, a four-way within-participants ANOVA with the factors Cue Side (2), Hand Alignment (2), Target Eccentricity (2), and SOA (2) was performed on the inverse-efficiency data. The key Cue Side × Hand Alignment × Target Eccentricity interaction was now significant [F(1, 21) = 5.1, p = .04]. As in Experiment 2, this term subsumed a significant main effect of cue side [F(1, 21) = 9.3, p = .006], a significant Cue Side × Hand Alignment interaction [F(1, 21) = 7.9, p = .01], and a significant Hand Alignment × Target Eccentricity interaction [F(1, 21) = 6.8, p = .02]. Planned t tests were again employed to explore the cueing effects for each target eccentricity and hand alignment. These showed that the cueing effects for outer targets were significantly larger when hands were aligned with targets [t(21) = 2.9, p = .01], whereas inner-target cueing effects were not significantly altered by hand alignment [t(21) = 1.1, p = .3]. Cueing effects for specific combinations of target eccentricity and hand alignment were only significant for outer targets with hands aligned [see the error bars in Fig. 2C; inner targets, inner hands, t(21) = 0.7; inner targets, outer hands, t(21) = 1.9, p = .07; outer targets, inner hands, t(21) = 0.5; outer targets, outer hands, t(21) = 4.7, p < .001].
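The planned comparisons are paired t tests across participants, so with 22 participants they have 21 degrees of freedom. The stdlib-only sketch below computes the statistic. The per-participant values (e.g., outer-target cueing effects with the hands outer vs. inner) are made up, and the function names are hypothetical; this is not the authors' analysis code.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for a - b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    if se == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / se, n - 1


def one_sample_t(values):
    """Test of a set of per-participant cueing effects against zero."""
    return paired_t(values, [0.0] * len(values))


# Usage with made-up cueing effects (ms) for four participants:
outer_hands = [40.0, 55.0, 30.0, 62.0]
inner_hands = [10.0, 20.0, 25.0, 12.0]
print(paired_t(outer_hands, inner_hands))
print(one_sample_t(outer_hands))
```

The single-cell tests marked by the error bars in Fig. 2C correspond to `one_sample_t`, and the comparisons between hand alignments correspond to `paired_t`.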

As in Experiments 1 and 2, the main effect of target eccentricity was significant [F(1, 21) = 78.2, p < .001], owing to the clear advantage for less retinally peripheral targets. As in Experiment 2, the Hand Alignment × Target Eccentricity interaction was significant [F(1, 21) = 6.8, p = .02]. No other terms were significant [for all other terms, Fs < 3.6, ps > .07].

Here, spatially nonpredictive tactile cues led to cued-side performance advantages for closely following visual targets only when the hands and cues were placed in the outer position. This provides evidence that hand and tactile cue position can affect exogenous cueing effects even when sight of that position is prevented. In particular, the outer targets in the present experiment were cued only when the hands, and therefore the cues, were aligned with them.

Discussion

In this experiment, the spatial cueing effects of spatially nonpredictive tactile events on speeded visual judgments of outer targets were modulated by unseen changes in hand position. The general pattern of results found here closely agrees with that of Experiment 2. In that experiment, participants could see their arms, but the posture manipulation was smaller than here, and the aligned target eccentricities differed correspondingly. In this experiment, no visual information about the position of the arm was available, implicating proprioception in signaling the current hand posture. Such results argue strongly that crossmodal integration of touch with proprioception allowed the tactile cue's current location in external space to be coded before the cue exerted its spatially mapped crossmodal influence on visual judgments.

General discussion

In a series of three experiments, we examined the degree to which exogenous tactile cueing of visual covert spatial attention is specific to regions near the current location of the cued hand. Within experiments, the alignment of tactile cues and visual targets was manipulated by changing posture between blocks. Across experiments, the view of the arms and the size of the posture manipulation were varied to explore whether viewing the arms qualitatively changed these tactile–visual interactions. In confirmation of previous work (Kennett, Eimer, et al., 2001; Kennett et al., 2002; Spence et al., 1998), across all three experiments we found that, in general, spatially nonpredictive tactile cues led to better performance in the speeded visual up–down discrimination task when the visual stimuli and tactile cues were on the same side of space rather than on opposite sides. Importantly, we have also demonstrated for the first time that tactile spatial cueing for visual stimuli on the same side of space is not restricted to a small region surrounding the cued finger or hand, whether or not that hand can be seen. Experiment 2 showed that, when the arms were in view, ipsilateral cues provided an advantage for visual targets as much as 35º away (i.e., inner targets were cued when the hands were placed at the outer position). Similarly, in Experiment 1 (again with a 35º posture manipulation, but now with the arms covered), posture had no reliable effect on the cueing effects for either target eccentricity. Experiment 3 did not reveal significant cueing of inner targets by outer cues. However, it could be argued that the planned two-tailed t test was excessively conservative, given the strong directional prediction in favor of a cue-side advantage. If a one-tailed test were accepted here, outer tactile cues did lead to cueing effects for inner visual targets located as much as 76º away [t(21) = 1.9, p(one-tailed) = .03].
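When the effect lies in the predicted direction, a one-tailed p is half the two-tailed p for the same t statistic. The stdlib-only sketch below illustrates this conversion for t(21) = 1.9 by integrating the Student t density numerically with Simpson's rule. The function names are hypothetical. Because the reported t is rounded to one decimal place, the computed p values are only approximate and need not match the reported figures exactly.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def t_upper_tail(t, df, steps=2000):
    """P(T > t) for t >= 0: 0.5 minus the integral of the density on [0, t],
    computed with composite Simpson's rule (steps is forced to be even)."""
    if t < 0:
        raise ValueError("t must be non-negative")
    if steps % 2:
        steps += 1
    h = t / steps
    total = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - total * h / 3


t_stat, df = 1.9, 21  # values reported for inner targets with outer hands
p_one = t_upper_tail(t_stat, df)
p_two = 2 * p_one
print(f"one-tailed p = {p_one:.3f}, two-tailed p = {p_two:.3f}")
```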

Before considering accounts of these results, we note that the commonly held view of spatially precise coordination of vision and touch across changes in posture clearly does not account well for these data (see the introduction and Macaluso & Maravita, 2010, for reviews). Such spatial precision would have resulted in cueing effects always being largest when the cues and targets were most closely aligned. This was not the case in any experiment. In particular, even in the experiments that showed statistically reliable posture effects (Exps. 2 and 3), inner-target cueing effects were not reliably larger when the hands were aligned with those targets. In fact, the nonsignificant trend was for misaligned cues to lead to greater spatial cueing. Nevertheless, these results also show that tactile–visual interactions can be affected by within-hemifield posture changes. Both Experiment 2 (viewed arm posture) and Experiment 3 (unseen arm posture) showed that visual cueing effects can be sensitive to changes in the position of tactile cues. In these experiments, outer visual targets showed cueing effects only when the hands, and therefore the tactile cues, were aligned with them. Thus, although posture is taken into account, the spatial specificity of tactile–visual interactions is limited, at least for the exogenous spatial attention studied here. Despite this cautionary note, the present experiments add to the evidence that tactile–visual links in exogenous covert spatial attention remap across changes in posture (Kennett, Eimer, et al., 2001; Kennett et al., 2002; Spence et al., 1998). That is, within each experiment, the retinal inputs of the visual targets and the somatosensory inputs of the tactile cues were identical across postures, yet the spatial cueing of the visual targets by the tactile events did not always remain invariant.

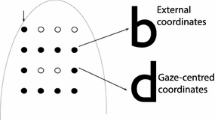

These results, as a whole, are clearly not explained by simple hemifield-wide cueing of the kind historically proposed for vision alone (e.g., by Hughes & Zimba, 1985; a view the authors themselves later rejected; see Hughes & Zimba, 1987). The results of Experiment 1, taken alone, were consistent with such a model. However, a mechanism advanced to explain only these specific experimental conditions would not be parsimonious. Two alternative accounts merit speculation. The first draws on gradient models of attention to suggest that cueing advantages pertain only to the region of external space close to the cue (e.g., Downing & Pinker, 1985; LaBerge & Brown, 1989), with a peak of advantage at the cued location and a smooth fall-off, or gradient, into the surrounding space. The second proposes that the arms segment the visual scene, allowing whole-hemifield cueing between the arms but not outside them. Figure 4 presents schematic diagrams of these two alternatives. These accounts have a number of important features, which we consider in turn.

Diagrams showing speculative accounts for the regions of visual space cued exogenously by tactile cues to the left hand in both the inner (solid lines) and outer (dotted lines) hand alignments. The cued regions are projected onto the layout of either Experiment 2 (A, C), with arms visible, or Experiment 3 (B, D), with arms occluded. These regions depict a modified cued-zones account (A, B) or a segmented-hemifield account (C, D)

A segmented-hemifield account suggests that a tactile cue draws attention to its whole visual hemifield, from the midline up to the arm. The arm is thus considered to form an outer boundary beyond which attention does not spread. This idea shares features with work investigating the spread of visual attention within segmented objects (e.g., Hollingworth, Maxcey-Richard, & Vecera, 2012; Martinez, Ramanathan, Foxe, Javitt, & Hillyard, 2007). Since the arm flexibly defines the outer boundary of the cued portion of the hemifield, the region of visual space cued by tactile stimuli varies with the current posture (see Figs. 4C and D).
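The segmented-hemifield account can be stated as a simple rule, which makes its predictions for the present layouts easy to enumerate. In this minimal sketch, the function name is hypothetical, and treating a target aligned with the hand as inside the boundary is an assumption. Applied to the Experiment 1/2 eccentricities, the rule predicts inner-target cueing in both postures and outer-target cueing only with the hands in the outer position.

```python
def segmented_hemifield_cued(target_ecc_deg, hand_ecc_deg, same_side=True):
    """Hypothetical rule for the segmented-hemifield account: a tactile cue
    benefits any target on the cued side lying between the midline and the
    cued arm (targets aligned with the hand count as inside the boundary)."""
    return same_side and 0.0 < target_ecc_deg <= hand_ecc_deg


# Predictions for the Experiment 1/2 layout (17.5 and 52.5 deg).
INNER, OUTER = 17.5, 52.5
for hand in (INNER, OUTER):
    for target in (INNER, OUTER):
        cued = segmented_hemifield_cued(target, hand)
        print(f"hands at {hand:>4} deg, target at {target:>4} deg -> cued: {cued}")
```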

In contrast, the cued-zones account starts from the currently conventional idea (see the introduction) that visual–tactile interactions are spatially specific and centered on the cued location. However, the size of these zones is then substantially modified according to how accurately the tactile events in question are spatially coded.

The cued zones depicted in Fig. 4A are smaller than those in Fig. 4B. Figure 4A represents Experiment 2, in which arm position was visible, whereas Fig. 4B represents Experiment 3, in which arm position could only be sensed proprioceptively. Both vision and proprioception are known to contribute to finger localization accuracy (Plooy et al., 1998; van Beers et al., 1999). Moreover, proprioceptive perception of the position of a static, supported limb becomes less accurate over time (Wann & Ibrahim, 1992). Although all of the present experiments featured supported static limbs, only Experiment 2 allowed vision as well as proprioception to locate the arms. It is therefore highly likely that arm localization was superior in Experiment 2, resulting in more precise coding of the tactile cues' spatial locations. If the precision of this spatial coding contributes to the size of the cued visual zones, such zones would probably have been smaller in Experiment 2 (see Fig. 4A) than in Experiment 1 or 3 (see Fig. 4B).

Both the segmented-hemifield and cued-zones accounts explain well one key feature of the present data set: Outer targets were only cued reliably by outer cues (see Fig. 2). The segmented-hemifield account does this by placing outer targets outside the cued region when the arms draw a boundary at the inner location, yet inside the cued region when the arms move that boundary to the outer location. Similarly, the cued-zones account places the outer visual targets outside the zones centered on the inner hand locations, yet inside the zones centered on the outer hand locations when the arms are placed there. However, both accounts have more difficulty explaining performance on inner targets. The segmented-hemifield account predicts that inner-target cueing should be observed regardless of hand position, because inner targets lie within the segmentation boundary (i.e., the arm) in both postures. This pattern fits the results of Experiment 1, but not those of Experiments 2 and 3, in which inner targets were not cued reliably when the hands were placed close to them.

Likewise, if cued zones are of a fixed size, they cannot explain the present asymmetrical effects of misaligned targets and cues. Zones sized such that inner cues do not reach the outer targets would similarly not reach inner targets when the hands that they were centered on were placed at the outer locations. However, in Experiment 2 inner targets were cued by outer cues (it also could be argued that a one-tailed test would be justified to show the same pattern in Exp. 3). One way to reconcile this asymmetry with a cued-zone account would be to allow the zones to alter in size as posture changes. This approach might be reasonable on several grounds: Firstly, the visual space nearer the fovea (recall that fixation was directed centrally and tracked by an eye-movement monitor) is cortically overrepresented, even more so in earlier visual regions, relative to more peripheral regions of space. This might result in a given neural extent of cueing corresponding to a smaller spatial extent for regions nearer the fovea (as has been suggested by Downing & Pinker, 1985). Secondly, it has been found, in endogenous visual–spatial cueing paradigms employing several lateral target positions, that cued regions surround the cue location more tightly (i.e., attentional gradients are steeper), the less peripheral this location is (Downing & Pinker, 1985; Shulman, Wilson, & Sheehy, 1985). Thirdly, single-cell studies of monkey premotor cortex have shown that the receptive fields of tactile–visual bimodal cells can vary in size depending on stimulus properties (Fogassi et al., 1996). Additionally, variable visual–tactile interference effects during reach-to-grasp experiments are suggestive of modulation in multisensory zones in humans (Brozzoli, Pavani, Urquizar, Cardinali, & Farnè, 2009). Finally, it has been shown that the localization of the finger becomes poorer as successively more extreme joint positions are reached (Rossetti, Meckler, & Prablanc, 1994). 
More extreme shoulder and elbow joint positions were required for adopting the outer posture than the inner posture in all three of the present experiments. Therefore, according to the logic employed before when contrasting the size of cued regions when arms were visible versus occluded, the cued zones might be expected to be larger for cues to hands in outer positions, as compared with when the hands were aligned with the inner targets.
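To make the asymmetry argument concrete, the sketch below implements one possible eccentricity-scaled cued zone: a Gaussian gradient, in the spirit of the gradient models cited above, whose width grows in proportion to cue eccentricity. The Gaussian shape, the 0.5 width-per-degree scaling, the `spread` multiplier (standing in for poorer localization when the arms are unseen), and all names are illustrative assumptions, not quantities estimated from these data. With the Experiment 1/2 eccentricities, the wide zone centered on an outer cue still reaches the inner target, whereas the narrow zone centered on an inner cue barely reaches the outer target.

```python
import math


def cueing_benefit(target_ecc, cue_ecc, width_per_deg=0.5, min_width=2.0,
                   spread=1.0):
    """Hypothetical Gaussian cued zone centered on the cue (benefit 1.0 at the
    cue). Its width grows linearly with cue eccentricity; spread > 1 could
    stand in for poorer localization, e.g. when the arms are unseen."""
    sigma = spread * max(min_width, width_per_deg * cue_ecc)
    return math.exp(-((target_ecc - cue_ecc) ** 2) / (2 * sigma ** 2))


INNER, OUTER = 17.5, 52.5  # Experiment 1/2 eccentricities (deg)
print("outer cue -> inner target:", round(cueing_benefit(INNER, OUTER), 3))
print("inner cue -> outer target:", round(cueing_benefit(OUTER, INNER), 3))
```

This one-dimensional sketch ignores the opposite hemifield, so it says nothing about zones spreading across the midline.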

Applying these cued zones to the present results, let us first consider cueing of the outer targets. In all three experiments, outer targets were only cued when the hands were aligned with them. Under this account, whether the arms were seen (Fig. 4A) or not (Fig. 4B), the cued zones encompassed the outer target positions only when the hands were placed there. Note that the outer target position in Experiment 1 was the same as that depicted in Fig. 4A (for Exp. 2), whereas the likely cued zones were similar in size to those depicted in Fig. 4B (for Exp. 3). As a result, the edges of the inner cued zone might have weakly cued the outer targets in Experiment 1. This in turn might explain the pattern observed in Experiment 1, in which outer targets were cued reliably only by outer cues, yet the cueing of these targets was not significantly affected by the posture manipulation.

For inner targets, no reliable effects of posture were found in any of the experiments. According to the model above, the enlarged cued zones when the hands are in the outer position might extend to encompass the ipsilateral inner target locations. Moreover, when the hands are placed at the inner target positions, the relatively large cued zones might spread across the midline, eliminating the cueing effect for inner targets cued by inner cues. Nevertheless, this account cannot satisfactorily explain the result of Experiment 1, in which inner targets were reliably cued regardless of posture.

The present study is not the first to have employed a horizontal array of four or more target locations. Similar approaches have been used to investigate visual attention (Downing & Pinker, 1985; Rizzolatti, Riggio, Dascola, & Umiltà, 1987; Tassinari, Aglioti, Chelazzi, Marzi, & Berlucchi, 1987), auditory attention (Rorden & Driver, 2001), and audiovisual links in exogenous spatial attention (Spence, McDonald, & Driver, 2004). All of these experiments found evidence that directing attention to one position advantaged only a restricted zone of space. In the latter case, restricted visual zones were cued by spatially nonpredictive auditory stimuli whose external spatial relationship with the visual targets varied across changes in posture (albeit changes in eye fixation posture; Spence, McDonald, & Driver, 2004).

One previous investigation of visual–tactile interactions employed a spatial arrangement similar to the present one in a crossmodal-congruence task (though see also Driver & Grossenbacher, 1996; Spence & Walton, 2005). Visual targets could appear at one of two eccentricities on either side of central fixation (although the participants' eye position was not monitored), and the hands, with tactile distractors, were placed at either an inner or an outer location. Interference with the visual task by the tactile distractors was largest when they came from the same hemispace. However, unlike here, the interference for visual targets was not modulated by hand alignment (though see Spence, Pavani, & Driver, 2004, for a one-handed within-hemifield modulation of visual–tactile congruence effects). Given that participants were allowed to view their hands and arms, as in the present Experiment 2, this lack of modulation might seem surprising. Apart from the fact that we investigated the spatial distribution of links between vision and touch in exogenous spatial attention, several other important differences distinguish the previous experiment from ours, any of which might have reduced the impact of the posture change relative to our Experiment 2. Firstly, their tactile events were intentionally not orthogonal to their visual task, as in all congruence paradigms. In such situations, it is not clear to what extent perception- and response-related issues might affect the response patterns (see Spence, Pavani, & Driver, 2004, for a discussion of this issue).
Secondly, only one target eccentricity was presented in each block of trials, perhaps diminishing the impact of any spatial mismatch between touch and vision when the modalities were misaligned (e.g., within blocks, the hands might have been mapped onto the current target eccentricity, analogously to a mouse-controlling hand being effortlessly mapped onto the location of a pointer on a computer display, even if the hand is in clear view). Lastly, the previous posture manipulation was much smaller (18º vs. 35º). Indeed, in a similar paradigm, but with a much larger (63º) one-handed misalignment of tactile targets with visual distractors, posture change did modulate visual–tactile congruence effects (Spence, Pavani, & Driver, 2004).

Our main finding, that spatially nonpredictive tactile events did not lead to small, spatially specific cued zones of visual space, has implications for the current view that touch and vision are well coordinated spatially across postures. Although we found evidence that current posture can affect some visual cueing effects, and that cued zones do a reasonable job of accounting for the pattern of results, future work must confirm whether such zones exist and whether they are centered on the cued hands. Current research on tactile–visual spatial interactions has tended to employ static scenarios, as here, which conceivably makes the remapping problem easier to solve (though note that proprioception is degraded under these conditions; see Wann & Ibrahim, 1992). Dynamic paradigms that more closely mimic real-world situations could be devised to measure the extent to which these results generalize to moving scenes. The present tactile stimuli were task-irrelevant throughout, and participants were instructed to ignore them. This might have further degraded the accuracy of the spatial coding of our stimuli relative to a more ecologically valid scenario; however, real-world tactile warning signals, such as those given by animals that we might wish to fend off accurately, are typically not the focus of attention when they are delivered. Nevertheless, it remains an open question whether increasing the task relevance of tactile stimuli in similar paradigms would lead to more spatially specific tactile–visual interactions than those found here.

References

Akhtar, N., & Enns, J. T. (1989). Relations between covert orienting and filtering in the development of visual attention. Journal of Experimental Child Psychology, 48, 315–334.

Alais, D., Newell, F. N., & Mamassian, P. (2010). Multisensory processing in review: From physiology to behaviour. Seeing and Perceiving, 23, 3–38.

Bender, M. B. (1952). Disorders in perception. Springfield, IL: Charles C. Thomas.

Brozzoli, C., Pavani, F., Urquizar, C., Cardinali, L., & Farnè, A. (2009). Grasping actions remap peripersonal space. NeuroReport, 20, 913–917.

Christie, J., & Klein, R. (1995). Familiarity and attention: Does what we know affect what we notice? Memory & Cognition, 23, 547–550. doi:10.3758/BF03197256

di Pellegrino, G., Làdavas, E., & Farnè, A. (1997). Seeing where your hands are. Nature, 388, 730.

Downing, C. J., & Pinker, S. (1985). The spatial structure of visual attention. In M. I. Posner & O. S. M. Marin (Eds.), Attention and performance XI (pp. 171–187). Hillsdale, NJ: Erlbaum.

Driver, J., & Grossenbacher, P. J. (1996). Multimodal spatial constraints on tactile selective attention. In T. Inui & J. L. McClelland (Eds.), Attention and performance XVI: Information integration in perception and communication (pp. 209–235). Cambridge, MA: MIT Press.

Duhamel, J.-R., Colby, C. L., & Goldberg, M. E. (1998). Ventral intraparietal area of the macaque: Congruent visual and somatic response properties. Journal of Neurophysiology, 79, 126–136.

Eimer, M., Cockburn, D., Smedley, B., & Driver, J. (2001). Cross-modal links in endogenous spatial attention are mediated by common external locations: Evidence from event-related brain potentials. Experimental Brain Research, 139, 398–411.

Farnè, A., & Làdavas, E. (2000). Dynamic size-change of hand peripersonal space following tool use. NeuroReport, 11, 1645–1649.

Farnè, A., Pavani, F., Meneghello, F., & Làdavas, E. (2000). Left tactile extinction following visual stimulation of a rubber hand. Brain, 123, 2350–2360.

Farnè, A., Serino, A., & Làdavas, E. (2007). Dynamic size-change of peri-hand space following tool-use: Determinants and spatial characteristics revealed through cross-modal extinction. Cortex, 43, 436–443.

Fogassi, L., Gallese, V., Fadiga, L., Luppino, G., Matelli, M., & Rizzolatti, G. (1996). Coding of peripersonal space in inferior premotor cortex (area F4). Journal of Neurophysiology, 76, 141–157.

Graziano, M. S. A. (1999). Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proceedings of the National Academy of Sciences, 96, 10418–10421.

Graziano, M. S. A., Cooke, D. F., & Taylor, C. S. R. (2000). Coding the location of the arm by sight. Science, 290, 1782–1786.

Graziano, M. S. A., & Gross, C. G. (1994). The representation of extrapersonal space: A possible role for bimodal, visual–tactile neurons. In M. S. Gazzaniga (Ed.), The cognitive neurosciences (pp. 1021–1034). Cambridge, MA: MIT Press.

Graziano, M. S. A., & Gross, C. G. (1998). Spatial maps for the control of movement. Current Opinion in Neurobiology, 8, 195–201.

Graziano, M. S. A., Yap, G. S., & Gross, C. G. (1994). Coding of visual space by premotor neurons. Science, 266, 1054–1057.

Hollingworth, A., Maxcey-Richard, A. M., & Vecera, S. P. (2012). The spatial distribution of attention within and across objects. Journal of Experimental Psychology: Human Perception and Performance, 38, 135–151. doi:10.1037/a0024463

Hughes, H. C., & Zimba, L. D. (1985). Spatial maps of directed visual attention. Journal of Experimental Psychology: Human Perception and Performance, 11, 409–430.

Hughes, H. C., & Zimba, L. D. (1987). Natural boundaries for the spatial spread of directed visual attention. Neuropsychologia, 25, 5–18.

Iriki, A., Tanaka, M., & Iwamura, Y. (1996). Coding of modified body schema during tool use by macaque postcentral neurones. NeuroReport, 7, 2325–2330.

Iriki, A., Tanaka, M., Obayashi, S., & Iwamura, Y. (2001). Self-images in the video monitor coded by monkey intraparietal neurons. Neuroscience Research, 40, 163–173.

Kennett, S., Eimer, M., Spence, C., & Driver, J. (2001). Tactile–visual links in exogenous spatial attention under different postures: Convergent evidence from psychophysics and ERPs. Journal of Cognitive Neuroscience, 13, 462–478.

Kennett, S., Rorden, C., Husain, M., & Driver, J. (2010). Crossmodal visual–tactile extinction: Modulation by posture implicates biased competition in proprioceptively reconstructed space. Journal of Neuropsychology, 4, 15–32.

Kennett, S., Spence, C., & Driver, J. (2002). Visuo-tactile links in covert exogenous spatial attention remap across changes in unseen hand posture. Perception & Psychophysics, 64, 1083–1094. doi:10.3758/BF03194758

Kennett, S., Taylor-Clarke, M., & Haggard, P. (2001). Noninformative vision improves the spatial resolution of touch in humans. Current Biology, 11, 1188–1191.

LaBerge, D., & Brown, V. (1989). Theory of attentional operations in shape identification. Psychological Review, 96, 101–124. doi:10.1037/0033-295X.96.1.101

Làdavas, E., di Pellegrino, G., Farnè, A., & Zeloni, G. (1998). Neuropsychological evidence of an integrated visuotactile representation of peripersonal space in humans. Journal of Cognitive Neuroscience, 10, 581–589.

Làdavas, E., Farnè, A., Zeloni, G., & di Pellegrino, G. (2000). Seeing or not seeing where your hands are. Experimental Brain Research, 131, 458–467.

Macaluso, E., Driver, J., van Velzen, J., & Eimer, M. (2005). Influence of gaze direction on crossmodal modulation of visual ERPs by endogenous tactile spatial attention. Cognitive Brain Research, 23, 406–417.

Macaluso, E., Frith, C. D., & Driver, J. (2000). Modulation of human visual cortex by crossmodal spatial attention. Science, 289, 1206–1208.

Macaluso, E., Frith, C. D., & Driver, J. (2002). Crossmodal spatial influences of touch on extrastriate visual areas take current gaze direction into account. Neuron, 34, 647–658.

Macaluso, E., Frith, C. D., & Driver, J. (2005). Multisensory stimulation with or without saccades: fMRI evidence for crossmodal effects on sensory-specific cortices that reflect multisensory location-congruence rather than task-relevance. NeuroImage, 26, 414–425.

Macaluso, E., & Maravita, A. (2010). The representation of space near the body through touch and vision. Neuropsychologia, 48, 782–795. doi:10.1016/j.neuropsychologia.2009.10.010

Maravita, A., Clarke, K., Husain, M., & Driver, J. (2002). Active tool use with the contralesional hand can reduce cross-modal extinction of touch on that hand. Neurocase, 8, 411–416.

Maravita, A., Husain, M., Clarke, K., & Driver, J. (2001). Reaching with a tool extends visual–tactile interactions into far space: Evidence from cross-modal extinction. Neuropsychologia, 39, 580–585.

Maravita, A., Spence, C., Kennett, S., & Driver, J. (2002). Tool-use changes multimodal spatial interactions between vision and touch in normal humans. Cognition, 83, B25–B34.

Martinez, A., Ramanathan, D. S., Foxe, J. J., Javitt, D. C., & Hillyard, S. A. (2007). The role of spatial attention in the selection of real and illusory objects. Journal of Neuroscience, 27, 7963–7973. doi:10.1523/JNEUROSCI.0031-07.2007

Mattingley, J. B., Driver, J., Beschin, N., & Robertson, I. H. (1997). Attentional competition between modalities: Extinction between touch and vision after right hemisphere damage. Neuropsychologia, 35, 867–880.

Murphy, F. C., & Klein, R. M. (1998). The effects of nicotine on spatial and non-spatial expectancies in a covert orienting task. Neuropsychologia, 36, 1103–1114.

Pavani, F., Spence, C., & Driver, J. (2000). Visual capture of touch: Out-of-the-body experiences with rubber gloves. Psychological Science, 11, 353–359.

Plooy, A., Tresilian, J. R., Mon-Williams, M., & Wann, J. P. (1998). The contribution of vision and proprioception to judgements of finger proximity. Experimental Brain Research, 118, 415–420.

Rizzolatti, G., Riggio, L., Dascola, I., & Umiltà, C. (1987). Reorienting attention across the horizontal and vertical meridians: Evidence in favor of a premotor theory of attention. Neuropsychologia, 25, 31–40. doi:10.1016/0028-3932(87)90041-8

Rorden, C., & Driver, J. (2001). Spatial deployment of attention within and across hemifields in an auditory task. Experimental Brain Research, 137, 487–496.

Rossetti, Y., Meckler, C., & Prablanc, C. (1994). Is there an optimal arm posture? Deterioration of finger localization precision and comfort sensation in extreme arm-joint postures. Experimental Brain Research, 99, 131–136.

Sambo, C. F., Gillmeister, H., & Forster, B. (2009). Viewing the body modulates neural mechanisms underlying sustained spatial attention in touch. European Journal of Neuroscience, 30, 143–150.

Shulman, G. L., Wilson, J., & Sheehy, J. B. (1985). Spatial determinants of the distribution of attention. Perception & Psychophysics, 37, 59–65.

Spence, C., & Driver, J. (1994). Covert spatial orienting in audition: Exogenous and endogenous mechanisms. Journal of Experimental Psychology: Human Perception and Performance, 20, 555–574.

Spence, C., & Driver, J. (1996). Audiovisual links in endogenous covert spatial attention. Journal of Experimental Psychology: Human Perception and Performance, 22, 1005–1030.

Spence, C., McDonald, J., & Driver, J. (2004). Exogenous spatial cuing studies of human crossmodal attention and multisensory integration. In C. Spence & J. Driver (Eds.), Crossmodal space and crossmodal attention (pp. 277–320). Oxford, UK: Oxford University Press.

Spence, C., Nicholls, M. E. R., Gillespie, N., & Driver, J. (1998). Cross-modal links in exogenous covert spatial orienting between touch, audition, and vision. Perception & Psychophysics, 60, 544–557.

Spence, C., Pavani, F., & Driver, J. (2000). Crossmodal links between vision and touch in covert endogenous spatial attention. Journal of Experimental Psychology: Human Perception and Performance, 26, 1298–1319.

Spence, C., Pavani, F., & Driver, J. (2004). Spatial constraints on visual–tactile cross-modal distracter congruency effects. Cognitive, Affective, & Behavioral Neuroscience, 4, 148–169. doi:10.3758/CABN.4.2.148

Spence, C., Pavani, F., Maravita, A., & Holmes, N. (2004). Multisensory contributions to the 3-D representation of visuotactile peripersonal space in humans: Evidence from the crossmodal congruency task. Journal of Physiology–Paris, 98, 171–189.

Spence, C., Shore, D. I., Gazzaniga, M. S., Soto-Faraco, S., & Kingstone, A. (2001). Failure to remap visuotactile space across the midline in the split-brain. Canadian Journal of Experimental Psychology, 55, 133–140.

Spence, C., & Walton, M. (2005). On the inability to ignore touch when responding to vision in the crossmodal congruency task. Acta Psychologica, 118, 47–70.

Tassinari, G., Aglioti, S., Chelazzi, L., Marzi, C. A., & Berlucchi, G. (1987). Distribution in the visual field of the costs of voluntarily allocated attention and of the inhibitory after-effects of covert orienting. Neuropsychologia, 25, 55–71. doi:10.1016/0028-3932(87)90043-1

Townsend, J. T., & Ashby, F. G. (1978). Methods of modeling capacity in simple processing systems. In N. J. Castellan & F. Restle (Eds.), Cognitive theory (Vol. 3, pp. 199–239). Hillsdale, NJ: Erlbaum.

Townsend, J. T., & Ashby, F. G. (1983). The stochastic modeling of elementary psychological processes. Cambridge, UK: Cambridge University Press.

van Beers, R. J., Sittig, A. C., & Denier van der Gon, J. J. (1999). Localization of a seen finger is based exclusively on proprioception and on vision of the finger. Experimental Brain Research, 125, 43–49.

Wann, J. P., & Ibrahim, S. F. (1992). Does limb proprioception drift? Experimental Brain Research, 91, 162–166.

Author note

We thank Chris Rorden for discussions and technical assistance, and Brian Aviss for helping to design and build the tactile cue stimulators. S.K. was funded by the Medical Research Council (UK), and J.D. held a Wellcome Trust programme grant and was a Royal Society 2010 Anniversary Research Professor. Correspondence to Steffan Kennett, Department of Psychology, University of Essex, Colchester, CO4 3SQ, UK (e-mail: skennett@essex.ac.uk). This article is published in memory of Jon Driver, my friend and mentor.

Additional information

Jon Driver (deceased)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Kennett, S., Driver, J. Within-hemifield posture changes affect tactile–visual exogenous spatial cueing without spatial precision, especially in the dark. Atten Percept Psychophys 76, 1121–1135 (2014). https://doi.org/10.3758/s13414-013-0484-3

