Abstract

In this article, we ask what serves as the “glue” that temporarily links information to form an event in an active observer. We examined whether forming a single action event in an active observer is contingent on the temporal presentation of the stimuli (hence, on the temporal availability of the action information associated with these stimuli), or on the learned temporal execution of the actions associated with the stimuli, or on both. A partial-repetition paradigm was used to assess the boundaries of an event for which the temporal properties of the stimuli (i.e., presented either simultaneously or temporally separate) and the intended execution of the actions associated with these stimuli (i.e., executed as one, temporally integrated, response or as two temporally separate responses) were manipulated. The results showed that the temporal features of action execution determined whether one or more events were constructed; the temporal presentation of the stimuli (and hence the availability of their associated actions) did not. This suggests that the action representation, or “task goal,” served as the “glue” in forming an event in an active observer. These findings emphasize the importance of action planning in event construction in an active observer.

Similar content being viewed by others

People often carry out more than one task at a time or keep track of more than one event at a time. To do so requires information relevant to one task or event to be temporarily linked to keep information from that task or event separate from the other concurrent tasks or events (Hommel 2004, 2005). In the present study, we examined what serves as the glue that links information together to form an event in an active observer when stimuli and actions executed to these stimuli unfold over time. Understanding the factors involved in forming a representation of a single event is complicated by the fact that boundaries defining an event are not always clear. Past research (see summary by Kurby & Zacks 2008; Zacks, Speer, Swallow, Braver, & Reynolds, 2007) has identified event boundaries by having observers passively watch videos or read text and subjectively segment this activity into discrete events. This research shows that events can be subjectively identified at a range of temporal grains, from course (extended over time; e.g., making a cake) to fine (brief; e.g., turning on an oven). Also, descriptions of course-grained events by passive observers were associated with objects and the descriptions of fine-grained events were associated actions on those objects. These findings suggest that both objects and actions on those objects play a role in event construction. However, it is unclear how stimuli and actions interact to form event representations. In addition, it is unclear how events are constructed when one is an active as opposed to a passive observer (Kurby & Zacks, 2008; Zacks et al., 2007) and when objective as opposed to subjective measures are used to determine event boundaries. In the present study, we examined how stimuli and actions interact to form one or more fine-grained events in an active observer by using objective measures to assess the conditions in which one or more events are constructed.

Importantly, event formation by active observers requires the generation of goals (and subgoals) and the integration and organization of action features needed to meet these goals, whereas event formation by passive observers does not (Higuchi, Holle, Roberts, Eickhoff, and Vogt, 2012; Vogt et al., 2007). Thus, event construction or boundaries defining one or more events may be related to how one chunks or segments their responses to meet the goals (and subgoals) that one has generated. In the present study, we examined what determines the boundaries of simple, abstract events, where observers are actively engaged in processing and responding to visual stimuli. More specifically, we examined whether forming a representation of a fine-grained event in an active observer is contingent on the temporal presentation of the stimuli (hence, on the temporal availability of the action information associated with these stimuli), or on the learned temporal execution of the actions associated with the stimuli, or on both. Because active observers typically react to and manipulate perceptual stimuli in a goal-directed manner, we expected that the intended, temporal execution of the actions associated with the stimuli would play a dominant role in defining the boundaries of an event. That is, the availability of perceptual information and action information would not be as important in forming an event as how one planned to act on this information. This prediction is consistent with ideomotor theories of action control (Greenwald 1970; Prinz 1990, 1997), which assume that the actions produced as part of event may constrain how the event is perceived (Kurby and Zacks 2008). Understanding the factors that define an event in an active observer is important, as these factors may determine how episodes in which one is directly involved are encoded in memory (Mattson, Fournier, & Behmer, 2012; Zacks and Tversky 2001).

Design and rationale

It is well known that actions and visual stimuli can become strongly associated with each other, so activating one representation activates the other (Fagioli, Ferlazzo, & Hommel, 2007; Fagioli, Hommel, & Schubotz, 2007; Hommel 2004, 2005; Pulvermüller, Hauk, Nikulin, & Ilmoniemi, 2005; Schubotz and von Cramon 2002; Tipper 2010; Zmigrod and Hommel 2009). As a result, when two visual stimuli become associated with each other, the actions associated with these stimuli should also become associated with each other (Logan 1988; Logan and Etherton 1994). Also, for an active observer, actions associated with abstract perceptual stimuli can give meaning to these stimuli if the actions themselves represent the task goal(s). Because these perceptual stimuli can carry meaning from their action associations, we can examine whether this meaningful information is linked together to form a single event by evaluating whether the actions associated with the perceptual stimuli are integrated as a single event or action plan. To do this, we used the partial-repetition paradigm developed by Stoet and Hommel (1999). This paradigm is useful for determining whether or not cognitive codes representing meaning-based information are integrated into one action event (action plan), consistent with the assumptions of the theory of event coding (Hommel, Müsseler, Aschersleben, & Prinz, 2001; see also Fournier et al., 2010; Hommel 2004, 2005; Stoet and Hommel 2002).

In this paradigm, two different visual stimulus sets (A and B) are presented sequentially. Participants plan and maintain a sequence of joystick responses in memory (e.g., “move left then up”) on the basis of the perceptual identity of the first stimulus set (e.g., a left-pointing arrowhead mapped to a left response paired with a red box mapped to an up response). While they maintain this action plan (“move left then up”) in short-term memory, a second visual stimulus appears (e.g., the letter H or S). Participants respond immediately with a joystick movement to the left or right based on the identity of the second stimulus (e.g., H = left and S = right). After executing the action to the second stimulus (Action B), the action to the first stimulus set (Action A) is executed. The results have shown that the execution of Action B is delayed when Action B shares a feature code (e.g., move “left”) with Action A (e.g., move “left then up”), relative to when it does not share a feature code with Action A (e.g., move “right then up”; Mattson and Fournier 2008; Stoet and Hommel 1999, 2002; Wiediger and Fournier 2008). This delay is referred to as a “partial-repetition cost.”

Partial-repetition costs are assumed to occur only when the features of Action A (e.g., “left” and “up”) are integrated (or bound) prior to the onset of Stimulus B. When action features are integrated, activating one of these action features spreads activation (in a forward direction) to other action features with which it is bound (Fournier, Gallimore, & Feiszli, 2013; Mattson et al., 2012). For example, if Action B (e.g., “left) matches one of the features of Action A (e.g., “left” among the bound action features “left-up”), then Action B (e.g., “left”) will activate the matching Action A feature (e.g., “left”) and the other Action A feature(s) it is bound to (e.g., “up”; see Fig. 1, panel A). As a result, time needed to inhibit the irrelevant Action A feature (e.g., “up”) will delay the selection of the Action B feature and hence delay the execution of Action B. If there is complete feature overlap or no overlap between Action B and Action A, no delay (cost) in executing Action B is expected because no Action A features will need to be inhibited in order to select the Action B feature (Hommel 2004; Mattson et al., 2012).

A binding account of partial-repetition costs. The circles represent action feature codes (L = left, R = right, U = up, D = down), and ellipses represent the binding of action feature codes. (A) Action A features (mapped to Stimulus A) are temporarily integrated into one action plan or event representation. If an Action B feature (e.g., L) is activated and this feature matches the first feature in the Action A sequence, the matching Action B feature (e.g., L) will reactivate (i.e., backward prime) all of the features of Action A (e.g., L–U), creating feature-code confusion. Resolving this feature-code confusion (by inhibiting the irrelevant features from Action A that have been activated; e.g., U or D) in order to correctly respond to Action B (e.g., L) will take time, causing slow response execution for Action B. This will lead to a partial-repetition cost. (B) Action A features are not bound into one action plan or event representation, but are instead represented as two different actions or events. No partial-repetition costs should be observed in the action feature overlap condition, because two different action plans (events) represent Action A—one that contains the same feature as Action B (e.g., L), and one that does not (e.g., U or D). As a result, the Action B feature (e.g., L) may reactivate (backward prime) the matching Action A feature (e.g., L), but this activation will not spread to the other Action A features (e.g., U or D) because the two Action A features (e.g., L and U or D) are not bound together; hence, no feature-code confusion should occur. In the no-feature-overlap conditions, regardless of whether the features for Action A are integrated into one event (A) or are separated into two events (B), if a feature of Action B does not match a feature of Action A, performance in executing Action B should be unaffected

In contrast, if the features of Action A are not bound, a partial feature overlap between Action B and Action A should not lead to a partial-repetition cost (Hommel 2004, 2005; Hommel and Colzato 2004; Mattson et al., 2012). In this case, each feature of Action A can be activated independently, and hence activating one action feature will not spread activation to the others (see Fig. 1, panel B). As a result, no interference should occur when Action B (e.g., “left”) matches one of the features of Action A (e.g., “left” among the unbound action features “left” and “up”). Thus, Response B performance (cost or no cost) in the partial-overlap case, as compared to the no-overlap case, can indicate whether or not the features of Action A were integrated into an event. If there is a partial-repetition cost, the feature codes were integrated; if there is no partial-repetition cost, the feature codes were not integrated.Footnote 1 The relative size of the partial-repetition cost should also reflect the relative binding strength among action feature codes (Mattson et al., 2012).

We used the partial-repetition paradigm to evaluate whether the formation of a single action event in an active observer is contingent on presenting stimuli relevant to the action at the same point in time, or is contingent on the intention to execute the action features associated with the stimuli as one integrated action, or both. To test this, stimuli relevant to Action A (e.g., left- and right-pointing arrowheads mapped to left and right movements, and red and green boxes mapped to up and down movements) were presented either simultaneously (temporally integrated) or temporally separate. Also, the features of Action A (movements left or right and up or down) mapped to these stimuli were executed as one, temporally integrated action (e.g., “left then up” originating from a central location) or as two, temporally separate actions (e.g., “left” and “up,” each originating from a central location). The manipulations of stimuli presentation and action execution were run between participants and are represented in Fig. 5 in the Method section below. Moreover, partial feature overlap (overlap vs. no overlap) between Action B and Action A was manipulated within participants to determine under which stimuli and action execution conditions a partial-repetition cost would be observed. The occurrence of a partial-repetition cost was used as evidence that participants planned Action A and represented the features of Action A as one, integrated action event (Stoet and Hommel 1999). If participants planned Action A but the features of Action A were not integrated into a single event representation, we should not find a partial-repetition cost.

Consistent with ideomotor theories of action control, we predicted that the formation of a single representation of an action event is contingent on temporal integration of the intended action execution. If this is the case, then we should find an interaction between the factors of action execution and action feature overlap demonstrating a partial-repetition cost (or greater cost) in the integrated action execution conditions but not in the separate action execution conditions. In addition, the Stimuli Presentation factor should not interact with the Action Feature Overlap factor. That is, a partial-repetition cost should not be contingent on whether the stimuli were presented temporally integrated or separate. Our results showed that temporal integration of the intended actions across stimuli was critical to forming a single representation of an action event.

Method

Participants

A group of 117 undergraduates from Washington State University participated for optional extra credit in a psychology class. This study was approved by the Washington State University Institutional Review Board, and informed consent was obtained from all participants. Participants had at least 20/40 visual acuity and could accurately identify red/green bars on a Snellen chart. Thirteen of the participants were excluded for not following instructions. Data were analyzed for 104 participants.

Apparatus

Stimuli appeared on a computer screen approximately 61 cm from the participant. E-prime software (version 1.2) presented stimuli and collected data. Responses were recorded using a custom-made joystick apparatus (see Fig. 2) that consisted of a round, plastic handle (joystick; 5.1 cm wide and 2.9 cm tall) mounted on a square box (30.5 cm2). The apparatus was recessed into a table, centered along the participant’s body midline. The joystick could move along a 1.1-cm-wide track 11.35 cm to the left, right, up, or down from the center, as well as 22.7 cm (per side) around the perimeter of the box. A magnet at the bottom of the joystick triggered response sensors below the track. From the center of the response apparatus, two sensors were placed at 1.5 and 7.5 cm in left, right, up, and down directions. Additionally, sensors were placed 3.9 cm from the end of the track on both sides of the upper left, upper right, lower left, and lower right corners. Four spring-loaded bearings, located in the center of the apparatus where the four tracks intersected, provided tactile feedback as the participant centered the joystick. All joystick responses were made with the dominant hand. A separate hand-button held in the nondominant hand initiated each trial.

Stimuli and responses

Action A Action A represented the responses mapped to Stimulus A. Stimulus A (subtending 3.29º of visual angle) was a white arrowhead (0.85º of visual angle) pointing to the left (<) or right (>) and a box (1.50º of visual angle) outlined in red or green. The colored box was centered 1.03º of visual angle above a central fixation cross (subtending 0.75º of visual angle), and the arrowhead was centered 0.94º of visual angle above the colored box. Stimulus A required two joystick movements. The first movement (left or right) was indicated by the arrowhead direction; a left-pointing arrowhead indicated a left movement and a right-pointing arrowhead indicated a right movement. The second movement (up or down) was indicated by the colored box. For half of the participants, the green box indicated an “up” movement and the red box indicated a “down” movement; the other half of participants had the opposite stimulus–response assignment. Thus, four possible responses were mapped to Stimulus A. For example, a left-pointing arrowhead and green box indicated a “left then up” movement; a left-pointing arrowhead and red box indicated a “left then down” movement; a right-pointing arrowhead and green box indicated a “right then up” movement; and a right-pointing arrowhead and red box indicated a “right then down” movement.

Action B Action B represented the responses mapped to Stimulus B. Stimulus B was a white, uppercase H or S (0.85º of visual angle) centered 0.94º of visual angle below the fixation cross. Stimulus B required a speeded left or right joystick response based on letter identity. Half of the participants responded to the H with a left movement and to the S with a right movement, and the other half had the opposite stimulus–response assignment.

Design and procedure

Three different factors were manipulated: the Overlap (overlap or no overlap) between action features mapped to Stimulus B (Action B) and those mapped to Stimulus A (Action A), the Temporal Properties of the Stimuli (integrated or separate) corresponding to Stimulus A, and the Temporal Properties of the Action Execution (integrated or separate) corresponding to Action A. Feature overlap between Action B and Action A was manipulated within participants. Action B (e.g., move “right”) either overlapped with Action A (e.g., move “right then up”) or did not (e.g., move “left then up”). Feature overlap (overlap or no overlap) between Action B and Action A occurred randomly and with equal probability in each block of trials. Also, the four possible pairs of stimuli (arrowhead direction and box color) for Stimulus A were equally paired with the two possible letter stimuli (H or S) for Stimulus B. Obtaining a partial-repetition cost was used as evidence that participants planned and represented Action A as one, integrated action event.

The temporal properties (integrated vs. separate) of the stimuli (arrowhead and colored box) for Stimulus A were manipulated between participants. The arrowhead and colored box were either presented simultaneously (integrated stimuli condition) or the arrowhead and colored box were presented sequentially, as two temporally separate stimuli: A1 and A2, respectively (separate stimuli condition). See Fig. 3. In both conditions, a black screen appeared before each trial with the message “Press the hand-button to start the trial.” After pressing the hand-button, a fixation cross on a light or dark gray background appeared for 1,000 ms. Next, Stimulus A was presented.

In the integrated stimuli condition (see Fig. 3, left), participants saw Stimulus A (an arrowhead and colored box) above the fixation cross for 1,000 ms on a light or dark gray background followed by a screen where the fixation cross appeared alone on a black background for 1,000 ms. Half of these participants saw Stimulus A and the fixation preceding Stimulus A on a dark gray background, and the other half saw these stimuli on a light gray background.

In the separate stimuli condition (see Fig. 3, right), half of participants saw an arrowhead on a light gray background (for 1,000 ms) followed 1,000 ms later by a colored box on a dark gray background (for 1,000 ms). The other half of these participants saw the stimuli in the same order but had the opposite stimulus-background pairing. The different stimulus-background pairings were used to encourage separate encoding of the stimuli.

The temporal properties of Action A execution (integrated vs. separate) were also manipulated between participants. The actions to the arrowhead and colored box were either executed as a single, continuous action consisting of two movements (integrated action condition; e.g., “left–up”), or the actions to the arrowhead and colored box were executed as two separate actions (separate action condition; e.g., “left” and “up”). See Fig. 4.

In the integrated action execution condition, the following events occurred (see Fig. 4, left). During the presentation of Stimulus A and the screen that followed, participants were instructed to plan and maintain a response (e.g., “left–up”) to Stimulus A (arrowhead and colored box) in memory. Then Stimulus B (H or S) appeared below the fixation cross on a black background for 100 ms followed by a black screen for 4,900 ms or until a response was detected for Stimulus B. Participants were instructed to respond to Stimulus B (left or right movement) as quickly and accurately as possible. The speed of responding to Stimulus B was emphasized as important. After responding to Stimulus B, a black screen appeared until the joystick was centered or until 5,000 ms had elapsed. Once centered, a light gray or dark gray screen appeared (consistent with the arrowhead background color) and participants had 5,000 ms to execute the planned response to Stimulus A. Participants were instructed to emphasize accuracy, not speed, when responding to Stimulus A. After responding to Stimulus A, which required a continuous action consisting of two movements (e.g., “left–up”), participants returned the joystick back to center. Performance feedback occurred 1,000 ms after participants had centered the joystick: Their response reaction time (RT), accuracy for Stimulus B (900-ms duration), and accuracy for Stimulus A (600-ms duration) were presented. Once the joystick was centered, the initiation screen for the next trial appeared 100 ms later. Participants initiated the next trial by pressing the hand-button when they were ready.

In the separate action execution condition, the following occurred (see Fig. 4, right). Participants were instructed to plan and execute a response (left or right movement) on the basis of the arrowhead direction and to also plan (and execute) a response (up or down) on the basis of the color of the box. Participants were instructed to maintain these two responses, in order, in memory while executing their speeded response to Stimulus B (H or S). After executing their speeded response to Stimulus B and centering the joystick, the screen background changed color (either to light or dark gray, consistent with the background color of the arrowhead stimulus). This signaled the participant to execute the action (A1) corresponding to the arrowhead direction (left or right). After executing the response to the arrowhead direction, the joystick was centered. Then, the screen background changed color again (either to dark or light gray). This signaled the participant to execute the action (A2) corresponding to the colored box (up or down). The joystick was then centered again. Performance feedback occurred at the end of the trial as follows: Stimulus B response RT and accuracy appeared for 900 ms, followed by arrowhead response accuracy for 600 ms, and then colored box response accuracy for 300 ms. Once the joystick was centered, the initiation screen for the next trial appeared 100 ms later.

For both action execution conditions, responses to Stimulus B and Stimulus A originated from the center of the joystick apparatus. Stimulus B RT was measured from the onset of Stimulus B until a response was detected at the left or right sensor located 7.5 cm from the center of the apparatus. Stimulus A responses in the action execution integrated condition were collected via three response sensors (one located 1.5 cm and one located 7.5 cm either left or right from the center of the apparatus and another located 3.9 cm up or down from the lower and upper corners of the apparatus, respectively). Stimulus A responses in the action execution separate condition were collected via four response sensors (one located 1.5 cm and one located 7.5 cm either left or right from the center of the apparatus for the first response, and one located 1.5 cm and one located 7.5 cm either up or down from the center of the apparatus for the second response).

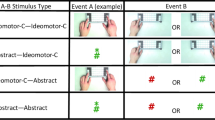

Stimuli presentation (integrated vs. separate) and action execution (integrated vs. separate) were combined factorially between participants. The presentation of the stimuli (integrated vs. separate) for Stimulus A and the joystick responses corresponding to the executed actions (integrated vs. separate) for Action A are shown in Fig. 5. A more detailed description of the instructions for the combinations of the stimuli and action execution conditions is presented in the Appendix.

Combined pictorial representation of the stimuli presentation conditions (integrated, separate) and action execution conditions (integrated, separate), which were factorially combined between participants. The different combined conditions are represented separately in each panel: The upper left panel represents the temporally integrated stimuli and integrated action execution; the upper right panel represents the temporally integrated stimuli and separate action executions; the lower left panel represents the temporally separate stimuli and integrated action executions; and the lower right panel represents the temporally separate stimuli and separate action executions. The stimuli corresponding to Stimulus A (arrowhead and box) are presented in the gray boxes within each panel. Stimuli that were temporally integrated were presented in the same frame, and stimuli that were temporally separate were presented in different frames with different backgrounds (shades of gray). The response apparatus is presented below the gray boxes in each panel, and the response movements for Stimulus A (Action A; e.g., left and up) are depicted by the arrows. An Action A execution that was integrated (e.g., “left–up”) required a continuous joystick movement from the center of the response apparatus to the left and then up, whereas an Action A execution that was separate (e.g., “left” and “up”) required a joystick movement to the left from the center of the response apparatus and, after centering the joystick once again, a joystick movement up from the center of the apparatus

To encourage participants to plan their action to Stimulus A prior to the onset of Stimulus B, they were instructed to maintain the response for this stimulus in memory. Participants were instructed not to execute any part of the planned response to Stimulus A until after they responded to Stimulus B. In addition, they were instructed not to move any fingers or use any external cues to help them remember the response(s) for Stimulus A—they were repeatedly told to maintain the action(s) for Stimulus A (Action A) in memory. These instructions were strongly emphasized. Some practice trials were integrated into the instructions to make sure participants understood the task. Participants who were observed or reported using external cues (as opposed to memory) to remember the action(s) to Stimulus A were excluded from the study.

The participants completed one 90-min session, consisting of 80 practice trials followed by seven blocks of 32 experimental trials. Participants took a short break after the third block. At the end of the experiment, they answered debriefing question about the strategies that they had used during the task. Those who were observed or who reported moving fingers or using any external cues (e.g., vocalizing the response) when planning an action to Stimulus A in the experimental trials were excluded, because they had violated the explicit task instructions. This occurred for a total of 13 participants (three in the combined integrated stimuli and integrated action execution condition, five in the combined separate stimuli and separate action execution condition, five in the combined integrated stimuli and separate action execution condition, and four in the combined separate stimuli and integrated action execution condition). As a result, 24 participants took part in each of the factorially combined conditions, except for the separate stimuli and separate action execution condition, which had 32 participants.Footnote 2 The stimulus–response mappings for the colored boxes (Stimulus A) and for letters (Stimulus B) were balanced for each of the within-participants and between-participants factors.

Analyses

A series of 2 × 2 × 2 mixed-design analyses of variance (ANOVAs) with the within-subjects factor Action Feature Overlap (overlap or no overlap), the between-subjects factor Stimuli Presentation (integrated or separate), and the between-subjects factor Action Execution (integrated or separate) were conducted separately on Action A error rates, Action B correct RTs, and Action B error rates. The correct-RT and error analyses for Action B were restricted to trials on which Action A responses were accurate. Approximately 0.2% of trials from each participant were lost due to Action A recording errors.

Results

Figure 6 shows the Action B correct RTs and error rates for the action feature overlap and no-overlap conditions when the stimuli (corresponding to Stimulus A) were presented temporally integrated versus separate (left panel) and when the action maintained in memory (Action A) was executed as one integrated versus two separate actions (right panel). As is evident in this figure, partial-repetition costs were contingent on the temporal properties of the intended action execution and not on the temporal presentation of the stimuli.

Action B correct reaction times (RTs) and percentages of errors for the feature overlap and no-overlap conditions when the stimuli corresponding to Stimulus A were integrated or separate (left panel) and when Action A execution was integrated or separate (right panel). Error bars represent one within-subjects standard error of the mean

Action A responses

No significant effects were found for action recall accuracy, ps > .08. The average error rate was 10%.

Action B responses

In the correct RT data, there was a significant main effect of action feature overlap F(1, 100) = 19.06, p < .0001, η p 2 = .16, consistent with a partial-repetition cost. The mean correct RT was greater for the action feature overlap (M = 593 ms) than for the no-overlap (M = 576 ms) condition. Moreover, there was a significant interaction between action feature overlap and action execution, F(1, 100) = 5.69, p < .02, η p 2 = .054. The two-way interaction between action feature overlap and stimulus presentation and the three-way interaction were not significant (Fs < 1, respectively). This indicates that partial-repetition costs were contingent on the intended, action execution of Action A and not on the presentation of the stimuli mapped to Action A. Planned comparisons showed that when the action execution was integrated, the mean RT for the action feature overlap condition (M = 605 ms) was significantly greater than that for the no-overlap condition (M = 579), p < .0001, yielding a partial-repetition cost of 26 ms. However, when the action execution was separate, the mean RTs for the action feature overlap (M = 581 ms) and no-overlap conditions (M = 574) did not differ (p = .15; JZS Bayes factor = 4.68),Footnote 3 indicating no partial-repetition cost. Also, planned comparisons confirmed that the partial-repetition costs were equivalent when stimuli were presented either together (yielding a significant cost of 12 ms, p < .01) or separately (yielding a significant cost of 18 ms, p < .01), t(102) = −0.45, p > .05; JZS Bayes factor = 11.89.

The average error rate was 2.2%, and no significant effects were found for error rate, ps > .08. Importantly, no interactions involving the factor Stimuli Presentation were close to significance for error rate (Fs < 1), confirming that partial-repetition costs were not influenced by the temporal presentation of the stimuli. However, planned comparisons revealed more accurate responses (M = 1%) in the action feature overlap relative to the no-overlap condition when action execution was separate (p < .02) but not when action execution was integrated (p > .85). This confirms the conclusions drawn from the RT data that no partial-repetition costs occurred when action execution was separate; partial-repetition costs only occurred when action execution was integrated.

Evidence of action planning

A partial-repetition cost occurred in conditions where the execution of Action A was integrated. This indicates that advanced action planning occurred for Stimulus A—at least for these conditions. Also, no partial-repetition facilitation was found in the conditions where the features of Action A were executed separately, suggesting that the action plan for Stimulus A was constructed prior to the onset of Stimulus B. Stoet and Hommel (1999) showed that if Action A is not planned in advance of Stimulus B onset, a partial-repetition facilitation occurs (i.e., the execution of Action B is faster when Action B and Action A partly overlap, as compared to when they do not overlap). Finally, 73% of participants (33% in the integrated action execution and 40% in the separate action execution conditions) reported maintaining a verbal representation of the movements or movement sequence (e.g., left–up), 15% (~6% in the action execution integrated and ~9% in the action execution separate conditions) reported using a visuospatial representation of the intended movements or movement sequence, 11% (~7% in the action execution integrated and ~4% in the action execution separate conditions) reported using a mental picture of the perceptual stimuli, and ~1% reported no strategy (in the action execution separate condition only). This suggests that most participants were planning their actions to Stimulus A in advance, and were not maintaining a visual image of this stimulus (arrowhead and colored box) until just before they needed to execute Action A.

Discussion

Using a partial-repetition paradigm, we measured the occurrence of a partial-repetition cost to determine whether forming a single representation of an event (a fine-grain event) in an active observer is contingent on the temporal presentation of the stimuli or the intended temporal execution of the actions associated with these stimuli, or both. Partial-repetition costs were found regardless of whether the stimuli were presented together or separate, and hence costs were not contingent on the temporal presentation of the stimuli. However, partial-repetition costs were contingent on whether or not the action execution corresponding to these stimuli was integrated or separate. That is, costs only occurred when the responses (e.g., left movement followed by down movement) maintained in memory were executed as one, integrated action (e.g., a continuous left then down movement originating from joystick center); not when the responses were executed as two, separate actions (e.g., a left movement and down movement, each originating from joystick center). The size of the partial-repetition cost (26 ms) obtained when action execution was integrated is consistent with those found in other studies where partial action overlap was based on response hand (left or right; e.g., Mattson and Fournier 2008; Stoet and Hommel 1999).

At minimum, these findings suggest that actions associated with different stimuli (that occur either at the same point or at different points in time) can be integrated to form a single action plan when the intent is to execute the action features mapped to these stimuli as one action as opposed to two separate actions. However, there is an abundance of evidence that perceptual stimuli and actions can be strongly associated (Fagioli, Ferlazzo, & Hommel, 2007; Fagioli, Hommel, & Schubotz, 2007; Humphreys et al., 2010; Schubotz and von Cramon 2002, 2003; Tipper 2010; Tipper, Paul, & Hayes, 2006; Tucker and Ellis 1998), and can be integrated to form a single event or episode (Barsalou 2009; Hommel 2004, 2005; Hommel et al., 2001; Logan 1988). According to Logan and Etherton (1994), each episode represents a specific combination of goals, stimuli, interpretations, and responses that occur together on a specific occasion. Thus, on the basis of past research findings and interpretations, we assume that evidence indicating that actions are integrated also indicates that the perceptual features associated with these actions are integrated, and together form a representation of an action event. This assumption is consistent with instance theories (Logan 1988), modal theories of memory (e.g., Barsalou 2009; Barsalou, Simmons, Barbey, & Wilson 2003), and the theory of event coding (Hommel et al., 2001). It is unlikely that the strong associations between perceptual features and action features are broken such that only the perceptual features or only the action features are represented in an event. If this was the case, learning specific actions to different types of stimuli could not occur.

Interpreting our results within the context above suggests that the intent to combine or not combine actions across perceptual stimuli can influence whether one or more events are perceived or constructed, respectively. The temporal availability of stimuli (and hence the temporal availability of their associated actions) appear less important in determining whether one or more events are perceived or constructed—at least when the temporal availability of stimuli occur close together (1 s apart) in time. It is not clear whether these findings will generalize when stimuli (and their associated actions) occur at more disparate time intervals. It could be that the availability of action features must occur within a particular temporal window in order to integrate action features into a single event. If so, simply the intent to integrate actions across stimuli may be ineffective at establishing event boundaries in cases where the stimuli (containing the relevant action features to be integrated) are presented too far apart in time. Regardless of this limitation, however, our study suggests that an observer’s intent to execute actions in an integrated or separate fashion can influence the construction of event boundaries.

The finding that action representation (integrated vs. separate) is important in defining the representation of a single event, is consistent with the findings by Zacks and colleagues (summarized in Kurby and Zacks 2008), who showed that passive observers described fine-grained events (e.g., turning on an oven) in terms of actions on objects as opposed to the objects themselves. Our findings are also consistent with ideomotor theories of action control that assume that the actions one is currently producing to an event can constrain the events one currently perceives. Because both actions and events are hierarchically organized by goals and subgoals (e.g., Hommel et al., 2001; Kurby and Zacks 2008; Rosenbaum, Inhoff, & Gordon, 1984), it follows that how one represents the action(s) corresponding to different perceptual stimuli will determine whether or not one initially experiences or constructs this perception-action information as one or more meaningful events.

The present study focused on fine-grained as opposed to coarse-grained events where the event durations were short and the actions forming the events were simple. Kurby and Zacks (2008) provide evidence that perceptual features may play a more dominant role in formation of coarse-grained events (e.g., making a cake), where events are longer in duration and are more complex. However, this finding may be limited to passive observers. Event formation by active observers requires the generation of goals (and subgoals) and the integration and organization of the action features needed to meet these goals, whereas event formation by passive observers does not (Higuchi et al., 2012; Vogt et al., 2007). Thus, it is possible that the integration or chunking of action features may play a dominant role in defining coarser-grained events (e.g., making a cake)—at least for active observers.

There is an abundance of evidence that perceiving actions, perceiving objects with associated actions, and reading verbs can automatically activate motor areas of the brain (e.g., Cattaneo, Caruana, Jezzini, & Rizzolatti, 2009; Pulvermüller 2005; see the review by Tipper 2010). It is argued that motor activation is induced by action words, affordances of perceptual objects, and other perceptual events because motor activation is intrinsically linked to the processing of semantics and affordances (e.g., Fagioli, Ferlazzo, & Hommel, 2007; Fagioli, Hommel, & Schubotz, 2007; Schubotz and von Cramon 2002, 2003; Tipper et al., 2006; Tucker and Ellis 1998). In other words, motor activation is part of the meaning or concept, regardless of whether the meaning or concept is represented by a word(s), object(s), or actions of another individual (e.g., Pulvermüller 2005; Rueschemeyer, Lindemann, Van Elk, & Bekkering, 2009), which is consistent with modal theories of memory (e.g., Barsalou 2009; Barsalou et al., 2003). Our results are consistent with these findings and interpretations. Moreover, our study suggests that action execution based on one’s task goals determines whether one or more meaningful events are constructed. This suggests that how one intends to execute an action associated with different perceptual stimuli can influence the grain size of an event stored in memory.

Notes

The number of participants was larger in the condition in which stimuli were presented separately and the action was executed separately than in the other stimulus–action conditions, in order to make sure that the lack of a partial-repetition cost was not due to insufficient power for this condition.

The JZS Bayes factor (Rouder, Speckman, Sun, Morey, & Iverson, 2009) is a measure indicating how much more likely the null hypothesis is to be true than is the alternative hypothesis. According to Jeffries (1961), a value of 3 provides “some evidence” for favoring the null. Our value exceeds this, suggesting that the null hypothesis is 4.68 times more likely to be true than the alternative hypothesis.

References

Barsalou, L. W. (2009). Simulation, situated conceptualization, and prediction. Philosophical Transactions of the Royal Society B, 364, 1281–1289. doi:10.1098/rstb.2008.0319

Barsalou, L. W., Simmons, W. K., Barbey, A., & Wilson, C. D. (2003). Grounding conceptual knowledge in modality-specific systems. Trends in Cognitive Sciences, 7, 84–91. doi:10.1016/S1364-6613(02)00029-3

Cattaneo, L., Caruana, F., Jezzini, A., & Rizzolatti, G. (2009). Representation of goal and movements without overt motor behavior in the human motor cortex: A transcranial magnetic stimulation study. Journal of Neuroscience., 29, 11134–11138. doi:10.1523/jneurosci.2605-09.2009

Fagioli, S., Ferlazzo, F., & Hommel, B. (2007a). Controlling attention through action: Observing actions primes action-related stimulus dimensions. Neuropsychologia, 45, 3351–3355. doi:10.1016/j.neuropsychologia.2007.06.012

Fagioli, S., Hommel, B., & Schubotz, R. (2007b). Intentional control of attention: Action planning primes action-related stimulus dimensions. Psychological Research, 71, 22–29. doi:10.1007/s00426-005-0033-3

Fournier, L. R., Gallimore, J. M., & Feizli, K. (2013). On the importance of being first: Serial order effects in the interaction between action plans and ongoing actions. Manuscript submitted for publication.

Fournier, L. R., Wiediger, M. D., McMeans, R., Mattson, P. S., Kirkwood, J., & Herzog, T. (2010). Holding a manual response sequence in memory can disrupt vocal responses that share semantic features with the manual response. Psychological Research, 74, 359–369. doi:10.1007/s00426-009-0256-9

Greenwald, A. G. (1970). Sensory feedback mechanisms in performance control: With special reference to the ideo-motor mechanism. Psychological Review, 77, 73–99. doi:10.1037/h0028689

Higuchi, S., Holle, H., Roberts, N., Eickhoff, S. B., & Vogt, S. (2012). Imitation and observational learning of hand actions: Prefrontal involvement and connectivity. NeuroImage, 59, 1668–1683. doi:10.1016/j.neuroimage.2011.09.021

Hommel, B. (2004). Event files: feature binding in and across perception and action. Trends in Cognitive Sciences, 8, 494–500. doi:10.1016/j.tics.2004.08.007

Hommel, B. (2005). Perception in action: Multiple roles of sensory information in action control. Cognitive Processing, 6, 3–14.

Hommel, B., & Colzato, L. (2004). Visual attention and the temporal dynamics of feature integration. Visual Cognition, 11, 483–521. doi:10.1080/13506280344000400

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The Theory of Event Coding (TEC): A framework for perception and action planning. Behavioral and Brain Sciences, 24, 849–878. doi:10.1017/S0140525X01000103

Humphreys, G. W., Yoon, E. Y., Kumar, S., Lestou, V., Kitadono, K., Roberts, K. L., & Riddoch, M. J. (2010). The interaction of attention and action: From seeing action to acting on perception. British Journal of Psychology, 101, 185–206. doi:10.1348/000712609x458927

Jeffries, H. (1961). Theory of probability (3rd ed.). Oxford, UK: Oxford University Press, Clarendon Press.

Kurby, C. A., & Zacks, J. M. (2008). Segmentation in the perception and memory of events. Trends in Cognitive Sciences, 12, 72–79. doi:10.1016/j.tics.2007.11.004

Logan, G. D. (1988). Toward an instance theory of automatization. Psychological Review, 95, 492–527. doi:10.1037/0033-295X.95.4.492

Logan, G. D., & Etherton, J. L. (1994). What is learned during automatization? The role of attention in constructing an instance. Journal of Experimental Psychology: Learning, Memory, and Cognition, 20, 1022–1050. doi:10.1037/0278-7393.20.5.1022

Mattson, P. S., & Fournier, L. R. (2008). An action sequence held in memory can interfere with response selection of a target stimulus, but does not interfere with response activation of noise stimuli. Memory & Cognition, 36, 1236–1247. doi:10.3758/MC.36.7.1236

Mattson, P., Fournier, L., & Behmer, L., Jr. (2012). Frequency of the first feature in action sequences influences feature binding. Attention, Perception, & Psychophysics, 74, 1446–1460. doi:10.3758/s13414-012-0335-7

Prinz, W. (1990). A common coding approach to perception and action. In O. Neumann & W. Prinz (Eds.), Relationships between perception and action (pp. 167–201). New York, NY: Springer.

Prinz, W. (1997). Perception and action planning. European Journal of Cognitive Psychology, 9, 129–154. doi:10.1080/713752551

Pulvermüller, F. (2005). Brain mechanisms linking language and action. Nature Reviews Neuroscience, 6, 576–582. doi:10.1038/nrn1706

Pulvermüller, F., Hauk, O., Nikulin, V. V., & Ilmoniemi, R. J. (2005). Functional links between motor and language systems. European Journal of Neuroscience, 21, 793–797. doi:10.1111/j.1460-9568.2005.03900.x

Rosenbaum, D. A., Inhoff, A. W., & Gordon, A. M. (1984). Choosing between movement sequences: A hierarchical editor model. Journal of Experimental Psychology: General, 113, 372–393. doi:10.1037/0096-3445.113.3.372

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16, 225–237. doi:10.3758/PBR.16.2.225

Rueschemeyer, S., Lindemann, O., Van Elk, M., & Bekkering, H. (2009). Embodied cognition: The interplay between automatic resonance and selection-for-action mechanisms. European Journal of Social Psychology, 39, 1180–1187. doi:10.1002/ejsp.662

Schubotz, R. I., & von Cramon, D. Y. (2002). Predicting perceptual events activates corresponding motor schemes in lateral premotor cortex: An fMRI study. NeuroImage, 15, 787–796. doi:10.1006/nimg.2001.1043

Schubotz, R. I., & von Cramon, D. Y. (2003). Functional–anatomical concepts of human premotor cortex: Evidence from fMRI and PET studies. NeuroImage, 20, S120–S131.

Stoet, G., & Hommel, B. (1999). Action planning and the temporal binding of response codes. Journal of Experimental Psychology: Human Perception and Performance, 25, 1625–1640. doi:10.1037/0096-1523.25.6.1625

Stoet, G., & Hommel, B. (2002). Interaction between feature binding in perception and action. In W. Prinz & B. Hommel (Eds.), Common mechanisms in perception and action: Attention and performance XIX (pp. 538–552). New York, NY: Oxford University Press.

Tipper, S. P. (2010). From observation to action simulation: The role of attention, eye-gaze, emotion, and body state. Quarterly Journal of Experimental Psychology, 63, 2081–2105. doi:10.1080/17470211003624002

Tipper, S., Paul, M., & Hayes, A. (2006). Vision-for-action: The effects of object property discrimination and action state on affordance compatibility effects. Psychonomic Bulletin & Review, 13, 493–498. doi:10.3758/bf03193875

Tucker, M., & Ellis, R. (1998). On the relations between seen objects and components of potential actions. Journal of Experimental Psychology: Human Perception and Performance, 24, 830–846. doi:10.1037/0096-1523.24.3.830

Vogt, S., Buccino, G., Wohlschläger, A. M., Canessa, N., Shah, N. J., Zilles, K., & Fink, G. R. (2007). Prefrontal involvement in imitation learning of hand actions: effects of practice and expertise. NeuroImage, 37, 1371–1383. doi:10.1016/j.neuroimage.2007.07.005

Wiediger, M. D., & Fournier, L. R. (2008). An action sequence withheld in memory can delay execution of visually guided actions: The generalization of response compatibility interference. Journal of Experimental Psychology: Human Perception and Performance, 34, 1136–1149. doi:10.1037/0096-1523.34.5.1136

Zacks, J. M., Speer, N. K., Swallow, K. M., Braver, T. S., & Reynolds, J. R. (2007). Event perception: A mind–brain perspective. Psychological Bulletin, 133, 273–293. doi:10.1037/0033-2909.133.2.273

Zacks, J. M., & Tversky, B. (2001). Event structure in perception and conception. Psychological Bulletin, 127, 3–21. doi:10.1037/0033-2909.127.1.3

Zmigrod, S., & Hommel, B. (2009). Auditory event files: Integrating auditory perception and action planning. Attention, Perception, & Psychophysics, 71, 352–362. doi:10.3758/APP.71.2.352

Author note

We thank Gordon Logan, Wolfgang Prinz, and Bernhard Hommel for their useful comments on the revision of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

Four conditions were formed by factorially combining the stimulus presentation conditions (integrated vs. separate) and the action execution conditions (integrated vs. separate). The instructions for each combined condition are described in the table below. The first column represents the integrated stimuli and integrated action execution conditions. The second column represents the integrated stimuli and separate action execution conditions. The third column represents the separate stimuli and separate action execution conditions, and the fourth column represents the separate stimuli and integrated action execution conditions.

Instructions for Integrated Stimuli, Integrated Action: IS–IA | Instructions for Integrated Stimuli, Integrated Action: IS–SA | Instructions for Separate Stimuli, Separate Action: SS–SA | Instructions for Separate Stimuli, Integrated Action: SS–IA | |

|---|---|---|---|---|

a) | “You will be presented with two symbol sets. Your task will be to plan the appropriate response to the first symbol set and hold this action plan in memory. Then you will see a second symbol set that you will respond to immediately. Finally, you will respond to the first symbol set in the correct order.” | “You will be presented with two symbol sets. Your task will be to plan the appropriate responses to the first symbol set and hold these action plans in memory. Then you will see a second symbol set that you will respond to immediately. Finally, you will respond to the first symbol set in the correct order.” | “You will be presented with three symbols. Your task will be to plan the appropriate responses to the first two symbols and hold these action plans in memory. Then you will see a third symbol that you will respond to immediately. Finally, you will respond to the first two symbols in the correct order.” | “You will be presented with three symbols. Your task will be to plan the appropriate response to the first two symbols and hold this action plan in memory. Then you will see a third symbol that you will respond to immediately. Finally, you will respond to the first two symbols in the correct order.” |

b) | “The first symbol set will appear above the fixation cross. The first symbol set will consist of a left- or right-pointing arrowhead above a red or green colored box. This symbol set will prompt you to respond with two movements, the arrowhead will indicate the first movement and the colored box will indicate the second movement.” | Same as IS-IA | “The first two symbols will appear above the fixation cross. The first symbol will consist of an arrowhead pointing to the left or right, and this symbol will indicate your first response to plan.” | “The first two symbols will appear above the fixation cross. The first symbol will consist of an arrowhead pointing to the left or right, and this symbol will indicate the first part of your response to plan.” |

Responses were then described for left and right arrowheads (left and right movement, respectively). | Responses were then described for left and right arrowheads (left and right movement, respectively). | |||

Responses were then described for left and right arrowheads (left and right movement, respectively) and then for red and green boxes (e.g., up and down movement, respectively). | Same as IS-IA | Examples were shown and participants responded to left and right arrowhead directions. They were instructed to recenter the joystick after responding to each arrowhead (to advance the screen). | ||

The instructions continued with a description of the 4 possible symbol sets with corresponding responses (e.g., “If the first symbol set contains a LEFT-pointing arrowhead above a RED box, the correct action is to move the joystick LEFT then UP”). | Same as IS-IA | “The second symbol you will see is a colored box below the arrowhead but above the fixation cross. The color of this box will indicate your response. | “The second symbol you will see is a colored box below the arrowhead but above the fixation cross. The color of this box will indicate the second part of your planned response.” | |

Participants were then presented with practice symbol sets (pictures of arrowheads with boxes) with the correct response written next to the symbol set. | Participants were then presented with practice symbol sets (pictures of arrowheads with boxes) with the correct response written next to the symbol set. | Responses were then described for red and green boxes (e.g., up and down movement, respectively). | Responses were then described for red and green boxes (e.g., up and down movement, respectively). | |

Afterward, they were instructed “More specifically, if you saw the following symbols (arrowhead left above a red box), your response would be to move the joystick to the left (back to center) then up.” | Examples were shown and participants responded to red and green boxes. They were instructed to recenter the joystick after responding to each box color (to advance the screen). | Participants were then presented with practice symbol sets (pictures of arrowheads and boxes) with the correct response written next to the symbol set. | ||

Then they were shown one of the symbol sets and were to make the correct response (e.g., a continuous movement “left then up”). They were to recenter the joystick (to advance to the next screen). | Then they were shown two different symbol sets and were to make the correct response to advance the screen (e.g., left arrowhead with red box requires the response: move left, back to center, move up). | This was followed by practice with two different colored boxes. During practice, participants moved the joystick to the appropriate direction (up or down) and recentered the joystick (to advance the screen). | Then they were shown one of the symbol sets (e.g., left pointing arrowhead with a red box) and participants had to move the joystick correctly (e.g., a continuous movement left then up). They were to recenter the joystick after responding to the arrowhead direction and box color (to advance to the next screen). | |

c) | This was followed by a description of second symbol set (H, S) and their required responses (e.g., “left” or “right” movement). They received 2 practice trials with these 2 symbols, and an instruction that emphasized response speed. | Same as IS–IA | This was followed by a description of the third symbol (H, S) and their required responses (e.g., “left” or “right” movement). They received 2 practice trials with these 2 symbols, and an instruction that emphasized response speed. | Same as SS–SA |

d) | “Okay, you have seen the two symbol sets, here is where things get tricky. During the experiment you will first see the arrowhead and the colored box (the first symbol set).” | Same as IS–IA | “Okay, you have seen the three symbols, here is where things get tricky. During the experiment, you will first see the arrowhead and then the colored box above the fixation cross.” | Same as SS–SA |

e) | “When you see this symbol set, you will plan a response, but you will not execute the response immediately. Instead, you will hold the response to the arrowhead and the colored box in memory.” | “When you see this symbol set, you will plan responses to these symbols, but you will not execute these responses immediately. Instead, you will hold the responses to the arrowhead and the colored box in memory.” | “When you see these two symbols, you will plan responses to them, but you will not respond immediately. Instead you will hold the response to the arrowhead and the response to the box in memory, in order (i.e., response to arrowhead followed by response to colored box).” | “When you see these two symbols, you will plan a response to them, but you will not respond immediately. Instead, you will hold the response to the arrowhead and colored box in memory.” |

f) | “Next, you will see the second symbol set (the letter H or S) below the fixation cross. As soon as you see the H or S, immediately execute the correct response to the letter. The response to the H or S should be executed as FAST as possible.” | Same as IS–IA | “Next, you will see a third symbol (either an H or S) below the fixation cross. As soon as you see the H or S, execute the correct response to the letter immediately. The response to the H or S should be executed as FAST as possible.” | Same as SS–SA |

g) | “After responding as fast as possible to the letter, please calmly recenter the joystick and then calmly respond to the ARROWHEAD and COLORED BOX. Remember to recenter the joystick after making your response to each symbol set.” | “After responding as fast as possible to the letter, you will calmly respond to the ARROWHEAD and then the COLORED BOX. Remember to recenter the joystick after making your response to each symbol.” | “After responding as fast as possible to the letter, you will calmly respond to the ARROWHEAD and then the COLORED BOX. The background for inputting your responses will correspond to the background color in which the symbol originally appeared. Remember to recenter the joystick after making your response to each symbol.” | “After responding as fast as possible to the letter, you will calmly respond to the ARROWHEAD and COLORED BOX. Remember to recenter the joystick after making your response.” |

h) | “It is critical that you respond to the LETTER (H or S) appearing below the fixation cross as QUICKLY and ACCURATELY as possible. For this response, we will record your speed.” | Same | Same | Same |

i) | “Your response to the arrowhead and colored box DOES NOT have to be fast. For this response, we will record accuracy only.” | “Your responses to the arrowhead and colored box DO NOT have to be fast. For these responses, we will record accuracy only.” | Same as IS–SA | Same as IS–IA |

j) | “To recap, here is what will happen: First, you will see an arrowhead and a colored box above the fixation cross.” | Same as IS–IA | “To recap, here is what will happen: First, you will see an arrowhead, then a colored box above the fixation cross.” | Same as SS–SA |

k) | “Plan a response to the arrowhead and colored box, and hold this response plan in memory. Then you will see a letter (H or S) below the fixation cross. Respond immediately and as fast and accurately as you can once you have identified the letter.” | “Create a plan for your responses to the arrowhead and colored box (i.e., left–up, left–down, right–up, or right–down). Then you will immediately respond to the letter (i.e., left or right) below the fixation cross as quickly and as accurately as possible.” | “Create a plan for your responses to the arrowhead and colored box. Then you will immediately respond to the letter below the fixation cross as quickly and as accurately as possible.” | “Create a plan for your response to the arrowhead and colored box. Then you will immediately respond to the letter below the fixation cross as quickly and as accurately as possible.” |

“Then, recenter the joystick (calmly) and recall the response planned to the arrowhead and colored box. After completing this response, please recenter the joystick.” | “Afterward, recall the response planned to the arrowhead and the colored box respectively.” | “Afterward, recall the response planned to the arrowhead and then the colored box, respectively.” | “Afterward, recall the response planned to the arrowhead and colored box.” | |

“If you are confused about this task, please ask the experimenter for clarification.” | “If you are confused about this task, please as the experimenter for clarification.” | “If you are confused about this task, please ask the experimenter for clarification.” | “If you are confused about this task, please ask the experimenter for clarification.” | |

l) | Instructions then described the performance feedback presented on each trial, keeping the hand on the joystick at all times, and the importance of not using external cues (moving fingers, limbs, head, saying the response out loud) to recall the actions associated with the first symbol set, but to rely on memory. | Same | Same | Same |

Rights and permissions

About this article

Cite this article

Fournier, L.R., Gallimore, J.M. What makes an event: Temporal integration of stimuli or actions?. Atten Percept Psychophys 75, 1293–1305 (2013). https://doi.org/10.3758/s13414-013-0461-x

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13414-013-0461-x