Abstract

Postural changes and the maintenance of postural stability have been shown to affect many aspects of cognition. Here we examined the extent to which selective visual attention may differ between standing and seated postures in three tasks: the Stroop color-word task, a task-switching paradigm, and visual search. We found reduced Stroop interference, a reduction in switch costs, and slower search rates in the visual search task when participants stood compared to when they sat while performing the tasks. The results suggest that the postural demands associated with standing enhance cognitive control, revealing broad connections between body posture and cognitive mechanisms.



Introduction

Recent investigations have revealed the importance of considering the effect of bodily states and movements on cognition. For instance, cognitive processes like preference judgments (Beilock & Holt, 2007; Ramsøy, Jacobsen, Friis-Olivarius, Bagdziunaite, & Skov, 2017), language comprehension (Fischer & Zwaan, 2008; Zwaan, 2014), memory (Canits, Pecher, & Zeelenberg, 2018; Glenberg, 1997), self-evaluation (Briñol, Petty, & Wagner, 2009), creativity (Andolfi, Di Nuzzo, & Antonietti, 2017; Hao, Xue, Yuan, Wang, & Runco, 2017), and problem solving (Ma, 2017; Thomas & Lleras, 2007) are all strongly influenced by how we use our bodies to interact with the world. Visual information processing, including visual search and change detection, is also affected by the actions we plan and execute (Bekkering & Neggers, 2002; Glenberg, Witt, & Metcalfe, 2013; Tseng et al., 2010; Vishton et al., 2007; Wohlschläger, 2000), and visual perception changes to reflect our physiological states and ability to act (for reviews, see Proffitt, 2006 and Witt, 2011) as well as the relatively stable dimensions of the body, including body size (Stefanucci & Geuss, 2009). Even the proximity with which the hands are held to a visual stimulus can alter how it is perceived, attended, remembered, and processed for meaning (e.g., Abrams, Davoli, Du, Knapp, & Paull, 2008; Cosman & Vecera, 2010; Davoli, Du, Montana, Garverick, & Abrams, 2010; Reed, Grubb, & Steele, 2006; Tseng & Bridgeman, 2011). Taken together, the findings suggest rich interconnections between cognition and the control of the body.

One particularly important body process is the control of posture. While running, walking, or even standing, one’s safety may depend upon not falling down. And research on postural control has revealed that maintenance of postural stability can sometimes be so demanding that it impairs simultaneous performance of simple cognitive tasks. For example, Kerr, Condon, and McDonald (1985) have shown that performing a balancing task (standing heel-to-toe while blindfolded) impairs spatial working memory (but not verbal memory) compared to sitting. Impairments in spatial but not non-spatial working memory have also been reported when standing compared to sitting (Chen, Yu, Niu, & Liu, 2018). Others have found impairment in both spatial and non-spatial reaction time tasks using auditory stimuli while participants maintained a standing posture with their eyes closed compared to when sitting (Yardley et al., 2001).

Conversely, the performance of challenging cognitive tasks can have an adverse effect on maintenance of postural stability, especially for older adults and demanding postural tasks (e.g., Rankin, Woollacott, Shumway-Cook, & Brown, 2000; see Woollacott & Shumway-Cook, 2002, for a review). Taken together the findings suggest that maintenance of postural stability may tap some of the same limited mental resources that underlie many cognitive tasks.

Recent results, however, have shown that the relation between cognition and postural control is not so simple. Rosenbaum, Mama, and Algom (2017) found that Stroop interference is reduced in a standing compared to a seated posture. They hypothesized that the attentional demands of maintaining a standing posture can lead to enhanced attentional selectivity.

One explanation for the apparently discrepant findings is that mild postural demands may produce an alerting effect, resulting in enhanced attentional selectivity, whereas more demanding postural control tasks require additional resources, tapping into some limited central resource and thereby impairing cognitive or attentional performance. Before pursuing that possibility, however, it is necessary to establish precisely the effects of postural demands on a broader range of tasks. Our goal in the present paper was to explore more fully the influence of postural demands on key aspects of visual cognition.

In the present study, we examined potential differences in visual cognition between seated and standing postures in three representative tasks. First, we sought to replicate the findings of Rosenbaum et al. (2017) and confirm that Stroop interference is reduced when standing. Next, we studied task-switching, an often-used task requiring selective attention and cognitive control. Finally, we examined whether standing alters visual search. A reduced visual search rate reveals prolonged inspection of the search elements that might lead to a more thorough assessment of each item (Abrams et al., 2008).

Experiment 1: Stroop color-word task

Accomplishing one’s goals often requires the attentionally demanding selection of task-relevant information. A commonly-used test of how well people can select task-relevant information is the Stroop (1935) color-word task (cf. MacLeod, 1991). In the standard Stroop paradigm, participants must respond to the color in which a letter-string appears while attempting to ignore the meaning of the string (e.g., “GREEN” presented in red font). The task is typically much more difficult when the letter-string spells out the name of an alternate color, because of interference that occurs between the meaning of the letter-string and the appropriate color-name response (Egeth, Blecker, & Kamlet, 1969). Such interference is commonly quantified as the additional time required to respond to incongruent (e.g., “RED” presented in a green color) compared to congruent (e.g., “GREEN” presented in a green color) stimuli.

Interference in the Stroop task has been shown to vary in magnitude depending on what is done with the body. For example, Stroop interference is attenuated by holding one’s hands near the stimuli (Davoli et al., 2010), and can be reduced by stepping backwards during the task (Koch, Holland, Hengstler, & van Knippenberg, 2009). Together, these findings suggest that the attentional mechanisms involved in the selection of task-relevant information are sensitive to certain bodily states and actions.

Additionally, Rosenbaum et al. (2017) demonstrated that Stroop interference is reduced when standing, hypothesizing that the increased demands of standing result in enhanced selectivity of attention. In the present experiment, we sought to replicate the Rosenbaum et al. results before examining other tasks. Participants here performed the Stroop color-word task both while sitting and while standing.

Method

Participants

Fourteen Washington University undergraduates each participated in one 30-min session for course credit. The sample size was similar to the sample size in Experiments 1 and 2 of Rosenbaum et al. (2017).

Apparatus

Stimuli were presented on a 15-in. LCD flat-panel display, which was raised or lowered according to the posture condition. For both the sitting and the standing postures, viewing distance was fixed at 76.2 cm by an adjustable chinrest. Participants held a 6-cm diameter response button in each hand in a manner that was identical for both postures. Figure 1 depicts the experimental environment and the two postures.

The sitting (left panel) and standing (right panel) postures assumed by participants in the experiments. The distance between the hands was equal in the two postures. The room was dimly lit during actual experimentation. The environment was similar in Experiments 2 and 3, but the display device differed across experiments

Stimuli, procedure, and design

All stimuli were presented on a black background. Each trial began with a white fixation cross presented at the center of the screen. After 500 ms, a letter-string replaced the cross. Participants were instructed to respond as quickly and accurately as possible to the color (green or red) in which the letter-string appeared. They indicated their response by pressing the corresponding response button. Participants were also instructed that they should ignore all other aspects of the letter-string including its meaning. Participants heard an error tone if they pressed the wrong button or did not respond within 1,500 ms. There was a 1,500-ms inter-trial interval.

There were three kinds of letter-strings: congruent strings spelled color-words that were consistent with the color in which they appeared (e.g., “RED” appearing in red); incongruent strings spelled color-words that were inconsistent with the color in which they appeared, but consistent with the alternative (i.e., incorrect) response (e.g., “RED” appearing in green); neutral strings consisted of a series of Xs in red or green. Half of the neutral strings were three Xs in length (i.e., “XXX”) and the other half were five Xs long (i.e., “XXXXX”) to match the lengths of the strings “RED” and “GREEN”. Half of the neutral strings of each length were presented in green, and the other half were presented in red. Additionally, for both congruent and incongruent trials, green and red strings were presented equally often.

We employed a 2 (posture: sitting, standing) × 3 (congruency: neutral, congruent, incongruent) within-subjects design. Participants performed one half of the experiment in each posture, with posture order counterbalanced across subjects. For each posture, there were two initial blocks of practice trials, followed by four blocks of experimental trials. Each block (practice and experimental) consisted of 12 neutral, 12 congruent, and 12 incongruent trials, for a total of 144 experimental trials in each posture. The order of trials in each block was randomly determined. There was a brief break between blocks.
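The block structure described above can be sketched in Python. This is a minimal illustration under our own reading of the design, not the software used in the experiment: the names `StroopTrial`, `make_block`, and `make_session` are hypothetical, each letter-string is represented only by its text and ink color, and timing and practice blocks are omitted. One block holds 12 neutral, 12 congruent, and 12 incongruent trials, with each ink color used equally often in every condition and in each neutral string length.

```python
import random
from dataclasses import dataclass

COLORS = ("red", "green")


@dataclass(frozen=True)
class StroopTrial:
    condition: str  # "neutral", "congruent", or "incongruent"
    text: str       # letter-string shown on screen
    ink: str        # color in which the string is drawn


def make_block(rng: random.Random) -> list[StroopTrial]:
    """One 36-trial block: 12 neutral, 12 congruent, 12 incongruent, shuffled."""
    trials: list[StroopTrial] = []
    for ink in COLORS:
        other = "green" if ink == "red" else "red"
        # Neutral: 3 "XXX" and 3 "XXXXX" per ink color (6 of each length overall)
        trials += [StroopTrial("neutral", "XXX", ink)] * 3
        trials += [StroopTrial("neutral", "XXXXX", ink)] * 3
        # Congruent: the word names the ink color (e.g., "RED" in red)
        trials += [StroopTrial("congruent", ink.upper(), ink)] * 6
        # Incongruent: the word names the other color, i.e., the wrong response
        trials += [StroopTrial("incongruent", other.upper(), ink)] * 6
    rng.shuffle(trials)  # random trial order within the block
    return trials


def make_session(seed: int, n_blocks: int = 4) -> list[list[StroopTrial]]:
    """Experimental blocks for one posture (practice blocks omitted)."""
    rng = random.Random(seed)
    return [make_block(rng) for _ in range(n_blocks)]


blocks = make_session(seed=1)
print(sum(len(b) for b in blocks))  # 144 experimental trials per posture
```

Shuffling within each block, rather than across the whole session, follows the statement that trial order was randomized within blocks.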

Results

Mean response times for correct responses are shown in Fig. 2. A 2 (posture: sitting, standing) × 3 (congruency: neutral, congruent, incongruent) ANOVA revealed that, on average, participants were slower to respond in the incongruent condition compared to both the congruent and neutral conditions (F(2, 26) = 3.45, p = .047, ηp² = .21). This pattern is consistent with typical Stroop interference, indicating that the to-be-ignored meaning of the letter-strings influenced the speed with which participants could identify their colors. However, as can be seen in Fig. 2, the magnitude of the interference was modulated by posture (posture × congruency interaction: F(2, 26) = 4.73, p = .018, ηp² = .27). Specifically, all of the observed Stroop interference occurred in the seated posture (mean difference between incongruent and congruent response times = 15.64 ms, SD = 20.10 ms; significantly greater than zero, t(13) = 2.91, p = .012), whereas a negligible amount occurred while standing (M = -.38 ms, SD = 14.77 ms; not different from zero, t(13) = .10, n.s.). There was no main effect of posture (F < 1).
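As a consistency check, the two interference tests and the interaction effect size can be recovered from the summary statistics alone. The sketch below assumes that each participant's interference score is the incongruent minus congruent mean RT and that the reported t tests are one-sample tests against zero; it uses the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The function names are illustrative, and no raw data are involved.

```python
import math


def one_sample_t(mean: float, sd: float, n: int) -> float:
    """t statistic testing whether a mean difference score differs from zero."""
    return mean / (sd / math.sqrt(n))


def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)


# Interference = incongruent minus congruent RT (ms); n = 14 participants
t_sit = one_sample_t(15.64, 20.10, 14)
t_stand = one_sample_t(-0.38, 14.77, 14)
# Posture x congruency interaction, F(2, 26) = 4.73
eta_interaction = partial_eta_squared(4.73, 2, 26)

print(f"sitting:  t(13) = {t_sit:.2f}")
print(f"standing: t(13) = {t_stand:.2f}")
print(f"interaction partial eta squared = {eta_interaction:.2f}")
```

The standing value comes out at about -0.10 because the mean difference is negative; the text reports its magnitude.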

Mean response times in the Stroop color-word task (Experiment 1) as a function of posture and stimulus-response congruency. Error bars represent within-subject standard errors

Mean error percentages are shown in Table 1. The overall error rate was 4.6%. A 2 × 3 repeated measures ANOVA revealed that accuracy did not depend on posture (F < 1). A significant main effect of congruency indicated that the most errors occurred on incongruent trials (F(2, 26) = 3.76, p = .037, ηp² = .22), consistent with typical Stroop interference. Posture did not interact with congruency (F(2, 26) = 1.47, p = .25, ηp² = .10); therefore, the observed response time differences are not attributable to a speed-accuracy tradeoff.

Discussion

In the present experiment we found that the magnitude of Stroop interference was markedly reduced when participants adopted a standing posture, consistent with the Rosenbaum et al. (2017) findings. There was no main effect of posture, suggesting that the mild postural control requirements associated with standing enhanced attentional selectivity with no apparent cost.

Experiment 2: Task-Switching

In Experiment 1, we showed that Stroop interference was reduced when standing compared to sitting, suggesting that selectivity of attention may be enhanced when standing. In order to further investigate this possibility, we explored whether task-switching, a measure of cognitive control (e.g., Meiran, 1996, 2000; Weidler & Abrams, 2014), is affected by posture. Here, we asked participants to identify either the color or shape of a visual stimulus. Switching tasks from one trial to another (e.g., identifying a color and then identifying a shape) is typically slower and less accurate than performing the same task on successive trials (e.g., identifying a shape and then identifying a shape again). If the reduction in accuracy, the decrease in speed, or both are attenuated when standing, that would indicate that cognitive control is enhanced when standing.

Method

Participants

Thirty Washington University undergraduates each participated in one 30-min session for course credit. We chose a sample size equal to that used by Weidler and Abrams (2014), who also examined the effect of a postural change (hand proximity) on task-switching using an identical paradigm.

Apparatus

Stimuli were presented on an LCD flat-panel display, which was raised or lowered according to the posture condition. For both the sitting and the standing postures, viewing distance was fixed at 57 cm by an adjustable chinrest. Participants held a 6-cm diameter response button in each hand in a manner that was identical for both postures (the postures are shown in Fig. 1).

Stimuli, procedure, and design

The sequence of events during the experiment is shown in Fig. 3. Each trial began with a cue that was a 25° × 25° square composed of either a solid or a dashed white line, centered in the display. The border type (solid or dashed) indicated to the participant whether to respond to the color or shape of the upcoming target. The cue assignment was counterbalanced across participants. After 1,000 ms, a target appeared at the center of the display, while the cue remained on-screen. The target was a yellow or blue square or triangle subtending approximately 12° × 12°. All stimuli were presented on a black background. Participants were instructed to respond as quickly and accurately as possible to either the color or the shape of the target, as indicated by the cue. Each of the two buttons was assigned to one of the colors and one of the shapes at the beginning of the experiment, and participants selected their response by pressing the corresponding response button. Participants heard an error tone and saw a 5-s error message if they pressed the wrong button or did not respond within 1,500 ms. The next cue appeared 200 ms after the correct response or error message.

Sequence of events on two representative trials from Experiment 2

Participants first performed 12 untimed and 24 speeded practice trials. The experiment consisted of eight test blocks, each of which included a buffer trial followed by 48 test trials. No-switch trials occurred when the task on a given trial matched the task on the previous trial, and switch trials occurred when the task on a given trial did not match that on the previous trial. The target on each trial was chosen randomly, and switch and no-switch trials were randomly ordered. For both the switch and no-switch trials, the stimulus color and shape were sometimes associated with the same response (congruent), and on other trials with different responses (incongruent). Participants had a break at the end of each block, and the experimenter switched the posture condition after the fourth test block. Starting posture was counterbalanced across participants.
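The switch/no-switch classification depends only on the task cued on the current trial and on the preceding trial, which is why each block begins with a buffer trial. The following is a minimal sketch, assuming a block is stored as a list whose first element is the buffer trial. `Trial`, `label_transitions`, `accuracy`, and `switch_cost_accuracy` are hypothetical names. Trials that follow an error are not excluded, because the text does not say whether they were.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    task: str      # task indicated by the cue: "color" or "shape"
    correct: bool  # whether the response was correct


def label_transitions(block: list[Trial]) -> list[str]:
    """Label trials relative to their predecessor; block[0] is the buffer trial."""
    labels = ["buffer"]  # no predecessor, so it is neither switch nor no-switch
    for prev, cur in zip(block, block[1:]):
        labels.append("no-switch" if cur.task == prev.task else "switch")
    return labels


def accuracy(block: list[Trial], labels: list[str], kind: str) -> float:
    """Proportion correct among trials of one kind (assumes at least one exists)."""
    hits = [t.correct for t, lab in zip(block, labels) if lab == kind]
    return sum(hits) / len(hits)


def switch_cost_accuracy(block: list[Trial]) -> float:
    """Accuracy switch cost: no-switch accuracy minus switch accuracy."""
    labels = label_transitions(block)
    return accuracy(block, labels, "no-switch") - accuracy(block, labels, "switch")


example_block = [
    Trial("color", True),   # buffer trial
    Trial("color", True),   # no-switch
    Trial("shape", False),  # switch
    Trial("shape", True),   # no-switch
    Trial("color", True),   # switch
    Trial("color", True),   # no-switch
]
print(label_transitions(example_block))
print(switch_cost_accuracy(example_block))  # 1.0 - 0.5 = 0.5
```

A reduced switch cost when standing, as reported below, corresponds to a smaller value of this difference in the standing blocks than in the sitting blocks.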

Results

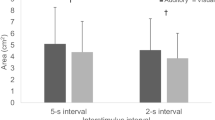

Both response time and accuracy were analyzed with 2 (condition: switch or no-switch) × 2 (congruency: congruent or incongruent) × 2 (posture: sitting or standing) repeated measures ANOVAs. The accuracy results, shown in Fig. 4, revealed a main effect of condition, F(1, 29) = 62.94, p < .001, ηp² = .69: No-switch trials (M = .95, SD = .03) were significantly more accurate than switch trials (M = .90, SD = .06), revealing a typical switch cost. Congruent trials were also more accurate than incongruent ones, F(1, 29) = 67.4, p < .001, ηp² = .70. There was no main effect of posture, F(1, 29) = 2.86, p = .10, ηp² = .09, but importantly there was a significant condition × posture interaction, F(1, 29) = 5.54, p = .026, ηp² = .16. The interaction indicates that there was a reduced switch cost when participants were standing compared to when they were sitting. The analysis of accuracy also revealed a significant congruency × condition interaction, F(1, 29) = 23.33, p < .001, ηp² = .45, with switch costs greater for incongruent than for congruent trials. No other interactions were significant.

Accuracy from Experiment 2. The results indicate that there was a reduced switch cost for the standing condition compared to the sitting condition. Error bars represent within-subject standard error

Mean reaction times for correct responses for each condition are shown in Table 2. There was a main effect of condition, F(1, 29) = 115.10, p < .001, ηp² = .80: No-switch trials were significantly faster than switch trials, revealing a typical switch cost. Congruent trials were also faster than incongruent ones, F(1, 29) = 40.95, p < .001, ηp² = .59. There was no main effect of posture (F < 1). There was a significant congruency × condition interaction, F(1, 29) = 4.77, p = .037, ηp² = .14, with switch costs greater for incongruent than for congruent trials. No other interactions were significant.

Discussion

The present experiment shows that the switch cost (the reduction in accuracy that occurs when the task on a given trial does not match the task on the previous trial, compared to when the two tasks match) is reduced when standing compared to when sitting. As in Experiment 1, there was no main effect of posture, and the visual stimuli were identical in the two postural conditions; thus, these results suggest that the mild demands of maintaining a standing posture benefit the trials that require enhanced selectivity of attention. These results, along with those from Experiment 1, indicate that selectivity of attention is enhanced when standing.

The present results differ somewhat from a recent study that examined the same issue. Stephan, Hensen, Fintor, Krampe, and Koch (2018) found an increased effect of congruency when participants were standing, but failed to find an effect of posture on switch costs, whereas we did here. However, numerous differences between experiments do not permit a direct comparison. For example, participants in our experiment switched between attending to either the color or the shape of a visual object, whereas in the Stephan et al. experiment participants judged the magnitude or parity of an auditorily presented digit. It is possible that the postural demands of standing may enhance visual selectivity (as suggested by each of our experiments) while drawing from some mental resources required for numerical judgments. Additionally, Stephan et al. used a response in the magnitude judgment task that was incompatible with the typical orientation of the mental number line. It is possible that this additional demand altered the performance in some fundamental way. More work will be needed to confirm these possibilities.

Experiment 3: Visual search

Another task in which selectivity of attention is relevant is visual search. Eimer (2015) has suggested that visual search requires the same sort of attentional selectivity and cognitive control studied in Experiments 1 and 2. Thus, if standing enhances such processes, it might also be expected to affect visual search. Only a limited amount of research bears on the question of whether the attentional mechanisms involved in visual search operate consistently across seated and standing postures. In one study of trained baggage screeners, attentional performance during search did not appear to depend on whether one stood or sat to complete the task (Drury et al., 2008). However, Drury et al. only examined the time to find a target, leaving open the possibility that differences do exist in important components of search behavior, such as details of the attentional movements during the search. On the other hand, other studies have specifically examined the deployment and guidance of attention during standing searches (Gilchrist, North, & Hood, 2001; Thomas et al., 2006), but the designs of those studies did not include seated participants. Thus, although the general patterns of attentional performance exhibited by standing participants resembled previous research using seated participants, direct quantitative comparisons between postures were not possible.

In the present experiment, participants performed visual searches both while seated and while standing. Of particular interest was whether posture would affect the shifting of attention between items during search – a critical component of search behavior that reflects how thoroughly items are processed (cf. Abrams et al., 2008; Fox et al., 2000), but which has not been the subject of direct comparison between sitting and standing until now. Shifts of attention during visual search can be assessed using search-rate (Treisman & Gelade, 1980; Wolfe, 1998), the increment in time needed to process an additional item in the search display, with greater (i.e., slower) search-rates indicating prolonged processing of each item.

Method

Participants

Twelve Washington University undergraduates each participated in one 30-min session for course credit. The number of subjects was selected to match that used in similar experiments in our laboratory (e.g., Abrams et al., 2008).

Apparatus

Stimuli were presented on an 18-in. CRT display that was raised or lowered according to the posture condition. For both the sitting and the standing postures, viewing distance was fixed at 90 cm by an adjustable chinrest. Participants held a 6-cm diameter response button in each hand in a manner that was identical for both postures (see Fig. 1).

Stimuli, procedure, and design

All stimuli were presented in black on a white background. At the beginning of each trial, a fixation cross (1.13° × 1.13°) appeared at the center of the screen. After 1 s, a search display appeared within an area 16° high × 25° wide. The number of items in the display (i.e., the set size) varied systematically. The search display consisted of either four or eight block letters (2.26° high × 1.13° wide), one of which was always a target (‘H’ or ‘S’), with the other letters as distractors (‘E’ and ‘U’). The target and distractor identities were randomly selected on each trial. The location of each item in the search display was also randomly selected, with the constraint that items could not appear within .57° of one another.
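One way to generate displays that satisfy these constraints is rejection sampling: draw a candidate position uniformly, keep it only if it is far enough from every item already placed, and repeat. The sketch below is an assumption-laden illustration, not the authors' procedure. It reads the .57° constraint as the gap between the edges of neighboring letters, because letter centers only .57° apart would overlap. It also keeps every letter fully inside the 25° × 16° area and draws each distractor independently from ‘E’ and ‘U’. All names (`edge_gap`, `place_items`, `make_display`) are hypothetical.

```python
import math
import random

AREA_W, AREA_H = 25.0, 16.0  # search area (deg): 25 wide x 16 high
ITEM_W, ITEM_H = 1.13, 2.26  # letter size (deg)
MIN_GAP = 0.57               # assumed minimum edge-to-edge gap (deg)


def edge_gap(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Shortest distance between the bounding boxes of letters centred at a and b."""
    dx = max(0.0, abs(a[0] - b[0]) - ITEM_W)
    dy = max(0.0, abs(a[1] - b[1]) - ITEM_H)
    return math.hypot(dx, dy)


def place_items(n: int, rng: random.Random, max_tries: int = 10_000) -> list[tuple[float, float]]:
    """Rejection-sample n letter centres (deg, relative to screen centre)."""
    points: list[tuple[float, float]] = []
    for _ in range(max_tries):
        if len(points) == n:
            break
        # Restrict centres so the whole letter stays inside the search area
        x = rng.uniform(-(AREA_W - ITEM_W) / 2, (AREA_W - ITEM_W) / 2)
        y = rng.uniform(-(AREA_H - ITEM_H) / 2, (AREA_H - ITEM_H) / 2)
        # Accept the candidate only if it keeps the minimum gap to every placed letter
        if all(edge_gap((x, y), p) >= MIN_GAP for p in points):
            points.append((x, y))
    if len(points) < n:
        raise RuntimeError("could not place all items; relax the constraints")
    return points


def make_display(set_size: int, rng: random.Random) -> list[tuple[str, tuple[float, float]]]:
    """One target ('H' or 'S') plus set_size - 1 distractors ('E' or 'U')."""
    letters = [rng.choice("HS")] + [rng.choice("EU") for _ in range(set_size - 1)]
    return list(zip(letters, place_items(set_size, rng)))


display = make_display(8, random.Random(3))
```

Because positions are sampled independently of letter identity, listing the target first does not bias where it appears.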

Participants were instructed to indicate the identity of the target as quickly and as accurately as possible by pushing the appropriate response button. Visual feedback was provided if participants responded within 100 ms (“Too fast!”), did not respond within 1,500 ms (“Too slow!”), or pressed the wrong button (“Wrong key pressed!”). There was a 2-s inter-trial interval.
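The feedback rules amount to a small decision function. In this sketch (hypothetical names), a response counts as too fast when its RT is under 100 ms, and a missing response or one slower than 1,500 ms counts as too slow. Timing messages also take precedence over the wrong-key message. The text does not specify boundary cases or precedence, so these choices are assumptions.

```python
from typing import Optional

TOO_FAST_MS = 100   # responses faster than this trigger "Too fast!"
DEADLINE_MS = 1500  # no response by this time triggers "Too slow!"


def feedback(rt_ms: Optional[float], pressed: Optional[str], target: str) -> Optional[str]:
    """Feedback message for one search trial, or None for a correct, in-window response.

    `pressed` is the target letter mapped to the button the participant pressed."""
    if rt_ms is None or rt_ms > DEADLINE_MS:
        return "Too slow!"
    if rt_ms < TOO_FAST_MS:
        return "Too fast!"
    if pressed != target:
        return "Wrong key pressed!"
    return None
```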

We employed a 2 (posture: sitting, standing) × 2 (set size: 4, 8) within-subjects design. Each participant completed two blocks of 64 trials in one posture, followed by two blocks in the other posture. The posture order was counterbalanced across participants. Participants received an equal number of trials of each set size per block. A brief break separated blocks.

Results

Mean response times for correct responses are shown in Fig. 5. A 2 (posture: sitting, standing) × 2 (set size: 4, 8) repeated measures analysis of variance (ANOVA) revealed that response times increased as set size increased (F(1, 11) = 81.9, p < .001, ηp² = .88), indicating that participants searched displays in an item-by-item (i.e., serial) manner (e.g., Treisman & Gelade, 1980). Furthermore, overall response times did not differ between postures (F < 1), consistent with previous research (Drury et al., 2008). Nevertheless, the two postures yielded different search rates, as evidenced by a posture × set size interaction (F(1, 11) = 5.9, p = .033, ηp² = .35) and as seen in the slopes of the functions in Fig. 5. Search rates were computed separately for sitting and standing by dividing the difference between the response times for four and eight items by four, to estimate the time spent per item. Participants searched through displays at a slower rate when they were standing (17.2 ms/item) compared to when they were sitting (11.2 ms/item). Analysis of the individual search rates shows that a slower rate while standing was exhibited by ten of the 12 participants (p = .039 by a sign test).
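The search-rate computation and the sign test are both simple enough to reproduce directly. With only two set sizes, the search function is the straight line through the two mean RTs. Its slope is the per-item time described above, and its intercept is the RT extrapolated to a set size of zero. The sign test is computed here as an exact two-sided binomial test with p = .5, which reproduces the reported p = .039 for ten of 12 participants. The function names are illustrative, and the RTs in the example call are hypothetical values, not data from the experiment.

```python
from math import comb


def search_function(rt4: float, rt8: float) -> tuple[float, float]:
    """Slope (ms/item) and intercept (ms) of the line through set sizes 4 and 8."""
    slope = (rt8 - rt4) / (8 - 4)
    intercept = rt4 - 4 * slope
    return slope, intercept


def sign_test_two_sided(k: int, n: int) -> float:
    """Exact two-sided binomial sign test with p = .5.

    k participants out of n show the effect (ties assumed already dropped)."""
    tail = min(k, n - k)
    p_one_sided = sum(comb(n, i) for i in range(tail + 1)) / 2 ** n
    return min(1.0, 2 * p_one_sided)


print(search_function(600, 660))  # hypothetical RTs -> (15.0, 540.0)
p = sign_test_two_sided(10, 12)
print(f"p = {p:.3f}")  # p = 0.039 (2 * 79 / 4096)
```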

Mean response times in the visual search task (Experiment 3) as a function of posture and set size. On average, participants searched through displays at a slower rate when they were standing compared to when they were sitting (as evidenced by the slopes of the functions). Error bars represent within-subject standard errors

Mean error percentages are shown in Table 3. The overall error rate was 4.9%. A 2 × 2 repeated measures ANOVA revealed that accuracy did not depend on set size (F(1, 11) = 3.4, p = .09) or on posture (F < 1). However, posture did interact with set size: an increase in set size led to an increase in errors, but only in the standing condition (F(1, 11) = 7.96, p = .017). Taken together with the slower search rate when standing, the interaction suggests that prolonged inspection of each item served to limit the reduction in accuracy associated with standing at the larger set size.

Discussion

In Experiment 3, participants searched visual displays at a slower rate when standing compared to sitting. And while there was no main effect of posture on either response time or accuracy, when the set size increased, errors also increased, but only in the standing condition. This pattern suggests that standing may have impaired some aspects of search, at least for the larger set size. But at the same time, participants devoted more time to inspecting each item when standing (as revealed by the difference in search slopes). This prolonged inspection presumably limited the accuracy cost that the requirement to maintain a standing posture imposed on searches of the larger displays.

Although there was no main effect of posture on response time (RT), participants did respond slightly more quickly to the smaller displays when standing. We chose to emphasize the difference in slopes because a change in the intercept of the search function could reflect effects of many factors that are unrelated to the visual search itself. For example, a physical difference in the response requirements when standing versus sitting, or additional demands of maintaining one posture might cause one posture to have overall slower RTs. Additionally, many other factors, including decision-related processes, could affect the intercepts across conditions. While changes in visual search processes can also affect the intercepts, it is not possible to separate the search-related effects from the influences of other factors here. The slopes, on the other hand, provide a purer measure of processes related to search only – uncontaminated by those other factors, and it is typical to use the slopes to make inferences about the search processes (e.g., Wolfe, 2016). Indeed, the same pattern reported here has been found in experiments that have examined the effects of hand proximity (Abrams & Weidler, 2014, Exp. 3; Abrams et al., 2008, Exps. 1a and 1b; Davoli & Abrams, 2009). One explanation for the pattern is that it reflects a small (but not significant) reduction in the baseline RT in the standing posture. It is possible that the difference in intercepts might reveal something about the effects of standing, but more work would be needed to confirm that possibility.

General discussion

In the present experiments we studied the cognitive and attentional changes associated with the requirement to maintain postural stability. Participants performed three tasks that tap into visual selection and cognitive control while either seated or standing. The requirement to stand led to reduced interference from the to-be-ignored feature in a Stroop task, reduced errors when switching tasks in a visual task-switching paradigm, and prolonged inspection of search elements in a visual search. Taken together, the results reveal an enhancement in cognitive control and attentional selectivity when one is standing compared to sitting.

On the surface, our results appear to contrast with other results that have indicated decrements in cognitive task performance when postural control demands are increased (e.g., Chen et al., 2018; Kerr et al., 1985). However, in these earlier studies postural control was much more demanding than simply maintaining a standing posture: Participants stood heel-to-toe, sometimes while blindfolded. The pattern suggests that moderate postural control demands, such as those associated with standing, may result in heightened arousal – recruiting additional cognitive or attentional resources that are brought to bear on the task at hand. Indeed, recent research has shown that increases in arousal can lead to enhanced cognitive control (Zeng et al., 2017). However, more demanding postural maintenance may deplete limited attentional resources, leading to an impairment in cognitive performance.

It is possible that the changes observed here when standing were triggered not by heightened arousal caused by maintenance of a standing posture, but instead by the mental states typically associated with such a posture. For example, when one’s safety is threatened, one may be likely to stand in order to assess the situation more thoroughly, and flee if necessary. Standing provides a better vantage point for surveillance of the environment, and more readily affords subsequent ambulatory behaviors (e.g., fight or flight). In fact, some primates reflexively stand on their hind limbs when their vision is restricted (Manaka & Sugita, 2009). Adopting a particular posture (in this case, standing) may activate mental states associated with that posture (such as the heightened alertness, attentional selectivity, and cognitive control that might be needed in a fight-or-flight situation). Even though participants in our experiments did not face fight-or-flight situations, it appears that standing engaged mechanisms that yielded more thorough item analysis and more effective selection of task-relevant information. Such changes would be advantageous for acquiring information about a dynamic environment and for informing decisions about how to interact with it.

General support for this possibility comes from research that has revealed changes in visual cognition and perception caused by specific postures or prepared actions. For example, preparation of a power grasp facilitates detection of changes in relatively large objects (Symes, Tucker, Ellis, Vainio, & Ottoboni, 2008) and sensitivity to motion (Thomas, 2015). Preparation of a finger-poking movement enhances sensitivity to luminance differences, whereas a grasping movement enhances perception of object size (Wykowska, Schubö, & Hommel, 2009). And preparation of a pinching action facilitates change detection in small objects (Symes et al., 2008) and perception of form (Thomas, 2015). Each of these changes might help to serve the action that is afforded by the posture that had been adopted. As we have suggested here, the changes we observed when people were standing may also serve to facilitate the actions that are afforded by standing, such as, for example, the ability to engage in a fight-or-flight response.

Our conclusions are similar to those of other researchers who have shown connections between motivational states and the behavioral changes that often accompany those states. Approach and avoidance motivational states, for instance, are typically associated with behaviors that facilitate the acquisition of reward or the avoidance of punishment, respectively. These states, and the behavioral changes that accompany them, can be invoked by producing the movements typically associated with them. An avoidance motivation is sometimes associated with increased cognitive control (Savine, Beck, Edwards, Chiew, & Braver, 2010) and with a narrowing of the scope of attention that favors local over global stimulus elements (Förster, Friedman, Özelsel, & Denzler, 2006). Stepping backward, an avoidance response, reduces Stroop interference (Koch et al., 2009), presumably reflecting this narrowing of attention and increased cognitive control. Similarly, arm extension, an avoidance response that can be used to push an object away from the body, reduces both Stroop interference and switch costs in task-switching (Koch, Holland, & van Knippenberg, 2008) relative to arm flexion, which could be used to pull an object closer. In this context, the effects of standing reported here add to a growing list of changes in vision and cognition that are linked to bodily states, postures, and afforded actions. Furthermore, because standing led to greater cognitive control and more focused selective attention in the present experiments, one might speculate that standing invoked states similar to those invoked by an avoidance motivation.

Other research has also revealed a strong connection between posture and visual selection, with a pattern of results very similar to the one reported here. Specifically, holding one’s hands near a stimulus being evaluated leads to reduced Stroop interference (Davoli et al., 2010), reduced task-switch costs (Weidler & Abrams, 2014), and increased visual search slopes (Abrams et al., 2008), paralleling the findings of the three present experiments. These earlier effects have been attributed to the heightened importance of thoroughly evaluating objects near the body (here, the hands), because such objects could require action or pose more danger than objects that are far away. Given that standing and hand proximity produce similar cognitive changes, a shared mechanism, perhaps related to action planning and execution, could be involved. Whether these two postures affect attention through such a shared mechanism remains a question for future research.

Posture influenced RT (but not accuracy) in Experiment 1 and accuracy (but not RT) in Experiment 2. There was no evidence of a speed-accuracy tradeoff in either experiment; instead, both patterns reflect enhanced cognitive control when standing. It is not clear why posture affected RT in one experiment and accuracy in the other, but because individuals can choose to trade speed for accuracy in tasks such as these, it is difficult to predict whether a given factor will influence one measure, the other, or both. Indeed, Wilckens et al. (2017) examined task-switching using a design similar to that of our Experiment 2, and although they too found typical switch costs in both speed and accuracy (as did we), their interaction of interest, like ours, was present only in accuracy, not in RT. In other studies, effects have been found in RT but not accuracy (Alzahabi, Becker, & Hambrick, 2017). More research will be needed to determine whether these differences reveal something important about the effects of postural change.

Finally, it is worth noting that the vast majority of research on cognition and attention has been conducted with seated participants. The present experiments, however, reveal that attentional processes may be affected by posture in fundamental ways. Importantly, there was no main effect of posture in any of the present experiments, suggesting that the changes in performance were unlikely to have been caused merely by the additional demands of an arbitrary secondary task (here, the task of standing). Thus, it may be worthwhile to consider posture in future studies of cognition and attention.

References

Abrams, R. A., Davoli, C., Du, F., Knapp, W., & Paull, D. (2008). Altered vision near the hands. Cognition, 107, 1035-1047.

Abrams, R. A., & Weidler, B. J. (2014). Tradeoffs in visual processing for stimuli near the hands. Attention, Perception, & Psychophysics, 76, 383-390.

Alzahabi, R., Becker, M. W., & Hambrick, D. Z. (2017). Investigating the relationship between media multitasking and processes involved in task-switching. Journal of Experimental Psychology: Human Perception and Performance, 43(11), 1872–1894. https://doi.org/10.1037/xhp0000412

Andolfi, V. R., Di Nuzzo, C., & Antonietti, A. (2017). Opening the mind through the body: The effects of posture on creative processes. Thinking Skills and Creativity, 24, 20-28. https://doi.org/10.1016/j.tsc.2017.02.012

Beilock, S. L., & Holt, L. E. (2007). Embodied preference judgments: Can likeability be driven by the motor system? Psychological Science, 18, 51-57.

Bekkering, H., & Neggers, S. F. W. (2002). Visual search is modulated by action intentions. Psychological Science, 13, 370-374.

Briñol, P., Petty, R. E., & Wagner, B. (2009). Body posture effects on self-evaluation: A self-validation approach. European Journal of Social Psychology, 39, 1053-1064.

Canits, I., Pecher, D., & Zeelenberg, R. (2018). Effects of grasp compatibility on long-term memory for objects. Acta Psychologica, 182, 65-74. https://doi.org/10.1016/j.actpsy.2017.11.009

Chen, Y., Yu, Y., Niu, R., & Liu, Y. (2018). Selective effects of postural control on spatial vs. nonspatial working memory: A functional near-infrared spectral imaging study. Frontiers in Human Neuroscience, 12, 243. https://doi.org/10.3389/fnhum.2018.00243

Cosman, J. D., & Vecera, S. P. (2010). Attention affects visual perceptual processing near the hand. Psychological Science, 21, 1254-1258.

Davoli, C. C., & Abrams, R. A. (2009). Reaching out with the imagination. Psychological Science, 20, 293-295.

Davoli, C. C., Du, F., Montana, J., Garverick, S., & Abrams, R. A. (2010). When meaning matters, look but don’t touch: The effects of posture on reading. Memory & Cognition, 38, 555-562.

Drury, C., Hsiao, Y., Joseph, C., Joshi, S., Lapp, J., & Pennathur, P. (2008). Posture and performance: Sitting vs. standing for security screening. Ergonomics, 51, 290-307.

Egeth, H., Blecker, D., & Kamlet, A. (1969). Verbal interference in a perceptual comparison task. Perception & Psychophysics, 6, 355-356.

Eimer, M. (2015). EPS Mid-Career Award 2014: The control of attention in visual search: Cognitive and neural mechanisms. The Quarterly Journal of Experimental Psychology, 68, 2437-2463. https://doi.org/10.1080/17470218.2015.1065283

Fischer, M. H., & Zwaan, R. A. (2008). Embodied language: A review of the role of motor system in language comprehension. The Quarterly Journal of Experimental Psychology, 61, 825-850.

Förster, J., Friedman, R. S., Özelsel, A., & Denzler, M. (2006). Enactment of approach and avoidance behavior influences the scope of perceptual and conceptual attention. Journal of Experimental Social Psychology, 42, 133–146. https://doi.org/10.1016/j.jesp.2005.02.004

Fox, E., Lester, V., Russo, R., Bowles, R., Pichler, A., & Dutton, K. (2000). Facial expressions of emotion: Are angry faces detected more efficiently? Cognition & Emotion, 14, 61-92.

Gilchrist, I., North, A., & Hood, B. (2001). Is visual search really like foraging? Perception, 30, 1459-1464.

Glenberg, A. M. (1997). What memory is for. Behavioral and Brain Sciences, 20, 1-55.

Glenberg, A. M., Witt, J. K., & Metcalfe, J. (2013). From the revolution to embodiment: 25 years of cognitive psychology. Perspectives on Psychological Science, 8, 573-585. https://doi.org/10.1177/1745691613498098

Hao, N., Xue, H., Yuan, H., Wang, Q., & Runco, M. A. (2017). Enhancing creativity: Proper body posture meets proper emotion. Acta Psychologica, 173, 32-40. https://doi.org/10.1016/j.actpsy.2016.12.005

Kerr, B., Condon, S. M., & McDonald, L. A. (1985). Cognitive spatial processing and the regulation of posture. Journal of Experimental Psychology: Human Perception and Performance, 11, 617–622. https://doi.org/10.1037/0096-1523.11.5.617

Koch, S., Holland, R. W., Hengstler, M., & van Knippenberg, A. (2009). Body locomotion as regulatory process: Stepping backward enhances cognitive control. Psychological Science, 20, 549-550.

Koch, S., Holland, R. W., & van Knippenberg, A. (2008). Regulating cognitive control through approach-avoidance motor actions. Cognition, 109, 133-142.

Ma, J. Y. (2017). Multi-party, whole-body interactions in mathematical activity. Cognition and Instruction, 35, 141-164. https://doi.org/10.1080/07370008.2017.1282485

MacLeod, C. (1991). Half a century of research on the Stroop effect: An integrative review. Psychological Bulletin, 109, 163-203.

Manaka, Y., & Sugita, Y. (2009). Insufficient visual information leads to spontaneous bipedal walking in Japanese monkeys. Behavioural Processes, 80, 104-106.

Meiran, N. (1996). Reconfiguration of processing mode prior to task performance. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22, 1423-1442. https://doi.org/10.1037/0278-7393.22.6.1423

Meiran, N. (2000). Modeling cognitive control in task-switching. Psychological Research, 63, 234-249. https://doi.org/10.1007/s004269900004

Proffitt, D. R. (2006). Embodied perception and the economy of action. Perspectives on Psychological Science, 1, 110-122.

Ramsøy, T. Z., Jacobsen, C., Friis-Olivarius, M., Bagdziunaite, D., & Skov, M. (2017). Predictive value of body posture and pupil dilation in assessing consumer preference and choice. Journal of Neuroscience, Psychology, and Economics, 10, 95-110. https://doi.org/10.1037/npe0000073

Rankin, J. K., Woollacott, M. H., Shumway-Cook, A., & Brown, L. A. (2000). Cognitive influence on postural stability: A neuromuscular analysis in young and older adults. Journal of Gerontology: Medical Sciences, 55, M112-M119.

Reed, C. L., Grubb, J. D., & Steele, C. (2006). Hands up: Attentional prioritization of space near the hand. Journal of Experimental Psychology: Human Perception and Performance, 32, 166-177.

Rosenbaum, D., Mama, Y., & Algom, D. (2017). Stand by your Stroop: Standing up enhances selective attention and cognitive control. Psychological Science, 28, 1864-1867. https://doi.org/10.1177/0956797617721270

Savine, A., Beck, S., Edwards, B., Chiew, K., & Braver, T. (2010). Enhancement of cognitive control by approach and avoidance motivational states. Cognition and Emotion, 24, 338-356.

Stefanucci, J. K., & Geuss, M. (2009). Big people, little world: The body influences size perception. Perception, 38, 1782-1795.

Stephan, D. N., Hensen, S., Fintor, E., Krampe, R., & Koch, I. (2018). Influences of postural control on cognitive control in task-switching. Frontiers in Psychology, 9, 1153. https://doi.org/10.3389/fpsyg.2018.01153

Stroop, J. (1935). Studies of interference in serial verbal reactions. Journal of Experimental Psychology, 18, 643-662.

Symes, E., Tucker, M., Ellis, R., Vainio, L., & Ottoboni, G. (2008). Grasp preparation improves change detection for congruent objects. Journal of Experimental Psychology: Human Perception and Performance, 34, 854.

Thomas, L. E. (2015). Grasp posture alters visual processing biases near the hands. Psychological Science, 26, 625-632.

Thomas, L. E., Ambinder, M., Hsieh, B., Levinthal, B., Crowell, J., Irwin, D., et al. (2006). Fruitful visual search: Inhibition of return in a virtual foraging task. Psychonomic Bulletin & Review, 13, 891-895.

Thomas, L. E., & Lleras, A. (2007). Moving eyes and moving thought: On the spatial compatibility between eye movements and cognition. Psychonomic Bulletin & Review, 14, 663-668.

Treisman, A. M., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology, 12, 97-136.

Tseng, P., & Bridgeman, B. (2011). Improved change detection with nearby hands. Experimental Brain Research, 209, 257-269.

Tseng, P., Tuennermann, J., Roker-Knight, N., Winter, D., Scharlau, I., & Bridgeman, B. (2010). Enhancing implicit change detection through action. Perception, 39, 1311-1321.

Vishton, P. M., Stephens, N. J., Nelson, L. A., Morra, S. E., Brunick, K. L., & Stevens, J. A. (2007). Planning to reach for an object changes how the reacher perceives it. Psychological Science, 18, 713-719.

Weidler, B. J., & Abrams, R. A. (2014). Enhanced cognitive control near the hands. Psychonomic Bulletin & Review, 21, 462-469.

Wilckens, K. A., Hall, M. H., Erickson, K. I., Germain, A., Nimgaonkar, V. L., Monk, T. H., & Buysse, D. J. (2017). Task switching in older adults with and without insomnia. Sleep Medicine, 30, 113–120. https://doi.org/10.1016/j.sleep.2016.09.002

Witt, J. K. (2011). Action’s effect on perception. Current Directions in Psychological Science, 20, 201-206.

Wohlschläger, A. (2000). Visual motion priming by invisible actions. Vision Research, 40, 925-930.

Wolfe, J. M. (1998). What can 1 million trials tell us about visual search? Psychological Science, 9, 33-39.

Wolfe, J. M. (2016). Visual search revived: The slopes are not that slippery: A reply to Kristjansson (2015). i-Perception, 7(3), 2041669516643244. https://doi.org/10.1177/2041669516643244

Woollacott, M., & Shumway-Cook, A. (2002). Attention and the control of posture and gait: A review of an emerging area of research. Gait & Posture, 16, 1-14. https://doi.org/10.1016/S0966-6362(01)00156-4

Wykowska, A., Schubö, A., & Hommel, B. (2009). How you move is what you see: Action planning biases selection in visual search. Journal of Experimental Psychology: Human Perception and Performance, 35, 1755–1769. https://doi.org/10.1037/a0016798

Yardley, L., Gardner, M., Bronstein, A., Davies, R., Buckwell, D., & Luxon, L. (2001). Interference between postural control and mental task performance in patients with vestibular disorder and healthy controls. Journal of Neurology, Neurosurgery & Psychiatry, 71, 48–52. https://doi.org/10.1136/jnnp.71.1.48

Zeng, Q., Qi, S., Li, M., Yao, S., Ding, C., & Yang, D. (2017). Enhanced conflict-driven cognitive control by emotional arousal, not by valence. Cognition and Emotion, 31, 1083-1096. https://doi.org/10.1080/02699931.2016.1189882

Zwaan, R. A. (2014). Embodiment and language comprehension: Reframing the discussion. Trends in Cognitive Sciences, 18, 229-234. https://doi.org/10.1016/j.tics.2014.02.008

Open practices statement

The data from all the experiments are available at: http://rabrams.net under the “Resources” tab.

Additional information

William H. Knapp III deceased 9-10-2017

About this article

Cite this article

Smith, K.C., Davoli, C.C., Knapp, W.H. et al. Standing enhances cognitive control and alters visual search. Atten Percept Psychophys 81, 2320–2329 (2019). https://doi.org/10.3758/s13414-019-01723-6

DOI: https://doi.org/10.3758/s13414-019-01723-6