Abstract

The link between perception and action allows us to interact fluently with the world. Objects which ‘afford’ an action elicit a visuomotor response, facilitating compatible responses. In addition, positioning objects to interact with one another appears to facilitate grouping, indicated by patients with extinction being better able to identify interacting objects (e.g. a corkscrew going towards the top of a wine bottle) than the same objects when positioned incorrectly for action (Riddoch, Humphreys, Edwards, Baker, & Willson, Nature Neuroscience, 6, 82–89, 2003). Here, we investigate the effect of action relations on the perception of normal participants. We found improved identification of briefly-presented objects when in correct versus incorrect co-locations for action. For the object that would be ‘active’ in the interaction (the corkscrew), this improvement was enhanced when it was oriented for use by the viewer’s dominant hand. In contrast, the position-related benefit for the ‘passive’ object was stronger when the objects formed an action-related pair (corkscrew and bottle) compared with an unrelated pair (corkscrew and candle), and it was reduced when spatial cues disrupted grouping between the objects. We propose that these results indicate two separate effects of action relations on normal perception: a visuomotor response to objects which strongly afford an action; and a grouping effect between objects which form action-related pairs.


Introduction

A critical role of visual perception is to guide actions. To facilitate smooth interaction with the world, objects ‘afford’ actions associated with their use (Gibson, 1979). Viewing a graspable object or a tool leads to activation in visuomotor (left premotor and parietal) regions of the cortex (Chao & Martin, 2000; Grèzes & Decety, 2002), even when there is no intention to act. These regions are also engaged when participants plan and execute grasping movements (Binkofski & Buccino, 2006; Culham, Cavina-Pratesi, & Singhal, 2006), indicating that perceiving an object which affords an action can automatically potentiate appropriate motor programs (Grèzes, Tucker, Armony, Ellis, & Passingham, 2003). Consistent with this, behavioural studies show that viewing manipulable objects facilitates compatible responses, so that participants are, for example, faster to make a right-hand response when viewing a teapot with its handle turned to the right than when the handle is turned to the left (Tucker & Ellis, 1998). This visuomotor priming response (Craighero, Fadiga, Umiltà, & Rizzolatti, 1996) occurs when the orientation of the handle is irrelevant to the task (Tucker & Ellis, 1998), and even when the object itself is irrelevant (Phillips & Ward, 2002), suggesting that the action properties of objects have an automatic effect on motor responses. Here, we use the term ‘visuomotor response’ to describe object affordances which would result in automatic motor priming and activation in visuomotor dorsal stream regions.

Interestingly, there is evidence that object affordances influence perception as well as action. Riddoch et al. (2003) tested patients with visual extinction, who show limited attention to stimuli presented to the side contralateral to their lesion when a competing object is present on the ipsilesional side. The patients were better able to identify two concurrently presented objects that appeared to be interacting (e.g. a corkscrew going towards the top of a wine bottle) than pairs of objects placed in incorrect positions for their combined use. That is, the perceived interaction between the objects ameliorated their extinction. Recovery from extinction is also found when items are grouped by Gestalt grouping cues such as collinearity or common contrast polarity (Gilchrist, Humphreys, & Riddoch, 1996). Riddoch et al. proposed that the action relationship between interacting objects enabled them to be perceptually grouped, so that they were selected together rather than competing (in a spatially biased manner), for attentional resources. Green and Hummel (2006) have reported consistent data with healthy young participants, who were better at confirming that a target picture matched a written label (e.g. ‘glass’) when a ‘distractor’ object (a jug) was positioned to interact with the target. This occurred when there was a short (100-ms) interval between the target and distractor, but not with a longer interval (250 ms), suggesting that the benefit arose from perceptual grouping of the two stimuli.

The present research uses an object identification task to further investigate the effect of object affordances on normal perception. In particular, we are interested in the relationship between the two effects described above: the visuomotor response to objects which afford an action, and the perceptual response to objects which appear to interact (e.g. the perceptual grouping effect). Do the perceptual and visuomotor effects of object affordances reflect the same underlying mechanism, or are separate processes involved in each? On the one hand, the perceptual response to affordances could be driven by a visuomotor response which feeds forward / back to influence perception. This would be consistent with evidence for a close coupling between action and perception. For example, target discrimination is improved when participants prepare to make a reaching action towards the target location (Deubel, Schneider, & Paprotta, 1998). This coupling between action and perception is found even when the target appears at a predictable location and participants would benefit from attending elsewhere. On the other hand, neuroimaging studies show that the ventral visual stream (typically associated with object identification rather than action) not only processes the visual features of objects, such as their shape and orientation, but also responds to their abstract functional use, such as the way in which they are manipulated and the context in which they are used (Grill-Spector, 2003; Martin, 2007). Riddoch and colleagues (Riddoch, Humphreys, Heslop, & Castermans, 2002; Yoon & Humphreys, 2007; Yoon, Humphreys, & Riddoch, 2005) have proposed a direct (non-semantic) route from vision to categorical representations of action (lift, cut, sit, and so forth), which allows appropriate actions to be rapidly accessed from the structural properties of the object(s) and/or learned object–action associations. 
This suggests that perceptual grouping of interacting objects could result from a visual response to the stimuli without any requirement for a visuomotor response. Neuroimaging data from our laboratory suggest that this may be the case (Roberts & Humphreys, 2010a). We found that viewing objects that were correctly versus incorrectly positioned for action led to increased activation in ventral visual areas (lateral occipital complex and fusiform gyrus), but found no evidence for changes in activation in the more dorsal visuomotor regions.

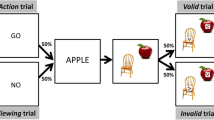

In the current experiments, participants were presented with brief images of pairs of objects which were either in the correct co-locations for action (enabling perceptual grouping) or in incorrect co-locations (Fig. 1). The task was simply to identify the objects. Each pair of objects comprised an ‘active’ object, which would be manipulated in the action (e.g. a jug or knife), and a ‘passive’ object, which would be the target of the action (e.g. a glass or apple). Following Riddoch et al. (2003), we expect improved object identification when the objects are correctly co-located for action (Factor 1: object positioning). To evaluate the relationship between perceptual and visuomotor responses to object affordances, we then manipulate two factors which might be expected to have a stronger impact on one rather than the other affordance-based response (see below): (1) the relationship between the objects; whether they form an action-related pair or are unrelated (Factor 2: object pairing); and (2) the location of the objects relative to the viewer; i.e. whether the active object is oriented for use by the viewer’s dominant hand or their other hand (Factor 3: object orientation). Based on the evidence reviewed below, we hypothesise that perceptual grouping will be stronger for related than for unrelated pairs, and that the visuomotor response will be stronger when the objects are oriented for use by the dominant hand.

a) Perceptual grouping is stronger for action-related objects.

Riddoch et al. (2006) compared the effects of action-positioning for pairs of objects that were familiar as a pair (e.g. wine bottle and wine glass), unfamiliar but still portraying a plausible action (e.g. wine bottle and bucket), and unfamiliar and unlikely to be used together (e.g. wine bottle and tennis ball). Recovery from extinction was found only when the objects could plausibly be used together in an action; no benefit was found for objects that would not be used in a combined action, indicating that only plausibly-interacting objects were perceptually grouped.

The finding that grouping occurred for both familiar and unfamiliar pairs of plausibly-interacting objects indicates that the benefit for action-positioning is based on the action properties of the objects and not just the visual familiarity of the pairing. Consistent with this, Humphreys, Riddoch, and Fortt (2006) found that extinction was not improved when non-interacting objects were placed in familiar versus unfamiliar co-locations (e.g. the sun above, rather than below, a tree). This confirms that the benefit for action-positioning is distinct from the effects obtained when objects are presented within coherent scenes (Biederman, 1972).

b) Object orientation influences the visuomotor response.

Neuroimaging and motor priming studies reveal an enhanced visuomotor response to objects which have high affordance (e.g. tools vs 3D shapes) (Creem-Regehr & Lee, 2005), or are presented in an action context (Tipper, Paul, & Hayes, 2006). In addition, Petit, Pegna, Harris, and Michel (2006) found an early premotor/motor-cortex response when a graspable object was viewed in easily graspable positions (handle towards the viewer’s dominant hand) compared with awkward positions (handle away from viewer). Handy, Grafton, Shroff, Ketay, and Gazzaniga (2003) combined evidence from EEG and fMRI to demonstrate that graspable objects can capture attention when placed in appropriate positions for use, and that this is associated with a visuomotor response. The EEG study revealed an enhanced P1 (an early visual ERP component known to be modulated by attention) when graspable objects were located in the right and lower visual fields. This led Handy et al. to conclude that graspable objects can ‘grab’ attention. The fMRI study confirmed that presenting tools in the right visual field increased the visuomotor response (in premotor and parietal regions).

Interestingly, Riddoch et al. (2003) also found a bias towards action-related objects in an action context. When patients did not show recovery from extinction (i.e. when only one of the objects was correctly identified), they tended to report the object that would be ‘active’ in the interaction, but only when the objects were in correct positions for their combined use. When the objects were in incorrect positions, patients tended to report the ipsilesional object, consistent with their spatial bias. Similarly, we found that healthy participants show a bias in temporal order judgements towards the ‘active’ member of a pair of objects positioned correctly rather than incorrectly for action (Roberts & Humphreys, 2010b). Positioning the objects for action led to a small but reliable shift in the point of subjective simultaneity (PSS), indicating relatively faster processing of the active object when it was presented in an action context. This effect was found for both action-related and unrelated objects; the effect was not mediated by object pairing.

In Experiment 1, we presented participants with pairs of objects that were (1) positioned in correct or incorrect co-locations for action (positioning); (2) in (familiar) action-related or (unfamiliar) action-unrelated pairs (pairing); and (3) oriented for use by the viewer’s dominant hand or their other hand (orientation) (Fig. 1). We evaluate the effect of these factors on identification of the active and passive objects. We hypothesise that object identification will be improved when the objects are positioned for action, and that this effect will be enhanced when the objects form a familiar, action-related pair. In addition, we expect improved identification of the active object when it is positioned for use with a passive object (i.e. when it is presented in an action context), and for this effect to be enhanced when it is positioned in an appropriate location for use (i.e. when it is oriented for use by the viewer’s dominant hand). If perceptual grouping of interacting objects is driven by a visuomotor response, we would expect changes to the visuomotor response (i.e. changes expected when the active object is placed in an action context and in an appropriate orientation for use) to also improve identification of the passive object. In Experiment 2, we attempt to further separate the perceptual and visuomotor responses to object affordances by cueing attention to one of the objects or to neither object. Cueing attention to part of the display should interfere with perceptual grouping of the objects (Goldsmith & Yeari, 2003), but not with an automatic visuomotor response to the objects (Phillips & Ward, 2002).

Fig. 1. Example stimuli. The objects could be correctly or incorrectly positioned for their combined use (alternating rows). Object pairs comprised an active object (that would be manipulated in the action: the corkscrew/match) and a passive object (the bottle/candle). The active object could be oriented for use by the dominant hand (to the right for a right-handed viewer; columns 1 and 2) or the other hand (to the left for a right-handed viewer; columns 3 and 4). To manipulate object pairing, the objects were organised into ‘sets’ comprising two action-related pairs of objects (Set 1 = corkscrew - bottle, match - candle; see Appendix 1 for a complete list). Objects were viewed in action-related pairs (top rows) or re-paired within the set to form unrelated pairs (bottom rows)

Experiment 1: effects of action positioning, orientation, and pairing on object identification

Method

Participants

Twenty volunteers (5 male, 3 left-handed, mean age 22 years) were recruited from the University of Birmingham research participation scheme. Participants gave written informed consent and either received course credit or were paid for their time.

Stimuli

The stimuli were 50 greyscale clip-art style images of ‘active’ objects (objects that would be actively manipulated in an action) paired with related and unrelated ‘passive’ objects (objects typically held still during the action; see Fig. 1 for example stimuli and Appendix 1 for a complete list of pairs). To ensure that each object was presented an equal number of times in each condition, related object pairs were organised into pairs of pairs (‘sets’, see Appendix 1). This allowed unrelated pairs to be formed by swapping the passive objects within each set [i.e. in Set 1, the corkscrew was presented with a bottle (related pair) and a candle (unrelated pair)]. As far as possible the unrelated pairs did not show an appropriate action (with some limitations: for example, while we were careful not to re-pair the jug with any sort of container, a jug could of course be used to pour water onto anything). Each pair of (related and unrelated) objects was displayed in correct and incorrect positions for action, with the active object oriented to the right (appropriate for use by a right-handed viewer) or to the left. Incorrectly-positioned pairs were formed by horizontally flipping the active item, giving the incorrect active items the same angle of rotation as correctly-positioned active items presented on the other side (Fig. 1), to control for effects of object rotation on identification rates (Lawson & Jolicoeur, 1998). The majority of pairs showed the active item above the passive item; six pairs showed the active item below the passive item. Active items had a smaller approximate size than passive items (active: 5.58 cm², range 1.12–11.9; passive: 8.56 cm², range 0.4–19.8). Twelve volunteers (1 male, average age 22 years, 2 left-handed) were asked to rate the object pairs for whether the objects appeared to be being used together, based on both the identity and position of the objects. 
Ratings were on a scale from 1 (‘definitely not being used together’) to 5 (‘definitely being used together’). Pairs of related objects received higher ratings than unrelated objects (F(1,11) = 62.07, p < 0.001), as did objects that were positioned correctly versus incorrectly for action (F(1,11) = 15.38, p < 0.01). The effect of positioning for action was stronger for related pairs (4.45 vs 3.56) than for unrelated pairs (1.79 vs 1.63), resulting in a significant interaction between pairing and positioning (F(1,11) = 19.96, p < 0.01). There was no effect on the ratings of whether the objects were oriented for use by the dominant hand or the other hand.
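
The within-set re-pairing described above can be sketched in a few lines of Python. This is an illustration only, not the authors' materials code: the function names and the tuple layout are assumptions, and only Set 1 is taken from the text (the remaining sets are listed in Appendix 1).

```python
# Illustrative sketch only: function names and data layout are hypothetical.

# One 'set' holds two action-related (active, passive) pairs.
# Set 1 is taken from the text; the other sets are listed in Appendix 1.
SET_1 = (("corkscrew", "bottle"), ("match", "candle"))


def build_pairs(object_set):
    """Return (related, unrelated) lists of (active, passive) pairs for one set."""
    (active_a, passive_a), (active_b, passive_b) = object_set
    related = [(active_a, passive_a), (active_b, passive_b)]
    # Unrelated pairs are formed by swapping the passive objects within the set.
    unrelated = [(active_a, passive_b), (active_b, passive_a)]
    return related, unrelated


def appearance_counts(pairs):
    """Count how often each object appears in a list of pairs."""
    counts = {}
    for pair in pairs:
        for obj in pair:
            counts[obj] = counts.get(obj, 0) + 1
    return counts


related, unrelated = build_pairs(SET_1)
```

Because the swap stays inside a set, every object appears equally often in the related and unrelated conditions, which is the balancing property the set structure is meant to guarantee.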

Procedure

Participants were presented with each of the 400 object pairs (50 active items paired with related and unrelated passive items; presented in correct and incorrect positions for action; with the active object to the left or to the right), and were asked to identify both objects. Participants viewed the stimuli on a CRT monitor (with a refresh rate of 100 Hz) from a distance of approximately 60 cm. At the start of each trial a fixation cross was presented for 2,000 ms. Object pairs were then presented for 50 ms, followed by a 300-ms mask composed of small sections taken from a comparable set of greyscale clip-art style images (see Fig. 2). Participants verbally identified the objects and then pressed the space bar to initiate the next trial. An experimenter noted their responses. Before starting the experiment, participants completed two practice blocks using a different set of object pairs (hammer - nail, knife - butter; teaspoon - mug, pin - balloon; hacksaw - pipe, teapot - teacup). In the first practice block (six trials), object pairs were presented for 1,000 ms to familiarise participants with the experimental protocol. The second practice block (12 trials) included feedback but was otherwise identical to the main experiment.
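
As a quick check on the design arithmetic, the sketch below recomputes the trial count and converts the stated durations into whole monitor frames at 100 Hz. The constant and function names are hypothetical, and the code is not taken from the experiment software.

```python
# Illustrative sketch only: names are hypothetical, values come from the Procedure.
N_ACTIVE_ITEMS = 50
PAIRINGS = ("related", "unrelated")
POSITIONINGS = ("correct", "incorrect")
ORIENTATIONS = ("left", "right")
REFRESH_HZ = 100


def n_trials():
    """Number of object pairs in the fully crossed design."""
    return N_ACTIVE_ITEMS * len(PAIRINGS) * len(POSITIONINGS) * len(ORIENTATIONS)


def ms_to_frames(duration_ms, refresh_hz=REFRESH_HZ):
    """Convert a duration in ms to a whole number of frames, or raise if it does not fit."""
    frames, remainder = divmod(duration_ms * refresh_hz, 1000)
    if remainder:
        raise ValueError(f"{duration_ms} ms is not a whole number of frames at {refresh_hz} Hz")
    return frames
```

Under these values the design has 400 trials, and the 2,000-ms fixation, 50-ms display, and 300-ms mask correspond to 200, 5, and 30 frames respectively.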

Results

For each participant, we calculated the proportion of correctly-identified objects in each condition. A relaxed scoring system was applied where any appropriate object name was accepted (e.g. for ‘ketchup’ we accepted ‘tomato ketchup’, ‘bottle’, and ‘bottle of sauce’, and for ‘coin’ we accepted ‘money’ and ‘pound’). To aid this process, a separate group of five participants (all female, mean age 19 years) viewed and named each object individually. Their responses contributed to the list of appropriate object names, and were also used to identify responses that were reasonable but technically incorrect (e.g. ‘nail’ for ‘screw’, ‘cup’ for ‘glass’, ‘pliers’ for ‘secateurs’). These responses were also accepted as correct.
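
The relaxed scoring rule can be sketched as a lookup of accepted names per target. The dictionary below contains only the examples quoted above; the full list used in the study is not reproduced here, and the function names and the normalisation step are assumptions made for illustration.

```python
# Illustrative sketch only: the accepted-name list is limited to examples from the text.
ACCEPTED_NAMES = {
    "ketchup": {"ketchup", "tomato ketchup", "bottle", "bottle of sauce"},
    "coin": {"coin", "money", "pound"},
    # Reasonable but technically incorrect names that were also accepted.
    "screw": {"screw", "nail"},
    "glass": {"glass", "cup"},
    "secateurs": {"secateurs", "pliers"},
}


def normalise(response):
    """Lower-case a verbal response and collapse whitespace (an assumed convention)."""
    return " ".join(response.lower().split())


def is_correct(target, response):
    """True if the response is an accepted name for the target object."""
    accepted = ACCEPTED_NAMES.get(target, {target})
    return normalise(response) in accepted


def proportion_correct(trials):
    """Proportion of (target, response) trials scored as correct."""
    if not trials:
        raise ValueError("no trials to score")
    return sum(is_correct(target, response) for target, response in trials) / len(trials)
```

For example, `is_correct("screw", "nail")` is scored as correct under this rule, whereas `is_correct("coin", "note")` is not.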

The proportions of correct responses in each condition were entered into three-way analyses of variance (ANOVA) contrasting positioning (correct or incorrect positions for action), pairing (related or unrelated passive item), and orientation (active object oriented for use by the dominant or other hand; see Fig. 1). Separate ANOVAs were conducted for the active and passive objects. Figure 3 shows the proportion of correctly-identified objects in each condition. Identification of both active and passive objects was significantly better when they formed a related than an unrelated pair (active: 66% related vs 58% unrelated; F(1,19) = 108.33, p < 0.001; passive: 58% vs 47%; F(1,19) = 120.42, p < 0.001), and when the objects were correctly rather than incorrectly positioned for action (active: 64% correctly-positioned vs 60% incorrectly-positioned; F(1,19) = 40.08, p < 0.001; passive: 53% vs 52%; F(1,19) = 4.89, p < 0.05).
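
Because every factor here has two levels and all factors are within-participant, each main effect has one numerator degree of freedom, and its F ratio equals the square of a one-sample t statistic computed on per-participant contrast scores. The sketch below illustrates this equivalence for the positioning main effect. It is not the authors' analysis script: the function names and the `cells` layout are hypothetical, and no data from the study are included.

```python
# Illustrative sketch only: names and data layout are hypothetical.
from math import sqrt
from statistics import mean, stdev


def contrast_f(scores):
    """Return (F, (df1, df2)) for a 1-df within-participant contrast.

    `scores` holds one contrast score per participant; F equals t squared,
    where t is the one-sample t statistic testing the scores against zero.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two participants")
    sd = stdev(scores)
    if sd == 0:
        raise ValueError("contrast scores have zero variance")
    t = mean(scores) / (sd / sqrt(n))
    return t * t, (1, n - 1)


def positioning_scores(cells):
    """Per-participant difference: mean(correct) minus mean(incorrect) positioning.

    `cells` is a list with one dict per participant, mapping
    (positioning, pairing, orientation) to proportion correct.
    """
    scores = []
    for participant in cells:
        correct = mean(v for (pos, _, _), v in participant.items() if pos == "correct")
        incorrect = mean(v for (pos, _, _), v in participant.items() if pos == "incorrect")
        scores.append(correct - incorrect)
    return scores
```

With 20 participants the denominator degrees of freedom are 19, matching the F(1,19) values reported in this section.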

Fig. 3. Proportion of correctly-identified objects in each condition in Experiments 1 and 2. Asterisks indicate significant and near-significant differences between identification rates for correctly- and incorrectly-positioned objects, based on repeated measures t tests (***p < 0.001; **p < 0.05; *p < 0.1)

For the active objects, there was a significant interaction between the orientation of the objects (oriented for use by the dominant hand or other hand) and the positioning of the objects (correct or incorrect for action) (F(1,19) = 21.94, p < 0.001). To explore the interaction, we conducted separate ANOVAs for pairs where the objects were oriented for use by the dominant hand and pairs where they were oriented for use by the other hand. This showed a stronger benefit for positioning the objects for action when the active object was oriented for use by the dominant hand (66% correctly-positioned vs 60% incorrectly-positioned; F(1,19) = 68.03, p < 0.001) than when it was oriented for use by the other hand (63% vs 61%; F(1,19) = 6.12, p < 0.05). There was no interaction between the pairing of the objects and the position of the objects (F(1,19) = 0.18, p = 0.68). The three-way interaction between pairing, positioning, and orientation was also not significant (F(1,19) = 0.006, p = 0.94).

In contrast to the data with active objects, for passive objects there was a significant interaction between pairing (related or unrelated) and positioning for action (F(1,19) = 4.75, p < 0.05), but no interaction between the orientation of the objects (dominant hand or not) and positioning for action (F(1,19) = 0.64, p = 0.43). Object position (correct vs incorrect for action) influenced performance when the objects formed a related pair (60% correctly positioned vs 56% incorrectly positioned; F(1,19) = 10.54, p < 0.01) but not when they formed an unrelated pair (47% vs 47%; F(1,19) = 0.002, p = 0.97). As with active objects, the three-way interaction was not significant (F(1,19) = 0.002, p = 0.96).

Bias to the active object

Active objects tended to be smaller than passive objects (5.58 cm² compared with 8.56 cm²) and for both active and passive objects there was a positive correlation between object size and identification rates (active items: Pearson’s correlation coefficient = 0.35, p < 0.05, passive items: 0.33, p < 0.05). Nevertheless, identification was better for active objects (62%) than for passive objects (53%).

Following Riddoch et al. (2003), we investigated whether, on trials where participants were only able to identify one of the objects, positioning objects for action biased participants towards the active object. Generalised estimating equations (SPSS v.15) were used to investigate whether orientation to the dominant hand, relatedness of the pairing and the action-positioning of the objects influenced the likelihood that the active object was reported on trials where only one object was correctly identified. There was a significant effect of positioning objects for action (Wald χ² = 5.77, p < 0.05), and a significant interaction between action positioning and the orientation of the objects (Wald χ² = 8.87, p < 0.01). When objects were oriented for use by the dominant hand, there was a significant effect of positioning for action (Wald χ² = 12.68, p < 0.001; estimated marginal means = 66% for correctly positioned objects vs 60% for incorrectly positioned objects). When objects were oriented for use by the other hand there was no longer an effect of positioning (Wald χ² = 0.10, p = 0.75; 61% vs 60%). Figure 4 shows identification rates in each condition, averaged across participants.

Grouping of action-related objects

Based on the findings from neuropsychological studies, we hypothesised that objects positioned for action would be perceptually grouped, and that this grouping would be stronger for related than for unrelated pairs (Riddoch et al., 2003, 2006). To address this possibility, we explored whether participants were more likely to identify both the objects when they were correctly positioned for action than when they were not, and whether this was influenced by the orientation of the objects to the dominant hand or the relatedness of the pairing of the objects (Fig. 5). Participants were more likely to identify both objects when they formed a related pair versus an unrelated pair (F(1,19) = 200.40, p < 0.001) and when they were positioned correctly versus incorrectly for action (F(1,19) = 18.71, p < 0.01), with no effect of orientation to the dominant hand (F(1,19) = 0.47, p = 0.50). Consistent with the neuropsychological data, the effect of object position (correct vs incorrect for action) was stronger for related pairs of objects (48% correctly-positioned vs 43% incorrectly-positioned) than for unrelated pairs (29% vs 28%), resulting in a significant two-way interaction between pairing and action positioning (F(1,19) = 7.02, p < 0.05). These results are consistent with the idea that related pairs of interacting objects are perceptually grouped, and that this grouping leads to improved identification of both objects.

Alternative explanations

We conducted two further analyses to exclude alternative explanations for our results. The first assesses whether the advantage for the active item was related to its relative position on the screen (typically above the passive item), and the second considers whether the advantage for related pairs of objects reflected improved guesswork or memory for associated pairs.

The active object was positioned above the passive object for 44 of the object pairs, and below the passive object for the remaining 6. To evaluate the effect of this factor, the top/bottom location of the active object was entered into the orientation × pairing × position ANOVAs as an additional variable. Identification was better for pairs where the active object was above the passive object [active object: 63% (above) vs 54% (below), F(1,19) = 8.57, p < 0.01; passive object: 53% (when active object above) vs 48% (when active object below), F(1,19) = 3.42, p = 0.08], but there were no significant interactions involving location and whether the objects were positioned for action. The pattern of results was similar when the active object was located above and below the passive object: for active objects there was an interaction between orientation to the dominant hand and positioning for action, both when the active object was above (F(1,19) = 11.96, p < 0.01) and below (F(1,19) = 7.77, p < 0.05) the passive object. For the passive object, there was a trend towards an interaction between pairing and positioning for action in both cases (above: F(1,19) = 3.01, p = 0.099; below: F(1,19) = 3.50, p = 0.077).

Riddoch et al. (2003) were able to exclude the possibility that guesswork (based on a single identified object) led to improved identification of both objects on trials where the objects were appropriately paired. They contrasted performance with action-related (e.g. spoon and bowl) and associatively-related (e.g. spoon and fork) objects, and found that appropriate pairing only improved performance for action-related objects, even though the associatively-related pairs could be equally easily guessed. They also reported no false alarms on single-item trials; that is, the patients never reported seeing (i.e. guessed) a second item on trials where only one item was presented. Participants in our study used their knowledge of the stimuli to make educated guesses. Each object was seen with a related object (e.g. spanner and nut) and an (unrelated) object from the other pair in the ‘set’ (e.g. spanner and glass; see Appendix 1). Of the 4,984 trials where the active object was correctly identified, participants guessed an incorrect passive object on 683 trials (14%). Incorrect guesses were highly variable, with a slight tendency towards visually similar objects (e.g. ‘ball’ for ‘apple’), but participants also made educated guesses: e.g., having correctly identified the spanner, they named the nut (when the glass was shown) or the glass (when the nut was shown). In total, the passive object from the other pair in the set was named on 34 trials (5% of the trials where the active object was identified and participants incorrectly guessed the identity of the passive object). Participants were more likely to make these educated guesses when the objects were unrelated (correctly positioned for action: 7%; incorrectly positioned for action: 8%) rather than related (0.8%; 0.8%), indicating that guesses were biased towards related passive objects. Critically, though, there was no suggestion that positioning the objects for action influenced guesswork. 
Similarly, of the 4,203 trials where the passive object was correctly identified, participants guessed an incorrect active object on 611 trials (15%), of which 88 (15%) were the active object from the other pair in the set. Again, the object from the other pair was more likely to be guessed for unrelated pairs (21% correctly positioned; 20% incorrectly positioned) than related pairs (4%; 5%) but there was little effect of positioning the objects for action. Hence, although the higher identification rates for related pairs of objects are likely to reflect some guesswork, this does not appear to explain the benefit found for objects which are positioned for action.

To minimise the influence of guesswork, we took data from just the first trial from each set, prior to participants possibly learning some of the pairings, and investigated whether pairing and positioning for action still influenced the likelihood of identifying both objects. This analysis was based on only 25 trials per participant (i.e. one trial for each ‘set’ of objects in Appendix 1). However, participants still showed improved performance with related object pairs and objects which were correctly positioned for action. When the objects were related, both objects were identified on 20% of the trials when the objects were correctly positioned for action versus 16% of the trials when the objects were incorrectly positioned for action. When the objects were unrelated, the values were 16% and 10%, respectively. These effect sizes are similar to those observed across the whole experiment (see above).

Discussion

Positioning objects for action led to improved identification rates for both active and passive members of a pair of objects. Consistent with the neuropsychological data (Riddoch et al., 2003, 2006), participants were better at identifying both objects when they were co-located for their combined use, but only when the objects could be used together in a combined action. This finding is consistent with the hypothesis that action-related objects are perceptually grouped when they are correctly positioned for action.

Different patterns of results were found for identification of the active and passive objects. As hypothesised, active objects were more likely to be identified when they were correctly positioned for action than when they were incorrectly positioned, and this effect was enhanced when they were oriented for use by the viewer’s dominant hand. These findings indicate that factors which enhance the visuomotor response to objects can also facilitate object identification. There was no effect of object pairing (related vs unrelated) in this case, suggesting that factors which influence grouping of the objects do not necessarily influence the visuomotor response. Instead, it appears that only a minimally-interactive context is necessary for the potential for action to influence perception of active objects. Participants also showed a bias in perceptual report towards the active (rather than passive) object when the objects were correctly positioned for use and oriented towards the dominant hand, confirming that the active object can capture attention when placed in an appropriate position for use (Handy et al., 2003). These findings are also consistent with evidence that preparing to make a reaching movement towards a target improves perception of stimuli presented at that location (Deubel et al., 1998). Interestingly, Humphreys, Wulff, Yoon, and Riddoch (2010) have reported that orienting active objects for the dominant hand can affect extinction, too. Patients showed less extinction with objects positioned for action when the active object was aligned with the hand that the patient would normally use for that object in the action. The results point to a visuomotor component of the effects of action relations on perception.

In contrast to the data with active objects, identification of the passive object was unaffected by the orientation of the objects in relation to the participants’ hands, but it was influenced by whether the objects were likely to be used together in an action (whether they formed an action-related pair). The same pattern of results was found for passive objects as was found for identification of both objects, suggesting that identification of the passive object is improved when the objects are grouped.

It seems then that the action relationship between two objects has two separate effects on perception: it provides an action context which enhances the visuomotor response to (and consequently identification of) the active object, and it allows related pairs of objects to be perceptually grouped, facilitating reporting of the passive object. Results from temporal order judgements and from extinction studies also suggest that the visuomotor response might be separate from perceptual grouping. In temporal order judgements, participants show relatively faster processing of an active object when it is positioned for action, but no evidence for perceptual integration of the two objects (no reduction in temporal resolution, as would be expected when the two targets are grouped; Nicol & Shore, 2007; Roberts & Humphreys, 2010b). In extinction, the effects of orienting active objects to the patient’s dominant hand arise only when the objects are shown from the participant’s (egocentric) reference frame (as if the participant is using the objects). On the other hand, effects of co-locating objects for action occur both when objects are seen from the patient’s reference frame and when they are seen from the opposite position (from an allocentric frame, looking across a table to an actor; Humphreys et al., 2010). In temporal order judgements, then, effects of the visuomotor response can be shown in the absence of perceptual grouping, while in extinction, effects of perceptual grouping can be demonstrated when the visuomotor response effect is reduced (stimuli shown from an allocentric frame).

An alternative explanation for the action-related benefit in reporting of both objects is that the active object acts as a cue to direct attention towards the passive object, once the active object is selected. Riddoch et al. (2003) excluded this explanation since they found no effect of whether the active or passive object was presented to the patient’s ipsilesional side, yet, in the absence of the active object, the patients oriented first to the ipsilesional side. Positioning objects for action affected the first orienting response, not orienting after selection of a first (ipsilesional) object. In the present study, the different patterns of results for active and passive objects also make attentional cueing an unlikely explanation. If attention is captured by active objects oriented for use by the dominant hand, cueing of the passive object by the active object should be sensitive to the orientation of the objects but not to whether the objects are action-related. This was not the case.

Experiment 2: effects of attentional cueing on object affordances

For Experiment 2, we attempted to further separate the perceptual and visuomotor effects of object affordances by disrupting grouping between the objects. We used the same experimental design as in Experiment 1, but presented a spatial (arrow) cue at fixation prior to the objects being presented, in order to alter the spatial distribution of attention. We posit that grouping acts to spread attention to both members of a pair of related, interacting objects, which facilitates report of the passive objects in particular. Spatial cues could influence grouping between the objects in two ways. One possibility is that grouping will be weakened when spatial cues direct attention away from the objects. Freeman and colleagues (e.g. Freeman & Driver, 2008; Freeman, Driver, Sagi, & Zhaoping, 2003) have shown that perceptual grouping between flankers and a central target is reduced when attention is directed elsewhere in the display. Similarly, Goldsmith and Yeari (2003) demonstrated that effects of grouping are found when attention is spread across the visual field, but reduced when attention is narrowly focused. A second possibility is that attentional cueing to either the active or passive object will reduce the spread of attention across the two objects by focusing attention on just one of them. In this case, we might expect a reduced benefit of action-positioning on report of the passive object when either the active or passive object is cued.

Experiment 2 followed the same design as Experiment 1, but with an arrow cue presented at fixation prior to the objects appearing. Spatial attention was manipulated by orienting the arrow cue towards one of the two objects present or to an empty quadrant (the arrow pointed diagonally towards one of the four quadrants of the display). Central arrow cues, rather than peripheral cues, were selected to minimise the effect of differences in the shape and size of the objects. Uninformative arrow cues have been shown to result in reflexive shifts in visuospatial attention (Tipples, 2002). In addition to the object pairs, participants also viewed 80 single objects, presented in one of the four quadrants. To enhance any spatial orienting effects, participants were told that on these single-object trials the arrow cue indicated the probable location of the target object (85% valid). These single object trials were included to encourage participants to attend to the cue and to provide a measure of their spatial attention.

We hypothesise that grouping between the objects will be reduced across the experiment as a whole, due to attention being focused on the central cue (Goldsmith & Yeari, 2003). Based on the findings from Experiment 1, this would be expected to reduce the benefit of action positioning on report of both objects, and also on report of the passive object, but to have no effect on report of the active object (i.e. we expect that active-object identification will still be affected by the visuomotor effects of action context and orienting the active object to the dominant hand). Effects of action positioning are also likely to be reduced when neither object is cued, due to grouping being reduced when attention is directed away from the objects (Freeman et al., 2003). When either the active or passive object is cued, we predict that performance will be improved for the cued object, but reduced for the uncued object. We also hypothesise that cueing either one of the objects will disrupt grouping between the objects.

Method

Participants

Twenty volunteers (6 male, 2 left-handed, mean age 21 years) were recruited from the University of Birmingham research participation scheme. Participants gave written informed consent and either received course credit or were paid for their time.

Stimuli

Object pairs were the same as those used for Experiment 1 (Fig. 1; Appendix 1), plus an additional 40 greyscale clip-art style images of individual objects (see Appendix 2 for a list of objects). Arrow cues were presented at fixation and measured 0.9 cm².

Procedure

The procedure was the same as that for Experiment 1 but with the following modifications. Each trial began with the 2,000-ms fixation cross, as in Experiment 1. However, for Experiment 2 we introduced a 120-ms arrow cue at fixation, followed by a 170-ms fixation cross, before the target and mask appeared (cue-target SOA = 290 ms). Each of the 40 single objects was presented twice, once in each of two diagonally opposite quadrants (either top right and bottom left, or top left and bottom right). Single-object and paired-object trials were presented in random order. On paired-object trials, the direction of the arrow was randomly selected, so that it could cue either the active object (approximately 1/4 of trials), the passive object (approximately 1/4 of trials), or neither object (approximately 1/2 of trials). On 85% of single-object trials, the arrow indicated the object’s location.
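The timing and cue assignment described above can be sketched as follows. This is a minimal illustration, not the software used in the study: all function and constant names are hypothetical, and the sketch assumes that the arrow direction is drawn uniformly from the four quadrants, which gives the approximate 1/4, 1/4, 1/2 split when two of the four quadrants hold objects.

```python
import random

CUE_MS = 120                # arrow cue duration (from the Procedure)
POST_CUE_FIXATION_MS = 170  # fixation cross shown between cue and target
QUADRANTS = ["top_left", "top_right", "bottom_left", "bottom_right"]


def cue_target_soa(cue_ms=CUE_MS, gap_ms=POST_CUE_FIXATION_MS):
    """Cue-target stimulus onset asynchrony: cue duration plus the gap."""
    return cue_ms + gap_ms


def classify_cue(cue_quadrant, active_quadrant, passive_quadrant):
    """Label a paired-object trial by which object (if any) the arrow cues."""
    if cue_quadrant == active_quadrant:
        return "active"
    if cue_quadrant == passive_quadrant:
        return "passive"
    return "neither"


def single_object_validity(n_trials=80, p_valid=0.85, rng=None):
    """Shuffled valid/invalid flags for single-object trials.

    Assumption: validity is fixed at an exact proportion of trials
    rather than sampled independently on each trial.
    """
    rng = rng or random.Random()
    n_valid = round(n_trials * p_valid)
    flags = [True] * n_valid + [False] * (n_trials - n_valid)
    rng.shuffle(flags)
    return flags
```

With 80 single-object trials at 85% validity, this yields 68 validly cued and 12 invalidly cued trials per participant.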

Results

The identification of active objects followed a similar pattern to that found in Experiment 1 (see Fig. 3 for identification rates from both experiments). Active objects were identified more accurately than passive objects (60% vs 51%), and were more likely to be identified when the objects formed action-related pairs (65% related vs 56% unrelated; F(1,19) = 143.40, p < 0.001) and when the objects were positioned for action (62% correctly positioned vs 59% incorrectly positioned; F(1,19) = 17.57, p < 0.001). There was also a trend towards an interaction between orienting the active object to the dominant hand and positioning objects for action (F(1,19) = 3.27, p = 0.086), reflecting a stronger effect of positioning for action when the objects were oriented for use with the dominant hand (63% correctly positioned vs 59% incorrectly positioned) rather than with the other hand (60% vs 59%). These results with active objects closely mirror those from Experiment 1. To confirm that the cues did not influence identification of the active object, we entered data from Experiments 1 and 2 into the same analysis, with Experiment as a between-participants factor. There was no main effect of Experiment and no interaction involving Experiment and any other factor(s).

On trials where only one object was identified, the object was more likely to be the active item (61% active vs 39% passive), and, as in Experiment 1, there was an interaction between orienting the active object to the dominant hand and positioning objects for action (Wald χ² = 8.47, p < 0.01). When the objects were oriented for use by the dominant hand, there was a bias towards the active object when the stimuli were correctly vs. incorrectly positioned for use (Wald χ² = 8.47, p < 0.01; estimated marginal means = 63% correctly positioned vs 59% incorrectly positioned). This was not the case when the objects were oriented for use with the other hand (Wald χ² = 0.12, p = 0.73; estimated marginal means = 60% correct vs 60% incorrect) (Fig. 4). As before, there were no significant effects of Experiment.

In contrast, identification of the passive object showed a different pattern of results to that found in Experiment 1. Passive objects were still identified more often when they were in related relative to unrelated action pairs (F(1,19) = 95.79, p < 0.001), but there were no other significant effects. Positioning the objects for action no longer led to any improvements in identification (F(1,19) = 1.35, p = 0.26) and there was no interaction between pairing and positioning (F(1,19) = 1.60, p = 0.22). Despite the different pattern of results, there was no significant interaction between Experiment and action-positioning (F(1,38) = 0.56, p = 0.46) or between Experiment, pairing and positioning (F(1,38) = 0.91, p = 0.35). However, this likely reflects the effect of the cues on identification of the passive object (see below).

The identification of both objects was significantly better when they were in related relative to unrelated pairs (F(1,19) = 165.73, p < 0.001), with a trend towards a benefit for positioning-for-action (F(1,19) = 3.49, p = 0.077). There was a benefit for correctly positioning related pairs of objects (44% correctly positioned vs 41% incorrectly positioned) but not unrelated pairs (25% vs 26%), resulting in a significant interaction between pairing and positioning (F(1,19) = 6.36, p < 0.05) (Fig. 5). The effect of positioning was weaker in Experiment 2 than Experiment 1, resulting in a trend towards an interaction between Experiment and positioning (F(1,38) = 3.27, p = 0.079).

Effect of cueing

Cued single objects were not more likely to be identified than uncued single objects (48% cued vs 50% uncued; t(19) = −0.63, p = 0.54). However, this comparison was based on a small number of trials, particularly in the uncued condition: cues were 85% valid, so only 12 of the 80 single-object trials per participant were uncued.
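The cued versus uncued comparison is a paired-samples t-test, with degrees of freedom equal to the number of participants minus one. A minimal sketch of that computation, using only the Python standard library, is given below. The helper name `paired_t` is hypothetical, and the accuracy values in the usage example are invented for illustration; they are not data from the study.

```python
import math
import statistics


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom.

    x and y hold one score per participant, in the same participant order.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    standard_error = sd_diff / math.sqrt(len(diffs))
    return statistics.mean(diffs) / standard_error, len(diffs) - 1


# Invented percent-correct scores for four hypothetical participants.
cued = [50, 60, 40, 70]
uncued = [40, 40, 50, 50]
t_value, df = paired_t(cued, uncued)
```

With 20 participants the function would return 19 degrees of freedom, matching the test reported above.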

To confirm that grouping was reduced when attention was directed away from the objects (Freeman et al., 2003), we repeated the orientation × pairing × positioning ANOVAs using only data where neither object was cued. The findings were similar to those for the full dataset. For active object identification, there was an interaction between the position of the objects and their orientation towards the dominant or other hand (F(1,19) = 6.99, p < 0.05), indicating that any reduction in grouping did not have an effect on identification of the active object. For passive objects, there was no effect of positioning (F(1,19) = 0.03, p = 0.87), and no interaction between pairing and positioning (F(1,19) = 0.65, p = 0.43). In this case, the interaction between pairing, positioning, and Experiment approached significance (F(1,38) = 3.54, p = 0.07), indicating that the spatial cues weakened the effects of positioning the objects for action.

Next, we considered the pattern of results when either the active or passive object was cued. We entered the data into a five-way ANOVA contrasting positioning, pairing, orientation, cue direction (active cued, passive cued), and object type (active, passive). A two-way interaction between cue direction and object type (F(1,19) = 7.91, p < 0.05) confirmed that cueing an object increased the likelihood of it being identified (relative to when the other object was cued). Active object identification was better when the active object was cued (61%) than when the passive object was cued (59%). Passive object identification was better when the passive object was cued (53%) than when the active object was cued (48%). This two-way interaction was additionally modulated by the position of the objects (three-way interaction: F(1,19) = 5.08, p < 0.05; Fig. 6a) and the pairing of the objects (three-way interaction between pairing, cue direction, and object type: F(1,19) = 13.95, p < 0.01; Fig. 6b).

Fig. 6 Proportion of correctly identified objects following arrow cues to the active object (black columns) or passive object (grey columns). a Interaction between cue direction and object position (for incorrectly-positioned objects only); b interaction between cue direction and object pairing (for unrelated pairs of objects only)

Figure 6a shows that identification rates were similar across cueing conditions when the objects were correctly positioned (no interaction between cue direction and object type for correctly-positioned objects: F(1,19) = 1.39, p = 0.25), but differed when the objects were incorrectly positioned for action (F(1,19) = 11.34, p < 0.01). This resulted from reduced identification of the passive object when an incorrectly-positioned active object was cued, relative to when the passive object was cued (45% vs 54%; t(19) = 3.62, p < 0.01), and also relative to when the objects were correctly positioned and the active object was cued (45% vs 52%; t(19) = 2.72, p < 0.05). This led to an increased effect of positioning for action when the active object was cued compared with when the passive object was cued (interaction between cue direction and positioning for passive objects only: F(1,19) = 10.02, p < 0.01): when the active object was cued, passive-object identification was better when the objects were correctly positioned (52%) compared with incorrectly positioned (45%; t(19) = 2.72, p < 0.05). When the passive object was cued there was no effect of positioning on passive-object identification (52% correctly positioned vs 54% incorrectly positioned; t(19) = −1.30, p = 0.21).

Figure 6b shows that object identification was unaffected by the cue when the objects formed a related action pair (no interaction between cue direction and object type for related objects only: F(1,19) = 0.002, p = 0.96), but differed when they formed an unrelated pair (F(1,19) = 25.30, p < 0.001). When the objects were unrelated, active object identification was significantly better when the active object was cued relative to when the passive object was cued (57% active-cued vs 53% passive-cued; t(19) = 2.10, p = 0.05). Comparison with the neither-cued condition suggested that this reflected a slight reduction in identification when the passive object was cued (56% neither cued vs 53% passive cued; t(19) = 1.98, p = 0.063) rather than an increase in identification when the active object was cued (56% neither cued vs 57% active cued; t(19) = 0.68, p = 0.51). Identification of the passive object was impaired when the objects were unrelated and the arrow cued the active object rather than the passive object (42% active-cued vs 50% passive-cued; t(19) = −3.39, p < 0.001).

There were no significant four- or five-way interactions, suggesting that the pairing of the objects and their action positioning separately influenced participants’ ability to identify the objects following spatial cues.

Discussion

The addition of spatial cues in Experiment 2 reduced the benefit of positioning-for-action on identification of passive objects and both objects, but had little effect on the identification of active objects. These findings are consistent with our hypothesis that changes to the spatial distribution of attention disrupt grouping between objects, reducing the benefit of positioning-for-action on identification of the passive object and both objects, but not influencing the visuomotor response to the action positioning and orientation of the active object. A similar pattern of results was found across all trials, and on just trials where the spatial cues directed attention away from both objects.

The results from Experiment 1 suggested that perceptual grouping between related, interacting objects improved identification of passive objects and both objects. In Experiment 2, we used spatial cues to disrupt grouping between the objects (Goldsmith & Yeari, 2003), and found a reduced benefit of positioning-for-action on report of the passive object and both objects. These results support the claim that interacting objects are perceptually grouped (Experiment 1; Green & Hummel, 2006; Riddoch et al., 2003). The pattern of results for the active object indicates that the visuomotor response is less affected by changes in spatial attention. As in Experiment 1, identification of the active object was enhanced when it was positioned for action and oriented for use by the viewer’s dominant hand. This finding is consistent with earlier research showing that active objects capture attention when placed in appropriate positions for their use (Handy et al., 2003), overcoming spatial biases in the distribution of attention (di Pellegrino, Rafal, & Tipper, 2005; Riddoch et al., 2003).

Cueing attention towards either the active or passive object improved identification of the cued object (relative to when the other object was cued). This effect was separately modulated by the pairing and action-positioning of the objects. Cue direction did not influence object identification when the objects were related, but identification of the passive object was reduced when an unrelated active object was cued. Similarly, cue direction did not influence identification of correctly-positioned objects, but cueing an incorrectly-positioned active object impaired identification of the passive object. It seems that directing attention towards an unrelated or incorrectly-positioned active object prevented attention spreading to the passive object. These results suggest that there was some processing of both the action and the associative relation between stimuli, despite the presence of the spatial cues. We interpret these results as follows. Action relations (in terms of both pairing and positioning) spread attention to both members of a pair, reducing the effects of spatial cueing that otherwise arise on the passive object (with incorrectly positioned and unrelated pairs). Note that this spread of attention appears to operate even when attention is biased to one of the objects: here by the spatial cue and, in patients showing visual extinction, by the lesion (cf. Riddoch et al., 2003).

General discussion

The present results indicate two separable effects of the action relationship between two objects: first, there is a bias towards an ‘active’ object in an action context, which is likely to be mediated by a visuomotor response to the objects. This bias is modulated by orienting the active object to the dominant hand. Second, related interacting objects are perceptually grouped, facilitating identification of the passive object and increasing the likelihood that both objects are identified. These two mechanisms are somewhat in opposition, since attentional-capture by the active object would prevent attention being distributed across both objects (allowing them to be perceived as a single unit).

What determines whether attention is captured by the active object or distributed across both objects as an interacting unit? Riddoch et al. (2006) proposed a two-stage model to account for the effects of action relations on object perception in patients with extinction. At Stage 1, attention is allocated to either one or both objects in a pair. Stage 1 is a bottom-up process, sensitive to the action-related properties of objects; presumably the same action-related properties that are accessed via the direct route from perception to action (i.e. structural features and/or learned object-action associations; Riddoch et al., 2002). Attention is more likely to be allocated across both objects when they are action related and correctly positioned for their combined use, which in turn makes it more likely that both objects will be identified. At this stage, we would additionally contend that visuomotor activation feeds forward / back to influence perception of objects which afford an action. Potentially, the relative strengths of the visuomotor response and the action-related grouping cues influence whether attention is captured by the active object or distributed across both objects. This process could also be influenced by the initial focus or distribution of attention, prior to the objects being perceived. At Stage 2 of Riddoch et al.’s (2006) model, the initial bottom-up coding is confirmed by a top-down process which is sensitive to the familiarity of both the interaction between the objects and the individual objects. Riddoch et al. (2006) proposed that the speed of the second stage influences which object is selected for report: if Stage 2 is slow then only the object selected first will be reported. Here, there may be an additional benefit from presenting related objects, and, as we observed in Experiment 2, this might also counteract effects of attentional cueing even when related objects are not positioned for action.

Positioning for use by the dominant hand or in the right hemifield?

The object pairs were viewed with the active object presented either to the left or right of the passive object. The data were then analysed according to whether the active object was oriented for use with the viewer’s dominant hand (to the right for right-handed viewers and to the left for left-handed viewers) or for use with their other hand. The results show that compatibility between the handedness of participants and the left/right location of the objects affected identification of the active object, but not the passive object, consistent with our interpretation that object affordances are enhanced when the objects are oriented for use by the dominant hand (see also Humphreys et al., 2010). However, an alternative explanation is that it is the location of the object in the right or left hemifield that influences performance. Visuomotor processes are known to be localised to the left hemisphere (Chao & Martin, 2000; Grèzes & Decety, 2002), which may confer a right-visual field advantage for processing action-related objects (Handy et al., 2003). Handy and Tipper (2007) attempted to address this possibility by presenting left-handed participants with ‘tools’ to their left and right hemifields. In theory, this design would allow a handedness-compatibility benefit for objects in the left hemifield to be evaluated separately from a visuomotor benefit for objects in the right hemifield. In fact, ERP data showed that attention was drawn to the location of tools in both hemifields, hinting that both processes may be in operation. We attempted to look at our data in a similar way. We combined data from Experiments 1 and 2 and analysed the active-object identification data separately for the left- (n = 5) and right-handed (n = 35) participants. 
Right-handed participants had higher identification rates when the active object was located on the right (F(1,34) = 4.39, p < 0.05), when the objects were related (F(1,34) = 228.25, p < 0.001) and when the objects were positioned correctly for action (F(1,34) = 39.25, p < 0.001). They also showed a significant interaction between the location of the active object and the position of the objects (F(1,34) = 18.81, p < 0.001), with a stronger effect of positioning for action when the active object was on the right (66% correctly positioned vs 60% incorrectly positioned) than when it was on the left (62% vs 60%). Left-handed participants had similar identification rates when the active object was on the left and right (F(1,4) = 0.50, p = 0.520), but showed improved performance for related objects (F(1,4) = 18.82, p < 0.05) and for objects that were correctly positioned for action (F(1,4) = 40.15, p < 0.01). However, there was no interaction between the location and action-position of the active object (F(1,4) = 0.02, p = 0.902), with similar effects of positioning-for-action when the active object was on the left (59% correctly positioned vs 53% incorrectly positioned) and on the right (61% vs 55%). This result could have occurred for at least two reasons. One is that left-handed participants have a different cortical organisation to right-handers (e.g. their cortical organisation is less asymmetric; see Solodkin, Hlustik, Noll, & Small, 2001; Tzourio, Crivello, Mellet, Nkanga-Ngila, & Mazoyer, 1998), and so they do not show the left-hemisphere/right-hemispace bias to the active object that is present in right-handers. An alternative is that left-handed individuals tend to be less asymmetric in hand use (Oldfield, 1971), and so show a reduced effect of visuomotor affordance to their dominant hand. However, given the small number of left-handed individuals tested here, our conclusions should remain cautious.
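The recoding that underlies this comparison, from the left/right location of the active object to its compatibility with the viewer's dominant hand, can be sketched as below. The function name and string labels are hypothetical and chosen only for illustration.

```python
def oriented_for_dominant_hand(handedness, active_side):
    """True when the active object sits on the side of the dominant hand.

    handedness:  "left" or "right" (the participant's dominant hand)
    active_side: "left" or "right" (active object relative to the passive one)
    """
    valid = {"left", "right"}
    if handedness not in valid or active_side not in valid:
        raise ValueError("both arguments must be 'left' or 'right'")
    return handedness == active_side
```

Under this coding, an active object on the right counts as dominant-hand compatible for a right-handed viewer but not for a left-handed one. Analysing left-handed participants separately is what allows hand compatibility to be distinguished from a right-hemifield advantage.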

Summary and conclusions

We report data from two experiments which investigated the perceptual and visuomotor response to object affordances. The results indicate that positioning objects for action can elicit both perceptual and visuomotor responses. Pairs of action-related objects were identified more accurately when they were positioned for action, consistent with neuropsychological data indicating that interacting objects are perceptually grouped (Riddoch et al., 2003). This effect was reduced when spatial cues modulated the distribution of attention, disrupting grouping between the objects. Participants also showed improved report of (and a bias towards) the active object of the pair when it was positioned for action and oriented for use with the viewer’s dominant hand. In this case, we hypothesise that the action positioning of the object (relative to both the viewer and another object) increased the visuomotor response to the object (Handy et al., 2003; Petit et al., 2006), driving attention to the location of the active object (Handy et al., 2003) and improving object identification (Riddoch et al., 2003). This effect was not modulated by changes to the distribution of attention, consistent with evidence that active objects can automatically elicit a visuomotor response (Phillips & Ward, 2002; Riddoch et al., 2003).

References

Biederman, I. (1972). Perceiving real world scenes. Science, 177, 77–80.

Binkofski, F., & Buccino, G. (2006). The role of ventral premotor cortex in action execution and action understanding. Journal of Physiology - Paris, 99, 396–405.

Chao, L. L., & Martin, A. (2000). Representation of manipulable man-made objects in the dorsal stream. NeuroImage, 12, 478–484.

Craighero, L., Fadiga, L., Umiltà, C. A., & Rizzolatti, G. (1996). Evidence for visuomotor priming effect. NeuroReport, 8, 347–349.

Creem-Regehr, S. H., & Lee, J. N. (2005). Neural representations of graspable objects: Are tools special? Cognitive Brain Research, 22, 457–469.

Culham, J. C., Cavina-Pratesi, C., & Singhal, A. (2006). The role of the parietal cortex in visuomotor control: What have we learned from neuroimaging? Neuropsychologia, 44, 2668–2684.

Deubel, H., Schneider, W. X., & Paprotta, I. (1998). Selective dorsal and ventral processing: Evidence for a common attentional mechanism in reaching and perception. Visual Cognition, 5, 81–107.

di Pellegrino, G., Rafal, R., & Tipper, S. P. (2005). Implicitly evoked actions modulate visual selection: Evidence from parietal extinction. Current Biology, 15, 1469–1472.

Freeman, E., & Driver, J. (2008). Voluntary control of long-range motion integration via selective attention to context. Journal of Vision, 8(11):18, 1–22.

Freeman, E., Driver, J., Sagi, D., & Zhaoping, L. (2003). Top-down modulation of lateral interactions in early vision: Does attention affect integration of the whole or just perception of the parts? Current Biology, 13, 985–989.

Gibson, J. J. (1979). The Ecological Approach to Visual Perception. Hillsdale, N.J.: Lawrence Erlbaum.

Gilchrist, D., Humphreys, G. W., & Riddoch, M. J. (1996). Grouping and extinction: Evidence for low-level modulation of visual selection. Cognitive Neuropsychology, 13, 1223–1249.

Goldsmith, M., & Yeari, M. (2003). Modulation of object-based attention by spatial focus under endogenous and exogenous orienting. Journal of Experimental Psychology: Human Perception and Performance, 29, 897–918.

Green, C., & Hummel, J. E. (2006). Familiar interacting object pairs are perceptually grouped. Journal of Experimental Psychology: Human Perception and Performance, 32, 1107–1119.

Grèzes, J., & Decety, J. (2002). Does visual perception of object afford action? Evidence from a neuroimaging study. Neuropsychologia, 40, 212–222.

Grèzes, J., Tucker, M., Armony, J., Ellis, R., & Passingham, R. E. (2003). Objects automatically potentiate action: An fMRI study of implicit processing. European Journal of Neuroscience, 17, 2735–2740.

Grill-Spector, K. (2003). The neural basis of object perception. Current Opinion in Neurobiology, 13, 159–166.

Handy, T. C., Grafton, S. T., Shroff, N. M., Ketay, S., & Gazzaniga, M. S. (2003). Graspable objects grab attention when the potential for action is recognized. Nature Neuroscience, 6, 421–427.

Handy, T. C., & Tipper, C. M. (2007). Attentional orienting to graspable objects: What triggers the response? NeuroReport, 18, 941–944.

Humphreys, G. W., Riddoch, M. J., & Fortt, H. (2006). Action relations, semantic relations, and familiarity of spatial position in Balint’s syndrome: Crossover effects on perceptual report and on localization. Cognitive Affective & Behavioral Neuroscience, 6, 236–245.

Humphreys, G. W., Wulff, M., Yoon, E. Y., & Riddoch, M. J. (2010). Neuropsychological evidence for visual- and motor-based affordance: Effects of reference frame and object-hand congruence. Journal of Experimental Psychology: Learning, Memory and Cognition, 36, 659–670.

Lawson, R., & Jolicoeur, P. (1998). The effects of plane rotation on the recognition of brief masked pictures of familiar objects. Memory & Cognition, 26, 791–803.

Martin, A. (2007). The representation of object concepts in the brain. Annual Review of Psychology, 58, 25–45.

Nicol, J. R., & Shore, D. I. (2007). Perceptual grouping impairs temporal resolution. Experimental Brain Research, 183, 141–148.

Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh Inventory. Neuropsychologia, 9, 97–113.

Petit, L. S., Pegna, A. J., Harris, I. M., & Michel, C. M. (2006). Automatic motor cortex activation for natural as compared to awkward grips of a manipulable object. Experimental Brain Research, 168, 120–130.

Phillips, J. C., & Ward, R. (2002). S-R correspondence effects of irrelevant visual affordance: Time course and specificity of response activation. Visual Cognition, 9, 540–558.

Riddoch, M. J., Humphreys, G. W., Edwards, S., Baker, T., & Willson, K. (2003). Seeing the action: Neuropsychological evidence for action-based effects on object selection. Nature Neuroscience, 6, 82–89.

Riddoch, M. J., Humphreys, G. W., Heslop, J., & Castermans, E. (2002). Dissociations between object knowledge and everyday action. Neurocase, 8, 100–110.

Riddoch, M. J., Humphreys, G. W., Hickman, M., Clift, J., Daly, A., & Colin, J. (2006). I can see what you are doing: Action familiarity and affordance promote recovery from extinction. Cognitive Neuropsychology, 23, 583–605.

Roberts, K. L., & Humphreys, G. W. (2010a). Action relationships concatenate representations of separate objects in the ventral visual system. NeuroImage, 52, 1541–1548.

Roberts, K. L., & Humphreys, G. W. (2010b). The one that does leads: Action relations influence the perceived temporal order of graspable objects. Journal of Experimental Psychology: Human Perception and Performance, 36, 776–780.

Solodkin, A., Hlustik, P., Noll, D. C., & Small, S. L. (2001). Lateralization of motor circuits and handedness during finger movements. European Journal of Neurology, 8, 425–434.

Tipper, S. P., Paul, M. A., & Hayes, A. E. (2006). Vision-for-action: The effects of object property discrimination and action state on affordance compatibility effects. Psychonomic Bulletin & Review, 13, 493–498.

Tipples, J. (2002). Eye gaze is not unique: Automatic orienting in response to uninformative arrows. Psychonomic Bulletin & Review, 9, 314–318.

Tucker, M., & Ellis, R. (1998). On the relations between seen objects and components of potential actions. Journal of Experimental Psychology: Human Perception and Performance, 24, 830–846.

Tzourio, N., Crivello, F., Mellet, E., Nkanga-Ngila, B., & Mazoyer, B. (1998). Functional anatomy of dominance for speech comprehension in left handers vs. right handers. NeuroImage, 8, 1–16.

Yoon, E. Y., & Humphreys, G. W. (2007). Dissociative effects of viewpoint and semantic priming on action and semantic decisions: Evidence for dual routes to action from vision. The Quarterly Journal of Experimental Psychology, 60, 601–623.

Yoon, E. Y., Humphreys, G. W., & Riddoch, M. J. (2005). Action naming with impaired semantics: Neuropsychological evidence contrasting naming and reading for objects and verbs. Cognitive Neuropsychology, 22, 753–767.

Acknowledgments

This research was supported by an ESRC European Collaborative Research Project grant awarded to Glyn Humphreys. We thank Joy Payne for her help with creating the stimuli and testing participants.

Cite this article

Roberts, K.L., Humphreys, G.W. Action relations facilitate the identification of briefly-presented objects. Atten Percept Psychophys 73, 597–612 (2011). https://doi.org/10.3758/s13414-010-0043-0

DOI: https://doi.org/10.3758/s13414-010-0043-0