A Systematic Review of Robotic Rehabilitation for Cognitive Training

- ¹Department of Mechanical, Aerospace, and Biomedical Engineering, University of Tennessee, Knoxville, Knoxville, TN, United States

- ²School of Nursing, MGH Institute of Health Professions, Boston, MA, United States

A large and increasing number of people around the world experience cognitive disability. Rehabilitation robotics has provided promising training and assistance approaches to mitigate cognitive deficits. In this article, we carried out a systematic review of recent developments in robot-assisted cognitive training. We included 99 articles and described their applications, enabling technologies, experiments, and products. We also conducted a meta-analysis of the articles that evaluated robot-assisted cognitive training protocols with primary end users (i.e., people with cognitive disability). We identified major limitations in current robotic rehabilitation for cognitive training, including small sample sizes, non-standard measurement of training, and uncontrollable factors. Multifaceted challenges remain in this field, including ethical issues; user-centered (or stakeholder-centered) design; reliability, trust, and cost-effectiveness; and personalization of robot-assisted cognitive training systems. Future research should also take into consideration human-robot collaboration and social cognition to facilitate natural human-robot interaction.

1. Introduction

It is estimated that ~15% of the world's population, over a billion people, experience some form of disability, and a large proportion of this group experiences cognitive disability specifically (WHO, 2011). The number of people with disabilities is increasing, not only because of the growing aging population, who have a higher risk of disability, but also because of the global increase in chronic health conditions (Hajat and Stein, 2018). Individuals with cognitive disability, such as Alzheimer's disease (AD) or autism spectrum disorder (ASD), may have substantial limitations in their capacity for functional mental tasks, including conceptualizing, planning, sequencing thoughts and actions, remembering, interpreting subtle social cues, and manipulating numbers and symbols (LoPresti et al., 2008). Cognitive disability is usually associated with significant distress or impairment in social, occupational, or other important activities.

With recent advances in robotics and information and communication technologies (ICTs), rehabilitation robots hold promise for augmenting human healthcare and aiding exercise and therapy for people with cognitive disabilities. Given the substantial healthcare labor shortage and the high burden of caregiving, robots may augment human caregivers by providing care with high repeatability and without complaint or fatigue (Taheri et al., 2015b). In a meta-analysis comparing how people interacted with physical robots and virtual agents, Li (2015) showed that physically present robots were more persuasive, were perceived more positively, and resulted in better user performance than virtual agents. Furthermore, robots can facilitate social interaction, communication, and positive mood, which may improve the performance and effectiveness of cognitive training (Siciliano and Khatib, 2016). For example, a recent study (Pino et al., 2020) showed that older adults with mild cognitive impairment (MCI) who received memory training with the humanoid social robot NAO exhibited more visual gaze, less depression, and better therapeutic behavior. Physically embodied robots therefore hold promise as accessible, effective tools for future cognitive training and assistance.

There have been a few literature reviews on physical rehabilitation (Bertani et al., 2017; Kim et al., 2017; Morone et al., 2017; Veerbeek et al., 2017) or on cognitive rehabilitation for specific user populations, such as children with ASD (Pennisi et al., 2016) and older adults (Mewborn et al., 2017). To the authors' best knowledge, this article presents the first systematic review that focuses on robotic rehabilitation for cognitive training. We present applications, enabling technologies, and products of robotic rehabilitation based on research papers focusing on cognitive training. We also discuss several challenges to the development of robots for cognitive training and present future research directions.

2. Methods

2.1. Search Strategy

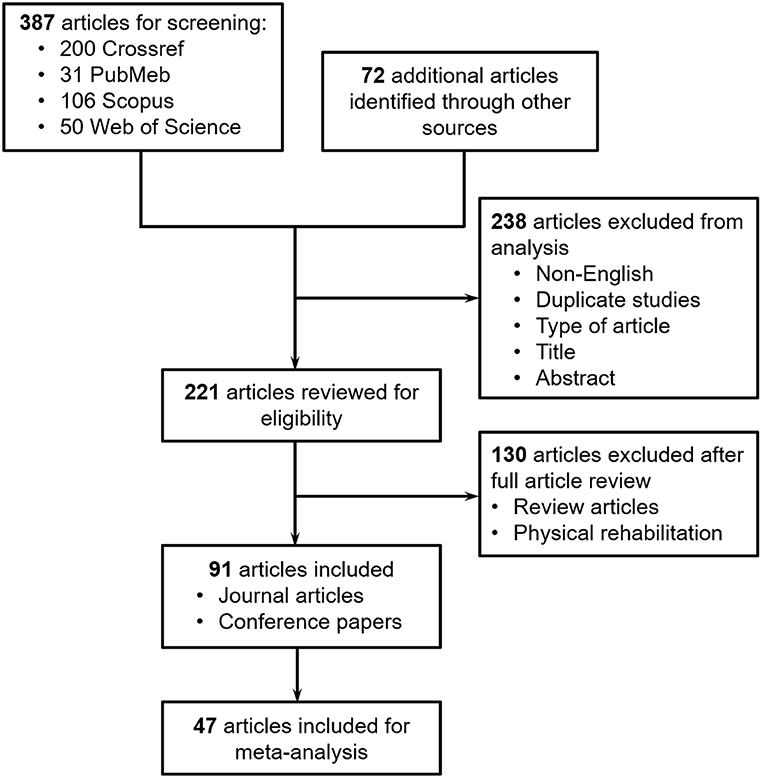

We conducted a systematic search of the Google Scholar, Crossref, PubMed, Scopus, and Web of Science databases using the keywords (“robot” OR “robots” OR “robotics” OR “robotic”) AND (“cognitive training” OR “cognitive rehabilitation” OR “cognitive therapy” OR “cognitive recovery” OR “cognitive restore”). The search was limited to articles published between 2015 and December 14, 2020. The search in Google Scholar yielded 5,630 articles. Google Scholar ranks sources by relevance, which takes into account the full text of each source as well as the source's author, the publication in which the source appeared, and how often it has been cited in scholarly literature (University of Minnesota Libraries, 2021). We screened the titles of the first 500 articles and excluded the remaining results because of their low relevance. After analysis of abstracts and publication languages, a further 328 articles were excluded because of duplication (i.e., exact copies of the same work), non-English language, and/or lack of relevance to the research topic. The remaining 172 full-text articles were then reviewed for eligibility. Articles aimed at physical rehabilitation and review articles were excluded. Finally, 80 eligible articles from Google Scholar were retained for further analysis. As illustrated in the PRISMA flow diagram (see Figure 1), the keyword search initially returned 200, 31, 106, and 50 articles from Crossref, PubMed, Scopus, and Web of Science, respectively. These articles were combined with the 80 additional articles from Google Scholar for further screening. After analysis of titles, abstracts, publication languages, and article types, 238 articles were excluded because of duplication, non-English language, ineligible article types (e.g., book chapter, book, dataset, report, reference entry, editorial, and systematic review), and/or lack of relevance to the research topic. The remaining 229 full-text articles were then reviewed for eligibility.
Articles aimed at physical rehabilitation and review articles were excluded. Finally, 99 eligible articles were included in this systematic review, comprising journal articles and papers presented at conferences, symposia, and workshops. These papers covered applications, user populations, supporting technologies, experimental studies, and/or robot products. Moreover, 53 articles that included an experimental study of robot-assisted cognitive training with primary end users (i.e., people with cognitive disability) were identified for further meta-analysis.
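The record counts reported above can be cross-checked with simple arithmetic. The sketch below recomputes each stage of the flow from the numbers in the text. The number of full texts excluded at the eligibility stage (130) is not stated in the text; it is derived here as the difference between the 229 full texts assessed and the 99 included articles.

```python
# Cross-check of the record counts reported in section 2.1 (PRISMA flow).
# All inputs are copied from the text; nothing here is new data.

# Stage A: Google Scholar only
scholar_titles_screened = 500
scholar_excluded_after_abstracts = 328
scholar_full_texts = scholar_titles_screened - scholar_excluded_after_abstracts
assert scholar_full_texts == 172  # matches the 172 full articles reviewed
scholar_included = 80

# Stage B: other databases combined with the 80 Google Scholar articles
database_hits = {"Crossref": 200, "PubMed": 31, "Scopus": 106, "Web of Science": 50}
records_screened = sum(database_hits.values()) + scholar_included
excluded_at_screening = 238
full_texts_assessed = records_screened - excluded_at_screening
included = 99

# Derived, not stated in the text: full texts excluded at the eligibility stage
excluded_at_eligibility = full_texts_assessed - included

print(records_screened, full_texts_assessed, excluded_at_eligibility)
```

The check confirms that the combined screening pool and the 229 full texts are consistent with the per-database counts.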

Figure 1. PRISMA flow diagram illustrating the exclusion criteria and stages of the systematic review.

The literature includes cognitive training robots in the form of companion robots, social robots, assistive robots, socially assistive robots, and service robots, which are collectively referred to as “cognitive training robots” in this systematic review. The literature also uses different terms for cognitive training delivered by rehabilitation robots, such as robot-enhanced therapy (David et al., 2018; Richardson et al., 2018), robot-assisted intervention (Scassellati et al., 2018), robot-assisted treatment (Taheri et al., 2015a), robot-assisted training (Tsiakas et al., 2018), robot-assisted therapy (Sandygulova et al., 2019), and robot-mediated therapy (Begum et al., 2015; Huskens et al., 2015). Here, we do not distinguish between these terms and instead adopt the term “robot-assisted training” to cover all of them.

3. Results

3.1. Applications

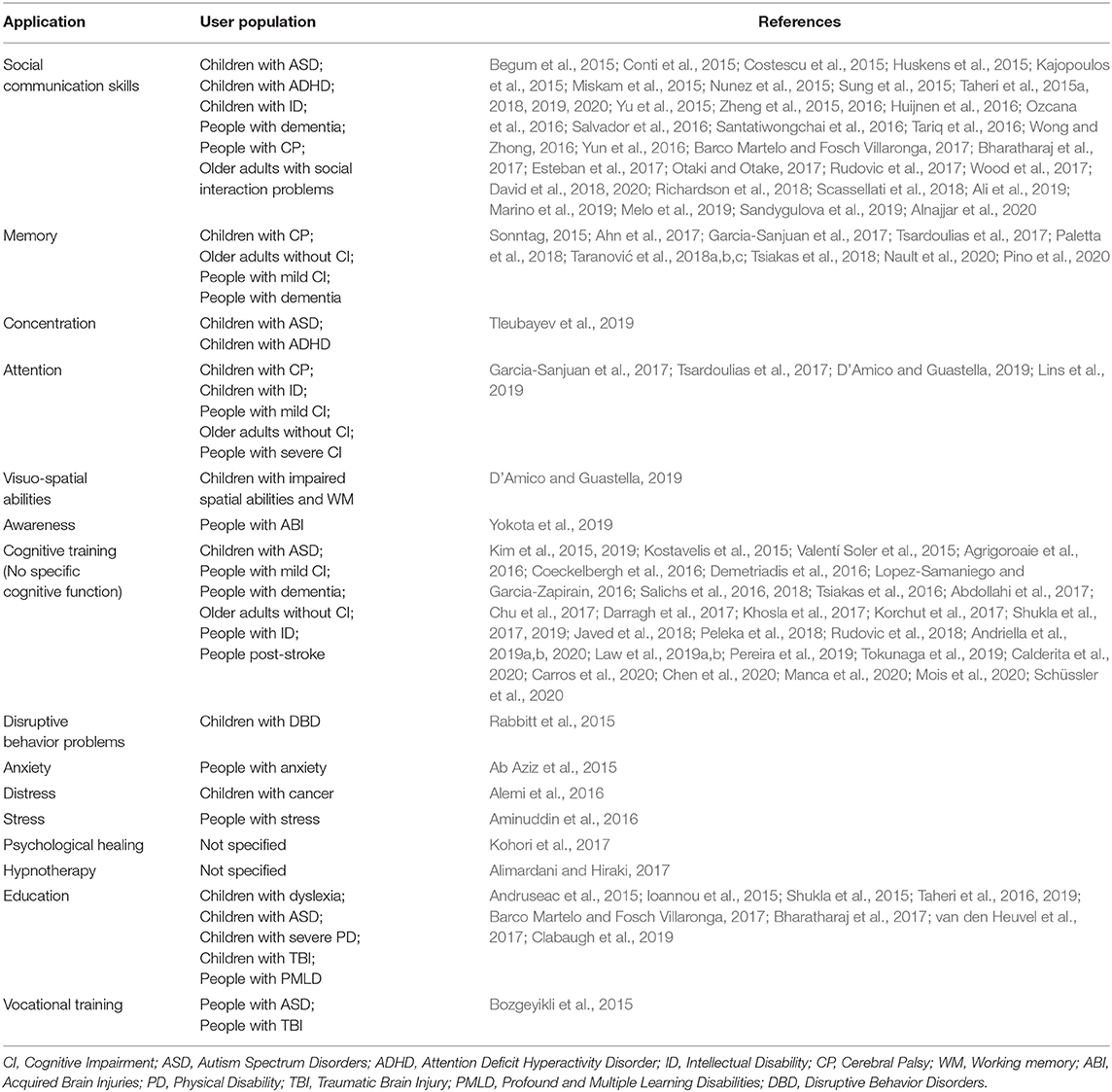

The studies on robot-assisted cognitive training are categorized by application and end user in Table 1. To date, the most researched application of robots in cognitive training (36 out of 98 articles, as shown in Table 1) is improving individuals' social communication skills, which may include joint attention, imitation, turn-taking, and other social interaction skills. For example, Kajopoulos et al. (2015) designed a robot-assisted training protocol based on response to joint attention for children with ASD. The protocol used a modified attention cueing paradigm, in which the robot's head direction cued children's spatial attention to a stimulus presented on one side of the robot. The children were engaged in a game that could be completed only by following the robot's head direction. To aid in training imitation skills in children with ASD, Taheri et al. (2020) proposed a reciprocal gross imitation human–robot interaction platform, in which children with ASD are expected to imitate the robot's body gestures, including combinations of arm, foot, neck, and torso movements. David et al. (2020) developed a robot-enhanced intervention on turn-taking abilities in children with ASD. In their protocol, the robot gave an instruction (e.g., “Now is my turn”) to the child, checked whether the child moved the picture as instructed, and gave feedback (e.g., “Good job”) if the child respected turns by keeping his or her hands still without interrupting the robot. Robot-assisted cognitive training has been reported to improve cognitive capabilities in people with limited social capabilities, such as children with ASD (Huijnen et al., 2016; Esteban et al., 2017; Marino et al., 2019) and people with dementia (Sung et al., 2015; Yu et al., 2015; Otaki and Otake, 2017).
Because of cognitive impairment, individuals with dementia may also show deficits in social functioning, such as social withdrawal (Havins et al., 2012; Dickerson, 2015). In a pilot study by Sung et al. (2015) of robot-assisted therapy using the socially assistive pet robot PARO, institutionalized older adults showed significantly increased communication and interaction skills and activity participation after 4 weeks of robot-assisted therapy. Another robotic application is intervention to enhance impaired cognitive functions, such as memory (Paletta et al., 2018), attention (Lins et al., 2019), and concentration (Tleubayev et al., 2019), or to reduce negative psychophysiological states, such as stress (Aminuddin et al., 2016) and anxiety (Ab Aziz et al., 2015). Additionally, a few studies used robots to facilitate learning and educational activities for people with cognitive disabilities, such as children with dyslexia (Andruseac et al., 2015) or people with traumatic brain injury (TBI) (Barco Martelo and Fosch Villaronga, 2017).
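The turn-taking protocol of David et al. (2020) described above is essentially a small control loop: the robot instructs, observes the child, and gives positive feedback only when the child respects turns. The sketch below encodes one round as a pure function. It is an illustration under stated assumptions, not the authors' implementation: the `RoundObservation` fields and the prompts other than the two quoted in the text are hypothetical, and detecting hand movement and picture placement is assumed to be handled by separate perception components.

```python
from dataclasses import dataclass


@dataclass
class RoundObservation:
    """What (assumed) perception modules report for one round; hypothetical fields."""
    child_interrupted: bool    # child moved during the robot's turn
    moved_picture: bool        # child moved the picture as instructed


def turn_taking_round(obs: RoundObservation) -> list[str]:
    """Return the robot's utterances for one round, in order.

    Sketch: the robot takes its turn, then praises the child only if the
    child kept still during the robot's turn and completed the instructed move.
    """
    utterances = ["Now is my turn"]            # instruction quoted in the review
    if obs.child_interrupted:
        utterances.append("Please wait, it is my turn")   # hypothetical prompt
        return utterances
    utterances.append("Now it is your turn")   # hypothetical prompt
    if obs.moved_picture:
        utterances.append("Good job")          # feedback quoted in the review
    return utterances


print(turn_taking_round(RoundObservation(child_interrupted=False, moved_picture=True)))
```

Keeping the decision logic separate from perception makes it easy to test each branch of the protocol without a robot in the loop.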

3.2. Enabling Technologies

Recent developments in robotics, ICTs, multimodal human-robot interaction, and artificial intelligence have led to significant progress in robot-assisted cognitive training and rehabilitation. This section summarizes several important enabling technologies that foster the advancement of robotic rehabilitation for cognitive training: the design of physical appearance, multimodal sensing, multimodal feedback, gamification, virtual and augmented reality, and artificial intelligence.

3.2.1. Design of Physical Appearance

A robot can have a human-like (Miskam et al., 2015; Peleka et al., 2018; Taheri et al., 2019), animal-like (Cao et al., 2015; Sung et al., 2015), or unfamiliar appearance (Scassellati et al., 2018). Besides appearance, the size, softness, and comfort of a robot can also affect users' perception, affection, cognitive load, and gaze following during interaction (Kohori et al., 2017), and thus the effectiveness of cognitive training. How exactly users' perception is affected by a robot's appearance remains unclear. On the one hand, a human-like appearance was shown to significantly increase users' perception of the robot's anthropomorphism, animacy, likeability, and intelligence compared with a snowman-like appearance (Haring et al., 2016). On the other hand, a more human-like appearance did not necessarily improve performance in human-robot interaction. For example, in a survey of expectations about robot appearance in robot-assisted ASD therapy, zoomorphic robots were considered less ethically problematic than robots that looked too human-like (Coeckelbergh et al., 2016). Some respondents (i.e., parents, therapists, or teachers of children with ASD) worried that the child might perceive the robot as a friend, or that the robot might look too human-like. The relation between robots' human-like appearance and people's reactions to them may be related to the uncanny valley theory (Mori et al., 2012), which holds that people's familiarity (or affinity) with robots increases as the robots appear more human-like, but that when robots appear almost human, people's response shifts to revulsion. Tung (2016) observed an uncanny valley between the degree of anthropomorphism and children's attitudes toward robots. In a review of factors affecting social robot acceptability among older adults, including people with dementia or cognitive impairment, Whelan et al. (2018) found a lack of consensus regarding the optimal appearance of social robots and that the uncanny valley effect varies between individuals and groups.

3.2.2. Multimodal Sensing

A good understanding of a user's cognitive state, affective state, and surrounding environment, termed multimodal perception, is a prerequisite for robots to provide cognitive training. Multimodal perception usually involves two stages: multimodal sensing and perception. Sensing is reviewed in this section, and the integration of sensed signals is discussed under artificial intelligence (section 3.2.6). Various sensors have been adopted to enable multimodal sensing, depending on system requirements, end-user population, cost-effectiveness, and other factors. Among the different sensing technologies, visual and auditory sensing are the most popular modalities. The main sensing modalities are summarized below.

1. Visual sensing. During human-robot interaction, visual sensors provide a popular, useful, and accessible channel for perception. Advances in manufacturing and ICTs have enabled researchers to integrate small, high-resolution, and affordable cameras into rehabilitation robotic systems. Some studies placed cameras in the environment along with the robot (Melo et al., 2019), while others built cameras into the robot itself. For example, the social robot Pepper has 2D and 3D cameras in its head (Paletta et al., 2018). Using computational approaches such as computer vision, the robots analyzed video and images from the cameras to recognize the user's environment and critical user states, such as facial expression, body movements, and even emotion and attention (Paletta et al., 2018; Peleka et al., 2018; Rudovic et al., 2018; Mois et al., 2020), which led to a better perception of users (Johal et al., 2015).

2. Auditory sensing. Another popular modality in robot-assisted cognitive training is auditory sensing. Researchers analyzed users' voice signals in terms of lexical field, tone, and/or volume for speech recognition, emotion detection, and speaker recognition (Paletta et al., 2018; Peleka et al., 2018; Rudovic et al., 2018). Because speech is a natural and intuitive channel, some end users, such as older adults, preferred it to touch input when interacting with the robot (Zsiga et al., 2018).

3. Physiological sensing. Besides visual and auditory sensing, physiological modalities have been incorporated into robotic systems to better understand users' states (e.g., affective states). Previous studies show that human-robot interaction may be enhanced using physiological signals, such as heart rate, blood pressure, breathing rate, and eye movement (Sonntag, 2015; Lopez-Samaniego and Garcia-Zapirain, 2016; Ozcana et al., 2016; Ahn et al., 2017; Alimardani and Hiraki, 2017). For example, Rudovic et al. (2018) used wearable sensors to record heart rate, skin conductance, and body temperature during robot-based therapy for children with ASD in order to estimate the children's affective states and engagement levels. When studying robot-assisted training for children with ASD, Nunez et al. (2015) used a wearable electromyography (EMG) device to detect children's smiles.

4. Neural sensing. Brain-imaging sensors make it possible to measure and/or monitor a user's brain activity and to infer the user's mental states (Ali et al., 2019). This is especially useful for assessing the user's cognitive states, such as level of attention and task engagement (Alimardani and Hiraki, 2017; Tsiakas et al., 2018). Neural sensing may also benefit users who have difficulty expressing their intentions and feelings because of physical and/or cognitive limitations, such as older adults with AD. Among brain-imaging sensors, electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) are currently the two most popular modalities because they are non-invasive, portable, and cost-effective. For example, Lins et al. (2019) developed a robot-assisted therapy to stimulate the attention of children with cerebral palsy and used EEG sensors to measure the children's attention level during the therapy.
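As an illustration of how an attention or engagement level can be derived from EEG, the sketch below computes a band-power ratio often used in the literature, the engagement index β/(α+θ). This is one common choice, not necessarily the measure used by Lins et al. (2019) or Tsiakas et al. (2018). The band edges, the 256 Hz sampling rate, and the synthetic test signals are assumptions, and the spectrum is computed directly from the DFT definition to keep the example dependency-free.

```python
import math


def band_power(signal: list[float], fs: float, lo: float, hi: float) -> float:
    """Sum of squared DFT magnitudes for frequency bins in [lo, hi) Hz.

    A plain (unwindowed) periodogram computed from the DFT definition; fine for
    short epochs, though real pipelines would use an FFT and windowing.
    """
    n = len(signal)
    total = 0.0
    for k in range(n // 2 + 1):
        if lo <= k * fs / n < hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            total += re * re + im * im
    return total


def engagement_index(signal: list[float], fs: float) -> float:
    """Engagement index beta / (alpha + theta) for one EEG channel.

    Assumed band edges: theta 4-8 Hz, alpha 8-13 Hz, beta 13-30 Hz.
    """
    theta = band_power(signal, fs, 4.0, 8.0)
    alpha = band_power(signal, fs, 8.0, 13.0)
    beta = band_power(signal, fs, 13.0, 30.0)
    return beta / (alpha + theta)


# Synthetic 2 s epochs at an assumed 256 Hz sampling rate:
# "attentive" is dominated by a 20 Hz (beta) component, "relaxed" by 10 Hz (alpha).
fs = 256.0
t = [i / fs for i in range(int(2 * fs))]
attentive = [2.0 * math.sin(2 * math.pi * 20 * s) + 0.5 * math.sin(2 * math.pi * 10 * s) for s in t]
relaxed = [0.5 * math.sin(2 * math.pi * 20 * s) + 2.0 * math.sin(2 * math.pi * 10 * s) for s in t]
print(engagement_index(attentive, fs), engagement_index(relaxed, fs))
```

A higher index indicates relatively more beta-band activity; in practice, the index would be tracked over sliding windows and compared with each user's own baseline rather than with a fixed threshold.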

3.2.3. Multimodal Feedback

After perceiving its user and environment, a robot should use multimodal feedback to interact with its user (e.g., by displaying its behaviors and feedback) in a comfortable, readable, acceptable, and effective way (Melo et al., 2019). Multimodal feedback is particularly meaningful when end users are unfamiliar with technology or have limited cognitive capabilities, such as older adults with dementia. Feedback channels include voice, video, gesture, and physical appearance, all of which can affect users' perception of the robot during interaction and thus the effectiveness of cognitive training and rehabilitation (Ab Aziz et al., 2015; Rabbitt et al., 2015). Popular modalities for robotic feedback during interaction with human users are listed below.

1. Visual feedback. One of the most widely used overt feedback modalities is visual feedback through a graphical user interface (GUI) that displays two-dimensional information. During robot-assisted cognitive training, visual feedback was delivered through an additional computer screen (Salvador et al., 2016; Taheri et al., 2019; Mois et al., 2020) or a touchscreen embedded in the robot (Paletta et al., 2018; Peleka et al., 2018). Previous studies have suggested some principles for GUI design in robot-assisted training. For example, Ahn et al. (2017) recommended a larger screen and a simpler interface for each function choice to better support visual feedback during cognitive training.

2. Auditory feedback. Another widely used modality for feedback and interaction is auditory output, analogous to speech in human-human interaction. Intuitive auditory communication can reduce unfamiliarity with robotic technologies and increase system usability for vulnerable populations, such as older adults (Zsiga et al., 2018). In previous studies, this feedback was delivered as beeps (Nault et al., 2020), speech (Ab Aziz et al., 2015; Miskam et al., 2015; Peleka et al., 2018; Taheri et al., 2019), music (Nunez et al., 2015), or a combination of these. Through auditory output, the robot provided daily communication and medication reminders, gave cognitive training instructions, and issued urgent warnings (e.g., low battery) to the user (Orejana et al., 2015).

3. Non-verbal feedback. Non-verbal feedback refers to all non-verbal communication cues produced by the robot's body, such as hand gestures and other body movements (Miskam et al., 2015; Taheri et al., 2019), eye gaze (Taheri et al., 2019), eye color (Miskam et al., 2015; Taheri et al., 2019), and facial expression. Like verbal language, such animation can contribute substantially to robot-assisted cognitive training. In particular, a robot's animation can introduce social interaction, which is valuable for individuals with impaired social interaction skills.

4. Haptic feedback. Haptic feedback, which simulates the senses of touch and motion, may play an important role in robot-assisted cognitive training because of the importance of touch in everyday life (Beckerle et al., 2017; Cangelosi and Invitto, 2017). Tactile feedback is one type of haptic feedback. During robot-assisted cognitive training, haptic feedback can also be delivered as vibration through wearable devices. For example, Nault et al. (2020) developed a socially assistive robot-facilitated memory game augmented with audio and/or haptic feedback for older adults with cognitive decline. Although their pilot study found no significant differences in participants' game accuracy, preference, or performance, the results suggested future improvements, such as increasing the strength of haptic feedback to make it easier to perceive and the system more engaging. One notable robot, PARO, combines a soft, plush surface with encouraging haptic feedback (e.g., small vibrations) to create a soothing presence. In a pilot study with institutionalized older adults by Sung et al. (2015), robot-assisted therapy using PARO improved participants' communication and social skills.
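To make the idea of delivering the same content through selectable feedback channels concrete, the sketch below outlines a sequence-memory game whose items can be cued by audio, by haptic vibration, or both, loosely in the spirit of the game of Nault et al. (2020). The game rules, the item set `SYMBOLS`, the cue strings, and the pulse-count haptic encoding are all hypothetical; in a real system each cue would be rendered by the robot's speaker or a wearable vibration motor rather than returned as text.

```python
import random

SYMBOLS = "ABCD"  # hypothetical item set (e.g., four colored cards)


def make_sequence(length: int, rng: random.Random) -> list[str]:
    """Random sequence of items the user must remember."""
    return [rng.choice(SYMBOLS) for _ in range(length)]


def render_cues(sequence: list[str], modalities: set[str]) -> list[list[str]]:
    """Describe how each item would be presented in the chosen modalities.

    'audio' -> a spoken cue naming the item; 'haptic' -> a vibration whose
    pulse count encodes the item (hypothetical encoding: A=1, B=2, ...).
    """
    cues = []
    for item in sequence:
        item_cues = []
        if "audio" in modalities:
            item_cues.append(f"say:{item}")
        if "haptic" in modalities:
            item_cues.append(f"vibrate:{SYMBOLS.index(item) + 1}")
        cues.append(item_cues)
    return cues


def recall_accuracy(sequence: list[str], response: list[str]) -> float:
    """Fraction of positions recalled correctly; missing answers count as wrong."""
    correct = sum(1 for target, answer in zip(sequence, response) if target == answer)
    return correct / len(sequence)


rng = random.Random(0)
sequence = make_sequence(4, rng)
print(render_cues(sequence, {"audio", "haptic"}))
```

Separating cue rendering from game logic lets a study compare audio-only, haptic-only, and combined conditions with an otherwise identical game.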

3.2.4. Gamification

Recently, game technology has become a popular way to motivate, engage, and appeal to users in cognitive tasks, since traditional cognitive tasks are usually effortful, frustrating, repetitive, and disengaging. Serious games and brain training games are a growing research field for cognitive training (Heins et al., 2017). The integration of gaming and robotic technologies has attracted increasing research and application interest as a way to further enhance users' engagement in cognitive training. Several types of relationships between robots and games have been explored in the literature. For example, a robot can lead or accompany users through a cognitive training game by providing instructions on how to perform the task (Ioannou et al., 2015; Chu et al., 2017; Tsardoulias et al., 2017; Scassellati et al., 2018; Sandygulova et al., 2019; Taheri et al., 2019; Tleubayev et al., 2019; Nault et al., 2020) or by acting as an active player in the game (Tariq et al., 2016; Melo et al., 2019). Additionally, a robot can provide various types of feedback (see section 3.2.3) to encourage users to engage in the game (Taheri et al., 2015a; Lopez-Samaniego and Garcia-Zapirain, 2016). Cognitive training games are often integrated into robotic systems through a GUI (Ahn et al., 2017; Paletta et al., 2018; Peleka et al., 2018).

3.2.5. Virtual and Augmented Reality

Combining robot-assisted cognitive training with virtual reality (VR) and/or augmented reality (AR) techniques may offer a cost-effective and efficient alternative to traditional training settings, because VR/AR allows tasks and environments to be replicated more conveniently and affordably. Researchers have also explored robotic cognitive training with mixed reality technology. For example, Sonntag (2015) presented an intelligent cognitive enhancement platform for people with dementia, in which mixed reality glasses delivered the storyboard (e.g., a serious game for active memory training) to the user and a NAO robot served as a cognitive assistant for the “daily routine.” Bozgeyikli et al. (2015) used virtual reality in a vocational rehabilitation system that included six modules, such as money management in a virtual grocery store, to provide vocational training for persons with ASD and TBI.

3.2.6. Artificial Intelligence

Artificial intelligence plays a significant role in robot-assisted cognitive training and rehabilitation, with applications in multimodal perception and feedback, personalization, and adaptability (Ab Aziz et al., 2015; Rudovic et al., 2018). Given multimodal sensing, successful multimodal perception further requires robots to integrate signals across multiple input sensors. Great progress has been made here thanks to advances in machine learning and deep learning. Multimodal signals enable a robot to interpret and understand its users, including their needs, intentions, and emotions, as well as their surrounding environment (Paletta et al., 2018). Rudovic et al. (2018) used deep learning in a robot for ASD therapy to automatically estimate children's valence, arousal, and engagement levels. Javed et al. (2018) used multimodal perception, including analyses of children's motion, speech, and facial expression, to estimate children's emotional states. On the feedback side, using multiple modalities may overload users with redundant information, increase task completion time, and reduce the efficiency of cognitive training (Taranović et al., 2018c). Additionally, users may favor certain modalities over others because of personal preference or cognitive disability. Taranović et al. (2018c) designed an adaptive modality selection (AMS) experiment with robot-assisted sequential memory exercises and applied artificial intelligence to learn a strategy that selects the appropriate combination and number of feedback modalities for different situations (e.g., environments and users). An appropriate strategy is crucial for successful long-term robot-assisted cognitive intervention.
In particular, reinforcement learning, an area of machine learning, is a promising approach for adapting and personalizing the intervention to each user and for optimizing the performance of robot-assisted cognitive training, because it allows a robot to learn from its experience of interacting with users (Sutton and Barto, 2018). For example, Tsiakas et al. (2016) used interactive reinforcement learning methods to support adaptive robot-assisted therapy, that is, to adapt the task difficulty level and task duration to users with different skill levels (e.g., expert or novice users) who need to perform a set of cognitive or physical training tasks. Javed et al. (2018) developed a hidden Markov model (HMM) in their adaptive framework for child-robot interaction, aiming to enable children with ASD to engage in robot-assisted therapy over the long term. In their HMM, the states captured a child's emotional state or mood, and the actions were the robot's behaviors or other audiovisual feedback. Clabaugh et al. (2019) used reinforcement learning to personalize instruction challenge levels and robot feedback to each child's unique learning patterns in long-term in-home robot interventions. Although reinforcement learning can suffer from sample inefficiency, this slowness can be mitigated with techniques such as episodic memory and meta-learning (Botvinick et al., 2019).
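Under simplifying assumptions, adaptive modality selection can be framed as a multi-armed bandit, the simplest reinforcement learning setting: each arm is a combination of feedback modalities, and the reward is whether the user recalls the exercise item. The epsilon-greedy sketch below is a generic stand-in, not the algorithm used by Taranović et al. (2018c). The modality combinations in `ARMS` and the simulated success rates in `SUCCESS_PROB` are hypothetical.

```python
import random


class EpsilonGreedySelector:
    """Epsilon-greedy choice among feedback-modality combinations."""

    def __init__(self, arms, epsilon=0.1, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = [0] * len(self.arms)
        self.values = [0.0] * len(self.arms)   # running mean reward per arm

    def select(self) -> int:
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.arms))   # explore: random arm
        return self.values.index(max(self.values))      # exploit: first best arm

    def update(self, arm: int, reward: float) -> None:
        self.counts[arm] += 1
        # Incremental mean: Q <- Q + (r - Q) / n
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


ARMS = [("speech",), ("speech", "gesture"), ("speech", "gesture", "screen")]
# Hypothetical probability that a simulated user recalls the item under each arm.
SUCCESS_PROB = [0.4, 0.8, 0.6]

selector = EpsilonGreedySelector(ARMS, epsilon=0.1, seed=42)
env = random.Random(7)
for _ in range(2000):
    arm = selector.select()
    reward = 1.0 if env.random() < SUCCESS_PROB[arm] else 0.0
    selector.update(arm, reward)

best_arm = selector.counts.index(max(selector.counts))
print(ARMS[best_arm], [round(v, 2) for v in selector.values])
```

The small exploration rate keeps occasionally testing other combinations, which matters when a user's preferences drift over a long-term intervention; richer formulations would condition the choice on context such as the environment or the user's current state.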
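The HMM idea can be illustrated with belief tracking: a robot cannot observe a child's mood directly, but it can update a probability distribution over hidden mood states from observed cues, with transitions that depend on the robot's own behavior. The sketch below runs the standard normalized forward recursion with action-dependent transitions. It is not the model of Javed et al. (2018); the states, robot actions, observations, and all probabilities are hypothetical values chosen for illustration.

```python
STATES = ("disengaged", "engaged")

# P(next state | current state, robot action); rows: current, columns: next.
# Hypothetical numbers.
TRANSITION = {
    "idle":  [[0.9, 0.1], [0.3, 0.7]],
    "dance": [[0.5, 0.5], [0.1, 0.9]],
}

# P(observation | state), indexed by state; each state's column sums to 1.
# Hypothetical observation model fed by perception modules.
EMISSION = {
    "looks_away": [0.7, 0.2],
    "smiles":     [0.1, 0.6],
    "neutral":    [0.2, 0.2],
}


def forward_filter(prior, steps):
    """Return the belief over STATES after a sequence of (action, observation) pairs."""
    belief = list(prior)
    n = len(STATES)
    for action, obs in steps:
        T = TRANSITION[action]
        # Predict: b'(j) = sum_i b(i) * T[i][j]
        predicted = [sum(belief[i] * T[i][j] for i in range(n)) for j in range(n)]
        # Update: weight by the observation likelihood, then normalize
        unnormalized = [predicted[j] * EMISSION[obs][j] for j in range(n)]
        z = sum(unnormalized)
        belief = [u / z for u in unnormalized]
    return belief


belief = forward_filter([0.5, 0.5], [("dance", "smiles"), ("dance", "smiles")])
print(dict(zip(STATES, (round(b, 3) for b in belief))))
```

An adaptive robot could then choose its next behavior based on this belief, for example switching to a more stimulating activity when the probability of disengagement rises.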

3.3. Experimental Studies

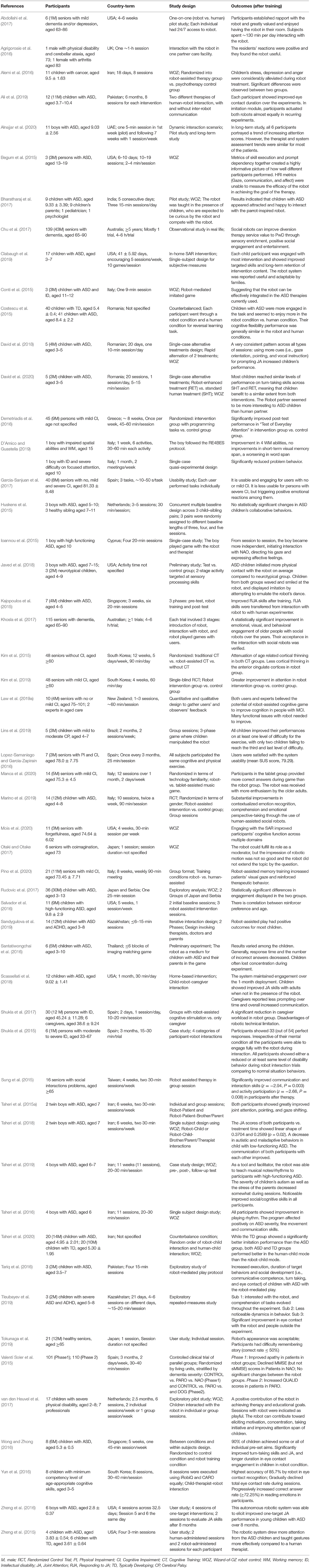

Many experimental studies have been conducted to evaluate important properties of robotic rehabilitation, such as feasibility, safety, usability, and performance. On the one hand, exploratory studies, including surveys and interviews with users (e.g., patients, caregivers, and therapists), have been conducted to inform the next stage of research (Rabbitt et al., 2015; Coeckelbergh et al., 2016; Salichs et al., 2016; Darragh et al., 2017; Kohori et al., 2017; Korchut et al., 2017; Law et al., 2019b). On the other hand, researchers have conducted experimental studies to verify and/or validate robot-assisted cognitive training systems. Table 2 shows a meta-analysis of experimental studies in which robot-assisted cognitive training was provided to primary end users (i.e., persons with cognitive disabilities). To date, the majority of experimental studies have been conducted in controlled laboratory settings, and only a few have been conducted in environments reflecting daily activities in the real world (Scassellati et al., 2018).

3.3.1. Study Design

Most experimental studies included three phases: pre-training assessment (i.e., baseline assessment), robot-assisted cognitive training, and post-training assessment (Kajopoulos et al., 2015; Kim et al., 2015; Sung et al., 2015; Yu et al., 2015; Alemi et al., 2016; Taheri et al., 2016, 2019; van den Heuvel et al., 2017; Scassellati et al., 2018; Marino et al., 2019). The effectiveness of robot-assisted training was evaluated by comparing pre- and post-training assessments using machine learning or statistical methods (Kim et al., 2015; Yu et al., 2015; Scassellati et al., 2018; Marino et al., 2019; Taheri et al., 2019).
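For the pre/post design above, one standard statistical comparison is a paired t-test on each participant's score change. The sketch below computes the paired t statistic, its degrees of freedom, and a paired effect size (mean difference divided by the standard deviation of the differences) with the Python standard library. The choice of test is an assumption, since the reviewed studies used various machine learning or statistical methods, and the example scores are invented for illustration.

```python
import math
import statistics


def paired_t(pre, post):
    """Return (t statistic, degrees of freedom, effect size d_z) for paired scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [b - a for a, b in zip(pre, post)]   # post minus pre, per participant
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)               # sample SD (n - 1 denominator)
    t = mean_d / (sd_d / math.sqrt(n))
    return t, n - 1, mean_d / sd_d


# Invented scores for five participants on some cognitive assessment.
pre = [20, 22, 19, 24, 21]
post = [23, 25, 20, 27, 22]
t, df, d_z = paired_t(pre, post)
print(round(t, 3), df, round(d_z, 3))
```

The p-value follows from comparing t with a t distribution with `df` degrees of freedom; `scipy.stats.ttest_rel` performs the same test and returns the p-value directly. With the very small samples common in this literature, such a test has little power, which is one reason effect sizes are worth reporting alongside it.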

Most studies adopted a group-based design in which participants were randomly assigned to control or intervention groups (Kim et al., 2015; Sung et al., 2015; Yu et al., 2015; Marino et al., 2019). Some researchers employed single-case designs (or single-subject designs) to investigate the impact of social robots on cognitive training (Ioannou et al., 2015; Taheri et al., 2015a; David et al., 2018). For example, Ioannou et al. (2015) conducted a single-case study to explore the potential role of the humanoid social robot NAO as a co-therapist during autism therapy sessions with one child with ASD. Their study comprised four intervention sessions and one post-intervention follow-up session to examine the effectiveness of the therapy with NAO.
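Random assignment to control and intervention groups is often implemented with permuted blocks, so that group sizes stay balanced as participants are enrolled one at a time. The sketch below is a generic illustration, not the procedure of any cited study; the function name, block size, and seed are assumptions.

```python
import random


def block_randomize(n_participants, block_size=4, seed=None):
    """Assign participants to 'control'/'intervention' in shuffled, balanced blocks."""
    if block_size % 2 != 0:
        raise ValueError("block_size must be even for two equal arms")
    rng = random.Random(seed)
    assignments = []
    while len(assignments) < n_participants:
        # Each block holds equal numbers of both arms in random order.
        block = ["control", "intervention"] * (block_size // 2)
        rng.shuffle(block)
        assignments.extend(block)
    return assignments[:n_participants]


allocation = block_randomize(20, block_size=4, seed=1)
print(allocation[:8])
```

Small blocks keep the groups balanced even if recruitment stops early, which is a practical concern given the recruitment difficulties noted below; in a real trial, the allocation list would be generated in advance and concealed from the staff enrolling participants.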

Sample sizes vary dramatically in the literature (Ioannou et al., 2015; Kajopoulos et al., 2015; Kim et al., 2015; Sung et al., 2015; Chu et al., 2017; Khosla et al., 2017; Rudovic et al., 2018), from only a few participants in some studies to hundreds in others. Challenges to recruitment included the accessibility of participants and their caregivers, participants' disabilities, and ethical issues (e.g., privacy).
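One way to see why small samples limit conclusions is an a priori sample-size calculation. Under the normal approximation for a two-sided, two-sample comparison, the number of participants per group is n = 2(z₁₋α/₂ + z₁₋β)² / d², where d is the standardized effect size, α the significance level, and 1 − β the desired power. The sketch below implements this approximation with the standard library; the effect sizes are illustrative, and exact t-based calculations give slightly larger values.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-sided two-sample comparison."""
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value for the two-sided test
    z_beta = z.inv_cdf(power)             # quantile for the desired power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


for d in (0.8, 0.5, 0.2):   # conventional "large", "medium", and "small" effects
    print(d, n_per_group(d))
```

Even a medium effect requires roughly 60 participants per group at 80% power, which is more than many of the reviewed studies enrolled in total.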

Reflecting the variety of applications and end users, the intensity and duration of robot-assisted cognitive training also varied greatly, in both the total number of training sessions and session duration. For example, a single training session lasted from about 20 min (Shukla et al., 2015; Tleubayev et al., 2019) to 90 min (Kim et al., 2015), and the total training period ranged from a few days (Bharatharaj et al., 2017) to more than 5 years (Chu et al., 2017).

3.3.2. Evaluation

Researchers employed subjective and/or objective metrics to evaluate the performance of robots in cognitive training. Subjective measurements include qualitative observation, interviews, and questionnaires. Objective measurements evaluate performance at a behavioral or neurophysiological level.

1. Observation. During the experiments, or from recorded video, experimenters or professional therapists observed and evaluated participants' behaviors, such as affective states, eye contact, communication, and other related interactions, based on their knowledge and experience (Begum et al., 2015; Conti et al., 2015; Costescu et al., 2015; Ioannou et al., 2015; Shukla et al., 2015; Taheri et al., 2015a, 2019; Yu et al., 2015; Tariq et al., 2016; Wong and Zhong, 2016; Yun et al., 2016; Zheng et al., 2016; Abdollahi et al., 2017; Chu et al., 2017; Garcia-Sanjuan et al., 2017; Khosla et al., 2017; Rudovic et al., 2017; David et al., 2018; Marino et al., 2019; Sandygulova et al., 2019; Tleubayev et al., 2019). Observation was a practical and dominant metric in studies of children with ASD. With the development of ICTs, some studies also applied customized software (instead of human effort) to evaluate users' behaviors, such as smiles and visual attention (Pino et al., 2020).

2. Interview. Interviews were conducted with the primary users (i.e., patients), their caregivers (e.g., parents and other family caregivers), and therapists to learn about users' opinions and experiences and about the performance of the robot-assisted cognitive training (Yu et al., 2015; Bharatharaj et al., 2017; Darragh et al., 2017; Paletta et al., 2018; Sandygulova et al., 2019; Taheri et al., 2019; Tleubayev et al., 2019). As Hartson and Pyla (2018) describe in their book on the user experience (UX) lifecycle, user interviews are a useful, practical technique for understanding users' needs, designing solutions, and evaluating UX, all of which are fundamental activities in the UX lifecycle. Specifically, interviews can be applied to elicit the requirements of people with cognitive disability and/or their caregivers, to create human-robot interaction design concepts, and to verify and refine the human-robot interaction design for cognitive training. For example, in the case studies by Orejana et al. (2015), older adults with chronic health conditions in a rural community used a healthcare robot (iRobi) in their homes for at least 3 months and were then interviewed about their personal experiences. The interviews revealed that more familiar games may be easier for older people to relate to and may therefore increase their confidence, and that a larger screen would make the functions easier to see and use. They also revealed that older people sometimes have reduced dexterity, so making the touchscreen less sensitive to long presses may prevent functions from being triggered accidentally.

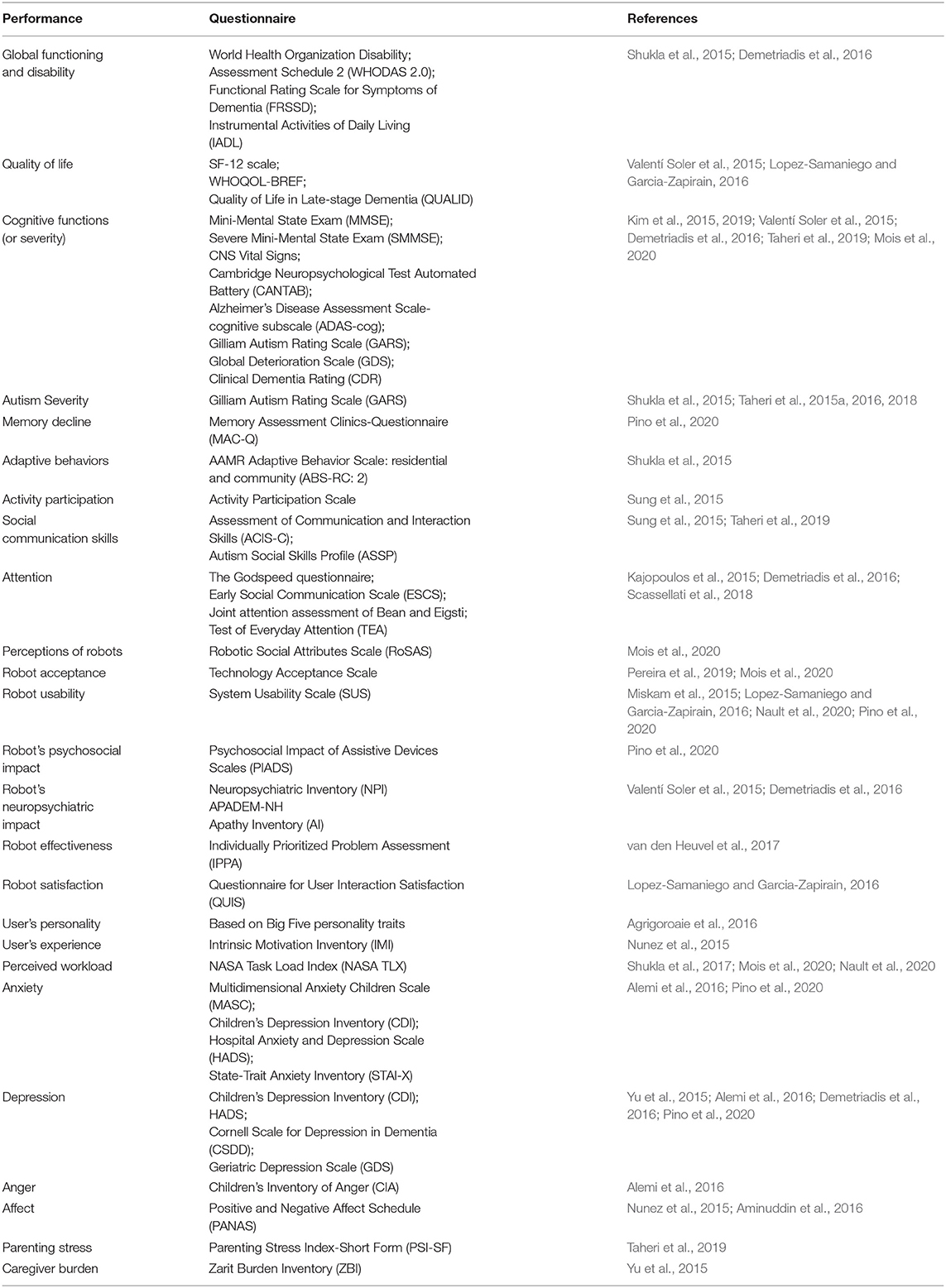

3. Questionnaire. Most studies used questionnaires to evaluate the performance of robot-assisted cognitive training. Researchers selected questionnaires according to their evaluation targets, such as the user group (e.g., patients, caregivers, or therapists), the cognitive capability of interest (e.g., memory or anxiety), and the research goal (e.g., users' perception of the robot or the robot's effectiveness). A few studies designed their own questionnaires (Tariq et al., 2016; Abdollahi et al., 2017; Ahn et al., 2017; Bharatharaj et al., 2017; Khosla et al., 2017; van den Heuvel et al., 2017; Scassellati et al., 2018; Lins et al., 2019; Tokunaga et al., 2019). Table 3 shows a list of common questionnaires in the literature.

4. Behavioral measurement. From a behavioral perspective, researchers evaluated the performance of robot-assisted training by measuring participants' numbers of correct/incorrect responses, response times, and/or times to complete the activity (Bozgeyikli et al., 2015; Costescu et al., 2015; Ioannou et al., 2015; Kajopoulos et al., 2015; Salvador et al., 2016; Shukla et al., 2017; Lins et al., 2019; Nault et al., 2020).

5. Neurophysiological measurement. Advances in brain-imaging technologies and deep learning enable researchers to assess the impact of cognitive training on cognitive capabilities from a neurophysiological perspective, using brain-imaging tools such as EEG, fNIRS, or functional magnetic resonance imaging (fMRI) (Ansado et al., 2020). Researchers have also used such tools to detect brain changes associated with participants' cognitive capabilities and used these changes as metrics of the robot's performance in cognitive training (Kim et al., 2015; Alimardani and Hiraki, 2017).
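The behavioral metrics listed in item 4 are simple to compute once each response is logged. The sketch below assumes a hypothetical per-trial log (a `Trial` record holding correctness and response time in seconds, plus session start and end timestamps); the reviewed studies used their own task-specific formats, so the field names here are illustrative only.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    """One response in a robot-led cognitive exercise (hypothetical log format)."""
    correct: bool
    response_time_s: float  # seconds from the robot's prompt to the participant's answer


def behavioral_summary(trials: list[Trial], session_start_s: float, session_end_s: float) -> dict:
    """Count correct/incorrect responses, mean response time, and completion time."""
    if not trials:
        raise ValueError("no trials recorded")
    n_correct = sum(t.correct for t in trials)
    return {
        "n_correct": n_correct,
        "n_incorrect": len(trials) - n_correct,
        "accuracy": n_correct / len(trials),
        "mean_response_time_s": mean(t.response_time_s for t in trials),
        "completion_time_s": session_end_s - session_start_s,
    }


# Illustrative session with four invented trials lasting 5 minutes in total.
trials = [Trial(True, 2.0), Trial(False, 4.0), Trial(True, 3.0), Trial(True, 3.0)]
summary = behavioral_summary(trials, session_start_s=0.0, session_end_s=300.0)
print(summary)
```

Keeping the raw per-trial log, rather than only the summary, also allows later re-analysis if a study wants to report additional measures.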

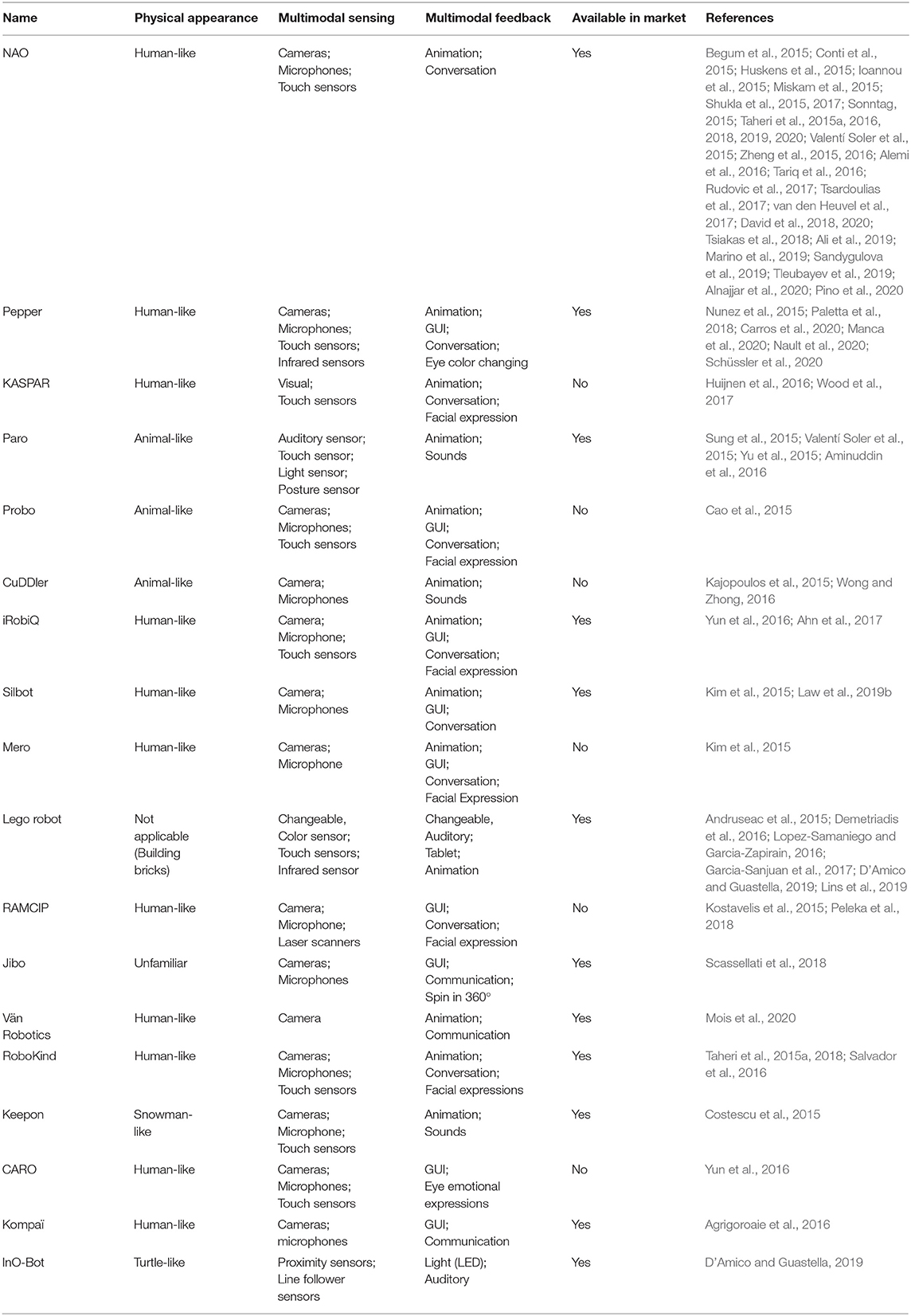

3.4. Robot Products

Advances in technologies such as manufacturing and ICTs have led to mass-produced robots for research, education, and therapeutic applications (Wood et al., 2017; Pandey and Gelin, 2018). Particularly in the field of cognitive training/rehabilitation, these robots feature capabilities such as the aforementioned multimodal perception and multimodal feedback to support human-robot interaction during cognitive training. Table 4 shows commonly used robot products and the key features that enable them to assist cognitive training. Their specific applications in previous studies of cognitive training, for example, assisting interventions for memory and social communication skills, are listed in Table 1.

4. Discussions

4.1. Limitations

4.1.1. Sample Size

Probably the most common challenge faced by researchers of cognitive training is a small number of participants, which calls the generalizability and reliability of experimental results into question. Small sample sizes resulted from the small number of available participants or the limited number of robots available for experiments (Kajopoulos et al., 2015; Shukla et al., 2015; Zheng et al., 2015; Tariq et al., 2016; Bharatharaj et al., 2017; Darragh et al., 2017; Garcia-Sanjuan et al., 2017; Tsardoulias et al., 2017; Lins et al., 2019; Marino et al., 2019; Tleubayev et al., 2019). Typically, a research lab has only a few robots. This is particularly challenging for experimental studies that require multiple sessions per user, because within the same study period such studies must limit the number of participants. Recruitment was also influenced by the accessibility of participants throughout the study and sometimes by problems associated with their caregivers (Alemi et al., 2016; Taheri et al., 2019). For example, Alemi et al. (2016) explored the effect of using the NAO robot as a therapy-assistive tool to address pediatric distress among children with cancer. Regarding their small sample size, the researchers noted that, owing to the novelty of their project and the scarcity of systematic psychological interventions for patients with cancer or other refractory illnesses in Iranian hospitals, it was difficult to persuade children's parents to join their study. They also noted that it was hard for parents to bring their children to intervention sessions regularly. Moreover, small samples mean that the participants in some studies were not representative in terms of factors such as severity of cognitive disability, age, and gender (Begum et al., 2015; Yu et al., 2015; Chu et al., 2017).
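A standard power calculation makes the sample-size problem concrete. The sketch below uses the textbook normal approximation for comparing two group means (not a method taken from the reviewed studies), n per group = 2(z₁₋α/₂ + z_power)² / d², where d is the standardized effect size. Under these assumptions, detecting a medium effect (d = 0.5) with 80% power at α = 0.05 needs roughly 63 participants per group, far more than the few participants some studies enrolled.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants per group for a two-sided, two-sample comparison.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2.
    """
    z = NormalDist()                       # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)     # critical value for the two-sided test
    z_power = z.inv_cdf(power)             # quantile matching the desired power
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size_d ** 2)


for d in (0.8, 0.5):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
# d = 0.8: about 25 participants per group
# d = 0.5: about 63 participants per group
```

The approximation slightly underestimates the exact t-test requirement for small samples, so it should be read as a lower bound.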

4.1.2. Measurement of Training Effectiveness

Another impeding factor in studies of robot-assisted cognitive training is the evaluation of training effectiveness, which involves identifying relevant evaluation metrics, choosing tools for those metrics, and designing experiments that facilitate evaluation. In terms of evaluation metrics, many studies adopted subjective evaluations, which can be biased and inaccurate. To the best of the authors' knowledge, there is no standardized questionnaire for evaluating robot-assisted cognitive training. As shown in Table 3, multiple different questionnaires were applied to evaluate the same target (e.g., robot acceptance), which makes it difficult to compare the performance of robot-assisted cognitive training across studies (Bharatharaj et al., 2017). Assessment metrics often focus on the impact on the specifically trained cognitive capability, ignoring potential transfer to other cognitive skills (Zheng et al., 2016) and long-term performance (Richardson et al., 2018). Moreover, robot-assisted cognitive training was frequently evaluated in controlled laboratory settings, whereas real-world environments are usually noisy and dynamic, which poses greater challenges for reliable, robust user-robot interaction and a good user experience (Salem et al., 2015; Trovato et al., 2015).

Additionally, the effectiveness of robot-assisted cognitive training may be affected by how users perceive their interaction with the robot. On the one hand, some studies (Lopez-Samaniego and Garcia-Zapirain, 2016; Pereira et al., 2019; Mois et al., 2020) have evaluated the acceptance, satisfaction, and perception of robots for cognitive training. On the other hand, many studies (Kim et al., 2015; Shukla et al., 2015; Demetriadis et al., 2016; Pino et al., 2020) have evaluated the effectiveness of robot-assisted cognitive training on participants' cognitive capabilities. However, the literature rarely addresses how acceptance and perception of the robot affect the effectiveness of cognitive training. Moreover, as shown in Table 2, some studies presented the results of robot-assisted training without comparing them with the effectiveness of human-assisted training. For a more rigorous evaluation of the effectiveness of a robot-assisted cognitive training approach, we recommend comparing it against human-assisted training and other existing approaches.
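When a study compares robot-assisted training with human-assisted training, reporting a standardized effect size helps place results from different outcome instruments on a common, unitless scale. The sketch below computes Hedges' g (Cohen's d with the usual small-sample correction) for a robot-assisted group versus a human-assisted group; the group means, standard deviations, and sizes are invented for illustration. Standardizing does not make two different questionnaires measure the same construct, so it eases but does not remove the comparability problem described above.

```python
import math


def hedges_g(mean_robot: float, sd_robot: float, n_robot: int,
             mean_human: float, sd_human: float, n_human: int) -> float:
    """Bias-corrected standardized mean difference between two independent groups."""
    df = n_robot + n_human - 2
    pooled_sd = math.sqrt(((n_robot - 1) * sd_robot**2 + (n_human - 1) * sd_human**2) / df)
    cohens_d = (mean_robot - mean_human) / pooled_sd
    correction = 1 - 3 / (4 * df - 1)   # small-sample correction factor J
    return correction * cohens_d


# Hypothetical post-training scores on the same cognitive test in both groups.
g = hedges_g(mean_robot=24.0, sd_robot=4.0, n_robot=10,
             mean_human=22.0, sd_human=4.0, n_human=10)
print(round(g, 3))  # 0.479
```

A positive g favors the robot-assisted group; with groups of this size its confidence interval would be wide, which links back to the sample-size limitation.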

4.1.3. Uncontrollable Factors

Uncontrollable factors are always present in studies of robot-assisted cognitive training/rehabilitation. The problem is more pronounced in multiple-session studies, since researchers cannot control participants' daily and social activities outside the laboratory. Uncontrollable factors have received relatively little attention. In a study on using a social robot to teach music to children with autism, Taheri et al. (2019) pointed out that some of the observed improvements in music education and/or social skills may be attributable to other interventions or education the participants were receiving. When investigating the influence of robot-assisted training on cortical thickness in the brains of elderly participants, Kim et al. (2015) acknowledged uncontrollable factors arising from participants' daily cognitive activities at home, such as using computers or reading books.

4.2. Challenges and Future Development

4.2.1. Ethical Challenges

During the development of robots for cognitive training/rehabilitation, ethical issues related to human dignity, safety, legality, and social factors need to be considered. During robot-assisted cognitive training, for instance, the interaction between the user and the robot happens at both the cognitive (dominant) and physical levels (Villaronga, 2016), which raises the issue of perceived safety, or cognitive harm: the user may perceive the robot as unsafe or scary (Salem et al., 2015; Coeckelbergh et al., 2016). In a study exploring the influence of deceptive robot behavior on human-robot interaction (Shim and Arkin, 2016), a NAO robot deceptively gave positive feedback to participants' incorrect answers in a motor-cognition dual task. Self-reports revealed that the robot's deceptive feedback positively affected participants' frustration levels and task engagement. Even though a robot's deceptive action may lead to positive outcomes, Shim and Arkin emphasized that the ethical implications of robot deception, including the motives for deception, should always be discussed and validated before it is applied. Another emerging ethical issue is the allocation, or distribution, of responsibility (Loh, 2019; Müller, 2020). For example, if a robot acts during cognitive training, who is responsible, liable, or accountable for its actions: the robot itself, its designers, or its users? We should also pay close attention to ethical issues such as affective attachment, dependency on the robot, safety and privacy protection of users' information, and transparency in the use of algorithms in robotic systems (Kostavelis et al., 2015; Casey et al., 2016; Richardson et al., 2018; Fiske et al., 2019). Designers should likewise accommodate these ethical considerations in the robot's design (Ozcana et al., 2016).
To ensure perceived safety, researchers should always take end users' perceptions into account; these can be assessed through questionnaires and interviews with users (and with their caregivers and therapists if needed) and through their behavioral and neurophysiological activity. The tendency of humans to form attachments to anthropomorphized robots should be carefully considered during design (Riek and Howard, 2014; Riek, 2016). Moreover, because both patients and professional caregivers may fear that robots could replace human health care, it should be emphasized that rehabilitation robots are developed to supplement human caregivers, not to replace them (Doraiswamy et al., 2019).

4.2.2. User-Centered Design

The goal of robotic cognitive rehabilitation is to provide cost-effective cognitive training to vulnerable people with cognitive disabilities, supplementing their caregivers and/or therapists (Doraiswamy et al., 2019). Therefore, we encourage user-driven, rather than technology-driven, robot design and development (Rehm et al., 2016). The primary users of the robots (i.e., patients) and other key stakeholders (e.g., caregivers, therapists, and doctors) should be involved in designing and shaping the robot, including requirements analysis, development, and evaluation (Casey et al., 2016; Gerling et al., 2016; Leong and Johnston, 2016; Rehm et al., 2016; Salichs et al., 2016; Barco Martelo and Fosch Villaronga, 2017; Riek, 2017). It is also important to pay attention to potential technical difficulties for vulnerable populations, such as the elderly and children with ASD (Orejana et al., 2015), and to the social and contextual environment in which the robot will be applied (Jones et al., 2015). More standardized, unbiased benchmarks and metrics need to be developed so that different stakeholders can evaluate the performance of robots from their own perspectives. While pilot studies with healthy participants are a necessary first step, it is crucial to evaluate the developed systems with patients with cognitive impairment in home settings.

Furthermore, robot development is multidisciplinary and requires knowledge from multiple fields, such as social cognitive science, engineering, psychology, and health care (e.g., ASD and dementia care). Enhanced collaboration among these fields is needed to improve future technology in robotic rehabilitation.

4.2.3. Reliability, Trust, Transferability, and Cost-Effectiveness

A reliable robotic system works consistently in noisy, dynamic real-world environments. Reliability contributes significantly to users' confidence and increases positive perception of the robot (Wood et al., 2017). Mistakes made by robots during interaction can cause human users to lose trust in them. More work on human-robot interaction is needed to implement reliable and robust robots to assist cognitive training, which may cover multimodal sensing technologies, artificial intelligence, and modeling. We also need to consider how to effectively restore trust in the robot when it makes mistakes during interaction with the user, which may involve how and when the robot apologizes to users. Robinette et al. (2015) found that timing is key to repairing trust in a robot: the robot should wait and address the mistake the next time a potential trust decision occurs, rather than addressing it immediately.

Currently, most studies focus on specific cognitive training tasks and environments, so the robot cannot assist in other cognitive tasks. We therefore encourage the implementation of transferable mechanisms in robots for cognitive training; in other words, robots should be equipped with more powerful learning algorithms so that they can learn and adapt to new cognitive training tasks (Andriella et al., 2019a). Researchers should also take cost-effectiveness into account when designing robots (Wood et al., 2017). From a commercial perspective, cost-effectiveness considerations extend beyond the purchase, maintenance, and training costs of the system (Riek, 2017). Furthermore, from the perspective of users' time and effort, more work is needed to identify the optimal robot-assisted cognitive training strategy (e.g., the frequency and duration of each cognitive training session). We therefore encourage future studies to clearly state the training strategies used, making it easier for the community to compare different strategies.
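One lightweight way to state a training strategy so that it can be compared across studies is a small structured record. The sketch below is a hypothetical reporting format (the `TrainingProtocol` class and its fields are assumptions, not an existing standard); it derives the total number of sessions and the total training time from frequency, session length, and program duration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingProtocol:
    """Hypothetical reporting record for a robot-assisted cognitive training protocol."""
    target_population: str
    sessions_per_week: float
    minutes_per_session: float
    total_weeks: float

    @property
    def total_sessions(self) -> float:
        return self.sessions_per_week * self.total_weeks

    @property
    def total_minutes(self) -> float:
        """Total training dose, a simple quantity for comparing protocols."""
        return self.total_sessions * self.minutes_per_session


protocol = TrainingProtocol("children with ASD", sessions_per_week=2,
                            minutes_per_session=30, total_weeks=8)
print(protocol.total_sessions, protocol.total_minutes)  # 16 480
```

Total dose alone does not capture how sessions are spaced, so a real reporting format would likely keep frequency and duration as separate fields, as this record does.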

4.2.4. Personalization

There is no “one-size-fits-all” solution in health care. To provide successful cognitive training, the robot needs to be personalized and adaptive at three levels. First, the robot should provide cognitive training and feedback appropriate to the specific needs of groups with different cognitive disabilities (e.g., people with ASD or people with ADRD). Second, the robot needs to accommodate diversity within the population and tailor its behavior to each individual user's severity of cognitive impairment, cultural and gender-dependent differences, personality, and preferences (Kostavelis et al., 2015; Javed et al., 2016; Riek, 2016; Darragh et al., 2017; Rudovic et al., 2017; Richardson et al., 2018; Sandygulova et al., 2019). For example, children with ASD have a wide range of behavioral, social, and learning difficulties, and each individual may have different preferences regarding the robot's gender and feedback modalities (Sandygulova and O'Hare, 2015; Nault et al., 2020). We therefore expect a personalized robot to provide varied cognitive training, e.g., a variety of games and an adjustable voice, to meet diverse individual needs and keep the user engaged and focused over the long term (Scassellati et al., 2018; Tleubayev et al., 2019). Third, rehabilitation robots should adapt to individual, time-varying characteristics such as cognitive impairment, task engagement, and even personality (Agrigoroaie and Tapus, 2016; Tsiakas et al., 2016, 2018). For example, the robot should adjust the cognitive training and feedback if the user is bored or finds the task too difficult or too easy (Lins et al., 2019). Machine learning methods should also take personalization into account.
Existing methods, such as interactive reinforcement learning (IRL) or incremental learning (Castellini, 2016), provide good examples; in one such approach, a dedicated module models each user's information, such as the patient's name, hobbies, and personality traits relevant to cognitive training (Salichs et al., 2016). IRL is a variant of reinforcement learning that studies how a human can be included in the agent's learning process. Human input plays the role of feedback (i.e., a reinforcement signal after the selected action) or guidance (i.e., actions that directly intervene in or correct the current strategy). IRL can also be used to enable adaptation and personalization during robot-assisted cognitive training. For example, Tsiakas et al. (2016) proposed adaptive robot-assisted cognitive therapy using IRL, in which the primary user's feedback (e.g., engagement level) was treated as a personalization factor and the guidance from a professional therapist as a safety factor. Their simulation results showed that IRL improved the applied policy and led to faster convergence to the optimal policy. Castellini (2016) proposed an incremental learning model to enforce true, endless adaptation of the robot to the subject and environment and to improve the stability and reliability of the robot's control. Incremental learning enables an adaptive robot system to update its model whenever required, whenever new information becomes available, or whenever its predictions are deemed no longer reliable.
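To make the two kinds of human input in IRL concrete, the sketch below uses plain tabular Q-learning (one simple choice, not the method of Tsiakas et al.) to adjust exercise difficulty. A simulated engagement score stands in for the user's feedback, and a hypothetical therapist rule that caps difficulty stands in for guidance by correcting unsafe actions before they are executed. The difficulty levels, the preferred level, the cap, and all learning parameters are illustrative assumptions.

```python
import random

LEVELS = range(5)        # hypothetical difficulty levels: 0 (easiest) to 4 (hardest)
ACTIONS = (-1, 0, 1)     # make the exercise easier, keep it, or make it harder
PREFERRED_LEVEL = 2      # simulated user is most engaged at this level (assumption)
MAX_SAFE_LEVEL = 3       # therapist's hypothetical upper limit on difficulty


def user_feedback(level: int) -> float:
    """Simulated engagement in [0, 1]; plays the role of the user's feedback signal."""
    return 1.0 - abs(level - PREFERRED_LEVEL) / 4


def therapist_guidance(level: int, action: int) -> int:
    """Therapist corrects any action that would push difficulty past the safe limit."""
    return max(-1, min(action, MAX_SAFE_LEVEL - level))


def train(episodes=2000, steps=20, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in LEVELS for a in ACTIONS}
    for _ in range(episodes):
        level = rng.choice(LEVELS)
        for _ in range(steps):
            if rng.random() < epsilon:                      # explore
                proposed = rng.choice(ACTIONS)
            else:                                           # exploit current estimates
                proposed = max(ACTIONS, key=lambda a: q[(level, a)])
            action = therapist_guidance(level, proposed)    # guidance input
            nxt = min(max(level + action, 0), max(LEVELS))
            reward = user_feedback(nxt)                     # feedback input
            best_next = max(q[(nxt, a)] for a in ACTIONS)
            # Standard Q-learning update applied to the action actually executed.
            q[(level, action)] += alpha * (reward + gamma * best_next - q[(level, action)])
            level = nxt
    return q


q = train()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in LEVELS}
print(policy)
```

The learned policy moves the user toward the simulated preferred level, and the therapist rule keeps it from ever executing a step above the cap. In a real system the engagement signal would come from the robot's perception of the user rather than a formula.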

4.2.5. Human-Robot Collaboration

Future rehabilitation robots should be not only autonomous but also collaborative (or cooperative) (Huijnen et al., 2016; Weiss et al., 2017). Regarding collaboration between the robot and the primary end users (i.e., people with cognitive disability), there is evidence that a fully autonomous robotic system is not the best option for interacting with vulnerable populations (Peca et al., 2016); a semi-autonomous robot is a more suitable solution (Wood et al., 2017). With the overarching goal of enhancing the user's cognitive capabilities, the robot should “care” about the user's situation, react compensatorily as a teammate, engage the user, and train/stimulate the user's cognitive capabilities as well as possible. The capability to collaborate may also help the user avoid feeling redundant and increase their sense of autonomy and long-term engagement in cognitive training (Orejana et al., 2015). The robot should perceive the user's changing situation accurately and use an intelligent strategy to engage the user. Regarding collaboration among robots, users, and their caregivers (and therapists), more work is needed to solve the shared-control problem. Researchers need to develop strategies that let robots make caregivers' and therapists' tasks easier as assistive tools for cognitive training, instead of replacing them entirely (Kostavelis et al., 2015; Coeckelbergh et al., 2016). An appropriate balance between robot autonomy and teleoperation by caregivers/therapists is needed to support the robots' collaborative role in cognitive training.
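One simple way to express such a balance is an arbitration rule that decides, at each step, whether the robot acts on its own or defers to the therapist. The sketch below is a hypothetical rule (the `arbitrate` function, the action names, and the 0.7 confidence threshold are all assumptions): a therapist's teleoperation command always takes precedence, the robot acts autonomously only when confident, and otherwise it pauses and requests input.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    action: str
    source: str  # "therapist", "robot", or "ask_therapist"


def arbitrate(robot_action: str, robot_confidence: float,
              therapist_command: Optional[str],
              autonomy_threshold: float = 0.7) -> Decision:
    """Hypothetical shared-control rule for a semi-autonomous training robot."""
    if therapist_command is not None:            # teleoperation overrides autonomy
        return Decision(therapist_command, "therapist")
    if robot_confidence >= autonomy_threshold:   # confident: act autonomously
        return Decision(robot_action, "robot")
    return Decision("pause_and_request_input", "ask_therapist")


print(arbitrate("give_hint", 0.9, None))
print(arbitrate("give_hint", 0.4, None))
print(arbitrate("give_hint", 0.9, "praise_user"))
```

Raising the threshold shifts the system toward teleoperation and lowering it toward autonomy, so the threshold could itself be tuned per user or per session by the therapist.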

4.2.6. Social Cognition

The knowledge gained in human-human interaction can be applied to foster human-robot interaction and to obtain critical insights for optimizing social encounters between humans and robots (Henschel et al., 2020). Marchesi et al. (2019) conducted a questionnaire study to investigate whether people adopt the intentional stance (Dennett, 1989) toward a humanoid robot, iCub. Their results showed that it is possible, at least sometimes, to induce adoption of the intentional stance toward humanoid robots. Non-invasive neuroimaging techniques in neuroscience (e.g., fMRI) also make it possible to probe social cognitive processing in the human brain during interaction with robots. For example, Rauchbauer et al. (2019) observed that neural markers of mentalizing and social motivation were significantly more activated during human-human interaction than during human-robot interaction. Klapper et al. (2014) showed that human brain activity within the theory-of-mind network (Saxe and Wexler, 2005; Price, 2012; Koster-Hale and Saxe, 2013) was reduced when interacting with agents of low human animacy (i.e., in physical appearance) compared with agents of high human animacy. These findings become important when robots are adopted for cognitive training of people with cognitive dysfunction (Frith and Frith, 1999; Chevallier et al., 2012), as they underline the substantial contrast between human-human and human-agent interactions. Moreover, advanced non-invasive, portable, and cost-effective neuroimaging techniques (e.g., EEG and fNIRS) hold promise for extending the evaluation of human-robot interaction from controlled laboratory settings to real-world settings. We therefore encourage researchers to leverage human neuroscience to facilitate the development of robots for cognitive training, for example, to understand the effects of robot-assisted cognitive training and to learn, from a neurophysiological perspective, the extent to which and the contexts in which it can be beneficial.

4.2.7. Natural Human-Robot Interaction

Just as human therapists interact naturally with users during cognitive training, robots need to interact with users naturally in robotic rehabilitation. This includes understanding users' emotions (e.g., happiness, shame, engagement), intentions, and personality (Pettinati and Arkin, 2015; Rahbar et al., 2015; Vaufreydaz et al., 2016; Rudovic et al., 2018), providing an emotional response when the user shares personal information (de Graaf et al., 2015; Chumkamon et al., 2016), talking with the user day by day about various topics such as hobbies, and dealing with novel events (Dragone et al., 2015; Kostavelis et al., 2015; Adam et al., 2016; Ozcana et al., 2016). Such natural user-robot interaction requires powerful perception, reasoning, acting, and learning modules in robots, in other words, cognitive and social-emotional capabilities. However, from the perception perspective, understanding users' intentions and emotions remains a great challenge for robots (Matarić, 2017). Robots need to interpret multimodal signals (e.g., facial expressions, gestures, voice, and speech) simultaneously to understand users' covert intentions and emotions. Similarly, more work is needed on multimodal feedback. To maximize the benefits of physically present robots and to facilitate both short- and long-term human-robot interaction for cognitive training, we need to develop more embodied communication in robots, not limited to verbal communication (Paradeda et al., 2016; Salichs et al., 2016; Matarić, 2017). Haptic sensing and feedback should be strongly considered in future research as part of multimodal perception and feedback (Arnold and Scheutz, 2017; Cangelosi and Invitto, 2017).
More specifically, we need strategies that enable the robot to associate cognitive assistance and exercises with appropriate multimodal feedback, e.g., spoken words, facial expressions, eye gaze, and other body movements (Paletta et al., 2018). Embodied communication during human-robot interaction is a challenging research area (Nunez et al., 2015). It is still unclear how, and how much, embodied communication from the robot influences users' perception of it (Dubois et al., 2016). Moreover, previous studies indicated that users' experience of the robot can also be influenced by unexpected behaviors (Lemaignan et al., 2015), synchrony and reciprocity (Lorenz et al., 2016), and even cognitive biases (Biswas and Murray, 2016, 2017) exhibited by the robot. A caveat is that many unknowns remain for natural human-robot interaction.
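Interpreting several modalities at once is often approached with late (decision-level) fusion, in which each modality's classifier outputs its own probability distribution and the robot combines them. The sketch below is one minimal version, assuming a shared, hypothetical label set, invented per-modality scores, and hand-set modality weights; a modality that produces no estimate (for example, a face out of view) is skipped and the remaining weights are renormalized.

```python
from typing import Optional

EMOTIONS = ("happy", "neutral", "frustrated")  # hypothetical shared label set


def late_fusion(modality_probs: dict[str, Optional[dict[str, float]]],
                weights: dict[str, float]) -> dict[str, float]:
    """Decision-level fusion: weighted average of per-modality emotion probabilities.

    Modalities with no output (None) are skipped and the remaining weights are
    renormalized, so the fused scores still sum to 1.
    """
    available = {m: p for m, p in modality_probs.items() if p is not None}
    total_weight = sum(weights[m] for m in available)
    if total_weight == 0:
        raise ValueError("no modality produced an estimate")
    return {
        emotion: sum(weights[m] * probs[emotion] for m, probs in available.items()) / total_weight
        for emotion in EMOTIONS
    }


fused = late_fusion(
    {
        "face": {"happy": 0.6, "neutral": 0.3, "frustrated": 0.1},
        "voice": {"happy": 0.2, "neutral": 0.5, "frustrated": 0.3},
        "gesture": None,  # e.g., hands not tracked in this frame
    },
    weights={"face": 0.6, "voice": 0.4, "gesture": 0.2},
)
label = max(fused, key=fused.get)  # most probable emotion after fusion
print(label, {e: round(p, 2) for e, p in fused.items()})
```

Late fusion is easy to extend with new modalities (such as haptic input) without retraining the others, although it cannot exploit fine-grained timing between signals the way feature-level fusion can.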

In summary, achieving natural human-robot interaction during cognitive training requires not only multimodal sensing technology and artificial intelligence (e.g., deep learning) (Jing et al., 2015; Lopez-Samaniego and Garcia-Zapirain, 2016; Pierson and Gashler, 2017) but also progress in related fields (Wan et al., 2020), such as cognitive computing (Chen et al., 2018), social-cognitive mechanisms (Wiltshire et al., 2017), and modeling of cognitive architectures (Kotseruba et al., 2016; Woo et al., 2017).

5. Conclusion

Robot-assisted cognitive training is becoming a promising and affordable option for people with cognitive disabilities. In this paper, we present a systematic review of the current applications, enabling technologies, and main commercial robots in the field of robot-assisted cognitive training. Many studies have successfully evaluated the feasibility, safety, usability, and effectiveness of robotic rehabilitation. However, existing studies often have small sample sizes, and questionnaires need to be standardized to evaluate both the overall experience with the robot and the robot's impact on the specific cognitive ability it targets. Multifaceted challenges remain in the application of robots to cognitive training. First, ethical issues, such as human safety and violation of social norms, can arise during robot-assisted cognitive training. Second, developers of robot-assisted cognitive training systems should collaborate closely with end users and other stakeholders throughout initial design, implementation, evaluation, and improvement. Third, trust, reliability, and cost-effectiveness should be taken into account. Moreover, rehabilitation robots should be capable of adapting to and being personalized for specific individual needs, and should also learn to collaborate with users. Recent advances in social cognition research may facilitate human-robot interaction during cognitive training. Lastly, rehabilitation robots should be able to interact with users naturally, similar to human-human interaction during cognitive training. Notably, these challenges influence one another, and cross-disciplinary collaboration is necessary to address them in the future.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Author Contributions

FY, RL, and XZ determined the review scope and review strategies. FY, EK, and ZL conducted the searching and screening of the literature, and reviewing of the selected articles. FY, EK, RL, and XZ wrote the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Ab Aziz, A., Ahmad, F., Yusof, N., Ahmad, F. K., and Yusof, S. A. M. (2015). “Designing a robot-assisted therapy for individuals with anxiety traits and states,” in 2015 International Symposium on Agents, Multi-Agent Systems and Robotics (ISAMSR) (Putrajaya: IEEE), 98–103. doi: 10.1109/ISAMSR.2015.7379778

Abdollahi, H., Mollahosseini, A., Lane, J. T., and Mahoor, M. H. (2017). “A pilot study on using an intelligent life-like robot as a companion for elderly individuals with dementia and depression,” in 2017 IEEE-RAS 17th International Conference on Humanoid Robotics (Humanoids) (Birmingham: IEEE), 541–546. doi: 10.1109/HUMANOIDS.2017.8246925

Adam, C., Johal, W., Pellier, D., Fiorino, H., and Pesty, S. (2016). “Social human-robot interaction: a new cognitive and affective interaction-oriented architecture,” in International Conference on Social Robotics (Springer), 253–263. doi: 10.1007/978-3-319-44884-8

Agrigoroaie, R., Ferland, F., and Tapus, A. (2016). “The ENRICHME project: lessons learnt from a first interaction with the elderly,” in International Conference on Social Robotics (Kansas City, MO: Springer), 735–745. doi: 10.1007/978-3-319-47437-3_72

Agrigoroaie, R. M., and Tapus, A. (2016). “Developing a healthcare robot with personalized behaviors and social skills for the elderly,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Christchurch: IEEE), 589–590. doi: 10.1109/HRI.2016.7451870

Ahn, H. S., Lee, M. H., Broadbent, E., and MacDonald, B. A. (2017). “Is entertainment services of a healthcare service robot for older people useful to young people?” in 2017 First IEEE International Conference on Robotic Computing (IRC) (Taichung: IEEE), 330–335. doi: 10.1109/IRC.2017.70

Alemi, M., Ghanbarzadeh, A., Meghdari, A., and Moghadam, L. J. (2016). Clinical application of a humanoid robot in pediatric cancer interventions. Int. J. Soc. Robot. 8, 743–759. doi: 10.1007/s12369-015-0294-y

Ali, S., Mehmood, F., Dancey, D., Ayaz, Y., Khan, M. J., Naseer, N., et al. (2019). An adaptive multi-robot therapy for improving joint attention and imitation of ASD children. IEEE Access 7, 81808–81825. doi: 10.1109/ACCESS.2019.2923678

Alimardani, M., and Hiraki, K. (2017). Development of a real-time brain-computer interface for interactive robot therapy: an exploration of EEG and EMG features during hypnosis. Int. J. Comput. Inform. Eng. 11, 187–195. doi: 10.5281/zenodo.1340062

Alnajjar, F., Cappuccio, M., Renawi, A., Mubin, O., and Loo, C. K. (2020). Personalized robot interventions for autistic children: an automated methodology for attention assessment. Int. J. Soc. Robot. 13, 67–82. doi: 10.1007/s12369-020-00639-8

Aminuddin, R., Sharkey, A., and Levita, L. (2016). “Interaction with the Paro robot may reduce psychophysiological stress responses,” in 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (Christchurch: IEEE), 593–594. doi: 10.1109/HRI.2016.7451872

Andriella, A., Suárez-Hernández, A., Segovia-Aguas, J., Torras, C., and Alenyà, G. (2019a). “Natural teaching of robot-assisted rearranging exercises for cognitive training,” in International Conference on Social Robotics (Madrid: Springer), 611–621. doi: 10.1007/978-3-030-35888-4_57

Andriella, A., Torras, C., and Alenyà, G. (2019b). “Learning robot policies using a high-level abstraction persona-behaviour simulator,” in 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN) (New Delhi: IEEE), 1–8. doi: 10.1109/RO-MAN46459.2019.8956357

Andriella, A., Torras, C., and Alenyà, G. (2020). Cognitive system framework for brain-training exercise based on human-robot interaction. Cogn. Comput. 12, 793–810. doi: 10.1007/s12559-019-09696-2

Andruseac, G. G., Adochiei, R. I., Păsărică, A., Adochiei, F. C., Corciovă, C., and Costin, H. (2015). “Training program for dyslexic children using educational robotics,” in 2015 E-Health and Bioengineering Conference (EHB) (Iasi: IEEE), 1–4. doi: 10.1109/EHB.2015.7391547

Ansado, J., Chasen, C., Bouchard, S., Iakimova, G., and Northoff, G. (2020). How brain imaging provides predictive biomarkers for therapeutic success in the context of virtual reality cognitive training. Neurosci. Biobehav. Rev. 120, 583–594. doi: 10.1016/j.neubiorev.2020.05.018

Arnold, T., and Scheutz, M. (2017). The tactile ethics of soft robotics: designing wisely for human–robot interaction. Soft Robot. 4, 81–87. doi: 10.1089/soro.2017.0032

Barco Martelo, A., and Fosch Villaronga, E. (2017). “Child-robot interaction studies: from lessons learned to guidelines,” in 3rd Workshop on Child-Robot Interaction, CRI 2017 (Vienna).

Beckerle, P., Salvietti, G., Unal, R., Prattichizzo, D., Rossi, S., Castellini, C., et al. (2017). A human-robot interaction perspective on assistive and rehabilitation robotics. Front. Neurorobot. 11:24. doi: 10.3389/fnbot.2017.00024

Begum, M., Serna, R. W., Kontak, D., Allspaw, J., Kuczynski, J., Yanco, H. A., et al. (2015). “Measuring the efficacy of robots in autism therapy: how informative are standard HRI metrics?” in Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction (Portland, OR), 335–342. doi: 10.1145/2696454.2696480

Bertani, R., Melegari, C., Maria, C., Bramanti, A., Bramanti, P., and Calabrò, R. S. (2017). Effects of robot-assisted upper limb rehabilitation in stroke patients: a systematic review with meta-analysis. Neurol. Sci. 38, 1561–1569. doi: 10.1007/s10072-017-2995-5

Bharatharaj, J., Huang, L., Mohan, R. E., Al-Jumaily, A., and Krägeloh, C. (2017). Robot-assisted therapy for learning and social interaction of children with autism spectrum disorder. Robotics 6:4. doi: 10.3390/robotics6010004

Biswas, M., and Murray, J. (2016). “The effects of cognitive biases in long-term human-robot interactions: case studies using three cognitive biases on MARC the humanoid robot,” in International Conference on Social Robotics (Kansas City, MO: Springer), 148–158. doi: 10.1007/978-3-319-47437-3_15

Biswas, M., and Murray, J. (2017). The effects of cognitive biases and imperfectness in long-term robot-human interactions: case studies using five cognitive biases on three robots. Cogn. Syst. Res. 43, 266–290. doi: 10.1016/j.cogsys.2016.07.007

Botvinick, M., Ritter, S., Wang, J. X., Kurth-Nelson, Z., Blundell, C., and Hassabis, D. (2019). Reinforcement learning, fast and slow. Trends Cogn. Sci. 23, 408–422. doi: 10.1016/j.tics.2019.02.006

Bozgeyikli, L., Bozgeyikli, E., Clevenger, M., Raij, A., Alqasemi, R., Sundarrao, S., et al. (2015). “VR4VR: vocational rehabilitation of individuals with disabilities in immersive virtual reality environments,” in Proceedings of the 8th ACM International Conference on PErvasive Technologies Related to Assistive Environments (Corfu), 1–4. doi: 10.1145/2769493.2769592

Calderita, L. V., Vega, A., Barroso-Ramírez, S., Bustos, P., and Núñez, P. (2020). Designing a cyber-physical system for ambient assisted living: a use-case analysis for social robot navigation in caregiving centers. Sensors 20:4005. doi: 10.3390/s20144005

Cangelosi, A., and Invitto, S. (2017). Human-robot interaction and neuroprosthetics: a review of new technologies. IEEE Consum. Electron. Mag. 6, 24–33. doi: 10.1109/MCE.2016.2614423

Cao, H. L., Pop, C., Simut, R., Furnemónt, R., De Beir, A., Van de Perre, G., et al. (2015). “Probolino: a portable low-cost social device for home-based autism therapy,” in International Conference on Social Robotics (Paris: Springer), 93–102. doi: 10.1007/978-3-319-25554-5_10

Carros, F., Meurer, J., Löffler, D., Unbehaun, D., Matthies, S., Koch, I., et al. (2020). “Exploring human-robot interaction with the elderly: results from a ten-week case study in a care home,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI), 1–12. doi: 10.1145/3313831.3376402

Casey, D., Felzmann, H., Pegman, G., Kouroupetroglou, C., Murphy, K., Koumpis, A., et al. (2016). “What people with dementia want: designing MARIO an acceptable robot companion,” in International Conference on Computers Helping People With Special Needs (Linz: Springer), 318–325. doi: 10.1007/978-3-319-41264-1_44

Castellini, C. (2016). “Incremental learning of muscle synergies: from calibration to interaction,” in Human and Robot Hands (Cham: Springer), 171–193. doi: 10.1007/978-3-319-26706-7_11

Chen, H., Zhu, H., Teng, Z., and Zhao, P. (2020). Design of a robotic rehabilitation system for mild cognitive impairment based on computer vision. J. Eng. Sci. Med. Diagn. Ther. 3:021108. doi: 10.1115/1.4046396

Chen, M., Herrera, F., and Hwang, K. (2018). Cognitive computing: architecture, technologies and intelligent applications. IEEE Access 6, 19774–19783. doi: 10.1109/ACCESS.2018.2791469

Chevallier, C., Kohls, G., Troiani, V., Brodkin, E. S., and Schultz, R. T. (2012). The social motivation theory of autism. Trends Cogn. Sci. 16, 231–239. doi: 10.1016/j.tics.2012.02.007

Chu, M. T., Khosla, R., Khaksar, S. M. S., and Nguyen, K. (2017). Service innovation through social robot engagement to improve dementia care quality. Assist. Technol. 29, 8–18. doi: 10.1080/10400435.2016.1171807

Chumkamon, S., Hayashi, E., and Koike, M. (2016). Intelligent emotion and behavior based on topological consciousness and adaptive resonance theory in a companion robot. Biol. Inspir. Cogn. Architect. 18, 51–67. doi: 10.1016/j.bica.2016.09.004

Clabaugh, C. E., Mahajan, K., Jain, S., Pakkar, R., Becerra, D., Shi, Z., et al. (2019). Long-term personalization of an in-home socially assistive robot for children with autism spectrum disorders. Front. Robot. AI 6:110. doi: 10.3389/frobt.2019.00110

Coeckelbergh, M., Pop, C., Simut, R., Peca, A., Pintea, S., David, D., et al. (2016). A survey of expectations about the role of robots in robot-assisted therapy for children with ASD: ethical acceptability, trust, sociability, appearance, and attachment. Sci. Eng. Ethics 22, 47–65. doi: 10.1007/s11948-015-9649-x

Conti, D., Di Nuovo, S., Buono, S., Trubia, G., and Di Nuovo, A. (2015). “Use of robotics to stimulate imitation in children with autism spectrum disorder: a pilot study in a clinical setting,” in 2015 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (Kobe: IEEE), 1–6. doi: 10.1109/ROMAN.2015.7333589

Costescu, C. A., Vanderborght, B., and David, D. O. (2015). Reversal learning task in children with autism spectrum disorder: a robot-based approach. J. Autism Dev. Disord. 45, 3715–3725. doi: 10.1007/s10803-014-2319-z

D'Amico, A., and Guastella, D. (2019). The robotic construction kit as a tool for cognitive stimulation in children and adolescents: the RE4BES protocol. Robotics 8:8. doi: 10.3390/robotics8010008

Darragh, M., Ahn, H. S., MacDonald, B., Liang, A., Peri, K., Kerse, N., et al. (2017). Homecare robots to improve health and well-being in mild cognitive impairment and early stage dementia: results from a scoping study. J. Am. Med. Dir. Assoc. 18:1099-e1. doi: 10.1016/j.jamda.2017.08.019

David, D. O., Costescu, C. A., Matu, S., Szentagotai, A., and Dobrean, A. (2018). Developing joint attention for children with autism in robot-enhanced therapy. Int. J. Soc. Robot. 10, 595–605. doi: 10.1007/s12369-017-0457-0

David, D. O., Costescu, C. A., Matu, S., Szentagotai, A., and Dobrean, A. (2020). Effects of a robot-enhanced intervention for children with ASD on teaching turn-taking skills. J. Educ. Comput. Res. 58, 29–62. doi: 10.1177/0735633119830344

de Graaf, M. M., Allouch, S. B., and Van Dijk, J. (2015). “What makes robots social? A user's perspective on characteristics for social human-robot interaction,” in International Conference on Social Robotics (Paris: Springer), 184–193. doi: 10.1007/978-3-319-25554-5_19

Demetriadis, S., Tsiatsos, T., Sapounidis, T., Tsolaki, M., and Gerontidis, A. (2016). “Exploring the potential of programming tasks to benefit patients with mild cognitive impairment,” in Proceedings of the 9th ACM International Conference on PErvasive Technologies Related to Assistive Environments (Corfu), 1–4. doi: 10.1145/2910674.2935850

Dickerson, B. C. (2015). Dysfunction of social cognition and behavior. Continuum 21:660. doi: 10.1212/01.CON.0000466659.05156.1d

Doraiswamy, P. M., London, E., Varnum, P., Harvey, B., Saxena, S., Tottman, S., et al. (2019). Empowering 8 Billion Minds: Enabling Better Mental Health for All via the Ethical Adoption of Technologies. Washington, DC: NAM Perspectives.

Dragone, M., Amato, G., Bacciu, D., Chessa, S., Coleman, S., Di Rocco, M., et al. (2015). A cognitive robotic ecology approach to self-configuring and evolving AAL systems. Eng. Appl. Artif. Intell. 45, 269–280. doi: 10.1016/j.engappai.2015.07.004

Dubois, M., Claret, J. A., Basañez, L., and Venture, G. (2016). “Influence of emotional motions in human-robot interactions,” in International Symposium on Experimental Robotics (Tokyo: Springer), 799–808. doi: 10.1007/978-3-319-50115-4_69

Esteban, P. G., Baxter, P., Belpaeme, T., Billing, E., Cai, H., Cao, H. L., et al. (2017). How to build a supervised autonomous system for robot-enhanced therapy for children with autism spectrum disorder. Paladyn J. Behav. Robot. 8, 18–38. doi: 10.1515/pjbr-2017-0002

Fiske, A., Henningsen, P., and Buyx, A. (2019). Your robot therapist will see you now: ethical implications of embodied artificial intelligence in psychiatry, psychology, and psychotherapy. J. Med. Internet Res. 21:e13216. doi: 10.2196/13216

Frith, C. D., and Frith, U. (1999). Interacting minds-a biological basis. Science 286, 1692–1695. doi: 10.1126/science.286.5445.1692

Garcia-Sanjuan, F., Jaen, J., and Nacher, V. (2017). Tangibot: a tangible-mediated robot to support cognitive games for ageing people—a usability study. Perv. Mob. Comput. 34, 91–105. doi: 10.1016/j.pmcj.2016.08.007

Gerling, K., Hebesberger, D., Dondrup, C., Körtner, T., and Hanheide, M. (2016). Robot deployment in long-term care. Z. Gerontol. Geriatr. 49, 288–297. doi: 10.1007/s00391-016-1065-6

Hajat, C., and Stein, E. (2018). The global burden of multiple chronic conditions: a narrative review. Prev. Med. Rep. 12, 284–293. doi: 10.1016/j.pmedr.2018.10.008

Haring, K. S., Silvera-Tawil, D., Watanabe, K., and Velonaki, M. (2016). “The influence of robot appearance and interactive ability in HRI: a cross-cultural study,” in International Conference on Social Robotics (Kansas City, MO: Springer), 392–401. doi: 10.1007/978-3-319-47437-3_38

Hartson, R., and Pyla, P. S. (2018). The UX Book: Agile UX Design for a Quality User Experience. Cambridge, MA: Morgan Kaufmann.

Havins, W. N., Massman, P. J., and Doody, R. (2012). Factor structure of the geriatric depression scale and relationships with cognition and function in Alzheimer's disease. Dement. Geriatr. Cogn. Disord. 34, 360–372. doi: 10.1159/000345787

Heins, S., Dehem, S., Montedoro, V., Dehez, B., Edwards, M., Stoquart, G., et al. (2017). “Robotic-assisted serious game for motor and cognitive post-stroke rehabilitation,” in 2017 IEEE 5th International Conference on Serious Games and Applications for Health (SeGAH) (Perth, WA: IEEE), 1–8. doi: 10.1109/SeGAH.2017.7939262

Henschel, A., Hortensius, R., and Cross, E. S. (2020). Social cognition in the age of human-robot interaction. Trends Neurosci. 43, 373–384. doi: 10.1016/j.tins.2020.03.013

Huijnen, C. A., Lexis, M. A., and de Witte, L. P. (2016). Matching robot KASPAR to autism spectrum disorder (ASD) therapy and educational goals. Int. J. Soc. Robot. 8, 445–455. doi: 10.1007/s12369-016-0369-4

Huskens, B., Palmen, A., Van der Werff, M., Lourens, T., and Barakova, E. (2015). Improving collaborative play between children with autism spectrum disorders and their siblings: the effectiveness of a robot-mediated intervention based on Lego® therapy. J. Autism Dev. Disord. 45, 3746–3755. doi: 10.1007/s10803-014-2326-0

Ioannou, A., Kartapanis, I., and Zaphiris, P. (2015). “Social robots as co-therapists in autism therapy sessions: a single-case study,” in International Conference on Social Robotics (Paris: Springer), 255–263. doi: 10.1007/978-3-319-25554-5_26

Javed, H., Cabibihan, J. J., Aldosari, M., and Al-Attiyah, A. (2016). “Culture as a driver for the design of social robots for autism spectrum disorder interventions in the Middle East,” in International Conference on Social Robotics (Kansas City, MO: Springer), 591–599. doi: 10.1007/978-3-319-47437-3_58

Javed, H., Jeon, M., and Park, C. H. (2018). “Adaptive framework for emotional engagement in child-robot interactions for autism interventions,” in 2018 15th International Conference on Ubiquitous Robots (UR) (Honolulu, HI: IEEE), 396–400. doi: 10.1109/URAI.2018.8441775

Jing, H., Lun, X., Dan, L., Zhijie, H., and Zhiliang, W. (2015). Cognitive emotion model for eldercare robot in smart home. China Commun. 12, 32–41. doi: 10.1109/CC.2015.7114067

Johal, W., Calvary, G., and Pesty, S. (2015). “Non-verbal signals in HRI: interference in human perception,” in International Conference on Social Robotics (Paris: Springer), 275–284. doi: 10.1007/978-3-319-25554-5_28

Jones, A., Küster, D., Basedow, C. A., Alves-Oliveira, P., Serholt, S., Hastie, H., et al. (2015). “Empathic robotic tutors for personalised learning: a multidisciplinary approach,” in International Conference on Social Robotics (Paris: Springer), 285–295. doi: 10.1007/978-3-319-25554-5_29