First- and Third-Person Perspectives in Immersive Virtual Environments: Presence and Performance Analysis of Embodied Users

- ¹LAMPA, Arts et Métiers ParisTech, Présence et Innovation, Angers, France

- ²DICEN-IDF, Conservatoire National des Arts et Métiers, Paris, France

Current design of virtual reality (VR) applications relies essentially on the transposition of users’ viewpoint in first-person perspective (1PP). Within this context, our research aims to compare the impact and the potentialities enabled via the integration of the third-person perspective (3PP) in immersive virtual environments (IVE). Our empirical study is conducted in order to assess the sense of presence, the sense of embodiment, and performance of users confronted with a series of tasks presenting a case of potential use for the video game industry. Our results do not reveal significant differences concerning the sense of spatial presence with either point of view. Nonetheless, they provide evidence confirming the relevance of using the first-person perspective to induce a sense of embodiment toward a virtual body, especially in terms of self-location and ownership. However, no significant differences were observed concerning the sense of agency. Concerning users’ performance, our results demonstrate that the first-person perspective enables more accurate interactions, while the third-person perspective provides better space awareness.

1. Introduction

The sense of presence and the sense of embodiment are essential components of user experience in immersive virtual environments. For years, these concepts have been the focus of numerous investigations conducted by researchers from different disciplinary fields, such as computer science, psychology, or even neuroscience. While no consensus has been reached on the matter, it is nevertheless possible to identify three constitutive dimensions of the embodiment process in IVE (Kilteni et al., 2012): the sense of self-location, the sense of agency, and the sense of ownership of the virtual body. Identifying the factors influencing these components is an essential step in order to allow the development of applications exploiting the potential of virtual reality technologies.

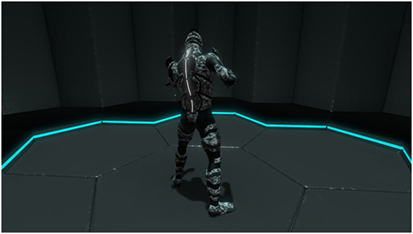

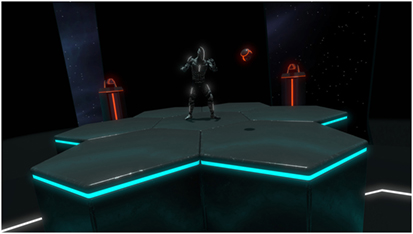

Current research has identified many factors affecting the level of technological immersion (Slater and Wilbur, 1997; Slater, 1999; Cummings and Bailenson, 2016) of a VR setup, such as field of view, tracking level, or stereoscopy. However, studies concerning the viewpoint and its consequences in terms of embodiment have reached different conclusions (Slater et al., 2010; Petkova et al., 2011; Maselli and Slater, 2013; Debarba et al., 2015). Some argue that the first-person perspective is the most suitable condition to induce a high sense of embodiment (Slater et al., 2010; Petkova et al., 2011; Maselli and Slater, 2013), while others do not observe significant differences between the two viewpoint modes (Debarba et al., 2015). Moreover, only a few studies analyze the relation between viewpoint and performance in IVE (Salamin et al., 2010; Covaci et al., 2014; Debarba et al., 2015). The purpose of our study is to contribute to an improved understanding of the embodiment process in IVE by focusing on a use case close to what the video game industry could offer (Figure 1) thanks to the increasing spread of VR technology. More specifically, the research presented here aims to determine the impact and the potentialities offered by the use of different viewpoints in IVE: first- and third-person perspective (Figures 2 and 3). The analysis of these modalities is carried out taking into account the sense of presence, the sense of embodiment, and the users’ performance.

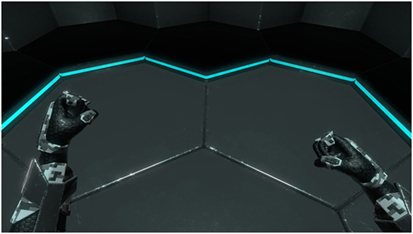

Figure 2. Virtual character seen from a first-person perspective (1PP) during the appropriation phase and controlled by the users thanks to the motion capture suit.

Figure 3. Virtual character seen from a third-person perspective (3PP) during the appropriation phase and controlled by the users thanks to the motion capture suit.

The first line of our research consists in determining the impact of the viewpoint on two dimensions of the sense of presence: spatial presence and self-presence (Lee, 2004); the latter including the notion of embodiment. In this context, the users control an avatar thanks to a real-time motion capture system that enables the transposition of their movements within the virtual environment. The subjective assessment of the notions of presence and embodiment is based on a questionnaire and post-experiment interviews.

The second line of our study focuses on the consequences of the viewpoint notion for users’ capabilities to perceive, navigate, and interact. These criteria are assessed in an objective way by recording users’ performance data in the VR application. These data are also compared with the subjective results obtained through the questionnaire and the post-experiment interviews.

The next section presents a state of the art concerning the notions of presence, embodiment, and viewpoints in immersive virtual environments. Section 3 presents the experiment and the protocol. Results are analyzed in Section 4 and implications are discussed in Section 5. Section 6 concludes the study, and future work is outlined in Section 7.

2. Related Work

2.1. Presence and Embodiment

Presence is a complex notion studied in many disciplinary fields and not limited to virtual environments. Literally, the definition of presence refers to the fact, for someone or something, of being physically in a certain place, as opposed to absence. It is necessary to consider the sense of presence as oscillating between “primary reality,” reality in which we live every day, and “mediated reality,” the virtual reality in our research context. Studying the sense of presence, thus, consists of trying to determine by what reality the user is mainly affected. The concept of presence was initially defined as the subjective feeling of “being there” (Heeter, 1992; Sheridan, 1992; Slater et al., 1994), or later as the illusion of non-mediation (Lombard and Ditton, 1997). More recently, Lee (2004) proposed a categorization consisting of three dimensions:

• Spatial (or physical) presence.

• Self-presence.

• Social presence.

As our research does not focus on the relationship between social actors [avatars, agents (Fox et al., 2015)], we focus on the notions of spatial presence and self-presence.

Spatial presence has been the topic of much research since the introduction of the presence concept. Although it was not initially formulated in this way, the majority of the conducted studies dealt with the relationship between users and the elements constituting the virtual spaces. Lee (2004) proposes the following definition:

Psychological state in which virtual (para-authentic or artificial) physical objects are experienced as actual physical objects in either sensory or non-sensory ways.

The term “object” includes the general constitution of the environment, with the exception of the representation of the user and the social entities.

Self-presence was initially introduced to describe the effect of the virtual environment on the body perception, the psychological and emotional state, the perceived traits, and identity (Biocca, 1997). This definition has been later extended to various mediums (Lee, 2004), no longer confining this notion to immersive virtual environments:

Psychological state in which virtual (para-authentic or artificial) self/selves are experienced as the actual self in either sensory or non-sensory ways.

According to these definitions, the sense of embodiment is, therefore, intrinsically linked to the notion of self-presence. Biocca (1997) emphasizes here the importance of the body in the process of self-identification, pointing out three entities to consider in the embodiment process in virtual environments:

• The objective body: physical body of the user.

• The virtual body: representation of the user’s body within the virtual environment.

• The body schema: mental model of the user’s body.

According to this theory, the success of the embodiment process in virtual environments depends on users’ ability to transfer their body schemata from their objective bodies to the virtual bodies. Thus, the sense of embodiment manifests itself when the properties of a virtual body are treated as those of the biological body (Kilteni et al., 2012). Three dimensions are identified as constitutive of the sense of embodiment.

The sense of self-location, corresponding to a determinate volume in space where the user feels located. Several phenomena can affect this sense of location. Studies performed on out-of-body experiences have demonstrated that synchronous visuotactile stimulations induce a localization conflict (Ehrsson, 2007; Lenggenhager et al., 2007). According to previous research (Petkova and Ehrsson, 2008; Slater et al., 2010; Debarba et al., 2015), the viewpoint also has a determining impact on this notion in IVE.

The sense of agency, defined as the sensation of “global motor control, including the subjective experience of action, control, intention, motor selection and the conscious experience of will” (Blanke and Metzinger, 2009). This feeling, therefore, requires a correlation between the intention of the subject and the action resulting from it within the virtual environment. Thus, the tracking of users’ movements induces a high sense of agency (Caspar et al., 2015). However, it should be noted that recent studies on this topic demonstrate that it is possible to experience such a feeling toward a virtual body perceived from a first-person perspective, whether or not the action is performed directly by the subjects (Kokkinara et al., 2016; Nagamine et al., 2016).

The sense of ownership, referring to one’s self-attribution of a body (Kilteni et al., 2012). The emergence of this feeling is influenced by different factors, such as morphological similarities or by the spatial correlation of the body (Argelaguet et al., 2016). This phenomenon was initially observed with the rubber hand illusion paradigm (RHI) introduced by Botvinick and Cohen (1998). In this experiment, it was demonstrated that visuotactile synchronous stimulations between an artificial hand and the real hand of a subject enable the induction of a sense of ownership of the artificial limb.

This paradigm, extended to virtual environments, demonstrates the possibility of experiencing a sense of ownership toward a virtual body. Many studies highlight various factors inducing this feeling, such as visuotactile synchrony (Slater et al., 2008; Normand et al., 2011; Kokkinara and Slater, 2014) or visuomotor synchrony (Sanchez-Vives et al., 2010; Kokkinara and Slater, 2014). It turns out that proprioceptive feeling associated with movement enhances immersion (Slater et al., 2009). Furthermore, it has been demonstrated that visuomotor synchrony plays a predominant role in the identification process between the user and the avatar (Usoh et al., 1999; Debarba et al., 2015). Therefore, the use of a real-time motion capture system can enable the emergence of a sense of ownership of the virtual body (Kokkinara and Slater, 2014).

Previous research corroborates these findings (Slater et al., 2010; Maselli and Slater, 2013), emphasizing a predominance in favor of the first-person perspective in the process of embodiment and ownership of a virtual body. These findings contrast with the analysis carried out by Debarba et al. (2015) from subjective measurements, which does not reveal any major difference in the sense of embodiment between the two viewpoint modes. The authors explain these results by the high level of involvement induced by the proposed task, which demands engagement and, therefore, a higher cognitive load from the subjects.

2.2. Viewpoints and Performance

Despite divergent results, the aforementioned literature reveals that the viewpoint notion can affect the sense of presence and the sense of embodiment. However, few studies consider the potentialities of this modality on users’ performance in immersive virtual environments.

In the field of non-immersive video games, there are multiple ways of managing the viewpoint, which stems from the position of the camera within the virtual environment. See Taylor (2002) for a synthesis of the viewpoints most commonly used by video game developers. For our study, we focus on the use of the first- and the third-person perspectives (Figures 2 and 3). Video game theories (Rouse, 1999; Taylor, 2002) agree that the third-person perspective potentially increases the awareness of the virtual space, since players perceive the avatar acting within the environment, but with consequences for the immersion of the players. These assumptions were later corroborated by Denisova and Cairns (2015).

In the field of virtual reality, the studies by Salamin et al. (2006, 2010) are a first step toward assessing the performance related to the use of the third-person perspective via a headset displaying the video stream of a camera located behind the user. The results of this research tend to demonstrate a better ability with the first-person perspective when interacting with static objects, especially if the latter are occluded by the user’s body. On the other hand, perception and navigation could be facilitated by the third-person perspective. The partial transposition of these analyses to IVE (Boulic et al., 2009; Debarba et al., 2015) corroborates the results concerning the perception of the environment during interactions with static elements. Although subjects are less accurate when reaching for objects, the third-person perspective makes it easier for them to detect elements located in the periphery of the field of view.

Research on immersive environments has repeatedly demonstrated that distances are underestimated (Knapp and Loomis, 2004; Mohler et al., 2008), which must be taken into account in order to obtain coherent navigation and interactions. Although the evolution of virtual reality headsets (Creem-Regehr et al., 2015) and the use of avatars (Mohler et al., 2010) reduce this underestimation, some studies propose effective corrective solutions (Kelly et al., 2014). Therefore, we integrate an appropriation phase in our protocol, which should clearly reduce this distance underestimation.

In conclusion, our literature review highlighted some divergences concerning the viewpoint impact on the sense of embodiment in virtual environments. However, the first studies considering the effect of the viewpoint on users’ performance in IVE identify some potential benefits that suggest new use cases in the field of virtual reality. Our experiment is, therefore, based on an application that makes it possible to identify the situations benefiting from the contributions of two different viewpoints (1PP/3PP) in immersive virtual environments.

3. Material and Methods

The aim of the proposed experiment is to observe and compare the impact of different viewpoints in immersive environments on the sense of presence, the sense of embodiment, and users’ performance in terms of perception, navigation, and interaction.

The virtual reality application developed for this experiment (Figure 1) proposes two viewpoint conditions: first-person (1PP) and third-person (3PP) perspective (Figures 2 and 3). The scenario consists of a first task designed to analyze the subjects’ capabilities to perceive and interact with moving elements. It should be noted that the sound effects in the IVE are not spatialized, so that auditory perception does not bias the assessment of visual perception. The second task allows the assessment of the participants’ effectiveness in terms of navigation and accurate interactions. During this experiment, the users are equipped with a head-mounted display and a motion capture suit to control the avatar within the virtual environment.

The sense of presence and the sense of embodiment are assessed subjectively thanks to a questionnaire and post-experiment interviews. The feelings of the subjects concerning their performance related to the different viewpoint modes are also collected through a questionnaire in order to be confronted with the objective data measured by the application.

3.1. VR Application

The application used for the experiment was developed using the real-time 3D engine Unity 3D. The proposed environment draws its inspiration from the science fiction universe (Figure 1), frequently used in the production of video game content and presenting a potential use case. This kind of environment gives credibility to the different tasks proposed by the implemented scenario, which aims at maximizing the users’ involvement. This universe anchors the avatar as well as the drone, responsible for providing the instructions during the appropriation phase, in a coherent whole. The virtual environment consists of a training room, necessary for the appropriation phase (Kelly et al., 2014). A platform then elevates the subject to access the arena where the tasks take place. This arena consists of hexagonal platforms that can dynamically modulate the environment according to the actions to be undertaken. Moreover, the graphic atmosphere is globally dark and minimalist but highlighted by the use of lights, leading to an immediate identification of the tasks to be carried out, in order to maximize the affordance of the environment. Indeed, the colors of some elements adapt to the proposed interactions, which enable the subject to quickly distinguish a hostile element (orange) from a neutral element (white/blue).

The classical integration of the virtual reality headset induces a colocalized vision between the subject and the avatar within the IVE for the first-person perspective condition (Figure 2). On the other hand, for the third-person perspective condition (Figure 3), the virtual camera is placed 3 m behind the avatar enabling the subject to perceive the entirety of the virtual body. The rotation of the camera, centered around the character, is proportional to the rotation of the subject’s head.
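The 3PP camera placement described above can be illustrated with a minimal sketch. It is not the application's code (which was built in Unity); it assumes a y-up coordinate system, an avatar facing +z at a yaw of 0°, a camera kept at the height of the pivot, and a proportionality gain of 1 between head yaw and camera orbit. The function and parameter names are hypothetical.

```python
import math

def third_person_camera(avatar_pos, head_yaw_deg, distance=3.0, gain=1.0):
    """Return the (x, y, z) position of a camera orbiting the avatar.

    avatar_pos   -- (x, y, z) pivot the camera rotates around (y is up)
    head_yaw_deg -- yaw of the user's head in degrees, 0 = facing +z
    distance     -- orbit radius; the text states 3 m behind the avatar
    gain         -- assumed proportionality factor between head and camera yaw
    """
    yaw = math.radians(gain * head_yaw_deg)
    ax, ay, az = avatar_pos
    # "Behind" is opposite to the viewing direction (sin(yaw), 0, cos(yaw)).
    cam_x = ax - distance * math.sin(yaw)
    cam_z = az - distance * math.cos(yaw)
    # Camera height is kept equal to the pivot height (an assumption).
    return (cam_x, ay, cam_z)
```

With this convention, the camera always stays 3 m from the avatar, and turning the head by a given angle swings the camera around the character by `gain` times that angle.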

3.2. Apparatus

The HTC Vive virtual reality headset is used to display the virtual environment with a resolution of 2,160 × 1,200 pixels (1,080 × 1,200 pixels per eye), a field of view of 110° and a refresh rate of 90 Hz. The motion capture, which enables the subjects to control the avatar in real time, is performed by the Perception Neuron wireless suit composed of 32 inertial sensors (3-axis gyroscopes, 3-axis accelerometers, and 3-axis magnetometers) clocked at 60 Hz (Figure 4). This suit captures and replicates all body movements, fingers included.

Figure 4. User equipped with the required devices for the experiment: HTC Vive virtual reality headset, Perception Neuron motion capture suit.

The computer running the application is equipped with an Intel Core I7-6700HQ @ 2.60 GHz processor and a Nvidia GeForce GTX 1070 graphics card. The computer is installed on a platform suspended from the ceiling of the experiment room, enabling access to a navigation space of approximately 35 m². Placing the computer at the center of the area rather than on the periphery potentially doubles the accessible space, which is limited by the length of the headset wire.

3.3. Participants

Thirty-one subjects were recruited for our experiment. Three of them were excluded from our results due to calibration issues that required interrupting the experiment. Consequently, 28 male subjects (aged from 20 to 26, M = 22.46, SD = 1.53) were considered. All subjects had normal vision or vision corrected by glasses that could be worn with the VR headset without discomfort. All subjects had used an immersive virtual reality system at least once and had experience with video games, including first- and third-person games.

We chose to recruit only male participants with similar backgrounds for this study to ensure the variable control and the motion capture stability. Moreover, the male character design of our experiment could potentially influence the sense of embodiment of female participants. Nevertheless, it could be interesting to investigate the impact of gender on the sense of embodiment in future work.

3.4. Procedure

First, each subject completes a pre-experiment profile questionnaire. Second, the user is fitted with the equipment required for the experiment and receives the necessary instructions. Once the subject is equipped and calibrated, the operator initializes the application and launches the scenario consisting of three phases:

• Appropriation.

• Task 1: perception and deflection of projectiles.

• Task 2: navigation and interaction with terminals.

The appropriation phase is carried out prior to the successive tasks. The participant performs two immersion sessions, one for each viewpoint mode (1PP/3PP) following a within-subject design, and completes the questionnaire after each session. The order of the conditions is counterbalanced to limit the learning phenomenon. The whole process takes approximately 60 min, including 15 min of immersion within the virtual environment. Then, a semi-structured interview is conducted to collect the users’ impressions of the experiment.

3.4.1. Appropriation

The appropriation phase allows the subject to understand how the application works and to become accustomed to the two viewpoint modes. Moreover, in order to adjust depth perception, which is often compressed in IVE (Knapp and Loomis, 2004), this phase allows the user to navigate within the virtual environment. Indeed, this perception bias can be partially corrected by the implementation of a repetitive traveling task which consists of reaching a target (Kelly et al., 2014), here materialized by a drone. At the initialization of the appropriation phase, the drone gives the user the necessary instructions. It moves five times in the room and asks the subject to reach its position, allowing the latter to appropriate the space. Finally, the user is asked to reach the central platform giving access to the arena where the tasks of the scenario take place.

3.4.2. Task 1: Perception and Deflection of Projectiles

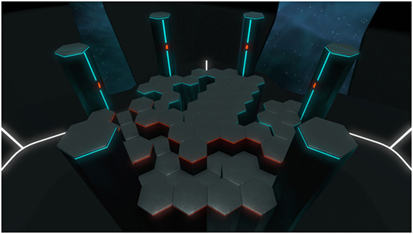

The first task assesses the subject’s perception of the environment as well as the capacity of interaction with moving elements depending on the viewpoint used. The participant must deflect a series of 30 spheres (20 cm diameter) coming from launchers positioned at the six corners of the hexagonal arena (Figure 5). The spheres are launched at a fixed frequency of one every 4 s (15 spheres per minute). The selection of the launcher is defined dynamically according to the orientation of the subject to ensure that five projectiles are emitted for each of the six possible directions. During this phase, the subject is located on a virtual platform of approximately 2.5 m². As mentioned earlier, because audio content could provide useful spatial information, the sound effects in the IVE are not spatialized, so that only visual perception is assessed.
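The launch logic described above can be sketched as follows. This is only one possible reading of the description: it assumes that the six directions are defined relative to the subject's heading in 60° steps, that launcher k sits at a bearing of k × 60°, and that the order of directions is shuffled. None of these details, nor the names used, come from the application itself.

```python
import random

N_LAUNCHERS = 6            # one launcher per corner of the hexagonal arena
SPHERES_PER_DIRECTION = 5  # 6 directions x 5 spheres = 30 spheres
LAUNCH_INTERVAL_S = 4.0    # one sphere every 4 s, i.e. 15 per minute

def make_schedule(seed=None):
    """Return a list of (launch_time_s, relative_direction) pairs.

    relative_direction is an index 0..5 counted in 60-degree steps from
    the direction the subject is facing (an assumed encoding).
    """
    rng = random.Random(seed)
    directions = list(range(N_LAUNCHERS)) * SPHERES_PER_DIRECTION
    rng.shuffle(directions)  # shuffled order is an assumption
    return [(i * LAUNCH_INTERVAL_S, d) for i, d in enumerate(directions)]

def pick_launcher(relative_direction, subject_yaw_deg):
    """Map a relative direction to a launcher index at launch time."""
    facing_sector = round((subject_yaw_deg % 360) / 60) % N_LAUNCHERS
    return (facing_sector + relative_direction) % N_LAUNCHERS
```

Resolving the launcher only at launch time keeps the count of five spheres per relative direction fixed regardless of how the subject turns during the task.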

Figure 5. Configuration of the virtual arena during the first task: perception and deflection of projectiles.

3.4.3. Task 2: Navigation and Interaction with Terminals

The second task assesses the navigation ability and the effectiveness of the subject during accurate interactions depending on the viewpoint used. To achieve this, the platforms composing the virtual environment are modulated in order to define the path to be taken (Figure 6). The user must reach the end of the first three sections of the path without falling from the platforms, which are surrounded by a void. The three following sections have borders with which the subject must avoid colliding. The access to each section is triggered by an accurate interaction consisting of activating a terminal located at the end of each section. This interaction consists of holding the avatar’s hand over the hand print of the terminal for 1 s.
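The 1 s hand-over-print interaction can be sketched as a simple dwell timer. The class below is a hypothetical illustration, not the application's implementation; in particular, resetting the timer when the hand leaves the print is an assumption.

```python
class DwellTrigger:
    """Fires once a condition has held continuously for `hold_s` seconds."""

    def __init__(self, hold_s=1.0):
        self.hold_s = hold_s
        self._since = None      # time at which the hand entered the print
        self.activated = False

    def update(self, hand_on_print, now_s):
        """Feed one frame; returns True once the terminal is activated."""
        if self.activated:
            return True
        if not hand_on_print:
            self._since = None  # leaving the print resets the timer (assumed)
            return False
        if self._since is None:
            self._since = now_s
        if now_s - self._since >= self.hold_s:
            self.activated = True
        return self.activated
```

Calling `update` once per frame with the current overlap state and timestamp is enough; the trigger stays activated once it has fired.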

Figure 6. Configuration of the virtual arena during the second task: navigation and interaction with terminals.

3.5. Measures

Our measures are based on three kinds of data in order to obtain as much information as possible concerning the notions of presence, embodiment, and performance.

Objective performance data are collected in a CSV file (Comma-Separated Values). This file contains the following information:

• Provenance and number of deviated spheres (DS). Each deviation is counted if the subject manages to deflect the projectile with the hands or the arms.

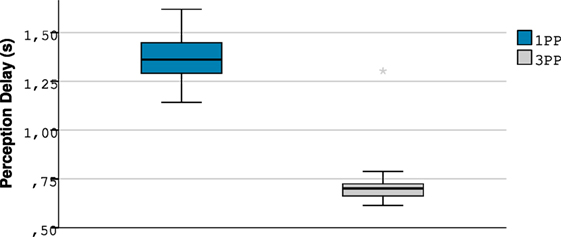

• Perception delay (PD) of the projectiles. This information is used to analyze the perception capacity of the subjects. It corresponds to the duration between the launch of a sphere and its detection by the subject. However, it should be noted that, in the absence of eye tracking, a projectile is considered as perceived as soon as it enters the field of view of the VR headset.

• Navigation duration (ND) for each section. This period of time corresponds to the duration between the activation of the traveled section and the activation of the next one.

• Interaction duration (ID) with the terminals. This period of time corresponds to the duration between the access to the interaction area and the activation of the terminal.
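As an illustration of the perception delay measure listed above, the following sketch treats a projectile as perceived at the first frame in which it falls inside the headset's field of view. Modeling the 110° field of view as a symmetric cone around the head's forward vector is a simplifying assumption, and the function names and frame format are hypothetical.

```python
import math

def in_field_of_view(head_pos, head_forward, target_pos, fov_deg=110.0):
    """True if target lies within a cone of fov_deg around head_forward."""
    to_target = [t - h for t, h in zip(target_pos, head_pos)]
    norm_t = math.sqrt(sum(c * c for c in to_target))
    norm_f = math.sqrt(sum(c * c for c in head_forward))
    if norm_t == 0 or norm_f == 0:
        return False
    dot = sum(a * b for a, b in zip(to_target, head_forward))
    cos_angle = dot / (norm_t * norm_f)
    return cos_angle >= math.cos(math.radians(fov_deg / 2))

def perception_delay(launch_time_s, frames, fov_deg=110.0):
    """Return the time from launch to the first frame with the sphere in view.

    frames -- iterable of (time_s, head_pos, head_forward, sphere_pos),
              ordered by time; returns None if the sphere is never seen.
    """
    for time_s, head_pos, head_forward, sphere_pos in frames:
        if time_s < launch_time_s:
            continue
        if in_field_of_view(head_pos, head_forward, sphere_pos, fov_deg):
            return time_s - launch_time_s
    return None
```

Because the test only checks whether the sphere is inside the view cone, it measures when the projectile could be seen, not when the subject actually noticed it, which matches the limitation stated in the text.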

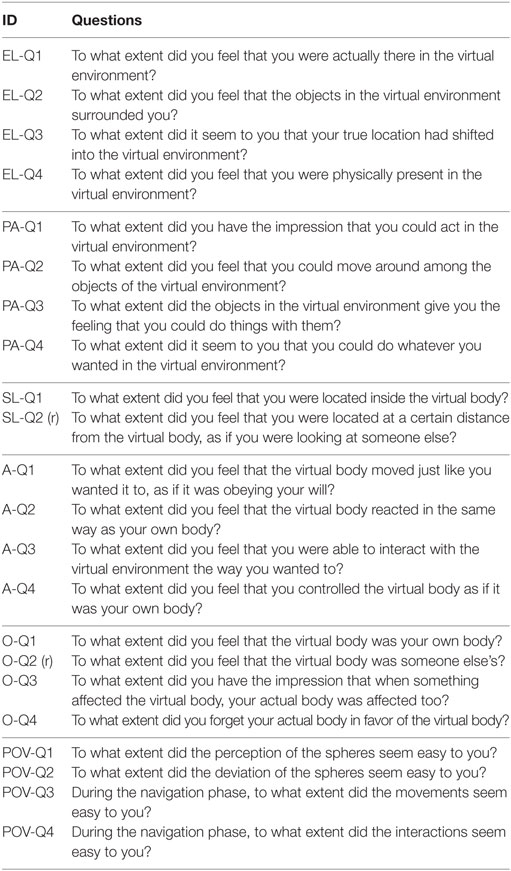

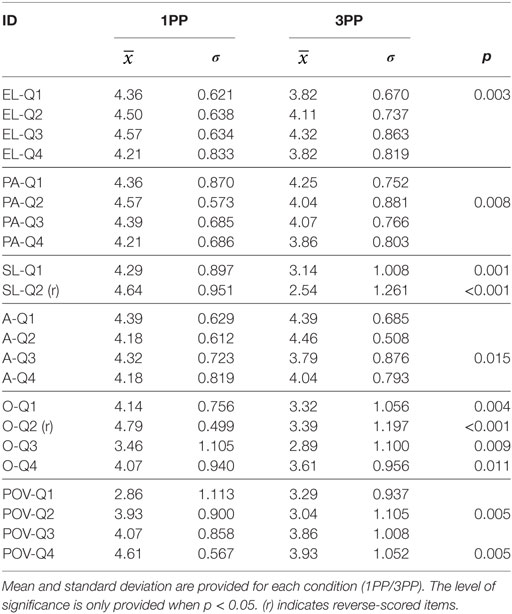

The post-experiment questionnaire, completed by the subjects after each session, collects the subjective feeling in terms of presence, embodiment, and ease with the different viewpoint modes. It consists of five-point semantic scale items divided into three dimensions (Table 1):

• Spatial presence, composed of eight items (α = 0.81) divided into two factors: environmental location (EL) and possible actions (PA). The items are essentially adapted from the MEC-SPQ (Vorderer et al., 2004) translated into French.

• Embodiment, composed of 10 items (α = 0.84) divided into three factors: self-location (SL), sense of agency (A) and sense of ownership (O). The items are mainly based on the works of Debarba et al. (2015) and Argelaguet et al. (2016).

• Point of view (POV) consisting of four independent items assessing the ease of each subject with the different viewpoint conditions when facing the proposed tasks.

Table 1. Post experiment questionnaire composed of three dimensions: spatial presence (Environmental Location—EL, Possible Actions—PA), embodiment (Self-Location—SL, Agency—A, Ownership—O), point of view (POV).

Cronbach’s alpha coefficient was calculated considering the data collected under one of the two experiment conditions (1PP) to check the internal consistency of the questionnaire which was found to be highly reliable (α > 0.8 for each dimension).
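For reference, Cronbach’s alpha for k items is α = k/(k − 1) · (1 − Σ item variances / variance of the total score). The sketch below computes it with the standard library, using sample variances; the function name and the input layout (one list of item ratings per respondent) are assumptions made for illustration and say nothing about the software actually used for the analysis.

```python
from statistics import variance

def cronbach_alpha(scores):
    """Cronbach's alpha for `scores`, a list of respondents' item ratings.

    Each respondent is a list of k ratings (k >= 2), one per item.
    """
    k = len(scores[0])
    items = list(zip(*scores))                      # per-item columns
    item_var = sum(variance(col) for col in items)  # sum of item variances
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var / total_var)
```

For example, two items that always receive identical ratings yield α = 1, while weakly related items pull the coefficient toward 0.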

The semi-structured interview concluding the experiment lets the subjects express themselves freely about the experiment. It allows a semantic analysis that identifies recurring statements, and it provides pertinent observations within the scope of our research to illustrate our findings.

3.6. Hypotheses

H1: The viewpoint used in IVE impacts the sense of presence (H1.1) and the sense of embodiment (H1.2).

H2: Third-person perspective in IVE improves the users’ space awareness and the perception of the virtual environment surrounding their avatars.

H3: Third-person perspective in IVE facilitates interactions with moving elements thanks to a better perception of their trajectories.

H4: The viewpoint used in IVE impacts the navigation effectiveness.

H5: First-person perspective in IVE improves the effectiveness of accurate interactions thanks to a better perception of the arms and the hands of the users’ avatars.

4. Results

The Shapiro–Wilk Test was carried out to check the normality of the distributions of the answers to the post experiment questionnaire (Table 2) as well as the objective data collected by the application. As the variables did not follow a normal distribution (p < 0.05 for all tested variables), we chose non-parametric tests. Thus, the Wilcoxon Signed-Ranks Test was used to compare objective performance data and the subjective answers depending on the two viewpoint conditions (1PP/3PP); results are considered significant when p < 0.05. Furthermore, the Mann–Whitney U Test did not reveal any order effect for the four objective performance measures considered.
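Since the results below are reported as Z statistics, a minimal sketch of the Wilcoxon signed-rank test under the normal approximation may help to read them. It is a textbook formulation written with the standard library, not the procedure of any particular statistics package: zero differences are dropped, tied absolute differences share their average rank, the variance includes the usual tie correction, and no continuity correction is applied. These choices are assumptions and may differ from the software used for the analysis.

```python
import math

def wilcoxon_signed_rank_z(x, y):
    """Return (W_plus, z, two_sided_p) for paired samples x and y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1       # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1                    # size of this tie group
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return w_plus, z, p
```

Here `x` and `y` would hold the paired 1PP and 3PP values of one measure for the same subjects; a positive z indicates higher values in `x`.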

Table 2. Statistical summary of the answers to the post experiment questionnaire presenting each factor of the three dimensions: spatial presence (Environmental Location—EL, Possible Actions—PA), embodiment (Self-Location—SL, Agency—A, Ownership—O), point of view (POV).

4.1. Presence

The first part of the analysis concerns the notions of presence and embodiment addressed by the first two dimensions of the questionnaire (Tables 1 and 2) and the post-experiment interviews.

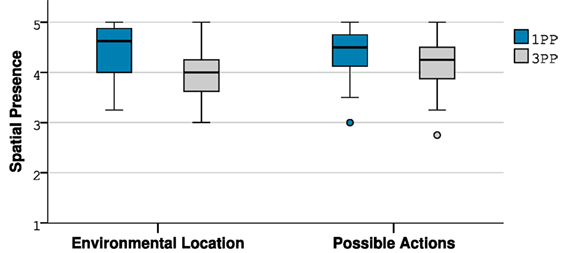

4.1.1. Spatial Presence

The assessment of the questions related to spatial presence of the environmental location factor (EL-Q1—EL-Q4) does not present significant differences between the two viewpoint modes, except for the first item (EL-Q1) (Z = 2.995; p = 0.003) in favor of the 1PP. The possible actions factor (PA-Q1—PA-Q4) also reveals only one significant difference for the second item (PA-Q2) (Z = 2.639; p = 0.008) in favor of the 1PP.

According to our results, the viewpoint impact on spatial presence is very limited, which invalidates H1.1. However, the near-maximum values for both first- and third-person perspectives indicate that neither modality prevents the induction of a high sense of spatial presence.

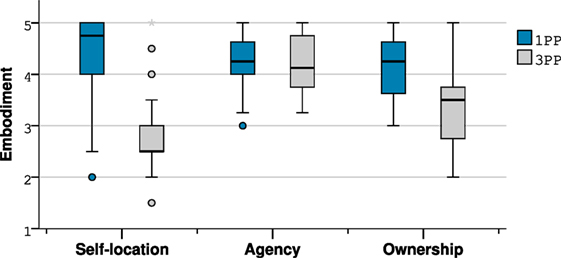

4.1.2. Embodiment

The assessment of the questions related to the self-location factor (SL-Q1 and SL-Q2) is significantly higher in 1PP: SL-Q1 (Z = 3.274; p = 0.001), SL-Q2 (Z = 3.849; p < 0.001). Concerning the agency factor, item 1 (A-Q1) does not present any significant difference. We find that the results of the second item (A-Q2) are higher in 3PP while those of items 3 and 4 (A-Q3, A-Q4) are higher in 1PP. However, only the difference observed in the third item proves to be significant, in favor of the 1PP (Z = 2.430; p = 0.015). Finally, the results concerning the sense of ownership factor (O-Q1 to O-Q4) demonstrate that the 1PP condition is significantly superior to the 3PP condition for the four considered questions: O-Q1 (Z = 2.898; p = 0.004), O-Q2 (Z = 3.970; p < 0.001), O-Q3 (Z = 2.631; p = 0.009), O-Q4 (Z = 2.555; p = 0.011).

The analysis of the embodiment dimension demonstrates that the 1PP positively and significantly affects self-location and ownership factors, thus confirming H1.2. However, the agency factor does not seem to be impacted by the viewpoint condition (Figure 8).

4.2. Performance

The second part of the analysis concerns the subjects’ performance in terms of perception, interaction, and navigation. The objective data are recorded by the application and the subjective data come from the post-experiment questionnaire and from the semi-structured interviews.

4.2.1. Task 1: Perception and Deflection of Projectiles

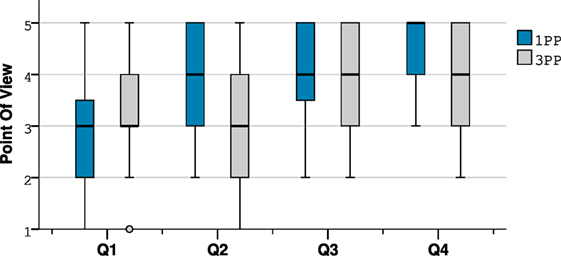

The point of view dimension of the post-experiment questionnaire consists of four independent questions (POV-Q1—POV-Q4) assessing the ease of each subject with the different viewpoint conditions. The higher the results on the five-point scale, the easier the tasks for the subjects.

The assessment of the first item (POV-Q1) concerning moving elements perception capability does not reveal any significant difference between the viewpoint modes. However, there is a trend in favor of the 3PP. Moreover, during the interview, the majority of the subjects (N = 23) say that they found the 3PP more suitable for projectile perception. This trend is confirmed by the objective data concerning performance with a significantly higher perception delay in 1PP (Z = 4.541; p < 0.001). The average perception time is 1.37 s (σ = 0.119) in 1PP, against 0.72 s (σ = 0.124) in 3PP (Figure 10). The trend identified in the questionnaire in favor of the 3PP as well as the interviews and the objective measures concerning the recorded perception times demonstrate the validity of H2.

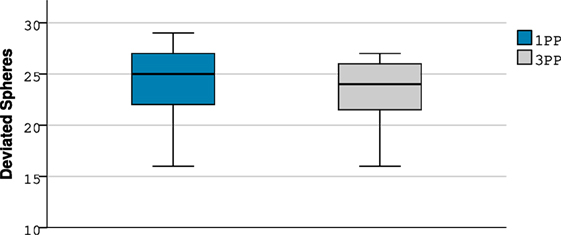

The results of the second item (POV-Q2) concerning interactions with moving elements (projectile deviation) are significantly higher in 1PP (Z = 2.821; p = 0.005). However, the difference in the number of deviated projectiles is not significant. Participants deviated, on average, 24.39 (σ = 3.107) spheres in 1PP against 23.19 (σ = 3.259) in 3PP (Figure 11). Therefore, the results do not allow us to validate H3.

4.2.2. Task 2: Navigation and Interaction with Terminals

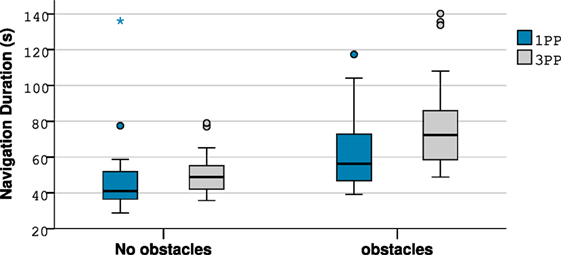

Concerning navigation, the subjective data do not present any significant difference between the viewpoint modes according to the assessment of the third item (POV-Q3). The post-experiment interviews confirm the divergence of opinion, some subjects considering the 1PP more suitable (N = 16), others the 3PP (N = 10). However, the total travel time during the no obstacle (NO) and obstacle (O) phases is significantly higher in 3PP: NO (Z = 2.018; p = 0.044), O (Z = 3.267; p < 0.001). The average obstacle-free navigation duration is 46.68 s (σ = 20.37) in 1PP and 50.72 s (σ = 11.96) in 3PP (Figure 12). The average navigation duration with obstacles is 64.34 s (σ = 26.33) in 1PP and 77.56 s (σ = 25.67) in 3PP. In light of the subjective data, we can neither confirm nor reject H4. However, objective performance in terms of navigation duration gives an advantage to the 1PP condition.

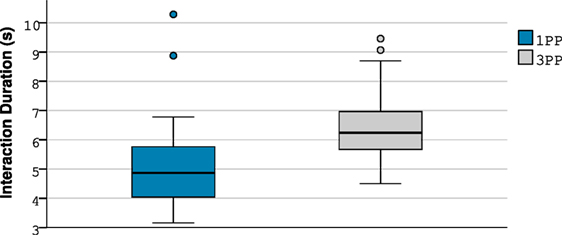

The assessment of the last item (POV-Q4) concerning accurate interactions is significantly higher in 1PP (Z = 2.828; p = 0.005). This finding is corroborated by the higher average interaction time in 3PP (Z = 3.796; p < 0.001). This duration is measured at 5.12 s (σ = 1.56) in 1PP and 6.56 s (σ = 1.33) in 3PP. Thus, the subjective and objective data corroborate H5 and demonstrate that accurate interactions are conducted more effectively under the 1PP condition.
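The reported p-values can be cross-checked against the reported Z statistics. The sketch below assumes that each p-value is the two-sided tail probability of a standard normal distribution, which this section does not state explicitly. The helper name is hypothetical; the (Z, p) pairs are copied from the performance results above.

```python
import math


def two_sided_p_from_z(z):
    """Two-sided p-value for a standard normal test statistic z."""
    return math.erfc(abs(z) / math.sqrt(2.0))


# (Z, reported p) pairs taken from the performance results.
reported = {
    "POV-Q2 (interactions with moving elements)": (2.821, 0.005),
    "POV-Q4 (accurate interactions)": (2.828, 0.005),
    "Travel time, no obstacle": (2.018, 0.044),
}

for label, (z, p_reported) in reported.items():
    p = two_sided_p_from_z(z)
    print(f"{label}: Z = {z:.3f}, computed p = {p:.4f}, reported p = {p_reported}")
```

Under this assumption, the computed values fall within rounding distance of the reported ones, which is consistent with two-sided tests based on the normal approximation.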

5. Discussion

5.1. Presence and Embodiment

The results of our experiment concerning the impact of the viewpoint on spatial presence do not allow us to identify a real advantage for one condition over the other. Indeed, while we observe a slight tendency in favor of the first-person perspective for the two factors constituting this dimension (environmental location and possible actions), most results reveal no significant gap between 1PP and 3PP. However, it is interesting to note that under both conditions, our system induces a very high sense of spatial presence. Indeed, the mean results for each factor have near-maximum values on our scale (Figure 7). These findings suggest that it is possible for a subject to have the feeling of “being there” (place illusion), regardless of the viewpoint mode used in IVE. However, further research is needed to confirm this.

Our results concerning the sense of embodiment reveal significant differences on two of the factors constituting this dimension. Indeed, the 1PP has a more favorable impact than the 3PP on the sense of self-location (volume of space in which the user feels located) and the sense of ownership (one’s self-attribution of a body). Our literature review highlighted the divergence of studies investigating these notions. Our results corroborate some previous findings (Slater et al., 2010; Petkova et al., 2011; Maselli and Slater, 2013) but contrast with the study by Debarba et al. (2015), in which the viewpoint condition does not induce significant differences in the subjective evaluations of the senses of self-location and ownership, while we observe that the 1PP favors them both. This difference could potentially be related to the sensitivity of the metrics. Indeed, their questionnaire uses one item to assess each factor, while ours includes several. However, although the sense of ownership is objectively higher under the 1PP condition in our study, we note that it remains relatively high under the 3PP one. As shown by previous work (Kokkinara and Slater, 2014), the visuomotor synchrony enabled by motion capture can induce a strong sense of ownership of the avatar’s body. We also observed during the interviews that some subjects hesitated over the self-location questions. Indeed, a state of bilocation was mentioned several times, potentially explaining some exceptional values in our results (Figure 8):

It’s not the same perception. In the third-person condition we have more the feeling of directing a character, but we still remain completely “inside” because we see very well that we are moving and that the character responds well to what we are doing.

I felt like I was watching a movie and being the actor in it at the same time.

The sense of agency, on the other hand, does not present any notable difference between both first- and third-person perspectives. This sense seems to be very high for both conditions. This finding demonstrates that despite the relative difference concerning body ownership, the use of the third-person perspective appears to be consistent with the design of experiences inducing a sense of embodiment in virtual environments.

5.2. Performance

Our experiment also aims to assess viewpoint impact over users’ performance in terms of perception, navigation, and interaction. The objective quantitative results recorded by the application demonstrate an improved space awareness thanks to the use of the third-person perspective (Figure 9). More than two-thirds of the panel explain this improvement by the wider field of view enabling one to quickly perceive the elements coming from the periphery of the avatar. This observation corroborates the study by Debarba et al. (2015), supporting our hypothesis about the potential of perception induced by the third-person perspective. Perhaps an augmentation of the field of view of future virtual reality headsets could reduce the differences we observed between the two viewpoint conditions, but without letting users perceive their whole avatars acting in the virtual environment.

Figure 9. Boxplot of the questions of the viewpoint factor: perception (Q1), interactions (Q2), navigation (Q3), accurate interactions (Q4).

Concerning interactions with moving elements, our experiment does not allow us to conclude in favor of either viewpoint mode. Although the perception of the projectiles is improved under the 3PP condition, the number of deviated spheres remains roughly the same. However, the subjective data collected through the questionnaire and the interviews show that a majority of subjects consider the deviation to be easier under the first-person perspective condition. Here, the problems mentioned relate in particular to depth perception, which remains less precise despite the appropriation phase (Kelly et al., 2014). The occlusion generated by the virtual body of the avatar is also problematic for projectiles arriving from in front of the user. A similar phenomenon is observed during the second task. Indeed, our objective and subjective results demonstrate that subjects perform accurate interactions more effectively under the first-person perspective condition (Figure 12).

In terms of navigation, the recorded durations are, on average, lower with the first-person perspective (Figure 13). However, it is interesting to note that although the 1PP seems to be a more natural transposition of our viewpoint inside the virtual environment, we also observed a remarkable appropriation of the potentialities offered by the 3PP by some subjects:

In third-person, in my head, I was the camera, […] I will try to place it where I can, in order to improve the vision to see where I put my feet and my hands …

Indeed, controlling the viewpoint in third-person perspective via the user’s head movements makes it possible to position the camera above the character in order to perceive the environment near the user’s avatar. Eight subjects used this strategy during the obstacle navigation phase. However, one-third of the users emphasized the need for the appropriation phase, which gave them the time to adapt so that the experiment could run properly:

There is a real progression from the beginning to the end of the experiment […]. As a tool, we start to master it and we see what we can do with it (3PP).
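The head-driven third-person viewpoint described above, including the strategy of raising the camera above the character, can be sketched as an orbit camera whose position on a sphere around the avatar follows the head orientation. This is only an illustration under assumptions: the function name, the axis convention (y up), the fixed orbit radius, and the pitch-to-height mapping are hypothetical and are not taken from the application used in the study.

```python
import math


def third_person_camera(pivot, head_yaw, head_pitch, distance):
    """Place an orbit camera around `pivot`, given as (x, y, z) with y up.

    head_yaw and head_pitch are in radians. Looking down (positive pitch)
    raises the camera; at 90 degrees it sits directly above the pivot.
    Returns (camera_position, unit_view_direction), where the view
    direction points from the camera toward the pivot.
    """
    cos_pitch = math.cos(head_pitch)
    # Offset from the pivot to the camera, on a sphere of radius `distance`.
    offset = (
        -math.sin(head_yaw) * cos_pitch * distance,
        math.sin(head_pitch) * distance,
        -math.cos(head_yaw) * cos_pitch * distance,
    )
    position = tuple(p + o for p, o in zip(pivot, offset))
    view_direction = tuple(-o / distance for o in offset)
    return position, view_direction


# Hypothetical pivot at the avatar's chest, 1 m above the ground.
behind, _ = third_person_camera((0.0, 1.0, 0.0), 0.0, 0.0, 2.0)
above, _ = third_person_camera((0.0, 1.0, 0.0), 0.0, math.pi / 2, 2.0)
print("Camera behind the avatar:", behind)
print("Camera above the avatar:", above)
```

With this mapping, a user who tilts the head downward moves the camera toward a top-down view of the avatar's immediate surroundings, which corresponds to the obstacle navigation strategy reported by the subjects.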

Finally, in order to ensure the usability of the third-person perspective integration as implemented in our application, we questioned the subjects during the interviews about any discomfort they may have experienced. Indeed, the mismatch between the vision and the vestibular system of the user can potentially induce cybersickness. No subject reported any discomfort during the experiment. This information is essential for the work undertaken here, as it underlines the viability of both viewpoints with our setup in immersive virtual environments.

5.3. Critical Analysis

Taking into account the findings of our study, we are considering some adjustments to the third-person perspective integration in IVE in order to maximize its usability. We intend to test the fading of the elements coming between the camera and the avatar instead of the dynamic distance adaptation of the viewpoint. Although the level design of our virtual environment rarely confronts the users with this phenomenon, this optimization seems necessary for an optimal user experience. Indeed, although the current integration is commonly used in the field of video games, some elements have to be redesigned for virtual reality.
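The fading approach mentioned above can be sketched as follows: an element is treated as occluding when it intersects the segment between the camera and the avatar, and its opacity is then moved gradually toward a lower target value. This is a minimal sketch under assumptions, not the planned implementation: occluders are approximated by bounding spheres, and the function names, fade speed, and minimum opacity are hypothetical.

```python
import math


def closest_point_on_segment(a, b, p):
    """Return the point of the segment from a to b that is closest to p."""
    ab = tuple(bj - aj for aj, bj in zip(a, b))
    denom = sum(c * c for c in ab)
    if denom == 0.0:
        return a  # degenerate segment: camera and avatar coincide
    t = sum((pj - aj) * c for aj, pj, c in zip(a, p, ab)) / denom
    t = max(0.0, min(1.0, t))  # clamp so the point stays on the segment
    return tuple(aj + t * c for aj, c in zip(a, ab))


def occludes(camera, avatar, center, radius):
    """True if a bounding sphere crosses the camera-to-avatar line of sight."""
    closest = closest_point_on_segment(camera, avatar, center)
    return math.dist(closest, center) < radius


def step_alpha(alpha, is_occluding, dt, fade_speed=4.0, min_alpha=0.2):
    """Move the opacity toward its target by at most fade_speed * dt."""
    target = min_alpha if is_occluding else 1.0
    step = fade_speed * dt
    if alpha > target:
        return max(target, alpha - step)
    return min(target, alpha + step)


# Hypothetical scene: one pillar on the line of sight, one off to the side.
camera, avatar = (0.0, 2.0, -3.0), (0.0, 1.0, 0.0)
pillars = {"blocking": ((0.0, 1.5, -1.5), 0.3), "aside": ((2.0, 1.0, 0.0), 0.5)}
for name, (center, radius) in pillars.items():
    hidden = occludes(camera, avatar, center, radius)
    print(name, "occludes:", hidden, "next alpha:", step_alpha(1.0, hidden, 0.1))
```

Compared with moving the camera closer to the avatar, fading keeps the viewpoint stable, at the cost of one occlusion test per candidate element on each frame.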

6. Conclusion

The presented study allows the identification of the impacts and potentialities induced by the use of both first- and third-person perspectives in immersive virtual environments. Therefore, it contributes to the exploration and the understanding of the mechanisms of presence, embodiment, and performance inherent to virtual reality.

Our results demonstrate that although the feeling of spatial presence is high under both viewpoint conditions, two of the three factors constituting the sense of embodiment are impacted. Indeed, the first-person perspective favors the sensation of being located in the virtual body as well as the sense of ownership of that body. However, although objectively lower under the third-person perspective condition, this sense of ownership remains relatively high under both viewpoint modes. This observation is potentially linked to visuomotor synchrony, identified by the literature as a preponderant factor of the sense of embodiment. Finally, we did not observe any significant differences concerning the sense of agency, the latter being very high regardless of which viewpoint is used.

Beyond the notions of presence and embodiment, we were able to observe and identify different situations suitable for the use of each viewpoint. Concerning space awareness and the capacity to perceive the environment, the results are significantly in favor of the 3PP. However, the more precision the proposed interactions require, the more the 1PP is favored by the subjects. Indeed, although the objective data do not make it possible to identify the more suitable modality for interactions with mobile elements, accurate interactions were found to be more effective under the 1PP condition. Finally, the assessment of navigation durations gives an advantage to the 1PP. However, the remarkable appropriation of the 3PP by the subjects allowed us to observe the use of new navigation strategies. It would be interesting to carry out a more detailed study on this aspect with more advanced users.

Finally, the analysis of the results in terms of presence, embodiment, and performance demonstrates that both first- and third-person perspectives seem consistent with the induction of a strong feeling of spatial presence. However, the first-person perspective remains the best condition to induce a sense of embodiment toward a virtual body. This viewpoint enables maximal inclusion of the user within the virtual environment, in particular thanks to the natural transposition of our perceptual mechanisms, and provides the most suitable conditions for accurate interactions. On the other hand, the third-person perspective improves space awareness and perception of the virtual environment, which must be considered when designing VR applications. We hope that the identification of the situations suited to each of the studied viewpoints will support the development of potential new use cases in this age of democratization of virtual reality.

7. Future Work

The results of our study demonstrate the applicability and the potential offered by using the third-person perspective in immersive virtual environments. Based on these findings, our next experiment will investigate the impact of the integration of users as avatars of themselves thanks to 3D reconstruction technologies. Our aim is to observe the consequences induced in terms of embodiment and involvement in order to identify the potential benefits of this ubiquitous situation.

Ethics Statement

The panel recruited for the proposed experiment consists of adult students from a virtual reality training curriculum who volunteered to participate. Participation in research activities is part of their educational program. The non-invasive devices integrated in our setup are regularly used by the subjects that we have solicited and are accessible to the general public. Moreover, our protocol excludes the collection of physiological data and preserves the anonymity of the subjects. During the development of our experiment, we also followed the recommendations formulated by Madary and Metzinger (2016), especially the principles of non-maleficence and informed consent.

Author Contributions

GG, OC, and SR conceived the study and the experimental protocol. GG, OC, and EA designed the metrics. GG developed the VR application. GG and OC ran the experiment and analyzed the objective and subjective data. GG and OC wrote the paper.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank our lab and its teams for giving us the opportunity to achieve our work. Thanks to all the people consulted and involved in both technical and scientific aspects. Finally, we thank the participants mobilized during the experiment for their availability and their investment.

References

Argelaguet, F., Hoyet, L., Trico, M., and Lecuyer, A. (2016). “The role of interaction in virtual embodiment: effects of the virtual hand representation,” in IEEE Virtual Reality (VR), Greenville, SC, 3–10.

Biocca, F. (1997). The Cyborg’s dilemma: progressive embodiment in virtual environments [1]. J. Comput. Mediated Commun. 3, 0–0. doi:10.1111/j.1083-6101.1997.tb00070.x

Blanke, O., and Metzinger, T. (2009). Full-body illusions and minimal phenomenal selfhood. Trends Cogn. Sci. 13, 7–13. doi:10.1016/j.tics.2008.10.003

Botvinick, M., and Cohen, J. (1998). Rubber hands ‘feel’ touch that eyes see. Nature 391, 756. doi:10.1038/35784

Boulic, R., Maupu, D., and Thalmann, D. (2009). On scaling strategies for the full-body postural control of virtual mannequins. Interact. Comput. 21, 11–25. doi:10.1016/j.intcom.2008.10.002

Caspar, E. A., Cleeremans, A., and Haggard, P. (2015). The relationship between human agency and embodiment. Conscious. Cogn. 33, 226–236. doi:10.1016/j.concog.2015.01.007

Covaci, A., Olivier, A.-H., and Multon, F. (2014). “Third person view and guidance for more natural motor behaviour in immersive basketball playing,” in Proceedings of the 20th ACM Symposium on Virtual Reality Software and Technology, VRST ’14 (New York, NY: ACM), 55–64.

Creem-Regehr, S. H., Stefanucci, J. K., Thompson, W. B., Nash, N., and McCardell, M. (2015). “Egocentric distance perception in the oculus rift (dk2),” in Proceedings of the ACM SIGGRAPH Symposium on Applied Perception, SAP ’15 (New York, NY: ACM), 47–50.

Cummings, J. J., and Bailenson, J. N. (2016). How immersive is enough? A meta-analysis of the effect of immersive technology on user presence. Media Psychol. 19, 272–309. doi:10.1080/15213269.2015.1015740

Debarba, H. G., Molla, E., Herbelin, B., and Boulic, R. (2015). “Characterizing embodied interaction in first and third person perspective viewpoints,” in IEEE Symposium on 3D User Interfaces (3DUI), Arles, 67–72.

Denisova, A., and Cairns, P. (2015). “First person vs. third person perspective in digital games: do player preferences affect immersion?” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, CHI ’15 (New York, NY: ACM), 145–148.

Ehrsson, H. H. (2007). The experimental induction of out-of-body experiences. Science 317, 1048–1048. doi:10.1126/science.1142175

Fox, J., Ahn, S. J. G., Janssen, J. H., Yeykelis, L., Segovia, K. Y., and Bailenson, J. N. (2015). Avatars versus agents: a meta-analysis quantifying the effect of agency on social influence. Hum. Comput. Interact. 30, 401–432. doi:10.1080/07370024.2014.921494

Heeter, C. (1992). Being there: the subjective experience of presence. Presence Teleoperators Virtual Environ. 1, 262–271. doi:10.1162/pres.1992.1.2.262

Kelly, J. W., Hammel, W. W., Siegel, Z. D., and Sjolund, L. A. (2014). Recalibration of perceived distance in virtual environments occurs rapidly and transfers asymmetrically across scale. IEEE Trans. Vis. Comput. Graph 20, 588–595. doi:10.1109/TVCG.2014.36

Kilteni, K., Groten, R., and Slater, M. (2012). The sense of embodiment in virtual reality. Presence Teleoperators Virtual Environ. 21, 373–387. doi:10.1162/PRES_a_00124

Knapp, J. M., and Loomis, J. M. (2004). Limited field of view of head-mounted displays is not the cause of distance underestimation in virtual environments. Presence Teleoperators Virtual Environ. 13, 572–577. doi:10.1162/1054746042545238

Kokkinara, E., Kilteni, K., Blom, K. J., and Slater, M. (2016). First person perspective of seated participants over a walking virtual body leads to illusory agency over the walking. Sci. Rep. 6, 28879. doi:10.1038/srep28879

Kokkinara, E., and Slater, M. (2014). Measuring the effects through time of the influence of visuomotor and visuotactile synchronous stimulation on a virtual body ownership illusion. Perception 43, 43–58. doi:10.1068/p7545

Lee, K. M. (2004). Presence, explicated. Commun. Theory 14, 27–50. doi:10.1111/j.1468-2885.2004.tb00302.x

Lenggenhager, B., Tadi, T., Metzinger, T., and Blanke, O. (2007). Video ergo sum: manipulating bodily self-consciousness. Science 317, 1096–1099. doi:10.1126/science.1143439

Lombard, M., and Ditton, T. (1997). At the heart of it all: the concept of presence. J. Comput. Mediated Commun. 3, 0–0. doi:10.1111/j.1083-6101.1997.tb00072.x

Madary, M., and Metzinger, T. K. (2016). Real virtuality: a code of ethical conduct. Recommendations for good scientific practice and the consumers of VR-technology. Front. Robot. AI 3:3. doi:10.3389/frobt.2016.00003

Maselli, A., and Slater, M. (2013). The building blocks of the full body ownership illusion. Front. Hum. Neurosci. 7:83. doi:10.3389/fnhum.2013.00083

Mohler, B. J., Bülthoff, H. H., Thompson, W. B., and Creem-Regehr, S. H. (2008). “A full-body avatar improves egocentric distance judgments in an immersive virtual environment,” in Proceedings of the 5th Symposium on Applied Perception in Graphics and Visualization, APGV ’08 (New York, NY: ACM), 194.

Mohler, B. J., Creem-Regehr, S. H., Thompson, W. B., and Bülthoff, H. H. (2010). The effect of viewing a self-avatar on distance judgments in an HMD-based virtual environment. Presence Teleoperators Virtual Environ. 19, 230–242. doi:10.1162/pres.19.3.230

Nagamine, S., Hayashi, Y., Yano, S., and Kondo, T. (2016). “An immersive virtual reality system for investigating human bodily self-consciousness,” in Fifth ICT International Student Project Conference (ICT-ISPC), Nakhon Pathom, 97–100.

Normand, J.-M., Giannopoulos, E., Spanlang, B., and Slater, M. (2011). Multisensory stimulation can induce an illusion of larger belly size in immersive virtual reality. PLoS ONE 6:e16128. doi:10.1371/journal.pone.0016128

Petkova, V., Khoshnevis, M., and Ehrsson, H. H. (2011). The perspective matters! Multisensory integration in ego-centric reference frames determines full-body ownership. Front. Psychol. 2:35. doi:10.3389/fpsyg.2011.00035

Petkova, V. I., and Ehrsson, H. H. (2008). If I were you: perceptual illusion of body swapping. PLoS ONE 3:e3832. doi:10.1371/journal.pone.0003832

Rouse, R. III (1999). What’s your perspective? ACM SIGGRAPH Comput. Graph. 33, 9–12. doi:10.1145/330572.330575

Salamin, P., Tadi, T., Blanke, O., Vexo, F., and Thalmann, D. (2010). Quantifying effects of exposure to the third and first-person perspectives in virtual-reality-based training. IEEE Trans. Learn. Technol. 3, 272–276. doi:10.1109/TLT.2010.13

Salamin, P., Thalmann, D., and Vexo, F. (2006). “The benefits of third-person perspective in virtual and augmented reality?” in Proceedings of the ACM Symposium on Virtual Reality Software and Technology, VRST ’06 (New York, NY: ACM), 27–30.

Sanchez-Vives, M. V., Spanlang, B., Frisoli, A., Bergamasco, M., and Slater, M. (2010). Virtual hand illusion induced by visuomotor correlations. PLoS ONE 5:e10381. doi:10.1371/journal.pone.0010381

Sheridan, T. B. (1992). Musings on telepresence and virtual presence. Presence Teleoperators Virtual Environ. 1, 120–126. doi:10.1162/pres.1992.1.1.120

Slater, M. (1999). Measuring presence: a response to the Witmer and Singer presence questionnaire. Presence Teleoperators Virtual Environ. 8, 560–565. doi:10.1162/105474699566477

Slater, M., Lotto, B., Arnold, M. M., and Sanchez-Vives, M. V. (2009). How we experience immersive virtual environments: the concept of presence and its measurement. Anuario de psicología/UB J. Psychol. 40, 193–210.

Slater, M., Pérez Marcos, D., Ehrsson, H., and Sanchez-Vives, M. (2008). Towards a digital body: the virtual arm illusion. Front. Hum. Neurosci. 2:6. doi:10.3389/neuro.09.006.2008

Slater, M., Spanlang, B., Sanchez-Vives, M. V., and Blanke, O. (2010). First person experience of body transfer in virtual reality. PLoS ONE 5:e10564. doi:10.1371/journal.pone.0010564

Slater, M., Usoh, M., and Steed, A. (1994). Depth of presence in virtual environments. Presence Teleoperators Virtual Environ. 3, 130–144. doi:10.1162/pres.1994.3.2.130

Slater, M., and Wilbur, S. (1997). A framework for immersive virtual environments (five): speculations on the role of presence in virtual environments. Presence Teleoperators Virtual Environ. 6, 603–616. doi:10.1162/pres.1997.6.6.603

Taylor, L. N. (2002). Video Games: Perspective, Point-of-View, and Immersion. Master’s thesis, University of Florida.

Usoh, M., Arthur, K., Whitton, M. C., Bastos, R., Steed, A., Slater, M., et al. (1999). “Walking > walking-in-place > flying, in virtual environments,” in Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, SIGGRAPH ’99 (New York, NY: ACM/Addison-Wesley Publishing Co), 359–364.

Keywords: virtual reality, first-person perspective, third-person perspective, presence, embodiment, performance

Citation: Gorisse G, Christmann O, Amato EA and Richir S (2017) First- and Third-Person Perspectives in Immersive Virtual Environments: Presence and Performance Analysis of Embodied Users. Front. Robot. AI 4:33. doi: 10.3389/frobt.2017.00033

Received: 04 April 2017; Accepted: 19 June 2017; Published: 17 July 2017

Edited by:

Maria V. Sanchez-Vives, Consorci Institut D’Investigacions Biomediques August Pi I Sunyer, Spain

Reviewed by:

Yiorgos L. Chrysanthou, University of Cyprus, Cyprus

John Quarles, University of Texas at San Antonio, United States

Xueni Pan, Goldsmiths, University of London, United Kingdom

Copyright: © 2017 Gorisse, Christmann, Amato and Richir. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Geoffrey Gorisse, geoffrey.gorisse@ensam.eu
