- Department of Experimental Clinical and Health Psychology, Ghent University, Gent, Belgium

In the Stroop task, participants identify the print color of color words. The congruency effect is the observation that response times and errors are increased when the word and color are incongruent (e.g., the word “red” in green ink) relative to when they are congruent (e.g., “red” in red). The proportion congruent (PC) effect is the finding that congruency effects are reduced when trials are mostly incongruent rather than mostly congruent. This PC effect can be context-specific. For instance, if trials are mostly incongruent when presented in one location and mostly congruent when presented in another location, the congruency effect is smaller for the former location. Typically, PC effects are interpreted in terms of strategic control of attention in response to conflict, termed conflict adaptation or conflict monitoring. In the present manuscript, however, an episodic learning account is presented for context-specific proportion congruent (CSPC) effects. In particular, it is argued that context-specific contingency learning can explain part of the effect, and context-specific rhythmic responding can explain the rest. Both contingency-based and temporal-based learning can parsimoniously be conceptualized within an episodic learning framework. An adaptation of the Parallel Episodic Processing model is presented. This model successfully simulates CSPC effects, both for contingency-biased and contingency-unbiased (transfer) items. The same fixed-parameter model can explain a range of other findings from the learning, timing, binding, practice, and attentional control domains.

Introduction

One of the main areas of interest in experimental psychology is how the cognitive system controls attention to maximize task performance (Treisman and Gelade, 1980; Miller and Cohen, 2001; Petersen and Posner, 2012). Consider the classic Stroop task (Stroop, 1935). Participants are much slower and more error prone to identify the color of color words on incongruent trials (e.g., the word “red” printed in blue) relative to congruent trials (e.g., “red” in red). The difference in performance between these two conditions is termed the congruency effect. Of course, the impairments on incongruent trials indicate a (partial) failure of selective attention. However, participants are reasonably accurate, indicating that participants are able to instantiate the goal to ignore the word and attend to the print color. In addition to strategic adjustments of attention at the start of an experiment in order to achieve task goals, the cognitive system might continue to adapt attentional control dynamically throughout a task. One particularly impactful idea, called the conflict monitoring (or conflict adaptation) account, is that attention is dynamically adjusted on a trial-by-trial basis in reaction to response conflict (Botvinick et al., 2001).

One of the main sources of evidence presented in support of the conflict monitoring account is the proportion congruent (PC) effect (Logan and Zbrodoff, 1979; Lowe and Mitterer, 1982; Logan et al., 1984). The PC effect is the observation that congruency effects are much diminished when trials are mostly incongruent (e.g., 75% incongruent, 25% congruent) relative to mostly congruent (e.g., 75% congruent, 25% incongruent). According to the conflict monitoring account, participants adapt to the frequent conflict in the mostly incongruent condition by decreasing attention to the distracting word (and/or increasing attention to the color). As such, the word has a reduced impact on color identification, thereby diminishing the congruency effect. In the mostly congruent condition, however, conflict is less frequent, and attention is, as a result, more “lazy.”

Though extremely popular, the conflict monitoring account has been contested on several fronts (for reviews, Schmidt, 2013b; Schmidt et al., 2015). For instance, Schmidt and Besner (2008; see also, Atalay and Misirlisoy, 2012; Grandjean et al., 2013; Schmidt, 2013a, 2014a, 2016a; Hazeltine and Mordkoff, 2014; Levin and Tzelgov, 2016) presented the argument that the (item-specific) PC effect might be due (primarily or even entirely) to the learning of contingencies (i.e., correlations) between color words and responses (for related ideas, see Logan et al., 1984; Mordkoff, 1996; Melara and Algom, 2003). For instance, in the mostly congruent condition, words are presented most often in their congruent color (e.g., “red” 75% of the time in red). As such, color words are strongly predictive of the congruent response, which benefits congruent trials. On incongruent trials (e.g., “red” in green), however, the word mispredicts the color response, resulting in a cost. The net result is an increased congruency effect. In the mostly incongruent condition, the situation is reversed. Depending on the exact manipulation, color words might be presented most often in a specific incongruent color (e.g., “green” most often in red, etc.). Thus, words are accurately predictive of the incongruent response, and mispredict a congruent response. The net effect is a reduced congruency effect. What is most interesting about the contingency learning account of the PC effect is that it is unrelated to conflict, control, or attention. The account argues that the learning of stimulus-response correspondences is all that matters.

Currently, it is general consensus that the majority of the PC effect in the prototypical paradigm is due to contingency learning. However, it is also clear that there are findings that cannot be explained by simple contingency learning, and debate still continues as to whether conflict monitoring produces these remaining components of the PC effect (Blais and Bunge, 2010; Bugg and Chanani, 2011; Bugg et al., 2011a,b; Atalay and Misirlisoy, 2012; Bugg and Hutchison, 2013; Grandjean et al., 2013; Schmidt, 2013a,c, 2014a,b, 2016a; Bugg, 2014, 2015; Hazeltine and Mordkoff, 2014; Levin and Tzelgov, 2016). For instance, consider the list-level PC effect. Some inducer items are manipulated for PC in separate groups of participants (e.g., “red” and “blue” mostly congruent for one group, and mostly incongruent for another), and these are intermixed with other (contingency-unbiased) diagnostic items (e.g., “green” and “yellow,” which have the same PC for both groups). A (list-level) PC effect is, in some cases, observed for the diagnostic items (e.g., Hutchison, 2011; Bugg, 2014; Wühr et al., 2015; cf., Bugg et al., 2008; Blais and Bunge, 2010). That is, the PC effect of the inducer items generalizes to the diagnostic items.

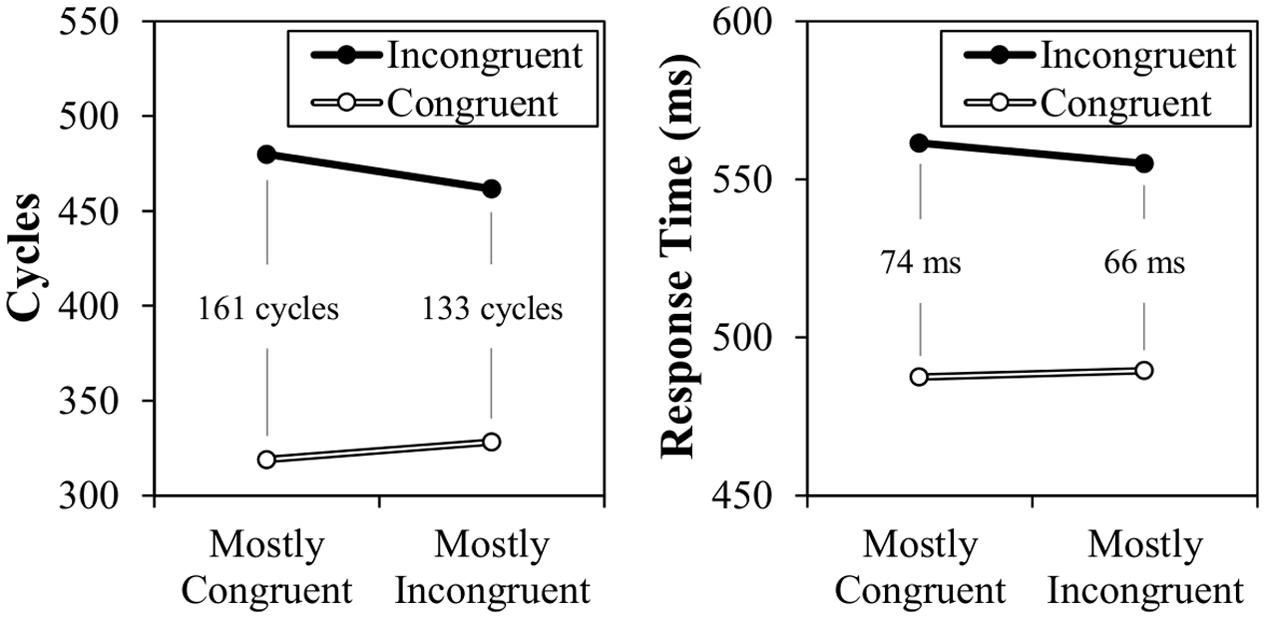

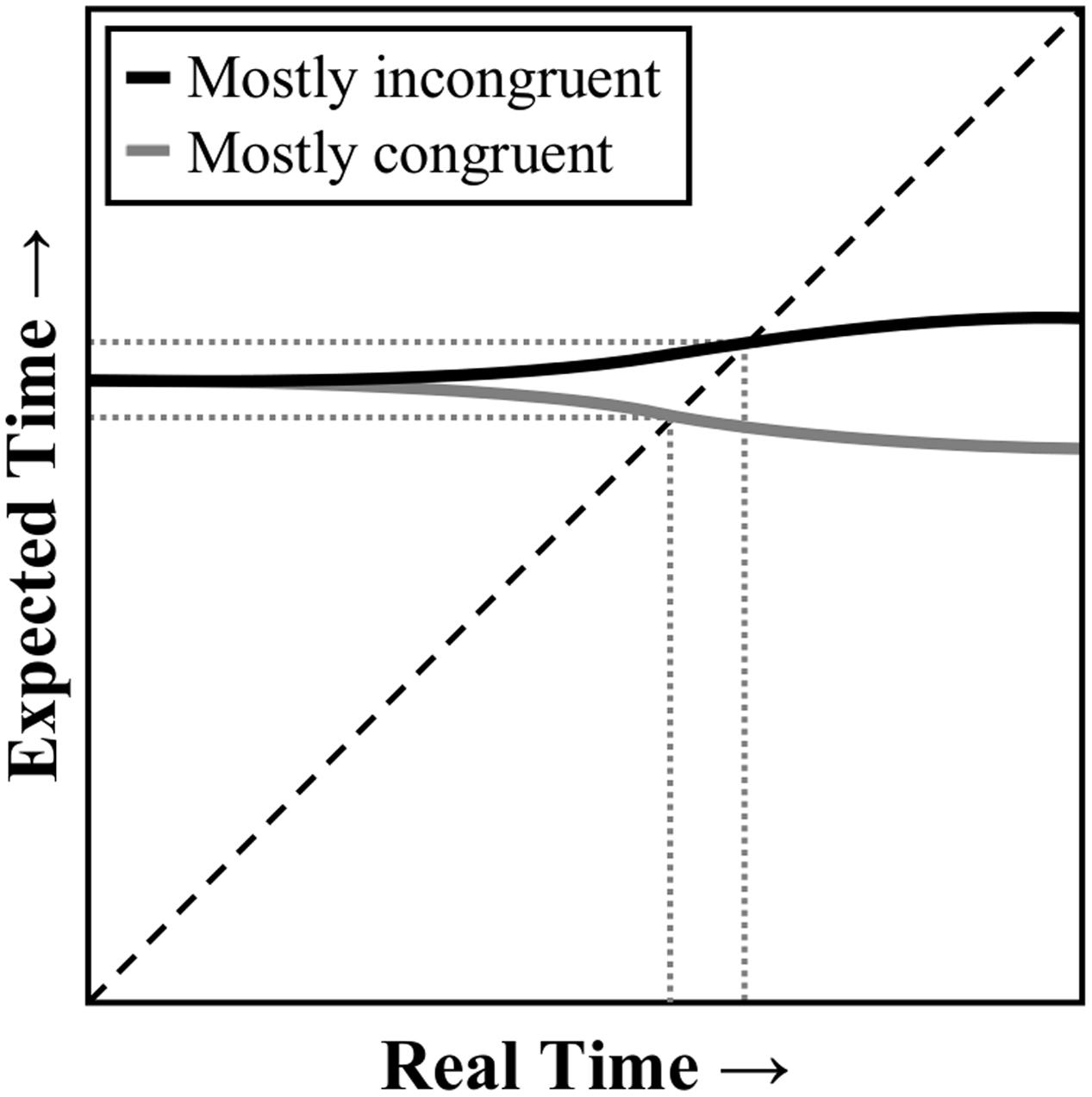

Obviously, list-level PC effects cannot be explained by contingency learning, given the lack of a contingency for diagnostic items. However, Schmidt (2013c, (2014b) argued that the list-level PC effect could be explained by temporal learning (i.e., rhythmic responding). Response times tend to be rhythmic, with the current RT typically similar to the RTs of immediately preceding trials (Grosjean et al., 2001). This has implications for (list-level) PC effects, because the task “pace” will tend to be faster in the mostly congruent condition (where most trials are easy) relative to the mostly incongruent condition (where most trials are hard). According to the temporal learning account, response initiation is speeded when the task pace can be maintained. In particular, it is proposed that the (otherwise stable) threshold to respond is decreased at the expected time, as illustrated in Figure 1. That is, the amount of evidence needed to select a response temporarily decreases when the expected time approaches. If activation (evidence) for a response is sufficient to cross this reduced threshold, responding is speeded (i.e., because less evidence accrual was required to determine the response) and the task pace is maintained. In contrast, if insufficient activation has accrued to determine a response at the expected time (e.g., because the trial was harder than average), then the task pace is “broken,” the threshold to respond increases back to normal, and the rhythmic benefit is lost. Similarly, if a response is determined before the expected time to respond (e.g., because the trial was easier than average), responding will, of course, be fast, but will not have benefitted from a reduced threshold to respond.

FIGURE 1. A representation of how expectancy-driven reductions in the response threshold can produce a proportion congruent effect. An earlier drop in the threshold in the mostly congruent condition benefits congruent trials, whereas a later drop in the threshold in the mostly incongruent condition benefits incongruent trials.

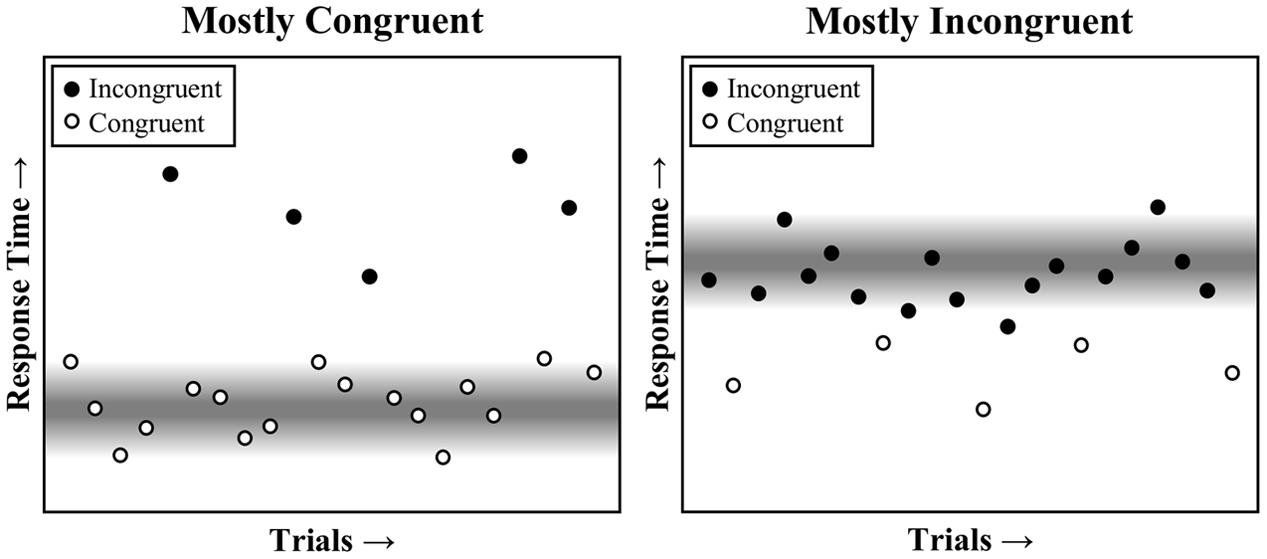

Because the mostly congruent condition contains mostly easy, and therefore fast, congruent trials, the expected time to respond will be earlier in the trial (i.e., around the time of a typical congruent trial). As such, the faster task pace in the mostly congruent condition will tend to benefit congruent trials, which fit into the task rhythm, but not incongruent trials, which break the task rhythm. The reverse is true in the mostly incongruent condition, where a slower pace benefits incongruent trials, but not congruent trials. This is also illustrated in Figure 1, and is further illustrated visually in a trial-by-trial representation in Figure 2. Temporal learning may therefore account for list-level PC effects (Kinoshita et al., 2011), even for contingency-unbiased diagnostic items (Schmidt, 2013c, 2014b, 2016b). As an added aside, some threshold adjustment mechanisms can produce a speed-accuracy trade-off, such that responding is either faster but more error prone (low threshold) or slower but less error prone (high threshold). However, the dynamically adjusting threshold in the Parallel Episodic Processing (PEP) model does not produce a speed-accuracy trade-off in PC (or congruency sequence) effects, but instead produces the same pattern in errors as in response times (Schmidt, 2013c; Schmidt and Weissman, 2016), just as in the participant data. This is because the threshold is fixed, only temporarily dipping early in the mostly congruent condition (benefitting congruent, but increasing fast incongruent errors) and the reverse in the mostly incongruent condition.

FIGURE 2. A visual representation of task pace on performance across a series of trials in the mostly congruent and mostly incongruent conditions. The response threshold is diminished at the expected time to respond (gray), speeding any trials that can benefit from this reduced threshold. This primarily benefits congruent trials in the mostly congruent condition and incongruent trials in the mostly incongruent condition.

The above discussion illustrates how contingency and temporal learning processes might explain item-specific and list-level PC effects. The present manuscript focuses on another variant of the PC task. The context-specific proportion congruent (CSPC) effect is the finding that a PC effect is still observed when mostly congruent and mostly incongruent trials are randomly intermixed and only distinguished by a secondary contextual cue. For instance, Corballis and Gratton (2003; see also, Wendt et al., 2008) used flanker (rather than Stroop) stimuli and presented participants mostly congruent stimuli on one side of the screen (e.g., left), and mostly incongruent stimuli on the other side (e.g., right). Even though (a) the two context were randomly intermixed, and (b) the exact same stimuli were used in both locations, congruency effects were smaller in the mostly incongruent location than in the mostly congruent location. In subsequent reports, Crump et al. (2006, 2008) used a similar manipulation with Stroop-like stimuli. Again, a CSPC effect was observed. Other reports have used stimulus dimensions other than location as the contextual cue, such as text font (Bugg et al., 2008), time duration (Wendt and Kiesel, 2011), and color (Lehle and Hubner, 2008), but the present discussion will be framed in the location-specific Stroop manipulations of Crump and colleagues.

Typically, CSPC effects are interpreted in terms of context-specific conflict monitoring (e.g., Crump et al., 2006). The notion is that attention is more stringently controlled in the mostly incongruent context (e.g., location), and more lax in the mostly congruent context (e.g., another location). This, of course, implies that attentional control can be adjusted extremely rapidly: before a trial begins, the participant cannot possibly know which contextual location they will experience. Indeed, a particularly interesting detail of the experiments by Crump et al. (2006, 2008) is that color words were presented at fixation, then removed from the screen before a color patch was presented either above or below fixation. Thus, participants could not know the PC context until after the word was already removed from the screen (i.e., when target location was known). As such, participants cannot know whether or not to attend to the word until after it is already gone. This clearly indicates that if attentional control is involved, it cannot be control of attention to perceptual inputs, but must be occurring somewhere further down the processing stream.

It is noteworthy that, task wide, words are not predictive of colors in CSPC designs. Contingency learning may nevertheless account for (part) of the CSPC effect, because the word and location combined do strongly predict the color response. For instance, if the mostly congruent location is up, then “red” plus “up” likely indicates a red response. In contrast, “red” plus “down” likely indicates a green response (or no response, depending on the manipulation). In other words, the contingency bias is identical, save for the fact that word and location information must be used jointly to predict the color response. Learning based on feature conjunctions is known to occur outside the context of conflict paradigms like the Stroop task (Mordkoff and Halterman, 2008), so it seems reasonable that location-word combinations might be used to predict the likely color response (see also, Holland, 1992 for a review of occasion setting).

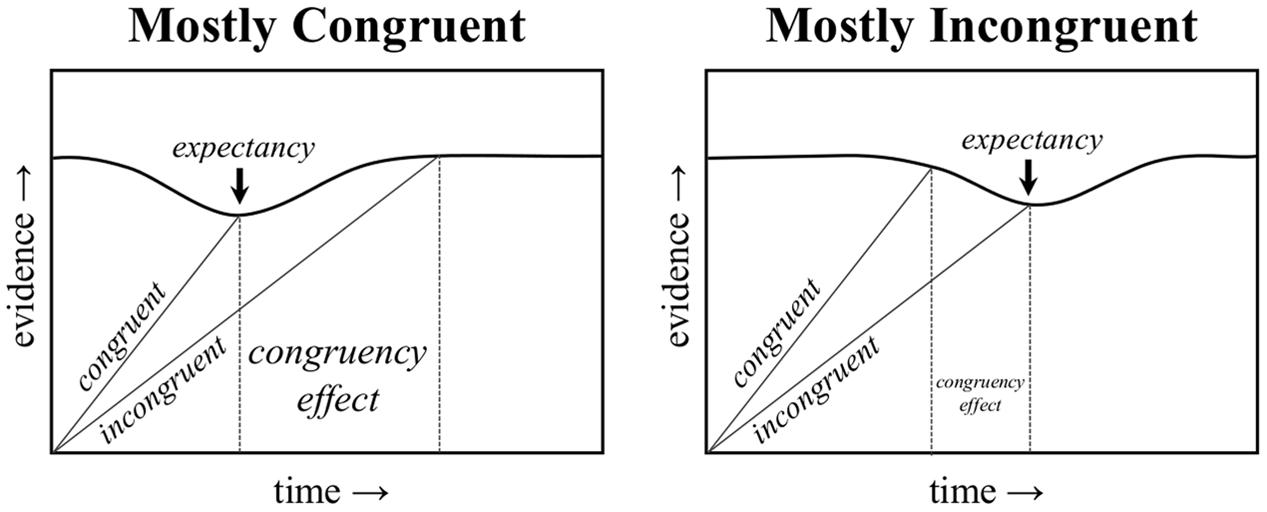

However, context-specific contingency learning cannot be the whole story. Crump and Milliken (2009; see also, Heinemann et al., 2009; Crump et al., 2016; but see, Hutcheon and Spieler, 2016) further demonstrated that if CSPC is manipulated with some (contingency-biased) inducer items, there is still a CSPC effect for other non-manipulated (contingency-unbiased) diagnostic items. Paralleling the above discussion of item-specific and list-level PC effects, the context-level (i.e., non-item-specific) component of the CSPC effect might be explained by context-specific (i.e., location-specific) temporal learning. The only added assumption would, again, be that (non-conflict) learning can be context-specific. In this case, participants might be learning a different pace for each contextual location. In support of this notion, Schmidt et al. (2014) have already demonstrated that a CSPC-like interaction can be observed in a non-conflict task. In particular, “mostly easy” and “mostly hard” location contexts were created with “easy” and “hard” items of a completely different sort: high contrast (i.e., easy to see) and low contrast (i.e., hard to see) letters. Figure 3 illustrates how context-specific temporal learning can come about. At the start of the trial, of course, the participant cannot know which location context they will experience. However, as soon as the target color appears above or below fixation, knowledge of the stimulus location can be used to rapidly adjust the expected time to respond. Thus, an earlier expected time to respond in the mostly congruent than mostly incongruent condition can again be determined.

FIGURE 3. Illustration of a temporal learning mechanism as it relates to context-specific proportion congruent effects. At the start of the trial, the expected time to respond is the same for a mostly congruent or mostly incongruent stimulus. However, as the contextual stimulus (e.g., location) is processed, the expected time to respond can adjust dynamically. The response threshold is decreased when real time is close to the expected time to respond. Dashed line represents where expected time equals real time. Dotted lines indicate the points where the current expectancy (solid line) intersects with current real time.

As the above discussions illustrate, the same procedural concerns in standard, item-specific, and list-level PC designs also apply to the CSPC design. The only added assumption is that learning (of both contingencies and temporal information) can be context/location-specific. Indeed, as the following sections will demonstrate, this “added” assumption is not much of an assumption at all, because context-specificity can be a simple by-product of the same memory mechanisms required for simple item-specific learning. In the simulations to follow, it will be demonstrated how the PEP model is able to simulate CSPC effects, including for contingency-unbiased diagnostic items, using only contingency and temporal learning mechanisms.

Materials and Methods

The Model

In this section, a brief conceptual overview of the PEP model is presented. A full description of the math of Version 2.0 of the model can be found in Schmidt et al. (2016), and the one small change made for the current Version 2.1 of the model can be found in the Supplementary Material of the current report. Full documented source code of the model can also be downloaded from the website of the author1.

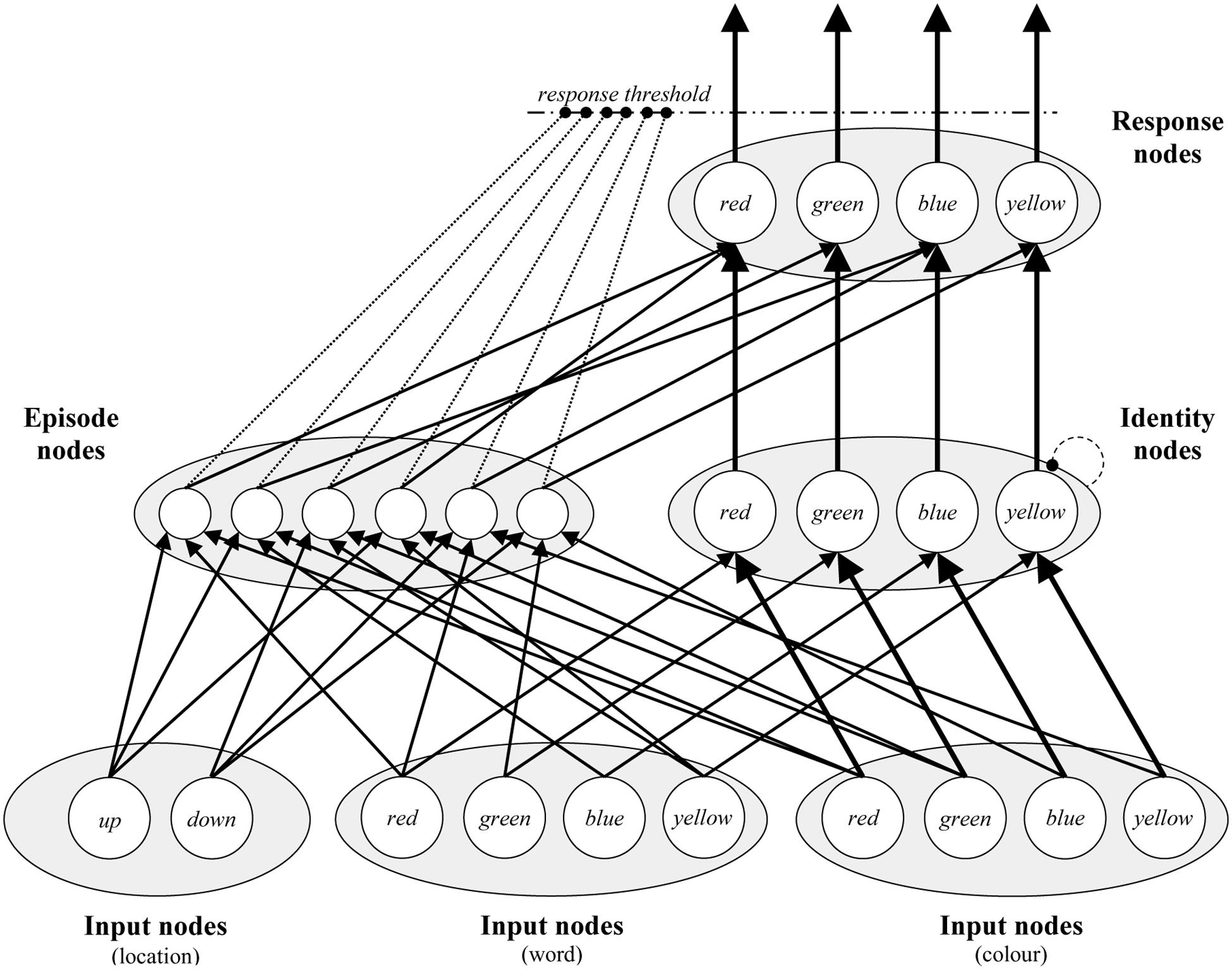

The PEP model is depicted visually in Figure 4. At the bottom of the model, there are Input nodes for each stimulus type (i.e., colors, words, and locations). On each trial, all Input nodes are activated with random noise, and presented stimuli are further activated with a signal. Thus, the model begins each trial with random biases for each input, but over time identifies the color, word, and location that are presented on the trial. Word and color Input nodes pass on activation to Identity nodes, one for each color concept. Within these nodes, lateral inhibition produces a main effect of congruency. Note that locations do not connect to Identity nodes, because they are unrelated to the target color dimension (this is also true of words when simulating experiments with color-unrelated neutral words), but location nodes are otherwise exactly identical to word nodes. Finally, activation from Identity nodes feeds forward to Response nodes. Once activation for one of the Response nodes exceeds the response threshold, the model responds. The number of “cycles” (simulated milliseconds) the model takes to respond is the simulated response time.

FIGURE 4. A visual depiction of the Parallel Episodic Processing (PEP) model. There are Input nodes for each location, word, and color. Input nodes feed activation to Identity nodes, where conflict can occur between color words and colors. Identity nodes feed activation to Response nodes, where a response is selected. Via Episode nodes, locations, words, and colors activate the responses that they are connected to, in addition to affecting the response threshold dynamically. Connections ending in circles indicate inhibition.

The above describes the algorithmic route of the model. The actual “work” of the model in producing the effects of interest in PEP model simulations is in the Episode nodes. During each trial, a new Episode node is created, which codes for the stimuli presented (word, color, and location), the response that was made, and also the response time. On subsequent trials, episodes are retrieved on the basis of the stimuli being currently presented to the model. For instance, the word “blue” will activate episodes coding for the word “blue.” Activated episodes have two effects on processing. The first is via the contingency learning mechanism (Schmidt, 2013a, 2016a; Schmidt et al., 2016). Each activated episode facilitates response nodes proportional to (a) how active the Episode node is, and (b) how strong the connection between the episode and response is. This produces a benefit for high contingency (accurately predictive) over low contingency (wrongly predictive) trials, because, for instance, if “green” is presented most often in red, then presenting the word “green” will activate “green”-word episodes, most of which code for (and therefore facilitate) red responses. Though less relevant for the current simulations, recently encoded episodes have a stronger effect on retrieval than older ones (for an extended discussion, see Schmidt et al., 2016).

The second way that episodic retrieval influences processing is via the temporal learning mechanism (see Schmidt, 2013c; Schmidt and Weissman, 2016). In particular, activated episodes are used to determine the expected time to respond. Like with the contingency learning mechanism, recent events have a much stronger effect on current-trial expectancies. As such, the model expects to be able to respond at a similar speed as on previous trials (see the Supplementary Material for the math on how this is computed). The response threshold (i.e., amount of activation required to select a response) temporarily decreases around the time at which the current cycle time is close to the expected time to respond. In this way, responding is speeded if the “pace” can be maintained (i.e., a response is able to cross the temporarily decreased threshold), but is not speeded if the pace is broken (e.g., not enough evidence for a response at the expected time).

Model Changes

No modifications need to be made to the PEP model to simulate context-specific contingency learning. Context-specific contingency learning is a logical consequence of the same learning mechanism used for regular (“item-specific”) contingency learning. With a “context-specific” PC manipulation all that has changed is an added feature (e.g., location). If “red” is presented most often in green in the bottom location, then when “red” and “down” are presented together “red” will activate “red” episodes and “down” will activate “down” episodes. This means that episodes encoding for both “red” and “down” will be very strongly activated. These episodes, of course, predominately point to a green response. Thus, according to this episodic conceptualization, “item-specific” and “context-specific” learning are not really different. The only minor difference between the two is that “context-specific” learning involves more than one predictive dimension.

Changes to the temporal learning mechanism, however, were required to simulate context-specific temporal learning. In the previous versions of the PEP model, the temporal learning mechanism was relatively simple. After each trial, the response time was (and still is) directly encoded into the episode for that trial. During each trial, the model retrieved the last five encoded episodes and each of these episodes decreased the response threshold at the encoded time. The most recent episode had the largest impact on the threshold and older ones had less and less impact. One thing to note about this instantiation of a rhythmic responding mechanism is that it is not item-specific. The last five episodes are retrieved regardless of whether these episodes match the currently presented stimuli. Secondly, episodes are retrieved at a strength directly proportional to how old they are and not based on connection weightings and episode activations. This formulation of the temporal learning mechanism was for simplicity only and changes in Version 2.1 of the PEP.

In the new version of the temporal learning mechanism, several key changes are made. First, a single pace (expected time to respond) is computed on each trial, rather than each retrieved episode affecting the response threshold separately. Second, the pace is adjusted during the course of the trial on the basis of which episodes are activated. Thus, if the episodes that are activated all happen to be trials in which a fast response was made, the pace will speed. Conversely, if episodes where slow responses were made are retrieved, the pace will slow. Thus, item-specific (and context-specific) influences on the current-trial rhythm are possible. Third, how much influence a given episode has on the pace is determined by the weighting of the time information for that episode. Just like the connections between episodes and stimuli or responses, time weightings (newly added to the model) are weakened with retrieval. Thus, recent events still have much larger impacts on the response threshold, but for a different reason than in the previous version of the model. Note that this change also makes the contingency and temporal learning mechanisms similar to one another (e.g., the prediction of what response to make and when to make it are both determined by the same episodic activations).

Full details of the new code are presented in the Supplementary Material, but here a walkthrough is presented as to how the temporal learning mechanism can produce a CSPC effect for contingency-unbiased transfer items. At the start of a trial, the pace is determined by the previous trial pace and the immediately preceding RT. As such, the model is blind to the upcoming context at this point. As the model begins to retrieve episodes, however, the pace will adjust. If, for instance, a color stimulus is presented in the top of the screen, episodes encoding for “up” will become more active than episodes encoding for “down.” If mostly congruent stimuli have been presented in the top location, then most of these episodes will have a fast RT encoded. As such, these activated episodes will bias the pace toward a faster response. That is, the model expects a response earlier on in the trial, which works to the advantage of (normally fast) congruent trials, thereby increasing the congruency effect. In contrast, when the color stimulus is presented in the mostly incongruent location, most of the retrieved episodes will point to a slow response. As such, a response will be expected later in the trial, and this will work to the advantage of incongruent trials, rather than congruent trials.

Because it may be unclear, it is important to stress why this works for contingency-unbiased diagnostic items. Of course, episodes that exactly match the current-trial stimuli (i.e., same word, same location, and same color) are the most strongly retrieved. However, episodes that only partially match the current-trial stimuli are also retrieved to a lesser extent. For instance, a diagnostic stimulus such as “blue” in yellow in the top location will partially activate all nodes that code for the word “blue,” all nodes that code for the color yellow, and, more crucially, all nodes that code for the top location. Thus, “blue” in yellow in the top location will also (partially) activate the inducer items for the top location (e.g., “red” in red), which will be 100% congruent. This is similar to the MINERVA 2 model of Hintzman (1986), where multiple episodes are retrieved in parallel, each in proportion to the overlap between the stimulus presented and the stimulus encoded in memory (i.e., such that partially similar episodes provide a weak contribution to the “echo” from memory, and highly similar episodes provide a stronger contribution). Thus, even though episodes are encoded in a 100% item-specific fashion, retrieval from memory does produce generalization. That is, inducers will influence time expectancies for diagnostic items in a context-specific fashion.

Simulation Procedure and Data Analysis

All simulations were run with one fixed parameterization. That is, the only thing that changes from simulation to simulation is which stimuli are presented to the model. Using the same fixed parameterization, it was also confirmed that Version 2.1 of the PEP model is backward compatible with previous simulations (i.e., the model still produces all the same effects that it was previously reported to simulate). Note that the model aims for rough qualitative fit to data and does not aim to match effects (or even overall RT) cycle-for-millisecond. This is particularly the case given that the same fixed-parameter model is used to simulate a broad range of effects from drastically different paradigms (e.g., verbal and keypress Stroop, single letter identification, prime-probe arrow tasks, Eriksen flanker tasks, etc.). That is, rather than overfitting a model to one or two specific experiments, the PEP model aims to demonstrate whether or not a given process is able to produce a range of different effects. Thus, the aim of the present simulations is to determine whether the processes instantiated in the model are sufficient to produce a CSPC effect of the correct form, and not to match exact effect sizes.

Note that each simulated “participant” has the same base parameters (e.g., same learning rate, etc.). This might be reasonably changed in the future to model inter-individual differences (e.g., by assigning parameters to each participant from a Gaussian distribution). However, there is plenty of noise throughout the model, such that each simulated participant does produce different results. To ensure robustness, 500 simulated “participants” were run for each simulation. Given this large “sample,” certainty of whether the model does or does not produce an effect is well above that for a typical experimental sample (i.e., ps ≪ 0.05). Thus, numerical effect sizes are discussed, but no statistics are reported for brevity. However, all effects that are discussed are statistically significant. Also, only response times are presented both for brevity and because the original CSPC experiments reported null CSPC effects for errors (or did not analyze errors at all). However, results for errors are comparable to the RT data (i.e., there is no speed-accuracy trade-off; see “Introduction”) and are not excessively inflated (as can be a problem in some models). It is worth noting, however, that errors were essentially non-existent (<1%) in the original participant data, whereas the model produced incongruent trial error rates more comparable to a standard Stroop paradigm. The smaller error rates in the CSPC participant data relative to a standard Stroop task might be due to the spatially- and temporally-separated distracters, a detail which is not reflected in the PEP model. This point will be discussed in further detail in the “General Discussion.” Error data and the data from the backward compatibility simulations are available for download from the website of the author (along with the model code download).

Simulation 1: Base CSPC Effect

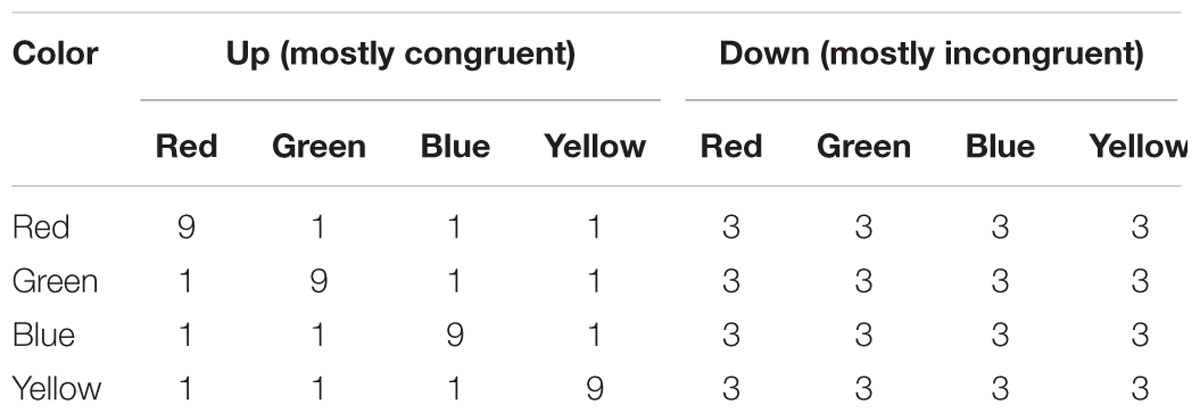

Simulation 1 models the basic location-based CSPC effect. In particular, Experiment 2a of Crump et al. (2006) is simulated. The design is presented in Table 1. Trials consist of the word “red,” “green,” “blue,” or “yellow” presented at fixation for 100 cycles followed by the a red, green, blue, or yellow patch presented above or below fixation until a response or 2000 cycles elapsed. In each block of 96 trials, there are 48 mostly congruent location trials, where the color word is followed by the congruent color 75% of the time. In the remaining 48 mostly incongruent location trials, color words are followed by each of the four display colors equally often (75% incongruent). As in the original report, the model was presented four blocks of trials (384 trials total). The simulation was run twice: once with the model as normal, and once with the temporal learning mechanism lesioned. The purpose of the second run was to demonstrate context-specific contingency learning. Because the PEP model will produce a CSPC effect via both the contingency and temporal learning mechanisms, lesioning the latter is necessary to demonstrate the former is also contributing to the effect (and in Simulation 2, a contingency-free demonstration of context-specific temporal learning is provided). This lesion was achieved by fixing the response threshold, rather than allowing it to vary (see Supplementary Material).

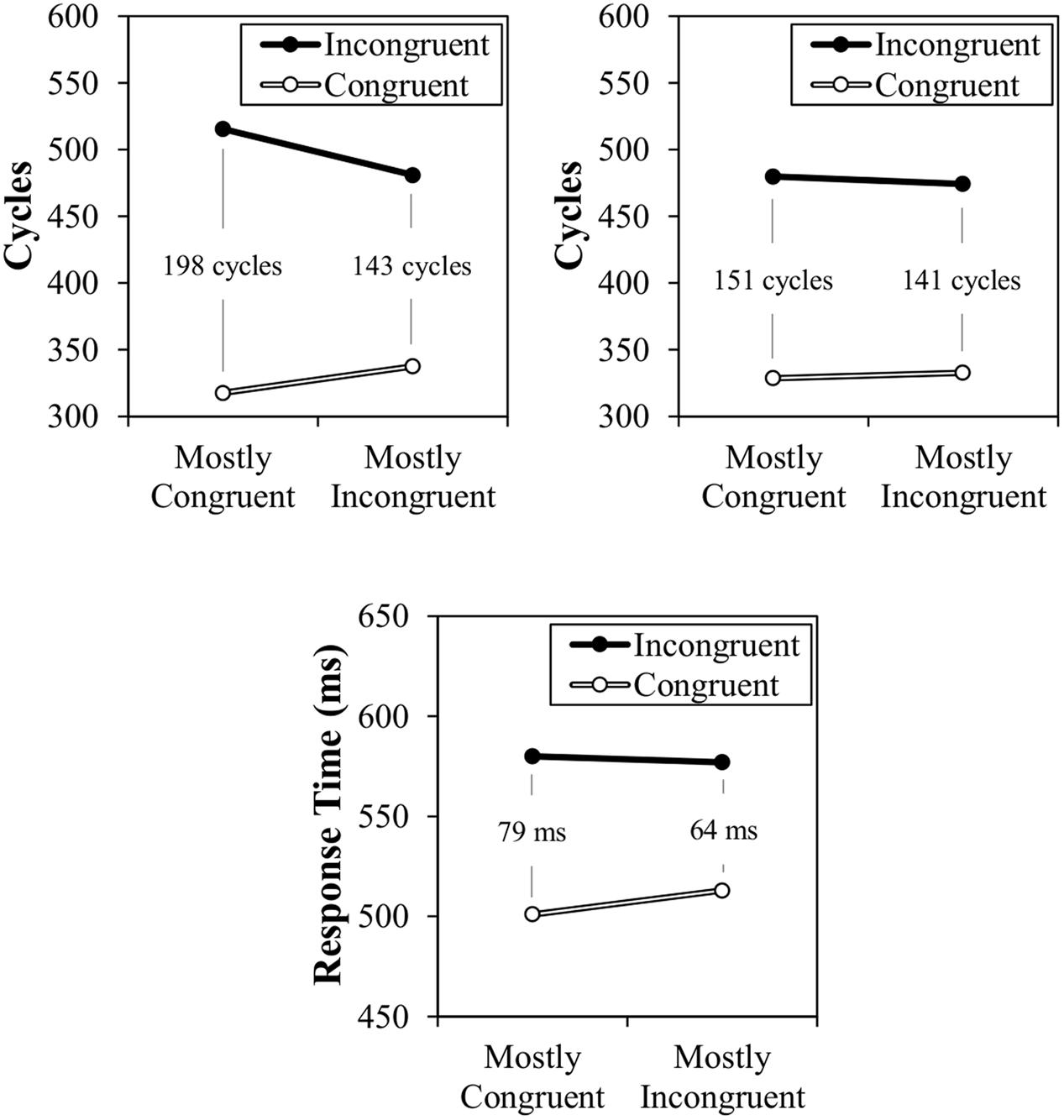

The results of Simulation 1 can be observed in Figure 5, along with the participant data from the original report. As can be observed, a location-based CSPC effect is observed both with or without the temporal learning mechanism. However, the CSPC effect is much larger in the normal model (54 cycles) than in the model with a lesioned temporal learning mechanism (10 cycles). The latter of the two simulations confirms that the contingency learning mechanism designed for simple item-specific learning produces context-specific contingency learning without any modifications. The larger effect in the non-lesioned model demonstrates the added contribution of context-specific temporal learning (see also, Simulation 2). It is noteworthy that both the overall congruency effect and CSPC effect are larger in the modeled data than in the participant data. This might suggest that the strength of activation of the distracting word could be weakened somewhat (to be discussed in further detail in the “General Discussion”). However, the goal of the model is only to match rough qualitative fit, rather than precise quantitative fit. That is, the important point is that this simulation confirms that CSPC effects can be produced by contingency and temporal learning mechanisms. The model does not require a conflict monitor or attentional control device. It is also noteworthy that, numerically, the effect in the participant data seemed to be slightly larger for congruent trials than for incongruent trials, whereas the reverse is true in the modeled data. However, this is only an (untested) numerical trend in the participant data that does not appear to be consistent across studies (e.g., see the participant data modeled in Simulation 2).

FIGURE 5. Simulation 1 normal model cycle times (top left), lesioned model cycle times (top right), and participant response times (bottom).

Simulation 2: CSPC Effect for Diagnostic Items

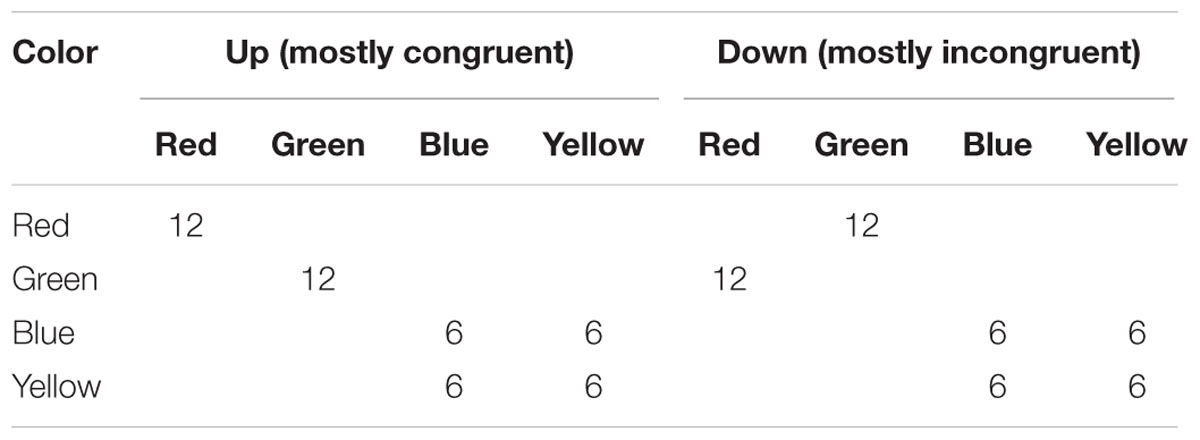

Simulation 2 models the CSPC effect for contingency-unbiased diagnostic items. In particular, Experiment 1 of Crump and Milliken (2009) is simulated. Diagnostic items are particularly interesting, because a CSPC effect for these items might seem strongly indicative of conflict monitoring. The present simulation demonstrates why this conclusion is too strong. The design of this experiment is presented in Table 2. The experiment is identical to that simulated in Simulation 1, except that there are two item types. Two items (e.g., “red” and “green”) are presented 100% with the congruent color in one location (mostly congruent context), and 100% with the incongruent color in the other location (mostly incongruent context). The remaining two items (“blue” and “yellow”) are presented equally often in the congruent and incongruent color in both location contexts. As in the original report, the model was presented four blocks of 96 trials each (384 trials total).

The results of Simulation 2 are presented in Figure 6, along with the original participant data. As can be observed, a CSPC effect is again successfully modeled, but this time for contingency-unbiased items. This 27 cycle effect therefore demonstrates that the context-specific temporal learning mechanism does allow for the inducer items to influence the context-specific rhythm for diagnostic items. It might be again noted that the congruency effect (and, proportionately, the CSPC effect) are notably larger in the modeled data. Again, the relatively small congruency effect in the participant data likely indicates the reduced influence of the spatially- and temporally-separated word dimension.

General Discussion

The present series of simulations demonstrated the proof-of-principle that location-based CSPC effects can result from context-specific contingency and temporal learning. Most importantly, even CSPC effects for contingency-unbiased diagnostic items can be explained by this episodic learning model. It is also critical to highlight that the model does not measure response conflict, as in the conflict monitoring model. Similarly, the PEP model does not adjust attention in response to conflict. Instead, the model maximizes performance by retrieving memories of past events in order to anticipate what response is likely given the stimuli presented, and when to respond on the basis of the same memories.

It should also be noted that the PEP model is not specifically developed to explain one narrow phenomenon. The same model, with one fixed-parameter set, also explains a range of findings outside the “cognitive control” domain, such as the power law of practice, color-word contingency learning and acquisition curves, mixing costs, and stimulus-response binding effects (Schmidt et al., 2016). It also explains a range of so-called “cognitive control” phenomena, such as item-specific PC effects, list-level PC effects for contingency-unbiased diagnostic items, congruency sequence effects in a paradigm that eliminates feature integration and contingency confounds, and asymmetric list shifting effects (Schmidt, 2013a,c, 2016a,b; Schmidt and Weissman, 2016). Including the two newly added simulations of CSPC effects, this totals 14 experiments spanning the practice, learning, binding, timing, and attentional control domains. Of particular interest, the “attentional control” phenomena are, in the PEP model, a product of the basic learning mechanisms required to simulate performance in non-conflict tasks. No conflict monitoring or attentional adjustment homunculi are needed as supplementary assumptions. The episodic account that the PEP model instantiates is therefore much more parsimonious than the conflict monitoring alternative.

Though the episodic account may be more parsimonious, it may, of course, be the case that conflict monitoring does play a role in these paradigms (e.g., in addition to the non-conflict learning biases). Indeed, the present simulations do nothing to argue against this possibility. Instead, the present simulations only demonstrate the feasibility of the simple episodic account. Some additional evidence does add further plausibility to the account proposed here, however. In addition to demonstrations of context-specific contingency learning (Mordkoff and Halterman, 2008) and context-specific temporal learning (Schmidt et al., 2014) in non-conflict tasks (i.e., where conflict monitoring is presumably impossible), King et al. (2012) reanalysed CSPC data with a linear ballistic accumulator model to contrast two competing interpretations of the effect. In particular, the conflict monitoring account suggests that CSPC effects come about because of shifts in the amount of attention to target and distracting stimuli. This implies that a change in the drift rate (i.e., rate of activation) for stimuli produces the CSPC effect. In contrast, the CSPC effect might result from changes in the response threshold (i.e., amount of evidence needed to select a response), indicative of expectancies. Their modeling results support the latter notion. This is seemingly inconsistent with the conflict monitoring hypothesis, because it is unclear how changes in attention to targets and distracters could be reflected in thresholds. King and colleagues interpret their results in terms of response caution (van Maanen et al., 2011), which is heavily related to the temporal learning account presented in the present paper (i.e., threshold adjustments resulting from expectancies), in addition to other time-based learning models (e.g., Grice, 1968; Kohfeld, 1968; Ollman and Billington, 1972; Van Duren and Sanders, 1988; Strayer and Kramer, 1994a,b; Kinoshita et al., 2011). Though these results do not rule out the possibility that conflict monitoring may play some role in the CSPC effect, like the present results, these modeling efforts add additional credence to the notion that non-attention based learning accounts are viable competing alternative explanations.

On the other hand, if attention does play some role in the CSPC effect, differences in attentional distribution in the mostly congruent and mostly incongruent contexts may originate from a factor unrelated to conflict. In particular, differences in stimulus informativeness in the mostly congruent and mostly incongruent conditions (Schmidt, 2014a) might lead to contingent attentional capture biases (Dishon-Berkovits and Algom, 2000; Jiang and Chun, 2001; Melara and Algom, 2003; Cosman and Vecera, 2014). For instance, Dishon-Berkovits and Algom manipulated the extent to which a distracting stimulus was predictive of (i.e., contingent with) the target stimulus. When a predictive contingency was present, word-word Stroop effects (i.e., both the target and distracter are words) were observed. The authors argued that attention was increased to the (predictive) distracter because it was informative for responding. However, when the distracter and targets were uncorrelated (i.e., every distracter presented equally often with all targets), the word-word Stroop effect was eliminated. They argued that, in this case, attention to the (unpredictive) distracter was reduced, thereby eliminating interference. In this way, presence of a contingency between distracters and targets can “capture” attention. In the original studies of Crump et al. (2006; but not in Crump and Milliken, 2009), words were predictive of the congruent color in the mostly congruent context, but unpredictive of the color in the mostly incongruent context. One might argue that this could lead to differences in contingency-driven (rather than conflict-driven) attentional capture in the two context. On the other hand, this would assume that attention to the word is adjusted after the word has already disappeared (i.e., when the contextual location is known). Thus, as with the conflict monitoring account, it would have to be assumed that any adjustment in processing occurs some time after initial perceptual processing. Still, if a compelling demonstration of a clear attentional contribution to the CSPC effect can be observed, additional work might focus on distinguishing between conflict monitoring and contingent attentional capture.

Before closing, it is important to note that there were some minor discrepancies between the modeled and participant data. Most notably, the model produced much larger congruency effects and, proportionately, larger CSPC effects than was observed in the participant data. Though not presented for brevity, the error congruency effect was also notably increased. Although the PEP model did produce the correct qualitative pattern of results (the express goal of this investigation), it is worth speculating over this quantitative difference. The model produced congruency effects and PC effects of a magnitude comparable to a standard Stroop task (i.e., color word printed in color). The reason for the much smaller effects in the participant data of CSPC experiments may originate from the fact that the distracting word is spatially- and temporally-separated from the target color. Indeed, the word is presented in a location (center) that is never a target location. Thus, the word likely receives less attention in such experiments, diminishing the impact of the word (Glaser and Glaser, 1982). Thus, one might reasonably argue that distracting word activation could be weakened in simulations of this sort of experiment. However, it is generally contrary to the fixed-parameter philosophy guiding development of the PEP framework to make simulation-by-simulation adjustments of parameters, so the results have been presented “as is.”

Context-specific proportion congruent effects are particularly interesting, because their existence implies that the cognitive system is able to very rapidly adapt to task-irrelevant (i.e., non-target) contextual information processed in parallel with task-relevant target stimuli. The transfer of the CSPC effect to contingency-unbiased diagnostic items is, at first glance, seemingly one of the most compelling pieces of evidence in support of the notion that conflict monitoring must play some role in performance. However, the current manuscript illustrates why this strong conclusion may not be warranted. Proportional and inducer/diagnostic item manipulations are typically used for the purpose of studying the effects of high versus low conflict contexts on attentional control. Unfortunately, these manipulations also introduce unintended (i.e., conflict-unrelated) regularities to the task. The human cognitive machine is particularly adept at picking up on such task regularities and using the acquired knowledge to automate performance and facilitate responding during expected events. To what extent the simple (non-conflict) episodic learning account presented in the current manuscript is a full (rather than just partial) description of CSPC effects is uncertain, but it is hoped that this report will encourage further investigation of these interesting questions.

Author Contributions

JS wrote the code for the simulations, ran the simulations, analyzed the data, and wrote the paper alone.

Funding

This research was supported by a postdoctoral researcher grant (1211814N) from the Research Foundation – Flanders (FWO – Vlaanderen) to the author.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fpsyg.2016.01806/full#supplementary-material

Footnotes

References

Atalay, N. B., and Misirlisoy, M. (2012). Can contingency learning alone account for item-specific control? Evidence from within- and between-language ISPC effects. J. Exp. Psychol. Learn. Mem. Cogn. 38, 1578–1590. doi: 10.1037/a0028458

Blais, C., and Bunge, S. (2010). Behavioral and neural evidence for item-specific performance monitoring. J. Cogn. Neurosci. 22, 2758–2767. doi: 10.1162/jocn.2009.21365

Botvinick, M. M., Braver, T. S., Barch, D. M., Carter, C. S., and Cohen, J. D. (2001). Conflict monitoring and cognitive control. Psychol. Rev. 108, 624–652. doi: 10.1037/0033-295X.108.3.624

Bugg, J. M. (2014). Conflict-triggered top-down control: default mode, last resort, or no such thing? J. Exp. Psychol. Learn. Mem. Cogn. 40, 567–587. doi: 10.1037/a0035032

Bugg, J. M. (2015). The relative attractiveness of distractors and targets affects the coming and going of item-specific control: evidence from flanker tasks. Atten. Percept. Psychophys. 77, 373–389. doi: 10.3758/s13414-014-0752-x

Bugg, J. M., and Chanani, S. (2011). List-wide control is not entirely elusive: evidence from picture-word Stroop. Psychon. Bull. Rev. 18, 930–936. doi: 10.3758/s13423-011-0112-y

Bugg, J. M., and Hutchison, K. A. (2013). Converging evidence for control of color-word Stroop interference at the item level. J. Exp. Psychol. Hum. Percept. Perform. 39, 433–449. doi: 10.1037/a0029145

Bugg, J. M., Jacoby, L. L., and Chanani, S. (2011a). Why it is too early to lose control in accounts of item-specific proportion congruency effects. J. Exp. Psychol. Hum. Percept. Perform. 37, 844–859. doi: 10.1037/a0019957

Bugg, J. M., Jacoby, L. L., and Toth, J. P. (2008). Multiple levels of control in the Stroop task. Mem. Cogn. 36, 1484–1494. doi: 10.3758/MC.36.8.1484

Bugg, J. M., McDaniel, M. A., Scullin, M. K., and Braver, T. S. (2011b). Revealing list-level control in the Stroop task by uncovering its benefits and a cost. J. Exp. Psychol. Hum. Percept. Perform. 37, 1595–1606. doi: 10.1037/a0024670

Corballis, P. M., and Gratton, G. (2003). Independent control of processing strategies for different locations in the visual field. Biol. Psychol. 64, 191–209. doi: 10.1016/S0301-0511(03)00109-1

Cosman, J. D., and Vecera, S. P. (2014). Establishment of an attentional set via statistical learning. J. Exp. Psychol. Hum. Percept. Perform. 40, 1–6. doi: 10.1037/a0034489

Crump, M. J. C., Brosowsky, N. P., and Milliken, B. (2016). Reproducing the location-based context-specific proportion congruent effect for frequency unbiased items: a reply to Hutcheon and Spieler (2016). Q. J. Exp. Psychol. (Hove) doi: 10.1080/17470218.2016.1206130 [Epub ahead of print]

Crump, M. J. C., Gong, Z. Y., and Milliken, B. (2006). The context-specific proportion congruent Stroop effect: location as a contextual cue. Psychon. Bull. Rev. 13, 316–321. doi: 10.3758/BF03193850

Crump, M. J. C., and Milliken, B. (2009). The flexibility of context-specific control: evidence for context-driven generalization of item-specific control settings. Q. J. Exp. Psychol. 62, 1523–1532. doi: 10.1080/17470210902752096

Crump, M. J. C., Vaquero, J. M. M., and Milliken, B. (2008). Context-specific learning and control: the roles of awareness, task relevance, and relative salience. Conscious. Cogn. 17, 22–36. doi: 10.1016/j.concog.2007.01.004

Dishon-Berkovits, M., and Algom, D. (2000). The Stroop effect: it is not the robust phenomenon that you have thought it to be. Mem. Cogn. 28, 1437–1449. doi: 10.3758/BF03211844

Glaser, M. O., and Glaser, W. R. (1982). Time course analysis of the Stroop phenomenon. J. Exp. Psychol. Hum. Percept. Perform. 8, 875–894.

Grandjean, J., D’Ostilio, K., Fias, W., Phillips, C., Balteau, E., Degueldre, C., et al. (2013). Exploration of the mechanisms underlying the ISPC effect: evidence from behavioral and neuroimaging data. Neuropsychologia 51, 1040–1049. doi: 10.1016/j.neuropsychologia.2013.02.015

Grice, G. R. (1968). Stimulus intensity and response evocation. Psychol. Rev. 75, 359–373. doi: 10.1037/h0026287

Grosjean, M., Rosenbaum, D. A., and Elsinger, C. (2001). Timing and reaction time. J. Exp. Psychol. Gen. 130, 256–272. doi: 10.1037/0096-3445.130.2.256

Hazeltine, E., and Mordkoff, J. T. (2014). Resolved but not forgotten: stroop conflict dredges up the past. Front. Psychol. 5:1327. doi: 10.3389/fpsyg.2014.01327

Heinemann, A., Kunde, W., and Kiesel, A. (2009). Context-specific prime-congruency effects: on the role of conscious stimulus representations for cognitive control. Conscious. Cogn. 18, 966–976. doi: 10.1016/j.concog.2009.08.009

Hintzman, D. L. (1986). “Schema abstraction” in a multiple-trace memory model. Psychol. Rev. 93, 411–428. doi: 10.1037/a0027023

Holland, P. C. (1992). Occasion setting in Pavlovian conditioning. Psychol. Learn. Motiv. 28, 69–125. doi: 10.1016/S0079-7421(08)60488-0

Hutcheon, T. G., and Spieler, D. H. (2016). Limits on the generalizability of context-driven control. Q. J. Exp. Psychol. doi: 10.1080/17470218.2016.1182193 [Epub ahead of print]

Hutchison, K. A. (2011). The interactive effects of listwide control, item-based control, and working memory capacity on Stroop performance. J. Exp. Psychol. Learn. Mem. Cogn. 37, 851–860. doi: 10.1037/a0023437

Jiang, Y. H., and Chun, M. M. (2001). Selective attention modulates implicit learning. Q. J. Exp. Psychol. 54A, 1105–1124. doi: 10.1080/713756001

King, J. A., Donkin, C., Korb, F. M., and Egner, T. (2012). Model-based analysis of context-specific cognitive control. Front. Psychol. 3:358. doi: 10.3389/fpsyg.2012.00358

Kinoshita, S., Mozer, M. C., and Forster, K. I. (2011). Dynamic adaptation to history of trial difficulty explains the effect of congruency proportion on masked priming. J. Exp. Psychol. Gen. 140, 622–636. doi: 10.1037/a0024230

Kohfeld, D. L. (1968). Stimulus intensity and adaptation level as determinants of simple reaction time. J. Exp. Psychol. 76, 468–473. doi: 10.1037/h0021285

Lehle, C., and Hubner, R. (2008). On-the-fly adaptation of selectivity in the flanker task. Psychon. Bull. Rev. 15, 814–818. doi: 10.3758/PBR.15.4.814

Levin, Y., and Tzelgov, J. (2016). Contingency learning is not affected by conflict experience: evidence from a task conflict-free, item-specific Stroop paradigm. Acta Psychol. 164, 39–45. doi: 10.1016/j.actpsy.2015.12.009

Logan, G. D., and Zbrodoff, N. J. (1979). When it helps to be misled: facilitative effects of increasing the frequency of conflicting stimuli in a Stroop-like task. Mem. Cogn. 7, 166–174. doi: 10.3758/BF03197535

Logan, G. D., Zbrodoff, N. J., and Williamson, J. (1984). Strategies in the color-word Stroop task. Bull. Psychon. Soc. 22, 135–138. doi: 10.3758/BF03333784

Lowe, D. G., and Mitterer, J. O. (1982). Selective and divided attention in a Stroop task. Can. J. Psychol. 36, 684–700. doi: 10.1037/h0080661

Melara, R. D., and Algom, D. (2003). Driven by information: a tectonic theory of Stroop effects. Psychol. Rev. 110, 422–471. doi: 10.1037/0033-295X.110.3.422

Miller, E. K., and Cohen, J. D. (2001). An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202. doi: 10.1146/annurev.neuro.24.1.167

Mordkoff, J. T. (1996). “Selective attention and internal constraints: there is more to the flanker effect than biased contingencies,” in Converging Operations in the Study of Visual Selective Attention, eds A. Kramer, M. G. H. Coles, and G. D. Logan (Washington, DC: APA), 483–502.

Mordkoff, J. T., and Halterman, R. (2008). Feature integration without visual attention: evidence from the correlated flankers task. Psychon. Bull. Rev. 15, 385–389. doi: 10.3758/PBR.15.2.385

Ollman, R. T., and Billington, M. J. (1972). The deadline model for simple reaction times. Cogn. Psychol. 3, 311–336. doi: 10.1016/0010-0285(72)90010-2

Petersen, S. E., and Posner, M. I. (2012). The attention system of the human brain: 20 years after. Annu. Rev. Neurosci. 35, 73–89. doi: 10.1146/annurev-neuro-062111-150525

Schmidt, J. R. (2013a). The parallel episodic processing (PEP) model: dissociating contingency and conflict adaptation in the item-specific proportion congruent paradigm. Acta Psychol. 142, 119–126. doi: 10.1016/j.actpsy.2012.11.004

Schmidt, J. R. (2013b). Questioning conflict adaptation: proportion congruent and Gratton effects reconsidered. Psychon. Bull. Rev. 20, 615–630. doi: 10.3758/s13423-012-0373-0

Schmidt, J. R. (2013c). Temporal learning and list-level proportion congruency: conflict adaptation or learning when to respond? PloS ONE 8:e0082320. doi: 10.1371/journal.pone.0082320

Schmidt, J. R. (2014a). Contingencies and attentional capture: the importance of matching stimulus informativeness in the item-specific proportion congruent task. Front. Psychol. 5:540. doi: 10.3389/fpsyg.2014.00540

Schmidt, J. R. (2014b). List-level transfer effects in temporal learning: further complications for the list-level proportion congruent effect. J. Cogn. Psychol. 26, 373–385. doi: 10.1080/20445911.2014.896367

Schmidt, J. R. (2016a). Proportion congruency and practice: a contingency learning account of asymmetric list shifting effects. J. Exp. Psychol. Learn. Mem. Cogn. 42, 1496–1505. doi: 10.1037/xlm0000254

Schmidt, J. R. (2016b). Temporal learning and rhythmic responding: no reduction in the proportion easy effect with variable response-stimulus intervals. Front. Psychol. 7:634. doi: 10.3389/fpsyg.2016.00634

Schmidt, J. R., and Besner, D. (2008). The Stroop effect: why proportion congruent has nothing to do with congruency and everything to do with contingency. J. Exp. Psychol. Learn. Mem. Cogn. 34, 514–523. doi: 10.1037/0278-7393.34.3.514

Schmidt, J. R., De Houwer, J., and Rothermund, K. (2016). The parallel episodic processing (PEP) model 2.0: a single computational model of stimulus-response binding, contingency learning, power curves, and mixing costs. Cogn. Psychol. 91, 82–108. doi: 10.1016/j.cogpsych.2016.10.004

Schmidt, J. R., Lemercier, C., and De Houwer, J. (2014). Context-specific temporal learning with non-conflict stimuli: proof-of-principle for a learning account of context-specific proportion congruent effects. Front. Psychol. 5:1241. doi: 10.3389/fpsyg.2014.01241

Schmidt, J. R., Notebaert, W., and Van den Bussche, E. (2015). Is conflict adaptation an illusion? Front. Psychol. 6:172. doi: 10.3389/fpsyg.2015.00172

Schmidt, J. R., and Weissman, D. H. (2016). Congruency sequence effects and previous response times: Conflict adaptation or temporal learning? Psychol. Res. 80, 590–607. doi: 10.1007/s00426-015-0681-x

Strayer, D. L., and Kramer, A. F. (1994a). Strategies and automaticity: 1. Basic findings and conceptual-framework. J. Exp. Psychol. Learn. Mem. Cogn. 20, 318–341.

Strayer, D. L., and Kramer, A. F. (1994b). Strategies and automaticity: 2. Dynamic aspects of strategy adjustment. J. Exp. Psychol. Learn. Mem. Cogn. 20, 342–365.

Stroop, J. R. (1935). Studies on interference in serial verbal reactions. J. Exp. Psychol. 18, 643–661. doi: 10.1037/h0054651

Treisman, A. M., and Gelade, G. (1980). Feature-integration theory of attention. Cogn. Psychol. 12, 97–136. doi: 10.1016/0010-0285(80)90005-5

Van Duren, L. L., and Sanders, A. F. (1988). On the robustness of the additive factors stage structure in blocked and mixed choice reaction designs. Acta Psychol. 69, 83–94. doi: 10.1016/0001-6918(88)90031-5

van Maanen, L., Brown, S. D., Eichele, T., Wagenmakers, E. J., Ho, T., Serences, J., et al. (2011). Neural correlates of trial-to-trial fluctuations in response caution. J. Neurosci. 31, 17488–17495. doi: 10.1523/JNEUROSCI.2924-11.2011

Wendt, M., and Kiesel, A. (2011). Conflict adaptation in time: foreperiods as contextual cues for attentional adjustment. Psychon. Bull. Rev. 18, 910–916. doi: 10.3758/s13423-011-0119-4

Wendt, M., Kluwe, R. H., and Vietze, I. (2008). Location-specific versus hemisphere-specific adaptation of processing selectivity. Psychon. Bull. Rev. 15, 135–140. doi: 10.3758/PBR.15.1.135

Keywords: context-specificity, contingency learning, temporal learning, computational modeling, attention, conflict monitoring, proportion congruent effect, Stroop task

Citation: Schmidt JR (2016) Context-Specific Proportion Congruency Effects: An Episodic Learning Account and Computational Model. Front. Psychol. 7:1806. doi: 10.3389/fpsyg.2016.01806

Received: 21 September 2016; Accepted: 01 November 2016;

Published: 16 November 2016.

Edited by:

Antonino Vallesi, University of Padua, ItalyReviewed by:

Sander Andries Los, Vrije Universiteit Amsterdam, NetherlandsJ. Toby Mordkoff, University of Iowa, USA

Daniel Algom, Tel-Aviv University, Israel

Copyright © 2016 Schmidt. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: James R. Schmidt, james.schmidt@ugent.be

James R. Schmidt

James R. Schmidt