Influence of Background Musical Emotions on Attention in Congenital Amusia

- 1 Laboratory of Behavioral Neurology and Imaging of Cognition, Department of Fundamental Neuroscience, University of Geneva, Geneva, Switzerland

- 2 Swiss Center of Affective Sciences, Department of Psychology, University of Geneva, Geneva, Switzerland

- 3 International Laboratory for Brain, Music and Sound Research, University of Montreal, Montreal, QC, Canada

- 4 Department of Psychology, University of Montreal, Montreal, QC, Canada

Congenital amusia, in its most common form, is a disorder characterized by a musical pitch processing deficit. Although pitch is involved in conveying emotion in music, the implications of pitch deficits for musical emotion judgements are still under debate. Relatedly, both limited and spared musical emotion recognition have been reported in amusia under conditions where emotion cues were not determined by musical mode or dissonance. Additionally, the assumed links between musical abilities and visuo-spatial attention processes need further investigation in congenital amusics. Hence, we here tested to what extent musical emotions can influence attentional performance. Fifteen congenital amusic adults and fifteen healthy controls matched for age and education were assessed in three attentional conditions, namely executive control (distractor inhibition), alerting, and orienting (spatial shift), while music expressing joy, tenderness, sadness, or tension was presented. Visual target detection in the amusic participants was in the normal range relative to the control participants, for both accuracy and response times. Moreover, in both groups, music exposure produced facilitating effects on selective attention that appeared to be driven by the arousal dimension of musical emotional content, with faster correct target detection during joyful than during sad music. These findings corroborate the idea that pitch processing deficits related to congenital amusia do not impede other cognitive domains, particularly visual attention. Furthermore, our study uncovers an intact influence of music and its emotional content on the attentional abilities of amusic individuals. The results highlight the domain-selectivity of the pitch disorder in congenital amusia, which largely spares the development of visual attention and affective systems.

Introduction

Music is pervasive in the modern environments of our daily life. Frequently accompanying routine mental or motor activities such as working out, reading a book, or driving a car, music is also present in many public places, such as restaurants or shops. Although music can be distracting (Kämpfe et al., 2011; Silvestrini et al., 2011), it also has the capacity to enhance cognitive or physical activities in various populations, including patients with Alzheimer's or Parkinson's disease, older adults at risk of falls (Trombetti et al., 2011; Hars et al., 2013), and healthy people (Thompson et al., 2001; Trost et al., 2014; Fernandez et al., 2019b). For instance, music exposure can enhance visuo-spatial attention (Rowe et al., 2007; McConnell and Shore, 2011; Trost et al., 2014; Fernandez et al., 2019b), an essential cognitive ability that involves allocating processing resources to specific goal-relevant sensory information.

The beneficial effect of music exposure on visuo-spatial attention may be at least partly related to the emotions music conveys. Previous research has demonstrated the importance of affective states in influencing attention allocation (Mitchell and Phillips, 2007; Vanlessen et al., 2016). Notably, positive affect has frequently been associated with a broader scope of attention (Fredrickson, 2004), which might in turn impair selective attention through reduced selectivity (Rowe et al., 2007). During music exposure, positive valence and high arousal may both play a similar role in enhancing visuo-spatial information processing (McConnell and Shore, 2011; Trost et al., 2014; Fernandez et al., 2019b).

We (Fernandez et al., 2019b) previously investigated the impact of exposure to music evoking different emotions, including joy, tension, tenderness, and sadness, on the deployment of selective attention processes using the classic visuo-spatial Attention Network Test (ANT) (Fan et al., 2002). In line with other results (Trost et al., 2014), we found that when control participants were exposed to highly arousing background music, especially pleasant music, their performance on the test improved, as revealed by faster target detection in the presence of distractors and greater engagement of fronto-parietal areas (Fernandez et al., 2019b), which are associated with top-down attentional control (Corbetta and Shulman, 2002). These findings are consistent with the notion that rhythmic stimuli can stimulate physiological systems, including attentional processes, through their beat structure, especially when the target appears in synchrony with the strong beats of the music (Escoffier et al., 2010; Bolger et al., 2013; Trost et al., 2014). The effect of music exposure on selective attention resources is probably mediated both by entrainment of brain rhythms and by induced changes in emotional state.

Critically, the above-mentioned studies were conducted using samples from the general population without regard to individual musical abilities. Yet people differ widely in their musical abilities. Although most people develop normal musical skills, 1.5 to 4% of the population (Peretz and Vuvan, 2017) may suffer from a genetic, music-specific neurodevelopmental disorder called congenital amusia (Peretz et al., 2002; Hyde and Peretz, 2004). This disorder can be separated into two variants: the most common pitch-based form (also referred to as “pitch deafness”) and the more recently described time-based form (also referred to as “beat deafness”) (Phillips-Silver et al., 2013; Peretz and Vuvan, 2017). In the pitch-based form of congenital amusia, the focus of this study, individuals (amusics hereafter) present a dysfunction in the fine-grained processing of the pitch structure of music, which plays a fundamental role in developing a normal musical system and in fully experiencing music's subtleties (Peretz, 2016). At the cortical level, congenital amusia is associated with neural anomalies affecting both functional and structural connectivity in fronto-temporal networks of the right hemisphere (Hyde et al., 2011; Peretz, 2016). At the behavioral level, it is characterized by a selective impairment in the perception and production of very small (<2 semitones) variations in pitch (Hyde and Peretz, 2004). This pitch deficit can affect several musical tasks, such as singing in tune (Dalla Bella et al., 2009), perceiving dissonance (Ayotte et al., 2002; Cousineau et al., 2012), and recognizing familiar melodies without the aid of lyrics (Ayotte et al., 2002). Although this pitch deficit does not usually co-occur with other psychoacoustic deficits, many amusics also experience difficulties with rhythm (Ayotte et al., 2002), especially in the presence of pitch variation (Foxton et al., 2006; Phillips-Silver et al., 2013).

While the emotional information conveyed in music and speech largely depends on pitch (among other acoustical features), studies investigating the relationship between emotional sensitivity and the pitch deficits of congenital amusia show contrasting results. Previous studies reported that amusics could still correctly recognize the emotions expressed by music (Ayotte et al., 2002; Gosselin et al., 2015; Jiang et al., 2017) or by speech prosody (Ayotte et al., 2002; Hutchins et al., 2010) despite their musical impairment. In most cases, amusics were found to rely on alternative acoustic features (e.g., tempo, timbre, or roughness) to distinguish musical emotions correctly (Cousineau et al., 2012; Gosselin et al., 2015; Marin et al., 2015). However, other work observed mild impairments in the discrimination of emotions in music or speech, but with preserved intensity judgements (Lévêque et al., 2018; Pralus et al., 2019), or moderately reduced capacities to discriminate emotional prosody in speech (Thompson et al., 2012; Lolli et al., 2015; Lima et al., 2016), compared with control participants. Beyond suggesting that pitch processing affects musical emotion perception, these latter findings could also be explained by recent evidence linking congenital amusia to music-specific disturbances of consciousness. Given these heterogeneous findings on emotion recognition in amusics and their inability to form conscious representations of pitch, it remains unclear whether amusics may still respond to affective music exposure in other conditions that rely on more implicit processing (Tillmann et al., 2016; Lévêque et al., 2018; Pralus et al., 2019), or whether their perception of emotional content in music may be dampened by their limited musical resources when attention is focused on other stimuli.
In the latter case, amusics' perceptual system might be unable to extract relevant affective cues from music due to disrupted pitch processing, and would therefore fail to show the indirect effects of musical emotions on other cognitive functions, such as those observed in attentional processing. Thus, testing the attentional effects of musical emotions in amusics would allow us not only to better characterize the extent of musical deficits in this population, but also to clarify the possible role of pitch processing in driving these effects.

Finally, although congenital amusia deficits are thought to occur without any other (cognitive) impairments, several theories have suggested an intimate connection between music and visuo-spatial abilities, particularly linking sound frequency representation with spatial codes (e.g., lower pitches are usually represented lower in space than higher pitches) (Rusconi et al., 2006). In line with this assumption, enhanced attentional processing has been reported in musicians compared with non-musicians (Brochard et al., 2004; Sluming et al., 2007), including better executive control performance measured with an ANT paradigm (Medina and Barraza, 2019). However, the relationship between musical abilities and visuo-spatial attention remains unclear in musically (or visually) impaired people. While Douglas and Bilkey (2007) linked poor performance on a classic mental rotation task in amusic individuals to their deficits in processing contour components, other studies found preserved performance on a similar rotation task in amusics (Tillmann et al., 2010; Williamson et al., 2011). These divergent findings further motivate our aim to better characterize the relationship between different visuo-spatial attention components and musical capacities in amusia.

Given these gaps in the current literature, we here assess to what extent the congenital amusia deficit might interfere with the indirect (implicit) effects of musical emotions on selective attentional processes. To address this question, amusic and control participants performed the Attention Network Test (ANT; Fan et al., 2002) mentioned above while they were exposed to music communicating four emotional expressions (differentially organized along the arousal and valence dimensions), in addition to a silent condition. In a single task, the ANT probes three distinct components of selective visuo-spatial processing, namely executive control, alerting, and orienting (Posner and Petersen, 1990; Petersen and Posner, 2012). Executive control is characterized as the ability to selectively attend to specific information by filtering out concurrent distractors. Alerting is defined as the ability to maintain a highly reactive state toward sensory stimuli, while orienting involves the ability to shift the focus of attention to a specific feature or location of stimuli. To our knowledge, the present study is the first to evaluate the effects of several properties of music exposure on distinct components of attention in individuals with the pitch-based form of congenital amusia, particularly the emotional aspects of music and their underlying arousal and valence dimensions, and, more generally, the first to probe for any link between musical and visuo-spatial attention abilities in this population.

Materials and Methods

Participants

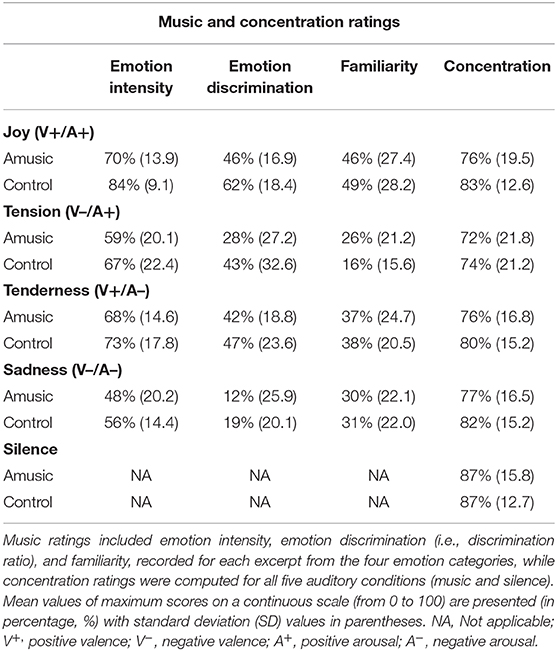

Fifteen amusic participants meeting the criteria for the pitch-based form of congenital amusia and fifteen controls matched for education level and duration of musical education took part in the study. Most participants were right-handed. Participants' characteristics are provided in Table 1.

Table 1. Participants' characteristics and musical abilities, measured with the Montreal Battery of Evaluation of Amusia (MBEA; Peretz et al., 2003) and the pitch change detection task (Hyde and Peretz, 2004).

Prior to being selected for participation in this study, participants were tested on their musical abilities with the online test of amusia (Peretz and Vuvan, 2017), the Montreal Battery of Evaluation of Amusia (MBEA; Peretz et al., 2003), and the Pitch-Change Detection task (Hyde and Peretz, 2004), all reliable tools for identifying amusic individuals (Vuvan et al., 2018). The online test is composed of three tasks, namely the scale test, the off-beat test, and the out-of-key test. Standard testing with the MBEA comprises the same scale test plus contour, interval, rhythm, meter, and memory tests (Peretz et al., 2003; Vuvan et al., 2018). A melodic composite score was computed by averaging the scale, contour, and interval scores of the MBEA. Finally, the pitch-change detection task evaluates the severity of the pitch deficit by assessing the participant's accuracy in detecting a pitch change on the fourth tone of a five-tone sequence. Here, the pitch-change detection scores represent the detection accuracy for the smallest pitch change in the task, i.e., a quarter semitone (25 cents), which is the most discriminant change (Hyde and Peretz, 2004). All amusic participants included in the present study scored below the cut-off scores for both the scale test (22/30) and the melodic composite (21.4/30), except for one amusic who scored below cut-off on the scale test but slightly above cut-off on the melodic composite. These cut-off scores (i.e., 2 SD below the mean of a normative sample) were chosen in accordance with the latest normative data (Peretz and Vuvan, 2017; Vuvan et al., 2018) and used as inclusion criteria. All control participants presented normal music abilities, while all amusic participants scored below the scale test cut-off, indicating the presence of pitch deficits.
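The inclusion rule above can be sketched in a few lines of Python. This is a minimal illustration, not the screening procedure actually used: it assumes each MBEA subtest is scored out of 30 and that "below cut-off" means strictly below the quoted values (22 for the scale test, 21.4 for the melodic composite); the helper name `screen_participant` and the example scores are hypothetical.

```python
from statistics import mean

# Cut-off scores quoted in the text (2 SD below the normative mean), out of 30.
SCALE_CUTOFF = 22.0
COMPOSITE_CUTOFF = 21.4


def melodic_composite(scale: float, contour: float, interval: float) -> float:
    """Average of the MBEA scale, contour, and interval scores."""
    return mean([scale, contour, interval])


def screen_participant(scale: float, contour: float, interval: float) -> dict:
    """Hypothetical helper: flag scores strictly below each cut-off."""
    composite = melodic_composite(scale, contour, interval)
    return {
        "composite": composite,
        "scale_below": scale < SCALE_CUTOFF,
        "composite_below": composite < COMPOSITE_CUTOFF,
    }


# A profile like the single exception described above: below the scale
# cut-off but slightly above the composite cut-off (invented scores).
print(screen_participant(scale=21, contour=24, interval=22))
```

Keeping the two flags separate makes it easy to spot participants who, like the exception noted above, fail only one of the two criteria.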

All amusic participants had normal non-verbal reasoning and verbal working memory abilities, as assessed by the Matrix Reasoning and Digit Span tests from the WAIS-III (Wechsler Adult Intelligence Scale; Wechsler et al., 1997). All participants had normal or corrected-to-normal vision, no hearing deficits, and no psychiatric, neurological, or toxicological history.

Only non-musicians were recruited, according to the following criteria: (i) no music education or practice before the age of 10, and (ii) no current or past regular music practice lasting more than 5 years. All participants provided informed written consent in accordance with the regulations of the Research Ethics Council for the Faculty of Arts and Sciences at the Université de Montréal.

Auditory Material

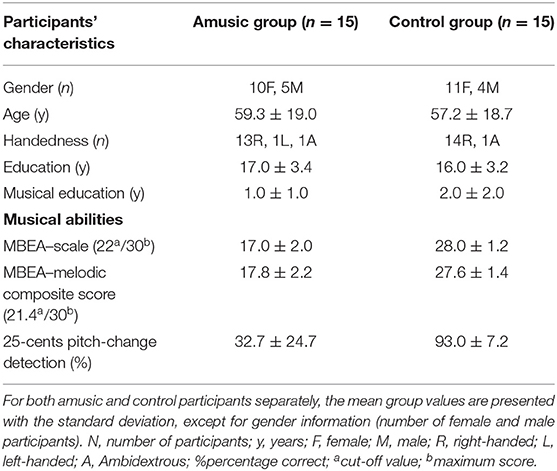

Twelve pieces of instrumental classical music validated in previous work (Trost et al., 2012; Fernandez et al., 2019b) were used in the current study and categorized into four emotions, namely joy, tension, tenderness, and sadness. These four emotions are organized along the orthogonal dimensions of arousal (i.e., Relaxing–Stimulating) and valence (i.e., Unpleasant–Pleasant) (Trost et al., 2012) and represent the major emotion types identified in the Geneva Emotional Music Scale (Zentner et al., 2008). Hence, joy and tension are typically associated with high arousal ratings, while sadness and tenderness are low-arousing. Orthogonally, joy and tenderness are positively valenced, while tension and sadness are negatively valenced. Our musical excerpts comprised three pieces for each of these four categories, each presented three times during the experiment. All musical excerpts lasted 45 s. Acoustic characteristics of the music excerpts are presented for the four emotion categories in Table 2.

Table 2. Acoustic characteristics of the music excerpts included in the study for the four emotion categories.

Experimental Design

Attention Network Task

The experimental task took place in a sound-isolated room. Visual stimuli were displayed on a screen at a distance of 50 cm, while auditory stimuli were presented binaurally through high-quality headphones (DT 770 PRO, 250 Ohms, Beyerdynamic) at the optimal tolerable loudness determined for each participant. Stimulus presentation and response recording (through a standard keyboard) were controlled using the Cogent 2000 and Cogent Graphics toolboxes implemented in Matlab 2009b (Mathworks Inc., Natick, MA, USA).

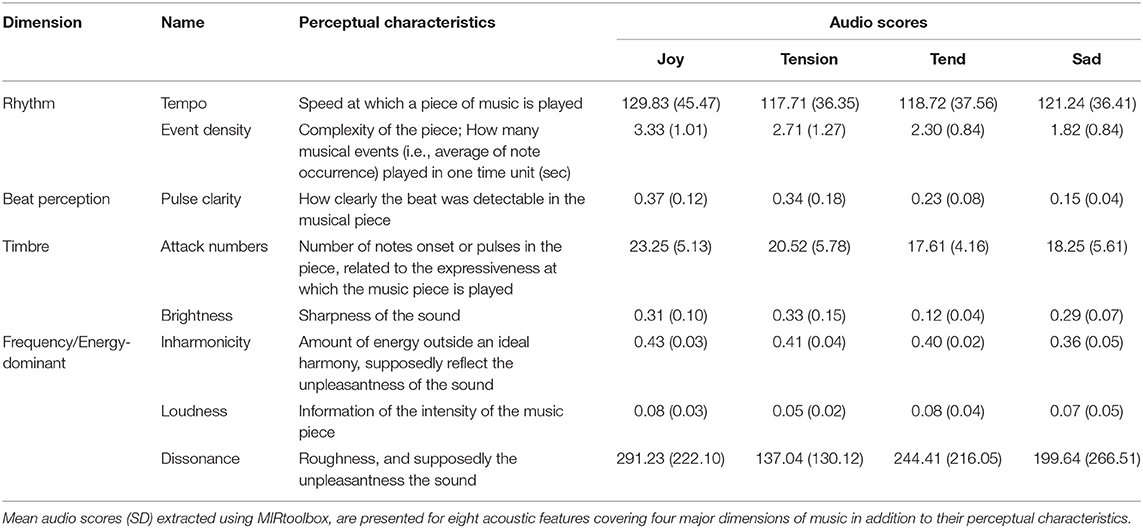

We used a modified Attention Network Test (ANT; Fan et al., 2002), similar to the one used in our previous study in young and older healthy individuals (Fernandez et al., 2019b). In this task, participants are asked to judge the direction (leftward or rightward) of a central target arrow presented together with either congruent or incongruent flankers (i.e., distractor arrows pointing in the same or the opposite direction, respectively). This visual display (five arrows including the central target) is preceded by one of four types of cues (represented by a small circle) at different positions on the screen (Figure 1). These cues correspond to a central, double, spatial (valid or invalid), or no-cue condition. Following Fan et al. (2002), the executive component of attention was assessed by contrasting congruent vs. incongruent conditions regardless of cue type. The alerting and orienting components were measured by comparing different cue conditions (i.e., double vs. no-cue conditions for alerting; center vs. valid spatial cue conditions for orienting).

Figure 1. Illustration of the modified ANT design, with distinct (A) cue categories and (B) target categories used to assess the executive, alerting, and orienting components of attention. (C) Example of a typical trial time-course at the beginning of a block (here a musical block), with an empty 3,500 ms interval prior to the first trial. All trials began with a cue presentation (here a spatially invalid cue), followed by the target display (here the incongruent condition). Participants had to indicate the direction of the central arrow of the target (right or left) as fast and as accurately as possible after its presentation (within 1,700 ms max). Emotion, familiarity, and concentration ratings for the preceding musical excerpt were presented after a subset of blocks (i. emotion intensity for joy, tenderness, tension, and sadness; ii. familiarity; and iii. concentration).

Different blocks of the ANT were performed during exposure to the musical pieces from the four emotional categories, as well as during silence to provide a baseline condition. The set of 12 musical excerpts (three pieces per emotional category) was repeated three times, while the silence condition was presented nine times, leading to a total of 45 blocks (nine for each emotional category and for silence) of 45 s each, comprising 10 or 11 trials per block. Cue and target types were presented in a pseudo-randomized order and intermixed within the same block. Auditory conditions also alternated in a pseudo-randomized order between blocks.

The ANT included a total of 480 trials. As illustrated in Figure 1, in each block, the auditory exposure (music or silence) started 3,500 ms before the first visual stimulus appeared. Each trial began with a central fixation cross (duration between 600 and 1,500 ms), followed by one of the four possible cues (100 ms duration, visual angle of 0.91°) and lastly by the target display with five arrows (1,700 ms duration). Each cue type (i.e., no-cue, center, double, and spatial cue) was presented in a quarter of the 480 trials. In the no-cue condition (120 trials), only the fixation cross was displayed. In the center cue condition (120 trials), a single cue circle was displayed at the screen center. In the double cue condition (120 trials), two cue circles were presented simultaneously above and below the fixation cross, corresponding to the possible positions of the target stimuli. In the spatial cue condition (120 trials, regardless of validity), only one cue was presented, indicating either the correct location of the upcoming target (i.e., spatially valid, 60 trials) or the opposite location (i.e., spatially invalid, 60 trials). The cue was followed by an empty 400 ms interval during which only the fixation cross was displayed. The target stimuli consisted of a row of five horizontal arrows, of which the central arrow was the target (visual angles of 5.90° and 1.03°, respectively), randomly presented either above or below the central fixation cross (visual angle of 2.31°). In half of the 480 trials (240 trials), the central target arrowhead pointed in the same direction as the flanker arrows [i.e., Congruent condition (Con)], while in the other half (240 trials), it pointed in the opposite direction [i.e., Incongruent condition (Inc)]. Participants were asked to keep their gaze on the fixation cross and to indicate, as fast and as accurately as possible, the direction of the central arrow after its presentation.
Responses were given by pressing the corresponding key on the keyboard (i.e., right or left index finger for indicating right or left direction, respectively). Button presses and response times were recorded throughout the whole experiment.
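The trial counts given above (480 trials spread over 45 blocks of 10 or 11 trials) can be checked with a short Python sketch that builds a balanced trial list and distributes it across blocks. The factor crossing (congruency and target location balanced within each cue type), the random arrow direction, and the plain shuffle standing in for the unspecified pseudo-randomization are all assumptions; the sketch also ignores which auditory condition each block belongs to.

```python
import random
from collections import Counter

# Trial counts per cue condition, as given in the text (480 trials in total).
CUE_COUNTS = {
    "no_cue": 120,
    "center": 120,
    "double": 120,
    "spatial_valid": 60,
    "spatial_invalid": 60,
}


def build_trials(seed: int = 0) -> list:
    """Sketch of a balanced trial list; the crossing of factors is an assumption."""
    rng = random.Random(seed)
    trials = []
    for cue, n in CUE_COUNTS.items():
        for i in range(n):
            trials.append({
                "cue": cue,
                # Half congruent, half incongruent within each cue type.
                "flankers": "Con" if i % 2 == 0 else "Inc",
                # Target above or below fixation, balanced in pairs of trials.
                "location": "above" if (i // 2) % 2 == 0 else "below",
                # Central arrow direction drawn at random (assumption).
                "direction": rng.choice(["left", "right"]),
            })
    rng.shuffle(trials)  # plain shuffle; the original pseudo-randomization rules are unknown
    return trials


def split_into_blocks(trials: list, n_blocks: int = 45) -> list:
    """Split trials into consecutive blocks whose sizes differ by at most one."""
    base, extra = divmod(len(trials), n_blocks)  # 480 / 45 -> base 10, remainder 30
    sizes = [base + 1] * extra + [base] * (n_blocks - extra)
    blocks, start = [], 0
    for size in sizes:
        blocks.append(trials[start:start + size])
        start += size
    return blocks


trials = build_trials()
blocks = split_into_blocks(trials)
print(Counter(len(b) for b in blocks))  # distribution of block sizes
print(Counter(t["flankers"] for t in trials))
```

Because 480 is not a multiple of 45, an even split necessarily yields 30 blocks of 11 trials and 15 blocks of 10, which is consistent with the "10 or 11 trials per block" stated above.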

This experimental design is similar to our prior study (Fernandez et al., 2019b), with the exception of (i) a central fixation cross that was maintained during the presentation of visual stimuli (Fan et al., 2002), (ii) a slightly smaller visual size of the stimuli, and (iii) an additional silence condition used as a baseline condition.

Emotion, Familiarity, and Concentration Ratings

At the end of a subset of the musical blocks of the ANT (i.e., 12 blocks), participants were asked to rate (i) emotion intensity (i.e., “To what extent does the musical excerpt express joy, tenderness, tension, and sadness?”); (ii) familiarity (i.e., “To what extent were you familiar with this musical piece?”); and (iii) concentration level during the preceding block (i.e., “To what extent were you concentrated on the task?”). Each musical excerpt received four emotion ratings, one for each emotion category, on four distinct scales ranging from 0 (= not at all) to 6 (= extremely). Familiarity and concentration were rated on a numerical scale ranging from 0 (= not at all) to 100 (= extremely), which resulted in one single value per musical excerpt. The rating requests were distributed in a pseudo-randomized order so that each musical excerpt was evaluated once. After a subset of the silence blocks (i.e., three blocks), only concentration level was assessed.

Data Analysis

All statistical tests were chosen according to the normality of the residuals and the homogeneity of variances in our data, using R software version 3.2.4 (R Development Core Team). The direction of significant interactions was tested using post-hoc t-tests, whose p-values were adjusted using Bonferroni corrections.
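As a minimal sketch of the Bonferroni correction mentioned above, each raw p-value is multiplied by the number of comparisons in the family and capped at 1, which we believe matches R's `p.adjust(p, method = "bonferroni")`. The family used below (all pairwise comparisons among the five auditory conditions) is a hypothetical example; the text does not state the size of each family of post-hoc tests.

```python
from itertools import combinations


def bonferroni(p_values: list) -> list:
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical family: all pairwise comparisons among the five auditory conditions.
conditions = ["Joy", "Tenderness", "Tension", "Sadness", "Silence"]
pairs = list(combinations(conditions, 2))
print(len(pairs))  # 10 comparisons

# Invented raw p-values for three comparisons, adjusted within that family of three.
print(bonferroni([0.001, 0.02, 0.5]))
```

The correction is conservative: with ten comparisons, a raw p-value must fall below 0.005 to remain significant at the 0.05 level.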

Emotion, Familiarity, and Concentration Ratings

First, individual emotion ratings (i.e., emotion intensity) were averaged over the three musical excerpts of each emotion category (i.e., joy, tenderness, tension, and sadness). Second, the ability to correctly discriminate musical emotions (i.e., discrimination ratio) was assessed for each emotion category by subtracting the mean intensity scores of the three other categories from the intensity score of the target category, as in previous studies of emotion recognition (e.g., Cristinzio et al., 2010). Familiarity ratings were computed for each emotion category by averaging the scores for the three excerpts associated with this emotion. Finally, concentration levels were computed by averaging the concentration scores obtained in each auditory condition (i.e., joy, tenderness, tension, sadness, and silence) for each participant.
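The two-step computation of the discrimination ratio described above can be written as a short Python sketch. It assumes that, for a given category (e.g., joyful excerpts), the ratio is the mean rating on the matching scale minus the mean of the ratings on the three other scales for the same excerpts; the function names and ratings are hypothetical.

```python
from statistics import mean

EMOTIONS = ("joy", "tenderness", "tension", "sadness")


def average_over_excerpts(ratings_per_excerpt: list) -> dict:
    """Average the 0-6 intensity ratings on each emotion scale over one category's excerpts."""
    return {emo: mean(r[emo] for r in ratings_per_excerpt) for emo in EMOTIONS}


def discrimination_ratio(mean_ratings: dict, target: str) -> float:
    """Rating on the target scale minus the mean rating on the three other scales."""
    others = [mean_ratings[emo] for emo in EMOTIONS if emo != target]
    return mean_ratings[target] - mean(others)


# Invented ratings given by one participant to the three joyful excerpts.
joy_excerpts = [
    {"joy": 5, "tenderness": 2, "tension": 1, "sadness": 0},
    {"joy": 6, "tenderness": 3, "tension": 0, "sadness": 0},
    {"joy": 4, "tenderness": 1, "tension": 2, "sadness": 0},
]
joy_means = average_over_excerpts(joy_excerpts)
print(discrimination_ratio(joy_means, "joy"))
```

A positive ratio indicates that the intended emotion was rated as more intense than the other three; a ratio near zero indicates poor discrimination.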

Three separate 4 (emotion category) × 2 (group) mixed-model repeated-measures ANOVAs were used to examine emotion intensity, discrimination ratio, and familiarity ratings. Concentration ratings were entered into a 5 (auditory condition) × 2 (group) mixed-model repeated-measures ANOVA. Emotion category or auditory condition was entered as a within-subject factor, and group as a between-subjects factor.

Attention Network Task

Both the percentage of correct responses (accuracy, AC) and the mean reaction times (RT) of correct trials were calculated for each participant in each group (Amusics and Controls), for each of the five auditory conditions (Joy, Tenderness, Tension, Sadness, and Silence), each target type (Congruent and Incongruent), and each cue type (Central, Double, Spatial, and No-cue). A trial was considered accurate when participants correctly indicated the direction of the central arrow within the trial time limit (1,700 ms). The three attentional components were analyzed separately according to the specific cue or stimulus combinations (Fan et al., 2002; Fernandez et al., 2020). Executive control was assessed by contrasting incongruent vs. congruent arrow conditions (i.e., Inc and Con) regardless of cue type, while the alerting and orienting components were determined by contrasting distinct cues regardless of target type (i.e., Double vs. No-cue conditions for alerting; Center vs. Valid Spatial conditions for orienting).
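The accuracy and RT summary described above can be sketched as follows. The trial record format (`condition`, `correct`, `rt`) and the function name are assumptions made for illustration; only the 1,700 ms response window and the rule that RTs are averaged over correct trials come from the text.

```python
from collections import defaultdict
from statistics import mean

RT_LIMIT_MS = 1700  # response window stated in the text


def summarize(trials: list) -> dict:
    """Per condition: (accuracy in %, mean RT in ms over correct trials).

    Each trial is a dict with 'condition' (any hashable label, e.g. a tuple of
    auditory condition, target type, and cue type), 'correct' (bool), and
    'rt' (ms, or None when no response was given).
    """
    by_condition = defaultdict(list)
    for trial in trials:
        by_condition[trial["condition"]].append(trial)

    summary = {}
    for condition, items in by_condition.items():
        # A trial counts as accurate only if correct and within the response window.
        hits = [t for t in items
                if t["correct"] and t["rt"] is not None and t["rt"] <= RT_LIMIT_MS]
        accuracy = 100.0 * len(hits) / len(items)
        mean_rt = mean(t["rt"] for t in hits) if hits else float("nan")
        summary[condition] = (accuracy, mean_rt)
    return summary


# Invented trials for a single condition: two hits, one error, one miss.
key = ("Joy", "Con", "Double")
example = [
    {"condition": key, "correct": True, "rt": 600},
    {"condition": key, "correct": True, "rt": 700},
    {"condition": key, "correct": False, "rt": 650},
    {"condition": key, "correct": False, "rt": None},
]
print(summarize(example))
```

Keying conditions by a tuple keeps all three factors available, so the per-component contrasts can be formed afterwards by averaging over the irrelevant factor.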

Because residuals were not normally distributed (precluding parametric tests), AC analyses were performed for each attentional component using paired Wilcoxon rank tests to determine differences between groups and visual conditions (Con vs. Inc; Double vs. No-Cue; Center vs. Valid Spatial). Accuracy rates were close to ceiling in both groups (>95% correct), and no significant effect of musical emotion (p > 0.3, Wilcoxon rank tests) was found for any component or group. Consequently, our main analyses of the influence of music focused on RTs only.
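For readers unfamiliar with the V statistic reported in the Results, the sketch below computes the Wilcoxon signed-rank statistic for paired samples as we understand R's `wilcox.test(..., paired = TRUE)` to report it: the sum of the ranks of the absolute differences that are positive, with zero differences dropped and ties given average ranks. It does not compute p-values (exact or normal approximation), and the example accuracies are invented.

```python
def signed_rank_v(x: list, y: list) -> float:
    """Wilcoxon signed-rank statistic V for paired samples x and y.

    V is the sum of the ranks of |x - y| over pairs with a positive difference;
    zero differences are dropped and tied magnitudes receive average ranks.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        # Extend j over the run of tied absolute differences starting at i.
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return sum(r for r, d in zip(ranks, diffs) if d > 0)


# Invented per-participant accuracies (%) for congruent vs. incongruent trials.
congruent = [99, 100, 98]
incongruent = [97, 99, 98]
print(signed_rank_v(congruent, incongruent))
```

A V close to its maximum, n(n + 1)/2 for n non-zero differences, indicates that the first condition is consistently higher, as for the congruent trials in the Results.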

As RT scores showed normally distributed residuals and equal variances, the effect of music exposure on RT was assessed using three separate ANOVAs, one for each attentional component. Mean RTs were entered into a 2 × 2 × 5 mixed-model repeated-measures ANOVA with trial type (Con and Inc for executive; Double and No-Cue for alerting; Center and Valid Spatial for orienting) and auditory condition (Joy, Tenderness, Tension, Sadness, and Silence) as within-subject factors and group (Amusics and Controls) as a between-subject factor. Because arousal and valence are considered two essential and independent dimensions of all emotion types (Russell, 2003) and thus also describe the functional organization of music-induced emotions (Trost et al., 2012), emotional effects on RTs were further assessed by sorting the four emotion categories used in our study according to their arousal and valence levels. This was achieved using three separate 2 × 2 × 2 × 2 mixed-model repeated-measures ANOVAs (one for each attentional component) with trial type (Con and Inc for executive; Double and No-Cue for alerting; Center and Valid Spatial for orienting), valence (high and low), and arousal (high and low) as within-subject factors, plus group as a between-subject factor.
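Recoding the four categories into arousal and valence levels, as described above, amounts to averaging pairs of categories. A minimal Python sketch, assuming one participant's mean RTs for a given trial type are stored per category; the silence condition is excluded because it has no arousal or valence level, and the RT values are invented.

```python
from statistics import mean

# Arousal and valence levels of each category, as described in the Auditory Material section.
DIMENSIONS = {
    "Joy": ("high", "high"),  # (arousal, valence)
    "Tension": ("high", "low"),
    "Tenderness": ("low", "high"),
    "Sadness": ("low", "low"),
}


def dimension_means(rt_by_emotion: dict) -> dict:
    """Marginal mean RTs for each arousal and valence level; silence is skipped."""
    out = {}
    for index, name in enumerate(("arousal", "valence")):
        for level in ("high", "low"):
            rts = [rt for emo, rt in rt_by_emotion.items()
                   if emo in DIMENSIONS and DIMENSIONS[emo][index] == level]
            out[(name, level)] = mean(rts)
    return out


rt = {"Joy": 740, "Tension": 760, "Tenderness": 755, "Sadness": 765, "Silence": 770}
print(dimension_means(rt))
```

Because each arousal × valence cell contains exactly one category in this design, the 2 × 2 ANOVA uses the same four condition means as the categorical analysis, only regrouped into two crossed factors.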

The effect of age was determined by entering the mean RTs into three additional 2 × 2 × 5 mixed-model repeated-measures ANCOVAs (one for each attentional component), with the corresponding trial types (Con and Inc for executive; Double and No-Cue for alerting; Center and Valid Spatial for orienting) and auditory conditions (Joy, Tenderness, Tension, Sadness, and Silence) as within-subject factors, group as a between-subject factor, and age as a covariate.

Finally, Pearson correlation analyses were performed to assess any relationship between musical ability scores (MBEA scale, melodic composite, or pitch-change detection scores) and indices of the three attentional components, namely the executive cost (RT(Inc) − RT(Con)), the alerting efficiency (RT(No Cue) − RT(Double Cue)), and the orienting efficiency (RT(Center Cue) − RT(Valid Spatial Cue)), calculated following previous work (e.g., Jiang et al., 2011).
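The three attentional indices and the correlation described above reduce to simple differences and a Pearson coefficient. In this sketch, the condition keys and function names are hypothetical; the congruent and incongruent RTs in the usage example reuse the group means reported in the Results, while the other values and the ability scores are invented. With n participants, r is reported with n − 2 degrees of freedom, e.g. r(13) for 15 amusics.

```python
from math import sqrt
from statistics import mean


def attention_indices(rt: dict) -> dict:
    """Network scores (ms) from one participant's mean correct RTs per condition."""
    return {
        "executive_cost": rt["Inc"] - rt["Con"],
        "alerting": rt["NoCue"] - rt["Double"],
        "orienting": rt["Center"] - rt["SpatialValid"],
    }


def pearson_r(x: list, y: list) -> float:
    """Sample Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


example_rt = {"Con": 682, "Inc": 833, "NoCue": 790, "Double": 750,
              "Center": 760, "SpatialValid": 720}
print(attention_indices(example_rt))

# Invented MBEA scale scores and executive costs for five participants.
scale_scores = [18, 20, 21, 19, 17]
executive_costs = [140, 160, 150, 155, 135]
print(round(pearson_r(scale_scores, executive_costs), 2))
```

Positive indices mean that the cue or congruency manipulation helped: a larger executive cost reflects stronger interference from incongruent flankers, while larger alerting and orienting scores reflect greater benefit from the cues.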

Of note, the sample size of the current study was determined by a power analysis using G*Power (version 3.1.9.7, Heinrich Heine University). This analysis indicated a probability of ≥ 90% to replicate the experimental differences on our attentional task with n ≥ 15, based on the effect size (d = 1.83) observed in a previous study using the same paradigm in both younger and older individuals (Fernandez et al., 2019b).
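As a rough plausibility check of the power analysis above, and not a reproduction of the G*Power computation (whose exact test family is not stated), the power of a two-sided, two-sample t-test can be approximated with the normal distribution. The two-sample design with n participants per group and α = 0.05 are assumptions; G*Power uses the exact noncentral t distribution, so its values will differ somewhat for small samples.

```python
from math import sqrt
from statistics import NormalDist


def approx_power_two_sample(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Normal approximation to the power of a two-sided, two-sample t-test."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = d * sqrt(n_per_group / 2)  # expected test statistic under the alternative
    # Probability of the statistic landing beyond either critical bound.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


print(round(approx_power_two_sample(1.83, 15), 3))
```

With an effect size as large as d = 1.83, even this approximation places power well above the 90% threshold at n = 15, consistent with the conclusion stated above.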

Results

Attention Network Task Results

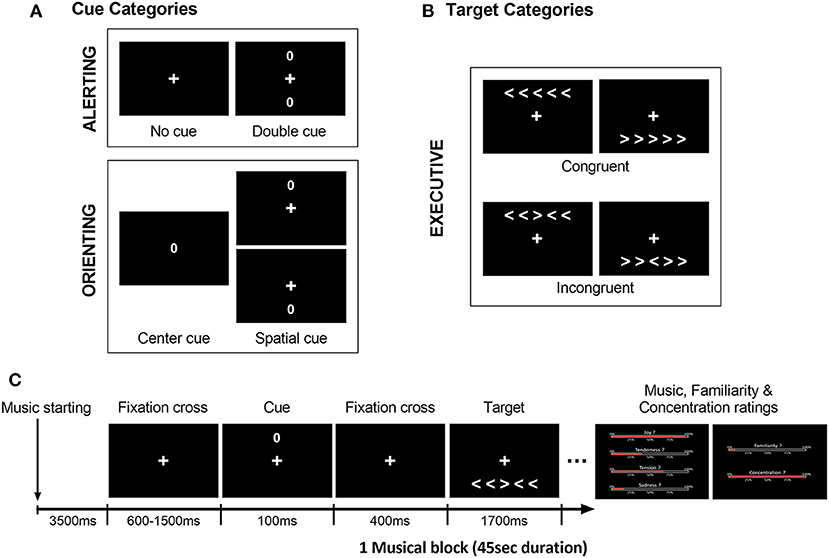

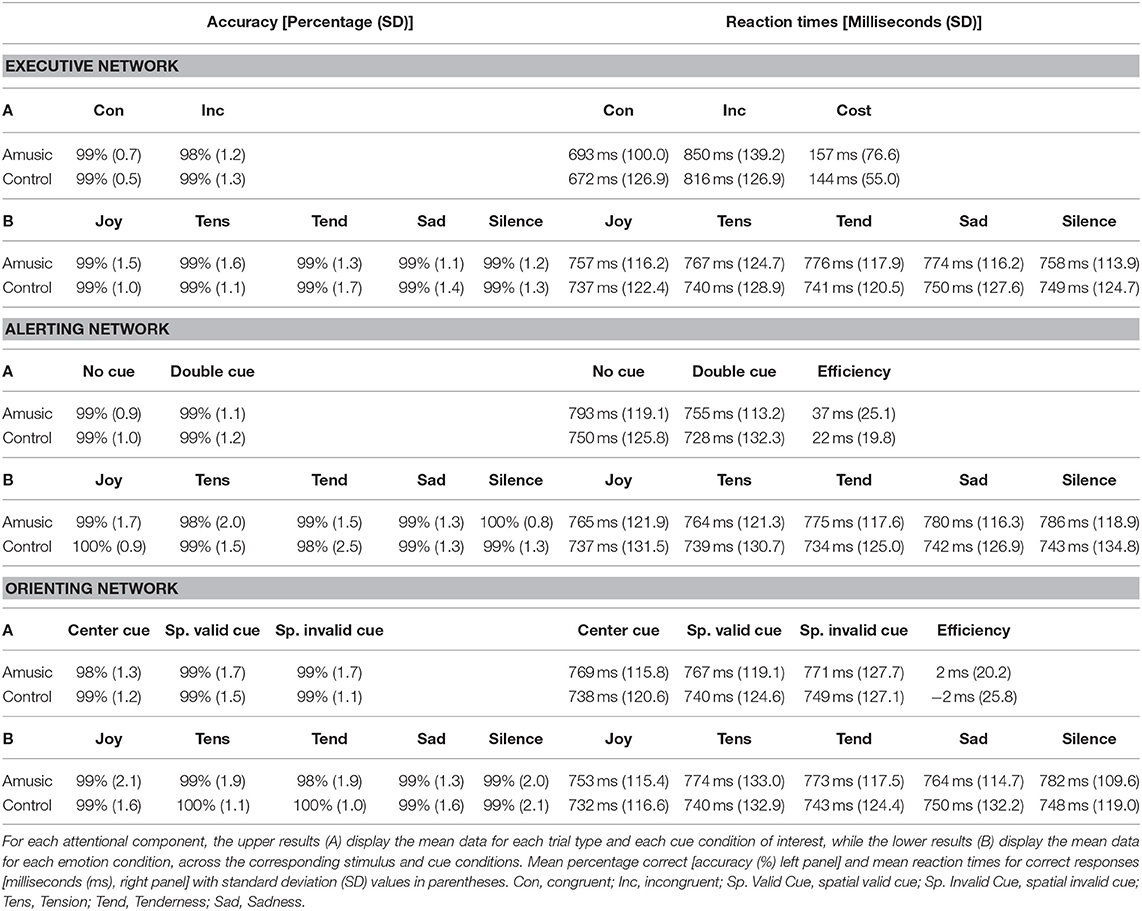

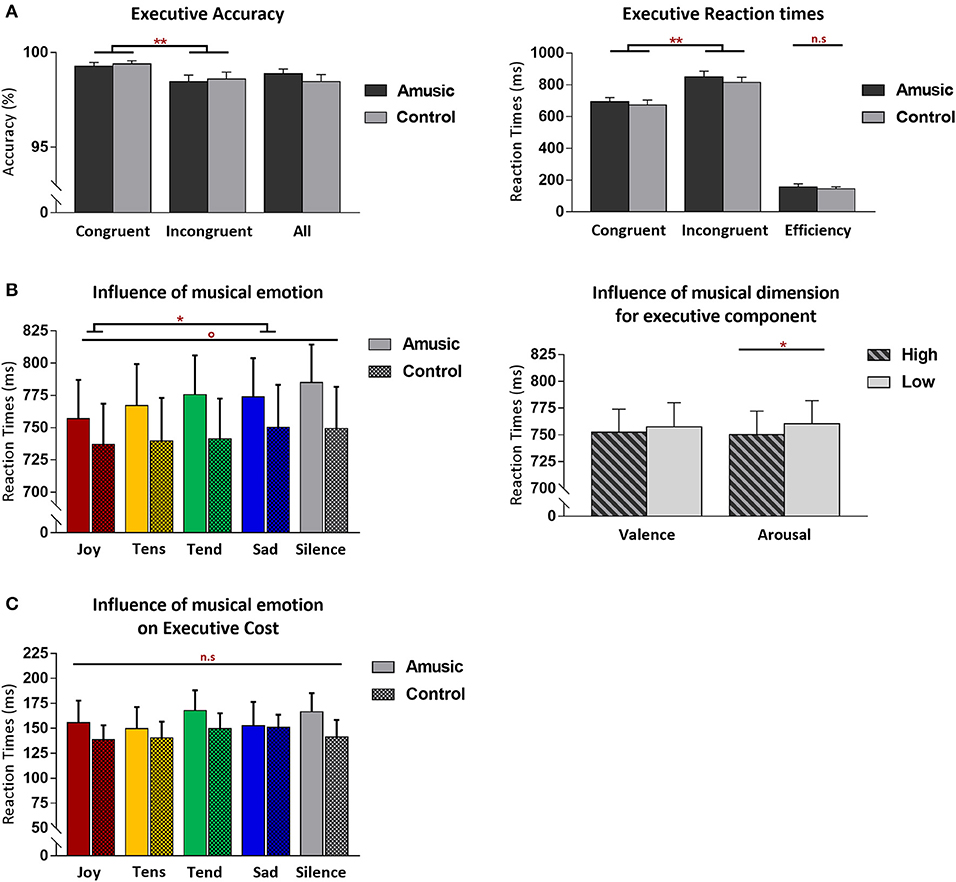

AC (in percent, %) and RT results (in milliseconds, ms) for each attentional component measured in the ANT are presented in Table 3. Additionally, Figure 2 shows more detailed results for the executive control component. Because AC showed no significant effect of music on performance, our main analysis comparing emotions and groups focused on RT data.

Table 3. Behavioral scores in the Attention Network Task (ANT) for measures assessing executive control, alerting, and orienting components, for both the amusic and control groups.

Figure 2. Behavioral results from the Attention Network Test (ANT) for the executive control component of attention, shown for amusic and control participants. (A) Mean accuracy (%) (left) and RT (in milliseconds, ms) (right) for congruent and incongruent target conditions. Mean accuracy (%) pooled across both target conditions, as well as the interference cost (ms) (mean RT Inc − mean RT Con), are also presented. (B) Mean RT (ms) pooled across congruence conditions and groups, as a function of the discrete emotion category of music plus a silence condition (left), or as a function of the valence and arousal dimensions of music (right). (C) Executive cost (ms) as a function of the emotion category of music (plus silence), presented without group distinction. Graphs show standard errors of the mean (SEM) and p-values (* or °) with the following meaning: *p < 0.01; **p < 0.001; °p < 0.05 without Bonferroni correction. The abbreviation n.s. indicates non-significant results.

Executive Control Component

Executive control performance was assessed by comparing trials in which targets appeared with congruent vs. incongruent flankers. As expected, participants were more accurate (paired Wilcoxon test, V = 224.5; p < 0.001) and faster [F(1, 28) = 156.50; p < 0.001] for congruent trials (accuracy: M = 99%, SD = 0.6; RT: M = 682 ms, SD = 112.6) than for incongruent trials (accuracy: M = 98%, SD = 1.2; RT: M = 833 ms, SD = 130.3). Importantly, amusic and control participants did not differ in overall executive control performance. Both groups showed similar accuracy scores (V = 34; p = 0.71), and RT data disclosed neither a main group effect [F(1, 28) = 0.41; p = 0.52] nor a group by trial type interaction [F(1, 28) = 0.35; p = 0.55].

Most importantly, the influence of music on executive control performance was assessed by comparing RTs across the different auditory background conditions. A first ANOVA on RTs across all auditory conditions (four emotion types and silence) and trial types (Con vs. Inc) revealed a main effect of auditory condition [F(4, 112) = 3.20; p = 0.01], but no group effect [F(4, 112) = 0.59; p = 0.66] and no group by trial type interaction [F(4, 112) = 0.63; p = 0.63]. Across groups, joyful music (M = 747 ms; SD = 116.9) yielded faster visual target detection than sad music (M = 762 ms; SD = 120.2) [t(29) = −4.06; p = 0.003]. An additional ANOVA treating music valence and arousal as separate factors revealed a main effect of arousal [F(1, 28) = 8.42; p = 0.007], with faster RTs during high-arousing (M = 750 ms; SD = 120.6) than during low-arousing music (M = 760 ms; SD = 118.2) in amusic and control participants taken together. There was no group by arousal interaction [F(1, 28) = 0.53; p = 0.46], no main effect of valence [F(1, 28) = 2.08; p = 0.16], and no group by valence interaction [F(1, 28) = 0.06; p = 0.80]. Neither the arousal by valence interaction [F(1, 28) = 0.25; p = 0.61] nor the group by arousal by valence interaction [F(1, 28) = 2.61; p = 0.27] was significant.
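
The valence-by-arousal ANOVA regroups the four emotion categories into a 2 × 2 design. The Discussion describes joy as high-arousal/high-valence and sadness as low-arousal/low-valence; placing tension at high arousal/low valence and tenderness at low arousal/high valence is our assumption, following the usual circumplex reading. Under that assumption, one participant's marginal means for each factor could be computed as below (the RT values are invented for illustration and are not data from this study):

```python
from statistics import mean

# Assumed mapping of emotion categories onto (arousal, valence) levels.
EMOTION_FACTORS = {
    "joy": ("high", "high"),
    "tension": ("high", "low"),
    "tenderness": ("low", "high"),
    "sadness": ("low", "low"),
}

def marginal_means(rt_by_emotion):
    """Per-level mean RTs (ms) for the arousal and valence factors.

    rt_by_emotion maps each emotion category to one participant's mean RT.
    Silence is excluded because it has no arousal/valence coordinates.
    """
    arousal = {"high": [], "low": []}
    valence = {"high": [], "low": []}
    for emotion, rt in rt_by_emotion.items():
        a_level, v_level = EMOTION_FACTORS[emotion]
        arousal[a_level].append(rt)
        valence[v_level].append(rt)
    return (
        {level: mean(rts) for level, rts in arousal.items()},
        {level: mean(rts) for level, rts in valence.items()},
    )

# Hypothetical participant, values invented for illustration only
arousal_means, valence_means = marginal_means(
    {"joy": 740.0, "tension": 756.0, "tenderness": 758.0, "sadness": 766.0}
)
```

In the full analysis these per-participant marginal means would enter a mixed ANOVA with group as a between-subject factor.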

Finally, the covariate analysis revealed a significant main effect of age, with RTs lengthening with age regardless of trial type and auditory background [F(1, 26) = 38.64; p < 0.001]. This general age-related slowing was similar in amusic and control participants [F(1, 26) = 2.63; p = 0.11]. Correlation analyses revealed no association between the executive cost (RTInc − RTCon) and musical abilities in amusics, as assessed with the MBEA scale [r(13) = 0.37, p = 0.17], the melodic composite [r(13) = 0.09, p = 0.74], and pitch-change detection scores [r(13) = −0.07, p = 0.78]. No such association was found in control participants either [MBEA scale: r(12) = −0.33, p = 0.24; melodic composite: r(12) = −0.15, p = 0.60; pitch-change detection score: r(12) = 0.42, p = 0.13].
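
The executive cost above, like the alerting and orienting scores examined in the following subsections, is a difference between condition-mean RTs. The sketch below computes all three for one participant. The trial fields (`flanker`, `cue`, `rt`, `correct`) and cue labels are hypothetical, and restricting RT means to correct trials is a common ANT convention that this section does not explicitly confirm.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Trial:
    flanker: str   # "congruent" or "incongruent"
    cue: str       # "none", "double", "center", or "spatial_valid" (hypothetical labels)
    rt: float      # response time in ms
    correct: bool

def mean_rt(trials, **conditions):
    """Mean RT of correct trials whose attributes match all given conditions."""
    rts = [t.rt for t in trials
           if t.correct and all(getattr(t, k) == v for k, v in conditions.items())]
    return mean(rts)

def network_scores(trials):
    """Executive, alerting, and orienting scores (ms) for one participant."""
    return {
        # Executive cost: RT_Inc - RT_Con (larger = more flanker interference)
        "executive": mean_rt(trials, flanker="incongruent") - mean_rt(trials, flanker="congruent"),
        # Alerting efficiency: RT_NoCue - RT_DoubleCue
        "alerting": mean_rt(trials, cue="none") - mean_rt(trials, cue="double"),
        # Orienting efficiency: RT_CenterCue - RT_SpatialValidCue
        "orienting": mean_rt(trials, cue="center") - mean_rt(trials, cue="spatial_valid"),
    }
```

Invalid spatial cues, if labelled differently, are simply ignored by these three contrasts.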

Alerting Component

The alerting component of attention was assessed by comparing trials in which targets were preceded by double (non-informative) cues vs. no cue. AC did not differ significantly between the two cue types (paired Wilcoxon test, V = 106; p = 0.51) or between the two groups (V = 115; p = 0.93). For RTs, there was a main effect of cue type [F(1, 28) = 51.49; p < 0.001], with slower RTs on no-cue trials (M = 771 ms; SD = 122.3) than on double-cue trials (M = 742 ms; SD = 121.8). There was no group effect [F(1, 28) = 0.61; p = 0.43] and no group by cue type interaction [F(1, 28) = 3.46; p = 0.07].

Again, music effects were examined with ANOVAs on RTs measured in the different auditory exposure conditions. Auditory background had no significant influence on alerting in either group (p > 0.04), and there was no group by auditory background interaction involving emotion category [F(1, 28) = 0.67; p = 0.61], valence [F(1, 28) = 0.09; p = 0.76], or arousal [F(1, 28) = 2.70; p = 0.11].

Finally, the age covariate also revealed a main effect on RTs across the different alerting cue types [F(1, 26) = 28.99; p < 0.001], with no group difference [F(1, 26) = 2.19; p = 0.15]. Neither group showed a correlation between alerting efficiency (RTNo Cue − RTDouble Cue) and musical abilities, namely the MBEA scale [amusic: r(13) = 0.02, p = 0.92; control: r(12) = 0.11, p = 0.70], the melodic composite [amusic: r(13) = 0.18, p = 0.51; control: r(12) = −0.59, p = 0.02, which did not survive correction for multiple comparisons], or pitch-change detection scores [amusic: r(13) = −0.27, p = 0.31; control: r(12) = −0.12, p = 0.65].
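
The correlations are reported as r(df), where df = n − 2 for a Pearson correlation (so r(13) implies 15 paired observations). As an illustration rather than the authors' analysis code, the stdlib sketch below converts r to t = r·√(df/(1 − r²)) and evaluates the two-tailed p-value of Student's t exactly for integer df, using the standard closed-form trigonometric series. In practice a library routine such as `scipy.stats.pearsonr`, which returns r together with its two-sided p-value, would normally be used.

```python
import math

def t_two_tailed_p(t, df):
    """Exact two-tailed p-value of Student's t for integer df >= 1."""
    theta = math.atan(abs(t) / math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    if df % 2 == 1:
        # Odd df: P(|T| < t) = (2/pi) * (theta + s * [c + (2/3)c^3 + ...])
        inner = 0.0
        if df > 1:
            term = inner = c
            for j in range(1, (df - 3) // 2 + 1):
                term *= (2 * j) / (2 * j + 1) * c * c
                inner += term
        prob_within = (2 / math.pi) * (theta + s * inner)
    else:
        # Even df: P(|T| < t) = s * [1 + (1/2)c^2 + (1*3)/(2*4)c^4 + ...]
        term = inner = 1.0
        for k in range(2, df - 1, 2):
            term *= (k - 1) / k * c * c
            inner += term
        prob_within = s * inner
    # The two-tailed p-value is the complement of P(|T| < |t|)
    return max(0.0, 1.0 - prob_within)

def pearson_p(r, n):
    """Two-tailed p-value for a Pearson r computed from n paired observations."""
    df = n - 2
    t = r * math.sqrt(df / (1.0 - r * r))
    return t_two_tailed_p(t, df)
```

Because the coefficients above are reported to two decimals, recomputing p from a rounded r (e.g., `pearson_p(-0.59, 14)`) need not reproduce the reported p-value exactly.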

Orienting Component

Finally, orienting effects were probed by comparing performance on trials where targets were preceded by valid spatial cues vs. center cues. AC showed no difference between the two cue types (paired Wilcoxon test, V = 67; p = 0.16) or between the two groups (V = 75; p = 0.12). Likewise, RTs showed no effect of cue type [F(1, 28) = 0.01; p = 0.90], no effect of group [F(1, 28) = 0.42; p = 0.52], and no group by cue type interaction [F(1, 28) = 0.38; p = 0.54].

The effects of auditory exposure on RTs were not significant for emotion category [F(4, 112) = 0.61; p = 0.65], valence [F(4, 112) = 0.47; p = 0.49], or arousal [F(4, 112) = 0.002; p = 0.96], with amusic and control participants taken together. Although there was a significant interaction between emotion category and cue type [F(4, 112) = 2.59; p = 0.04], none of the pairwise post hoc comparisons survived correction for multiple comparisons. The three-way interaction of emotion category, cue type, and group was not significant [F(4, 112) = 0.95; p = 0.43].
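
Pairwise post hoc comparisons multiply the number of tests. A minimal sketch of the Bonferroni adjustment, assuming that all pairs of conditions form the correction family (this section does not state the exact family): each raw p-value is multiplied by the number of comparisons m and capped at 1. Four emotion categories give C(4, 2) = 6 pairs; adding silence gives C(5, 2) = 10. The function name is hypothetical.

```python
from itertools import combinations

def bonferroni_pairwise(conditions, raw_p):
    """Bonferroni-adjust p-values for all pairwise comparisons of conditions.

    raw_p maps frozenset({a, b}) -> uncorrected p-value for that pair.
    Returns a dict with the same keys and adjusted p-values (capped at 1).
    """
    pairs = [frozenset(pair) for pair in combinations(conditions, 2)]
    m = len(pairs)  # size of the correction family
    return {pair: min(1.0, raw_p[pair] * m) for pair in pairs}
```

A comparison is then declared significant only if its adjusted p-value remains below the nominal alpha (e.g., 0.05).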

Finally, the age covariate again showed a main effect on RTs [F(1, 26) = 41.56; p < 0.001] across the two orienting conditions, with no group difference [F(1, 26) = 1.99; p = 0.16]. No correlation was found between orienting efficiency (RTCenter Cue − RTSpatial Valid Cue) and musical abilities, either for amusics [MBEA scale: r(13) = −0.08, p = 0.76; melodic composite: r(13) = −0.12, p = 0.64; pitch-change detection score: r(13) = 0.15, p = 0.58] or for control participants [MBEA scale: r(12) = −0.25, p = 0.38; melodic composite: r(12) = −0.30, p = 0.28; pitch-change detection score: r(12) = 0.25, p = 0.38].

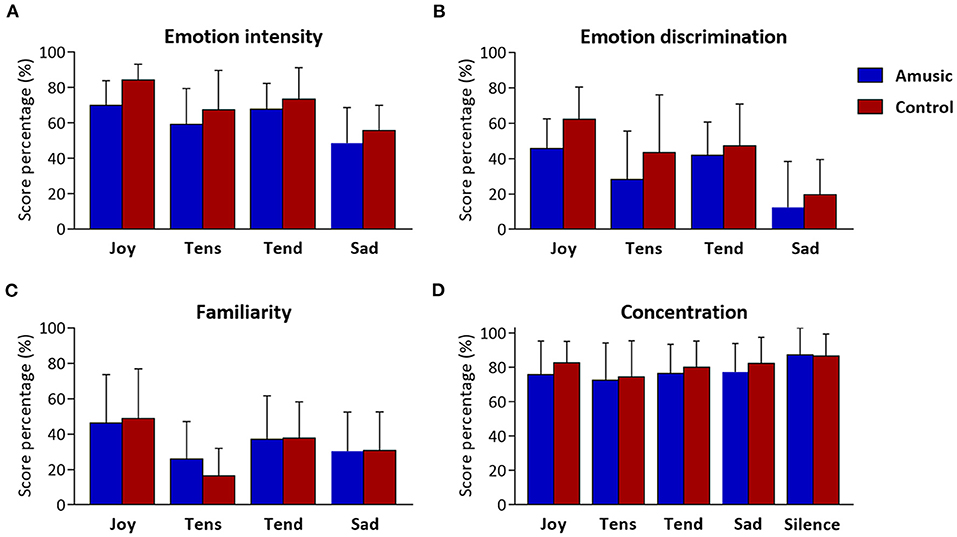

Emotion, Familiarity, and Concentration Ratings

Ratings for emotion intensity, emotion discrimination, familiarity, and concentration are presented in Table 4 and Figure 3. Amusics generally judged the emotions expressed by the music as less intense than controls did [F(1, 28) = 4.48; p = 0.04]. In both groups, a main effect of emotion category [F(3, 84) = 15.92; p < 0.001] showed that joyful, tense, and tender pieces were judged as more intense than sad pieces. No group by emotion interaction was found [F(3, 84) = 0.46; p = 0.71]. Emotion discrimination (i.e., the rating given to the expressed emotion minus the average rating given to the other three emotions) showed a main effect of emotion category [F(3, 84) = 21.33; p < 0.001], with a lower discrimination ratio for sad music (M = 16%; SD = 23.04) than for joyful [t(29) = −7.55; p < 0.001; M = 54%; SD = 19.29], tense [t(29) = −3.45; p = 0.006; M = 36%; SD = 30.51], and tender music [t(29) = −6.23; p < 0.001; M = 45%; SD = 21.16]. There was no group effect [F(1, 28) = 3.25; p = 0.08] and no group by emotion interaction [F(3, 84) = 0.64; p = 0.58]. Hence, emotion categorization was comparable between amusics and controls.
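
We read the emotion discrimination measure defined above as a difference: the rating for the intended emotion minus the mean rating given to the other three emotion scales. A minimal sketch, assuming each excerpt was rated in percent (0 to 100) on all four emotion scales; the dictionary layout and function name are hypothetical:

```python
from statistics import mean

EMOTIONS = ("joy", "tension", "tenderness", "sadness")

def discrimination_ratio(ratings, intended):
    """Rating of the intended emotion minus the mean rating of the other three.

    ratings maps each emotion scale to a rating in percent for one excerpt.
    Positive values mean the intended emotion was rated above the alternatives.
    """
    others = [ratings[e] for e in EMOTIONS if e != intended]
    return ratings[intended] - mean(others)
```

A low value for sad excerpts would arise, for example, when sad music also receives substantial tenderness ratings, in line with the mixed-emotion account discussed later.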

Figure 3. Music and concentration ratings obtained at the end of musical blocks. Scores resulting from (A) emotion intensity, (B) emotion discrimination (i.e., discrimination ratio), and (C) familiarity are presented as percentages (%) for each of the four emotion categories (i.e., Joy, Tension, Tenderness, and Sadness), for amusic and control participants separately. Concentration scores (D) are presented (%) for each of the five auditory conditions (including silence) for the two groups separately.

Familiarity ratings were low for all musical pieces (< 50%), but joyful music was generally rated as more familiar than the other emotion categories [F(3, 84) = 19.90; p < 0.001]. Importantly, there was no group effect [F(1, 28) = 0.04; p = 0.83] and no group by emotion interaction [F(3, 84) = 1.20; p = 0.31].

Finally, as expected, subjective concentration ratings revealed a main effect of auditory condition [F(4, 112) = 5.70; p < 0.001], showing higher concentration ratings during silence than during music exposure conditions [t(29) > 2.78; p < 0.03], but there was no significant difference between groups [F(4, 112) = 0.44; p = 0.51], nor an auditory condition by group interaction [F(4, 112) = 0.45; p = 0.76].

Discussion

The present study investigated to what extent pitch deficits characterizing the most common form of congenital amusia could influence indirect effects of music exposure on attentional performance. Our results show that major visuo-spatial attention components are preserved in individuals with congenital amusia. Furthermore, and more critically, amusics, like the general population, remain sensitive to pleasant and arousing music while performing a visuo-spatial attentional task.

Impact of Amusia on Emotion Processing

Although the amusic participants rated the emotions conveyed by music as less intense, they categorized the emotions expressed by the different pieces with accuracy similar to that of control participants. This result is in line with the notion that discrimination and intensity perception might be dissociated in emotion processing (Hirel et al., 2014). Our finding is also consistent with previous work highlighting relatively spared music-related emotional judgements in congenital amusia (Ayotte et al., 2002; Gosselin et al., 2015). However, the results diverge from other work in which poorer emotion recognition was found in amusics than in controls for both speech (Thompson et al., 2012; Lolli et al., 2015; Lima et al., 2016) and music (Lévêque et al., 2018). In the latter study, the authors suggested that such a discrepancy could have resulted from their use of orchestral music (Lévêque et al., 2018). Specifically, orchestral music might be more sensitive to subtle amusic deficits in an emotion discrimination task than piano excerpts like those used in our previous studies (Ayotte et al., 2002; Gosselin et al., 2015). However, in this study, we used orchestral music as well as piano and string music excerpts. Interestingly, more recent work reported deficits in explicit emotion processing in amusics for both music and speech when using a forced-choice method (i.e., choosing a specific emotion among given categories) (Thompson et al., 2012; Lévêque et al., 2018; Pralus et al., 2019), whereas relatively comparable implicit processing of musical emotions and emotional prosody was observed with indirect methods (e.g., intensity ratings of emotions) (Tillmann et al., 2016; Lévêque et al., 2018; Pralus et al., 2019).
Here we used free rating scales for both intensity and discrimination measures, and these judgments may have engaged participants' internal representations of musical emotions differently from explicit emotion categorization tasks with forced-choice labels that require conscious retrieval (Cleeremans and Jiménez, 2002). Whereas discrimination judgments may rely on more strategic access to categorical knowledge based on discrete cues, intensity judgments may involve other perceptual abilities operating on more continuous sensory dimensions. Future work should help further disentangle the complex relationships between musical emotion recognition, auditory complexity, and explicit vs. implicit investigation methods in the amusic population.

We also note that all participants had more difficulty correctly categorizing sadness than other emotions, and were most successful at categorizing joy, as found previously (Gosselin et al., 2015; Lévêque et al., 2018). In everyday life, sadness is typically associated with unpleasant feelings. In music, however, it is often associated either with pleasantness (Kawakami et al., 2013) or with complex emotions, namely simultaneous positive and negative feelings (Juslin et al., 2014; Sachs et al., 2015), as is similarly reported for nostalgia (Barrett et al., 2010; Trost et al., 2012; Schindler et al., 2017). Such mixed emotions could contribute to the lower discrimination ratios and lower intensity scores observed here for sad music. Most importantly, all the differential effects of musical emotion on the ratings were similar in our amusic and control participants. The results therefore indicate that amusics have a fairly typical capacity to respond to various musical emotions despite a deficit in pitch processing. This is consistent with a possible dissociation between emotional and perceptual components in the processing of music (Gosselin et al., 2015).

Impact of Amusia on Attentional Processing

Overall, behavioral performance in the attentional task fully accorded with the literature and showed that the ANT paradigm was effective in assessing visual attention. All participants were sensitive to conflicting distractor information when detecting a visual target, with more errors and longer RTs on incongruent than on congruent trials (Casey et al., 2000; Fan et al., 2005; Fernandez et al., 2019b). They were also faster on trials in which they were temporally warned about the imminent target (i.e., double cue) than on no-cue trials, showing benefits of phasic alertness (Fan et al., 2002; Finucane et al., 2010; McConnell and Shore, 2011). Unexpectedly, behavioral results for the orienting component of the ANT showed no modulation of accuracy or RTs (i.e., for center compared to spatially valid cues), indicating no significant benefit of shifting the attentional focus toward the target location before its presentation, contrary to what is typically reported in the literature (Fan et al., 2005; Finucane et al., 2010; McConnell and Shore, 2011). This lack of an orienting effect may be due to an inadequate interval between cue and target displays, or to insufficient spatial preparation following the presentation of the visual cue [perhaps due to the 50% validity contingency used here, compared with the 100% validity contingency in Fan's original ANT (Fan et al., 2002)]. Nevertheless, other well-established effects were replicated and accompanied by a significant age-related slowing of attentional performance, as consistently reported in the literature, particularly for executive control (Zhu et al., 2010; Fernandez et al., 2019a,b). Overall, therefore, our findings converge with previous work on attention and validate our modified ANT, supporting its sensitivity for assessing congenital amusic individuals in the presence or absence of music exposure.

Critically, our findings for the three distinct attentional components showed comparable accuracy and RT performance between amusic and control participants across all conditions. These findings were confirmed by further correlation analyses revealing that the severity of musical deficits, measured with the MBEA scale, melodic composite, and pitch-change detection, did not predict performance in any of the three attentional components. This spared performance in congenital amusia stands in sharp contrast with the executive control deficits documented in several populations, including the elderly (Zhu et al., 2010; Fernandez et al., 2019a) and individuals with developmental attentional disorders (e.g., ADHD) (Johnson et al., 2008; Mogg et al., 2015), who show abnormal distractor susceptibility (i.e., more errors/longer RTs on incongruent trials) in several paradigms, including the ANT version with arrow flankers used here. Similarly, an attenuation of alerting states has been reported in ADHD (Johnson et al., 2008) and in patients with stroke (Spaccavento et al., 2019) relative to the control population, but it was not seen in the amusic group. Finally, the age-related slowing in visual attention was similar in the amusic and control participants, who were relatively old but age-matched, suggesting that musical deficits have no distinctive impact on attentional performance with increasing age. Thus, the present study highlights preserved attention processing in congenital amusia, in keeping with the notion that this impairment is a selective musical disorder affecting pitch processing (Peretz et al., 2002; Peretz, 2016; Peretz and Vuvan, 2017).

Interestingly, the finding of normal visuo-spatial attention in congenital amusia does not support earlier claims of an intimate link between musical and visuo-spatial attentional processing abilities (Douglas and Bilkey, 2007), based on the assumption that sound frequency representations may be intertwined with spatial codes (e.g., lower pitch is usually represented lower in space than higher pitch) (Rusconi et al., 2006). Rather, the present study is more in line with prior studies showing preserved mental rotation abilities in amusics with low pitch or contour MBEA scores (Tillmann et al., 2010; Williamson et al., 2011). We found no attenuation of visuo-spatial attentional processing indices in amusic individuals, even in the most musically impaired: none of the three distinct scores measuring musical deficits was linked to attentional performance. These findings suggest that the severity of musical deficits does not affect visuo-spatial attention, and they further question the notion of a continuum between visuo-spatial cognition and musical abilities (Douglas and Bilkey, 2007). However, we cannot rule out the possibility that more complex aspects of object representation or manipulation in space interact with musical skills, but any such interaction seems unrelated to the attentional processes probed here, again reinforcing the view that congenital amusia occurs independently of any other cognitive deficits.

Effects of Emotional Music on Attention in Amusia

Our main goal with the present work was to assess whether the influence of music exposure on attentional processes has a similar effect on the congenital amusia population as compared to the general population. In accordance with previous work (Fernandez et al., 2020), we found a reliable modulation of processing speed, regardless of visual flanker congruency, when participants were exposed to joyful (i.e., high-arousing and high-valence) compared to sad music (i.e., low-arousing and low-valence), and this effect was similar in amusics and controls. This attentional enhancement was likely mainly driven by the arousal dimension of music since valence did not appear to modulate performance. Such effects of arousal might result from a greater engagement of the attentional control network in frontal and parietal cortices, as observed in a recent fMRI study using a similar paradigm (Fernandez et al., 2019b).

Unlike in previous work (Fernandez et al., 2019b), however, we did not find significantly faster performance during joyful music exposure than during silence, possibly because of the small effect size of this modulation in the current sample. Nevertheless, the pattern of absolute RTs (see Figure 2B) fully accords with earlier observations: joyful music produced the fastest responses, while sad music and silence produced the slowest. This suggests that such differences reflect a facilitation of stimulus processing by joyful (and, more generally, high-arousing) music rather than a slowing or distracting effect of (negative) emotional music relative to silence (Trost et al., 2014).

Overall, the normal influence of music exposure on executive attentional control in congenital amusia suggests that these individuals still experience the indirect effects of music despite their musical deficits. This finding is consistent with growing evidence that the amusic brain can track subtle musical (pitch) variations without awareness (e.g., Peretz et al., 2009; Tillmann et al., 2012; Zendel et al., 2015). Taken together, our results highlight the powerful and pervasive capacity of music and musical emotions to influence the mind and behavior through relatively automatic and unconscious pathways, including high-level cognitive functions associated with executive control.

A few possible limitations of the current study should be acknowledged. First, our main results and conclusions concerning the preserved attentional effects of musical emotions rely on negative findings, i.e., the absence of significant differences between congenital amusics and controls in the critical behavioral effects of interest. We consider it unlikely that this reflects insufficient power, given that our sample size was chosen on the basis of previous work (Fernandez et al., 2019b). In addition, although the ANT has been successfully employed in several studies to assess major attentional components across various populations (Wang et al., 2005; Fernandez-Duque and Black, 2006; Mahoney et al., 2010; Park et al., 2019), it may have failed to capture another attentional dimension that is possibly affected by amusia. Nevertheless, the current finding of comparable visuo-spatial attentional performance in this paradigm, despite the congenital impairment associated with amusia, helps to further refine our understanding of this disorder. Further investigations should confirm intact attentional performance in amusics across a wider range of tasks, for instance, by comparing their performance with that of populations known to present attentional deficits. Finally, another general limitation of our work might be the relatively small sample of individuals with congenital amusia included in the study. As this condition has a low prevalence (1.5% to 4%) in the general population, we deliberately chose strict inclusion criteria (i.e., scores below cut-off on two scale tests, in accordance with the latest normative data) to ensure that only individuals presenting clear and substantial musical deficits were included (Peretz and Vuvan, 2017; Vuvan et al., 2018), but this strictness inherently limited our sample size.
We also acknowledge a potential lack of statistical power for some task conditions, particularly the alerting and orienting components, whose assessment relies on only a subset (half) of the total trials (see Fan et al., 2002), unlike the executive control component. This lack of power might account for the failure to demonstrate a main effect of emotion on these components, contrary to our previous study (Fernandez et al., 2019b). In addition, our manipulation of the orienting component (50% validity contingency) might have produced insufficient spatial preparation and allowed participants to ignore the spatial cues, which could account for the absence of a significant validity effect during attentional orienting, unlike in the original ANT paradigm (Fan et al., 2002).

In any case, to our knowledge, this study is the first to assess selective attention abilities, as well as the influence of music exposure on attentional processes, in congenital amusia. We were able to confirm normal emotional processing and cognitive control functions in amusics, notably by demonstrating that their accuracy and reaction time performance in the Attention Network Task, which measures three distinct attentional components, is similar to that of the control population. Furthermore, like people with normal music perception (Fernandez et al., 2019b), they exhibited faster reaction times in attention conflict conditions during joyful/high-arousing music than during sad/low-arousing music. These data reveal that affect-related influences of music on attention control do not depend on the neural system altered in congenital amusia and still operate despite defective pitch processing. Our study yields insights into the remarkable relationships between emotional and attentional processing in the human brain through which music can enhance cognitive abilities.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Research Ethics Council for the Faculty of Arts and Sciences at the Université de Montréal. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

NF designed the project and validated the task. NF performed the data collection and analyses and wrote the initial draft of the manuscript with contributions from the other authors. PV supervised the research protocol, methodology, and analyses. NG and IP supervised the research protocol and provided testing material and resources for data collection. All authors contributed to the formulation of the research question and to the revision and correction of the manuscript, and approved the final version for publication.

Funding

This work was supported in part by a grant awarded to NF from the Swiss National Science Foundation (P1GEP3_161687) as well as by a grant from the Canadian Institutes of Health Research, the Natural Sciences and Engineering Research Council of Canada, and the Canada Research Chairs program to IP.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Experimental data were collected during NF's collaborative stay at BRAMS, University of Montréal. We warmly thank Wiebke J. Trost, Ph.D (Cognitive and Affective Neuroscience Lab, University of Zurich, Switzerland) for sharing the auditory material, which was valuable for the completion of this project. We are grateful to the staff of the International Laboratory for Brain, Music and Sound Research (BRAMS) of the University of Montreal (Canada) for their technical assistance in the acquisition of the data. In particular, the authors thank Mihaela Felezeu for her invaluable help in recruiting congenital amusic and control participants. We thank Caitlyn Trevor, Ph.D (Cognitive and Affective Neuroscience Lab, University of Zurich, Switzerland) for proofreading the manuscript. We would like to thank the reviewers for their careful reading and helpful comments, which allowed us to improve our paper.

References

Ayotte, J., Peretz, I., and Hyde, K. (2002). Congenital amusia: a group study of adults afflicted with a music-specific disorder. Brain 125, 238–251. doi: 10.1093/brain/awf028

Barrett, F. S., Grimm, K. J., Robins, R. W., Wildschut, T., Sedikides, C., and Janata, P. (2010). Music-evoked nostalgia: affect, memory, and personality. Emotion 10:390. doi: 10.1037/a0019006

Bolger, D., Trost, W., and Schön, D. (2013). Rhythm implicitly affects temporal orienting of attention across modalities. Acta Psychol. 142, 238–244. doi: 10.1016/j.actpsy.2012.11.012

Brochard, R., Dufour, A., and Despres, O. (2004). Effect of musical expertise on visuospatial abilities: evidence from reaction times and mental imagery. Brain Cogn. 54, 103–109. doi: 10.1016/S0278-2626(03)00264-1

Casey, B., Thomas, K. M., Welsh, T. F., Badgaiyan, R. D., Eccard, C. H., Jennings, J. R., et al. (2000). Dissociation of response conflict, attentional selection, and expectancy with functional magnetic resonance imaging. Proc. Natl. Acad. Sci. U.S.A. 97, 8728–8733. doi: 10.1073/pnas.97.15.8728

Cleeremans, A., and Jiménez, L. (2002). Implicit learning and consciousness: a graded, dynamic perspective. Implicit Learn. Consc. 2002, 1–40.

Corbetta, M., and Shulman, G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215. doi: 10.1038/nrn755

Cousineau, M., McDermott, J. H., and Peretz, I. (2012). The basis of musical consonance as revealed by congenital amusia. Proc. Natl. Acad. Sci. U.S.A. 109, 19858–19863. doi: 10.1073/pnas.1207989109

Cristinzio, C., N'diaye, K., Seeck, M., Vuilleumier, P., and Sander, D. (2010). Integration of gaze direction and facial expression in patients with unilateral amygdala damage. Brain 133, 248–261. doi: 10.1093/brain/awp255

Dalla Bella, S., Giguère, J.-F., and Peretz, I. (2009). Singing in congenital amusia. J. Acoust. Soc. Am. 126, 414–424. doi: 10.1121/1.3132504

Douglas, K. M., and Bilkey, D. K. (2007). Amusia is associated with deficits in spatial processing. Nat. Neurosci. 10, 915–921. doi: 10.1038/nn1925

Escoffier, N., Sheng, D. Y. J., and Schirmer, A. (2010). Unattended musical beats enhance visual processing. Acta Psychol. 135, 12–16. doi: 10.1016/j.actpsy.2010.04.005

Fan, J., McCandliss, B. D., Fossella, J., Flombaum, J. I., and Posner, M. I. (2005). The activation of attentional networks. Neuroimage 26, 471–479. doi: 10.1016/j.neuroimage.2005.02.004

Fan, J., McCandliss, B. D., Sommer, T., Raz, A., and Posner, M. I. (2002). Testing the efficiency and independence of attentional networks. J. Cogn. Neurosci. 14, 340–347. doi: 10.1162/089892902317361886

Fernandez, N. B., Hars, M., Trombetti, A., and Vuilleumier, P. (2019a). Age-related changes in attention control and their relationship with gait performance in older adults with high risk of falls. Neuroimage 189, 551–559. doi: 10.1016/j.neuroimage.2019.01.030

Fernandez, N. B., Trost, W. J., and Vuilleumier, P. (2019b). Brain networks mediating the influence of background music on selective attention. Soc. Cogn. Affect. Neurosci. 14, 1441–1452.

Fernandez, N. B., Trost, W. J., and Vuilleumier, P. (2020). Brain networks mediating the influence of background music on selective attention. Soc. Cogn. Affect. Neurosci. 14, 1441–1452. doi: 10.1093/scan/nsaa004

Fernandez-Duque, D., and Black, S. E. (2006). Attentional networks in normal aging and Alzheimer's disease. Neuropsychology 20, 133–143. doi: 10.1037/0894-4105.20.2.133

Finucane, A. M., Whiteman, M. C., and Power, J. M. (2010). The effect of happiness and sadness on alerting, orienting, and executive attention. J. Atten. Disord. 13, 629–639. doi: 10.1177/1087054709334514

Foxton, J. M., Nandy, R. K., and Griffiths, T. D. (2006). Rhythm deficits in ‘tone deafness’. Brain Cogn. 62, 24–29. doi: 10.1016/j.bandc.2006.03.005

Fredrickson, B. L. (2004). The broaden-and-build theory of positive emotions. Philos. Trans. R. Soc. B Biol. Sci. 359, 1367–1378. doi: 10.1098/rstb.2004.1512

Gosselin, N., Paquette, S., and Peretz, I. (2015). Sensitivity to musical emotions in congenital amusia. Cortex 71, 171–182. doi: 10.1016/j.cortex.2015.06.022

Hars, M., Herrmann, F. R., Gold, G., Rizzoli, R., and Trombetti, A. (2013). Effect of music-based multitask training on cognition and mood in older adults. Age Ageing 43, 196–200. doi: 10.1093/ageing/aft163

Hirel, C., Lévêque, Y., Deiana, G., Richard, N., Cho, T., Mechtouff, L., et al. (2014). Acquired amusia and musical anhedonia. Rev. Neurol. 170, 536–540. doi: 10.1016/j.neurol.2014.03.015

Hutchins, S., Gosselin, N., and Peretz, I. (2010). Identification of changes along a continuum of speech intonation is impaired in congenital amusia. Front. Psychol. 1:236. doi: 10.3389/fpsyg.2010.00236

Hyde, K. L., and Peretz, I. (2004). Brains that are out of tune but in time. Psychol. Sci. 15, 356–360. doi: 10.1111/j.0956-7976.2004.00683.x

Hyde, K. L., Zatorre, R. J., and Peretz, I. (2011). Functional MRI evidence of an abnormal neural network for pitch processing in congenital amusia. Cereb. Cortex 21, 292–299. doi: 10.1093/cercor/bhq094

Jiang, C., Liu, F., and Wong, P. C. M. (2017). Sensitivity to musical emotion is influenced by tonal structure in congenital amusia. Sci. Rep. 7:7624. doi: 10.1038/s41598-017-08005-x

Jiang, J., Scolaro, A. J., Bailey, K., and Chen, A. (2011). The effect of music-induced mood on attentional networks. Int. J. Psychol. 46, 214–222. doi: 10.1080/00207594.2010.541255

Johnson, K. A., Robertson, I. H., Barry, E., Mulligan, A., Daibhis, A., Daly, M., et al. (2008). Impaired conflict resolution and alerting in children with ADHD: evidence from the Attention Network Task (ANT). J. Child Psychol. Psychiatry 49, 1339–1347. doi: 10.1111/j.1469-7610.2008.01936.x

Juslin, P. N., Harmat, L., and Eerola, T. (2014). What makes music emotionally significant? Exploring the underlying mechanisms. Psychol. Music 42, 599–623. doi: 10.1177/0305735613484548

Kämpfe, J., Sedlmeier, P., and Renkewitz, F. (2011). The impact of background music on adult listeners: a meta-analysis. Psychol. Music 39, 424–448. doi: 10.1177/0305735610376261

Kawakami, A., Furukawa, K., Katahira, K., and Okanoya, K. (2013). Sad music induces pleasant emotion. Front. Psychol. 4:311. doi: 10.3389/fpsyg.2013.00311

Lévêque, Y., Teyssier, P., Bouchet, P., Bigand, E., Caclin, A., and Tillmann, B. (2018). Musical emotions in congenital amusia: impaired recognition, but preserved emotional intensity. Neuropsychology 32, 880–894. doi: 10.1037/neu0000461

Lima, C. F., Brancatisano, O., Fancourt, A., Müllensiefen, D., Scott, S. K., Warren, J. D., et al. (2016). Impaired socio-emotional processing in a developmental music disorder. Sci. Rep. 6:34911. doi: 10.1038/srep34911

Lolli, S., Lewenstein, A. D., Basurto, J., Winnik, S., and Loui, P. (2015). Sound frequency affects speech emotion perception: results from congenital amusia. Front. Psychol. 6:1340. doi: 10.3389/fpsyg.2015.01340

Mahoney, J. R., Verghese, J., Goldin, Y., Lipton, R., and Holtzer, R. (2010). Alerting, orienting, and executive attention in older adults. J. Int. Neuropsychol. Soc. 16, 877–889. doi: 10.1017/S1355617710000767

Marin, M. M., Thompson, W. F., Gingras, B., and Stewart, L. (2015). Affective evaluation of simultaneous tone combinations in congenital amusia. Neuropsychologia 78, 207–220. doi: 10.1016/j.neuropsychologia.2015.10.004

McConnell, M. M., and Shore, D. I. (2011). Upbeat and happy: arousal as an important factor in studying attention. Cogn. Emot. 25, 1184–1195. doi: 10.1080/02699931.2010.524396

Medina, D., and Barraza, P. (2019). Efficiency of attentional networks in musicians and non-musicians. Heliyon 5:e01315. doi: 10.1016/j.heliyon.2019.e01315

Mitchell, R. L., and Phillips, L. H. (2007). The psychological, neurochemical and functional neuroanatomical mediators of the effects of positive and negative mood on executive functions. Neuropsychologia 45, 617–629. doi: 10.1016/j.neuropsychologia.2006.06.030

Mogg, K., Salum, G., Bradley, B., Gadelha, A., Pan, P., Alvarenga, P., et al. (2015). Attention network functioning in children with anxiety disorders, attention-deficit/hyperactivity disorder and non-clinical anxiety. Psychol. Med. 45, 2633–2646. doi: 10.1017/S0033291715000586

Park, J., Miller, C. A., Sanjeevan, T., van Hell, J. G., Weiss, D. J., and Mainela-Arnold, E. (2019). Bilingualism and attention in typically developing children and children with developmental language disorder. J. Speech Lang. Hear. Res. 62, 4105–4118. doi: 10.1044/2019_JSLHR-L-18-0341

Peretz, I. (2016). Neurobiology of congenital amusia. Trends Cogn. Sci. 20, 857–867. doi: 10.1016/j.tics.2016.09.002

Peretz, I., Ayotte, J., Zatorre, R. J., Mehler, J., Ahad, P., Penhune, V. B., et al. (2002). Congenital amusia: a disorder of fine-grained pitch discrimination. Neuron 33, 185–191. doi: 10.1016/S0896-6273(01)00580-3

Peretz, I., Brattico, E., Järvenpää, M., and Tervaniemi, M. (2009). The amusic brain: in tune, out of key, and unaware. Brain 132, 1277–1286. doi: 10.1093/brain/awp055

Peretz, I., Champod, A. S., and Hyde, K. (2003). Varieties of musical disorders. Ann. N. Y. Acad. Sci. 999, 58–75. doi: 10.1196/annals.1284.006

Peretz, I., and Vuvan, D. T. (2017). Prevalence of congenital amusia. Eur. J. Hum. Genet. 25, 625–630. doi: 10.1038/ejhg.2017.15

Petersen, S. E., and Posner, M. I. (2012). The attention system of the human brain: 20 years after. Annu. Rev. Neurosci. 35, 73–89. doi: 10.1146/annurev-neuro-062111-150525

Phillips-Silver, J., Toiviainen, P., Gosselin, N., and Peretz, I. (2013). Amusic does not mean unmusical: beat perception and synchronization ability despite pitch deafness. Cogn. Neuropsychol. 30, 311–331. doi: 10.1080/02643294.2013.863183

Posner, M. I., and Petersen, S. E. (1990). The attention system of the human brain. Annu. Rev. Neurosci. 13, 25–42. doi: 10.1146/annurev.ne.13.030190.000325

Pralus, A., Fornoni, L., Bouet, R., Gomot, M., Bhatara, A., Tillmann, B., et al. (2019). Emotional prosody in congenital amusia: impaired and spared processes. Neuropsychologia 134:107234. doi: 10.1016/j.neuropsychologia.2019.107234

Rowe, G., Hirsh, J. B., and Anderson, K. A. (2007). Positive affect increases the breadth of attentional selection. Proc. Natl. Acad. Sci. U.S.A. 104, 383–388. doi: 10.1073/pnas.0605198104

Rusconi, E., Kwan, B., Giordano, B. L., Umiltà, C., and Butterworth, B. (2006). Spatial representation of pitch height: the SMARC effect. Cognition 99, 113–129. doi: 10.1016/j.cognition.2005.01.004

Russell, J. A. (2003). Core affect and the psychological construction of emotion. Psychol. Rev. 110, 145–172. doi: 10.1037/0033-295X.110.1.145

Sachs, M. E., Damasio, A., and Habibi, A. (2015). The pleasures of sad music: a systematic review. Front. Hum. Neurosci. 9:404. doi: 10.3389/fnhum.2015.00404

Schindler, I., Hosoya, G., Menninghaus, W., Beermann, U., Wagner, V., Eid, M., et al. (2017). Measuring aesthetic emotions: a review of the literature and a new assessment tool. PLoS ONE 12:e0178899. doi: 10.1371/journal.pone.0178899

Silvestrini, N., Piguet, V., Cedraschi, C., and Zentner, M. R. (2011). Music and auditory distraction reduce pain: emotional or attentional effects? Music Med. 3, 264–270. doi: 10.1177/1943862111414433

Sluming, V., Brooks, J., Howard, M., Downes, J. J., and Roberts, N. (2007). Broca's area supports enhanced visuospatial cognition in orchestral musicians. J. Neurosci. 27, 3799–3806. doi: 10.1523/JNEUROSCI.0147-07.2007

Spaccavento, S., Marinelli, C. V., Nardulli, R., Macchitella, L., Bivona, U., Piccardi, L., et al. (2019). Attention deficits in stroke patients: the role of lesion characteristics, time from stroke, and concomitant neuropsychological deficits. Behav. Neurol. 2019:7835710. doi: 10.1155/2019/7835710

Thompson, W. F., Marin, M. M., and Stewart, L. (2012). Reduced sensitivity to emotional prosody in congenital amusia rekindles the musical protolanguage hypothesis. Proc. Natl. Acad. Sci. U.S.A. 109, 19027–19032. doi: 10.1073/pnas.1210344109

Thompson, W. F., Schellenberg, E. G., and Husain, G. (2001). Arousal, mood, and the Mozart effect. Psychol. Sci. 12, 248–251. doi: 10.1111/1467-9280.00345

Tillmann, B., Gosselin, N., Bigand, E., and Peretz, I. (2012). Priming paradigm reveals harmonic structure processing in congenital amusia. Cortex 48, 1073–1078. doi: 10.1016/j.cortex.2012.01.001

Tillmann, B., Jolicoeur, P., Ishihara, M., Gosselin, N., Bertrand, O., Rossetti, Y., et al. (2010). The amusic brain: lost in music, but not in space. PLoS ONE 5:e10173. doi: 10.1371/journal.pone.0010173

Tillmann, B., Lalitte, P., Albouy, P., Caclin, A., and Bigand, E. (2016). Discrimination of tonal and atonal music in congenital amusia: the advantage of implicit tasks. Neuropsychologia 85, 10–18. doi: 10.1016/j.neuropsychologia.2016.02.027

Trombetti, A., Hars, M., Herrmann, F. R., Kressig, R. W., Ferrari, S., and Rizzoli, R. (2011). Effect of music-based multitask training on gait, balance, and fall risk in elderly people: a randomized controlled trial. Arch. Intern. Med. 171, 525–533. doi: 10.1001/archinternmed.2010.446

Trost, W., Ethofer, T., Zentner, M., and Vuilleumier, P. (2012). Mapping aesthetic musical emotions in the brain. Cereb. Cortex 22, 2769–2783. doi: 10.1093/cercor/bhr353

Trost, W., Frühholz, S., Schön, D., Labbé, C., Pichon, S., Grandjean, D., et al. (2014). Getting the beat: entrainment of brain activity by musical rhythm and pleasantness. Neuroimage 103, 55–64. doi: 10.1016/j.neuroimage.2014.09.009

Vanlessen, N., De Raedt, R., Koster, E. H., and Pourtois, G. (2016). Happy heart, smiling eyes: a systematic review of positive mood effects on broadening of visuospatial attention. Neurosci. Biobehav. Rev. 68, 816–837. doi: 10.1016/j.neubiorev.2016.07.001

Vuvan, D., Paquette, S., Goulet, G. M., Royal, I., Felezeu, M., and Peretz, I. (2018). The Montreal protocol for identification of amusia. Behav. Res. Methods 50, 662–672. doi: 10.3758/s13428-017-0892-8

Wang, K., Fan, J., Dong, Y., Wang, C.-Q., Lee, T. M., and Posner, M. I. (2005). Selective impairment of attentional networks of orienting and executive control in schizophrenia. Schizophr. Res. 78, 235–241. doi: 10.1016/j.schres.2005.01.019

Wechsler, D., Coalson, D. L., and Raiford, E. S. (1997). WAIS-III: Wechsler Adult Intelligence Scale. San Antonio, TX: Psychological Corporation.

Williamson, V. J., Cocchini, G., and Stewart, L. (2011). The relationship between pitch and space in congenital amusia. Brain Cogn. 76, 70–76. doi: 10.1016/j.bandc.2011.02.016

Zendel, B. R., Lagrois, M.-É., Robitaille, N., and Peretz, I. (2015). Attending to pitch information inhibits processing of pitch information: the curious case of amusia. J. Neurosci. 35, 3815–3824. doi: 10.1523/JNEUROSCI.3766-14.2015

Zentner, M., Grandjean, D., and Scherer, K. R. (2008). Emotions evoked by the sound of music: characterization, classification, and measurement. Emotion 8, 494–521. doi: 10.1037/1528-3542.8.4.494

Keywords: congenital amusia, emotion, executive control, music exposure, selective attention

Citation: Fernandez NB, Vuilleumier P, Gosselin N and Peretz I (2021) Influence of Background Musical Emotions on Attention in Congenital Amusia. Front. Hum. Neurosci. 14:566841. doi: 10.3389/fnhum.2020.566841

Received: 28 May 2020; Accepted: 30 November 2020;

Published: 25 January 2021.

Edited by:

Franco Delogu, Lawrence Technological University, United States

Reviewed by:

Anne Caclin, Institut National de la Santé et de la Recherche Médicale (INSERM), France

Caicai Zhang, The Hong Kong Polytechnic University, Hong Kong

Copyright © 2021 Fernandez, Vuilleumier, Gosselin and Peretz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Natalia B. Fernandez, Natalia.fernandez@unige.ch
