Challenges for identifying the neural mechanisms that support spatial navigation: the impact of spatial scale

- 1German Center for Neurodegenerative Diseases (DZNE), and Center for Behavioural Brain Sciences (CBBS), Otto-von-Guericke University, Magdeburg, Germany

- 2Department of Psychology, Faculty of Science and Technology, Bournemouth University, Bournemouth, UK

Spatial navigation is a fascinating behavior that is essential for our everyday lives. It involves nearly all sensory systems, it requires numerous parallel computations, and it engages multiple memory systems. One of the key problems in this field pertains to the question of reference frames: spatial information such as direction or distance can be coded egocentrically—relative to an observer—or allocentrically—in a reference frame independent of the observer. While many studies have associated striatal and parietal circuits with egocentric coding and entorhinal/hippocampal circuits with allocentric coding, this strict dissociation is not in line with a growing body of experimental data. In this review, we discuss some of the problems that can arise when studying the neural mechanisms that are presumed to support different spatial reference frames. We argue that the scale of space in which a navigation task takes place plays a crucial role in determining the processes that are being recruited. This has important implications, particularly for the inferences that can be made from animal studies in small scale space about the neural mechanisms supporting human spatial navigation in large (environmental) spaces. Furthermore, we argue that many of the commonly used tasks to study spatial navigation and the underlying neuronal mechanisms involve different types of reference frames, which can complicate the interpretation of neurophysiological data.

Introduction

A central issue in the cognitive neuroscience of spatial navigation pertains to the question of reference frames. Ever since the discovery of place cells in the rodent hippocampus (O’Keefe and Dostrovsky, 1971), this structure has been thought to provide an allocentric description of the environment. In contrast, cortical regions such as posterior parietal cortex or the striatum have been linked to processing spatial information in various egocentric reference frames. Even though the terms “egocentric” and “allocentric” appear frequently in manuscripts on spatial navigation, their precise meaning is often not described. In addition, different authors have different interpretations, which can lead to confusion about what exactly is meant by an allocentric neural code, for example.

In this review, our aim is to highlight some of the problems that can arise when studying the neural mechanisms that are presumed to support different spatial reference frames. In particular, we show that many of the commonly used tasks involve different types of reference frames, which can complicate the interpretation of neurophysiological data gathered in such situations. In addition, we discuss how the scale of space in which a navigation task takes place determines the processes that are being recruited, which has important implications, for example, for the inferences that can be made from animal studies about the neural mechanisms supporting human spatial navigation.

Background

Egocentric Vs. Allocentric Reference System

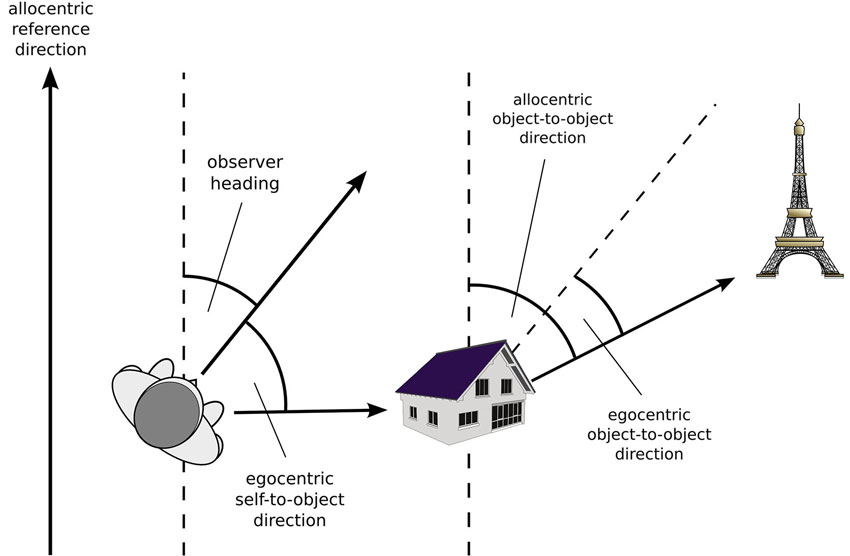

To begin, we will provide a definition of what characterizes an egocentric vs. an allocentric spatial code. Exceptions notwithstanding, there is general agreement that in an egocentric reference frame, locations are represented with respect to the particular perspective of an observer. As shown in Figure 1, the origin of the egocentric reference system is centered on the observer, and its orientation is defined by the observer’s heading. Importantly, the brain entertains multiple egocentric reference frames that are anchored to different body parts (i.e., eye-centered, head-centered, trunk-centered), hence the orientation of an egocentric reference system is determined by the orientation of the specific body part.

For the sake of simplicity, let us assume that all body parts are orientated in the same direction, hence the observer’s heading coincides with the orientation of all egocentric reference frames. Assuming a polar coordinate system, the egocentric position of an external object can now be specified as follows: the length of the vector connecting the observer and the object is the egocentric distance of the object, and the angle between the observer’s heading and that vector specifies its egocentric direction. However, egocentric direction can also specify the direction between two external objects; in this case, it refers to the angle between the direction of the observer’s heading and the vector connecting the two objects.
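The polar definition above can be made concrete with a minimal sketch. The function names, the counterclockwise angle convention, and the example coordinates are illustrative assumptions, not part of any cited model.

```python
import math

def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def egocentric_coordinates(observer_xy, heading, object_xy):
    """Return (egocentric distance, egocentric direction) of an object.

    heading is the observer's facing direction in radians, measured
    counterclockwise from the world x-axis (an assumed convention).
    Positive directions lie to the observer's left, negative to the right.
    """
    dx = object_xy[0] - observer_xy[0]
    dy = object_xy[1] - observer_xy[1]
    distance = math.hypot(dx, dy)                          # length of observer-object vector
    direction = wrap_angle(math.atan2(dy, dx) - heading)   # angle between heading and vector
    return distance, direction

# Observer at the origin facing along +y; the object lies 1 unit along +x,
# i.e., directly to the observer's right.
distance, direction = egocentric_coordinates((0.0, 0.0), math.pi / 2, (1.0, 0.0))
```

In this example the object's egocentric distance is 1 and its egocentric direction is a quarter turn to the right of the heading.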

In contrast to observer-dependent egocentric reference systems, an allocentric reference frame specifies an object’s position within a framework external to the observer and independent of the observer’s position and orientation. Assuming a polar coordinate system, an object’s allocentric distance corresponds to the length of a vector connecting the origin of the coordinate system and the object, and the angle between the allocentric reference direction and that vector specifies its allocentric direction. However, most environments do not provide a meaningful reference point that could serve as an unambiguous origin for an allocentric coordinate system. As a consequence, allocentric distance of an object is rarely defined with respect to an origin but rather with respect to other, behaviorally relevant objects in the environment. Similarly, allocentric direction usually specifies the direction between two external objects, defined as the angle between an allocentric reference direction and the vector connecting the two objects (Figure 1).

From these definitions, it is obvious that one major difference between egocentric and allocentric representations pertains to what happens when the observer moves about in the environment. For example, if the observer simply turns around, an object’s egocentric direction changes, but its egocentric distance remains the same. In contrast, neither allocentric distance nor allocentric direction is affected by observer rotation. The differences are even more pronounced for translational movements, for which both egocentric distance and direction change, whereas their allocentric counterparts remain unaffected. It is this observer independence that makes allocentric representations an attractive format for long-term memory representations: when the observer moves about in a familiar environment, planning any navigational step from an egocentric representation would require that one continuously updates the egocentric vectors towards all potential goals. In contrast, in an allocentric representation, all the observer needs to do is to update their own allocentric position, because this knowledge allows for computing egocentric distance and direction towards other objects from any vantage point.
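The following sketch illustrates this contrast under simple assumptions: a stored allocentric map (a hypothetical dictionary of object coordinates in fixed world axes) never changes, whereas the egocentric vectors derived from it depend on the observer's pose. Object names, coordinates, and the angle convention are invented for illustration.

```python
import math

def wrap(angle):
    """Wrap an angle in radians to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

def egocentric(observer_xy, heading, target_xy):
    """Egocentric (distance, direction) of a target for a given observer pose."""
    dx = target_xy[0] - observer_xy[0]
    dy = target_xy[1] - observer_xy[1]
    return math.hypot(dx, dy), wrap(math.atan2(dy, dx) - heading)

# Hypothetical allocentric map: object positions in fixed world axes.
ALLOCENTRIC_MAP = {"fountain": (4.0, 0.0), "obelisk": (0.0, 3.0)}

def egocentric_view(observer_xy, heading):
    """Derive all egocentric vectors from the map and the observer's own pose."""
    return {name: egocentric(observer_xy, heading, xy)
            for name, xy in ALLOCENTRIC_MAP.items()}

before = egocentric_view((0.0, 0.0), 0.0)
turned = egocentric_view((0.0, 0.0), math.pi / 2)  # rotation on the spot
moved = egocentric_view((1.0, 1.0), 0.0)           # translation only
```

After the rotation, each egocentric distance is unchanged while each direction shifts by the turn angle; after the translation, both change. In neither case is the map itself touched: only the observer's pose is updated.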

There is, however, one major problem that is rarely addressed in the literature: what does an allocentric reference system actually look like? While an egocentric reference system can be easily defined in any situation where an observer is present, this is much harder for allocentric reference frames. Imagine, for example, an observer walking around on famous St. Peter’s Square in Rome, which has an oval shape. In this situation, it is not at all obvious what would define the orientation of an allocentric reference system. Moreover, while the observer might try to extract the major axes of the square and use those to define orientation, the situation becomes even more ambiguous when the observer leaves the square and strolls around one of the neighboring streets whose orientation changes as one walks along. Is the initial orientation of the allocentric representation still used or is a new one adopted? And what factors determine whether or not a switch to a new direction occurs?

Scales of Space

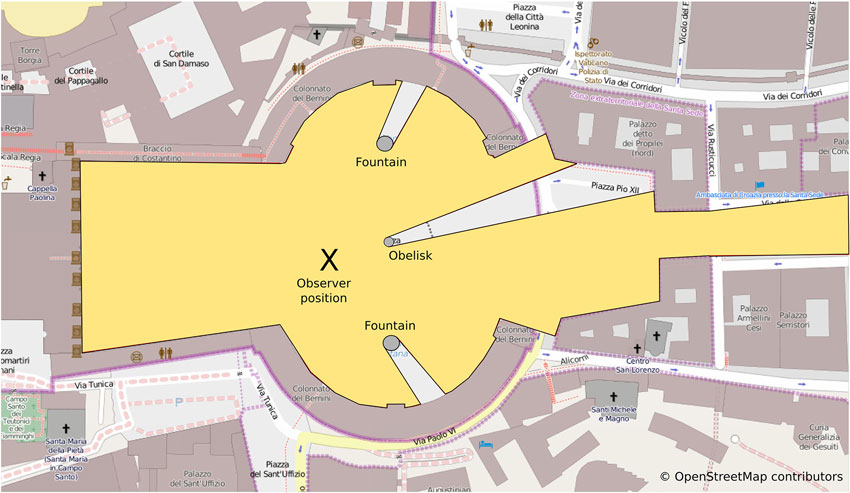

When considering the kind of reference frames associated with different navigation tasks or paradigms it is important to consider the scale of space in which these tasks take place. Montello distinguishes between four classes of psychological spaces: figural, vista, environmental and geographical space (Montello, 1993). While figural space (e.g., the space of pictures or small objects) and geographical space (e.g., the space of countries or nations) are too small or too large to be experienced through navigation, the distinction between vista and environmental space is relevant in the context of navigation: a vista space is the space that can be visually apprehended from a single location or with only minimal exploratory movement (see Figure 2). Typical examples of vista spaces are single rooms or town squares such as St. Peter’s Square in Rome mentioned above. In contrast, environmental spaces such as buildings, neighborhoods or towns cannot be experienced from a single place or even from a certain part of the environment but require considerable movement. Although navigation paradigms that aim to investigate spatial reference frames and the underlying neuronal representations have made use of both vista and environmental spaces, the impact that different spatial scales have on the ensuing spatial representations and their reference frames is rarely considered.

Figure 2. Vista spaces: the yellow polygon depicts the area of St. Peter’s Square that is visible from the observer position (x). Note that almost the entire space can be apprehended from a single position. While visual barriers such as the obelisk and the fountains obstruct the view of some of the space, only minimal exploratory movement is required to apprehend the entire space. The visible area from any position is crucial for defining the local vista spaces as well as connections between them (see Franz and Wiener, 2008).

Why does the scale of space matter? There are obvious differences between navigating vista spaces and navigating environmental spaces. First, navigating large environmental spaces takes longer than navigating vista spaces and requires traversing through several connected (vista) spaces. It therefore requires integration of information over extended periods of time as well as space and involves the planning of complex routes which may feature a large number of decision points. Second, target locations in environmental space are beyond the sensory horizon while they lie within the sensory horizon in vista spaces. These differences have important implications for the spatial representations and the cognitive processes involved in navigation and will be discussed in more detail below.

The most commonly used navigation tasks in animal research are all vista space paradigms. The Morris Water Maze (MWM), the T-, Plus- or Y-maze are set up such that the entire environment along with all the navigationally relevant cues and the target destination can be perceived, either at all times or when making movement decisions. Accordingly, the bulk of our knowledge about the neuronal mechanisms involved in egocentric or allocentric navigation that comes from electrophysiological and behavioral neuroscience experiments in animals relates to navigation in small scale vista spaces. While environmental scale spaces are more commonly used in behavioral and brain imaging navigation experiments in humans, the question of how behavioral and neuronal mechanisms in vista and environmental scale spaces relate has received very little attention. This is somewhat surprising, given that one may argue that navigation abilities and the corresponding spatial representation have evolved to support navigation in environmental spaces where—in contrast to vista scale spaces—target destinations are not visible and cannot be reached by a simple visual approach (discussed in more detail below).

How do representations of vista and environmental spaces relate?

In principle, there are two ways of representing environmental scale spaces. First, the entire environment is represented in a single reference frame; depending on the nature of this reference frame, locations in the environment are either described as coordinates relative to the origin of an allocentric coordinate system, relative to other locations (allocentric), or as vectors relative to self in an egocentric reference frame. Second, different parts of the environment are represented independently; in order to navigate environments successfully, these independent representations have to be linked.

It is hard to imagine that our spatial knowledge about large environments such as entire cities is represented in a single reference frame: given an allocentric representation, where would the origin of the coordinate system be (see also discussion in Section Egocentric vs. Allocentric Reference System)? Alternatively, given a purely egocentric reference frame, one would need to constantly update egocentric vectors to all known locations in the environment during navigation. There is also empirical evidence challenging the idea that environmental spaces are represented within a single reference frame. For example, people automatically update object locations in their immediate surroundings (vista space) while more remote parts of the environment are not efficiently updated (Wang and Brockmole, 2003). Evidence for separate or fragmented representations also comes from pointing experiments, demonstrating increased accuracy for within-region as compared to between-region direction judgements (Han and Becker, 2014). This suggests that representations of environmental scale spaces are fragmented into independent units. Similar conclusions have been drawn by Meilinger et al., who demonstrated that different (vista) spaces along a route were encoded using independent local reference frames (Meilinger et al., 2014). A neuronal code for an egocentric representation of vista spaces has been hypothesized by Byrne et al. (2007): in their model, a population of posterior parietal neurons (presumably located in the precuneus) represents the locations of all landmarks and objects visible from the current location or from a location that is recalled from previous experience.

Further support for the idea of independent representations or reference frames (Worden, 1992; Derdikman and Moser, 2010) comes from animal experiments demonstrating that (i) the same place cell may code for different locations in different environments (e.g., Skaggs and McNaughton, 1998; Colgin et al., 2008); and (ii) in environments that are subdivided into multiple corridors, entorhinal grid cells do not exhibit periodic two-dimensional firing fields covering the entire environment, but rather establish separate grid patterns for each corridor (Derdikman et al., 2009).

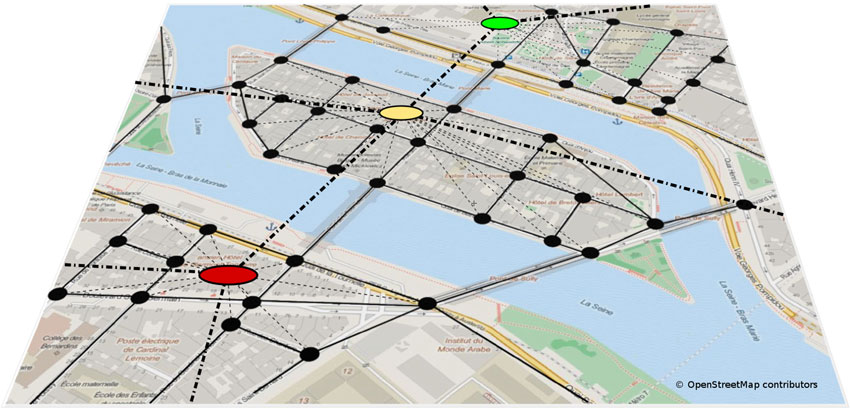

How are these individual representations of smaller (vista scale) spaces connected? Graph-like representations have long been suggested to provide a structure suitable to integrate independent, yet interconnected, memories of space (Kuipers, 1978; Poucet, 1993; Schölkopf and Mallot, 1995). In addition, graphs also allow for hierarchical spatial representations (Stevens and Coupe, 1978; Wiener and Mallot, 2003; Han and Becker, 2014). In graph-like structures, local positional information is usually represented in nodes, while edges represent connections between nodes (see Figure 3). Several graph models of environmental scale spatial memory have been proposed in the animal and human literature. The exact nature of the information stored in nodes and edges differs between models: Poucet (1993), for example, suggested that nodes are place representations, while connections between distinct places are encoded in polar coordinates as vectors. At a higher level of abstraction, the animal’s current environment, which may contain any number of places that share common stimulus properties, becomes a so-called local chart. Note that the idea of a local chart is similar, if not identical, to the concept of a vista space. According to Poucet (1993), environmental scale spaces are represented in terms of multiple local charts (vista spaces) and spatial relationships between them. Closely related to this network-of-charts idea is the Network of Reference Frames theory (Meilinger, 2008), which proposes that environmental spaces are represented by means of interconnected reference frames, i.e., independent coordinate systems each with a specific orientation and representing a different vista space. These reference frames or nodes are connected by edges which describe the perspective shift (the translation and rotation) necessary to move between them.

Figure 3. Hierarchical graph-like representation of an environmental scale space. Single places or vista scale spaces are represented as nodes, and connections between them are represented by edges. Graphs also allow hierarchical spatial knowledge to be represented, where several places are combined to form regions. The spatial relationship between different regions is represented at a higher level of abstraction (Wiener and Mallot, 2003).
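To illustrate how edges that store a perspective shift could link otherwise independent local reference frames, the sketch below chains such shifts along a route. It is a toy illustration, not an implementation of any of the cited models; the frame names, the edge values, and the convention that an edge stores the pose of the next frame expressed in the previous one are all assumptions.

```python
import math

# Hypothetical edges between vista-space frames. Each edge stores the pose
# of the second frame expressed in the first: (tx, ty, theta), with the
# translation in arbitrary units and the rotation in radians.
EDGES = {
    ("square", "street"): (10.0, 0.0, math.pi / 2),
    ("street", "courtyard"): (5.0, 0.0, 0.0),
}

def to_previous_frame(point, shift):
    """Re-express a point from a frame into the preceding frame on the route."""
    tx, ty, theta = shift
    x, y = point
    return (tx + x * math.cos(theta) - y * math.sin(theta),
            ty + x * math.sin(theta) + y * math.cos(theta))

def express_in_first_frame(point, route):
    """Chain perspective shifts so that a point given in the last frame of
    `route` is expressed in the coordinates of the first frame."""
    for previous, current in reversed(list(zip(route, route[1:]))):
        point = to_previous_frame(point, EDGES[(previous, current)])
    return point

# An object at (1, 0) in the courtyard frame, as seen from the square frame.
in_square = express_in_first_frame((1.0, 0.0), ["square", "street", "courtyard"])
```

With these invented values the object ends up at approximately (10, 6) in the square's frame. Note that no global coordinate system is required: each frame only needs to know its relation to its neighbors.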

What do We Know About the Neural Mechanisms that Support Navigational Behavior?

Navigation in Vista Scale Spaces

Allocentric navigation in vista space

Ever since the discovery of place cells in the hippocampus of freely moving rats (O’Keefe and Dostrovsky, 1971), the hippocampus has been thought to provide an allocentric description of an environment, often referred to as a cognitive map. Place cells fire whenever an animal moves through a certain location, independent of its facing direction. Many experiments have shown that both environmental boundaries and salient landmarks placed outside the apparatus exert tight control over the firing of place cells, suggesting that place cells are driven by environmental objects located (i) in a certain allocentric direction from the animal; and (ii) at a certain distance from the animal. This distance sensitivity relative to the observer, however, makes it impossible to determine whether place cells code for distance in an egocentric or an allocentric reference frame, since both are identical when the observer occupies one of the two points between which distance is computed.

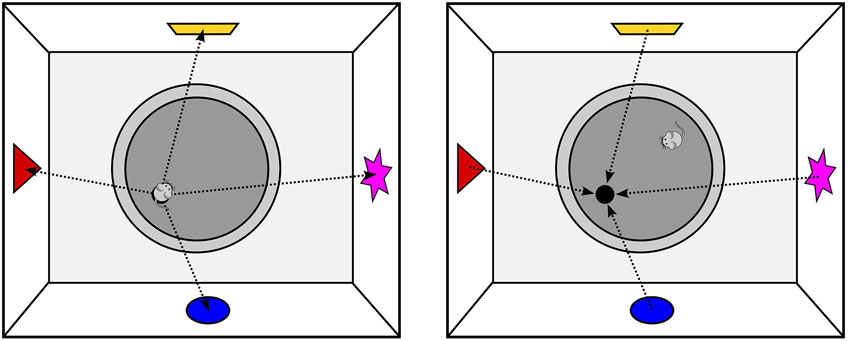

Electrophysiological recordings of place cells, however, can only demonstrate a correlation between spatial position and the firing behavior of neurons. To establish the causal role of the hippocampus for allocentric navigation, numerous studies have applied temporary or permanent lesions to the hippocampus. By far the most popular paradigm used in these experiments is the MWM (Figure 4), which is often referred to as the gold standard for studying allocentric spatial learning or memory. In the MWM, the animal has to find a platform submerged in a pool of opaque water, a task that healthy animals learn quickly even though the starting location is changed between trials or sessions. In contrast, animals with hippocampal lesions show marked impairments in the MWM as they take longer to learn the location and tend to often search for the platform in incorrect locations (Morris et al., 1982). However, when these animals always begin the trial from the same position, they show little to no impairment. Taken together, these findings suggest that the hippocampus contributes only to allocentric spatial learning, whereas egocentric navigation is independent of the hippocampus.

Figure 4. Spatial Coding in the Morris Water Maze; left: the animal has found the platform and can encode the spatial relationship between the platform and extra-maze cues as allocentric vectors. Note that while sitting on the platform, allocentric and egocentric vectors to the landmarks only differ with respect to their orientation, but not in their length; right: as the entire environment can be perceived from any position in the pool, the platform location can be computed by simply projecting the learned allocentric vectors from the landmarks into the pool. As a consequence, the animal does not need to know its current allocentric position to find the submerged platform.

While the results from these studies seem to tell a coherent story about the navigational functions of the hippocampus, a closer look reveals a number of problems and limitations. In the MWM, the location of the goal—i.e., the position of the hidden platform—can either be specified by an allocentric vector that specifies the distance and direction from the origin of a coordinate system or by multiple allocentric vectors between the platform location and other objects in the environment. As discussed above, it is unclear what would specify the origin of an overall allocentric coordinate system in the MWM, hence it seems far more likely that the allocentric representation involves vectors between the platform location and external landmarks.

What is rarely appreciated is that the MWM only assesses navigation in vista space. Specifically, no matter where the animal is started on a given trial, the entire environment can always be perceived with little or no movement (note that rodents have a very large field of vision; even with a more restricted field of vision, head movements are sufficient to perceive the entire environment). In other words, all information required to calculate the platform location is available from the animal’s current location, and the platform location can therefore be computed by simply projecting the allocentric vectors from the landmarks into the pool (see Figure 4). As a consequence, the animal does not need to know where it is located in the environment, since the platform location can easily be computed by looking at the landmarks. This lack of the need for self-localization also applies to other popular vista space navigation tasks. For example, in the T-, Y- or Plus Maze paradigms, the entire space is visible from the central junction, hence direct approach behavior to the goal without self-localization is possible.
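The argument can be illustrated with a minimal sketch in which the vector towards the platform is obtained by adding each learned landmark-to-platform vector to the currently perceived landmark vector. The landmark names, coordinates, and the assumption that the animal has access to an allocentric reference direction (its heading) are hypothetical; the point is that the animal's position is never passed to the function that computes the goal vector.

```python
import math

def rotate(v, angle):
    """Rotate a 2D vector counterclockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def observe(position, heading, landmark_xy):
    """Simulated perception: a landmark in body-centered coordinates."""
    offset = (landmark_xy[0] - position[0], landmark_xy[1] - position[1])
    return rotate(offset, -heading)

def vector_to_platform(observations, learned_vectors, heading):
    """Average over landmarks of (perceived landmark vector + learned
    landmark-to-platform vector), in world-aligned axes centered on the
    animal. The animal's own position is not an input."""
    sum_x = sum_y = 0.0
    for name, ego_vector in observations.items():
        wx, wy = rotate(ego_vector, heading)   # align with reference direction
        vx, vy = learned_vectors[name]
        sum_x += wx + vx
        sum_y += wy + vy
    n = len(observations)
    return (sum_x / n, sum_y / n)

# Hypothetical layout: two extra-maze landmarks and a hidden platform.
LANDMARKS = {"door": (0.0, 2.0), "poster": (2.0, 0.0)}
PLATFORM = (0.5, 0.5)
LEARNED = {name: (PLATFORM[0] - x, PLATFORM[1] - y)
           for name, (x, y) in LANDMARKS.items()}

def goal_vector_from(position, heading):
    seen = {name: observe(position, heading, xy) for name, xy in LANDMARKS.items()}
    return vector_to_platform(seen, LEARNED, heading)

from_south_west = goal_vector_from((-1.0, -1.0), 0.3)
from_east = goal_vector_from((1.0, -0.5), 2.0)
```

From either release point the result is the displacement from the animal to the platform, even though `vector_to_platform` only receives perceived landmark vectors, learned vectors, and a heading.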

Finally, and contrary to widely held assumptions, it is not easy to identify the spatial reference frame used in vista space navigation tasks. For example, in the control condition used in many MWM studies, rats simply have to approach a visible platform. Animals with hippocampal lesions perform well in this version of the task, which is typically taken as evidence that the hippocampus is specifically required for allocentric spatial memory. However, this control condition does not require any spatial learning, because the goal is always visible. Similarly, studies with humans with hippocampal lesions have employed egocentric control conditions in which the platform location is marked by a nearby beacon (Goodrich-Hunsaker et al., 2010), which does not require the learning of an egocentric vector either. As a consequence, the only conclusion that can be drawn from such studies is that the hippocampus plays a role in forming spatial memories; they do not reveal the nature of the reference system. To overcome this problem, some authors have employed control conditions in which the platform continues to be submerged but the animal is always started from the same location (Eichenbaum et al., 1990), assuming that it now solves the task by learning an egocentric vector from its starting location towards the goal. Even this control condition is problematic because (i) it is now unclear what information is used to reference the goal location, because both extramaze landmarks and the animal’s starting position could serve this purpose; and (ii) it is possible that the task is generally easier when the animal is always released from the same starting location. A solution to this problem would involve a condition in which the animal is always started from a different location (as in the allocentric condition) but the platform is moved so that it remains in a constant egocentric relation to the animal’s starting position. With this manipulation, both egocentric and allocentric conditions would require the animal to learn vectors, either relative to external room cues (allocentric) or relative to its own position (egocentric).
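A trial schedule for such a condition could be generated along the following lines. This is only a sketch: the pool radius, the egocentric offset, and the assumption that the animal is released at the rim facing the pool center (which is needed to define an egocentric direction at release) are hypothetical choices, not a published protocol.

```python
import math

POOL_RADIUS = 1.0     # hypothetical pool radius (arbitrary units)
EGO_DISTANCE = 0.8    # hypothetical distance from release point to platform
EGO_DIRECTION = 0.5   # hypothetical angle (radians) to the left of the release heading

def release_pose(angle_on_rim):
    """Release point on the pool rim, with the animal facing the pool center."""
    xy = (POOL_RADIUS * math.cos(angle_on_rim), POOL_RADIUS * math.sin(angle_on_rim))
    heading = angle_on_rim + math.pi
    return xy, heading

def platform_for(start_xy, heading):
    """Platform location that keeps a constant egocentric vector from the start."""
    angle = heading + EGO_DIRECTION
    return (start_xy[0] + EGO_DISTANCE * math.cos(angle),
            start_xy[1] + EGO_DISTANCE * math.sin(angle))

# Four release points, a quarter turn apart around the rim.
trials = []
for k in range(4):
    start_xy, heading = release_pose(k * math.pi / 2)
    trials.append((start_xy, heading, platform_for(start_xy, heading)))
```

Across these trials the platform occupies a different allocentric location each time, while its distance and direction relative to the release pose stay fixed, so room cues alone cannot specify the goal.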

While our discussion of allocentric navigation in vista space has focussed on studies investigating spatial coding in the hippocampus, the discovery of grid cells in entorhinal cortex (EC) of rodents and primates shows that an allocentric code for an organism’s position may already be computed upstream of the hippocampus (Hafting et al., 2005; Killian et al., 2012; Jacobs et al., 2013). While theoretical models assume that the main function of the grid cell system consists of path integration (the tracking of changes in spatial position based on incoming self-motion cues), most studies on spatial coding in EC have employed electrophysiological recordings in freely moving animals that were not engaged in any navigational task. In addition, lesion studies investigating the role of EC for allocentric learning (i.e., in the MWM) have yielded mixed results (Burwell et al., 2004; Steffenach et al., 2005). Given these findings and the interpretational problems of the MWM and other vista space navigation tasks as discussed above, it is difficult to ascertain the navigational contribution of the grid cell system to vista space navigation at present.
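For readers unfamiliar with the term, path integration in its simplest computational form amounts to accumulating self-motion signals into a position estimate. The sketch below shows only this bookkeeping; it makes no claim about how grid cells implement it, and the representation of self-motion as (turn, distance) pairs is an assumption.

```python
import math

def integrate_path(start_xy, start_heading, motions):
    """Update position and heading from a sequence of self-motion cues.

    Each element of `motions` is a (turn, distance) pair: a rotation in
    radians (counterclockwise positive) followed by a forward step.
    """
    x, y = start_xy
    heading = start_heading
    for turn, distance in motions:
        heading += turn
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    return (x, y), heading

# Forward one unit, then two left turns of 90 degrees, each followed by one unit.
position, heading = integrate_path(
    (0.0, 0.0), 0.0, [(0.0, 1.0), (math.pi / 2, 1.0), (math.pi / 2, 1.0)]
)
```

In this example the estimate ends one unit to the left of the starting point, with the heading reversed. Because each step adds to the previous estimate, any error in the self-motion cues accumulates unless it is corrected by external cues.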

The role of boundaries and geometric layout

In rectangular arenas, rats often confuse diagonally opposite corners, even when differentiated by distinctive cues (Cheng, 1986). This led to the claim that rats rely preferentially on the geometry of space, encoded in a dedicated geometric module. Similar ideas have been proposed for humans, even though they may combine geometric cues with featural cues (i.e., landmarks) by means of natural language (Wang and Spelke, 2002). While later studies have cast doubt on the idea of a dedicated geometric module in the mammalian brain (Cheng, 2008), behavioral work has consistently shown that salient geometric cues such as the walls of a room are often used to define allocentric reference directions (Shelton and McNamara, 2001).

On the neuronal level, two cell types have been described that may provide a neural substrate for the coding of geometric layout. Border cells fire whenever the animal is at an environmental boundary such as a wall of the recording arena (Solstad et al., 2008). In addition, boundary vector cells, which have been found in the subiculum, fire whenever the animal is located at a certain distance and in a certain allocentric direction from a boundary (Lever et al., 2009). Importantly, given that hippocampal place cells appear to function independently of the grid cell system (Brandon et al., 2014), these boundary vector cells may provide the key input that drives the localized firing of hippocampal place cells (Bush et al., 2014).

In the context of our discussion of vista and environmental space, it is important to note that virtually all behavioral and neuroscience experiments on geometry coding have been conducted in vista space environments such as single rooms for humans or regularly shaped boxes (i.e., rectangles) for rodents. In these environments, salient geometric boundaries that define the layout of a space can be seen from any vantage point, hence it makes perfect sense that distance and direction to the boundaries are (i) explicitly coded in neuronal circuits; and (ii) used to organize spatial representations and to reference the locations of external objects and of the navigator. A major problem, however, arises when we need to code for a location that lies beyond our sensory horizon: in environmental spaces such as neighborhoods or different parts of a city, geometric cues that could be seen from any vantage point are rarely available (note, of course, that there are exceptions such as the extended cliff face of Edinburgh’s famous Salisbury Crags that, due to their elevated location, are visible from most parts of the city). Rather, all one can see when wandering on a typical street or square are the faces of the buildings in the local surroundings, which disappear quickly upon turning into another street. Geometric cues such as rivers or extended major roads, which could be useful for coding locations in large scale space, can often not be seen or, even if they are within one’s current sensory horizon, only parts of them are visible. As a consequence, environmental boundaries and boundary related coding appear most useful for coding local positions in vista spaces such as the location of a traffic light relative to the surrounding buildings, but they are rarely available and useful in environmental scale spaces.

Egocentric navigation in vista space

Similar to allocentric navigation, animal studies on navigation involving egocentric knowledge are predominantly carried out in vista space environments. For example, in the MWM, lesions to the striatum impair navigation towards a location marked by a distinct visible landmark but not to an unmarked one defined relative to distal landmarks and boundaries (Packard and McGaugh, 1992; McDonald and White, 1994). Similarly, when a location is defined by its distance and direction from an intramaze landmark (given distal orienting cues), and not by the boundary of the maze, hippocampal damage does not impair navigation (Pearce et al., 1998) although lesions of the anterior thalamus (with presumed disruption of the head-direction system) do impair navigation (Wilton et al., 2001). These findings, which are in accord with the results from fMRI experiments in humans (Doeller et al., 2008), are generally taken as evidence for the complementary roles of the hippocampus and the striatum for spatial navigation, with the former defining locations relative to the boundaries and extramaze landmarks in an allocentric reference frame, while the latter defines locations relative to local landmarks, and the head direction system is required to derive the animal’s orientation from distant landmarks. A second line of studies has consistently implicated the dorsal striatum, in particular the caudate, in learning habits and egocentrically defined motor responses. For example, in the Plus Maze task, the response strategy consists of always executing the same motor response at the central junction (i.e., turn right), independent of the direction from which this junction is approached. Inactivating the caudate leads to a blocking of response learning and a preference for a place strategy, whereas inactivating the hippocampus has the opposite effect (Packard and McGaugh, 1992).

These complementary roles of the dorsal striatum, (i) coding for object positions relative to local landmarks or beacons and (ii) defining an egocentric motor response, do not, however, tap into the process of coding egocentric knowledge as defined in Figure 1. Rather, coding for object positions relative to local landmarks involves an observer-independent object-to-object vector that requires the retrieval of an allocentric reference direction. In addition, learning to execute a specific motor response such as “turn right” does not involve the learning of a metric egocentric vector between the observer and a target object. All that is needed is motor skill or habit learning in which a categorical motor behavior such as “turn right”, which is not specified in terms of a precise angle, is associated with obtaining a reward. In support of this conceptualization, response learning in the Plus- or T-maze is known to proceed more slowly than place learning (Packard and McGaugh, 1992), supporting the notion that response learning requires repeated reinforcement whereas place learning is a form of rapid, incidental learning (Salmon and Butters, 1995).

Direct evidence for the coding of egocentric vectors towards external objects in vista space comes from primate studies investigating the functions of the posterior parietal cortex. When monkeys are presented with a visual or auditory target, neurons in subdivisions of the posterior parietal cortex code for the object’s location in multiple egocentric reference frames, for example eye-centered, head-centered or trunk-centered (Colby, 1998). Such locational cues form the basis of an egocentric map of the surrounding space that critically depends on the precuneus and connected inferior and superior parietal areas. Similar egocentric representations have been documented in humans using fMRI (Committeri et al., 2004; Schindler and Bartels, 2013), but it is important to note that these studies are generally performed with static observers. Egocentric navigation, however, involves a dynamic updating of the egocentric object vectors as the observer moves about an environment. This integration of self-motion cues with existing egocentric representations appears to be mediated by the precuneus (Wolbers et al., 2008; Jahn et al., 2012). It is currently not clear, however, as to whether the precuneus also provides egocentric codes for distant objects beyond the sensory horizon—a key component of navigation in environmental scale space. In addition, whether the role of the precuneus is restricted to providing transient egocentric codes or whether it also stores long-term memory traces of such codes is unknown.

Navigation in Environmental Scale Spaces

Navigation in environmental scale spaces can be based on allocentric or egocentric strategies (Wiener et al., 2013). Functional brain imaging studies in humans have shown that the hippocampal circuit is recruited when people employ strategies that require allocentric processing, such as planning and navigating novel routes through familiar environments (“wayfinding”). The parietal cortex and striatal circuits, in contrast, are involved in egocentric navigation strategies such as following a well-known route (Hartley et al., 2003; Iaria et al., 2003; Wolbers et al., 2004; Burgess, 2008). While these results seem to mirror the findings of lesion and electrophysiological studies in animals in vista scale space nicely (discussed above), it is important to consider the impact that the scale of space has on the mechanisms involved in navigation.

Allocentric navigation in environmental spaces

Wayfinding is the most commonly used allocentric navigation task in environmental spaces (e.g., Wiener et al., 2004; Hölscher et al., 2011). Wayfinding in familiar environments such as cities, neighborhoods or buildings involves planning novel routes to destinations beyond the current sensory horizon (i.e., in a different vista space). During wayfinding, landmarks specifying the goal location will not be available at the start of the navigation. Thus, in contrast to allocentric navigation in vista spaces, the goal location cannot be computed simply by looking at the landmarks. Rather, computing a trajectory to the goal location requires several steps. The first step involves localizing oneself within the environment, a process that, as we discussed before, is not required in vista scale space navigation. In a second step, the goal needs to be localized. These first two steps can be conceptualized as highlighting two locations in an internal representation of space or cognitive map. Finally, the actual trajectory from the current location to the goal has to be computed. Importantly, this trajectory will rarely be a straight line as it is in tasks such as the MWM. Instead, navigation in environmental spaces often requires planning and following long paths with several decision points. Simply choosing the path option most aligned with the direction of the goal location does not necessarily lead to the destination. This has important implications for the underlying internal mental map: representations that only code spatial relationships between landmarks or locations are not sufficient. Wayfinding in environmental spaces also requires knowledge about connections between places, which is difficult, if not impossible, to represent if all spatial knowledge is coded in a single allocentric reference frame (i.e., in map-like representations of space). As mentioned in Section Scales of Space, the simplest structure to achieve this is a graph-like representation, in which nodes represent places (or vista spaces) and edges refer to the movements required to navigate between neighboring nodes.
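To make the idea of a graph-like representation concrete, the following minimal sketch stores places as nodes and the movements between neighboring places as edge labels, and then plans a route with a breadth-first search. The place names, the movement labels and the function `plan_route` are hypothetical, and breadth-first search is simply one convenient planning procedure chosen for illustration, not a claim about the algorithm used by the brain.

```python
from collections import deque

# Hypothetical place graph: each node is a place (or vista space); each edge
# stores the movement required to reach a neighboring place.
PLACE_GRAPH = {
    "home":    {"square": "go north"},
    "square":  {"home": "go south", "bridge": "go east", "market": "go west"},
    "bridge":  {"square": "go west", "station": "go north"},
    "market":  {"square": "go east"},
    "station": {"bridge": "go south"},
}

def plan_route(graph, start, goal):
    """Return a list of (place, movement) steps from start to goal, or None."""
    queue = deque([start])
    came_from = {start: None}  # predecessor of each visited place
    while queue:
        place = queue.popleft()
        if place == goal:
            break
        for neighbor in graph[place]:
            if neighbor not in came_from:
                came_from[neighbor] = place
                queue.append(neighbor)
    if goal not in came_from:
        return None  # goal is not connected to the start

    # Walk back from the goal to the start, collecting the movements.
    steps = []
    place = goal
    while came_from[place] is not None:
        previous = came_from[place]
        steps.append((previous, graph[previous][place]))
        place = previous
    steps.reverse()
    return steps

route = plan_route(PLACE_GRAPH, "home", "station")
# route == [("home", "go north"), ("square", "go east"), ("bridge", "go north")]
```

Note that the planner needs only the connectivity between places and the local movement stored on each edge; no global coordinates are required at any point, which is what distinguishes this structure from a single map-like reference frame.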

In humans, functional brain imaging studies suggest that the hippocampus is recruited during the learning of environmental spaces (cognitive mapping) and when planning routes or shortcuts through known environments: Wolbers and Büchel (2005) trained participants on a long and complex route featuring several places and landmarks and asked participants to judge the spatial relations between these landmarks. Interestingly, hippocampal activation did not follow overall performance but rather reflected the amount of knowledge acquired in a given experimental session, suggesting prominent hippocampal activation only during early stages of cognitive mapping. Furthermore, hippocampal activation is associated with planning routes through spatial but not social (purely relational) networks (Kumaran and Maguire, 2005) as well as with the quality of the solution (Hartley et al., 2003).

Although these studies demonstrate that the hippocampus is involved in allocentric navigation in environmental spaces, they do not reveal its precise role during wayfinding. As discussed above, wayfinding requires localization of self and destination and the planning of a route between these locations. Furthermore, the route needs to be monitored during travel and further planning en route is often required (Wiener and Mallot, 2003; Hölscher et al., 2011). To isolate the neuronal mechanisms underlying these processes, Spiers and Maguire had experienced London taxi drivers navigate through a virtual reality simulation of London (Spiers and Maguire, 2007a,b). Using retrospective verbal protocols, they isolated different cognitive processes involved in wayfinding and related them to functional brain imaging data recorded during the actual navigation. Neural activity associated with route planning was not limited to the hippocampus, but involved a network of different regions including retrosplenial cortex and prefrontal cortex (PFC). Specifically, hippocampal activation was most prominent during initial planning, suggesting its primary role is to activate or retrieve the relevant spatial information, and the retrosplenial cortex was most active during planning and monitoring progress along the route. In addition, PFC was involved in planning and monitoring, as well as when expectations were violated or when unexpected roadblocks required replanning of the route. Finally, during navigation, the spatial relationship between self and goal location was continuously tracked by several brain regions. Medial prefrontal activity correlated positively with beeline distance to the target; activity in the right subicular/entorhinal region correlated negatively with target distance, and the posterior parietal cortex coded for the egocentric direction to the target. The latter result is in line with findings obtained in vista space paradigms, demonstrating that the posterior parietal cortex represents spatial location relative to a number of egocentric reference frames (Colby, 1998, see also Section “Egocentric Navigation in Vista Space”).

Taken together, successful allocentric navigation in environmental space involves a number of processes, including self-localization, the planning of complex routes, monitoring progress along the route and further (re)planning, that are not necessary when navigating vista spaces. As a consequence, research using vista scale paradigms such as the MWM or the Plus- or T-maze will only be able to identify a subset of the neuronal mechanisms involved in environmental space navigation.

Learning unfamiliar environments

Another important difference between navigation in vista and environmental scale spaces relates to the learning of the environment. To solve the allocentric version of the MWM task, for example, subjects have to know the position of the hidden platform relative to the environmental cues. Essentially, this information can be encoded after finding the platform either by taking a snapshot of the sensory information, which can be compared to the sensory input during navigation to calculate goal direction (Cartwright and Collett, 1983; Cheung et al., 2008), or by memorizing the spatial relations (i.e., vectors) between self-location and environmental landmarks. Learning environmental scale spaces is different, as information about the entire environment cannot be acquired instantaneously, but is experienced over an extended period of time during exploration. That is, information about different parts of the environment is experienced at different times and has to be combined into an integrated representation of space. Knowledge about spatial relations between different parts of the environment comes from fundamental navigation mechanisms such as path integration and spatial updating, which inform about the translations and rotations that occur when navigating between places.
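As a rough illustration of how path integration can relate places that are never seen together, the sketch below accumulates a sequence of rotations and translations into an estimate of the current position, and derives the distance and egocentric turn back to the starting place. The function names, the (turn, distance) movement format and the angle conventions are assumptions made for this example only; real path integration is noisy and accumulates error, which this idealized version ignores.

```python
import math

def integrate_path(movements, heading_deg=0.0):
    """Accumulate (turn_deg, distance) movements, starting at the origin.

    Assumed convention: turns are counterclockwise-positive and applied
    before each translation. Returns (x, y, heading_deg).
    """
    x, y = 0.0, 0.0
    heading = math.radians(heading_deg)
    for turn_deg, distance in movements:
        heading += math.radians(turn_deg)
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    return x, y, math.degrees(heading) % 360.0

def homing_vector(x, y, heading_deg):
    """Distance to the start and the egocentric turn needed to face it."""
    distance = math.hypot(x, y)
    direction_to_start = math.degrees(math.atan2(-y, -x))
    # Wrap the required turn into the interval [-180, 180).
    turn = (direction_to_start - heading_deg + 180.0) % 360.0 - 180.0
    return distance, turn

# Walk 3 units, turn left by 90 degrees, walk 4 units.
x, y, heading = integrate_path([(0.0, 3.0), (90.0, 4.0)])
distance_home, turn_home = homing_vector(x, y, heading)
```

In this example the navigator ends up 5 units from the start even though the start may be out of sight, showing how self-motion signals alone can supply the metric relation between two places experienced at different times.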

Egocentric navigation in environmental spaces

The prototypical egocentric navigation task in environmental spaces is route navigation or route following. In humans, route knowledge is often conceptualized as a series of stimulus-response (S-R) associations (Trullier et al., 1997): the recognition of a place or landmark triggers a movement response that is coded in an egocentric reference frame. In the so-called associative cue strategy this response is explicitly directional (e.g., “Turn left at the Gas Station”). In contrast, in the beacon strategy, the response requires a movement or turn towards a landmark or beacon (e.g., “Turn towards the Gas Station”). Finally, route navigation can also be supported by simply memorizing a series of direction changes (“Left—Right—Right - …”, Waller and Lippa, 2007), in particular when the environment does not provide any salient landmarks.
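The three forms of route knowledge described above differ in what is stored, which a small sketch can make explicit. The landmark names, the dictionaries and the function `follow_route` are hypothetical and serve only to contrast the associative cue strategy, the beacon strategy and the memorized turn sequence.

```python
# Associative cue strategy: recognizing a landmark triggers a directional turn.
ASSOCIATIVE_CUES = {"gas station": "turn left", "church": "turn right"}

# Beacon strategy: recognizing a landmark triggers movement towards it.
BEACONS = {"gas station": "turn towards the gas station"}

# Sequence strategy: only the order of turns is stored, no landmarks at all.
TURN_SEQUENCE = ["left", "right", "right"]

def follow_route(landmarks_seen, associations):
    """Return the responses triggered by the landmarks encountered, in order.

    Landmarks without a stored association trigger no response.
    """
    return [associations[landmark]
            for landmark in landmarks_seen
            if landmark in associations]

def follow_sequence(n_decision_points, turn_sequence):
    """Return the turn taken at each decision point, by position only."""
    return turn_sequence[:n_decision_points]

encountered = ["gas station", "park", "church"]
cue_responses = follow_route(encountered, ASSOCIATIVE_CUES)
beacon_responses = follow_route(encountered, BEACONS)
sequence_responses = follow_sequence(2, TURN_SEQUENCE)
```

The first two strategies fail gracefully when a landmark is missing from memory but depend on recognizing it, whereas the sequence strategy works in landmark-free environments but loses its place as soon as a decision point is miscounted.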

Route learning studies in both real and virtual environmental scale spaces demonstrated that objects located at decision points are more reliably remembered than those that were located between decision points (Aginsky et al., 1997; Schinazi and Epstein, 2010). When presented with isolated objects that were encountered during route learning, neuronal activity in the parahippocampal gyrus is modulated by the navigational relevance of these objects, with strongest activation for objects at decision points that serve as landmarks or associative cues for route knowledge (Janzen and van Turennout, 2004; Janzen and Weststeijn, 2007; Schinazi and Epstein, 2010). Moreover, both behavioral and neural responses to the landmark-object are modulated when primed by an adjacent landmark. Specifically, faster behavioral responses and increased activation in the retrosplenial complex (RSC) are associated with in-route vs. against-route primes, suggesting that the RSC is involved in integrating landmark and path information (Schinazi and Epstein, 2010). These findings are in line with earlier studies, suggesting that retrosplenial areas play an important role in the learning of spatial relationships in large environmental spaces (Wolbers and Büchel, 2005). Electrophysiological findings in monkeys, demonstrating that neurons in the medial parietal regions, analogous to the human RSC, selectively respond to specific S-R associations, are consistent with the idea that the RSC supports associative cue based route learning (Sato et al., 2006).

Some early evidence using standard vista space paradigms such as the T-maze suggests that allocentric strategies can be adopted faster than egocentric response strategies (Tolman et al., 1946). In environmental space, in contrast, parallel acquisition of egocentric and allocentric strategies has been shown in both animals (Rondi-Reig et al., 2006) and humans (Iglói et al., 2009). It is likely that differences in the strategy adopted between vista and environmental spaces result from differences in egocentric navigation strategies. Specifically, learning an egocentric response strategy in vista space paradigms such as the T- or Plus-Maze involves only a single response that is best described as a striatum-dependent motor skill or as habit learning which involves repeated reinforcement (Salmon and Butters, 1995). Egocentric navigation in environmental space, in contrast, usually involves associative components (“Turn right at the church”) as well as memory of a series of movement decisions, the execution of which takes much longer than a single vista space response. The learning of habitual motor programmes that support egocentric navigation in vista spaces may therefore be less suited for environmental scale space.

In contrast to the widely held view that the hippocampus is involved in allocentric but not egocentric processing during navigation (Byrne et al., 2007; Whitlock et al., 2008; Banta Lavenex and Lavenex, 2009), a number of studies have implicated the hippocampus in route learning. Barrash et al. (2000), for example, demonstrated that patients with hippocampal lesions showed impaired route learning performance. Marked route learning impairments have also been demonstrated in patients with Mild Cognitive Impairment (MCI) and early stage Alzheimer’s Disease (Cushman et al., 2008; Pengas et al., 2010), which are primarily characterized by neurodegenerative changes in the medial temporal lobe (Whitwell et al., 2007). Furthermore, knockout mice lacking hippocampal CA1 NMDA receptors are impaired not only in allocentric learning but also in learning a sequence of self-movements (sequential-egocentric learning: “left-right-left”; Rondi-Reig et al., 2006). Interestingly, these knockout mice were not impaired in learning a single self-movement (“turn left”) as required for T- or Plus-Maze vista space paradigms, but only showed deficits in learning successive body turns. These results are in line with findings implicating the hippocampus in the learning of complex motor sequences (Schendan et al., 2003; Gheysen et al., 2010).

Another explanation for the involvement of the hippocampus in route learning comes from its role in episodic memory (Eldridge et al., 2000; Burgess et al., 2002), because each journey through an environment also represents an episode during which different places (or vista spaces) are sequentially experienced. Remembering such a route or episode requires associating places that one has occupied with movement decisions. Indeed, evidence from electrophysiological studies suggests that the hippocampal code for positional information is modulated by past experiences. For example, when rats navigate along more complex paths or routes, firing rates of hippocampal place cells may vary in the same location depending on where the animal has come from (Frank et al., 2000; Wood et al., 2000).

Conclusion/Summary

In this review we have highlighted a number of problems arising in the study of the neuronal mechanisms supporting egocentric and allocentric reference frames in navigation. First, and in contrast to egocentric reference frames, the nature of allocentric reference frames is somewhat ill-defined. For example, any allocentric reference frame requires an origin for its coordinate system. In most environments, however, it is unclear where this origin would lie and whether locations are encoded by means of allocentric vectors relative to the origin of the coordinate system or by their spatial relations to other locations. While some of this confusion may be related to the term “allocentric” itself, which implies that the reference frame has a center, it results in different usage of the term in the literature, and the exact nature of the assumed allocentric reference system often remains underspecified.

Second, to study the neuronal mechanisms involved in allocentric processing one ideally wants a purely allocentric navigation task. However, all navigation paradigms have egocentric components, as actual navigation requires information—such as direction to the destination or direction of turn—in an egocentric format when planning and executing body movements. In allocentric navigation paradigms such as the MWM it is not easy to clearly characterize the allocentric and egocentric components, which makes it more difficult to allocate neuronal activity to these different reference frames.

Third, the egocentric conditions used in the prominent vista space paradigms do not require the processing of egocentric vectors and may therefore not constitute the best possible comparison to hippocampal-dependent allocentric conditions for which the processing of object-to-object vectors is required. In egocentric vista space navigation subjects need to associate beacons with platform positions (MWM) or learn a motor response (Plus Maze). The egocentric information that is learned in these situations is categorical (next to, left, right) rather than metric (vector), and solving these tasks involves habit or motor skill learning to a much greater extent than allocentric versions of the task.

Fourth, ultimately navigation is about reaching destinations beyond the current sensory horizon, that is, it is about movement in environmental space. The vast majority of animal studies addressing the neuronal basis of navigation, however, have employed small scale vista space paradigms. Our knowledge about how results from these studies translate to navigation in environmental scale spaces is limited. We have argued that successful navigation in environmental space, both egocentric and allocentric, involves a number of processes that are not required in vista space navigation. Research using vista scale paradigms will therefore only be able to identify a subset of the neuronal mechanisms involved in everyday navigation. This is demonstrated in findings implicating the hippocampus in route learning in environmental scale spaces—the prototypical egocentric task in environmental spaces. Such findings challenge the notion that the hippocampal circuit is recruited exclusively for allocentric navigation while the striatal circuit is responsible for egocentric navigation and demonstrate that future work needs to address the relationship between neuronal mechanisms underlying navigation in vista and environmental spaces.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Jonathan Shine and Anett Kirmess for their comments and help with writing this manuscript.

References

Aginsky, V., Harris, C., Rensink, R., and Beusmans, J. (1997). Two strategies for learning a route in a driving simulator. J. Environ. Psychol. 17, 317–331. doi: 10.1006/jevp.1997.0070

Banta Lavenex, P., and Lavenex, P. (2009). Spatial memory and the monkey hippocampus: not all space is created equal. Hippocampus 19, 8–19. doi: 10.1002/hipo.20485

Barrash, J., Damasio, H., Adolphs, R., and Tranel, D. (2000). The neuroanatomical correlates of route learning impairment. Neuropsychologia 38, 820–836. doi: 10.1016/s0028-3932(99)00131-1

Brandon, M. P., Koenig, J., Leutgeb, J. K., and Leutgeb, S. (2014). New and distinct hippocampal place codes are generated in a new environment during septal inactivation. Neuron 82, 789–796. doi: 10.1016/j.neuron.2014.04.013

Burgess, N. (2008). Spatial cognition and the brain. Ann. N Y Acad. Sci. 1124, 77–97. doi: 10.1196/annals.1440.002

Burgess, N., Maguire, E. A., and O’Keefe, J. (2002). The human hippocampus and spatial and episodic memory. Neuron 35, 625–641. doi: 10.1016/s0896-6273(02)00830-9

Burwell, R. D., Saddoris, M. P., Bucci, D. J., and Wiig, K. A. (2004). Corticohippocampal contributions to spatial and contextual learning. J. Neurosci. 24, 3826–3836. doi: 10.1523/jneurosci.0410-04.2004

Bush, D., Barry, C., and Burgess, N. (2014). What do grid cells contribute to place cell firing? Trends Neurosci. 37, 136–145. doi: 10.1016/j.tins.2013.12.003

Byrne, P., Becker, S., and Burgess, N. (2007). Remembering the past and imagining the future: a neural model of spatial memory and imagery. Psychol. Rev. 114, 340–375. doi: 10.1037/0033-295x.114.2.340

Cartwright, B. A., and Collett, T. S. (1983). Landmark learning in bees: experiments and models. J. Comp. Physiol. 151, 521–543. doi: 10.1007/bf00605469

Cheng, K. (1986). A purely geometric module in the rat’s spatial representation. Cognition 23, 149–178. doi: 10.1016/0010-0277(86)90041-7

Cheng, K. (2008). Whither geometry? Troubles of the geometric module. Trends Cogn. Sci. 12, 355–361. doi: 10.1016/j.tics.2008.06.004

Cheung, A., Stürzl, W., Zeil, J., and Cheng, K. (2008). The information content of panoramic images II: view-based navigation in nonrectangular experimental arenas. J. Exp. Psychol. Anim. Behav. Process. 34, 15–30. doi: 10.1037/0097-7403.34.1.15

Colby, C. L. (1998). Action-oriented spatial reference frames in cortex. Neuron 20, 15–24. doi: 10.1016/s0896-6273(00)80429-8

Colgin, L. L., Moser, E. I., and Moser, M. B. (2008). Understanding memory through hippocampal remapping. Trends Neurosci. 31, 469–477. doi: 10.1016/j.tins.2008.06.008

Committeri, G., Galati, G., Paradis, A. L., Pizzamiglio, L., Berthoz, A., and LeBihan, D. (2004). Reference frames for spatial cognition: different brain areas are involved in viewer-, object- and landmark-centered judgments about object location. J. Cogn. Neurosci. 16, 1517–1535. doi: 10.1162/0898929042568550

Cushman, L. A., Stein, K., and Duffy, C. J. (2008). Detecting navigational deficits in cognitive aging and Alzheimer disease using virtual reality. Neurology 71, 888–895. doi: 10.1212/01.wnl.0000326262.67613.fe

Derdikman, D., and Moser, E. I. (2010). A manifold of spatial maps in the brain. Trends Cogn. Sci. 14, 561–569. doi: 10.1016/j.tics.2010.09.004

Derdikman, D., Whitlock, J. R., Tsao, A., Fyhn, M., Hafting, T., Moser, M. B., et al. (2009). Fragmentation of grid cell maps in a multicompartment environment. Nat. Neurosci. 12, 1325–1332. doi: 10.1038/nn.2396

Doeller, C. F., King, J. A., and Burgess, N. (2008). Parallel striatal and hippocampal systems for landmarks and boundaries in spatial memory. Proc. Natl. Acad. Sci. U S A 105, 5915–5920. doi: 10.1073/pnas.0801489105

Eichenbaum, H., Stewart, C., and Morris, R. G. (1990). Hippocampal representation in place learning. J. Neurosci. 10, 3531–3542.

Eldridge, L. L., Knowlton, B. J., Furmanski, C. S., Bookheimer, S. Y., and Engel, S. A. (2000). Remembering episodes: a selective role for the hippocampus during retrieval. Nat. Neurosci. 3, 1149–1152. doi: 10.1038/80671

Frank, L. M., Brown, E. N., and Wilson, M. (2000). Trajectory encoding in the hippocampus and entorhinal cortex. Neuron 27, 169–178. doi: 10.1016/s0896-6273(00)00018-0

Franz, G., and Wiener, J. M. (2008). From space syntax to space semantics: a behaviorally and perceptually oriented methodology for the efficient description of the geometry and topology of environments. Environ. Plan. B Plan. Des. 35, 574–592. doi: 10.1068/b33050

Gheysen, F., Van Opstal, F., Roggeman, C., Van Waelvelde, H., and Fias, W. (2010). Hippocampal contribution to early and later stages of implicit motor sequence learning. Exp. Brain Res. 202, 795–807. doi: 10.1007/s00221-010-2186-6

Goodrich-Hunsaker, N. J., Livingstone, S. A., Skelton, R. W., and Hopkins, R. O. (2010). Spatial deficits in a virtual water maze in amnesic participants with hippocampal damage. Hippocampus 20, 481–491. doi: 10.1002/hipo.20651

Hafting, T., Fyhn, M., Molden, S., Moser, M. B., and Moser, E. I. (2005). Microstructure of a spatial map in the entorhinal cortex. Nature 436, 801–806. doi: 10.1038/nature03721

Han, X., and Becker, S. (2014). One spatial map or many? Spatial coding of connected environments. J. Exp. Psychol. Learn. Mem. Cogn. 40, 511–531. doi: 10.1037/a0035259

Hartley, T., Maguire, E. A., Spiers, H. J., and Burgess, N. (2003). The well-worn route and the path less traveled: distinct neural bases of route following and wayfinding in humans. Neuron 37, 877–888. doi: 10.1016/s0896-6273(03)00095-3

Hölscher, C., Tenbrink, T., and Wiener, J. M. (2011). Would you follow your own route description? Cognitive strategies in urban route planning. Cognition 121, 228–247. doi: 10.1016/j.cognition.2011.06.005

Iaria, G., Petrides, M., Dagher, A., Pike, B., and Bohbot, V. D. (2003). Cognitive strategies dependent on the hippocampus and caudate nucleus in human navigation: variability and change with practice. J. Neurosci. 23, 5945–5952.

Iglói, K., Zaoui, M., Berthoz, A., and Rondi-Reig, L. (2009). Sequential egocentric strategy is acquired as early as allocentric strategy: parallel acquisition of these two navigation strategies. Hippocampus 19, 1199–1211. doi: 10.1002/hipo.20595

Jacobs, J., Weidemann, C. T., Miller, J. F., Solway, A., Burke, J. F., Wei, X. X., et al. (2013). Direct recordings of grid-like neuronal activity in human spatial navigation. Nat. Neurosci. 16, 1188–1190. doi: 10.1038/nn.3466

Jahn, G., Wendt, J., Lotze, M., Papenmeier, F., and Huff, M. (2012). Brain activation during spatial updating and attentive tracking of moving targets. Brain Cogn. 78, 105–113. doi: 10.1016/j.bandc.2011.12.001

Janzen, G., and van Turennout, M. (2004). Selective neural representation of objects relevant for navigation. Nat. Neurosci. 7, 673–677. doi: 10.1038/nn1257

Janzen, G., and Weststeijn, C. G. (2007). Neural representation of object location and route direction: an event-related fMRI study. Brain Res. 1165, 116–125. doi: 10.1016/j.brainres.2007.05.074

Killian, N. J., Jutras, M. J., and Buffalo, E. A. (2012). A map of visual space in the primate entorhinal cortex. Nature 491, 761–764. doi: 10.1038/nature11587

Kuipers, B. J. (1978). Modeling spatial knowledge. Cogn. Sci. 2, 129–153. doi: 10.1207/s15516709cog0202_3

Kumaran, D., and Maguire, E. A. (2005). The human hippocampus: cognitive maps or relational memory? J. Neurosci. 25, 7254–7259. doi: 10.1523/jneurosci.1103-05.2005

Lever, C., Burton, S., Jeewajee, A., O’Keefe, J., and Burgess, N. (2009). Boundary vector cells in the subiculum of the hippocampal formation. J. Neurosci. 29, 9771–9777. doi: 10.1523/JNEUROSCI.1319-09.2009

McDonald, R. J., and White, N. M. (1994). Parallel information processing in the water maze: evidence for independent memory systems involving dorsal striatum and hippocampus. Behav. Neural Biol. 61, 260–270. doi: 10.1016/s0163-1047(05)80009-3

Meilinger, T. (2008). “The network of reference frames theory: a synthesis of graphs and cognitive maps,” in International Conference Spatial Cognition (SC 2008). Springer, Berlin, Germany.

Meilinger, T., Riecke, B. E., and Bülthoff, H. H. (2014). Local and global reference frames for environmental spaces. Q. J. Exp. Psychol. (Hove) 67, 542–569. doi: 10.1080/17470218.2013.821145

Montello, D. R. (1993). “Scale and multiple psychologies of space,” in Spatial Information Theory: A Theoretical Basis for GIS. Proceedings of COSIT ’93, eds A. U. Frank and I. Campari (Berlin: Springer-Verlag), Lecture Notes in Computer Science 716, 312–321.

Morris, R. G., Garrud, P., Rawlins, J. N., and O’Keefe, J. (1982). Place navigation impaired in rats with hippocampal lesions. Nature 297, 681–683. doi: 10.1038/297681a0

O’Keefe, J., and Dostrovsky, J. (1971). The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 34, 171–175. doi: 10.1016/0006-8993(71)90358-1

Packard, M. G., and McGaugh, J. L. (1992). Double dissociation of fornix and caudate nucleus lesions on acquisition of two water maze tasks: further evidence for multiple memory systems. Behav. Neurosci. 106, 439–446. doi: 10.1037//0735-7044.106.3.439

Pearce, J. M., Roberts, A. D., and Good, M. (1998). Hippocampal lesions disrupt navigation based on cognitive maps but not heading vectors. Nature 396, 75–77. doi: 10.1038/23941

Pengas, G., Patterson, K., Arnold, R. J., Bird, C. M., Burgess, N., and Nestor, P. J. (2010). Lost and found: bespoke memory testing for Alzheimer’s disease and semantic dementia. J. Alzheimers Dis. 21, 1347–1365. doi: 10.3233/JAD-2010-100654

Poucet, B. (1993). Spatial cognitive maps in animals: new hypotheses on their structure and neural mechanisms. Psychol. Rev. 100, 163–182. doi: 10.1037//0033-295x.100.2.163

Rondi-Reig, L., Petit, G. H., Tobin, C., Tonegawa, S., Mariani, J., and Berthoz, A. (2006). Impaired sequential egocentric and allocentric memories in forebrain-specific-NMDA receptor knock-out mice during a new task dissociating strategies of navigation. J. Neurosci. 26, 4071–4081. doi: 10.1523/jneurosci.3408-05.2006

Salmon, D. P., and Butters, N. (1995). Neurobiology of skill and habit learning. Curr. Opin. Neurobiol. 5, 184–190. doi: 10.1016/0959-4388(95)80025-5

Sato, N., Sakata, H., Tanaka, Y. L., and Taira, M. (2006). Navigation-associated medial parietal neurons in monkeys. Proc. Natl. Acad. Sci. U S A 103, 17001–17006. doi: 10.1073/pnas.0604277103

Schendan, H. E., Searl, M. M., Melrose, R. J., and Stern, C. E. (2003). An fMRI study of the role of the medial temporal lobe in implicit and explicit sequence learning. Neuron 37, 1013–1025. doi: 10.1016/s0896-6273(03)00123-5

Schinazi, V. R., and Epstein, R. A. (2010). Neural correlates of real-world route learning. Neuroimage 53, 725–735. doi: 10.1016/j.neuroimage.2010.06.065

Schindler, A., and Bartels, A. (2013). Parietal cortex codes for egocentric space beyond the field of view. Curr. Biol. 23, 177–182. doi: 10.1016/j.cub.2012.11.060

Schölkopf, B., and Mallot, H. A. (1995). View-based cognitive mapping and path planning. Adapt. Behav. 3, 311–348. doi: 10.1177/105971239500300303

Shelton, A. L., and McNamara, T. P. (2001). Systems of spatial reference in human memory. Cogn. Psychol. 43, 274–310. doi: 10.1006/cogp.2001.0758

Skaggs, W. E., and McNaughton, B. L. (1998). Spatial firing properties of hippocampal CA1 populations in an environment containing two visually identical regions. J. Neurosci. 18, 8455–8466.

Solstad, T., Boccara, C. N., Kropff, E., Moser, M. B., and Moser, E. I. (2008). Representation of geometric borders in the entorhinal cortex. Science 322, 1865–1868. doi: 10.1126/science.1166466

Spiers, H. J., and Maguire, E. A. (2007a). A navigational guidance system in the human brain. Hippocampus 17, 618–626. doi: 10.1002/hipo.20298

Spiers, H. J., and Maguire, E. A. (2007b). Neural substrates of driving behaviour. Neuroimage 36, 245–255. doi: 10.1016/j.neuroimage.2007.02.032

Steffenach, H. A., Witter, M., Moser, M. B., and Moser, E. I. (2005). Spatial memory in the rat requires the dorsolateral band of the entorhinal cortex. Neuron 45, 301–313. doi: 10.1016/j.neuron.2004.12.044

Stevens, A., and Coupe, P. (1978). Distortions in judged spatial relations. Cogn. Psychol. 10, 422–437. doi: 10.1016/0010-0285(78)90006-3

Tolman, E. C., Ritchie, B. F., and Kalish, D. (1946). Studies in spatial learning; place learning versus response learning. J. Exp. Psychol. 36, 221–229. doi: 10.1037/h0060262

Trullier, O., Wiener, S. I., Berthoz, A., and Meyer, J. A. (1997). Biologically based artificial navigation systems: review and prospects. Prog. Neurobiol. 51, 483–544. doi: 10.1016/s0301-0082(96)00060-3

Waller, D., and Lippa, Y. (2007). Landmarks as beacons and associative cues: their role in route learning. Mem. Cognit. 35, 910–924. doi: 10.3758/bf03193465

Wang, R., and Spelke, E. (2002). Human spatial representation: insights from animals. Trends Cogn. Sci. 6, 376–382. doi: 10.1016/s1364-6613(02)01961-7

Wang, R. F., and Brockmole, J. R. (2003). Human navigation in nested environments. J. Exp. Psychol. Learn. Mem. Cogn. 29, 398–404. doi: 10.1037/0278-7393.29.3.398

Whitlock, J. R., Sutherland, R. J., Witter, M. P., Moser, M. B., and Moser, E. I. (2008). Navigating from hippocampus to parietal cortex. Proc. Natl. Acad. Sci. U S A 105, 14755–14762. doi: 10.1073/pnas.0804216105

Whitwell, J. L., Przybelski, S. A., Weigand, S. D., Knopman, D. S., Boeve, B. F., Petersen, R. C., et al. (2007). 3D maps from multiple MRI illustrate changing atrophy patterns as subjects progress from mild cognitive impairment to Alzheimer’s disease. Brain 130, 1777–1786. doi: 10.1093/brain/awm112

Wiener, J. M., and Mallot, H. A. (2003). ‘Fine-to-Coarse’ route planning and navigation in regionalized environments. Spat. Cogn. Comput. 3, 331–358. doi: 10.1207/s15427633scc0304_5

Wiener, J. M., de Condappa, O., Harris, M. A., and Wolbers, T. (2013). Maladaptive bias for extrahippocampal navigation strategies in aging humans. J. Neurosci. 33, 6012–6017. doi: 10.1523/JNEUROSCI.0717-12.2013

Wiener, J. M., Schnee, A., and Mallot, H. A. (2004). Use and interaction of navigation strategies in regionalized environments. J. Environ. Psychol. 24, 475–493. doi: 10.1016/j.jenvp.2004.09.006

Wilton, L. A., Baird, A. L., Muir, J. L., Honey, R. C., and Aggleton, J. P. (2001). Loss of the thalamic nuclei for “head direction” impairs performance on spatial memory tasks in rats. Behav. Neurosci. 115, 861–869. doi: 10.1037//0735-7044.115.4.861

Wolbers, T., and Büchel, C. (2005). Dissociable retrosplenial and hippocampal contributions to successful formation of survey representations. J. Neurosci. 25, 3333–3340. doi: 10.1523/jneurosci.4705-04.2005

Wolbers, T., Hegarty, M., Büchel, C., and Loomis, J. M. (2008). Spatial updating: how the brain keeps track of changing object locations during observer motion. Nat. Neurosci. 11, 1223–1230. doi: 10.1038/nn.2189

Wolbers, T., Weiller, C., and Büchel, C. (2004). Neural foundations of emerging route knowledge in complex spatial environments. Brain Res. Cogn. Brain Res. 21, 401–411. doi: 10.1016/j.cogbrainres.2004.06.013

Wood, E. R., Dudchenko, P. A., Robitsek, R. J., and Eichenbaum, H. (2000). Hippocampal neurons encode information about different types of memory episodes occurring in the same location. Neuron 27, 623–633. doi: 10.1016/s0896-6273(00)00071-4

Keywords: spatial navigation, hippocampus, vista space, environmental space, reference frames, allocentric and egocentric representation

Citation: Wolbers T and Wiener JM (2014) Challenges for identifying the neural mechanisms that support spatial navigation: the impact of spatial scale. Front. Hum. Neurosci. 8:571. doi: 10.3389/fnhum.2014.00571

Received: 27 March 2014; Accepted: 13 July 2014;

Published online: 04 August 2014.

Edited by:

Arne Ekstrom, University of California, Davis, USA

Reviewed by:

Gaspare Galati, Sapienza Università di Roma, Italy

Naohide Yamamoto, Queensland University of Technology, Australia

Copyright © 2014 Wolbers and Wiener. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Thomas Wolbers, German Center for Neurodegenerative Diseases (DZNE), and Center for Behavioural Brain Sciences (CBBS), Otto-von-Guericke University, Leipziger Straße 44, 39120 Magdeburg, Germany e-mail: thomas.wolbers@dzne.de

Thomas Wolbers

Jan M. Wiener