Can Machine Learning Approaches Lead Toward Personalized Cognitive Training?

- ¹Department of Psychology, University of Haifa, Haifa, Israel

- ²The Integrated Brain and Behavior Research Center (IBBR), University of Haifa, Haifa, Israel

Introduction

Cognitive training efficacy is controversial. Although many recent studies indicate that cognitive training shows merit, others fail to demonstrate its efficacy. These inconsistent findings may at least partly result from differences in individuals' ability to benefit from cognitive training in general, and from specific training types in particular. Consistent with the move toward personalized medicine, we propose using machine learning approaches to help optimize cognitive training gains.

Cognitive Training: State-of-the-Art Findings and Debates

Cognitive training targets neurobiological mechanisms underlying emotional and cognitive functions. Indeed, Siegle et al. (2007) suggested that cognitive training can significantly improve mood, daily functioning, and performance in various cognitive domains. In recent years, various types of cognitive training have been researched. Frequently researched types include cognitive bias modification (CBM), which aims to modify cognitive processes such as interpretation and attention, making them more adaptive and better suited to real-life demands (Hallion and Ruscio, 2011); inhibitory training, which seeks to improve inhibitory control and other executive processes, thus helping to regulate behavior and emotion (Cohen et al., 2016; Koster et al., 2017); and working memory training, which targets attentional resources and seeks to increase cognitive abilities by improving working memory capacity (Melby-Lervåg and Hulme, 2013). All these types have shown considerable potential for alleviating psychopathological symptoms or enhancing cognitive functions (Jaeggi et al., 2008; Hakamata et al., 2010).

Despite the accumulating body of evidence suggesting that cognitive training is a promising research path with major clinical potential, questions remain regarding its efficacy and generalizability, and recent meta-analyses further corroborate these doubts (for a discussion, see Mogg et al., 2017; Okon-Singer, 2018). For example, several research groups have meta-analyzed CBM studies. Hakamata et al. (2010) analyzed twelve studies (comprising 467 participants from anxious populations) and reported a moderate positive effect of training on anxiety symptoms. Yet two other meta-analyses covering both anxiety and depression (49 and 45 studies, respectively) found small effect sizes and warned of possible publication bias (Hallion and Ruscio, 2011; Cristea et al., 2015). These inconsistent results raise important questions about training efficacy. Several factors have been suggested as potential sources of this variability in effect sizes, including differences in inclusion criteria and in the quality of the included studies (Cristea et al., 2015).

As in the CBM literature, meta-analyses of working memory training have yielded divergent results. Au et al. (2015) analyzed twenty working memory training studies with samples of healthy adults and reported small positive effects of training on fluid intelligence. The authors suggested that the small effect size underestimates the actual training benefits and may stem from methodological shortcomings and sample characteristics, stating that “it is becoming very clear to us that training on working memory with the goal of trying to increase fluid intelligence holds much promise” (p. 375). Yet two other meta-analyses of working memory training (87 and 47 studies, respectively) reported improvements specific to the trained domain (i.e., near transfer) and little generalization to other cognitive domains (Schwaighofer et al., 2015; Melby-Lervåg et al., 2016). As with CBM, these meta-analyses did not include exactly the same set of studies, making it difficult to pinpoint the reason for the discrepancies. Nevertheless, potential contributors to the variability in intervention efficacy include differences in methodology and inclusion criteria (Melby-Lervåg et al., 2016).

Some scholars have suggested that the inconsistent results across types of training may stem from the high variability in training features, such as dose, design, training type, and type of control group (Karbach and Verhaeghen, 2014). For example, some studies suggest that only active control groups should be used and that untreated (passive) controls are futile (Melby-Lervåg et al., 2016), whereas others found no significant difference between active and passive control groups (Schwaighofer et al., 2015; Weicker et al., 2016). Researchers have also suggested that the type of activity assigned to the active control group (e.g., adaptive or non-adaptive) may influence effect sizes (Weicker et al., 2016): an adaptive control activity may lead to underestimation of training benefits, whereas a non-adaptive one may lead to overestimation (von Bastian and Oberauer, 2014).

Training duration has also been raised as a potential source of variability. Weicker et al. (2016) suggested that the number of training sessions (but not overall training hours) is positively related to training efficacy in brain-injured samples, and that only studies with more than 20 sessions demonstrated long-lasting effects. In a highly influential working memory paper, Jaeggi et al. (2008) compared different numbers of training sessions (8–19). The results showed a dose-dependent effect: the more training sessions participants completed, the greater the “far transfer” improvements. In contrast, in a 2014 meta-analysis, Karbach and Verhaeghen found no dose dependency, as overall training time did not predict training effects. This is somewhat consistent with the meta-analysis of Lampit et al. (2014), which indicated that only schedules of three or fewer sessions per week were beneficial when training cognitively healthy older adults on different types of cognitive tasks. Furthermore, even when the overall number of sessions is fixed, the time gaps between sessions may matter. A study that specifically tested the optimal intensity of working memory training revealed that distributed training (16 sessions over 8 weeks) was more beneficial than high-intensity training (16 sessions over 4 weeks) (Penner et al., 2012). In sum, reviews of the literature maintain that this large variability in training parameters hampers attempts to evaluate the findings (Koster et al., 2017; Mogg et al., 2017).

So far, most studies in the field of cognitive training have focused on establishing the average effectiveness of various training methods, using combined samples of individuals who profited from training and individuals who did not. Consequently, sample heterogeneity may be too high for an efficacy estimate based on the “average individual” in each sample to be informative. We contend that this focus on the average individual contributes to the inconsistent findings, as is also the case with other interventions aimed at improving mental health (Zilcha-Mano, 2018). We argue that the inconsistent findings and large heterogeneity in studies evaluating cognitive training efficacy are not interfering noise but rather important information that can guide training selection. In addition to selecting the optimal training for each individual, achieving maximum efficacy also requires adapting the selected training to each individual's characteristics and needs (Zilcha-Mano, 2018). In line with this notion, studies of training games (i.e., online training platforms presented in a game-like format) have showcased different methods of personalizing cognitive training: (a) selecting the type of training according to a baseline evaluation of cognitive strengths and weaknesses or the trainee's goals, and (b) adapting the ongoing training according to the individual's performance (Shatil et al., 2010; Peretz et al., 2011; Hardy et al., 2015). Until now, however, training personalization has relied on predefined criteria and rationales (e.g., the individual's strengths and weaknesses or personal preferences). An additional approach to personalization, which has become increasingly popular in recent years, is data-driven personalization implemented with machine learning algorithms (Cohen and DeRubeis, 2018).

The observed variation in efficacy across cognitive training studies may serve as a rich source of information to facilitate both intervention selection and intervention adaptation, the two central approaches in personalized medicine (Cohen and DeRubeis, 2018). Intervention selection seeks to optimize efficacy by identifying the most promising type of intervention for a given individual based on as many pre-training characteristics as possible (e.g., age, personality traits, cognitive abilities). Machine learning approaches are especially suitable for this task because they can identify the variables most informative for guiding treatment selection without relying on a specific theory or rationale. Approaches that search for a single patient characteristic to guide training selection treat all other variables as noise. It is more plausible, however, that the optimal training for an individual is determined not by any single factor but by a set of interrelated factors. Traditional approaches to subgroup analysis, which test each factor as a separate hypothesis, can lead to erroneous conclusions due to multiple comparisons (inflated type I error), model misspecification, and multicollinearity. Findings may also be affected by publication bias, because statistically significant moderators have a better chance of being reported in the literature. Machine learning approaches make it feasible to identify the best set of patient characteristics to guide intervention and training selection (Cohen and DeRubeis, 2018; Zilcha-Mano et al., 2018).
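
The multiple-comparisons problem can be made concrete with a short calculation. If k candidate moderators are each tested at significance level alpha, and the tests are assumed to be independent (an idealization, since real moderators are often correlated), the probability of at least one false positive is 1 - (1 - alpha)^k. The Python sketch below computes this family-wise error rate and, for comparison, the rate obtained with a Bonferroni-adjusted per-test level alpha/k; the function names are illustrative only.

```python
def familywise_error_rate(alpha: float, k: int) -> float:
    """Probability of at least one false positive among k tests run at
    significance level alpha, assuming the tests are independent."""
    return 1 - (1 - alpha) ** k


def bonferroni_alpha(alpha: float, k: int) -> float:
    """Per-test level that keeps the family-wise error rate at or below alpha."""
    return alpha / k


for k in (1, 5, 10, 20):
    uncorrected = familywise_error_rate(0.05, k)
    corrected = familywise_error_rate(bonferroni_alpha(0.05, k), k)
    print(f"{k:>2} moderators: uncorrected FWER {uncorrected:.2f}, "
          f"Bonferroni FWER {corrected:.3f}")
```

With 20 moderators each tested at .05, the chance of at least one spurious "significant" moderator is about 64% (1 - 0.95^20 ≈ 0.64), which illustrates why testing factors one at a time can mislead.
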
That said, the flexibility of methods such as decision tree analyses carries a risk of overfitting, which reduces the validity of out-of-sample inference: the model may fit the specific sample on which it was built and therefore fail to generalize to an independent application (Ioannidis, 2005; Open Science Collaboration, 2015; Cohen and DeRubeis, 2018). It is thus important to test out-of-sample prediction, either on a different sample or on a subsample of the original sample that was not used to build the model (e.g., cross-validation).
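
The gap between in-sample and out-of-sample fit can be illustrated with a small k-fold cross-validation sketch in Python. As an assumption for illustration, a 1-nearest-neighbor predictor stands in for a flexible learner such as a decision tree, and a model that always predicts the training mean serves as a simple baseline. The data are simulated so that the baseline score carries no information about the outcome; all names and data are hypothetical.

```python
import random
import statistics


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_mean(xs, ys):
    """Simple baseline: ignore the predictor and return the training-set mean."""
    m = statistics.fmean(ys)
    return lambda x: m


def fit_nearest_neighbor(xs, ys):
    """Flexible model: return the outcome of the closest training case (1-NN)."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared prediction error of `model` on the given cases."""
    return statistics.fmean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def cross_validated_mse(fit, xs, ys, k=5):
    """Average test-fold MSE; each fold is scored by a model that never saw it."""
    errors = []
    for test in k_fold_indices(len(xs), k):
        held_out = set(test)
        train = [i for i in range(len(xs)) if i not in held_out]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errors.append(mse(model, [xs[i] for i in test], [ys[i] for i in test]))
    return statistics.fmean(errors)


# Simulated (hypothetical) data: distinct baseline scores x, pure-noise outcomes y.
rng = random.Random(42)
n = 500
xs = [i / n for i in range(n)]
ys = [rng.gauss(0, 1) for _ in range(n)]

for name, fit in [("mean", fit_mean), ("1-NN", fit_nearest_neighbor)]:
    in_sample = mse(fit(xs, ys), xs, ys)
    out_of_sample = cross_validated_mse(fit, xs, ys)
    print(f"{name:>4}: in-sample MSE {in_sample:.2f}, cross-validated MSE {out_of_sample:.2f}")
```

Because the 1-NN model reproduces every training case exactly, its in-sample error is zero, yet its cross-validated error exceeds that of the mean-only baseline: the apparent fit was memorized noise. The same logic applies when the flexible model is a decision tree and the outcome is training gain.
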

An example of treatment selection from the field of antidepressant medication (ADM) demonstrates the utility of this approach. Current ADM treatments are ineffective for up to half of patients, and patients vary considerably in their response to treatment (Cipriani et al., 2018). Researchers are beginning to recognize the benefits of machine learning approaches for selecting the most effective treatment for each individual. Using the gradient boosting machine (GBM) approach, Chekroud et al. (2016) identified 25 variables as most important in predicting treatment efficacy and were able to improve treatment efficacy in 64% of responders to medication, a 14% increase.

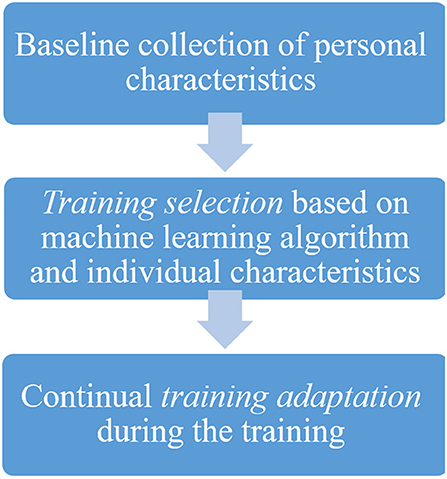

Whereas training selection informs pre-treatment decision-making, training adaptation focuses on continuously adapting the training to the individual (see Figure 1). A patient's baseline characteristics (e.g., age, personality traits, cognitive abilities) and individual performance trajectory during training can be used to tailor the training parameters (training type, time gaps between sessions, number of sessions, overall training hours) to achieve optimal performance. Pooling information from a sample of patients with similar baseline characteristics who underwent the same intervention yields an expected trajectory. Deviations from this expected trajectory act as warning signs and can help adapt the training parameters to the individual's needs (Rubel and Lutz, 2017).
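
A minimal Python sketch of the expected-trajectory idea, under simplifying assumptions: "similar" trainees are defined by a single baseline score within a fixed tolerance, the expected trajectory is the session-wise mean of those trainees' scores, and a session is flagged when the observed score falls more than z standard deviations below that mean. The data, field names, tolerance, and threshold are all hypothetical; the cited work does not prescribe these choices.

```python
import statistics


def expected_trajectory(reference, baseline, tolerance):
    """Session-wise (mean, SD) of scores among reference trainees whose
    baseline lies within `tolerance` of the new trainee's baseline."""
    similar = [r["scores"] for r in reference
               if abs(r["baseline"] - baseline) <= tolerance]
    if len(similar) < 2:
        raise ValueError("need at least two similar reference trainees")
    # zip(*similar) regroups the scores so that each tuple holds one session.
    return [(statistics.fmean(s), statistics.stdev(s)) for s in zip(*similar)]


def flag_deviations(scores, trajectory, z=1.0):
    """Indices of sessions where the observed score is more than z SDs below expectation."""
    return [i for i, (score, (mean, sd)) in enumerate(zip(scores, trajectory))
            if score < mean - z * sd]


# Hypothetical reference sample: a baseline score plus four session scores each.
reference = [
    {"baseline": 50, "scores": [10, 12, 14, 16]},
    {"baseline": 52, "scores": [10, 13, 15, 18]},
    {"baseline": 48, "scores": [9, 11, 14, 17]},
    {"baseline": 80, "scores": [20, 21, 22, 23]},  # dissimilar baseline, excluded
]

trajectory = expected_trajectory(reference, baseline=51, tolerance=5)
print(flag_deviations([10, 12, 12, 13], trajectory))  # -> [2, 3]
```

Here the third and fourth sessions (indices 2 and 3) fall below the band expected for trainees with similar baselines, which in an adaptive scheme could prompt a change in training parameters.
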

An example of treatment adaptation comes from psychotherapy research, where a common adaptation method involves providing therapists with feedback on their patients' progress. This method was developed to address the problem that many therapists are not sufficiently aware of their patients' progress: although many believe they can identify when their patients are progressing as expected and when they are not, in practice this may not be true (Hannan et al., 2005). Many studies have demonstrated the utility of giving therapists feedback on their patients' progress (Lambert et al., 2001; Probst et al., 2014). Shimokawa et al. (2010) found that although some patients continue to improve and benefit from therapy (on-track patients, OT), others deviate from this positive trajectory (not-on-track patients, NOT). These studies provided clinicians with feedback on their patients' state so they could better adapt therapy to the patients' needs. This in turn had a positive effect on treatment outcomes in general, and especially on outcomes for NOT patients, to the point of preventing treatment failure. These treatment adaptation methods have recently evolved to include the nearest neighbor machine learning approach, borrowed from avalanche forecasting research (Brabec and Meister, 2001), as well as other similar approaches that better predict an individual's optimal trajectory and identify deviations from it (Rubel et al., in press).
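
The nearest neighbor idea can be sketched in a few lines of Python: standardize the baseline features, find the k reference trainees closest to a new trainee (Euclidean distance), and average their session-by-session trajectories to obtain a predicted trajectory. The two baseline features (age and working memory span), the data, and k = 2 are hypothetical; published implementations may differ in distance metric, feature weighting, and choice of k.

```python
import math
import statistics


def standardize(rows):
    """Z-score each feature column; returns scaled rows plus column means and SDs."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    sds = [statistics.pstdev(c) or 1.0 for c in cols]  # guard against zero spread
    scaled = [[(v - m) / s for v, m, s in zip(row, means, sds)] for row in rows]
    return scaled, means, sds


def predict_trajectory(ref_baselines, ref_trajectories, new_baseline, k=3):
    """Average the trajectories of the k reference trainees nearest to new_baseline."""
    scaled, means, sds = standardize(ref_baselines)
    target = [(v - m) / s for v, m, s in zip(new_baseline, means, sds)]
    nearest = sorted(range(len(scaled)), key=lambda i: math.dist(scaled[i], target))[:k]
    n_sessions = len(ref_trajectories[0])
    predicted = [statistics.fmean(ref_trajectories[i][t] for i in nearest)
                 for t in range(n_sessions)]
    return predicted, nearest


# Hypothetical reference sample: [age, working memory span] and three session scores.
ref_baselines = [[25, 5], [30, 6], [60, 4], [65, 3], [28, 5]]
ref_trajectories = [[10, 12, 14], [11, 13, 15], [6, 7, 8], [5, 6, 6], [10, 13, 15]]

predicted, neighbors = predict_trajectory(ref_baselines, ref_trajectories, [27, 5], k=2)
print(predicted, sorted(neighbors))  # -> [10.0, 12.5, 14.5] [0, 4]
```

Standardizing first keeps a wide-ranging feature such as age from dominating the distance. The predicted trajectory could then serve as the reference against which a trainee's actual progress is compared, with large deviations signaling a not-on-track course.
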

Machine learning approaches may thus help advance personalized cognitive training. The inconsistencies between studies of the efficacy of CBM, inhibitory training, and working memory training can serve as a rich and varied source of information to guide the selection and adaptation of effective personalized cognitive training. In this way, general open questions, such as optimal training duration and time gaps between sessions, could be replaced with specific questions about which training parameters are most effective for each individual.

Author Contributions

RS managed the planning process of the manuscript, performed all administrative tasks required for submission and drafted the manuscript. HO-S and SZ-M took part in planning, supervision, brainstorming, and writing the manuscript. ST took part in brainstorming and writing the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was supported by the JOY ventures grant for neuro-wellness research awarded to HO-S and SZ-M.

References

Au, J., Sheehan, E., Tsai, N., Duncan, G. J., Buschkuehl, M., and Jaeggi, S. M. (2015). Improving fluid intelligence with training on working memory: a meta-analysis. Psychon. Bull. Rev. 22, 366–377. doi: 10.3758/s13423-014-0699-x

Brabec, B., and Meister, R. (2001). A nearest-neighbor model for regional avalanche forecasting. Ann. Glaciol. 32, 130–134. doi: 10.3189/172756401781819247

Chekroud, A. M., Zotti, R. J., Shehzad, Z., Gueorguieva, R., Johnson, M. K., Trivedi, M. H., et al. (2016). Cross-trial prediction of treatment outcome in depression: a machine learning approach. Lancet Psychiatr. 3, 243–250. doi: 10.1016/S2215-0366(15)00471-X

Cipriani, A., Furukawa, T. A., Salanti, G., Chaimani, A., Atkinson, L. Z., Ogawa, Y., et al. (2018). Comparative efficacy and acceptability of 21 antidepressant drugs for the acute treatment of adults with major depressive disorder: a systematic review and network meta-analysis. Lancet 391, 1357–1366. doi: 10.1016/S0140-6736(17)32802-7

Cohen, N., Margulies, D. S., Ashkenazi, S., Schäfer, A., Taubert, M., Henik, A., et al. (2016). Using executive control training to suppress amygdala reactivity to aversive information. Neuroimage 125, 1022–1031. doi: 10.1016/j.neuroimage.2015.10.069

Cohen, Z. D., and DeRubeis, R. J. (2018). Treatment selection in depression. Ann. Rev. Clin. Psychol. 14, 209–236. doi: 10.1146/annurev-clinpsy-050817-084746

Cristea, I. A., Kok, R. N., and Cuijpers, P. (2015). Efficacy of cognitive bias modification interventions in anxiety and depression: meta-analysis. Br. J. Psychiatr. 206, 7–16. doi: 10.1192/bjp.bp.114.146761

Hakamata, Y., Lissek, S., Bar-Haim, Y., Britton, J. C., Fox, N. A., Leibenluft, E., et al. (2010). Attention bias modification treatment: a meta-analysis toward the establishment of novel treatment for anxiety. Biol. Psychiatr. 68, 982–990. doi: 10.1016/j.biopsych.2010.07.021

Hallion, L. S., and Ruscio, A. M. (2011). A meta-analysis of the effect of cognitive bias modification on anxiety and depression. Psychol. Bull. 137, 940–958. doi: 10.1037/a0024355

Hannan, C., Lambert, M., Harmon, C., Nielsen, S., Smart, D., Shimokawa, K., et al. (2005). A lab test and algorithms for identifying clients at risk for treatment failure. J. Clin. Psychol. 61, 155–163. doi: 10.1002/jclp.20108

Hardy, J. L., Nelson, R. A., Thomason, M. E., Sternberg, D. A., Katovich, K., Farzin, F., et al. (2015). Enhancing cognitive abilities with comprehensive training: a large, online, randomized, active-controlled trial. PLoS ONE 10:e0134467. doi: 10.1371/journal.pone.0134467

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Med. 2:e124. doi: 10.1371/journal.pmed.0020124

Jaeggi, S. M., Buschkuehl, M., Jonides, J., and Perrig, W. J. (2008). Improving fluid intelligence with training on working memory. Proc. Natl. Acad. Sci. U.S.A. 105, 6829–6833. doi: 10.1073/pnas.0801268105

Karbach, J., and Verhaeghen, P. (2014). Making working memory work: a meta-analysis of executive-control and working memory training in older adults. Psychol. Sci. 25, 2027–2037. doi: 10.1177/0956797614548725

Koster, E. H., Hoorelbeke, K., Onraedt, T., Owens, M., and Derakshan, N. (2017). Cognitive control interventions for depression: a systematic review of findings from training studies. Clin. Psychol. Rev. 53, 79–92. doi: 10.1016/j.cpr.2017.02.002

Lambert, M. J., Whipple, J. L., Smart, D. W., Vermeersch, D. A., Nielsen, S. L., and Hawkins, E. J. (2001). The effects of providing therapists with feedback on patient progress during psychotherapy: are outcomes enhanced? Psychother. Res. 11, 49–68. doi: 10.1080/713663852

Lampit, A., Hallock, H., and Valenzuela, M. (2014). Computerized cognitive training in cognitively healthy older adults: a systematic review and meta-analysis of effect modifiers. PLoS Med. 11:e1001756. doi: 10.1371/journal.pmed.1001756

Melby-Lervåg, M., and Hulme, C. (2013). Is working memory training effective? A meta-analytic review. Dev. Psychol. 49, 270–291. doi: 10.1037/a0028228

Melby-Lervåg, M., Redick, T. S., and Hulme, C. (2016). Working memory training does not improve performance on measures of intelligence or other measures of “far transfer”: evidence from a meta-analytic review. Perspect. Psychol. Sci. 11, 512–534. doi: 10.1177/1745691616635612

Mogg, K., Waters, A. M., and Bradley, B. P. (2017). Attention bias modification (ABM): review of effects of multisession ABM training on anxiety and threat-related attention in high-anxious individuals. Clin. Psychol. Sci. 5, 698–717. doi: 10.1177/2167702617696359

Okon-Singer, H. (2018). The role of attention bias to threat in anxiety: mechanisms, modulators and open questions. Curr. Opin. Behav. Sci. 19, 26–30. doi: 10.1016/j.cobeha.2017.09.008

Open Science Collaboration (2015). Estimating the reproducibility of psychological science. Science 349:aac4716. doi: 10.1126/science.aac4716

Penner, I. K., Vogt, A., Stöcklin, M., Gschwind, L., Opwis, K., and Calabrese, P. (2012). Computerised working memory training in healthy adults: a comparison of two different training schedules. Neuropsychol. Rehabil. 22, 716–733. doi: 10.1080/09602011.2012.686883

Peretz, C., Korczyn, A. D., Shatil, E., Aharonson, V., Birnboim, S., and Giladi, N. (2011). Computer-based, personalized cognitive training versus classical computer games: a randomized double-blind prospective trial of cognitive stimulation. Neuroepidemiology 36, 91–99. doi: 10.1159/000323950

Probst, T., Lambert, M. J., Dahlbender, R. W., Loew, T. H., and Tritt, K. (2014). Providing patient progress feedback and clinical support tools to therapists: Is the therapeutic process of patients on-track to recovery enhanced in psychosomatic in-patient therapy under the conditions of routine practice? J. Psychosom. Res. 76, 477–484. doi: 10.1016/j.jpsychores.2014.03.010

Rubel, J. A., Giesemann, J., Zilcha-Mano, S., and Lutz, W. (in press). Predicting process-outcome associations in psychotherapy based on the nearest neighbor approach: the case of the working alliance. Psychother. Res.

Rubel, J. A., and Lutz, W. (2017). “How, when, and why do people change through psychological interventions?—Patient-focused psychotherapy research,” in Routine Outcome Monitoring in Couple and Family Therapy, eds T. Tilden and B. Wampold (Cham: Springer), 227–243. doi: 10.1007/978-3-319-50675-3_13

Schwaighofer, M., Fischer, F., and Bühner, M. (2015). Does working memory training transfer? A meta-analysis including training conditions as moderators. Educ. Psychol. 50, 138–166. doi: 10.1080/00461520.2015.1036274

Shatil, E., Metzer, A., Horvitz, O., and Miller, A. (2010). Home-based personalized cognitive training in MS patients: a study of adherence and cognitive performance. NeuroRehabilitation 26, 143–153. doi: 10.3233/NRE-2010-0546

Shimokawa, K., Lambert, M. J., and Smart, D. W. (2010). Enhancing treatment outcome of patients at risk of treatment failure: meta-analytic and mega-analytic review of a psychotherapy quality assurance system. J. Consult. Clin. Psychol. 78, 298–311. doi: 10.1037/a0019247

Siegle, G. J., Ghinassi, F., and Thase, M. E. (2007). Neurobehavioral therapies in the 21st century: summary of an emerging field and an extended example of cognitive control training for depression. Cognit. Ther. Res. 31, 235–262. doi: 10.1007/s10608-006-9118-6

von Bastian, C. C., and Oberauer, K. (2014). Effects and mechanisms of working memory training: a review. Psychol. Res. 78, 803–820. doi: 10.1007/s00426-013-0524-6

Weicker, J., Villringer, A., and Thöne-Otto, A. (2016). Can impaired working memory functioning be improved by training? A meta-analysis with a special focus on brain injured patients. Neuropsychology 30, 190–212. doi: 10.1037/neu0000227

Zilcha-Mano, S. (2018). Major developments in methods addressing for whom psychotherapy may work for and why. Psychother. Res. 1–16. doi: 10.1080/10503307.2018.1429691

Keywords: cognitive training, cognitive remediation, machine learning, treatment adaptation, treatment selection

Citation: Shani R, Tal S, Zilcha-Mano S and Okon-Singer H (2019) Can Machine Learning Approaches Lead Toward Personalized Cognitive Training? Front. Behav. Neurosci. 13:64. doi: 10.3389/fnbeh.2019.00064

Received: 25 October 2018; Accepted: 13 March 2019;

Published: 04 April 2019.

Edited by:

Benjamin Becker, University of Electronic Science and Technology of China, China

Reviewed by:

Qiang Luo, Fudan University, China

Copyright © 2019 Shani, Tal, Zilcha-Mano and Okon-Singer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Reut Shani, rshani05@campus.haifa.ac.il
