Computational Grounded Cognition: a new alliance between grounded cognition and computational modeling

- 1 Istituto di Scienze e Tecnologie della Cognizione, Consiglio Nazionale delle Ricerche, Roma, Italy

- 2 Istituto di Linguistica Computazionale “Antonio Zampolli”, Consiglio Nazionale delle Ricerche, Pisa, Italy

- 3 Department of Psychology, Emory University, Atlanta, GA, USA

- 4 School of Computing and Mathematics, University of Plymouth, Plymouth, UK

- 5 School of Psychology, University of Dundee, Dundee, Scotland, UK

- 6 Department of Psychology, University of Western Ontario, London, ON, Canada

- 7 School of Social Sciences, Humanities and Arts, University of California, Merced, CA, USA

Embodied theories are increasingly challenging traditional views of cognition by arguing that conceptual representations that constitute our knowledge are grounded in sensory and motor experiences, and processed at this sensorimotor level, rather than being represented and processed abstractly in an amodal conceptual system. Given the established empirical foundation, and the relatively underspecified theories to date, many researchers are extremely interested in embodied cognition but are clamoring for more mechanistic implementations. What is needed at this stage is a push toward explicit computational models that implement sensorimotor grounding as intrinsic to cognitive processes. In this article, six authors from varying backgrounds and approaches address issues concerning the construction of embodied computational models, and illustrate what they view as the critical current and next steps toward mechanistic theories of embodiment. The first part has the form of a dialog between two fictional characters: Ernest, the “experimenter,” and Mary, the “computational modeler.” The dialog consists of an interactive sequence of questions, requests for clarification, challenges, and (tentative) answers, and touches on the most important aspects of grounded theories that should inform computational modeling and, conversely, the impact that computational modeling could have on embodied theories. The second part of the article discusses the most important open challenges for embodied computational modeling.

Introduction

Embodied cognition is a theoretical stance which postulates that sensory and motor experiences are part and parcel of the conceptual representations that constitute our knowledge. This view has challenged the longstanding assumption that our knowledge is represented abstractly in an amodal conceptual network of formal logical symbols. There now exist a large number of interesting and intriguing demonstrations of embodied cognition. Examples include changes in perceptual experience or motor behavior as a result of semantic processing (Boulenger et al., 2006; Meteyard et al., 2008), as well as changes in categorization that reflect sensory and motor experiences (Smith, 2005; Ross et al., 2007). These demonstrations have received a great deal of attention in the literature, and have spurred many researchers to take an embodied approach in their own work. There are also a number of theoretical accounts of how embodied cognition might work (Clark, 1998; Lakoff and Johnson, 1999). One influential proposal is “perceptual symbol systems” theory (Barsalou, 1999), according to which the retrieval of conceptual meaning involves a partial re-enactment of experiences during concept acquisition. However, to a large extent, embodied theories of cognition are still developing, particularly in terms of their computational implementations, as well as their specification with regard to moment-by-moment online processing.

Given the established empirical foundation, and the relatively underspecified theories to date, many researchers are extremely interested in embodied cognition but are clamoring for more mechanistic implementations. What is needed at this stage is a push toward explicit computational models that implement sensorimotor grounding as intrinsic to cognitive processes. With such models, theoretical descriptions can be fleshed out as explicit mechanisms, idiosyncratic patterns across experiments may be explained, and quantitative predictions for new experiments can be put forward.

In this article, six authors from varying backgrounds and approaches address issues concerning the construction of embodied computational models, and illustrate what they view as the critical current and next steps toward mechanistic theories of embodiment. We propose the use of cognitive robotics to implement embodiment, and discuss the main prerequisites for a fruitful cross-fertilization between empirical and robotics research. Cognitive robotics is a broad research area, whose central aim is realizing complete robotic architectures that, on the one hand, include principles and constraints derived from animal and human cognition and, on the other hand, learn to operate autonomously in complex, open-ended scenarios (possibly interacting with humans) and have realistic embodiment, sensors, and effectors.

The relationship between theories of grounded cognition and cognitive robotics is twofold. On the one hand, theories and findings in research on grounded cognition imply that robot design should take into account the fact that a robot’s cognitive capacities should not be independent of its design and the modalities it uses for interacting with the external environment. This poses opportunities and challenges for robotics research (Pfeifer and Scheier, 1999). On the other hand, computational modeling in cognitive robotics can contribute to the development of better theories of embodied cognition by clarifying and testing some of its critical aspects, such as the extent to which embodied phenomena exert a causal influence on cognitive processing, thereby suggesting new avenues of research. Note that we are interested in computational models of embodied cognition in general, and not only for modeling human cognition, although we often use human cognitive abilities as examples in this article.

The article is structured as follows. We begin by clarifying the usage of some terms. The second section takes the form of a dialog between two fictional characters: Ernest, the “experimenter,” and Mary, the “computational modeler.” The dialog consists of an interactive sequence of questions, requests for clarification, challenges, and (tentative) answers. The dialog touches on the most important aspects of grounded theories that should inform computational modeling and, conversely, the impact that computational modeling could have on grounded theories. In the final section, we discuss the most important open challenges for embodied computational modeling, and suggest a roadmap for future research.

The use of terms such as “grounded” and “situated” is somewhat arbitrary, and these terms are often used interchangeably with “embodied.” Because of this issue in the current literature, we introduce some definitions at the outset of this article (cf. Myachykov et al., 2009; Fischer and Shaki, 2011). Together with these definitions, we also provide examples that specifically pertain to numerical cognition because this area of knowledge representation has traditionally been considered as a domain par excellence for abstract and amodal concepts, a view we wish to challenge.

Grounding

At the most general level, cognition has a physical foundation and it is, first and foremost, grounded in the physical properties of the world, such as the presence of gravity and celestial light sources, and constrained by physical principles (at least until we have evidence of life and cognition in a virtual reality). One example of grounding in the domain of numerical cognition is the fact that we associate smaller numbers with lower space and larger numbers with upper space (Ito and Hatta, 2004; Schwarz and Keus, 2004). This association is presumably universal because it reflects the physical necessity that the aggregation of more objects leads to larger piles. The recognition of the physical foundation of cognition has led researchers to challenge traditional theories of cognitive science and AI, in which cognitive operations were conceived as unconstrained manipulations of arbitrary and amodal symbols. The philosophical debate on how concepts and ideas have any meaning and are linked to their referents was revitalized by Searle’s (1980) Chinese room argument and by Harnad’s (1990) paper on symbol grounding, in which he argued that language-like symbols traditionally used in AI are meaningless because they lack grounding and reference to the external world. Harnad argued that the solution to this problem lies in the grounding of symbols in sensorimotor states; in this way, internal manipulations are constrained by the same laws that govern sensory and motor processes. Subsequently, grounded cognition has become the label for a methodological approach to the study of cognition that sees it as “grounded in multiple ways, including simulations, situated action, and, on occasion, bodily states” (Barsalou, 2008a, p. 619).
As such, grounded cognition is different from, and wider than, embodied or situated cognition, because on occasion “cognition can indeed proceed independently of the specific body that encoded the sensorimotor experience” (Barsalou, 2008a). Rather, embodied and situated effects on representation and cognition can be conceptualized as a cascade.

Embodiment

On top of conceptual grounding, embodied representations are shaped by sensorimotor interactions, and consequently by the physical constraints of the individual’s body. Thus, embodiment is a consequence of the filtering properties of our sensory and motor systems, but this input is already structured and shaped in accord with physical principles, and these provide the grounding of cognition. For an example of embodiment in the domain of numerical cognition, consider the ubiquitous fact that small numbers are responded to faster with the left hand and larger numbers with the right hand – the spatial–numerical association of response codes (SNARC) effect. This effect is weaker in people who start counting on the fingers of their right hand compared to those who start counting on their left hand (Fischer, 2008; Lindemann et al., in press), presumably because right-starters associate small numbers with their right side. This shows that the systematic use of one’s body influences the cognitive representation of numbers. Note that “embodied cognition” is often used metonymically so as to refer to “grounded cognition”; the former label is much more popular than the latter, and there is nothing wrong with its use provided that one keeps in mind that its literal meaning is restrictive.

Situatedness

Finally, situated cognition refers to the context dependence of cognitive processing and reflects the possibility that embodied signatures in our performance are context-specific and can be modified through experience. This can be a simple change of posture, as in the crossing of arms that reveals the dominance of allocentric over egocentric spatial coding in the Simon effect (Wallace, 1971). The SNARC effect offers two illustrations of this idea. First, a given number can be associated with either left or right space depending on the range of other numbers in the stimulus set (Dehaene et al., 1993; Fias et al., 1996). Second, turning one’s head alternately to the left and right while generating random numbers leads to a bias, such that left turns evoke more small numbers than do right turns (Loetscher et al., 2008). Both examples illustrate how the specific situation modulates the grounded and embodied representation of numbers (see also Fischer et al., 2009, 2010).

Although most contemporary theories of grounded cognition focus only on a subset of the phenomena that we have described here, future theories should tell a coherent story of how all of the relevant grounded, embodied, and situated phenomena constitute and constrain cognition.

Embodied Cognition and Computational Modeling: A Discussion

Topic 1. What Qualifies as an “Embodied” Computational Model and What are its Most Important Requirements?

Recent research has shown that grounded, embodied, and situated phenomena have a great impact on cognitive processing at all levels rather than being confined to the sensory and motor peripheries. In particular, beyond basic response production, intelligent action coupled with perception epitomizes embodied approaches. This poses significant challenges for computational models in all traditions (symbolic, connectionist, etc.). The first and foremost challenge is that cognition cannot be studied as a module independent from other modules (sensory and motor), as suggested by the “cognitive sandwich” metaphor. Instead, cognition is deeply interrelated with sensorimotor action and affect. Evidence indicates that even complex cognitive operations such as reasoning and language rely on and recruit perceptual and motor brain areas, and that imposing interference in these sensorimotor areas significantly impairs (or enhances) a person’s ability to execute cognitive tasks. Embodiment plausibly exerts its influence also by shaping development; thus, complex cognitive operations are learned based on simpler sensorimotor skills, which provide a ready neural and functional substrate. This implies that cognitive processes cannot be divorced from the sensorimotor processes that provided the scaffold for their development.

Consider a few examples of embodiment signatures in cognition. Spatial associations are frequently used to ground abstract conceptual knowledge, such as numerical magnitudes. This has been documented extensively in the SNARC effect (for a recent meta-analytic review, see Wood et al., 2008). Briefly, smaller magnitudes are associated with left space and larger magnitudes with right space, but this mapping is sensitive to contextual and cultural factors. More recently, the manipulation of magnitudes (arithmetic) has been shown to be mapped onto space, with addition inducing right biases and subtraction inducing left biases (Pinhas and Fischer, 2008; Knops et al., 2009). Another significant example of embodiment signatures in cognition is attention deployment, which plays a central role in forming concepts and directing reasoning within a grounded cognition framework (Grant and Spivey, 2003). In line with the embodied cognition approach, bodily constraints impose corresponding constraints on cognitive functioning and vice versa. Consider first how body postures affect attentional processing. With regard to one’s own postures, attention cannot be cued more laterally if the observer’s eyes are already at their biomechanical limit (Craighero et al., 2004). Similarly, pre-shaping one’s hand influences the selection of large or small objects in a visual search task (Symes et al., 2008), and planning to either point or grasp modulates the space- and object-based deployment of attention (Fischer and Hoellen, 2004) as well as the selection of object features (Bekkering and Neggers, 2002). With regard to perceiving other people’s postures, a large body of work on joint attention has discovered behavioral and neural evidence of a rapid and automatic ability to process another person’s gaze direction (Frischen et al., 2007), head orientation (Hietanen, 2002), and hand aperture (Fischer et al., 2008) to deploy one’s own attention to a likely action goal.
Body postures also affect one’s higher-level cognition. For example, adopting a particular posture will improve one’s recollection of events that involved similar postures, such as reclining on a chair and the associated experience of a dental visit (Dijkstra et al., 2008).

This body of work seems to confirm the tight coupling between sensory and motor maps on the one hand and conceptual processing on the other hand, as postulated by the embodied cognitive approach. It does, however, also raise an architectural challenge for computational modeling because it seems to require persistent cross-talk between domain-specific systems to account for the wide range of embodiment effects on performance. In terms of computational modeling, the main implication of this view is that the specific way in which robots act, perceive their external environment, and strive to survive and obtain reward, must have a significant impact on their cognitive representations and skills, and on how they develop. Indeed, this insight has close relations with a limitation that has been widely recognized in AI research, namely that cognitive processes were implemented by manipulating abstract symbols that were not “grounded” in the external world, and were unrelated to the robot’s action repertoire and perception (Harnad, 1990).

Research in grounded cognition makes an even stronger case for the influence of embodiment on cognition. Not only should representations be grounded, but their processing should essentially be fully embodied as well, such that there is no central processing independent of sensorimotor processes and/or affective experience. For instance, if we consider again the examples mentioned for spatial reasoning and attention, this leads to difficult issues for modelers, such as how spatial relations could be transferred to other domains (e.g., the temporal domain), or how spatial associations of abstract concepts could be simulated. The grounded perspective opens new avenues in robotics research, although a number of open research issues remain to be addressed.

One general set of issues relative to the realization of embodied cognitive models concerns the computational architecture, aside from whether it takes the form of neural networks, Bayesian approaches, production systems, classic AI architectures, or another form. Ernest, an experimenter, and Mary, a computational modeler, discuss these topics.

Requirement 1. Modal versus amodal representations

Mary: What are the most important constraints that grounded cognition poses for computational modeling and robotics? And, conversely, what are the essential features that computational models should have for them to be recognized as being “grounded cognitive models”?

Ernest: Perhaps the first and foremost attribute of a grounded computational model is the implementation of cognitive processes (e.g., memory, reasoning, and language understanding) as depending on modal representations and associated mechanisms for their processing [e.g., Barsalou’s (1999) simulators] rather than on amodal representations, transductions, and abstract rule systems.

Mary: This is a key departure point from most models in AI, which use amodal representations. However, don’t you think that modal representations, such as for instance visual representations, are too impoverished to support cognition? Take for example the visual representation of an apple. It could be sufficient to support decisions on how to grasp the apple, but maybe not for processing the word “apple” or for reasoning about apple market prices.

Ernest: Well, there is a big gap between the visual representation of an apple and the kind of reasoning you have in mind. However, note that embodied cognition does not claim that the brain is a recording system, or that objects are represented as “pictures” in the brain. Rather, the key idea is that the format of representations is still modal when they are manipulated in reasoning, memory, and linguistic tasks. For instance, it is now increasingly recognized that linguistic objects are stored as sensorimotor codes (Pulvermüller, 2005). Since language processing recruits the same circuits as action representation and planning, (bidirectional) interference effects occur, and these have been observed experimentally. There is increasing evidence that the modal nature of representations creates “interferences” with memory and reasoning tasks as well (see Barsalou, 2008a for a review). Note also that to implement a truly embodied cognitive system, multiple modalities are essential. In addition to sensory and motor modalities, internal modalities, including affect, motivation, and reward, are essential from the embodied perspective.

Mary: Then, one can ask: what modalities are critical to include in a model of grounded cognition?

Ernest: Well, the answer depends, I suppose, on the empirical phenomena you want to model and on the specific embodiment of the robots you use. In general, however, it seems obvious that an embodied model of human cognition has to include the perceptual modalities, including, if possible, various aspects of vision, hearing, taste, smell, and touch, as all of them are relevant to human cognition. Note that the different modalities could be organized differently in the brain, and could contribute differently to action control. Excluding task-specific weighting of relevance, it seems important to implement visual dominance over the other modalities because it has frequently been demonstrated in sensory conflict paradigms, as in Calvert et al. (2004), for example. Embodied models of non-human cognition should also consider that non-human animals use multiple modalities as well.

Mary: Implementing multimodality in robots is not simple. There are nowadays many robotic platforms in the market; if we also consider that some of them can be customized, this offers (at least in principle) an ample choice of modalities to be included. However, simply including more modalities does not guarantee better performance, because an important issue concerns the associations among them. How should representations of different modalities be associated into patterns?

Ernest: In principle, this could be done directly, via connections from one modality to another, or indirectly, via association areas that function as hubs, linking modalities.

Mary: In computational terms, a simple, but certainly not unique, method for implementing direct connections from one modality to another is designing robot controllers composed of multiple, interlinked modal “maps” [e.g., Kohonen’s (2001) self-organizing maps], such as for instance motor maps, visual maps, and auditory maps, and see how they become related so as to support cognitive processing. For instance, a robot controller composed of multiple maps can learn coordinated motor programs such as looking at objects, pointing at objects, hearing their sound, etc., and the maps could develop strong associations between object-specific (motor, perceptual) features. Association areas can be designed as well within the same framework, by introducing maps that group the outputs of multiple maps. However, I don’t see any principled criterion for deciding what association areas should be included and how they should be organized.
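Mary's suggestion of interlinked modal maps can be made concrete with a toy sketch. The code below is only an illustration under strong assumptions, not a model proposed in this article: each "map" is a tiny one-dimensional self-organizing map with a fixed learning rate and neighbourhood, the cross-modal links are simple Hebbian co-activation counts, and the feature vectors, the class name `ModalMap`, and the functions `train` and `recall_motor` are all hypothetical.

```python
import math


class ModalMap:
    """Tiny 1-D self-organizing map for one modality (illustrative only)."""

    def __init__(self, n_units, dim):
        # Deterministic start: units spread evenly along the diagonal of [0, 1]^dim.
        self.weights = [[i / (n_units - 1)] * dim for i in range(n_units)]

    def sq_dist(self, unit, x):
        return sum((w - xi) ** 2 for w, xi in zip(self.weights[unit], x))

    def winner(self, x):
        # Best-matching unit: the unit whose weights are closest to the input.
        return min(range(len(self.weights)), key=lambda u: self.sq_dist(u, x))

    def update(self, x, lr=0.3, radius=1):
        # Move the winner and its map neighbours toward the input.
        win = self.winner(x)
        for u, w in enumerate(self.weights):
            if abs(u - win) <= radius:
                for d in range(len(w)):
                    w[d] += lr * (x[d] - w[d])
        return win


def train(episodes, n_units=4, epochs=20):
    visual = ModalMap(n_units, len(episodes[0][0]))
    motor = ModalMap(n_units, len(episodes[0][1]))
    # assoc[i][j] grows whenever visual unit i and motor unit j win together (Hebbian).
    assoc = [[0.0] * n_units for _ in range(n_units)]
    for _ in range(epochs):
        for seen, done in episodes:
            assoc[visual.update(seen)][motor.update(done)] += 1.0
    return visual, motor, assoc


def recall_motor(visual, motor, assoc, seen, width=0.1):
    n = len(assoc)
    # Graded visual activation spreads through the learned links to the motor map.
    act = [math.exp(-visual.sq_dist(u, seen) / width) for u in range(n)]
    drive = [sum(act[i] * assoc[i][j] for i in range(n)) for j in range(n)]
    best = max(range(n), key=lambda j: drive[j])
    return motor.weights[best]


# Hypothetical episodes: (visual features, motor features), all scaled to [0, 1].
episodes = [([0.1, 0.2], [0.2, 0.1]),   # small object seen, precision grip used
            ([0.9, 0.8], [0.8, 0.9])]   # large object seen, power grip used
visual, motor, assoc = train(episodes)
print(recall_motor(visual, motor, assoc, [0.15, 0.2]))
```

After training, a visual input resembling the small object drives the motor unit that was co-active with it, so seeing something partially reactivates the associated motor pattern. An association area in the sense discussed here could be sketched as a third map fed by the winners of the two modal maps, but which such areas to include is exactly the open question Mary raises.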

Ernest: This problem is complicated because modalities seem to have a hierarchical rather than a flat structure. However, we still have an incomplete knowledge of the hierarchical structure of feature areas and association areas, and the connectivity patterns among them. Also important are the unique areas associated with bottom-up activation versus top-down simulation, along with shared areas. Regarding perception, most researchers believe that at least one association area or convergence zone is required for integrating information from different modalities (Damasio, 1989; Simmons and Barsalou, 2003). These ideas offer a starting point for computational modeling, which could be useful for answering many open questions. One question is whether a single area exists in which all types of information are integrated, as proposed by Patterson et al. (2007), or whether there are many, possibly hierarchically organized convergence zones. This question seems ripe for modeling because we have only begun to explore the consequences of various configurations in experimental work. For example, is more than one association area required to account for patterns of conceptual impairment?

Mary: I believe that the computational methodology could help in this regard by assessing the computational advantages that association areas and hierarchies provide, and assessing their costs (in computational terms). In addition, by using computational modeling it becomes possible to investigate the factors that regulate the patterns of connectivity among modalities. For instance, it has been proposed that “far-” senses, such as vision, are often predictive of “near-” senses, such as touch (Verschure and Coolen, 1991), and this would constrain associations and hierarchies. A third important issue concerns investigating the relations between sensory and motor codes. Indeed, in complete robot architectures, not only sensory information, but also motor information, in terms of both planning and execution of movements, would be required, and an important issue for computational modeling is how to integrate them.

Ernest: In cognitive neuroscience and psychology, there is wide interest in how sensory and motor information is integrated in the brain. Traditional theories of planning tend to see sensory, cognitive, and motor codes as different; this implies a transduction from (modal) sensory to (amodal) cognitive codes, in which the latter guides cognitive processing and activates motor codes (Newell and Simon, 1972). By contrast, ideomotor theories of action provide support for the common coding of action and perception (Prinz, 1997; Hommel et al., 2001), which requires no transduction and could provide a better substrate for computational modeling of embodied phenomena. Similar ideas have rapidly gained importance in (social) cognitive neuroscience, due to the discovery of multimodal neurons, such as mirror neurons (Rizzolatti and Craighero, 2004). Many researchers believe that both perception and action rely on a principle of feature binding, whose anatomical and functional aspects are only partly understood. At the functional level, Körding and Wolpert (2004) proposed that the central nervous system combines, in a nearly optimal fashion, multiple sources of information, such as visual, proprioceptive and predicted sensory states, to overcome sensory and motor noise. One advantage here is that neuroscience research is advancing rapidly and continues to provide useful information on how to represent and integrate these types of information.
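One common way to formalize the combination of several noisy sources of information is inverse-variance (precision) weighting. The sketch below assumes independent Gaussian cues, which is a textbook simplification and not necessarily the exact model of Körding and Wolpert (2004); the function name `combine_cues` and all numerical values are hypothetical.

```python
def combine_cues(cues):
    """Fuse independent Gaussian cues given as (mean, variance) pairs.

    Each cue is weighted by its precision (1 / variance), so more reliable
    sources contribute more to the fused estimate.
    """
    precisions = [1.0 / variance for _, variance in cues]
    total_precision = sum(precisions)
    fused_mean = sum(p * mean for p, (mean, _) in zip(precisions, cues)) / total_precision
    fused_variance = 1.0 / total_precision
    return fused_mean, fused_variance


# Hypothetical estimates of hand position in cm: (mean, variance).
visual = (10.0, 1.0)          # vision: fairly reliable
proprioceptive = (14.0, 4.0)  # proprioception: noisier
predicted = (12.0, 2.0)       # prediction from the motor command

print(combine_cues([visual, proprioceptive]))
print(combine_cues([visual, proprioceptive, predicted]))
```

Under these assumptions the fused variance is never larger than that of the most reliable single cue, which is the sense in which such a combination helps to overcome sensory and motor noise.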

Mary: This is indeed a very relevant point for computational modeling of embodiment. In traditional AI and vision research, internal representations are typically defined as functions of the input, and perceptual learning is formulated as the problem of extracting useful “features” from passively received perceptual (mainly visual) stimuli. Even in robotics, most studies (e.g., using reinforcement learning) use fixed sets of representations, which define for instance the current location and pose of the robot. Not only are these representations predefined (by the programmer), but also they are “generic,” or not specifically tied to the motor repertoire of the robot. Conversely, researchers in cognitive robotics increasingly recognize that perception and action form a continuum, and perceptual learning cannot disregard what is behaviorally relevant for the robot (see, e.g., Weiller et al., 2010); or, in other words, that representations should be shaped by the motor repertoire of the robot rather than being generic descriptions of the external world. This has led to a renaissance of the construct of object affordances (Gibson, 1979). Yet another formulation of the same idea is that learning is not a passive process, but is governed by the properties of the learning system. Because robots can actively control their inputs by means of their motor commands, their perceptual representations become dependent on motor skills and imbued with motor information as they explore their environment.

Ernest: Robotics seems to be a good starting place for investigating the mutual relations among the sensory modalities, and between sensory and motor modalities. Going even further in this direction, internal representations should be imbued with value representation, or information from the “internal” modalities, such as affect, interoception, motivation, and reward.

Mary: In robotic scenarios, adding internal motivations to robot architectures offers a natural way to link actions and value. The study of motivational systems has recently been regaining importance in robotic settings (see, e.g., Fellous and Arbib, 2006).

Ernest: This stream of research could have additional advantages. Indeed, not only is value information essential for indicating significance to a robot’s actions, but current research in embodied cognition is revealing that affect, emotion, and the internal states that result from them could play a key role in shaping high-level cognition in a fully embodied system. One possibility is that understanding and producing abstract concepts, such as love or fear, depends on knowledge acquired from introspection (Craig, 2002; Barsalou and Wiemer-Hastings, 2005).

Requirement 2. From sensorimotor experience to cognitive skills: abstraction and abstract thought on top of a modal system

Mary: This leads us to another important topic. Even if we understand how the modalities interact, from a computational viewpoint, we do not know how modal representations can support cognitive processing, nor the wide range of cognitive tasks embodied theories can potentially tackle. Is there any theory of how grounded representations could do that?

Ernest: One of the most “organic” proposals so far is Barsalou’s (1999) idea of simulation. A simulation is “the re-enactment of perceptual, motor, and introspective states acquired during experience with the world, body, and mind” (Barsalou, 2008a, pp. 618–619). The ability to map simulations onto sensorimotor states by using overlapping systems is essential, and permits implementing the top-down construal that characterizes all cognitive activity. A big challenge for computational modeling is realizing simulation mechanisms. This permits testing whether and how they can support cognitive operations ranging from memory tasks to categorization, action planning and symbolic operations, and producing both abstractions and exemplars, both of which are central to cognition.

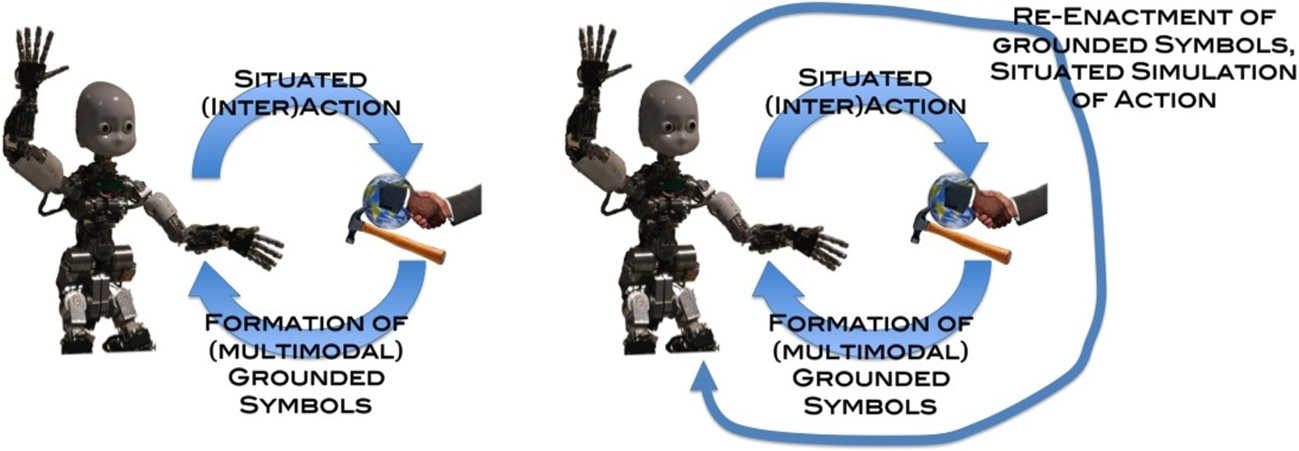

Mary: Then, it seems to me that an embodied cognition picture of cognitive processing could be the following (see Figure 1). First, grounded symbols are formed based on situated interaction of the robot with its environment (including other robots or humans). These symbols are multimodal and link perceptual, motor and valence information related to the same learning episodes. Second, cognitive processing is performed through the re-enactment of grounded symbols: a process that is called “situated simulation.” During situated simulation, what becomes active anew includes not only the relevant episode-specific representations, but also the associated bodily resources and sensorimotor strategies, and so cognition operates under the same constraints and situatedness of action.

Figure 1. A grounded cognition perspective on how grounded (modal) symbols are first formed based on situated interactions with the external environment, and thereafter re-enacted as situated simulations that afford higher-level cognitive processing (having the same characteristics and constraints as embodied and situated action).
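The two steps in this picture (formation of multimodal symbols, then re-enactment from a partial cue) can be sketched in a few lines. This is a deliberately minimal illustration, assuming that a "grounded symbol" is just a stored record binding perceptual, motor and valence features, and that re-enactment is nearest-neighbour pattern completion; the names `Episode`, `GroundedMemory` and `simulate`, and all feature values, are hypothetical and say nothing about how simulation is actually implemented in the brain or in a robot.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    """One situated interaction, bound across modalities (a 'grounded symbol')."""
    label: str
    perceptual: list
    motor: list
    valence: float


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


class GroundedMemory:
    def __init__(self):
        self.episodes = []

    def store(self, episode):
        # Step 1: a multimodal symbol is formed from a situated interaction.
        self.episodes.append(episode)

    def simulate(self, perceptual_cue):
        # Step 2: a partial (perceptual-only) cue re-activates the best-matching
        # episode, bringing its motor and valence components back with it.
        return min(self.episodes,
                   key=lambda e: sq_dist(e.perceptual, perceptual_cue))


memory = GroundedMemory()
memory.store(Episode("ripe apple", [0.9, 0.1], [0.3, 0.7], 1.0))
memory.store(Episode("hot stove", [0.2, 0.9], [0.0, 0.0], -1.0))

reenacted = memory.simulate([0.8, 0.2])  # cue resembles the apple percept
print(reenacted.label, reenacted.motor, reenacted.valence)
```

The point of the sketch is only that the retrieved content is modal: what comes back is the motor and valence pattern of the original episode, not an amodal label. Questions such as speed, accuracy, and how several partial simulations merge are left entirely open.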

Ernest: This seems to me quite an appropriate blueprint. How different is it from standard computational models?

Mary: Well, some existing systems, for instance connectionist architectures, already encode sensorimotor patterns in some form. What is more novel is how sensorimotor patterns are reused, and the idea that grounded symbols can be re-enacted so as to produce grounded cognitive processes. From a computational viewpoint, one interesting aspect of this theory is that a single mechanism, simulation, could underlie a wide range of cognitive phenomena. However, despite the potentialities of this idea, it raises many feasibility issues, such as how quick and accurate simulations should be to be really useful, how many computational resources are required to run simulations in real time, and how simulations of different aspects of the same situation can merge. Feasibility issues are of primary importance for computational modeling; if the idea of situated simulation successfully permeates cognitive robotics, a lot of effort will be required to bridge the gap between its current conceptualization and the full specification of efficient simulation mechanisms. In addition, we still have an incomplete picture of how simulation works. Even if we have a complete architecture provided with multiple modalities, it is still unclear what should be re-enacted that constitutes a simulation.

Ernest: As a first approximation, simulations could be automatic processes that simply re-enact the content of previously stored perceptual symbols, although there could be deliberate uses of simulations as well.

Mary: This simply shifts the problem from the re-enactment to the formation of simulators, and more in general to how the different modalities contribute to specific cognitive tasks. Take categorization as an example. It is difficult to see how individual concepts are extracted from rich multimodal experience, and which mechanisms are responsible for their formation. How should these mechanisms work in practice?

Ernest: Psychologists and neuroscientists have often focused on pattern association in associative areas, which could encode increasingly “abstract” concepts. Nevertheless, how (and which) patterns are associated and classified is only partly understood. One recent idea (Barsalou, 1999) is that categorical representations might emerge when attention is focused repeatedly on the same kind of thing in the world, by utilizing associative mechanisms among modalities, which, in turn, might permit re-enactment and simulation when needed. To the best of my knowledge, this mechanism has never been tested in computational models and would certainly be a valuable contribution to embodied cognition research because it would represent the development of alternative computational mechanisms.
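As a purely illustrative aside, the associative mechanism just described can be sketched as a Hebbian cross-modal memory. This is a minimal sketch under stated assumptions, not an implementation of Barsalou's (1999) proposal: the two "modalities" (visual and motor), the bipolar feature vectors, and the names `train_hebbian` and `reenact` are all hypothetical. Repeated co-occurrence of a visual pattern with a motor pattern strengthens the links between them, so that a later, partial visual cue re-activates the associated motor pattern.

```python
# Hypothetical sketch: Hebbian association between two modalities.
# Patterns use +1/-1 features; 0 marks a feature that is not attended.

def train_hebbian(episodes, n_visual, n_motor):
    """Accumulate outer products of co-occurring visual and motor patterns."""
    weights = [[0.0] * n_visual for _ in range(n_motor)]
    for visual, motor in episodes:
        for i in range(n_motor):
            for j in range(n_visual):
                weights[i][j] += motor[i] * visual[j]
    return weights

def reenact(weights, visual_cue):
    """Re-activate the motor pattern linked to a (possibly partial) visual cue."""
    pattern = []
    for row in weights:
        drive = sum(w * v for w, v in zip(row, visual_cue))
        pattern.append(1 if drive > 0 else -1)
    return pattern

# Invented example patterns (assumptions, not data from any study).
cup_visual = [1, 1, -1, -1, 1, -1]
ball_visual = [-1, 1, 1, -1, -1, 1]
grasp_motor = [1, -1, 1, -1]
kick_motor = [-1, 1, 1, 1]

# Attention falls repeatedly on the same kinds of things.
episodes = [(cup_visual, grasp_motor), (ball_visual, kick_motor)] * 3
weights = train_hebbian(episodes, n_visual=6, n_motor=4)

partial_cup = [1, 1, 0, 0, 1, 0]  # only half of the cup's features are seen
recalled = reenact(weights, partial_cup)
```

In this toy setting `recalled` equals `grasp_motor`: the partial visual cue is enough to re-enact the motor pattern stored with it. A serious model would need noisy, graded, and far richer multimodal input.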

Mary: This is a very good example of what grounded models can offer concerning longstanding questions, like the acquisition of abstract concepts and abstract thought. Also, your example highlights the “style” of embodied cognitive models compared to traditional computational modeling. What seems to me to be crucial here is that the acquisition of representations and skills is itself an embodied and situated process, is grounded in the sensorimotor abilities and bodily resources of the learner, and thus is modulated by the same environmental and cultural circumstances.

Ernest: You are right. Not only should grounded models refrain from using amodal symbols, but also from modeling the acquisition and use of concepts and reasoning skills as abstract processes, or processes that are not subject to the same constraints and laws that govern sensorimotor skills, as has been done in traditional computational modeling of psychological phenomena. This is not to say that all concepts originate in experience, given that there could be nativist contributions as well, only that the empirical contributions to concepts reflect the constraints of actual experience.

Mary: This is a good starting point for a research program in embodied computational modeling. However, computer scientists also have to deal with the soundness and feasibility of their approach; and unfortunately, from a computational viewpoint, the powers and limitations of simulations are still unclear. For instance, similar to traditional theories of conceptual representations, simulations could be too rigid to account for the variety of experience. If simulations and concepts collect (or perhaps average on) experience, how do they adapt to novel situations and how do they get framed around background situations?

Ernest: One possible answer to this question is that simulations are not expected to replay all collected information; instead, they merge with perception to form completely situated experiences and can re-enact different content depending on current goals, sensorimotor, social, and affective states, all of which make (only) some content relevant. In a series of articles, Barsalou (1999, 2008a, 2009) presented various arguments and data indicating that simulators can be considered dynamical systems that produce simulations in a context-specific way that changes continually with experience.

Mary: A second possible limitation of simulations and re-enactment is that they seem to be prima facie related to the here-and-now. Therefore, it is difficult to see how they relate to something outside the present situation.

Ernest: According to Barsalou (1999), simulations re-enact perceptual experience, and the same neural codes implied in the initial experience with the actual objects. However, this does not preclude running simulations that represent future states of affairs. The re-enactment notion should not be confused with passive recollection of past states. Many recent studies have highlighted the importance of simulating future states of affairs to coordinate with the world as it will be, not simply with how it is now, and have argued that preparing for future action, not just recalling it, could be the main adaptive advantage of re-enactment, simulation, and memory systems (Glenberg, 1997; Schacter et al., 2007; Pezzulo, 2008; Bar, 2009; Barsalou, 2009). The richness and multimodality of simulations is useful to produce predictions across domains. A study by Altmann and Kamide (1999) shows that subjects started to look at edible objects more than inedible objects when listening to “the boy will eat” but not “the boy will move,” indicating that people can combine linguistic and non-linguistic cues to generate predictions. (Note that here the terminology is somewhat ambiguous because sometimes “simulation” is used as a synonym of re-enactment and sometimes as a synonym of long-term prediction.) Studies that involve imagination of future states of affairs also report that (visual and motor) simulations and imagery share neural circuits with actual perceptions and actions (Kosslyn, 1994; Jeannerod, 1995) and are subject to the same timing and general constraints. For instance, visual images and perception have the same metric spatial information and are subject to illusions in the same way. Performed and imagined actions respect Fitts’s law of motor control and its occasional violations (Eskenazi et al., 2009; Radulescu et al., 2010).
It has been proposed that detachment from the here-and-now of experience, which, for example, is required for planning actions in the future, could be realized as a sophistication of the predictive and prospective abilities required in motor control and could recruit the same brain areas, rather than being processed in segregated brain areas with abstract representations (Pezzulo and Castelfranchi, 2007, 2009). This view is supported by the close relationship between the neural circuits that underlie motor imagery and motor preparation (Cisek and Kalaska, 2004).
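For readers unfamiliar with Fitts’s law, mentioned above, a commonly used formulation is given here for reference. The symbols are introduced only for illustration and do not appear in the studies cited.

```latex
MT = a + b \, \log_2\!\left(\frac{2D}{W}\right)
```

Here MT is the movement time, D is the distance to the target, W is the target width, and a and b are empirically fitted constants. The claim in the text is that the same speed–accuracy relation holds when the movement is only imagined.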

Mary: Still, we have been talking about non-present circumstances related to the senses and the modalities (future or past states of affairs). It is even harder to imagine how simulations might relate to non-observable circumstances, such as, for example, “transcendental” concepts like space and time. How could space and time be implemented in a grounded system? And how would these implementations allow the system to run simulations of non-present experience with some fidelity to the representation of space and time in actual perception and action? A second issue concerns abstract concepts, including how they can support a full-fledged symbolic system.

Ernest: Well, these are all difficult questions, and I believe that cross-fertilization between empirical research and computational modeling could contribute to clarifying them. One tenet of grounded cognition is that all processes are situated and use modality-specific information rather than being processed in an abstract, amodal, logical space. This means that the representations of space and time in grounded systems, in all their manifestations, draw significantly on the processing of space and time in actual experience. Perception, cognition, and action must be coupled in space and time, and simulations of non-present situations must be implemented in space and time, perhaps using overlapping systems. Internal simulations do not escape this rule; so if abstract concepts and symbolic manipulations are grounded in introspective simulations, they should be sensitive to external space and time, too, and retain sensorimotor aspects. Although realizing how to implement a full-fledged symbolic system is a complex issue, some ideas useful for modelers have been presented. For example, Barsalou (2003) argued that selective attention and categorical memory integration are essential for creating a symbolic system. Once these functions are present, symbolic capabilities can be built upon them, including type-token propositions, predication, categorical inference, conceptual relations, argument binding, productivity, and conceptual combination.

Requirement 3. Realistic linkage of cognitive processes with the body, the sensory and motor surfaces, the environments in which cognition happens, and brain dynamics

Mary: These ideas at least provide some initial direction for creating novel grounded architectures and models. However, we have mostly discussed the modality of representations: do you think that there are additional factors that embodied models should include?

Ernest: According to grounded cognition theories, not only the modalities, but also sensorimotor skills and bodily resources influence cognitive processing, even in abstract domains. For instance, visuomotor strategies and eye movements are reused for abstract thinking; finger movements can be employed for counting; spatial navigation skills can be reused for reasoning in the temporal domain; motor planning processes can be re-enacted for imagining future events, understanding actions executed by others, or as an aid to memory. In these cases, expressions such as “taking a perspective on a problem” or “putting oneself into another’s shoes” or “grasping a concept” have to be taken more literally than normally assumed. Overall, due to their coherent learning processes and to re-enactment, grounded cognitive processes have the same powers, but also the same constraints, as bodily actions.

Mary: I wonder how your examples could be treated in a sound cognitive robotics design methodology. Ideally, rather than focusing on the abstract nature of cognitive problems, modelers should ask first what sensorimotor processes could support them in embodied agents. An emblematic example is temporal reasoning via spatial skills: a somewhat novel way to approach this topic could be learning spatial navigation first, and then reasoning in the temporal domain on top of the spatial representations, by re-enacting similar bodily processes. Clearly, this should be done within an embodied and situated research program rather than generically via computational modeling. I am increasingly more inclined to propose cognitive robotics as the primary methodology with which this and other grounded phenomena could be studied, as it emphasizes the importance of sensorimotor processes, situated action, and the role of the body. Still, it is unclear to me how realistic the bodies of robots should be. What kind of embodiment is necessary to study grounded phenomena? Is the specific embodiment of our models really important for embodied phenomena to happen? Can we study embodied cognition in agents that do not have their own “body” (as in general cognitive agents) or that are just computer simulations of robots’ bodies?

Ernest: Well, we know that most embodied effects are not only due to the way task-related information is represented, re-enacted, and processed, but are also due to the fact that the body is the medium of all cognitive operations, whether they are as simple as situated action or as complex as reasoning. Contrary to traditional cognitive theory, researchers in embodied cognition (e.g., Lakoff and Johnson, 1999) have argued that the body shapes cognition during development and continues to exert an influence at all stages of cognitive processing. Embodiment could have subtle and unexpected effects on cognitive processing. For instance, Glenberg and Kaschak (2002) showed that the action system influences sentence comprehension [the action–sentence compatibility (ACE) effect] and that subjects needed more time to understand sentences when the action required to signal comprehension was in the opposite direction than the target sentence (e.g., upward direction when the sentence referred to downward actions). From all these considerations, I would say that being realistic about embodiment is a must, at least in certain domains. Then, I see the point of your last question: a paradoxical consequence of taking embodiment claims literally is that cognitive robots could not be good models of human cognition because their bodies are too different from the body of humans, and have different computational, sensory, memory, and motor resources. What are the currently available platforms in cognitive robotics, and how well embodied are they?

Mary: Within embodiment research, cognitive models can be based on a variety of tools and platforms ranging from general cognitive agent systems (including multi-agent systems), to robot simulation models, up to physical robot platforms in cognitive robotics.

• Cognitive agents. Through these models we can typically simulate only selected features of the agent’s embodied system. For example an agent can have a retina-like visual system, and a motor control system to navigate the environment. This is the case for models of environment navigation as in foraging tasks. Moreover, these models are suitable for multi-agent simulation where we also investigate social and interaction aspects of cognitive processing. For example, Cangelosi (2001) implemented a multi-agent model of the evolution of communication. In it, a population of simulated abstract agents have to perform a foraging task. They can perceive the visual properties of objects (“mushrooms”) that determine their category of edible and inedible objects. Moreover, agents have a motor system to navigate the 2D world and approach/avoid foods. The perceptual and motor systems are implemented through a connectionist network, which also includes information relevant to the agent’s basic drives, such as hunger. Through this essential modeling of the agent’s sensorimotor system, it has been possible to investigate the symbol grounding problem in language learning and evolution (see also Cangelosi, 2010).

• Simulated robotic agents. These include realistic models of an existing robot, such as simulation models of the iCub humanoid platform (Tikhanoff et al., 2008), which is an open source robotic platform specifically developed for cognitive research, and of mobile robots such as Khepera (Nolfi and Floreano, 2000). Moreover, it is possible to build physics-realistic models that do not correspond to living systems, such as in studies of the evolution of morphology (Pfeifer and Bongard, 2006). Typically these simulation models are based on physics engines that simulate the physics of object–object interaction dynamics with a high degree of fidelity. Despite the fact that the use of a simulation might not provide a full model of the complexity present in the real environment and might not assure fully reliable transferability of the controller from the simulation environment to the real one, robotic simulations are of great interest for cognitive scientists (Ziemke, 2003). For example, a simulator for the iCub robot (Metta et al., 2008; Tikhanoff et al., 2008) magnifies the value a research group can extract from the physical robot by making it more practical to share a single robot between several researchers. The fact that the simulator is free and open makes it a simple way for people interested in the robot to begin learning about its capabilities and design, with an easy “upgrade” path to the actual robot due to the protocol-level compatibility of the simulator and the physical robot. And for those without the means to purchase or build a humanoid robot, such as small laboratories or hobbyists, the simulator at least opens a door to participate in this area of research.

• Physical robot platforms in cognitive robotics. This is for embodied models of cognitive capabilities directly implemented and tested in the physical platform such as the iCub robot (Metta et al., 2008; Macura et al., 2009). Physical robot models are important when one wants to study the detailed physics of interaction dynamics of specific configurations of sensors and actuators. The main field of cognitive modeling relying on physical robot platforms is that of cognitive robotics (Metta and Cangelosi, in press). In particular, cognitive robotics regards the use of bio-inspired methods for the design of sensorimotor, cognitive, and social capabilities in autonomous robots. Robots are required to learn such capabilities (e.g., attention and perception, object manipulation, linguistic communication, social interaction), through interaction with their environment, and via incremental developmental stages. Cognitive robotics, especially approaches that focus on the modeling of developmental stages (aka developmental and epigenetic robotics), can be very beneficial in investigating the role of embodiment, from the early stages of cognitive development to well developed cognitive systems, and to study how bodies and cognitive abilities co-evolve and exert significant influences on one another.

The choice of the most suitable modeling approach from the three methodologies listed above depends on the specific aims of the research, the availability of resources, and the consideration of the technical issues specific to the chosen methodology. For example, the first two approaches based on cognitive agents and on simulated robot agents are useful when the details of the whole embodiment system are not crucial, but rather it is important to investigate the role of specific sensorimotor properties in cognitive modeling. Moreover, the practical and technical requirements of the first two methods are limited as they are mostly based on software simulations. Instead, the work with physical robot platforms, as in cognitive and developmental robotics, has the important advantage of considering the constraints of a whole, integrated embodiment architecture. In addition, robotics experiments can demonstrate that what has been observed in simulation models can actually be extrapolated to real robot platforms. This enhances the potential scientific and technological impact of the research, as well as further demonstrating the validity of cognitive theories and hypotheses.
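To make the first of these approaches concrete, the following is a minimal sketch of a cognitive agent in the spirit of the foraging example above: a small connectionist controller that maps visual features of a “mushroom” and a hunger drive onto an approach/avoid decision. Everything specific here is an assumption made for illustration: the three binary visual features, the convention that the first feature marks edibility, the function names `act` and `train`, and the use of the perceptron learning rule. It is not the architecture or the training method of Cangelosi (2001).

```python
from itertools import product

def act(weights, visual, hunger):
    """Return 1 (approach) or 0 (avoid) from visual features and a hunger drive."""
    inputs = list(visual) + [hunger, 1]  # the trailing 1 is a bias input
    drive = sum(w * x for w, x in zip(weights, inputs))
    return 1 if drive > 0 else 0

def train(epochs=200):
    """Fit the weights with the perceptron rule (an assumed learning method)."""
    weights = [0.0] * 5  # 3 visual features + hunger + bias
    cases = [(v, h) for v in product([0, 1], repeat=3) for h in (0, 1)]
    for _ in range(epochs):
        errors = 0
        for visual, hunger in cases:
            # Hypothetical task: approach only edible food, and only when hungry.
            target = 1 if (visual[0] == 1 and hunger == 1) else 0
            delta = target - act(weights, visual, hunger)
            if delta != 0:
                errors += 1
                inputs = list(visual) + [hunger, 1]
                weights = [w + delta * x for w, x in zip(weights, inputs)]
        if errors == 0:  # every case is handled correctly; stop early
            break
    return weights

weights = train()
hungry_sees_edible = act(weights, (1, 0, 1), hunger=1)
sated_sees_edible = act(weights, (1, 0, 1), hunger=0)
hungry_sees_inedible = act(weights, (0, 1, 1), hunger=1)
```

Even in this toy form, the decision depends jointly on perception and on a bodily drive, which is the property that makes such agents relevant to grounding. The task is linearly separable, so the perceptron rule is guaranteed to converge here; richer tasks would need hidden units and a different training scheme.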

Ernest: I see that there is a range of possibilities here. Do you think that, to study cognition, the bodies of robots should be the same, or very similar to, the bodies of humans or of other animals?

Mary: The kind of embodiment and the constraints that have to be taken into consideration depend on what you expect from embodied computational modeling. On the one hand, modeling can help to find novel ways of understanding phenomena that are potentially applicable to cognition in general, such as the idea that sensory processing, categorization, and action planning are interdependent rather than separate processing stages. On the other hand, if one aims to produce specific predictions about, say, humans, then she should aim at replicating the same bodies and the same constraints (e.g., environmental and social), or at least a useful approximation – which could be difficult to define a priori.

Ernest: One could argue that this is not the whole story, though, since there are additional constraints that could be potentially central to embodied cognitive modeling, such as brain dynamics and their peculiarities and limitations, which could also be part of the robot embodiment in some sense.

Mary: I see your point here. Modeling in general is about finding useful abstractions, but it is difficult to define a priori which constraints should be included in embodied computational models, and which should not. There is a debate on this topic in the cognitive robotics community, with positions that range from defenders of biologically constrained methods to the less demanding artificial life approach. Although this is still an open point, it is necessary to recognize that, compared to traditional AI methodology that focuses on “abstract” or “general” intelligence or problem solvers, embodied cognitive modeling suffers more from the idiosyncrasies of what is meant to be modeled, be it a human or another living organism, because it takes a theoretical stance on the role of the “substratum” of cognition and the body.

Ernest: I see another problem of embodied computational models compared to traditional ones. Indeed, one important aspect of embodied models is that they should be coherent at the architectural level; or, in other terms, that their functions should not be developed by fully encapsulated models that work in isolation. This is especially true for the realization of higher-level cognitive abilities, such as reasoning, language, and categorical thinking, which cannot be totally disjoint from the neural systems that, say, direct eye movements and attention, regulate posture, or prepare actions to be executed.

Mary: This seems to rule out the hybrid approaches that are popular in robotics, in which complex cognitive skills are juxtaposed to basic sensorimotor skills, with a minimal (and predefined) interface. In addition, this poses a challenge to any kind of modular design in which functionalities are partly or completely encapsulated and do not interact, calling overall for a truly integrative theory. Understanding to what extent modelers can use modular design and what functionalities actually interact in any given cognitive process is both an important research aim and crucial for the realization of working robotic systems. Indeed, to achieve the latter aim, it is not enough to simply connect all components; rather, the design of their coordination is crucial (Barsalou et al., 2007).

Interim conclusion. Novelty of grounded cognitive modeling and cognitive robotics

Ernest: We have discussed many important ingredients of embodied computational models, but I can easily imagine that some of them are already used in computational modeling and robotics. In your opinion, what are the most novel elements?

Mary: There are a few points that circulate to some extent in the literature, but to which embodied computational modeling should give extra emphasis: (1) Representations (grounded modal symbols) and cognitive abilities are not “given” but learned through sensorimotor interaction and on top of sensorimotor skills and genetically specified abilities. Take as an example spatial abilities. A natural way to implement them using early connectionist (PDP) models is to encode spatial relations in the input nodes, and then let the agent learn navigation on top of them by capturing relevant statistics in the input. Rather, in this methodology even spatial relations should be autonomously acquired. (2) Higher-level cognitive abilities (in the individual and social domains) develop on top of the architecture for sensorimotor control. The re-enactment of modal representations rather than the re-coding in amodal format determines them, and they typically reuse existing sensorimotor competences in novel, more cognitive domains (e.g., visuomotor strategies for the temporal domain; counting with fingers) rather than using novel components. (3) Embodied cognitive modeling should go beyond isolated models, for example, attention models, memory models, and navigation models, to focus on complete architectures that develop their skills over time (see, e.g., Anderson, 2010). (4) Embodied cognitive modeling should emphasize the fact that robots and agents are naturally oriented to action. Other abilities (e.g., representation ability, memory ability, categorization ability, attention ability) could be in the service of action themselves, rather than having disconnected functions (e.g., vision as a re-coding of the external world). This latter point would have an impact on the traditional conceptualization of cognition as a stage in the perception–cognition–action pipeline.

Ernest: I see that all these are important points, and I am sure that future research will point out other relevant ingredients as well. Concerning the impact that your research could have, I expect that if a strong case can be made for the efficacy of embodied cognitive models, it would contribute to the success of grounded theories in general (as was the case for the adoption of amodal representations in cognitive science under the influence of early AI systems based on the manipulation of logic rules). So, my question is: what is the equivalent of the grounded perspective in computational modeling?

Mary: Unfortunately, cognitive modeling does not yet have standard, off-the-shelf solutions for implementing grounded phenomena, but there are several lines of research that could lead to convincing solutions. As a reaction to the conceptual and technical limitations of early AI symbolic systems, connectionism emphasized that cognition is based on distributed representations and processing (e.g., statistical processing) rather than on the manipulation of amodal symbols and abstract rules. Similarly, Bayesian systems showed the full effect of statistical manipulations on representations and structures (most of the time, however, this has been shown on predefined representations). Although connectionist and Bayesian systems might provide a good starting point for modeling grounded phenomena, they are not complete answers per se. In most connectionist and Bayesian architectures, processing occurs in modular systems separated from the modalities (similar conceptually to a transduction from modal to amodal representations), and the processing of cognitive tasks is specialized rather than shared with perception and action (similar conceptually to the manipulation of abstract rules, except that they are not explicitly represented but are implicitly encoded in the weights of the networks). This means that sensory and motor modalities do not affect cognition during processing, even though they can do so during development. This would be a weak demonstration of grounding and embodiment, showing only that sensorimotor and bodily processes shape cognition during development, not that they take part in cognitive processing itself. Another extremely interesting research approach is dynamic systems, which emphasize situated action and the importance of a tight coupling with the external environment for the realization of all perceptual, action, and cognitive phenomena, as well as for their development (see, e.g., Thelen and Smith, 1994).

Within dynamic systems and dynamic fields thinking, cognition is mediated by the dynamics of sensorimotor coordination, and is sensitive to its parameters (e.g., activation level of dynamic fields, and their timing), rather than being separated from the sensory and motor surfaces. For this reason, dynamic systems could be an ideal starting point for modeling grounded cognition (Schöner, 2008). In addition, dynamic systems could potentially offer explanations that span multiple levels, including brain dynamics, sensorimotor interactions, and social interaction, all using the same language and theoretical constructs. However, the full potential of this approach has not been shown yet. First, we need increasingly more dynamic systems models of the higher-level cognitive phenomena that interest psychologists, and which provide novel accounts of existing data. An interesting example is Thelen et al.’s (2001) model, which offers a novel explanation of children’s behavior in the A-not-B paradigm. However, much remains to be done in this direction if dynamic systems want to become a paradigm for implementing complex operations on modal systems. Second, these systems should be increasingly embodied, instantiated for instance in robotic architectures, and tested in increasingly realistic situated (individual and social) scenarios, in order to tell a more complete story about the passage from realistic sensorimotor processing to realistic higher-level cognitive and social tasks. Third, dynamic systems tend to de-emphasize (or reformulate) internal representation and related notions, which are common currency in psychological and neuroscientific explanations, in favor of a novel ontology that includes conceptual terms such as “stability,” “synchronization,” “attractor,” and “bifurcation.” Besides the adequacy of these ideas, there is clearly a more sociological issue, and a new theoretical synthesis is required. 
It is logically possible that the new ontology replaces the old one (but then it is necessary that it provides higher explanatory power, and that this is clearly acknowledged by psychologists and neuroscientists), that it re-explains the old one, offering novel and potentially more interesting theories of traditional concepts such as “representation,” or that the two ontologies can be harmonized to some extent, but clearly the foundational aspects of a “dynamicist” cognitive science should be clarified before it can really offer itself as a novel candidate paradigm (Spivey, 2007). Although I have emphasized dynamic systems research, different research traditions, including for instance Bayesian approaches and connectionist networks, offer a good starting point for developing embodied cognitive models as well, provided that they successfully face the same challenges that I outlined before. However, I believe that a necessary complement to all these methodologies is to increasingly adopt cognitive robotics as their experimental platform, rather than designing models of isolated phenomena, or relaxing too many constraints about sensorimotor processing and embodiment. Indeed, it seems to me that cognitive robotics offers a key advantage to the aforementioned methodologies, because it emphasizes almost all of the components of grounded models: the importance of embodiment, the loop among perceptual, motor and cognitive skills, and the mutual dependence of cognition and sensorimotor processes.
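To give a flavor of the dynamic field formalism referred to above, a common Amari-type field equation is sketched here. It is offered as a generic illustration of the approach, not as the specific equation of any model cited in this article, and all symbols are introduced here for that purpose.

```latex
\tau \, \dot{u}(x,t) = -u(x,t) + h + S(x,t) + \int w(x - x') \, f\big(u(x',t)\big) \, dx'
```

Here u(x,t) is the activation of the field at location x (for example, a movement direction) at time t, τ sets the time scale, h is a negative resting level, S(x,t) is the sensory or task input, w is an interaction kernel (typically local excitation with broader inhibition), and f is a sigmoidal output function. Parameters such as the resting level and the timing of the input are the kind of “parameters of sensorimotor coordination” to which cognition is said to be sensitive in this framework: a peak of activation that sustains itself after the input is removed can serve as a memory, and whether it does so depends on those parameters.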

Topic 2. What can Embodied Cognition Learn From Computational Modeling and the Synthetic Methodology?

We have discussed what aspects of grounded cognition theories should inform computational modeling and the realization of robots informed by embodied cognitive abilities. Apart from the obvious scientific and technological achievements that these robots could provide, we have argued that computational models could help to answer open questions in the grounded cognition literature, and we have offered a few examples of this potential cross-fertilization. Here we focus on methodological issues: the role that computational modeling could play in developing grounded theories of cognition, and how it can complement theoretical and empirical research.

Ernest: It seems to me that computational modeling, as a methodology, is highly complementary to empirical research. It can help shed light on some aspects of grounded theories that are difficult to assess with empirical means only, and in doing so it can help to formulate better theories and specific predictions that can be tested empirically, and to even falsify current views (or at least lower our confidence level) by showing that they are computationally untenable. However, prima facie it is difficult to imagine how designing efficacious robots could be a convincing argument for psychologists and neuroscientists for or against a certain theory.

Mary: The primary role of embodied cognition models is not necessarily that of designing physical robots, such as the iCub, that are capable of reproducing human embodiment phenomena, although this is also a crucial benefit, as demonstrated by the advantages of biologically inspired systems. Rather, for cognitive scientists the robotic and computer simulation models are a way to verify and extend their hypotheses and theories. A simulation model can be viewed as the implementation of a theory in a computer or robot platform. A theory is a set of formal definitions and general assertions that describe and explain a class of phenomena. Examples of general cognitive theories are the ones on embodied cognition (as discussed in this article) but also other general, and hard to test theories such as in language evolution research that hypothesize a specific ability as the major factor explaining the origins of language (e.g., gestural communication for Armstrong et al., 1995; and tool making for Corballis, 2003). Theories expressed as simulations possess three characteristics that may be crucial for progress in the study of cognition (Cangelosi and Parisi, 2002). First, if one expresses one’s theory as a computer program, the theory cannot help but be explicit, detailed, consistent, and complete because, if it lacks these properties, the theory/program would not run in the computer and would not generate results. Second, a theory expressed as a computer program helps generate detailed predictions because, as we have said, when the program runs in the computer, the simulation results are the predictions (even predictions not thought of by the researcher) for human behavior derived from the theory. And finally, computer simulations are not only theories but also virtual experimental laboratories. 
As in a real experimental laboratory, a simulation, once constructed, allows the researcher to observe phenomena under controlled conditions, to manipulate the conditions and variables that control the phenomena, and to determine the consequences of these manipulations. Indeed, this is one of the main advantages of computational modeling over other techniques. Computer simulations answer questions that cannot be addressed directly by empirical research; for instance, because the addressed phenomena cannot be observed directly or replicated (e.g., evolutionary phenomena) or are simply too difficult, risky, unethical, or expensive to test in the real world (e.g., the consequences of different learning episodes or model architectures for development). In addition, certain empirical phenomena depend on systemic and computational constraints on the behaving (or cognitive) system, irrespective of whether these constraints are posed by the system itself (e.g., bounded resources) or by external factors (e.g., situatedness). For instance, in a computational study reviewed below, Pezzulo and Calvi (in press) investigated what simulators could emerge in a perceptual symbol system due to limited resources and other computational constraints.

Ernest: This is indeed interesting, and I see how computational modeling could contribute to the development and refinement of embodied theories of cognition. For instance, one issue that is widely debated is how to interpret the activation of the motor system during “cognitive” tasks, such as language understanding; or, in other terms, assessing if embodied phenomena are causal or epiphenomenal. So far, the most common methodology consists of measuring the time course of activation of the brain areas; for instance, of motor areas during language perception (Pulvermüller, 2005). In brief, early activations are more compatible with the view that embodied cognition plays a causal role. A more direct approach to the understanding of causality consists of interfering with the cognitive process, such as in TMS studies, but also with behavioral paradigms that create interference between tasks (e.g., a motor and a higher-level cognitive task).

Mary: Computational modeling can in principle help to resolve the aforementioned debate by providing principled ways to assess causality in cognitive processes, or at least provide a “sufficiency proof” that certain cognitive tasks, whose functioning is still unclear, can be explained on the basis of embodied phenomena. For instance, it is possible to compare how competing (epiphenomenal versus causal) computational models explain motor involvement during perception of language (Pulvermüller, 2005; Garagnani et al., 2007) or affordances (Tucker and Ellis, 2001, see later). In “epiphenomenal” models, representations (e.g., linguistic representations) are amodal and their processing is modular (i.e., separated from the sensory and action cortices), and when the “central” processing affects the “peripheries,” this is an epiphenomenon without causal influence. On the contrary, in “causal” models all processing involves simulations and manipulation of modal representations. Implementing causal and epiphenomenal theories in computational terms, and embodying them into robot or agent architectures, can help to disambiguate their explanatory power, and to compare their empirical predictions.
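To make the contrast concrete, the following is a minimal toy sketch, not a model taken from any of the studies cited above. It assumes a hypothetical two-unit system: a "central" unit driven by a word input and a "motor" unit driven by the central unit. In the causal variant the motor unit feeds back into central processing; in the epiphenomenal variant it does not. The function name `simulate`, the weights, and the "lesion" manipulation (loosely analogous to a TMS-style interference) are all illustrative assumptions.

```python
from typing import Tuple


def simulate(causal: bool, motor_lesioned: bool, steps: int = 50) -> Tuple[float, float]:
    """Run a toy two-unit model and return (central, motor) activation.

    All weights are arbitrary illustrative values, not fitted to data.
    """
    central = 0.0
    motor = 0.0
    word_input = 1.0  # constant input standing in for a perceived action word

    for _ in range(steps):
        # In both variants, central processing drives the motor unit,
        # unless the motor unit is "lesioned" (its drive is clamped to zero).
        motor_drive = 0.0 if motor_lesioned else central
        motor = 0.5 * motor + 0.5 * motor_drive

        # Only in the causal variant does motor activity feed back
        # into central (comprehension-related) processing.
        feedback = motor if causal else 0.0
        central = 0.5 * central + 0.25 * word_input + 0.25 * feedback

    return central, motor


if __name__ == "__main__":
    for causal in (True, False):
        label = "causal" if causal else "epiphenomenal"
        intact = simulate(causal, motor_lesioned=False)
        lesioned = simulate(causal, motor_lesioned=True)
        print(label, "intact:", intact, "lesioned:", lesioned)
```

In this sketch both variants show motor activation during "word processing" when intact, so motor involvement alone does not distinguish them. They diverge only under interference: lesioning the motor unit lowers central activation in the causal variant and leaves it unchanged in the epiphenomenal one, which is the kind of differing empirical prediction the two classes of model are meant to expose.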

Ernest: Another important issue for which computational models can provide insight is the apparently contradictory evidence on facilitatory or inhibitory roles of embodied processes. For instance, observation of actions performed by others can either facilitate or inhibit one’s own motor actions, depending not only on the degree of convergence between the observed and executed actions, but also on the time course of the interference, and in some cases on the localization of the processing in the brain. The conflicting facilitatory versus inhibitory effects in the literature could, for instance, reflect the hierarchical nature of perceptual and motor systems, with different kinds of effects reflecting different levels in these systems, or alternatively, they could depend on the time course of the interference. What is lacking is a specific model of when and how various processes produce facilitation or inhibition, which could serve to test different hypotheses.

Mary: Relative to this issue, computational modeling can implement competing theories that aim at explaining interference, such as theories in which timing or competition for shared resources is viewed as the key element for modulating the interaction. Note that in different tasks and domains, the mechanisms and the effect could be different, so computational models should be endowed with precise details and contextual constraints. At the same time, it is desirable that common principles emerge, and thus the promise of computational modeling lies in providing a comprehensive framework for explaining interference within cognitive processing and reconciling the puzzling findings.

Ernest: You have argued convincingly that cognitive robotics could be a good starting point for modeling embodied phenomena, but I see a potential drawback in its method: when modeling cognitive functions, we should not forget that the way they are realized depends on the way they develop. Indeed, issues associated with the architecture’s development and plasticity are important, too, including morphological, genetic, experiential, and social contributions, and how epigenesis is realized (Elman et al., 1996). For instance, as sensorimotor skills mature over time, cognitive abilities also develop in coordination with the acquisition of action skills (Rosenbaum et al., 2001). Also, social factors contribute substantially to development. Unfortunately, these dynamics are quite problematic to study empirically (but see Thelen and Smith, 1994). Again, however, here computational modeling can really help, addressing, for instance, the following questions: To what extent is a developmental trajectory necessary to “build” a grounded system? If it is necessary, what sorts of learning regimens are critical, and why?

Mary: This also seems like a nice place for modeling, at least in terms of the development or learning of knowledge in some specific domains. So, one could investigate whether you need to begin with some number of association areas already in place, for example. Or possibly this might be an interesting situation for using constructive algorithms like cascade correlation (Fahlman and Lebiere, 1990) or evolutionary techniques (Nolfi and Floreano, 2000) to see how “hidden units,” or convergence zones, develop with experience. Overall, I would definitely agree that the dynamics of situated experience play an essential role in the shaping of cognitive abilities, and this is why I see developmental and epigenetic robotics approaches as extremely promising approaches for the construction of grounded systems (Weng et al., 2001).

Ernest: Overall, from this discussion I see significant potential for collaboration and cross-fertilization between the theoretical, empirical, and synthetic methodologies. However, I have recently participated in many good conferences, such as SAB, EpiRob, and ALife, in which I have seen many computational systems at work. Although most scientists in these conferences have similar motivations as you, that is, designing computational models that can tell us something about cognition (and in particular higher-level cognition), and using similar methods as you describe, including cognitive robotics, I am still unsure of what the results are. I don’t deny that most of the things that I have seen are inspiring; still I fail to see how they can really influence my work. My guess is that most current computational models are mere “proofs of concept” and lack the adequate level of detail to start deriving precise predictions, or to simply be considered as useful tools by psychologists and neuroscientists. To realize the full impact of cognitive modeling on current (and future) theories, one not only has to develop computational systems that are generically informed by embodied cognition principles, but systems that target specific functionalities and experimental data.

Mary: This is indeed a necessary step. It is worth noting that although we still need a solid methodology for comparing empirical and synthetic data, various approaches have been proposed that compare modeling and empirical data both qualitatively and quantitatively. For example, within the literature on computational and robotic models of embodiment, there are studies where reaction times collected in psychology experiments have been directly compared with other time-related measurements in computational agents. Caligiore et al. (2010) directly compare the reaction times of Tucker and Ellis’s (2001) stimulus response compatibility experiments with the time steps required by the simulated iCub robot’s neural controller to reach a threshold that initiates the motor response. In Macura et al. (2009), a more indirect comparison for reaction time is used, based on the neural controller’s error measurements in the production of the response. In the context of neuroscience, specific quantitative methods have been developed to compare brain-imaging data from human participants (e.g., from fMRI and PET methodologies), with “synthetic brain-imaging” data from computational models ranging from computational neuroscience models (Horwitz and Tagamets, 1999; Arbib et al., 2000) to connectionist models (Cangelosi and Parisi, 2004). The direct comparison between empirical and modeling data remains a major challenge for multi-agent models of cognition. This is the case for evolutionary models (e.g., language evolution models, Cangelosi and Parisi, 2002) where only general qualitative comparisons are possible due to the lack of data, or due to less realistic implementation of the sensorimotor and behavioral systems (e.g., multi-agent models of foraging). There are, however, other important reasons why our ideas fail to permeate cognitive science as a whole. On the one hand, there is a clear “sociological” problem of different languages and different conferences. 
On the other hand, there are more serious methodological differences that have to be bridged to some extent. It is often the case that modelers and empiricists have different research questions in mind, or use different lexicons. In addition, modelers in the communities that you mentioned tend to emphasize complete architectures and the fact that many processes interact, whereas empirical research often adopts a divide-and-conquer strategy and tends to study brain and behavior as if there were specialized processes, such as memory, attention, and language, with specialized neural representations.
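The idea of comparing human reaction times with the number of time steps a controller needs to reach a response threshold can be illustrated with a minimal sketch. This is not the neural controller of Caligiore et al. (2010); it is a hypothetical leaky accumulator in which a compatible object affordance adds to the task drive and an incompatible one subtracts from it. The function name `steps_to_threshold` and all parameter values are assumptions chosen only for illustration.

```python
def steps_to_threshold(
    compatible: bool,
    task_drive: float = 1.0,
    affordance_bias: float = 0.3,
    rate: float = 0.1,
    threshold: float = 0.6,
    max_steps: int = 1000,
) -> int:
    """Return the number of time steps a leaky accumulator needs to
    reach the response threshold (a stand-in for reaction time).

    Hypothetical parameters: the affordance bias is added to the task
    drive on compatible trials and subtracted on incompatible trials.
    """
    drive = task_drive + (affordance_bias if compatible else -affordance_bias)
    activation = 0.0
    for step in range(1, max_steps + 1):
        # Leaky integration: activation moves a fraction of the way
        # toward the current drive on every time step.
        activation += rate * (drive - activation)
        if activation >= threshold:
            return step
    raise ValueError("threshold not reached within max_steps")


if __name__ == "__main__":
    print("compatible trial steps:  ", steps_to_threshold(True))
    print("incompatible trial steps:", steps_to_threshold(False))
```

Under these assumptions, compatible trials cross the threshold in fewer steps than incompatible ones, which mirrors the direction of a stimulus-response compatibility effect. Mapping step counts onto milliseconds, or testing the fit against real reaction-time distributions, would require further assumptions that this sketch does not make.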

Ernest: I would agree that the methodological differences make collaboration harder, and that “empiricists” have to change as much as “modelers.” The last point you mentioned is the most important one to me. Although you would rarely meet a cognitive scientist who claims to be a modularist, still some modularism (and localizationism) leaks into experimental paradigms in practice. Indeed, there is a tendency to study cognitive processes in isolation, as if they had separable neural substrates and encapsulated representations, a clear “objective” target that can be readily disconnected from the organism’s behavior and goals (e.g., memory is for storing and retrieving the maximum number of elements, attention is for selecting stimuli), and as if they had specialized resources, inputs, and outputs that are clearly separable from those implied in other processes that take place at the same time. Overall, an added value of collaboration with embodied computational modelers could be a push toward integrated theories of cognition rather than theories of isolated functions.

Topic 3. Current Embodied Computational Models: Successes and Limits

As we have briefly mentioned, there have been many attempts to model embodied and situated phenomena, especially in the connectionist and dynamic systems traditions. In these areas, it is widely recognized that the body, environment, and internal neural dynamics of agents are highly interconnected and shape one another (Clark, 1998; Pfeifer and Scheier, 1999). However, although these models fit the general framework of grounded cognition generically, most of them do not incorporate its specific predictions. In addition, only a few of them explicitly address complex (or even moderately complex) cognitive abilities, which are a true “benchmark” for grounded theories.

This section discusses a few robotic and agentive systems that exemplify current efforts toward the realization of embodied models of higher-level cognitive abilities, and specifically concepts and language understanding. This short review, which is undoubtedly biased by the authors’ knowledge, is by no means representative of the most successful systems in technological terms, but is intended to illustrate current directions toward embodied cognitive models, together with their powers and limitations.