Abstract

Conjoint analysis (CA) has emerged as an important approach to the assessment of health service preferences. This article examines Adaptive Choice-Based Conjoint Analysis (ACBC) and reviews available evidence comparing ACBC with conventional approaches to CA. ACBC surveys more closely approximate the decision-making processes that influence real-world choices. Informants begin ACBC surveys by completing a build-your-own (BYO) task identifying the level of each attribute that they prefer. The ACBC software composes a series of attribute combinations clustering around each participant’s BYO choices. During the Screener section, informants decide whether each of these concepts is a possibility or not. Probe questions determine whether attribute levels consistently included in or excluded from each informant’s Screener section choices reflect ‘Unacceptable’ or ‘Must Have’ simplifying heuristics. Finally, concepts identified as possibilities during the Screener section are carried forward to a Choice Tournament. The winning concept in each Choice Tournament set advances to the next choice set until a winner is determined.

A review of randomized trials and cross-over studies suggests that, although ACBC surveys require more time than conventional approaches to CA, informants find ACBC surveys more engaging. In most studies, ACBC surveys yield lower standard errors, improved prediction of hold-out task choices, and better estimates of real-world product decisions than conventional choice-based CA surveys.


Public involvement in health service planning is, increasingly, a policy recommendation.[1] Public involvement may include participation in national healthcare financing debates, contributions to health systems planning decisions, or the treatment choices of individual patients.[1,2] In addition to the ethical imperative of decision control and patient choice, health services consistent with patient preferences yield incrementally better outcomes.[3,4]

Conjoint analysis (CA), or discrete-choice conjoint experiments,[5] have emerged as promising approaches to the study of health service preferences.[6,7] These methods can help decision makers understand the health service choices of communities,[8] the perspectives of different user segments,[9] or the unique preferences of individual patients.[10–12] The methods underlying CA were proposed by mathematical psychologists[13] and are used widely in health economics, transportation economics, environmental economics, and marketing research.[14–18] CA conceptualizes a health service, treatment, or outcome as a set of multi-level attributes.[7] Attributes may be quantitative (e.g. the cost of treatment) or qualitative (e.g. the brand of a medication). Cunningham et al.,[9] for example, examined preferences for 14 attributes of patient-centered care, including health information transfer, participation in healthcare decisions, and prompt feedback on progress. In studies employing CA, each attribute is defined by a series of levels. Healthcare decisions, for example, might be made by patients, by staff, or collaboratively by patients and staff.[9] In studies employing choice-based CA, informants choose between concepts (see footnote 1) composed of experimentally varied combinations of the study’s attribute levels.[7] These methods allow investigators to determine the relative influence of variations in the levels of each attribute on informant choices,[19] estimate the utility of each attribute level,[14,19] compute willingness to pay for the components of health services,[20,21] identify segments with different preferences,[22,23] and simulate the response of different segments to hypothetical service options.[7,14,24] In contrast to quality-adjusted life-year (QALY) analyses, CA can estimate the utility of a broader range of service processes, non-health benefits, and health outcomes.[25–27]

The widespread use of CA by marketing researchers and economists[16] rests on a combination of theoretical assumptions and empirical findings. First, the multi-attribute choice tasks included in studies employing CA approximate the way information is often presented in healthcare decision-making situations. Patients making individual healthcare decisions, for example, must weigh the benefits, risks, and costs of competing multifaceted treatment options.

Second, by prompting participants to consider each attribute in the context of others, conjoint methods anticipate the treatment and service delivery trade-offs that health service planners and individual patients must consider.[7] By systematically varying attribute levels, simulations can predict the response of participants to a range of hypothetical services or outcomes.[7,14] Moreover, as a decompositional approach, CA allows investigators to estimate the relative contribution of individual attributes to the choices participants make.[7,14]

Finally, rating scales are subject to biases and response sets, which limit their utility as an approach to the study of preferences.[28] Social desirability biases, for example, may influence the response of informants to the types of questions included in health research surveys.[29] CA, in contrast, can reveal attitudes that participants do not report in surveys using other methods.[30,31]

1. Limitations of Conjoint Analysis (CA)

Despite the strengths of CA, several findings have encouraged the development of new approaches to the collection of preference data. First, the questions addressed in many applications of CA require a relatively large number of attributes. In full-profile choice-based conjoint surveys, each option in a choice task is composed of one level from each of the study’s attributes; the complexity of these tasks can be overwhelming for informants. Although full-profile designs optimize statistical efficiency,[32] informant efficiency and the quality of the data obtained via these methods decline.[33]

Second, the attributes presented in conjoint studies are often combined according to orthogonal designs,[32] yielding attribute combinations that do not approximate the product or service preferences of the study’s informants. Choice tasks, for example, may omit attribute levels of critical importance to informants or include levels that are unacceptable. If the options in choice-based conjoint surveys are perceived to be irrelevant, boring, or frustrating, informants may not devote the attention, effort, and time required for these methods to accurately assess their preferences.

Third, random utility theory, and the weighted additive logit models on which CA is based, assume that the value of a health service equals the sum of the part-worth utilities associated with its component attributes.[7,34] Informants maximize utility by choosing options with a combination of attributes yielding the greatest value.[7,34,35] Basing choices on a consideration of all of the attributes composing a complex health service is referred to as a compensatory strategy. Accordingly, features with higher utilities (e.g. positive health outcomes) may compensate for features with lower utilities (e.g. short-term adverse effects).
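To make the weighted additive assumption concrete, the Python sketch below computes each concept’s total utility as the sum of its part-worths and converts those totals into multinomial logit choice probabilities. The attribute names and part-worth values are hypothetical, chosen only to show how a favorable outcome can compensate for frequent side effects; they are not estimates from any study cited here.

```python
import math

# Hypothetical part-worth utilities, for illustration only (not study estimates).
PART_WORTHS = {
    "outcome": {"good": 1.2, "fair": 0.0},
    "side_effects": {"rare": 0.5, "frequent": -0.5},
    "cost": {"$0": 0.8, "$1000": -0.8},
}


def total_utility(concept):
    """Weighted additive rule: a concept's value is the sum of its part-worths."""
    return sum(PART_WORTHS[attr][level] for attr, level in concept.items())


def logit_probabilities(concepts):
    """Multinomial logit: P(j) = exp(U_j) / sum_k exp(U_k)."""
    utilities = [total_utility(c) for c in concepts]
    top = max(utilities)  # subtract the maximum for numerical stability
    weights = [math.exp(u - top) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]


# Treatment A: good outcome but frequent side effects (1.2 - 0.5 + 0.8 = 1.5).
treatment_a = {"outcome": "good", "side_effects": "frequent", "cost": "$0"}
# Treatment B: fair outcome with rare side effects (0.0 + 0.5 + 0.8 = 1.3).
treatment_b = {"outcome": "fair", "side_effects": "rare", "cost": "$0"}
probs = logit_probabilities([treatment_a, treatment_b])
```

Under these assumed values the good outcome more than offsets the frequent side effects, so treatment A is predicted to be chosen with a probability of roughly 0.55.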

As choice tasks become increasingly complex, irrelevant, or uninteresting, informants may adopt simplifying heuristics.[36] For example, rather than engaging in an effortful consideration of the incremental contribution of all of the attributes composing a health service concept, participants may base their choices on one or two salient features. Physicians, for example, shift to non-compensatory simplifying heuristics as the complexity of pharmaceutical decisions increases.[37]

In studies employing CA, as in many real-world choice situations, many informants employ two-stage or dual-process decision-making strategies.[38,39] Dual-process models have emerged as an important conceptual framework in the study of human information processing and decision making.[40] These models have been applied widely in marketing research[38,41] and extended to the study of risky health decisions,[42] patient treatment choices,[43,44] diagnostic errors,[45,46] medical education,[47] and the neural correlates of decision making.[48]

According to dual-process models, patients might simplify complex decisions by eliminating treatments with unacceptable risks. This screening stage of the decision-making process is accomplished quickly and intuitively according to non-compensatory simplifying heuristics, rather than by the systematic, effortful weighting of each component attribute assumed by random utility theory,[39] the model on which CA is based.[34] In the second stage of the decision-making process, treatments remaining in the patient’s simplified consideration set would be examined according to a deliberative, analytical, compensatory process conforming to the weighted additive assumptions of random utility theory.[49]

When choice tasks include attribute levels that would have been excluded from an informant’s consideration set, conventional choice-based conjoint surveys may confound the two stages of the decision-making process. For example, if an unacceptable attribute level is present, informants may exclude complex multi-attribute options on this factor alone. Modeling studies suggest that, in surveys using conventional approaches to CA, simple non-compensatory rules (e.g. basing decisions on a small subset of attributes) could account for a significant percentage of respondent choices.[38,50]
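The confound described above can be illustrated with a small two-stage decision rule: a non-compensatory screen removes any concept containing an unacceptable level, and a compensatory rule then picks the highest-utility concept among those remaining. This is a simplified sketch with hypothetical part-worths and a hypothetical ‘unacceptable’ level; it is not a model fitted in any of the cited studies.

```python
# Hypothetical part-worths, for illustration only.
PART_WORTHS = {
    "outcome": {"good": 1.2, "fair": 0.0},
    "side_effects": {"rare": 0.5, "frequent": -0.5},
    "cost": {"$0": 0.8, "$1000": -0.8},
}


def utility(concept):
    """Compensatory (weighted additive) value of a concept."""
    return sum(PART_WORTHS[a][lvl] for a, lvl in concept.items())


def screen(concepts, unacceptable):
    """Stage 1, non-compensatory: drop any concept containing an unacceptable level."""
    return [
        c for c in concepts
        if not any((a, lvl) in unacceptable for a, lvl in c.items())
    ]


def two_stage_choice(concepts, unacceptable):
    """Stage 2, compensatory: pick the highest-utility concept in the consideration set."""
    consideration_set = screen(concepts, unacceptable)
    if not consideration_set:
        return None  # every option was screened out
    return max(consideration_set, key=utility)


a = {"outcome": "good", "side_effects": "frequent", "cost": "$0"}  # utility 1.5
b = {"outcome": "fair", "side_effects": "rare", "cost": "$0"}      # utility 1.3
rule = {("side_effects", "frequent")}  # hypothetical 'unacceptable' level
```

A purely compensatory rule selects A, whereas the two-stage rule selects B because A is eliminated on a single attribute before any trade-offs are considered. A logit model fitted to such choices would attribute the outcome to part-worths rather than to the screening rule.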

Investigators conducting studies using CA have attempted to deal with non-compensatory simplifying heuristics in several ways. Some have employed partial-profile designs in which the concepts presented in each choice task are composed of a subset of the study’s attributes.[14,33] By reducing the complexity of individual choice tasks and encouraging informants to consider the remaining attributes more carefully, partial-profile designs balance informant and mathematical efficiency.[33] These methods have been applied to the design of health services, prevention programs, and medical education.[9,51–54]

Other investigators have attempted to develop surveys simulating the two-stage decision-making processes used to make real-world choices. Adaptive conjoint analysis (ACA), for example, was designed to customize the interview by allowing informants to eliminate attributes that were of little interest.[55] Informants begin ACA surveys by rating their preference for the levels of each attribute. An optional series of ratings determines the importance of each attribute. This information is used to compute utilities and compose conjoint rating questions featuring attributes and attribute levels that are relevant to each respondent. ACA software re-computes utilities following each conjoint rating question. The composition of subsequent questions is based on updated estimates of the respondent’s utilities and the combination of attributes that would be most informative given the respondent’s previous ratings.

Although ACA has proven to be a useful approach to the study of health service preferences,[11,56–65] the popularity of this ratings-based approach has been eclipsed by choice-based conjoint methods.[14]

2. Adaptive Choice-Based Conjoint Analysis (ACBC)

This article introduces a more recent adaptive approach, Adaptive Choice-Based Conjoint Analysis (ACBC), and reviews studies comparing ACBC with conventional choice-based conjoint methods. The current version of ACBC was released in March 2009 as a component of Sawtooth Software’s SSI Web suite of internet survey methods. The introduction of ACBC followed an extended phase of software development, 8 months of beta testing by 50 investigators, and more than 40 individual studies.[66]

The ACBC program was designed to provide a survey process that is more engaging than conventional approaches to CA, to obtain more information than is typically available, to improve the estimation of utilities, and to better predict real-world preferences.[66,67] To approximate dual-process decision making, ACBC includes components allowing both simplifying heuristics and the more effortful compensatory processing assumed by the logit rule. To accomplish this, ACBC combines several widely used approaches to survey design: build-your-own (BYO) configurators, a screening section, and choice-based conjoint tasks. The components of a standard ACBC survey are described in the following sections. Interested readers can complete several working ACBC surveys at the Sawtooth Software website.[68]

2.1 Build Your Own

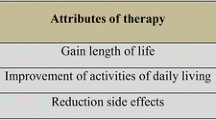

In most instances, ACBC surveys begin with a self-explicated measure of preferences, the BYO task.[66,69] In BYO tasks, participants select the level of each attribute that they would prefer. The BYO stage can include a cost configurator, in which the levels of each attribute are associated with different costs, an approach sometimes employed at websites marketing computers or automobiles. The total cost of the concept selected by the informant varies as different attribute levels are selected. In addition to introducing informants to the attributes included in the study, the BYO section allows the software to compose a relevant set of concepts for consideration in the Screener section of the ACBC survey. An example of a BYO task without a cost configurator is presented in figure 1.

Figure 1. Sample build-your-own (BYO) exercise. Note that attributes with an established preference order (e.g. preference for less frequent side effects) could be excluded from the BYO task. These attributes are, in any case, carried into the Screener section of the survey (reproduced with permission from Sawtooth Software, Inc.).

Investigators have the option of using each informant’s response to questions completed prior to the BYO section of the ACBC survey to delete irrelevant or unacceptable attributes or attribute levels.[66] Using constructed lists, for example, Goodwin[70] allowed participants to reduce the number of attributes shown in an ACBC survey by selecting 10 of the 16 product features that they were most likely to consider when making a purchase decision.

2.2 The Screener Section

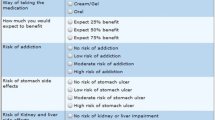

Next, the ACBC software composes a set of product or service concepts clustering around the preferences expressed in the BYO task. The concepts in the Screener section are shown in full profile, with each concept defined by one level of each attribute included in the survey. Although the concepts presented in the Screener section emphasize the informant’s BYO task choices, the Screener section includes at least one presentation of each attribute level included in the study.[66] Participants determine whether each concept is a possibility or not. Those marked as a possibility are retained for inclusion in the Choice Tournament described in section 2.3. Figure 2 presents a page from an ACBC Screener section.
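The idea of concepts that cluster around the BYO choices while still covering every level can be sketched as follows. This is a hypothetical simplification, not Sawtooth Software’s design algorithm: each concept copies the BYO levels and swaps a fixed number of randomly chosen attributes (the parameter `n_changes` is an assumption), and a final pass adds concepts for any level not yet shown. The attributes and levels are illustrative.

```python
import random


def near_byo_concepts(attributes, byo, n_concepts, n_changes=2, seed=0):
    """Compose Screener concepts that cluster around a respondent's BYO concept.

    Each concept starts from the BYO levels and swaps `n_changes` randomly chosen
    attributes to a different level. A final pass adds concepts so that every
    level of every attribute is shown at least once.
    """
    rng = random.Random(seed)
    concepts = []
    for _ in range(n_concepts):
        concept = dict(byo)
        for attr in rng.sample(sorted(attributes), n_changes):
            others = [lvl for lvl in attributes[attr] if lvl != byo[attr]]
            concept[attr] = rng.choice(others)
        concepts.append(concept)
    # Coverage pass: guarantee at least one presentation of each level.
    for attr, levels in attributes.items():
        shown = {c[attr] for c in concepts}
        for lvl in levels:
            if lvl not in shown:
                extra = dict(byo)
                extra[attr] = lvl
                concepts.append(extra)
    return concepts


# Hypothetical health-service attributes and one respondent's BYO choices.
ATTRIBUTES = {
    "provider": ["doctor", "nurse", "pharmacist"],
    "wait": ["1 day", "1 week", "1 month"],
    "cost": ["$0", "$20", "$50"],
}
BYO = {"provider": "doctor", "wait": "1 day", "cost": "$0"}
screener_concepts = near_byo_concepts(ATTRIBUTES, BYO, n_concepts=6)
```

Every concept produced this way differs from the BYO concept in at most two attributes, so the Screener stays close to the respondent’s stated preferences while each level still appears at least once.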

2.2.1 Identifying ‘Unacceptable’ Screening Rules

Over a series of screening questions, the ACBC software identifies attribute levels that each informant consistently excluded from the concepts selected as possibilities.[66] To determine whether the informant is operating according to non-compensatory screening heuristics, a list of these omitted attribute levels is presented as an ‘Unacceptables’ screening question (figure 3). Decision-making research suggests that informants often make quick intuitive judgments to which they are not committed.[39] For example, in studies using ACA, the approach described earlier, informants often screen out attribute levels that prove to be acceptable when combined with attribute levels having higher utility values.[66] Each ‘Unacceptables’ screening question, therefore, asks participants to pick the one attribute level that is most unacceptable. If product or service concepts composed for presentation, but not yet evaluated by the participant, include unacceptable levels, these concepts are coded as ‘not a possibility’ and are replaced with new concepts adhering to this screening rule. The process of screening for possible concepts and identifying unacceptable attribute levels continues through a user-defined series of iterations.
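The bookkeeping behind the ‘Unacceptables’ question can be sketched in a few lines. As a simplifying assumption, ‘consistently excluded’ is treated here as ‘shown but never included in a concept marked as a possibility’; the actual ACBC rule may differ. Once the respondent confirms one level as unacceptable, concepts that were composed but not yet shown are split into those kept and those automatically coded ‘not a possibility’. All data below are hypothetical.

```python
def candidate_unacceptables(shown, possibilities):
    """Levels shown in the Screener but never part of a concept marked 'a possibility'."""
    shown_levels = {(a, c[a]) for c in shown for a in c}
    accepted_levels = {(a, c[a]) for c in possibilities for a in c}
    return sorted(shown_levels - accepted_levels)


def apply_unacceptable(pending, rule):
    """Apply a confirmed rule to concepts composed but not yet shown.

    Returns (kept, auto_rejected); auto-rejected concepts would be coded
    'not a possibility' and replaced with new concepts.
    """
    attr, level = rule
    kept = [c for c in pending if c[attr] != level]
    auto_rejected = [c for c in pending if c[attr] == level]
    return kept, auto_rejected


# Hypothetical Screener history for one respondent.
shown = [
    {"wait": "1 day", "cost": "$0"},
    {"wait": "1 month", "cost": "$0"},
    {"wait": "1 week", "cost": "$50"},
]
possibilities = [shown[0]]
candidates = candidate_unacceptables(shown, possibilities)

# Suppose the respondent picks "1 month" as the most unacceptable level.
pending = [{"wait": "1 month", "cost": "$20"}, {"wait": "1 day", "cost": "$20"}]
kept, rejected = apply_unacceptable(pending, ("wait", "1 month"))
```

Asking for only the single most unacceptable level, rather than accepting the whole candidate list, guards against the quick, uncommitted judgments described above.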

2.2.2 Identifying ‘Must Have’ Screening Rules

The software also identifies attribute levels that each participant consistently included in the concepts selected as possibilities in the Screener section. These choices are assumed to reflect possible non-compensatory ‘Must Have’ screening rules. The software presents a list of potential ‘Must Have’ attribute levels and asks informants whether any of these must be included. Again, informants identify the one attribute level that is most important to them. This ‘Must Have’ attribute level, and any levels logically included in this screening rule, are included in all subsequent presentations. The process of screening for possible concepts and identifying ‘Must Have’ attribute levels continues for a user-defined series of iterations.[66]
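A matching sketch for ‘Must Have’ candidates treats a level as a candidate when it appears in every concept marked as a possibility even though other levels of that attribute were also shown. Levels ‘logically included’ in a confirmed rule are represented here by a hypothetical set of allowed levels (for example, a rule of ‘a wait of 1 week or less’ also admits a 1-day wait). This is an illustrative simplification with made-up data, not the ACBC implementation.

```python
def candidate_must_haves(shown, possibilities):
    """Levels present in every 'possibility' although other levels of that
    attribute were also shown (so the pattern is informative)."""
    if not possibilities:
        return []
    candidates = []
    for attr in possibilities[0]:
        accepted_levels = {c[attr] for c in possibilities}
        shown_levels = {c[attr] for c in shown}
        if len(accepted_levels) == 1 and len(shown_levels) > 1:
            candidates.append((attr, next(iter(accepted_levels))))
    return candidates


def apply_must_have(pending, attr, allowed_levels):
    """Keep only concepts satisfying a confirmed rule. `allowed_levels` holds the
    chosen level plus any levels it logically implies (e.g. a shorter wait)."""
    return [c for c in pending if c[attr] in allowed_levels]


# Hypothetical Screener history for one respondent.
shown = [
    {"provider": "doctor", "wait": "1 day"},
    {"provider": "nurse", "wait": "1 day"},
    {"provider": "doctor", "wait": "1 week"},
]
possibilities = [shown[0], shown[2]]
must = candidate_must_haves(shown, possibilities)

# A confirmed rule of "wait of 1 week or less" applied to not-yet-shown concepts.
pending = [{"provider": "doctor", "wait": "1 month"}, {"provider": "doctor", "wait": "1 day"}]
filtered = apply_must_have(pending, "wait", {"1 day", "1 week"})
```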

2.3 The Choice Tournament

An example of a Choice Tournament set is presented in figure 4. This stage of the survey approximates a conventional full-profile conjoint task; one level from each attribute (excluding those deleted prior to the BYO) is included in each concept presented in the Choice Tournament. However, unlike conventional CA, the Choice Tournament is restricted to concepts identified as possibilities during the Screener section. Moreover, because the concepts presented in the Choice Tournament respect each participant’s ‘Unacceptable’ and ‘Must Have’ screening rules, this phase of the ACBC survey encourages the more controlled, effortful, compensatory processes assumed by weighted additive logit models.[39] To reduce the complexity of the choices presented in the Choice Tournament, attribute levels that are constant across the concepts in each choice set are grayed out. Participants are instructed to ignore these levels and concentrate on the attributes that vary across the concepts in that set. Because attributes of primary importance are often tied across the options presented in each Choice Tournament task, informants can consider attributes of secondary or tertiary importance more carefully. The ‘winning’ concept in each Choice Tournament set advances to the next set, where it competes with several additional options derived from the Screener section. The Choice Tournament proceeds until a final winner is identified.
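The winner-stays-on structure of the Choice Tournament can be sketched as a loop over the concepts screened in as possibilities, together with a helper that finds the tied attributes that would be grayed out. The `choose` callback stands in for the respondent, and the set size of three and the example scores are assumptions for illustration; ACBC’s actual handling of leftover concepts may differ.

```python
def run_tournament(possibilities, choose, per_set=3):
    """Winner-stays-on tournament over the concepts screened in as possibilities.

    The first set holds `per_set` concepts; each later set pits the previous
    winner against up to `per_set - 1` new concepts. Returns (winner, n_tasks).
    """
    if per_set < 2:
        raise ValueError("each choice set needs at least two concepts")
    queue = list(possibilities)
    if not queue:
        return None, 0
    winner = queue.pop(0)
    n_tasks = 0
    while queue:
        challengers, queue = queue[: per_set - 1], queue[per_set - 1:]
        winner = choose([winner] + challengers)
        n_tasks += 1
    return winner, n_tasks


def tied_attributes(choice_set):
    """Attributes whose level is constant across a choice set (grayed out in ACBC)."""
    return [a for a in choice_set[0] if len({c[a] for c in choice_set}) == 1]


# Hypothetical respondent who always picks the concept with the highest score.
concepts = [{"id": i, "score": s} for i, s in enumerate([3, 1, 4, 1, 5, 9, 2])]
winner, n_tasks = run_tournament(concepts, lambda s: max(s, key=lambda c: c["score"]))
```

With n possibilities and k concepts per set, this scheme needs ceil((n - 1)/(k - 1)) choice tasks; here 7 possibilities in sets of three take 3 tasks, which is one reason the length of the Screener section drives the length of the tournament.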

2.4 Optional Components of ACBC Surveys

The ACBC software suite provides investigators with a series of potentially useful options.

2.4.1 Summed Pricing

Studies using CA often treat price as an independent attribute with discrete levels.[20,21] Cunningham et al.,[52] for example, used a partial-profile design to examine the influence of cost ($0, $1000, or $2000) on prevention program choices. By assigning incremental prices to all attribute levels, in contrast, ACBC’s pricing option generates more realistic product or service concepts.[66] The summed price of each product or service option is based on the incremental costs of the attribute levels included in that concept. To avoid collinearity that would confound price and feature utilities, Sawtooth Software[66] suggests randomly varying the overall summed price (e.g. ±30%). This allows the utilities of non-price attributes to be interpreted independently of those associated with price increments.[66]
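A minimal sketch of summed pricing, assuming a base price, hypothetical per-level increments, and a uniform random perturbation of up to ±30% (the distribution of the perturbation is an assumption, not a documented ACBC setting). The perturbation is what breaks the exact linear dependence between the displayed price and the features that determine it.

```python
import random

BASE_PRICE = 100.0
# Hypothetical incremental prices for each attribute level.
LEVEL_PRICES = {
    "provider": {"nurse": 0.0, "doctor": 50.0},
    "wait": {"1 week": 0.0, "1 day": 20.0},
}


def list_price(concept):
    """Summed price: base price plus the increment for each level in the concept."""
    return BASE_PRICE + sum(LEVEL_PRICES[a][lvl] for a, lvl in concept.items())


def shown_price(concept, rng, variation=0.30):
    """Randomly perturb the summed price by up to +/- `variation` so that price is
    not perfectly collinear with the features that determine it."""
    return round(list_price(concept) * (1.0 + rng.uniform(-variation, variation)), 2)


rng = random.Random(42)
concept = {"provider": "doctor", "wait": "1 day"}
prices = [shown_price(concept, rng) for _ in range(5)]
```

The same concept (summed price 170) is displayed at different prices between roughly 119 and 221, so variation in price is no longer fully determined by the feature levels.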

2.4.2 Calibration Section

The optional Calibration section presents several product or service concepts in sequence (e.g. the BYO concept, several concepts selected as possibilities or rejected in the Screener section, and the Choice Tournament winner). Informants indicate on a Likert intention scale whether they would actually purchase or use each concept. Because these concepts have widely varying expected utilities, the regression equations used to estimate the none threshold (see footnote 2) yield more precise beta coefficients.[66]
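The statistical reason a wide spread of utilities helps can be shown with ordinary least squares: the standard error of a slope is the residual standard deviation divided by the square root of the sum of squared deviations of the predictor, so spreading the predictor out shrinks it. The sketch below regresses hypothetical Likert ratings on hypothetical concept utilities. It illustrates only this general point and is not Sawtooth Software’s calibration or none-threshold procedure.

```python
import math


def ols_fit(x, y):
    """Least-squares fit of y = a + b*x. Returns (a, b, standard error of b)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = mean_y - b * mean_x
    rss = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    se_b = math.sqrt(rss / (n - 2) / sxx)
    return a, b, se_b


# Hypothetical Likert ratings generated as 3 + 1 * utility + the same noise.
noise = [0.2, -0.1, 0.0, 0.1, -0.2]
narrow = [-0.5, -0.25, 0.0, 0.25, 0.5]   # concepts with similar utilities
wide = [-2.0, -1.0, 0.0, 1.0, 2.0]       # concepts with widely varying utilities
_, b_narrow, se_narrow = ols_fit(narrow, [3 + u + e for u, e in zip(narrow, noise)])
_, b_wide, se_wide = ols_fit(wide, [3 + u + e for u, e in zip(wide, noise)])
```

With identical noise, the wide design (four times the spread) yields a slope standard error one quarter as large and an estimate closer to the true value of 1 (0.94 vs 0.76).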

2.5 The Analysis of ACBC Data

The ACBC software codes the BYO, Screener section, and Choice Tournament as a series of choice tasks. For example, each non-price attribute in the BYO section of the survey is configured as one choice task. In the Screener section, each concept presents a binary choice: is each alternative a possibility or not? Participants are assumed to compare the utility of each concept’s combination of attribute levels with a threshold constant. The Choice Tournament is treated as a standard choice-based conjoint survey.
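The coding described above can be expressed as log-likelihood contributions: each BYO attribute is a multinomial logit choice among that attribute’s levels, and each Screener judgment is a binary logit comparing the concept’s utility with a threshold constant. The function names and example utilities are hypothetical, and the sketch omits price handling and the Hierarchical Bayes estimation layer.

```python
import math


def byo_loglik(level_utilities, chosen_level):
    """BYO coded as one choice task per attribute: MNL over that attribute's levels."""
    top = max(level_utilities.values())
    log_denom = top + math.log(sum(math.exp(u - top) for u in level_utilities.values()))
    return level_utilities[chosen_level] - log_denom


def screener_loglik(concept_utility, threshold, is_possibility):
    """Screener coded as a binary choice: the concept's utility vs a threshold constant."""
    p_possible = 1.0 / (1.0 + math.exp(threshold - concept_utility))
    return math.log(p_possible if is_possibility else 1.0 - p_possible)


# Hypothetical respondent: one BYO task and two Screener judgments.
wait_utils = {"1 day": 0.6, "1 week": 0.0, "1 month": -0.6}
total = (
    byo_loglik(wait_utils, "1 day")
    + screener_loglik(1.4, 0.5, True)    # utility above threshold, marked a possibility
    + screener_loglik(-0.3, 0.5, False)  # utility below threshold, rejected
)
```

Summing such contributions across the BYO, Screener, and Choice Tournament tasks gives the likelihood that an estimation routine would maximize.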

The ACBC software generates counts reflecting the number of times each attribute level was chosen in the BYO section, the number of times each attribute level was designated as ‘Unacceptable’ or ‘Must Have’ during the Screener section, the number of products screened into consideration sets, and how often each level was included in the winning Choice Tournament concept.
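These count summaries are simple tallies. The sketch below computes them from per-respondent records whose layout (the keys `byo`, `unacceptable`, `must_have`, `possibilities`, and `winner`) is a hypothetical format chosen for illustration, not the ACBC export format.

```python
from collections import Counter


def acbc_counts(respondents):
    """Tally the count summaries described in the text from per-respondent records.

    Each record is a dict with hypothetical keys: 'byo' and 'winner' (attribute -> level),
    'unacceptable' and 'must_have' (lists of (attribute, level) pairs), and
    'possibilities' (concepts, or concept ids, screened into the consideration set).
    """
    byo, unacceptable, must_have, in_winner = Counter(), Counter(), Counter(), Counter()
    screened_in = 0
    for r in respondents:
        byo.update(r["byo"].items())
        unacceptable.update(r["unacceptable"])
        must_have.update(r["must_have"])
        screened_in += len(r["possibilities"])
        in_winner.update(r["winner"].items())
    return {
        "byo": byo,
        "unacceptable": unacceptable,
        "must_have": must_have,
        "screened_in": screened_in,
        "in_winner": in_winner,
    }


respondents = [
    {"byo": {"wait": "1 day", "cost": "$0"}, "unacceptable": [("wait", "1 month")],
     "must_have": [], "possibilities": ["c1", "c4", "c7"],
     "winner": {"wait": "1 day", "cost": "$20"}},
    {"byo": {"wait": "1 day", "cost": "$20"}, "unacceptable": [],
     "must_have": [("cost", "$20")], "possibilities": ["c2", "c5"],
     "winner": {"wait": "1 week", "cost": "$20"}},
]
counts = acbc_counts(respondents)
```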

The components of an ACBC survey (e.g. BYO, Screener section, and Choice Tournament) can be analyzed individually or in combination using multinomial logit models.[66] The software’s default recommendation is to use ACBC’s Hierarchical Bayes program to estimate individual-level utility coefficients by iteratively estimating and leveraging estimates of population-level means and covariances.[71–74] An optional monotone regression program estimates standardized (zero-centered) individual-level coefficients directly from the ACBC data.[66] This approach is similar in concept to the ‘bottom-up’ technique developed by Louviere et al.[75] for best/worst discrete-choice experiments. Although ACBC’s default approach is to estimate main effects, first-order interactions can be computed.

The ACBC program allows the estimation of individual utility coefficients via Hierarchical Bayes to be constrained on the basis of each participant’s response to additional questions, or globally when all respondents are assumed to have the same preference order. However, in the single study comparing constrained and unconstrained estimation, no differences in hit rate predictions were observed.[14]

3. Review

In preparation for this review, we (CEC and KD) participated in a 2-day ACBC beta testing workshop, obtained beta versions of the ACBC software, completed an ACBC study of the prevention program design preferences of 1004 university undergraduates, and programmed a second study examining factors influencing the decision of university students to obtain an H1N1 influenza vaccination.

We searched for trials randomly assigning informants to either ACBC or conventional choice-based CA. Next, we included cross-over trials[76] in which participants were randomly assigned to complete (i) a conventional choice-based CA survey followed by an ACBC survey; or (ii) an ACBC survey followed by a choice-based CA survey. Finally, we reviewed feasibility studies examining the implementation of ACBC in commercial market research applications. Although more than 100 ACBC surveys have been conducted,[66] the lack of published findings precluded a meta-analytic review.

We examined evidence addressing two hypotheses. First, because ACBC adjusts to each informant’s preferences, we assumed that those assigned to ACBC surveys would evaluate the experience more favorably than would those completing conventional full-profile choice-based CA surveys. Second, assuming that informants devote more effort to the consideration of concepts that are relevant, that choices between concepts with similar utilities are more efficient,[77] and that the coding of ACBC surveys generates more data per participant,[66] we postulated that ACBC would yield better predictions than conventional choice-based CA designs.

3.1 Choice-Based Conjoint Analysis vs ACBC

3.1.1 Consumer Electronics

Chapman et al.[78] examined preferences for consumer electronic products at Microsoft® Corporation. The study included 8 attributes, each with 2–5 levels. A sample of 400 personal computer users completed a traditional choice-based CA and a comparable ACBC survey. Using a cross-over design,[76] informants were randomly assigned to complete either the conventional choice-based CA or the ACBC survey first. The choice-based CA version of the survey comprised 12 choice tasks presenting random combinations of the study’s attribute levels, plus two ‘hold-out’ tasks designed to test the internal validity of the study’s simulations.[79] (See footnote 3.) The ACBC survey included a BYO with a cost configurator, nine screening tasks presenting three product options, ‘Must Have’ and ‘Unacceptable’ screening questions, and a Choice Tournament with three concepts in each choice set. This study included three validity checks: (i) a fixed task assessing preferences for three products; (ii) an opportunity for a random sample of 25% of the study’s informants to choose one of the competing products at no cost; and (iii) simulations predicting 6 months of actual market data on the sales of the two competing products, expressed as the share of product A: units of A sold / (units of A sold + units of B sold).

Participants reported no differences in the realism of the products presented in choice-based CA or ACBC surveys, their interest in and attention to the two approaches, and the extent to which their responses were realistic. Although the ACBC survey required more time to complete (median time 7.4 vs 4.1 minutes), it was perceived as less boring.[78] The standard deviations associated with respondent utilities were smaller for ACBC than for choice-based CA surveys. On attributes with a natural order, ACBC yielded fewer unconstrained reversals (see footnote 4). Informants were more sensitive to price in ACBC surveys and, on the outcome of greatest interest to investigators, ACBC preference share predictions were closer to actual market data than those derived from choice-based CA surveys. Indeed, the ACBC predictions did not differ significantly from actual head-to-head market shares.

3.1.2 Homes

Orme and Johnson[80] randomly assigned more than 1000 homeowners to choice-based CA or ACBC surveys exploring home purchase preferences. This study featured ten attributes (e.g. square feet and number of bedrooms), with a total of 31 levels. Price was estimated as a single linear parameter. The survey began with a BYO exercise that included a summed pricing option. To determine whether the length of an ACBC survey could be reduced, informants were randomly assigned to Screener sections with either 24 or 32 home concepts. After each set of four home concepts, the software determined whether the informant might be operating according to ‘Must Have’ or ‘Unacceptable’ screening rules. The Choice Tournament presented sets of three home concepts derived from the Screener section. The home selected in each Choice Tournament set advanced to the next choice set until a winner was determined. Five hold-out tasks, with the fifth composed of attributes selected in the preceding tasks, were included to compare the validity of the predictions derived from the choice-based conjoint and ACBC surveys.

The ACBC survey required more time to complete than the conventional choice-based CA survey (average 5.5 vs 3.8 minutes). Informants, however, rated the ACBC survey more positively than the conventional choice-based CA survey.[80] They thought ACBC’s home presentations were more realistic, the survey was less monotonous and boring, and that the format elicited more realistic answers. Participants felt that the ACBC survey encouraged slower and more careful responses. On hold-out choice task simulations, ACBC yielded lower errors in share of preference predictions and more accurate estimates of individual choices than the conventional choice-based CA survey. Finally, 24 screening concepts yielded results comparable to 32 screening concepts.
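The two validity measures used in these comparisons can be sketched as follows, using common definitions that the cited studies may have implemented differently (for example, with scaled utilities). Hit rate is the proportion of respondents whose observed hold-out choice is the alternative with the highest predicted utility; share-of-preference error is the mean absolute difference between logit shares averaged across respondents and the observed hold-out shares. The utilities and choices below are hypothetical.

```python
import math


def hit_rate(pred_utils, actual):
    """Share of respondents whose highest-utility alternative matches their actual choice."""
    hits = sum(max(range(len(u)), key=u.__getitem__) == a for u, a in zip(pred_utils, actual))
    return hits / len(actual)


def share_of_preference(pred_utils):
    """Logit choice probabilities averaged across respondents."""
    n_resp, n_alt = len(pred_utils), len(pred_utils[0])
    shares = [0.0] * n_alt
    for u in pred_utils:
        top = max(u)
        weights = [math.exp(x - top) for x in u]
        total = sum(weights)
        for j in range(n_alt):
            shares[j] += weights[j] / total / n_resp
    return shares


def share_mae(pred_utils, actual):
    """Mean absolute error between predicted and observed hold-out shares."""
    n_alt = len(pred_utils[0])
    observed = [sum(a == j for a in actual) / len(actual) for j in range(n_alt)]
    predicted = share_of_preference(pred_utils)
    return sum(abs(p - q) for p, q in zip(predicted, observed)) / n_alt


# Hypothetical predicted utilities for a three-alternative hold-out task.
pred_utils = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0], [1.0, 0.0, 0.0]]
actual = [0, 1, 0, 0]  # index of the alternative each respondent chose
```

Hit rates reward correct individual-level predictions, whereas share error evaluates aggregate predictions, which is why a method can improve one measure without improving the other.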

3.1.3 Computers

Johnson and Orme[67] randomly assigned a panel of 600 informants to either an ACBC survey or a conventional choice-based CA survey examining computer preferences. The study included ten attributes (e.g. brand and hard-drive capacity) with a total of 37 levels. Informants began by completing three hold-out tasks and a fourth task composed of the options preferred in the first three hold-out tasks.

The ACBC survey was rated as a more positive experience than the choice-based CA approach. As other investigators have noted, the ACBC survey required more time to complete (median time 11.6 vs 5.4 minutes). However, the ACBC survey yielded significantly better hit rates (60.8% vs 50.0%) than the conventional choice-based CA survey when predicting the fourth hold-out task.[67]

3.1.4 Automobiles

Kurz[81] randomly assigned 1030 participants planning car purchases to either ACBC or choice-based CA surveys. A non-randomized sample of 316 participants completed a partial-profile choice-based CA survey. The study included 9 attributes, each with 3–5 levels, plus price and brand. Those assigned to the ACBC task completed a BYO; seven Screener section sets, each presenting four concepts; a Choice Tournament with ten choice tasks; and a Calibration section. Those assigned to the choice-based CA survey completed 15 choice tasks, each presenting three concepts. Because partial-profile surveys generate less data per choice task,[14] participants in the partial-profile condition completed 20 choice tasks.

Although ACBC required more time than the partial-profile (median 15.6 vs 5.4 minutes) or conventional choice-based CA surveys (median 3.4 minutes),[81] participants rated the ACBC survey more positively. ACBC yielded better hold-out task hit rates and, according to the authors, a latent class segmentation structure that was more differentiated, stable, and easily interpreted than those derived from traditional choice-based CA or partial-profile designs.

3.1.5 Fast Food

A team of investigators from Sawtooth Software[66] used ACBC to examine fast food choices. An internet panel of approximately 650 participants was randomly assigned to complete either an ACBC survey or a conventional choice-based CA survey. In most comparisons of the two methods, informants spent significantly more time on the ACBC survey than on the choice-based CA survey. This study, therefore, attempted to control for survey duration by increasing the number of choice tasks included in the choice-based CA survey to 24. Each choice task presented five full-profile concepts comprising experimentally varied combinations of the levels of the study’s four attributes.

In contrast to studies including a larger number of attributes and fewer choice tasks in the choice-based CA arm of the trial, participants took longer to complete the choice-based CA survey than the ACBC survey (median 6.8 vs 5.6 minutes).[66] The investigators observed no differences in hit rates for simulations predicting hold-out task choices or in share of hold-out task preferences.[82]

3.2 Implementing ACBC

A number of investigators have also described the implementation of ACBC methods in commercial settings.[70,83,84]

3.2.1 In the Mobile Telephone Industry

In a (non-randomized) study applying ACBC to the design of mobile telephone packages, 1500 respondents completed a customized choice-based CA survey, 300 completed an ACBC survey, and 300 completed a conventional choice-based CA survey.[83] The ACBC survey included a BYO task, a Screening stage, questions to identify ‘Must Have’ and ‘Unacceptable’ attribute levels, and a Choice Tournament. ACBC surveys yielded significantly lower errors in share of preference predictions than the conventional choice-based CA or customized choice-based CA surveys.[83] In contrast with the results reported in randomized trials, hit rates for the choice-based CA survey in this non-randomized study were slightly better than those for ACBC or customized CA surveys.

3.2.2 In a Manufacturing Firm

Goodwin[70] described the introduction of ACBC to design problems in a traditional consumer products manufacturing firm. The team concluded that ACBC provided the flexibility of earlier ratings-based adaptive approaches to CA, the realism of choice-based methods, and the task simplification of partial-profile designs. These advantages were achieved with smaller sample sizes (e.g. 400 vs 800) than the partial-profile methods previously used by the company. Results were acceptable to product managers and applicable to real-world design decisions.

3.2.3 In the Chemical Products Industry

Finally, Binner et al.[84] applied ACBC to the design of a chemical product. A sample of 200 informants completed an ACBC survey covering 14 attributes (including price). The survey comprised a BYO task, a Screener section with 24 concepts, four ‘Must Have’ and three ‘Unacceptable’ questions, a Choice Tournament with a maximum of 18 concepts, and six calibration concepts. The implementation team concluded that ACBC provided a realistic and relevant survey experience for participants. Results were useful in defining new products, improving packaging, and optimizing the range of products offered. Although the survey took an average of 30 minutes to complete, the team determined that reliable results could be obtained with smaller samples. They concluded that, although ACBC would not replace conventional CA, it provided a valuable adjunct to existing methods.
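
The length of a Choice Tournament follows from its elimination structure. Assuming each tournament task shows up to three concepts and only the chosen concept advances (the three-per-task setting is an assumption here, not a figure reported by Binner et al.), every full task removes two concepts, so reducing n concepts to a single winner takes ceil((n - 1) / 2) tasks. The hypothetical functions below simulate this process and check it against the closed form.

```python
import math


def run_tournament(concepts, choose, per_task=3):
    """Single-elimination choice tournament (illustrative sketch).

    Each task shows up to `per_task` concepts from the front of the queue;
    the concept returned by `choose` re-enters the queue and the others are
    eliminated. Returns (winner, number_of_tasks).
    """
    if per_task < 2:
        raise ValueError("per_task must be at least 2")
    queue = list(concepts)
    if not queue:
        raise ValueError("need at least one concept")
    n_tasks = 0
    while len(queue) > 1:
        shown, queue = queue[:per_task], queue[per_task:]
        queue.append(choose(shown))  # the winner advances to a later task
        n_tasks += 1
    return queue[0], n_tasks


def expected_tasks(n_concepts, per_task=3):
    """Closed form: each full task eliminates per_task - 1 concepts."""
    return math.ceil((n_concepts - 1) / (per_task - 1))


# Hypothetical utilities for 18 concepts (the maximum reported above) and an
# informant who always picks the highest-utility concept shown.
utility = {f"concept_{i}": i for i in range(18)}
winner, n_tasks = run_tournament(list(utility), lambda shown: max(shown, key=utility.get))
print(winner, n_tasks, expected_tasks(18))  # concept_17 9 9
```

Under this assumption, 18 concepts require nine tournament tasks; presenting concepts in pairs (`per_task=2`) would require 17.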

4. Discussion

The ACBC software suite was designed to simulate the two-stage decision-making processes that influence many real-world choices.[39] This approach accommodates both simplifying heuristics[36] and the more effortful compensatory processing assumed by the weighted additive logit rule.[34] By presenting product, service, or outcome concepts clustering around preferences stated in the BYO, and respecting the ‘Unacceptable’ or ‘Must Have’ heuristics identified in the Screener section, ACBC attempts to create a more user-centered survey process than conventional CA. Randomized trials confirm that, for most participants, ACBC yields a more relevant and engaging survey than conventional choice-based conjoint methods.[67,78,80]
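
The two-stage logic described above can be illustrated with a deliberately simplified model: concepts containing an ‘Unacceptable’ level, or lacking a ‘Must Have’ level, are screened out non-compensatorily, and the remaining concepts compete under a multinomial logit rule on additive part-worth utilities. This is a hypothetical sketch, not the estimation procedure used by the ACBC software; the attributes and part-worths are invented, and it assumes at most one ‘Must Have’ level per attribute.

```python
import math


def two_stage_choice_probs(concepts, partworths, unacceptable=frozenset(), must_have=frozenset()):
    """Stage 1: conjunctive screening. Stage 2: logit over the survivors.

    concepts: list of dicts mapping attribute -> level.
    partworths: dict mapping (attribute, level) -> utility.
    unacceptable, must_have: sets of (attribute, level) pairs.
    Returns one choice probability per concept (0.0 if screened out).
    """
    utilities = {}
    for i, concept in enumerate(concepts):
        levels = set(concept.items())
        if levels & unacceptable or not must_have <= levels:
            continue  # rejected regardless of its other attributes
        utilities[i] = sum(partworths[level] for level in levels)  # weighted additive
    if not utilities:
        return [0.0] * len(concepts)
    top = max(utilities.values())  # subtract the maximum for numerical stability
    weights = {i: math.exp(u - top) for i, u in utilities.items()}
    total = sum(weights.values())
    return [weights.get(i, 0.0) / total for i in range(len(concepts))]


# Hypothetical part-worths and concepts, invented for illustration.
partworths = {
    ("cost", "low"): 1.0, ("cost", "high"): 0.0,
    ("route", "oral"): 0.5, ("route", "injection"): 0.0,
}
concepts = [
    {"cost": "low", "route": "injection"},
    {"cost": "high", "route": "oral"},
    {"cost": "low", "route": "oral"},
]
probs = two_stage_choice_probs(concepts, partworths, unacceptable={("route", "injection")})
print([round(p, 3) for p in probs])  # [0.0, 0.269, 0.731]
```

Without the screening stage the first concept would receive about 31% of choices; with it, the concept's preferred cost level cannot compensate for an unacceptable route of administration.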

Informants completing ACBC surveys report that they are more inclined to devote the higher level of attention needed to understand their preferences.[80] In comparison with conventional choice-based CA, ACBC surveys yield lower standard errors[78,80] and improved prediction of hold-out task choices.[67,78,80,81] The single study[78] predicting real-world decisions found that ACBC provided better estimates of actual product choices than conventional choice-based CA surveys.

Most investigators reported that ACBC surveys require more time to complete than conventional choice-based CA approaches. This may add incremental costs to studies relying on commercial internet panels,[85] and may pose a logistical challenge when studies are conducted during the course of the health service delivery process.[12] However, investigators may be able to shorten ACBC surveys by reducing the number of concepts presented in the Screener section.[80] For example, Orme and Johnson[80] reported that an abbreviated Screener section presenting 24 concepts yielded results similar to one presenting 32 concepts.
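
The length of the Screener section is essentially a parameter of how many near-BYO concepts are composed. A minimal sketch, assuming each concept is built by moving a random subset of two to four attributes away from the informant's BYO levels (the function name and the two-to-four range are hypothetical), shows that shortening the Screener from 32 to 24 concepts simply changes `n_concepts`. The sketch does not attempt the level balancing or duplicate checking that a production design algorithm would normally include.

```python
import random


def screener_concepts(byo, levels, n_concepts=24, min_changes=2, max_changes=4, seed=None):
    """Compose hypothetical Screener concepts that cluster around a BYO configuration.

    byo: dict attribute -> the informant's preferred level.
    levels: dict attribute -> list of all levels of that attribute.
    Each concept copies the BYO and moves a random subset of between
    min_changes and max_changes attributes to a different level.
    """
    rng = random.Random(seed)
    attributes = list(byo)
    max_changes = min(max_changes, len(attributes))
    if not 1 <= min_changes <= max_changes:
        raise ValueError("invalid range of attributes to change")
    concepts = []
    for _ in range(n_concepts):
        concept = dict(byo)
        n_changes = rng.randint(min_changes, max_changes)
        for attr in rng.sample(attributes, n_changes):
            others = [lvl for lvl in levels[attr] if lvl != byo[attr]]
            if others:  # a single-level attribute cannot vary
                concept[attr] = rng.choice(others)
        concepts.append(concept)
    return concepts


# Hypothetical attributes for a health service survey (three levels each).
levels = {
    "wait_time": ["1 week", "2 weeks", "4 weeks"],
    "location": ["clinic", "home", "online"],
    "format": ["individual", "group", "self-directed"],
    "cost": ["$0", "$25", "$50"],
    "follow_up": ["none", "phone", "visit"],
}
byo = {attr: options[0] for attr, options in levels.items()}  # hypothetical BYO choices
print(len(screener_concepts(byo, levels, n_concepts=24, seed=1)))  # 24
```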

Because ACBC captures more data from each informant than conventional choice-based conjoint surveys, preliminary studies suggest that ACBC may require smaller sample sizes.[66] For example, Chapman et al.[78] concluded that ACBC would yield similar group-level standard errors with 38% fewer participants than a choice-based conjoint survey. Goodwin[70] estimated that sample sizes for ACBC could be approximately 50% of those for partial-profile studies. Smaller samples may offset the additional time required to complete ACBC surveys and make these methods more useful in health service contexts where it might be difficult to recruit patient samples large enough for conventional choice-based CA surveys.
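
The sample-size arithmetic can be made explicit under a simplifying assumption: that a group-level standard error scales as s / sqrt(n), with independent respondents and per-respondent spread s. Matching a reference method's standard error with a fraction r fewer participants then implies a per-respondent spread about sqrt(1 - r) times as large. The helper names below are hypothetical.

```python
import math


def matched_sample_size(n_reference, reduction):
    """Participants needed if the new method requires `reduction`
    (e.g. 0.5 for 50%) fewer participants than the reference method."""
    return math.ceil(n_reference * (1 - reduction))


def implied_sd_ratio(reduction):
    """Assuming SE = s / sqrt(n), equal SEs with n_new = (1 - r) * n_ref
    require s_new / s_ref = sqrt(1 - r)."""
    return math.sqrt(1 - reduction)


print(matched_sample_size(800, 0.5))     # Goodwin's example: 800 -> 400
print(round(implied_sd_ratio(0.38), 3))  # Chapman et al.'s 38% reduction: 0.787
```

On this simplified view, a 38% reduction corresponds to a per-respondent standard deviation roughly 0.79 times that of the reference method; actual requirements also depend on the estimation model and the efficiency of each design.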

Although the components of ACBC surveys are widely used, the combination of these approaches constitutes a new methodology. Developing an ACBC survey requires a larger number of design decisions than conventional choice-based CA studies. Although technical manuals provided useful guidelines,[66] and Sawtooth Software’s workshops allowed us to develop and field a successful ACBC survey, we concluded that the proficient use of ACBC methods requires more training and experience than conventional approaches to CA. There is, moreover, a need for methodological studies informing the many decisions involved in the design of an ACBC survey.

The development of ACBC has, in part, been motivated by a concern that informants in conventional CA studies base their choices on non-compensatory simplifying heuristics.[66] The operation of non-compensatory screening strategies has been inferred from the quantitative analysis of response patterns[86] and from the brief response times observed in some studies using choice-based conjoint surveys.[38] Do the 12–15 seconds that participants devote to individual choice tasks, for example, allow the slower, deliberative, effortful consideration assumed by the weighted additive models on which CA is based?[67] However, ‘think aloud’ paradigms show that participants whose response patterns suggest they might be using non-compensatory simplifying strategies may actually be choosing according to a weighted additive rule.[86] Recent research, moreover, demonstrates that automatic, intuitive processes can integrate weighted additive information much more quickly than was previously believed.[87] There is a need for more research explicating the decision-making processes operating at different stages of choice-based CA and ACBC surveys.
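
The kind of response-pattern inference discussed above can be sketched for a single informant. Assuming Screener concepts are stored as attribute-to-level dictionaries with a yes/no ‘possibility’ judgment (a hypothetical data layout), the function below flags levels that were shown but never accepted (candidate ‘Unacceptable’ levels) and levels present in every accepted concept (candidate ‘Must Have’ levels). As the think-aloud evidence cautions, such patterns are only candidates, since a compensatory chooser can produce them too; ACBC's probe questions ask the informant to confirm them.

```python
def probe_candidates(concepts, accepted):
    """Flag levels that may reflect non-compensatory screening (hypothetical helper).

    concepts: list of dicts (attribute -> level) shown in the Screener.
    accepted: parallel list of booleans (True = 'a possibility').
    Returns (unacceptable, must_have), each a set of (attribute, level).
    """
    if len(concepts) != len(accepted) or not concepts:
        raise ValueError("need matching, non-empty lists")
    shown_sets = [set(c.items()) for c in concepts]
    accepted_sets = [s for s, ok in zip(shown_sets, accepted) if ok]
    # Shown at least once but never part of an accepted concept.
    unacceptable = set().union(*shown_sets) - set().union(*accepted_sets)
    if not accepted_sets:
        return unacceptable, set()
    # Present in every accepted concept, excluding levels that appeared in
    # every shown concept (those carry no information about screening).
    always_shown = set.intersection(*shown_sets)
    must_have = set.intersection(*accepted_sets) - always_shown
    return unacceptable, must_have


# Hypothetical Screener responses: only oral options were marked as possibilities.
concepts = [
    {"route": "oral", "cost": "low"},
    {"route": "injection", "cost": "low"},
    {"route": "oral", "cost": "high"},
    {"route": "injection", "cost": "high"},
]
accepted = [True, False, True, False]
# Flags ('route', 'injection') as a candidate 'Unacceptable' level and
# ('route', 'oral') as a candidate 'Must Have' level.
print(probe_candidates(concepts, accepted))
```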

4.1 Limitations

It is important to acknowledge some limitations of these methods. First, ACBC is not suited to all design problems. The ACBC technical documentation recommends ACBC as most appropriate for surveys with five or more attributes,[66] and in the single trial including fewer attributes, ACBC did not outperform a conventional choice-based CA survey.[66] It also suggests that ACBC studies include fewer than 12 attributes, with no more than seven levels per attribute.[66] However, investigators interested in a greater number of attributes or attribute levels can use pre-BYO questions to reduce the number of attributes or attribute levels carried forward into the BYO section of the survey.[66]
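
The rules of thumb above can be encoded as a simple pre-fielding check. The thresholds are those summarized in this section (at least five attributes, fewer than 12, and no more than seven levels per attribute); the function and its input format are hypothetical.

```python
def check_acbc_design(levels_per_attribute, min_attributes=5, max_attributes=11, max_levels=7):
    """Return warnings for a proposed attribute list (hypothetical helper).

    levels_per_attribute: dict attribute name -> number of levels.
    """
    warnings = []
    n = len(levels_per_attribute)
    if n < min_attributes:
        warnings.append(f"{n} attributes: ACBC is recommended for {min_attributes} or more")
    if n > max_attributes:
        warnings.append(f"{n} attributes: consider pre-BYO questions to reduce "
                        "the attributes carried into the BYO")
    for name, n_levels in levels_per_attribute.items():
        if n_levels > max_levels:
            warnings.append(f"{name!r} has {n_levels} levels (more than {max_levels}): "
                            "consider pre-BYO questions to drop levels")
    return warnings


# Hypothetical four-attribute design, similar in size to the fast food trial.
print(check_acbc_design({"brand": 4, "price": 5, "size": 3, "side": 3}))
print(check_acbc_design({f"attr_{i}": 3 for i in range(6)}))  # []
```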

Second, because ACBC uses BYO choices to compose concepts for the Screener section, and choices in the Screener section to design the Choice Tournament, ACBC surveys must be administered by computer. Although it is not possible to conduct paper-and-pencil versions, ACBC surveys can be administered using Computer-Administered Personal Interview programs or computers with wireless internet access.

Third, ACBC does not currently permit alternative-specific designs, in which some attributes or attribute levels apply to some concept alternatives but not to others (e.g. adverse effects associated with one medication but not another).[14]

The limitations of the available evidence also merit consideration. For example, it is difficult to determine whether the improved prediction observed in some ACBC studies results from the additional time that informants spend completing ACBC surveys; the greater attention that informants appear to devote to the decision-making process; the convergence of methods achieved by including a BYO task, a Screener section, and a Choice Tournament; the increase in data that ACBC surveys yield; or the greater efficiency of choice sets composed of concepts with similar utilities (utility balance).[77] A meta-analytic study of 35 commercial data sets, which found little improvement in prediction beyond 10–15 choice tasks,[88] suggests that the improvement is not simply a result of a longer survey process.[66]

With the exception of the study reported by Chapman et al.,[78] which found that ACBC yielded more accurate market share predictions than conventional choice-based CA surveys, we found no trials comparing the predictive validity of these methods in real-world settings. Although reports describing the application of ACBC to marketing problems were generally favorable, there is a need to examine the utility of these methods in health service settings. Estimating the value of treatments or outcomes that may be more abstract and less familiar than the products and services encountered in marketing applications may pose difficulties for these methods.[89,90] Moreover, we know little about the effects of allowing patients to designate ‘Unacceptable’ or ‘Must Have’ attribute levels before the benefits and risks of these options are fully explained and understood.

Finally, many of the studies cited in this article were conducted by researchers affiliated with Sawtooth Software. Meta-analytic reviews suggest that industry-sponsored randomized trials yield systematically larger effect sizes than those conducted with independent funding.[91–93] There is a need for independent studies comparing different approaches to the conduct of discrete-choice studies.

5. Conclusions

The ACBC software suite was designed to create a more relevant and engaging experience, enhance the quality of the data collected in choice-based CA surveys, improve utility estimation, and increase the validity of real-world predictions. Preliminary evidence from marketing research applications suggests that ACBC may represent a useful approach to involving users in a more patient-centered health service delivery process.

Notes

The term ‘concepts’ refers to the multi-attribute product or service options included in ACBC and choice-based conjoint surveys.

The ‘none threshold’ can be used to estimate the percentage of participants who would prefer none of the options presented in simulations.[14]
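
Given individual utilities, such a simulation reduces to a count. The sketch below assumes each respondent has a utility for every simulated concept and a ‘none’ utility (however that threshold was estimated), and applies a first-choice rule: a respondent is counted as choosing none when the ‘none’ utility exceeds the utility of every concept. The function name and data are hypothetical, and other simulation rules (e.g. logit shares) would give different figures.

```python
def none_share(concept_utilities, none_utilities):
    """Percentage of respondents who would choose none of the concepts
    under a first-choice rule (hypothetical helper).

    concept_utilities: one list of concept utilities per respondent.
    none_utilities: each respondent's 'none' threshold utility.
    """
    if len(concept_utilities) != len(none_utilities) or not none_utilities:
        raise ValueError("need one non-empty entry per respondent")
    n_none = sum(
        1 for utils, none in zip(concept_utilities, none_utilities) if none > max(utils)
    )
    return 100.0 * n_none / len(none_utilities)


# Hypothetical utilities for four respondents and two simulated concepts.
print(none_share([[1.0, 0.5], [0.2, 0.1], [2.0, 1.5], [0.0, -1.0]],
                 [0.8, 0.3, 1.0, 0.5]))  # 50.0
```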

Investigators sometimes present one or more hold-out choice tasks to all participants. Hold-out tasks are not included in the design of the experiment or the estimation of utilities. The accuracy with which simulations using the utilities derived from the remaining choice tasks predict either individual hold-out task choices (hit rates), or the overall percentage of participants selecting each hold-out task option (share of preference), is thought to reflect the study’s internal validity.[14]
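
Hit rates follow directly from this definition. In the sketch below (hypothetical names and data), each respondent's predicted choice is the hold-out option with the highest utility computed from the part-worths estimated on the remaining tasks; ties go to the first option listed. Comparing the result with chance, 1/k for a k-option task, helps interpret it.

```python
def hit_rate(holdout_utilities, actual_choices):
    """Proportion of respondents whose highest-utility hold-out option
    matches the option they actually chose (hypothetical helper)."""
    if len(holdout_utilities) != len(actual_choices) or not actual_choices:
        raise ValueError("need one non-empty entry per respondent")
    hits = 0
    for utils, chosen in zip(holdout_utilities, actual_choices):
        predicted = max(range(len(utils)), key=utils.__getitem__)  # first maximum wins ties
        hits += predicted == chosen
    return hits / len(actual_choices)


# Hypothetical data: three respondents, three-option hold-out task (chance = 1/3).
utilities = [[1.0, 2.0, 0.0], [3.0, 1.0, 1.0], [0.0, 0.0, 5.0]]
choices = [1, 0, 1]  # index of the option each respondent actually chose
print(round(hit_rate(utilities, choices), 3))  # 0.667
```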

Although preferences for the levels of some attributes might have a natural order (e.g. treatment effect size or frequency of adverse events), respondent errors may lead to utility values violating these assumptions (e.g. reversals). Some statistical packages, therefore, allow analysts to impose constraints that require utilities to increase or decrease monotonically.[14]
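
One way to see what a monotonicity constraint does is isotonic regression by the pool-adjacent-violators algorithm, which replaces any run of reversed utilities with their average. This is an illustrative post-hoc sketch applied to one respondent's part-worths on an ordered attribute; it is not necessarily how any particular statistical package imposes the constraint during estimation, and the values are hypothetical.

```python
def monotone_increasing(utilities):
    """Least-squares non-decreasing fit (pool-adjacent-violators)."""
    blocks = []  # each block: [mean, count]
    for u in utilities:
        blocks.append([u, 1])
        # Merge backwards while the previous block exceeds the last one.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            mean2, count2 = blocks.pop()
            mean1, count1 = blocks.pop()
            count = count1 + count2
            blocks.append([(mean1 * count1 + mean2 * count2) / count, count])
    fitted = []
    for mean, count in blocks:
        fitted.extend([mean] * count)
    return fitted


def monotone_decreasing(utilities):
    """Non-increasing fit, e.g. for the frequency of adverse events."""
    return [-u for u in monotone_increasing([-u for u in utilities])]


# Hypothetical part-worths for three increasing effect sizes with a reversal.
print(monotone_increasing([-0.75, 0.5, 0.25]))  # [-0.75, 0.375, 0.375]
```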

References

Wait S, Nolte E. Public involvement policies in health: exploring their conceptual basis. Health Econ Policy Law 2006 Apr; 1(2): 149–62

Lomas J. Reluctant rationers: public input to health care priorities. J Health Serv Res Policy 1997 Apr; 2(2): 103–11

Swift JK, Callahan JL. The impact of client treatment preferences on outcome: a meta-analysis. J Clin Psychol 2009 Feb 18; 65(4): 368–81

Adamson SJ, Bland JM, Hay EM, et al. Patients’ preferences within randomised trials: systematic review and patient level meta-analysis. BMJ 2008 Oct; 337: a1864

Ryan M. Discrete choice experiments in health care. BMJ 2004 Feb 14; 328(7436): 360–1

Bridges JFP, Kinter ET, Kidane L, et al. Things are looking up since we started listening to patients: trends in the application of conjoint analysis in health 1982–2007. Patient 2008 Oct 1; 1(4): 273–83

Lancsar E, Louviere J. Conducting discrete choice experiments to inform healthcare decision making: a user’s guide. Pharmacoeconomics 2008; 26(8): 661–77

Ryan M, Scott DA, Reeves C, et al. Eliciting public preferences for healthcare: a systematic review of techniques. Health Technol Assess 2001; 5(5): 1–186

Cunningham CE, Deal K, Rimas H, et al. Using conjoint analysis to model the preferences of different patient segments for attributes of patient-centred care. Patient 2008 Oct 1; 1(4): 317–30

Fraenkel L, Bogardus Jr ST, Concato J, et al. Treatment options in knee osteoarthritis: the patient’s perspective. Arch Intern Med 2004 Jun 28; 164(12): 1299–304

Fraenkel L, Chodkowski D, Lim J, et al. Patients’ preferences for treatment of hepatitis C. Med Decis Making 2010; 30(1): 45–57

Fraenkel L. Conjoint analysis at the individual patient level: issues to consider as we move from a research to a clinical tool. Patient 2008 Oct 1; 1(4): 251–3

Luce RD, Tukey JW. Simultaneous conjoint measurement: a new type of fundamental measurement. J Math Psychol 1964; 1(1): 1–27

Orme BK. Getting started with conjoint analysis: strategies for product design and pricing research. Madison (WI): Research Publishers, 2009

Gustafsson A, Herrmann A, Huber F. Conjoint analysis as an instrument of market research practice. In: Gustafsson A, Herrmann A, Huber F, editors. Conjoint measurement. Berlin: Springer, 2007: 3–34

Hensher DA, Rose JM, Greene WH. Applied choice analysis: a primer. New York: Cambridge University Press, 2005

Ryan M, Gerard K, Amaya-Amaya M. Using discrete choice experiments to value health and health care. New York: Springer, 2007

Hanley N, Ryan M, Wright R. Estimating the monetary value of health care: lessons from environmental economics. Health Econ 2003 Jan; 12(1): 3–16

Lancsar E, Louviere J, Flynn T. Several methods to investigate relative attribute impact in stated preference experiments. Soc Sci Med 2007 Apr; 64(8): 1738–53

Brown DS, Johnson FR, Poulos C, et al. Mothers’ preferences and willingness to pay for vaccinating daughters against human papillomavirus. Vaccine 2010 Feb 17; 28(7): 1702–8

Telser H, Zweifel P. Measuring willingness-to-pay for risk reduction: an application of conjoint analysis. Health Econ 2002 Mar; 11(2): 129–39

DeSarbo WS, Ramaswamy V, Cohen SH. Market segmentation with choice-based conjoint analysis. Mark Lett 1995; 6(2): 137–47

Ramaswamy V, Cohen SH. Latent class models for conjoint analysis. In: Gustafsson A, Herrmann A, Huber F, editors. Conjoint measurement: methods and applications. 4th ed. New York: Springer, 2007: 295–320

Huber J, Orme BK, Miller R. Dealing with product similarity in conjoint simulations. In: Gustafsson A, Herrmann A, Huber F, editors. Conjoint measurement: methods and applications. 4th ed. New York: Springer, 2007: 347–62

Ryan M, Skatun D, Major K. Using discrete choice experiments to go beyond clinical outcomes when evaluating clinical practice. In: Ryan M, Gerard K, Amaya-Amaya M, editors. Using discrete choice experiments to value health and health care. New York: Springer, 2007: 101–16

Bridges JF. Future challenges for the economic evaluation of healthcare: patient preferences, risk attitudes and beyond. Pharmacoeconomics 2005; 23(4): 317–21

Ryan M. Using conjoint analysis to take account of patient preferences and go beyond health outcomes: an application to in vitro fertilisation. Soc Sci Med 1999 Feb; 48(4): 535–46

Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use. 4th ed. New York: Oxford University Press, 2008

Tourangeau R, Yan T. Sensitive questions in surveys. Psychol Bull 2007 Sep; 133(5): 859–83

Caruso EM, Rahnev DA, Banaji MR. Using conjoint analysis to detect discrimination: revealing covert preferences from overt choices. Soc Cogn 2009; 27(1): 128–37

Phillips KA, Johnson FR, Maddala T. Measuring what people value: a comparison of ‘attitude’ and ‘preference’ surveys. Health Serv Res 2002 Dec; 37(6): 1659–79

Kuhfeld WF, Tobias RD, Garratt M. Efficient experimental design with marketing research applications. J Market Res 1994; 31(4): 545–57

Patterson M, Chrzan K. Partial profile discrete choice: what’s the optimal number of attributes? In: Sawtooth Software Inc., editors. 2003 Sawtooth Software Conference Proceedings; 2003 April 15–17; San Antonio (TX). Sequim (WA): Sawtooth Software Inc., 2003: 173–85

Louviere JJ, Hensher DA, Swait JD. Conjoint preference elicitation methods in the broader context of random utility theory preference elicitation methods. In: Gustafsson A, Herrmann A, Huber F, editors. Conjoint measurement: methods and applications. 4th ed. New York: Springer, 2007: 167–97

Bridges JF. Stated preference methods in health care evaluation: an emerging methodological paradigm in health economics. Appl Health Econ Health Policy 2003; 2(4): 213–24

Shah AK, Oppenheimer DM. Heuristics made easy: an effort-reduction framework. Psychol Bull 2008; 134(2): 207–22

Chinburapa V, Larson LN, Brucks M, et al. Physician prescribing decisions: the effects of situational involvement and task complexity on information acquisition and decision making. Soc Sci Med 1993 Jun; 36(11): 1473–82

Gilbride TJ, Allenby GM. A choice model with conjunctive, disjunctive, and compensatory screening rules. Mark Sci 2004; 23(3): 391–406

Kahneman D, Frederick S. Representativeness revisited: attribute substitution in intuitive judgment. In: Gilovich T, Griffin D, Kahneman D, editors. Heuristics and biases: the psychology of intuitive judgment. 1st ed. New York: Cambridge University Press, 2002: 49–81

Mukherjee K. A dual system model of preferences under risk. Psychol Rev 2010 Jan; 117(1): 243–55

Bettman JR, Luce MF. Constructive consumer choice processes. J Consum Res 1998 Dec; 25(3): 187–219

Gibbons FX, Houlihan AE, Gerrard M. Reason and reaction: the utility of a dual-focus, dual-processing perspective on promotion and prevention of adolescent health risk behaviour. Br J Health Psychol 2009 May; 14(Pt 2): 231–48

Peters E, Diefenbach MA, Hess TM, et al. Age differences in dual information-processing modes: implications for cancer decision making. Cancer 2008 Dec 15; 113(12 Suppl.): 3556–67

Slovic P, Peters E, Finucane ML, et al. Affect, risk, and decision making. Health Psychol 2005; 24(4): S35–40

Croskerry P. Clinical cognition and diagnostic error: applications of a dual process model of reasoning. Adv Health Sci Educ Theory Pract 2009 Sep; 14 Suppl. 1: 27–35

Croskerry P. A universal model of diagnostic reasoning. Acad Med 2009 Aug; 84(8): 1022–8

Norman G. Dual processing and diagnostic errors. Adv Health Sci Educ Theory Pract 2009 Sep; 14 Suppl. 1: 37–49

Kuo WJ, Sjostrom T, Chen YP, et al. Intuition and deliberation: two systems for strategizing in the brain. Science 2009 Apr 24; 324(5926): 519–22

Hauser J, Ding M, Gaskin S. Non-compensatory (and compensatory) models of consideration-set decisions. In: Sawtooth Software Inc., editors. 2009 Sawtooth Software Conference Proceedings; 2009 Mar 25–27; Delray Beach (FL). Sequim (WA): Sawtooth Software Inc., 2009: 207–32

Hauser J, Ely D, Yee M, et al. Must have aspects vs. tradeoff aspects in models of customer decisions. In: Sawtooth Software Inc., editors. 2006 Sawtooth Software Conference Proceedings; 2006 Mar 29–31; Delray Beach (FL). Sequim (WA): Sawtooth Software Inc., 2006: 169–81

Cunningham CE, Deal K, Rimas H, et al. Modeling the information preferences of parents of children with mental health problems: a discrete choice conjoint experiment. J Abnorm Child Psychol 2008 Oct; 36(7): 1128–38

Cunningham CE, Vaillancourt T, Rimas H, et al. Modeling the bullying prevention program preferences of educators: a discrete choice conjoint experiment. J Abnorm Child Psychol 2009 May 20; 37(7): 929–43

Cunningham CE, Deal K, Rimas H, et al. Providing information to parents of children with mental health problems: a discrete choice conjoint analysis of professional preferences. J Abnorm Child Psychol 2009 Nov; 37(8): 1089–102

Cunningham CE, Deal K, Neville A, et al. Modeling the problem-based learning preferences of McMaster University undergraduate medical students using a discrete choice conjoint experiment. Adv Health Sci Educ Theory Pract 2006 Aug; 11(3): 245–66

Johnson RM. History of ACA [Sawtooth Software research paper series]. Sequim (WA): Sawtooth Software, 2001 [online]. Available from URL: http://www.sawtoothsoftware.com/download/techpap/histaca.pdf [Accessed 2010 Aug 8]

Fraenkel L, Gulanski B, Wittink D. Patient treatment preferences for osteoporosis. Arthritis Rheum 2006 Oct 15; 55(5): 729–35

Fraenkel L, Gulanski B, Wittink D. Patient willingness to take teriparatide. Patient Educ Couns 2007 Feb; 65(2): 237–44

Bell RA, Paterniti DA, Azari R, et al. Encouraging patients with depressive symptoms to seek care: a mixed methods approach to message development. Patient Educ Couns 2010 Feb; 78(2): 198–205

Dwight-Johnson M, Lagomasino IT, Aisenberg E, et al. Using conjoint analysis to assess depression treatment preferences among low-income Latinos. Psychiatr Serv 2004 Aug; 55(8): 934–6

Dwight-Johnson M, Sherbourne CD, Liao D, et al. Treatment preferences among depressed primary care patients. J Gen Intern Med 2000 Aug; 15(8): 527–34

Pieterse AH, Berkers F, Baas-Thijssen MC, et al. Adaptive conjoint analysis as individual preference assessment tool: feasibility through the internet and reliability of preferences. Patient Educ Couns 2010 Feb; 78(2): 224–33

Stiggelbout AM, de Vogel-Voogt E, Noordijk EM, et al. Individual quality of life: adaptive conjoint analysis as an alternative for direct weighting? Qual Life Res 2008 May; 17(4): 641–9

Beusterien KM, Dziekan K, Schrader S, et al. Patient preferences among third agent HIV medications: a US and German perspective. AIDS Care 2007 Sep; 19(8): 982–8

Gan TJ, Lubarsky DA, Flood EM, et al. Patient preferences for acute pain treatment. Br J Anaesth 2004 May; 92(5): 681–8

Halme M, Linden K, Kaaria K. Patients preferences for generic and branded over-the-counter medicines: an adaptive conjoint analysis approach. Patient 2009; 2(4): 243–55

Sawtooth Software, Inc. ACBC technical paper [Sawtooth Software technical paper series]. Sequim (WA): Sawtooth Software, Inc., 2009 [online]. Available from URL: http://www.sawtoothsoftware.com/download/techpap/acbctech.pdf [Accessed 2010 Aug 8]

Johnson RM, Orme BK. A new approach to adaptive CBC [Sawtooth Software research paper series]. Sequim (WA): Sawtooth Software, Inc., 2007 [online]. Available from URL: http://www.sawtoothsoftware.com/download/techpap/acbc10.pdf [Accessed 2010 Aug 8]

Sawtooth Software. Adaptive choice (ACBC) [online]. Available from URL: http://www.sawtoothsoftware.com/products/acbc/ [Accessed 2010 Aug 8]

Johnson R, Orme BK, Pinnell J. Simulating market preference with ‘build your own’ data. In: Sawtooth Software Inc., editors. 2006 Sawtooth Software Conference Proceedings; 2006 Mar 29–31; Delray Beach (FL). Sequim (WA): Sawtooth Software, Inc., 2006: 239–53

Goodwin RJ, editor. Introduction of quantitative marketing research solutions in a traditional manufacturing firm: practical experiences. 2009 Sawtooth Software Conference Proceedings; 2009 Mar 25–27; Delray Beach (FL). Sequim (WA): Sawtooth Software Inc., 2009

Arora N, Allenby GM, Ginter JL. A hierarchical Bayes model of primary and secondary demand. Mark Sci 1998; 17(1): 29–44

Allenby GM, Arora N, Ginter JL. Incorporating prior knowledge into the analysis of conjoint studies. J Market Res 1995; 32: 152–62

Lenk PJ, DeSarbo WS, Green PE, et al. Hierarchical Bayes conjoint analysis: recovery of partworth heterogeneity from reduced experimental designs. Mark Sci 1996 Mar; 15(2): 173–92

Allenby GM, Fennell G, Huber J, et al. Adjusting choice models to better predict market behavior. Mark Lett 2005 Dec; 16(3–4): 197–208

Louviere JJ, Street D, Burgess L, et al. Modeling the choices of individual decision-makers by combining efficient choice experiment designs with extra preference information. J Choice Model 2008; 1: 128–63

Streiner DL, Norman GR. PDQ epidemiology. 3rd ed. New York: McGraw-Hill Medical, 2009

Huber J, Zwerina K. The importance of utility balance in efficient choice designs. J Mark Res 1996 Aug; 33(3): 307–17

Chapman CN, Alford JL, Johnson C, et al. CBC vs. ACBC: comparing results with real product selection. In: Sawtooth Software Inc., editors. 2009 Sawtooth Software Conference Proceedings; 2009 Mar 25–27; Delray Beach (FL). Sequim (WA): Sawtooth Software, Inc., 2009: 199–206

Johnson RM. Including holdout choice tasks in conjoint studies [Sawtooth Software research paper series]. Sequim (WA): Sawtooth Software, Inc., 1997 [online]. Available from URL: http://www.sawtoothsoftware.com/download/techpap/inclhold.pdf [Accessed 2010 Aug 8]

Orme BK, Johnson RM. Testing adaptive CBC: shorter questionnaires and BYO vs. ‘most likelies’ [Sawtooth Software research paper series]. Sequim (WA): Sawtooth Software, Inc., 2008 [online]. Available from URL: http://www.sawtoothsoftware.com/download/techpap/acbc3home.pdf [Accessed 2010 Aug 8]

Kurz P, editor. A comparison between adaptive choice based conjoint, partial profile choice based conjoint and choice based conjoint. SKIM/Sawtooth Software Training Event; 2009 May 25–27; Prague. Sequim (WA): Sawtooth Software Inc., 2009

Orme BK. Fine-tuning CBC and adaptive CBC questionnaires [Sawtooth Software research paper series]. Sequim (WA): Sawtooth Software, Inc., 2009 [online]. Available from URL: http://www.sawtoothsoftware.com/download/techpap/finetune.pdf [Accessed 2010 Aug 8]

Neggers R, Hoogerbrugge M, editors. ACBC test in mobile telephony. SKIM/Sawtooth Software Conference; 2009 May 25–27; Prague. Sequim (WA): Sawtooth Software, Inc., 2009

Binner S, Neggers R, Hoogerbrugge M, editors. ACBC: a case study. SKIM/Sawtooth Software Training Event; 2009 May 25–27; Prague. Sequim (WA): Sawtooth Software, Inc., 2009

Johnson FR, Ozdemir S, Manjunath R, et al. Factors that affect adherence to bipolar disorder treatments: a stated-preference approach. Med Care 2007 Jun; 45(6): 545–52

Ryan M, Watson V, Entwistle V. Rationalising the ‘irrational’: a think aloud study of discrete choice experiment responses. Health Econ 2009 Mar; 18(3): 321–36

Glockner A, Betsch T. Multiple-reason decision making based on automatic processing. J Exp Psychol Learn Mem Cogn 2008 Sep; 34(5): 1055–75

Hoogerbrugge M, van der Wagt K. How many choice tasks should we ask? In: Sawtooth Software Inc., editors. 2006 Sawtooth Software Conference Proceedings; 2006 Mar 29–31; Delray Beach (FL). Sequim (WA): Sawtooth Software, Inc., 2006: 97–110

Johnson FR. Why not ask? Measuring patient preferences for healthcare decision making. Patient 2008 Oct 1; 1(4): 245–8

Telser H, Becker K, Zweifel P. Validity and reliability of willingness-to-pay estimates: evidence from two overlapping discrete-choice experiments. Patient 2008 Oct 1; 1(4): 283–98

Perlis RH, Perlis CS, Wu Y, et al. Industry sponsorship and financial conflict of interest in the reporting of clinical trials in psychiatry. Am J Psychiatry 2005 Oct; 162(10): 1957–60

Gluud LL. Bias in clinical intervention research. Am J Epidemiol 2006; 163(6): 493–501

Bhandari M, Busse JW, Jackowski D, et al. Association between industry funding and statistically significant pro-industry findings in medical and surgical randomized trials. Can Med Assoc J 2004; 170(4): 477–80

Acknowledgements

This project was supported by the Jack Laidlaw Chair in Patient-Centered Health at McMaster University Faculty of Health Sciences. Dr Mark Loeb provided helpful advice regarding the design of the H1N1 survey used to illustrate ACBC methods. Bryan Orme provided thoughtful comments during the preparation of this manuscript. Jenna Ratcliffe, Diana Urajnik, Stephanie Mielko, Heather Rimas, and Catherine Campbell provided editorial assistance.

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Cunningham, C.E., Deal, K. & Chen, Y. Adaptive Choice-Based Conjoint Analysis. Patient-Patient-Centered-Outcome-Res 3, 257–273 (2010). https://doi.org/10.2165/11537870-000000000-00000

DOI: https://doi.org/10.2165/11537870-000000000-00000