Abstract

Meta-analysis is a very useful tool to combine information from different sources. Fixed effect and random effect models are widely used in meta-analysis. Despite their popularity, they may give us misleading results if the models don’t fit the data but are blindly used. Therefore, like any statistical analysis, checking the model fitting is an important step. However, in practice, the goodness-of-fit in meta-analysis is rarely discussed. In this paper, we propose some tests to check the goodness-of-fit for the fixed and random effect models with assumption of normal distributions in meta-analysis. Through simulation study, we show that the proposed tests control type I error rate very well. To demonstrate the usefulness of the proposed tests, we also apply them to some real data sets. Our study shows that the proposed tests are useful tools in checking the goodness-of-fit of the normal models used in meta-analysis.

Similar content being viewed by others

Introduction

Due to technological advancement, the speed of generating large data is increasing. The tremendous amount of data provide us opportunities to answer many scientific questions. On the other hand, however, we are facing the challenge: how to extract useful information from different but related studies. Meta-analysis has been shown to be a very useful tool to combine information; it has being intensively used in data analysis1. For example, when we used “((meta-analy* or metaanaly* or metanaly* or pooled analy* or consorti*) or meta-analysis)” to retrieve researches on meta-analysis from the PubMed database (http://www.ncbi.nlm.nih.gov/pubmed), it returned 65,881 papers published within the most recent five years (as of February 8, 2015). This demonstrates that meta-analysis is a popular and useful statistical tool in data analysis.

Fixed effect (FE) model and random effect (RE) model are the two most commonly used models in meta-analysis, though some less frequently used methods, such as Bayesian meta-analysis and p-value combining approaches, are also available in the literature. In practice, many researchers conduct meta-analysis as follows. First, they perform the Cochran’s test to check the assumption of homogeneity of effects. If the assumption is not rejected, the FE model is used and the results from FE are reported. On the other hand, if this test provides evidence against the homogeneity assumption, the RE model is then used and the results from the RE model are used. Sometimes, results from both FE and RE models were reported1.

An important, but usually unanswered, question in meta-analysis is: how the models used fit the data. Just like in any statistical modeling, the goodness-of-fit test is a critical step to check the model adequacy. The reason we should not ignore this step is that results from an inadequate model may be misleading. Unfortunately, this issue is rarely, if not at all, discussed in practice. There are several possible explanations. First, although many software packages are available, they do not provide GoF tests. Second, many researchers who conduct meta-analysis have limited statistical background and are not aware of this issue and its consequences. Last but not least, no GoF test has been developed for meta-analysis in the literature, though some GoF tests have been proposed for generalized linear mixed models2,3,4,5,6.

To fill the gap, in this paper we propose some GoF test approaches for meta-analysis to assess if the data are jointly normally distributed, regardless of the type of the mean effects. Through simulation we show that the proposed tests can control type I error rate. We also conduct some simulations to compare the power performance among the proposed tests. Finally, the proposed tests are applied to two real data sets to demonstrate their usefulness.

Method

Suppose we have statistics yi (e.g., mean difference, effect size, log odds ratio, etc.) and its variance, vi, for study i (i = 1, 2, …, K) of K related but independent studies. We want to combine the information from those K studies using the following random effect model:

where the independent study-specific effects, βi’s, represent a random sample from a grand normal population with overall mean μ (e.g, μ = ln(OR) with OR being the overall OR across studies for the binary trait, or the average mean difference across studies for the quantitative trait) and between-study variance τ2. In other words, we assume  are independently and identically distributed as

are independently and identically distributed as  . We also assume the study error terms are independent and follow a normal distribution, i.e,

. We also assume the study error terms are independent and follow a normal distribution, i.e,  . Note that here the variance

. Note that here the variance  for each individual study may not be the same.

for each individual study may not be the same.

When τ2 = 0, the random effect model (1) becomes a fixed effect model. Therefore, fixed effect model is a special case of the random effect model. The parameters  and

and  in (1) are usually estimated through a two-step approach. In the first step,

in (1) are usually estimated through a two-step approach. In the first step,  will be estimated using one of many different estimators7,8,9,10,11,12. In general, those approaches give similar results and none of them performs uniformly the best. In addition, among those estimators, the DerSimonian-Laird (DL) method is most commonly used. Therefore, in this paper the DL estimator is used.

will be estimated using one of many different estimators7,8,9,10,11,12. In general, those approaches give similar results and none of them performs uniformly the best. In addition, among those estimators, the DerSimonian-Laird (DL) method is most commonly used. Therefore, in this paper the DL estimator is used.

The parameter, τ2, which measures the between-study variance, is estimated by10:

where  ,

,  and

and  is the statistic of the Cochran’s test for homogeneity13,14 where the null hypothesis is

is the statistic of the Cochran’s test for homogeneity13,14 where the null hypothesis is  . Q is defined as follows:

. Q is defined as follows:

where

Note that under the normality assumption in model (1) and the null hypothesis  for the Cochran’s test, Q has a chi-square distribution with degrees of freedom (df) equal to K-1. A large value of Q (or a small p-value from the Cochran’s test) indicates the lack of fit of the fixed effect model; a random effect model as (1) is then usually performed.

for the Cochran’s test, Q has a chi-square distribution with degrees of freedom (df) equal to K-1. A large value of Q (or a small p-value from the Cochran’s test) indicates the lack of fit of the fixed effect model; a random effect model as (1) is then usually performed.

In the second step, the overall effect of  is then estimated by the weighted mean

is then estimated by the weighted mean  and its variance is estimated by Var

and its variance is estimated by Var . Then the hypothesis is tested for overall effect across all studies as: H0: μ = 0 vs H1: μ ≠ 0. The Wald test,

. Then the hypothesis is tested for overall effect across all studies as: H0: μ = 0 vs H1: μ ≠ 0. The Wald test,  asymptotically under H0, is the standard test of the hypothesis about the overall mean.

asymptotically under H0, is the standard test of the hypothesis about the overall mean.

Like any statistical approach, model checking is a critical step as the results from a model with lack of fit may be misleading. Several goodness-of-fit tests have been proposed in the literature for the generalized linear mixed models2,3,4,5,6, which include the random effect models as special cases. However, unlike the ordinary random effect models where the within cluster error terms are assumed to be independently and identically distributed (iid) as a normal distribution, the RE model (1) in meta-analysis assumes the error terms  have known and possible different variances. Therefore, those existing goodness-of-fit tests designed for the generalized linear mixed models are inapplicable for the random effect model (1) in meta-analysis.

have known and possible different variances. Therefore, those existing goodness-of-fit tests designed for the generalized linear mixed models are inapplicable for the random effect model (1) in meta-analysis.

To fill this gap, we propose some new goodness-of-fit tests for the random effect model (1). It can be easily shown that under model (1),  has a multivariate normal distribution, i.e.,

has a multivariate normal distribution, i.e.,  , where the covariance matrix

, where the covariance matrix  . If the Cochran test statistic Q is large, from (2) we know that

. If the Cochran test statistic Q is large, from (2) we know that  is expected to be large as well. On the other hand, if

is expected to be large as well. On the other hand, if  is large enough, the covariance matrix

is large enough, the covariance matrix  can be approximated by a matrix with all diagonal elements equal to a constant. In this case the yi’s can be seen as approximately K iid samples from a normal distribution. Therefore, the normality tests can be used to check the goodness-of-fit for model (1). In this paper, we propose several goodness-of-fit tests for model (1) based on the following popular and powerful normality tests: the Anderson-Darling (AD) test15,16, the Cramer-von Mises (CvM) test15,17,18 and the Shapiro-Wilk (SW) test19. In general, from model (1) the yi’s are samples from K independent but not identical normal distributions; the p-values from the normality tests are not accurate. However, to overcome this difficulty we can estimate those p-values based on resampling approaches, such as parametric bootstrap. Specifically, the proposed tests are conducted by the following steps:

can be approximated by a matrix with all diagonal elements equal to a constant. In this case the yi’s can be seen as approximately K iid samples from a normal distribution. Therefore, the normality tests can be used to check the goodness-of-fit for model (1). In this paper, we propose several goodness-of-fit tests for model (1) based on the following popular and powerful normality tests: the Anderson-Darling (AD) test15,16, the Cramer-von Mises (CvM) test15,17,18 and the Shapiro-Wilk (SW) test19. In general, from model (1) the yi’s are samples from K independent but not identical normal distributions; the p-values from the normality tests are not accurate. However, to overcome this difficulty we can estimate those p-values based on resampling approaches, such as parametric bootstrap. Specifically, the proposed tests are conducted by the following steps:

Step 1. For given  (i = 1, 2, …, K), calculate the statistics ad0, cvm0 and sw0, from the AD, CvM and SW tests, respectively;

(i = 1, 2, …, K), calculate the statistics ad0, cvm0 and sw0, from the AD, CvM and SW tests, respectively;

Step 2. Estimate  using (2);

using (2);

Step 3. Resample B sub-samples from  , where

, where  is the estimate of

is the estimate of  with

with  being replaced by its estimate

being replaced by its estimate  . For each sample j (j = 1, 2, …, B), calculate statistics, adj, cvmj and swj using the AD test, the CvM test and the SW test, respectively;

. For each sample j (j = 1, 2, …, B), calculate statistics, adj, cvmj and swj using the AD test, the CvM test and the SW test, respectively;

Step 4. The p-values are estimated by the portions of adj, cvmj and swj, which are greater than ad0, cvm0 and sw0, respectively.

Note that the parameter  in model (1) has no effect on the normality tests and therefore, in Step 3 we can simulate data from a multivariate normal distribution with mean vector equal to 0, instead of

in model (1) has no effect on the normality tests and therefore, in Step 3 we can simulate data from a multivariate normal distribution with mean vector equal to 0, instead of  .

.

To assess the performance of the proposed tests, we estimate their type I error rates and the detecting powers using simulated data. We also illustrate the use of the proposed tests through two real data applications.

Results

Simulation results

In the simulation study, we assume there are K (K = 5, 10, 15, 20, 30, 50) independent studies. We also assume the variances (vi) of the K effects are random samples from the uniform distribution U(a, b) with different values of a and b. To estimate the type I error rate, for the null hypothesis the parameter  takes three values 0, 0.1 and 1.0. The pair of (a, b) take values (0.1, 1.0), (0.01, 0.1) and (0.001, 0.01). Without loss of generality,

takes three values 0, 0.1 and 1.0. The pair of (a, b) take values (0.1, 1.0), (0.01, 0.1) and (0.001, 0.01). Without loss of generality,  are assumed iid samples from the normal distribution N(0,

are assumed iid samples from the normal distribution N(0, ). In our simulation study, we use B = 1,000 bootstraps to estimate p-value. The significance level 0.05 and 1,000 replicates are used to estimate the type I error rate and power.

). In our simulation study, we use B = 1,000 bootstraps to estimate p-value. The significance level 0.05 and 1,000 replicates are used to estimate the type I error rate and power.

Table 1 reports the estimated type I error rates for each of the three methods. It clearly shows that the proposed tests control type I error rate around the nominal level 0.05 no matter whether the effects are homogeneous ( = 0), somehow heterogeneous (

= 0), somehow heterogeneous ( is relatively small, e.g.,

is relatively small, e.g.,  = 0.1 while a = 0.1, b = 1), or highly heterogeneous (

= 0.1 while a = 0.1, b = 1), or highly heterogeneous ( is relatively large, e.g.,

is relatively large, e.g.,  = 0.1 while a = 0.001 and b = 0.01).

= 0.1 while a = 0.001 and b = 0.01).

, a, b) from 1,000 replicates at significant level 0.05.

, a, b) from 1,000 replicates at significant level 0.05.When assessing the detecting power, for the alternative hypothesis, we assume  in model (1) have the following distributions: (a) uniform U(-1, 1), (b) log-normal LN(0, 1), (c) double exponential or Laplace DE(0, 1), (d) Cauchy Cauchy(0, 1) and (e) t-distribution with df = 1, T1. For the error term,

in model (1) have the following distributions: (a) uniform U(-1, 1), (b) log-normal LN(0, 1), (c) double exponential or Laplace DE(0, 1), (d) Cauchy Cauchy(0, 1) and (e) t-distribution with df = 1, T1. For the error term,  , it is independently sampled from a normal distribution with mean 0 and variance vi, which is a random sample from the uniform distribution U(a, b). The values of the pair of (a, b) are set to be (0.001, 0.01), (0.001, 0.1), (0.001, 1) and (0.001, 10).

, it is independently sampled from a normal distribution with mean 0 and variance vi, which is a random sample from the uniform distribution U(a, b). The values of the pair of (a, b) are set to be (0.001, 0.01), (0.001, 0.1), (0.001, 1) and (0.001, 10).

Tables 2, 3, 4, 5, 6 report the estimated power values from each method under different settings. It can be seen that when study size (K) is small, all methods have relatively low power values. However, the detecting power increases when the sample size increases. In general, the power decreases when the variances vi’s and their heterogeneity increase (e.g, b increases). From the simulation results, we can observe that these three methods have similar performance and none of them is uniformly more powerful than the other two under all conditions.

Real data application

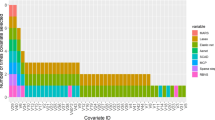

We use two real data sets of meta-analysis to illustrate the usefulness of the proposed tests. Both of the original data sets gave the estimated odds ratio (OR) and its 95% confidence interval (CI) for each study included in the meta-analyses. Table 7 lists the data set 1 from a meta-analysis of 12 trials that exam the effect of patient rehabilitation designed for geriatric patients on functional outcome improvement. The data were taken from Figure 4 of Riley et al.20, part of the Figure 2 of Bachmann et al.21. The p-value from the Cochran’s test for homogeneity was 0.021. Therefore, the authors ran the random effect model and estimated the overall OR as 1.36 with 95% CI (1.08, 1.71)20,21. It should be pointed out that with small sample size like K = 12 here, the Cochran test is anti-conservative22. Table 8 lists the data set 2, which were taken from Figure 2 of Danese and Tan23. In data set 2 there are 44 studies investigating the association between childhood maltreatment and obesity. The p-value from the Cochran’s test for homogeneity was very small (<0.0001); the authors then chose random effect model in their meta-analysis. The overall OR was estimated as 1.36 with 95% CI (1.26, 1.74).

In order to use the proposed tests, for data of OR and its 95% CI, as in Tables 7, 8, we first take the logarithm of the OR to get log(OR) and then use log(U/L)/3.92 to get the standard error (square root of vi) for each individual study, where U and L are the upper and lower limits of the 95% CI for OR. The above estimates of log(OR) and  are appropriate as the 95% CI of the odds ratio is usually calculated from

are appropriate as the 95% CI of the odds ratio is usually calculated from  , namely, the 95% CI for the log(OR) is

, namely, the 95% CI for the log(OR) is  , where

, where  is the estimated standard error of

is the estimated standard error of  . For data set 1, B = 105 bootstraps were used and the p-values were 0.025, 0.018 and 0.039 from the three proposed goodness-of-fit tests AD, CvM and SW, respectively. Those small p-values suggest that the random effect model may not be adequate for this data set. For data set 2, the p-values were 0.047, 0.037 and 0.048 from the AD test, the CvM test and the SW test, respectively. The goodness-of-fit of the random effect model in the meta-analysis was also rejected at significance level 0.05 by all of the three proposed tests.

. For data set 1, B = 105 bootstraps were used and the p-values were 0.025, 0.018 and 0.039 from the three proposed goodness-of-fit tests AD, CvM and SW, respectively. Those small p-values suggest that the random effect model may not be adequate for this data set. For data set 2, the p-values were 0.047, 0.037 and 0.048 from the AD test, the CvM test and the SW test, respectively. The goodness-of-fit of the random effect model in the meta-analysis was also rejected at significance level 0.05 by all of the three proposed tests.

Discussion

Meta-analysis is an important and useful tool for combining information from related studies. However, blindly using the tool may result in misleading results. Model checking is a critical step and should not be ignored. The goodness-of-fit tests proposed in this paper provide useful tool to check model adequacy in meta-analysis.

In practice, the Cochran’s test for homogeneity is usually performed in meta-analysis to see whether or not the fixed effect model fits the data. In general, if the hypothesis of homogeneity is rejected, the assumption of the fixed effect model is questionable. As a result, the random effect model is then usually used. However, little has been found in the literature about the adequacy of the random effect model in meta-analysis. Our proposed goodness-of-fit tests fill the gap.

It should be pointed out that the Cochran’s test for homogeneity was not a formally goodness-of-fit test for the fixed effect model, though in many situations rejecting the hypothesis of homogeneity indicates the inadequacy of the fixed effect model. This is because the Cochran’s test assumes individual effects are from normal distributions. When the sample size for each individual study is large, this assumption may be valid. However, when sample sizes are small for some studies, the effects from those studies may not follow the normal distribution. Therefore, it is possible that in a meta-analysis the Cochran’s test does not reject the homogeneity assumption, but the goodness-of-fit tests indicate the lack of fit for both fixed effect and random effect models.

In a meta-analysis, if there is evidence of lack of fit for the random effect model, which includes fixed effect model as a special case, what should we do next? Viechtbauer agued that even if the homogeneity assumption was rejected, it is still valid to use weighted or unweighted mean to estimate the overall effect for the K studies1. In addition, many methods based on combining p-values can be used as well in this situation24,25,26,27.

Lastly, the proposed tests can be easily extended to check the normality assumption of the random effects in generalized linear mixed models. Furthermore, the proposed tests can also be used to check the random effect assumption in the meta-regression analysis, which is a special case of the generalized linear mixed model and extends the random effect model (1) by adding some fixed effects of study-level covariates.

Additional Information

How to cite this article: Chen, Z. et al. Goodness-of-fit test for meta-analysis. Sci. Rep. 5, 16983; doi: 10.1038/srep16983 (2015).

References

Viechtbauer, W. Conducting meta-analyses in R with the metafor package. J Stat Softw 36, 1–48 (2010).

Pan, Z. & Lin, D. Goodness‐of‐Fit Methods for Generalized Linear Mixed Models. Biometrics 61, 1000–1009 (2005).

Lin, K.-C. & Chen, Y.-J. Assessing generalized linear mixed models using residual analysis. Int J Innov Comput I 8, 5693–5701 (2012).

González-Manteiga, W. & Crujeiras, R. M. An updated review of Goodness-of-Fit tests for regression models. Test 22, 361–411 (2013).

Tang, M., Slud, E. V. & Pfeiffer, R. M. Goodness of fit tests for linear mixed models. J Multivariate Anal 130, 176–193 (2014).

Wen, S.-Q. & Zhu, L.-X. Empirical likelihood based goodness-of-fit testing for generalized linear mixed models. Acta Mathematicae Applicatae Sinica, English Series 30, 37–48 (2014).

Sidik, K. & Jonkman, J. N. A note on variance estimation in random effects meta-regression. J. Biopharm. Stat. 15, 823–838 (2005).

Sidik, K. & Jonkman, J. N. Simple heterogeneity variance estimation for meta‐analysis. J. Roy. Stat. Soc. Ser. C. (Appl. Stat.) 54, 367–384 (2005).

Viechtbauer, W. Bias and efficiency of meta-analytic variance estimators in the random-effects model. J Educ Behav Stat 30, 261–293 (2005).

DerSimonian, R. & Laird, N. Meta-analysis in clinical trials. Control. Clin. Trials 7, 177–188 (1986).

Hedges, L. & Olkin, I. Statistical methods for meta-analysis. San Diego, CA: Academic (1985).

Hunter, J. E. & Schmidt, F. L. Methods of meta-analysis: Correcting error and bias in research findings. (Sage, 2004).

Cochran, W. G. Problems arising in the analysis of a series of similar experiments. Supplement to the Journal of the Royal Statistical Society 4, 102–118 (1937).

Chen, Z., Ng, H. K. T. & Nadarajah, S. A note on Cochran test for homogeneity in one-way ANOVA and meta-analysis. Stat Pap 55, 301–310 (2014).

D’Agostino, R. B. Goodness-of-fit-techniques. (CRC press, 1986).

Anderson, T. W. & Darling, D. A. A test of goodness of fit. J. Amer. Statist. Assoc. 49, 765–769 (1954).

Cramér, H. On the composition of elementary errors: First paper: Mathematical deductions. Scand Actuar J 1928, 13–74 (1928).

Von Mises, R. Wahrscheinlichkeit, statistik und wahrheit. (Berlin, 1928).

Shapiro, S. S. & Wilk, M. B. An analysis of variance test for normality (complete samples). Biometrika 52, 591–611 (1965).

Riley, R. D., Higgins, J. & Deeks, J. J. Interpretation of random effects meta-analyses. BMJ 342, d549 10.1136/bmj.d549 (2011).

Bachmann, S. et al. Inpatient rehabilitation specifically designed for geriatric patients: systematic review and meta-analysis of randomised controlled trials. BMJ 340, c1718, 10.1136/bmj.c1718 (2010).

Chen, Z. A Nonparametric Approach to Detecting the Difference of Survival Medians. Commun Stat Simulat, (in press), 10.1080/03610918.03612014.03964804 (2014).

Danese, A. & Tan, M. Childhood maltreatment and obesity: systematic review and meta-analysis. Mol. Psychiatry 19, 544–554, 10.1038/mp.2013.54 (2014).

Chen, Z. & Nadarajah, S. On the optimally weighted z-test for combining probabilities from independent studies. Comput. Stat. Data Anal. 70, 387–394 (2014).

Chen, Z. Is the weighted z‐test the best method for combining probabilities from independent tests? J. Evol. Biol. 24, 926–930 (2011).

Loughin, T. M. A systematic comparison of methods for combining p-values from independent tests. Comput. Stat. Data Anal. 47, 467–485 (2004).

Fisher, R. A. Statistical Methods for Research Workers. (Oliver and Boyd, 1932).

Acknowledgements

The first author would like to thank the support from several faculty research awards from the Indiana University School of Public Health-Bloomington.

Author information

Authors and Affiliations

Contributions

Z.C. conceived, guided and designed the project and conducted the study. G.Z. and J.L. participated in the study through computational implementation, data analysis and discussions. Z.C. wrote the manuscript, which was read and approved by all authors.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/

About this article

Cite this article

Chen, Z., Zhang, G. & Li, J. Goodness-of-fit test for meta-analysis. Sci Rep 5, 16983 (2015). https://doi.org/10.1038/srep16983

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/srep16983

This article is cited by

-

Robust tests for combining p-values under arbitrary dependency structures

Scientific Reports (2022)

-

The Relationship Between Physical Activity and Self-Compassion: a Systematic Review and Meta-analysis

Mindfulness (2021)

-

TRAIL gene 1595C/T polymorphisms contribute to the susceptibility and severity of intervertebral disc degeneration: a data synthesis

BMC Musculoskeletal Disorders (2017)

-

Association between C4, C4A, and C4B copy number variations and susceptibility to autoimmune diseases: a meta-analysis

Scientific Reports (2017)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.