Abstract

Rhythmic joint coordination is ubiquitous in daily-life human activities. In order to coordinate their actions towards shared goals, individuals need to co-regulate their timing and move together at the collective level of behavior. Remarkably, basic forms of coordinated behavior tend to emerge spontaneously as long as two individuals are exposed to each other’s rhythmic movements. The present study investigated the dynamics of spontaneous dyadic entrainment, and more specifically how they depend on the sensory modalities mediating informational coupling. By means of a novel interactive paradigm, we showed that dyadic entrainment systematically takes place during a minimalistic rhythmic task despite explicit instructions to ignore the partner. Crucially, the interaction was organized by clear dynamics in a modality-dependent fashion. Our results showed highly consistent coordination patterns in visually-mediated entrainment, whereas we observed more chaotic and more variable profiles in the auditorily-mediated counterpart. The proposed experimental paradigm yields empirical evidence for the overwhelming tendency of dyads to behave as coupled rhythmic units. In the context of our experimental design, it showed that coordination dynamics differ according to availability and nature of perceptual information. Interventions aimed at rehabilitating, teaching or training sensorimotor functions can be ultimately informed and optimized by such fundamental knowledge.

Similar content being viewed by others

Introduction

Humans are in remarkable ways influenced by what they hear and see, especially when they perceive other people moving. The exposure to rhythmic behaviors attracts individuals to fall in sync with one another, and the phenomenon can be observed at the level of dyads, small-groups and large crowds1. For instance, when two people walk or dance together, they show a natural tendency to synchronize their steps2,3,4. When an audience applauds at the end of the concert, the auditory scene goes through intermittent periods of synchronized clapping5. When marching bands cross each other on the street, it requires conscious effort for them to avoid getting rhythmically entrained6.

Rhythmic joint coordination is ubiquitous in daily-life human activities, from the simplest form of unintentional synchronization7 to complex tasks such as musical performance8. The way different rhythms organize into orderly coordinated patterns over time implies co-regulated actions in response to rhythms produced by other agents. Characteristic for this co-regulation is that action patterns are often spontaneously attracted towards stable synchronization, via a process called entrainment9. ‘Coordination dynamics’ has been proposed as a theoretical framework to understand the generic organizational principles that underlie the coordination of coupled rhythmic units7,9,10,11,12. Central to the theory is that the coordination of multiple units over time is the result of a dynamic balance between keeping the own rhythm (competition) and moving together on the collective level (cooperation)13. Such idea resonates in earlier work by von Holst14, who termed the ‘maintenance effect’ versus the ‘magnet effect’ in his study of coordination in animal behavior. When intrinsic and external rhythms are incongruent, rhythmic units engage in a dynamic competition-cooperation process.

When it comes to rhythmic interactions within a dyad, this dynamic balance can be influenced by manifold variables, such as preferred tempo15, empathy16 and social factors17. However, among all possible sources of variability, informational coupling between individuals stands out as the most fundamental condition for interpersonal coordination. The essential minimal requirement for human interactions to occur is that individuals mutually exchange information through one or more sensory channels. Although experimental interactive scenarios necessarily imply a determined sensory modality18,19,20 and possibly cross-modal interactions4, the role of competing sensory modalities remains overlooked in the literature. To this date, whether and how coordination dynamics depend on the nature of available perceptual information requires further systematic investigation.

The aim of the present study is to develop a dynamic experimental paradigm, and apply it for studying modality dependencies of competition-cooperation dynamics in dyadic entrainment. The paradigm involves a unimanual tapping task, where two individuals are exposed to minimally different tempi and reciprocally coupled in visual and auditory modalities across experimental conditions. The procedure implies a tension between competition and cooperation processes in the dyadic interaction, and is meant to return an empirical layout of its dynamics across sensory modalities.

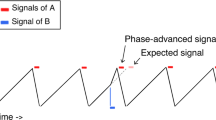

The core idea underlying the proposed experimental paradigm is to induce a competition between the intrinsic rhythm of an individual’s actions and the rhythm produced by another person. During a unimanual tapping task, two partners are exposed to two metronomes in different modalities (visual and auditory). The metronomes assigned to the partners slightly differ in frequency, resulting in a gradual increasing (0 to π rad) and decreasing (π to 0 rad) of absolute relative phase over multiple cycles. Accordingly, for each person, a corresponding cyclical de-phasing of the assigned metronome and partner’s metronome unfolds over time. With this simple expedient, we implemented a completely predictable system of two oscillators which deterministically revisits the same states throughout 10 consecutive cycles. An audio file showing metronomes’ behavior in available in the Supplementary materials.

When the partners are informationally coupled, they are exposed to two competing rhythms. The first one is the intended rhythm: participants are explicitly instructed to synchronize to their assigned metronome. The second rhythm is the coupling rhythm, which is produced by the partner and perceived in another sensory modality. Thus, when the members of the dyad follow their own auditory metronome, they are exposed to visual information from the partner (visual coupling). Alternatively, when the members of the dyad follow their own visual metronome, they are exposed to auditory information from the partner (auditory coupling). The same task performed in absence of informational coupling provides the control conditions for each modality, and a baseline to assess the impact of coupling on spontaneous dyadic entrainment. In presence of informational coupling, a cross-modal incongruency takes place between intended and coupling rhythms. The experimental design is schematized and described in Fig. 1.

Experimental design. The study was designed in a Modality (Visual, Auditory) x Coupling (Coupled, Uncoupled) factorial structure, resulting in the following conditions. 1. Visually Coupled. Participants tapped along with an auditory metronome, while looking at the partner’s hand tapping. The view of their own hand was hidden by a screen placed on the table. They were explicitly asked to neglect the partner’s movements and focus on following their own metronome. 2. Visually Uncoupled (control). Participants tapped along with an auditory metronome, while looking at their own hand. The view of the partner’s hand is hidden by a screen placed on the table. 3. Auditorily Coupled. Participants tapped along with a flickering LED, while hearing the sonification of the partner’s tapping. The view of all the hands was hidden by screens placed on the table. They were informed that the sounds they will hear are produced by the partner, and were explicitly asked to neglect them and focus on following their own metronome. 4. Auditorily Uncoupled (control). Participants tapped along with a flickering LED, while hearing the sonification of their own tapping. The view of all the hands was hidden with screens placed on the table.

In the framework of coordination dynamics, the concept of relative phase (ϕ) functions as a collective variable to describe co-regulation of timing. When competition dominates the interaction, rhythmic units may fully keep their own intrinsic frequency, whereas they may be fully attracted towards each other as cooperation prevails. The former case would typically result in an evenly spread distribution across ϕ (0 to 2π radians). In the latter case, the distribution would exhibit peaks over the so-called attractor regions, where rhythmic units are locked in temporally stable state. In human behavior, the intrinsic dynamics of the collective variable ϕ are well characterized by a layout of attractive and repelling fixed points, wherein in-phase (0 radians) and anti-phase (π radians) are the dominant stable modes of coordination. The Haken-Kelso-Bunz (HKB) model mathematically describes how phase transitions from one spatiotemporal pattern to another take place when the system is pushed beyond its equilibrium state21. The model points at the in-phase mode as the point where the system ultimately tends to reach stability.

Crucially, the behavior of the ‘drifting metronomes’ recursively guided the dyads through the whole range of ϕ values—and over its respective attractor regions. Based on a joint recurrence analysis of the system’s dynamics22, we computed a recurrence score as a metric of dyadic coupling strength and tracked its evolution over time (for a visual representation of the processing pipeline, see Figs. 2 and 3). By these means, we obtained an empirical attractor layout descriptive of the competition-cooperation dynamics within the dyads. The cooperation process (i.e., entrainment to the partner’s coupling rhythm) was expected to dominate the conflict around in-phase (0 radians) and anti-phase (π radians) points, leading to significantly higher recurrence score in presence of informational coupling and a significant modulation around attractor regions.

Analysis pipeline. The procedure is here presented as applied to the timeseries generated by the metronomes’ onsets, showing dyadic behavior under the ideal condition of perfect compliance with the task. (A) Starting from discrete onsets, (B) we interpolated each timeseries with a sine function to model the behavior as a system of coupled oscillators54 and (C) separately embed them in their respective phase-spaces for JRPs computation22. From the representation of one full cycle of the metronomes’ behavior, it is evident how the two oscillators smoothly de-phase and get back in-phase at the end of the cycle. (D) JRPs were computed of each individual cycle and (E) summed up, returning a 2-D matrix of the density of recurrence points over the trial structure. Finally, by looping over the column and summing all the rows of the matrix, (F) we compressed the representation into a 1-D timeseries of the total count of recurrences over the cycle structure. The presence of diagonal lines dominating the JRPs is exactly the configuration expected by a deterministic system22, and when compressed in the timeseries format result in a flat horizontal line with small periodic fluctuations (due to the down-sampling performed on the sinewaves prior to embedding, which was necessary to make JRPs calculations computationally feasible). Applying the pipeline to human dyads allowed us to map the local variations of their coupling strength over the expected relative phase, resulting in the picture of the ‘attractor landscape’34.

Recurrence score over average cycle. The sum of 10 JRPs from Visual Coupling and Visual Control conditions are compressed to a 1-D recurrence score timeseries. It is evident how visual coupling modulates the density of recurrence point and its distribution over the average cycle in this representative dyad (#5). Compared to the control condition, the recurrence score was higher on average and exhibited local maxima at the extremities of the cycle (i.e., in proximity of the in-phase attractor point).

Given the time-varying nature of the recurrence score as a response variable, we needed a method to model its local variations over the course of the metronomes’ cycles. We opted then for a statistical model which allowed us to assess the effects of our experimental manipulations over the entire unfolding of the interaction, and to avoid segmentation and multiple comparison while respecting the temporal structure of the data23,24. In addition to the inferences made possible by linear models based on intercepts, such as ANOVAs, our solution allowed to assess the effect of categorical predictors on the temporal profile of the response variable. In order to complement our observations at the dyadic level, we used the same framework to analyze the evolution of individual rhythmic behaviors over the course of the task.

Results

Growth curve analysis

25 was used to analyze the evolution of recurrence score over the course of the expected relative phase between partners (i.e., from 0 to 2π). The curves were calculated as the average of the 10 consecutive metronomes cycles in each experimental condition, and modeled with 2nd order orthogonal polynomials and fixed effects of Coupling and Modality on the polynomial terms. The uncoupled (control) conditions were treated as a baseline for contrasting the levels of the Coupling factor, and the audio conditions were treated as a baseline for the Modality factor.

In the context of orthogonal polynomials, a flat line can be considered a pure intercept and a ‘zero-order’ polynomial, in the sense that it exhibits zero changes in any direction. If the recurrence score was time-invariant, it would appear as a flat line indicating complete dominance of the competition process between rhythmic units, which would pursue their individual intended rhythms. On the other hand, significant changes of direction would indicate that the interaction is systematically modulated by the temporal structure of the task: around attractor points, the cooperation process would take over and the rhythmic units would be attracted towards the coupling rhythm. Figure 4 shows how orthogonal polynomials are suited for modelling local variations of the response variable around attractor points.

Orthogonal polynomials. The time-varying dimension of a response variable over the metronomes cycle can be modelled with higher-order polynomials. In our model, intercepts correspond to the overall average of the recurrence score: the main effects of categorical predictors indicate global differences across experimental conditions independently from the temporal profile of the response variable. On the other hand, effects of categorical predictors on the polynomial terms indicate the local effect of attractor points on the x-axis. For the linear component (1st order), the parameter estimate corresponds to the slope of the line; for the quadratic component (2nd order), it corresponds to the parabolic curvature; for the cubic and quartic components (3rd and 4th order), it corresponds to the sharpness of the peaks on the inflexion points. The order of the polynomial is ideally chosen based on hypothesis and on the nature of the data, should be confirmed by the empirical data, and should be allow a straightforward interpretation of the effects25. In our case, orders from 2 to 4 seem suited candidates for modelling different possible attractor landscapes, given the symmetric measure of the cycle and inflection points around in-phase and anti-phase regions. A full model based on orthogonal polynomials includes all terms up to the chosen order.

Orthogonal polynomials in the model were limited to the 2nd order: based on our hypotheses, we expected quadratic and quartic terms to capture the effects of in-phase and anti-phase attractor points. However, following data inspection and analysis of residuals, we concluded that the quadratic term alone was enough to capture the relevant effect of our experimental manipulation. Figure 5 shows the data with descriptive statistics across experimental conditions, and a comparison between 2nd order and 4th order models. Arguably, the better fit of the quartic term comes with a major cost in terms of model parsimony and interpretation. Our model also included random effects of Dyads on all polynomial terms, and their interactions with the factors: the random effects structure was maximized in order to minimize false alarm rates without substantial loss of power26.

Coupling strength as a function of the attractor landscape. (A) Recurrence score (on the log-scale) over the average of 10 cycles, for 14 dyads (colored dots). Grand-average and error bars are represented in black. The reader can visually appreciate the consistency of the “seagull-shaped” pattern in condition of visual coupling and the flat line in the respective control condition. The condition of auditory coupling shows a recurrence score higher on average but no clear pattern over the average cycle, and overall higher variability. (B) Recurrence score predicted by our 2nd order polynomial model. The inflection of the parabolic term and the negative slope of the linear term successfully capture the influence of the in-phase attractor points in the visually coupled condition. Arguably, this is the most parsimonious model for our data in the framework of growth curve analysis25. (C) Recurrence score predicted by our 4th order polynomial model. The quartic fit manages to capture more fine-grained modulations, particularly the local maxima on the inflection points at the extremes of the cycle. The profile recalls the typical ‘seagull-effect’ originally reported in34 and discussed in12 in the context of bimanual coordination dynamics. However, for the sake of interpretation of parameter estimates, we opted for the simplest possible model: given the absence of a local maximum on the anti-phase point, a single parabolic inflection point was sufficient to explain the effect of visual coupling. (D) Within-dyads comparison of the recurrence score over the average trial, across experimental conditions. Dots represent the empirical data, whereas continuous lines represent the full model (up to the 2nd order polynomial) fitted to the data.

As expected, we found a significant main effect of Coupling (Estimate = 42.809, SE = 18.142, p = 0.018) indicating an overall increase of the recurrence score in presence of informational coupling as compared to the uncoupled control conditions, independently from the sensory modality. We also report a significant interaction effect of Coupling and Modality on the orthogonal polynomial terms of Time (Linear: Estimate = −84.579, SE = 30.867, p = 0.006; Quadratic: Estimate = 190.320, SE = 36.973, p < 0.001), meaning that the parameter estimates in coupled conditions significantly differ across visual and auditory modalities. Given the observed major variability in the Auditory level and following inspection of the residuals, we fitted the same model on the natural log-transformed response variable for it provided a considerably better fit, and still found significant main effect of Coupling (Estimate = 0.237, SE = 0.117, p = 0.042) and interaction effects on the polynomial terms of Time (Linear: Estimate = −0.534, SE = 0.222, p = 0.016; Quadratic: Estimate = 1.257, SE = 0.299, p < 0.001). Table 1 shows the fixed effects parameter estimates and their standard errors for log-transformed recurrence score, along with p-values estimated using the normal approximation for the t-values.

The same model was then fitted to the phase error of the individual participants. The response variable was computed as the absolute phase difference between the finger-tapping and the metronomes timeseries, to quantify individual synchronization behavior. We found a significant main effect of Modality (Estimate = −0.230, SE = 0.080, p = 0.004), indicating that the assignment to visual metronomes resulted in higher phase error. Furthermore, the quasi-significant main effect of Coupling (Estimate = 0.156, SE = 0.080, p = 0.051) confirmed that informational coupling with the partner negatively affected the synchronization with the assigned metronome. When looking at the evolution of the phase error over time, the interaction effect of Coupling and Modality on the quadratic term of Time (Estimate = 1.058, SE = 0.373, p = 0.004) revealed that the temporal profile across conditions is coherent with the one observed for the recurrence score (see Fig. 6).

Individual synchronization as a function of the attractor landscape. (A) Phase error over the average of 10 cycles, for 28 participants (colored dots). Grand-average and error bars are represented in black. The reader can visually appreciate how individual rhythmic behaviors track the course of the recurrence score, supporting the idea of a dynamic balance between cooperation and competition processes. As the coupling between partners dominates the interaction, the magnitude of the phase error increases accordingly. (B) Phase error predicted by the 2nd order polynomial model. The "seagull-shaped" pattern observed in the visually coupled condition could be well modelled by the inflection of the parabolic term alone. Differently from the recurrence score, phase error exhibited a symmetric profile without significant interactions with the linear term.

In the context of growth curve analysis25, the parameter estimates provide a measure of effect size of straightforward interpretation for linear and non-linear changes over time as long as the order is not too high: above the 4th order, results become hardly interpretable and the risk of over-fitting the data increases. With the interaction effect of Coupling and Modality factors on the polynomial terms, we could quantify modality-specific effects of informational coupling on the evolution of the recurrence score and the individual synchronization performances. The results strongly support our predictions of spontaneous dyadic entrainment in presence of informational coupling and, crucially, show that its temporal dynamics are modality-dependent.

Discussion

The present study investigated the dynamics of dyadic entrainment, and more specifically how they depend on the sensory modality mediating informational coupling. An experimental paradigm was designed to dynamically manipulate the expected relative-phase between participants, while informational coupling was manipulated in visual and auditory modalities across conditions. At the global level, we wanted to prove that when intended and coupling rhythms are at odds, a spontaneous co-regulation of timing occurs within the dyad at the expenses of the individual intended rhythms. At the local level, we wanted to infer the configuration of a dyadic basin of attraction, by modelling the dyadic cooperation process around attractor points12. Ultimately, it was possible to evidence a crucial modality-dependency of dyadic entrainment.

In the first place, we reported that the mere presence of informational coupling led to spontaneous entrainment in dyads engaged in a rhythmic task. As long as two partners perceived each other, their rhythms were ‘attracted’ towards each other. The effect was quantified as a significant increase of the average recurrence score, compared to the baseline provided by the de-coupled conditions. Remarkably, such manifestation of self-organizing behavior12,27 emerged in the context of a task where subjects were explicitly instructed to ignore each other’s actions, pursuing independent uncoupled behaviors cued by their own reference. Even though the observation of spontaneous dyadic entrainment was reported in self-paced19,28, cued29,30 and joint improvisation tasks31,32,33, our procedure explicitly implemented rhythmic incongruency to investigate the competition-cooperation dynamic balance latent to the system’s dynamics13. When competition prevails, rhythmic units manage to keep the assigned (intended) rhythm, whereas when cooperation takes over, rhythmic units undergo a (spontaneous) co-regulation of timing and move together on a collective level of coordinated behavior. Overall, both visual and auditory coupling led to spontaneous dyadic entrainment despite the instruction of synchronizing with the assigned metronome. Moreover, the shift towards a cooperation process occurred at the expenses of the competing individual behaviors, as indicated by the significant increase of the average phase error in coupled conditions.

Beyond a global measure of coupling, the continuous de-phasing implemented in the ‘drifting metronomes’ paradigm allowed us to quantify local variations of recurrence score as a function of the expected relative phase between partners. The balance between cooperation and competition processes is expected to be dynamic, in the sense that it may vary over time and is constrained by the intrinsic dynamics of the system. Dyadic entrainment was expected to occur more strongly and more consistently around attractor regions, located around the in-phase and anti-phase points of the metronomes’ cycles21. With our approach, we achieved to return an empirical ‘attractor landscape’34 and to contrast it across conditions of visual and auditory coupling. Our findings strongly point towards a modality-dependency of dyadic entrainment (see Fig. 5), which in turn is reflected in the individual synchronization with the metronomes (see Fig. 6). The interaction effect of Coupling and Modality on the linear and quadratic terms of Time statistically confirms that the temporal modulation of the recurrence score is an exclusive property of visually-mediated coupling, with a remarkable consistency across dyads. In such condition, the recurrence score exhibits maximum values around the in-phase point at the start of the metronomes cycle, gradually decreases as the dyad leaves the attractor zone, reaches its minimum passed the anti-phase point, and finally increases towards maximum values as it enters the in-phase zone at the end of the cycle. On the other hand, the individual phase error exhibits a more symmetrical parabolic modulation, with only interaction on the quadratic term of Time reaching significance.

Selectively for the recurrence score, we report here an effect of directionality in the visually coupled condition: dyads slowly de-couple as they leave the in-phase point, whereas they couple more rapidly as they approach the same point. The negative slope of the linear term indicates that dyads tend to be more coupled in the first portion of the cycle, namely before passing the anti-phase point. The observation is suggestive of a hysteresis effect, which characterizes transitions from one attractor to another in dynamical systems12. In interlimb and interpersonal coordination, non-linear transitions from anti-phase to in-phase coordinative modes are typically observed as the system is pushed towards instability18,35,36. This can be experimentally induced in an experimental setting by increasing the frequency of the rhythmic units as control parameter. In our paradigm, we could appreciate that increasing the metronomes’ relative phase away from the in-phase point, the attractor exerted a long-lasting ‘pull-back’ effect. This was followed by a more abrupt ‘push-forward’ when transitioning over the anti-phase point, as the expected relative phase decreased. Even though the metronomes’ frequencies were not manipulated, their continuous de-phasing resulted in repeated transitions from in-phase to anti-phase regions and vice versa, as the dyad explored the attractor landscape.

In condition of auditory coupling, the recurrence score did not exhibit any significant variation over time: the measure was locally independent from the proximity of attractor points. Nevertheless, we still observed a significant global increase of recurrences compared to the baseline. Previous evidence shows that phase shifts in auditory sequences elicit a phase-correction response in participants instructed to tap in synchrony to isochronous sequence of flashing lights37. Auditory-driven phase corrections are prone to occur even when shifts are highly irregular38 and at different relative phases values, with overall high interindividual variability39. Similarly, in the context of our paradigm, sounds generated by the partner exerted attraction on the self-generated taps over the whole course of the metronomes’ cycle, regardless of the expected relative-phase value.

Finally, both control conditions resulted in a flat horizontal line below the average of the respective coupled conditions, as expected. It is worth noting the higher variability in Audio conditions, arguably due to the task of synchronizing to visual metronomes. From lower recurrence score in the control condition, we can conclude that dyads performed globally worse in synchronizing to visual cues as compared to auditory cues. This is in line with previous evidence on modality-dependent synchronization skills40,41, and backed by our concomitant observation of higher phase errors when visual metronomes were presented. Taken together, our findings support our hypothesis of modality-dependent dynamics, suggesting that auditorily-mediated entrainment is considerably less sensitive to the basin of attraction when compared to its visual counterpart.

Although interpersonal coordination can be described at the collective level as a self-organizing process12,27, humans ultimately entrain to each other via mutual adaptation of individual action-perception loops19,20. Therefore, an exhaustive account for dyadic entrainment should be capable of bridging the two levels, considering how coordination dynamics depend on the processing of information available in the interaction. Such link is foundational for translating dynamical control principles from intra- to inter-personal levels18. Recently, predictive coding42,43,44 was put forward as a plausible theoretical account for coordination dynamics in dyadic behavior45. The theory states that the brain is constantly engaged in an optimization process based on Bayesian inference: information sampled from the environment is compared to prior evidence, and the optimization consists of minimizing prediction errors. In the context of interpersonal coordination, such a putative mechanism could underpin the overt manifestations of dyadic entrainment by engaging the action systems of the rhythmic units involved. Let us consider the prediction error as the phase difference between executed and observed movements: as both units minimize the mismatch between the representations of observed and own motor behavior, the dyadic system tends towards a collective minimization of free energy in a stable attractor state45. Such interpretation of the dyadic attractor is supported by our results in the context of visually-mediated coupling: as the relative phase between partners increases, the cooperation process between rhythmic units loses strength in favor of competition. In other terms, the tendency of the partners to lock in a stable coordinative state depends on the energy required to correct for the prediction error. As the error increases, the correction becomes more effortful and it becomes easier for the units to pursue independent behaviors.

Crucially, such explanation does not hold for auditorily-mediated coupling. As distinct sensory systems have their unique interface with motor and timing systems41,46,47, it is necessary to interpret the results in light of individual information processing. There is arguably a crucial difference in the nature of the perceptual information available to the partners across different modalities. When the coupling is visually mediated, a stream of kinematic information is continuously sampled from the partner's actions48, such that the predictive models can be constantly updated at every stage of movement execution. On the other hand, in case of auditory coupling the prediction of the partner's tap is solely based on temporal information about the previous taps, since partners do not have online access to the reciprocal kinematic information. In such condition, the cooperation process still dominates the interaction but no consistent pattern emerges in terms of dynamics as quantified by our method. We propose that the availability of kinematic information through informational coupling is a crucial discriminant that might explain the observed modality-dependence of dyadic entrainment. The idea that prior evidence is built differently across modalities depending on availability of sensory information is consistent with a predictive account for dyadic entrainment.

A final question arises spontaneous: how would the dyad behave if kinematic information was embedded in a sound envelope, and conveyed to the partners via the auditory channel? One limitation of the current work is that we cannot conclude whether dyadic entrainment is modality-dependent in the strict sense or rather dependent on availability of kinematic information. Given the central role attributed to kinematics in predictive accounts for action perception48, it is of particular interest to investigate whether continuous sonification of movement parameters49 would support the same dynamics observed in presence of visual coupling. Previous work shows that presenting visual stimuli in a way that indicates movement over time, e.g., apparent hand motion50,51 or a bouncing ball40,46,52, behavioral outcomes of predictive entrainment are improved by the continuous stream of information. We hypothesize that a movement sonification strategy based on this principle could translate the kinematic advantage into auditorily-mediated dyadic interactions, resembling the dynamics here described in condition of visual coupling.

The findings and the questions raised from the present study might have relevant implications for optimizing interventions aimed at rehabilitating, teaching or training sensorimotor functions. Fundamental knowledge about dyadic interactions is what will ultimately inform such optimization. The evidence from the present study points at the advantages of the physical presence of a teacher (or a therapist, or a trainer) for guiding rhythmic movements, possibly integrating kinematic information via sonification strategies49.

In conclusion, we want to highlight that the ‘drifting metronomes’ procedure is meant as a methodological contribution for the investigation of interpersonal coordination, in the hope that the scientific community can build upon it with new research questions and experimental designs. We point at its adoption with simultaneous dual-electroencephalography recordings53, computational simulations54 and replication on pathological populations55,56,57 as potential sources of insight into the fundamentals of dyadic interactions.

Methods

Participants

Twenty-eight right handed participants took part in the study (18 females, 10 males; mean age = 29.07 years, standard deviation = 5.73 years). They were randomly paired in fourteen (N = 14) gender-matched dyads, in order to control for any gender bias in the interaction. None of them had history of neurological, major medical or psychiatric disorders. All of them declared not to be professional musicians upon recruitment, although some of them had musical experience. With the exception of one dyad, all participants declared not to know the assigned partner from before the experiment. The experiment was approved by the Ethics Committee of Ghent University (Faculty of Arts and Philosophy) and informed written consent was obtained from each participant. A 15€ coupon was given to all participants as economic compensation for their time.

Experimental apparatus and procedure

The two partners were sitting across the same table, facing each other. Chairs were provided with an armrest in order to exclude any tactile or proprioceptive coupling due to the table’s vibrations. Each partner was assigned to one pad and instructed to tap on it with the right index finger synchronizing with a metronome. As represented and described in Fig. 1, the metronome was either an auditory cue or a flickering light, depending on the experimental condition. Each partner was cued with a different tempo (1.67 Hz and 1.64 Hz). Aligning the start of the two tracks, the relative phase between the metronomes started at 0º and steadily increased in regular steps of 5.6º. A full cycle took 39 s to be completed. 10 consecutive cycles were performed in each experimental condition. In conditions of informational coupling, participants were instructed to ignore the partner and tap along with the assigned metronome.

A M-Audio M-Track 8 soundcard was used to route independent audio channels to each participant via in-ear plugs. Ableton Live 10 was adopted as main interface for stimuli presentation, to sonify the participants’ finger-taps and to route them in real-time. The same MIDI tracks were used to control the metronomes across conditions, by either triggering an audio sample or a flickering LED. Volume was adapted to every participant before the start of the experiment, and pink noise was regulated up to the point of suppressing any sound other than the auditory stimulation presented via Ableton. Participants were monitored on-line by means of a USB-camera, to make sure they complied with the instructions. No dyad was excluded from the analyses.

A Teensy 3.2 microcontroller was used as serial/MIDI hub in the setup. It was used to detect tapping onsets with 1 ms resolution, based on the analog input from strain gauge pressure sensors installed inside the pads. Every time a metronome onset was presented to a participant, a MIDI message was sent to the Teensy device to log the metronomes timeseries and to control the voltage of the LEDs when needed.

Simultaneous EEG recordings were performed from both partners of the dyads during the whole experiment, but such data were not presented in the present paper. Additional data were collected prior and during the experiment. Prior to the experiment, demographical data were collected; the Edinburgh inventory 58 was administered to assess the right handedness of the participants; the Interpersonal Reactivity Index (IRI) was administered as self-report of empathy and its subscales 59; the preferred spontaneous tempo was calculated via 30 s of self-paced finger-tapping on a dedicated smartphone app (www.beatsperminuteonline.com). During the breaks between experimental conditions, all participants provided subjective self-reports on different aspects of the task by expressing agreement on a scale from 1 (“Completely disagree”) to 7 (“Completely agree”) with a custom-made battery of 11 Likert items. Data collected from the questionnaires were not presented in the present paper.

Data analysis

Pre-processing

Our raw data consisted of timestamps logged from the Teensy controller with 1 ms resolution for 10 consecutive metronome cycles (390 s in total), and an associated ID for each partner and each metronome. As only form of cleaning, we removed onsets occurring < 350 ms from the previous one: false positives could occasionally be recorded when a participant pushed the pad for too long or accidentally laid the hand on it. The cleaned timeseries were then interpolated with a sine function at 1 kHz sampling rate, providing an estimate of the oscillators’ positions on its cycle with a temporal resolution of 1 ms. Conceptually, the choice of sinusoidal interpolation was supported by recent work on modelling of systems of coupled oscillators in joint finger-tapping studies54. Operationally, it guaranteed that all timeseries match in size without any loss of data, which was a requirement for the next steps of our analysis. Timeseries were finally downsampled by a factor of 40 to make the computation of RPs computationally feasible. Different orders of downsampling were tested to make sure that the results do not depend on this choice.

Phase-space reconstruction

The optimal parameters for the time-delayed embedding were computed for each participant, for the time course of each single metronomes cycle in all experimental conditions. The resulting mean value across all participants was applied to all individual instances. The reason for this approach is that in order to compare the rate of recurrences across conditions at the group level, the embedding procedure must be consistent across participants (e.g., see60, for an example of parameter selections in a factorial design). We first selected the delay tau of the timeseries (τ) as the first local minimum mutual information index61 as a function of delay. This approach minimized the timeseries self-similarity, extracting nearly orthogonal components and preventing the attractor from folding over itself62. The mean value of τ resulted to be 7. Next, we determined the number of embedding dimensions m with the method of false nearest neighbor63: we progressively unfolded the time series into higher dimensions until the data points did not overlap spuriously, finding a mean m = 3. Finally, in order to determine a maximum threshold for counting two neighboring points as recurrent, we selected a radius of 10% of the maximal phase-space diameter22.

Joint recurrence plots (JRPs)

Individual recurrence plots where computed as follows:

where ε is the neighborhood threshold, ‖ ⋅ ‖ is the Euclidean norm, and Θ is the Heaviside step function. A square matrix was returned from each shadow-manifold in the phase-space, containing 1s for all the instances where the distance ‖ · ‖ was smaller than the threshold ε, and 0s for the remaining elements. The distance was computed with the method of maximum norm. A joint recurrence plot (JRP) was computed for each dyad by overlapping the individual JRPs of the partners pair-wise, and keeping as 1 only the instances where both plots contained a recurrence. The computation of the JRPs was carried out with the crp toolbox for Matlab22.

The 10 trials (i.e., the metronome cycles) of each experimental condition were aggregated by summing the respective JRPs such that every cell of the 2-D matrix contained the count of times that a recurrence occurred in the same point of the cycle. Finally, we looped over the columns of the matrix summing all the counts contained in the rows, obtaining a 1-D vector recurrence scores which represented a density measure of the instances of coupled behavior over the course of the cycle. The scale of the recurrence score depends on the size of the JRPs and in turn on the embedding procedure, which made it necessary to set the same parameters for the whole sample. In order to stabilize our response variable and avoid over-sampling in view of our statistical model, the resulting timeseries were divided in 64 segments averaging the recurrence score. For the division, we chose the intervals determined by the steps of the slower metronome, as they provided an intrinsic regular subdivision of the experimental runs. All the steps presented so far were carried out in Matlab (see Fig. 2, for a schematic representation). Our approach was preferred over the “windowed” version for JRQA, for the latter would low-pass filter our timeseries and make it impossible to interpret the results. The resulting phase-shift would be dependent on the choice of the window size, hence not reliable for detecting attractor points over the landscape.

Individual rhythmic behavior

For every participant, phase angle timeseries were computed by linearly interpolating the finger-tapping onsets as a rampwave at 1 kHz sampling rate, and scaling it by 2π. The same procedure was repeated for the metronomes’ onsets. Phase error was calculated as the absolute difference between the participants’ and the assigned metronomes’ timeseries, wrapped to π and averaged within 64 bins like the recurrence score timeseries. This measure was used as response variable to assess the individual rhythmic behavior of the participants, complementing our findings at the level of collective behavior.

Statistical models

The recurrence score was used as response variable in a mixed-effects model with Modality and Coupling as factors, and Time as a continuous predictor expressed with the indexes of the metronome’s steps (from 1 to 64). Given the non-linear time course observed in the 'Visual Coupling' condition, we adopted the method of orthogonal polynomials25 including linear and quadratic functions of Time into our model (see Fig. 5). Dyads and interactions between Dyads and factors were modelled as random effects26 to account for the individual variability in synchronization skills and individual susceptibility to coupling across the experimental manipulations. The formula of the full model (up to the 2nd polynomial order) is the following:

The same model was fitted to the phase error of individual participants. Here, Subjects and Dyads were modelled as random effects.

Statistical analyses were carried out in R (version 4.0.3); model fitting was performed with the lme4 package64. All methods were carried out in accordance with relevant guidelines and regulations, based on the references provided in the respective paragraphs.

Data availability

All data generated during this study are included in this published article (and its Supplementary materials), together with the scripts used to analyze them.

References

Zhang, M., Kelso, J. A. S. & Tognoli, E. Critical diversity: Divided or united states of social coordination. PLoS One 13, e0193843 (2018).

van Ulzen, N. R., Lamoth, C. J. C., Daffertshofer, A., Semin, G. R. & Beek, P. J. Characteristics of instructed and uninstructed interpersonal coordination while walking side-by-side. Neurosci. Lett. 432, 88–93 (2008).

Nessler, J. A. & Gilliland, S. J. Kinematic analysis of side-by-side stepping with intentional and unintentional synchronization. Gait Posture 31, 527–529 (2010).

Miyata, K., Varlet, M., Miura, A., Kudo, K. & Keller, P. E. Modulation of individual auditory-motor coordination dynamics through interpersonal visual coupling. Sci. Rep. 7, 16220 (2017).

Néda, Z., Ravasz, E., Brechet, Y., Vicsek, T. & Barabási, A. L. The sound of many hands clapping. Nature 403, 849–850 (2000).

Clayton, M., Dueck, B. & Leante, L. Experience and meaning in music performance. (Oxford University Press, 2013).

Schmidt, R. C. & Richardson, M. J. Dynamics of Interpersonal Coordination. in Coordination: neural, behavioral and social dynamics (eds. Fuchs, A. & Jirsa, V. K.) 281–308 (Springer Berlin Heidelberg, 2008).

Keller, P. Ensemble performance: Interpersonal alignment of musical expression. in Expressiveness in music performance: Empirical approaches across styles and cultures , (pp (ed. Fabian, D.) vol. 383 260–282 (Oxford University Press, Oxford, 2014).

Phillips-Silver, J., Aktipis, C. A. & Bryant, G. A. The ecology of entrainment: Foundations of coordinated rhythmic movement. Music Percept. 28, 3–14 (2010).

Schöner, G. Learning and recall in a dynamic theory of coordination patterns. Biol. Cybern. 62, 39–54 (1989).

de Guzman, G. C. & Tognoli, E. The human dynamic clamp as a paradigm for social interaction. Proceedings of the (2014).

Kelso, J. A. S. Dynamic patterns: the self-organization of brain and behavior. (MIT Press, 1995).

Scott Kelso, J. A., Engstrom, D. A. & Engstrom, D. The Complementary Nature. (MIT Press, 2006).

Von Holst, E. The behavioural physiology of animals: the selected papers of Erich von Holst. (Methuen, 1973).

Schmidt, R. C. & Turvey, M. T. Phase-entrainment dynamics of visually coupled rhythmic movements. Biol. Cybern. 70, 369–376 (1994).

Novembre, G., Mitsopoulos, Z. & Keller, P. E. Empathic perspective taking promotes interpersonal coordination through music. Sci. Rep. 9, 12255 (2019).

Tschacher, W., Rees, G. M. & Ramseyer, F. Nonverbal synchrony and affect in dyadic interactions. Front. Psychol. 5, 1323 (2014).

Schmidt, R. C., Carello, C. & Turvey, M. T. Phase transitions and critical fluctuations in the visual coordination of rhythmic movements between people. J. Exp. Psychol. Hum. Percept. Perform. 16, 227–247 (1990).

Konvalinka, I., Vuust, P., Roepstorff, A. & Frith, C. D. Follow you, follow me: Continuous mutual prediction and adaptation in joint tapping. Q. J. Exp. Psychol. 63, 2220–2230 (2010).

Heggli, O. A., Konvalinka, I., Kringelbach, M. L. & Vuust, P. Musical interaction is influenced by underlying predictive models and musical expertise. Sci. Rep. 9, 11048 (2019).

Haken, H., Kelso, J. A. & Bunz, H. A theoretical model of phase transitions in human hand movements. Biol. Cybern. 51, 347–356 (1985).

Marwan, N., Carmen Romano, M., Thiel, M. & Kurths, J. Recurrence plots for the analysis of complex systems. Phys. Rep. 438, 237–329 (2007).

Demos, A. P. & Chaffin, R. Removing obstacles to the analysis of movement in musical performance: Recurrence, mixed models, and surrogates. The Routledge companion to embodied music interaction 341–349 (2017).

Demos, A. P., Chaffin, R. & Logan, T. Musicians body sway embodies musical structure and expression: A recurrence-based approach. Music Sci. 22, 244–263 (2018).

Mirman, D. Growth curve analysis and visualization using R. (CRC Press, 2017).

Barr, D. J., Levy, R., Scheepers, C. & Tily, H. J. Random effects structure for confirmatory hypothesis testing: Keep it maximal. J. Mem. Lang. 68, 255–278 (2013).

Tognoli, E., Zhang, M., Fuchs, A., Beetle, C. & Kelso, J. A. S. Coordination dynamics: A foundation for understanding social behavior. Front. Hum. Neurosci. 14, 317 (2020).

Lorås, H., Aune, T. K., Ingvaldsen, R. & Pedersen, A. V. Interpersonal and intrapersonal entrainment of self-paced tapping rate. PLoS One 14, e0220505 (2019).

Okano, M., Shinya, M. & Kudo, K. Paired synchronous rhythmic finger tapping without an external timing cue shows greater speed increases relative to those for solo tapping. Sci. Rep. 7, 43987 (2017).

Kimura, K., Ogata, T. & Miyake, Y. Effects of a partner’s tap intervals on an individual’s timing control increase in slow-tempo dyad synchronisation using finger-tapping. Sci. Rep. 10, 8237 (2020).

Noy, L., Dekel, E. & Alon, U. The mirror game as a paradigm for studying the dynamics of two people improvising motion together. Proc. Natl. Acad. Sci. USA 108, 20947–20952 (2011).

Noy, L., Levit-Binun, N. & Golland, Y. Being in the zone: physiological markers of togetherness in joint improvisation. Front. Hum. Neurosci. 9, 187 (2015).

Varlet, M., Nozaradan, S., Nijhuis, P. & Keller, P. E. Neural tracking and integration of “self” and “other” in improvised interpersonal coordination. Neuroimage 206, 116303 (2020).

Tuller, B. & Kelso, J. A. Environmentally-specified patterns of movement coordination in normal and split-brain subjects. Exp. Brain Res. 75, 306–316 (1989).

Kelso, J. A. S., Scholz, J. P. & Schöner, G. Nonequilibrium phase transitions in coordinated biological motion: critical fluctuations. Phys. Lett. A 118, 279–284 (1986).

Schmidt, R. C. & O’Brien, B. Evaluating the Dynamics of Unintended Interpersonal Coordination. Ecol. Psychol. 9, 189–206 (1997).

Repp, B. H. & Penel, A. Auditory dominance in temporal processing: new evidence from synchronization with simultaneous visual and auditory sequences. J. Exp. Psychol. Hum. Percept. Perform. 28, 1085–1099 (2002).

Kato, M. & Konishi, Y. Auditory dominance in the error correction process: a synchronized tapping study. Brain Res. 1084, 115–122 (2006).

Repp, B. H. & Penel, A. Rhythmic movement is attracted more strongly to auditory than to visual rhythms. Psychol. Res. 68, 252–270 (2004).

Iversen, J. R., Patel, A. D., Nicodemus, B. & Emmorey, K. Synchronization to auditory and visual rhythms in hearing and deaf individuals. Cognition 134, 232–244 (2015).

Comstock, D. C., Hove, M. J. & Balasubramaniam, R. Sensorimotor Synchronization With Auditory and Visual Modalities: Behavioral and Neural Differences. Front. Comput. Neurosci. 12, 53 (2018).

Friston, K. A theory of cortical responses. Philos. Trans. R. Soc. Lond. B Biol. Sci. 360, 815–836 (2005).

Friston, K. The free-energy principle: a unified brain theory?. Nat. Rev. Neurosci. 11, 127–138 (2010).

Friston, K., Mattout, J. & Kilner, J. Action understanding and active inference. Biol. Cybern. 104, 137–160 (2011).

Koban, L., Ramamoorthy, A. & Konvalinka, I. Why do we fall into sync with others? Interpersonal synchronization and the brain’s optimization principle. Soc. Neurosci. 14, 1–9 (2019).

Hove, M. J., Iversen, J. R., Zhang, A. & Repp, B. H. Synchronization with competing visual and auditory rhythms: bouncing ball meets metronome. Psychol. Res. 77, 388–398 (2013).

Hove, M. J., Fairhurst, M. T., Kotz, S. A. & Keller, P. E. Synchronizing with auditory and visual rhythms: an fMRI assessment of modality differences and modality appropriateness. Neuroimage 67, 313–321 (2013).

Kilner, J. M., Friston, K. J. & Frith, C. D. Predictive coding: an account of the mirror neuron system. Cogn. Process. 8, 159–166 (2007).

Maes, P.-J., Buhmann, J. & Leman, M. 3Mo: A Model for Music-Based Biofeedback. Front. Neurosci. 10, 548 (2016).

Hove, M. J. & Keller, P. E. Spatiotemporal relations and movement trajectories in visuomotor synchronization. Music Percept. 28, 15–26 (2010).

Hove, M. J., Spivey, M. J. & Krumhansl, C. L. Compatibility of motion facilitates visuomotor synchronization. J. Exp. Psychol. Hum. Percept. Perform. 36, 1525–1534 (2010).

Gan, L., Huang, Y., Zhou, L., Qian, C. & Wu, X. Synchronization to a bouncing ball with a realistic motion trajectory. Sci. Rep. 5, 11974 (2015).

Liu, D. et al. Interactive brain activity: Review and progress on EEG-based hyperscanning in social interactions. Front. Psychol. 9, 1862 (2018).

Heggli, O. A., Cabral, J., Konvalinka, I., Vuust, P. & Kringelbach, M. L. A Kuramoto model of self-other integration across interpersonal synchronization strategies. PLoS Comput. Biol. 15, e1007422 (2019).

Varlet, M. et al. Impairments of social motor coordination in schizophrenia. PLoS One 7, e29772 (2012).

Marsh, K. L. et al. Autism and social disconnection in interpersonal rocking. Front. Integr. Neurosci. 7, 4 (2013).

Dermody, N. et al. Uncovering the neural bases of cognitive and affective empathy deficits in Alzheimer’s disease and the behavioral-variant of frontotemporal dementia. J. Alzheimers. Dis. 53, 801–816 (2016).

Oldfield, R. C. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113 (1971).

Davis, M. H. & Others. A multidimensional approach to individual differences in empathy. (1980).

Afsar, O., Tirnakli, U. & Marwan, N. Recurrence Quantification Analysis at work: Quasi-periodicity based interpretation of gait force profiles for patients with Parkinson disease. Sci. Rep. 8, 9102 (2018).

Fraser, A. M. & Swinney, H. L. Independent coordinates for strange attractors from mutual information. Phys. Rev. A Gen. Phys. 33, 1134–1140 (1986).

Bradley, E. & Kantz, H. Nonlinear time-series analysis revisited. Chaos 25, 097610 (2015).

Rulkov, N. F., Sushchik, M. M., Tsimring, L. S. & Abarbanel, H. D. Generalized synchronization of chaos in directionally coupled chaotic systems. Phys. Rev. E Stat. Phys. Plasmas Fluids Relat. Interdiscip. Topics 51, 980–994 (1995).

Bates, D., Mächler, M., Bolker, B. & Walker, S. Fitting linear mixed-effects models using lme4. arXiv [stat.CO] (2014).

Acknowledgements

The present study was funded by Bijzonder Onderzoeksfonds (BOF) from Ghent University (Belgium). The hardware of the recording device was built by Ivan Schepers. Figure 1 was illustraded by Kevin Smink. Professor Peter Vuust and Dr. Ole Adrian Heggli from the Center for Music in the Brain (Aarhus University, Denmark) provided precious advice and feedback on the experimental design.

Author information

Authors and Affiliations

Contributions

M.R. conceptualized, designed and implemented the study. P.J.M. supervised and contributed to study design and data processing. M.L. supervised and contributed to study design and statistical modelling. M.R. collected, processed and analyzed the data. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rosso, M., Maes, P.J. & Leman, M. Modality-specific attractor dynamics in dyadic entrainment. Sci Rep 11, 18355 (2021). https://doi.org/10.1038/s41598-021-96054-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-96054-8

This article is cited by

-

Social-Motor Coordination Between Peers: Joint Action Developmental Trajectories in ASD and TD

Journal of Autism and Developmental Disorders (2024)

-

Knee flexion of saxophone players anticipates tonal context of music

npj Science of Learning (2023)

-

Liking as a balance between synchronization, complexity and novelty

Scientific Reports (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.