Abstract

Individual differences in motor learning ability are widely acknowledged, yet little is known about the factors that underlie them. Here we explore whether movement-to-movement variability in motor output, a ubiquitous if often unwanted characteristic of motor performance, predicts motor learning ability. Surprisingly, we found that higher levels of task-relevant motor variability predicted faster learning both across individuals and across tasks in two different paradigms, one relying on reward-based learning to shape specific arm movement trajectories and the other relying on error-based learning to adapt movements in novel physical environments. We proceeded to show that training can reshape the temporal structure of motor variability, aligning it with the trained task to improve learning. These results provide experimental support for the importance of action exploration, a key idea from reinforcement learning theory, showing that motor variability facilitates motor learning in humans and that our nervous systems actively regulate it to improve learning.

References

Churchland, M.M., Afshar, A. & Shenoy, K.V. A central source of movement variability. Neuron 52, 1085–1096 (2006).

Jones, K.E., Hamilton, A.F.C. & Wolpert, D.M. Sources of signal-dependent noise during isometric force production. J. Neurophysiol. 88, 1533–1544 (2002).

Schmidt, R.A., Zelaznik, H., Hawkins, B., Frank, J.S. & Quinn, J.T. Jr. Motor-output variability: a theory for the accuracy of rapid motor acts. Psychol. Rev. 47, 415–451 (1979).

Stein, R.B., Gossen, E.R. & Jones, K.E. Neuronal variability: noise or part of the signal? Nat. Rev. Neurosci. 6, 389–397 (2005).

Osborne, L.C., Lisberger, S.G. & Bialek, W. A sensory source for motor variation. Nature 437, 412–416 (2005).

Harris, C.M. & Wolpert, D.M. Signal-dependent noise determines motor planning. Nature 394, 780–784 (1998).

van Beers, R.J., Baraduc, P. & Wolpert, D.M. Role of uncertainty in sensorimotor control. Phil. Trans. R. Soc. Lond. B 357, 1137–1145 (2002).

Scholz, J.P. & Schöner, G. The uncontrolled manifold concept: identifying control variables for a functional task. Exp. Brain Res. 126, 289–306 (1999).

Todorov, E. Optimality principles in sensorimotor control. Nat. Neurosci. 7, 907–915 (2004).

Todorov, E. & Jordan, M.I. Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 5, 1226–1235 (2002).

O'Sullivan, I., Burdet, E. & Diedrichsen, J. Dissociating variability and effort as determinants of coordination. PLoS Comput. Biol. 5, e1000345 (2009).

Charlesworth, J.D., Warren, T.L. & Brainard, M.S. Covert skill learning in a cortical-basal ganglia circuit. Nature 486, 251–255 (2012).

Ölveczky, B.P., Andalman, A.S. & Fee, M.S. Vocal experimentation in the juvenile songbird requires a basal ganglia circuit. PLoS Biol. 3, e153 (2005).

Kao, M.H., Doupe, A.J. & Brainard, M.S. Contributions of an avian basal ganglia-forebrain circuit to real-time modulation of song. Nature 433, 638–643 (2005).

Tumer, E.C. & Brainard, M.S. Performance variability enables adaptive plasticity of 'crystallized' adult birdsong. Nature 450, 1240–1244 (2007).

Sutton, R.S. & Barto, A.G. Introduction to Reinforcement Learning (MIT Press, 1998).

Kaelbling, L.P., Littman, M.L. & Moore, A.W. Reinforcement learning: a survey. J. Artif. Intell. Res. 4, 237–285 (1996).

Roberts, S. & Gharib, A. Variation of bar-press duration: where do new responses come from? Behav. Processes 72, 215–223 (2006).

Stahlman, W.D. & Blaisdell, A.P. The modulation of operant variation by the probability, magnitude, and delay of reinforcement. Learn. Motiv. 42, 221–236 (2011).

Huang, V.S., Haith, A., Mazzoni, P. & Krakauer, J.W. Rethinking motor learning and savings in adaptation paradigms: model-free memory for successful actions combines with internal models. Neuron 70, 787–801 (2011).

Scheidt, R.A., Reinkensmeyer, D.J., Conditt, M.A., Rymer, W.Z. & Mussa-Ivaldi, F.A. Persistence of motor adaptation during constrained, multi-joint, arm movements. J. Neurophysiol. 84, 853–862 (2000).

Sing, G.C., Joiner, W.M., Nanayakkara, T., Brayanov, J.B. & Smith, M.A. Primitives for motor adaptation reflect correlated neural tuning to position and velocity. Neuron 64, 575–589 (2009).

Smith, M.A., Ghazizadeh, A. & Shadmehr, R. Interacting adaptive processes with different timescales underlie short-term motor learning. PLoS Biol. 4, e179 (2006).

Sing, G.C. & Smith, M.A. Reduction in learning rates associated with anterograde interference results from interactions between different timescales in motor adaptation. PLoS Comput. Biol. 6, e1000893 (2010).

Joiner, W.M. & Smith, M.A. Long-term retention explained by a model of short-term learning in the adaptive control of reaching. J. Neurophysiol. 100, 2948–2955 (2008).

Bays, P.M., Flanagan, J.R. & Wolpert, D.M. Interference between velocity-dependent and position-dependent force-fields indicates that tasks depending on different kinematic parameters compete for motor working memory. Exp. Brain Res. 163, 400–405 (2005).

Diedrichsen, J., Criscimagna-Hemminger, S.E. & Shadmehr, R. Dissociating timing and coordination as functions of the cerebellum. J. Neurosci. 27, 6291–6301 (2007).

Joiner, W.M., Ajayi, O., Sing, G.C. & Smith, M.A. Linear hypergeneralization of learned dynamics across movement speeds reveals anisotropic, gain-encoding primitives for motor adaptation. J. Neurophysiol. 105, 45–59 (2011).

Conditt, M.A. & Mussa-Ivaldi, F.A. Central representation of time during motor learning. Proc. Natl. Acad. Sci. USA 96, 11625–11630 (1999).

Andalman, A.S. & Fee, M.S. A basal ganglia-forebrain circuit in the songbird biases motor output to avoid vocal errors. Proc. Natl. Acad. Sci. USA 106, 12518–12523 (2009).

Warren, T.L., Tumer, E.C., Charlesworth, J.D. & Brainard, M.S. Mechanisms and time course of vocal learning and consolidation in the adult songbird. J. Neurophysiol. 106, 1806–1821 (2011).

Ali, F., Otchy, T.M., Pehlevan, C., Fantana, A.L., Burak, Y. & Ölveczky, B.P. The basal ganglia is necessary for learning spectral, but not temporal, features of birdsong. Neuron 80, 494–506 (2013).

Gonzales-Castro, L.N., Hemphill, M. & Smith, M.A. Learning to learn: environmental consistency modulates motor adaptation rates. Proc. Ann. Symp.: Advances in Comp. Motor Control 7 (2008).

Frank, M.J., Doll, B.B., Oas-Terpstra, J. & Moreno, F. Prefrontal and striatal dopaminergic genes predict individual differences in exploration and exploitation. Nat. Neurosci. 12, 1062–1068 (2009).

Della-Maggiore, V., Scholz, J., Johansen-Berg, H. & Paus, T. The rate of visuomotor adaptation correlates with cerebellar white-matter microstructure. Hum. Brain Mapp. 30, 4048–4053 (2009).

Tomassini, V. et al. Structural and functional bases for individual differences in motor learning. Hum. Brain Mapp. 32, 494–508 (2011).

Rutishauser, U., Ross, I.B., Mamelak, A.N. & Schuman, E.M. Human memory strength is predicted by theta-frequency phase-locking of single neurons. Nature 464, 903–907 (2010).

Berry, S.D. & Thompson, R.F. Prediction of learning rate from the hippocampal electroencephalogram. Science 200, 1298–1300 (1978).

Tamás Kincses, Z. et al. Model-free characterization of brain functional networks for motor sequence learning using fMRI. Neuroimage 39, 1950–1958 (2008).

Tchernichovski, O., Mitra, P.P., Lints, T. & Nottebohm, F. Dynamics of the vocal imitation process: how a zebra finch learns its song. Science 291, 2564–2569 (2001).

Woolley, S.C. & Doupe, A.J. Social context–induced song variation affects female behavior and gene expression. PLoS Biol. 6, e62 (2008).

Doya, K. & Sejnowski, T. A novel reinforcement model of birdsong vocalization learning. Adv. Neural Inf. Process. Syst. 8, 101–108 (1995).

Fiete, I.R., Fee, M.S. & Seung, H.S. Model of birdsong learning based on gradient estimation by dynamic perturbation of neural conductances. J. Neurophysiol. 98, 2038–2057 (2007).

Daw, N.D., Niv, Y. & Dayan, P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat. Neurosci. 8, 1704–1711 (2005).

Thoroughman, K.A. & Shadmehr, R. Learning of action through adaptive combination of motor primitives. Nature 407, 742–747 (2000).

Wagner, M.J. & Smith, M.A. Shared internal models for feedforward and feedback control. J. Neurosci. 28, 10663–10673 (2008).

Sober, S.J., Wohlgemuth, M.J. & Brainard, M.S. Central contributions to acoustic variation in birdsong. J. Neurosci. 28, 10370–10379 (2008).

Mandelblat-Cerf, Y., Paz, R. & Vaadia, E. Trial-to-trial variability of single cells in motor cortices is dynamically modified during visuomotor adaptation. J. Neurosci. 29, 15053–15062 (2009).

Takikawa, Y., Kawagoe, R., Itoh, H., Nakahara, H. & Hikosaka, O. Modulation of saccadic eye movements by predicted reward outcome. Exp. Brain Res. 142, 284–291 (2002).

Ölveczky, B.P., Otchy, T.M., Goldberg, J.H., Aronov, D. & Fee, M.S. Changes in the neural control of a complex motor sequence during learning. J. Neurophysiol. 106, 386–397 (2011).

Jolliffe, I. Principal Component Analysis (John Wiley & Sons Ltd, 2005).

Acknowledgements

We thank G. Sing, J. Brayanov, A. Hadjiosif and L. Clark for help with the analyses and helpful discussions. We thank G. Gabriel and S. Orozco for help with experiments. This work was supported in part by the McKnight Scholar Award, a Sloan Research Fellowship, a grant from the National Institute on Aging (R01 AG041878) to M.A.S. and a McKnight Scholar Award and a grant from the National Institute of Neurological Disorders and Stroke (R01 NS066408) to B.P.Ö.

Author information

Contributions

Y.R.M., B.P.Ö. and M.A.S. designed the reward-based learning experiments. H.G.W. and M.A.S. designed the error-based learning experiments. H.G.W., L.N.G.C. and M.A.S. designed the variability reshaping experiment. H.G.W., Y.R.M. and M.A.S. analyzed the data. Y.R.M., H.G.W., B.P.Ö. and M.A.S. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Integrated supplementary information

Supplementary Figure 1 Task description and scoring functions for experiments 1 and 2

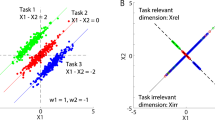

(a) The distribution of one subject's baseline movements represented in terms of their learning levels for Shape-1 and Shape-2, showing higher variability for the Shape-1 projection than the Shape-2 projection. The learning level for each trial was calculated as a function of the projection of the middle segment of the hand path (from 15 mm to 190 mm of each 200 mm movement, Fig. 1b) onto one of the rewarded deflections shown in Fig. 1c. Specifically, the learning level was calculated as follows:

$$z = \frac{\sum_{y} \left[ x(y) - x_0(y) \right] r(y)}{\sum_{y} r(y)^2}$$

Here, x(y) denotes the x-positions of the hand during one trial as a function of y positions, x0(y) denotes the average x positions of the hand from the last 160 trials of the baseline period, and r(y) denotes the rewarded deflection for the particular experiment. Because the two types of rewarded deflections used (Shape-1 and Shape-2) were chosen to be orthogonal, i.e. with zero dot product, we can represent each hand path as a point in a two-dimensional space, the coordinates of which are defined by the learning levels of Shape-1 and Shape-2.

(b) Illustration of the dynamic reward function used in Experiment 1, in which the reward allocation was based on performance relative to the previous 50 trials. This scoring function was defined as follows:

[equation not recoverable from the source text]

where z is the learning level on each trial, η is the median learning level over the last 50 trials, and σ is the standard deviation of the learning level over the last 50 trials. This scoring function dynamically adjusted the scoring from one trial to the next based on the subject's recent performance history. We initially employed the dynamic scoring function as we believed it might facilitate learning; however, we found similar learning rates for the static scoring function described below.

(c) Illustration of the static reward function used in Experiment 2, in which the reward allocation was fixed so that reward feedback would be consistent when comparing Shape-1 and Shape-2 learning. This scoring function was defined as follows:

[equation not recoverable from the source text]

In order to achieve an apples-to-apples comparison between Shape-1 and Shape-2 learning in Experiment 2, we employed a static scoring function that was identical for Shape-1 and Shape-2 learning. This scoring function remained constant throughout the experiment and was therefore unaffected by differences in performance across subjects, allowing a direct comparison between Shape-1 and Shape-2 learning.

For Experiment 1, the root mean square (RMS) amplitude of the rewarded deflections was 3.6 mm. This amplitude was based on pilot data indicating that it allowed for robust learning in a reasonable experiment duration. For Experiment 2, we maintained this same amplitude of the rewarded deflection for Shape-1 and Shape-2 learning to facilitate comparison. According to the learning-level expression above, the learning level (z) can be interpreted as the coefficient on the rewarded deflection that produces the minimum distance between this scaled rewarded deflection and the experimentally observed hand path. Note that this is just a least squares linear regression without an offset term. One can interpret this as the projection of the hand path point onto the rewarded shape normalized by the ideal learning level. The hand paths were recorded at 200 Hz, and were linearly interpolated onto a vector of y-positions every 0.254 mm in order to align the hand path measurements across trials. A learning level of 1 (dimensionless) corresponds to an ideal amount of deflection from baseline performance. Importantly, all subjects were randomly split into two groups: 44 randomly chosen subjects were trained with positive deflections and the remaining 38 were trained with negative deflections (thick vs thin, Fig. 1c), ensuring that any drifts that might occur could not consistently promote or impede learning.
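The description of the learning level as a least squares regression without an offset term can be illustrated with a short sketch. This is not the authors' code: the function names `learning_level` and `interpolate_path` are hypothetical, the example shapes are made up, and the interpolation helper assumes that the recorded y-positions increase strictly along the movement.

```python
def interpolate_path(y_samples, x_samples, y_grid):
    """Linearly interpolate x(y) onto y_grid.

    Assumes y_samples is strictly increasing and y_grid is increasing
    and lies within the range of y_samples (illustrative assumption).
    """
    out = []
    j = 0
    for y in y_grid:
        # Advance to the segment [y_samples[j], y_samples[j + 1]] containing y.
        while j < len(y_samples) - 2 and y_samples[j + 1] < y:
            j += 1
        y0, y1 = y_samples[j], y_samples[j + 1]
        t = (y - y0) / (y1 - y0)
        out.append(x_samples[j] + t * (x_samples[j + 1] - x_samples[j]))
    return out


def learning_level(x, x0, r):
    """Coefficient z minimizing sum((x - x0 - z * r) ** 2), with no offset term."""
    if not (len(x) == len(x0) == len(r)):
        raise ValueError("x, x0 and r must be sampled on the same y grid")
    numerator = sum((xi - x0i) * ri for xi, x0i, ri in zip(x, x0, r))
    denominator = sum(ri * ri for ri in r)
    if denominator == 0:
        raise ValueError("the rewarded deflection r must be non-zero")
    return numerator / denominator


# Made-up orthogonal shapes (zero dot product), standing in for Shape-1 and Shape-2.
shape_1 = [1.0, 1.0, 1.0, 1.0]
shape_2 = [1.0, -1.0, 1.0, -1.0]
baseline = [0.0, 0.0, 0.0, 0.0]
# A hand path that deviates from baseline by 0.5 * shape_1 + 0.2 * shape_2.
path = [0.5 * a + 0.2 * b for a, b in zip(shape_1, shape_2)]
z1 = learning_level(path, baseline, shape_1)
z2 = learning_level(path, baseline, shape_2)
```

Because the two example shapes are orthogonal, each learning level recovers its own coefficient independently of the other, which is what allows a hand path to be plotted as a point in the two-dimensional learning-level space.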

Supplementary Figure 2 Learning rate correlates with early task-relevant variability for all three reward-based learning datasets from Experiment 1 and 2

In the main text we examined the relationship between baseline variability and early learning, redrawn in panel (a) above. However, it would seem that a more direct relationship between variability and learning rate might be observed if we compared variability during the early exposure to learning. Although this relationship is present in our data (see panel (b), which shows scatterplots illustrating the inter-individual correlations between early task-relevant variability and early learning in the three datasets of our reward-based learning experiments; each indicates a significant positive relationship) and is seemingly more straightforward than the relationship between baseline variability and early learning, we avoided making this comparison as a primary analysis for technical reasons. In particular, directed learning during early exposure could easily be conflated with the estimate of variability. Thus, we looked at baseline variability before training in order to compare a “clean” estimate of variability with learning.

In order to examine whether learning rate correlates with variability during early learning, in panel (b) we took advantage of the fact that learning and variability have largely separable frequency content, as learning typically occurs on a slower time scale than trial-to-trial motor noise. Accordingly, we measured early task-relevant variability by computing the variance of the learning curve after running it through a high-pass filter. In contrast, we measured early learning by averaging the learning curve after running it through the complementary low-pass filter. For the filters here we used a smoothing kernel size of 25 trials (equivalently, a cutoff frequency of 1/25 trial⁻¹). As in the main text, early learning was defined by the first 125 trials of learning for Experiment 1, and the first 800 trials for Experiment 2. This analysis demonstrates a significant positive relationship between early learning and variability for this particular cutoff frequency. Notably, in 2 out of 3 cases early learning is more strongly correlated with variability during the early learning period than with baseline variability.

To determine whether the particular choice of cutoff frequency was crucial for demonstrating a significant relationship, we explored the relationship between learning rate and early variability under different cutoff frequencies. Panel (c) presents the strength of the relationships between early variability and learning for a range of possible cutoff frequencies, showing that there is a significant relationship regardless of the choice of cutoff frequency. The blue curves depict the R² of the relationship for different cutoff frequencies, and the green curves depict the p-values. For reference, the dotted green line denotes p=0.01. Places where the solid green line is below the p=0.01 dotted line indicate smaller, more significant p-values. All correlations were positive. Taken together, this analysis suggests that early variability drives early learning in the reward-based learning experiment.
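The frequency-based separation of learning and variability described here can be sketched as follows. The text specifies a 25-trial smoothing kernel and complementary low-pass and high-pass filters but not the kernel shape, so this sketch assumes a centered boxcar (moving average) that is truncated at the edges, and takes the high-pass output as the residual after subtracting the smoothed curve. The function names are hypothetical.

```python
from statistics import mean, variance


def moving_average(curve, kernel=25):
    """Centered boxcar low-pass filter; the window is truncated at the edges.

    The boxcar shape is an assumption; the source only gives the kernel size.
    """
    half = kernel // 2
    smoothed = []
    for i in range(len(curve)):
        lo = max(0, i - half)
        hi = min(len(curve), i + half + 1)
        smoothed.append(sum(curve[lo:hi]) / (hi - lo))
    return smoothed


def early_learning_and_variability(curve, kernel=25, n_early=125):
    """Return (early learning, early variability) for one learning curve.

    Early learning: mean of the low-pass filtered curve over the early window.
    Early variability: variance of the complementary high-pass residual
    over the same window.
    """
    low = moving_average(curve, kernel)
    high = [c - l for c, l in zip(curve, low)]
    return mean(low[:n_early]), variance(high[:n_early])


# Made-up learning curve: a slow ramp plus alternating trial-to-trial noise.
example_curve = [0.002 * i + (0.1 if i % 2 == 0 else -0.1) for i in range(300)]
example_learning, example_variability = early_learning_and_variability(example_curve)
```

With `n_early=125` the early window matches the one stated for Experiment 1; the text gives 800 trials for Experiment 2.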

Supplementary Figure 3 Baseline variability correlates with early variability for all three reward-based learning datasets from Experiment 1 and 2

(a) Scatterplots demonstrating strong, highly significant inter-individual relationships between baseline variability and early variability for each of the three sets of subjects in Experiments 1 and 2 (as in Figure S4b, early variability was calculated after running a high-pass filter on the learning curve with a cutoff frequency of 1/25 trial⁻¹). The relationship between baseline variability and early variability is weakest for Experiment 2 Shape-2 learning; however, this is consistent with the observation that this group demonstrated the weakest relationship between baseline task-relevant variability and learning. Correspondingly, we found a much stronger correlation when we investigated the relationship between early variability and learning in this case (see Figure S4b, p = 0.00036), rather than between baseline variability and learning (see Figure S4a, p = 0.0217). (b) Strength of the relationships between baseline variability and early variability for a range of possible cutoff frequencies. In line with our previous analysis from Figure S4c, we explored the relationship between baseline variability and early variability for a number of frequency cutoffs (used to calculate early variability), and found that the cutoff frequency used for the analysis above produces results similar to those of other cutoff frequencies. As in Figure S4c, the blue curves depict the R² of the relationship for different cutoff frequencies, and the green curves depict the p-values. For reference, the dotted green line denotes p=0.01. All correlations were positive.

Supplementary Figure 4 Learning curves and average learning in the reward-based learning task (Shape-1 learning in Experiment 1) using median-based stratification

Subjects from Experiment 1 were divided into groups with above-mean and below-mean Shape-1 variability as shown in Fig. 1f in the main paper. The mean task-relevant baseline variability was 0.50±0.13 overall; 0.44±0.05 for below-mean subjects and 0.64±0.16 for above-mean subjects. For comparison, here we stratified subjects based on the median and quartiles of the data (bottom 25%: 0.39±0.02, bottom 50%: 0.42±0.04, top 50%: 0.58±0.14, top 25%: 0.66±0.17). Learning curves show a clear early separation between subgroups, with higher variability groups showing higher learning (p=0.026, t(17.08)=1.95 for upper half vs lower half and p=0.0032, t(6.09)=4.05 for upper quartile vs lower quartile). Correspondingly, learning rates calculated for each subgroup in Experiment 1 based on the first 125 trials of training show an increasing monotonic relationship between baseline variability and learning rate across the four subgroups.
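A median split followed by a two-sample comparison can be sketched as below. The fractional degrees of freedom reported above, such as t(17.08), are what an unequal-variance (Welch) t-test produces, but the source does not name the test, so treating it as Welch's test is an assumption. The function names and the example numbers are hypothetical, and the p-value is omitted because it requires a t-distribution that the Python standard library does not provide.

```python
from statistics import mean, median, variance


def median_split(variability, learning):
    """Split subjects' learning values by the median of their variability.

    Subjects exactly at the median are placed in the lower group.
    """
    cut = median(variability)
    low = [l for v, l in zip(variability, learning) if v <= cut]
    high = [l for v, l in zip(variability, learning) if v > cut]
    return low, high


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)  # squared standard error of the mean of a
    vb = variance(b) / len(b)  # squared standard error of the mean of b
    t = (mean(a) - mean(b)) / (va + vb) ** 0.5
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Made-up values for eight subjects (not data from the study).
baseline_variability = [0.38, 0.41, 0.43, 0.45, 0.52, 0.57, 0.63, 0.70]
early_learning = [0.10, 0.14, 0.12, 0.18, 0.22, 0.20, 0.27, 0.31]
low_group, high_group = median_split(baseline_variability, early_learning)
t_stat, dof = welch_t(high_group, low_group)
```

The same two functions apply to a quartile comparison by replacing the median cut with the lower and upper quartile boundaries.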

Supplementary Figure 5 Analysis of baseline force variability via motion-state projection

Since the pattern that best characterizes the total variability appeared similar to the temporal force pattern which was learned the fastest, we explored the existence of a link between motor output variability and motor learning ability across different force-field environments. We found the baseline variance associated with the four different force-field environments (illustrated in Fig. 3e) by projecting each error-clamp force trace from the baseline period onto normalized motion-dependent traces representing each force-field environment. We generated these traces separately for each error clamp trial by linearly combining its position and velocity traces according to the K and B values that define each environment. Panels (b-e) show how the three example force traces shown in (a) can be projected onto the four force-field environments we studied, shown in Fig. 3e. To compute the fraction of variance associated with each type of force-field (Fig. 3g), the variance of the magnitudes of the projections was divided by the total variance of the force traces. The single-trial learning rates for each force-field environment (Fig. 3f) were determined based on the data reported in a previous publication (Sing et al 2009). In this previous experiment, error-clamp trials were presented immediately before and after single trial force-field exposures, and the differences in the lateral force output (post-pre) were used to assess single trial learning rates. Since the previous study used a different window size, we recomputed the learning rates in the same 860 ms window used to assess motor output variability allowing a fair comparison between the variability associated with each type of force-field and the corresponding learning rates of each type of force-field (Fig. 3h). (a) Three example force profiles from the baseline period in the velocity-dependent force-field adaptation experiment (thin red, blue, and green lines). 
(b-e) Projections of force profiles from (a) onto the four different types of visco-elastic dynamics studied in Fig. 3 of the main text. The three example force traces are shown as thin lines and the projections as thick lines with the corresponding color. The variance accounted for by these projections was used to compute the task-relevant variability during the baseline period analyzed in Fig. 3g-h.
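The motion-state projection can be sketched in a few lines. Several details here are assumptions rather than statements from the source: the per-trial motion-dependent trace is normalized to unit Euclidean norm, and the "total variance of the force traces" is taken to be the across-trial variance summed over all time samples. The function names and the toy traces are hypothetical.

```python
from math import sqrt
from statistics import pvariance


def motion_template(pos, vel, K, B):
    """Unit-norm trace K * position + B * velocity for a single trial."""
    raw = [K * p + B * v for p, v in zip(pos, vel)]
    norm = sqrt(sum(t * t for t in raw))
    if norm == 0:
        raise ValueError("template is identically zero for this trial")
    return [t / norm for t in raw]


def fraction_of_variance(forces, positions, velocities, K, B):
    """Variance of the projection magnitudes divided by the total variance.

    forces, positions, velocities: one list of time samples per trial.
    Total variance is assumed to be the across-trial variance summed
    over time samples.
    """
    magnitudes = []
    for f, p, v in zip(forces, positions, velocities):
        u = motion_template(p, v, K, B)
        magnitudes.append(sum(fi * ui for fi, ui in zip(f, u)))
    n_samples = len(forces[0])
    total = sum(pvariance([f[t] for f in forces]) for t in range(n_samples))
    return pvariance(magnitudes) / total


# Toy data: three trials whose forces are scaled copies of the velocity trace.
toy_vel = [1.0, -1.0, 1.0, -1.0]
toy_pos = [1.0, 1.0, 1.0, 1.0]  # chosen to be orthogonal to toy_vel
toy_forces = [[a * v for v in toy_vel] for a in (1.0, 2.0, 3.0)]
frac_velocity = fraction_of_variance(toy_forces, [toy_pos] * 3, [toy_vel] * 3, K=0.0, B=1.0)
frac_position = fraction_of_variance(toy_forces, [toy_pos] * 3, [toy_vel] * 3, K=1.0, B=0.0)
```

In this toy case all of the force variability lies along the velocity-dependent trace, so the velocity-dependent environment accounts for the full variance and the position-dependent environment for none of it.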

Supplementary Figure 6 Additional learning curve analysis for the velocity-dependent force-field adaptation experiment

In Fig. 2f-g individuals were divided into groups with above-mean and below-mean velocity-dependent variability. The mean task-relevant baseline variability (expressed in terms of standard deviations) was 3.2±1.1 N; there were 21 subjects with variability below the mean (mean variability 2.4±0.6 N) and 19 subjects with variability above the mean (mean variability 4.1±0.8 N). We also examined subjects who showed especially high or low velocity-dependent variability, operationally defined as those who were more than one standard deviation away from the mean. There were 5 subjects with variability more than 1 SD below the mean (mean variability 1.6±0.4 N) and 6 subjects with variability more than 1 SD above the mean (mean variability 5.1±0.7 N). For comparison, we stratified subjects based on the median and quartiles of the data to determine the robustness of the trend we observed, as shown in panel (a) above. Here we observed a similar trend to the mean/std stratified data shown in the main paper (40% faster learning in upper half vs lower half, p=0.0347, t(38)=2.12; 68% faster learning in upper quartile vs lower quartile, p=0.0201, t(17.63)=1.93). Interestingly, the relationship between baseline variability and learning rate only persists for 10-15 trials, after which the learning curves converge, as observed in panel (a) above and in Fig. 2f in the main paper. This early-only relationship may suggest that action exploration contributes to early learning during an error-dependent task, but that this exploration-driven learning becomes overshadowed by error-dependent adaptation later on. Another hypothesis is that task-relevant variability may continue to predict learning rate, but that task-relevant variability changes over the course of learning, so that a relationship between learning rate and current variability is maintained although the relationship with baseline variability disappears. This topic warrants further investigation. 
(a) Median-stratified learning curves and early learning in the velocity-dependent force-field error-based learning task. (b) Full mean-stratified learning curve data taken from entire experiment duration. Note that early learning was calculated from the trials 1-10 (the window shaded in yellow), whereas the gray shaded region marks the window displayed in the left panel of (a) and in Fig. 2f in the main paper.

Supplementary Figure 7 Analyzing the Temporal Structure of Baseline Variability

The relationship between the task-relevant component of variability and learning ability demonstrated in Fig. 1-2 implies that the structure of variability may be an important determinant of motor learning ability. For the analysis in Fig. 3b-d, we used principal components analysis (PCA) on the force traces recorded during baseline error-clamp trials to understand the structure of variability during baseline. To obtain an accurate estimate of the overall structure of variability, we performed PCA on the aggregated baseline force traces for all subjects in Experiment 3. As in the analysis for Figure 2, forces were examined in an 860 ms window centered at the peak speed point of each movement. We performed subject-specific baseline subtraction when aggregating the data. Specifically, we subtracted the mean of each subject's baseline force traces from each of the raw baseline forces he or she produced so that individual differences in mean behavior would not contribute to our analysis of the structure of trial-to-trial variability. The principal components of the force variability were found by performing eigenvalue decomposition on the covariance matrix of the aggregated force data. Of the 173 principal components, the first five (a-e) accounted for over 75% of the total variance. Note that the eigenvector associated with the first principal component was scaled by the square root of its eigenvalue for display above and in Fig. 3d, 4h-i, and S10 in order to illustrate the amount of variability explained by it. Since previous studies have demonstrated that new dynamics are learned as a function of motion state, we sought to determine what fraction of the motion-related variability was accounted for by each principal component (Fig. 3c) in addition to the fraction of the overall variance accounted for (Fig. 3b). The fraction of overall variance directly corresponded to the eigenvalues determined in the eigenvector decomposition of the covariance matrix. 
In particular, this fraction corresponds to the ratio of the eigenvalue for a particular principal component to the sum of the eigenvalues for all principal components. Correspondingly, the fraction of motion-related variance can be computed based on ratios of scaled eigenvalues. To determine the fraction of motion-related variance, we first scaled each principal component's eigenvalue by the fraction of the corresponding eigenvector's variance accounted for by motion state (for example, 0.95 for PC1 shown in Fig. 3d and panel (a) above), then we found the ratio of each scaled eigenvalue to the sum of the scaled eigenvalues for all principal components. Note that from this procedure, we operationally define motion-related variance (Fig. 3c) as variance that can be explained by a linear combination of position, velocity, and acceleration. Panels (a-e) duplicate the illustration of PC1 and its motion state fit presented in Fig. 3d alongside the corresponding plots for the next four principal components. Note that the R² values for the motion-state fits (purple) onto the corresponding principal components (black) are indicated in Figure 3c, revealing that the shape of PC1 is almost entirely motion related (R² = 0.95) whereas PC2, PC3, and PC5 have shapes which are barely motion related (R² values of 0.093, 0.023, and 0.22 for these PCs, respectively). Interestingly, PC4 has a shape which is almost as strongly motion related as PC1 (R² = 0.78 for PC4 vs R² = 0.95 for PC1), but it accounts for over fivefold less motion-related variance than PC1 as illustrated in Figure 3b, in line with the fact that PC4 explains far less of the overall variability in the data. The shape of PC4 is characterized by a negative combination of position and velocity which, as we can see from Fig. 3g, accounts for less force variability than the positive combination of position and velocity that characterizes PC1. 
(a) The shape of the first principal component (PC) of the baseline force variability, which is also presented in Figure 3 of the main paper. (b-e) shapes of the 2nd through the 5th PCs. As in (a), each PC is shown in black, with the motion state fit in purple, and the position, velocity, and acceleration components of this fit shown in blue, pink, and gray, respectively.
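
The analysis steps described in this legend (subject-specific baseline subtraction, eigenvalue decomposition of the covariance matrix, and the two variance fractions) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function names and array layouts are hypothetical, the motion-state fit is assumed to be an ordinary least-squares regression without an intercept, and R² is assumed to be computed relative to the uncentered sum of squares of each principal component.

```python
import numpy as np


def baseline_pca(forces, subject_ids):
    """PCA of trial-to-trial force variability pooled across subjects.

    forces      : (n_trials, n_samples) array, one baseline force trace per row
    subject_ids : (n_trials,) array labelling the subject who produced each trace
    Returns eigenvalues (descending) and eigenvectors (one PC per column).
    """
    forces = np.asarray(forces, dtype=float)
    subject_ids = np.asarray(subject_ids)
    centered = forces.copy()
    # Subject-specific baseline subtraction: remove each subject's mean trace
    # so that differences in mean behavior do not contribute to the PCs.
    for subject in np.unique(subject_ids):
        rows = subject_ids == subject
        centered[rows] -= forces[rows].mean(axis=0)
    # Eigenvalue decomposition of the covariance matrix of the pooled data.
    cov = np.cov(centered, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]
    eigvals = np.clip(eigvals[order], 0.0, None)  # clip tiny negative round-off
    return eigvals, eigvecs[:, order]


def motion_state_r2(pc, position, velocity, acceleration):
    """Fraction of a PC's shape explained by position, velocity, acceleration.

    Assumption: least-squares fit with no intercept, uncentered R^2.
    """
    X = np.column_stack([position, velocity, acceleration])
    coef, *_ = np.linalg.lstsq(X, pc, rcond=None)
    residual = pc - X @ coef
    return 1.0 - np.sum(residual**2) / np.sum(pc**2)


def variance_fractions(eigvals, r2_values):
    """Fraction of overall and of motion-related variance for each PC."""
    overall = eigvals / eigvals.sum()
    scaled = eigvals * r2_values          # eigenvalues scaled by motion-state R^2
    motion_related = scaled / scaled.sum()
    return overall, motion_related
```

Given the eigenvectors from `baseline_pca`, calling `motion_state_r2` on each column and passing the resulting values to `variance_fractions` yields the two quantities that the legend attributes to Fig. 3b and Fig. 3c.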

Supplementary Figure 8 Next day retention of changes in the structure of variability

For environments presented on day one, the baseline period on the second day provided an opportunity to examine the extent to which the changes in motor output variability following training on the first day were retained overnight. Thus we used these measurements to examine the retention of the changes in motor variability for the 12 subjects who experienced velocity training and the 12 subjects who experienced position training on day one. This resulted in a data set that was half the size of the ones presented above. Despite the increased noise inherent in calculating principal components based on smaller data sets, we found that the first principal component consistently displayed a strong motion dependence, with a position, velocity, and acceleration fit producing an average R² value of 0.77 compared to 0.92 for PC1 calculated from all pre-training data. Furthermore, in 90% of cases (12 subjects per group, with each group measured in 2 conditions), the R² values were above 0.54, and they were lower than 0.35 in just 2.5% of the cases. Thus, when we determined the gain-space angles by comparing the relative sizes of the position and velocity coefficients in the motion state fit, we eliminated these 2.5% of cases in which the first principal component was not well described by motion states. This should not produce a bias in the estimates of the PV gain-space angles because elimination was based on the degree to which the gain-space vector characterized the shape of PC1 rather than the location of this vector. The resulting principal components on the next day, and the position and velocity contributions, are shown above. To assess the amount of retention on the subsequent day, we compared the change in PV angle for the Pos-HCE to that for the Vel-HCE to determine whether specific changes in PC1 were retained. (a-b) Comparison of PC1 pre-training and 22 hours after training in the velocity-dependent and position-dependent HCEs. 
Note that these data are noisier than in the main text because retention data are only available for half the subjects, and the pre-training data shown correspond to only these subjects. Note also that these plots are analogous to those shown in Figures 4h-i in the main text. (c) PV gain-space plot for the changes in PC1 following training and for next-day retention. Note that this plot is very similar to Fig. 4l, but here the pre-training (gray) and post-training data (bright red and blue) are shown for only the subset of data for which next day retention was available (i.e. only day one data are shown). The 22 hour data (faded red and blue) are identical to those shown in Fig. 4l in the main text.

Supplementary Figure 9 Correlation between baseline peak velocity and baseline variability (top row) and baseline peak velocity and early learning (bottom row) for the three reward-based learning data sets

Since subjects had some flexibility in movement speed (movement time was restricted only to be below 450 ms), we investigated the relationship between learning and mean peak velocity during the baseline period (Fig. S1d). However, because durations under 450 ms necessitated very fast reaches for the 20 cm movements used in experiments 1 and 2, movements tended to be fairly consistent and there was not an extremely large spread of movement velocities in our data set: the mean peak speed was 0.94 m/s and the standard deviation was 0.13 m/s across subjects, only about 14% of the mean. When we looked at the average speeds of the high variability and low variability groups in order to determine if differences in movement speed might be driving the differences in learning that we attributed to variability, we found that the higher variability group displayed peak speeds of 0.86±0.08 m/s, 0.97±0.15 m/s, and 0.94±0.11 m/s (mean ± standard deviation for the three datasets) and the lower variability group displayed similar peak speeds of 0.95±0.18 m/s, 0.95±0.16 m/s, and 0.93±0.11 m/s. Thus there was no significant difference in speeds between these groups (p=0.15, p=0.73, p=0.82), suggesting that differences in movement speed are not responsible for the differences in learning ability between these two subgroups. Moreover, we found no significant correlation between movement speed and variability (top row: maximum R² value of 0.02) or between movement speed and learning rate (bottom row: maximum R² value of 0.04). This indicates that movement speed was related to neither variability nor learning rate, so that even if there had been a difference in speed between the high variability and low variability groups, this difference could not account for the relationship we uncovered between baseline variability and learning.

Supplementary Figure 10 Relationship between learning rate, variability, and lag-1 autocorrelation in baseline performance

Here we examined whether increased responsiveness to feedback (an increased rate of error-based learning) during the baseline might explain why increased baseline variability and increased rates of reward-based learning are linked. This would require (1) that increased error-based learning leads to increased baseline variability, and (2) that increased error-based learning during the baseline is positively related to increased reward-based learning during early training. However, it turns out that requirement (1) above is the key issue here, as the characteristics of baseline performance in our dataset (specifically, the lag-1 autocorrelations) are such that increased error-based learning during baseline would actually lead to decreased baseline variability in the vast majority of subjects: 96% of subjects for Shape-1 and 88% for Shape-2 (and it would have essentially no effect in the few remaining subjects). This is explained below. Greater baseline variability would result from increased responsiveness to feedback only if this led to adjustments that were too strong, in the sense that they overcorrected errors from one trial to the next, adding more variability to motor output than they were taking away (as a counterexample, if one's muscles slowly fatigued in a way that caused movement patterns to drift, error-correcting adjustments from one trial to the next could actually reduce overall movement variability by compensating for this drift, rather than increase it). The principle above is illustrated in (b), where we show how learning rates affect variability and lag-1 autocorrelations by simulating the PAPC model from van Beers 2009. As these simulations show, the overcorrection of errors that increases trial-to-trial movement variability results in negative lag-1 autocorrelations in motor output. However, when learning rates are low enough that lag-1 autocorrelations are positive, increases in learning rate result in decreased variability. 
Thus increased learning rates result in increased variability only when lag-1 autocorrelations are negative (van Beers 2009). When we examined the autocorrelation in the baseline period from our own data, we found the mean autocorrelation to be +0.22 for experiments 1 and 2, with 96% of individuals showing positive autocorrelations for Shape-1 and 88% for Shape-2, as illustrated in (a), which shows the autocorrelations that individual subjects displayed during the baseline period. The preponderance of positive autocorrelations in our baseline data indicates that increased learning rates would generally decrease, rather than increase, motor variability. Note that for the PAPC model simulation in (b) we adjusted a single free parameter (the ratio between the amounts of execution and state noise) to match the average baseline lag-1 autocorrelation we observed in our data, at a learning rate of zero. However, the value of this parameter does not affect the general features of the simulation results, namely that autocorrelation monotonically decreases with learning rate, and that increases in learning rate lead to increases in variability only when lag-1 autocorrelations are negative. (a) Histograms showing distributions across subjects of lag-1 autocorrelation during the baseline period in the reward-based learning data. The autocorrelations were calculated for Shape-1 (left column) and Shape-2 (right column) regardless of which shape the subjects learned in the task. The vertical dashed lines indicate the population means. Note that the mean was approximately +0.2 in all 6 cases and the most negative value was -0.09. (b) The effects of different learning rates on motor output variability and lag-1 autocorrelation were determined by simulating the PAPC model from van Beers 2009, which is based on the combined effects of execution noise (output noise) and state noise. 
The relative contributions of execution noise and state noise were set so that lag-1 autocorrelation at a learning rate of zero matched that in our data (+0.22). Upper panel: the relationship between learning rate during the baseline period and baseline variability (standard deviation). The gray vertical line indicates the learning rate at which variability is minimal. Lower panel: the relationship between learning rate and lag-1 autocorrelation. Note that the variability-minimizing learning rate (gray line) results in zero autocorrelation. When autocorrelations are positive, as in our baseline data, learning rate increases result in decreased variability; however, at higher learning rates, when autocorrelations are negative, additional learning rate increases result in increased variability.
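
The qualitative relationship described in this legend can be reproduced with a very small stand-in model. The sketch below is not the PAPC model from van Beers 2009. It is a simplified linear state-space model, assumed here purely for illustration, in which the internal state x is corrected by a fraction (the learning rate) of the previous output y: x[t+1] = retention * x[t] - learning_rate * y[t] + state noise, with y[t] = x[t] + execution noise. The retention factor and both noise variances are hypothetical values, chosen so that the lag-1 autocorrelation at a learning rate of zero is roughly +0.2. For this toy model the stationary variance and lag-1 autocorrelation have closed forms, so no random simulation is needed.

```python
import numpy as np


def stationary_output_stats(learning_rate, retention=0.9,
                            var_exec=1.0, var_state=0.06):
    """Stationary SD and lag-1 autocorrelation of the output y.

    Toy model (an assumption, not the PAPC model itself):
        x[t+1] = retention * x[t] - learning_rate * y[t] + state_noise
        y[t]   = x[t] + exec_noise
    """
    a = retention - learning_rate            # effective state persistence
    if abs(a) >= 1.0:
        raise ValueError("model is not stationary for this learning rate")
    # Var(x) solves Var(x) = a^2 Var(x) + learning_rate^2 var_exec + var_state
    var_x = (learning_rate**2 * var_exec + var_state) / (1.0 - a**2)
    var_y = var_x + var_exec
    # Cov(y[t], y[t+1]) = a Var(x) - learning_rate * var_exec
    cov_lag1 = a * var_x - learning_rate * var_exec
    return np.sqrt(var_y), cov_lag1 / var_y


learning_rates = np.linspace(0.0, 1.0, 101)
sd, rho1 = np.array([stationary_output_stats(b) for b in learning_rates]).T
best = np.argmin(sd)
print(f"variability-minimizing learning rate: {learning_rates[best]:.2f}")
print(f"lag-1 autocorrelation at that rate:   {rho1[best]:+.3f}")
```

In this stand-in, the derivative of the output variance with respect to the learning rate has the opposite sign to the lag-1 covariance, so variability falls with increasing learning rate while autocorrelations are positive, reaches its minimum where the autocorrelation crosses zero, and rises once autocorrelations are negative.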

Supplementary Figure 11 Detailed methods for the velocity-dependent force-field adaptation experiment

Forty right-handed, neurologically intact subjects (20 female, 20 male, ages 18-31, mean age 21) participated in this experiment. This experiment was designed to examine the relationship between task-relevant variability during the baseline period and motor learning rates for force-field adaptation across individuals. This required accurate measurements of both baseline variability and learning rates for each subject. To obtain accurate measurements of baseline variability, we designed the experiment with a prolonged baseline period of 200 trials during which baseline variability was assessed based on 20 error-clamp trials that were randomly interspersed among null-field trials. Examples of the baseline lateral force traces from one subject are shown in Fig. 3a. This baseline period followed a 100 trial familiarization period during which baseline variability was not measured. Although movement duration was generally between 500 and 600 ms, we examined the force output generated in an 860 ms window centered at the peak speed point to ensure that we captured the entire movement (average peak speed=320±5 mm/s, average speed at start of window=0.44±0.10 mm/s, average speed at end of window=2.7±0.6 mm/s); this period is indicated by gray shading in Fig. 3a and Fig. S3. Since these subjects would later be exposed to a velocity-dependent force-field, we analyzed what would become the task-relevant component of the variability before any learning occurred by calculating the amount of velocity-dependent variability present during baseline error-clamp trials. We computed the velocity-dependent component of variability by projecting each force trace onto the corresponding velocity profile (Fig. S4j), and calculating the standard deviation of the magnitudes of these projections. Thus, if motor output variability was fully velocity-dependent, the variability in force traces would not change after projection and would be equal to the total variability. 
Following baseline, subjects experienced a 150 trial training period during which a velocity-dependent force-field environment was applied. In this training period, 80% of trials were training trials and 20% were error-clamp trials which we intermixed to measure the learning level at various points in training. Subjects were stratified based on the amount of velocity-dependent variability present during baseline error-clamp movements, and average learning curves were calculated for each group (Fig. 2f). We found that groupings with more task-relevant variability showed faster initial learning, but these differences disappeared as learning progressed. Initial learning rate (Fig. 2g-h) was calculated by finding the average increase in learning level over the first ten trials of training. This period included two error-clamp trials. To measure adaptation to the force-field environments, we examined the difference between the force profiles measured during pre-training error-clamp trials and the error-clamp trials randomly interspersed during the training period. We performed subject-specific baseline subtraction when computing force-field adaptation coefficients. To accomplish this, we subtracted the mean force output each subject generated on baseline error-clamp trials (black trace in panels a-c) from the raw force trace measured on error-clamps during the training period (dashed green line in b-c) to determine the change in motor output induced by exposure to a force-field environment (solid green line in panels b-c). Note that (a) shows data from an example subject, whereas (b) and (c) show mean data early and late in learning. The learning-related changes in the force profiles were regressed onto a linear combination of the motion states position, velocity, and acceleration (d-e). The gray shaded regions mark the window for the force profile regression. 
We measured learning for the pure velocity-dependent force-field environment by normalizing the velocity regression coefficient to create an adaptation coefficient for which a value of 1 indicated full compensation for the force-field environment. Note that these adaptation coefficients are displayed in Figs. 2-3 in the main paper. For a force profile that is driven by adaptation to a velocity-dependent force-field, our adaptation coefficient represents the size of the bell-shaped velocity-dependent component of the measured force profile. This velocity-dependent component of the measured force profile specifically corresponds to the force component targeted to counteract the velocity-dependent force-field perturbation. The adaptation coefficient for the position-dependent force-field used in Fig. 3 was calculated in an analogous fashion based on the regression coefficient. The adaptation coefficients for the PComb and NComb environments were obtained by projecting the position and velocity regression coefficients onto the axis of the applied force-field and normalizing the result by the amplitude of the applied force-field. The position- and velocity-specific adaptation coefficients displayed in Fig. 4d-f were computed as described in previous work.
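
The two measurements described in these methods, the velocity-dependent component of baseline variability and the velocity-based adaptation coefficient, can be sketched as follows. This is an illustrative reading of the text rather than the authors' code. The function names, the array layouts, and the `field_gain` parameter (the gain of the applied velocity-dependent force-field, whose value is not stated here) are hypothetical. The projection is assumed to be a scalar projection onto the unit-normalized velocity profile, total variability is assumed to be the square root of the variance summed over time samples, and the regression is assumed to have no intercept term.

```python
import numpy as np


def velocity_dependent_sd(force_traces, velocity_traces):
    """SD across trials of each force trace projected onto its velocity profile.

    Both inputs are (n_trials, n_samples) arrays from baseline error-clamp trials.
    """
    norms = np.linalg.norm(velocity_traces, axis=1, keepdims=True)
    unit_velocity = velocity_traces / norms
    # Scalar projection of each force trace onto its own unit velocity profile.
    projections = np.sum(force_traces * unit_velocity, axis=1)
    return projections.std(ddof=1)


def total_sd(force_traces):
    """Total trial-to-trial variability: sqrt of variance summed over time."""
    return np.sqrt(np.sum(np.var(force_traces, axis=0, ddof=1)))


def adaptation_coefficient(force_change, position, velocity, acceleration,
                           field_gain):
    """Velocity regression coefficient normalized by the force-field gain.

    force_change is the baseline-subtracted force profile from an error-clamp
    trial; a return value of 1 indicates full compensation for the field.
    """
    X = np.column_stack([position, velocity, acceleration])
    coef, *_ = np.linalg.lstsq(X, force_change, rcond=None)
    return coef[1] / field_gain
```

Under these assumptions, force traces that are pure scalings of a shared velocity profile give `velocity_dependent_sd` equal to `total_sd`, which matches the statement that fully velocity-dependent variability is unchanged by projection.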

Supplementary information

Supplementary Text and Figures

Supplementary Figures 1–11 (PDF 1084 kb)

Cite this article

Wu, H., Miyamoto, Y., Castro, L. et al. Temporal structure of motor variability is dynamically regulated and predicts motor learning ability. Nat Neurosci 17, 312–321 (2014). https://doi.org/10.1038/nn.3616

DOI: https://doi.org/10.1038/nn.3616
