Abstract

As the applied behavior analysis (ABA) service industry (“the industry”) continues to rapidly expand, it faces three major problems. First, ABA service delivery quality (ASDQ) is undefined in ABA research and the industry. Second, we cannot rely exclusively on professional organizations that oversee licensure and certification to control ABA service delivery quality because they do not have control over the relevant contingencies. Third, without objective indicators of ABA service delivery quality, it is difficult for ABA organizations to distinguish the quality of their services from competitors. In this article, first we explain the need for more critical discussion of ASDQ in the field at large, briefly describe a sample of common views of quality in ABA research and the industry, and identify some of their limitations. Then we define ASDQ and present a cohesive theoretical framework which brings ASDQ within the scope of our science so that we might take a more empirical approach to understanding and strengthening ASDQ. Next, we explain how organizations can use culturo-behavioral science to understand their organization’s cultural practices in terms of cultural selection and use the evidence-based practice of ABA at the organizational level to evaluate the extent to which methods targeting change initiatives result in high ASDQ. Lastly, in a call to action we provide ABA service delivery organizations with six steps they can take now to pursue high ASDQ by applying concepts from culturo-behavioral science and total quality management.



Just as behavior analysts were sowing the seeds of applied behavior analysis (ABA), Skinner (1971) wrote of the design of cultures, “Our culture has produced the science and technology it needs to save itself. It has the wealth needed for effective action. It has, to a considerable extent, a concern for its own future” (p. 181). At the same time, Skinner encouraged behavior analysts to guard against the misuse of behavioral technology by looking not at “putative controllers, but at the contingencies under which they control” (p. 182). In this article we argue that the ABA service delivery industry (hereafter referred to as “the industry”) lacks a systematic empirical approach to understanding the contingencies that control its use of applied behavioral technology. We attempt to start addressing this gap by objectively defining ABA service delivery quality (ASDQ) within a cohesive framework that enables the empirical study of ASDQ at the organizational level and of the cultural practices that produce it.

The dimensions of ABA were established in the late 1960s (Baer et al., 1968). Since then, the industry has evolved into a group of mostly profit-making enterprises. Its practices may be viewed through an evolutionary lens as shaped by cultural selection (Skinner, 1981) within the context of a receiving system (i.e., selecting environment) or market (e.g., Malott, 2016) in response to a growing demand for services. Through this lens, we may view the industry as having grown and expanded because ABA practices have helped cultures behave more effectively with regard to specific problems with human behavior (Cooper et al., 2020). This is because, to the extent that ABA is implemented as an evidence-based practice (EBP; Slocum et al., 2014), it works. A brief look at data from multiple professional organizations, such as the Behavior Analyst Certification Board, Inc. ® (BACB ®), the Association for Behavior Analysis International ® (ABAI ®), and Autism Speaks ®, can help inform our understanding of the industry’s growth.

Industry Growth and Dissemination Data

As of January 7, 2020, there were 37,859 Board Certified Behavior Analysts ® (BCBA ®), 4,044 Board Certified Assistant Behavior Analysts ® (BCaBA ®), and 70,361 Registered Behavior Technicians ® (RBT ®; BACB). From 2014 to 2018, over 35,000 professionals became RBTs (BACB, 2019). According to the U.S. Employment Demand for Behavior Analysts Burning Glass Report (BACB, 2019), estimates of the annual national employment demand for BCaBAs, BCBAs, and doctoral-level BCBAs (BCBA-D™) increased every year between 2010 and 2018, with overall increases of 1,942% for BCBAs and BCBA-Ds and 1,098% for BCaBAs. Most ABA practitioners reported that they focused on autism-related services. Despite that primary focus, the results of a 2016 BACB Job Task Analysis (JTA) survey suggest that the industry has expanded to include a wide variety of other ABA subspecialty areas. The results of that JTA indicated that certificants are working in areas such as education, behavioral medicine, organizational behavior management (OBM), child welfare, behavioral gerontology, and sports and fitness (BACB, n.d.).

As of March 19, 2020, the ABAI verified course sequence directory indicated there were 691 verified course sequences in 47 countries (ABAI, 2020). Those sequences offer coursework that aspiring professionals can take to meet the coursework eligibility requirements for the BACB’s BCaBA or BCBA certification exam. As of March 2020, all 50 states required state-regulated health plans (i.e., fully insured and state employee health benefit plans) to cover ABA services for the treatment of autism (Autism Speaks, n.d.). In November 2019, licensure regulating the ABA profession had been enacted in over 30 U.S. states (BACB, n.d.). However, licensure is largely based on holding an existing certification (e.g., BCBA) and therefore does not, in itself, address the quality of service provision.

The Need for a Systematic Discussion of ABA Service Delivery Quality

These data clearly suggest that the industry is growing around the world, especially in its application to the education and treatment of individuals with developmental disabilities. We should celebrate that progress. But can we say the same about ABA service delivery quality (ASDQ)? What is quality? What do we really know about quality as it pertains to ABA service delivery? What are the dimensions of the construct of quality in the industry? Where are the data? And what is high ASDQ? How can university training programs identify so-called high-quality supervision practicum sites? How should insurance companies determine which ABA service providers to bring in-network or which contracts to reauthorize? How should the average consumer choose an ABA service provider? How should people in the workforce choose an ABA service provider when seeking employment? How should school districts choose ABA service providers to contract for consultation? It seems safe to assume that, as in other industries, ASDQ varies measurably within and across organizations and over time. It follows that, by leveraging our technology, we could study ASDQ empirically to better understand and act on its controlling variables.

The answers to these questions will likely have a large impact on the trajectory of the industry, its consumers, and the underlying science. Such answers may determine which variations in professional practices are strengthened or weakened over time. However, answers may be hard to come by without a definition of ASDQ that is sufficiently clear, objective, and measurable to allow the field to apply its technology to ASDQ. Additional reasons why a systematic discussion of ASDQ is urgently needed are that it may ultimately help us to deal with (1) the unknown impact of ABA business model diversity, industry growth, private equity investments, and subsequent acquisitions of ABA service providers; (2) issues related to international dissemination and misconceptions about ABA (e.g., Keenan et al., 2014); (3) opinions and narratives about negative experiences with ABA services described on social media platforms such as Twitter, Inc. ®; (4) highly flawed published research that nevertheless raises questions about practitioners’ use of ABA (e.g., research addressed by the response from Leaf et al., 2018); and (5) clinical or operational practices unwittingly supported by contingencies within ABA service delivery organizations with the potential for harm to consumers.

Our current situation parallels those faced by other industries, such as the general manufacturing, automotive, aerospace, telecommunications, and environmental management industries, which developed Total Quality Management (TQM) standards to promote quality products and services in response to high demand, rapid growth, and changing social, economic, and political conditions (American Society for Quality [ASQ], 2019). Perhaps it is in our best interest to do the same, leveraging tools from our own and other disciplines to engineer organizations that promote ASDQ. The notion that behavioral technology is well-suited to enable “an organizational culture that supports quality as a mission” is not new (e.g., Redmon, 1992, p. 547). However, applying our tools to promote ASDQ will require a robust systematic discussion of what ASDQ is, how to assess ASDQ, and how to increase and maintain high ASDQ. Examining how the industry and the science of behavior analysis conceptualize quality seems like a reasonable place to start.

The aims of the remainder of this article are to (1) provide a brief general overview of how quality is commonly conceptualized in behavior-analytic literature and the industry, (2) define quality and ASDQ, (3) apply concepts from culturo-behavioral science to develop an ASDQ framework, (4) propose some relations between ASDQ and the EBP of ABA, and (5) outline next steps organizations can take now to objectively assess and program for ASDQ in their organization and begin a systematic approach to promoting high ASDQ.

The Construct of Quality in Behavior-Analytic Literature

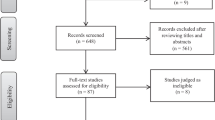

In our approximately year-long search for discussions of quality in the behavior-analytic literature, we limited our search largely to online and print resources readily accessible to most behavior analysts (i.e., practitioners). We searched multiple popular ABA textbooks, such as the Handbook of Applied Behavior Analysis (Fisher et al., 2014), for definitions of quality and for chapters dedicated to the topic as it relates to ABA services, but were unsuccessful. We did not find the word “quality” in the subject index of the most recent edition of the Cooper et al. (2020) text. Some texts discuss ABA quality in a general sense. For example, in their chapter on basic behavioral principles and strategies in “Autism: Teaching Makes a Difference,” Scheuermann et al. (2019) state that the primary aim of the chapter is “to provide an overview of . . . fundamental elements of high-quality behavioral programs. . .” (p. 27). However, the chapter lacks a definition of quality and an explanation of precisely how to determine whether a behavioral program is high quality. Our search (albeit nonsystematic) and our combined 20+ years of experience in the field have not resulted in the identification of a clear operational definition of quality pertaining to ABA service delivery in the behavior-analytic literature. The construct of quality in ABA appears to be represented to some extent within OBM, and in particular within performance management (PM). Its roots can be traced back to parallels drawn in the literature among OBM, statistical process control, and TQM in the 1980s and 1990s (e.g., Babcock et al., 1998; Mawhinney, 1987). In PM, though, the concept of quality focuses mostly on the performance of individual workers, not on the organization as a whole.

In PM, the goal is to “create a workplace that brings out the best in people while generating the highest value for the organization” (Daniels & Bailey, 2014, p. 1). This goal is accomplished by applying behavioral workplace technology based on ABA. In PM, quality has been defined as “one measured aspect of performance that includes, among other things, performance accuracy, class, and novelty” (Daniels & Bailey, 2014, p. 328). That is, measures of accuracy, class, and novelty quantify aspects of a specific type of quality: performance quality. Accuracy is a measure of the desired performance, similar to procedural fidelity; an example is the accuracy with which an ABA therapist conducts a preference assessment with a patient. However, it is more common to measure deviations from the desired performance (i.e., errors). Data on errors or accuracy can be prescriptive, suggesting relevant antecedent manipulations, alternative behavior, and positive reinforcement that can be applied to increase the accuracy dimension of performance quality. Class is the “comparative superiority of a product beyond mere accuracy” (Gilbert, 1978; Daniels & Bailey, 2014, p. 75). This measure focuses on the “form, style, or manner” in which a person or a product performs and involves more subjectivity than the other measures. An example is the extent to which an ABA therapist displays high-quality positive affect when delivering praise for correct responses, or what might be called, in mentalistic terms, the therapist’s “attitude.” Novelty is “the unusual or unique aspect of a performance” (Daniels & Bailey, 2014, p. 76) and is often an important dimension of performance quality to measure when creativity and novel solutions to problems are needed to ensure quality. An example is an ABA therapist motivating a young client with autism spectrum disorder by demonstrating novel ways to play with familiar toys for which an abolishing operation had previously been in effect.
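To make the accuracy dimension more concrete, the following sketch scores one session against a step-by-step checklist and reports both percent accuracy and the number of errors. It is a minimal illustration under stated assumptions: the 10-step checklist, the convention of scoring each step simply as correct or incorrect, and the function name accuracy_and_errors are hypothetical and are not a procedure taken from Daniels and Bailey (2014).

```python
def accuracy_and_errors(steps_correct):
    """Return (percent accuracy, error count) for one scored session.

    steps_correct: list of booleans, one per checklist step, True when the
    step was performed as written (hypothetical checklist format).
    """
    if not steps_correct:
        raise ValueError("at least one scored step is required")
    n_correct = sum(1 for ok in steps_correct if ok)
    errors = len(steps_correct) - n_correct  # deviations from the desired performance
    return 100.0 * n_correct / len(steps_correct), errors


# Hypothetical 10-step preference-assessment checklist for one session.
session = [True, True, False, True, True, True, True, False, True, True]
pct, errs = accuracy_and_errors(session)
print(f"accuracy = {pct:.0f}%, errors = {errs}")  # prints: accuracy = 80%, errors = 2
```

Reporting both numbers reflects the point in the text that error data, not only accuracy, can suggest which antecedents or reinforcement contingencies to change.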

Common Conceptualizations of Quality in ABA Service Delivery Settings

Readily accessible, so-called quality indicators of clinical ABA service delivery and related “autism programs” have been defined and described in a myriad of ways by multiple sources, including (1) practitioners, (2) state departments of education, (3) blog authors, (4) professional organizations, and (5) university programs. We provide just a few examples (i.e., a nonexhaustive set) from these sources below to demonstrate the lack of consensus on conceptualizations of quality in the industry. We admire the many great practitioners and researchers who work tirelessly to improve the quality of care for ABA service recipients, as exemplified below. However, we also identify some limitations in existing conceptualizations to make the argument that we need advances in the conceptual analysis of quality and a more systematic and empirical approach to understanding it. In particular, through conceptual and empirical research on quality, we may be able to clarify and extend what is known about the quality of ABA services, and ultimately leverage our science and quality tools from other industries to help ABA organizations provide sustainable high-quality services.

Practitioners

One approach to evaluating quality is to compare characteristics of the service to the seven dimensions of ABA (Baer et al., 1968). For example, Mark Sundberg (2015) developed the ABA Program Evaluation form v. 1.4 ©, made available to the public on his website (http://site.avbpress.com/Detailed_ABA_Program_Assessment), which takes this approach. The form consists of a list of practice characteristics pertaining to each dimension and a subjective Likert-style rating scale, ranging from 0–None to 3–Good, used to rate the extent to which each practice characteristic is demonstrated. Each of the form’s seven sections corresponds to one of the seven dimensions and consists of “Quick Assessment” and “Detailed Assessment” items. Points are assigned to each item using the rating scale, and overall service quality is determined by the total number of points (up to 288 points).
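A minimal sketch of this kind of point total follows, assuming every item is rated on the 0–3 scale (under that assumption, the 288-point ceiling would correspond to 96 items). The dimension names come from Baer et al. (1968); the handful of item ratings per dimension and the function name total_score are hypothetical and do not reproduce the actual form.

```python
# Rating scale described for the form: 0 (None) to 3 (Good).
MIN_RATING, MAX_RATING = 0, 3


def total_score(ratings_by_dimension):
    """Sum item ratings across dimensions; return (total, maximum possible)."""
    total = 0
    n_items = 0
    for dimension, ratings in ratings_by_dimension.items():
        for r in ratings:
            if not MIN_RATING <= r <= MAX_RATING:
                raise ValueError(f"{dimension}: rating {r} is outside 0-3")
            total += r
            n_items += 1
    # Assumes every item has the same 3-point ceiling.
    return total, n_items * MAX_RATING


# Hypothetical ratings for two items per dimension (not the real form items).
example = {
    "applied": [3, 2], "behavioral": [3, 3], "analytic": [1, 2],
    "technological": [2, 2], "conceptual systems": [1, 0],
    "effective": [2, 3], "generality": [1, 1],
}
total, maximum = total_score(example)
print(f"{total} of {maximum} points ({100 * total / maximum:.1f}%)")  # 26 of 42 points (61.9%)
```

Expressing the total as a percentage of the maximum is our own choice; it simply makes partial administrations comparable with full ones.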

Examples of items from the Quick Assessment section of each dimension illustrate how characteristics of service quality are evaluated. Applied dimension items include “behavioral deficits assessments completed: barriers, FBAs,” and whether “daily curriculum is consistent with assessment and IEP goals” (Sundberg, 2015, p. 1). Behavioral dimension items include whether a “data collection system” is in place, and “targets are definable, observable, and measurable” (p. 1). Analytic dimension items include whether the program “demonstrates prediction and control of skills and problem behaviors” and “the sources of control for barriers that impair language, social, and learning skills are identified and ameliorated” (p. 2). Technological dimension items include whether “staff demonstrate the correct use of basic ABA methodology” and “staff have established clear instructional control” (p. 2). Conceptual systems dimension items include whether “staff use behavioral terminology” and can “identify the relevant concepts and principles that underlie teaching procedures” (p. 3). Effective dimension items include whether “the students are acquiring appropriate and meaningful skills” and “negative behavior is significantly decreasing.” Generality dimension items include whether “daily programming for generalization occurs” and a parent training program is in place (p. 3).

Some strengths of this approach are that it ties quality assessment to the defining characteristics of the practice and that, by quantifying these characteristics, it supports data-based decision making that can promote quality. However, a focus on the dimensions of ABA is limited in scope and may not be adequate to address other characteristics of ABA service quality, such as quality at the organizational level or quality as perceived by consumers and other stakeholders. Furthermore, interobserver agreement and reliability may be difficult to attain without more objectively operationalizing the definitions of items (e.g., “sufficient amount of teaching trials”; Sundberg, 2015, p. 1) and standardizing administration procedures and processes.
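One common way to check whether two observers apply such rating items consistently is item-by-item (exact) agreement: the number of items on which the observers gave the same rating, divided by agreements plus disagreements, multiplied by 100. The sketch applies that formula to two hypothetical sets of 0–3 ratings; the ratings and the function name item_by_item_ioa are illustrative assumptions, and we do not suggest this is how the form is meant to be administered.

```python
def item_by_item_ioa(rater_a, rater_b):
    """Percentage of items on which two observers gave the identical rating.

    IOA = agreements / (agreements + disagreements) * 100
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("observers must rate the same, non-empty set of items")
    agreements = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    # Every item is either an agreement or a disagreement, so the
    # denominator equals the number of items rated.
    return 100.0 * agreements / len(rater_a)


# Hypothetical ratings of the same eight items by two independent observers.
observer_1 = [3, 2, 2, 1, 0, 3, 2, 1]
observer_2 = [3, 2, 1, 1, 0, 3, 3, 1]
print(f"IOA = {item_by_item_ioa(observer_1, observer_2):.1f}%")  # IOA = 75.0%
```

Exact agreement is a strict criterion for Likert-style items; items with loosely worded anchors, such as the one quoted above, would be expected to lower it.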

State Departments of Education

In fall 2004, the New Jersey Department of Education published the New Jersey Autism Program Quality Indicators report. A panel of almost three dozen autism experts from multiple fields of study, including ABA, developed the report on the basis of the scientific literature as a model for identifying successful school-based programs serving children (ages 3–8) with autism (New Jersey Department of Education, 2004). The report was organized into two primary sections: Program Considerations and Student Considerations.

Program Considerations consisted of seven components or indicators of quality (New Jersey Department of Education, 2004): program characteristics, personnel, curriculum, methods, family involvement and support, community collaboration, and program evaluation. For example, an essential program characteristic was the level of engagement and intensity sufficient to ensure a successful program. The panel suggested that this level depended on whether (1) the length of the school day and academic year for students with autism, including preschoolers, are equal to or greater than that of typical students (e.g., minimum of 25 hr per week and an extended school year program of 210 days per year), (2) educational services are provided across environments (including home), (3) individualized education planning teams provide justification when less than the minimum of recommended services is provided, (4) there is a sufficiently low student-to-teacher ratio (e.g., 2:1 or 1:1 student-to-teacher ratio) and in some cases small group instruction, (5) location and content of activities are based on the student’s individual needs (e.g., school, home, community), (6) educational activities are developmentally appropriate and systematically sequenced to meet individualized student objectives, and (7) pedagogical effectiveness and student progress are evaluated and documented.
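Some of these example criteria are quantitative and could in principle be checked mechanically. The sketch treats the report’s example values (25 hr per week, 210 days per year, and a student-to-teacher ratio no greater than 2:1) as hard cutoffs; that treatment, the ProgramProfile structure, and the sample program are our assumptions for illustration, since the report presents these figures as examples rather than fixed thresholds.

```python
from dataclasses import dataclass


@dataclass
class ProgramProfile:
    """Hypothetical summary of one school-based program."""
    hours_per_week: float
    school_days_per_year: int
    students_per_teacher: float  # 2 means a 2:1 student-to-teacher ratio


# Example values from the report, treated here as cutoffs for illustration only.
MIN_HOURS_PER_WEEK = 25
MIN_DAYS_PER_YEAR = 210
MAX_STUDENTS_PER_TEACHER = 2


def check_intensity(p):
    """Return which quantitative intensity criteria the program meets."""
    return {
        "hours": p.hours_per_week >= MIN_HOURS_PER_WEEK,
        "days": p.school_days_per_year >= MIN_DAYS_PER_YEAR,
        "ratio": p.students_per_teacher <= MAX_STUDENTS_PER_TEACHER,
    }


program = ProgramProfile(hours_per_week=27.5, school_days_per_year=180, students_per_teacher=2)
print(check_intensity(program))  # {'hours': True, 'days': False, 'ratio': True}
```

Criteria such as developmentally appropriate sequencing (item 6) have no numeric anchor in the report and would need further operationalization before they could be checked this way.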

Student Considerations in the New Jersey Autism Program Quality Indicators report consisted of “practices to consider in the decision-making process for the individual student” (New Jersey Department of Education, 2004, p. 19) specific to individual student assessment, development of the individualized education program, addressing challenging behavior, program options (i.e., placement), transitions across educational settings, and individual progress review and monitoring. For example, individual student assessment is high quality to the extent that (1) “assessments are conducted by a multidisciplinary team made up of qualified personnel who are knowledgeable regarding the characteristics of autism” (New Jersey Department of Education, 2004, p. 19), (2) the planning team reviews and incorporates the student’s medical and developmental history, (3) multiple measures and sources of information are used to assess all important domains of functioning, (4) the student’s skills, strengths, and needs are indicated, (5) the report is written clearly and the content is meaningful, (6) recommendations in the report that guide the individualized education plan reflect the integration of information generated from the multidisciplinary report, (7) parents receive copies of documentation related to eligibility determination 10 or more days prior to the meeting, and (8) given consent, other professionals who collaborate with the team have access to the report.

The New Jersey Autism Program Quality Indicators report (New Jersey Department of Education, 2004) was limited in that it was specific to school-based programming for children with autism; therefore, its indicators might not generalize to the broader ABA industry. We were unable to identify an objective, measurable definition or description of quality in the report. In addition, we were unable to identify empirical evidence in the report that adherence to the indicators can produce high-quality educational programming. Nevertheless, the report provides a representative sample of what might be considered a model of high-quality ABA services for children with autism in school settings.

Blogs

In a July 31, 2016, blog post from Behavioral Science in the 21st Century, “Do you have a ‘quality’ ABA service provider?” (Ward et al., 2016), the authors suggested that an assessment or understanding of ABA service delivery quality might include considering that there are fundamental work processes operating at multiple levels (e.g., individual level and organizational level) regardless of the ABA service delivery setting (e.g., in-home, center-based, residential). They further suggested that those work processes could be understood and perhaps improved by applying a cultural-level analog of the operant contingency called a metacontingency. A metacontingency is “a contingent relation between 1) recurring interlocking behavioral contingencies having an aggregate product and 2) selecting environmental events or conditions” (Glenn et al., 2016, p. 13; emphasis in original). The metacontingency is considered by some behavior analysts to be a basic unit of analysis that enables an experimental approach to understanding cultural selection, in particular organizational culture (Glenn, 1986; although see Baia & Sampaio, 2020, for an alternative view on the unit of analysis). The idea that the concept of the metacontingency could help us understand quality at the organizational level seems to originate with Thomas Mawhinney (1992a). Mawhinney called metacontingencies that control an organization’s “survival or decline and death” “survival-related metacontingencies” (Mawhinney, 1992b, p. 22). We believe the blog post fell short of listing or describing quality indicators or of offering practical suggestions for applying the metacontingency to identify quality indicators or address ABA service quality. This may be due in part to the fact that culturo-behavioral science (e.g., Cihon & Mattaini, 2019) and related concepts such as the metacontingency are still evolving, and applied technology for intervening on cultural problems using metacontingencies is lacking (Zilio, 2019).

Amanda Kelly (i.e., BehaviorBabe; n.d.) published a set of 30 quality indicators of ABA service delivery (https://www.behaviorbabe.com/quality-indicators). However, like many other sources of information about ABA quality, BehaviorBabe did not offer a clear definition of quality or explain how to determine whether and when incorporating the quality indicators into one’s practice results in high-quality services. In addition, many of the indicators, such as “The learner LOVES learning” and “Changes made are based on measures of progress” (Kelly, n.d., “30 Indicators” list), are open to interpretation and cannot be quantified without further operationalization.

Professional Organizations

Multiple major professional organizations such as the BACB, ABAI, the Behavioral Health Center of Excellence ® (BHCOE ®), the Council of Autism Service Providers ® (CASP ®), and the Association for Professional Behavior Analysts ® (APBA ®, n.d.), have worked to promote the quality of ABA services, in particular services for individuals with developmental or intellectual disabilities, in one way or another.

The BACB was founded in 1998, and the history of the development of behavior analyst credentialing is documented (e.g., Carr & Nosik, 2017; Johnston et al., 2017; Shook, 1993). As of the writing of this article, the BACB’s global mission was stated as, “To protect consumers of behavior analysis services worldwide by systematically establishing, promoting, and disseminating professional standards of practice” and their vision was stated as, “To help solve a wide variety of socially significant problems by increasing the availability of qualified behavior analysts around the world” (BACB, n.d.a). Towards these aims, the BACB generally has tried to promote the quality of clinical practice and competence through certification (Dixon et al., 2015) and enforcement of compliance with the BACB’s Professional and Ethical Compliance Code for Behavior Analysts © and the Registered Behavior Technician Ethics Code ©. Anticipated challenges of the BACB’s attempts to promote quality across the field of ABA included what to teach practitioners (i.e., verbal repertoires, nonverbal repertoires, which technologies to promote), the extent to which practitioner repertoires should include experimental and conceptual analysis of behavior, and whether practitioner training programs should emphasize research or practice (Moore & Shook, 2001).

As of the writing of this article, ABAI’s stated mission was “To contribute to the well-being of society by developing, enhancing, and supporting the growth and vitality of the science of behavior analysis through research, education, and service” (ABAI, n.d., “Strategic Plan” section). Toward that aim, ABAI “has been the primary membership organization for those interested in the philosophy, science, application, and teaching of behavior analysis” (ABAI, n.d., “About the BACB” section). ABAI provides services to its members and the field, such as events, job postings, journals, affiliated chapters, special interest groups, and a publication featuring articles related to these services. In general, ABAI has tried to promote the quality of graduate training by accrediting graduate academic programs, that is, by evaluating the programs that train individuals seeking certification (Dixon et al., 2015).

Critchfield (2015) noted the absence of a systematic approach to monitoring and ensuring ABA practitioner efficiency and the outcomes of practitioner training programs, and identified some important limitations of practitioner credentialing and training program accreditation. A problem with both certification and accreditation is their focus on minimum educational and training standards (Shook & Neisworth, 2005). Because it relies heavily on multiple-choice exams of aspiring practitioners’ verbal behavior about ABA, the certification process cannot verify that field supervision practices and coursework requirements produce competent practitioners who can implement and evaluate interventions that produce positive, lasting behavior change (Critchfield, 2015). Accreditation raises educational standards but focuses on characteristics of the training program rather than the proficiency of its graduates (Critchfield, 2015). These approaches to promoting quality have other limitations that these authors did not address. For example, the focus on practitioner competencies and training program quality, if successful, may produce practitioners with the skills to provide high-quality ABA services. However, that approach neglects the contingencies that need to be arranged in ABA service delivery environments at the organizational level to reinforce and maintain those skills. That is, certification and accreditation processes regulate who enters the profession and/or how practitioners maintain their right to continue practicing, but contribute little to quality systems (i.e., quality assurance and quality control) in practice.

In contrast with the BACB, which certifies individual behavior analysts, and ABAI, which accredits graduate training programs, the BHCOE accredits organizations. Specifically, BHCOE is an “international accrediting body” established in 2015 to “meet accreditation needs specific to the delivery of behavior analysis” (BHCOE, 2020, “What is BHCOE Accreditation?” n.d.). As of January 31, 2020, the BHCOE website reported representing 10,000 patients and 5,000 BCBAs and having accredited 275 organizations. The website states that becoming accredited by BHCOE can help organizations in a variety of ways, such as meeting funder requirements and achieving high standards of clinical quality, transparency, and accountability.

The BHCOE’s accreditation process is based on a set of “BHCOE Standards” (i.e., “Standards and Guidelines for Effective Applied Behavior Analysis Organizations”). Organizations must first meet a set of preliminary standards, then a set of full standards. The preliminary and full standards each comprise seven sections containing requirements related to the professional and ethical behavior of organizations that provide ABA therapy.

The sections of the preliminary standards are (1) general requirements (e.g., “Organization has an employee handbook”), (2) hiring (e.g., “Organization conducts background checks”), (3) HIPAA (e.g., “Organization has a HIPAA breach policy”), (4) intake (e.g., “Organization has a client illness policy”), (5) clinical (e.g., “Organization has a data collection system”), (6) consumer protection (e.g., “Organization has legal representation”), and (7) liability (e.g., “Organization has cyber or data privacy insurance”).

The sections of the full standards are (1.0) staff qualification, training & oversight (e.g., “Organization provides staff with continuing education in line with their areas of need”), (2.0) treatment program and planning (e.g., “Organization utilizes research-based behavior-reduction procedures”), (3.0) collaboration & coordination of care (e.g., “Organization makes reasonable efforts to involves parents/guardians in training, participation, and treatment planning”), (4.0) ethics and consumer protection pertaining to waitlists, marketing and representation, and promoting ethical behavior (e.g., “If the organization holds a waitlist, they clearly communicate expectations of waitlist time to families”), (5.0) HIPAA compliance (e.g., “Organization has a data backup plan”), (6.0) patient satisfaction (e.g., “Organization operates in a manner that indicates patient or parent/guardian satisfaction at 80% or higher”), and (7.0) employee satisfaction (e.g., “The organization operates in a manner that indicates staff satisfaction at 80% or higher”). Although BHCOE provides standards and guidelines for ABA therapy focusing on service quality, as of the writing of this article, we could not locate content on their website explicitly defining and describing the requirements of “high-quality” services. We were also unable to identify direct empirical support for claims that member organizations’ adherence to the standards and guidelines results in high-quality services.
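As one illustration of how an 80% satisfaction criterion could be evaluated, the sketch computes the percentage of respondents whose rating meets a cutoff and compares it with 80%. We do not know how BHCOE actually measures satisfaction; the 1–5 rating scale, the cutoff of 4, the sample ratings, and the function names are hypothetical assumptions.

```python
SATISFIED_CUTOFF = 4   # hypothetical: ratings of 4 or 5 on a 1-5 scale count as "satisfied"
THRESHOLD_PCT = 80.0   # the 80% figure quoted in the standards


def percent_satisfied(ratings, cutoff=SATISFIED_CUTOFF):
    """Percentage of survey respondents whose rating is at or above the cutoff."""
    if not ratings:
        raise ValueError("no survey responses")
    satisfied = sum(1 for r in ratings if r >= cutoff)
    return 100.0 * satisfied / len(ratings)


def meets_threshold(ratings, threshold=THRESHOLD_PCT):
    """True when the satisfied percentage is at least the threshold."""
    return percent_satisfied(ratings) >= threshold


# Hypothetical survey results.
parent_ratings = [5, 4, 4, 3, 5, 5, 4, 2, 5, 4]   # 8 of 10 at or above 4
staff_ratings = [4, 3, 3, 5, 4, 2, 4, 5, 3, 4]    # 6 of 10 at or above 4
print(percent_satisfied(parent_ratings), meets_threshold(parent_ratings))  # 80.0 True
print(percent_satisfied(staff_ratings), meets_threshold(staff_ratings))    # 60.0 False
```

The result depends heavily on where the cutoff is set, which is one reason an explicit, published definition of the measure would matter.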

The BHCOE website indicates they developed 2021 full accreditation standards, which were undergoing public commentary at the time we submitted this article for publication (BHCOE, n.d.). It seems these standards have great potential to promote high-quality services in the industry and positively affect the lives of many consumers and professionals. This is especially relevant in relation to items that account for how organizations assess patient outcomes and use outcome data.

CASP was formed in 2009, and its mission as a non-profit organization is to support members by “cultivating, sharing, and advocating for provider best practices in autism services” (CASP, “Our Mission”, n.d.-a). When this manuscript was written, their website asserted that “there has not been a published set of standards, guidelines, or resources specifically designed to address needs of autism service organizations, at the organizational level” (a focus on ABA services is implied by other website content), and that they “serve as a force for change, providing information and education and promoting standards that enhance quality.” They also listed multiple organizational goals, such as to “ensure quality, safe and most effective care” and to “promote continuous quality improvement,” and indicated that they advocate strongly for the use of ABA service delivery to support clients served by CASP member organizations because of its strong scientific evidence base. Although the organization describes sets of guidelines aimed at helping member organizations deliver and sustain “high-quality services and manage treatment costs,” as of the writing of this paper we could not locate content on CASP’s website explicitly defining and describing what “high-quality” services are (CASP, n.d.-b). Nor could we identify direct empirical support for claims that member organizations’ adherence to CASP guidelines results in high-quality ABA services.

The APBA’s mission as of the writing of this paper was “to promote and advance the science-based practice of behavior analysis by advocating for public policies and informing, supporting, and protecting practitioners and consumers” (APBA, n.d.). The APBA offers multiple resources to members and publishes practice guidelines for both members and nonmembers, such as clarifications regarding guidelines for the treatment of autism spectrum disorder in collaboration with the BACB (https://cdn.ymaws.com/www.apbahome.net/resource/collection/1FDDBDD2-5CAF-4B2A-AB3F-DAE5E72111BF/Clarifications.ASDPracticeGuidelines.pdf). In the most recent version of the treatment guidelines for autism spectrum disorder published by the BACB (https://cdn.ymaws.com/www.apbahome.net/resource/collection/1FDDBDD2-5CAF-4B2A-AB3F-DAE5E72111BF/BACB_ASD_Gdlns.pdf), the word “quality” is used only to reference quality of life, quality of implementation (i.e., treatment integrity), and in a brief statement that the “guidelines should not be used to diminish the availability, quality, or frequency of currently available ABA treatment services” (p. 5). But nowhere in these documents, or in any of APBA’s other guidelines, could we identify where the organization defines the quality of ABA services.

University Programs

At least one graduate program, the School of Behavior Analysis at the Florida Institute of Technology (FIT, n.d.), uses what may be considered a set of quality standards for ABA service settings, from the perspective of some graduate degree programs, to identify practicum sites (https://www.fit.edu/psychology-and-liberal-arts/schools/behavior-analysis/academics-and-learning/hybrid-learning/stage-2/). ABA service delivery settings interested in becoming a designated practicum site for FIT’s Master of Arts in Professional Behavior Analysis degree program must first complete a self-assessment by applying a behaviorally anchored rating scale to their practice. The assessor rates, on a Likert-style scale, the extent to which the service provider meets criteria for each of seven dimensions: (1) case management, (2) staff training, (3) monitoring and support, (4) data and graphing, (5) ABA programming, (6) functional assessment, and (7) preference assessments. Higher scores across the items on the scale are desirable. For example, under “case management” a site can score 5 if there are “5+ BCBA’s on staff with appropriate caseloads” but 1 if there are “no BCBAs on staff.” Sites are disqualified if “clients who engage in severe problem behavior only occasionally receive formal functional analyses from a qualified BCBA or BCBA-D” or if “sites use, incorporate, or recommend services that are nonevidence based or have evidence of harm (e.g., chelation, facilitated communication, floortime, etc.)” (p. 3). Even if most practitioners agree that these are important exclusion criteria for identifying “high-quality” ABA service settings, words like “occasionally” leave the first criterion open to interpretation.
In addition, what constitutes an EBP under the second criterion is unclear, because the criteria do not specify whether EBP refers to professional decision making (e.g., Slocum et al., 2014) or to a specific intervention that meets the standards of a professional organization such as the Council for Exceptional Children (Cook et al., 2014).

Summary and Conclusions about Quality in the Industry

We admire the incredible progress made by these and many other individuals and organizations who have worked tirelessly to develop and improve the standard of care in the industry. We want to emphasize that the examples we have provided do not represent any attempt to single out or disparage any organizations or individuals. Rather, the findings from these samples, although not comprehensive, seem to indicate that to move the industry forward in terms of quality we need more consensus on how to define and empirically evaluate the quality of ABA services in the industry, in particular at the organizational level. It is unclear whether the audit processes of external entities would ever be of adequate depth, breadth, and precision to accurately determine an ABA organization’s service quality. Organizations that rely too heavily on external quality audits may be at risk of neglecting the contingencies that control service quality in their organization. It is readily apparent that without an objective definition of quality at the organizational level, most ABA service delivery organizations cannot objectively evaluate the quality of their own services. Nor can consumers objectively compare the quality of services between ABA service delivery organizations without a clear definition. Every ABA service delivery organization asserts that it provides high-quality ABA services. Thus, our industry seems to be faced with three major problems that we may be able to solve with an operational definition of quality at the organizational level and a cohesive framework within which we can empirically understand it. First, ASDQ is undefined. Second, licensure, certification, and external quality auditing entities do not and cannot directly control ASDQ because they do not have access to the contingencies that control service delivery quality. Third, without objective indicators of ASDQ, it is difficult for ABA organizations to distinguish the quality of their services from competitors.

Defining Quality

The word quality, from the Latin qualitas, meaning “character/nature, essential/distinguishing quality/characteristic” (http://www.latin-dictionary.net/definition/32524/qualitas-qualitatis), first appeared in English in the 1300s (https://www.merriam-webster.com/dictionary/who#etymology) and is thought to be “Cicero’s translation of the Greek word for quality, poiotes, coined by Plato” (https://www.grammarphobia.com/blog/2012/07/quality.html). Because the word has been part of everyday language for hundreds of years, its definitions and uses vary across disciplines and industries, which can make it difficult to define objectively as it pertains to ABA service delivery. One everyday use of the term quality is “the personally defined expectation for a product or service,” and a second use is “some sense of durability or reliability of the good or service” (Bobbitt & Beardsley, 2016, p. 304). The second use acknowledges that “quality is a dynamic process” (Mawhinney, 1992a, p. 528). In an effort to provide a clear definition of quality that most would likely agree on, and that could lead to operational definitions useful for improving quality, Bobbitt and Beardsley (2016) proposed defining quality as “an agreed-upon standard of performance or outcome” (p. 305). We combined these uses of the term “quality” with cultural selection in mind and arrived at a definition that we suggest may be a good starting point for a new scientific approach to ASDQ.

ASDQ Defined

We define ASDQ as the extent to which an organization’s ABA products, services, and outcomes meet standards determined by professionals and consumers, over time, in response to changes in a receiving system, while maximizing the financial health of the organization.

As discussed in more detail below in the section on “next steps,” organizations can develop professional and consumer standards internally, or adopt external standards when they are available. Broad examples of professional standards include the extent to which the service uses evidence-based practices (i.e., specific interventions such as functional communication training; e.g., Gerow et al., 2018) and best practices (i.e., specific assessments such as functional analysis of challenging behavior; Iwata et al., 1982/1994); whether services conform to an evidence-based practice of ABA framework (Slocum et al., 2014) and represent all seven dimensions of ABA; whether practitioners consistently practice within their scope of practice and competence and adhere to the BACB’s Professional and Ethical Compliance Code for Behavior Analysts ©; and other aspects of service delivery and daily operations captured by standards developed by external entities such as the BHCOE or CASP. Examples of concrete outcome standards may be harder to come by, so organizations may need to rely more heavily on an internal approach to standards development. Organizations may use survey methodology to develop consumer standards related to services and outcomes (e.g., Coyne & Associates Education Corporation, n.d.), such as caregiver views on the benefits of the service, how home-based services are managed, and staff professional conduct. Relevant indicators of financial health for ABA organizations beyond revenue and profit could be explored in future research. For example, organizations may consider indicators like cash on hand to manage reimbursement interruptions, investment in support departments such as training and human resources, or strategic planning costs. However, considerably more research is needed in these areas.

An ABA service provider cannot demonstrate high standards attainment by meeting professional standards alone, nor by meeting consumer standards alone. High standards attainment is the combination of high professional standards attainment and high consumer standards attainment. It is demonstrated to the extent that an ABA service provider maintains both over time, especially in response to market changes (i.e., threats or challenges) such as the recent COVID-19 pandemic, changes in the legal requirements for insurance companies to cover ABA services, changes in billing codes, or shifts in the popularity of competing services (e.g., speech and language services).
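The requirement that professional and consumer standards attainment both be high, and remain high across periods, can be made concrete with a small calculation. The sketch below is only an illustration under assumptions of our own: each standard is scored as met or not met in a review period, attainment is the proportion of standards met, and the 0.8 threshold, the class, and the function names are hypothetical placeholders. It covers standards attainment only and leaves out the financial health component of the ASDQ definition.

```python
from dataclasses import dataclass


@dataclass
class PeriodReview:
    """Hypothetical record of one review period (e.g., one quarter)."""
    professional_met: list[bool]  # one entry per professional standard: met or not
    consumer_met: list[bool]      # one entry per consumer standard: met or not


def attainment(met: list[bool]) -> float:
    """Proportion of standards met in a period (0.0 if no standards were defined)."""
    return sum(met) / len(met) if met else 0.0


def high_standards_attainment(review: PeriodReview, threshold: float = 0.8) -> bool:
    """High only when BOTH professional and consumer attainment reach the threshold."""
    return (attainment(review.professional_met) >= threshold
            and attainment(review.consumer_met) >= threshold)


def maintained_over_time(reviews: list[PeriodReview], threshold: float = 0.8) -> bool:
    """High standards attainment must hold in every period reviewed, not in just one."""
    return bool(reviews) and all(high_standards_attainment(r, threshold) for r in reviews)


# Hypothetical quarters: Q2 meets every professional standard but only 60% of consumer ones.
q1 = PeriodReview(professional_met=[True] * 9 + [False], consumer_met=[True] * 8 + [False] * 2)
q2 = PeriodReview(professional_met=[True] * 10, consumer_met=[True] * 6 + [False] * 4)
print(high_standards_attainment(q1), high_standards_attainment(q2))  # True False
print(maintained_over_time([q1, q2]))  # False
```

Taking the conjunction of the two checks, rather than an average, reflects the point that a provider cannot offset weak consumer standards attainment with strong professional standards attainment, or vice versa.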

This definition of ASDQ offers several benefits. We suggest that, within an ASDQ framework, organizations could adopt operationally defined professional and local consumer performance and outcome standards that are sensitive to the local conditions (i.e., cultural factors) in which a given organization operates. Attainment of those standards might be assessed with objective ASDQ metrics (i.e., measures of ASDQ), and organizations could then systematically evaluate interventions on the organizational cultural practices that affect those metrics, for example, initiatives that directly increase ASDQ by strengthening relevant organizational cultural practices and weakening or eliminating those that decrease ASDQ. Doing so would bring ASDQ squarely within a science of behavior. This may ultimately free the industry from the lore, common sense, and so-called clinical wisdom that guide current conceptualizations of quality, which are only indirectly informed by the science and focus on the behavior of individual practitioners. This definition also accounts for cultural factors and changes in behavioral technology over time. It makes ASDQ amenable to empirical study within organizations in a manner that is broadly applicable across contexts where ABA services are provided (e.g., from autism-focused services in Saudi Arabia to workplace-focused services in California’s Silicon Valley). Furthermore, it allows for changes in technology due to new knowledge emerging from research, changes in best practices, textbook revisions, and shifts in professional and consumer standards promoted by professional organizations with their own interests and contingencies. We also suggest that, by taking into consideration both professional and consumer standards, this definition of ASDQ could help prevent certain potential pitfalls of quality management thought to arise from relying only on external industry standards (i.e., those developed and marketed by external quality auditing entities).
For example, (1) shifting responsibility for quality problems exclusively to external sources, (2) establishing a “compliance mentality” in the organization, (3) allowing professional standards, rather than consumers of the service, to exert a relatively heavy influence on organizational practices, (4) creating an aversive or punitive organizational culture in which individuals are singled out and punished for their deficiency reports, and (5) deficiency reports becoming ends in themselves rather than being used to improve ASDQ (Sluyter, 1998).

We have learned to support the quality of life of individuals with intellectual and developmental disabilities with function-based interventions that weaken challenging topographies of behavior by preventing those topographies from continuing to produce their maintaining consequences, and instead arranging those same consequences to select socially appropriate alternative behavior (e.g., functional communication training; Carr & Durand, 1985). Likewise, the consequences that control misuses of behavioral technology associated with low ASDQ may be rearranged to program for alternative cultural practices within organizations that are associated with high ASDQ. Whatever we ultimately decide “quality” is, if quality is an aspect of ABA services or products generated by an organization, culturo-behavioral science may be able to help us identify and arrange the controlling contingencies at the organizational level that select the organization’s cultural practices.

Culturo-Behavioral Science

Culturo-behavioral science or cultural systems analysis (e.g., Mattaini, 2019), also referred to as cultural analysis (e.g., Glenn et al., 2016; Malagodi, 1986), is a growing branch of behavior analysis. Culturo-behavioral science is focused on achieving a better understanding of “the selection and maintenance of cultural practices, the processes involved in dynamic systems, the interactions between and amongst complex systems (e.g., political, economic, educational, social, legal, religious), and the evolution of culture” (Cihon & Mattaini, 2019, p. 700). In culturo-behavioral science experiments, cultural practices and their products are often dependent variables, and selecting environmental conditions are independent variables (e.g., Vichi et al., 2009). Similar to ABA at the level of the individual, an applied cultural analysis may apply fundamental principles of culture in empirical tests of interventions for cultural problems, such as low ASDQ, individualized to the group or organization and its context.

As noted earlier in this article, one unit of analysis in culturo-behavioral science is an analogue of the operant contingency, called a metacontingency (Glenn, 1986). Just as a two-term operant contingency (R-S) describes a functional relation between behavior and its consequences at the level of the individual (e.g., a rat’s lever pressing produces food pellets), a two-term metacontingency describes a functional relation between cultural practices and their controlling cultural consequences within a receiving system (Glenn et al., 2016) at the level of the group (or at the level of the organization). The metacontingency concept enables researchers to experimentally evaluate the effects of cultural selection on cultural practices in the laboratory (e.g., Vichi et al., 2009) and, in theory, in applied settings. Therefore, the metacontingency concept may also aid in an empirical approach to understanding ASDQ.

Metacontingencies

In the two-term metacontingency (Figure 1; [IBC → AP] → CC), cultural practices are “interlocking behavioral contingencies” (IBC). They can result in outcomes, referred to as “aggregate products” (AP; e.g., computer chips produced, construction projects completed, a clinical team’s completed treatment plan), that cannot be produced by any individual group member. Thus, a dependency of the AP on the IBC (IBC → AP), referred to as a “culturant” (Hunter, 2012), is the first term in the metacontingency. IBCs consist of the functional interdependence between the operant behavior of individual group members. That is, each member of the group engages in behaviors that serve as antecedents and consequences for the operant behaviors of other group members. The IBCs generate an AP that cannot otherwise be produced by any individual member of the group. The second term in the metacontingency is an aspect of the selecting environment referred to as a “cultural consequence” (CC). The selective effects of CC control the future rate, and probably other aspects, of the AP. Thus, just as the operant contingency describes the functional relation between the future probability of behavior and reinforcement at the level of the organism, the metacontingency describes the functional relation between the future probability of a culturant and its controlling CC at the level of the group (Glenn, 1988). Operants exhibited by a group member have their own operant lineage (i.e., learning history), and the social transmission of recurring operant behavior (i.e., embedded in IBCs) across group members over time, as determined by CC in a metacontingency, is called a culturo-behavioral lineage (Glenn et al., 2016).

A benefit of the metacontingency concept is that it expands the tools we can use to understand and promote an organization’s product and service quality over time and in response to changes in the receiving system (i.e., markets and their CC), for example, through an experimental analysis of the downstream effects of CC on the operant variables that control coordination between members of an organization (i.e., IBC) and on their products and services (AP).

Consider a hypothetical ABA organization that provides clinic-based services to children with autism spectrum disorder. The organization routinely receives referrals for services and uses a standard process involving multiple staff within the organization to convert referrals to patients. When a referral from a caregiver is received (i.e., a parent calls the organization to inquire about services), an intake coordinator fields the call, describes the services the organization offers, and invites the caregiver for a clinic visit. The purpose of the clinic visit is to convert the referral to a new patient (i.e., a “conversion”). The organization is rewarded for each individual conversion ultimately through reimbursement for services by the patient’s insurance. With this intake process, no individual employee at the organization can convert a referral to a patient alone. That requires a team of staff (e.g., a receptionist to route the call, an intake coordinator to secure the clinic visit, and a clinical director to coordinate a clinic visit with therapists, supervisors, and other patients to successfully produce the conversion). In this scenario, the IBCs are the coordinated interlocking behaviors of the staff involved in the referral-to-conversion process. The potential outcomes of the process, such as an immediate conversion (i.e., AP1, the target AP), delayed conversion (AP2), or no conversion (AP3), are variations in AP from which CC can select. The true CC selective for successful immediate conversions is difficult to identify precisely without experimentation; for simplicity, we may consider the functionally relevant CC in this case to be reimbursement from the insurance company for services rendered. This may actually be the terminal CC at the end of a chain of multiple metacontingencies.
For example, if we consider the IBCs that staff engage in after the conversion is confirmed in order to produce an authorization for assessment, the AP may be considered the request for assessment, and the CC may be the authorization for assessment, and so on along the chain, depending on where the analysis draws its boundaries. Wherever the functionally relevant metacontingency lies, the organization has several options if the rate of APs, and of CCs in the form of reimbursed services, is low because the team is insufficiently motivated (although other OBM tools may classify the problem differently or identify other relevant variables, such as skill deficits). For example, the executive team can intervene by arranging an operant reinforcement contingency with the clinical director in the form of a bonus for behavior that results in an immediate conversion. Alternatively, they could apply a similar contingency to the intake coordinator, a group contingency applied to all individual team members, or a metacontingency in which a consequence for all team members is contingent not on behavior (i.e., the specific operant behaviors of each individual team member contributing to the IBCs and AP) but on the AP itself (with IBC free to vary within the bounds of the organization’s professional and consumer standards). In other words, if the team exceeds a target rate of APs, everyone on the team receives a bonus. In theory, experimentation in that setting guided by the metacontingency concept could identify variables that ensure that variations in IBC used to adapt to and maximize CC not only occur in a timely and appropriate manner but also occur without reductions in standards attainment.
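The difference between an individual operant contingency and a metacontingency in this intake example can be stated precisely by writing down what each bonus rule is contingent on. The sketch below is a hypothetical illustration, not a recommended compensation scheme: the role names, the 0.6 target rate, and the bonus amounts are placeholders of our own, and the code only computes who would be paid under each rule; it does not model how either arrangement would change behavior.

```python
from dataclasses import dataclass


@dataclass
class Referral:
    """Hypothetical outcome of one referral: AP1 immediate, AP2 delayed, AP3 none."""
    outcome: str                    # "immediate", "delayed", or "none"
    director_scheduled_visit: bool  # a specific operant of one team member


TEAM = ["receptionist", "intake_coordinator", "clinical_director"]


def operant_bonus(referrals: list[Referral], per_visit: float = 50.0) -> dict[str, float]:
    """Operant contingency: only the clinical director is paid, per scheduled visit
    that ended in an immediate conversion (consequence tied to one person's behavior)."""
    count = sum(1 for r in referrals
                if r.director_scheduled_visit and r.outcome == "immediate")
    return {"clinical_director": count * per_visit}


def metacontingency_bonus(referrals: list[Referral], target_rate: float = 0.6,
                          bonus: float = 200.0) -> dict[str, float]:
    """Metacontingency: every team member is paid if the team's immediate-conversion
    rate (the aggregate product) exceeds the target; how the IBCs produce it may vary."""
    if not referrals:
        return {member: 0.0 for member in TEAM}
    rate = sum(r.outcome == "immediate" for r in referrals) / len(referrals)
    paid = bonus if rate > target_rate else 0.0
    return {member: paid for member in TEAM}


# Hypothetical month: three of four referrals converted immediately (rate 0.75).
month = [Referral("immediate", True), Referral("immediate", False),
         Referral("delayed", True), Referral("immediate", True)]
print(operant_bonus(month))          # {'clinical_director': 100.0}
print(metacontingency_bonus(month))  # every team member receives 200.0
```

Note that the metacontingency rule never inspects `director_scheduled_visit` or any other individual behavior; only the aggregate outcome enters the calculation, which is the defining feature described above.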

Some Effects of ASDQ Metacontingencies on Organizational Culture

Graphed data of learner performance hold therapists, consultants, and teachers accountable to the individual learner and relevant stakeholders. Likewise, standardized ASDQ metrics could hold ABA service organizations accountable for the quality of their services and strengthen organizational culture (i.e., its beneficial cultural practices), in particular by bringing ASDQ under the control of metacontingencies that are selective for high ASDQ. Policy tied to ASDQ metrics could be implemented to strengthen the contingency between ASDQ indicators and controlling CC such as new contracts, insurance reauthorizations, higher quality applicants, accreditations, referrals, and revenue, thereby establishing and maintaining beneficial lineages of organizational cultural practices. A hypothetical example is illustrated in Fig. 2, in which two different organizations operate in a receiving system shaped by policies that promote strong contingencies between ASDQ metrics and CC important to ABA service delivery organizations. The size of the circles encompassing each organization’s CC attainment represents the extent to which it is maximizing CC; larger circles represent greater CC attainment. The top of Fig. 2 illustrates an organization that exhibits increasingly high ASDQ (as indicated by ASDQ metrics) through culturo-behavioral lineages that result in improvements in professional and consumer standards attainment and maintenance of those improvements over time. As a result, it is able to maximize CC in the receiving system in the form of a steady flow of revenue, reauthorizations, contracts, increased employee retention, and so on. In contrast, the bottom of Fig. 2 illustrates an organization that exhibits low, inconsistent, or decreasing ASDQ metrics through problematic culturo-behavioral lineages that fail to maximize CC in the receiving system.

Lineages of Organizational Cultural Practices Demonstrating Selection of a Clinical ABA Organization’s ASDQ Metric Performance by a Receiving System over Time. Note. The top figure depicts the gradual maximization of CC (e.g., revenue, referrals, school contracts, and insurance contract authorizations) by an organization over time as a result of consistently demonstrating high ASDQ metrics. The bottom figure depicts gradual reductions in CC obtained by an organization as a result of demonstrating low or inconsistent ASDQ metrics over time. The size of the circles encompassing each organization’s CC attainment represents the extent to which it is maximizing CC. Larger circles indicate more CC attainment. AP = aggregate product; ASDQ = applied behavior analysis service delivery quality; CC = cultural consequence; IBC = interlocking behavioral contingencies

Without ASDQ metrics on which the market can select, the CC that sustain and grow ABA service delivery organizations may be more likely to select IBCs that maximize profits without maximizing ASDQ. If market forces are not selecting service providers’ products and services based on quality indicators, then organizational practices are perhaps more likely to deviate from what might be considered high quality. Those practices may nevertheless maximize reinforcers through other competitive business practices, and the cultural practices of the organization may be less likely to trend toward those that are in the best interest of consumers. As we explain below, within an ASDQ framework we suggest it is advantageous for organizations to avoid reliance on external entities and to structure their business in a way that establishes and strengthens contingencies selective for high ASDQ.

ASDQ and the Evidence-Based Practice of ABA

The concept of EBP in ABA at the practitioner level is well-developed. By drawing some analogies, we believe the current definition of ASDQ may help to extend the concept of EBP in ABA to the level of the organization. This analogy is important, in that the EBP of ABA at the organizational level can be thought of as the means by which an organization systematically evaluates whether cultural practices and change initiative methods actually result in higher ASDQ, rather than the business-as-usual leap of faith that quality is improving.

First, we review EBP at the practitioner level. The EBP of ABA is practitioner decision making based on the best available evidence, client values/context, and clinical expertise (Slocum et al., 2014). The top two images in Fig. 3 illustrate relationships between evidential certainty, relevance, empirical support, progress monitoring, and best available evidence in the EBP of ABA at this level. Following the axes of the images in Fig. 3 will help readers identify the relations described next.

Relations between the EBP of ABA and ASDQ. Note. This figure compares EBP in ABA at the individual level to EBP in ABA at the organizational level. The figure shows that an ABA service provider organization demonstrates EBP in ABA at the organizational level to the extent that the organization makes decisions based on consumer values/context (not shown), operational expertise (not shown), and the best available evidence. ASDQ is a combination of industry and consumer standards maintained over time as a result of engaging in EBP of ABA at the organizational level. ABA = applied behavior analysis; ASDQ = applied behavior analysis service delivery quality; BAE = Best available evidence; EBP = evidence-based practice

The best available evidence is a combination of empirical support for a practice (e.g., nonremoval of the spoon in the treatment of food refusal in a child with a feeding disorder) and progress monitoring (e.g., the number, duration, and frequency of sessions, the frequency of data collection, pace of trials, and high control over extraneous variables; see Fig. 3, top right). A practitioner is using the best available evidence to make clinical decisions to the extent that they use high progress monitoring (e.g., frequent weekly sessions, a high number and rate of trials, trial-by-trial data collection, graphing at the end of each session, making treatment decisions every three to five data points, and evaluations of experimental control at each step of the intervention using single-subject methodology) with behavioral interventions that have high empirical support.

Empirical support is a combination of evidential certainty and relevance (see Fig. 3, top left), so high empirical support means high evidential certainty and high relevance. When a practitioner is faced with a problem to solve for a client, they characterize the problem (e.g., inappropriate mealtime behavior maintained by escape) and the client (e.g., 5-year-old boy with food selectivity, autism diagnosis, no oral-motor deficits, no nutritional or growth deficiencies), and search the scientific literature for relevant behavioral interventions (i.e., conducted with participants in settings similar to the practitioner’s client and clinical setting). Of those relevant interventions and associated studies, the practitioner examines the certainty of the evidence. In general, to the extent that studies demonstrate large meaningful changes in behavior attributable to the intervention, and those studies are replicated multiple times by multiple researchers, they are said to provide high evidential certainty, especially if they meet the standards for EBP set by a major professional organization such as the Council for Exceptional Children (CEC; Cook et al., 2014). To the extent that studies provide high evidential certainty, and are highly relevant, the interventions in those studies are considered to have high empirical support.

When empirical support is high, high progress monitoring is less essential. When empirical support is low (e.g., few studies showing experimental control, few replications, studies published by one research group, participant characteristics deviate significantly from the client’s characteristics), high progress monitoring becomes more important.
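The relations among evidential certainty, relevance, empirical support, and progress monitoring described above can be summarized as a small decision rule. The sketch below is our own simplification, not a published algorithm: it assumes each dimension can be rated on a three-level ordinal scale, takes empirical support as the lower of certainty and relevance (so support is high only when both are high), and recommends more intensive progress monitoring as empirical support falls. The level names and the exact mapping are illustrative assumptions.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-level ordinal rating."""
    LOW = 1
    MODERATE = 2
    HIGH = 3


def empirical_support(certainty: Level, relevance: Level) -> Level:
    """Support is limited by the weaker of the two: it is high only if both are high."""
    return min(certainty, relevance)


def recommended_monitoring(support: Level) -> Level:
    """Inverse relation: the lower the empirical support, the more intensive the
    progress monitoring (e.g., trial-by-trial data, decisions every few data points)."""
    return Level(Level.HIGH + Level.LOW - support)


# A well-replicated intervention studied with participants unlike the client:
support = empirical_support(certainty=Level.HIGH, relevance=Level.LOW)
print(support.name, recommended_monitoring(support).name)  # LOW HIGH
```

Using the minimum rather than an average encodes the claim that high evidential certainty cannot compensate for low relevance to the client, and vice versa.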

To summarize, in an ideal situation, a practitioner identifies highly relevant studies of interventions with high evidential certainty. They implement the intervention with high progress monitoring, but in doing so they use clinical expertise to adapt the intervention to match the client’s values and context, which includes social validity. That is, they select socially significant target behaviors, use appropriate procedures, and achieve socially important outcomes, as indicated by stakeholders (Wolf, 1978).

As an analogy, we define EBP of ABA at the organizational level in an ASDQ framework as organizational decision making based on the best available evidence, consumer values/context (i.e., consumer perspectives on services and outcomes), and operational expertise (i.e., implementation and evaluation of performance- and systems-level change initiatives; e.g., McGee & Crowley-Koch, 2019). Fig. 3d illustrates that the best available evidence is a combination of (a) empirical support for the tools, strategies, processes, and concepts used for performer-level (e.g., performance management [PM]; Austin, 2000; Daniels & Bailey, 2014) or systems-level (e.g., behavioral systems analysis [BSA]; Sigurdsson & McGee, 2015) change initiatives (i.e., change initiative methods) and (b) progress monitoring of ASDQ indicators (i.e., systematic data collection on standards attainment and organizational financial health related to key ASDQ indicators). Empirical support, in turn, is a combination of the certainty of the evidence for change initiative methods and their relevance to characteristics of the organization. These methods include tools (e.g., stimulus preference assessment methodology, see Wine et al., 2014; job models for supervision, see Garza et al., 2017; process maps, see Malott, 2003), strategies or processes (e.g., Malott’s [1974] ASDIER process), and concepts (e.g., metacontingency; Glenn et al., 2016), derived from our own science (e.g., OBM) or from other disciplines (e.g., the Six Sigma and Lean Six Sigma DMAIC process; Borror, 2009), that are applied to influence cultural practices within an organization. We might say that an organization is applying change initiatives with high empirical support if those initiatives are supported by the scientific literature (i.e., evidential certainty) and are applied in ways that are relevant to the characteristics of the organization.
In this application, relevance could be established by demonstrating that the initiatives are consistent with the organization’s values, its strategic plan, and the collective expertise of its staff. Once the organization has established objective professional and consumer standards (e.g., consumer ratings of outcomes or customer service) associated with its ASDQ indicators or metrics, it can use progress monitoring (i.e., systematic data collection) to evaluate the extent to which change initiatives targeting its cultural practices result in standards attainment. In short, an organization uses the best available evidence at the organizational level to the extent that it relies on both empirical support and progress monitoring of the effects of performer- or systems-level change initiatives on standards attainment.

As illustrated in Figure 3e, we suggest that organizations can be conceptualized as demonstrating high ASDQ to the extent that they use the best available evidence, consumer values/context, and operational expertise to consistently achieve high standards attainment pertaining to ABA service delivery and strong financial health over time, in response to internal or external systemic changes that affect the organization’s decision making. Figure 4 illustrates, in a deliberately simplified manner, how ASDQ might be visualized over time (e.g., at quarterly meetings) in three different scenarios (top, middle, and bottom panels). In the top panel, standards attainment and financial health are initially high. Financial health varies across the next three quarters, whereas standards attainment drops from high to moderate and then remains steady for most of the year. This pattern suggests that in Q3, contingencies promoted financial health without positively affecting standards attainment. In the middle panel, standards attainment and financial health increase systematically across quarters, suggesting that the organization is operating under contingencies that tie standards attainment to financial health. In the bottom panel, financial health is high across all quarters despite a drop in standards attainment in the middle quarters, suggesting that the organization should focus its efforts on establishing new contingencies that tie standards attainment to financial health so that it is prepared to prevent similar drops in the future.

In summary, in this framework, the EBP of ABA at the organizational level describes a decision-making process that organizations use to systematically evaluate, on objective indicators of ASDQ, the effects of variables that until now have only been hypothesized to benefit quality (e.g., OBM tools; recommendations from professional organizations, blogs, state departments of education, and prominent figures in the field). Within the EBP of ABA at the organizational level, graphed ASDQ data over time might even function as antecedent stimuli that control executive decision making, enhancing the timeliness and effectiveness of decisions about ASDQ by bringing executives into more contact with the relevant contingencies. Practitioners can already draw from a large literature base on the EBP of ABA at the practitioner level; research on ASDQ may occasion the emergence of a comparable literature base on the EBP of ABA at the organizational level.

A Call to Action

ABA organizations do not need to wait for the industry to develop professional and consumer standards or an ASDQ evidence base. Here are six practical steps, grounded in the EBP of ABA at the organizational level, that ABA organizations can take now to start working toward high ASDQ. Organizations need not complete these steps in the order presented.

Step 1

Leaders of the organization can engage in strategic planning focused on identifying key performance indicators (KPIs; i.e., indicators of financial health) and the strategies likely to result in attainment of KPI targets or goals. Wikipedia (2020) defines strategic planning as “an organization’s process of defining its strategy, or direction, and making decisions on allocating its resources to pursue this strategy.” Strategic planning is typically performed by executives in the organization. During strategic planning, planning team members set strategic goals, determine the actions needed to achieve those goals, and mobilize the resources needed to perform those actions. Common considerations during strategic planning include reflecting on the organization’s accomplishments and obstacles; determining the organization’s values; identifying its strengths, weaknesses, opportunities, and threats (i.e., SWOT; see Wikipedia, 2021); determining which activities the organization should stop, start, or keep doing; determining the core purpose of the organization; setting a 10–25 year stretch goal; developing a brand promise; developing an action plan with initiatives, goals, timelines, and project leads; and developing a system for evaluating the success of the strategic plan over time. Firms that specialize in strategic planning, such as Rhythm Systems (https://www.rhythmsystems.com/), can assist organizations as needed.

Step 2

Next, organizational leaders could establish what we call “quality-dependent KPIs” or “QD-KPIs.” QD-KPIs are key performance indicators tied to professional and consumer performance and outcome standards (i.e., the defining dimensions of ASDQ). For example, “In 3–5 years the organization will generate $500,000.00 in annual revenue under conditions in which professional and consumer performance standards and outcomes are met for three consecutive quarters.” The first part, “In 3–5 years the organization will generate $500,000.00 in annual revenue,” is a typical KPI. By adding the second part, “under conditions in which professional and consumer performance standards and outcomes are met for three consecutive quarters,” we now have a QD-KPI: a KPI tied to ASDQ indicators. By setting QD-KPIs, strategic planners arrange a contingency in the organization with the potential to drive growth- and quality-oriented initiatives simultaneously, setting the occasion for the organization to maximize ASDQ by maintaining high levels of both financial health and standards attainment over time. With this approach, the organization takes a step toward holding itself accountable for high ASDQ and eliminates its need for external entities to serve that regulatory function. The organization can then develop standards and associated metrics, and create benchmarks that work for it, to assess progress toward its QD-KPIs. For example, organizations can use Patrick Thean’s (2008) red/yellow/green success criteria to quickly and routinely assess whether they are meeting their internal professional and consumer performance and outcome standards (e.g., green is met, yellow is nearly met, red is not met).
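The QD-KPI example above gates a typical revenue KPI on a standards-attainment condition, and the red/yellow/green criteria add a quick status rating for each standard. As a rough illustration only, that logic can be sketched in Python. Everything in the sketch is a hypothetical assumption rather than part of the framework or of Thean’s (2008) method: the function names, the reading of “nearly met” as within 10% below target, the rule that a quarter counts as “standards met” only when every standard is green, the example standards, and the interpretation of “three consecutive quarters” as the three most recent quarters.

```python
from enum import Enum


class Status(Enum):
    GREEN = "met"
    YELLOW = "nearly met"
    RED = "not met"


def rate(actual: float, target: float, yellow_band: float = 0.10) -> Status:
    """Rate a higher-is-better metric against its target.

    Hypothetical rule: "nearly met" means within `yellow_band`
    (10% by default) below the target.
    """
    if actual >= target:
        return Status.GREEN
    if actual >= target * (1 - yellow_band):
        return Status.YELLOW
    return Status.RED


def quarter_met(standards: dict[str, tuple[float, float]]) -> bool:
    """Hypothetical rule: a quarter counts as 'standards met' only if every
    standard, given as name -> (actual, target), is rated green."""
    return all(rate(actual, target) is Status.GREEN
               for actual, target in standards.values())


def qd_kpi_attained(annual_revenue: float, quarters_met: list[bool],
                    revenue_target: float = 500_000.00,
                    required_streak: int = 3) -> bool:
    """A typical revenue KPI gated on standards attainment.

    Assumption: the streak must cover the most recent `required_streak` quarters.
    """
    if annual_revenue < revenue_target:
        return False  # the typical KPI part is not met
    recent = quarters_met[-required_streak:]
    return len(recent) == required_streak and all(recent)


# Hypothetical quarterly standards: name -> (actual, target)
q4 = {"caregiver satisfaction (%)": (92, 90),
      "treatment plans reviewed on time (%)": (85, 95)}
print({name: rate(a, t).name for name, (a, t) in q4.items()})
print(quarter_met(q4))
print(qd_kpi_attained(510_000.00, [False, True, True, True]))
```

Keeping the revenue check separate from the standards streak makes explicit that revenue alone never satisfies a QD-KPI; an organization would substitute its own standards, targets, and definition of “nearly met.”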

Step 3

Organizational leaders can then establish a dashboard, of the kind commonly used by executives, to actively monitor progress toward QD-KPI attainment (e.g., progress on the initiatives, goals, and actions intended to result in QD-KPI attainment). A wide variety of dashboard designs exists, and numerous firms, such as Envisio (https://envisio.com/solutions/strategic-plan-dashboard/), provide dashboard software and consultation.

Step 4

Organizations also need to adopt or develop the professional and consumer performance and outcome standards on which they base their QD-KPIs. Standards can be internal or external (e.g., BHCOE standards). If professional standards are developed internally, we recommend that organizations base them on current research, best practices, and recommendations of professional organizations, and that they leverage tools from OBM within an EBP of ABA framework (Slocum et al., 2014). Organizations can use survey methods to develop consumer performance and outcome standards. For example, organizations could develop in-house parent satisfaction surveys that measure constructs the organization considers important, such as caregivers’ perceptions of the benefits of the services, staff skill level, and treatment plan oversight, and publish the results routinely on their website, similar to the reports on the Coyne and Associates Quality webpage (Coyne & Associates Education Corporation, https://coyneandassociates.com/quality/).

Step 5

Organizational leaders can also design and implement a TQM system for promoting standards attainment on an ongoing basis while monitoring QD-KPIs. The ASQ website describes TQM as “a management approach to long-term success through customer satisfaction.” They explain, “TQM can be summarized as a management system for a customer-focused organization that involves all employees in continual improvement. It uses strategy, data, and effective communications to integrate the quality discipline into the culture and activities of the organization” (ASQ, 2021a, “Primary Elements of TQM”). They identify eight principles of TQM and “Deming’s 14 Points for Total Quality Management” (ASQ, 2021b), summarized in Table 1. For example, the “fact-based decision-making” principle states that, “in order to know how well an organization is performing, data on performance measures are necessary. TQM requires that an organization continually collect and analyze data in order to improve decision making accuracy, achieve consensus, and allow prediction based on past history” (ASQ, 2021a, “Primary Elements of TQM”). More information about these elements and points can be found on ASQ’s (2021a) TQM page. Organizations can view the principles as propositions or values that guide organizational behavior. To apply those principles in pursuit of high ASDQ, organizations can engage in Deming’s specific management practices with the goal of maximizing standards attainment (ASQ, 2021b).

Step 6

The final step on this list is to set a routine schedule for evaluating ASDQ (i.e., evaluating the current state of QD-KPIs) and publicizing ASDQ metrics for stakeholders. For example, the executive team in the organization could meet quarterly to review red/yellow/green indicators of progress toward QD-KPIs and use plan-do-check-act cycles (e.g., https://asq.org/quality-resources/pdca-cycle), other TQM tools such as Six Sigma (https://asq.org/quality-resources/six-sigma), and BSA (e.g., Malott & Garcia, 1987) in the organization’s systematic approach to accelerating progress toward the QD-KPI targets set during strategic planning. A plan-do-check-act cycle is a tool for producing process change. In the Plan stage, you recognize an opportunity to improve a product, service, or process, ideally one that directly affects QD-KPIs. In the Do stage, you carry out a small-scale test intended to produce the desired change. In the Check stage, you review the test, analyze the results, and determine what you have learned. In the Act stage, you use the data to make the appropriate change to the product, service, or process, or you repeat the cycle with a different plan; the cycle repeats until the desired change is obtained.

Recall that within a culturo-behavioral framework, we can apply the metacontingency concept to understand how organizational culture and its practices are shaped over time by CCs in a selecting environment. Within this framework, we can conceptualize values of QD-KPIs (e.g., values representing revenue earned under conditions of a given level of standards attainment) as APs, and the collaboration among employees who work together to complete the activities that generate those APs as IBCs. Executives can arrange metacontingencies (i.e., the cultural level of selection) or operant contingencies within the organization to select IBCs that increase QD-KPI values.
In theory, an organization that publicizes its quarterly ASDQ metrics and leverages them to maximize sources of revenue (i.e., CCs) could thereby arrange a metacontingency that selects for those cultural practices in the organization that achieve high ASDQ over time.
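The plan-do-check-act cycle described in Step 6 is essentially a loop that ends once a tested change produces the desired result. The Python sketch below illustrates only that control flow, under loudly stated assumptions: the function name `pdca`, the candidate changes, the observed outcome values (read here as the proportion of standards met in a small-scale test), and the 0.90 goal are all hypothetical and are not drawn from ASQ or from any organization discussed here.

```python
from typing import Callable, Iterable, Optional


def pdca(
    candidates: Iterable[str],
    run_test: Callable[[str], float],
    goal_met: Callable[[float], bool],
) -> tuple[Optional[str], list[tuple[str, float, bool]]]:
    """Cycle through plan-do-check-act until a change meets the goal.

    Returns the adopted change (or None if no candidate met the goal)
    and a log of (change, result, goal_met) for each cycle.
    """
    log: list[tuple[str, float, bool]] = []
    for change in candidates:          # Plan: choose the next candidate change
        result = run_test(change)      # Do: run a small-scale test of the change
        success = goal_met(result)     # Check: compare the result with the goal
        log.append((change, result, success))
        if success:
            return change, log         # Act: adopt the change
    return None, log                   # Act: no candidate worked, so replan


# Hypothetical candidate changes and small-scale test results
candidates = ["weekly caregiver check-ins", "revised intake form", "supervision job model"]
observed = {"weekly caregiver check-ins": 0.82,
            "revised intake form": 0.86,
            "supervision job model": 0.93}

adopted, log = pdca(candidates, lambda c: observed[c], lambda v: v >= 0.90)
for change, result, success in log:
    print(f"{change}: {result:.2f} -> {'adopt' if success else 'replan'}")
print("Adopted:", adopted)
```

A fixed list of candidates is a simplification; in practice the Act stage would revise the next plan based on what the Check stage revealed.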

An organization’s executive team can use ASDQ data on a quarterly basis to objectively and systematically make adjustments within the organization (e.g., to processes, procedures, or staffing) to maximize ASDQ. With public-facing ASDQ reports, stakeholders can better distinguish higher quality ABA service delivery organizations from others by whether they routinely publish ASDQ metrics, the breadth and depth of those metrics, and their consistency over time. Future research within this framework is needed to understand how variation in standards across organizations factors into interpretations of organizations’ ASDQ data, but an organization’s ability and willingness to report ASDQ data may itself be viewed as a good step toward objective quality indicators on which stakeholders can initially rely.

The steps we have outlined here largely pertain to executive or leadership teams in organizations with the power to enact change initiatives within the organization that may drive ASDQ. Future research could examine steps that other members of organizations, such as frontline staff (e.g., BCBAs, BCaBAs, RBTs), could take to promote ASDQ within their organization, for example, by stimulating conversation about the organization’s processes, policies, and procedures that may affect ASDQ and about how the organization evaluates its attainment of professional and consumer standards.

Final Summary, Conclusions, and Future Research