Abstract

Purpose of the review

Digital mental health interventions (DMHIs) are an effective and accessible means of addressing the unprecedented levels of mental illness worldwide. Currently, however, patient engagement with DMHIs in real-world settings is often insufficient to see a clinical benefit. In order to realize the potential of DMHIs, there is a need to better understand what drives patient engagement.

Recent findings

We discuss takeaways from the existing literature related to patient engagement with DMHIs and highlight gaps to be addressed through further research. Findings suggest that engagement is influenced by patient-, intervention-, and system-level factors. At the patient level, variables such as sex, education, personality traits, race, ethnicity, age, and symptom severity appear to be associated with engagement. At the intervention level, integrating human support, gamification, financial incentives, and persuasive technology features may improve engagement. Finally, although system-level factors have not been widely explored, the existing evidence suggests that achieving engagement will require addressing organizational and social barriers and drawing on the field of implementation science.

Summary

Future research clarifying the patient-, intervention-, and system-level factors that drive engagement will be essential. Additionally, to facilitate an improved understanding of DMHI engagement, we propose the following: (a) widespread adoption of a minimum necessary 5-element engagement reporting framework, (b) broader application of alternative clinical trial designs, and (c) directed efforts to build upon an initial parsimonious conceptual model of DMHI engagement.

Introduction

With mental health concerns reaching unprecedented prevalence [1, 2], there is an urgent need for accessible, evidence-based psychiatric treatments. Digital mental health interventions (DMHIs) delivered via the Internet and/or mobile apps offer a promising avenue for meeting this challenge. A number of reviews and meta-analyses have found that DMHIs are efficacious [3, 4]. Additionally, they have the potential to remove many of the barriers that plague traditional psychiatric treatment [5,6,7]. Most notably, DMHIs designed by professionals trained in evidence-based treatment can be made much more widely available than treatment provided by individual clinicians, and at virtually no marginal cost. They also address other key issues with standard mental health treatment: they promote patient autonomy, they offer convenience (not requiring workday appointments or transportation), and they can be accessed at times when patients are most in need of support (which often do not align with when clinic appointments are scheduled).

Despite their promise, DMHIs have increasingly been demonstrated to have a major shortcoming: patient engagement with them is poor. When speaking about DMHI engagement, it is important to note that this concept refers to engagement in real-world conditions, such as when these interventions are implemented in routine care or commercial settings (e.g., sold via Google Play or the Apple App Store). Engagement in efficacy trials, consisting of highly motivated users who seek out participation in a study and meet multiple eligibility criteria, is typically high but does not reflect real-world conditions [8•]. This difference between engagement in more controlled efficacy trials and less controlled implementation settings is well illustrated in a study conducted by Gilbody et al. [9] in which two DMHIs (Beating the Blues and MoodGYM), both with prior randomized controlled trials (RCTs) demonstrating strong efficacy [10, 11], were implemented in routine care. Fewer than 20% of participants completed either of the assigned interventions. The issue of DMHI engagement is not specific to the interventions studied in Gilbody et al. [9]. Indeed, studies using other DMHIs show similarly low rates of sustained engagement and intervention completion in real-world settings [12,13,14,15,16].

When discussing engagement, it is also important to note that some dropout from any treatment or technology is to be expected. After all, meta-analyses of psychotherapy suggest that around 20% of patients discontinue prematurely, and these rates are even higher for treatment with psychotropic medication [17, 18]. Similarly, average 30-day retention rates for mobile applications in general are under 6% [19]. Even for exceptionally popular apps outside the healthcare domain, like Instagram and Twitter, 90-day retention rates are only 30–50% [20]. Unlike standard consumer apps, however, DMHIs are clinical treatments, so suboptimal engagement can have significant repercussions.

Thus, in the quest to understand DMHI engagement and to ascertain whether DMHIs are a viable solution to the unprecedented mental health concerns worldwide, several core questions emerge. First, at the patient level, what characterizes patients who are likely to engage with these interventions? Second, at the intervention level, what intervention components improve engagement? Third, at the system level, what aspects of the larger healthcare and social climate promote engagement? And fourth, in the context of research, how can we build an evidence base that allows us to address this problem?

Definitions of engagement

Before examining these questions, it is important to clarify what is meant by “engagement.” Within psychiatry, the most common definitions of engagement relate to behaviors, and this is where our discussion will focus. We offer an operational definition of behavioral engagement as the use of the core components of a DMHI. Common metrics of behavioral engagement include uptake (i.e., downloading and using the intervention at least once), sustained use (i.e., remaining active in using the intervention for some period of time after downloading), and adherence/completion (i.e., using the intervention at the intended frequency for the intended duration). For DMHIs, these behavioral engagement metrics can readily be tracked on system backends, making engagement with DMHIs easier to gauge than engagement with other treatments such as psychotropic medication. This proposed operational definition of engagement is supported by significant research suggesting a relationship between behavioral engagement (i.e., usage) and clinical outcomes [21]. It is important to note that engagement requirements differ across DMHIs. There is not one set amount of use that defines completion. Instead, this is defined in the context of each specific DMHI.

Engagement can also be defined more broadly. Many human–computer interaction researchers define engagement as capturing and maintaining the attention and interest of users and their temporal, emotional, and cognitive investment with an intervention [22]. Nahum-Shani et al. [23] discuss engagement with digital content (i.e., using the DMHI), engagement with notifications from the DMHI (i.e., reading and thinking about them), and engagement with non-digital tasks (e.g., behavioral activation exercises recommended by the DMHI) all as part of the multi-dimensional construct of engagement. Definitions like these are conceptually important as they capture the full and true meaning of DMHI engagement. They are also more difficult to reliably measure in the context of intervention trials and real-world implementations. Metrics such as the number of clicks or active time spent in the DMHI may be reasonable proxies for cognitive engagement but are far from exact. Some studies ask users for a self-report of their emotional reactions to content or whether they acted upon the intervention recommendations as a way to measure these more nuanced yet critical aspects of engagement. Additionally, self-report measures such as the User Engagement Scale [24, 25], Digital Working Alliance Inventory [26, 27], and the Unified Theory of Acceptance and Use of Technology Scale [28] are validated options for measuring aspects of this broader definition of engagement. While such measures can be useful, backend measures of behavioral engagement are still typically the most available and most feasible in the context of real-world implementation, where engagement is most likely to be a concern.

The literature on engagement also frequently discusses two other metrics. The first is study completion, which refers to the completion of post-treatment assessments, not the completion of the key components of the DMHI. While study completion could be argued to serve as a proxy of DMHI engagement, its applicability is limited to research contexts. It is primarily useful as a metric for calculating sample size requirements for efficacy and effectiveness trials, not as an indication of DMHI engagement. The second is patient interest in certain interventions or aspects of interventions. Understanding patient interests and differences in interest across different demographic subgroups is useful for designing effective interventions. However, it does not necessarily extend to actual in situ engagement with those interventions.

Patient-level engagement considerations

Across medicine, differential rates of treatment engagement by patient population have been identified and vary by healthcare domain. A handful of demographic variables have consistently been associated with higher engagement with DMHIs. Specifically, women show higher levels of DMHI engagement than men [29,30,31,32,33,34]. Similarly, several studies have found that higher education levels predict higher engagement [32, 35,36,37]. Finally, a number of studies suggest that certain personality traits, specifically neuroticism, agreeableness, and introversion, are associated with greater interest in using DMHIs [38, 39]. Out of these, however, only neuroticism has been found to be associated with greater usage [40].

There remains some ambiguity on the extent to which other patient-level variables like race and ethnicity, age, and symptom severity are related to engagement. With regard to race and ethnicity, some studies suggest that DMHI interest and intervention usage are greater among racial and ethnic minorities [41,42,43]. Yet other studies indicate that White patients show higher engagement [29]. This is an important area for future inquiry because racial and ethnic minority patients tend to engage with traditional outpatient mental health services less frequently [44]. If interest and usage are high in these populations, a potential opportunity exists to focus on reaching them with evidence-based services using DMHIs. These conflicting findings raise important questions about the extent to which tailoring DMHIs for specific racial or ethnic groups may improve engagement.

Similarly, studies have drawn different conclusions about the relationship between age and engagement in DMHIs. Some studies have found that younger patients express higher interest or show more behavioral engagement, whereas other studies have shown the opposite [29, 43, 45,46,47]. These seemingly conflicting findings could potentially be attributed to differences in age breakdowns across studies and a potential nonlinear relationship. That is, perhaps age at either extreme (e.g., younger than 30 and older than 50) is a risk factor for disengagement [48•].

Finally, a number of studies suggest that individuals with more severe symptoms report greater interest in DMHIs [47, 49, 50]. However, when it comes to actual usage, some studies suggest higher usage among those with more severe symptoms [29, 50], whereas other studies suggest that more severe symptoms are associated with lower usage [34, 51, 52]. It is possible that some of these conflicting findings could be attributed to the difficulty in drawing conclusions across studies of patients with different diagnoses. For example, some research suggests that patients with depression, which is marked by a lack of motivation, show lower engagement than patients with anxiety disorders [53, 54]. It also could be that the relationship between symptom severity and engagement is not linear. That is, higher severity of symptoms may motivate engagement up to a point, but those with the most severe symptoms may be less engaged. These questions require further exploration with a more refined classification of data.

Intervention-level engagement considerations

An important attribute of any DMHI is the extent to which it includes some approach or strategy to enhance engagement. To date, the most heavily researched intervention-level engagement strategy has been the addition of human support. Meta-analyses suggest a medium positive effect size of including human support versus no human support on the efficacy of DMHIs [55, 56]. But the impact on engagement, and specifically engagement in real-world implementations of DMHIs, is less clear. Various studies suggest that human support increases engagement [57,58,59], but the finding is not universal. For example, Levin et al. [60] found that weekly coaching calls did not increase engagement relative to automated email prompts. Additionally, strategies for adding human support vary widely, from less scalable options like weekly phone calls with a clinician to more scalable options like asynchronous communication with a health coach. The more scalable coach support protocols have been found to be effective at enhancing engagement in some studies [36, 61, 62]; however, other studies have found that this style of coach support does not enhance engagement [63, 64]. Thus, while human support appears to be a promising engagement strategy, results are not unequivocally favorable. The existing literature leaves many questions unanswered regarding the specific components of such support that drive engagement and the optimal dose of such support.

Gamification is another engagement strategy that has garnered recent interest. Gamification refers to incorporating principles from gaming into the DMHI. These include leveling up, winning points or virtual rewards, integration of short-term challenges, and use of imaginary settings or narratives [65]. One of the key arguments for gamification has been that it could make interventions more fun or rewarding and, therefore, keep patients engaged for longer. Yet, while gamified interventions have often been found effective, few studies have actually evaluated how effective gaming intervention components are at improving engagement [66, 67]. Users have expressed mixed interest in gamified interventions [68], and at least one recent meta-analysis suggested that gamified depression apps did not generate improved adherence or efficacy over depression apps without gamification [69].

The use of contingency management is also a frequently discussed intervention-level DMHI engagement strategy. Contingency management is a principle drawn from behavior therapy referring to reinforcing or rewarding behavior change. The most common types of rewards applied are monetary, either in the form of cash or prizes, but other types of rewards can also be used. There is significant research showing that contingency management improves treatment outcomes in the context of health concerns like substance use, medication and treatment adherence, and a range of health risk behaviors [70,71,72]. Some recent studies have shown that these types of incentives also increase digital intervention adherence for health behaviors [73, 74]. Applications of contingency management specific to engagement with DMHIs are limited, but early results are promising. For example, Boucher et al. [75] found that monetary incentives increased the regularity and volume of usage of a DMHI for depression and/or anxiety.

Finally, persuasive technology is another promising DMHI engagement strategy. Persuasive technology features that have been applied in DMHIs include text messages, push notifications, interactive features, opportunities for data visualization, and tailoring/personalization of intervention content [76]. Significant research supports the use of persuasive technology for engagement. Survey and qualitative studies suggest that patients express a desire for personalized content [77,78,79]. Additionally, use of reminders [80], interactivity [81, 82], tailored push notifications [83], and data visualization [84] have been found to increase engagement with digital interventions. Persuasive technology may even be as effective as human support in enhancing engagement. For example, Kelders et al. [85] found that engagement was equivalent when a DMHI was enriched with persuasive technology features (i.e., tailoring, personalized feedback) and when it was enriched with coach support.

Just-in-time adaptive interventions (JITAIs) are a promising application of persuasive technology where users are prompted to interact with specific intervention content based on contextual data collected via self-report or passive monitoring [86]. JITAIs can be effective for mental health concerns [87, 88] and may enhance engagement by delivering intervention content when a user is most receptive to it. However, real-world implementations in mental health are still limited [89], and the impact on engagement has not been directly evaluated.

Systems-level engagement considerations

Strategies for healthcare systems to seamlessly integrate DMHIs in the context of routine care, thereby supporting engagement, have not been a focus of DMHI research to date. However, studies suggest that weaving DMHIs into the fabric of existing primary or specialty care may be a particularly promising approach for enhancing engagement. Specifically, previous work has shown that patients endorse greater interest in interventions recommended by their care team than those not accompanied by such a recommendation [90, 91]. Additionally, referral from a healthcare provider is associated with lower DMHI attrition [92]. These findings are consistent with research suggesting that social influence, defined as the extent to which important others support a given behavior, positively impacts technology adoption [28].

The research on barriers and facilitators of DMHI adoption and sustained use suggests a number of organizational variables that merit attention. Both Borghouts et al. [48•] and Graham et al. [93] provide excellent overviews of this literature. Findings regarding several system-relevant barriers and facilitators offer a starting point for determining what engagement strategies may be worth evaluating at the system level. Specifically, system-relevant barriers identified in this literature include interoperability issues with other clinical systems, limited technical support, limited staff resources, cost/limited avenues for DMHI reimbursement, clinicians’ negative perspectives on DMHIs, and limited support from clinical leadership [48•, 93]. Anastasiadou et al. [77] found that both patients and providers perceived barriers related to the organizational environment as more prominent than patient-level and intervention-level barriers. These findings suggest value in drawing on allied fields like implementation science and its rich set of implementation frameworks to inform the study of DMHI engagement.

Implementation science has a long tradition of exploring multi-level strategies to support the uptake and sustainment of evidence-based interventions. For example, the Expert Recommendations for Implementing Change study systematically compiled input from stakeholders and published a list of implementation strategies and definitions of these strategies [94]. This list offers a directory for identifying implementation strategies that could promote engagement with DMHIs at the system level. Studies drawing on this strong body of implementation science literature that test the application of various strategies in different contexts (e.g., primary care, community care, specialty care, marketplace) are an important next step in DMHI research.

Important gaps for research

There is a plethora of important areas for research into engagement with DMHIs, many of which we have noted in the previous sections. Below, we highlight three additional areas that we have not yet touched upon that are particularly important for the research community to address.

Adopting standards for reporting engagement metrics

A recent review [95•] of DMHIs for depression indicated that consistency in reporting engagement metrics is alarmingly poor. Specifically, only 64% of studies reported the number of participants who used the DMHI at least once, only 23% of studies reported how many participants were still using the DMHI during the last week of the treatment period, and only 50% of studies reported the number of participants who completed the DMHI.

Unfortunately, we cannot attribute these results to difficulty measuring engagement because metrics for DMHI use, as noted above, are typically quantifiable on the system backend. We also cannot attribute them to the study of DMHIs being a new field because depression represents one of the most heavily researched clinical areas for DMHIs.

These results suggest that establishing reporting guidelines that specify the minimum necessary provision of information on engagement when publishing clinical trials of DMHIs is critical. As a starting point, Lipschitz et al. [95•] suggest that a five-element standard of engagement reporting be adopted for all studies of DMHIs. This framework encompasses the following essential metrics:

(1) Adherence criterion. This is defined as an explicit statement of what it means for participants to have used the DMHI as intended or met some minimum intervention use threshold. This could be defined in terms of content coverage (e.g., 80% of modules completed), frequency of use (e.g., use at least three times per week during the intervention period), or some other a priori threshold for intervention adherence.

(2) Rate of uptake. This is defined as the percentage of participants randomized or referred to the DMHI who downloaded the intervention and used it at least once.

(3) Level-of-use metrics. These are defined as both the number of DMHI launches (e.g., the average number of times each participant used the DMHI) and the total amount of time the DMHI was used (e.g., total minutes of use) during the intervention period.

(4) Duration-of-use metrics. These are defined as the number of participants who used the app at least once per week every week of the intervention period unless less frequent use is identified as sufficient in the adherence criteria. Reporting the number of participants still using the DMHI in the final week of the intervention period or a survival analysis of time to last use is also particularly helpful for duration-of-use metrics because they convey how long patients typically engage with the DMHI. When positive clinical outcomes are observed, these metrics also offer insight into the timeline for expected clinical improvement.

(5) Number of intervention completers. This is defined as the number of participants who completed the intervention as intended per the specified adherence criteria.

Adopting this or some other minimum necessary reporting criteria is essential to move the field of DMHI engagement forward. Such reporting guidelines would allow for new insights into what constitutes sufficient engagement for clinical benefit, facilitate comparisons among DMHIs and between DMHIs and other treatment options, and offer benchmarks upon which further research must improve.
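As an illustration of how the five elements might be derived from backend usage data, the following is a minimal Python sketch rather than a reference implementation. The `Session` record, the `engagement_report` function, and the adherence criterion used here (a minimum number of launches in every week of the intervention period) are assumptions made for this example and are not specified by the framework; each study would substitute its own a priori criterion and its own log format.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Session:
    """One DMHI launch as it might appear in a backend export (hypothetical schema)."""
    participant_id: str
    day: date
    minutes: float


def engagement_report(enrolled, sessions, start, weeks, min_per_week):
    """Compute the five reporting elements for one study arm.

    enrolled     -- list of unique IDs randomized or referred to the DMHI
    sessions     -- iterable of Session records
    start        -- first day of the intervention period
    weeks        -- length of the intervention period in weeks (at least 1)
    min_per_week -- assumed adherence criterion: launches required in every week
    """
    weekly = {pid: [0] * weeks for pid in enrolled}  # launches per week
    minutes = 0.0
    for s in sessions:
        week = (s.day - start).days // 7
        # Ignore sessions outside the intervention period or from unknown IDs.
        if s.participant_id in weekly and 0 <= week < weeks:
            weekly[s.participant_id][week] += 1
            minutes += s.minutes

    counts = list(weekly.values())
    return {
        # (1) Adherence criterion, stated explicitly.
        "adherence_criterion": f">= {min_per_week} launches in each of {weeks} weeks",
        # (2) Rate of uptake: share of enrolled participants with at least one use.
        "uptake_rate": sum(1 for c in counts if sum(c) > 0) / len(enrolled),
        # (3) Level-of-use metrics.
        "total_launches": sum(sum(c) for c in counts),
        "total_minutes": minutes,
        # (4) Duration-of-use metrics.
        "used_every_week": sum(1 for c in counts if all(n >= 1 for n in c)),
        "active_final_week": sum(1 for c in counts if c[-1] >= 1),
        # (5) Number of intervention completers per the adherence criterion.
        "completers": sum(1 for c in counts if all(n >= min_per_week for n in c)),
    }


if __name__ == "__main__":
    log = [Session("a", date(2024, 1, 1), 10.0), Session("a", date(2024, 1, 9), 5.0)]
    print(engagement_report(["a", "b"], log, date(2024, 1, 1), weeks=2, min_per_week=1))
```

Returning the adherence criterion alongside the counts keeps the threshold visible wherever the numbers are reported, which is the point of the first element.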

Considering alternative study designs

To date, RCTs with parallel group designs have been the most common methodology in DMHI trials. But these only tell us whether an intervention package as a whole has a causal impact on outcomes of interest. Such trials are not designed to shed light on which components of an intervention impact the outcomes or when and how different intervention components should be applied.

Several other clinical trial designs offer efficient, data-driven strategies for answering questions about optimizing treatments for user engagement. The most widely used example is the factorial clinical trial, which involves packaging intervention components into various combinations such that the impact of each combination, as well as the main effect of each component itself (across combinations), can be evaluated [96, 97]. Other less widely used examples of trial designs include sequential multiple assignment randomized trials (SMARTs; [98, 99]) and micro-randomized trials (MRTs; [100]).
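To make the logic of the factorial design concrete, the sketch below enumerates every on/off combination of a set of components and computes a component's main effect as the difference in mean outcome between the conditions that include it and those that do not. The component names and cell means are invented for illustration, and the unweighted average across cells is a simplification of the analyses such trials would actually use.

```python
from itertools import product
from statistics import mean


def factorial_conditions(components):
    """Return every on/off combination of the given components (2**k conditions)."""
    return [
        dict(zip(components, levels))
        for levels in product([False, True], repeat=len(components))
    ]


def main_effect(component, cell_means):
    """Mean outcome with the component on minus mean outcome with it off.

    cell_means -- list of (condition, mean_outcome) pairs, one per condition,
                  where condition is a dict as produced by factorial_conditions.
    """
    on = [y for condition, y in cell_means if condition[component]]
    off = [y for condition, y in cell_means if not condition[component]]
    return mean(on) - mean(off)


if __name__ == "__main__":
    # Hypothetical components and hypothetical mean weekly sessions per condition.
    components = ["coach_support", "gamification"]
    conditions = factorial_conditions(components)
    outcomes = [10.0, 11.0, 14.0, 15.0]  # invented values, same order as conditions
    cells = list(zip(conditions, outcomes))
    for name in components:
        print(name, main_effect(name, cells))
```

Because every participant contributes to the estimate for every component, the design can evaluate several candidate engagement strategies without a separate trial for each.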

SMARTs [98, 99] offer insight into how components of a treatment package should be sequenced to optimize outcomes. They involve testing alternative treatments as a starting intervention and then identifying, at pre-specified points early in treatment, which individuals are responding/not responding to the initial intervention and randomizing both responders and non-responders to appropriate second-stage treatments. The causal effects of the initial treatment approach and the adapted treatment approach on an outcome of interest can then be evaluated. SMARTs could be used to help identify how to augment interventions or what intervention components to add for patients who exhibit poor engagement with DMHIs. For example, one possible first-stage intervention in a SMART might be a self-guided DMHI with or without tailored motivational messaging (randomized at a 50–50 split). After 2 weeks, those participants in either condition showing insufficient engagement could be re-randomized to receive augmentation with coach support or to continue with the self-guided intervention. SMARTs offer a particularly compelling design for implementation studies because they allow an opportunity to test adaptive implementation strategies for clinics or specific patients who do not respond favorably to an initial approach (e.g., [101, 102]).
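The two-stage assignment in the example above could be sketched as follows. This is an illustrative outline only: the arm labels, the function names, and the engagement threshold are hypothetical, and simple coin-flip randomization is used here, which gives each arm equal probability but does not guarantee equal group sizes.

```python
import random

FIRST_STAGE_ARMS = ["self_guided", "self_guided_plus_messaging"]
SECOND_STAGE_ARMS = ["add_coach_support", "continue"]


def first_stage(participants, rng):
    """Randomize each participant to a first-stage arm with equal probability."""
    return {pid: rng.choice(FIRST_STAGE_ARMS) for pid in participants}


def second_stage(first, early_sessions, threshold, rng):
    """Re-randomize only participants with insufficient early engagement.

    first          -- dict of participant ID to first-stage arm
    early_sessions -- dict of participant ID to sessions in the first 2 weeks
    threshold      -- assumed minimum number of sessions that counts as engaged
    """
    plan = {}
    for pid, arm in first.items():
        if early_sessions.get(pid, 0) >= threshold:
            # Sufficiently engaged participants stay on their current intervention.
            plan[pid] = (arm, "continue")
        else:
            # Insufficiently engaged participants are randomized a second time.
            plan[pid] = (arm, rng.choice(SECOND_STAGE_ARMS))
    return plan


if __name__ == "__main__":
    rng = random.Random(2024)  # seeded so the allocation can be reproduced
    stage1 = first_stage(["p1", "p2", "p3", "p4"], rng)
    stage2 = second_stage(stage1, {"p1": 6, "p2": 0, "p3": 5}, threshold=4, rng=rng)
    for pid, sequence in stage2.items():
        print(pid, sequence)
```

Each participant ends up with a sequence of two decisions, and comparing sequences is what allows the effect of adapting treatment for poorly engaged participants to be estimated.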

MRTs [100] offer insight into whether intervention components of a DMHI have a proximal or near-term effect on engagement and when those components should be delivered to maximize that effect. They involve establishing a schedule of “decision points”: times at which a component of a DMHI might be delivered (e.g., multiple times per day). For example, a DMHI component may involve the delivery of motivational push messages to the patient via a smart device. At each decision point, participants would be randomly assigned to receive or not receive these motivational push messages. Then, the proximal impact (i.e., over the next hour, day, or week rather than the full intervention period) of the intervention component (i.e., motivational push messages) and the interaction between that proximal impact and context (e.g., time of day, location when the message was delivered) are evaluated.
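A minimal sketch of this procedure is given below, assuming a fixed randomization probability at every decision point and a numeric proximal outcome (for example, whether the DMHI was opened in the following hour). The function names and data layout are hypothetical, and the unadjusted difference in means stands in for the more formal estimation methods developed for MRTs; it is meant only to show what is randomized and what is compared.

```python
import random
from collections import defaultdict
from statistics import mean


def randomize_decision_points(n_points, p_message, rng):
    """Independently decide at each decision point whether to send a message."""
    return [rng.random() < p_message for _ in range(n_points)]


def proximal_effect(records):
    """Mean proximal outcome after a message minus mean outcome without one.

    records -- list of (messaged, outcome) pairs, one per decision point.
    Both groups must contain at least one decision point.
    """
    sent = [y for messaged, y in records if messaged]
    held = [y for messaged, y in records if not messaged]
    return mean(sent) - mean(held)


def proximal_effect_by_context(records):
    """Estimate the proximal effect separately within each context.

    records -- list of (context, messaged, outcome) triples.
    """
    groups = defaultdict(list)
    for context, messaged, outcome in records:
        groups[context].append((messaged, outcome))
    return {context: proximal_effect(rows) for context, rows in groups.items()}


if __name__ == "__main__":
    rng = random.Random(7)
    print(randomize_decision_points(6, 0.5, rng))
    # Invented observations: 1.0 means the DMHI was opened within the next hour.
    observed = [
        ("morning", True, 1.0), ("morning", False, 0.0),
        ("evening", True, 1.0), ("evening", False, 1.0),
    ]
    print(proximal_effect_by_context(observed))
```

Splitting the estimate by context, such as time of day, corresponds to the interaction between proximal impact and context described above.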

Studies employing these designs offer untapped opportunities to better understand engagement and optimize interventions for engagement. For example, they offer opportunities to efficiently test the effects of tailoring intervention components to a given patient’s demographic characteristics (e.g., age, gender, or race).

Conducting studies that facilitate building a theory of DMHI engagement

At this point, there is not one widely accepted theory of what drives DMHI engagement. The most widely applied theoretical models are probably the technology acceptance model [103] and the unified theory of acceptance and use of technology (UTAUT; [28]). These models were developed and validated predominantly in the context of employee adoption of new information technologies, a considerably different context than the adoption of patient-facing, medical treatment technologies. Furthermore, the recently articulated affect-integration-motivation and attention-context-translation (AIM-ACT) framework has been proposed as an outline of psychological processes that dictate in-the-moment engagement with digital stimuli and may inform the development of a broader theory on DMHI engagement [23]. Finally, there is an expansive literature on behavior change theories related to treatment adherence [104]. However, adherence to treatments like prescribed medication is also considerably different from adherence to DMHIs. Most notably, medication adherence is typically less cognitively demanding, less time-consuming, and supported by more established efficacy data. While this literature base provides a starting point for conceptualizing what drives adoption and sustained engagement with DMHIs, there is likely room to improve and hone these models.

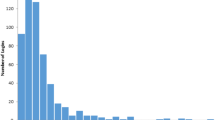

The key will be to develop and validate theories that can inform intervention designers about constructs that are most robustly associated with DMHI engagement. To do so, we can look toward constructs that show strong evidence in both the technology adoption and treatment adherence literature. Several constructs already exhibit robust associations with both technology adoption and treatment adherence and could serve as a starting point for a parsimonious theory of DMHI engagement. These constructs include social influence (beliefs among people who are important to the patient that he/she should engage in the new behavior; [105,106,107]), facilitating conditions (environmental support for the new behavior; [108, 109]), attitude (the balance of positive and negative feelings about the behavior; [110,111,112,113,114,115]), self-efficacy (beliefs in one’s ability to execute the new behavior; [111, 116,117,118,119]), and habit strength (degree to which the behavior is an automatic part of one’s daily routine; [120,121,122]). Initial theories specific to DMHI engagement could capitalize on these constructs as well as some of the predisposing characteristics discussed above and add additional constructs as new research emerges (see Fig. 1).

Using this framework, some intervention characteristics, such as contingency management or gamification, could be conceptualized as variables that may produce higher engagement by shifting users’ attitudes toward the intervention (i.e., greater positive feelings about using the intervention). Other intervention characteristics, such as the integration of human support, could be conceptualized as enhancing engagement via social influence (in the case of involvement of a clinical team member with a prior relationship with the patient) or a facilitating condition (in the case of a newly assigned health coach specific to the intervention).

Taken together, DMHI engagement theory is an area ripe for innovation. Research can help evolve our understanding of theory by measuring constructs drawn from the cross-disciplinary theories and frameworks put forward above and evaluating associations between these constructs and DMHI usage data or other engagement metrics. This will be an essential part of building a science of DMHI engagement and improving the utility of these treatments.

Conclusions and recommendations

DMHIs hold tremendous promise to transform psychiatric treatment by dramatically increasing access to evidence-based care. However, engagement is a critical issue that will likely determine the extent to which DMHIs become a mainstay of psychiatric treatment. Adequately addressing the issue of engagement will require acknowledging several key points. First, engagement is a multi-level issue. It must be addressed at the patient, intervention, and system levels. Second, engagement is a multidisciplinary issue. Addressing it will require collaboration between clinicians, data scientists, human-centered design researchers, technologists, and organizational leaders. Third, while there are many aspects of engagement, at its core, the engagement problem is an implementation problem. Like other innovations and evidence-based practices that are the focus of implementation research, many DMHIs may have demonstrated efficacy in controlled settings, but in real-world settings, they do not get and keep patients engaged enough to show sound clinical impact. Evaluating engagement requires studies in naturally occurring, uncontrolled, routine care and marketplace settings. Finally, the engagement problem can only be solved by rigorous research. Specifically, researchers must address the engagement issue head-on by systematizing their reporting of engagement levels, considering alternative clinical trial designs rather than defaulting to RCTs with parallel group designs, and building a robust theoretical basis for evaluating the relationship between possible engagement-driving constructs and observed behavioral engagement. Only then will DMHIs have the potential to be the paradigm-changing force they could be in the treatment of mental health conditions.

References and Recommended Readings

Papers of particular interest, published recently, have been highlighted as: • Of importance

Richter D, Wall A, Bruen A, Whittington R. Is the global prevalence rate of adult mental illness increasing? Systematic review and meta-analysis. Acta Psychiatr Scand. 2019;140(5):393–407.

COVID-19 Mental Disorders Collaborators. Global prevalence and burden of depressive and anxiety disorders in 204 countries and territories in 2020 due to the COVID-19 pandemic. Lancet. 2021;398(10312):1700–12.

Firth J, Torous J, Nicholas J, Carney R, Pratap A, Rosenbaum S, et al. The efficacy of smartphone-based mental health interventions for depressive symptoms: a meta-analysis of randomized controlled trials. World Psychiatry. 2017;16(3):287–98.

Richards D, Richardson T. Computer-based psychological treatments for depression: a systematic review and meta-analysis. Clin Psychol Rev. 2012;32(4):329–42.

Weinberger MI, Mateo C, Sirey JA. Perceived barriers to mental health care and goal setting among depressed, community-dwelling older adults. Patient Prefer Adherence. 2009;3:145–9.

Mojtabai R. Unmet need for treatment of major depression in the United States. Psychiatr Serv. 2009;60(3):297–305.

Harvey AG, Gumport NB. Evidence-based psychological treatments for mental disorders: modifiable barriers to access and possible solutions. Behav Res Ther. 2015;68:1–12.

• Baumel A, Edan S, Kane JM. Is there a trial bias impacting user engagement with unguided e-mental health interventions? A systematic comparison of published reports and real-world usage of the same programs. Transl Behav Med. 2019;9(6):1020–33. Study examining discrepancies between digital mental health intervention engagement in real-world versus controlled research settings.

Gilbody S, Littlewood E, Hewitt C, Brierley G, Tharmanathan P, Araya R, et al. Computerised cognitive behaviour therapy (cCBT) as treatment for depression in primary care (REEACT trial): large scale pragmatic randomised controlled trial. BMJ. 2015;351:h5627.

Proudfoot J, Ryden C, Everitt B, Shapiro DA, Goldberg D, Mann A, et al. Clinical efficacy of computerised cognitive-behavioural therapy for anxiety and depression in primary care: randomised controlled trial. Br J Psychiatry. 2004;185:46–54.

Christensen H, Griffiths KM, Jorm AF. Delivering interventions for depression by using the internet: randomised controlled trial. BMJ. 2004;328(7434):265.

Cavanagh K, Seccombe N, Lidbetter N. The implementation of computerized cognitive behavioural therapies in a service user-led, third sector self help clinic. Behav Cogn Psychother. 2011;39:427–42.

Fleming T, Bavin L, Lucassen M, Stasiak K, Hopkins S, Merry S. Beyond the trial: systematic review of real-world uptake and engagement with digital self-help interventions for depression, low mood, or anxiety. J Med Internet Res. 2018;20(6):e199.

Hensel JM, Shaw J, Ivers NM, Desveaux L, Vigod SN, Cohen A, et al. A web-based mental health platform for individuals seeking specialized mental health care services: multicenter pragmatic randomized controlled trial. J Med Internet Res. 2019;21(6):e10838.

Lattie EG, Schueller SM, Sargent E, Stiles-Shields C, Tomasino KN, Corden ME, et al. Uptake and usage of IntelliCare: a publicly available suite of mental health and well-being apps. Internet Interv. 2016;4(2):152–8.

Baumel A, Muench F, Edan S, Kane JM. Objective user engagement with mental health apps: systematic search and panel-based usage analysis. J Med Internet Res. 2019;21(9):e14567.

Swift JK, Greenberg RP. Premature discontinuation in adult psychotherapy: a meta-analysis. J Consult Clin Psychol. 2012;80(4):547–59.

Swift JK, Greenberg RP, Tompkins KA, Parkin SR. Treatment refusal and premature termination in psychotherapy, pharmacotherapy, and their combination: a meta-analysis of head-to-head comparisons. Psychotherapy (Chic). 2017;54(1):47–57.

Tafradzhiyski N. Mobile App Retention [Internet]. Business of Apps; 2023 Apr 26 [cited 2023 Apr 28]. Available from: https://www.businessofapps.com/guide/mobile-app-retention/.

Tison G, Hsu K, Hsieh JT, Ballinger BM, Pletcher MJ, Marcus GM, et al. Abstract 21029: Achieving high retention in mobile health research using design principles adopted from widely popular consumer mobile apps. Circulation. 2017;136(Suppl_1):A21029.

Gan DZQ, McGillivray L, Han J, Christensen H, Torok M. Effect of engagement with digital interventions on mental health outcomes: a systematic review and meta-analysis. Front Digit Health. 2021;3:764079.

O’Brien HL, Morton E, Kampen A, Barnes SJ, Michalak EE. Beyond clicks and downloads: a call for a more comprehensive approach to measuring mobile-health app engagement. BJPsych Open. 2020;6(5):e86.

Nahum-Shani I, Shaw SD, Carpenter SM, Murphy SA, Yoon C. Engagement in digital interventions. Am Psychol. 2022;77(7):836–52.

O’Brien HL, Toms EG. The development and evaluation of a survey to measure user engagement. J Assoc Inf Sci Technol. 2009;61(1):50–69.

O'Brien HL, Cairns P, Hall M. A practical approach to measuring user engagement with the refined user engagement scale (UES) and new UES short form. Int J Hum Comput Stud. 2018;112:28–39.

Henson P, Wisniewski H, Hollis C, Keshavan M, Torous J. Digital mental health apps and the therapeutic alliance: initial review. BJPsych Open. 2019;5(1):e15.

Goldberg SB, Baldwin SA, Riordan KM, Torous J, Dahl CJ, Davidson RJ, et al. Alliance with an unguided smartphone app: validation of the digital working alliance inventory. Assessment. 2022;29(6):1331–45.

Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Q. 2003;27(3):425–78.

Ben-Zeev D, Scherer EA, Gottlieb JD, Rotondi AJ, Brunette MF, Achtyes ED, et al. mHealth for schizophrenia: patient engagement with a mobile phone intervention following hospital discharge. JMIR Ment Health. 2016;3(3):e34.

Marinova N, Rogers T, MacBeth A. Predictors of adolescent engagement and outcomes - a cross-sectional study using the Togetherall (formerly Big White Wall) digital mental health platform. J Affect Disord. 2022;311:284–93.

Proudfoot J, Parker G, Manicavasagar V, Hadzi-Pavlovic D, Whitton A, Nicholas J, et al. Effects of adjunctive peer support on perceptions of illness control and understanding in an online psychoeducation program for bipolar disorder: a randomised controlled trial. J Affect Disord. 2012;142(1–3):98–105.

Gunn J, Cameron J, Densley K, Davidson S, Fletcher S, Palmer V, et al. Uptake of mental health websites in primary care: insights from an Australian longitudinal cohort study of depression. Patient Educ Couns. 2018;101(1):105–12.

Mattila E, Lappalainen R, Välkkynen P, Sairanen E, Lappalainen P, Karhunen L, et al. Usage and dose response of a mobile acceptance and commitment therapy app: secondary analysis of the intervention arm of a randomized controlled trial. JMIR Mhealth Uhealth. 2016;4(3):e90.

Nicholas J, Proudfoot J, Parker G, Gillis I, Burckhardt R, Manicavasagar V, et al. The ins and outs of an online bipolar education program: a study of program attrition. J Med Internet Res. 2010;12(5):e57.

Kemmeren LL, van Schaik A, Smit JH, Ruwaard J, Rocha A, Henriques M, et al. Unraveling the black box: exploring usage patterns of a blended treatment for depression in a multicenter study. JMIR Ment Health. 2019;6(7):e12707.

Arnold C, Villagonzalo KA, Meyer D, Farhall J, Foley F, Kyrios M, et al. Predicting engagement with an online psychosocial intervention for psychosis: exploring individual- and intervention-level predictors. Internet Interv. 2019;18:100266.

Garnett C, Perski O, Tombor I, West R, Michie S, Brown J. Predictors of engagement, response to follow up, and extent of alcohol reduction in users of a smartphone app (Drink Less): secondary analysis of a factorial randomized controlled trial. JMIR Mhealth Uhealth. 2018;6(12):e11175.

March S, Day J, Ritchie G, Rowe A, Gough J, Hall T, et al. Attitudes toward e-mental health services in a community sample of adults: online survey. J Med Internet Res. 2018;20(2):e59.

Ervasti M, Kallio J, Määttänen I, Mäntyjärvi J, Jokela M. Influence of personality and differences in stress processing among Finnish students on interest to use a mobile stress management app: survey study. JMIR Ment Health. 2019;6(5):e10039.

Sanatkar S, Heinsch M, Baldwin PA, Rubin M, Geddes J, Hunt S, et al. Factors predicting trial engagement, treatment satisfaction, and health-related quality of life during a web-based treatment and social networking trial for binge drinking and depression in young adults: secondary analysis of a randomized controlled trial. JMIR Ment Health. 2021;8(6):e23986.

Wells K, Thames AD, Young AS, Zhang L, Heilemann MV, Romero DF, et al. Engagement, use, and impact of digital mental health resources for diverse populations in COVID-19: community-partnered evaluation. JMIR Form Res. 2022;6(12):e42031.

Klee A, Stacy M, Rosenheck R, Harkness L, Tsai J. Interest in technology-based therapies hampered by access: a survey of veterans with serious mental illnesses. Psychiatr Rehabil J. 2016;39(2):173–9.

Hermes ED, Tsai J, Rosenheck R. Technology use and interest in computerized psychotherapy: a survey of veterans in treatment for substance use disorders. Telemed J E Health. 2015;21(9):721–8.

Padgett DK, Patrick C, Burns BJ, Schlesinger HJ. Ethnicity and the use of outpatient mental health services in a national insured population. Am J Public Health. 1994;84(2):222–6.

Abel EA, Shimada SL, Wang K, Ramsey C, Skanderson M, Erdos J, et al. Dual use of a patient portal and clinical video telehealth by veterans with mental health diagnoses: retrospective, cross-sectional analysis. J Med Internet Res. 2018;20(11):e11350.

Krebs P, Duncan DT. Health app use among US mobile phone owners: a national survey. JMIR Mhealth Uhealth. 2015;3(4):e101.

Lipschitz JM, Connolly SL, Miller CJ, Hogan TP, Simon SR, Burdick KE. Patient interest in mental health mobile app interventions: demographic and symptom-level differences. J Affect Disord. 2020;263:216–20.

• Borghouts J, Eikey E, Mark G, De Leon C, Schueller SM, Schneider M, et al. Barriers to and facilitators of user engagement with digital mental health interventions: systematic review. J Med Internet Res. 2021;23(3):e24387. Comprehensive and recent review of barriers and facilitators of user engagement with digital mental health interventions.

Crisp DA, Griffiths KM. Participating in online mental health interventions: who is most likely to sign up and why? Depress Res Treat. 2014;2014:790457.

Toscos T, Carpenter M, Drouin M, Roebuck A, Kerrigan C, Mirro M. College students’ experiences with, and willingness to use, different types of telemental health resources: do gender, depression/anxiety, or stress levels matter? Telemed J E Health. 2018;24(12):998–1005.

von Brachel R, Hötzel K, Hirschfeld G, Rieger E, Schmidt U, Kosfelder J, et al. Internet-based motivation program for women with eating disorders: eating disorder pathology and depressive mood predict dropout. J Med Internet Res. 2014;16(3):e92.

Farrer LM, Griffiths KM, Christensen H, Mackinnon AJ, Batterham PJ. Predictors of adherence and outcome in Internet-based cognitive behavior therapy delivered in a telephone counseling setting. Cognit Ther Res. 2014;38:358–67.

Gordon D, Hensel J, Bouck Z, Desveaux L, Soobiah C, Saragosa M, et al. Developing an explanatory theoretical model for engagement with a web-based mental health platform: results of a mixed methods study. BMC Psychiatry. 2021;21(1):417.

Lipschitz JM, Van Boxtel R, Fisher E, Altman AN, Bullis JR, Sprich SE, et al. Exploring risk factors for poor engagement with an internet-based CBT program implemented in routine care during the COVID-19 pandemic. Poster presented at: The Society for Digital Mental Health; 2022 Jun; Virtual/Online.

Linardon J, Cuijpers P, Carlbring P, Messer M, Fuller-Tyszkiewicz M. The efficacy of app-supported smartphone interventions for mental health problems: a meta-analysis of randomized controlled trials. World Psychiatry. 2019;18(3):325–36.

Andersson G, Cuijpers P. Internet-based and other computerized psychological treatments for adult depression: a meta-analysis. Cogn Behav Ther. 2009;38(4):196–205.

Kelders SM, Kok RN, Ossebaard HC, Van Gemert-Pijnen JE. Persuasive system design does matter: a systematic review of adherence to web-based interventions. J Med Internet Res. 2012;14(6):e152.

Andersson G, Cuijpers P. Internet-based and other computerized psychological treatments for adult depression: a meta-analysis. Cogn Behav Ther. 2009;38(4):196–205.

Saleem M, Kühne L, De Santis KK, Christianson L, Brand T, Busse H. Understanding engagement strategies in digital interventions for mental health promotion: scoping review. JMIR Ment Health. 2021;8(12):e30000.

Levin ME, Krafft J, Davis CH, Twohig MP. Evaluating the effects of guided coaching calls on engagement and outcomes for online acceptance and commitment therapy. Cogn Behav Ther. 2021;50(5):395–408.

Carolan S, Harris PR, Greenwood K, Cavanagh K. Increasing engagement with an occupational digital stress management program through the use of an online facilitated discussion group: results of a pilot randomised controlled trial. Internet Interv. 2017;10:1–11.

Blonigen DM, Harris-Olenak B, Kuhn E, Timko C, Humphreys K, Smith JS, et al. Using peers to increase veterans’ engagement in a smartphone application for unhealthy alcohol use: a pilot study of acceptability and utility. Psychol Addict Behav. 2021;35(7):829–39.

Jesuthasan J, Low M, Ong T. The impact of personalized human support on engagement with behavioral intervention technologies for employee mental health: an exploratory retrospective study. Front Digit Health. 2022;4:846375.

Mohr DC, Schueller SM, Tomasino KN, Kaiser SM, Alam N, Karr C, et al. Comparison of the effects of coaching and receipt of app recommendations on depression, anxiety, and engagement in the IntelliCare platform: factorial randomized controlled trial. J Med Internet Res. 2019;21(8):e13609.

Fleming T, Poppelaars M, Thabrew H. The role of gamification in digital mental health. World Psychiatry. 2023;22(1):46–7.

Simões de Almeida R, Marques A. User engagement in mobile apps for people with schizophrenia: a scoping review. Front Digit Health. 2022;4:1023592.

Brown M, O’Neill N, van Woerden H, Eslambolchilar P, Jones M, John A. Gamification and adherence to web-based mental health interventions: a systematic review. JMIR Ment Health. 2016;3(3):e39.

Fleming T, Merry S, Stasiak K, Hopkins S, Patolo T, Ruru S, et al. The importance of user segmentation for designing digital therapy for adolescent mental health: findings from scoping processes. JMIR Ment Health. 2019;6(5):e12656.

Six SG, Byrne KA, Tibbett TP, Pericot-Valverde I. Examining the effectiveness of gamification in mental health apps for depression: systematic review and meta-analysis. JMIR Ment Health. 2021;8(11):e32199.

Haff N, Patel MS, Lim R, Zhu J, Troxel AB, Asch DA, et al. The role of behavioral economic incentive design and demographic characteristics in financial incentive-based approaches to changing health behaviors: a meta-analysis. Am J Health Promot. 2015;29(5):314–23.

Dutra L, Stathopoulou G, Basden SL, Leyro TM, Powers MB, Otto MW. A meta-analytic review of psychosocial interventions for substance use disorders. Am J Psychiatry. 2008;165(2):179–87.

Giles EL, Robalino S, McColl E, Sniehotta FF, Adams J. The effectiveness of financial incentives for health behaviour change: systematic review and meta-analysis. PLoS ONE. 2014;9(3):e90347.

Wurst R, Maliezefski A, Ramsenthaler C, Brame J, Fuchs R. Effects of incentives on adherence to a web-based intervention promoting physical activity: naturalistic study. J Med Internet Res. 2020;22(7):e18338.

Granek B, Evans A, Petit J, James MC, Ma Y, Loper M, et al. Feasibility of implementing a behavioral economics mobile health platform for individuals with behavioral health conditions. Health Technol. 2021;11(3):505–10.

Boucher EM, Ward HE, Mounts AC, Parks AC. Engagement in digital mental health interventions: can monetary incentives help? Front Psychol. 2021;12:746324.

Oinas-Kukkonen H, Harjumaa M. Persuasive systems design: key issues, process model, and system features. Commun Assoc Inf Syst. 2009;24(1):28.

Anastasiadou D, Folkvord F, Serrano-Troncoso E, Lupiañez-Villanueva F. Mobile health adoption in mental health: user experience of a mobile health app for patients with an eating disorder. JMIR Mhealth Uhealth. 2019;7(6):e12920.

Hartmann R, Sander C, Lorenz N, Böttger D, Hegerl U. Utilization of patient-generated data collected through mobile devices: insights from a survey on attitudes toward mobile self-monitoring and self-management apps for depression. JMIR Ment Health. 2019;6(4):e11671.

Wachtler C, Coe A, Davidson S, Fletcher S, Mendoza A, Sterling L, et al. Development of a mobile clinical prediction tool to estimate future depression severity and guide treatment in primary care: user-centered design. JMIR Mhealth Uhealth. 2018;6(4):e95.

Fry JP, Neff RA. Periodic prompts and reminders in health promotion and health behavior interventions: systematic review. J Med Internet Res. 2009;11(2):e16.

Hurling R, Fairley BW, Dias MB. Internet-based exercise intervention systems: are more interactive designs better? Psychol Health. 2006;21(6):757–72.

Ritterband LM, Cox DJ, Gordon TL, Borowitz SM, Kovatchev BP, Walker LS, et al. Examining the added value of audio, graphics, and interactivity in an Internet intervention for pediatric encopresis. Child Health Care. 2006;35(1):47–59.

Bidargaddi N, Almirall D, Murphy S, Nahum-Shani I, Kovalcik M, Pituch T, et al. To prompt or not to prompt? A microrandomized trial of time-varying push notifications to increase proximal engagement with a mobile health app. JMIR Mhealth Uhealth. 2018;6(11):e10123.

Melcher J, Patel S, Scheuer L, Hays R, Torous J. Assessing engagement features in an observational study of mental health apps in college students. Psychiatry Res. 2022;310:114470.

Kelders SM, Bohlmeijer ET, Pots WT, van Gemert-Pijnen JE. Comparing human and automated support for depression: fractional factorial randomized controlled trial. Behav Res Ther. 2015;72:72–80.

Nahum-Shani I, Smith SN, Spring BJ, Collins LM, Witkiewitz K, Tewari A, et al. Just-in-time adaptive interventions (JITAIs) in mobile health: key components and design principles for ongoing health behavior support. Ann Behav Med. 2018;52(6):446–62.

Gustafson DH, McTavish FM, Chih MY, Atwood AK, Johnson RA, Boyle MG, et al. A smartphone application to support recovery from alcoholism: a randomized clinical trial. JAMA Psychiatry. 2014;71(5):566–72.

Juarascio A, Srivastava P, Presseller E, Clark K, Manasse S, Forman E. A clinician-controlled just-in-time adaptive intervention system (CBT+) designed to promote acquisition and utilization of cognitive behavioral therapy skills in bulimia nervosa: development and preliminary evaluation study. JMIR Form Res. 2021;5(5):e18261.

Teepe GW, Da Fonseca A, Kleim B, Jacobson NC, Salamanca Sanabria A, Tudor Car L, et al. Just-in-time adaptive mechanisms of popular mobile apps for individuals with depression: systematic app search and literature review. J Med Internet Res. 2021;23(9):e29412.

Lipschitz J, Miller CJ, Hogan TP, Burdick KE, Lippin-Foster R, Simon SR, et al. Adoption of mobile apps for depression and anxiety: cross-sectional survey study on patient interest and barriers to engagement. JMIR Ment Health. 2019;6(1):e11334.

Hogan TP, Etingen B, Lipschitz JM, Shimada SL, McMahon N, Bolivar D, et al. Factors associated with self-reported use of web and mobile health apps among US military veterans: cross-sectional survey. JMIR Mhealth Uhealth. 2022;10(12):e41767.

Young CL, Mohebbi M, Staudacher HM, Kay-Lambkin F, Berk M, Jacka FN, et al. Optimizing engagement in an online dietary intervention for depression (My Food & Mood Version 3.0): cohort study. JMIR Ment Health. 2021;8(3):e24871.

Graham AK, Lattie EG, Powell BJ, Lyon AR, Smith JD, Schueller SM, et al. Implementation strategies for digital mental health interventions in health care settings. Am Psychol. 2020;75(8):1080–92.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement Sci. 2015;10(21):1–14.

• Lipschitz JM, Van Boxtel R, Torous J, Firth J, Lebovitz JG, Burdick KE, et al. Digital mental health interventions for depression: scoping review of user engagement. J Med Internet Res. 2022;24(10):e39204. Review that systematically documents insufficient reporting of user engagement metrics for DMHIs and offers a minimum necessary, five-element standard of engagement reporting to be adopted for all studies of DMHIs.

Baker TB, Smith SS, Bolt DM, Loh WY, Mermelstein R, Fiore MC, et al. Implementing clinical research using factorial designs: a primer. Behav Ther. 2017;48(4):567–80.

Collins LM, Dziak JJ, Kugler KC, Trail JB. Factorial experiments: efficient tools for evaluation of intervention components. Am J Prev Med. 2014;47(4):498–504.

Almirall D, Nahum-Shani I, Sherwood NE, Murphy SA. Introduction to SMART designs for the development of adaptive interventions: with application to weight loss research. Transl Behav Med. 2014;4(3):260–74.

Murphy SA. An experimental design for the development of adaptive treatment strategies. Stat Med. 2005;24(10):1455–81.

Klasnja P, Hekler EB, Shiffman S, Boruvka A, Almirall D, Tewari A, et al. Micro-randomized trials: an experimental design for developing just-in-time adaptive interventions. Health Psychol. 2015;34:1220–8.

Kilbourne AM, Smith SN, Choi SY, Koschmann E, Liebrecht C, Rusch A, et al. Adaptive school-based implementation of CBT (ASIC): clustered-SMART for building an optimized adaptive implementation intervention to improve uptake of mental health interventions in schools. Implement Sci. 2018;13(1):119.

Kilbourne AM, Almirall D, Eisenberg D, Waxmonsky J, Goodrich DE, Fortney JC, et al. Protocol: adaptive implementation of effective programs trial (ADEPT): cluster randomized SMART trial comparing a standard versus enhanced implementation strategy to improve outcomes of a mood disorders program. Implement Sci. 2014;9:132.

Davis FD, Bagozzi RP, Warshaw PR. User acceptance of computer technology. MIS Q. 1989;13(3):319–40.

Martin LR, Haskard-Zolnierek KB, DiMatteo MR. Health behavior change and treatment adherence: evidence-based guidelines for improving healthcare. Oxford University Press, USA; 2010.

Venkatesh V, Thong JYL, Xu X. Unified theory of acceptance and use of technology: a synthesis and the road ahead. J Assoc Inf Syst. 2016;17(5):328–76.

Lipschitz J, Miller CJ, Hogan TP, Burdick KE, Lippin-Foster R, Simon SR, et al. Adoption of mobile apps for depression and anxiety: cross-sectional survey study on patient interest and barriers to engagement. JMIR Ment Health. 2019;6(1):e11334.

Scheurer D, Choudhry N, Swanton KA, Matlin O, Shrank W. Association between different types of social support and medication adherence. Am J Manag Care. 2012;18(12):e461–7.

Thompson RL, Higgins CA, Howell JM. Personal computing: toward a conceptual model of utilization. MIS Q. 1991;15(1):125–43.

Atinga RA, Yarney L, Gavu NM. Factors influencing long-term medication non-adherence among diabetes and hypertensive patients in Ghana: a qualitative investigation. PLoS ONE. 2018;13(3):e0193995.

Hall KL, Rossi JS. Meta-analytic examination of the strong and weak principles across 48 health behaviors. Prev Med. 2008;46(3):266–74.

Kahwati L, Viswanathan M, Golin CE, Kane H, Lewis M, Jacobs S. Identifying configurations of behavior change techniques in effective medication adherence interventions: a qualitative comparative analysis. Syst Rev. 2016;5(83).

Gagnon MD, Waltermaurer E, Martin A, Friedenson C, Gayle E, Hauser DL. Patient beliefs have a greater impact than barriers on medication adherence in a community health center. J Am Board Fam Med. 2017;30(3):331–6.

Dillon P, Phillips LA, Gallagher P, Smith SM, Stewart D, Cousins G. Assessing the multidimensional relationship between medication beliefs and adherence in older adults with hypertension using polynomial regression. Ann Behav Med. 2018;52(2):146–56.

Ajzen I, Fishbein M. Understanding attitudes and predicting social behavior. Englewood Cliffs, NJ: Prentice-Hall; 1980.

Shaw H, Ellis DA, Ziegler FV. The technology integration model (TIM): predicting the continued use of technology. Comput Human Behav. 2018;83:204–14.

Yi MY, Hwang Y. Predicting the use of web-based information systems: self-efficacy, enjoyment, learning goal orientation, and the technology acceptance model. Int J Hum Comput Stud. 2003;59(4):431–49.

Sheeran P, Maki A, Montanaro E, Avishai-Yitshak A, Bryan A, Klein WMP, et al. The impact of changing attitudes, norms and self-efficacy on health-related intentions and behavior: a meta-analysis. Health Psychol. 2016;35(11):1178–88.

Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 1977;84:191–215.

Strecher VJ, DeVellis BM, Becker MH, Rosenstock IM. The role of self-efficacy in achieving health behavior change. Health Educ Q. 1986;13(1):73–92.

Bolman C, Arwert TG, Vollink T. Adherence to prophylactic asthma medication: habit strength and cognitions. Heart Lung: J Acute Crit Care. 2011;40(1):63–75.

Phillips LA, Leventhal H, Leventhal EA. Assessing theoretical predictors of long-term medication adherence: patients’ treatment-related beliefs, experiential feedback and habit development. Psychol Health. 2013;28(10):1135–51.

Setterstrom AJ, Pearson JM, Orwig RA. Web-enabled wireless technology: an exploratory study of adoption and continued use intentions. Behav Inf Technol. 2013;32(11):1139–54.

Funding

This work was partially supported by grants from the National Institutes of Health (K23 MH120324; P50 DA054039).

Ethics declarations

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Conflict of Interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lipschitz, J.M., Pike, C.K., Hogan, T.P. et al. The Engagement Problem: a Review of Engagement with Digital Mental Health Interventions and Recommendations for a Path Forward. Curr Treat Options Psych 10, 119–135 (2023). https://doi.org/10.1007/s40501-023-00297-3

DOI: https://doi.org/10.1007/s40501-023-00297-3