Abstract

A meta-analysis of 52 studies teaching functional living skills to individuals with autism spectrum disorder was conducted. Using the Tau effect size, with Kruskal–Wallis tests and Dunn post-hoc comparisons, the following categories were analyzed: age, diagnosis, intervention type, dependent variable, setting, and implementer. Analyses for age yielded statistically significant findings supporting greater outcomes for elementary-aged individuals than for secondary-aged individuals, as well as for adolescents and adults than for preschool- and secondary-aged individuals. Moderate to strong effect sizes were noted across categories for diagnosis, intervention, and dependent variable. Outcomes indicated strong effects across categories for setting and implementer. The convergent validity of Tau effect sizes with visually analyzed ratings of evidence was also evaluated, and the two largely corresponded.

Building functional living skills to prepare individuals with autism spectrum disorder (ASD) for independence and employment has both humanitarian and economic advantages (Hendricks 2010). Functional living skills (e.g., personal care, vocational skills, and home-keeping) are often seen as distal indices for quality of life, as these skills are pivotal to independence and meaningful community participation (Alwell and Cobb 2009). It is widely recognized that a functional curricular approach tailored to individual needs must be adopted to produce meaningful outcomes for people with ASD (Ayres et al. 2011). According to the Centers for Disease Control and Prevention (CDC), ASD currently affects an estimated one in 68 children (CDC 2014); as this growing population transitions into adulthood and employment, the number of individuals with ASD supported by federal vocational rehabilitation has increased substantially (Cimera and Cowan 2009).

Since the early 1970s, the rise of a treatment-oriented approach has afforded more opportunities for individuals with disabilities to gain independence, be integrated into their communities, and lead meaningful lives (Fesko et al. 2012). In a 28-year follow-up study of 11 individuals with characteristics that would now be recognized as ASD, Kanner (1971) noted that the quality of the environment appeared to affect the outcomes of these few cases, describing admission to a state institution as “a total retreat to near-nothingness” and “tantamount to a life sentence” (p. 144). Public Law 94–142 was enacted in 1975, protecting the right to a free and appropriate public education for youth with disabilities (Education for All Handicapped Children’s Act of 1975). The most recent incarnation of this law, the Individuals with Disabilities Education Improvement Act (IDEIA 2004), emphasizes the use of evidence-based practices and postsecondary transition planning in educational programs.

Today, under the No Child Left Behind Act (NCLB 2001), students with disabilities are mandated to have access to grade-level standards and to participate in annual standardized testing measuring progress in general education standards. Under an academic-focused curriculum, the acquisition of functional life skills to prepare for long-term independence in the post-school environment often becomes less of an instructional priority (Ayres et al. 2011). Once these individuals exit the public school system, more responsibilities fall on the shoulders of caregivers, and these individuals approach adulthood with fewer supports (Graetz 2010). The current prevalence rates of ASD, coupled with limited resources devoted to a functional curriculum, suggest a need to determine the relative effects of various educational practices used to teach functional skills tailored to this exceptional population.

Several empirical studies suggest that individuals with ASD present unique patterns of adaptive behaviors in relation to their cognitive abilities (see review by Jung Lee and Ran Park 2007; Lopata et al. 2012; Matson et al. 2009). For instance, Matson et al. (2009) found that adaptive behaviors were more impaired as symptoms of ASD increased while individuals with intellectual disability alone demonstrated less impairment in adaptive behaviors. Therefore, it would be useful to evaluate the acquisition of functional living skills among individuals with ASD separately from other disability categories as well as between various cognitive functioning levels of ASD.

Although there has been significant research on, and improvement in, the early treatment of ASD, relatively little research appears to have focused on individuals beyond elementary age (Cimera and Cowan 2009; Machalicek et al. 2008). Early and intensive behavioral intervention, grounded in the principles of applied behavior analysis, is a well-validated approach to improving independent functioning, including functional living skills, among young children with ASD (e.g., Dawson et al. 2010; Eikeseth et al. 2007; Virués-Ortega 2010). However, Matson et al. (2012) noted the stark need for research evaluating interventions to teach functional living skills to adolescents and adults with ASD.

Although several strategies (e.g., prompting, modeling, reinforcement, shaping, and chaining) have been shown to be effective for teaching functional living skills (Alwell and Cobb 2009; Bennett and Dukes 2014; Flynn and Healy 2012; Palmen et al. 2012a; Walsh et al. 2014), we have a limited understanding of the comparative value of various study characteristics in promoting the acquisition of these skills among persons with ASD, because these studies have not been analyzed using meta-analytic techniques. If some strategies (e.g., behavioral and video modeling) were found to be as effective as others, practitioners could select interventions based on ease of implementation. Alternatively, if one intervention were found to be far more effective than others, practitioners would have information justifying an intervention that may be more costly or time-consuming (Charlop-Christy et al. 2000). Relatedly, it is not known whether current practices are more or less effective for particular categories of dependent variables (i.e., community access, employment, household chores, and self-help skills). Finally, individuals with ASD often have difficulty generalizing and maintaining skills (National Research Council 2001). Therefore, treatment must be consistent across environments and implementers to produce meaningful gains, which means that natural change agents must be equipped with the appropriate strategies. Further study is warranted concerning the contexts in which evidence-based interventions to teach functional living skills have been evaluated.

Comprehensive standards for the analysis of single-case research design quality and evidence of effect have been developed by the What Works Clearinghouse (WWC) of the Institute of Education Sciences, a component of the U.S. Department of Education (Kratochwill et al. 2010). These criteria provide a cohesive approach to ensuring methodological quality when aggregating data from single-case research designs through the use of a gating procedure (Kratochwill et al. 2010; Maggin et al. 2013). Employing these criteria produced high levels of reliability among visual analysts on ratings of design quality and evidence of effect (Maggin et al. 2013).

Rigorous innovations in nonoverlap methods of effect size calculation have been recognized and recommended as quantitative measures to be used in conjunction with design analysis and visual analysis of single-case research (Brossart et al. 2014; Carter 2013; Kratochwill and Levin 2014; Parker and Vannest 2012). Nonoverlap techniques are non-parametric statistical methods used to analyze single-case research designs and are particularly well suited to the characteristics of most single-case research studies. These statistics measure the extent of nonoverlap of the data between adjacent phases (Parker et al. 2011a). Nonoverlap indexes have been refined in recent years and appear to be increasingly adopted in the literature (e.g., Ganz et al. 2012; Maggin et al. 2013).

The Tau metric offers greater power and precision than other nonoverlap statistics (Parker et al. 2011a). Nonoverlap of all pairs (NAP) and Tau are similar nonoverlap effect size metrics that compare all possible pairs of data points between phases (Parker et al. 2011b). The NAP calculation is similar to Tau but differs in scale, and Tau provides an option of baseline trend control (i.e., Tau-U) in addition to nonoverlap. The NAP and Tau effect sizes have been used in single-case research studies (Ganz et al. 2013a, b; Hutchins and Prelock 2013), systematic reviews (Gaskin et al. 2013; Harrison et al. 2013), and meta-analytic reviews of single-case research (Bowman-Perrott et al. 2013; Roth et al. 2014).
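To make the relationship between NAP and Tau concrete, the following minimal Python sketch computes both for a single A-B contrast by comparing every baseline point with every intervention point. It assumes that higher values indicate improvement and omits the baseline trend correction of Tau-U; the function names and data are illustrative and are not taken from the software used in this review.

```python
from itertools import product


def pair_counts(baseline, treatment):
    """Count improving, deteriorating, and tied pairs over all A x B comparisons."""
    pos = neg = ties = 0
    for a, b in product(baseline, treatment):
        if b > a:
            pos += 1
        elif b < a:
            neg += 1
        else:
            ties += 1
    return pos, neg, ties


def tau(baseline, treatment):
    """Nonoverlap Tau in [-1, 1]: (improving - deteriorating pairs) / all pairs."""
    pos, neg, ties = pair_counts(baseline, treatment)
    return (pos - neg) / (pos + neg + ties)


def nap(baseline, treatment):
    """NAP in [0, 1]: improving pairs plus half of tied pairs, over all pairs."""
    pos, neg, ties = pair_counts(baseline, treatment)
    return (pos + 0.5 * ties) / (pos + neg + ties)


# Hypothetical baseline (A) and intervention (B) data
baseline = [1, 2, 2, 3]
treatment = [3, 4, 5, 5]
print(tau(baseline, treatment))  # 0.9375
print(nap(baseline, treatment))  # 0.96875
```

Because both statistics are built from the same pair counts, Tau equals 2 × NAP − 1, which is the difference in scale described above.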

Tau is largely consistent with visually analyzed ratings of graphed data (Hutchins and Prelock 2013; Brossart et al. 2014), although this correspondence holds particularly when the data are clear (Brossart et al. 2014). Using credible effect sizes to supplement visual analysis, while also considering methodological rigor through design analysis, has become the current state of the art in meta-analysis of single-case research (Brossart et al. 2014). It is also advisable to validate emerging nonoverlap effect sizes by assessing their convergence with findings from other measures (Carter 2013; Reynhout and Carter 2011). Given that visual analysis is the most widely accepted and used method of data interpretation in single-case research (Kratochwill et al. 2010), the credibility of effect sizes may be gauged by comparing them against indicators of visually analyzed effect.

The purpose of this meta-analytic review of single-case research studies was to determine the characteristics of supports that have evidence to suggest effectiveness for teaching functional living skills to individuals with ASD. Effects were broken down by participant characteristics, independent variable categories, dependent variable categories, treatment settings, and treatment implementers to determine potential moderators. The convergent validity of visual analysis criteria and Tau measures was empirically evaluated by testing specific categories of visually analyzed evidence as moderators using the Tau metric.

Research Questions

1. What are the magnitudes of effect (i.e., Tau effect sizes) of educational interventions for teaching functional living skills to people with ASD, differentiated by categories within the following variables: (a) participant age, (b) participant diagnoses, (c) independent variables, (d) dependent variables, (e) setting, and (f) implementer?

2. Are there statistically significant differences between categories of the evaluated variables?

3. To what extent do various systematically evaluated qualities of evidence determined through visual analysis agree with Tau effect sizes?

Methods

Search Procedures

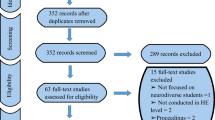

A systematic search was conducted in April 2013 in five electronic databases: ERIC, Academic Search Complete, Professional Development Collection, Social Science Full Text, and PsycINFO. The terms autis*, Asperger, ASD, PDD, and pervasive developmental disorder were each combined with the terms daily living, daily skill*, employ*, functional skill*, hygiene, independen* living, independen* skill, life skill, practical skill*, self-help, and self-care. Publication year and language were not restricted, but the search was restricted to peer-reviewed articles. This search returned 1896 articles. Articles that did not have an author or were not classified as peer-reviewed journal articles were excluded, leaving 1761 articles to be screened against the inclusion and exclusion criteria.
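The pairing of search terms amounts to a Cartesian product: five population terms crossed with eleven skill terms give 55 term combinations. The sketch below only illustrates that crossing. The AND operator and the quoting of multi-word phrases are assumptions made for illustration; each database applies its own syntax for wildcards and phrases, and the exact query strings submitted are not reported.

```python
from itertools import product

population_terms = ["autis*", "Asperger", "ASD", "PDD", "pervasive developmental disorder"]
skill_terms = [
    "daily living", "daily skill*", "employ*", "functional skill*", "hygiene",
    "independen* living", "independen* skill", "life skill", "practical skill*",
    "self-help", "self-care",
]


def quote(term):
    """Wrap multi-word phrases in double quotes (assumed phrase syntax)."""
    return f'"{term}"' if " " in term else term


def build_queries(population, skills):
    """Combine every population term with every skill term using AND (assumed)."""
    return [f"{quote(p)} AND {quote(s)}" for p, s in product(population, skills)]


queries = build_queries(population_terms, skill_terms)
print(len(queries))  # 55
print(queries[0])    # autis* AND "daily living"
```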

Inclusion and Exclusion Criteria

An article had to meet the following criteria to be included in this review. First, the study had to include a participant diagnosed with an ASD. Eligible participants included those with a pervasive developmental disorder, Asperger syndrome, ASD, or “autism”, as well as participants described as having “autistic behaviors”. Second, the study had to employ single-case research methodology, including multiple-baseline, multiple-probe, alternating treatment, reversal, or withdrawal designs, or a combination of these. Third, the article had to display a line graph indicating repeated measurement of a behavior. Fourth, the study had to target an independent adaptive or functional living skill of a person with ASD as a dependent variable (defined as housekeeping tasks, employment, transportation use, cooking, hygiene and personal care, shopping, accessing public settings, banking and money management, self-feeding, and toileting initiations). Studies with dependent variables related to social, play, communication, or leisure skills that did not also include a measure of functional living skills were excluded. Further, studies in which the researcher's behavior was indistinguishable from the participant's behavior (e.g., Toelken and Miltenberger 2012) were excluded. Fifth, the study had to investigate an educational intervention (i.e., one involving participation in a learning activity); studies investigating pharmacological treatments were excluded. Finally, articles had to be published in English.

This initial screening against the inclusion and exclusion criteria yielded 44 studies for further evaluation. These articles were then screened to ensure that they met basic Design Standards, as described below. A total of 28 studies met the predetermined Design Standards and 16 were excluded. Following Design Standards screening, an ancestral search was conducted: the reference lists of the 28 initially included studies were appraised, and an electronic search was conducted using the first authors’ surnames to identify other potential articles. The results of this iterative ancestral search were screened against the initial inclusion and exclusion criteria. This search yielded an additional 27 articles to be screened against the Design Standards, for a total of 71 articles when combined with the 44 articles screened before the ancestral search.

Inter-rater Reliability on Inclusion and Exclusion Criteria

For the initial search and determination of whether a study met the inclusion criteria, an independent second rater applied the initial inclusion and exclusion criteria to 881 of the 1761 articles reviewed (50 %). All inter-rater reliability (IRR) scores in this meta-analysis were calculated by dividing agreements between raters by agreements plus disagreements and multiplying by 100. This initial screening resulted in an IRR of 97 % for the overall inclusion or exclusion of each article. Disagreements were resolved either by a third reviewer, who made the final determination, or by discussion between the two reviewers until consensus was reached.
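The percentage agreement formula used for every IRR value in this review can be written as a short function. The sketch below applies it to counts reported elsewhere in the Methods (for example, 14 disagreements among 71 overall design classifications, or 57 agreements) to show how the rounded percentages arise; the function name is hypothetical.

```python
def percent_agreement(agreements, disagreements):
    """IRR = agreements / (agreements + disagreements) x 100."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("no coded items to compare")
    return 100 * agreements / total


# (agreements, disagreements) derived from counts reported in the Methods
examples = {
    "overall design classification": (57, 14),  # 71 articles
    "individual Design Standards": (361, 65),   # 426 coded items
    "moderator coding": (512, 22),              # 534 possible agreements
    "rank-order data extraction": (3502, 219),  # 3721 pairs
}
for label, (agree, disagree) in examples.items():
    # prints 80 %, 85 %, 96 %, and 94 % respectively
    print(f"{label}: {round(percent_agreement(agree, disagree))} %")
```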

Application of the What Works Clearinghouse Standards

Standards of design quality and evidence were evaluated based on the standards developed by the WWC (Kratochwill et al. 2010) and applied via codes developed by Maggin et al. (2013). Articles meeting initial inclusion criteria were reviewed to ensure they reached minimum Design Standards criteria, and the included cases from articles were then screened for Evidence Standards. Standards were applied specifically to the dependent variables and participants of interest in this review.

Design Standards

The coding protocol and appendix developed by Maggin et al. (2013) were adopted in the current analysis to evaluate six Design Standards for each study. Design Standard 1 evaluated whether the independent variable was manipulated systematically (Maggin et al. 2013); a score of 1 was assigned if the standard was met and a score of 0 if it was not. For Design Standard 2A, a score of 1 was assigned if interobserver agreement (IOA) was systematically measured and reported for each outcome, and a score of 0 if IOA was not reported. Design Standard 2B evaluated how often IOA was assessed. For the current analysis, this standard was adapted to include an additional rating option in order to broaden inclusion and gather enough qualifying studies to permit meta-analysis. A score of 2 (originally a 1; Maggin et al. 2013) was assigned if IOA was collected for at least 20 % of the sessions within each condition. Under the adaptation, a score of 1 was assigned if the study reported that IOA was collected for at least 20 % of the sessions overall but did not report that IOA was collected for each condition. A score of 0 was assigned if IOA data collection did not meet the 20 % minimum. Design Standard 2C evaluated whether IOA results met minimum quality thresholds, defined as 0.80 for percentage agreement indices or 0.60 for kappa measures (Kratochwill et al. 2010); it was rated 1 if IOA met these thresholds and 0 if it did not. Design Standard 3 assessed whether the study included a minimum of three attempts to demonstrate effects at different points in time, resulting in a rating of 1 if the study met this criterion and 0 if it did not. Design Standard 4 evaluated the demonstration of effects through a minimum number of data points per phase. This standard was adapted from Maggin et al. (2013) with a clarification reflecting the standard as described by Kratochwill et al. (2010), which specifies that alternating treatment designs must include a minimum of four data points. For a study to receive a score of 2, each condition (phase) had to include a minimum of five data points. A score of 1 was assigned if a condition had at least three but fewer than five data points, or if an alternating treatments design had only four data points. A score of 0 was assigned if the study did not meet these criteria.
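The two Design Standards with three-level scores can be expressed as small scoring functions. This Python sketch is one interpretation of the rules above, not the authors' coding protocol: IOA coverage is passed as proportions of sessions, and a study that reports per-condition coverage with any condition below 20 % is assumed to score 0 on Design Standard 2B. Function and argument names are hypothetical.

```python
def score_ds2b(overall_prop, per_condition_props=None):
    """Design Standard 2B (adapted): frequency of IOA collection.

    overall_prop: proportion of all sessions with IOA.
    per_condition_props: proportion per condition, or None if not reported.
    """
    if per_condition_props is not None:
        # Reported per condition: 2 only if every condition reaches 20 % (assumed 0 otherwise)
        return 2 if all(p >= 0.20 for p in per_condition_props) else 0
    # Only an overall figure reported: the added rating of 1
    return 1 if overall_prop >= 0.20 else 0


def score_ds4(points_per_phase, alternating_treatments=False):
    """Design Standard 4: minimum number of data points per phase."""
    fewest = min(points_per_phase)
    if fewest >= 5:
        return 2
    minimum = 4 if alternating_treatments else 3
    return 1 if fewest >= minimum else 0


print(score_ds2b(0.25, [0.30, 0.22]))                 # 2
print(score_ds2b(0.25))                               # 1
print(score_ds4([3, 8, 6]))                           # 1
print(score_ds4([4, 4], alternating_treatments=True))  # 1
```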

Articles were then assigned an Overall Design classification (range 0 to 2; Kratochwill et al. 2010; Maggin et al. 2013). Studies that received the highest score on each Design Standard received an Overall Design classification of 2, indicating that the article Meets Design Standards. Articles that did not receive the highest score on all Design Standards but did not score 0 on any individual Design Standard received an Overall Design classification of 1, or Meets Design Standards with Reservations. Articles assigned a 0 on any Design Standard were given an Overall Design classification of 0, or Does Not Meet Design Standards, and were excluded from further review. Studies using an alternating treatments design with no baseline (e.g., Kern et al. 2007) were also excluded at this point, given that effects were to be evaluated by comparing the A phase with the B phase. Following the application of Design Standards to 71 articles, 52 articles qualified for inclusion. Of these, three articles were classified as Meets Design Standards (Cannella-Malone et al. 2006; Cihak and Grim 2008; Goodson et al. 2007). The remaining 49 articles were classified as Meets Design Standards with Reservations.
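The Overall Design rule reduces to two checks: any score of 0 yields Does Not Meet Design Standards, and the maximum score on every standard yields Meets Design Standards. The minimal sketch below uses hypothetical dictionary keys for the six standards; the separate exclusion of alternating treatments designs without a baseline is not modeled.

```python
# Maximum attainable score for each of the six Design Standards
MAX_SCORES = {"DS1": 1, "DS2A": 1, "DS2B": 2, "DS2C": 1, "DS3": 1, "DS4": 2}
LABELS = {
    2: "Meets Design Standards",
    1: "Meets Design Standards with Reservations",
    0: "Does Not Meet Design Standards",
}


def overall_design(scores):
    """Return the Overall Design classification (0, 1, or 2) from per-standard scores."""
    if any(scores[k] == 0 for k in MAX_SCORES):
        return 0
    if all(scores[k] == MAX_SCORES[k] for k in MAX_SCORES):
        return 2
    return 1


# Hypothetical study: IOA reported only overall, so DS2B = 1
study = {"DS1": 1, "DS2A": 1, "DS2B": 1, "DS2C": 1, "DS3": 1, "DS4": 2}
print(LABELS[overall_design(study)])  # Meets Design Standards with Reservations
```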

Moderator Coding of Evidence Standards

The convergent validity of the Tau effect sizes with the WWC Evidence Standards was evaluated on data for single dependent measures from individual participants (i.e., cases) within qualifying designs from the 52 included studies. In total, 160 cases meeting the aforementioned inclusion criteria were analyzed. Cases were rated on items of Evidence Standards within three categories of visually analyzed indicators of effect described by Maggin et al. (2013) and based on Kratochwill et al. (2010): (a) Baseline Analysis, (b) Within Phase Analysis, and (c) Between Phase Basic Effects. Between Phase Experimental Effects were rated considering each design as a whole, and Overall Evidence for each case was then classified as Strong Evidence, Moderate Evidence, or No Evidence based on three Overall Effectiveness items (i.e., ratings based on the number of data points, the total number of effects, and the ratio of those effects to the non-effects; see Maggin et al. 2013).

Case ratings on two Between Phase Basic Effects items (Between Basic and Between Overlap) were evaluated as potential moderators, in addition to the Overall Evidence classification (Maggin et al. 2013). Between Basic ratings documented the presence (rated as 1, or Basic Effects Present) or absence (rated as 0, or No Basic Effects Present) of a basic effect between phases. Between Basic ratings were evaluated as a potential moderator because they represent a holistic rating of visual analysis indicators (i.e., immediacy of effects, trend, overlap, variability, level, and consistency of effects) that can be compared against Tau, which principally measures overlap. Between Overlap ratings indicated whether the overlap between baseline and treatment phases was sufficiently low to document an effect (Maggin et al. 2013). Ratings of either Overlap Sufficiently Low (rated as 1) or Overlap Not Sufficiently Low (rated as 0) were assigned per case by judging only the overlap between phases through visual analysis. Between Overlap ratings were evaluated as a moderator because Tau, as a statistical measure of overlap, might be expected to agree closely with visually analyzed overlap. Finally, the Overall Evidence classification of Strong Evidence, Moderate Evidence, or No Evidence was analyzed as a potential moderator because it documents not only the occurrence of basic effects but also the level of experimental control (e.g., at least three demonstrations of effect) within each design as a whole. Because overlap statistics do not account for experimental control within a single-case design, consistency between Tau scores and the Overall Evidence classifications for this group of studies can, to some extent, support the validity of findings from other moderators in terms of experimental control.

Inter-rater Reliability on Design and Evidence Standards

Two independent raters analyzed each of the articles (n = 71, 100 %) for the six Design Standards and independently classified each study as Meets Design Standards, Meets Design Standards with Reservations, or Does Not Meet Design Standards. For this overall classification of the 71 articles, IRR was 80 % (14 disagreements). Averaged across the six Design Standards, with a total of 426 coded items, IRR reached 85 % (range, 69 to 99 %). There were 65 disagreements in total: three for Design Standard 1, one for Design Standard 2A, 16 for Design Standard 2B, 12 for Design Standard 2C, 11 for Design Standard 3, and 22 for Design Standard 4. All disagreements were either discussed until consensus was reached or evaluated by a third rater, with the final determination made by two of the three raters. Thus, final IRR was 100 % for individual Design Standards and overall classifications. Prior to rating Evidence Standards independently, the two raters discussed and evaluated three selected articles together. The two raters then independently analyzed 16 randomly selected articles (31 %), comprising 52 of 160 total cases (33 %). IRR for Evidence Standard codes reached 100 % for Between Basic ratings, 98 % for Between Overlap ratings, and 92 % for Overall Evidence classifications. Disagreements were discussed between the two raters until consensus was reached.

Moderator Coding of Descriptive Study Characteristics

In addition to the Evidence Standard codes described above, each A-B phase contrast was summarized based on the following potential moderators: participant age, participant diagnosis, description of the independent variable, description of the dependent variable, setting, and implementer characteristics. If a study variable did not fit any of the categories defined below for a given moderator, or if the article did not specify it, the variable was coded as OTHER. OTHER codes were not evaluated as a moderator category because of the variability within that classification.

The participant’s age was coded as PRESCH if the age was reported as less than 5 years, ELEM for 5 to less than 10 years, SECOND for 10 to less than 15 years, and ADOLADULT for 15 years or older. For diagnosis, a code of AU was assigned if the participant was reported to have ASD or autism. A code of HFAAS was assigned for cognitively high-functioning autism or Asperger syndrome. A code of AUIDD was assigned for a diagnosis of autism together with mental retardation or intellectual disability, a reported IQ below 70, or adaptive behavior scores delayed by two or more years as reported by the age equivalent score.
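The age bins and diagnosis codes can be sketched as simple decision functions. The age boundaries follow the ranges reported in the Results (5–9, 10–14, and 15 years and up). The diagnosis function's argument names, its keyword matching on the reported label, and the precedence of AUIDD over HFAAS are assumptions made for illustration, not the coders' actual procedure.

```python
def code_age(age_years):
    """Age bins; a missing age falls back to OTHER."""
    if age_years is None:
        return "OTHER"
    if age_years < 5:
        return "PRESCH"
    if age_years < 10:
        return "ELEM"
    if age_years < 15:
        return "SECOND"
    return "ADOLADULT"


def code_diagnosis(label, iq=None, adaptive_delay_years=None, reported_id=False):
    """Diagnosis codes; argument names and keyword matching are hypothetical."""
    text = label.lower()
    if not any(k in text for k in ("autism", "autistic", "asd", "asperger")):
        return "OTHER"
    impaired = (
        reported_id
        or (iq is not None and iq < 70)
        or (adaptive_delay_years is not None and adaptive_delay_years >= 2)
    )
    if impaired:
        return "AUIDD"
    if "asperger" in text or "high-functioning" in text:
        return "HFAAS"
    return "AU"


print(code_age(9), code_age(15))            # ELEM ADOLADULT
print(code_diagnosis("autism", iq=55))      # AUIDD
print(code_diagnosis("Asperger syndrome"))  # HFAAS
```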

The independent variable was coded into four categories, selected on the basis of an initial informal review of the included articles. If a study used video modeling as the independent variable, it was coded as VM. An intervention using audio cueing (e.g., “bug in the ear” Bluetooth technology that allowed the instructor to give the participant verbal instructions) was coded as AC. If a study used behavioral in vivo instruction alone (e.g., prompting, chaining, fading prompts, and use of reinforcement), it was coded as BIV. The use of visual cueing (schedules, pictorial task analysis, social stories, and/or self-monitoring) was coded as VC. Studies that used video modeling, audio cueing, or visual cueing in combination with behavioral in vivo instruction were assigned to the former category (e.g., visual cueing), given that behavioral methods of instruction were commonly used in tandem with each of these interventions.
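The combination rule for independent variables can be sketched as follows. The component names are hypothetical labels. Treating an intervention with two or more of the focal components, or with any component outside the four categories, as OTHER is an assumption, chosen to be consistent with the mixed-intervention studies that the Results report as OTHER.

```python
FOCAL_CODES = {"video modeling": "VM", "audio cueing": "AC", "visual cueing": "VC"}
BEHAVIORAL = "behavioral in vivo"


def code_independent_variable(components):
    """Code an intervention from the set of component names it reports."""
    components = set(components)
    focal = [code for name, code in FOCAL_CODES.items() if name in components]
    unknown = components - set(FOCAL_CODES) - {BEHAVIORAL}
    if unknown or len(focal) > 1:
        return "OTHER"  # mixed or uncategorized interventions (assumption)
    if focal:
        return focal[0]  # focal component, with or without behavioral support
    return "BIV" if BEHAVIORAL in components else "OTHER"


print(code_independent_variable({"video modeling", BEHAVIORAL}))  # VM
print(code_independent_variable({BEHAVIORAL}))                    # BIV
```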

Four categories were coded for the dependent variables. Targeted skills that included toileting, cooking, hygiene, bathing, tooth-brushing, dressing, or independent eating were coded as self-help (SH). Skills identified as household chores (e.g., cleaning and laundry) were coded as HC. Skills related to employment were coded as ES, and skills related to accessing the community (e.g., transportation use, banking and shopping) were coded as COMMACC.

The setting where the intervention occurred was coded as SCHL for a school or clinic setting, EMP for an employment setting, HM for a home setting, and COMMU for a community setting. Finally, the implementer was coded as TCHR if a teacher, aide, or paraprofessional was described as implementing the intervention, RCHR if a researcher or graduate student implemented the intervention, and PAR if a parent or caregiver implemented the intervention.

Inter-rater Reliability on Moderator Coding of Study Characteristics

To establish IRR for codes across the six moderator categories described above, two independent raters coded 89 randomly selected effects (i.e., A-B phase contrasts; 35 % of a total of 251) across 16 studies (31 %). IRR was calculated based on whether the two raters agreed on the code for each moderator category of each effect, for a total of 534 possible agreements. The overall IRR for the moderator coding was 96 % (range, 83 to 100 % across moderator categories), with 22 disagreements. In the event of a disagreement, a third rater reviewed it and made a final decision.

Data Extraction and Analysis

Data were extracted from each line graph in each article that displayed data for a participant with behaviors meeting inclusion criteria (i.e., participants in an article who did not have ASD were excluded; data related to other behaviors, such as challenging behaviors, were excluded). Phases selected for contrasts in this meta-analysis included adjacent baseline and intervention phases (Parker et al. 2011b). That is, for example, in a reversal design (A1B1A2B2), phase A1 was contrasted with B1 and A2 was contrasted with B2. Data were extracted by hand using a rank-order approach (Parker et al. 2011a). The order of data points in each graph was ranked according to their relative order across phases. The lowest point across phases was assigned a rank of 1 and the rank increased with the relative position of the data on the graph until all data were assigned a relative rank. Dependent variables intended for reduction were ranked in reverse order. Data points that were on the same level were given the same ranked score. Ranks for data points were entered into a table in time-series order and by phase.
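The rank-order extraction and phase pairing can be sketched in Python. Ranks are assigned across all phases of a graph, with equal levels sharing a rank (a dense ranking) and the order reversed for behaviors targeted for reduction; each baseline phase is then paired with the intervention phase that immediately follows it. The phase labels and data are hypothetical. Because Tau depends only on the ordering of values, contrasts computed on these ranks give the same Tau as the raw data would.

```python
def dense_ranks(values, reduce_target=False):
    """Lowest level gets rank 1 and equal levels share a rank; reversed for reduction targets."""
    levels = sorted(set(values), reverse=reduce_target)
    rank_of = {level: i + 1 for i, level in enumerate(levels)}
    return [rank_of[v] for v in values]


def rank_graph(phases, reduce_target=False):
    """Rank every data point in a graph jointly, then split the ranks back into phases."""
    pooled = [v for _, values in phases for v in values]
    ranks = dense_ranks(pooled, reduce_target)
    ranked, start = [], 0
    for label, values in phases:
        ranked.append((label, ranks[start:start + len(values)]))
        start += len(values)
    return ranked


def ab_contrasts(ranked_phases):
    """Pair each A phase with the B phase that immediately follows it."""
    return [
        (a_label, b_label, a, b)
        for (a_label, a), (b_label, b) in zip(ranked_phases, ranked_phases[1:])
        if a_label.startswith("A") and b_label.startswith("B")
    ]


# Hypothetical A1B1A2B2 reversal design (e.g., percentage of task steps completed)
graph = [("A1", [10, 20, 20]), ("B1", [60, 80]), ("A2", [30, 20]), ("B2", [70, 90])]
ranked = rank_graph(graph)
print(ranked)  # [('A1', [1, 2, 2]), ('B1', [4, 6]), ('A2', [3, 2]), ('B2', [5, 7])]
print([(a, b) for a, b, _, _ in ab_contrasts(ranked)])  # [('A1', 'B1'), ('A2', 'B2')]
```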

Inter-rater Reliability on Data Extraction

IRR was calculated on data extraction to control for potential errors in transposing rank order. Of the 52 included studies, data from 44 studies (85 %) were rank ordered by a second independent rater. IRR was calculated as point-to-point percentage agreement on the rank-ordered data across phases (including generalization and maintenance phases, to permit later analyses, in addition to the baseline and intervention phases used in the current analyses). There were 3502 agreements out of 3721 possible pairs, for an IRR of 94 % between raters. For rankings on which the two raters did not agree, a third independent rater coded the rank order of data points and consulted with the other coders until 100 % agreement was reached.

Effect Size Calculation

As single-case research was the focus of this analysis, a non-parametric effect size was used for all moderator analyses. Tau is an effect size measure that quantifies the degree of nonoverlap between phases (Parker et al. 2011b). Tau scores range from −1.0 to 1.0: a positive score (between 0.0 and 1.0) indicates improvement from the first to the second phase, and a negative score indicates deterioration. For this analysis, individual Tau effect sizes were aggregated to obtain omnibus effects for defined moderators. All Tau analyses were calculated and aggregated using original software developed on the Maple platform, available from the seventh author, John Davis.

After calculating the omnibus effects according to different moderators of interest, additional analyses were conducted to evaluate the statistical significance of the results. First, the Kruskal–Wallis one-way analysis of variance was utilized (Kruskal and Wallis 1952). In the event that any of the moderator variables obtained significance with the Kruskal–Wallis analysis, a Dunn post-hoc test was conducted to evaluate the pair-wise combinations (Dunn 1964).
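The two tests can be sketched without external libraries. The following Python code computes the tie-corrected Kruskal–Wallis H with a chi-square p-value, and Dunn's pairwise z statistics with two-sided normal p-values. The paper does not state how the Dunn p-values were adjusted for multiple comparisons, so the Bonferroni adjustment here is an assumption, as is treating individual contrast-level Tau values as the observations; the group values are hypothetical. In practice, `scipy.stats.kruskal` returns the same tie-corrected H statistic.

```python
import math
from collections import Counter
from itertools import combinations


def midranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pooled_ranks(groups):
    """Rank all observations jointly; return per-group ranks, N, and the tie term."""
    pooled = [v for g in groups for v in g]
    ranks = midranks(pooled)
    per_group, start = [], 0
    for g in groups:
        per_group.append(ranks[start:start + len(g)])
        start += len(g)
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    return per_group, len(pooled), tie_term


def chi2_sf(x, df):
    """Chi-square upper tail via the series for the regularized lower gamma."""
    if x <= 0:
        return 1.0
    a, z = df / 2, x / 2
    term = total = 1.0 / a
    n = 1
    while term > total * 1e-15:
        term *= z / (a + n)
        total += term
        n += 1
    lower = math.exp(a * math.log(z) - z - math.lgamma(a)) * total
    return max(0.0, 1.0 - lower)


def kruskal_wallis(groups):
    """Tie-corrected H and its p-value with k - 1 degrees of freedom."""
    per_group, n, tie_term = pooled_ranks(groups)
    h = 12 / (n * (n + 1)) * sum(sum(r) ** 2 / len(r) for r in per_group) - 3 * (n + 1)
    h /= 1 - tie_term / (n ** 3 - n)  # correction for tied values
    return h, chi2_sf(h, len(groups) - 1)


def dunn(groups, labels):
    """Pairwise Dunn z statistics with Bonferroni-adjusted p-values (assumed)."""
    per_group, n, tie_term = pooled_ranks(groups)
    mean_rank = [sum(r) / len(r) for r in per_group]
    var = n * (n + 1) / 12 - tie_term / (12 * (n - 1))
    m = len(groups) * (len(groups) - 1) // 2  # number of pairwise comparisons
    out = {}
    for i, j in combinations(range(len(groups)), 2):
        se = math.sqrt(var * (1 / len(groups[i]) + 1 / len(groups[j])))
        z = (mean_rank[i] - mean_rank[j]) / se
        p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
        out[(labels[i], labels[j])] = (z, min(1.0, m * p))
    return out


# Hypothetical contrast-level Tau values for three moderator categories
groups = [[0.50, 0.60, 0.70], [0.80, 0.85, 0.90], [0.95, 0.97, 1.00]]
h, p = kruskal_wallis(groups)
print(round(h, 2))  # 7.2
```

With Tau values as the observations, a significant H would then be followed by the `dunn` comparisons, mirroring the two-step procedure described above.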

Results

Data from this study yielded 251 separate A-B contrasts from 52 unique studies with 133 participants. The omnibus Tau across all studies was 0.85 CI95 [0.83, 0.89], which indicates that interventions to improve functional living skills among people with ASD have strong effects overall (i.e., 0.85–0.95). Within these studies, a broad range of Tau values was identified (from −0.75 to 1.00), while a majority of studies resulted in strong or moderate effects (i.e., 0.70–0.84). Given the broad range of effect sizes across studies, analyses of potential moderator variables were conducted.

Age

Four categories were coded within the age variable (see Table 1). Within this analysis, Tau effect sizes ranged from a strong effect of 0.92 CI95 [0.86, 0.99] for elementary learners (ages 5–9 years) to a weak effect of 0.52 CI95 [0.43, 0.61] for secondary-level learners (ages 10–14 years). The Kruskal–Wallis analysis indicated statistically significant differences between outcomes (p < 0.01). The Dunn post-hoc procedure (see Table 2) indicated a statistically significant difference between the preschool (less than 5 years) and adolescent/adult (15 years and up) age groups, suggesting that the adolescent/adult age group had relatively stronger effects. Results also showed statistically significant differences between the elementary and secondary age groups, suggesting that interventions with elementary-aged children had stronger effects than those with secondary-aged individuals. In addition, statistically significant differences were found between the secondary and adolescent/adult age groups, suggesting that interventions with the adolescent/adult age group had stronger effects than those with the secondary age group.

Diagnosis

Within the diagnosis category, three unique variables were categorized (see Table 3). Tau effect sizes ranged from a strong effect of 0.87 CI95 [0.83, 0.91] for participants categorized as having autism and intellectual disability to a moderate effect of 0.70 CI95 [0.61, 0.80] for participants categorized as having high-functioning autism/Asperger syndrome. The Kruskal–Wallis analysis indicated statistically significant differences between diagnostic categories (p = 0.025). However, the Dunn post-hoc procedure did not indicate a statistically significant difference between any pair of diagnostic categories. Studies including participants described as having multiple prior diagnoses on the autism spectrum, or as eligible for special education services under the category of autism, were considered vague or mixed and were therefore denoted as OTHER under participant diagnosis (n = 2).

Independent Variable

The investigation of differences between studies based on the independent variable had four unique variables (see Table 4). Strong effects were found for visual cueing (Tau = 0.93 CI95 [0.83, 1.00]), behavioral in vivo instruction (Tau = 0.89 CI95 [0.83, 0.95]), and audio cueing (Tau = 0.85 CI95 [0.77, 0.94]), and a moderate effect was found for video modeling (Tau = 0.83 CI95 [0.79, 0.87]). The Kruskal–Wallis analysis indicated no statistically significant differences between studies based on the targeted independent variable (p = 0.156). Studies denoted as OTHER under the independent variable used additional-component or mixed interventions, including parent-therapist collaboration or parent-training programs, personal digital assistants, and multimedia or mixed instruction modes (n = 7).

Dependent Variable

The investigation of differences between studies based on the dependent variable had four unique variables (see Table 5). Tau effect sizes ranged from a strong effect of 0.91 CI95 [0.85, 0.96] for employment skills (e.g., assembling and cleaning at a job) to a moderate effect of 0.78 CI95 [0.51, 1.00] for household chores (e.g., cleaning and laundry). The Kruskal–Wallis analysis showed no statistically significant differences between studies based on the targeted dependent variable, although the result approached significance (p = 0.061). One study, which targeted off-task behavior, was denoted as OTHER for the dependent variable.

Setting

Analysis of the study setting had four unique variables (see Table 6). Tau effect sizes indicated strong effects for each of the four setting variables. The Kruskal–Wallis analysis showed no statistically significant differences between studies based on the setting variable (p = 0.72). Studies that included mixed settings were denoted as OTHER under the setting category (n = 8).

Implementer

Analysis of studies based on implementer had three unique variables (see Table 7). Within this analysis, Tau effect sizes showed strong effects for each of the three variables. The Kruskal–Wallis analysis showed no statistically significant differences between studies based on the implementer (p = 0.92). Studies were denoted as OTHER under implementer when they included mixed categories of implementers or the implementer’s role could not be determined (n = 24).

Convergent Validity with Evidence Standards

Between Basic

Analysis of differences between studies based on Between Basic ratings had two unique variables. The Basic Effects Present variable yielded 236 effect sizes from 50 studies with 124 participants, and the No Basic Effects Present variable yielded 15 effect sizes from eight studies with 15 participants. Tau effect sizes ranged from a strong effect of 0.89 CI95 [0.86, 0.92] for the Basic Effects Present variable to a weak effect of 0.29 CI95 [0.18, 0.41] for the No Basic Effects Present variable. The Kruskal–Wallis analysis indicated statistically significant differences between studies based on the Between Basic variable (p < 0.001).
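The effect-size, study, and participant counts reported for each visual analysis code can be tallied from a contrast-level table, as in the hypothetical sketch below; the record layout and labels are assumptions for illustration. Effect sizes are additive across codes (236 + 15 = 251 contrasts), but study counts are not: 50 + 8 = 58 exceeds the 52 unique studies, presumably because some studies contributed contrasts to both categories.

```python
from collections import defaultdict


def summarize_by_code(records):
    """Count effect sizes, distinct studies, and distinct participants per code.

    Each record is (study_id, participant_id, code, tau) for one AB contrast.
    Participants are keyed by (study_id, participant_id) so that IDs reused
    across different studies are not merged.
    """
    effect_sizes = defaultdict(int)
    studies = defaultdict(set)
    participants = defaultdict(set)
    for study, participant, code, _tau in records:
        effect_sizes[code] += 1
        studies[code].add(study)
        participants[code].add((study, participant))
    return {
        code: (effect_sizes[code], len(studies[code]), len(participants[code]))
        for code in effect_sizes
    }


records = [  # hypothetical contrasts, not data from this review
    ("S1", "P1", "Basic Effects Present", 0.90),
    ("S1", "P1", "Basic Effects Present", 1.00),
    ("S1", "P2", "No Basic Effects Present", 0.20),
    ("S2", "P1", "Basic Effects Present", 0.80),
]
summary = summarize_by_code(records)
```

Here study S1 is counted under both codes, so the study counts (2 and 1) sum to more than the two unique studies in the table.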

Between Overlap

Analysis of differences between studies based on the Between Overlap codes had two unique variables. The Overlap Sufficiently Low variable yielded 231 effect sizes from 47 studies with 118 participants, and the Overlap Not Sufficiently Low variable yielded 20 effect sizes from nine studies with 18 participants. Tau effect sizes ranged from a strong effect of 0.91 CI95 [0.87, 0.94] for the Overlap Sufficiently Low variable to a weak effect of 0.29 CI95 [0.19, 0.40] for the Overlap Not Sufficiently Low variable. The Kruskal–Wallis analysis indicated statistically significant differences between studies based on the Between Overlap variable (p < 0.001).

Overall Evidence

Analysis of differences between studies based on Overall Evidence had three unique variables (see Table 8). Tau effect sizes ranged from a strong effect of 0.91 CI95 [0.86, 0.96] for Moderate Evidence ratings to a weak effect of 0.43 CI95 [0.33, 0.53] for No Evidence ratings. The Kruskal–Wallis analysis indicated statistically significant differences between studies based on the Overall Evidence variable (p < 0.001). The Dunn post-hoc procedure (see Table 9) indicated statistically significant differences between the Moderate Evidence and No Evidence variables and between the Strong Evidence and No Evidence variables. No statistically significant difference was found between the Moderate Evidence and Strong Evidence classifications.

Discussion

This meta-analysis synthesized the findings of single-case research studies to evaluate the effectiveness of multiple types of interventions for improving functional living skills in individuals with ASD. It appears to be the first meta-analytic review of single-case research to identify the characteristics of existing studies that provide evidence of effectiveness for teaching functional living skills to individuals with ASD. The findings provide overall support for the efficacy of the analyzed interventions in improving various functional living skills among individuals with ASD across ages and cognitive functioning levels. Interventions also generally produced improvements across settings and implementers.

We first sought to identify differences in the magnitude of effects across participant ages. In this review, approximately half of the studies included adolescents and adults with ASD, and interventions with this age group yielded strong effects that were statistically significantly greater than those for both secondary- and preschool-aged individuals. The prominence of adolescents and adults in this review appears to run contrary to the educational literature on ASD at large; however, the current review appears to be the most inclusive of all age categories in the area of teaching functional living skills to persons with ASD and thus may offer a more accurate overview of the literature in this area to date. Previous reviews in this area have targeted specific age categories (Machalicek et al. 2008; Bennett and Dukes 2014; Palmen et al. 2012a) and dependent variables most relevant to specific age categories (Flynn and Healy 2012; Walsh et al. 2014). The frequent targeting of adolescents and adults found here may reflect the high priority given to the acquisition of functional living skills within habilitation programs for adolescents and adults with disabilities (Stancliffe et al. 2000). Interventions with elementary-aged individuals, who were represented by more participants than preschool-aged individuals, also yielded strong effects. Perhaps older children were more developmentally ready to learn, or had more learning experiences with skills typically targeted during the childhood years (e.g., self-help), than preschool-aged children. However, effects were not linear in relation to age: secondary-aged individuals were less responsive to functional living skills interventions than elementary-aged individuals to a statistically significant degree. This difference may reflect the nature of the skills or interventions targeted at different ages.

The second question addressed by this study was whether effects differed by participants’ cognitive functioning levels. Overall, moderate to strong treatment effects were found across cognitive functioning levels, and the Dunn post-hoc procedure identified no statistically significant pairwise differences between diagnostic categories. Although participants with high-functioning autism or Asperger syndrome showed the lowest treatment effect, few studies included these participants, which makes it difficult to conclude with confidence that the interventions were more effective for individuals with autism or with autism and intellectual disabilities. Further, given the pronounced characteristics (i.e., severely impaired language, behavior, and social skills) of individuals with classic autism, more research may have focused on these individuals than on individuals who were high functioning.

The third question addressed by this meta-analysis was whether effects differed by type of intervention. Overall, moderate to strong treatment effects were found across interventions. These findings are consistent with the broad literature on functional living skills interventions for persons with disabilities, which supports behavioral intervention components (Alwell and Cobb 2009). Studies that used visual cueing showed the strongest treatment effect, followed by those using behavioral in vivo instruction alone. Visual cue interventions tended to include behavioral components such as modeling, prompting, reinforcement, or prompt fading (e.g., Parker and Kamps 2011; Pierce and Schreibman 1994; Ganz and Sigafoos 2005; Mechling and Stephens 2009). These findings may suggest that combining visual cues with behavioral procedures produced additive effects relative to behavioral procedures alone. However, it is not clear whether visual cue interventions used alone can be considered effective in improving functional living skills. Furthermore, only seven studies used visual cueing, which may provide too little evidence to support these findings strongly. The majority of analyzed studies used video modeling. Although video modeling interventions yielded lower effects than behavioral in vivo instruction alone (a difference that was not statistically significant), component analyses conducted in included studies demonstrated additive effects of video modeling when combined with behavioral in vivo instruction (Charlop-Christy et al. 2000; Murzynski and Bourret 2007).

Another question addressed by this meta-analysis was whether effects differed by the type of functional living skill targeted by the interventions. Moderate to strong effects were found across categories of dependent variables. Most studies evaluated employment skills or self-help skills, and these categories also yielded the strongest effects. These strong effects may reflect the more developed literature bases in these areas, which provide more resources for refining interventions suited to teaching these skills. Relatively few participants (n = 18) were taught skills to improve their access to the community (e.g., grocery shopping, ordering food, and accessing transportation), which weakens the external validity of the strong effects found.

The fifth question addressed by this review was whether effects differed by the setting in which studies were conducted. Studies were carried out in community, employment, home, and school settings, and strong treatment effects were found across all of them. However, studies conducted in school settings included more than half of the total participants in this review, whereas studies conducted in community settings included only eight participants and those conducted in home settings also included few participants (n = 18). The small number of participants in community and home settings makes it difficult to draw firm conclusions about the effects of setting.

Sixth, we examined whether effects varied by implementer. Strong treatment effects were found across categories of implementers, suggesting that parent- and teacher-implemented interventions may be as effective as researcher-implemented interventions. However, most studies used researchers or teachers as implementers, and only two studies used parents. Despite this limited evidence for parent implementation, these interventions may be best implemented by persons within the contexts in which people typically demonstrate functional living skills, as the participation of natural change agents tends to promote skill generalization and maintenance (Brookman-Frazee et al. 2009).

Lastly, the current study appears to be the first to evaluate the convergent validity of an effect size calculation for single-case research with visually analyzed indicators of effect, by testing visual analysis codes as moderators. We used Tau to calculate effect sizes and evaluated the agreement of Tau with three visually analyzed Evidence Standards (Kratochwill et al. 2010; Maggin et al. 2013): (a) basic effects between phases, (b) overlap between phases, and (c) overall presence of evidence (which accounts for experimental control). For both the Between Basic and Between Overlap tests, Tau differed substantially between moderator categories with high statistical significance, indicating convergent validity between Tau and visually analyzed judgments of both basic effects and overlap. For Overall Evidence, effects differed statistically significantly between studies classified as No Evidence and those classified as Moderate Evidence, and between studies classified as No Evidence and those classified as Strong Evidence. This indicates that Tau generally concurred with the overall presence versus absence of experimental control. No statistically significant difference was identified between studies classified as having Moderate Evidence and those classified as having Strong Evidence, which might be expected given that Tau is not designed to be sensitive to grades of experimental control (e.g., replications of effect across the design as a whole) beyond the demonstration of an effect between two phases. Therefore, discriminant validity (i.e., a lack of relation) appeared to exist between the degree of experimental control demonstrated and the Tau metric.

Limitations

This meta-analysis has several limitations. First, data from generalization and maintenance conditions were not analyzed; therefore, it is unclear whether the interventions resulted in meaningful changes in the daily lives of individuals with ASD. Second, we did not analyze the latency of target behavior acquisition and thus did not account for speed of acquisition in our analysis of effects. Third, we did not analyze the intensity of the intervention components. The severity of cognitive impairment may affect the intensity of treatment required, but our analyses could not determine this. Fourth, this review included a variety of targeted behaviors meeting our definition of functional living skills, some of which require more steps or greater response effort than others. As a result of these limitations, moderator effects must be interpreted cautiously. Fifth, this review included only peer-reviewed journal articles reporting single-case research, which may have introduced publication bias and thus weakened the validity of the findings (Duval and Tweedie 2000). Because it excluded group studies and unpublished studies, the review does not synthesize all available evidence on the effects of interventions developed to improve the functional living skills of individuals with ASD. Furthermore, a hand search of journals for recently released articles might have yielded additional studies not yet indexed in online databases. Finally, in analyzing the design quality of each study, the Design Standards were slightly adapted from Maggin et al. (2013) to permit the inclusion of more studies. Therefore, although the studies included in this meta-analysis were systematically identified as being of relatively high quality within the existing literature, they should not be considered to meet the specific design quality indicators delineated by Kratochwill et al. (2010).

Future Research

The results of this meta-analysis suggest several questions for future research. Follow-up investigations should include data from generalization and maintenance conditions and analyze whether acquired skills are maintained and generalized across settings and people. Relatedly, because studies carried out in more naturalistic settings (e.g., community, employment, and home) and implemented by teachers or parents may promote the maintenance and generalization of skills that individuals with ASD acquire, further research in these areas is clearly needed. Additionally, most of the studies reviewed in this meta-analysis included adolescent or adult participants with ASD, and more than half of the participants were diagnosed with ASD comorbid with intellectual disability. More studies should be conducted with individuals of other ages and diagnoses to confirm that the functional living skills of individuals with ASD can be improved regardless of age and cognitive functioning level. We underscore the need to report participant characteristics in more detail so that moderators beyond cognitive functioning level can be examined with more specificity (e.g., the presence or absence of stereotypical behavior patterns). We also recommend component analyses of interventions to determine the additive effects of audio cues, visual cues, and video models. Because many of these intervention strategies were combined with behavioral intervention procedures whose effects were not disaggregated, confidence in the treatment effects attributed to each strategy is reduced.

Regarding methodological considerations, future studies should synthesize the quality of evidence from both group research and single-case research on teaching functional living skills to persons with ASD. Standards of study quality from the Council for Exceptional Children permit such a synthesis (Cook et al. 2014). A similar meta-analysis of the existing group research would be a useful extension and would allow a comparison of findings. Future studies should also continue to evaluate the convergent validity of effect sizes with visually analyzed indicators. Different metrics, such as Tau versus Tau-U, can be compared in moderator analyses of visually analyzed indicators. Other important indicators of visually analyzed evidence, such as ratings of trend or the immediacy of effects, can also be evaluated as moderators. Further, the convergent validity of visual analysis codes with effect size metrics can be evaluated using correlational methods. Such extensions will help to explore limitations and improve the soundness of techniques for aggregating single-case research data.
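As one hedged illustration of the correlational approach suggested above, a binary visual analysis code (e.g., 1 = Basic Effects Present, 0 = No Basic Effects Present) can be correlated with Tau using the point-biserial coefficient, which is Pearson's r computed with a 0/1 variable. The sketch assumes one code and one Tau value per contrast and treats contrasts as independent. This is a simplification, because contrasts are nested within participants and studies. The function name and data are hypothetical.

```python
import math


def point_biserial(codes, taus):
    """Pearson correlation between 0/1 visual-analysis codes and Tau values."""
    n = len(codes)
    mean_c = sum(codes) / n
    mean_t = sum(taus) / n
    cov = sum((c - mean_c) * (t - mean_t) for c, t in zip(codes, taus))
    ss_c = sum((c - mean_c) ** 2 for c in codes)
    ss_t = sum((t - mean_t) ** 2 for t in taus)
    return cov / math.sqrt(ss_c * ss_t)


# Hypothetical contrasts: 1 = effect judged present by visual analysis.
codes = [0, 0, 1, 1, 1]
taus = [0.25, 0.40, 0.85, 0.90, 1.00]
r = point_biserial(codes, taus)
```

A large positive r would indicate that Tau tends to be higher where visual analysts judged an effect present, paralleling the moderator results for Between Basic and Between Overlap.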

References

*Indicates study included in the meta-analysis

*Alcantara, P. R. (1994). Effects of videotape instructional package on purchasing skills of children with autism. Exceptional Children, 61, 40–55.

*Allen, K. D., Burke, R. V., Howard, M. R., Wallace, D. P., & Bowen, S. L. (2012). Use of audio cuing to expand employment opportunities for adolescents with autism spectrum disorders and intellectual disabilities. Journal of Autism and Developmental Disorders, 42, 2410–2419. doi:10.1007/s10803-012-1519-7.

*Allen, K. D., Wallace, D. P., Greene, D. J., Bowen, S. L., & Burke, R. V. (2010a). Community-based vocational instruction using videotaped modeling for young adults with autism spectrum disorders performing in air-inflated mascots. Focus on Autism and Other Developmental Disabilities, 25, 186–192. doi:10.1177/1088357610377318.

*Allen, K. D., Wallace, D. P., & Renes, D. (2010b). Use of video modeling to teach vocational skills to adolescents and young adults with autism spectrum disorders. Education and Treatment of Children, 33, 339–349.

Alwell, M., & Cobb, B. (2009). Functional life skills curricular interventions for youth with disabilities: a systematic review. Career Development for Exceptional Individuals, 32, 82–93. doi:10.1177/0885728809336656.

Ayres, K. M., Lowrey, K. A., Douglas, K. H., & Sievers, C. (2011). I can identify Saturn but I can’t brush my teeth: What happens when the curricular focus for students with severe disabilities shifts? Education and Training in Autism and Developmental Disabilities, 46, 11–21.

*Bainbridge, N., & Smith Myles, B. (1999). The use of priming to introduce toilet training to a child with autism. Focus on Autism and Other Developmental Disabilities, 14, 106–109. doi:10.1177/108835769901400206.

Bennett, K. D., & Dukes, C. (2014). A systematic review of teaching daily living skills to adolescents and adults with autism spectrum disorder. Review Journal of Autism and Developmental Disorders, 1, 2–10. doi:10.1007/s40489-013-0004-3.

*Bennett, K. D., Ramasamy, R., & Honsberger, T. (2013a). The effects of covert audio coaching on teaching clerical skills to adolescents with autism spectrum disorder. Journal of Autism and Developmental Disorders, 43, 585–593. doi:10.1007/s10803-012-1597-6.

*Bennett, K. D., Ramasamy, R., & Honsberger, T. (2013b). Further examination of covert audio coaching on improving employment skills among secondary students with autism. Journal of Behavioral Education, 22, 103–119. doi:10.1007/s10864-013-9168-2.

*Bennett, K., Brady, M. P., Scott, J., Dukes, C., & Frain, M. (2010). The effects of covert audio coaching on the job performance of supported employees. Focus on Autism and Other Developmental Disabilities, 25, 173–185. doi:10.1177/1088357610371636.

*Bereznak, S., Ayres, K. M., Mechling, L. C., & Alexander, J. L. (2012). Video self-prompting and mobile technology to increase daily living and vocational independence for students with autism spectrum disorders. Journal of Developmental and Physical Disabilities, 24, 269–285. doi:10.1007/s10882-012-9270-8.

*Bledsoe, R., Smith-Myles, B., & Simpson, R. L. (2003). Use of a social story intervention to improve mealtime skills of an adolescent with Asperger syndrome. Autism, 7, 289–295. doi:10.1177/1362361303007003005.

Bowman-Perrott, L., Davis, H., Vannest, K., Williams, L., Greenwood, C., & Parker, R. (2013). Academic benefits of peer tutoring: a meta-analytic review of single-case research. School Psychology Review, 42, 39–55.

Brookman-Frazee, L., Vismara, L., Drahota, A., Stahmer, A., & Openden, D. (2009). Parent training interventions for children with autism spectrum disorders. In J. L. Matson (Ed.), Applied behavior analysis for children with autism spectrum disorders (1st ed., pp. 237–257). Springer Science + Business Media. doi:10.1007/978-1-4419-0088-3_14.

Brossart, D. F., Vannest, K. J., Davis, J. L., & Patience, M. A. (2014). Incorporating nonoverlap indices with visual analysis for quantifying intervention effectiveness in single-case experimental designs. Neuropsychological Rehabilitation, 24, 464–491. doi:10.1080/09602011.2013.868361.

*Cannella-Malone, H. I., Wheaton, J. E., Wu, P., Tullis, C., & Park, J. (2012). Comparing the effects of video prompting with and without error-correction on skill acquisition for students with intellectual disability. Education and Training in Autism and Developmental Disabilities, 47, 332–344.

*Cannella-Malone, H., Sigafoos, J., O’Reilly, M., de la Cruz, B., Edrisinha, C., & Lancioni, G. E. (2006). Comparing video prompting to video modeling for teaching daily living skills to six adults with developmental disabilities. Education and Training in Developmental Disabilities, 41, 344–356.

*Cannella-Malone, H. I., Fleming, C., Chung, Y., Wheeler, G., Basbagill, A. R., & Singh, A. H. (2011). Teaching daily living skills to seven individuals with severe intellectual disabilities: a comparison of video prompting to video modeling. Journal of Positive Behavior Interventions, 13, 144–153. doi:10.1177/1098300710366593.

*Carr, E. G., & Carlson, J. I. (1993). Reduction of severe behavior problems in the community using a multicomponent treatment approach. Journal of Applied Behavior Analysis, 26, 157–172. doi:10.1901/jaba.1993.26-157.

Carter, M. (2013). Reconsidering overlap-based measures for quantitative synthesis of single-subject data: what they tell us and what they don’t. Behavior Modification, 37, 378–390. doi:10.1177/0145445513476609.

*Cavkaytar, A., & Pollard, E. (2009). Effectiveness of parent and therapist collaboration program (PTCP) for teaching self-care and domestic skills to individuals with autism. Education and Training in Developmental Disabilities, 44, 381–395.

Centers for Disease Control and Prevention (2014). Prevalence of autism spectrum disorder among children aged 8 years — Autism and Developmental Disabilities Monitoring Network, 11 Sites, United States, 2010. MMWR Surveillance Summaries, 63, 1–21. Retrieved from: http://www.cdc.gov/mmwr/preview/mmwrhtml/ss6302a1.htm?s_cid=ss6302a1_w

*Charlop-Christy, M. H., Le, L., & Freeman, K. A. (2000). A comparison of video modeling with in vivo modeling for teaching children with autism. Journal of Autism and Developmental Disorders, 30, 537–552. doi:10.1023/A:1005635326276.

*Cihak, D. F., & Grim, J. (2008). Teaching students with autism spectrum disorder and moderate intellectual disabilities to use counting-on strategies to enhance independent purchasing skills. Research in Autism Spectrum Disorders, 2, 716–727. doi:10.1016/j.rasd.2008.02.006.

*Cihak, D. F., & Schrader, L. (2008). Does the model matter? Comparing video self-modeling and video adult modeling for task acquisition and maintenance by adolescents with autism spectrum disorders. Journal of Special Education Technology, 23, 9–20.

Cimera, R. E., & Cowan, R. J. (2009). The costs of services and employment outcomes achieved by adults with autism in the US. Autism, 13, 285–302. doi:10.1177/1362361309103791.

*Collins, B. C., Stinson, D. M., & Land, L. (1993). A comparison of in-vivo and simulation prior to in-vivo instruction in teaching generalized safety skills. Education and Training in Mental Retardation, 28, 128–142.

Cook, B. G., Buysse, V., Klingner, J., Landrum, T. J., McWilliam, R. A., Tankersley, M., & Test, D. W. (2014). CEC’s standards for classifying the evidence base of practices in special education. Remedial and Special Education. Advance online publication. doi:10.1177/0741932514557271.

Dawson, G., Rogers, S., Munson, J., Smith, M., Winter, J., Greenson, J., & Varley, J. (2010). Randomized controlled trial of an intervention for toddlers with autism: the Early Start Denver Model. Pediatrics, 125(1), e17–e23. doi:10.1542/peds.2009-0958.

Dunn, O. J. (1964). Multiple contrasts using rank sums. Technometrics, 6, 241–252. doi:10.2307/1266041.

Duval, S., & Tweedie, R. (2000). A nonparametric “trim and fill” method of accounting for publication bias in meta-analysis. Journal of the American Statistical Association, 95, 89–98. doi:10.1080/01621459.2000.10473905.

Education for All Handicapped Children’s Act of 1975, 20 U.S.C. §1400 (1975).

Eikeseth, S., Smith, T., Jahr, E., & Eldevik, S. (2007). Outcome for children with autism who began intensive behavioral treatment between ages 4 and 7: a comparison controlled study. Behavior Modification, 31, 264–278. doi:10.1177/0145445506291396.

Fesko, S., Cohen Hall, A., Quinlan, J., & Jockell, C. (2012). Active aging for individuals with intellectual disability: Meaningful community participation through employment, retirement, service, and volunteerism. American Journal of Intellectual and Developmental Disabilities, 117, 497–508. doi:10.1352/1944-7558-117-6.497.

Flynn, L., & Healy, O. (2012). A review of treatments for deficits in social skills and self-help skills in autism spectrum disorder. Research in Autism Spectrum Disorders, 6, 431–441. doi:10.1016/j.rasd.2011.06.016.

Ganz, J. B., Earles-Vollrath, T. L., Heath, A. K., Parker, R. I., Rispoli, M. J., & Duran, J. B. (2012). A meta-analysis of single case research studies on aided augmentative and alternative communication systems with individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 42, 60–74. doi:10.1007/s10803-011-1212-2.

Ganz, J. B., Goodwyn, F. D., Boles, M. M., Hong, E. R., Rispoli, M. J., Lund, E. M., & Kite, E. (2013a). Impacts of a PECS instructional coaching intervention on practitioners and children with autism. AAC: Augmentative and Alternative Communication, 29, 210–221. doi:10.3109/07434618.2013.818058.

Ganz, J. B., Hong, E. R., & Goodwyn, F. D. (2013b). Effectiveness of the PECS phase III app and choice between the app and traditional PECS among preschoolers with ASD. Research in Autism Spectrum Disorders, 7, 973–983. doi:10.1016/j.rasd.2013.04.003.

*Ganz, J. B., & Sigafoos, J. (2005). Self-monitoring: Are young adults with MR and autism able to utilize cognitive strategies independently? Education and Training in Developmental Disabilities, 40, 24–33.

*Gardill, M. C., & Browder, D. M. (1995). Teaching stimulus classes to encourage independent purchasing by students with severe behavior disorders. Education and Training in Mental Retardation and Developmental Disabilities, 30, 254–264.

Gaskin, C. J., McVilly, K. R., & McGillivray, J. A. (2013). Initiatives to reduce the use of seclusion and restraints on people with developmental disabilities: a systematic review and quantitative synthesis. Research in Developmental Disabilities, 34, 3946–3961. doi:10.1016/j.ridd.2013.08.010.

*Goodson, J., Sigafoos, J., O’Reilly, M., Cannella, H., & Lancioni, G. E. (2007). Evaluation of a video-based error correction procedure for teaching a domestic skill to individuals with developmental disabilities. Research in Developmental Disabilities, 28, 458–467. doi:10.1016/j.ridd.2006.06.002.

Graetz, J. E. (2010). Autism grows up: opportunities for adults with autism. Disability and Society, 25, 33–47. doi:10.1080/09687590903363324.

Harrison, J. R., Bunford, N., Evans, S. W., & Sarno Owens, J. S. (2013). Educational accommodations for students with behavioral challenges: a systematic review of the literature. Review of Educational Research, 83, 551–597. doi:10.3102/0034654313497517.

Hendricks, D. (2010). Employment and adults with autism spectrum disorders: challenges and strategies for success. Journal of Vocational Rehabilitation, 32, 125–134. doi:10.3233/JVR-2010-0502.

Hutchins, T. L., & Prelock, P. A. (2013). The social validity of Social Stories™ for supporting the behavioural and communicative functioning of children with autism spectrum disorder. International Journal of Speech-Language Pathology, 15, 383–395. doi:10.3109/17549507.2012.743174.

Individuals with Disabilities Education Improvement Act of 2004, 20 U.S.C. §1400 (2004).

Jung Lee, H., & Ran Park, H. (2007). An integrated literature review on the adaptive behavior of individuals with Asperger syndrome. Remedial and Special Education, 28, 132–139. doi:10.1177/07419325070280030201.

Kanner, L. (1971). Follow-up study of eleven autistic children originally reported in 1943. Journal of Autism and Childhood Schizophrenia, 1, 119–145.

*Kellems, R. O., & Morningstar, M. E. (2012). Using video modeling delivered through iPods to teach vocational tasks to young adults with autism spectrum disorders. Career Development and Transition for Exceptional Individuals, 35, 155. doi:10.1177/0885728812443082.

*Kemp, D. C., & Carr, E. G. (1995). Reduction of severe problem behavior in community employment using an hypothesis-driven multicomponent intervention approach. Journal of the Association for Persons with Severe Handicaps, 20, 229–247. doi:10.1177/154079699602000401.

Kern, P., Wakeford, L., & Aldridge, D. (2007). Improving the performance of a young child with autism during self-care tasks using embedded song interventions: a case study. Music Therapy Perspectives, 25, 43–51. doi:10.1093/mtp/25.1.43.

Kratochwill, T. R., Hitchcock, J., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2010). Single-case designs technical documentation. Retrieved from: http://ies.ed.gov/ncee/wwc/pdf/wwc_scd.pdf

Kratochwill, T. R., & Levin, J. R. (2014). Meta- and statistical analysis of single-case intervention research data: quantitative gifts and a wish list. Journal of School Psychology, 52, 231–235. doi:10.1016/j.jsp.2014.01.003.

Kruskal, W. H., & Wallis, W. A. (1952). Use of ranks in one-criterion variance analysis. Journal of the American Statistical Association, 47, 583–621. doi:10.2307/2280779.

*Lattimore, L. P., Parsons, M. B., & Reid, D. H. (2008). Simulation training of community job skills for adults with autism: a further analysis. Behavior Analysis in Practice, 1(1), 24–29.

*Lattimore, L. P., Parsons, M. B., & Reid, D. H. (2009). Rapid training of a community job skill to nonvocal adults with autism: an extension of intensive teaching. Behavior Analysis in Practice, 2(1), 34–42.

*LeBlanc, L., Carr, J. E., Crossett, S. E., Bennett, C. M., & Detweiler, D. D. (2005). Intensive outpatient behavioral treatment of primary urinary incontinence of children with autism. Focus on Autism and Other Developmental Disabilities, 20, 98–105. doi:10.1177/10883576050200020601.

Lopata, C., Fox, J. D., Thomeer, M. L., Smith, R. A., Volker, M. A., Kessel, K., McDonald, C., & Lee, G. K. (2012). ABAS-II ratings and correlates of adaptive behavior in children with HFASDs. Journal of Developmental and Physical Disabilities, 24, 391–402. doi:10.1007/s10882-012-9277-1.

Machalicek, W., O’Reilly, M. F., Beretvas, N., Sigafoos, J., Lancioni, G., Sorrells, A., & Rispoli, M. (2008). A review of school-based instructional interventions for students with autism spectrum disorders. Research in Autism Spectrum Disorders, 2, 395–416. doi:10.1016/j.rasd.2007.07.001.

Maggin, D. M., Briesch, A. M., & Chafouleas, S. M. (2013). An application of the What Works Clearinghouse standards for evaluating single-subject research: synthesis of the self-management literature base. Remedial and Special Education, 34, 44–58. doi:10.1177/0741932511435176.

Matson, J. L., Dempsey, T., & Fodstad, J. C. (2009). The effect of autism spectrum disorders on adaptive independent living skills in adults with severe intellectual disability. Research in Developmental Disabilities, 30, 1203–1211. doi:10.1016/j.ridd.2009.04.001.

Matson, J. L., Hattier, M. A., & Belva, B. (2012). Treating adaptive living skills of persons with autism using applied behavior analysis: a review. Research in Autism Spectrum Disorders, 6, 271–276. doi:10.1016/j.rasd.2011.05.008.

*Mays, N. M., & Heflin, L. J. (2011). Increasing independence in self-care tasks for children with autism using self-operated auditory prompts. Research in Autism Spectrum Disorders, 5, 1351–1357. doi:10.1016/j.rasd.2011.01.017.

*Mechling, L. C. (2004). Effects of multimedia, computer-based instruction on grocery shopping fluency. Journal of Special Education Technology, 19, 23.

*Mechling, L. C., & Ayres, K. M. (2012). A comparative study: Completion of fine motor office related tasks by high school students with autism using video models on large and small screen sizes. Journal of Autism and Developmental Disorders, 42, 2364–2373. doi:10.1007/s10803-012-1484-1.

*Mechling, L. C., & Gast, D. L. (2003). Multi-media instruction to teach grocery word associations and store location: a study of generalization. Education and Training in Developmental Disabilities, 38, 62–76.

*Mechling, L. C., Gast, D. L., & Langone, J. (2002). Computer-based video instruction to teach persons with moderate intellectual disabilities to read grocery store aisle signs and locate items. The Journal of Special Education, 35, 224–240. doi:10.1177/002246690203500404.

*Mechling, L. C., Gast, D. L., & Seid, N. H. (2009). Using a personal digital assistant to increase independent task completion by students with autism spectrum disorder. Journal of Autism and Developmental Disorders, 39, 1420–1434. doi:10.1007/s10803-009-0761-0.

*Mechling, L. C., Gast, D. L., & Seid, N. H. (2010). Evaluation of a personal digital assistant as a self-prompting device for increasing multi-step task completion by students with moderate intellectual disabilities. Education and Training in Autism and Developmental Disabilities, 45, 422–439.

*Mechling, L. C., & Gustafson, M. R. (2008). Comparison of static picture and video prompting on the performance of cooking-related tasks by students with autism. Journal of Special Education Technology, 23, 31.

*Mechling, L. C., Pridgen, L. S., & Cronin, B. A. (2005). Computer-based video instruction to teach students with intellectual disabilities to verbally respond to questions and make purchases in fast-food restaurants. Education and Training in Developmental Disabilities, 40, 47–59.

*Mechling, L. C., & Stephens, E. (2009). Comparison of self-prompting of cooking skills via picture-based cookbooks and video recipes. Education and Training in Developmental Disabilities, 44, 218–236.

*Mechling, L. C., & O’Brien, E. (2010). Computer-based video instruction to teach students with intellectual disabilities to use public bus transportation. Education and Training in Autism and Developmental Disabilities, 45, 230–241.

*Murzynski, N. T., & Bourret, J. C. (2007). Combining video modeling and least‐to‐most prompting for establishing response chains. Behavioral Interventions, 22, 147–152. doi:10.1002/bin.224.

National Research Council. (2001). Educating Children with Autism. Washington, DC: National Academy Press.

No Child Left Behind (NCLB) Act of 2001, Pub. L. No. 107–110, 115 Stat. 1425 (2002).

*Ozcan, N., & Cavkaytar, A. (2009). Parents as teachers: teaching parents how to teach toilet skills to their children with autism and mental retardation. Education and Training in Developmental Disabilities, 44, 237–243.

*Palmen, A., & Didden, R. (2012). Task engagement in young adults with high-functioning autism spectrum disorders: Generalization effects of behavioral skills training. Research in Autism Spectrum Disorders, 6, 1377–1388. doi:10.1016/j.rasd.2012.05.010.

Palmen, A., Didden, R., & Lang, R. (2012a). A systematic review of behavioral intervention research on adaptive skill building in high-functioning young adults with autism spectrum disorder. Research in Autism Spectrum Disorders, 6, 602–617. doi:10.1016/j.rasd.2011.10.001.

*Palmen, A., Didden, R., & Verhoeven, L. (2012b). A personal digital assistant for improving independent transitioning in adolescents with high-functioning autism spectrum disorder. Developmental Neurorehabilitation, 15, 401–413. doi:10.3109/17518423.2012.701240.

*Parker, D., & Kamps, D. (2011). Effects of task analysis and self-monitoring for children with autism in multiple social settings. Focus on Autism and Other Developmental Disabilities, 26, 131–142. doi:10.1177/1088357610376945.

Parker, R. I., & Vannest, K. J. (2012). Bottom-up analysis of single-case research designs. Journal of Behavioral Education, 21, 254–265. doi:10.1007/s10864-012-9153-1.

Parker, R. I., Vannest, K. J., & Davis, J. L. (2011a). Effect size in single-case research: a review of nine nonoverlap techniques. Behavior Modification, 35, 303–322. doi:10.1177/0145445511399147.

Parker, R. I., Vannest, K. J., Davis, J. L., & Sauber, S. B. (2011b). Combining nonoverlap and trend for single-case research: Tau-U. Behavior Therapy, 42, 284–299. doi:10.1016/j.beth.2010.08.006.

*Pierce, K. L., & Schreibman, L. (1994). Teaching daily living skills to children with autism in unsupervised settings through pictorial self-management. Journal of Applied Behavior Analysis, 27, 471–481. doi:10.1901/jaba.1994.27-471.

*Rayner, C. (2011). Teaching students with autism to tie a shoelace knot using video prompting and backward chaining. Developmental Neurorehabilitation, 14, 339–347. doi:10.3109/17518423.2011.606508.

Reynhout, G., & Carter, M. (2011). Evaluation of the efficacy of Social Stories™ using three single subject metrics. Research in Autism Spectrum Disorders, 5, 885–900. doi:10.1016/j.rasd.2010.10.003.

*Riffel, L. A., Wehmeyer, M. L., Turnbull, A. P., Lattimore, J., Davies, D., Stock, S., & Fisher, S. (2005). Promoting independent performance of transition-related tasks using a palmtop PC-based self-directed visual and auditory prompting system. Journal of Special Education Technology, 20, 5–14.

*Rosenberg, N. E., Schwartz, I. S., & Davis, C. A. (2010). Evaluating the utility of commercial videotapes for teaching hand-washing to children with autism. Education and Treatment of Children, 33, 443–455. doi:10.1353/etc.0.0098.

Roth, M. E., Gillis, J. M., & DiGennaro Reed, F. D. (2014). A meta-analysis of behavioral interventions for adolescents and adults with autism spectrum disorders. Journal of Behavioral Education, 23, 258–286. doi:10.1007/s10864-013-9189-x.

*Sewell, T. L., Collings, B. C., Hemmeter, M. L., & Schuster, J. W. (1998). Using simultaneous prompting within an activity-based format to teach dressing skills to preschoolers with developmental delays. Journal of Early Intervention, 21, 132–145. doi:10.1177/105381519802100206.

*Shipley-Benamou, R., Lutzker, J. R., & Taubman, M. (2002). Teaching daily living skills to children with autism through instructional video modeling. Journal of Positive Behavior Interventions, 4, 166–177. doi:10.1177/10983007020040030501.

*Sigafoos, J., O’Reilly, M., Cannella, H., Edrisinha, C., de la Cruz, B., Upadhyaya, M., & Young, D. (2007). Evaluation of a video prompting and fading procedure for teaching dish washing skills to adults with developmental disabilities. Journal of Behavioral Education, 16, 93–109. doi:10.1007/s10864-006-9004-z.

*Sigafoos, J., O’Reilly, M., Cannella, H., Upadhyaya, M., Edrisinha, C., Lancioni, G., & Young, D. (2005). Computer-presented video prompting for teaching microwave oven use to three adults with developmental disabilities. Journal of Behavioral Education, 14, 189–201. doi:10.1007/s10864-005-6297-2.

Stancliffe, R. J., Hayden, M. F., & Lakin, K. C. (2000). Quality and content of individualized habilitation plan objectives in residential settings. Education and Training in Mental Retardation and Developmental Disabilities, 35, 191–207.

Toelken, S., & Miltenberger, R. G. (2012). Increasing independence among children diagnosed with autism using a brief embedded teaching strategy. Behavioral Interventions, 27, 93–104. doi:10.1002/bin.337.

*Van Laarhoven, T., Van Laarhoven-Myers, T., & Zurita, L. M. (2007). The effectiveness of using a pocket PC as a video modeling and feedback device for individuals with developmental disabilities in vocational settings. Assistive Technology Outcomes and Benefits, 4, 28–45.

Virués-Ortega, J. (2010). Applied behavior analytic intervention for autism in early childhood: Meta-analysis, meta-regression and dose–response meta-analysis of multiple outcomes. Clinical Psychology Review, 30, 387–399. doi:10.1016/j.cpr.2010.01.008.

Walsh, L., Lydon, S., & Healy, O. (2014). Employment and vocational skills among individuals with autism spectrum disorder: predictors, impact, and interventions. Review Journal of Autism and Developmental Disorders. doi:10.1007/s40489-014-0024-7. Advance online publication.

Funding

The contents of this manuscript were developed under the Preparation of Leaders in Autism Across the Lifespan grant awarded by the U.S. Department of Education, Office of Special Education Programs (Grant No.: H325D110046). The views herein are those of the authors and do not necessarily reflect those of the U.S. Department of Education.

Compliance with Ethical Standards

The manuscript does not contain clinical studies or patient data.


Appendix A: Articles Included in the Analysis

Alcantara (1994).

Allen, Burke, Howard, Wallace, & Bowen (2012).

Allen, Wallace, Greene, Bowen, & Burke (2010a).

Allen, Wallace, & Renes (2010b).

Bainbridge & Smith Myles (1999).

Bennett, Ramasamy, & Honsberger (2013a).

Bennett, Ramasamy, & Honsberger (2013b).

Bennett, Brady, Scott, Dukes, & Frain (2010).

Bereznak, Ayres, Mechling, & Alexander (2012).

Bledsoe, Smith-Myles, & Simpson (2003).

Cannella-Malone, Wheaton, Wu, Tullis, & Park (2012).

Cannella-Malone, Sigafoos, O'Reilly, de la Cruz, Edrisinha, & Lancioni (2006).

Cannella-Malone, Fleming, Chung, Wheeler, Basbagill, & Singh (2011).

Carr & Carlson (1993).

Cavkaytar & Pollard (2009).

Charlop-Christy, Le, & Freeman (2000).

Cihak & Grim (2008).

Cihak & Schrader (2008).

Collins, Stinson, & Land (1993).

Ganz & Sigafoos (2005).

Gardill & Browder (1995).

Goodson, Sigafoos, O'Reilly, Cannella, & Lancioni (2007).

Kellems & Morningstar (2012).

Kemp & Carr (1995).

Lattimore, Parsons, & Reid (2008).

Lattimore, Parsons, & Reid (2009).

LeBlanc, Carr, Crossett, Bennett, & Detweiler (2005).

Mays & Heflin (2011).

Mechling (2004).

Mechling & Ayres (2012).

Mechling & Gast (2003).

Mechling, Gast, & Langone (2002).

Mechling, Gast, & Seid (2009).

Mechling, Gast, & Seid (2010).

Mechling & Gustafson (2008).

Mechling, Pridgen, & Cronin (2005).

Mechling & Stephens (2009).

Mechling & O'Brien (2010).

Murzynski & Bourret (2007).

Ozcan & Cavkaytar (2009).

Palmen & Didden (2012).

Palmen, Didden, & Verhoeven (2012b).

Parker & Kamps (2011).

Pierce & Schreibman (1994).

Rayner (2011).

Riffel, Wehmeyer, Turnbull, Lattimore, Davies, Stock, & Fisher (2005).

Rosenberg, Schwartz, & Davis (2010).

Sewell, Collings, Hemmeter, & Schuster (1998).