Abstract

The current study examined the impact of China’s key versus ordinary school system on students’ cognitive performance, using an analytic approach that combines hierarchical linear modeling with propensity score stratification. The results show that students from key schools score significantly higher on a mathematics achievement test than their counterparts in ordinary schools, after controlling for student characteristics and family background. The magnitude of the school effect varies substantially with the geographic location of the school: the advantage of key schools over ordinary schools is generally greater among urban schools than among suburban schools. These results are noteworthy because both key and ordinary schools are state-funded, and the system was created directly by policy initiatives and differential resource allocation. As such, they have important policy implications for system-level school management in general.

Notes

Missing data analyses showed no systematic differences between results obtained from the intent-to-treat sample and from the available-case sample, possibly because the missing rate is very low (about 0.2%).

Because there is no solid theory in school effect research, we cannot be certain that the observed across-sector differences are purely pre-treatment differences rather than consequences of the school effect. However, if some of these differences were themselves positively caused by the school effect, balancing such variables across sectors would reduce the estimated magnitude of the school effect. Consequently, including such variables in the model yields conservative estimates of the school effect. If a significant school effect can still be found under this condition, our confidence in its existence is strengthened, as the actual magnitude of the effect would likely be larger.
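A small simulation can illustrate why adjusting for a variable that the school itself raises produces a conservative estimate. It is a sketch under purely hypothetical assumptions, not the study’s data or model: a binary key-school indicator `T`, a covariate `M` that the school shifts upward by 0.5, and an outcome `Y` with a true total effect of 1.5. It uses ordinary least-squares adjustment rather than propensity score stratification, but the mechanism is the same: conditioning on `M` removes the part of the effect that runs through `M`.

```python
import random
from statistics import fmean

def simulate(n=20_000, seed=1):
    """Hypothetical data: T = key-school indicator, M = covariate raised by T, Y = outcome."""
    rng = random.Random(seed)
    T, M, Y = [], [], []
    for _ in range(n):
        t = 1.0 if rng.random() < 0.5 else 0.0
        m = 0.5 * t + rng.gauss(0, 1)            # the school itself shifts the covariate
        y = 1.0 * t + 1.0 * m + rng.gauss(0, 1)  # true total effect of T on Y = 1.0 + 0.5 = 1.5
        T.append(t)
        M.append(m)
        Y.append(y)
    return T, M, Y

def cov(a, b):
    """Sample covariance."""
    ma, mb = fmean(a), fmean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)

def slope_unadjusted(t, y):
    """OLS slope of y on t alone (for binary t, the difference in group means)."""
    return cov(t, y) / cov(t, t)

def slope_adjusted(t, m, y):
    """OLS coefficient on t when y is regressed on (1, t, m), via the 2x2 normal equations."""
    stt, smm, stm = cov(t, t), cov(m, m), cov(t, m)
    sty, smy = cov(t, y), cov(m, y)
    return (smm * sty - stm * smy) / (stt * smm - stm ** 2)

T, M, Y = simulate()
print(f"unadjusted: {slope_unadjusted(T, Y):.2f}")       # close to the total effect, 1.5
print(f"adjusted for M: {slope_adjusted(T, M, Y):.2f}")  # close to the direct effect, 1.0
```

The adjusted coefficient understates the total effect, which is the sense in which the note calls such estimates conservative.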

Because our data are cross-sectional, it could be argued that students’ problem-solving scores are themselves affected by school effects. Two points justify using these scores as a covariate. First, PISA problem-solving items are designed to assess students’ ability to solve problems involving complex systems without drawing heavily on subject-matter knowledge. The essential construct measured by this test is students’ planning and logical reasoning ability, which is generally considered the core of intelligence, and the extent to which school experiences can modify it is questionable. Second, as mentioned in note 2, if key schools positively affected these scores, including them in the model would lead to conservative estimates of the school effect. Therefore, if adding this covariate reduces the estimated school effect only slightly, we can be more confident that our estimates of school effects are robust.

Acknowledgments

This research was financially supported by the MOE of China under Grant Nos. 2009JJD880011 and 2011JJD880030 of the National Key Research Center in Social Science and Humanities. The opinions expressed here do not necessarily reflect those of the funding agency.

Appendices

Appendix 1: Propensity Score Estimation and Stratification

Using all 20 covariates in Table 2, we estimated each student’s propensity score with logistic regression models in an iterative procedure. In each iteration, students were divided into five strata according to their propensity scores. The balance of each covariate across school sectors was then examined within each stratum using the Cochran–Mantel–Haenszel (CMH) χ² test and bar charts. If a large CMH statistic remained after propensity score stratification, higher-order terms of that covariate and/or its interactions with other important covariates were added to the model. This process continued until all covariates were balanced across school sectors.
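The stratify-and-check step can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors’ software: propensity scores are taken as already estimated, strata are equal-count quintiles, the covariate being checked is binary (continuous covariates would need to be categorized or checked with another statistic), and the CMH statistic is computed without a continuity correction. The function names are hypothetical.

```python
import math
from collections import defaultdict

def quantile_strata(scores, k=5):
    """Assign each student to one of k (near-)equal-sized strata by propensity score rank (0 = lowest)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    strata = [0] * len(scores)
    for rank, i in enumerate(order):
        strata[i] = rank * k // len(scores)
    return strata

def cmh_chi2(sector, covariate, strata):
    """CMH chi-square (1 df, no continuity correction) testing a binary covariate
    against school sector (1 = key, 0 = ordinary), conditional on stratum.
    Returns (statistic, p-value)."""
    # Per stratum: [key & x=1, key & x=0, ordinary & x=1, ordinary & x=0]
    tables = defaultdict(lambda: [0, 0, 0, 0])
    for s, x, h in zip(sector, covariate, strata):
        tables[h][2 * (1 - s) + (1 - x)] += 1
    diff, var = 0.0, 0.0
    for a, b, c, d in tables.values():
        n = a + b + c + d
        if n < 2:
            continue
        n_key, n_ord, m1, m0 = a + b, c + d, a + c, b + d
        diff += a - n_key * m1 / n                          # observed minus expected count
        var += n_key * n_ord * m1 * m0 / (n * n * (n - 1))  # hypergeometric variance
    if var == 0:
        return 0.0, 1.0  # no stratum contains both sectors and both covariate values
    stat = diff ** 2 / var
    p_value = math.erfc(math.sqrt(stat / 2))  # upper tail of chi-square with 1 df
    return stat, p_value

# Toy check: one stratum in which key students are far more likely to have x = 1.
sector = [1] * 10 + [0] * 10
covariate = [1] * 8 + [0] * 2 + [1] * 2 + [0] * 8
print(cmh_chi2(sector, covariate, [0] * 20))  # statistic about 6.84, p < 0.01
```

A large statistic for a covariate within the propensity score strata is the signal, in the procedure described above, to add higher-order or interaction terms for that covariate and re-estimate.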

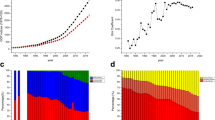

Three iterations were needed to obtain the final propensity scores. The final model contained 32 terms, including four interaction terms, six quadratic terms, one cubic term, and one quartic term. Figure 2 displays the distributions of the final estimated propensity scores, on the logit scale, for key and ordinary school students. The two distributions overlap substantially, which implies that for most key school students there is a comparable ordinary school student, in the sense of having a similar probability of attending a key school. However, a few key school students have propensity scores higher than those of any ordinary school student, and a few ordinary school students have propensity scores lower than those of any key school student. These students have no counterparts with comparable propensity scores in the other sector. To take them into account, students were classified into seven strata based on their estimated propensity scores: the first and seventh strata contain only the students with the highest and lowest propensity scores, respectively, and the remaining five strata contain key and ordinary school students with comparable propensity scores.
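The seven-stratum scheme can be sketched as below. The numbering follows the paragraph above (stratum 1 for the highest scores, stratum 7 for the lowest); the assumption that the five middle strata are equal-count groups of the overlap region, ordered from high to low, is ours, since the appendix does not state how those cut points were chosen. Because the logit is strictly increasing, stratifying on raw probabilities or on the logit scale gives the same strata.

```python
def seven_strata(scores, sector):
    """Label students 1..7 (sector: 1 = key, 0 = ordinary). Assumed scheme:
    1    = key students scoring above every ordinary student,
    7    = ordinary students scoring below every key student,
    2..6 = equal-count strata of the overlap region, ordered from high to low."""
    key_scores = [p for p, s in zip(scores, sector) if s == 1]
    ord_scores = [p for p, s in zip(scores, sector) if s == 0]
    upper, lower = max(ord_scores), min(key_scores)  # bounds of the common-support region
    labels = [0] * len(scores)
    overlap = []
    for i, p in enumerate(scores):
        if p > upper:
            labels[i] = 1          # only key students can exceed the highest ordinary score
        elif p < lower:
            labels[i] = 7          # only ordinary students can fall below the lowest key score
        else:
            overlap.append(i)
    overlap.sort(key=lambda i: -scores[i])  # highest scores first
    for rank, i in enumerate(overlap):
        labels[i] = 2 + rank * 5 // len(overlap)
    return labels

scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05, 0.02]
sector = [1, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0]
print(seven_strata(scores, sector))
```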

The last column of Table 2 shows the results of the balance check for each covariate after stratification. As expected, balance on the observed covariates is considerably greater after propensity score stratification: none of the 20 covariates exhibits a significant difference across school sectors. To illustrate, Fig. 3 depicts the within-stratum balance for mother’s educational level, the only covariate that required additional higher-order terms after the second iteration. Compared with the across-sector differences before stratification, greater balance is achieved within each propensity score stratum.

A similar process was used to obtain refined propensity scores after adding students’ problem-solving scores as a covariate. Figure 4 displays the balance check after stratifying students on the re-estimated propensity scores. As expected, good balance is achieved in almost all strata.

Appendix 2: Hierarchical Linear Models Used for School Effect Estimation

After stratifying students on their propensity scores, the within-stratum school effect of key versus ordinary schools was estimated using the following model:

\[ Y_{ij} = \gamma_{00} + \gamma_{01}\,{\text{Sector}}_{j} + u_{j} + e_{ij} \quad (1) \]

where \(Y_{ij}\) is the mathematics score for student \(i\) in school \(j\), \({\text{Sector}}_{j}\) is the school type (0 = ordinary school, 1 = key school), and \(\gamma_{01}\) is the school effect on \(Y_{ij}\). In the random part of the model, \(u_{j}\) and \(e_{ij}\) are the school-level and student-level residuals, respectively; both are normally distributed with mean 0, with variances \(\tau\) and \(\sigma^{2}\), respectively.
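To make the roles of \(\gamma_{01}\), \(u_{j}\), and \(e_{ij}\) concrete, the sketch below simulates data from this random-intercept model and recovers the school effect. All parameter values (200 schools of 30 students, an effect of 40 points, \(\tau = 400\), \(\sigma^{2} = 6400\)) are hypothetical, not estimates from the study. The simple estimator used here, the difference between the average school means of the two sectors, coincides with the maximum-likelihood or GLS estimate only in this balanced case with equal school sizes; unbalanced data need a mixed-model fit, for example `statsmodels.formula.api.mixedlm` with `groups` set to the school identifier.

```python
import random
from statistics import fmean

def simulate_model1(n_schools=200, n_per_school=30, g00=500.0, g01=40.0,
                    tau=400.0, sigma2=6400.0, seed=7):
    """Draw (school, sector, Y) from Y_ij = g00 + g01*Sector_j + u_j + e_ij,
    with u_j ~ N(0, tau) and e_ij ~ N(0, sigma2). All parameter values are hypothetical."""
    rng = random.Random(seed)
    rows = []
    for j in range(n_schools):
        sector = 1 if j % 2 == 0 else 0        # half key, half ordinary
        u_j = rng.gauss(0, tau ** 0.5)          # school-level residual
        for _ in range(n_per_school):
            e_ij = rng.gauss(0, sigma2 ** 0.5)  # student-level residual
            rows.append((j, sector, g00 + g01 * sector + u_j + e_ij))
    return rows

def estimate_g01_balanced(rows):
    """Difference between the average school means of key and ordinary schools.
    Matches the GLS estimate of g01 only when every school has the same number of students."""
    schools = {}
    for j, sector, y in rows:
        schools.setdefault(j, (sector, []))[1].append(y)
    means = {0: [], 1: []}
    for sector, ys in schools.values():
        means[sector].append(fmean(ys))
    return fmean(means[1]) - fmean(means[0])

rows = simulate_model1()
print(f"estimated school effect: {estimate_g01_balanced(rows):.1f}")  # true value in this simulation: 40
```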

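The combined school effect across strata was estimated using the following model:

\[ Y_{ij} = \gamma_{00} + \gamma_{01}\,{\text{Sector}}_{j} + \sum_{h=1}^{4} \delta_{h}\,{\text{Strata}}_{hij} + u_{j} + e_{ij} \quad (2) \]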

where \({\text{Strata}}_{hij}\) (\(h = 1, \ldots, 4\)) are dummy variables indicating four of the five propensity score strata, with the fifth serving as the reference category; they were added to model 1 to control for selection bias.
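The dummy coding described above can be sketched as follows. Which stratum served as the reference category is not stated, so using stratum 5 is an assumption, and the function names are hypothetical. Each student contributes an intercept, the sector indicator, and four stratum dummies to the fixed part of the model; a student in the reference stratum has all four dummies equal to 0.

```python
def strata_dummies(stratum, n_strata=5, reference=5):
    """Dummy-code a stratum label (1..n_strata), omitting the reference stratum.
    The choice of reference = 5 is an assumption for illustration."""
    if not 1 <= stratum <= n_strata:
        raise ValueError(f"stratum must be in 1..{n_strata}, got {stratum}")
    return [int(stratum == h) for h in range(1, n_strata + 1) if h != reference]

def fixed_part_row(sector, stratum):
    """Fixed-effect predictors for one student: intercept, Sector_j, and four stratum dummies."""
    return [1, sector, *strata_dummies(stratum)]

# One key-school student (sector = 1) placed in each stratum in turn.
for h in range(1, 6):
    print(h, fixed_part_row(1, h))
```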

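Before stratification, the school effect with regression adjustment for the propensity score was estimated using the following model:

\[ Y_{ij} = \gamma_{00} + \gamma_{01}\,{\text{Sector}}_{j} + \lambda\,{\text{Logit}}P_{ij} + u_{j} + e_{ij} \quad (3) \]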

where \({\text{Logit}}P_{ij}\) is the logit of propensity score for student \(i\) in school \(j\).

After stratification, Eq. 3 was used to estimate within-stratum school effects with regression adjustment for the propensity score. For the combined school effect across strata, the estimate with regression adjustment for the propensity score was obtained by adding \({\text{Logit}}P_{ij}\) to model 2.
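Why adding the logit of the propensity score helps can be seen in a simplified simulation. This sketch makes several assumptions that differ from the study: it is single-level (the school residual \(u_{j}\) is dropped), it uses the true rather than an estimated logit, and a single hypothetical confounder `x` drives both sector choice and the outcome, with a true sector effect of 2. Regressing on sector alone is biased upward; adding the logit of the propensity score, as in Eq. 3, removes the confounding in this setup because the logit is linear in `x`.

```python
import math
import random
from statistics import fmean

def cov(a, b):
    """Sample covariance."""
    ma, mb = fmean(a), fmean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)

def simulate(n=20_000, effect=2.0, seed=3):
    """Hypothetical single-level data in which a confounder x drives both sector choice and Y."""
    rng = random.Random(seed)
    sector, logit_p, y = [], [], []
    for _ in range(n):
        x = rng.gauss(0, 1)                     # e.g., prior ability (assumed)
        lp = -0.5 + 1.2 * x                     # true logit of the propensity score
        s = 1 if rng.random() < 1 / (1 + math.exp(-lp)) else 0
        sector.append(s)
        logit_p.append(lp)
        y.append(effect * s + 3.0 * x + rng.gauss(0, 1))
    return sector, logit_p, y

def naive_effect(s, y):
    """Difference in mean outcome between sectors (OLS of y on s alone)."""
    return cov(s, y) / cov(s, s)

def adjusted_effect(s, lp, y):
    """Coefficient on sector in OLS of y on (1, sector, logit_p)."""
    sss, sll, ssl = cov(s, s), cov(lp, lp), cov(s, lp)
    ssy, sly = cov(s, y), cov(lp, y)
    return (sll * ssy - ssl * sly) / (sss * sll - ssl ** 2)

s, lp, y = simulate()
print(f"naive: {naive_effect(s, y):.2f}, adjusted: {adjusted_effect(s, lp, y):.2f}")
```

In real data the adjustment is only as good as the propensity model, which is why the appendix pairs it with stratification and balance checks.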

Cite this article

Yang, X., Ke, Z., Zhan, Y. et al. The Effect of Choosing Key Versus Ordinary Schools on Student’s Mathematical Achievement in China. Asia-Pacific Edu Res 23, 523–536 (2014). https://doi.org/10.1007/s40299-013-0126-5
