Abstract

The work presented in this paper was part of our investigation in the ROBOSKIN project. The project has developed new robot capabilities based on the tactile feedback provided by novel robotic skin, with the aim of providing cognitive mechanisms to improve human–robot interaction capabilities. This article presents two novel tactile play scenarios developed for robot-assisted play for children with autism. The play scenarios were developed against specific educational and therapeutic objectives that were discussed with teachers and therapists. These objectives were classified with reference to the ICF-CY, the International Classification of Functioning, Disability and Health, version for Children and Youth. The article presents a detailed description of the play scenarios, and case study examples of their implementation in HRI studies with children with autism and the humanoid robot KASPAR.


1 The Importance of Play and the Case of Autism: A Brief Introduction

Play is an essential activity during childhood, and its absence is an obstacle to the development of a healthy child. In its International Classification of Functioning, Disability and Health, version for Children and Youth (ICF-CY), the World Health Organisation considers play to be one of the most important aspects of a child’s life when assessing children’s quality of life [1].

A major rationale for the importance of play in early childhood special education settings is that play is thought to be correlated with development in other areas such as cognition, social development and language development [2]. During play, children can learn about themselves and their environments as well as develop cognitive, social and perceptual skills [3]. Play activity is one of the striking examples of the creation and use of auxiliary stimuli, which play a crucial part in the child’s development [4]. According to Vygotsky, the potential for cognitive development depends upon the level of development achieved when children engage in social interaction. Bruner [5] has shown that the motivation for play, and play itself, are socially constructed. The meanings of things are learnt socially, within a particular context [5, 6]. In Bruner’s view, growth of the mind is assisted from outside the person by the culture he or she lives in.

Absence of play during childhood may impair children’s cognitive development and learning potential, and may result in isolation from the social environment [4, 7, 8].

Autism is a life-long developmental disorder that can occur to different degrees and in a variety of forms [9]. The main impairments characteristic of people with autism are in social interaction, social communication and social imagination (referred to by many authors as the triad of impairments, e.g. [10]). A child with autism will have difficulty in interpreting other people’s intentions, facial expressions and emotional reactions, might be unable to relate to other people, make little use of eye contact and have difficulty in verbal and nonverbal communication [11]. Some have no language skills at all and some have limited language. Because of these impairments, children with autism have great difficulty in forming and maintaining social relationships [12]. It is difficult for them to engage in social play, let alone collaborative play, and they will typically play by themselves with their own toys [10, 13].

In the playground, children use touch and physical contact to communicate, to give or receive support and to develop their social relationships. For some children with autism, tactile interaction presents difficulties that impede their ability to interact appropriately with their social environment. However, as some children with autism do not have verbal skills, or use their verbal skills inadequately, tactile interaction (if tolerated) might be an important means of communication for these children. It has been suggested that problems with verbal skills and eye gaze in children with autism create the need for touch to replace these deficient channels of communication [14].

In therapy, touch has a social element, a sense of community that positively affirms the patient; touch by another person, when it happens, is also seen as a way of breaking through isolation [15, 16]. In therapy, where direct interaction between people is too difficult or not possible at all (as in the case of autism), it is very common for props to be used, which can become particularly significant as bridges for relating to others, be it in the client–therapist relationship or in relationships amongst peers [17, 18]. In a similar way, by using robots as therapeutic or educational toys, we may provide this bridge, whereby autistic children can feel safe to explore, during interaction with the robot, behaviours that they otherwise would not be able to. In recent years there have been many examples of robots being used in interaction with children with autism for therapeutic or educational purposes, e.g. improving imitation skills [19, 20] or eliciting a motivation to share mental states [21], to mention just a few [22]. With the robot acting as a mediator, the children can also be encouraged to explore their interaction with other people in a way that is non-threatening to them [23–27]. A ‘tactile’ robot can be used as a buffer that mediates indirect rather than direct human–human contact, until the person builds enough strength and confidence to tolerate direct human contact. A robot with tactile applications could allow a person with autism to explore touch in a way that is completely under their control. In addition, as the nature of touch is very individual, a robot used in such applications could take individual needs and differences into account and adjust its behaviour accordingly.

The play scenarios presented in this article, and the robotic system used (the humanoid robot KASPAR, equipped with tactile capabilities), may provide the play experiences mentioned above [4] and can be viewed as auxiliary stimuli that support the child in advancing beyond his or her current level of development.

The main aim of this article is to present a detailed description of novel play scenarios, and case study examples of their implementation in human–robot interaction (HRI) studies with children with autism. These case study examples show the potential of the play scenarios to support embodied and cognitive learning in children with autism during tactile interactions with the robot. The play scenarios are presented here comprehensively to allow their use and implementation by other researchers. The work presented here was part of our investigation in the ROBOSKIN project [28]. The project has developed new robot capabilities based on the tactile feedback provided by novel robotic skin, with the aim of providing cognitive mechanisms to improve human–robot interaction capabilities. A longitudinal study to investigate how persistent the learning effect might be for children with autism is planned for the future.

2 The Study

The case study examples presented in this article are taken from studies that were conducted in three different special needs schools in the UK for children from different age groups and with different abilities (moderate and severe learning difficulties) as follows:

a. Pre-school nursery for young children with autism, some of whom have very limited abilities.
b. Primary special school for children with moderate learning difficulties.
c. Secondary school for children with severe learning difficulties.

Table 1 summarises the activities performed to evaluate the play scenarios for robot-assisted play for children with autism, together with the initial evaluation of tactile social behaviour in child–robot interactions. The table also includes a precursor evaluation study of the scenarios with typically developing children in a mainstream school. It is important to note that the project’s objective in the area of skin-based social cognition was to provide a proof of concept in the field of robot-assisted play for children with autism. Clinical evaluation and long-term interventions were beyond the scope of the project and will be reported in future publications.

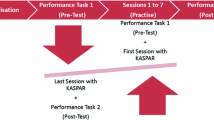

2.1 Experimental Design, Trials Set Up and Procedure

The sessions were defined as adult-facilitated, semi-structured play conditions in individual sessions. The trials were designed to allow the children to get used to the presence of the investigator, to become familiar with the robot and to have opportunities for free and unconstrained interaction with the robot and with the adults present (i.e. teacher, experimenter), should they choose to.

The sessions were recorded by two stationary video cameras. Each session was divided into three parts: the Familiarization part, which introduced the robot; the Play-specific scenario part, in which the experimenter intervened according to the scenario’s procedure; and the Closing part, which included free interaction on the child’s initiative as well as time to say goodbye.

The sessions were conducted in a familiar room often used by the children for various activities. Before the trials, the humanoid robot was placed on a table and connected to a laptop. The investigator was seated next to the table. The children were brought to the room by their carer and then greeted by the investigator and by the robot, which introduced itself and invited the children to play with it. The robot could respond autonomously to different tactile interactions, and could also be operated remotely via a wireless remote control (a specially programmed keypad), either by the investigator or by the child. Each session lasted approximately 15 min. A session would stop early if the child indicated that they wanted to leave the room, although this rarely happened.

2.2 The Humanoid Robot KASPAR

KASPAR is a child-sized robot which acts as a platform for human–robot interaction (HRI) studies, using mainly bodily expressions (movements of the head, arms and torso), facial expressions and gestures to interact with a human. KASPAR is a 60 cm tall robot that is fixed in a sitting position (see Fig. 1). KASPAR has 8 degrees of freedom (DOF) in the head and neck, 1 DOF in the torso and 6 DOF in the arms. The face is a silicone-rubber mask supported by an aluminium frame. It has 2-DOF eyes, eye lids that can open and shut, and a mouth capable of opening and smiling. KASPAR is fitted with several skin patches on the cheeks, torso, left and right arms, back and palms of the hands, and the soles of the feet. These skin patches are made of distributed pressure sensors based on capacitive technology and covered by a layer of foam [29]. An emphasis on the features used for communication allows the robot to present facial/gestural feedback clearly, e.g. by changing the orientation of the head, moving the eyes and eye lids, moving the arms, and ‘speaking’ simple, pre-recorded sentences. The tactile sensing capabilities allow the robot to respond autonomously when touched [30]. The robot can also be operated via a remote-controlled keypad (implementation examples of the robot’s operation can be found in [31]).

3 The Play Scenarios

Although children with autism share the same core difficulties, each child displays these in an individual way [32]. In addition to impaired communication, atypical sensory processing, motor difficulties and cognitive impairment are other very common characteristics of autism. As children with autism may manifest these symptoms to varying degrees, they form an extremely heterogeneous population [33]. Building tactile play scenarios for children with autism therefore requires ongoing exploratory field trials together with continued consultation with the people who know the children, in order to feed back into the design loop. This is due to the nature of this user group, which includes children with a wide range of abilities and a variety of expected (at times unpredictable) behaviours. In previous work by the authors (and as part of a larger consortium in the FP6 project IROMEC [34]), user requirements, therapeutic and educational objectives and play scenarios were developed in consultation with panels of experts, and user studies were conducted over several years. One of the outcomes of that project was a set of novel play scenarios encouraging children with learning difficulties, children with severe motor impairment and children with autism to discover a range of play styles, from solitary to collaborative play, using a non-humanoid mobile robot [35].

In the current work, play scenarios were developed specifically for skin-based interaction in robot-assisted play targeting children with autism. Precursor studies carried out by the authors to elicit high-level requirements for skin-based interaction for these children were reported in [36]. These were followed by a series of preliminary experimental investigations with initial play scenarios, conducted with children with autism and the robot KASPAR, which provided essential observational data on children’s behaviour during child–robot tactile interaction and were reported in [37, 38]. These precursor studies provided valuable input to the development of the tactile play scenarios reported here.

In this article, scenarios are regarded as higher-level conceptualisations of the “use of the robot in a particular context”. The design process, based on user-centred design principles, and the unified structure of the scenarios were adapted from the scenario-based design methodology [39, 40] and from the results of the authors’ previous work [34, 41], where a more detailed description of the whole development process of the play scenarios can be found.

In summary, the structure of the scenarios consists of the description of actors and their roles, the type of play, the description of the activity, the activity model, the place and setting, the artifact used, and the duration of the activity. The play scenarios were developed against specific therapeutic and educational objectives adopted from previous work of the authors [42], where the objectives have been identified and developed in consultation with panels of experts and according to the ICF-CY classification [1].

It is important to note that children do not develop their skills in isolation from each other, and that the abilities they gain might overlap across developmental areas (e.g. cognitive, social and emotional development). The therapeutic and educational objectives selected for the play scenarios were classified into five areas of child development: sensory development, communication and interaction, cognitive development, motor development, and social and emotional development.

3.1 Tactile Play Scenario TS01—“Make It Smile”—A Cause and Effect Game

Theoretical/Methodological Rationale

(a) Non-formal therapy and learning. The development of this play scenario was based on concepts taken from the non-formal therapy and learning methodology, in which learning emerges from play situations that offer resources for joyful experiences and expressive interactions, and in which the child is empowered to control feedback stimuli [43].

(b) Integration of symbolic activity with motor manipulation. Play appears to involve the integration of symbolic activity with motor manipulation [2]. To further explore and understand the implications of these associations, Eisert and Lamorey pointed out the need to investigate the relationships between play and the domains of cognitive and language development in children who have developmental deficits in these symbolic areas, e.g. young children with autism. The play scenario TS01 (“make it smile”) described below is a ‘cause and effect’ game that allows low functioning children with autism to explore simple motor manipulation integrated with basic symbolic activity, an area of development known to be difficult for this population.

(c) Supporting the curriculum of autism in the early years. Cumine et al., in their practical guide to the curriculum of autism in the early years, advised different methods to support developing skills in different areas of the curriculum (e.g. personal, social and emotional development; communication and language) [32]. Their advice includes:
- Provide experience which will enable the child to make choices.
- Help young children recognise their own feelings and those of people around them.
- Give the child the opportunity to link language to physical movement.

These guidelines were implemented in the cause and effect play scenario TS01, in which the children can choose which robot behaviours they want to explore. Some of the robot’s behaviours combined physical movement with spoken language (which the children often repeated out loud). Some of the robot’s behaviours related to the expression of feelings (combining a facial expression, e.g. sad or happy, with an appropriate posture and audio feedback).

(d) Action and reinforcement cycle. Interaction with the environment provides stimuli in what can be viewed as a dyadic model, which influences and controls the behaviour of the child and is crucial to child development [44]. Here, the interaction between the child and the environment is based on reciprocal stimulation that creates transitions of change and modification. This leads to refinement in the nature of the child’s behaviour, which also becomes more orderly. An example of this can be observed when an infant makes initial attempts at motor co-ordination. As the infant receives approval and encouragement from a carer (e.g. a parent), he or she puts more effort into it, which leads to a small refinement, which in turn leads to more encouragement, and so on. This sequence of actions and reinforcements becomes orderly and predictable, and can enhance the quality of the child’s behaviour and affect the speed with which he or she develops. Play scenario TS01 implements this dyadic model of interaction at a basic level for use with low functioning children with autism. Here the robot KASPAR, equipped with ROBOSKIN tactile capability (tactile feedback from a robotic skin [28]), provides stimuli and reinforcement in a controlled manner (with a gradual increase in complexity), supporting the child’s social cognition and interaction skills.

Scenario TS01:

TITLE: “Make it Smile”—a Cause and Effect game with a humanoid robot—KASPAR

ACTORS/ROLES: The actors of the scenario are one child and one adult. The adult can be a parent, a teacher, a therapist, etc. The child engages in tactile interaction with the robot, taking the initiative to explore the robot’s autonomous reactions. The adult’s role is to operate the robot with the remote control to add and reinforce the appropriate feedback when needed, and to present further cognitive learning opportunities for the child when possible (according to the child’s abilities).

ACTIVITY DESCRIPTION: The child is shown how to operate the robot by touch. Touching different parts of the robot will cause different reactions and movements, e.g. touching one hand will cause the robot to raise the opposite hand, touching the upper arm will cause the robot to turn its head in that direction, and touching the side of the head will cause the robot to play a sound. The robot is able to classify different types of touch and, depending on whether a child touches it in a gentle or rough manner, responds with appropriate feedback (body movements, gestures, speech) and facial expressions (in this case a smile for a gentle touch and a frown in response to a rough touch). For example, a light touch or tickling of the left foot will cause the robot to smile and say ‘this is nice, you are tickling me’; a light touch of the torso will cause the robot to smile and ‘laugh’ out loud, saying “ha ha ha”; and inappropriate tactile interaction (e.g. hitting the robot or using too much force) will cause the robot to show a ‘sad’ expression, turn its face and torso away to one side, cover its face with its hand and give audible feedback saying “ouch you are hurting me”.

The game starts with the child operating the robot by touching it in different locations, exploring its different autonomous reactions. The adult uses the remote control when needed to produce additional feedback (e.g. if the child hits the robot in an area that is not covered by the sensors, the adult can remotely activate the robot to give discouraging feedback). In addition, depending on the child’s cognitive abilities, the adult can prompt the child after each improper interaction to look at the robot’s face and say how the robot ‘feels’, whether it shows a sad or happy face, and then encourage the child to make the robot smile again (e.g. by tickling the foot or the torso). The adult can also re-emphasise the robot’s response by imitating the robot’s movement and posture and exaggerating its facial expression. This can be repeated many times.

ACTIVITY MODEL

The robot has nine behaviours (movements/postures):

- Moving each arm individually up or down (reaction to touch on each hand)
- Moving the head to each side individually
- ‘Happy’ posture: arms open to the side, head and eyes straight forward, mouth open in a smile, with the audio “ha ha ha” (reaction to a light touch/tickling in the torso area)
- ‘Sad’ posture: hands covering the face, head and eyes looking down, head and torso turned away to the side, and the robot says “ouch you are hurting me” (reaction to inappropriate tactile interaction)
- ‘Encouraging’ posture: eyes blinking, mouth opening and closing and then remaining in a smile position, with the audio “this is nice, you are tickling me” (reaction to a touch of the robot’s right foot)
- Blinking and playing bell sounds (reaction to touch on the sides of the head)

The interaction here between the child and the robot is under the child’s control. The child gets sensory stimuli whilst exploring the robot’s response to tactile interaction. In addition there could be interaction between the child and the adult (with more able children), to encourage the child to look and detect what effect his/her actions have had on the robot’s expression (sad or happy).
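The touch-to-reaction mapping of TS01 can be summarised as a lookup table. The Python sketch below is illustrative only and is not KASPAR's controller: the location names, the normalised 0 to 1 pressure scale and the 0.7 threshold are hypothetical, and a single pressure threshold stands in for the robot's actual touch classification [30], which is not described here.

```python
from enum import Enum


class TouchType(Enum):
    GENTLE = "gentle"  # light touch or tickling
    ROUGH = "rough"    # hitting or using too much force


# Hypothetical threshold on a hypothetical normalised 0..1 pressure reading.
ROUGH_THRESHOLD = 0.7

# Hypothetical names for the sensorised skin locations, mapped to the
# reactions listed in the activity model.
REACTIONS = {
    "left_hand": "raise right arm",
    "right_hand": "raise left arm",
    "left_upper_arm": "turn head left",
    "right_upper_arm": "turn head right",
    "side_of_head": "blink and play bell sounds",
    "torso": "happy posture: smile, open arms, 'ha ha ha'",
    "foot": "encouraging posture: blink, smile, 'this is nice, you are tickling me'",
}
SAD_REACTION = "sad posture: cover face, turn away, 'ouch you are hurting me'"


def classify_touch(pressure: float, threshold: float = ROUGH_THRESHOLD) -> TouchType:
    """Crude stand-in for touch classification: strong pressure counts as rough."""
    return TouchType.ROUGH if pressure >= threshold else TouchType.GENTLE


def react(location: str, pressure: float) -> str:
    """Map one touch to the robot's autonomous reaction (TS01 sketch)."""
    if classify_touch(pressure) is TouchType.ROUGH:
        # Any inappropriate touch triggers the discouraging 'sad' behaviour.
        return SAD_REACTION
    # Unmapped locations give no autonomous reaction; in the scenario the adult
    # can then trigger a behaviour (e.g. SAD_REACTION) from the keypad instead.
    return REACTIONS.get(location, "no reaction")
```

For example, `react("left_hand", 0.2)` yields the opposite-arm movement, while any location touched with pressure above the threshold yields the 'sad' posture.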

PLACE/SETTING

The robot is placed on a table. The adult sits next to it. The child sits in front of the robot, exploring cause and effect by playing tactile interaction games with it. The adult may use the remote control to activate certain robot behaviours when needed.

ARTIFACTS/MEDIA

The child-sized humanoid robot KASPAR.

TIME/FLOW

The duration of this activity can be from under a minute to 15 min or even longer, depending on how long the child is interested and engaged in the game.

KEYWORDS

Cause and effect, enjoyment, excitement, taking initiative, cognitive learning of basic ‘sad’ and ‘happy’ expression, social interaction with another person

3.2 Play Scenario TS02 (A), (B), (C)—“Follow Me”

Rationale

The game was developed by adopting basic principles from the ‘flow theory’ developed by the psychologist Mihaly Csikszentmihalyi. “Flow is the mental state that a person had during an activity characterised by the energy and joy that keeps motivate the person to perform the activity. Flow could be understood as the pleasing or fun moment of an action where the challenge of a new activity combines with the personal skills of each individual” [45].

In his research, he identified factors that make an activity enjoyable, such that participants want to carry on. Some of these factors, on which the game in scenario TS02 is based, are as follows:

- A challenging activity that requires skills: there needs to be a balance between the opportunity for action, the challenge in the game and the player’s skill. Too high a challenge will produce anxiety; too easy an activity will produce boredom. In Scenario TS02 we implemented three levels of difficulty that challenge the player, and we balance challenge and skill by moving up a level to present a challenge, but also moving down a level when needed (according to the player’s ability) to allow the player to continue playing successfully when the challenge is too high.
- Clear goals and feedback: clear, immediate feedback allows the individual to know they have succeeded. Such knowledge creates ‘order in consciousness’. This was implemented in the game by giving audible sensory feedback at every step of the game.
- Concentration on the task at hand: when one is thoroughly absorbed in an enjoyable activity, there is no room for troubling thoughts. The game in scenario TS02 presents small challenges at every step and thus requires the player’s attention throughout the game.

Scenario TS02:

TITLE: ‘Follow Me’ game with a humanoid robot—KASPAR

ACTORS/ROLES

The actors of the scenario are one child and one adult. The adult can be a parent, a teacher, a therapist, etc. The child engages in a tactile interaction game with the robot. The adult’s role in the basic scenario is simply to encourage the child and reinforce positive feedback on successful operations. Using the remote control, the adult can also increase or decrease the level of difficulty of the game as needed (according to the child’s abilities).

ACTIVITY DESCRIPTION: In each round of the game, after announcing the beginning of that round, KASPAR points with one of its hands to a sequence of 2, 3 or 4 of its different body parts that have sensors attached to them (e.g. right leg, torso, left arm), according to the level of the game. The game has three levels of difficulty:

i. In the first level, two body parts are shown in each sequence.
ii. In the second level, three different body parts are shown in each sequence.
iii. In the third level, four different body parts are shown in each sequence.

The robot’s pointing is accompanied by blinking of the eye lids and movement of the head in the direction of the respective body part. The player, in his/her turn, has to activate the sensors by touching KASPAR’s body parts in the same order as they were presented. For each correct activation of a sensor, KASPAR blinks its eye lids and plays a soft bell sound to provide positive audible feedback. KASPAR gives distinctly different audible feedback when the player touches a wrong sensor and suggests (in ‘spoken’ language) trying again. After three rounds at a given level are completed correctly, the game progresses to a higher level. If the player touches the wrong body part in two consecutive rounds, the game regresses to a lower level. The game ends when the player has completed all three levels, or has successfully completed a lower level after a second unsuccessful attempt at a higher level.

On successful completion of the last round, KASPAR announces the end of the game and provides additional audible and vocal positive feedback.
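The progression rules of TS02 can be read as a small state machine. The Python sketch below is one possible interpretation, not the software used in the study: it assumes that the three correct rounds needed to complete a level are counted cumulatively at that level, that a failed round is one in which a wrong body part was touched, and that at level 1 two failed rounds simply repeat the level. The class and field names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FollowMeGame:
    """Hypothetical model of the TS02 level-progression rules."""

    max_level: int = 3
    rounds_to_advance: int = 3   # correct rounds needed to complete a level
    fails_to_regress: int = 2    # consecutive failed rounds before dropping a level
    max_attempts: int = 2        # failed attempts at a level before play stops below it
    level: int = 1
    correct_rounds: int = 0
    consecutive_fails: int = 0
    failed_attempts: dict[int, int] = field(default_factory=dict)
    finished: bool = False

    def record_round(self, correct: bool) -> None:
        """Update the game state after one round of pointing and touching."""
        if self.finished:
            raise RuntimeError("the game has already ended")
        if correct:
            self.consecutive_fails = 0
            self.correct_rounds += 1
            if self.correct_rounds == self.rounds_to_advance:
                self._complete_level()
        else:
            self.consecutive_fails += 1
            if self.consecutive_fails == self.fails_to_regress:
                self._regress()

    def _complete_level(self) -> None:
        higher = self.level + 1
        # End after the top level, or after completing a lower level once the
        # higher one has already been failed max_attempts times.
        if self.level == self.max_level or self.failed_attempts.get(higher, 0) >= self.max_attempts:
            self.finished = True
        else:
            self.level = higher
        self._reset_counters()

    def _regress(self) -> None:
        # Record an unsuccessful attempt at this level, then drop one level
        # (level 1 is the floor, so the player simply repeats it).
        self.failed_attempts[self.level] = self.failed_attempts.get(self.level, 0) + 1
        self.level = max(1, self.level - 1)
        self._reset_counters()

    def _reset_counters(self) -> None:
        self.correct_rounds = 0
        self.consecutive_fails = 0
```

Under these assumptions, a player who answers nine rounds correctly finishes at level 3, while a player who twice fails level 2 and then completes level 1 again finishes at level 1. Keeping the counts as parameters makes it easy to adapt the thresholds to an individual child, in the spirit of the scenario's adjustable difficulty.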

ACTIVITY MODEL

The robot has nine sequences of movements as follows:

- Three different sequences at level 1, where in each sequence KASPAR shows a different combination of two body parts (e.g. right leg and torso; left arm and right cheek)
- Three different sequences at level 2, where in each sequence KASPAR shows a different combination of three body parts (e.g. left cheek, right leg and torso; left leg, left arm and right cheek)
- Three different sequences at level 3, where in each sequence KASPAR shows a different combination of four body parts (e.g. right leg, left arm, right cheek and torso; torso, right arm, cheek and left leg)
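One possible representation of the sequence sets, and of the step-by-step feedback within a round, is sketched below in Python. It lists only the example sequences quoted above (the remaining sequence at each level is not specified in the text) and assumes that a round ends as soon as a wrong sensor is touched; `check_round` is a hypothetical helper, not part of the KASPAR software.

```python
# Example sequences quoted in the scenario text; the third sequence at each
# level is not specified there, so only two per level are listed.
SEQUENCES = {
    1: [("right leg", "torso"), ("left arm", "right cheek")],
    2: [("left cheek", "right leg", "torso"),
        ("left leg", "left arm", "right cheek")],
    3: [("right leg", "left arm", "right cheek", "torso"),
        ("torso", "right arm", "cheek", "left leg")],
}


def check_round(shown: tuple[str, ...], touches: list[str]) -> tuple[list[str], bool]:
    """Hypothetical helper: compare the child's touches with the shown sequence.

    Returns the feedback given after each touch and whether the round was
    completed correctly. Assumes the round ends at the first wrong touch.
    """
    feedback: list[str] = []
    for expected, touched in zip(shown, touches):
        if touched == expected:
            feedback.append("bell")       # eye-lid blink + soft bell sound
        else:
            feedback.append("try again")  # distinct sound + spoken prompt
            return feedback, False
    # Correct only if every body part in the sequence was touched, in order.
    return feedback, len(touches) >= len(shown)
```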

The game has a basic scenario and two additional variations as follows:

(a) In the basic scenario [TS02(A)], the interaction is between the child and the robot under the adult’s supervision and encouragement. The child receives sensory stimuli in each tactile interaction and an additional sensory reward at the end of each successful round.
(b) Variation 1 [TS02(B)]: this variation is aimed at low functioning children with autism. In this variation, after each sequence that the robot presents, the adult shows the child the correct sequence by pointing to or grasping his or her own body parts one at a time, showing the child which body part to touch on KASPAR. This in effect adds an imitation aspect to the game, in which the interaction is also between the child and the adult. The child copies the adult but uses KASPAR’s limbs (e.g. when the adult grasps his own left arm with his right hand, the child knows to grasp KASPAR’s left arm).
(c) Variation 2 [TS02(C)]: this variation is aimed at more able children. It adds another dimension by turning the game into a turn-taking game. First the adult shows the child the correct interaction for each sequence shown by KASPAR, as in Variation 1 (the child copies the adult); then the child and the adult swap roles, and it is the child’s turn to show the adult the correct sequence that KASPAR initiated (the adult ‘copies’ the child and, from time to time, can also introduce ‘mistakes’ to give the child opportunities to ‘help’ the adult by showing the correct sequence again).

PLACE/SETTING

The robot is placed on a table. The adult sits next to it. The child/participant sits in front of the robot, exploring cause and effect by playing tactile interaction games with it. The adult may use the remote control to activate certain robot behaviours when needed.

ARTIFACTS/MEDIA

The child-sized humanoid robot KASPAR.

TIME/FLOW

The duration of this activity can be from under a minute to 15 minutes or even longer, depending on how long the child/participant is interested and engaged in the game.

KEYWORDS

Cause and effect, enjoyment, excitement, taking initiative, cognitive learning of basic ‘sad’ and ‘happy’ expression, social interaction with another person.

3.3 Scenario Evaluation Tools

As the goal of the play scenarios is to facilitate and promote tactile social interaction through playing games, basic tools were developed to test the scenarios’ usability and to evaluate their playfulness, i.e. to monitor how far the users were motivated by and/or enjoyed the game. The results fed back into improving the scenarios themselves and the users’ interaction with the robot and with the other humans present. The tools used were the Scenario Observation Sheet and the Scenario Assessment Sheet (see samples in Appendices A and B, respectively).[Footnote 1]

3.3.1 Scenario Observation Sheet

This tool is for an observer (e.g. investigator, teacher, additional observer) to fill in during or immediately after the play session. It aims to observe the interaction between the children and the robot, as well as the children’s behaviour and the robot’s actions and may highlight the following:

- Difficulties the player faced in following the game, or unexpected ways in which the player operated the robot.
- Any positive, negative or unusual reaction of the player to the robot’s behaviour during the game.
- Issues with the robot’s behaviour/reaction during interactions (e.g. response time).

3.3.2 Scenario Assessment Sheet

This assessment sheet is a simple metric that is based on observation and designed to collect information about the scenario played where the following aspects can be rated:

Playability and motivation: playability is understood as the moments of fun during the game (e.g. laughter or positive comments during play) and the understanding of the game’s dynamics (e.g. whether users understand how to play the scenario). Motivation is understood as the desire the users have to participate in and play a specific scenario with a robot.

Usability: This measures the usability of the robot in a specific play scenario. At a higher level: does the robot do what is required in that scenario, and does it carry out the actions that the users expect according to the scenario script? How does the robot respond to unexpected user behaviour or reactions in a specific scenario? Any aspect of the robot’s use that may be considered important, and any observation, comment or action by the users that the observers consider important and relevant, is recorded.

Tactile interaction: the general level of tactile child–robot interaction, whether scripted or not.

Spontaneous tactile interaction: unscripted tactile interaction on the player’s own initiative.

4 Play Scenario Implementations

The refinement and evaluation of the tactile play scenarios continued in user studies with children with autism in different schools. It is important to note here not only that interactive scenarios with low functioning children with autism often feature free or less-structured interactions, but also, as stated above, that children with autism are an extremely heterogeneous population: although they share the same core difficulties, each child displays them in an individual way. Therefore the evaluation and refinement of the scenarios were carried out on a case-study basis. We consulted the teachers and therapists who observed some of the sessions and used the scenario evaluation tools (i.e. the Scenario Observation Sheet and the Scenario Assessment Sheet), as well as the general observation notes they took during the sessions. At times, the scenarios were also adapted during the sessions to the needs or abilities of a specific child. Examples are given below.

4.1 Play Scenario TS01—“Make it Smile”—Case Study Examples

The play scenario TS01 is a ‘cause and effect’ game that allows low functioning children with autism to explore simple motor manipulation integrated with basic symbolic activity—an area of development known to be a difficulty for this population.

In this scenario the child engages in tactile interaction with the robot, taking the initiative to explore the robot’s autonomous reactions. The adult’s role is to operate the robot with the remote control to add and reinforce appropriate feedback when needed, and to present further cognitive learning opportunities for the child when possible (according to the child’s abilities).

4.1.1 Example 1—Young Children Exploring Cause and Effect

The children attending the pre-school nursery were young and less able, and it was agreed with the teachers that the best objectives for the sessions with KASPAR would be to work on simple, basic cause and effect games, helping the children to link their actions to the response of the robot (Fig. 2). Some of the existing robot behaviours (e.g. touching one hand of the robot causes it to raise its other hand) had already proved too difficult for some of the children to follow, and the robot’s response had to be changed accordingly for these children. The children often used the other, simpler robot behaviours: touching the side of the head to activate a ‘bleep’ sound; stroking the leg or the torso to activate a ‘happy’ posture with a big smile, accompanied by a verbal response from the robot (e.g. “this is nice, it tickles me”, “ha ha ha”); or, when hitting the robot or pressing too hard, the verbal response “ouch you are hurting me” accompanied by a ‘sad’ posture (Fig. 3). Some initial exploration of cognitive learning, in which the robot gave feedback according to the nature of the tactile interaction, also took place; examples observed by the teacher in this respect are given below. However, for these young children with autism it was too early to see any lasting positive results, and a long-term study is needed.
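The cause and effect rules described above can be summarised as a lookup from where and how the robot is touched to how it reacts. The following is a minimal illustrative sketch, not KASPAR’s control software or the ROBOSKIN skin interface: the `Touch` and `Response` types, the region and touch-type labels, and the `respond` function are hypothetical, and it assumes touches have already been classified (e.g. as a stroke or a hit) by the skin layer.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Touch:
    """A touch already classified by a (hypothetical) skin-processing layer."""
    region: str  # hypothetical labels, e.g. "head_side", "leg", "torso", "left_hand"
    kind: str    # hypothetical touch classes: "tap", "stroke" or "hit"


@dataclass(frozen=True)
class Response:
    """The robot's reaction: a posture and/or something it says."""
    posture: Optional[str]    # "happy", "sad" or None
    utterance: Optional[str]  # spoken phrase or sound cue, or None


def respond(touch: Touch) -> Optional[Response]:
    """Map a classified touch to a TS01-style reaction (illustrative rules only)."""
    # A forceful touch anywhere is treated as hurting the robot.
    if touch.kind == "hit":
        return Response(posture="sad", utterance="ouch you are hurting me")
    # Touching the side of the head plays a simple sound cue.
    if touch.region == "head_side":
        return Response(posture=None, utterance="bleep")
    # Stroking the leg or torso triggers the 'happy' posture and a verbal reply.
    if touch.kind == "stroke" and touch.region in ("leg", "torso"):
        return Response(posture="happy", utterance="this is nice, it tickles me")
    # Any other touch has no scripted reaction in this sketch; the adult can
    # still trigger a response manually from the remote control.
    return None


# Example: a gentle stroke on the torso makes the robot 'happy'.
print(respond(Touch(region="torso", kind="stroke")))
```

Keeping the rules in one small function makes the kind of per-child adaptation mentioned above (removing a behaviour a child cannot yet follow) a matter of editing or deleting a single rule.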

Examples of a teacher’s observations of children at the nursery (see Note 2):

(these notes were taken by the head teacher, who observed all sessions)

- AG: although AG needed lots of prompting, AG eventually recognised the robot’s sad face when it was hurt.

- AI: after pressing only the buttons on the remote control for some time, without paying attention to anything else (typical autistic behaviour), he started to take the initiative to touch the robot’s body, exploring the robot’s reactions.

- AI displayed an emerging awareness of cause and effect.

It was also noted by teachers in this nursery and in a secondary school, both with low functioning children with autism, that incorporating the robot’s verbal responses to tactile interaction into the play scenario was beneficial to the children. Some of the children with very limited language skills were observed trying to repeat, or repeating, the robot’s verbal responses out loud, which might help to improve their verbal skills as well as their understanding of their own actions and of the robot’s behaviour in the context of the interaction (e.g. ‘sad’/‘hurt’, ‘happy’/‘tickling’). The verbal responses also excited the children, prompting them to explore cause and effect further through tactile interaction with the robot. The following are some examples in this respect, involving several very low functioning children with autism, observed by the teachers in different sessions.

Examples of a teacher’s observations of several children at a secondary school:

(these notes were taken by a teaching assistant who was present in all sessions)

- New for GT: he immediately engaged with KASPAR, touched his foot, stroked his chest and leant forward in his chair to get a close look at the robot. He explored cause and effect and, when asked, was able to make the robot happy by stroking its chest.

- GT produced some speech, e.g. “sa” for sad, and “tickle”.

- GT was very excited, rocking in his chair and making loud vocalisations.

- In the following session GT responded well to cause and effect. HS looked at KASPAR to see the robot’s reaction when he pressed buttons on the control panel or touched the robot.

- ID laughed when KASPAR laughed and copied the robot’s hand movements, with both hands up in the air. He smiled when KASPAR said “this is nice and gentle” (KASPAR’s response to a gentle touch on his leg).

- In the following session ID stroked the robot on its chest to make it laugh, and then expressed his joy with a big smile.

- After ID tried to put his finger in the robot’s eye, the experimenter triggered the response “ouch you are hurting me”, and the teacher demonstrated a gentle stroke on ID’s hand. ID then gently stroked KASPAR’s arm to trigger the ‘happy’ posture.

- In several sessions this game prompted CT to take the initiative to stroke the robot’s torso and touch its leg, enjoying the robot’s behaviour and verbal response with a big smile.

4.1.2 Example 2—Children Responding to KASPAR’s Reactions to Their Touch, Exploring ‘Happy’ and ‘Sad’ Expressions

Case-study analysis of these sessions emphasises aspects of embodiment and interaction kinesics. It revealed that the children with autism showed an inclination for tactile contact with the robot and some responsiveness to KASPAR’s embodied reactions to their touch. There was also some initial evidence to suggest that the children learnt across trials.

Some children, on first discovering the robot’s response to an inappropriate, forceful touch (i.e. turning away, hiding its face behind its hand and saying “ouch you are hurting me”), repeatedly sought this response (possibly for the sensory stimulation), outside the context and meaning of the overall interaction and despite KASPAR displaying his ‘sad’ expression. However, after several repetitions, and in some cases in later sessions, they started to pay attention to their actions in the context of the robot behaviours these caused (making the robot display a ‘sad’ expression and say ‘it hurts’), and they also started to appreciate that this was inappropriate behaviour. One child, for example, first started to ask ‘why he is doing it’ and ‘what is wrong’ when he noticed KASPAR’s sad face, and the investigator provided an explanation. In later sessions he continued to explore this behaviour, but after making KASPAR ‘sad’ he would stroke the robot on the back of the head and, on his own initiative and without any prompting, say ‘sorry KASPAR’.

Often, after KASPAR displayed a sad expression, the children started to gently stroke KASPAR’s torso or tickle his foot to make him display his ‘happy’ expression, and at times this was followed by a satisfied smile from the children. At times the investigator used the remote control to trigger a ‘sad’ expression on the robot’s face manually, encouraging the children to make it display a ‘happy’ expression (Fig. 4).

4.1.3 Example 3—Low Functioning Children with Autism Learning Cause and Effect Across Trials

The basic cause and effect game in scenario TS01 could potentially also be useful for very low functioning children with autism. The example below (Fig. 5) concerns a child (ID) who has almost no language skills and a very short attention span. He enjoyed exploring KASPAR through tactile interaction, although at times he handled KASPAR very roughly. After the teacher prompted him to be gentle, in the following sessions ID frequently stroked the robot on its chest to make it laugh, and then expressed his joy with a big smile.

4.2 Play Scenario TS02 “Follow Me”—Case Study Examples from Primary and Secondary Schools

Play scenario TS02, “Follow Me”, with all its variations, was first tried out with typically developing children in a mainstream school before being implemented, initially only in its basic form, with children with autism. In this scenario the robot points to a sequence of body parts (where skin patches are attached) and the child, in turn, needs to follow the sequence by touching the appropriate body parts of the robot in the same order in which they were shown. The robot then provides audio feedback: verbal encouragement after a successful round, or a notification of an error. Low functioning children with autism may have difficulty focusing their attention on the sequence that the robot shows and understanding the overall procedure of this turn-taking game. As anticipated, the experimenter initially had to point to the required body parts of the robot, showing the child where to touch and helping them to focus on the task and complete it successfully (with the sensory stimuli as a reward at the end). At a later stage, we further explored the implementation of this play scenario with children with autism, refining the basic form of the game as necessary, and also explored variations 1 & 2 of the game (which include additional elements of turn-taking and imitation) with this population.
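The turn-taking logic of a basic “Follow Me” round can be sketched as below. This is an illustrative sketch under stated assumptions, not the software used with KASPAR: the `BODY_PARTS` list, the function names and the choice to end a round at the first wrong touch are hypothetical. The optional `name_parts` flag models the adaptation, requested by therapists, in which the robot names each body part out loud as it points to it.

```python
import random
from typing import Callable, List, Sequence

# Hypothetical set of robot body parts fitted with skin patches.
BODY_PARTS = ["head", "left hand", "right hand", "torso", "left foot", "right foot"]


def make_sequence(length: int, rng: random.Random) -> List[str]:
    """Choose the sequence of body parts the robot will point to."""
    return [rng.choice(BODY_PARTS) for _ in range(length)]


def demonstrate(sequence: Sequence[str], say: Callable[[str], None],
                name_parts: bool = False) -> None:
    """Show the sequence; optionally name each body part out loud."""
    for part in sequence:
        # A real system would move the robot's arm to point at `part` here;
        # that step is omitted in this sketch.
        if name_parts:
            say(part)


def check_progress(sequence: Sequence[str], touches: Sequence[str]) -> str:
    """Compare the child's touches so far with the demonstrated sequence."""
    # Touches are checked in order, so the first mismatch ends the round.
    for expected, touched in zip(sequence, touches):
        if touched != expected:
            return "error"
    if len(touches) < len(sequence):
        return "in progress"
    # Any touches after the sequence is complete are ignored.
    return "success"


# Usage: a three-step round with body-part names spoken aloud.
rng = random.Random(0)
round_sequence = make_sequence(3, rng)
demonstrate(round_sequence, say=print, name_parts=True)
print(check_progress(round_sequence, round_sequence[:1]))
```

Checking after every touch lets the robot give immediate feedback: encouragement on "success", an error notification on "error", and simply waiting while the round is "in progress". The longer sequences used in the later variations would correspond to a larger `length`.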

4.2.1 Example 1: Trials with Low Functioning Teenagers with Autism at a Secondary School

During consultations with the therapists in a secondary school for very low functioning children with autism, the therapists advised that it could be very beneficial for some of the children if the “Follow Me” game also named out loud each body part that the robot pointed to during the game. The game was then adapted accordingly: additional voice recordings of body-part names were produced, and all the game sequences were modified to play the related recordings in step with the robot’s movements.

This proved successful for some children, particularly CD. It attracted the child’s attention, helping him to concentrate better on the game, while at the same time further developing his sense of self: at times, he first followed the shown sequence by pointing to his own body parts before touching the corresponding parts of the robot (see Fig. 6).

At a later stage the experimenter introduced variations 1 & 2 of the game, turning it into an imitation and turn-taking tactile game. Fig. 7 shows, on the left, CD demonstrating on himself and showing the experimenter the correct sequence of the robot’s body parts to touch; on the right, the roles have been swapped and the experimenter, in his turn, shows the child the correct sequence of the robot’s body parts to touch.

5 Discussion and Future Work

In recent years there have been many examples of robots being used in play activities of children with special needs for therapeutic or educational purposes (e.g. [21, 35, 46–51]). As noted in [35], these robots have been shown to be useful in promoting spontaneous play in children with developmental disorders and engaging them in playful interactions, which points to the need for a shared framework to support the development of play activities against therapeutic objectives. A number of recent research projects focus on developing therapeutic tools for children with autism (see reviews in [52, 53]). This research area will benefit from scenarios and methodologies shared among researchers, and this article can contribute to such an exchange, which allows, for example, results and experiments to be replicated by different research groups.

Based on the framework presented in [35], this article presented a set of tactile play scenarios, each with its relevant educational and therapeutic objectives in five key developmental areas (i.e. sensory development, communication and interaction, cognitive development, motor development and social and emotional development). Although the play scenarios were originally developed for and tested with children with autism and with the robot KASPAR, the play scenarios may be considered for use with other user groups or in other applications involving human–robot interaction with different robotic toys.

The studies presented in this article highlight the potentially important role that assistive technology with tactile feedback capabilities can play. Tactile play scenarios built around a basic cause and effect game, for example, may help children with autism to link their actions to the robot’s responses, and might support their social cognition through embodied and cognitive learning.

Play scenario TS01 is a ‘cause and effect’ game that allows low functioning children with autism to explore simple motor manipulation integrated with basic symbolic activity—an area of development known to be a difficulty for this population. In this scenario the child engages in tactile interaction with the robot, taking the initiative to explore the robot’s autonomous reactions.

The data suggest that children initiated tactile engagement with KASPAR from their first encounter. Some of these tactile engagements in early encounters could be understood as more ‘forceful’. However, especially in later trials, children started to pay attention to their actions in the context of the robot behaviours these caused. When KASPAR displayed a sad face and a discomfort posture, some children took the initiative to touch the sensors to make the robot display a ‘happy’ face.

Play scenario TS02 is a tactile game in which the robot points to a sequence of body parts and the child, in turn, needs to follow the sequence by touching the appropriate body parts of the robot in the same order in which they were shown. Variations of the scenario also include additional elements of turn-taking and imitation, in which the child and the experimenter take turns to show each other the longer, more difficult sequences that the robot has displayed. This resulted in a triadic interaction between the child, the experimenter and the robot, which may help foster social skills in this population.

In their evaluation of scenario TS01, teachers and therapists noted examples of how this scenario encouraged the children to take the initiative in their interaction, helped an awareness of cause and effect to emerge, and helped them link a ‘sad’ expression to being ‘hurt’. In addition, the therapists noted that scenario TS02 could help some children to extend their focus and concentration skills while at the same time further developing a sense of self, e.g. when a child first followed the shown sequence by pointing to their own body parts before touching the corresponding parts of the robot. It must be noted that at this stage it is not known whether these results will last, and a therapeutically oriented, long-term study is needed.

A future plan is to carry out a randomised controlled study (clinical trial) to assess whether robot-mediated interaction can improve the social skills of children and adolescents diagnosed with ‘lower functioning autism’ (LFA). A randomised two-phase clinical trial of a humanoid robot-mediated social interaction package will be evaluated against a clinical waiting-list control. Participants will be assessed before and after the intervention with broad-based as well as autism-specific social skills measures, and again three to six months post-intervention, in order to assess the durability of the treatment’s impact. It is hypothesised that children exposed to the robot-mediated intervention will show greater multi-contextual social skills progress than those in the control group. Such a pattern of results would suggest that robot-mediated interaction has the potential to significantly develop generalisable social skills in children with low functioning autism.

Notes

1. Adapted from tools developed by the team (including the authors) at the University of Hertfordshire in the previous FP6 project IROMEC [34].

2. To maintain the confidentiality of participants, children are identified by abbreviated codes (e.g. AI, RI, ID).

References

WHO (2001) International classification of functioning, disability and health. World Health Organization, Geneva

Eisert D, Lamorey S (1996) Play as a window on child development: the relationship between play and other developmental domains. Early Educat Dev 7(3):221–235

Ferland F (1977) The Ludic model: play, children with physical disabilities and occupational therapy. University of Ottawa Press, Ottawa

Vygotsky LS (1978) Mind in society. Harvard University Press, Cambridge

Bruner JS (1990) Acts of meaning. Harvard University Press, Cambridge, MA

Powell S (2000) Helping children with autism to learn. David Fulton Publishers, London

Piaget JP (1962) Play, dreams, and imitation in childhood. Norton, New York

Winnicott DW (1971) Playing and reality. Penguin Books Ltd, Middlesex

Jordan R (1999) Autistic spectrum disorders—an introductory handbook for practitioners. David Fulton Publishers, London

Wing L (1996) The autistic spectrum. Constable Press, London

Baron-Cohen S (1995) Mindblindness: an essay on autism and theory of mind. Bradford Book, Cambridge

Landau E (2001) Autism. Franklin Watts, London

Howlin P (1986) Social behaviour in autism. Plenum Press, New York, pp 103–132

Caldwell P (1996) Getting in touch: ways of working with people with severe learning disabilities and extensive support needs. Pavilion Publishing Ltd, Brighton

Bernstein P (1986) Theoretical approaches in dance/movement therapy, I & II. Kendall Hunt, Dubuque

Costonis M (1978) Therapy in motion. University of Illinois Press, Urbana

Bannerman-Haig S (1999) Dance movement therapy: a case study. Process in the arts therapies. Jessica Kingsley Publishers, London

Meekums B (2002) Dance movement therapy: a creative psychotherapeutic approach. Sage Publication, London

Boccanfuso L, O’Kane JM (2011) CHARLIE: an adaptive robot design with hand and face tracking for use in autism therapy. Int J Soc Robot 3:337–347

Fujimoto I, Matsumoto T, Ravindra P, Silva SD, Kobayashi M, Higashi M (2011) Mimicking and evaluating human motion to improve the imitation skill of children with autism through a robot. Int J Soc Robot 3:349–357

Kozima H, Michalowski M, Nakagawa C (2009) Keepon—a playful robot for research, therapy, and entertainment. Int J Soc Robot 1:3–18

Cabibihan J-J, Javed H, Ang-Jr M, Aljunied SM (2013) Why robots? A survey on the roles and benefits of social robots in the therapy of children with autism. Int J Soc Robot 5:593–618

Robins B, Dautenhahn K (2007) Encouraging social interaction skills in children with autism playing with robots: a case study evaluation of triadic interactions involving children with autism, other people (peers and adults) and a robotic toy. Enfance 59(1):72–81

Robins B, Dautenhahn K, Dickerson P (2009) From isolation to communication: a case study evaluation of robot assisted play for children with autism with a minimally expressive humanoid robot. Proceedings of the second international conferences on advances in computer–human interactions, ACHI 09

Robins B, Dautenhahn K, Dickerson P (2012) Embodiment and cognitive learning—can a humanoid robot help children with autism to learn about tactile social behaviour? In: International conference on social robotics (ICSR’12) Chengdu

Robins B, Dautenhahn K, te Boekhorst R, Billard A (2005) Robotic assistants in therapy and education of children with autism: can a small humanoid robot help encourage social interaction skills? Univers Access Inf Soc 4(2):105–120

Robins B, Dickerson P, Stribling P, Dautenhahn K (2004) Robot-mediated joint attention in children with autism: a case study in robot-human interaction. Interact Stud 5(2):161–198

ROBOSKIN (2013) URL: http://www.roboskin.eu. Last Accessed 26 May 2013

Maiolino P, Ascia A, Natale L, Cannata G, Metta G (2012) Large scale capacitive skin for robots. In: Berselli GV, R. Vassura (ed) Smart actuation and sensing systems—recent advances and future challenges. InTech

Ji Z, Amirabdollahian F, Polani D, Dautenhahn K (2011) Histogram based classification of tactile patterns on periodically distributed sensors for a humanoid robot. In: 20th IEEE international symposium on robot and human interactive communication- RO-MAN 2011, Atlanta, GA

Dautenhahn K, Nehaniv CL, Walters ML, Robins B, Kose-Bagci H, Mirza NA, Blow M (2009) KASPAR—a minimally expressive humanoid robot for human–robot interaction research. Special issue on “humanoid robots”, Appl Bionics Biomech 6(3): 369–397 (published by Taylor and Francis)

Cumine V, Leach J, Stevenson G (2000) Autism in the early years: a practical guide. David Fulton Publishers, London

Parham D, Fazio L (2008) Play in occupational therapy for children. Mosby-Elsevier, St. Louis

IROMEC (2013) URL: http://www.iromec.org. Last Accessed 14 May 2013

Robins B, Dautenhahn K, Ferrari E, Kronreif G, Prazak-Aram B, Marti P, Iacono I, Gelderblom G, Bernd T, Caprino F, Laudanna E (2012) Scenarios of robot-assisted play for children with cognitive and physical disabilities. Interact Stud 13(2):189–234

Amirabdollahian F, Robins B, Dautenhahn K (2009) Robotic skin requirements based on case studies on interacting with children with autism. In: “Tactile Sensing” workshop at IEEE Humanoids09, Paris

Robins B, Amirabdollahian F, Ji Z, Dautenhahn K (2010) Tactile interaction with a humanoid robot for children with autism: a case study analysis from user requirements to implementation. In: The 19th IEEE international symposium in robot and human interactive communication (RO-MAN10), Viareggio

Robins B, Dautenhahn K (2010) Developing play scenarios for tactile interaction with a humanoid robot: a case study exploration with children with autism. In: International conference on social robotics (ICSR’10), Singapore, pp 24–25

Carroll JM (1995) Scenario-based design: envisioning work and technology in system development. Wiley, New York

Rizzo A, Marti P, Decortis F, Moderini C, Rutgers J (2003) The design of POGO story world. In: Hollnagel E (ed) Cognitive task design. Erlbaum, London

Robins B, Ferrari E, Dautenhahn K, Kronreif G, Prazak-Aram B, Gelderblom G, Caprino F (2010) Human-centred design methods: developing scenarios for robot assisted play informed by user panels and field trials. Int J Hum Comput Stud IJHCS 68:873–898

Ferrari E, Robins B, Dautenhahn K (2009) Therapeutic and educational objectives in robot assisted play for children with autism. In: 18th IEEE international workshop on robot and human interactive communication—RO-MAN 2009, Toyama

Petersson E, Brooks A (2007) Non-formal therapy and learning potentials through human gesture synchronised to robotic gesture. Univers Access Inf Soc 6:167–177

Cohen S (1976) Social and personality development in childhood. Macmillan Publishing Company, New York

Dietz C (2004) Mihaly Csikszentmihalyi’s theory of flow. Teaching Expertise. http://www.teachingexpertise.com/articles/mihaly-csikszentmihalyis-theory-of-flow-1674. Optimus Professional Publishing. Last Accessed 27 August 2012

Lathan C, Malley S (2001) Development of a new robotic interface for telerehabilitation. In: EC/NSF workshop on Universal accessibility of ubiquitous computing, Alcácer do Sal, Portugal, pp 80–83

Marti P, Pollini A, Rullo A, Shibata T (2005) Engaging with artificial pets. In: Proceedings of annual conference of the European association of cognitive ergonomics, Chania, Greece

Michaud F, Larouche H, Larose F, Salter T, Duquette A, Mercier H, Lauria M (2007) Mobile robots engaging children in learning. In: Canadian medical and biological engineering Conference, Toronto

Robins B, Dautenhahn K, te Boekhorst R, Billard A (2005) Robotic assistants in therapy and education of children with autism: can a small humanoid robot help encourage social interaction skills? Univers Access Inf Soc 4(2):105–120

Robins B, Dautenhahn K, Dickerson P (2009) From isolation to communication: a case study evaluation of robot assisted play for children with autism with a minimally expressive humanoid robot. Paper presented at the second international conference on advances in CHI, ACHI09, Cancun, Mexico

Stiehl WD, Lee JK, Breazeal C, Nalin M, Morandi A, Sanna A (2009) The huggable: a platform for research in robotic companions for pediatric care. In: Creative interactive play for disabled children workshop at the 8th international conference on interaction design and children (IDC 2009), Como, Italy

Diehl J, Schmitt L, Villano M, Crowell C (2012) The clinical use of robots for individuals with autism spectrum disorders: a critical review. Res Autism Spectr Disord 6:249–262

Scassellati B, Admoni H, Matarić M (2012) Robots for use in autism research. Ann Rev Biomed Eng 14:275–294

Acknowledgments

This work has been partially supported by the European Commission under contract number FP7-231500-ROBOSKIN


Appendices

Appendix 1

Appendix 2

This assessment sheet is based on observation and designed to collect information about the scenario played where the following aspects can be rated:

Playability and Motivation: Understood as the moments of fun during the game (e.g. laughter or positive comments during play) and understanding of the game’s dynamics (e.g. users understand how to play the scenario). Motivation is understood as the desire they have to participate in and play a specific scenario with a robot.

Usability: The overall intention is to measure the usability of the robot in a specific play scenario. On a grand scale, does the robot do what is required in that specific scenario, and does it carry out the actions that the users are expecting according to the scenario script? How does the robot respond to unexpected user behavior/reaction in a specific scenario? Record any aspect regarding the use of the robot that may be considered important, and any type of observation, comment or action by the users that the observers consider important and relevant.

Tactile Interaction—the general level of tactile interaction whether scripted or not

Spontaneous Tactile Interaction—unscripted tactile interaction on the players’ own initiative

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Robins, B., Dautenhahn, K. Tactile Interactions with a Humanoid Robot: Novel Play Scenario Implementations with Children with Autism. Int J of Soc Robotics 6, 397–415 (2014). https://doi.org/10.1007/s12369-014-0228-0
